Considerations for Deploying Hadoop on Oracle Cloud Infrastructure

Many customers who run Hadoop have similar questions when exploring a migration to the cloud:

- How do we deploy, or migrate, Hadoop to the cloud?

- How do we secure Hadoop in the cloud?

- How do we implement HA and DR for Hadoop in the cloud?

- How do we achieve performance in the cloud that is comparable to our on-premises Hadoop deployments?

- How do we track and manage our costs while deploying multiple environments?

This article provides Oracle Cloud Infrastructure’s answers to these questions.

Deployment

When you subscribe to Oracle’s infrastructure as a service (IaaS), you have access to all the compute, storage, and network services associated with it. Deployments on Oracle Cloud Infrastructure are just like on-premises deployments, in that the same versions and features are available for each Hadoop distribution.

Terraform and Resource Manager

Oracle Cloud Infrastructure engineering teams have partnered with each Hadoop ISV to enable deployment that leverages Terraform. Terraform allows you to deploy infrastructure as code (IaC), and this includes all aspects of a Hadoop ecosystem, from networking (virtual cloud networks, subnets, VNICs) and security access control lists, to compute and storage provisioning. Terraform is flexible, highly scalable, and a standard among many cloud providers.

You can use these Terraform templates as a framework for deploying Hadoop on Oracle Cloud Infrastructure, or you can stay with the deployment tooling that you already use on premises. Both methods are valid.

If you want to use Terraform to deploy Hadoop, consider using Oracle Resource Manager, which provides the following key benefits:

- Terraform state metadata is kept in a highly available location.

- Access to Resource Manager can be managed with the same security and audit tooling included for other Oracle Cloud Infrastructure services.

- Resource Manager removes the complexity associated with configuring Terraform for deployment on Oracle Cloud Infrastructure.

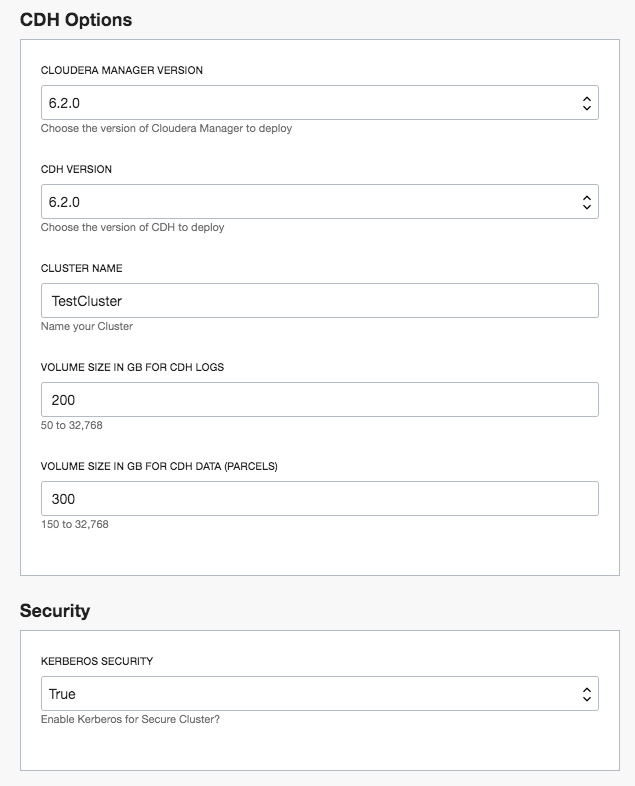

The Resource Manager interface supports YAML-based schema files populated with expected values for stack variables. This lets you define the shapes, software versions, and other parameters that are allowed for each variable in the stack.

Description of the illustration resource-manager-ui.png

After the schema file is populated, its values are shown in an easy-to-use UI. The schema file lets you define drop-down lists with these values, as well as custom entry fields where users can type or paste input.

Fields in the schema file can also have dependencies, so that if a user chooses a value in one field, other fields are shown or hidden based on that choice.
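The dependency behavior described above can be pictured with a small model. The following is only an illustrative sketch: it does not use the Resource Manager schema format, and the field names, the `visible_when` key, and the shape values are hypothetical examples.

```python
# Illustrative model only: this is NOT the Resource Manager schema format.
# Field names, the "visible_when" key, and the listed values are hypothetical.
FIELDS = {
    "distribution": {"choices": ["cloudera", "hortonworks"]},
    "worker_shape": {"choices": ["BM.DenseIO2.52", "VM.Standard2.16"]},
    # Shown only when the user picks the VM worker shape.
    "block_volume_count": {"visible_when": ("worker_shape", "VM.Standard2.16")},
}


def visible_fields(fields, selections):
    """Return the names of the fields to display for the current selections."""
    shown = []
    for name, spec in fields.items():
        condition = spec.get("visible_when")
        if condition is None:
            # Fields without a dependency are always displayed.
            shown.append(name)
            continue
        controlling_field, required_value = condition
        if selections.get(controlling_field) == required_value:
            shown.append(name)
    return shown


if __name__ == "__main__":
    print(visible_fields(FIELDS, {"worker_shape": "BM.DenseIO2.52"}))
    print(visible_fields(FIELDS, {"worker_shape": "VM.Standard2.16"}))
```

In this model, choosing the VM shape reveals the block volume field, and choosing the bare metal shape hides it.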

Cluster Service Topology

When deploying Hadoop, consider the following mapping of cluster service topology to node roles:

| Node Type | Hadoop Role | Hadoop Services |
|---|---|---|
| Edge | User Access from perimeter | Client Libraries, Oozie |
| Utility | Cluster Management | Cloudera Manager, Ambari, Metadata database |
| Master | Core Cluster Services | ZooKeeper, NameNode, JournalNode, Hive, HBase Master, Spark History, Job History, YARN ResourceManager, HttpFS, Solr |
| Worker | Workload Execution, Data Storage | HDFS, NodeManager, Region Server, Kafka Broker, Spark Executor, MapReduce |

Best practices for choosing compute shapes for these roles are described later in this solution. Also consider the following criteria:

- How many concurrent users will use the cluster?

Edge nodes should be scaled both in quantity and OCPU based on the number of concurrent users. Two concurrent users per OCPU is a good guideline, allowing for one thread per user. Additional edge nodes across fault domains also provide redundancy.

- Do you have specific Spark or HBase region server memory requirements?

Such requirements directly impact the quantity and composition of worker nodes. Sizing for HBase requires an understanding of the underlying application, and varies. Most customers already know their requirements for HBase deployment, specifically the number of region servers and memory allocated per server. Spark workloads similarly have an aggregate memory target, which equals the number of worker nodes multiplied by the available memory per worker.

- Do you have a specific HDFS capacity requirement?

Most customers have this requirement. But before you scale wide on a large number of bare metal DenseIO worker nodes, consider that NVMe-backed HDFS can be augmented by block volumes to achieve the required HDFS density, as described later in this solution. Oracle recommends that you understand HDFS requirements but also factor workload requirements so that you can “right size” the cluster to hit both targets while minimizing cost.
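The sizing criteria above reduce to simple arithmetic. The following is a minimal sketch, assuming the guideline of two concurrent users per OCPU from the text and an HDFS replication factor of 3 (the HDFS default). The function names and input values are hypothetical, and the HDFS estimate ignores capacity added by block volumes.

```python
import math


def edge_ocpus(concurrent_users, users_per_ocpu=2):
    """Total edge-node OCPUs, using the two-users-per-OCPU guideline."""
    return math.ceil(concurrent_users / users_per_ocpu)


def workers_for_memory(target_memory_gb, memory_per_worker_gb):
    """Workers needed so that workers x memory per worker meets the target."""
    return math.ceil(target_memory_gb / memory_per_worker_gb)


def workers_for_hdfs(usable_tb, raw_tb_per_worker, replication=3):
    """Workers needed to hold the usable HDFS capacity after replication.

    Assumes every block is stored `replication` times (HDFS default is 3).
    """
    return math.ceil(usable_tb * replication / raw_tb_per_worker)


def worker_count(target_memory_gb, memory_per_worker_gb,
                 usable_tb, raw_tb_per_worker, replication=3):
    """Right-size by taking the larger of the memory and HDFS requirements."""
    return max(
        workers_for_memory(target_memory_gb, memory_per_worker_gb),
        workers_for_hdfs(usable_tb, raw_tb_per_worker, replication),
    )


if __name__ == "__main__":
    # Hypothetical inputs: 50 users, 4 TB of Spark memory, 100 TB of usable HDFS.
    print("Edge OCPUs:", edge_ocpus(50))
    print("Workers:", worker_count(4096, 768, 100, 51.2))
```

If the HDFS requirement dominates the result, that is a signal to consider augmenting capacity with block volumes rather than adding worker nodes.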

Migration

When customers running Hadoop decide to deploy to Oracle Cloud Infrastructure, they typically have a large volume of data to migrate. The bulk of this data is present in HDFS, with supporting cluster metadata present in a database.

Copying HDFS data directly over the internet can be challenging for several reasons:

- Data volume is too large, or available bandwidth is too constrained, to support over-the-wire data copy in any meaningful timeframe.

- Data is sensitive, and copying it over the Internet might not be allowed by governance or regulatory requirements.

- On-premises cluster resources are constrained, and copying data would impact the ongoing workload.

Several approaches to data migration are supported. They are defined by the type of data being migrated.
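To judge whether an over-the-wire copy fits a meaningful timeframe, a rough estimate of transfer time helps. The following sketch assumes decimal units (1 TB = 10^12 bytes) and a hypothetical `utilization` factor for the share of the link that the copy can actually use. The inputs are illustrative, not measured values.

```python
SECONDS_PER_DAY = 86_400


def transfer_days(data_tb, link_gbps, utilization=0.7):
    """Estimate the days needed to copy `data_tb` terabytes over a link.

    `utilization` is an assumed fraction of the nominal bandwidth that the
    copy can sustain; it is a planning guess, not a guarantee.
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in the range (0, 1]")
    total_bits = data_tb * 1e12 * 8
    usable_bits_per_second = link_gbps * 1e9 * utilization
    return total_bits / usable_bits_per_second / SECONDS_PER_DAY


if __name__ == "__main__":
    # Hypothetical example: 500 TB over 1 Gbps and over 10 Gbps links.
    for gbps in (1, 10):
        print(f"{gbps} Gbps: {transfer_days(500, gbps):.1f} days")
```

When the estimate runs to weeks or months, a Data Transfer Appliance is usually worth evaluating instead.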

HDFS Data Migration

There are three common ways to copy HDFS data to Oracle Cloud Infrastructure:

- Copy data directly from an on-premises cluster to Object Storage in an Oracle Cloud Infrastructure region by using VPN over the Internet or with FastConnect. After this process is complete, an Oracle Cloud Infrastructure cluster can be rehydrated with the data from Object Storage.

- Copy data directly from an on-premises cluster to an Oracle Cloud Infrastructure cluster either over the internet or by using FastConnect.

- Copy data to a Data Transfer Appliance, which is deployed to the customer data center, filled with data, and then shipped to Oracle and uploaded to Object Storage. An Oracle Cloud Infrastructure cluster can then be rehydrated with this data.

Technical details about each of these methods are discussed later in this solution.

Metadata Migration

Cluster metadata is usually much smaller than HDFS data and exists in a database in the on-premises cluster.

Migration of cluster metadata depends on two factors: the Hadoop distribution in the source cluster, and which database is being used to store metadata. Transfer of this data is straightforward, and specific to each Hadoop distribution.

Specifics about each Hadoop distribution are presented later in this solution.

Security

Security in the cloud is especially important for Hadoop, and there are many ways to ensure that your deployment on Oracle Cloud Infrastructure remains secure.

First consider some Oracle Cloud Infrastructure-specific security controls:

- Leverage Identity and Access Management (IAM) to control who has access to cloud resources, what type of access a group of users has, and to which specific resources. This architecture can provide the following outcomes:

  - Securely isolate cloud resources based on organizational structure

  - Authenticate users to access cloud services over a browser interface, REST API, SDK, or CLI

  - Authorize groups of users to perform actions on appropriate cloud resources

  - Federate with existing identity providers

  - Enable a managed service provider (MSP) or systems integrator (SI) to manage infrastructure assets while still allowing your operators to access resources

  - Authorize application instances to make API calls against cloud services

- Audit security lists for all networks in the virtual cloud network (VCN). Make these rules as restrictive as possible, and allow only trusted traffic into Internet-facing subnets.

- Enable host firewalls on Internet-facing hosts, and allow only necessary traffic.

- Consider running security audits regularly.

Encryption is also a good option for both data at rest and data in motion. Consider the following resources:

- Cloudera encryption mechanisms

- Hortonworks

  - HDFS encryption at rest

  - Wire encryption

- MapR encryption

Other Hadoop-specific security considerations include:

- Deploy clusters on subnets with private IP addresses, and expose only necessary APIs or UIs to internet-facing subnets.

- Run Hadoop with cluster security enabled.

- Use edge nodes to access cluster services via SSH tunneling. This is even easier on Mac or Linux.

- Leverage Apache Knox to secure API endpoints.

- Leverage Apache Sentry or Cloudera Navigator for role-based access to cluster data and metadata.

Network Security

Because Hadoop is open by design, most customers prefer to deploy their Hadoop cluster in a private subnet. Cluster services are then reachable only through edge nodes, through load balancers that front UIs, APIs, and service dashboards, or directly through FastConnect, VPN, or SSH tunneling through an edge proxy.

Public subnets augment the cluster deployment for edge nodes and utility nodes, which run internet-facing services (like Cloudera Manager or Ambari). This is entirely optional. You can instead use FastConnect or VPN and run your entire deployment as an extension of your on-premises network topology. That requires only a customer-routable private IP segment, which is associated at the VCN level and then split into appropriate subnets in Oracle Cloud Infrastructure. Access is controlled using security lists, which apply at the subnet level. The best practice is to group resources that have similar access requirements into the same subnets.

Subnet Topology

The VCN covers a single, contiguous IPv4 CIDR block of your choice. Inside the VCN, individual IPv4 subnets can be deployed for cluster hosts. Access into each subnet is controlled by security lists. Additional security is controlled at the host level by firewalls.

The best practice is to segregate Hadoop cluster resources into a private subnet that is not directly accessible from the internet. Access to the cluster should be controlled through additional hosts in public-facing subnets and secured using appropriate security list rules to govern traffic between the public and private network segments. This model provides the best security for your Hadoop cluster.

- Public subnets can be used for edge nodes (user access) and for any services that need to expose a UI or API (such as Cloudera Manager or Ambari) for external access.

- Private subnets should be used for cluster hosts (master, worker) and are not directly accessible from the Internet. Instead, these require an intermediary host in a public subnet for access, a load balancer, or direct access by VPN, FastConnect, or SSH proxy.
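Splitting a VCN CIDR block into public and private subnets can be planned ahead of deployment. The following is a minimal sketch using Python's standard `ipaddress` module. The CIDR block, the subnet names, and the `/24` subnet size are hypothetical choices, not recommendations.

```python
import ipaddress
from itertools import islice


def plan_subnets(vcn_cidr, names, new_prefix=24):
    """Carve consecutive subnets of size `new_prefix` out of the VCN block."""
    vcn = ipaddress.ip_network(vcn_cidr)
    blocks = list(islice(vcn.subnets(new_prefix=new_prefix), len(names)))
    if len(blocks) < len(names):
        raise ValueError("VCN block is too small for the requested subnets")
    return {name: str(block) for name, block in zip(names, blocks)}


if __name__ == "__main__":
    # Hypothetical layout: one public subnet for edge and utility nodes,
    # one private subnet for master and worker nodes.
    plan = plan_subnets("10.0.0.0/16", ["public-edge", "private-cluster"])
    for name, cidr in plan.items():
        print(f"{name}: {cidr}")
```

Grouping hosts with similar access requirements into the same subnet keeps the security list for each subnet short and easy to audit.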

Security Lists

Security lists control ingress and egress traffic at the subnet level. For Hadoop, it is best to have full unrestricted bi-directional access between subnets with cluster hosts for both TCP and UDP traffic. Public subnets should have highly restrictive security lists to allow only trusted ports (and even source IP addresses) for access to APIs and UIs.
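A simple audit can flag ingress rules that are broader than intended. The following sketch checks an exported list of rules for entries that are open to any source on a port that is not explicitly trusted. The rule dictionary layout (`source`, `port`) and the trusted ports are hypothetical and do not correspond to an Oracle Cloud Infrastructure API response format.

```python
import ipaddress


def risky_ingress(rules, trusted_ports):
    """Return rules that allow any source address on an untrusted port."""
    findings = []
    for rule in rules:
        source = ipaddress.ip_network(rule["source"])
        # A prefix length of 0 (for example 0.0.0.0/0) matches every address.
        open_to_all = source.prefixlen == 0
        if open_to_all and rule["port"] not in trusted_ports:
            findings.append(rule)
    return findings


if __name__ == "__main__":
    # Hypothetical rules for a public subnet.
    rules = [
        {"source": "0.0.0.0/0", "port": 22},
        {"source": "0.0.0.0/0", "port": 8020},
        {"source": "203.0.113.0/24", "port": 7183},
    ]
    for finding in risky_ingress(rules, trusted_ports={22}):
        print("Review rule:", finding)
```

Restricting the source range as well as the port, as the last example rule does, is the stricter pattern described above.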

High Availability

Hadoop has built-in methods for high availability (HA), and those aren’t covered here. What follows are best practices for leveraging Oracle Cloud Infrastructure for Hadoop to achieve HA.

Region Selection

Each region in Oracle Cloud Infrastructure consists of one to three availability domains, and each availability domain contains three fault domains. Choose a home region that is close to you and your business resources (like your data center). The composition of the region that you choose determines whether you can use a single-availability-domain deployment or a multiple-availability-domain deployment.

Single-Availability-Domain Deployment

Oracle recommends single-availability-domain deployment for Hadoop deployments on Oracle Cloud Infrastructure. For MapR deployments, this architecture is the only one supported. This deployment model is the most performant from a network perspective because intracluster traffic is contained to local network segments, which maintains low latency and high throughput. Resources in the availability domain are striped between fault domains to achieve HA of physical infrastructure.

A fault domain is a grouping of hardware and infrastructure within an availability domain. Each availability domain contains three fault domains. Fault domains let you distribute your instances so that they aren’t on the same physical hardware within a single availability domain. A hardware failure or compute hardware maintenance that affects one fault domain doesn’t affect instances in other fault domains.
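Striping resources between fault domains amounts to a round-robin assignment. The following is a minimal sketch of that idea, assuming three fault domains per availability domain as described above. The host names are hypothetical, and actual placement is specified when each instance is provisioned.

```python
from itertools import cycle

FAULT_DOMAINS = ["FAULT-DOMAIN-1", "FAULT-DOMAIN-2", "FAULT-DOMAIN-3"]


def stripe(hostnames, fault_domains=FAULT_DOMAINS):
    """Assign hosts to fault domains in round-robin order."""
    return dict(zip(hostnames, cycle(fault_domains)))


if __name__ == "__main__":
    # Hypothetical worker host names.
    workers = [f"worker-{i}" for i in range(1, 6)]
    for host, fault_domain in stripe(workers).items():
        print(f"{host} -> {fault_domain}")
```

Applying the same striping separately to each node role keeps, for example, all master nodes from landing in one fault domain.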

Multiple-Availability-Domain Deployment

Among the commercial distributions, only Cloudera and Hortonworks support multiple-availability-domain deployments (availability domain spanning). Such deployments are also possible using Apache Hadoop.

However, consider the following caveats for availability domain spanning:

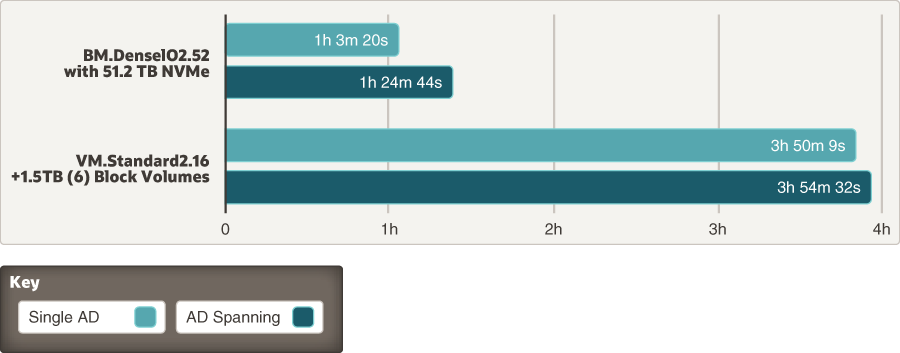

- Intracluster connectivity has to traverse WAN segments, which increases latency and lowers optimal throughput. This has a measurable impact on performance, especially with bare metal worker nodes. For smaller VM worker nodes, the performance impact is less noticeable.

- Not all Oracle Cloud Infrastructure regions support this model.

The following diagram shows the measured performance impact when running a 10-TB TeraSort in a single availability domain versus availability domain spanning, with a cluster that consists of five worker nodes and three master nodes:

Description of the illustration comparison-availability-domain-spanning.png

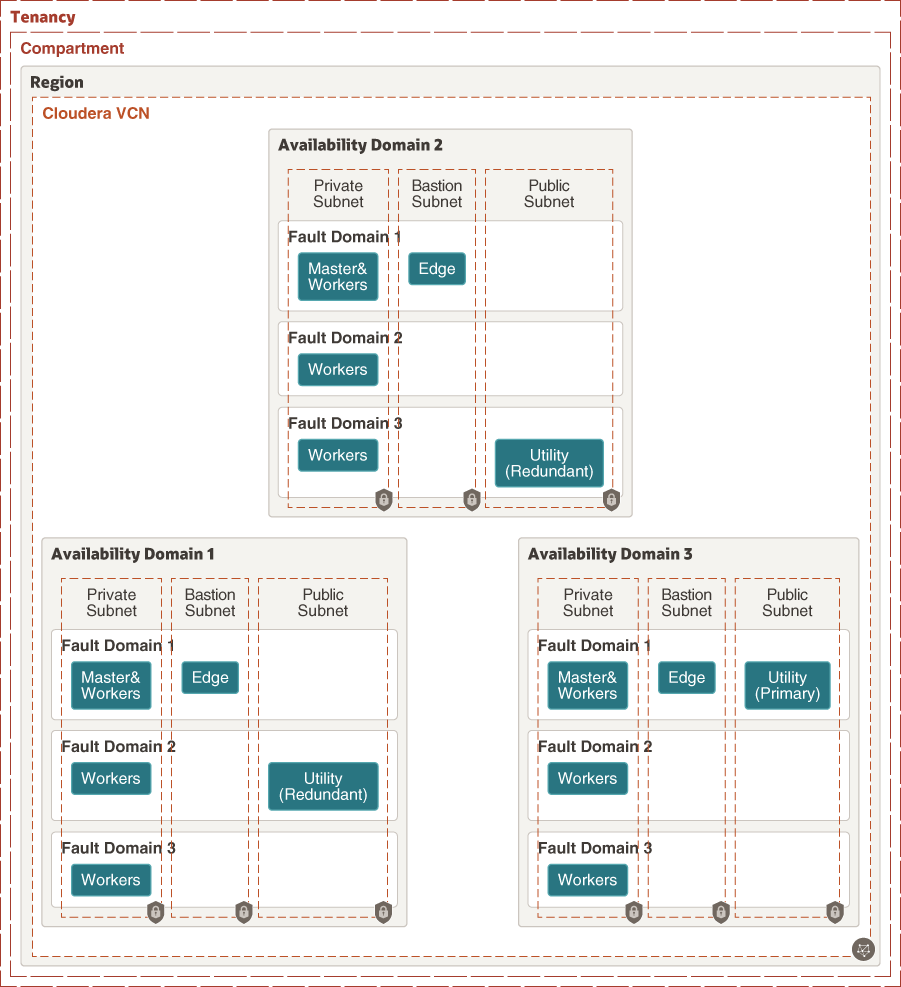

Availability domain spanning does provide additional redundancy of cluster services in a single region. Cluster resources can also leverage fault domains in each availability domain for additional logical “rack topology”; each fault domain is considered a “rack” for rack awareness. These benefits are illustrated by the following diagram.

Description of the illustration architecture-multiple-availability-domains.png
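Hadoop rack awareness can be driven by a topology script: Hadoop passes host names or IP addresses as arguments and reads one rack path per argument from standard output. The following sketch treats each fault domain as a rack. The address-to-fault-domain table is hypothetical and would need to be generated from your actual deployment.

```python
import sys

# Hypothetical mapping of worker IP addresses to fault domains.
HOST_TO_FAULT_DOMAIN = {
    "10.0.1.11": "FAULT-DOMAIN-1",
    "10.0.1.12": "FAULT-DOMAIN-2",
    "10.0.1.13": "FAULT-DOMAIN-3",
}

# Rack path returned for hosts that are not in the table.
DEFAULT_RACK = "/default-rack"


def rack_for(host):
    """Return the rack path for a host, treating its fault domain as the rack."""
    fault_domain = HOST_TO_FAULT_DOMAIN.get(host)
    return f"/{fault_domain}" if fault_domain else DEFAULT_RACK


def main(hosts):
    # Emit one rack path per argument, in the order the hosts were given.
    print(" ".join(rack_for(host) for host in hosts))


if __name__ == "__main__":
    main(sys.argv[1:])
```

In an availability domain spanning deployment, the rack path could also include the availability domain so that replicas are spread across both levels.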

Disaster Recovery

Disaster recovery (DR) in Oracle Cloud Infrastructure depends directly on your Recovery Point Objective (RPO) and Recovery Time Objective (RTO) requirements.

If either the RPO or the RTO is near real-time, then your only option for Hadoop is to create a DR cluster in another availability domain or region, and then replicate data between the production and DR clusters. If the RPO and RTO requirements are more flexible, then we recommend leveraging Object Storage as a backup target for HDFS and cluster metadata. Schedule the copy process as often as needed to meet your RPO target, pushing data into Object Storage by using a tool such as DistCp (distributed copy). You can also back up databases (Oracle, MySQL) into Object Storage buckets, or replicate to additional database instances in other availability domains or regions.
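A scheduled DistCp backup to Object Storage can be wrapped in a small helper that a scheduler calls. The following sketch only builds the command line and does not run it. It assumes that the cluster is configured with a connector that exposes Object Storage under an `oci://bucket@namespace/` URI. The bucket, namespace, and path values are hypothetical.

```python
import shlex


def distcp_command(source_path, bucket, namespace, target_prefix, max_maps=20):
    """Build a DistCp command that copies an HDFS path to Object Storage.

    -update copies only files that are missing or changed at the target,
    and -m caps the number of simultaneous map tasks.
    """
    # Assumed URI layout for the Object Storage target.
    target = f"oci://{bucket}@{namespace}/{target_prefix.lstrip('/')}"
    return ["hadoop", "distcp", "-update", "-m", str(max_maps), source_path, target]


if __name__ == "__main__":
    # Hypothetical source path, bucket, and namespace.
    command = distcp_command(
        "hdfs:///data/warehouse", "hadoop-backup", "mytenancy", "/warehouse"
    )
    print(shlex.join(command))
```

Running the generated command at an interval no longer than the RPO keeps the copy in Object Storage within the recovery target, provided that each run finishes before the next one starts.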

If your DR requirements specify georedundancy for data that a single region can’t provide, you can also copy data in Object Storage between regions. If a disaster occurs, consider using Terraform to quickly provision resources in another availability domain (either in the same region or a different region) and rehydrate the DR cluster from data in Object Storage.

Cost Management

As detailed in the rest of this solution, there are several ways to “right-size” the compute versus storage requirements to reduce your infrastructure costs. Oracle Cloud Infrastructure has additional tools to help you manage the cost associated with a Hadoop deployment:

- You can leverage tagging for your deployments to make it easier to track consumption.

- You can set quotas at the compartment level to limit consumption by different lines of business. Consider using multiple compartments to manage production, QA, and development environments, and restrict the quotas as appropriate.

Leveraging the dynamic nature of cloud is also great for environments that might not need to be persistent. If you’re using Object Storage as a backup (or data lake) for your data, it’s easy to create environments when you need them by using Terraform, rehydrate HDFS with data from Object Storage, and destroy the environment when you no longer need it.

VM environments with block volumes can also be paused to halt Compute billing. While they are paused, you are charged only for storage consumption.