Modern App Development - Machine Learning and Artificial Intelligence

Most modern machine learning toolkits are open source and written in Python. As such, a machine learning platform should provide native support for open source frameworks and Python. It should also give users the ability to customize their machine learning environments by either installing their own libraries or upgrading the ones already installed. The platform should let data scientists train their models on structured, unstructured, or semi-structured data while scaling the extract, transform, and load (ETL) or training steps vertically or horizontally across a number of compute resources.

Finally, a machine learning platform should ensure that models can be easily deployed for real-time consumption with minimal friction (ideally through a simple REST call), while preserving the lineage of the deployed model to ensure that it can be audited and reproduced.

This document presents the design principles associated with creating a machine learning platform and an optimal implementation path. Use this pattern to create machine learning platforms that meet the needs of your data scientist users.

Design Principles

This architecture implements modern app development principles in the following ways:

- Principle: Use lightweight open-source frameworks and mature programming languages

Use conda environments instead of Python virtual environments or Docker images. We provide curated Data Science conda environments that contain a collection of Python libraries selected to address a particular use case. Conda environments typically provide the right level of isolation in Python, offer flexibility by allowing multiple environments to run in the same notebook session or batch job, and are easier to build than Docker images. Conda environments are also easy to export and import because they can be packaged as simple compressed archives (gzip and zip).

Start with our ready-to-use set of conda environments. These environments are regularly upgraded so that the packages they contain reflect the latest versions available. Each environment comes with documentation and code examples. The environments can be used for building, training, and deploying machine learning models in Python 3.6 and later. Adopt these environments to ensure compatibility between different architecture components.
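A conda environment is usually described by an `environment.yml` file that lists the Python version, the conda packages, and any pip packages. The sketch below renders such a file from Python so that one pinned specification can be kept under version control and reused. The environment name, channel, and package pins are hypothetical examples, not the contents of the curated Data Science environments.

```python
# Minimal sketch: render a conda environment.yml specification.
# Environment name, channel, and package pins are hypothetical examples.


def render_environment_yml(name, python_version, conda_packages, pip_packages=()):
    """Return the text of a conda environment.yml file."""
    lines = [
        f"name: {name}",
        "channels:",
        "  - conda-forge",
        "dependencies:",
        f"  - python={python_version}",
    ]
    # Conda pins use a single "=".
    lines += [f"  - {pkg}" for pkg in conda_packages]
    if pip_packages:
        # Pip packages go in a nested "pip:" list and use "==" pins.
        lines.append("  - pip")
        lines.append("  - pip:")
        lines += [f"      - {pkg}" for pkg in pip_packages]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(
        render_environment_yml(
            name="churn-training",  # hypothetical environment name
            python_version="3.8",
            conda_packages=["scikit-learn=1.0.2", "pandas=1.3.5"],
            pip_packages=["requests==2.28.1"],
        )
    )
```

The resulting file can be passed to `conda env create -f environment.yml` to build the same environment on another machine.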

- Principle: Build apps as services that communicate through APIs

Adopt the model deployment feature of Data Science, which deploys a model from the model catalog as a scalable HTTP endpoint that other apps can consume. Ensuring that the model training environment contains the same third-party dependencies as the deployment environment can be a challenge. Data Science model deployments address that challenge by pulling the right conda environment to run your model behind the HTTP endpoint. Model deployments also provide Oracle Cloud Infrastructure (OCI) Identity and Access Management (IAM) authentication and authorization so you don’t have to build and configure your own user and access management protocol.
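Behind the HTTP endpoint, the model artifact's `score.py` typically defines a `load_model()` function and a `predict()` function that the serving runtime calls for each request. The following is a minimal sketch assuming that convention. The JSON payload shape (`instances`), the file name `linear_model.json`, and the linear "model" with fixed coefficients are hypothetical stand-ins so that the example runs without third-party libraries.

```python
import json
import os

# Hypothetical fallback parameters so the sketch runs without a serialized model.
DEFAULT_MODEL = {"weights": [0.5, -0.25], "bias": 0.1}


def load_model(model_path="linear_model.json"):
    """Load model parameters from the artifact; fall back to DEFAULT_MODEL if absent."""
    if os.path.exists(model_path):
        with open(model_path) as f:
            return json.load(f)
    return DEFAULT_MODEL


def predict(data, model=load_model()):
    """Score a payload of the form {"instances": [[x1, x2], ...]}.

    The default argument is evaluated once at import time, so the model is
    loaded once per process rather than once per request.
    """
    if isinstance(data, (str, bytes)):
        data = json.loads(data)  # accept a raw JSON request body
    predictions = [
        sum(w * x for w, x in zip(model["weights"], row)) + model["bias"]
        for row in data["instances"]
    ]
    return {"prediction": predictions}
```

A client would send a request body such as `{"instances": [[2, 4]]}` to the deployment endpoint and receive the returned dictionary as JSON.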

An acceptable alternative is the deployment of machine learning models through Oracle Functions, which can be used as a backend to API Gateway. This option provides flexibility, especially when defining the API or using a different authorization protocol like OAuth or basic auth. However, it requires more work for you, is less automated, and is more prone to user error. Oracle Functions requires you to build your own Docker image and ensure that all the required dependencies and the Python runtime version match the training environment. Most data science teams should avoid this option.
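Because the Oracle Functions path leaves dependency matching to you, a simple check before building the image can catch drift. This sketch compares `name==version` lines, such as the output of `pip freeze`, captured in the training environment and in the deployment image. How those lists are captured is an assumption left to your build process.

```python
import re


def canonical_name(name):
    """Normalize a package name as PEP 503 does (case-insensitive; '-', '_', '.' equivalent)."""
    return re.sub(r"[-_.]+", "-", name).lower()


def parse_pins(lines):
    """Map canonical package name -> pinned version, skipping comments and unpinned lines."""
    pins = {}
    for raw in lines:
        line = raw.strip()
        if not line or line.startswith("#") or "==" not in line:
            continue
        name, version = line.split("==", 1)
        pins[canonical_name(name.strip())] = version.strip()
    return pins


def find_mismatches(training_lines, deployment_lines):
    """Return {package: (training_version, deployment_version)} for every difference.

    None means the package is missing from that environment.
    """
    train = parse_pins(training_lines)
    deploy = parse_pins(deployment_lines)
    return {
        name: (train.get(name), deploy.get(name))
        for name in sorted(set(train) | set(deploy))
        if train.get(name) != deploy.get(name)
    }
```

An empty result means the pinned packages match; the Python runtime version of both environments should be compared in the same way.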

- Principle: Automate build, test, and deployment

DevOps is to software development what MLOps is to machine learning: a series of processes and automation centered on building, testing, evaluating, deploying, and monitoring models in production. Favor the use of repeatable, auditable, and reproducible patterns through jobs and model deployments in Data Science. Avoid the training and deployment of models directly from a notebook session where it’s more difficult to build, run, and automate tests. Ensure that all code to prepare the data, train the model, and define the tests is version controlled. Run build and deployment pipelines through the OCI DevOps service or directly from jobs in Data Science. Run the series of model introspection tests that are included in the model catalog artifact boilerplate code. Passing these tests reduces the number of errors that occur when the model is deployed to production.
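A deployment pipeline can refuse to promote a model when basic checks fail. The function below is a simplified, hypothetical stand-in for such checks, not the introspection test suite shipped with the artifact boilerplate: it verifies that the artifact directory contains `score.py` and `runtime.yaml` and that a validation score meets a threshold chosen by the team (0.8 here is an arbitrary example).

```python
import os

# Files a Data Science model artifact is expected to contain.
REQUIRED_FILES = ("score.py", "runtime.yaml")


def check_artifact(artifact_dir, validation_score, min_score=0.8):
    """Return a list of failure messages; an empty list means the gate passes."""
    failures = []
    for name in REQUIRED_FILES:
        if not os.path.isfile(os.path.join(artifact_dir, name)):
            failures.append(f"missing {name}")
    if validation_score < min_score:
        failures.append(f"validation score {validation_score:.3f} below {min_score:.3f}")
    return failures
```

A build step or job can call `check_artifact` and exit with a non-zero status when the returned list is non-empty, which stops the pipeline before deployment.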

- Principle: Use fully managed services to eliminate complexity across application development, runtimes, and data management

Whether a data scientist documents their data assets through OCI Data Catalog, performs large-scale data processing jobs with OCI Data Flow, or builds, trains, and deploys machine learning models through Data Science, all these solutions are fully managed. We take care of provisioning, patching, and securing these environments for you. A fully managed solution significantly lowers the operational burden on teams of data scientists, whose goals are to train and deploy models, not manage infrastructure. A good machine learning platform provides various infrastructure offerings (CPUs, GPUs, flexible shapes) with no additional configuration needed.

- Principle: Instrument end-to-end monitoring and tracing

All workloads should emit basic health metrics through the OCI Monitoring service. Users can define custom metric thresholds for alerts and be notified, or trigger an action, when a threshold is reached. This lets them monitor the operational health of their jobs, notebook sessions, and model deployments.
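The idea behind a custom metric threshold can be sketched as follows. This is not the OCI Monitoring alarm API, which is configured in the service itself; it only illustrates the evaluation logic of firing when a metric stays above a threshold for several consecutive data points. The values and parameters are hypothetical.

```python
def should_alert(values, threshold, consecutive=3):
    """Return True if the last `consecutive` values all exceed `threshold`."""
    if consecutive <= 0:
        raise ValueError("consecutive must be positive")
    recent = values[-consecutive:]
    # Require a full window so a short history never fires.
    return len(recent) == consecutive and all(v > threshold for v in recent)
```

Requiring several consecutive breaches, rather than one, avoids alerting on a single transient spike.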

Enable users to emit custom logs to the OCI Logging service from jobs and model deployments. In most cases, data scientists also want to monitor the processes running in jobs (for example, training iterations or offline validation scores) or capture feature vectors and model predictions from a model deployment. Ensure that they can access the OCI Logging service and perform simple exploratory analysis through the OCI Logging Analytics service.
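Custom logs are easier to search and analyze when each line is a self-contained JSON object. The sketch below uses Python's standard `logging` module to write one JSON record per line to standard output, assuming the job run or model deployment is configured to forward its output to the OCI Logging service. The logger name, event names, and field names are hypothetical.

```python
import json
import logging
import sys

# Assumption: stdout of the job run or deployment is forwarded to OCI Logging.
logger = logging.getLogger("training")  # hypothetical logger name
if not logger.handlers:
    handler = logging.StreamHandler(sys.stdout)
    handler.setFormatter(logging.Formatter("%(message)s"))  # message is already JSON
    logger.addHandler(handler)
logger.setLevel(logging.INFO)
logger.propagate = False  # avoid duplicate lines if the root logger is configured


def log_event(event, **fields):
    """Write one JSON object per line and return it."""
    line = json.dumps({"event": event, **fields}, sort_keys=True)
    logger.info(line)
    return line


if __name__ == "__main__":
    # Training progress from a job (hypothetical scores).
    for epoch, score in enumerate([0.71, 0.78, 0.81], start=1):
        log_event("validation", epoch=epoch, score=score)
    # A captured feature vector and prediction from a deployment (hypothetical values).
    log_event("prediction", features=[1.2, 3.4], prediction=0.87)
```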

- Principle: Implement a defense-in-depth approach to secure apps and data

A good machine learning platform limits the access of data scientists to only the resources that they need to do their work. Follow the principle of least privilege on groups of data scientists. In addition, use resource principals over user principals for authenticating or authorizing to other resources. This practice prevents users from inserting their user principal credentials inside notebook sessions or jobs.

Ensure that all users have access to OCI Vault and that their credentials to access third-party data sources are stored and encrypted in Vault. Notebook sessions in particular should be accessible only to the users who created them; configure the access policies to grant access to notebook sessions and jobs only to the creator of those resources. This practice prevents multiple users from accessing the same notebook environment, sharing private keys, and overwriting the same piece of code. Limit egress access to the public internet whenever it makes sense. Configure and select secondary VNICs (virtual cloud network and subnet selection) for launching notebook sessions and jobs, and prevent users from downloading datasets or libraries from unsecured sites.
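Least privilege and resource principals are both expressed as IAM policies. The helper functions below generate example statements for a group of data scientists and for a dynamic group of Data Science resources, which is how notebook sessions, job runs, and model deployments authenticate as resource principals. Group, dynamic-group, and compartment names are hypothetical, and the output is a starting point rather than a complete policy set; check the verbs and resource types against the OCI IAM policy reference before use.

```python
# Resource types matched by the dynamic group (notebook sessions, job runs, deployments).
DS_RESOURCE_TYPES = (
    "datasciencenotebooksession",
    "datasciencejobrun",
    "datasciencemodeldeployment",
)


def matching_rules(compartment_ocid, resource_types=DS_RESOURCE_TYPES):
    """One dynamic-group matching rule per Data Science resource type in a compartment."""
    return [
        f"ALL {{resource.type = '{rtype}', resource.compartment.id = '{compartment_ocid}'}}"
        for rtype in resource_types
    ]


def user_policies(group, compartment):
    """Data scientists manage Data Science resources but only read data, in one compartment."""
    return [
        f"Allow group {group} to manage data-science-family in compartment {compartment}",
        f"Allow group {group} to read objects in compartment {compartment}",
    ]


def resource_principal_policies(dynamic_group, compartment):
    """Let Data Science resources read data and Vault secrets as themselves."""
    return [
        f"Allow dynamic-group {dynamic_group} to read objects in compartment {compartment}",
        f"Allow dynamic-group {dynamic_group} to read secret-family in compartment {compartment}",
    ]


if __name__ == "__main__":
    for rule in matching_rules("ocid1.compartment.oc1..example"):  # hypothetical OCID
        print(rule)
    for stmt in user_policies("ds-team", "ml-dev") + resource_principal_policies("ds-resources", "ml-dev"):
        print(stmt)
```

Scoping every statement to a single compartment, and granting only `read` on data and secrets, keeps each group's access limited to what its work requires.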

Architecture

You can implement the design principles by using a deployment based on this opinionated architecture.

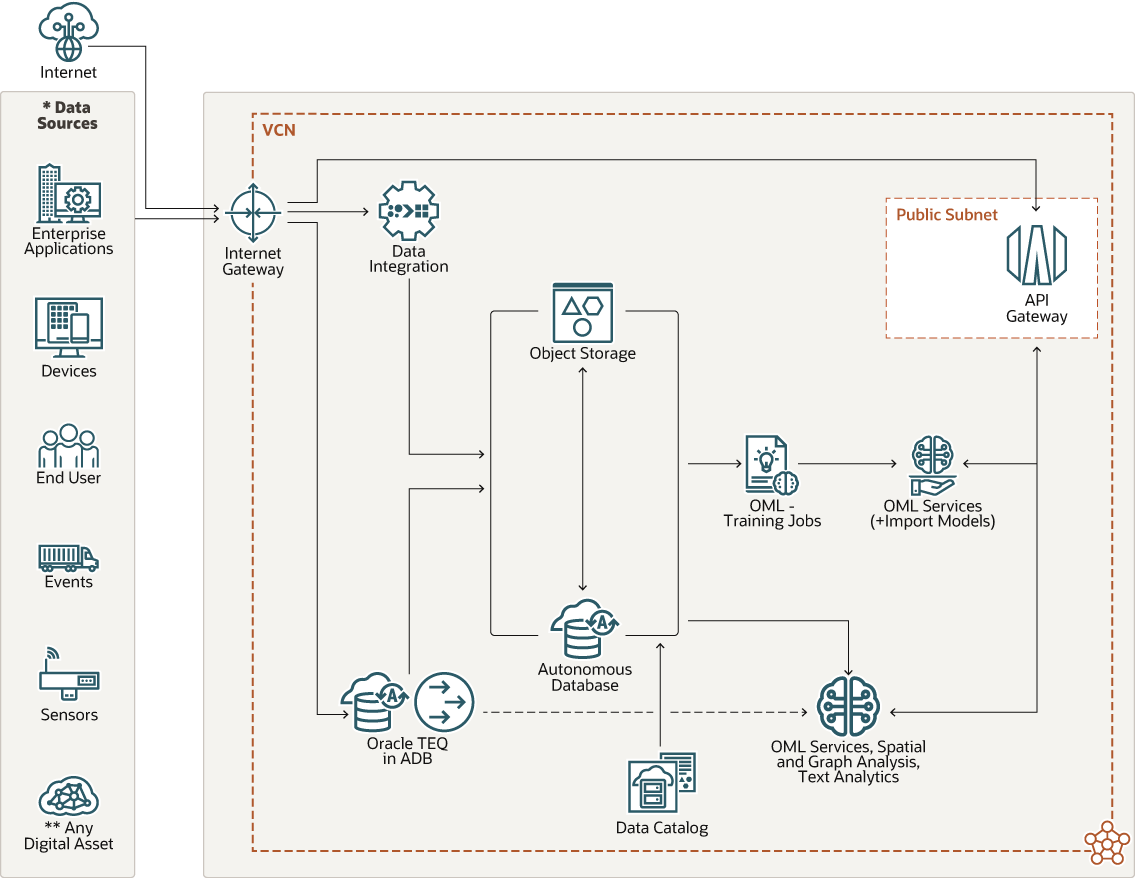

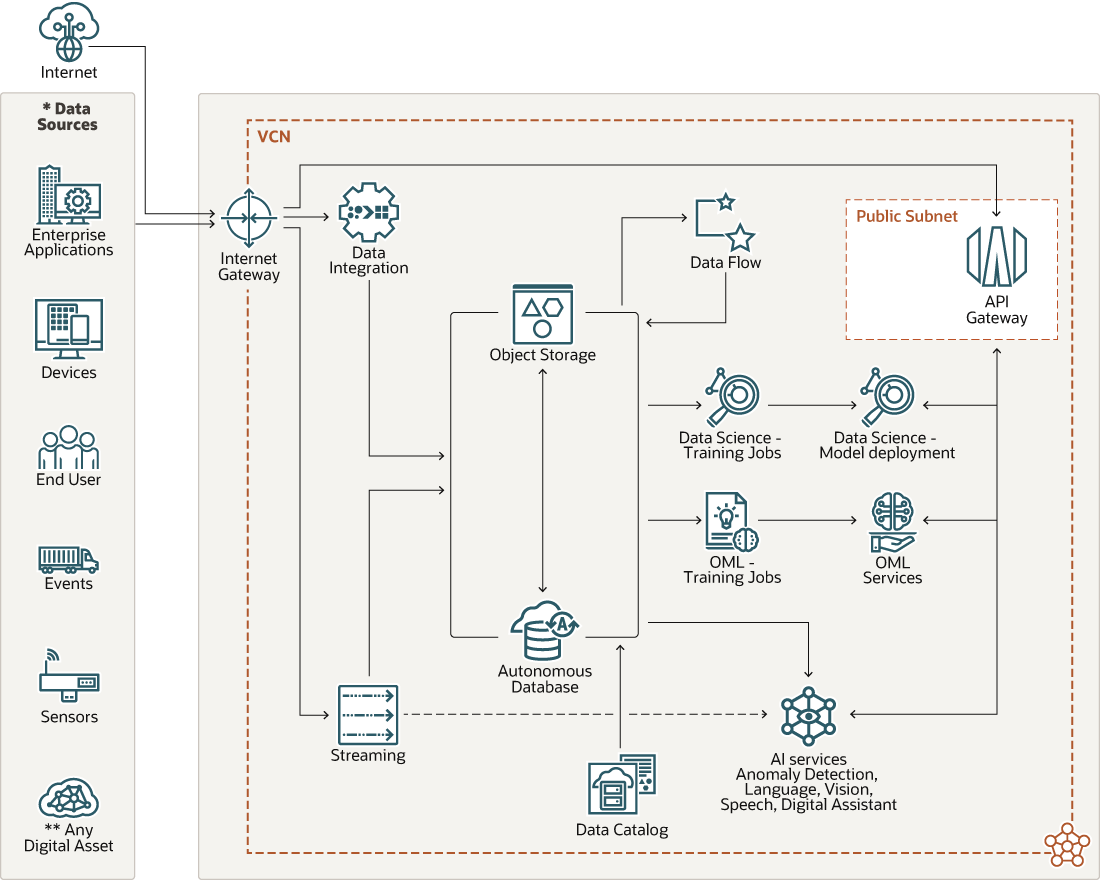

Description of the illustration maf_ai_ml_design_pattern.png

The architecture has the following components:

- Data Integration Service

Oracle Cloud Infrastructure Data Integration is a fully managed, multitenant, serverless, native cloud service that helps you with common ETL tasks such as ingesting data from different sources; cleansing, transforming, and reshaping that data; and efficiently loading it to target data sources on OCI.

Ingestion of data from various sources (for example Amazon Redshift, Azure SQL Database, and Amazon S3) into Object Storage and Autonomous Data Warehouse is the first step in this process.

- Object Storage and Autonomous Database

Object Storage and Autonomous Data Warehouse provide the storage layer for the historical data used to train the machine learning models. Object Storage can be used as both a source and target data storage layer to perform custom data processing and ETL jobs using Data Flow.

- OCI Data Catalog

A data lake that consists of multiple tables in Autonomous Data Warehouse, buckets in Object Storage, and thousands of data assets becomes unwieldy quickly. For data scientists, the problem is one of discoverability. They are not DBAs, and yet they need to quickly identify which data assets are relevant to solve a particular business problem. Oracle Cloud Infrastructure Data Catalog allows data scientists to quickly identify candidate data assets for training machine learning models.

Data Catalog is a fully managed, self-service data discovery and governance solution for enterprise data. It provides a single collaborative environment for managing technical, business, and operational metadata. You can collect, organize, find, access, understand, enrich, and activate this metadata.

- OCI Data Flow service

Before data is ready for training machine learning models, several data “wrangling” operations need to be applied, at scale, to the raw data in either Object Storage or Autonomous Data Warehouse. Data scientists need the data to be clean, joined, denormalized, deduplicated, imputed, scaled, and shaped in a format that is suitable for model training (usually a data frame). This step is usually done by data engineers, but data scientists are increasingly involved in it. The de facto toolkit for performing these steps is Apache Spark, and we recommend using Oracle Cloud Infrastructure Data Flow.

Data Flow is a fully managed service for running Spark applications. It lets you focus on your apps and provides a simple runtime environment for running them. It has a straightforward user interface and API support for integration with apps and workflows. You don't need to spend any time on the underlying infrastructure, cluster provisioning, or software installation.
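The wrangling steps described above can be illustrated on a few in-memory records. This stand-alone sketch uses plain Python for deduplication, mean imputation, and min-max scaling; in a Data Flow application the same steps would be written against Spark DataFrames (for example, with `dropDuplicates` and `fillna`). The record layout and column names are hypothetical.

```python
def deduplicate(rows, key):
    """Keep the first row for each value of `key`."""
    seen, out = set(), []
    for row in rows:
        if row[key] not in seen:
            seen.add(row[key])
            out.append(row)
    return out


def impute_mean(rows, column):
    """Replace None in `column` with the mean of the non-missing values."""
    present = [r[column] for r in rows if r[column] is not None]
    if not present:
        raise ValueError(f"no values to impute from in {column!r}")
    mean = sum(present) / len(present)
    return [{**r, column: mean if r[column] is None else r[column]} for r in rows]


def min_max_scale(rows, column):
    """Rescale `column` to the [0, 1] range."""
    values = [r[column] for r in rows]
    lo, hi = min(values), max(values)
    span = (hi - lo) or 1.0  # avoid division by zero when all values are equal
    return [{**r, column: (r[column] - lo) / span} for r in rows]


if __name__ == "__main__":
    raw = [
        {"id": 1, "age": 20},
        {"id": 1, "age": 20},    # duplicate record
        {"id": 2, "age": None},  # missing value
        {"id": 3, "age": 40},
    ]
    print(min_max_scale(impute_mean(deduplicate(raw, "id"), "age"), "age"))
```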

- OCI Data Science

Oracle Cloud Infrastructure Data Science is a fully managed platform that data science teams can use to build, train, manage, and deploy their machine learning models on OCI. Data Science includes notebook sessions, jobs, a model catalog, model deployments, and the Accelerated Data Science (ADS) Python SDK. Data can be ingested in either notebook sessions or jobs from various data sources and transformed into predictive features for model training. Notebook sessions are IDEs in which data scientists can prototype the pipeline of transformations required to engineer features and train the model. Those steps can be productionized at scale with jobs. After models are trained, they’re stored in a managed model storage layer (model catalog) and staged for deployment. Models can be deployed as HTTP endpoints through model deployments. After they are deployed, models can be consumed by third-party applications, whether these applications are hosted on OCI or elsewhere.

- Oracle Machine Learning

Oracle Machine Learning also offers features to build, train, and deploy models for data in the database. Oracle Machine Learning provides a Zeppelin notebook interface that lets data scientists train models using the OML4Py Python client library. OML also offers a no-code approach to model training with the AutoML UI. The deployment of models as REST APIs can be done through Oracle Machine Learning Services. There is, however, limited support for open source software.

- AI Services

AI services provide a collection of pre-trained and customizable model APIs for use cases spanning language, vision, speech, decision, and forecasting. AI services provide model predictions that are accessible via REST API endpoints. These services provide state-of-the-art pre-trained models and should be considered and evaluated before training custom machine learning models with the six services described above. Alternatively, Oracle Machine Learning services also provide a series of pre-trained models for language (topic, keywords, summary, similarity) and vision.

Considerations

When implementing machine learning and artificial intelligence, consider these options.

- Provide horizontal scalability at every step in the model development lifecycle

Provide horizontal scalability for the ETL and data processing steps (through OCI Data Flow), the model training itself, and the model deployment. Also, consider using GPUs on both the model training side and the deployment side.
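Horizontal scaling follows a partition, process, combine pattern: split the data into shards, process each shard on a separate worker, and merge the partial results. The sketch below shows that pattern with a thread pool on one machine. In practice the workers would be separate nodes (for example, Spark executors in Data Flow), and the per-shard work here (a sum of squares) is a hypothetical placeholder.

```python
from concurrent.futures import ThreadPoolExecutor


def partition(items, n_shards):
    """Split items into n_shards contiguous shards whose sizes differ by at most one."""
    if n_shards <= 0:
        raise ValueError("n_shards must be positive")
    size, extra = divmod(len(items), n_shards)
    shards, start = [], 0
    for i in range(n_shards):
        end = start + size + (1 if i < extra else 0)
        shards.append(items[start:end])
        start = end
    return shards


def process_shard(shard):
    """Hypothetical per-shard work; here, a sum of squares."""
    return sum(x * x for x in shard)


def scale_out(items, n_workers=4):
    """Process shards in parallel, then combine the partial results."""
    shards = partition(items, n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partials = list(pool.map(process_shard, shards))
    return sum(partials)
```

Because each shard is processed independently, adding workers increases throughput without changing the result, which is the property that lets a step scale out across nodes.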

- Ensure model reproducibility

Models may be audited, so they need to be reproducible. Reproducing a model requires that references to the source code, the training and validation datasets, and the environment (third-party libraries and architecture) are recorded when the model is saved. Use references to Git repos and commit hashes to track code. Use Object Storage to save snapshots of training and validation datasets. Include a reference to a published conda environment as part of the model metadata.
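A provenance record can capture the three references that reproduction needs: the code (repository and commit hash), the dataset snapshots, and the conda environment. This minimal sketch builds such a record and fingerprints each dataset snapshot with SHA-256 so that a later run can confirm it uses identical data. The repository URL, `oci://` object paths, and environment path are hypothetical.

```python
import hashlib
import json


def build_provenance(repo_url, commit, datasets, conda_env_uri):
    """Return a JSON-serializable provenance record.

    datasets maps a role such as "train" or "validation" to a tuple of
    (snapshot_uri, snapshot_bytes).
    """
    return {
        "code": {"repository": repo_url, "commit": commit},
        "datasets": {
            role: {"uri": uri, "sha256": hashlib.sha256(content).hexdigest()}
            for role, (uri, content) in datasets.items()
        },
        "conda_env": conda_env_uri,
    }


if __name__ == "__main__":
    record = build_provenance(
        repo_url="https://example.com/team/churn-model.git",  # hypothetical
        commit="3f2c1e9",  # hypothetical; e.g. the output of `git rev-parse HEAD`
        datasets={
            "train": ("oci://ml-data@mynamespace/churn/train.csv", b"id,label\n1,0\n"),
            "validation": ("oci://ml-data@mynamespace/churn/valid.csv", b"id,label\n2,1\n"),
        },
        conda_env_uri="oci://conda-envs@mynamespace/churn_env_v1.tar.gz",
    )
    print(json.dumps(record, indent=2))
```

For large snapshots, the hash would be computed incrementally over file chunks rather than from bytes held in memory. The record can then be stored with the model, for example in the model catalog's metadata or as a JSON file in the artifact.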

- Version-control code, features, and models

This consideration is related to model reproducibility. You can version models directly in the Data Science model catalog. Integrate Git into a Data Science IDE (for example, JupyterLab) or into a training execution engine like Data Science jobs. You can version features as datasets either in Object Storage, with object versioning enabled on the bucket, or with Git Large File Storage (LFS).

- Package, share and reuse third-party runtime dependencies

Reuse the same conda environments in notebooks, jobs, and model deployments. Doing so also minimizes the risk of third-party dependency mismatches between those steps.

- Be data source agnostic while limiting data transfers

Transferring data to a model training environment is time-consuming. Use block volumes that can be shared across notebook environments or training jobs as much as possible. Keep local dataset snapshots for model training and validation purposes.
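Keeping local snapshots means downloading each dataset once and reusing it. The sketch below caches objects in a directory, which could be a block volume mounted in the notebook session or job. The `fetch` argument stands for whatever download function you use (hypothetical here), and the cache key is a hash of the source URI.

```python
import hashlib
import os


def cached_fetch(uri, cache_dir, fetch):
    """Return a local path for `uri`, calling fetch(uri) -> bytes only on a cache miss."""
    os.makedirs(cache_dir, exist_ok=True)
    name = hashlib.sha256(uri.encode("utf-8")).hexdigest()
    path = os.path.join(cache_dir, name)
    if not os.path.exists(path):
        tmp = path + ".part"
        with open(tmp, "wb") as f:
            f.write(fetch(uri))
        os.replace(tmp, path)  # rename into place so readers never see a partial file
    return path
```

If the source object can change under the same URI, include a version identifier or ETag in the cache key so that a new version triggers a new download.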

Alternative Patterns

- Deploying a Data Science VM from the OCI Marketplace as an alternative to the managed Data Science service

The Data Science VM image is available for both CPU and NVIDIA GPU shape families. The environment provides a comprehensive series of machine learning libraries and IDEs that can be used by data scientists. It does not, however, provide model deployment capabilities. Model deployment can be achieved through OCI Data Science Model Deployment or through Oracle Functions.

- Oracle Machine Learning (OML Notebooks, AutoML UI, Services, OML4Py) for building, training, and deploying machine learning models in the database

This alternative pattern provides powerful capabilities when the data required to train the model is in the database. However, its support for open source machine learning software is limited.

- Deployment of models as REST APIs through the OCI Registry, Oracle Functions, and the API Gateway

This pattern is applicable to customers who are training their models in the Data Science VM image environment or through OCI Data Science. It provides an alternative path to the deployment of machine learning models as REST API endpoints. A Docker image containing the trained model artifact must be built and pushed to the OCI Registry. An Oracle Function can then be created from the Docker image and deployed as a backend of the API Gateway.

- Deployment of OSS Kubeflow ML platform on OKE

This pattern offers a standalone solution and gives the customer the option to run container-based machine learning workloads on Oracle Container Engine for Kubernetes. Kubeflow is an open source project that provides all the key capabilities of a machine learning platform.

Antipattern

We don't recommend a fleet of VMs built with a custom machine learning OSS stack. Machine learning projects are typically built on top of multiple OSS libraries that have complex and often conflicting dependencies and security vulnerabilities that require constant package upgrades. We recommend managed services that take on the burden of building ready-to-use and secure environments.