Implement Oracle Database File System (DBFS) Replication

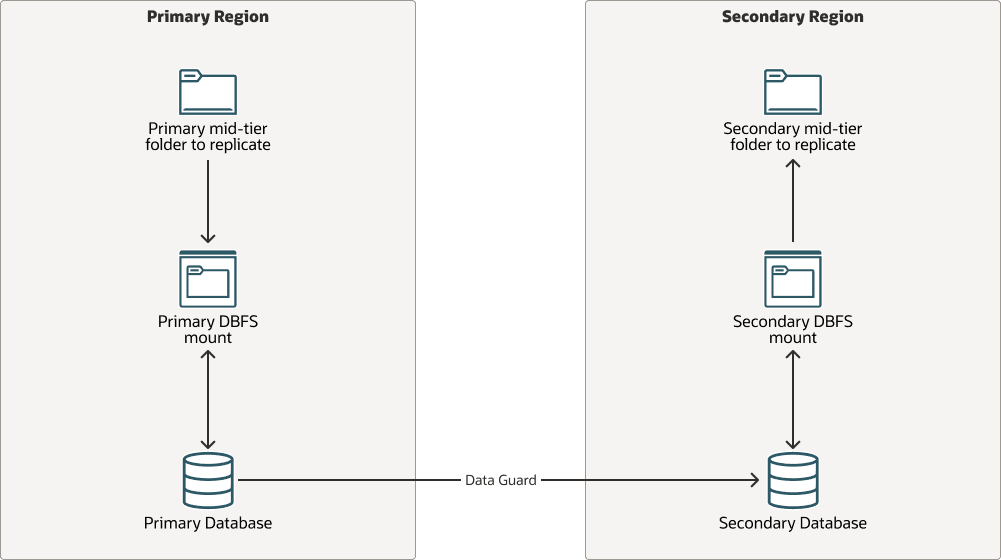

Any replication to standby implies two steps in this model: from the primary's origin folder to the intermediate DBFS mount, and then, in the secondary site, from the DBFS mount to the standby's destination folder. The intermediate copies are done using rsync. As this is a low-latency and local rsync copy, some of the problems that arise in a remote rsync copy operation are avoided with this model.
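The two local copy legs could be sketched as follows. This is only an illustration, not the script that accompanies this document: the function name `rsync_leg` and the paths in the usage note are hypothetical, and the rsync options shown (`-a`, `--delete`) are one reasonable choice rather than a requirement.

```shell
#!/bin/sh
# Sketch: print the local rsync command for one leg of the replication.
# rsync_leg is a hypothetical helper; it only prints the command.
#
# rsync_leg SITE FOLDER DBFS_DIR
#   SITE=primary : origin folder -> DBFS mount
#   SITE=standby : DBFS mount    -> destination folder
rsync_leg() {
    case "$1" in
        primary) printf 'rsync -a --delete %s/ %s/\n' "$2" "$3" ;;
        standby) printf 'rsync -a --delete %s/ %s/\n' "$3" "$2" ;;
        *) echo "unknown site: $1" >&2; return 1 ;;
    esac
}
```

For example, `rsync_leg primary /u01/domains/mydomain /mnt/dbfs/staging/mydomain` prints the command for the primary leg (both paths are placeholders). The trailing slashes make rsync copy the contents of the source directory into the destination directory. Note that `--delete` removes files in the destination that no longer exist in the source, which may or may not be what a given environment needs.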

Note:

This method is not supported with Oracle Autonomous Database, which doesn’t allow DBFS connections.

replica-mid-tier-dbfs-oracle.zip

The advantages of implementing the mid-tier replica with DBFS are:

- This method takes advantage of the robustness of the Oracle Data Guard replica.

- The real mid-tier storage can remain mounted in the secondary nodes. There are no additional steps to attach or mount the storage in the secondary in every switchover or failover operation.

The following are considerations for implementing the mid-tier replica with DBFS:

- This method requires an Oracle Database with Oracle Data Guard.

- The mid-tier hosts need the Oracle Database client to mount the DBFS.

- The use of DBFS for replication has implications from the setup, database storage, and lifecycle perspectives. It requires an installation of the Oracle Database client in the mid-tier hosts, certain database maintenance (to clean, compress, and reduce table storage), and a good understanding of how DBFS mount points behave.

- The DBFS directories can be mounted only if the database is open. Without Active Data Guard, the standby database is in mount state, so to access the DBFS mount in the secondary site you must convert the database to a snapshot standby. With Active Data Guard, the standby database is open read-only, so the file system can be mounted for reads and there is no need to transition to a snapshot standby.

- It is not recommended to use DBFS as a general-purpose solution to replicate all the artifacts (especially runtime files) to the standby. Using DBFS to replicate the binaries is overkill. However, this approach is suitable to replicate a few artifacts, like the configuration, when other methods like storage replication or rsync do not fit the system's needs.

- It is the user’s responsibility to create the custom scripts for each environment and run them periodically.

- It is the user’s responsibility to implement a way to reverse the replica direction.
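The open-mode consideration above can be expressed as a small decision. The sketch below assumes the open mode is read with `SELECT open_mode FROM v$database;` and that the conversion is done through the Data Guard broker command line (dgmgrl); the document does not state how the provided script performs the conversion, so treat both as assumptions. The function names are hypothetical.

```shell
#!/bin/sh
# Sketch only: function names are hypothetical, and using the Data Guard
# broker (dgmgrl) for the conversion is an assumption.

# standby_dbfs_plan OPEN_MODE
# OPEN_MODE is the value returned by: SELECT open_mode FROM v$database;
standby_dbfs_plan() {
    case "$1" in
        "READ ONLY WITH APPLY")
            # Active Data Guard: standby is open, DBFS can be mounted for reads.
            echo "mount-read-only" ;;
        "MOUNTED")
            # Standby is not open: convert to snapshot standby before mounting.
            echo "convert-to-snapshot" ;;
        *)
            echo "unexpected open mode: $1" >&2
            return 1 ;;
    esac
}

# dgmgrl_convert DB_UNIQUE_NAME SNAPSHOT|PHYSICAL
# Prints the broker command that performs the conversion.
dgmgrl_convert() {
    case "$2" in
        SNAPSHOT|PHYSICAL)
            printf 'CONVERT DATABASE %s TO %s STANDBY;\n' "$1" "$2" ;;
        *)
            echo "target must be SNAPSHOT or PHYSICAL" >&2
            return 1 ;;
    esac
}
```

A script on the standby site might call `standby_dbfs_plan` first and only run the printed `CONVERT DATABASE` commands (before and after the copy) when the plan is `convert-to-snapshot`.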

Set Up Replication for Database File System

This implementation uses rsync for the local copies between the mid-tier folders and the DBFS mount. The mid-tier hosts do not copy files directly to their peers in the other site; the contents stored in DBFS are transferred to the standby database through Oracle Data Guard.

The following is required to implement mid-tier replica with DBFS:

- An Oracle Database client installation on the mid-tier hosts that perform the copy, both in the primary and secondary.

- A DBFS file system created in the database.

- A DBFS mount in the mid-tier hosts that perform the copies, both in primary and secondary. This mounts the database’s DBFS file system. This file system can be mounted in more than one host, since DBFS is a shareable file system.

- Scripts that copy the mid-tier file artifacts to the DBFS mount in the primary site.

- Scripts that copy the mid-tier file artifacts from the DBFS mount to the folders in the secondary site. Depending on the implementation, this method may require SQL*Net connectivity between the mid-tier hosts and the remote database for database operations such as role conversions.

- A way to manage the site-specific information, either excluding that info from the copy or updating it with the appropriate info after the replica.

- A schedule that runs these scripts on an ongoing basis.

- A mechanism to change the direction of the replica after a switchover or failover.
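As an illustration of the DBFS mount requirement, the sketch below prints a `dbfs_client` mount command and the matching unmount command. It assumes wallet-based authentication (`-o wallet` with a `/@alias` connect string) and the `direct_io` option; the helper names, the TNS alias, and the mount point are hypothetical, and the script provided with the example may mount the file system differently.

```shell
#!/bin/sh
# Sketch only: prints the commands instead of running them.
# Wallet authentication and the direct_io option are assumptions.

# dbfs_mount_cmd TNS_ALIAS MOUNT_POINT [ro]
# Pass "ro" as the third argument for a read-only mount
# (for example, against an Active Data Guard standby).
dbfs_mount_cmd() {
    if [ "${3:-rw}" = "ro" ]; then
        printf 'dbfs_client -o wallet /@%s -o direct_io -o ro %s\n' "$1" "$2"
    else
        printf 'dbfs_client -o wallet /@%s -o direct_io %s\n' "$1" "$2"
    fi
}

# dbfs_umount_cmd MOUNT_POINT
# DBFS mounts are FUSE mounts, so they are released with fusermount.
dbfs_umount_cmd() {
    printf 'fusermount -u %s\n' "$1"
}
```

For example, `dbfs_mount_cmd MYPDB_ALIAS /mnt/dbfs` prints the read-write mount command for a placeholder alias and mount point.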

Note:

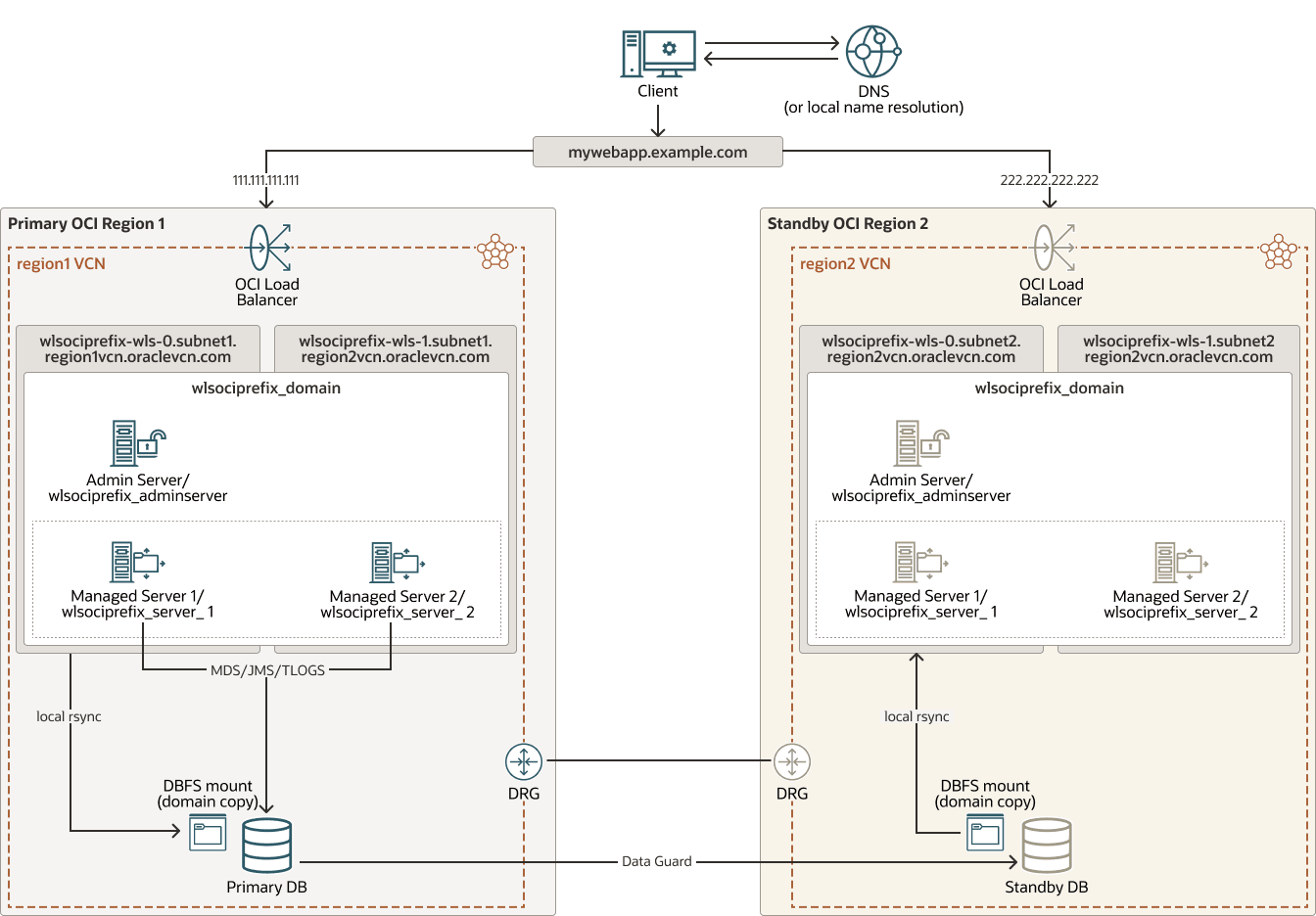

The following example applies to Oracle WebLogic systems. It uses a script that replicates the domain folder of the WebLogic Administration host to the secondary through DBFS, and you can use it as a reference for copying other folders of the mid-tier system. The content located outside the domain folder, as well as content on other hosts, is not included in this example. The domain folder doesn’t reside directly on DBFS; the DBFS mount is only an intermediate staging folder to store a copy of the domain folder.

This example provides a script to perform these actions, which must run periodically in primary and standby sites. This script copies the WebLogic Administration domain folder, skipping some items like tmp, .lck, .state files, and the tnsnames.ora file. The procedure consists of the following:

- When the script runs on the WebLogic Administration host of the primary site, the script copies the WebLogic domain folder to the DBFS folder.

- The files copied into the DBFS, as they are stored in the database, are automatically transferred to the standby database through Oracle Data Guard.

- When the script runs on the WebLogic administration host of the secondary site:

  - The script converts the standby database to a snapshot standby.

  - Then, it mounts the DBFS file system from the standby database.

  - The replicated domain folder is now available in this DBFS folder. The script copies it from the DBFS mount to the real domain folder.

  - Finally, the script converts the standby database to a physical standby again.

- In case of a role change, the script automatically adapts the execution to the new role. It gathers the actual role of the site by checking the database role.
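The role-dependent flow described above can be sketched as a simple dispatch on the database role. This is not the provided script; `replica_steps` is a hypothetical function that only prints the ordered steps, and it assumes the role string comes from `SELECT database_role FROM v$database;`.

```shell
#!/bin/sh
# Sketch only: prints the steps for a given database role.
# replica_steps is a hypothetical name; a real script would run
# the corresponding commands instead of printing descriptions.

# replica_steps DATABASE_ROLE
replica_steps() {
    case "$1" in
        "PRIMARY")
            echo "copy domain folder to DBFS mount" ;;
        "PHYSICAL STANDBY")
            echo "convert standby to snapshot standby"
            echo "mount DBFS from standby database"
            echo "copy DBFS mount to domain folder"
            echo "convert standby back to physical standby" ;;
        *)
            # Any other role is not handled by this sketch.
            echo "unsupported role: $1" >&2
            return 1 ;;
    esac
}
```

Because the same function runs on both sites, the direction of the copy follows the database role, so no change is needed on the mid-tier hosts after a switchover or failover.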

This script only replicates the domain folder of the WebLogic Administration host. The content under the DOMAIN_HOME/config folder is automatically copied over to all other nodes that are part of the WebLogic domain when the managed servers start. The files outside this folder and the files located on other hosts are not replicated and need to be synchronized separately.
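The items that the script skips (tmp, .lck, .state files, and tnsnames.ora) could be expressed as an rsync exclude file. The patterns below are assumptions about how those items might be matched, not the patterns used by the provided script, and `write_exclude_file` is a hypothetical helper.

```shell
#!/bin/sh
# Sketch only: writes an rsync exclude file with assumed patterns.

# write_exclude_file FILE
write_exclude_file() {
    # One pattern per line, as expected by rsync --exclude-from.
    cat > "$1" <<'EOF'
tmp/
*.lck
*.state
tnsnames.ora
EOF
}

# Possible use (paths are placeholders):
#   write_exclude_file /tmp/replica_excludes.txt
#   rsync -a --exclude-from=/tmp/replica_excludes.txt SRC/ DST/
```

Keeping the patterns in a file avoids shell quoting problems with wildcard patterns and makes the list easy to adjust for each environment.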

For application deployment operations, use the Upload your files deployment option in the WebLogic Administration Console. This way, the deployed files are placed under the upload directory of the Administration Server ($DOMAIN_HOME/servers/admin_server_name/upload), and the config replica script will sync them to the standby site.

This example provides another script to install the DB Client and to configure a DBFS mount in the mid-tier hosts. The image is an example of an Oracle WebLogic Server for OCI system with DBFS replication.

wls-dbfs-replication-oracle.zip

Perform the following to use the DBFS method to replicate the WebLogic domain:

Validate Replication for Database File System

In a switchover or failover operation, the replicated information must be available and usable in the standby site before the processes are started. This is also required when you validate the secondary system (by opening the standby database in snapshot mode).

In this implementation, the storage is always available in the standby; you don’t need to attach or mount any volume. The only action you need is to ensure that it contains the latest version of the contents.

Perform the following to use the replicated contents in standby:

Perform Ongoing Replication for Database File System

Run the replication script periodically to keep the secondary domain in sync with the primary.

Follow these recommendations when running rsync from the mid-tier hosts:

- Use the OS crontab or another scheduling tool to schedule replication. Choose an interval that is long enough for each run to complete the replication; otherwise, subsequent jobs may overlap.

- Keep the mid-tier processes stopped in the standby site. If the servers are up in the standby site while the changes are replicated, the changes will take effect the next time they are started. Start them only when validating the standby site or during the switchover or failover procedure.

- Maintain the information that is specific to each site and keep it up-to-date. For example, skip the tnsnames.ora from the copy, so each system has its connectivity details. If you perform a change in the tnsnames.ora in primary (for example, adding a new alias), manually update the tnsnames.ora in secondary accordingly.

- After a switchover or failover, reverse the replica direction. This depends on the specific implementation. The scripts can use a dynamic check to identify who is the active site, or you can perform a manual change after a switchover or failover (for example, disabling and enabling the appropriate scripts). In the example provided, the config_replica.sh script automatically adapts the execution to the actual role of the site by checking the local database role.
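One way to keep scheduled runs from overlapping is to guard each run with a lock. The sketch below uses a lock directory, because `mkdir` either creates it or fails atomically. `run_exclusive`, the lock path, the exit code 75, and the crontab interval are all hypothetical choices for illustration.

```shell
#!/bin/sh
# Sketch only: skip a run when the previous one has not finished.

# run_exclusive LOCKDIR COMMAND [ARGS...]
# The body runs in a subshell so its variables do not leak to the caller.
run_exclusive() (
    lockdir=$1
    shift
    # mkdir fails if the directory already exists, which means a run is active.
    if ! mkdir "$lockdir" 2>/dev/null; then
        echo "previous replication still running, skipping" >&2
        exit 75
    fi
    "$@"
    status=$?
    rmdir "$lockdir"
    exit "$status"
)

# Possible crontab entry (interval and wrapper path are placeholders):
#   */30 * * * * /path/to/replica_wrapper.sh
# where the wrapper calls:
#   run_exclusive /tmp/config_replica.lock /path/to/config_replica.sh
```

If a run is killed before it removes the lock directory, later runs keep skipping until the directory is deleted manually, so a production version would need some form of stale-lock handling.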