Implement rsync with a Central Staging Location

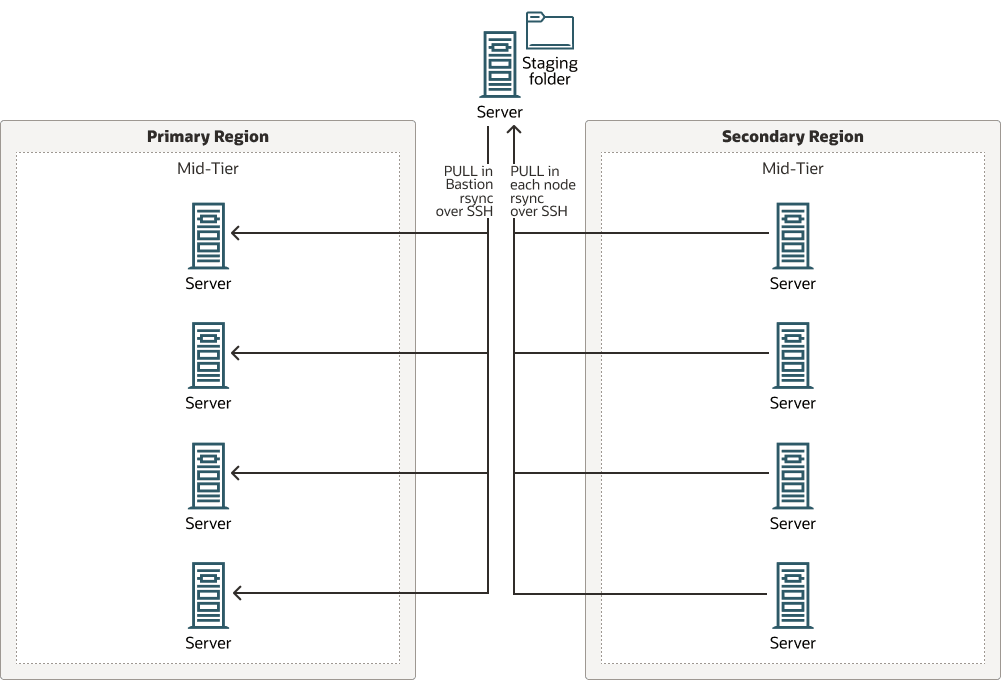

This implementation uses the rsync technology and follows the model based on a central staging location. In this model, there is a bastion host node that acts as a coordinator. It connects to each host that needs to be replicated and copies the contents to a common staging location.

The advantages of implementing rsync with a central staging location are:

- It is a general-purpose solution applicable to any mid-tier, so if you have multiple systems, you can use the same approach in all of them.

- It doesn’t depend on the underlying storage type; it is valid for replicating file artifacts that reside in block volumes, in NFS, and so on.

- The storage can remain mounted in the secondary nodes. Hence, it doesn’t require additional steps to attach or mount the storage in the secondary in every switchover or failover operation.

- Compared to the peer-to-peer implementation, the maintenance is simpler, since there is a central node for running the scripts.

The considerations for implementing rsync with a central staging location are:

- It is the user’s responsibility to create the custom scripts for each environment and run them periodically.

- It is the user’s responsibility to implement a way to reverse the replica direction.

- This model requires an additional host and storage for the central staging location.

Similar to the peer-to-peer model, the rsync scripts can use a pull or a push model. In the “pull” model, the script copies the files from the remote node to the local node. In the “push” model, the script copies the files from the local node to the remote node. Oracle recommends using a pull model to retrieve the contents from primary hosts, because it relieves the primary nodes of the overhead of the copies.
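As a minimal sketch of the difference between the two models, the following Python helper builds the rsync command line for a pull or a push copy. The user, host name, and paths are hypothetical placeholders, and the `-a`, `-z`, and `--delete` options are common choices, not necessarily the ones used by the Oracle-provided scripts.

```python
import shlex


def build_rsync_command(mode, remote_host, remote_path, local_path, user="oracle"):
    """Return the rsync argument list for a 'pull' or 'push' copy.

    The user, host, and paths are illustrative assumptions only.
    """
    # Trailing slashes make rsync copy the folder contents, not the folder itself.
    remote = f"{user}@{remote_host}:{remote_path.rstrip('/')}/"
    local = local_path.rstrip("/") + "/"
    base = ["rsync", "-az", "--delete", "-e", "ssh"]
    if mode == "pull":
        # The remote node is the source; the local node is the destination.
        return base + [remote, local]
    if mode == "push":
        # The local node is the source; the remote node is the destination.
        return base + [local, remote]
    raise ValueError(f"unknown mode: {mode!r}")


if __name__ == "__main__":
    cmd = build_rsync_command("pull", "primary-host1", "/u01/data/domain", "/staging/domain")
    print(shlex.join(cmd))
```

In the pull case the script runs on the node that receives the copy, which is why the primary hosts only have to serve the SSH connection.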

Set Up Replication for rsync with Central Staging

The following is required to implement rsync with a central staging location:

- A bastion host with SSH connectivity to all the hosts (both primary and secondary).

- A staging folder in the bastion host, with enough space to store the mid-tier file system contents that are replicated.

- Scripts that use rsync to copy the mid-tier file artifacts from and to this staging folder. The rsync scripts can skip certain folders from the copy (like lock files, logs, temporary files, and so on).

- A way to manage the site-specific information, either excluding that info from the copy or updating it with the appropriate info after the replica.

- Schedule these scripts to run periodically.

- A mechanism to change the direction of the replica after a switchover or failover. This mechanism can be a dynamic check that identifies the role of the site, or a manual change after a switchover or failover (for example, disabling and enabling the appropriate scripts).

- Example 1: Use the Oracle Fusion Middleware Disaster Recovery Guide scripts

- Example 2: Use the WLS-HYDR framework

Example 1: Use the Oracle Fusion Middleware Disaster Recovery Guide scripts

Note:

This example applies to any mid-tier system. As a reference, it uses the scripts provided by the Oracle Fusion Middleware Disaster Recovery Guide to perform the mid-tier replica for an Oracle WebLogic DR system: rsync_for_WLS.sh and rsync_copy_and_validate.sh. But these scripts are generally applicable and provide enough flexibility to synchronize the mid-tier file system artifacts in OCI.

The Oracle Fusion Middleware Disaster Recovery Guide provides rsync scripts to perform remote copies in a mid-tier system. These scripts are valid for any rsync model. This particular example shows how to use them for the central staging model.

This implementation uses pull operations in two steps:

- A bastion host pulls the contents from all the primary hosts and stores them in the central staging.

- Then, all the secondary nodes perform a pull operation to gather the contents from the central staging.

To set up the mid-tier replication with these scripts, see Replicating the Primary File Systems to the Secondary Site in the Oracle Fusion Middleware Disaster Recovery Guide, and the Rsync Replication Approach section and Using a Staging Location steps in particular.

Example 2: Use the WLS-HYDR framework

Note:

This example applies to an Oracle WebLogic Server system. It uses the replication module of the WLS-HYDR framework, but it applies to any Oracle WebLogic Server DR environment, regardless of whether it was created with the WLS-HYDR framework or not.

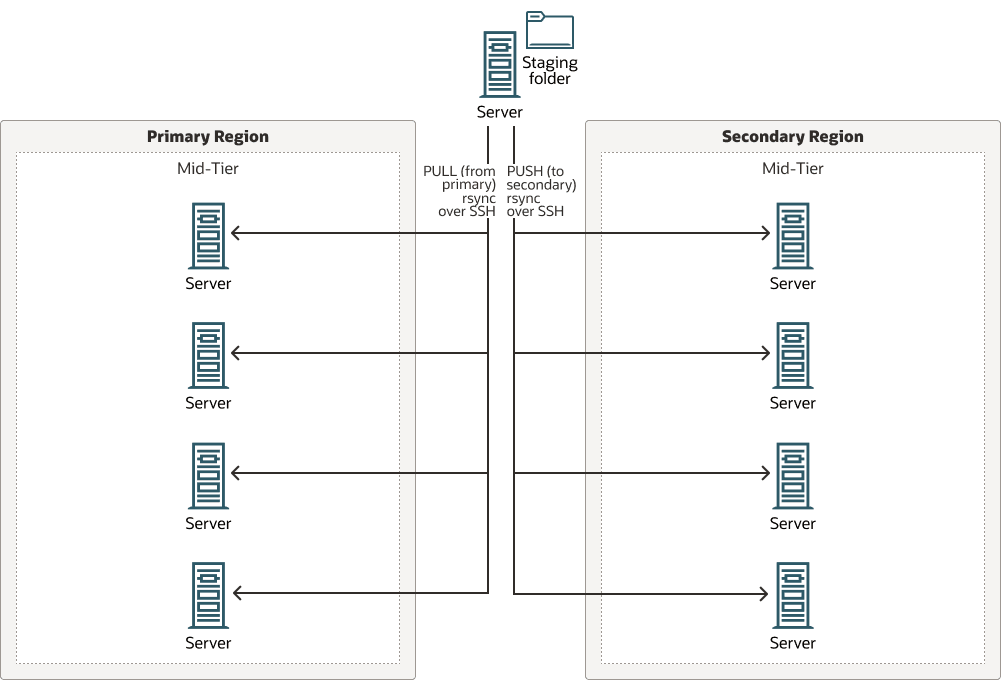

In this model, a central host node acts as the sole coordinator, performing both the pull and the push operations. It connects to each host that needs to be replicated and copies the contents to a common staging location. This node also coordinates the copy from the staging location to the destination hosts. This approach relieves the individual nodes of the overhead of the copies.

The WLS-HYDR framework uses this approach for the initial copy during the DR setup. Then, you can reuse the replication module of the framework to repeat the pull and push periodically. Refer to Explore More in this playbook for links to the WLS-HYDR framework and other resources.

The bastion node performs the replica in two steps:

- A pull operation, where it connects to the primary hosts and copies the file system contents to a staging folder in the bastion host.

- A push operation, where it copies the contents from the bastion’s staging folder to all the secondary hosts.

A central node performs all the operations, so scheduling, logs, maintenance, and so on are centralized on that node. When the system has many nodes, this is more efficient than the peer-to-peer model or the previous example.
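The two-step flow above can be sketched as a small planner that lists the rsync commands a bastion host would run: first every pull from a primary host into the staging folder, then every push from the staging folder to the matching secondary host. This is not the WLS-HYDR replication module. The `HostPair` type, the host names, the per-host staging subfolders, and the exclude patterns are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HostPair:
    """A primary host and the secondary host that receives its contents."""
    primary: str
    secondary: str


def plan_replication(pairs, folder, staging_root, excludes=()):
    """Return the rsync commands for the bastion: all pulls, then all pushes.

    `folder` is an absolute path that is the same on primary and secondary.
    The staging subfolders are assumed to exist already on the bastion.
    """
    options = ["rsync", "-az", "--delete"]
    for pattern in excludes:
        # Skip items such as lock files, logs, and temporary files.
        options += ["--exclude", pattern]
    src_folder = folder.rstrip("/") + "/"
    pulls, pushes = [], []
    for pair in pairs:
        # One staging subfolder per primary host keeps the copies separate.
        staged = f"{staging_root.rstrip('/')}/{pair.primary}{src_folder}"
        pulls.append(options + [f"{pair.primary}:{src_folder}", staged])
        pushes.append(options + [staged, f"{pair.secondary}:{src_folder}"])
    return pulls + pushes


if __name__ == "__main__":
    pairs = [HostPair("prim-wls1", "stby-wls1"), HostPair("prim-wls2", "stby-wls2")]
    for cmd in plan_replication(pairs, "/u01/data/domain", "/staging", ["*.lok", "logs/"]):
        print(" ".join(cmd))
```

Each returned list could be passed to `subprocess.run(cmd, check=True)` on the bastion. Keeping the planning separate from the execution makes it easy to review or log the commands before anything is copied.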

If you used the WLS-HYDR framework for creating the secondary system, the bastion host is already prepared to perform the replica. Otherwise, you can configure it at this point. Follow these steps for setting up the replica:

Validate Replication for rsync with Central Staging

In a switchover or failover operation, the replicated information must be available and usable in the standby site before the processes are started. This is also required when you validate the secondary system (by opening the standby database in snapshot mode).

In this implementation, the storage is always available in the secondary site, so you don’t need to attach or mount any volume. The only action you may need to take is to ensure that it contains the latest version of the contents.

Then you can perform additional steps required to validate the system.

Perform Ongoing Replication for rsync with Central Staging Location

Run the replication scripts periodically to keep the secondary domain in sync with the primary.

Follow these recommendations for the ongoing replication when using this implementation:

- Use the OS crontab or another scheduling tool to run the replication scripts periodically. For example, when using the rsync scripts provided by the disaster recovery guide, follow the steps in the Scheduling Ongoing Replication With Rsync Scripts section of the Oracle Fusion Middleware Disaster Recovery Guide. Refer to Explore More in this playbook for links to these and other resources. For the replication frequency, follow the guidelines described in Mid-tier File Artifacts earlier in this playbook.

- Keep the mid-tier processes stopped in the standby site. If the servers are up in the standby site while the changes are replicated, the changes will take effect the next time they are started. Start them only when you are validating the standby site or during the switchover or failover procedures.

- Keep the information that is specific to each site up to date. For example, if the file system contains a folder with the artifacts to connect to an Autonomous Database, maintain a backup copy of this folder. Ensure that you update the backup of the wallet folder whenever you update the wallet. This way, it will be correctly restored in subsequent switchovers and failovers.

- After a switchover or failover, reverse the replica direction. How you do this depends on the specific implementation. You can use a dynamic check that identifies which site is active, or make a manual change after the switchover or failover by disabling and enabling the appropriate scripts.
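The dynamic check mentioned in the last recommendation can be sketched as follows. How the active site is detected is environment-specific and is not covered here, so the function simply receives the name of the active site. The site names and the function itself are hypothetical and are not part of the Oracle scripts or the WLS-HYDR framework.

```python
def replication_direction(sites, active_site):
    """Return (source, target): copy from the active site to the other site.

    `sites` is a pair of site names; `active_site` is whichever one currently
    holds the primary role (how that is detected is left to the caller).
    """
    if len(sites) != 2 or active_site not in sites:
        raise ValueError(f"active site {active_site!r} is not one of {sites!r}")
    standby = sites[0] if sites[1] == active_site else sites[1]
    return active_site, standby


if __name__ == "__main__":
    # After a switchover to SITE2, the replica runs from SITE2 to SITE1.
    source, target = replication_direction(("SITE1", "SITE2"), "SITE2")
    print(f"replicating from {source} to {target}")
```

A scheduled script could call such a check at startup and exit without copying anything when it finds that its own source site is not the active one.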

Tip:

- When using the rsync scripts provided by the DR Guide (example 1), make sure you create the equivalent scripts to perform the replica in the other direction. In the crontab or the scheduling tool, enable only the scripts that are appropriate for the current role.

- When using the WLS-HYDR framework (example 2), change the role of the primary in the framework, so that the next replications go in the other direction. To do this, edit WLS-HYDR/lib/DataReplication.py and change the following:

      if True:
          PRIMARY = PREM
          STANDBY = OCI
      else:
          PRIMARY = OCI
          STANDBY = PREM

  to the following:

      if False:
          PRIMARY = PREM
          STANDBY = OCI
      else:
          PRIMARY = OCI
          STANDBY = PREM

- When using the