Migrate an On-Premises Database to an Exadata DB System

Simplify your database provisioning, maintenance, and management operations by moving your large on-premises deployment of Oracle Database Enterprise Edition to an Exadata DB System in Oracle Cloud Infrastructure. An Exadata DB system consists of multiple compute nodes and storage servers, tied together by a high-speed, low-latency InfiniBand network and intelligent Exadata software.

Architecture

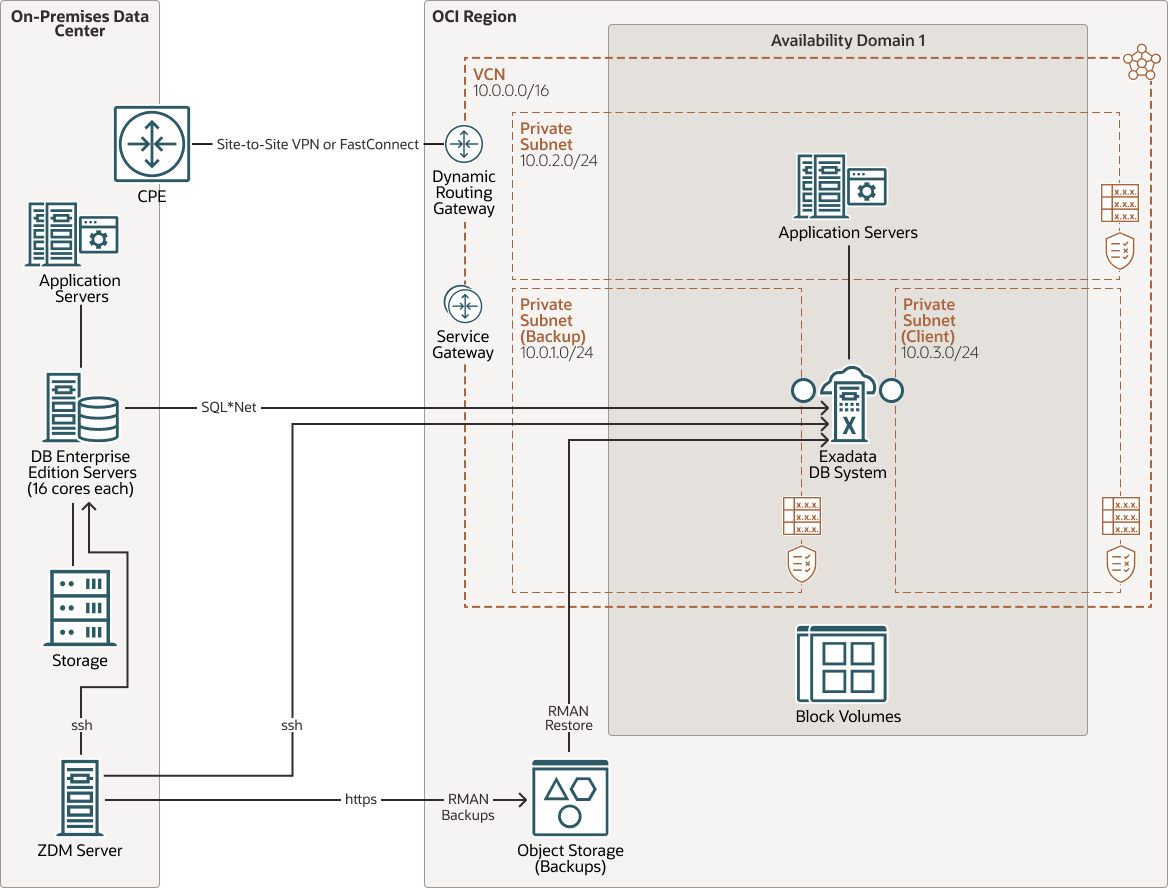

This architecture shows the resources and topology required to migrate multiple on-premises deployments of Oracle Database Enterprise Edition to an Exadata DB System in Oracle Cloud Infrastructure.

The architecture has the following components:

- On-premises deployment

The on-premises deployment has two application servers running on 4-core Intel servers and two Oracle Database Enterprise Edition servers on 16-core Intel servers. The database servers are connected to storage devices. The on-premises network is connected to an Oracle Cloud region by using Oracle Cloud Infrastructure FastConnect or IPSec VPN. The architecture assumes that the on-premises servers are Oracle Linux hosts.

- Region

An Oracle Cloud Infrastructure region is a localized geographic area that contains one or more data centers, called availability domains. Regions are independent of other regions, and vast distances can separate them (across countries or even continents).

- Availability domains

Availability domains are standalone, independent data centers within a region. The physical resources in each availability domain are isolated from the resources in the other availability domains, which provides fault tolerance. Availability domains don’t share infrastructure such as power or cooling, or the internal availability domain network. So, a failure at one availability domain is unlikely to affect the other availability domains in the region.

- Fault domains

A fault domain is a grouping of hardware and infrastructure within an availability domain. Each availability domain has three fault domains with independent power and hardware. When you distribute resources across multiple fault domains, your applications can tolerate physical server failure, system maintenance, and power failures inside a fault domain.

- Virtual cloud network (VCN) and subnets

A VCN is a customizable, software-defined network that you set up in an Oracle Cloud Infrastructure region. Like traditional data center networks, VCNs give you complete control over your network environment. A VCN can have multiple non-overlapping CIDR blocks that you can change after you create the VCN. You can segment a VCN into subnets, which can be scoped to a region or to an availability domain. Each subnet consists of a contiguous range of addresses that don't overlap with the other subnets in the VCN. You can change the size of a subnet after creation. A subnet can be public or private.

In this architecture, the application tier is in a private subnet. The Exadata DB system uses separate private subnets for the backup and client traffic.

- Route tables

Virtual route tables contain rules to route traffic from subnets to destinations outside a VCN, typically through gateways.

This architecture uses a route rule to send traffic from the database subnet to Oracle Cloud Infrastructure Object Storage through the service gateway.

- Security lists

For each subnet, you can create security rules that specify the source, destination, and type of traffic that must be allowed in and out of the subnet.

This architecture uses ingress and egress rules in the security lists attached to the application server and database subnets. These rules enable connectivity between the application and database. Ingress rules are temporarily added in the security lists attached to the application server and database server subnets during migration, for transferring application files, shell scripts, and configuration data.

- Dynamic routing gateway (DRG)

The DRG is a virtual router that provides a path for private network traffic between VCNs in the same region, and between a VCN and a network outside the region, such as a VCN in another Oracle Cloud Infrastructure region, an on-premises network, or a network in another cloud provider.

- Service gateway

The service gateway provides access from a VCN to other services, such as Oracle Cloud Infrastructure Object Storage. The traffic from the VCN to the Oracle service travels over the Oracle network fabric and never traverses the internet.

- Block volume

With block storage volumes, you can create, attach, connect, and move storage volumes, and change volume performance to meet your storage, performance, and application requirements. After you attach and connect a volume to an instance, you can use the volume like a regular hard drive. You can also disconnect a volume and attach it to another instance without losing data.

- Object storage

Object storage provides quick access to large amounts of structured and unstructured data of any content type, including database backups, analytic data, and rich content such as images and videos. You can securely store and then retrieve data directly from the internet or from within the cloud platform. You can scale storage without any degradation in performance or service reliability. Use standard storage for "hot" data that you need to access quickly and frequently. Use archive storage for "cold" data that you retain for long periods of time and rarely access.

- Database system

The on-premises databases are migrated to an Exadata DB system running Oracle Database Enterprise Edition, with 32 cores enabled with Oracle Real Application Clusters (RAC) licenses.

- Application server

The on-premises application servers are migrated to two 4-core compute instances.

Recommendations

Your requirements might differ from the architecture described here. Use the following recommendations as a starting point.

- Compute shapes

This architecture uses Oracle Linux compute instances with the VM.Standard2.4 shape for the application servers. If the application needs more processing power, memory, or network bandwidth, then choose a larger shape.

- Block volumes

This architecture uses 100-GB block volumes for the application servers. You can use the volumes for installing the application, or to store application logs and data.

- DB system shapes

This architecture uses the Exadata.Quarter2.92 (Quarter Rack) shape for the DB system, with 32 cores enabled. If you need more processing power, you can enable additional cores in multiples of two, up to a total of 92 enabled cores.
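The scaling rule above can be expressed as a small check. This is a minimal sketch, not an Oracle API: the function name `can_scale_to` and the constants are hypothetical, and the only rules it encodes are the ones stated here (cores are added in multiples of two, up to 92 enabled cores).

```python
MAX_CORES = 92  # upper limit stated above for the Exadata.Quarter2.92 shape
STEP = 2        # additional cores are enabled in multiples of two


def can_scale_to(current_cores: int, requested_cores: int) -> bool:
    """Return True if scaling up from current_cores to requested_cores
    adds cores in multiples of two and stays within the shape limit."""
    added = requested_cores - current_cores
    return added > 0 and added % STEP == 0 and requested_cores <= MAX_CORES


print(can_scale_to(32, 48))  # 16 more cores, within the limit
print(can_scale_to(32, 33))  # odd increment
print(can_scale_to(32, 94))  # exceeds the 92-core limit
```

Starting from the 32 cores used in this architecture, the first call is accepted and the other two are rejected.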

- VCN

When you create a VCN, determine the number of CIDR blocks required and the size of each block based on the number of resources that you plan to attach to subnets in the VCN. Use CIDR blocks that are within the standard private IP address space.

Select an address range that doesn’t overlap with your on-premises network, so that you can set up a connection between the VCN and your on-premises network using IPSec VPN or FastConnect.

After you create a VCN, you can change, add, and remove its CIDR blocks.

When you design the subnets, consider your traffic flow and security requirements. Attach all the resources within a specific tier or role to the same subnet, which can serve as a security boundary.

Use regional subnets.
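The address-planning advice above can be checked before you create anything. The following sketch uses Python's standard `ipaddress` module. The CIDR values are illustrative assumptions, not values prescribed by this architecture, and the three /24 subnets simply mirror the application, client, and backup subnets described earlier.

```python
import ipaddress
from itertools import islice

# Standard private IP address ranges (RFC 1918).
RFC1918 = [ipaddress.ip_network(c)
           for c in ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16")]


def is_usable_vcn_cidr(vcn, on_prem) -> bool:
    """True if the VCN block is private and does not overlap the on-premises range."""
    in_private_space = any(vcn.subnet_of(r) for r in RFC1918)
    return in_private_space and not vcn.overlaps(on_prem)


vcn = ipaddress.ip_network("10.0.0.0/16")         # assumed VCN CIDR block
on_prem = ipaddress.ip_network("192.168.0.0/16")  # assumed on-premises range

print(is_usable_vcn_cidr(vcn, on_prem))

# Carve the first three /24 subnets out of the VCN block.
app_subnet, client_subnet, backup_subnet = islice(vcn.subnets(new_prefix=24), 3)
print(app_subnet, client_subnet, backup_subnet)
```

Because the subnets are generated from one block, they are contiguous and cannot overlap each other.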

- Database migration method

In this reference architecture, Oracle Zero Downtime Migration (ZDM) is used to migrate the on-premises Oracle Database Enterprise Edition deployments to Oracle Cloud Infrastructure with zero to minimal downtime. This method greatly reduces the impact of the database migration on application availability, especially if the backup and copy operations use connections with limited bandwidth.

Here's an overview of the migration process:

- You download the ZDM software, install it on a standalone Linux 7 (or higher) server to coordinate migrations, and start the database migration process by using the `zdmcli migrate database` command.

- ZDM connects to the source and target database servers by using the provided SSH keys. It then establishes connectivity between the source database and a bucket in Oracle Cloud Infrastructure Object Storage.

- ZDM orchestrates the transfer of the database backup files from the source database to the object storage bucket, starts a Data Guard standby database in the cloud by using the backup files, and synchronizes the source and standby databases. ZDM has special features to work over low-bandwidth connections and resume data transmission after network interruptions.

- This reference architecture focuses on the database migration part of moving your on-premises application stack to Oracle Cloud Infrastructure. Your applications might use middleware and presentation layer servers that typically depend on low-latency connectivity to the databases. So, before switching over to the databases provisioned in Oracle Cloud Infrastructure, migrate the application servers.

- When you are ready to switch over to the cloud, use ZDM to perform a Data Guard switchover, and transition the role of the databases. The on-premises database becomes the standby, and the Exadata DB System becomes the primary database.

- As the final step in the migration process, ZDM terminates the Data Guard connectivity between the source and target databases, and performs cleanup operations.

- Run the migration process twice to migrate both on-premises databases to the Exadata DB System.

Note:

To minimize the time required to migrate large databases, use Oracle Cloud Infrastructure FastConnect.
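To see why link bandwidth matters for large databases, a rough transfer-time estimate helps. The sketch below is simple arithmetic under stated assumptions: a hypothetical 10 TB backup set (decimal terabytes), three illustrative link speeds, and an assumed 80% usable share of the nominal bandwidth. None of these figures come from this architecture, and the estimate ignores compression and ZDM's own overhead.

```python
def transfer_hours(size_tb: float, link_gbps: float, efficiency: float = 0.8) -> float:
    """Estimate hours needed to copy size_tb terabytes over a link_gbps link.

    efficiency is the assumed fraction of nominal bandwidth that is usable.
    """
    bits_to_send = size_tb * 1e12 * 8          # terabytes -> bits
    usable_bits_per_second = link_gbps * 1e9 * efficiency
    return bits_to_send / usable_bits_per_second / 3600


# Compare a slow VPN-class link with two faster dedicated links (assumed speeds).
for gbps in (0.1, 1, 10):
    print(f"{gbps:>5} Gbps: {transfer_hours(10, gbps):7.1f} hours")
```

Under these assumptions, the same backup set that needs more than eleven days at 0.1 Gbps takes under three hours at 10 Gbps.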

Considerations

- Scalability

- Application tier

You can scale the application servers vertically by changing the shape of the compute instances. A shape with a higher core count provides more memory and network bandwidth as well. If more storage is required, increase the size of the block volumes attached to the application server.

- Database tier

You can scale the database vertically by enabling additional cores for the Exadata DB system. You can add OCPUs in multiples of two for Exadata Quarter Rack. The databases continue to be available during the scaling. If you outgrow the available CPUs and storage, you can migrate to a larger Exadata rack.

- Availability

Fault domains provide the best resilience for workloads deployed within a single availability domain. This architecture doesn’t show redundant resources, because the focus is on the migration approach. For high availability in the application tier, deploy the application servers in different fault domains, and use a load balancer to distribute client traffic across the application servers.

The Exadata DB System provides high availability for the database tier.
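For the application tier, spreading servers across fault domains can be planned with a simple round-robin assignment. This is an illustrative sketch only: the instance names are hypothetical, the helper `spread_across_fault_domains` is not part of any Oracle SDK, and the result is a placement plan that you would still apply when launching each instance.

```python
from itertools import cycle

# Each availability domain has three fault domains.
FAULT_DOMAINS = ["FAULT-DOMAIN-1", "FAULT-DOMAIN-2", "FAULT-DOMAIN-3"]


def spread_across_fault_domains(instance_names):
    """Assign instances to fault domains in round-robin order."""
    return dict(zip(instance_names, cycle(FAULT_DOMAINS)))


placement = spread_across_fault_domains(["app-server-1", "app-server-2"])
for name, fault_domain in placement.items():
    print(f"{name} -> {fault_domain}")
```

With two application servers, each lands in a different fault domain, so a hardware failure or maintenance event in one fault domain leaves the other server available behind the load balancer.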

- Cost

- Application tier

Select the compute shape based on the cores, memory, and network bandwidth that your application needs. You can start with a 4-core shape for the application server. If you need more performance, memory, or network bandwidth, you can change to a larger shape.

- Database tier

Every OCPU that you enable on the Exadata rack needs licensing for Oracle Database Enterprise Edition, along with the database options and management packs that you plan to use.

Deploy

To deploy this reference architecture, create the required resources in Oracle Cloud Infrastructure, and then migrate the on-premises database by using Oracle Zero Downtime Migration.