Analyze Data from External Object Storage Sources Using Oracle Cloud Infrastructure Data Flow

Your data resides in different clouds, such as Amazon Web Services S3 or Azure Blob Storage, but you want to analyze it from a common analysis platform. Oracle Cloud Infrastructure Data Flow is a fully managed Spark service that lets you develop and run big data analytics, regardless of where your data resides, without having to deploy or manage a big data cluster.

Architecture

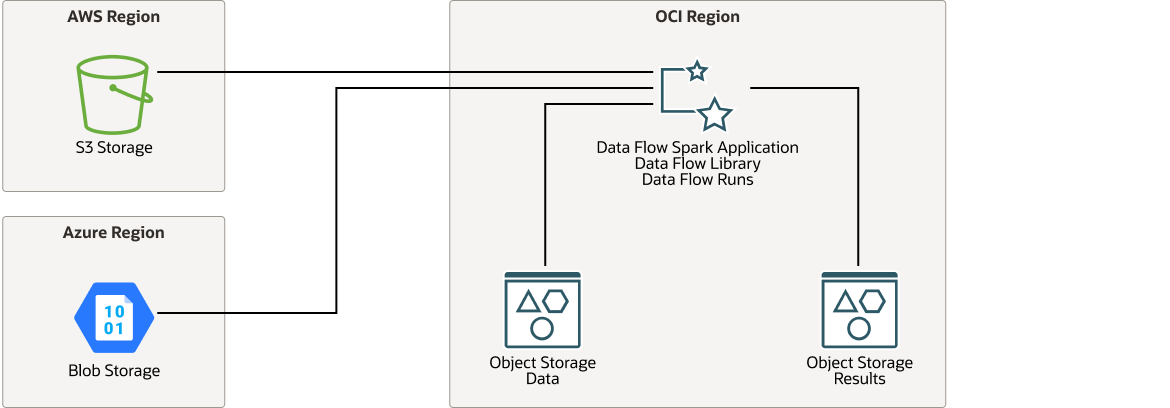

This architecture shows Oracle Cloud Infrastructure Data Flow connecting to Amazon Web Services (AWS) S3 buckets or Azure Blob Storage containers, analyzing the data, and saving the results in Oracle Cloud Infrastructure Object Storage.

To connect to AWS, the Data Flow application requires an AWS access key and a secret key. To connect to Azure, Data Flow requires the Azure account name and account key.

The preceding diagram illustrates this reference architecture.


The architecture has the following components:

- Region

An Oracle Cloud Infrastructure region is a localized geographic area that contains one or more data centers, called availability domains. Regions are independent of other regions, and vast distances can separate them (across countries or even continents).

- Oracle Cloud Infrastructure Data Flow

Oracle Cloud Infrastructure Data Flow is a cloud-based, serverless platform that allows you to create, edit, and run Spark jobs at any scale without the need for clusters, an operations team, or highly specialized Spark knowledge. During runtime, Data Flow obtains the application source, creates the connection, retrieves the data, processes it, and writes the output to Oracle Cloud Infrastructure Object Storage.

- Object storage

Object storage provides quick access to large amounts of structured and unstructured data of any content type, including database backups, analytic data, and rich content such as images and videos. You can securely store and then retrieve data directly from the internet or from within the cloud platform. You can scale storage without any degradation in performance or service reliability. Use standard storage for "hot" data that you need to access quickly and frequently. Use archive storage for "cold" data that you retain for long periods of time and rarely access.

Recommendations

Use the following recommendations as a starting point to analyze data from external object storage sources using Oracle Cloud Infrastructure Data Flow.

Your requirements might differ from the architecture described here.

- Data Location

This architecture is intended for users to quickly and easily test Spark applications using Data Flow. After successful feasibility testing, we recommend transferring the source data to Oracle Cloud Infrastructure Object Storage to improve performance and reduce cost.

- Object Storage

This architecture uses standard Oracle Cloud Infrastructure Object Storage to store processed output so that other cloud services can access the output for further analysis and display.

Considerations

When analyzing data from external object storage sources using Oracle Cloud Infrastructure Data Flow, consider these deployment options.

- Spark application

If you have an existing Spark application executing against the data in Amazon Web Services S3 or Azure Blob Storage, you can use the same Spark application in Oracle Cloud Infrastructure Data Flow.

- Performance

Reading data across data centers is inherently slower than reading data stored close to the compute resources. This architecture is suitable for a proof-of-concept or for applications that are CPU-intensive, such as machine learning jobs, where compute time outweighs data transfer time. If your proof-of-concept is successful, transfer the source data into Oracle Cloud Infrastructure Object Storage before running large production jobs.

- Security

Use policies to restrict who can access Oracle Cloud Infrastructure resources and to what degree.

Use Oracle Cloud Infrastructure Identity and Access Management (IAM) to assign privileges to specific users and user groups for both Data Flow and run management within Data Flow.

Encryption is enabled for Oracle Cloud Infrastructure Object Storage by default and can’t be turned off.

- Cost

Oracle Cloud Infrastructure Data Flow is pay-per-use, so you pay only when you run the Data Flow application, not when you create it.

Processing a large volume of Amazon Web Services S3 data may result in high data egress costs.
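The performance and cost considerations above can be made concrete with simple arithmetic. The following is a minimal sketch, assuming that egress is billed per gigabyte read out of the source cloud and that every run re-reads the full data set. The class name, the method names, and every number in main are hypothetical placeholders, not actual prices or measurements.

```java
public final class EgressEstimate {

    private EgressEstimate() {}

    /** Egress charge for reading the given volume once out of the source cloud. */
    public static double egressCost(double gigabytes, double pricePerGigabyte) {
        return gigabytes * pricePerGigabyte;
    }

    /** Total egress charge when every run reads the full data set remotely. */
    public static double repeatedReadCost(double gigabytes, double pricePerGigabyte, int runs) {
        return runs * egressCost(gigabytes, pricePerGigabyte);
    }

    /** Seconds needed to move the data at a sustained throughput in megabits per second. */
    public static double transferSeconds(double gigabytes, double megabitsPerSecond) {
        double megabits = gigabytes * 8_000.0; // 1 GB = 8,000 megabits (decimal units)
        return megabits / megabitsPerSecond;
    }

    public static void main(String[] args) {
        double gigabytes = 500.0;          // hypothetical data set size
        double pricePerGigabyte = 0.09;    // hypothetical price; check your provider's rates
        double megabitsPerSecond = 1_000.0; // hypothetical sustained throughput
        int runs = 20;                     // hypothetical number of job runs

        System.out.printf("One-time copy:        %.2f%n",
                egressCost(gigabytes, pricePerGigabyte));
        System.out.printf("Remote read, %d runs: %.2f%n",
                runs, repeatedReadCost(gigabytes, pricePerGigabyte, runs));
        System.out.printf("Transfer time (s):    %.0f%n",
                transferSeconds(gigabytes, megabitsPerSecond));
    }
}
```

Under these assumptions, the remote-read cost grows linearly with the number of runs, while a one-time copy into Oracle Cloud Infrastructure Object Storage incurs the egress charge once. The sketch ignores storage charges on either side.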

Deploy

The Terraform code for this reference architecture is available as a sample stack in Oracle Cloud Infrastructure Resource Manager. This sample Terraform stack deploys an OCI Data Flow application environment, together with IAM policies and OCI Object Storage buckets (not third-party storage). By default, a demo Python Spark application will also be deployed. You can also download the code from GitHub, and customize it to suit your specific requirements.

- Deploy using the sample stack in Oracle Cloud Infrastructure Resource Manager:

  - Open the sample stack in Oracle Cloud Infrastructure Resource Manager.

    If you aren't already signed in, enter the tenancy and user credentials.

  - Select the region where you want to deploy the stack.

  - Follow the on-screen prompts and instructions to create the stack.

  - After creating the stack, click Terraform Actions, and select Plan.

  - Wait for the job to be completed, and review the plan.

    To make any changes, return to the Stack Details page, click Edit Stack, and make the required changes. Then, run the Plan action again.

  - If no further changes are necessary, return to the Stack Details page, click Terraform Actions, and select Apply.

- Deploy using the Terraform code in GitHub:

  - Go to GitHub.

  - Clone or download the repository to your local computer.

  - Follow the instructions in the README document.

In addition to the Terraform code provided on GitHub, the code snippets below illustrate how to connect to Amazon Web Services S3 and how to query the data.

- To connect to and query data from S3, you need to include the hadoop-aws.jar and aws-java-sdk.jar packages. You can reference these packages in the pom.xml file as follows:

  ```xml
  <!-- S3A connector; hadoop-common is excluded from its transitive dependencies -->
  <dependency>
    <groupId>org.apache.hadoop</groupId>
    <artifactId>hadoop-aws</artifactId>
    <version>2.9.2</version>
    <exclusions>
      <exclusion>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-common</artifactId>
      </exclusion>
    </exclusions>
  </dependency>
  <!-- AWS SDK used by the S3A connector -->
  <dependency>
    <groupId>com.amazonaws</groupId>
    <artifactId>aws-java-sdk</artifactId>
    <version>1.7.4</version>
  </dependency>
  ```

- Use code similar to the following to connect to Amazon Web Services S3. You must provide your access key and your secret key. In the snippet below, these values are represented by the variables ACCESS and SECRET respectively:

  ```java
  import org.apache.spark.sql.SparkSession;

  // ACCESS and SECRET hold your AWS access key and secret key.
  SparkSession spark = SparkSession.builder()
          .master("local") // runs Spark in-process, for local testing
          .config("spark.hadoop.fs.s3a.impl", "org.apache.hadoop.fs.s3a.S3AFileSystem")
          .config("spark.hadoop.fs.s3a.access.key", ACCESS)
          .config("spark.hadoop.fs.s3a.secret.key", SECRET)
          // Spark copies only spark.hadoop.*-prefixed keys into the Hadoop
          // configuration, so this unprefixed key likely has no effect on S3A.
          .config("fs.s3a.connection.ssl.enabled", "false")
          .getOrCreate();
  ```

- Use code similar to the following to query the data using the S3 location and the table name you specify.

  ```java
  import org.apache.spark.sql.Dataset;
  import org.apache.spark.sql.Row;

  // Read the CSV data from S3, treating the first line as the header.
  Dataset<Row> ds = spark.read()
          .format("csv")
          .option("header", "true")
          .load("<S3 Location>");

  // Register the data as a temporary view so that it can be queried with SQL.
  ds.createOrReplaceTempView("<Table Name>");
  Dataset<Row> result_ds = spark.sql("<SQL Query Using <Table Name>>");
  ```
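The snippets above cover only Amazon Web Services S3, while the architecture also names Azure Blob Storage (account name and account key) as a source and Oracle Cloud Infrastructure Object Storage as the destination. The following is a minimal sketch of the corresponding settings and storage URIs. It assumes the hadoop-azure (WASB) connector is available for Azure and that Object Storage paths use the oci:// scheme. The class ExternalStorageConfig and its method names are hypothetical helpers for illustration, not part of any Oracle, Hadoop, or Spark API.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical helper that collects connector settings and builds storage URIs. */
public final class ExternalStorageConfig {

    private ExternalStorageConfig() {}

    /** Spark settings for the S3A connector, using the same keys as the S3 snippet. */
    public static Map<String, String> s3aSettings(String accessKey, String secretKey) {
        Map<String, String> conf = new LinkedHashMap<>();
        conf.put("spark.hadoop.fs.s3a.impl", "org.apache.hadoop.fs.s3a.S3AFileSystem");
        conf.put("spark.hadoop.fs.s3a.access.key", accessKey);
        conf.put("spark.hadoop.fs.s3a.secret.key", secretKey);
        return conf;
    }

    /** Spark settings for the WASB connector; the key name is scoped to one storage account. */
    public static Map<String, String> azureSettings(String accountName, String accountKey) {
        Map<String, String> conf = new LinkedHashMap<>();
        conf.put("spark.hadoop.fs.azure.account.key." + accountName + ".blob.core.windows.net",
                accountKey);
        return conf;
    }

    /** URI of an object in an S3 bucket, as read through the S3A connector. */
    public static String s3aUri(String bucket, String path) {
        return "s3a://" + bucket + "/" + path;
    }

    /** URI of a blob in an Azure Blob Storage container, read over TLS. */
    public static String wasbsUri(String container, String accountName, String path) {
        return "wasbs://" + container + "@" + accountName + ".blob.core.windows.net/" + path;
    }

    /** URI of an object in an Oracle Cloud Infrastructure Object Storage bucket. */
    public static String ociUri(String bucket, String namespace, String path) {
        return "oci://" + bucket + "@" + namespace + "/" + path;
    }
}
```

Each entry returned by azureSettings can be passed to SparkSession.builder().config(key, value) in the same way as the S3 keys, and the string returned by wasbsUri can be passed to load(...) in place of the S3 location.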

Explore More

Learn more about the features of this architecture.

- To view product information, see Oracle Cloud Infrastructure Data Flow.

- To give Data Flow a try, use the tutorial Getting Started with Oracle Cloud Infrastructure Data Flow.

- To learn more about Python and Oracle Cloud Infrastructure Data Flow, use the Oracle LiveLabs workshop Sample Python Application with OCI Data Flow.

- To learn more about the SparkSession and Dataset classes shown in the code snippets, see the Spark Java API documentation.

- For information about the other APIs supported by Apache Spark, see Spark API Documentation.

For general Oracle Cloud Infrastructure architectural guidelines, see Best practices framework for Oracle Cloud Infrastructure.