Implement Oracle Modern Data Platform for Business Reporting and Forecasting

This section describes how to provision OCI services, implement the use case, visualize data, and create forecasts.

Provision OCI Services

Let's go through the main steps for provisioning resources for a simple demo of this use case.

Implement the Oracle Modern Data Platform Use Case

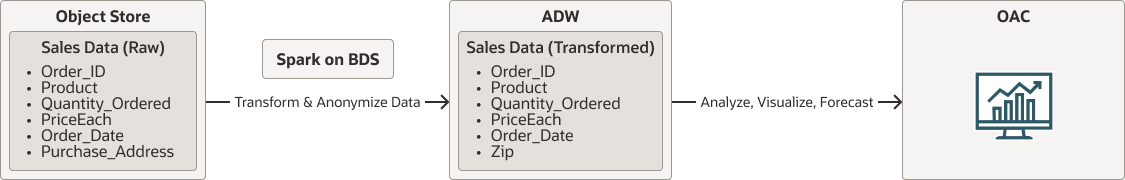

Before going into the implementation details, let us look at the overall flow.

- Raw data will reside in OCI Object Storage.

- Spark running natively on Oracle Big Data Service will read the data from OCI Object Storage. It will cleanse, transform, and anonymize the data, then persist it into Autonomous Data Warehouse.

- Users will utilize Oracle Analytics Cloud to visualize data and make forecasts.
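The flow above might be sketched as a single Spark Scala job along the following lines. This is a minimal sketch under stated assumptions, not the job used in this solution. The bucket, namespace, Autonomous Data Warehouse connect string, wallet path, table name, column names (`order_id`, `customer_email`), and the `ADW_USER` and `ADW_PASSWORD` environment variables are all hypothetical placeholders. It also assumes that the OCI HDFS connector is configured on the cluster so that `oci://` paths resolve, and that the Oracle JDBC driver is on the Spark classpath.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}
import org.apache.spark.sql.functions.{col, sha2, trim}

object ReportingFlowSketch {

  // Hypothetical placeholders: substitute your own bucket, namespace,
  // ADW service name, wallet directory, and target table.
  val inputPath   = "oci://raw-data@mynamespace/sales/*.csv"
  val jdbcUrl     = "jdbc:oracle:thin:@myadw_high?TNS_ADMIN=/opt/adw_wallet"
  val targetTable = "SALES_CLEAN"

  // Cleanse and anonymize: drop rows without a key, remove duplicate keys,
  // and replace the (assumed) email column with its SHA-256 digest.
  def cleanseAndAnonymize(raw: DataFrame): DataFrame =
    raw
      .na.drop(Seq("order_id"))
      .dropDuplicates(Seq("order_id"))
      .withColumn("customer_email", sha2(trim(col("customer_email")), 256))

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .appName("reporting-flow-sketch")
      .getOrCreate()

    // Read the raw CSV files from OCI Object Storage.
    val raw = spark.read
      .option("header", "true")
      .option("inferSchema", "true")
      .csv(inputPath)

    // Persist the transformed rows into Autonomous Data Warehouse over JDBC.
    cleanseAndAnonymize(raw)
      .write
      .format("jdbc")
      .option("url", jdbcUrl)
      .option("dbtable", targetTable)
      .option("user", sys.env("ADW_USER"))         // assumed environment variable
      .option("password", sys.env("ADW_PASSWORD")) // assumed environment variable
      .option("driver", "oracle.jdbc.OracleDriver")
      .mode("append")
      .save()

    spark.stop()
  }
}
```

Hashing a column with SHA-256 is only one simple way to mask identifying values. It is closer to pseudonymization than to full anonymization, so a real use case may call for a stronger technique.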


Note:

The chosen data flow is a simplified example; actual business use cases can be expected to be more involved.

The following section describes how to upload sample data, configure OCI Object Storage and Oracle Big Data Service, and invoke a simple Spark Scala job that transforms the data and moves it to Autonomous Data Warehouse.