Learn About Deploying a VMware vSAN Stretched Cluster across OCI Regions with Oracle Cloud VMware Solution

Oracle Cloud Infrastructure (OCI) offers high availability and fault tolerance across its regions that contain multiple Availability Domains. These regions inherently provide data center-level fault isolation, with each Availability Domain subdivided into multiple Fault Domains to protect against rack-level outages. This built-in architecture addresses the resilience requirements of most enterprise workloads.

For VMware workloads, Oracle Cloud VMware Solution supports multiple Availability Domain deployments in regions with three Availability Domains. In this case, you can natively deploy VMware vSAN stretched clusters within a single region, leveraging VMware HA and VMware vSAN without the need for complex cross-site configurations.

However, in OCI Public Regions with only a single Availability Domain, or in Oracle Cloud Infrastructure Dedicated Regions (OCI Dedicated Regions, formerly known as Oracle Dedicated Region Cloud@Customer), multiple Availability Domain configurations are not available. For customers in these environments who require region-level protection against full site outages, a different approach is necessary. This solution playbook presents a validated, customer-managed architecture for deploying VMware vSAN stretched clusters across multiple OCI regions, a solution enabled by the full-stack control offered by Oracle Cloud VMware Solution.

Note:

This deployment model has been successfully tested in OCI Dedicated Regions. If the necessary latency, host shape, and network connectivity requirements are met, it can also be replicated in OCI Public Regions.

While OCI does not offer a native or automated method for deploying a cross-region VMware vSAN stretched cluster, Oracle Cloud VMware Solution makes it possible through its unique flexibility. Customers retain full administrative control over VMware vCenter, VMware NSX, and VMware ESXi hosts, enabling them to design and implement advanced configurations that would otherwise be difficult or impossible in more restricted managed cloud VMware offerings.

This solution playbook provides architectural guidance and detailed steps to build this powerful configuration using Oracle Cloud VMware Solution.

Understand the Core Concepts

What is a VMware vSAN stretched cluster?

A vSAN stretched cluster is a VMware configuration that extends a single logical VMware vSAN datastore across two physically separate locations. Both locations are treated as active-active sites, and the configuration ensures continuous availability if one site becomes unavailable. Virtual machines (VMs) can fail over between sites automatically through VMware's native vSphere HA feature, and vSAN keeps storage available as long as one site and the witness node remain operational.
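The availability rule above can be illustrated with a small sketch. It assumes a simplified majority model in which the two data sites and the witness each count as one fault domain and storage stays available while at least two of the three are reachable. The function name `storage_available` is hypothetical, and the model ignores storage policies and per-object component placement.

```python
from itertools import product


def storage_available(site_a_up: bool, site_b_up: bool, witness_up: bool) -> bool:
    """Simplified majority rule (an assumption for illustration):
    at least two of the three fault domains must be reachable."""
    return sum((site_a_up, site_b_up, witness_up)) >= 2


# Enumerate every combination of fault-domain states and report the outcome.
for site_a, site_b, witness in product((True, False), repeat=3):
    status = "available" if storage_available(site_a, site_b, witness) else "unavailable"
    print(f"site A={site_a!s:5} site B={site_b!s:5} witness={witness!s:5} -> {status}")
```

Under this model, losing one data site is tolerated only while the witness is still reachable, which is why the witness is placed in a third location.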

In the context of OCI, this architecture maps well to OCI Dedicated Regions, which are generally geographically close enough to meet the stringent low-latency requirements of VMware vSAN stretched deployments.

For more background, refer to Broadcom’s official documentation: Introduction to vSAN Stretched Clusters.

Extending vSAN stretched clusters to OCI and Oracle Cloud VMware Solution

While VMware vSAN stretched clusters typically span two physically separate sites, within OCI, Oracle Cloud VMware Solution can deploy a VMware Software-Defined Data Center (SDDC) either in a single Availability Domain by default or across multiple Availability Domains within the same region when configured accordingly. This deployment model aligns with the regional scope of the underlying Virtual Cloud Network (VCN), which operates within, but not across, OCI regions.

To achieve region-level resiliency and protect against regional outages, customers using OCI Dedicated Regions can deploy two separate Oracle Cloud VMware Solution SDDCs in distinct OCI Dedicated Regions. These SDDCs are interconnected via OCI’s private backbone network, enabling secure, low-latency communication. The required VMware vSAN Witness node is deployed in a third, geographically nearby region (like an OCI Public Region) to complete the stretched cluster configuration.

This design enables active-active site availability within the VMware environment and ensures continuous operations even in the event of a regional failure. It aligns with both VMware’s and Oracle’s best practices for high availability and disaster recovery.

Architecture

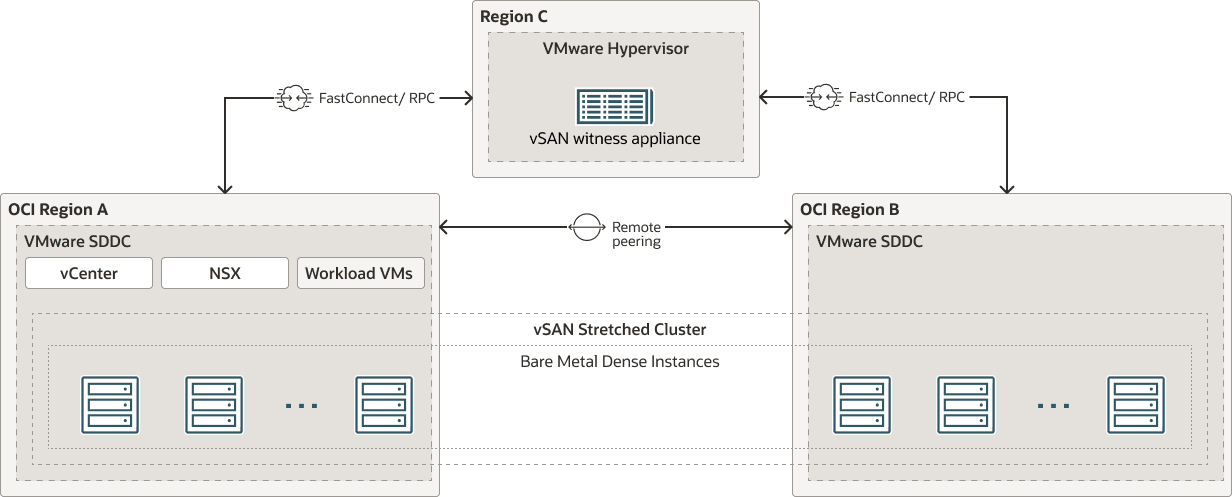

This architecture shows how you can deploy custom VMware vSAN stretched clusters across multiple OCI regions.

The high-level topology consists of:

- Primary Site: Oracle Cloud VMware Solution SDDC deployed in OCI Dedicated Region A.

- Secondary Site: Oracle Cloud VMware Solution SDDC deployed in OCI Dedicated Region B.

- Witness Site: A regionally separate location for deploying the VMware vSAN Witness Appliance.

Communication across these sites is established through OCI’s private backbone and OCI FastConnect, both of which are mandatory to meet the low-latency and high-bandwidth requirements of a stable VMware vSAN stretched cluster.

Note:

IPSec VPN is not supported for this configuration.

The following diagram illustrates this architecture.

ocvs-vsan-stretched-cluster-oracle.zip

The following sections outline the key technical considerations that influence a successful deployment of a VMware vSAN stretched cluster in Oracle Cloud VMware Solution across OCI Dedicated Regions.

Networking Considerations

A key enabler of this architecture is the robust OCI backbone network that interconnects OCI Dedicated Regions within a customer tenancy. This backbone ensures the high-speed, low-latency communication necessary for VMware vSAN replication traffic and heartbeat signaling between sites.

Key factors to plan for:

- Establish Remote Peering Connections (RPCs) between the VCNs in OCI Dedicated Region A and OCI Dedicated Region B using Dynamic Routing Gateways (DRGs). This enables full mesh connectivity across all VMware ESXi hosts.

- Use OCI FastConnect (not IPSec VPN) to connect both OCI Dedicated Regions to the OCI Public Region hosting the Witness. This ensures consistent low latency and reliable throughput to support witness communication.

- Reference documentation: Remote Peering, Managing DRGs, OCI FastConnect

Compute and Storage Considerations

Infrastructure planning across all three regions involves several decisions:

- Region Selection

  - Choose two OCI Dedicated Regions with < 5 ms RTT latency between them.

  - Select an OCI Public Region with < 200 ms RTT latency to both OCI Dedicated Regions for Witness deployment.

- Shape Selection

  - Use Dense Bare Metal shapes (e.g., BM.DenseIO.E5.128) with local NVMe storage for VMware vSAN.

  - Avoid Standard shapes that use Block Volumes, as they are not suitable for stretched vSAN deployments.

- Minimum Host Requirements

  - Primary Region: Minimum three Dense Bare Metal hosts

  - Secondary Region: Minimum three Dense Bare Metal hosts

  - Witness Region: One Bare Metal host

- Witness Appliance Guidelines

  - Follow vSAN Witness Design guidance.

  - Always refer to the official Broadcom documentation for the latest updates, as the requirements could change. Below are some references:

Stretched Cluster Requirements

- RTT latency < 5 ms between Primary and Secondary Regions

- RTT latency < 200 ms between either site and the Witness node

- All hosts (including Witness) must belong to the same VMware vSAN cluster

- Host hardware and configuration must be identical across regions

- Witness must reside in a third, separate location
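As a planning aid, the latency thresholds above and the minimum of three hosts per data site stated earlier can be checked before deployment. The following is a minimal sketch, assuming the RTT values were measured separately. The names `StretchedClusterPlan` and `check_plan` are hypothetical and not part of any Oracle or VMware tooling.

```python
from dataclasses import dataclass
from typing import List

# Thresholds taken from the requirements listed in this playbook.
MAX_DATA_SITE_RTT_MS = 5.0
MAX_WITNESS_RTT_MS = 200.0
MIN_HOSTS_PER_DATA_SITE = 3


@dataclass
class StretchedClusterPlan:
    rtt_primary_secondary_ms: float
    rtt_primary_witness_ms: float
    rtt_secondary_witness_ms: float
    primary_hosts: int
    secondary_hosts: int


def check_plan(plan: StretchedClusterPlan) -> List[str]:
    """Return a list of human-readable problems; an empty list means the plan passes."""
    problems = []
    if not plan.rtt_primary_secondary_ms < MAX_DATA_SITE_RTT_MS:
        problems.append("RTT between Primary and Secondary must be < 5 ms")
    for site, rtt in (("Primary", plan.rtt_primary_witness_ms),
                      ("Secondary", plan.rtt_secondary_witness_ms)):
        if not rtt < MAX_WITNESS_RTT_MS:
            problems.append(f"RTT between {site} and Witness must be < 200 ms")
    for site, hosts in (("Primary", plan.primary_hosts),
                        ("Secondary", plan.secondary_hosts)):
        if hosts < MIN_HOSTS_PER_DATA_SITE:
            problems.append(f"{site} needs at least {MIN_HOSTS_PER_DATA_SITE} hosts")
    return problems


# Example with made-up measurements: the data-site RTT is too high.
example = StretchedClusterPlan(6.2, 40.0, 45.0, primary_hosts=3, secondary_hosts=3)
for problem in check_plan(example):
    print(problem)
```

The check covers only the numeric requirements. Identical host hardware and witness placement in a third location still need to be verified by hand.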

Operational Considerations

Customers are responsible for completing Day 2 operations manually. Key notes:

- Oracle Cloud VMware Solution environments are deployed separately in each OCI Dedicated Region. The secondary site’s VMware vCenter and VMware NSX Manager must be manually detached and integrated with the primary cluster.

- Manual failover and route updates are required in case of a site failure.

- VMware NSX Tier-0 Gateway is active only in one site, implying an active-passive model for North-South traffic routing.

Design Overview

Building on the previous sections that covered architecture and requirements for a stretched vSAN configuration with Oracle Cloud VMware Solution, this section explains how to implement a highly available design capable of withstanding the failure of an OCI Dedicated Region.

This design uses two VCNs per site, resulting in a total of four VCNs:

OCI Dedicated Region A

- VCN Primary with two CIDR blocks; for example, 10.16.0.0/16 as the primary and 172.45.0.0/16 as the secondary CIDR (added after VCN creation). The secondary CIDR is required only for the initial SDDC deployment.

  Since an Oracle Cloud VMware Solution SDDC cannot span multiple VCNs, a secondary CIDR block (172.45.0.0/16) is attached to the Primary VCN within OCI Dedicated Region A. This enables VLAN definitions for management and services subnets while keeping them logically grouped within a single VCN.

- VCN MGMT Active, using the same CIDR block as the secondary CIDR attached to VCN Primary; i.e., 172.45.0.0/16.

OCI Dedicated Region B

- VCN Secondary with a CIDR block distinct from and non-overlapping with VCN Primary; for example, 10.17.0.0/16.

- VCN MGMT Failover, using the same CIDR block as VCN MGMT Active; i.e., 172.45.0.0/16.
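The addressing rules for the four VCNs can be sanity-checked with Python's standard `ipaddress` module before any resources are created. This is a minimal sketch using the example CIDR blocks from this section. The dictionary layout and the function name `validate_vcn_plan` are assumptions made for illustration.

```python
import ipaddress
from typing import Dict, List

# Example CIDR blocks from this design; replace with your own values.
vcn_plan = {
    "VCN Primary": ["10.16.0.0/16", "172.45.0.0/16"],  # primary + secondary CIDR
    "VCN MGMT Active": ["172.45.0.0/16"],
    "VCN Secondary": ["10.17.0.0/16"],
    "VCN MGMT Failover": ["172.45.0.0/16"],
}


def validate_vcn_plan(plan: Dict[str, List[str]]) -> List[str]:
    """Return a list of rule violations; an empty list means the plan is consistent."""
    nets = {name: [ipaddress.ip_network(c) for c in cidrs] for name, cidrs in plan.items()}
    problems = []
    # VCN Secondary must not overlap any CIDR block of VCN Primary.
    for primary_net in nets["VCN Primary"]:
        for secondary_net in nets["VCN Secondary"]:
            if primary_net.overlaps(secondary_net):
                problems.append(f"{secondary_net} overlaps {primary_net}")
    # Both MGMT VCNs must use the same CIDR block so the network can float.
    if nets["VCN MGMT Active"] != nets["VCN MGMT Failover"]:
        problems.append("VCN MGMT Active and VCN MGMT Failover CIDRs differ")
    # The MGMT CIDR must match the secondary CIDR attached to VCN Primary.
    for mgmt_net in nets["VCN MGMT Active"]:
        if mgmt_net not in nets["VCN Primary"][1:]:
            problems.append(f"{mgmt_net} is not a secondary CIDR of VCN Primary")
    return problems


print(validate_vcn_plan(vcn_plan) or "VCN plan is consistent")
```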

Oracle Cloud VMware Solution provides flexibility in network provisioning. During SDDC creation, users can either:

- Specify a CIDR block and allow Oracle Cloud VMware Solution automation to create required networking components, or

- Manually create VCNs, subnets, VLANs, route tables, and NSGs beforehand, then select these existing resources during deployment.

For this stretched vSAN design, the latter approach is necessary. Precise control over network segmentation across multiple VCNs and regions requires pre-creation of route tables, NSGs, and VLANs. This separation supports clear responsibilities between VCNs and enables seamless failover behavior.

A critical aspect is that the management subnet (172.45.0.0/16) must be accessible in both OCI Dedicated Regions. To support failover, the design allows this VCN MGMT network to “float” between the two sites via manual network updates during failover events, such as modifying route tables and re-advertising the subnet through DRG attachments.
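The floating behavior can be pictured as a single piece of state: which MGMT VCN currently advertises the management CIDR. The sketch below is a toy state model only. It makes no OCI API calls, and the names `fail_over` and `advertised_by` are hypothetical. The actual route table and DRG attachment changes remain manual steps.

```python
MGMT_CIDR = "172.45.0.0/16"

# Maps the VCN currently advertising the MGMT CIDR to its counterpart in the other region.
COUNTERPART = {
    "VCN MGMT Active": "VCN MGMT Failover",
    "VCN MGMT Failover": "VCN MGMT Active",
}


def fail_over(state: dict) -> dict:
    """Return a new state in which the other MGMT VCN advertises the floating CIDR.

    Exactly one VCN advertises the CIDR at any time, mirroring the rule that the
    subnet is withdrawn from one site and re-advertised from the other.
    """
    return {"cidr": state["cidr"], "advertised_by": COUNTERPART[state["advertised_by"]]}


state = {"cidr": MGMT_CIDR, "advertised_by": "VCN MGMT Active"}
state = fail_over(state)
print(f"{state['cidr']} is now advertised by {state['advertised_by']}")
```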

DNS resolution is vital for failover and service availability. Therefore, a dedicated services subnet will be created in each VCN to host DNS and supporting infrastructure.

For VLAN tagging simplicity:

- VLAN tags in the 100 range are region-specific, confined to their respective sites.

- VLAN tags in the 200 range are associated with the 172.45.0.0/16 subnet and will float between sites.
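The tagging convention can be captured in a small helper, which is handy when reviewing a VLAN inventory. This sketch assumes "the 100 range" means tags 100 to 199 and "the 200 range" means tags 200 to 299. That interpretation and the function name `vlan_scope` are assumptions, not part of the original design.

```python
def vlan_scope(tag: int) -> str:
    """Classify a VLAN tag according to the convention used in this design.

    Assumed ranges: 100-199 are region-specific, 200-299 float with 172.45.0.0/16.
    """
    if 100 <= tag <= 199:
        return "region-specific"
    if 200 <= tag <= 299:
        return "floating"
    raise ValueError(f"VLAN tag {tag} is outside the ranges used by this design")


# Example tags chosen for illustration only.
for tag in (101, 150, 201, 250):
    print(tag, vlan_scope(tag))
```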

With the high-level design defined, we now step into the practical configuration of each site, starting with the Primary region.