Deploy an Apache Spark Cluster in Manager/Worker Mode

Apache Spark is an open-source, cluster-computing framework for data analytics. Oracle Cloud Infrastructure provides a reliable, high-performance platform for running and managing your Apache Spark-based Big Data applications.

Architecture

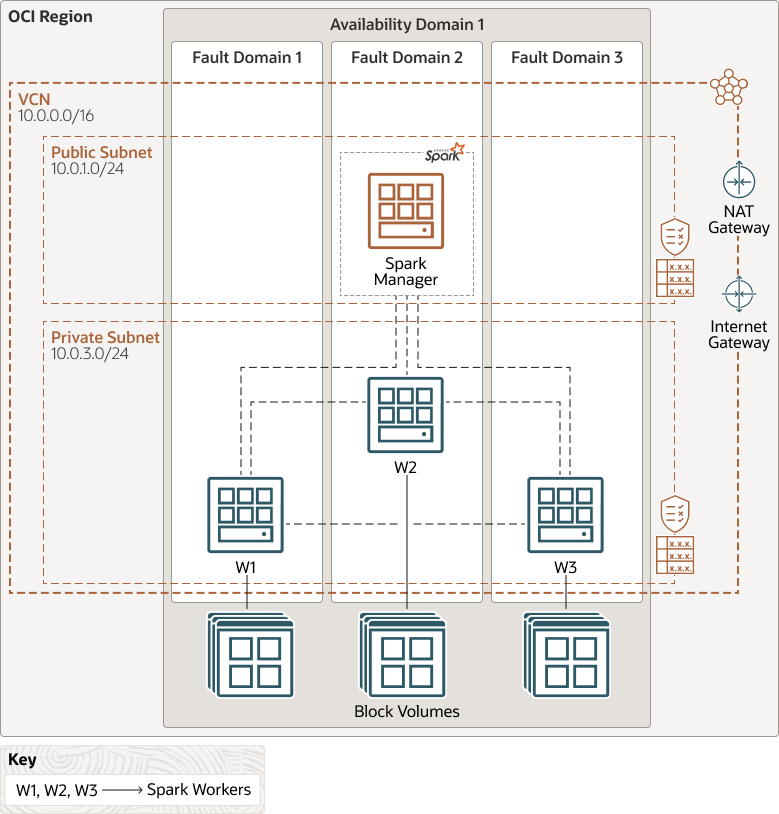

This architecture deploys an Apache Spark cluster on Oracle Cloud Infrastructure using the manager/worker model. It has a manager node and three worker nodes, running on compute instances.

The following diagram illustrates this reference architecture.

Description of the illustration spark-oci-png.png

The architecture has the following components:

- Region

An Oracle Cloud Infrastructure region is a localized geographic area that contains one or more data centers, called availability domains. Regions are independent of other regions, and vast distances can separate them (across countries or even continents).

- Availability domain

Availability domains are standalone, independent data centers within a region. The physical resources in each availability domain are isolated from the resources in the other availability domains, which provides fault tolerance. Availability domains don’t share infrastructure such as power or cooling, or the internal availability domain network. So, a failure at one availability domain is unlikely to affect the other availability domains in the region.

- Fault domain

A fault domain is a grouping of hardware and infrastructure within an availability domain. Each availability domain has three fault domains with independent power and hardware. When you distribute resources across multiple fault domains, your applications can tolerate physical server failure, system maintenance, and power failures inside a fault domain.

- Virtual cloud network (VCN) and subnets

A VCN is a customizable, software-defined network that you set up in an Oracle Cloud Infrastructure region. Like traditional data center networks, VCNs give you complete control over your network environment. A VCN can have multiple non-overlapping CIDR blocks that you can change after you create the VCN. You can segment a VCN into subnets, which can be scoped to a region or to an availability domain. Each subnet consists of a contiguous range of addresses that don't overlap with the other subnets in the VCN. You can change the size of a subnet after creation. A subnet can be public or private.

- Apache Spark manager and workers

The compute instance that hosts the Apache Spark manager is attached to a regional public subnet. The workers are attached to a regional private subnet.

- Block storage

With block storage volumes, you can create, attach, connect, and move storage volumes, and change volume performance to meet your storage, performance, and application requirements. After you attach and connect a volume to an instance, you can use the volume like a regular hard drive. You can also disconnect a volume and attach it to another instance without losing data.

The Terraform quick-start template provided for this architecture provisions a 700-GB block volume for each worker node. While deploying the architecture, you can choose the number and size of the block volumes.

The architecture uses iSCSI, a TCP/IP-based standard, for communication between the volumes and the attached instances.

- Internet gateway

The internet gateway allows traffic between the public subnets in a VCN and the public internet.

- Network address translation (NAT) gateway

A NAT gateway enables private resources in a VCN to access hosts on the internet, without exposing those resources to incoming internet connections.
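The subnet layout described above (a public subnet for the manager, a private subnet for the workers, neither overlapping the other) can be sanity-checked before provisioning. The following is a minimal sketch using Python's standard `ipaddress` module. The `10.0.0.0/16` VCN range and the `/24` subnet size are assumptions chosen for illustration; the source does not state any CIDR values, and `validate_layout` is a hypothetical helper name.

```python
import ipaddress

# Hypothetical address plan; the source does not specify any CIDR values.
vcn = ipaddress.ip_network("10.0.0.0/16")

# Carve the VCN into /24 subnets and take the first two.
candidates = vcn.subnets(new_prefix=24)
public_subnet = next(candidates)   # would host the Spark manager
private_subnet = next(candidates)  # would host the Spark workers


def validate_layout(vcn, subnets):
    """Return True if every subnet lies inside the VCN and no two overlap."""
    inside = all(s.subnet_of(vcn) for s in subnets)
    disjoint = all(
        not a.overlaps(b)
        for i, a in enumerate(subnets)
        for b in subnets[i + 1:]
    )
    return inside and disjoint


layout_ok = validate_layout(vcn, [public_subnet, private_subnet])
print(public_subnet, private_subnet, layout_ok)
```

A check like this only validates the address plan. Whether a subnet is public or private is a property you set when you create it in the VCN.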

Recommendations

Your requirements might differ from the architecture described here. Use the following recommendations as a starting point.

- VCN

When you create a VCN, determine the number of CIDR blocks required and the size of each block based on the number of resources that you plan to attach to subnets in the VCN. Use CIDR blocks that are within the standard private IP address space.

Select CIDR blocks that don't overlap with any other network (in Oracle Cloud Infrastructure, your on-premises data center, or another cloud provider) to which you intend to set up private connections.

After you create a VCN, you can change, add, and remove its CIDR blocks.

When you design the subnets, consider your traffic flow and security requirements. Attach all the resources within a specific tier or role to the same subnet, which can serve as a security boundary.

Use regional subnets.

- Compute shapes

This architecture uses an Oracle Linux 7.7 OS image with a VM.Standard2.1 shape for both the manager and worker nodes. If your application needs more memory, cores, or network bandwidth, you can choose a different shape.

- Apache Spark and Hadoop

Although Apache Spark can run standalone, in this architecture it runs on Hadoop.

In this architecture, a single manager node and three worker nodes are deployed as part of the Apache Spark cluster.

- Security

Use Oracle Cloud Guard to monitor and maintain the security of your resources in Oracle Cloud Infrastructure proactively. Cloud Guard uses detector recipes that you can define to examine your resources for security weaknesses and to monitor operators and users for risky activities. When any misconfiguration or insecure activity is detected, Cloud Guard recommends corrective actions and assists with taking those actions, based on responder recipes that you can define.

For resources that require maximum security, Oracle recommends that you use security zones. A security zone is a compartment associated with an Oracle-defined recipe of security policies that are based on best practices. For example, the resources in a security zone must not be accessible from the public internet and they must be encrypted using customer-managed keys. When you create and update resources in a security zone, Oracle Cloud Infrastructure validates the operations against the policies in the security-zone recipe, and denies operations that violate any of the policies.
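The VCN recommendation above asks for CIDR blocks that sit in the standard private address space and don't overlap any network you plan to connect privately. One way to check a candidate block is sketched below with Python's standard `ipaddress` module. The function name `check_vcn_cidr` and the example networks (`on_prem`, `other_cloud`) are hypothetical, and "standard private IP address space" is interpreted here as the three RFC 1918 ranges.

```python
import ipaddress

# The three RFC 1918 private ranges.
RFC1918 = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("172.16.0.0/12"),
    ipaddress.ip_network("192.168.0.0/16"),
]


def check_vcn_cidr(candidate, existing_networks):
    """Return a list of problems with a candidate VCN CIDR (empty if none)."""
    net = ipaddress.ip_network(candidate)
    problems = []
    if not any(net.subnet_of(private) for private in RFC1918):
        problems.append(f"{net} is outside the private address space")
    for name, cidr in existing_networks.items():
        if net.overlaps(ipaddress.ip_network(cidr)):
            problems.append(f"{net} overlaps {name} ({cidr})")
    return problems


# Hypothetical networks that the VCN will be privately connected to.
existing = {"on_prem": "10.0.0.0/16", "other_cloud": "172.16.0.0/16"}

print(check_vcn_cidr("10.1.0.0/16", existing))  # no problems expected
print(check_vcn_cidr("10.0.0.0/16", existing))  # clashes with on_prem
```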

Considerations

- Performance

Consider using bare metal shapes for Compute instances for both the manager and worker nodes. You can achieve significant performance benefits by running Big Data applications on a bare metal Spark cluster.

- Availability

Fault domains provide the best resilience within a single availability domain. You can deploy compute instances that perform the same tasks in multiple availability domains. This design removes a single point of failure by introducing redundancy.

You might also consider creating an extra Spark manager node as a backup for high availability.

- Scalability

You can scale your application by using the instance pool and autoscaling features.

- Using instance pools, you can create multiple compute instances from the same configuration within the same region.

- Autoscaling enables you to automatically adjust the number of compute instances in an instance pool, based on performance metrics like CPU utilization.

- Storage

You can use Oracle Cloud Infrastructure Object Storage to store the data instead of block volumes. If you use object storage, create a service gateway for connectivity from nodes in private subnets.

Oracle also offers the Hadoop Distributed File System (HDFS) Connector for Oracle Cloud Infrastructure Object Storage. Using the HDFS connector, your Apache Hadoop applications can read and write data to and from object storage.

- Manageability

This architecture uses Terraform to create the infrastructure and deploy the Spark cluster.

Alternatively, you can use Oracle Cloud Infrastructure Data Flow, a fully managed service with a rich user interface that lets developers and data scientists create, edit, and run Apache Spark applications at any scale without the need for clusters, an operations team, or highly specialized Spark knowledge. Because Data Flow is fully managed, there's no infrastructure to deploy or manage.

- Security

Use policies to restrict who can access your Oracle Cloud Infrastructure resources and what actions they can perform.
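The scalability consideration above mentions adjusting the size of an instance pool based on metrics such as CPU utilization. The sketch below illustrates that kind of threshold rule in plain Python. It is not the Oracle Cloud Infrastructure autoscaling API, which is configured in the service itself. The function name `desired_pool_size`, the thresholds, and the pool limits are all hypothetical values chosen for illustration (the minimum of three mirrors the three workers in this architecture).

```python
def desired_pool_size(current, cpu_percent, *, scale_out_at=80, scale_in_at=20,
                      minimum=3, maximum=10):
    """Return the target worker count for one evaluation of a threshold rule.

    Adds one instance when CPU is above scale_out_at, removes one when it is
    below scale_in_at, and always stays within [minimum, maximum].
    """
    if cpu_percent > scale_out_at:
        target = current + 1
    elif cpu_percent < scale_in_at:
        target = current - 1
    else:
        target = current
    return max(minimum, min(maximum, target))


# Walk a pool of three workers through a few hypothetical CPU readings.
size = 3
for cpu in (90, 95, 50, 10):
    size = desired_pool_size(size, cpu)
    print(f"cpu={cpu}% -> pool size {size}")
```

Changing the pool by one instance per evaluation keeps the example simple; a real policy might also use a cooldown period between adjustments.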

Deploy

The code required to deploy this reference architecture is available on GitHub. You can pull the code into Oracle Cloud Infrastructure Resource Manager with a single click, create the stack, and deploy it. Alternatively, download the code from GitHub to your computer, customize the code, and deploy the architecture by using the Terraform CLI.

- Deploy by using Oracle Cloud Infrastructure Resource Manager:

- Click

If you aren't already signed in, enter the tenancy and user credentials.

- Review and accept the terms and conditions.

- Select the region where you want to deploy the stack.

- Follow the on-screen prompts and instructions to create the stack.

- After creating the stack, click Terraform Actions, and select Plan.

- Wait for the job to be completed, and review the plan.

To make any changes, return to the Stack Details page, click Edit Stack, and make the required changes. Then, run the Plan action again.

- If no further changes are necessary, return to the Stack Details page, click Terraform Actions, and select Apply.

- Deploy by using the Terraform CLI:

- Go to GitHub.

- Clone or download the repository to your local computer.

- Follow the instructions in the README document.
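For orientation, the Terraform CLI route usually mirrors the Plan and Apply actions described for Resource Manager. The sketch below is a hypothetical wrapper that prints or runs the common `terraform init`, `terraform plan`, and `terraform apply` sequence from the cloned directory. The function names, the `tfplan` file name, and the assumption that the repository needs no extra steps are illustrative only; the repository's README remains the authoritative procedure, including any variables the configuration requires.

```python
import subprocess


def terraform_commands(plan_file="tfplan"):
    """Return the init/plan/apply command sequence as argument lists."""
    return [
        ["terraform", "init"],                       # download providers and modules
        ["terraform", "plan", f"-out={plan_file}"],  # save the plan for review
        ["terraform", "apply", plan_file],           # apply exactly the saved plan
    ]


def deploy(workdir, dry_run=True):
    """Print the commands, or run them in workdir when dry_run is False."""
    for cmd in terraform_commands():
        if dry_run:
            print(" ".join(cmd))
        else:
            # check=True stops the sequence at the first failing command.
            subprocess.run(cmd, cwd=workdir, check=True)


# Dry run only: nothing is executed until dry_run=False is passed.
deploy(".", dry_run=True)
```

Saving the plan to a file and applying that file means the changes you reviewed are the changes that get applied.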

Change Log

This log lists significant changes:

| Date | Change |
| --- | --- |
| April 27, 2022 | Added the option to download editable versions (.SVG and .DRAWIO) of the architecture diagram. |
| January 29, 2021 | |