4 Oracle JCA Adapter for Files/FTP

This chapter describes how to use the Oracle File and FTP Adapters, which work with Oracle BPEL Process Manager and Oracle Mediator. Information on concepts, features, configuration, and use cases for the Oracle File and FTP Adapters is also provided.

This chapter includes the following sections:

Note:

The term Oracle JCA Adapter for Files/FTP is used for the Oracle File and FTP Adapters, which are separate adapters with very similar functionality.

4.1 Introduction to Oracle File and FTP Adapters

Oracle BPEL PM and Mediator include the Oracle File and FTP Adapters. The Oracle File and FTP Adapters enable a BPEL process or a Mediator to exchange (read and write) files on local file systems and remote file systems (through use of the file transfer protocol (FTP)). The file contents can be both XML and non-XML data formats.

This section includes the following topics:

4.1.1 Oracle File and FTP Adapters Architecture

The Oracle File and FTP Adapters are based on JCA 1.5 architecture. JCA provides a standard architecture for integrating heterogeneous enterprise information systems (EIS). The JCA Binding Component of the Oracle File and FTP Adapters exposes the underlying JCA interactions as services (WSDL with JCA binding) for Oracle BPEL PM integration. For details about Oracle JCA Adapter architecture, see Introduction to Oracle JCA Adapters.

4.1.2 Oracle File and FTP Adapters Integration with Oracle BPEL PM

The Oracle File and FTP Adapters are automatically integrated with Oracle BPEL PM. When you drag and drop File Adapter or FTP Adapter from the Components window of JDeveloper BPEL Designer, the Adapter Configuration Wizard starts with a Welcome page, as shown in Figure 4-1.

Figure 4-1 The Adapter Configuration Wizard - Welcome Page

Description of "Figure 4-1 The Adapter Configuration Wizard - Welcome Page"

This wizard enables you to select and configure the Oracle File and FTP Adapters. The Adapter Configuration Wizard then prompts you to enter a service name, as shown in Figure 4-2.

Figure 4-2 The Adapter Configuration Wizard - Service Name Page

Description of "Figure 4-2 The Adapter Configuration Wizard - Service Name Page"

When configuration is complete, a WSDL and JCA file pair is created in the Application Navigator section of Oracle JDeveloper (JDeveloper). This JCA file contains the configuration information you specify in the Adapter Configuration Wizard.

The Operation Type page of the Adapter Configuration Wizard prompts you to select an operation to perform. Based on your selection, different Adapter Configuration Wizard pages appear and prompt you for configuration information. Table 4-1 lists the available operations and provides references to sections that describe the configuration information you must provide.

Table 4-1 Supported Operations for Oracle BPEL Process Manager

| Operation | Section |
|---|---|
| Oracle File Adapter | - |
| Oracle FTP Adapter | - |

For more information about Oracle JCA Adapter integration with Oracle BPEL PM, see Introduction to Oracle JCA Adapters.

4.1.3 Oracle File and FTP Adapters Integration with Mediator

The Oracle File and FTP Adapters are automatically integrated with Mediator. When you create an Oracle File or FTP Adapter service in JDeveloper Designer, the Adapter Configuration Wizard is started.

This wizard enables you to select and configure the Oracle File and FTP Adapters. When configuration is complete, a WSDL and JCA file pair is created in the Application Navigator section of JDeveloper. This JCA file contains the configuration information you specify in the Adapter Configuration Wizard.

The Operation Type page of the Adapter Configuration Wizard prompts you to select an operation to perform. Based on your selection, different Adapter Configuration Wizard pages appear and prompt you for configuration information. Table 4-2 lists the available operations and provides references to sections that describe the configuration information you must provide. For more information about Adapters and Mediator, see Introduction to Oracle JCA Adapters.

Table 4-2 Supported Operations for Oracle Mediator

| Operation | Section |
|---|---|
| Oracle File Adapter | - |
| Oracle FTP Adapter | - |

4.1.4 Oracle File and FTP Adapters Integration with SOA Composite

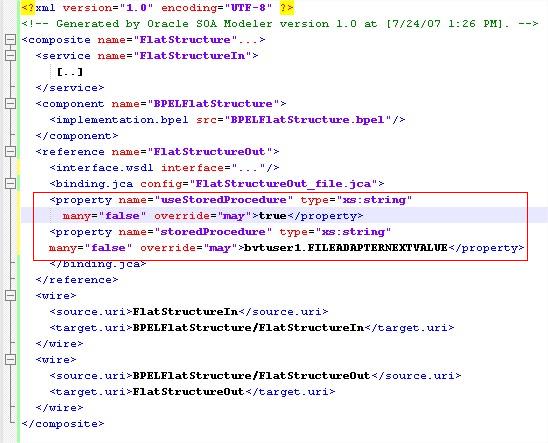

A composite is an assembly of services, service components (Oracle BPEL PM and Mediator), wires, and references designed and deployed in a single application. The composite processes the information described in the messages. The details of the composite are stored in the composite.xml file. For more information about integration of the Oracle File and FTP Adapters with SOA composite, see Oracle SOA Composite Integration with Adapters.

4.2 Oracle File and FTP Adapters Features

The Oracle File and FTP Adapters enable you to configure a BPEL process or a Mediator to interact with local and remote file system directories. This section explains the following features of the Oracle File and FTP Adapters:

Note:

For composites with Oracle File and FTP Adapters that are designed to consume a very large number of concurrent messages, you must set the number of open files parameter for your operating system to a larger value. For example, to set the number of open files parameter to 8192 on Linux, use the ulimit -n 8192 command.

4.2.1 File Formats

The Oracle File and FTP Adapters can read and write the following file formats and use the adapter translator component at runtime:

- XML (both XSD- and DTD-based)

- Delimited

- Fixed positional

- Binary data

- COBOL Copybook data

The Oracle File and FTP Adapters can also treat file contents as an opaque object and pass the contents in their original format (without performing translation). The opaque option handles binary data, such as JPEGs and GIFs, whose structure cannot be captured in an XSD, as well as data that you do not want to have translated.

Note that opaque representation base-64 encodes the payload and increases the size of the payload in memory by a third. Opaque/base-64 representation is usually used for passing binary data within XML. See also Large Payload Support for a description of attachment support.
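The one-third growth follows from how base-64 works: every 3 input bytes become 4 output characters. The following is a minimal sketch using the standard java.util.Base64 class, not the adapter's own code; the class name OpaquePayloadSize and its methods are hypothetical and exist only for illustration.

```java
import java.util.Base64;

/** Illustrates how base-64 encoding inflates a binary payload (hypothetical helper). */
final class OpaquePayloadSize {

    /** Base-64 encodes raw bytes, similar in spirit to how an opaque payload is carried in XML. */
    static byte[] encode(byte[] raw) {
        return Base64.getEncoder().encode(raw);
    }

    /** Padded encoded length: each group of up to 3 input bytes yields 4 output characters. */
    static long encodedLength(long rawLength) {
        return 4 * ((rawLength + 2) / 3);
    }

    public static void main(String[] args) {
        byte[] raw = new byte[3000]; // stand-in for binary file content
        byte[] encoded = encode(raw);
        System.out.println("raw bytes:     " + raw.length);
        System.out.println("encoded bytes: " + encoded.length);
    }
}
```

For a 3000-byte input, the encoded form occupies 4000 bytes, which is the growth that the note above describes.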

The translator enables the Oracle File and FTP Adapters to convert native data in various formats to XML, and from XML to other formats. The native data can be simple (just a flat structure) or complex (with parent-child relationships). The translator can handle both XML and non-XML (native) formats of data.

4.2.2 FTP Servers

Oracle FTP Adapter supports most RFC 959 compliant FTP servers on all platforms. It also provides a pluggable mechanism that enables Oracle FTP Adapter to support additional FTP servers. In addition, Oracle FTP Adapter supports FTP over SSL (FTPS) on Solaris and Linux. Oracle FTP Adapter also supports SFTP (Secure FTP) using SSH transport.

Note:

Oracle FTP Adapter supports SFTP server version 3 or later.

4.2.3 Inbound and Outbound Interactions

The Oracle File and FTP Adapters exchange files in the inbound and outbound directions. Based on the direction, the Oracle File and FTP Adapters perform different sets of tasks.

For inbound files sent to Oracle BPEL PM or Mediator, the Oracle File and FTP Adapters perform the following operations:

- Poll the file system looking for matches.

- Read and translate the file content. Native data is translated based on the native schema (NXSD) defined at design time.

- Publish the translated content as an XML message.

This functionality of the Oracle File and FTP Adapters is referred to as the file read operation.

For outbound files sent from Oracle BPEL PM or Mediator, the Oracle File and FTP Adapters perform the following operations:

- Receive messages from BPEL or Mediator.

- Format the XML contents as specified at design time.

- Produce output files. The output files can be created based on the following criteria: time elapsed, file size, and number of messages. You can also specify a combination of these criteria for output files.

This functionality of the Oracle File and FTP Adapters is referred to as the file write operation. This operation is known as a JCA outbound interaction.

For the inbound and outbound directions, the Oracle File and FTP Adapters use a set of configuration parameters. For example:

- The inbound Oracle File and FTP Adapters have parameters for the inbound directory where the input file appears and the frequency with which to poll the directory.

- The outbound Oracle File and FTP Adapters have parameters for the outbound directory in which to write the file and the file naming convention to use.

Note:

You must use the Adapter Configuration Wizard to modify the configuration parameters, such as publish size, number of messages, and polling frequency.

You must not manually change the value of these parameters in JCA files.

The file reader supports polling conventions and offers several postprocessing options. You can specify to delete, move, or leave the file as it is after processing the file. The file reader can split the contents of a file and publish it in batches, instead of as a single message. You can use this feature for performance tuning of the Oracle File and FTP Adapters. The file reader guarantees once and once-only delivery.

See the following sections for details about the read and write functionality of the Oracle File and FTP Adapters:

4.2.4 File Debatching

You can define the batch size using the publishSize parameter in the .jca file.

This property specifies if the file contains multiple messages and how many messages to publish to the BPEL process at a time.

For example, if a file has 11 records and this parameter is set to 2, the adapter publishes 2 records at a time, and the final record is published in the sixth iteration.
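The arithmetic in this example can be sketched in plain Java. This is only an illustration of how a publish size partitions a list of records, not the adapter's implementation; the class DebatchSketch and the method debatch are hypothetical names.

```java
import java.util.ArrayList;
import java.util.List;

/** Illustrates how a publish size splits records into batches (hypothetical helper). */
final class DebatchSketch {

    /** Splits records into consecutive batches of at most publishSize records each. */
    static <T> List<List<T>> debatch(List<T> records, int publishSize) {
        if (publishSize <= 0) {
            throw new IllegalArgumentException("publishSize must be positive");
        }
        List<List<T>> batches = new ArrayList<>();
        for (int start = 0; start < records.size(); start += publishSize) {
            int end = Math.min(start + publishSize, records.size());
            batches.add(new ArrayList<>(records.subList(start, end)));
        }
        return batches;
    }

    public static void main(String[] args) {
        List<Integer> records = new ArrayList<>();
        for (int i = 1; i <= 11; i++) {
            records.add(i);
        }
        List<List<Integer>> batches = debatch(records, 2);
        for (int i = 0; i < batches.size(); i++) {
            System.out.println("iteration " + (i + 1) + ": " + batches.get(i));
        }
    }
}
```

With 11 records and a publish size of 2, the sketch produces six batches, and the last batch holds the single remaining record.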

When a file contains multiple messages, you can choose to publish the messages in batches of a specific size. This is referred to as debatching. During debatching, the file reader, on restart, proceeds from where it left off in the previous run, thereby avoiding duplicate messages. File debatching is supported for files in XML and native formats.

You can register a batch notification callback (Java class) which is invoked when the last batch is reached in a debatching scenario.

    <service ...
      <binding.jca ...
        <property name="batchNotificationHandler">java://oracle.sample.SampleBatchCalloutHandler</property>

where the property value must be java://{custom_class} and where oracle.sample.SampleBatchCalloutHandler must implement the following interface:

    package oracle.tip.adapter.api.callout.batch;

    public interface BatchNotificationCallout extends Callout
    {
        public void onInitiateBatch(String rootId,
                                    String metaData)
            throws ResourceException;

        public void onFailedBatch(String rootId,
                                  String metaData,
                                  long currentBatchSize,
                                  Throwable reason)
            throws ResourceException;

        public void onCompletedBatch(String rootId,
                                     String metaData,
                                     long finalBatchSize)
            throws ResourceException;
    }

4.2.5 File Chunked Read

The File Chunked Read operation enables you to process large files and uses a BPEL Invoke activity within a while loop to process the target file.

Specifically, the FileAdapter allows the BPEL process modeler to use an Invoke activity to retrieve a logical chunk from a huge file, enabling the file to stay within memory constraints. The process calls the chunked-interaction in a loop in order to process the entire file, one logical chunk at a time. The intent is to achieve de-batchability on a file's outbound processing.

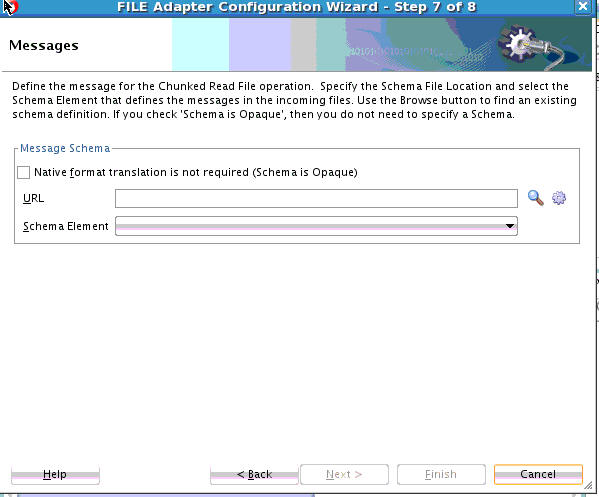

You use the File Adapter Configuration Wizard to define a chunked file .jca file and the WSDL file.

4.2.5.1 Chunked Interaction File Adapter Processing

The FileAdapter translates the native data for a Chunked Read operation to XML and returns it as a BPEL variable.

To perform a Chunked Read, you typically create an Invoke activity within BPEL.

You also select Chunked Synchronous Read as the WSDL operation in the File Adapter Configuration Wizard. Optionally, you can use the wizard to configure the file input directory and the file name, which are placed in the ChunkedInteractionSpec.

Each call to the Invoke activity returns header values in addition to the payload.

These header values include the line number, the column number, and indicators that specify whether the End of File has been reached. You must copy the line and column numbers from the return header to the outbound headers for the subsequent call to the File Adapter. You can also specify the input directory and file name as header values.

4.2.5.1.1 File Chunked Interaction BPEL Invocation

The FileAdapter chunked interaction is invoked from BPEL. For native data files, line number and column number are additionally passed as header values.

The first time that the chunked interaction is called within the loop, the values for LineNumber and ColumnNumber are blank; for each subsequent call, these values come from the return headers of the previous Invoke.
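The looping pattern described here can be sketched outside BPEL as plain Java. This is a simulation under stated assumptions, not adapter code: ChunkResult, invokeChunkedRead, and readAll are hypothetical names, the file is modeled as an in-memory list with one record per line, and only a line number (no column number) is tracked.

```java
import java.util.ArrayList;
import java.util.List;

/** Simulates calling a chunked read in a loop while carrying header state (hypothetical). */
final class ChunkedReadLoopSketch {

    /** Hypothetical result of one call: the payload plus the return header values. */
    static final class ChunkResult {
        final List<String> records;
        final int lineNumber;     // return header: where the next call should resume
        final boolean endOfFile;  // return header: true once the file is exhausted

        ChunkResult(List<String> records, int lineNumber, boolean endOfFile) {
            this.records = records;
            this.lineNumber = lineNumber;
            this.endOfFile = endOfFile;
        }
    }

    /** Simulates one Invoke. A null lineNumber models the blank header on the first call. */
    static ChunkResult invokeChunkedRead(List<String> file, int chunkSize, Integer lineNumber) {
        int start = (lineNumber == null) ? 0 : lineNumber;
        int end = Math.min(start + chunkSize, file.size());
        List<String> records = new ArrayList<>(file.subList(start, end));
        return new ChunkResult(records, end, end >= file.size());
    }

    /** Reads the whole file one chunk at a time, copying the return header into the next call. */
    static List<List<String>> readAll(List<String> file, int chunkSize) {
        if (chunkSize <= 0) {
            throw new IllegalArgumentException("chunkSize must be positive");
        }
        List<List<String>> chunks = new ArrayList<>();
        Integer lineNumber = null;   // blank on the first call
        boolean endOfFile = false;
        while (!endOfFile) {
            ChunkResult result = invokeChunkedRead(file, chunkSize, lineNumber);
            chunks.add(result.records);
            lineNumber = result.lineNumber; // copy return header to the next outbound header
            endOfFile = result.endOfFile;
        }
        return chunks;
    }

    public static void main(String[] args) {
        List<String> file = new ArrayList<>();
        for (int i = 1; i <= 12; i++) {
            file.add("record-" + i);
        }
        for (List<String> chunk : readAll(file, 5)) {
            System.out.println(chunk);
        }
    }
}
```

The point of the sketch is the state handling: if the returned line number were not copied into the next call, every iteration would start again from the beginning of the file.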

The BPEL Invoke calls ChunkedInteraction with the parameters provided in Table 4-3.

Table 4-3 BPEL Invoke Parameters for Chunked Interaction

| Parameter | Where Obtained |
|---|---|
| | ChunkedInteractionSpec or from the BPEL header |
| | ChunkedInteractionSpec or from the BPEL header |
| | ChunkedInteractionSpec, analogous to PublishSize in de-batching |
| | Header (optional) |
| | Header (optional) |
| | Header (optional) |

4.2.5.1.2 The ChunkSize Parameter

The ChunkSize parameter provides information related to the size of the file chunk for the Chunked Read operation; it defaults to 1 if you do not configure another value in ChunkedInteractionSpec (that is, using the File Adapter Configuration Wizard).

Specifically, the ChunkSize parameter governs the number of nodes or records (not lines) that are returned.

For example, if you have an address book as a native CSV file and you have specified a ChunkSize of 5, each call to the Invoke activity returns an XML file containing 5 address book nodes; that is, five rows of CSV records in XML format.

In that sense, the ChunkSize parameter is analogous to the PublishSize parameter used by the FileAdapter for an inbound transaction.

4.2.5.1.2.1 Example Rejection Handler Binding for Chunked Read

The following example shows how to configure a rejection handler for the chunked read reference binding.

Example - Rejection Handler Binding for Chunked Read

    <reference name="ReadAddressChunk">
      <interface.wsdl interface="http://xmlns.oracle.com/pcbpel/adapter/file/ReadAddressChunk/#wsdl.interface(ChunkedRead_ptt)"/>
      <binding.jca config="ReadAddressChunk_file.jca">
        <property name="rejectedMessageHandlers" source=""
                  type="xs:string" many="false" override="may">file:///c:/temp</property>
      </binding.jca>
    </reference>

4.2.5.1.2.2 Using Line Number/Column Number or Record Number

After every Invoke, you must copy the return headers over to the outbound headers for the subsequent invoke. The LineNumber/ColumnNumber are used by the Adapter for book-keeping purposes only, and you must ensure that you copy these values from the return-headers back to the headers before the call to the chunked interaction.

RecordNumber, on the other hand, is used when the data is in XML format (as distinct from native data). In that sense, RecordNumber is mutually exclusive with LineNumber/ColumnNumber, which is used for native data book-keeping.

4.2.5.1.2.3 File Chunked Read Interaction Artifacts

The following example shows a JCA file for Chunked Read.

Example - JCA File for Chunked Read

    <interaction-spec className="oracle.tip.adapter.file.outbound.ChunkedInteractionSpec">
      <property name="PhysicalDirectory" value="/tmp/chunked/in"/>
      <property name="FileName" value="dummy.txt"/>
      <property name="ChunkSize" value="10"/>
    </interaction-spec>

The following example shows the generated Adapter WSDL file for the Chunked Read interaction:

Example - Chunked Read WSDL File

    <?xml version = '1.0' encoding = 'UTF-8'?>
    <definitions name="ReadAddressChunk"
        targetNamespace="http://xmlns.oracle.com/pcbpel/adapter/file/ReadAddressChunk/"
        xmlns="http://schemas.xmlsoap.org/wsdl/"
        xmlns:tns="http://xmlns.oracle.com/pcbpel/adapter/file/ReadAddressChunk/"
        xmlns:plt="http://schemas.xmlsoap.org/ws/2003/05/partner-link/"
        xmlns:imp1="http://xmlns.oracle.com/pcbpel/demoSchema/csv">
      <documentation>Returns a finite chunk from the target file based on the chunk size parameter</documentation>
      <types>
        <schema targetNamespace="http://xmlns.oracle.com/pcbpel/adapter/file/ReadAddressChunk/"
            xmlns="http://www.w3.org/2001/XMLSchema">
          <import namespace="http://xmlns.oracle.com/pcbpel/demoSchema/csv"
              schemaLocation="xsd/address-csv.xsd"/>
          <element name="empty">
            <complexType/>
          </element>
        </schema>
      </types>
      <message name="Empty_msg">
        <part name="Empty" element="tns:empty"/>
      </message>
      <message name="Root-Element_msg">
        <part name="Root-Element" element="imp1:Root-Element"/>
      </message>
      <portType name="ChunkedRead_ptt">
        <operation name="ChunkedRead">
          <input message="tns:Empty_msg"/>
          <output message="tns:Root-Element_msg"/>
        </operation>
      </portType>
      <plt:partnerLinkType name="ChunkedRead_plt">
        <plt:role name="ChunkedRead_role">
          <plt:portType name="tns:ChunkedRead_ptt"/>
        </plt:role>
      </plt:partnerLinkType>
    </definitions>

4.2.5.2 Using the File Adapter Configuration Wizard to Perform Chunked Read Interaction Modelling

You use the File Adapter Configuration Wizard to model a chunked read interaction.

You can use the initial three screens of the File Adapter Configuration Wizard as you would to configure any other File Adapter operation.

4.2.5.2.1 Chunked Interaction Error Handling Summary

When a translation exception (that is, a bad record that violates the nXSD specification) is encountered, the return header is populated with the translation exception message that includes details such as the line/column where the error occurred.

However, a specific translation error does not result in a fault. Instead, it becomes a value in the return header. You must check the jca.file.IsMessageRejected and jca.file.RejectionReason header values to determine whether a rejection occurred. Additionally, you can check the jca.file.NoDataFound header value.
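The decision logic for these header values can be sketched as follows. In a real composite the check is modeled in the BPEL process rather than in Java, so this is only an illustration: the class ChunkHeaderCheck and the method classify are hypothetical, and the sketch assumes that the header values arrive as the strings "true" or "false".

```java
import java.util.HashMap;
import java.util.Map;

/** Classifies the outcome of one chunked read from its return headers (hypothetical helper). */
final class ChunkHeaderCheck {

    static final String IS_REJECTED = "jca.file.IsMessageRejected";
    static final String REJECTION_REASON = "jca.file.RejectionReason";
    static final String NO_DATA_FOUND = "jca.file.NoDataFound";

    /** Returns a short outcome label. Missing headers are treated as false (an assumption). */
    static String classify(Map<String, String> headers) {
        boolean rejected = Boolean.parseBoolean(headers.get(IS_REJECTED));
        boolean noData = Boolean.parseBoolean(headers.get(NO_DATA_FOUND));
        if (rejected && noData) {
            return "REJECTED_NO_DATA";
        }
        if (rejected) {
            return "REJECTED_WITH_DATA"; // some records were bad, the rest were returned
        }
        return noData ? "NO_DATA" : "OK";
    }

    public static void main(String[] args) {
        Map<String, String> headers = new HashMap<>();
        headers.put(IS_REJECTED, "true");
        headers.put(REJECTION_REASON, "sample reason text");
        headers.put(NO_DATA_FOUND, "false");
        System.out.println(classify(headers) + ": " + headers.get(REJECTION_REASON));
    }
}
```

Because a rejection does not raise a fault, a loop that ignores these values would silently drop the bad records.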

4.2.5.2.2 Skipping Bad Records

Using the nxsd:uniqueMessageSeparator construct enables the Adapter to skip bad records and continue processing the next set of records. (For more information on uniqueMessageSeparator, see Native Format Builder Wizard.)

If you do not use the uniqueMessageSeparator, the Adapter returns EndOfFile and thus causes the while loop to terminate.

Thus, the uniqueMessageSeparator construct is required if you want processing to continue and not assume an End of File situation. Without the uniqueMessageSeparator construct, the rest of the file is rejected as a single chunk.

4.2.5.2.3 Examples of Chunked Interaction Header and Rejected Chunked Interaction Messages

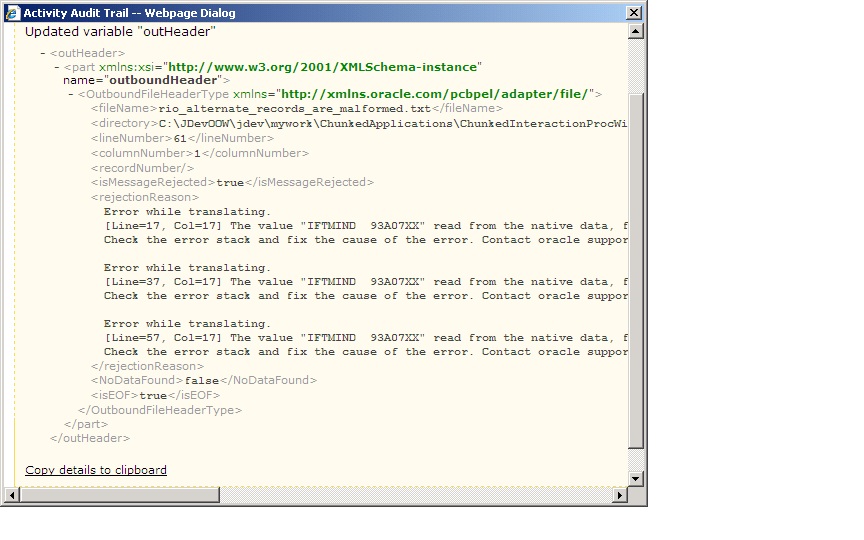

See Figure 4-7 for an example of the return header appearance in a scenario that employs the nxsd:uniqueMessageSeparator construct.

This scenario shows a chunked interaction with a file that had six records (each of which was complex), where every other record was malformed. In the scenario, the ChunkSize used was five.

The returnHeader shows that the messages have been rejected (isMessageRejected=true) and the rejection reason is populated for the three malformed records: specifically, the records at lines 17, 37, and 57 were malformed.

The NoDataFound parameter is set to false, which means that the data for the remaining three records is returned.

Figure 4-7 Return Outbound Header Appearance when nxsd:uniqueMessageSeparator is Used

Description of "Figure 4-7 Return Outbound Header Appearance when nxsd:uniqueMessageSeparator is Used"

The same records are also rejected to the user-configured rejection folder (C:\foo in this case). See Figure 4-8.

Figure 4-8 Chunked Read Interaction Rejected Messages in Rejection Folder

Description of "Figure 4-8 Chunked Read Interaction Rejected Messages in Rejection Folder "

4.2.6 File Sorting

When files must be processed by Oracle File and FTP Adapters in a specific order, you can use the file sorting functionality of the adapter. For example, you can configure the sorting parameters for Oracle File and FTP Adapters to process files in ascending or descending order by time stamps.

You must meet the following prerequisites for sorting scenarios of Oracle File and FTP Adapters:

- Use a synchronous operation

- Add the following properties to the inbound JCA file:

    <property name="ListSorter" value="oracle.tip.adapter.file.inbound.listing.TimestampSorterAscending"/>
    <property name="SingleThreadModel" value="true"/>
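To make the effect of timestamp ordering concrete, the following sketch sorts a directory listing by last-modified time using a standard java.util.Comparator. It does not reproduce the adapter's TimestampSorterAscending class, whose internals are not shown here; FileEntry, ASCENDING, and processingOrder are hypothetical names used only for illustration.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

/** Illustrates ordering a file listing by timestamp (hypothetical, not the adapter's sorter). */
final class TimestampSortSketch {

    /** Hypothetical stand-in for one entry in a directory listing. */
    static final class FileEntry {
        final String name;
        final long lastModifiedMillis;

        FileEntry(String name, long lastModifiedMillis) {
            this.name = name;
            this.lastModifiedMillis = lastModifiedMillis;
        }
    }

    /** Oldest file first. Use ASCENDING.reversed() for newest first. */
    static final Comparator<FileEntry> ASCENDING =
            Comparator.comparingLong(e -> e.lastModifiedMillis);

    /** Returns the file names in the order in which they would be processed. */
    static List<String> processingOrder(List<FileEntry> listing, Comparator<FileEntry> sorter) {
        List<FileEntry> sorted = new ArrayList<>(listing);
        sorted.sort(sorter);
        List<String> names = new ArrayList<>();
        for (FileEntry entry : sorted) {
            names.add(entry.name);
        }
        return names;
    }

    public static void main(String[] args) {
        List<FileEntry> listing = new ArrayList<>();
        listing.add(new FileEntry("b.txt", 2000L));
        listing.add(new FileEntry("c.txt", 3000L));
        listing.add(new FileEntry("a.txt", 1000L));
        System.out.println(processingOrder(listing, ASCENDING));
        System.out.println(processingOrder(listing, ASCENDING.reversed()));
    }
}
```

Sorting only fixes the order of the listing. The SingleThreadModel property shown above matters because files handed to several threads at once could still complete out of order.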

4.2.7 Dynamic Outbound Directory and File Name Specification

The Oracle File and FTP Adapters enable you to dynamically specify the logical or physical name of the outbound file or outbound directory. For information about how to specify dynamic outbound directory, see Outbound File Directory Creation.

4.2.8 Security

The Oracle FTP Adapter supports FTP over SSL (FTPS) and Secure FTP (SFTP) to enable secure file transfer over a network.

For more information, see Using Secure FTP with the Oracle FTP Adapter and Using SFTP with Oracle FTP Adapter.

4.2.9 Nontransactional

The Oracle File Adapter picks up a file from an inbound directory, processes the file, and sends the processed file to an output directory. However, during this process if a failover occurs in the Oracle RAC back end or in an SOA managed server, then the file is processed twice because of the nontransactional nature of Oracle File Adapter. As a result, there can be duplicate files in the output directory.

4.2.10 Proxy Support

You can use the proxy support feature of the Oracle FTP Adapter to transfer and retrieve data to and from the FTP servers that are located outside a firewall or can only be accessed through a proxy server. A proxy server enables the hosts in an intranet to indirectly connect to hosts on the Internet. Figure 4-9 shows how a proxy server creates connections to simulate a direct connection between the client and the remote FTP server.

Figure 4-9 Remote FTP Server Communication Through a Proxy Server

Description of "Figure 4-9 Remote FTP Server Communication Through a Proxy Server"

To use the HTTP proxy feature, your proxy server must support FTP traffic through HTTP Connection. In addition, only passive data connections are supported with this feature. For information about how to configure the Oracle FTP Adapter, see Configuring for HTTP Proxy.

4.2.11 No Payload Support

For Oracle BPEL PM and Mediator, the Oracle File and FTP Adapters support publishing only file metadata, such as file name, directory, file size, and last modified time, to a BPEL process or Mediator, without the payload. The process can use this metadata for subsequent processing. For example, the process can call another reference and pass the file and directory name for further processing. You can use the Oracle File and FTP Adapters as a notification service to notify a process whenever a new file appears in the inbound directory. To use this feature, select the Do not read file content check box in the JDeveloper wizard while configuring the Read operation.

4.2.12 Large Payload Support

For Oracle BPEL PM and Mediator, the Oracle File Adapter provides support for transferring large files as attachments. To use this feature, select the Read File As Attachment check box in the JDeveloper Configuration wizard while configuring the Read operation.

This option opaquely transfers a large amount of data from one place to another as attachments. For example, you can transfer large MS Word documents, images, and PDFs without processing their content within the composite application. For information about how to pass large payloads as attachments, see Read File As Attachments.

Additionally, the Oracle File Adapter provides you with the ability to write files as an attachment. When you write files as attachments, and also have a normal payload, it is the attached file that is written, and the payload is ignored.

Note:

You must not pass large payloads as opaque schemas.

4.2.13 File-Based Triggers

You can use the Oracle File and FTP Adapters, which provide support for file-based triggers, to control inbound adapter endpoint activation. For information about how to use file-based triggers, see File Polling.

4.2.14 Pre-Processing and Post-Processing of Files

The process modeler may encounter situations where files must be pre-processed before they are picked up for processing or post-processed before the files are written out to the destination folder. For example, the files that the Oracle File and FTP Adapters receive may be compressed or encrypted, and the adapter must decompress or decrypt the files before processing. In this case, you must use custom code to decompress or decrypt the files before processing. The Oracle File and FTP Adapters support the use of custom code that can be plugged in for pre-processing or post-processing of files.

The implementation of the pre-processing and post-processing of files is restricted to the following communication modes of the Oracle File and FTP Adapters:

- Read File or Get File

- Write File or Put File

- Synchronous Read File

- Chunked Read

This section contains the following topics:

4.2.14.1 Mechanism For Pre-Processing and Post-Processing of Files

The mechanism for pre-processing and post-processing of files is configured as pipelines and valves. This section describes the concept of pipelines and valves.

A pipeline consists of a series of custom-defined valves. A pipeline loads a stream from the file system, subjects the stream to processing by an ordered sequence of valves, and after the processing returns the modified stream to the adapter.

A valve is the primary component of execution in a processing pipeline. A valve processes the content it receives and forwards the processed content to the next valve. For example, in a scenario where the Oracle File and FTP Adapters receive files that are encrypted and zipped, you can configure a pipeline with an unzip valve followed by a decryption valve. The unzip valve extracts the file content before forwarding it to the decryption valve, which decrypts the content and the final content is made available to the Oracle File or FTP Adapter as shown in Figure 4-10.

Figure 4-10 A Sample Pre-Processing Pipeline

Description of "Figure 4-10 A Sample Pre-Processing Pipeline"
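The chaining idea behind a pipeline can be sketched with standard Java streams. This is not the adapter's pipeline API: StreamValve, UNZIP, xorDecrypt, and the other names are hypothetical, GZIP stands in for zip to keep the sketch short, and a toy XOR cipher stands in for real decryption. The sketch assumes Java 9 or later.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.FilterInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.util.List;
import java.util.zip.GZIPInputStream;
import java.util.zip.GZIPOutputStream;

/** Chains stream transformations in order, in the spirit of a valve pipeline (hypothetical). */
final class PipelineSketch {

    /** Hypothetical stand-in for a valve: takes a stream and returns a transformed stream. */
    interface StreamValve {
        InputStream execute(InputStream in) throws IOException;
    }

    /** Decompresses GZIP content (stand-in for an unzip valve). */
    static final StreamValve UNZIP = GZIPInputStream::new;

    /** Reverses a toy XOR cipher (stand-in for a real decryption valve). */
    static StreamValve xorDecrypt(int key) {
        return source -> new FilterInputStream(source) {
            @Override
            public int read() throws IOException {
                int b = super.read();
                return b < 0 ? b : (b ^ key) & 0xFF;
            }

            @Override
            public int read(byte[] buf, int off, int len) throws IOException {
                int n = super.read(buf, off, len);
                for (int i = 0; i < n; i++) {
                    buf[off + i] ^= (byte) key;
                }
                return n;
            }
        };
    }

    /** Passes the source through each valve in order and returns the final stream. */
    static InputStream run(List<StreamValve> valves, InputStream source) throws IOException {
        InputStream current = source;
        for (StreamValve valve : valves) {
            current = valve.execute(current);
        }
        return current;
    }

    /** Builds sample input by applying the XOR cipher and then GZIP (reverse of the pipeline). */
    static byte[] encryptThenZip(String text, int key) {
        byte[] data = text.getBytes(StandardCharsets.UTF_8);
        for (int i = 0; i < data.length; i++) {
            data[i] ^= (byte) key;
        }
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        try (GZIPOutputStream gz = new GZIPOutputStream(out)) {
            gz.write(data);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return out.toByteArray();
    }

    /** Runs the unzip valve followed by the decrypt valve and returns the recovered text. */
    static String process(byte[] file, int key) {
        try (InputStream in = run(List.of(UNZIP, xorDecrypt(key)), new ByteArrayInputStream(file))) {
            return new String(in.readAllBytes(), StandardCharsets.UTF_8);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        byte[] file = encryptThenZip("order-42,widget,3", 0x5A);
        System.out.println(process(file, 0x5A));
    }
}
```

The order of the valves matters: the sample input is encrypted first and compressed second, so the pipeline must decompress before it decrypts.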

4.2.14.2 Configuring a Pipeline

Configuring the mechanism for pre-processing and post-processing of files requires defining a pipeline and configuring it in the corresponding JCA file.

To configure a pipeline, you must perform the following steps:

4.2.14.2.1 Implementing and Extending Valves

All valves must implement the Valve or StagedValve interface.

Tip:

You can extend either the AbstractValve or the AbstractStagedValve class, depending on your business requirements, rather than implementing a valve from the beginning.

The following example shows the Valve interface.

Example - The Valve Interface

    package oracle.tip.pc.services.pipeline;

    import java.io.IOException;

    /**
     * <p>
     * Valve component is responsible for processing the input stream
     * and returning a modified input stream.
     * The <code>execute()</code> method of the valve gets invoked
     * by the caller (on behalf) of the pipeline. This method must
     * return the input stream wrapped within an InputStreamContext.
     * The Valve is also responsible for error handling specifically
     *
     * The Valve can be marked as reentrant in which case the caller
     * must call the <code>execute()</code> multiple times and each
     * invocation must return a new input stream. This is useful, if
     * you are writing an UnzipValve since each iteration of the valve
     * must return the input stream for a different zipped entry.
     * <b> You must note that only the first Valve in the pipeline can
     * be reentrant </b>
     *
     * The Valve has another flavor <code>StagedValve</code> and if
     * the valve implements StagedValve, then the valve must store
     * intermediate content in a staging file and return it whenever
     * required.
     * </p>
     */
    public interface Valve {

        /**
         * Set the Pipeline instance. This parameter can be
         * used to get a reference to the PipelineContext instance.
         * @param pipeline
         */
        public void setPipeline(Pipeline pipeline);

        /**
         * Returns the Pipeline instance.
         * @return
         */
        public Pipeline getPipeline();

        /**
         * Returns true if the valve has more input streams to return.
         * For example, if the input stream is from a zipped file, then
         * each invocation of <code>execute()</code> returns a different
         * input stream once for each zipped entry. The caller calls
         * <code>hasNext()</code> to check if more entries are available
         * @return true/false
         */
        public boolean hasNext();

        /**
         * Set to true if the caller can call the valve multiple times
         * e.g. in case of ZippedInputStreams
         * @param reentrant
         */
        public void setReentrant(boolean reentrant);

        /**
         * Returns true if the valve is reentrant.
         * @return
         */
        public boolean isReentrant();

        /**
         * The method is called by pipeline to return the modified input stream
         * @param in
         * @return InputStreamContext that wraps
         * the input stream along with required metadata
         * @throws PipelineException
         */
        public InputStreamContext execute(InputStreamContext in)
            throws PipelineException, IOException;

        /**
         * This method is called by the pipeline after the caller
         * publishes the message to the SCA container.
         * In the case of a zipped file, this method gets called
         * repeatedly, once for each entry in the zip file.
         * This should be used by the Valve to do additional tasks
         * such as delete the staging file that has been processed
         * in a reentrant scenario.
         * @param in The original InputStreamContext returned from <code>execute()</code>
         */
        public void finalize(InputStreamContext in);

        /**
         * Cleans up intermediate staging files, input streams
         * @throws PipelineException, IOException
         */
        public void cleanup() throws PipelineException, IOException;
    }

The StagedValve stores intermediate content in staging files. The following example shows the StagedValve interface extending the Valve interface.

Example - The StagedValve Interface Extending the Valve Interface

    package oracle.tip.pc.services.pipeline;

    import java.io.File;

    /**
     * A special valve that stages the modified
     * input stream in a staging file.
     * If such a <code>Valve</code> exists, then
     * it must return the staging file containing
     * the intermediate data.
     */
    public interface StagedValve extends Valve {

        /**
         * @return staging file where the valve
         * stores its intermediate results
         */
        public File getStagingFile();
    }

The following example shows the AbstractValve class implementing the Valve interface.

Example - The AbstractValve Class Implementing the Valve Interface

    package oracle.tip.pc.services.pipeline;

    import java.io.IOException;

    /**
     * A bare bone implementation of Valve. The user should
     * extend from AbstractValve rather than implementing
     * a Valve from scratch
     */
    public abstract class AbstractValve implements Valve {

        /**
         * The pipeline instance is stored as a member
         */
        private Pipeline pipeline = null;

        /**
         * If reentrant is set to true, then the Valve must adhere to the following:
         * i) It must be the first valve in the pipeline
         * ii) Must implement hasNext method and return true if more input
         * streams are available
         * A reentrant valve will be called by the pipeline more than once and
         * each time the valve must return a different input stream,
         * for example Zipped entries within a zip file
         */
        private boolean reentrant = false;

        /*
         * Save the pipeline instance.
         *
         * @see oracle.tip.pc.services.pipeline.Valve#setPipeline(oracle.tip.pc.services.pipeline.Pipeline)
         */
        public void setPipeline(Pipeline pipeline) {
            this.pipeline = pipeline;
        }

        /*
         * Return the pipeline instance (non-Javadoc)
         *
         * @see oracle.tip.pc.services.pipeline.Valve#getPipeline()
         */
        public Pipeline getPipeline() {
            return this.pipeline;
        }

        /*
         * Return true if the valve is reentrant (non-Javadoc)
         *
         * @see oracle.tip.pc.services.pipeline.Valve#isReentrant()
         */
        public boolean isReentrant() {
            return this.reentrant;
        }

        /*
         * If set to true, the valve is reentrant (non-Javadoc)
         *
         * @see oracle.tip.pc.services.pipeline.Valve#setReentrant(boolean)
         */
        public void setReentrant(boolean reentrant) {
            this.reentrant = reentrant;
        }

        /*
         * By default, set to false. For valves that can return more than one
         * inputstreams to callers, this parameter must return true/false
         * depending on the availability of input streams (non-Javadoc)
         *
         * @see oracle.tip.pc.services.pipeline.Valve#hasNext()
         */
        public boolean hasNext() {
            return false;
        }

        /*
         * Implemented by concrete valve (non-Javadoc)
         *
         * @see oracle.tip.pc.services.pipeline.Valve#execute(InputStreamContext)
         */
        public abstract InputStreamContext execute(InputStreamContext in)
            throws PipelineException, IOException;

        /*
         * Implemented by concrete valve (non-Javadoc)
         *
         * @see oracle.tip.pc.services.pipeline.Valve#finalize(oracle.tip.pc.services.pipeline.InputStreamContext)
         */
        public abstract void finalize(InputStreamContext in);

        /*
         * Implemented by concrete valve (non-Javadoc)
         *
         * @see oracle.tip.pc.services.pipeline.Valve#cleanup()
         */
        public abstract void cleanup() throws PipelineException, IOException;
    }

The following example shows the AbstractStagedValve class extending the AbstractValve class.

Example - The AbstractStagedValve Class Extending the AbstractValve Class

package oracle.tip.pc.services.pipeline;

import java.io.File;
import java.io.IOException;

public abstract class AbstractStagedValve extends AbstractValve
        implements StagedValve {

    public abstract File getStagingFile();

    public abstract void cleanup() throws IOException, PipelineException;

    public abstract InputStreamContext execute(InputStreamContext in)
            throws IOException, PipelineException;
}

For more information on valves, see Oracle JCA Adapter Valves.

4.2.14.2.2 Compiling the Valves

- You must use the bpm-infra.jar file to compile the valves. The bpm-infra.jar file is located at $MW_HOME/AS11gR1SOA/soa/modules/oracle.soa.fabric_11.1.1/bpm-infra.jar.

- Add a reference to the bpm-infra.jar file in the SOA project, by using the following procedure:

  - In the Application Navigator, right-click the SOA project.

  - Select Project Properties. The Project Properties dialog is displayed.

  - Click Libraries and Classpath. The Libraries and Classpath pane is displayed as shown in Figure 4-11.

    Figure 4-11 The Project Properties Dialog

  - Click Add Jar/Directory. The Add Archive or Directory dialog is displayed.

  - Browse to select the bpm-infra.jar file. The bpm-infra.jar file is located at $MW_HOME/AS11gR1SOA/soa/modules/oracle.soa.fabric_11.1.1/bpm-infra.jar.

  - Click OK. The bpm-infra.jar file is listed under Classpath Entries.

- Compile the valves using the bpm-infra.jar file.

- Make the JAR file containing the compiled valves available to the Oracle WebLogic Server classpath by adding the JAR file to the soainfra domain classpath. For example, $MW_HOME/user_projects/domains/soainfra/lib.

Note:

Ensure that you compile the valves against bpm-infra.jar with JDK 6.0 to avoid a compilation error such as class file has wrong version 50.0, should be 49.0.

4.2.14.2.3 Creating a Pipeline

To configure a pipeline, you must create an XML file that conforms to the following schema:

Example - XML for Pipeline Creation

<?xml version="1.0" encoding="UTF-8" ?>
<xs:schema xmlns:xs="http://www.w3.org/2001/XMLSchema"
           targetNamespace="http://www.oracle.com/adapter/pipeline/">
  <xs:element name="pipeline">
    <xs:complexType>
      <xs:sequence>
        <xs:element ref="valves">
          <xs:complexType>
            <xs:sequence>
              <xs:element ref="valve" maxOccurs="unbounded">
                <xs:complexType mixed="true">
                  <xs:attribute name="reentrant" type="xs:NMTOKEN" use="optional" />
                </xs:complexType>
              </xs:element>
            </xs:sequence>
          </xs:complexType>
        </xs:element>
      </xs:sequence>
    </xs:complexType>
    <xs:attribute name="useStaging" type="xs:NMTOKEN" use="optional" />
    <xs:attribute name="batchNotificationHandler" type="xs:NMTOKEN" use="optional" />
  </xs:element>
</xs:schema>

The following is a sample XML file configured for a pipeline with two valves, SimpleUnzipValve and SimpleDecryptValve:

Example - XML File Configured for a Pipeline with Two Valves

<?xml version="1.0"?>
<pipeline xmlns="http://www.oracle.com/adapter/pipeline/">
  <valves>
    <valve>valves.SimpleUnzipValve</valve>
    <valve>valves.SimpleDecryptValve</valve>
  </valves>
</pipeline>

4.2.14.2.4 Adding the Pipeline to the SOA Project Directory

You must add the pipeline.xml file to the SOA project directory. This step is required to integrate the pipeline with the Oracle File or FTP Adapter. Figure 4-12 shows a sample pipeline.xml file (unzippipeline.xml) added to the InboundUnzipAndOutboundZip project.

Figure 4-12 Project with unzippipeline.xml File

4.2.14.2.5 Registering the Pipeline

The pipeline that is part of the SOA project must be registered by adding the following property to the inbound JCA file:

<property name="PipelineFile" value="pipeline.xml"/>

For example, in the JCA file shown in Figure 4-12, FileInUnzip_file.jca, the following property has been added to register an Unzip pipeline with an Oracle File Adapter:

<property name="PipelineFile" value="unzippipeline.xml"/>

There may be scenarios involving simple valves. A simple valve is one that does not require additional metadata such as re-entrancy and batchNotificationHandlers. If the scenario involves simple valves, then the pipeline can be configured as an ActivationSpec or an InteractionSpec property as shown in the following sample:

Example - Pipeline Configuration with Simple Valves

<?xml version="1.0" encoding="UTF-8"?>
<adapter-config name="FlatStructureIn" adapter="File Adapter"
                xmlns="http://platform.integration.oracle/blocks/adapter/fw/metadata">
  <connection-factory location="eis/FileAdapter" UIincludeWildcard="*.txt" adapterRef=""/>
  <endpoint-activation operation="Read">
    <activation-spec className="oracle.tip.adapter.file.inbound.FileActivationSpec">
      <property name="UseHeaders" value="false"/>
      <property name="LogicalDirectory" value="InputFileDir"/>
      <property name="Recursive" value="true"/>
      <property name="DeleteFile" value="true"/>
      <property name="IncludeFiles" value=".*\.txt"/>
      <property name="PollingFrequency" value="10"/>
      <property name="MinimumAge" value="0"/>
      <property name="OpaqueSchema" value="false"/>
      <property name="PipelineValves" value="valves.SimpleUnzipValve,valves.SimpleDecryptValve"/>
    </activation-spec>
  </endpoint-activation>
</adapter-config>

Note:

There is no space after the comma (,) in the PipelineValves property value.

Note:

If you configure a pipeline using the PipelineValves property, then you cannot configure additional metadata such as Re-entrant Valve and Batch Notification Handler. Additional metadata can be configured only with PipelineFile, which is used for the XML-based approach.

4.2.14.3 Using a Re-Entrant Valve For Processing Zip Files

The re-entrant valve enables you to process individual entries within a zip file. In a scenario that involves processing all entries within a zip file, wherein each entry is encrypted using the Data Encryption Standard (DES), you can configure the valve by adding the reentrant="true" attribute to the unzip valve as follows:

Example - Configuring the reentrant=true Attribute

<?xml version="1.0"?>

<pipeline xmlns="http://www.oracle.com/adapter/pipeline/">

<valves>

<valve reentrant="true">valves.ReentrantUnzipValve</valve>

<valve> valves.SimpleDecryptValve </valve>

</valves>

</pipeline>

In this example, the pipeline invokes the ReentrantUnzipValve and then the SimpleDecryptValve repeatedly in the same order until the entire zip file has been processed. In other words, the ReentrantUnzipValve is invoked first to return the data from the first zipped entry, which is then fed to the SimpleDecryptValve for decryption, and the final content is returned to the Adapter. The process repeats until all the entries within the zip file are processed.

Additionally, the valve must set the message key using the setMessageKey() API. For more information refer to An Unzip Valve for processing Multiple Files.
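The repeat-until-exhausted behavior described above can be sketched without the Oracle pipeline classes. The following is a minimal, self-contained illustration that uses only java.util.zip. The class ReentrantUnzipSketch and its methods are hypothetical stand-ins: they do not implement the Oracle Valve interface, and the drain method merely plays the role of the pipeline.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;
import java.util.zip.ZipEntry;
import java.util.zip.ZipInputStream;
import java.util.zip.ZipOutputStream;

/** Hypothetical stand-in for a re-entrant valve; not the Oracle Valve interface. */
class ReentrantUnzipSketch {
    private final ZipInputStream zip;
    private ZipEntry current;

    ReentrantUnzipSketch(InputStream zipped) throws IOException {
        this.zip = new ZipInputStream(zipped);
        this.current = zip.getNextEntry(); // position on the first entry
    }

    /** Mirrors hasNext(): true while another zipped entry is available. */
    boolean hasNext() {
        return current != null;
    }

    /** Mirrors one execute() call: returns "entryName=content" and advances. */
    String execute() throws IOException {
        ByteArrayOutputStream body = new ByteArrayOutputStream();
        byte[] buffer = new byte[4096];
        int n;
        while ((n = zip.read(buffer)) != -1) { // -1 marks the end of the current entry only
            body.write(buffer, 0, n);
        }
        String content = new String(body.toByteArray(), StandardCharsets.UTF_8);
        String result = current.getName() + "=" + content;
        current = zip.getNextEntry();
        return result;
    }

    /** Builds a two-entry zip in memory so the sketch needs no files. */
    static byte[] sampleZip() {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (ZipOutputStream out = new ZipOutputStream(bytes)) {
            out.putNextEntry(new ZipEntry("a.txt"));
            out.write("first".getBytes(StandardCharsets.UTF_8));
            out.closeEntry();
            out.putNextEntry(new ZipEntry("b.txt"));
            out.write("second".getBytes(StandardCharsets.UTF_8));
            out.closeEntry();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return bytes.toByteArray();
    }

    /** Plays the pipeline's role: calls the valve until hasNext() is false. */
    static List<String> drain(byte[] zipped) {
        List<String> results = new ArrayList<>();
        try {
            ReentrantUnzipSketch valve =
                    new ReentrantUnzipSketch(new ByteArrayInputStream(zipped));
            while (valve.hasNext()) {
                results.add(valve.execute());
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return results;
    }
}
```

In a real valve, each returned entry would be passed to the next valve (for example, decryption) before the pipeline asks the first valve for the next entry. The entry name used here is only a convenient label for the sketch.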

Error Handling For Zip Files

If there are translation errors for individual entries within the zip file, entries with the translation errors are rejected and the other entries are processed.

If there are errors during the publish operation, the publish operation is retried and the retry semantic holds. If the retry semantic does not hold, then the original file is rejected and the pipeline ends.

4.2.14.4 Configuring the Batch Notification Handler

The BatchNotificationHandler API is used with the inbound de-batching capability of the Oracle File and FTP Adapters. In a de-batching scenario, each file contains multiple messages, and some bookkeeping is required for crash recovery. The BatchNotificationHandler API facilitates this by letting you receive a notification from the pipeline whenever a batch begins, is submitted, or ends. The following example shows the BatchNotificationHandler interface:

Example - BatchNotification Handler

package oracle.tip.pc.services.pipeline;

/*
 * Whenever the caller processes de-batchable files, each file can
 * have multiple messages and this handler allows the user to plug in
 * a notification mechanism into the pipeline.
 *
 * This is particularly useful in crash recovery situations
 */
public interface BatchNotificationHandler {

    /*
     * The Pipeline instance is set by the PipelineFactory when the
     * BatchNotificationHandler instance is created
     */
    public void setPipeline(Pipeline pipeline);

    public Pipeline getPipeline();

    /*
     * Called when the BatchNotificationHandler is instantiated
     */
    public void initialize();

    /*
     * Called by the adapter when a batch begins, the implementation must
     * return a BatchContext instance with the following information:
     * i) batchId: a unique id that will be returned every time onBatch is
     *    invoked by the caller
     * ii) line/col/record/offset: for error recovery cases
     */
    public BatchContext onBatchBegin();

    /*
     * Called by the adapter when a batch is submitted. The parameter holds
     * the line/column/record/offset for the successful batch that is
     * published. Here the implementation must save these in order to
     * recover from crashes
     */
    public void onBatch(BatchContext ctx);

    /*
     * Called by the adapter when a batch completes. This must be used to
     * clean up
     */
    public void onBatchCompletion(boolean success);
}

To use a pipeline with de-batching, you must configure the pipeline with a BatchNotificationHandler instance, as shown in the following example.

Example - Configuring the Pipeline with a BatchNotificationHandler Instance

<?xml version="1.0"?>
<pipeline xmlns="http://www.oracle.com/adapter/pipeline/"
          batchNotificationHandler="oracle.tip.pc.services.pipeline.ConsoleBatchNotificationHandler">
  <valves>
    <valve reentrant="true">valves.SimpleUnzipValve</valve>
    <valve>valves.SimpleDecryptValve</valve>
  </valves>
</pipeline>

4.2.15 Error Handling

The Oracle File Adapter and Oracle FTP Adapter provide inbound error handling capabilities, such as the uniqueMessageSeparator property.

In the case of debatching (multiple messages in a single file), messages from the first bad message to the end of the file are rejected. If each message has a unique separator and that separator is not part of any data, then rejection can be more fine-grained. In these cases, you can define a uniqueMessageSeparator property in the schema element of the native schema to have the value of this unique message separator. This property controls how the adapter translator works when parsing through multiple records in one file (debatching). This property enables recovery even when detecting bad messages inside a large batch file. When a bad record is detected, the adapter translator skips to the next unique message separator boundary and continues from there. If you do not set this property, then all records that follow the record with errors are also rejected.

The following example shows a schema file that uses the uniqueMessageSeparator property:

Example - Schema File Showing Use of uniqueMessageSeparator Property

<?xml version="1.0" ?>
<xsd:schema xmlns:xsd="http://www.w3.org/2001/XMLSchema"
            xmlns:nxsd="http://xmlns.oracle.com/pcbpel/nxsd"
            targetNamespace="http://TargetNamespace.com/Reader"
            xmlns:tns="http://TargetNamespace.com/Reader"
            elementFormDefault="qualified"
            attributeFormDefault="unqualified"
            nxsd:encoding="US-ASCII" nxsd:stream="chars"
            nxsd:version="NXSD"
            nxsd:uniqueMessageSeparator="${eol}">
  <xsd:element name="emp-listing">
    <xsd:complexType>
      <xsd:sequence>
        <xsd:element name="emp" minOccurs="1" maxOccurs="unbounded">
          <xsd:complexType>
            <xsd:sequence>
              <xsd:element name="GUID" type="xsd:string"
                           nxsd:style="terminated" nxsd:terminatedBy=","
                           nxsd:quotedBy="&quot;">
              </xsd:element>
              <xsd:element name="Designation" type="xsd:string"
                           nxsd:style="terminated" nxsd:terminatedBy=","
                           nxsd:quotedBy="&quot;">
              </xsd:element>
              <xsd:element name="Car" type="xsd:string"
                           nxsd:style="terminated" nxsd:terminatedBy=","
                           nxsd:quotedBy="&quot;">
              </xsd:element>
              <xsd:element name="Labtop" type="xsd:string"
                           nxsd:style="terminated" nxsd:terminatedBy=","
                           nxsd:quotedBy="&quot;">
              </xsd:element>
              <xsd:element name="Location" type="xsd:string"
                           nxsd:style="terminated" nxsd:terminatedBy=","
                           nxsd:quotedBy="&quot;">
              </xsd:element>
            </xsd:sequence>
          </xsd:complexType>
        </xsd:element>
      </xsd:sequence>
    </xsd:complexType>
  </xsd:element>
</xsd:schema>
<!--NXSDWIZ:D:\work\jDevProjects\Temp_BPEL_process\Sample2\note.txt:-->
<!--USE-HEADER:false:-->

For information about handling rejected messages, connection errors, and message errors, see Handling Rejected Messages.
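The difference that uniqueMessageSeparator makes during debatching can be illustrated with a small, self-contained sketch. This is not the adapter translator: the class SeparatorRecoverySketch, its methods, and the rule that a valid record has exactly five comma-separated fields (modeled loosely on the five elements in the schema above) are assumptions made for illustration only.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Pattern;

/** Hypothetical illustration of separator-based recovery; not the adapter's translator. */
class SeparatorRecoverySketch {

    /** Assumption for this sketch: a record is valid if it has exactly five fields. */
    static boolean isValid(String record) {
        return record.split(",", -1).length == 5;
    }

    /**
     * Returns the records that would be accepted from one batch.
     * With a unique separator, a bad record is skipped and parsing resumes at
     * the next separator boundary. Without one, every record from the first
     * bad record to the end of the batch is rejected.
     */
    static List<String> accept(String batch, String separator, boolean uniqueSeparator) {
        List<String> accepted = new ArrayList<>();
        for (String record : batch.split(Pattern.quote(separator))) {
            if (isValid(record)) {
                accepted.add(record);
            } else if (!uniqueSeparator) {
                break; // cannot resynchronize: reject the rest of the batch
            }
            // otherwise skip only this record and continue at the next boundary
        }
        return accepted;
    }
}
```

For a three-record batch whose second record is malformed, accept returns the first and third records when uniqueSeparator is true, and only the first record when it is false.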

4.2.15.1 Sending a Malformed XML File to a Local File System Folder

During an inbound Read operation, a malformed XML file results in an error. By default, the errored file is sent to the remote file system for archival.

To archive the errored file on a local file system instead, specify the useRemoteErrorArchive property in the JCA file and set it to false. The default value for this property is true.

4.2.16 Threading Model

This section describes the threading models that Oracle File and FTP Adapters support. An understanding of the threading models is required to throttle or de-throttle the Oracle File and FTP Adapters. The Oracle File and FTP Adapters use the following threading models:

4.2.16.1 Default Threading Model

In the default threading model, a poller is created for each inbound Oracle File or FTP Adapter endpoint. The poller enqueues file metadata into an in-memory queue, which is processed by a global pool of processor threads. Figure 4-13 shows a default threading model.

The following steps highlight the functioning of the default threading model:

- The poller periodically looks for files in the input directory. The interval at which the poller looks for files is specified using the PollingFrequency parameter in the inbound JCA file.

- For each new file that the poller detects in the configured inbound directory, the poller enqueues information such as file name, file directory, modified time, and file size into an internal in-memory queue.

  Note:

  New files are ones that are not already being processed.

- A global pool of processor worker threads waits to process entries from the in-memory queue.

- Processor worker threads pick up files from the internal queue, and perform the following actions:

  - Stream the file content to an appropriate translator (for example, a translator for reading text, binary, XML, or opaque data).

  - Publish the XML result from the translator to the SCA infrastructure.

  - Perform the required postprocessing, such as deletion or archival after the file is processed.
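The poller, in-memory queue, and processor pool described in the steps above follow a common producer-consumer arrangement, which can be sketched with the standard java.util.concurrent classes. The class DefaultThreadingSketch, the STOP marker, and the "processed:" label are hypothetical; the sketch shows the general pattern under those assumptions and is not the adapter's implementation.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Queue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;

/** Hypothetical producer-consumer sketch of the default threading model. */
class DefaultThreadingSketch {
    /** Marker that tells a worker there is nothing more to process. */
    private static final String STOP = "\u0000stop";

    static List<String> run(List<String> fileNames, int workers) {
        BlockingQueue<String> queue = new LinkedBlockingQueue<>();  // in-memory queue
        Queue<String> published = new ConcurrentLinkedQueue<>();    // stands in for publishing
        ExecutorService pool = Executors.newFixedThreadPool(workers);

        // Processor worker threads: take metadata from the queue and process it.
        for (int i = 0; i < workers; i++) {
            pool.execute(() -> {
                try {
                    for (String name = queue.take(); !STOP.equals(name); name = queue.take()) {
                        published.add("processed:" + name); // translate, publish, post-process
                    }
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
        }

        try {
            // Poller: one polling pass that only enqueues file metadata.
            for (String name : fileNames) {
                queue.put(name);
            }
            for (int i = 0; i < workers; i++) {
                queue.put(STOP); // one stop marker per worker
            }
            pool.shutdown();
            if (!pool.awaitTermination(10, TimeUnit.SECONDS)) {
                throw new IllegalStateException("workers did not finish in time");
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }

        List<String> result = new ArrayList<>(published);
        Collections.sort(result); // completion order is nondeterministic, so sort for display
        return result;
    }
}
```

Because the poller only enqueues metadata, a slow file does not block polling; the number of worker threads bounds how many files are processed concurrently.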

4.2.16.2 Modified Threading Model

You can modify the default threading behavior of Oracle File and FTP Adapters. Modifying the threading model results in a modified throttling behavior of the Oracle File and FTP Adapters. The following sections describe the modified threading behavior of the Oracle File and FTP Adapters:

4.2.16.2.1 Single Threaded Model

The single threaded model is a modified threaded model that enables the poller to assume the role of a processor. The poller thread processes the files in the same thread. The global pool of processor threads is not used in this model. You can define the property for a single threaded model in the inbound JCA file as follows:

Example - Defining the Property for a Single-Threaded Model

<activation-spec className="oracle.tip.adapter.file.inbound.FileActivationSpec">
  <property../>
  <property name="SingleThreadModel" value="true"/>
  <property../>
</activation-spec>

4.2.16.2.2 Partitioned Threaded Model

The partitioned threaded model is a modified threaded model in which the in-memory queue is partitioned and each composite application receives its own in-memory queue. The Oracle File and FTP Adapters are enabled to create their own processor threads rather than depend on the global pool of processor worker threads for processing the enqueued files. You can define the property for a partitioned model in the inbound JCA file. See the example below.

Example - Defining the Property for a Partitioned Model in the Inbound JCA File

<activation-spec className="oracle.tip.adapter.file.inbound.FileActivationSpec">
  <property../>
  <property name="ThreadCount" value="4"/>
  <property../>
</activation-spec>

In the preceding example for defining the property for a partitioned model:

- If the ThreadCount property is set to 0, the threading behavior is like that of the single threaded model.

- If the ThreadCount property is set to -1, the global thread pool is used, as in the default threading model.

- The maximum value for the ThreadCount property is 40.
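The three rules above can be summarized as a small decision function. The class ThreadCountSketch and its enum are hypothetical names, and rejecting out-of-range values with an exception is an assumption of this sketch; the source states only that 40 is the maximum, not how the adapter reacts to larger values.

```java
/** Hypothetical summary of how a ThreadCount value selects a threading model. */
class ThreadCountSketch {
    enum Model { SINGLE_THREADED, GLOBAL_POOL, PARTITIONED }

    static final int MAX_THREAD_COUNT = 40;

    static Model modelFor(int threadCount) {
        if (threadCount == 0) {
            return Model.SINGLE_THREADED; // behaves like the single threaded model
        }
        if (threadCount == -1) {
            return Model.GLOBAL_POOL;     // falls back to the default threading model
        }
        if (threadCount < -1 || threadCount > MAX_THREAD_COUNT) {
            // Assumption: this sketch rejects values outside the documented range.
            throw new IllegalArgumentException("ThreadCount out of range: " + threadCount);
        }
        return Model.PARTITIONED;         // the endpoint gets its own processor threads
    }
}
```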

4.2.17 Performance Tuning

The Oracle File and FTP Adapters support the performance tuning feature by providing knobs to throttle the inbound and outbound operations. The Oracle File and FTP Adapters also provide parameters that you can use to tune the performance of outbound operations.

For more information about performance tuning, see Oracle JCA Adapter Tuning Guide in this document.

4.2.18 High Availability

The Oracle File and FTP Adapters support the high availability feature for the active-active topology with Oracle BPEL Process Manager and Mediator service engines. They support this feature for both inbound and outbound operations.

4.2.19 Multiple Directories

The Oracle File and FTP Adapters support polling multiple directories within a single activation. In JDeveloper, you can specify multiple directories rather than a single directory. This applies to both physical and logical directories.

Note:

If the inbound Oracle File Adapter is configured for polling multiple directories for incoming files, then all the top-level directories (inbound directories where the input files appear) must exist before the file reader starts polling these directories.

After selecting the inbound directory or directories, you can also specify whether the subdirectories must be processed recursively. If you select the Process Files Recursively option, then the directories are processed recursively. This option is selected by default in the File Directories page, as shown in Figure 4-14.

When you choose multiple directories, the generated JCA files use a semicolon (;) as the separator for these directories. However, you can change the separator. If you do so, manually add the DirectorySeparator property, set to the chosen separator, to the generated JCA file. For example, to use a comma (,) as the separator, you must first change the separator to "," in the physical directory value and then add <property name="DirectorySeparator" value=","/> to the JCA file.
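To see why the separator in the directory value and the DirectorySeparator property must agree, consider the following minimal sketch of splitting a multi-directory value. The class DirectorySeparatorSketch is hypothetical, and trimming whitespace and skipping empty entries are assumptions of the sketch rather than documented adapter behavior.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Pattern;

/** Hypothetical sketch of splitting a multi-directory value on a separator. */
class DirectorySeparatorSketch {
    static final String DEFAULT_SEPARATOR = ";";

    static List<String> split(String directories, String separator) {
        // Fall back to the semicolon when no separator is configured.
        String sep = (separator == null || separator.isEmpty()) ? DEFAULT_SEPARATOR : separator;
        List<String> result = new ArrayList<>();
        // Pattern.quote treats the separator literally, even if it is a regex metacharacter.
        for (String dir : directories.split(Pattern.quote(sep))) {
            String trimmed = dir.trim();
            if (!trimmed.isEmpty()) {
                result.add(trimmed);
            }
        }
        return result;
    }
}
```

If the directory value uses commas but the separator is left at its default, the whole value is treated as one directory name, which is the mismatch the DirectorySeparator property avoids.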

Additionally, if you choose to process directories recursively and one or more subdirectories do not have the appropriate permissions, the inbound adapter throws an exception during processing. To ignore this exception, you must define a binding property with the name ignoreListingErrors in your composite.xml as shown in the example below.

Example - Defining a Binding Property with the Name ignoreListingErrors

<service name="FlatStructureIn">
  <interface.wsdl interface="http://xmlns.oracle.com/pcbpel/adapter/file/FlatStructureIn/#wsdl.interface(Read_ptt)"/>
  <binding.jca config="FlatStructureIn_file.jca">
    <property name="ignoreListingErrors" type="xs:string" many="false">true</property>
  </binding.jca>
</service>

Figure 4-14 The Adapter Configuration Wizard - File Directories Page


4.2.20 Append Mode

The Oracle File and FTP Adapters enable you to configure outbound interactions that append to an existing file. The Append to Existing File option enables the outbound invoke to write to the same file. You can specify the name of the file to append to in two ways:

- Statically: in the JCA file for the outbound Oracle File Adapter.

- Dynamically: using the header mechanism.

Note:

The append mode is not supported for SFTP scenarios, where instead of appending to the existing file, the file is overwritten.

When you select the Append to existing file option in the File Configuration page, batching options such as Number of Messages Equals, Elapsed Time Exceeds, and File Size Exceeds are disabled. Figure 4-15 displays the Append to existing file option.

Figure 4-15 The Adapter Configuration Wizard - File Configuration Page


The batching options are disabled if Append is chosen in the wizard. In addition, the following error message is displayed if you specify a dynamic file naming convention instead of a static file naming convention:

You cannot choose to Append Files and use a dynamic file naming convention at the same time

If you are using the "Append" functionality in Oracle FTP Adapter, ensure that your FTP server supports the "APPE" command.

4.2.21 Recursive Processing of Files Within Directories in Oracle FTP Adapter

In earlier versions of Oracle SOA Suite, the inbound Oracle FTP Adapter used the NLST (Name List) FTP command to read a list of file names from the FTP server. However, the NLST command does not return directory names and therefore does not allow recursive processing within directories. The Oracle FTP Adapter now uses the LIST command instead.

However, the response from the LIST command is different for different FTP servers. To incorporate the subtle differences in results from the LIST command in a standard manner, the following parameters are added to the deployment descriptor for Oracle FTP Adapter:

- defaultDateFormat: This parameter specifies the default date format value. On the FTP server, this is the value for files that are older. The default value for this parameter is MMM d yyyy, as most UNIX-type FTP servers return the last modified time stamp for older files in the MMM d yyyy format. For example, Jan 31 2006.

  You can find the default date format for your FTP server by running the ls -l command from an FTP command-line client. For example, ls -l on a vsftpd server running on Linux returns the following:

  -rw-r--r-- 1 500 500 377 Jan 22 2005 test.txt

  For Microsoft Windows NT FTP servers, the defaultDateFormat is MM-dd-yy hh:mma, for example, 03-24-09 08:06AM <DIR> oracle.

- recentDateFormat: This parameter specifies the recent date format value. On the FTP server, this is the value for files that were recently created. The default value for this parameter is MMM d HH:mm, as most UNIX-type FTP servers return the last modified date for recently created files in the MMM d HH:mm format, for example, Jan 31 21:32.

  You can find the recent date format for your FTP server by running the ls -l command from an FTP command-line client. For example, ls -l on a vsftpd server running on Linux returns the following:

  150 Here comes the directory listing.
  -rw-r--r-- 1 500 500 377 Jan 30 21:32 address.txt
  -rw-r--r-- 1 500 500 580 Jan 31 21:32 container.txt
  ...

  For Microsoft Windows NT FTP servers, the recentDateFormat parameter is in the MM-dd-yy hh:mma format, for example, 03-24-09 08:06AM <DIR> oracle.

- serverTimeZone: The server time zone, for example, America/Los_Angeles. If this parameter is set to blank, then the default time zone of the server running the Oracle FTP Adapter is used.

- listParserKey: Directs the Oracle FTP Adapter on how it should parse the response from the LIST command. The default value is UNIX, in which case the Oracle FTP Adapter uses a generic parser for UNIX-like FTP servers. Apart from UNIX, the other supported values are WIN and WINDOWS, which are specific to the Microsoft Windows NT FTP server.

  Note:

  The locale language for the FTP server can be different from the locale language for the operating system. Do not assume that the locale for the FTP server is the same as the locale for the operating system it is running on. You must set the serverLocaleLanguage, serverLocaleCountry, and serverLocaleVariant parameters in such cases.

- serverLocaleLanguage: This parameter specifies the locale construct for language.

- serverLocaleCountry: This parameter specifies the locale construct for country.

- serverLocaleVariant: This parameter specifies the locale construct for variant.

4.2.21.1 Configure the Parameters in the Deployment Descriptor

The standard date formats of an FTP server are usually configured when the FTP server is installed. If your FTP server uses a format "MMM d yyyy" for defaultDateFormat and "MMM d HH:mm" for recentDateFormat, then your Oracle FTP Adapter must use the same formats in its corresponding deployment descriptor.

If you enter ls -l from a command-line FTP client, you see output such as the following:

200 PORT command successful. Consider using PASV.
150 Here comes the directory listing.
-rw-r--r-- 1 500 500 377 Jan 22 21:32 1.txt
-rw-r--r-- 1 500 500 580 Jan 22 21:32 2.txt
...

This is the recentDateFormat parameter for your FTP server, for example MMM d HH:mm (Jan 22 21:32). Similarly, if your server has an old file, the server does not show the hour and minute part and it shows the following:

-rw-r--r-- 1 500 500 377 Jan 22 2005 test.txt

This is the default date format, for example MMM d yyyy (Jan 22 2005).

Additionally, the serverTimeZone parameter is used by the Oracle FTP Adapter to parse time stamps for an FTP server running in a specific time zone. The value is either an abbreviation such as "PST" or a full name such as "America/Los_Angeles".

The FTP server might also be running in a different locale. The serverLocaleLanguage, serverLocaleCountry, and serverLocaleVariant parameters are used to construct a locale from language, country, and variant, where:

- language is a lowercase two-letter ISO-639 code, for example, en.

- country is an uppercase two-letter ISO-3166 code, for example, US.

- variant is a vendor and browser-specific code.

If these locale parameters are absent, then the Oracle FTP Adapter uses the system locale to parse the time stamp.
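The interplay of date pattern, locale, and time zone described in this section can be tried out with the standard Java date classes. The following sketch parses the two sample time stamps from the listings above. The class ListDateParseSketch and its helper methods are hypothetical, and the sketch makes no claim about how the Oracle FTP Adapter parses listings internally.

```java
import java.text.ParseException;
import java.text.SimpleDateFormat;
import java.util.Calendar;
import java.util.Date;
import java.util.GregorianCalendar;
import java.util.Locale;
import java.util.TimeZone;

/** Hypothetical sketch: parsing LIST time stamps with a pattern, locale, and time zone. */
class ListDateParseSketch {

    /** Builds a Locale from a language code and a country code. */
    static Locale locale(String language, String country) {
        return new Locale.Builder().setLanguage(language).setRegion(country).build();
    }

    /** Parses a time stamp using the given pattern, locale, and server time zone. */
    static Date parse(String text, String pattern, Locale locale, String timeZoneId) {
        SimpleDateFormat format = new SimpleDateFormat(pattern, locale);
        format.setTimeZone(TimeZone.getTimeZone(timeZoneId));
        try {
            return format.parse(text);
        } catch (ParseException e) {
            throw new IllegalArgumentException(
                    "Cannot parse '" + text + "' with pattern " + pattern, e);
        }
    }

    /** Reads one calendar field (for example, Calendar.YEAR) in the given time zone. */
    static int field(Date date, String timeZoneId, int calendarField) {
        Calendar calendar = new GregorianCalendar(TimeZone.getTimeZone(timeZoneId));
        calendar.setTime(date);
        return calendar.get(calendarField);
    }
}
```

Parsing "Jan 22 2005" with MMM d yyyy yields a complete date. Parsing "Jan 22 21:32" with MMM d HH:mm succeeds, but SimpleDateFormat falls back to the year 1970 because the pattern carries no year, so any code that consumes the recent format must supply the year separately. A month name such as "Jan" parses only when the locale matches the language of the listing, which is why the locale parameters matter.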

Additionally, if the FTP server is running on a different system than Oracle SOA Suite, then you must handle the time zone differences between them. Convert the time difference between the FTP server and the system running the Oracle FTP Adapter to milliseconds, and add the value as the timestampOffset binding property in composite.xml.

For example, if the FTP server is six hours ahead of your local time, you must add the following endpoint property to your service or reference.

Example - Endpoint Property to Add if FTP Server is Ahead of Local Time

<service name="FTPDebatchingIn">
  <interface.wsdl interface="http://xmlns.oracle.com/pcbpel/adapter/ftp/FTPDebatchingIn/#wsdl.interface(Get_ptt)"/>
  <binding.jca config="DebatchingIn_ftp.jca">
    <property name="timestampOffset" type="xs:string" many="false" source="" override="may">21600000</property>
  </binding.jca>
</service>
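The value 21600000 in the example is six hours expressed in milliseconds (6 x 60 x 60 x 1000). The following sketch shows the conversion with java.time.Duration. The class TimestampOffsetSketch is a hypothetical helper, and it covers only the case described here, where the FTP server is ahead of local time.

```java
import java.time.Duration;

/** Hypothetical helper: converts a time difference to a timestampOffset value. */
class TimestampOffsetSketch {

    /** Returns the offset in milliseconds for a server that is ahead by the given amount. */
    static long offsetMillis(long hoursAhead, long minutesAhead) {
        return Duration.ofHours(hoursAhead).plusMinutes(minutesAhead).toMillis();
    }
}
```

For example, offsetMillis(6, 0) gives 21600000, and a server that is five and a half hours ahead would need offsetMillis(5, 30), which is 19800000.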

Some FTP servers do not work well with the LIST command. In such cases, use the NLST command for listing, but you cannot process directories recursively with NLST.

To use the NLST command, you must add the following property to the JCA file.

Example - Adding the NLST Property

<?xml version="1.0" encoding="UTF-8"?>
<adapter-config name="FTPDebatchingIn" adapter="Ftp Adapter"
                xmlns="http://platform.integration.oracle/blocks/adapter/fw/metadata">
  <connection-factory location="eis/Ftp/FtpAdapter" UIincludeWildcard="*.txt" adapterRef=""/>
  <endpoint-activation operation="Get">
    <activation-spec className="oracle.tip.adapter.ftp.inbound.FTPActivationSpec">
      …………………………………………..
      …………………………………………..
      <property name="UseNlst" value="true"/>
    </activation-spec>
  </endpoint-activation>
</adapter-config>

4.2.22 Securing Enterprise Information System Credentials

When a resource adapter makes an outbound connection with an Enterprise Information System (EIS), it must sign on with valid security credentials. In accordance with the J2CA 1.5 specification, Oracle WebLogic Server supports both container-managed and application-managed sign-on for outbound connections. At runtime, Oracle WebLogic Server determines the chosen sign-on mechanism, based on the information specified in either the invoking client component's deployment descriptor or the res-auth element of the resource adapter deployment descriptor. This section describes the procedure for securing the user name and password for Oracle JCA Adapters by using Oracle WebLogic Server container-managed sign-on.

Both Oracle WebLogic Server and EIS maintain independent security realms. A container-managed sign-on enables you to sign on to Oracle WebLogic Server and also be able to use applications that access EIS through a resource adapter without having to sign on separately to the EIS. Container-managed sign-on in Oracle WebLogic Server uses credential mappings. The credentials (user name/password pairs or security tokens) of Oracle WebLogic security principals (authenticated individual users or client applications) are mapped to the corresponding credentials required to access EIS. You can configure credential mappings for applicable security principals for any deployed resource adapter.

To use container-managed sign-on, you must first ensure that the connection pool you use supports container-managed sign-on. You can follow these steps to turn on container-managed sign-on for an existing connection pool or to create a new pool that supports container-managed sign-on.

4.3 Oracle File and FTP Adapter Concepts

The Oracle File and FTP Adapters concepts are discussed in the following sections:

4.3.1 Oracle File Adapter Read File Concepts

In the inbound direction, the Oracle File Adapter polls and reads files from a file system for processing. This section provides an overview of the inbound file reading capabilities of the Oracle File Adapter. You use the Adapter Configuration Wizard to configure the Oracle File Adapter for use with a BPEL process or a Mediator. Configuring the Oracle File Adapter creates an inbound WSDL and JCA file pair.

The following sections describe the Oracle File Adapter read file concepts:

4.3.1.1 Inbound Operation

For inbound operations with the Oracle File Adapter, select the Read File operation, as shown in Figure 4-25.

Figure 4-25 Selecting the Read File Operation


4.3.1.2 Inbound File Directory Specifications

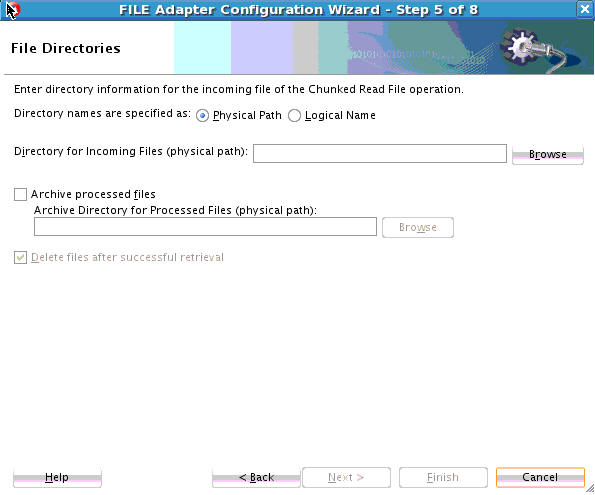

The File Directories page of the Adapter Configuration Wizard shown in Figure 4-26 enables you to specify information about the directory to use for reading inbound files and the directories in which to place successfully processed files. You can choose to process files recursively within directories. You can also specify multiple directories.

Figure 4-26 The Adapter Configuration Wizard - Specifying Incoming Files


The following sections describe the file directory information to specify:

4.3.1.2.1 Specifying Inbound Physical or Logical Directory Paths in SOA Composite

You can specify inbound directory names as physical or logical paths in the composite involving Oracle BPEL PM and Mediator. Physical paths are values such as c:\inputDir.

Note:

If the inbound Oracle File Adapter is configured for polling multiple directories for incoming files, then all the top-level directories (inbound directories where the input file appears) must exist before the file reader starts polling these directories.

In the composite, logical properties are specified in the inbound JCA file and their logical-physical mapping is resolved by using binding properties. You specify the logical parameters once at design time, and you can later modify the physical directory name as required.

For example, the generated inbound JCA file looks as follows for the logical input directory name InputFileDir.

Example - Generated Inbound .jca File

<?xml version="1.0" encoding="UTF-8"?>
<adapter-config name="FlatStructureIn" adapter="File Adapter"
    xmlns="http://platform.integration.oracle/blocks/adapter/fw/metadata">
  <connection-factory location="eis/FileAdapter" UIincludeWildcard="*.txt" adapterRef=""/>
  <endpoint-activation operation="Read">
    <activation-spec className="oracle.tip.adapter.file.inbound.FileActivationSpec">
      <property name="UseHeaders" value="false"/>
      <property name="LogicalDirectory" value="InputFileDir"/>
      <property name="Recursive" value="true"/>
      <property name="DeleteFile" value="true"/>
      <property name="IncludeFiles" value=".*\.txt"/>
      <property name="PollingFrequency" value="10"/>
      <property name="MinimumAge" value="0"/>
      <property name="OpaqueSchema" value="false"/>
    </activation-spec>
  </endpoint-activation>
</adapter-config>

In the composite.xml file, you then provide the physical parameter values (in this case, the directory path) of the corresponding logical ActivationSpec or InteractionSpec. This resolves the mapping between the logical directory name and actual physical directory name. See the example below.

Example - Providing the Directory Path of the Corresponding ActivationSpec or InteractionSpec

<service name="FlatStructureIn">
  <interface.wsdl interface="http://xmlns.oracle.com/pcbpel/adapter/file/FlatStructureIn/#wsdl.interface(Read_ptt)"/>
  <binding.jca config="FlatStructureIn_file.jca">
    <property name="InputFileDir" type="xs:string" many="false" source="" override="may">/home/user/inputDir</property>
  </binding.jca>
</service>

4.3.1.2.2 Archiving Successfully Processed Files

This option enables you to specify a directory in which to place successfully processed files. You can also specify the archive directory as a logical name. In this case, you must follow the logical-to-physical mappings described in Specifying Inbound Physical or Logical Directory Paths in SOA Composite.

4.3.1.2.3 Deleting Files After Retrieval

This option enables you to specify whether to delete files after a successful retrieval. If this check box is not selected, processed files remain in the inbound directory but are ignored. Only files with modification dates more recent than the last processed file are retrieved. If you place another file in the inbound directory with the same name as a file that has been processed but the modification date remains the same, then that file is not retrieved.
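The modification-date rule above can be pictured with a small Java sketch. It is illustrative only and is not the adapter's implementation. The class name, method name, and the use of a map from file name to timestamp are assumptions made for the example.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical model of the "newer than the last processed file" rule.
// This is NOT the Oracle File Adapter's code.
final class LastModifiedFilterSketch {

    // Returns the names of files whose modification time (epoch millis)
    // is strictly more recent than that of the last processed file.
    static List<String> selectUnprocessed(Map<String, Long> modifiedByName,
                                          long lastProcessedMillis) {
        List<String> selected = new ArrayList<>();
        for (Map.Entry<String, Long> entry : modifiedByName.entrySet()) {
            if (entry.getValue() > lastProcessedMillis) {
                selected.add(entry.getKey());
            }
        }
        return selected;
    }

    public static void main(String[] args) {
        Map<String, Long> inbound = new LinkedHashMap<>();
        inbound.put("po1.txt", 1_000L); // same timestamp as the last processed file
        inbound.put("po2.txt", 2_000L); // arrived later
        // Only po2.txt is selected; po1.txt is skipped because its
        // modification time is not more recent than the last processed file.
        System.out.println(selectUnprocessed(inbound, 1_000L));
    }
}
```

Under this model, replacing a processed file with a same-named file that keeps the old modification date leaves it unselected, which matches the behavior described above.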

4.3.1.3 File Matching and Batch Processing

The File Filtering page of the Adapter Configuration Wizard shown in Figure 4-27 enables you to specify details about the files to retrieve or ignore.

The Oracle File Adapter acts as a file listener in the inbound direction. The Oracle File Adapter polls the specified directory on a local or remote file system and looks for files that match specified naming criteria.

Figure 4-27 The Adapter Configuration Wizard-File Filtering Page

Description of "Figure 4-27 The Adapter Configuration Wizard-File Filtering Page"

The following sections describe the file filtering information to specify:

4.3.1.3.1 Specifying a Naming Pattern

Specify the naming convention that the Oracle File Adapter uses to poll for inbound files. You can also specify the naming convention for files you do not want to process. Two naming conventions are available for selection. The Oracle File Adapter matches the files that appear in the inbound directory.

- File wildcards (po*.txt): Retrieves all files that start with po and end with .txt. This convention conforms to Windows operating system standards.

- Regular expressions (po.*\.txt): Retrieves all files that start with po and end with .txt. This convention conforms to Java Development Kit (JDK) regular expression (regex) constructs.
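To see how the two conventions relate, the following Java sketch translates a file wildcard into an equivalent JDK regex and checks file names against it. It is a minimal illustration, not the adapter's own matching code. The class and method names are hypothetical, and the sketch assumes that only the asterisk is treated as a wildcard character.

```java
import java.util.regex.Pattern;

// Hypothetical helper; NOT part of the Oracle File Adapter API.
final class NamingPatternSketch {

    // Converts a wildcard such as po*.txt to a JDK regex.
    // Assumption: '*' is the only special character; everything else is literal.
    static String wildcardToRegex(String wildcard) {
        StringBuilder regex = new StringBuilder();
        StringBuilder literal = new StringBuilder();
        for (char c : wildcard.toCharArray()) {
            if (c == '*') {
                if (literal.length() > 0) {
                    regex.append(Pattern.quote(literal.toString()));
                    literal.setLength(0);
                }
                regex.append(".*"); // any character, any number of times
            } else {
                literal.append(c);
            }
        }
        if (literal.length() > 0) {
            regex.append(Pattern.quote(literal.toString()));
        }
        return regex.toString();
    }

    // True if the whole file name matches the wildcard.
    static boolean matchesWildcard(String wildcard, String fileName) {
        return Pattern.matches(wildcardToRegex(wildcard), fileName);
    }

    // True if the whole file name matches the JDK regex.
    static boolean matchesRegex(String regex, String fileName) {
        return Pattern.matches(regex, fileName);
    }

    public static void main(String[] args) {
        System.out.println(matchesWildcard("po*.txt", "po_123.txt")); // true
        System.out.println(matchesRegex("po.*\\.txt", "po_123.txt")); // true
        System.out.println(matchesWildcard("po*.txt", "po_123.csv")); // false
    }
}
```

Quoting the literal parts with Pattern.quote keeps characters such as the period from being read as regex metacharacters.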

Note:

- If you later select a different naming pattern, ensure that you also change the naming conventions you specify in the Include Files and Exclude Files fields. The Adapter Configuration Wizard does not automatically make this change for you.

- Do not specify *.* as the convention for retrieving files.

- Be aware of any file length restrictions imposed by your operating system. For example, Windows operating system file names cannot be more than 256 characters in length (the file name, plus the complete directory path). Some operating systems also have restrictions on the use of specific characters in file names. For example, Windows operating systems do not allow characters such as backslash (\), slash (/), colon (:), asterisk (*), left angle bracket (<), right angle bracket (>), or vertical bar (|).

4.3.1.3.2 Including and Excluding Files

If you use regular expressions, the values you specify in the Include Files and Exclude Files fields must conform to JDK regular expression (regex) constructs. For both fields, different regex patterns must be provided separately. The Include Files and Exclude Files fields correspond to the IncludeFiles and ExcludeFiles parameters, respectively, of the inbound WSDL file.

Note:

The regex pattern must comply with JDK regex syntax. In JDK regex, the correct notation for a string of any characters with any number of occurrences is a period followed by a plus sign (.+). An asterisk (*) on its own in a JDK regex is not a placeholder for a string of any characters with any number of occurrences.

For the inbound Oracle File Adapter to pick up all file names that start with po and which have the extension txt, you must specify the Include Files field as po.*\.txt when the name pattern is a regular expression. In this regex pattern example:

- A period (.) indicates any character.

- An asterisk (*) indicates any number of occurrences.

- A backslash followed by a period (\.) indicates the character period (.) as indicated with the backslash escape character.

The Exclude Files field is constructed similarly.
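The following Java sketch checks the regex described above against a few file names by using java.util.regex.Pattern. It also shows what happens when a wildcard-style value is mistakenly entered as a regex. The class name is hypothetical, and the sketch assumes whole-name matching, which the adapter may or may not use internally.

```java
import java.util.regex.Pattern;

// Hypothetical demonstration class; NOT part of the Oracle File Adapter.
final class RegexVersusWildcardSketch {

    // True if the whole file name matches the JDK regex.
    static boolean matches(String regex, String fileName) {
        return Pattern.matches(regex, fileName);
    }

    public static void main(String[] args) {
        // Correct regex: "po", then any characters, then a literal ".txt".
        System.out.println(matches("po.*\\.txt", "po123.txt")); // true

        // Wildcard syntax read as a regex: "p", zero or more "o",
        // exactly one arbitrary character, then "txt".
        System.out.println(matches("po*.txt", "po123.txt"));    // false

        // The escaped period requires a real "." before "txt".
        System.out.println(matches("po.*\\.txt", "po123_txt")); // false
    }
}
```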

If you have Include Files field and Exclude Files field expressions that have an overlap, then the exclude files expression takes precedence. For example, if Include Files is set to abc*.txt and Exclude Files is set to abcd*.txt, then no abcd*.txt files are received.
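The precedence rule can be sketched in Java as follows. This is an illustration of the rule as described, not the adapter's implementation. The class and method names are hypothetical, and the patterns are written as JDK regexes equivalent to the wildcard values in the example above.

```java
import java.util.regex.Pattern;

// Hypothetical model of include/exclude precedence; NOT adapter code.
final class IncludeExcludeSketch {

    // A file is accepted only if it matches the include pattern and does
    // not match the exclude pattern. A null exclude pattern excludes nothing.
    static boolean accepts(String includeRegex, String excludeRegex, String fileName) {
        boolean included = Pattern.matches(includeRegex, fileName);
        boolean excluded = excludeRegex != null && Pattern.matches(excludeRegex, fileName);
        return included && !excluded;
    }

    public static void main(String[] args) {
        String include = "abc.*\\.txt";  // regex form of abc*.txt
        String exclude = "abcd.*\\.txt"; // regex form of abcd*.txt
        System.out.println(accepts(include, exclude, "abc1.txt"));  // true
        System.out.println(accepts(include, exclude, "abcd1.txt")); // false: exclude wins
        System.out.println(accepts(include, exclude, "xyz.txt"));   // false: not included
    }
}
```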

Note:

You must enter a name pattern in the Include Files with Name Pattern field and not leave it empty. Otherwise, the inbound adapter service reads all the files present in the inbound directory, which can produce incorrect results.

Table 4-4 lists details of Java regex constructs.

Note:

Do not begin JDK regex pattern names with the following characters: plus sign (+), question mark (?), or asterisk (*).

Table 4-4 Java Regular Expression Constructs

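| Matches | Construct |
|---|---|
| Characters | - |
| The character x | `x` |
| The backslash character | `\\` |
| The character with octal value 0n (0 <= n <= 7) | `\0n` |
| The character with octal value 0nn (0 <= n <= 7) | `\0nn` |
| The character with octal value 0mnn (0 <= m <= 3, 0 <= n <= 7) | `\0mnn` |
| The character with hexadecimal value 0xhh | `\xhh` |
| The character with hexadecimal value 0xhhhh | `\uhhhh` |
| The tab character | `\t` |
| The new line (line feed) character | `\n` |
| The carriage-return character | `\r` |
| The form-feed character | `\f` |
| The alert (bell) character | `\a` |
| The escape character | `\e` |
| The control character corresponding to x | `\cx` |
| Character classes | - |
| a, b, or c (simple class) | `[abc]` |
| Any character except a, b, or c (negation) | `[^abc]` |
| a through z or A through Z, inclusive (range) | `[a-zA-Z]` |
| a through d, or m through p: [a-dm-p] (union) | `[a-d[m-p]]` |
| d, e, or f (intersection) | `[a-z&&[def]]` |
| a through z, except for b and c: [ad-z] (subtraction) | `[a-z&&[^bc]]` |
| a through z, and not m through p: [a-lq-z] (subtraction) | `[a-z&&[^m-p]]` |
| Predefined character classes | - |
| Any character (may or may not match line terminators) | `.` |
| A digit: [0-9] | `\d` |
| A nondigit: [^0-9] | `\D` |
| A white space character: [ \t\n\x0B\f\r] | `\s` |
| A nonwhitespace character: [^\s] | `\S` |
| A word character: [a-zA-Z_0-9] | `\w` |
| A nonword character: [^\w] | `\W` |
| Greedy quantifiers | - |
| X, once or not at all | `X?` |
| X, zero or more times | `X*` |
| X, one or more times | `X+` |
| X, exactly n times | `X{n}` |
| X, at least n times | `X{n,}` |
| X, at least n but not more than m times | `X{n,m}` |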

For details about Java regex constructs, see

4.3.1.3.3 File Include and Exclude

The FileList operation does not expose the java.file.IncludeFiles property. This property is configured while designing the adapter interaction and cannot be overridden through headers, as shown in the following example.

Example - Overriding the FileList Operation

<adapter-config name="ListFiles" adapter="File Adapter"
    xmlns="http://platform.integration.oracle/blocks/adapter/fw/metadata">
  <connection-factory location="eis/FileAdapter" UIincludeWildcard="*.txt" adapterRef=""/>
  <endpoint-interaction portType="FileListing_ptt" operation="FileListing">
    <interaction-spec className="oracle.tip.adapter.file.outbound.FileListInteractionSpec">
      <property name="PhysicalDirectory" value="%INP_DIR%"/>
      <property name="Recursive" value="true"/>
      <property name="IncludeFiles" value=".*\.txt"/>
    </interaction-spec>
  </endpoint-interaction>
</adapter-config>

In this example, after you set the IncludeFiles property at design time, it cannot be changed at run time.

4.3.1.3.4 Debatching Messages

You can select whether incoming files have multiple messages, and specify the number of messages in one batch file to publish. When the file contains a message with repeating elements, you can choose to publish the message in batches of a specific size. Refer to Figure 4-27.

When a file contains multiple messages and this check box is selected, this is referred to as debatching. Nondebatching is applied when the file contains only a single message and the check box is not selected. Debatching is supported for native and XML files.
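Conceptually, debatching splits the messages of one file into groups of a fixed size that are published separately. The following Java sketch shows that idea on an in-memory list. It is a simplified illustration and not the adapter's implementation. The class name, method name, and the representation of messages as list elements are assumptions.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical model of debatching; NOT the Oracle File Adapter's code.
final class DebatchingSketch {

    // Splits the messages into consecutive batches of at most batchSize
    // elements. The final batch may be smaller.
    static <T> List<List<T>> debatch(List<T> messages, int batchSize) {
        if (batchSize <= 0) {
            throw new IllegalArgumentException("batchSize must be positive");
        }
        List<List<T>> batches = new ArrayList<>();
        for (int start = 0; start < messages.size(); start += batchSize) {
            int end = Math.min(start + batchSize, messages.size());
            batches.add(new ArrayList<>(messages.subList(start, end)));
        }
        return batches;
    }

    public static void main(String[] args) {
        List<String> messages = List.of("m1", "m2", "m3", "m4", "m5");
        // Five messages with a batch size of 2 give three batches.
        System.out.println(debatch(messages, 2)); // [[m1, m2], [m3, m4], [m5]]
    }
}
```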

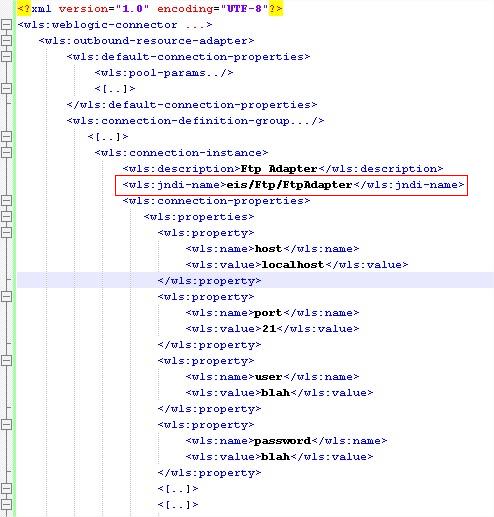

4.3.1.4 File Polling