18 Using Whole Server Migration and Service Migration in an Enterprise Deployment

- About Whole Server Migration and Automatic Service Migration in an Enterprise Deployment

- Creating a GridLink Data Source for Leasing

- Configuring Whole Server Migration for an Enterprise Deployment

- Configuring Automatic Service Migration in an Enterprise Deployment

18.1 About Whole Server Migration and Automatic Service Migration in an Enterprise Deployment

Oracle WebLogic Server provides a migration framework that is an integral part of any highly available environment. The following sections explain how to use this framework effectively in an enterprise deployment.

18.1.1 Understanding the Difference Between Whole Server and Service Migration

The Oracle WebLogic Server migration framework supports two distinct types of automatic migration:

- Whole Server Migration, where the Managed Server instance is migrated to a different physical system upon failure.

  Whole server migration provides for the automatic restart of a server instance, with all of its services, on a different physical machine. When a failure occurs in a server that is part of a cluster that is configured with server migration, the server is restarted on any of the other machines that host members of the cluster.

  For this to happen, the servers must use a floating IP address as their listen address, and the required resources (transaction logs and JMS persistent stores) must be available on the candidate machines.

  For more information, see "Whole Server Migration" in Administering Clusters for Oracle WebLogic Server.

- Service Migration, where specific services are moved to a different Managed Server within the cluster.

  To understand service migration, it's important to understand pinned services.

  In a WebLogic Server cluster, most subsystem services are hosted homogeneously on all server instances in the cluster, enabling transparent failover from one server to another. In contrast, pinned services, such as JMS-related services, the JTA Transaction Recovery Service, and user-defined singleton services, are hosted on individual server instances within a cluster. For these services, the WebLogic Server migration framework supports failure recovery with service migration, as opposed to failover.

  For more information, see "Understanding the Service Migration Framework" in Administering Clusters for Oracle WebLogic Server.

18.1.2 Implications of Using Whole Server Migration or Service Migration in an Enterprise Deployment

When a server or service is started in another system, the required resources (such as services data and logs) must be available to both the original system and to the failover system; otherwise, the service cannot resume the same operations successfully on the failover system.

For this reason, both whole server and service migration require that all members of the cluster have access to the same transaction and JMS persistent stores (whether the persistent store is file-based or database-based).

This is another reason why shared storage is important in an enterprise deployment. When you properly configure shared storage, you ensure that in the event of a manual failover (Administration Server failover) or an automatic failover (whole server migration or service migration), both the original machine and the failover machine can access the same file store with no change in service.

In the case of an automatic service migration, when a pinned service needs to be resumed, the JMS and JTA logs that it was using before failover need to be accessible.

In addition to shared storage, Whole Server Migration requires the procurement and assignment of a virtual IP address (VIP). When a Managed Server fails over to another machine, the VIP is automatically reassigned to the new machine.

Note that service migration does not require a VIP.
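The shared-storage requirement in this section can be spot-checked from each candidate host before you rely on migration. The following is a minimal sketch, not part of the product: `check_store_dir` is a hypothetical helper, and the directory you pass to it is whatever shared location your deployment uses for the transaction and JMS file stores. It only applies to file-based stores.

```shell
# Return 0 if the directory exists and the current user can read and write it.
# check_store_dir is a hypothetical helper name, not an Oracle-supplied command.
check_store_dir() {
  dir="$1"
  [ -d "$dir" ] || { echo "missing: $dir"; return 1; }
  [ -r "$dir" ] && [ -w "$dir" ] || { echo "no read/write access: $dir"; return 1; }
  # Create and remove a probe file to confirm the mount really accepts writes.
  probe="$dir/.store_probe_$$"
  ( : > "$probe" ) 2>/dev/null && rm -f "$probe" || { echo "write failed: $dir"; return 1; }
  echo "ok: $dir"
}
```

Run the same check, with the same path, on the original host and on every failover host. A failure on any one of them suggests that a migrated server or service would not find its stores there.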

18.1.3 Understanding Which Products and Components Require Whole Server Migration and Service Migration

Note that the following table lists the recommended best practice. It does not preclude you from using Whole Server Migration or Automatic Service Migration for those components that support it.

| Component | Whole Server Migration (WSM) | Automatic Service Migration (ASM) |
|---|---|---|
| Oracle WebCenter Content | NO | YES |
| Oracle SOA Suite | NO | YES |
| Oracle Enterprise Capture | NO | YES |

18.2 Creating a GridLink Data Source for Leasing

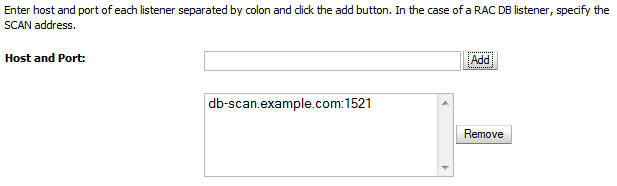

Both Whole Server Migration and Automatic Service Migration require a data source for the leasing table, which is a tablespace created automatically as part of the Oracle WebLogic Server schemas by the Repository Creation Utility (RCU).

For an enterprise deployment, you should create a GridLink data source.

18.3 Configuring Whole Server Migration for an Enterprise Deployment

After you have prepared your domain for whole server migration or automatic service migration, you can then configure Whole Server Migration for specific Managed Servers within a cluster. See the following topics for more information.

18.3.1 Editing the Node Manager's Properties File to Enable Whole Server Migration

Use this section to edit the Node Manager properties file on the two nodes where the servers are running.

18.3.2 Setting Environment and Superuser Privileges for the wlsifconfig.sh Script

Use this section to set the environment and superuser privileges for the wlsifconfig.sh script, which is used to transfer IP addresses from one machine to another during migration. It must be able to run ifconfig, which is generally only available to superusers.

For more information about the wlsifconfig.sh script, see "Configuring Automatic Whole Server Migration" in Administering Clusters for Oracle WebLogic Server.

Refer to the following sections for instructions on preparing your system to run the wlsifconfig.sh script.

18.3.2.1 Setting the PATH Environment Variable for the wlsifconfig.sh Script

Ensure that the directory locations listed in the following table are included in the PATH environment variable on each host computer.

| File | Directory Location |
|---|---|
|  | MSERVER_HOME/bin/server_migration |
|  | WL_HOME/common/bin |
|  | MSERVER_HOME/nodemanager |
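One way to add these directories is from the shell profile of the operating system user that runs Node Manager. The following is a sketch under assumptions: `prepend_path_once` is a hypothetical helper, and `MSERVER_HOME` and `WL_HOME` are expected to be set already to the values used in your deployment.

```shell
# Prepend a directory to PATH only if it is not already listed.
# prepend_path_once is a hypothetical helper name.
prepend_path_once() {
  case ":$PATH:" in
    *":$1:"*) ;;              # already present, nothing to do
    *) PATH="$1:$PATH" ;;
  esac
}

# Only touch PATH when both variables are set; their values are site-specific.
if [ -n "${MSERVER_HOME:-}" ] && [ -n "${WL_HOME:-}" ]; then
  prepend_path_once "$MSERVER_HOME/bin/server_migration"
  prepend_path_once "$WL_HOME/common/bin"
  prepend_path_once "$MSERVER_HOME/nodemanager"
  export PATH
fi
```

Checking for an existing entry keeps PATH from growing each time the profile is sourced.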

18.3.2.2 Granting Privileges to the wlsifconfig.sh Script

Grant sudo privilege to the operating system user (for example, oracle) with no password restriction, and grant execute privilege on the /sbin/ifconfig and /sbin/arping binaries.

Note:

For security reasons, sudo should be restricted to the subset of commands required to run the wlsifconfig.sh script.

Ask the system administrator for the sudo and system rights as appropriate to perform this required configuration task.

The following is an example of an entry inside /etc/sudoers granting sudo execution privilege for oracle to run ifconfig and arping:

Defaults:oracle !requiretty

oracle ALL=NOPASSWD: /sbin/ifconfig,/sbin/arping
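After the system administrator has made the change, you can ask sudo whether the two binaries are permitted for the current user. The sketch below relies on `sudo -l <command>`, which exits with a nonzero status when the command is not allowed, and on `-n`, which prevents sudo from prompting. The function names are hypothetical. The check reports what the sudo policy lists; it does not run ifconfig or arping.

```shell
# Return 0 if the sudo policy lists the command as permitted for the current
# user. -n makes sudo fail instead of prompting for a password.
sudo_permits() {
  sudo -n -l "$1" >/dev/null 2>&1
}

# Check both binaries that wlsifconfig.sh needs; return 1 if either is missing.
check_migration_sudo() {
  rc=0
  for cmd in /sbin/ifconfig /sbin/arping; do
    if sudo_permits "$cmd"; then
      echo "OK: $cmd"
    else
      echo "NOT PERMITTED: $cmd"
      rc=1
    fi
  done
  return "$rc"
}
```

Run `check_migration_sudo` as the operating system user that owns the Node Manager process (oracle in the example above), on every host that can receive a migrated server.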

18.3.3 Configuring Server Migration Targets

To configure migration in a cluster:

- Log in to the Oracle WebLogic Server Administration Console.

- In the Domain Structure window, expand Environment and select Clusters. The Summary of Clusters page appears.

- In the Name column of the table, click the cluster for which you want to configure migration.

- Click the Migration tab.

- Click Lock & Edit.

- Select Database as Migration Basis. From the drop-down list, select Leasing as Data Source For Automatic Migration.

- Under Candidate Machines For Migratable Server, in the Available field, select the Managed Servers in the cluster and click the right arrow to move them to Chosen.

- Select the Leasing data source that you created in Creating a GridLink Data Source for Leasing.

- Click Save.

- Set the Candidate Machines for Server Migration. You must perform this task for all of the managed servers as follows:

  - In the Domain Structure window of the Oracle WebLogic Server Administration Console, expand Environment and select Servers.

  - Select the server for which you want to configure migration.

  - Click the Migration tab.

  - Select Automatic Server Migration Enabled and click Save.

    This enables the Node Manager to start a failed server on the target node automatically.

    For information on targeting applications and resources, see Using Multi Data Sources with Oracle RAC.

  - In the Available field, located in the Migration Configuration section, select the machines to which to allow migration and click the right arrow.

    In this step, you are identifying the host to which the Managed Server should fail over if the current host is unavailable. For example, for the Managed Server on HOST1, select HOST2; for the Managed Server on HOST2, select HOST1.

    Tip:

    On the Summary of Servers page, click Customize this table and move Current Machine from the Available window to the Chosen window to view the machine on which the server is running. This differs from the configured machine if the server has been migrated automatically.

- Click Activate Changes.

- Restart the Administration Server and the servers for which server migration has been configured.

18.3.4 Testing Whole Server Migration

Perform the steps in this section to verify that automatic whole server migration is working properly.

To test from Node 1:

- Stop the managed server process.

  kill -9 pid

  pid specifies the process ID of the managed server. You can identify the pid on the node by running this command:

  ps -ef | grep WLS_WCC1

- Watch the Node Manager console (the terminal window where you performed the kill command): you should see a message indicating that the managed server's floating IP has been disabled.

- Wait for the Node Manager to try a second restart of the Managed Server. Node Manager waits for a period of 30 seconds before trying this restart.

- After Node Manager restarts the server and before it reaches the "RUNNING" state, kill the associated process again.

  Node Manager should log a message indicating that the server will not be restarted again locally.

Note:

The number of restarts required is determined by the RestartMax parameter in the following configuration file:

MSERVER_HOME/servers/WLS_WCC1/data/nodemanager/startup.properties

The default value is RestartMax=2.
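To see how many local restarts Node Manager will attempt before migrating the server, you can read the value directly from the properties file. The helper below is a sketch: `get_property` is a hypothetical name, and it assumes simple `key=value` lines with no line continuations.

```shell
# Print the value of a key from a key=value properties file.
# $1 = file, $2 = key. If the key appears more than once, the last value wins.
# Prints nothing if the key is absent.
get_property() {
  sed -n "s/^[[:space:]]*$2[[:space:]]*=[[:space:]]*//p" "$1" | tail -n 1
}
```

For example, `get_property "$MSERVER_HOME/servers/WLS_WCC1/data/nodemanager/startup.properties" RestartMax` prints the configured value, assuming `MSERVER_HOME` is set in your shell. Use that number to decide how many times to kill the process during the test.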

To test from Node 2:

- Watch the local Node Manager console. About 30 seconds after the last attempt to restart the managed server on Node 1, Node Manager on Node 2 should report that the floating IP for the managed server is being brought up and that the server is being restarted on this node.

- Access a product URL using the same IP address. If the URL responds successfully, the migration was successful.
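In addition to watching the Node Manager console, you can confirm on Node 2 that the floating IP is now assigned to a local interface. The sketch below assumes a Linux host with the iproute2 `ip` command available (the migration script itself uses ifconfig). `ip_listed` is a hypothetical helper that reads `ip -o -4 addr show` output from standard input.

```shell
# Return 0 if the given IPv4 address appears as an "inet" address in
# `ip -o -4 addr show` style output read from standard input.
ip_listed() {
  awk -v ip="$1" '
    { for (i = 1; i < NF; i++)
        if ($i == "inet") { split($(i + 1), a, "/"); if (a[1] == ip) found = 1 } }
    END { exit !found }'
}
```

A typical call is `ip -o -4 addr show | ip_listed "$VIP"`, where `VIP` is a placeholder for the floating IP address of the managed server. Running the same command on Node 1 after migration should return a nonzero status.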

Verification From the Administration Console

You can also verify migration using the Oracle WebLogic Server Administration Console:

Note:

After a server is migrated, to fail it back to its original machine, stop the managed server from the Oracle WebLogic Administration Console and then start it again. The appropriate Node Manager starts the managed server on the machine to which it was originally assigned.

18.4 Configuring Automatic Service Migration in an Enterprise Deployment

To configure automatic service migration for specific services in an enterprise deployment, refer to the topics in this section.

- Setting the Leasing Mechanism and Data Source for an Enterprise Deployment Cluster

- Changing the Migration Settings for the Managed Servers in the Cluster

- About Selecting a Service Migration Policy

- Setting the Service Migration Policy for Each Managed Server in the Cluster

- Restarting the Managed Servers and Validating Automatic Service Migration

- Failing Back Services After Automatic Service Migration

18.4.1 Setting the Leasing Mechanism and Data Source for an Enterprise Deployment Cluster

Note:

The following procedure assumes you have already created the Leasing data source, as described in Creating a GridLink Data Source for Leasing.

18.4.2 Changing the Migration Settings for the Managed Servers in the Cluster

After you set the leasing mechanism and data source for the cluster, you can then enable automatic JTA migration for the Managed Servers that you want to configure for service migration. Note that this topic applies only if you are deploying JTA services as part of your enterprise deployment.

18.4.3 About Selecting a Service Migration Policy

When you configure Automatic Service Migration, you select a Service Migration Policy for each cluster. This topic provides guidelines and considerations when selecting the Service Migration Policy.

For example, products or components running singletons or using Path services can benefit from the Auto-Migrate Exactly-Once policy. With this policy, if at least one Managed Server in the candidate server list is running, the services hosted by this migratable target will be active somewhere in the cluster if servers fail or are administratively shut down (either gracefully or forcibly). This can cause multiple homogeneous services to end up on one server at startup.

When you are using this policy, you should monitor the cluster startup to identify which services are running on each server. You can then perform a manual failback, if necessary, to place the system in a balanced configuration.

Other Fusion Middleware components are better suited for the Auto-Migrate Failure-Recovery Services policy. With this policy, the services hosted by the migratable target will start only if the migratable target's User Preferred Server (UPS) is started.

Based on these guidelines, you should use Auto-Migrate Failure-Recovery Services for the clusters in an Oracle WebCenter Content enterprise deployment.

For more information, see Policies for Manual and Automatic Service Migration in Administering Clusters for Oracle WebLogic Server.

18.4.4 Setting the Service Migration Policy for Each Managed Server in the Cluster

18.4.5 Restarting the Managed Servers and Validating Automatic Service Migration

18.4.6 Failing Back Services After Automatic Service Migration

When Automatic Service Migration occurs, Oracle WebLogic Server does not automatically fail services back to their original server when that server is back online and rejoins the cluster.

As a result, after the Automatic Service Migration migrates specific JMS services to a backup server during a fail-over, it does not migrate the services back to the original server after the original server is back online. Instead, you must migrate the services back to the original server manually.

To fail back a service to its original server, follow these steps:

- If you have not already done so, in the Change Center of the Administration Console, click Lock & Edit.

- In the Domain Structure tree, expand Environment, expand Clusters, and then select Migratable Targets.

- To migrate one or more migratable targets at once, on the Summary of Migratable Targets page:

  - Click the Control tab.

  - Use the check boxes to select one or more migratable targets to migrate.

  - Click Migrate.

  - Use the New hosting server drop-down to select the original Managed Server.

  - Click OK.

    A request is submitted to migrate the JMS-related service and the configuration edit lock is released. In the Migratable Targets table, the Status of Last Migration column indicates whether the requested migration has succeeded or failed.

- To migrate a specific migratable target, on the Summary of Migratable Targets page:

  - Select the migratable target to migrate.

  - Click the Control tab.

  - Reselect the migratable target to migrate.

  - Click Migrate.

  - Use the New hosting server drop-down to select a new server for the migratable target.

  - Click OK.