21 Common Configuration and Management Tasks for an Enterprise Deployment

- Configuration and Management Tasks for All Enterprise Deployments

These are some of the typical configuration and management tasks you are likely to need to perform on an Oracle Fusion Middleware enterprise deployment.

- Configuration and Management Tasks for an Oracle SOA Suite Enterprise Deployment

These are some of the key configuration and management tasks that you will likely need to perform on an Oracle SOA Suite enterprise deployment.

21.1 Configuration and Management Tasks for All Enterprise Deployments

These are some of the typical configuration and management tasks you are likely to need to perform on an Oracle Fusion Middleware enterprise deployment.

- Verifying Manual Failover of the Administration Server

In case a host computer fails, you can fail over the Administration Server to another host. The following sections provide the steps to verify the failover and failback of the Administration Server from SOAHOST1 and SOAHOST2.

- Enabling SSL Communication Between the Middle Tier and the Hardware Load Balancer

This section describes how to enable SSL communication between the middle tier and the hardware load balancer.

- Configuring Roles for Administration of an Enterprise Deployment

This section provides a summary of products with specific administration roles and provides instructions to add a product-specific administration role to the Enterprise Deployment Administration group.

- Using JDBC Persistent Stores for TLOGs and JMS in an Enterprise Deployment

The following topics provide guidelines for when to use JDBC persistent stores for transaction logs (TLOGs) and JMS. They also provide the procedures you can use to configure the persistent stores in a supported database.

- Performing Backups and Recoveries for an Enterprise Deployment

This section provides guidelines for making sure you back up the necessary directories and configuration data for an Oracle SOA Suite enterprise deployment.

21.1.1 Verifying Manual Failover of the Administration Server

In case a host computer fails, you can fail over the Administration Server to another host. The following sections provide the steps to verify the failover and failback of the Administration Server from SOAHOST1 and SOAHOST2.

Assumptions:

- The Administration Server is configured to listen on ADMINVHN, and not on localhost or ANY address.

  For more information about the ADMINVHN virtual IP address, see Reserving the Required IP Addresses for an Enterprise Deployment.

- These procedures assume that the Administration Server domain home (ASERVER_HOME) has been mounted on both host computers. This ensures that the Administration Server domain configuration files and the persistent stores are saved on the shared storage device.

- The Administration Server is failed over from SOAHOST1 to SOAHOST2, and the two nodes have these IPs:

  - SOAHOST1: 100.200.140.165

  - SOAHOST2: 100.200.140.205

  - ADMINVHN: 100.200.140.206. This is the virtual IP where the Administration Server is running, assigned to ethX:Y, available in SOAHOST1 and SOAHOST2.

- Oracle WebLogic Server and Oracle Fusion Middleware components have been installed in SOAHOST2 as described in the specific configuration chapters in this guide.

  Specifically, both host computers use the exact same path to reference the binary files in the Oracle home.

- Failing Over the Administration Server When Using a Per Host Node Manager

The following procedure shows how to fail over the Administration Server to a different node (SOAHOST2). Note that even after failover, the Administration Server will still use the same Oracle WebLogic Server machine (which is a logical machine, not a physical machine).

- Validating Access to the Administration Server on SOAHOST2 Through Oracle HTTP Server

After you perform a manual failover of the Administration Server, it is important to verify that you can access the Administration Server, using the standard administration URLs.

- Failing the Administration Server Back to SOAHOST1 When Using a Per Host Node Manager

After you have tested a manual Administration Server failover, and after you have validated that you can access the administration URLs after the failover, you can then migrate the Administration Server back to its original host.

21.1.1.1 Failing Over the Administration Server When Using a Per Host Node Manager

The following procedure shows how to fail over the Administration Server to a different node (SOAHOST2). Note that even after failover, the Administration Server will still use the same Oracle WebLogic Server machine (which is a logical machine, not a physical machine).

This procedure assumes you’ve configured a per host Node Manager for the enterprise topology, as described in Creating a Per Host Node Manager Configuration. For more information, see About the Node Manager Configuration in a Typical Enterprise Deployment.

To fail over the Administration Server to a different host:

- Stop the Administration Server on SOAHOST1.

- Stop the Node Manager on SOAHOST1.

  If you started the per host Node Manager using the procedure earlier in this guide, then return to the terminal window where you started the Node Manager and press Ctrl+C to stop the process.

- Migrate the ADMINVHN virtual IP address to the second host:

  - Run the following command as root on SOAHOST1 (where X:Y is the current interface used by ADMINVHN):

    /sbin/ifconfig ethX:Y down

  - Run the following command as root on SOAHOST2:

    /sbin/ifconfig <interface:index> ADMINVHN netmask <netmask>

    For example:

    /sbin/ifconfig eth0:1 100.200.140.206 netmask 255.255.255.0

  Note:

  Ensure that the netmask and interface match the available network configuration on SOAHOST2.

- Update the routing tables using arping, for example:

  /sbin/arping -q -U -c 3 -I eth0 100.200.140.206

- From SOAHOST1, change directory to the Node Manager home directory:

  cd NM_HOME

- Edit the nodemanager.domains file and remove the reference to ASERVER_HOME.

  The resulting entry in the SOAHOST1 nodemanager.domains file should appear as follows:

  soaedg_domain=MSERVER_HOME;

- From SOAHOST2, change directory to the Node Manager home directory:

  cd NM_HOME

- Edit the nodemanager.domains file and add the reference to ASERVER_HOME.

  The resulting entry in the SOAHOST2 nodemanager.domains file should appear as follows:

  soaedg_domain=MSERVER_HOME;ASERVER_HOME

- Start the Node Manager on SOAHOST1 and restart the Node Manager on SOAHOST2.

- Start the Administration Server on SOAHOST2.

- Test that you can access the Administration Server on SOAHOST2 as follows:

  - Ensure that you can access the Oracle WebLogic Server Administration Console using the following URL:

    http://ADMINVHN:7001/console

  - Check that you can access and verify the status of components in Fusion Middleware Control using the following URL:

    http://ADMINVHN:7001/em
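The two nodemanager.domains edits in the procedure above could be scripted instead of made by hand. The following is a minimal sketch under stated assumptions, not part of the documented procedure: the function name set_domain_entry is hypothetical, the domain name soaedg_domain is taken from the example entries, and the paths are placeholders for your actual MSERVER_HOME and ASERVER_HOME values. It assumes the file holds one name=path entry per line, as in the examples.

```shell
#!/bin/sh
# Hypothetical helper: replace the soaedg_domain line in a nodemanager.domains
# file with the given entry, leaving every other line untouched.
set_domain_entry() {
    sde_file=$1     # path to nodemanager.domains
    sde_entry=$2    # full replacement line, e.g. 'soaedg_domain=MSERVER_HOME;'
    sde_tmp="${sde_file}.tmp.$$"

    # Copy all lines except the existing soaedg_domain entry
    # (grep exits non-zero when no lines remain, which is acceptable here).
    grep -v '^soaedg_domain=' "$sde_file" > "$sde_tmp" || true

    # Append the new entry and move the rewritten file into place.
    printf '%s\n' "$sde_entry" >> "$sde_tmp"
    mv "$sde_tmp" "$sde_file"
}

# Example usage (placeholder variables, run from NM_HOME on each host):
#   SOAHOST1: set_domain_entry nodemanager.domains "soaedg_domain=$MSERVER_HOME;"
#   SOAHOST2: set_domain_entry nodemanager.domains "soaedg_domain=$MSERVER_HOME;$ASERVER_HOME"
```

Because the helper rewrites the file, it is prudent to keep a copy of the original nodemanager.domains file and to confirm the resulting entry against the expected values shown in the procedure before you restart the Node Manager.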

21.1.1.2 Validating Access to the Administration Server on SOAHOST2 Through Oracle HTTP Server

After you perform a manual failover of the Administration Server, it is important to verify that you can access the Administration Server, using the standard administration URLs.

From the load balancer, access the following URLs to ensure that you can access the Administration Server when it is running on SOAHOST2:

- http://admin.example.com/console

  This URL should display the WebLogic Server Administration console.

- http://admin.example.com/em

  This URL should display Oracle Enterprise Manager Fusion Middleware Control.
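As a quick first check before opening a browser, the two administration URLs above can be probed from the command line. This is a sketch only: the function names are hypothetical, and it assumes curl is installed on the host you run it from. It reports the HTTP status code for each URL; it does not confirm that the expected page is displayed, so a browser check is still needed.

```shell
#!/bin/sh
# Hypothetical helper: print the HTTP status code returned for a URL.
# Uses standard curl options; curl reports 000 when the connection fails.
http_status() {
    curl -s -o /dev/null -w '%{http_code}' --max-time 10 "$1"
}

# Hypothetical helper: list the two administration URLs for a front-end host.
admin_urls() {
    printf 'http://%s/console\n' "$1"
    printf 'http://%s/em\n' "$1"
}

# Print each administration URL together with its HTTP status code.
check_admin_urls() {
    for cau_url in $(admin_urls "$1"); do
        printf '%s -> %s\n' "$cau_url" "$(http_status "$cau_url")"
    done
}

# Example usage:
#   check_admin_urls admin.example.com
```

A 200 response, or a redirect to a login page, generally suggests that the request reached the Administration Server; a 000 or 5xx response suggests that the failover or the web tier routing needs attention.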

21.1.1.3 Failing the Administration Server Back to SOAHOST1 When Using a Per Host Node Manager

After you have tested a manual Administration Server failover, and after you have validated that you can access the administration URLs after the failover, you can then migrate the Administration Server back to its original host.

This procedure assumes you’ve configured a per host Node Manager for the enterprise topology, as described in Creating a Per Host Node Manager Configuration. For more information, see About the Node Manager Configuration in a Typical Enterprise Deployment.

21.1.2 Enabling SSL Communication Between the Middle Tier and the Hardware Load Balancer

This section describes how to enable SSL communication between the middle tier and the hardware load balancer.

Note:

The following steps are applicable if the hardware load balancer is configured with SSL and the front-end address of the system has been secured accordingly.

- When is SSL Communication Between the Middle Tier and Load Balancer Necessary?

- Generating Self-Signed Certificates Using the utils.CertGen Utility

- Creating an Identity Keystore Using the utils.ImportPrivateKey Utility

- Creating a Trust Keystore Using the Keytool Utility

- Importing the Load Balancer Certificate into the Trust Store

- Adding the Updated Trust Store to the Oracle WebLogic Server Start Scripts

- Configuring WebLogic Servers to Use the Custom Keystores

- Testing Composites Using SSL Endpoints

21.1.2.1 When is SSL Communication Between the Middle Tier and Load Balancer Necessary?

In an enterprise deployment, there are scenarios where the software running on the middle tier must access the front-end SSL address of the hardware load balancer. In these scenarios, an appropriate SSL handshake must take place between the load balancer and the invoking servers. This handshake is not possible unless the Administration Server and Managed Servers on the middle tier are started using the appropriate SSL configuration.

In an Oracle SOA Suite enterprise deployment, the following examples apply:

- Oracle Business Process Management requires access to the front-end load balancer URL when it attempts to retrieve role information through specific Web services.

- Oracle Service Bus performs invocations to endpoints exposed in the load balancer SSL virtual servers.

- Oracle SOA Suite composite applications and services often generate callbacks that need to perform invocations using the SSL address exposed in the load balancer.

- Finally, when you test a SOA Web services endpoint in Oracle Enterprise Manager Fusion Middleware Control, the Fusion Middleware Control software running on the Administration Server must access the load balancer front-end to validate the endpoint.

21.1.2.2 Generating Self-Signed Certificates Using the utils.CertGen Utility

This section describes the procedure for creating self-signed certificates on SOAHOST1. Create these certificates using the network name or alias of the host.

The directory where keystores and trust keystores are maintained must be on shared storage that is accessible from all nodes so that when the servers fail over (manually or with server migration), the appropriate certificates can be accessed from the failover node. Oracle recommends using central or shared stores for the certificates used for different purposes (for example, SSL set up for HTTP invocations). For more information, see the information on filesystem specifications for the KEYSTORE_HOME location provided in About the Recommended Directory Structure for an Enterprise Deployment.

For information on using trust CA certificates instead, see the information about configuring identity and trust in Oracle Fusion Middleware Securing Oracle WebLogic Server.

About Passwords

The passwords used in this guide are used only as examples. Use secure passwords in a production environment. For example, use passwords that include both uppercase and lowercase characters as well as numbers.

To create self-signed certificates:

21.1.2.3 Creating an Identity Keystore Using the utils.ImportPrivateKey Utility

This section describes how to create an Identity Keystore on SOAHOST1.example.com.

In previous sections, you created certificates and keys that reside on shared storage. In this section, the certificate and private key for both SOAHOST1 and ADMINVHN are imported into a new Identity Store. Make sure that you use a different alias for each certificate/key pair that you import.

Note:

The Identity Store is created (if none exists) when you import a certificate and the corresponding key into the Identity Store using the utils.ImportPrivateKey utility.

21.1.2.4 Creating a Trust Keystore Using the Keytool Utility

This section describes how to create the Trust Keystore on SOAHOST1.example.com.

21.1.2.5 Importing the Load Balancer Certificate into the Trust Store

For the SSL handshake to work properly, the load balancer's certificate must be added to the trust store used by the WebLogic Server instances. To add it, follow these steps:

21.1.2.6 Adding the Updated Trust Store to the Oracle WebLogic Server Start Scripts

The setDomainEnv.sh script is provided by Oracle WebLogic Server and is used to start the Administration Server and the Managed Servers in the domain. To ensure that each server accesses the updated trust store, edit the setDomainEnv.sh script in each of the domain home directories in the enterprise deployment.

21.1.2.7 Configuring WebLogic Servers to Use the Custom Keystores

To configure the identity and trust keystores:

21.1.3 Configuring Roles for Administration of an Enterprise Deployment

This section provides a summary of products with specific administration roles and provides instructions to add a product-specific administration role to the Enterprise Deployment Administration group.

Each enterprise deployment consists of multiple products. Some of the products have specific administration users, roles, or groups that are used to control administration access to each product.

However, for an enterprise deployment, which consists of multiple products, you can use a single LDAP-based authorization provider and a single administration user and group to control access to all aspects of the deployment. For more information about creating the authorization provider and provisioning the enterprise deployment administration user and group, see Creating a New LDAP Authenticator and Provisioning a New Enterprise Deployment Administrator User and Group.

To manage each product effectively within the single enterprise deployment domain, you must:

- Understand which products require specific administration roles or groups.

- Know how to add any product-specific administration roles to the single, common enterprise deployment administration group.

- If necessary, know how to add the enterprise deployment administration user to any required product-specific administration groups.

For more information, see the following topics.

- Summary of Products with Specific Administration Roles

- Summary of Oracle SOA Suite Products with Specific Administration Groups

- Adding a Product-Specific Administration Role to the Enterprise Deployment Administration Group

- Adding the Enterprise Deployment Administration User to a Product-Specific Administration Group

21.1.3.1 Summary of Products with Specific Administration Roles

The following table lists the Fusion Middleware products that have specific administration roles, which must be added to the enterprise deployment administration group (SOA Administrators), which you defined in the LDAP Authorization Provider for the enterprise deployment.

Use the information in the following table and the instructions in Adding a Product-Specific Administration Role to the Enterprise Deployment Administration Group to add the required administration roles to the enterprise deployment Administration group.

| Product | Application Stripe | Administration Role to be Assigned |
|---|---|---|
| Oracle Web Services Manager | wsm-pm | policy.updater |
| SOA Infrastructure | soa-infra | SOAAdmin |
| Oracle Service Bus | Service_Bus_Console | MiddlewareAdministrator |
| Enterprise Scheduler Service | ESSAPP | ESSAdmin |
| Oracle B2B | b2bui | B2BAdmin |
| Oracle MFT | mftapp | |
| Oracle MFT | mftess | |

21.1.3.2 Summary of Oracle SOA Suite Products with Specific Administration Groups

Table 21-1 lists the Oracle SOA Suite products that need to use specific administration groups.

For each of these components, the common enterprise deployment Administration user must be added to the product-specific Administration group; otherwise, you won't be able to manage the product resources using the enterprise manager administration user that you created in Provisioning an Enterprise Deployment Administration User and Group.

Use the information in Table 21-1 and the instructions in Adding the Enterprise Deployment Administration User to a Product-Specific Administration Group to add the enterprise deployment administration user to the required product-specific administration groups.

Table 21-1 Oracle SOA Suite Products with a Product-Specific Administration Group

| Product | Product-Specific Administration Group |
|---|---|
| Oracle Business Activity Monitoring | BAMAdministrators |
| Oracle Business Process Management | Administrators |
| Oracle Service Bus Integration | IntegrationAdministrators |
| MFT | OracleSystemGroup |

Note:

MFT requires a specific user, namely OracleSystemUser, to be added to the central LDAP. This user must belong to the OracleSystemGroup group. You must add both the user name and the user group to the central LDAP to ensure that MFT job creation and deletion work properly.

21.1.3.3 Adding a Product-Specific Administration Role to the Enterprise Deployment Administration Group

For products that require a product-specific administration role, use the following procedure to add the role to the enterprise deployment administration group:

21.1.3.4 Adding the Enterprise Deployment Administration User to a Product-Specific Administration Group

For products with a product-specific administration group, use the following procedure to add the enterprise deployment administration user (weblogic_soa) to the group. This will allow you to manage the product using the enterprise manager administrator user:

21.1.4 Using JDBC Persistent Stores for TLOGs and JMS in an Enterprise Deployment

The following topics provide guidelines for when to use JDBC persistent stores for transaction logs (TLOGs) and JMS. They also provide the procedures you can use to configure the persistent stores in a supported database.

- About JDBC Persistent Stores for JMS and TLOGs

Oracle Fusion Middleware supports both database-based and file-based persistent stores for Oracle WebLogic Server transaction logs (TLOGs) and JMS. Before deciding on a persistent store strategy for your environment, consider the advantages and disadvantages of each approach.

- Products and Components that use JMS Persistence Stores and TLOGs

- Performance Impact of the TLOGs and JMS Persistent Stores

One of the primary considerations when selecting a storage method for Transaction Logs and JMS persistent stores is the potential impact on performance. This topic provides some guidelines and details to help you determine the performance impact of using JDBC persistent stores for TLOGs and JMS.

- Roadmap for Configuring a JDBC Persistent Store for TLOGs

The following topics describe how to configure a database-based persistent store for transaction logs.

- Roadmap for Configuring a JDBC Persistent Store for JMS

The following topics describe how to configure a database-based persistent store for JMS.

- Creating a User and Tablespace for TLOGs

Before you can create a database-based persistent store for transaction logs, you must create a user and tablespace in a supported database.

- Creating a User and Tablespace for JMS

Before you can create a database-based persistent store for JMS, you must create a user and tablespace in a supported database.

- Creating GridLink Data Sources for TLOGs and JMS Stores

Before you can configure database-based persistent stores for JMS and TLOGs, you must create two data sources: one for the TLOGs persistent store and one for the JMS persistent store.

- Assigning the TLOGs JDBC store to the Managed Servers

After you create the tablespace and user in the database, and you have created the data source, you can then assign the TLOGs persistence store to each of the required Managed Servers.

- Creating a JDBC JMS Store

After you create the JMS persistent store user and table space in the database, and after you create the data source for the JMS persistent store, you can then use the Administration Console to create the store.

- Assigning the JMS JDBC store to the JMS Servers

After you create the JMS tablespace and user in the database, create the JMS data source, and create the JDBC store, you can then assign the JMS persistence store to each of the required JMS Servers.

- Creating the Required Tables for the JMS JDBC Store

The final step in using a JDBC persistent store for JMS is to create the required JDBC store tables. Perform this task before restarting the Managed Servers in the domain.

21.1.4.1 About JDBC Persistent Stores for JMS and TLOGs

Oracle Fusion Middleware supports both database-based and file-based persistent stores for Oracle WebLogic Server transaction logs (TLOGs) and JMS. Before deciding on a persistent store strategy for your environment, consider the advantages and disadvantages of each approach.

Note:

Regardless of which storage method you choose, Oracle recommends that for transaction integrity and consistency, you use the same type of store for both JMS and TLOGs.

When you store your TLOGs and JMS data in an Oracle database, you can take advantage of the replication and high availability features of the database. For example, you can use Oracle Data Guard to simplify cross-site synchronization. This is especially important if you are deploying Oracle Fusion Middleware in a disaster recovery configuration.

Storing TLOGs and JMS data in a database also means you don’t have to identify a specific shared storage location for this data. Note, however, that shared storage is still required for other aspects of an enterprise deployment. For example, it is necessary for Administration Server configuration (to support Administration Server failover), for deployment plans, and for adapter artifacts, such as the File/FTP Adapter control and processed files.

If you are storing TLOGs and JMS stores on a shared storage device, then you can protect this data by using the appropriate replication and backup strategy to guarantee zero data loss, and you will potentially realize better system performance. However, the file system protection will always be inferior to the protection provided by an Oracle Database.

For more information about the potential performance impact of using a database-based TLOGs and JMS store, see Performance Impact of the TLOGs and JMS Persistent Stores.

21.1.4.2 Products and Components that use JMS Persistence Stores and TLOGs

To determine which installed Oracle Fusion Middleware products and components use persistent stores, open the WebLogic Server Administration Console and, in the Domain Structure navigation, select DomainName > Services > Persistent Stores. The list shows the name of each store, the store type (usually FileStore), the targeted Managed Server, and whether the target can be migrated.

The persistent stores with migratable targets are the appropriate candidates for JDBC persistent stores. The listed stores that pertain to MDS are outside the scope of this chapter and should not be considered.

21.1.4.3 Performance Impact of the TLOGs and JMS Persistent Stores

One of the primary considerations when selecting a storage method for Transaction Logs and JMS persistent stores is the potential impact on performance. This topic provides some guidelines and details to help you determine the performance impact of using JDBC persistent stores for TLOGs and JMS.

Performance Impact of Transaction Logs Versus JMS Stores

For transaction logs, the impact of using a JDBC store is relatively small, because the logs are very transient in nature. Typically, the effect is minimal when compared to other database operations in the system.

On the other hand, JMS database stores can have a higher impact on performance if the application is JMS intensive. When JMS is only a small part of the workload, the impact is much lower. For example, the impact of switching from a file-based to a database-based persistent store is very low when you are using the SOA Fusion Order Demo (a sample application used to test Oracle SOA Suite environments), because the JMS database operations are masked by many other SOA database invocations that are much heavier.

Factors that Affect Performance

Multiple factors can affect the performance of a system when it is using JMS database stores for custom destinations. The main ones are:

- Custom destinations involved and their type

- Payloads being persisted

- Concurrency on the SOA system (producers and consumers for the destinations)

Depending on the effect of each of these factors, you can configure different settings in the following areas to improve performance:

- Data types used for the JMS table (RAW versus LOBs)

- Segment definition for the JMS table (partitions at index and table level)

Impact of JMS Topics

If your system uses Topics intensively, then as concurrency increases, the performance degradation with an Oracle RAC database will increase more than for Queues. In tests conducted by Oracle with JMS, the average performance degradation for different payload sizes and different concurrency was less than 30% for Queues. For topics, the impact was more than 40%. Consider the importance of these destinations from the recovery perspective when deciding whether to use database stores.

Impact of Data Type and Payload Size

When choosing to use the RAW or SecureFiles LOB data type for the payloads, consider the size of the payload being persisted. For example, when payload sizes range between 100b and 20k, then the amount of database time required by SecureFiles LOB is slightly higher than for the RAW data type.

More specifically, when the payload size reaches around 4k, SecureFiles tends to require more database time, because 4k is where writes move out-of-row. At around 20k payload size, SecureFiles starts being more efficient. When payload sizes increase to more than 20k, the database time becomes worse for payloads set to the RAW data type.

One additional advantage of SecureFiles is that the database time incurred stabilizes as payloads increase beyond 500k. In other words, at that point it is not relevant (for SecureFiles) whether the database is storing 500k, 1MB, or 2MB payloads, because the write is asynchronous and the contention is the same in all cases.

The effect of concurrency (producers and consumers) on the queue’s throughput is similar for both RAW and SecureFiles until the payload sizes reach 50k. For small payloads, the effect of varying concurrency is practically the same, with slightly better scalability for RAW. Scalability is better for SecureFiles when the payloads are above 50k.
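The payload-size guidance above can be condensed into a rough rule of thumb. The sketch below is illustrative only: the function name suggest_payload_type is hypothetical, the 20k crossover is taken from the text, and treating 1k as 1024 bytes and applying the RAW recommendation to payloads smaller than 100 bytes are assumptions. It ignores concurrency, which the text indicates matters mainly above 50k.

```shell
#!/bin/sh
# Rough heuristic only: suggest a payload data type from the typical
# payload size in bytes. The 20k crossover comes from the guidance above;
# 1k = 1024 bytes is an assumption.
suggest_payload_type() {
    spt_bytes=$1
    spt_crossover=$((20 * 1024))
    if [ "$spt_bytes" -gt "$spt_crossover" ]; then
        echo "SECUREFILE"   # RAW needs more database time above about 20k
    else
        echo "RAW"          # SecureFiles needs slightly more database time up to about 20k
    fi
}

# Example usage:
#   suggest_payload_type 4096
#   suggest_payload_type 51200
```

Treat the result as a starting point for testing with your own payload mix rather than as a sizing rule.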

Impact of Concurrency, Worker Threads, and Database Partitioning

Concurrency and worker threads defined for the persistent store can cause contention in the RAC database at the index and global cache level. Using a reverse index when enabling multiple worker threads in one single server or using multiple Oracle WebLogic Server clusters can reduce this contention. However, if the Oracle Database partitioning option is available, use global hash partitioning for the indexes instead. This reduces the contention on the index and the global cache buffer waits, which in turn improves the response time of the application. Partitioning works well in all cases, including some that do not see significant improvements with a reverse index.

21.1.4.4 Roadmap for Configuring a JDBC Persistent Store for TLOGs

The following topics describe how to configure a database-based persistent store for transaction logs.

21.1.4.5 Roadmap for Configuring a JDBC Persistent Store for JMS

The following topics describe how to configure a database-based persistent store for JMS.

21.1.4.6 Creating a User and Tablespace for TLOGs

Before you can create a database-based persistent store for transaction logs, you must create a user and tablespace in a supported database.

21.1.4.7 Creating a User and Tablespace for JMS

Before you can create a database-based persistent store for JMS, you must create a user and tablespace in a supported database.

21.1.4.8 Creating GridLink Data Sources for TLOGs and JMS Stores

Before you can configure database-based persistent stores for JMS and TLOGs, you must create two data sources: one for the TLOGs persistent store and one for the JMS persistent store.

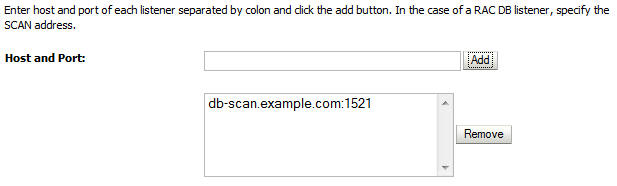

For an enterprise deployment, you should use GridLink data sources for your TLOGs and JMS stores. To create a GridLink data source:

21.1.4.9 Assigning the TLOGs JDBC store to the Managed Servers

After you create the tablespace and user in the database, and you have created the data source, you can then assign the TLOGs persistence store to each of the required Managed Servers.

- Log in to the Oracle WebLogic Server Administration Console.

- In the Change Center, click Lock and Edit.

- In the Domain Structure tree, expand Environment, then Servers.

- Click the name of the Managed Server that you want to use the TLOGs store.

- Select the Configuration > Services tab.

- Under Transaction Log Store, select JDBC from the Type menu.

- From the Data Source menu, select the data source you created for the TLOGs persistence store.

- In the Prefix Name field, specify a prefix name to form a unique JDBC TLOG store name for each configured JDBC TLOG store.

- Click Save.

- Repeat steps 3 to 9 for each of the additional Managed Servers in the cluster.

- To activate these changes, in the Change Center of the Administration Console, click Activate Changes.
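The Prefix Name step above requires a distinct prefix for each JDBC TLOG store. One way to keep prefixes unique is to derive them from the Managed Server names. The sketch below shows such a naming scheme; the function names, the TLOG_<server>_ pattern, and the server names in the usage comment are all hypothetical choices for illustration, not values prescribed by this guide.

```shell
#!/bin/sh
# Hypothetical naming scheme: derive one TLOG store prefix per Managed Server
# so that no two JDBC TLOG stores share a prefix.
tlog_prefix() {
    printf 'TLOG_%s_\n' "$1"
}

# Print "server prefix" for every server name passed as an argument.
list_tlog_prefixes() {
    for ltp_server in "$@"; do
        printf '%s %s\n' "$ltp_server" "$(tlog_prefix "$ltp_server")"
    done
}

# Example usage (server names are placeholders):
#   list_tlog_prefixes WLS_SOA1 WLS_SOA2
```

Generating the list once and entering the values in the Prefix Name field for each server helps avoid accidental reuse of a prefix across stores.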

21.1.4.10 Creating a JDBC JMS Store

After you create the JMS persistent store user and table space in the database, and after you create the data source for the JMS persistent store, you can then use the Administration Console to create the store.

21.1.4.11 Assigning the JMS JDBC store to the JMS Servers

After you create the JMS tablespace and user in the database, create the JMS data source, and create the JDBC store, you can then assign the JMS persistence store to each of the required JMS Servers.

- Log in to the Oracle WebLogic Server Administration Console.

- In the Change Center, click Lock and Edit.

- In the Domain Structure tree, expand Services, then Messaging, then JMS Servers.

- Click the name of the JMS Server that you want to use the persistent store.

- From the Persistent Store menu, select the JMS persistent store you created earlier.

- Click Save.

- Repeat steps 3 to 6 for each of the additional JMS Servers in the cluster.

- To activate these changes, in the Change Center of the Administration Console, click Activate Changes.

21.1.5 Performing Backups and Recoveries for an Enterprise Deployment

This section provides guidelines for making sure you back up the necessary directories and configuration data for an Oracle SOA Suite enterprise deployment.

Note:

Some of the static and run-time artifacts listed in this section are hosted from Network Attached Storage (NAS). If possible, back up and recover these volumes from the NAS filer directly rather than from the application servers.

For general information about backing up and recovering Oracle Fusion Middleware products, see the following sections in Administering Oracle Fusion Middleware:

Table 21-2 lists the static artifacts to back up in a typical Oracle SOA Suite enterprise deployment.

Table 21-2 Static Artifacts to Back Up in the Oracle SOA Suite Enterprise Deployment

| Type | Host | Tier |
|---|---|---|
| Database Oracle home | DBHOST1 and DBHOST2 | Data Tier |
| Oracle Fusion Middleware Oracle home | WEBHOST1 and WEBHOST2 | Web Tier |
| Oracle Fusion Middleware Oracle home | SOAHOST1 and SOAHOST2 | Application Tier |
| Installation-related files | WEBHOST1, WEBHOST2, and shared storage | N/A |

Table 21-3 lists the run-time artifacts to back up in a typical Oracle SOA Suite enterprise deployment.

Table 21-3 Run-Time Artifacts to Back Up in the Oracle SOA Suite Enterprise Deployment

| Type | Host | Tier |
|---|---|---|
| Administration Server domain home (ASERVER_HOME) | SOAHOST1 and SOAHOST2 | Application Tier |
| Application home (APPLICATION_HOME) | SOAHOST1 and SOAHOST2 | Application Tier |
| Oracle RAC databases | DBHOST1 and DBHOST2 | Data Tier |
| Scripts and Customizations | SOAHOST1 and SOAHOST2 | Application Tier |
| Deployment Plan home (DEPLOY_PLAN_HOME) | SOAHOST1 and SOAHOST2 | Application Tier |
| OHS Configuration directory | WEBHOST1 and WEBHOST2 | Web Tier |
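Where a volume cannot be backed up from the NAS filer directly, a file-level archive taken from the host is one possible fallback for the directory-based artifacts in the tables above. The following is a minimal sketch, not an Oracle-provided procedure: the function name backup_dir and the destination path in the usage comment are hypothetical, and it covers directories only, not the Oracle RAC databases.

```shell
#!/bin/sh
# Hypothetical helper: archive one directory into a dated tar.gz file.
# Prints the path of the archive it created.
backup_dir() {
    bd_src=$1        # directory to back up, e.g. the value of ASERVER_HOME
    bd_dest=$2       # existing directory that receives the archive
    bd_name=$(basename "$bd_src")
    bd_archive="$bd_dest/${bd_name}_$(date +%Y%m%d_%H%M%S).tar.gz"

    # -C keeps paths in the archive relative to the parent of the source.
    tar -czf "$bd_archive" -C "$(dirname "$bd_src")" "$bd_name" && echo "$bd_archive"
}

# Example usage (placeholder destination directory):
#   backup_dir "$ASERVER_HOME" /backups/soaedg
```

Storing paths relative to the parent directory lets the archive be restored to the same location on either host, which matters here because both hosts reference the shared directories by the same path.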

21.2 Configuration and Management Tasks for an Oracle SOA Suite Enterprise Deployment

These are some of the key configuration and management tasks that you will likely need to perform on an Oracle SOA Suite enterprise deployment.

21.2.1 Deploying Oracle SOA Suite Composite Applications to an Enterprise Deployment

Oracle SOA Suite applications are deployed as composites, consisting of different kinds of Oracle SOA Suite components. SOA composite applications include the following:

- Service components such as Oracle Mediator for routing, BPEL processes for orchestration, BAM processes for orchestration (if Oracle BAM Suite is also installed), human tasks for workflow approvals, spring for integrating Java interfaces into SOA composite applications, and decision services for working with business rules.

- Binding components (services and references) for connecting SOA composite applications to external services, applications, and technologies.

These components are assembled into a single SOA composite application.

When you deploy an Oracle SOA Suite composite application to an Oracle SOA Suite enterprise deployment, be sure to deploy each composite to a specific server or cluster address and not to the load balancer address (soa.example.com).

Deploying composites to the load balancer address often requires direct connection from the deployer nodes to the external load balancer address. As a result, you will have to open additional ports in the firewalls.

For more information about Oracle SOA Suite composite applications, see the following sections in Administering Oracle SOA Suite and Oracle Business Process Management Suite:

21.2.2 Using Shared Storage for Deployment Plans and SOA Infrastructure Applications Updates

When redeploying a SOA infrastructure application or resource adapter within the SOA cluster, the deployment plan along with the application bits should be accessible to all servers in the cluster.

SOA applications and resource adapters are installed using nostage deployment mode. Because the Administration Server does not copy the archive files from their source location when the nostage deployment mode is selected, each server must be able to access the same deployment plan.

To ensure that the deployment plan location is available to all servers in the domain, use the Deployment Plan home location described in File System and Directory Variables Used in This Guide and represented by the DEPLOY_PLAN_HOME variable in the Enterprise Deployment Workbook.

21.2.3 Managing Database Growth in an Oracle SOA Suite Enterprise Deployment

When the amount of data in the Oracle SOA Suite database grows very large, maintaining the database can become difficult, especially in an Oracle SOA Suite enterprise deployment where potentially many composite applications are deployed.

For more information, review the following sections in Administering Oracle SOA Suite and Oracle Business Process Management Suite: