1 Event Processing Overview in Oracle Stream Analytics

Oracle Stream Analytics is a high-throughput, low-latency platform for developing, administering, and managing applications that monitor real-time streaming events.

This guide introduces you to Oracle Stream Analytics and Oracle JDeveloper for application development. The step-by-step walkthroughs and sample applications provide a solid foundation for understanding how the parts of the platform work together and how to create an Oracle Stream Analytics application.

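This chapter covers the following topics:

- Oracle Stream Analytics
- Application Programming Model
- Component Roles in an Event Processing Network
- Oracle CQL
- Technologies in Oracle Stream Analytics
- Oracle Stream Analytics High-Level Use Cases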

1.1 Oracle Stream Analytics

Oracle Stream Analytics consists of the Oracle Stream Analytics server, Oracle Stream Analytics Visualizer, a command-line administrative interface, and the Oracle JDeveloper Integrated Development Environment (IDE).

An Oracle Stream Analytics server hosts logically related resources and services for running Oracle Stream Analytics applications. Servers are grouped into and managed as domains. A domain can have one server (standalone-server domain) or many (multiserver domain). You manage the Oracle Stream Analytics domains and servers through Oracle Stream Analytics Visualizer and the Oracle Stream Analytics administrative command-line interface.

Oracle Stream Analytics Visualizer is a web-based user interface through which you can deploy, configure, test, and monitor Oracle Stream Analytics applications running on the Oracle Stream Analytics server.

Oracle Stream Analytics administrative command-line interface enables you to manage the server from the command line and through configuration files. For example, you can start and stop domains and deploy, suspend, resume, and uninstall an application.

Oracle JDeveloper for the 12c release includes an integrated framework that supports Oracle Stream Analytics application design, development, testing, and deployment.

Oracle Stream Analytics is designed to simplify complex event processing operations and make them accessible even to users without a technical background.

1.2 Application Programming Model

An Oracle Stream Analytics application receives and processes data streaming from an event source. That data might be coming from one of a variety of places, such as a monitoring device, a financial services company, or a motor vehicle. While monitoring the data, the application might identify and respond to patterns, look for events that meet specified criteria and alert other applications, or do other work that requires immediate action based on quickly changing data.

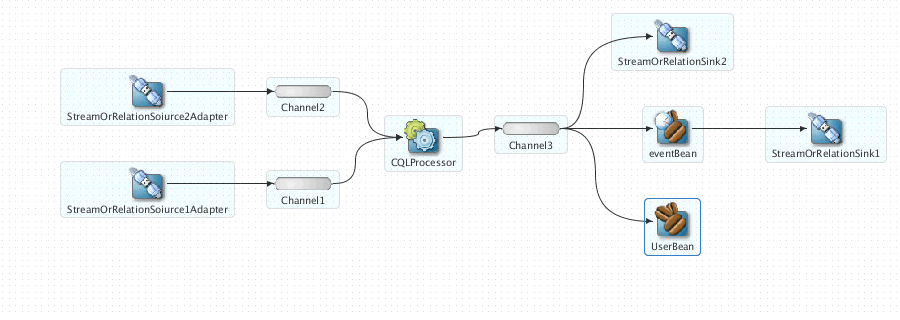

Oracle Stream Analytics uses an event-driven architecture called SEDA where an application is broken into a set of stages (nodes) connected by queues. In Oracle Stream Analytics, the channel component represents queues while all of the other components represent stages. Every component in the EPN has a role in processing the data.

The event processing network (EPN) is linear with data entering the EPN through an adapter where it is converted to an event. After the conversion, events pass through the stages from one end to the other. At various stages in the EPN, the component can execute logic or create connections with external components as needed.

Figure 1-1 Oracle Stream Analytics Application

Description of "Figure 1-1 Oracle Stream Analytics Application"
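The stage-and-queue structure described above can be sketched in plain Java. This is a minimal, hypothetical illustration rather than the Oracle Stream Analytics API: `StockEvent`, `Stage`, and `SedaSketch` are invented names, and the comma-separated input format is an assumption. In SEDA each stage normally drains its queue on its own threads; here the stages are drained one after another so the result is deterministic. Each `Stage` acts as an event sink for its input queue and an event source for its output queue.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.List;
import java.util.Queue;
import java.util.function.Function;

// Hypothetical event type produced by the adapter stage.
record StockEvent(String symbol, double price) {}

// A stage consumes from its input queue (event sink) and publishes to its
// output queue (event source). The queues play the role of channels.
class Stage<I, O> {
    private final Queue<I> in;
    private final Queue<O> out;
    private final Function<I, O> logic; // returns null to drop an event

    Stage(Queue<I> in, Queue<O> out, Function<I, O> logic) {
        this.in = in;
        this.out = out;
        this.logic = logic;
    }

    // Processes everything currently queued; a real SEDA stage would keep
    // polling on its own thread instead of returning.
    void drain() {
        I item;
        while ((item = in.poll()) != null) {
            O result = logic.apply(item);
            if (result != null) {
                out.add(result);
            }
        }
    }
}

class SedaSketch {
    static List<StockEvent> run(List<String> rawLines) {
        Queue<String> source = new ArrayDeque<>(rawLines); // raw external data
        Queue<StockEvent> channel = new ArrayDeque<>();    // adapter -> processor
        Queue<StockEvent> output = new ArrayDeque<>();     // processor -> downstream

        // Adapter stage: converts raw "SYMBOL,PRICE" text into events.
        Stage<String, StockEvent> adapter = new Stage<>(source, channel, line -> {
            String[] parts = line.split(",");
            return new StockEvent(parts[0].trim(), Double.parseDouble(parts[1].trim()));
        });

        // Processor stage: passes on only events with a price above 100.
        Stage<StockEvent, StockEvent> processor =
                new Stage<>(channel, output, e -> e.price() > 100.0 ? e : null);

        adapter.drain();
        processor.drain();
        return new ArrayList<>(output);
    }

    public static void main(String[] args) {
        System.out.println(run(List.of("ORCL,120.5", "IBM,95.0", "MSFT,101.0")));
    }
}
```

Because each stage only touches its own input and output queues, stages can be added, removed, or given separate threads without changing the logic of their neighbors.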

Oracle Stream Analytics applications have the following characteristics:

- Applications leverage the database programming model: Parts of the Oracle Stream Analytics programming model are conceptually an extension of database programming. Events are similar to database rows in that they are tuples against which you can execute queries with Oracle Continuous Query Language (Oracle CQL). Oracle CQL extends SQL and is designed to work on streaming data.

- Stages represent discrete functional roles: The structure of an EPN enables you to execute logic against events flowing through the network. Stages also enable you to capture multiple processing paths with a network that branches into multiple downstream directions based on event patterns that your code finds.

- Stages transmit events through an EPN by acting as event sinks and event sources: The stages in an EPN can receive events as event sinks and send events as event sources. An event sink is a Java class that implements logic to listen for and work on specific events.

- Events are handled as streams or relations: Channels convey events from one stage to another in the EPN. A channel can convey events in a stream or in a relation. Both stream and relation channels insert events into a collection and send it to the next EPN stage. Events in a stream can never be deleted from the stream. Events in a relation can be inserted into, deleted from, and updated in the relation. For insert, delete, and update operations, each event in a relation must be associated with a particular point in time.
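The difference between stream and relation semantics can be made concrete with a small Java model. This is a hypothetical sketch, not how Oracle Stream Analytics channels are implemented: a stream is modeled as an append-only list of timestamped events, and a relation as a history of timestamped insert, update, and delete operations that can be replayed to any point in time. The names `Kind`, `Op`, `StreamModel`, and `RelationModel` are illustrative only.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Kinds of change; a stream only ever carries INSERT.
enum Kind { INSERT, UPDATE, DELETE }

// A change tied to a point in time (timestamp in milliseconds).
record Op(long timestamp, Kind kind, String key, double value) {}

// Stream: an append-only sequence of timestamped events.
class StreamModel {
    private final List<Op> events = new ArrayList<>();

    void insert(long timestamp, String key, double value) {
        events.add(new Op(timestamp, Kind.INSERT, key, value));
    }

    // Read-only view: nothing can be deleted from the stream.
    List<Op> events() {
        return Collections.unmodifiableList(events);
    }
}

// Relation: keyed contents that change over time through timestamped operations.
class RelationModel {
    private final List<Op> history = new ArrayList<>();

    // Assumes operations are applied in non-decreasing timestamp order.
    void apply(Op op) {
        history.add(op);
    }

    // Contents of the relation as of time t, rebuilt by replaying the history.
    Map<String, Double> snapshotAt(long t) {
        Map<String, Double> state = new LinkedHashMap<>();
        for (Op op : history) {
            if (op.timestamp() > t) {
                break; // later operations are not yet visible at time t
            }
            switch (op.kind()) {
                case INSERT, UPDATE -> state.put(op.key(), op.value());
                case DELETE -> state.remove(op.key());
            }
        }
        return state;
    }
}
```

Tying every operation to a timestamp is what makes a question such as "what did the relation contain at time 2?" answerable; a stream never needs this replay because nothing is ever removed from it.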

1.3 Component Roles in an Event Processing Network

The core of Oracle Stream Analytics applications is the EPN. You build an EPN by connecting components that have a role in processing events that pass through the network. When you develop an Oracle Stream Analytics application, you identify which kinds of components are needed to achieve the desired functionality.

The best way to create an EPN is to use Oracle JDeveloper to add, configure, and connect the components. The EPN has a roughly linear shape where events enter from the left, move through the EPN to the right, and exit or terminate at the far right.

The EPN components provide ways to:

- Exchange event data with external sources: You can connect external databases, caches, HTTP messages, Java Message Service (JMS) messages, files, and big data storage to the EPN of your application to add ways for data, including event data, to pass into or out of the EPN.

- Model event data as event types so that it can be handled by application code: You implement or define event types that model event data so that application code can access and manipulate it.

- Query and filter events: Oracle CQL enables you to query events as you would data in a database. Oracle CQL includes features specifically intended for querying streaming data. You can add Oracle CQL code to an EPN by adding a processor component. All EPN processors are Oracle CQL processors.

- Execute Java logic to handle events: You can add Java classes that send and receive events the same way that other EPN stages do. Logic in these classes can retrieve values from events, create new events, and more.
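To illustrate the event-type and Java-logic roles, the following sketch shows an event type modeled as a JavaBean and a class that reads values from incoming events and creates new ones. Everything here is hypothetical: the class names, fields, the `onEvent` method, and the 10% threshold are invented for the example, and `onEvent` is a stand-in for whatever callback a real event sink uses. Registering such classes with an EPN is not shown.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical event type: a JavaBean with a no-arg constructor and getters/setters.
class TradeEvent {
    private String symbol;
    private double price;

    public TradeEvent() {}

    public TradeEvent(String symbol, double price) {
        this.symbol = symbol;
        this.price = price;
    }

    public String getSymbol() { return symbol; }
    public void setSymbol(String symbol) { this.symbol = symbol; }
    public double getPrice() { return price; }
    public void setPrice(double price) { this.price = price; }
}

// Hypothetical derived event created by the logic below.
record PriceJumpEvent(String symbol, double previousPrice, double newPrice) {}

// Java logic that receives events, reads their values, and emits new events.
class PriceJumpDetector {
    private final Map<String, Double> lastPrice = new HashMap<>();
    private final List<PriceJumpEvent> emitted = new ArrayList<>();

    // Called once per incoming event (stand-in for a real event sink callback).
    void onEvent(TradeEvent event) {
        Double previous = lastPrice.put(event.getSymbol(), event.getPrice());
        // Emit a new event when the price rises more than 10% over the previous trade.
        if (previous != null && event.getPrice() > previous * 1.10) {
            emitted.add(new PriceJumpEvent(event.getSymbol(), previous, event.getPrice()));
        }
    }

    List<PriceJumpEvent> emitted() { return emitted; }
}
```

Keeping the event type a plain JavaBean means both query code and Java code can access its properties by name, while the detector holds only the small amount of per-symbol state it needs.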

1.4 Oracle CQL

Oracle CQL is an extension to Structured Query Language (SQL) with the same keywords and syntax rules, but with features to support the unique aspects of streaming data.

An event conceptually corresponds to a row in a database table. However, an important difference between an event and a table row is that with events, one event is always before or after another in time, and the stream is potentially infinite and ever-changing.

With a relational database, data is relatively static and changes when a user initiates a transaction such as an add, delete, or change operation. In contrast, event data flows constantly into the EPN, where your query examines it as it arrives.

To make the most of the sequential, time-oriented quality of streaming data, Oracle CQL enables you to do the following:

- Specify a window of a particular time period or range from which events should be queried, for example, every five seconds' worth of events.

- Specify a window of a particular number of events, called rows, against which to query, for example, every sequence of 10 events.

- Specify how often the query should execute against the stream. For example, the query could slide every five seconds to a later five-second window of events.

- Separate (partition) an incoming stream into multiple streams based on specified event characteristics. For example, the query could create a new stream for each stock symbol found in incoming trade events.
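In Oracle CQL, these options are written as window clauses on a stream, such as `[RANGE 5 SECONDS SLIDE 5 SECONDS]`, `[ROWS 10]`, and `[PARTITION BY symbol ROWS 10]` (where `symbol` is an assumed event attribute). To show what such windows select, here is a plain-Java approximation over an in-memory list of events. It is for illustration only: Oracle CQL evaluates windows incrementally as events arrive, and the `Trade` record, the `Windows` class, and the exact window-boundary convention used here are assumptions.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical event with an application timestamp in milliseconds.
record Trade(long timestampMillis, String symbol, double price) {}

class Windows {
    // Row window: the last n events (compare [ROWS n]).
    static List<Trade> lastRows(List<Trade> events, int n) {
        int from = Math.max(0, events.size() - n);
        return new ArrayList<>(events.subList(from, events.size()));
    }

    // Time window: events with timestamps in (now - rangeMillis, now] (compare [RANGE ...]).
    static List<Trade> lastRange(List<Trade> events, long now, long rangeMillis) {
        List<Trade> result = new ArrayList<>();
        for (Trade t : events) {
            if (t.timestampMillis() > now - rangeMillis && t.timestampMillis() <= now) {
                result.add(t);
            }
        }
        return result;
    }

    // Range window that slides by its full width (non-overlapping windows),
    // keyed by the start time of each window.
    static Map<Long, List<Trade>> tumbling(List<Trade> events, long widthMillis) {
        Map<Long, List<Trade>> windows = new LinkedHashMap<>();
        for (Trade t : events) {
            long start = Math.floorDiv(t.timestampMillis(), widthMillis) * widthMillis;
            windows.computeIfAbsent(start, k -> new ArrayList<>()).add(t);
        }
        return windows;
    }

    // Partitioned row window: the last n events per symbol (compare [PARTITION BY symbol ROWS n]).
    static Map<String, List<Trade>> lastRowsPerSymbol(List<Trade> events, int n) {
        Map<String, List<Trade>> bySymbol = new LinkedHashMap<>();
        for (Trade t : events) {
            bySymbol.computeIfAbsent(t.symbol(), k -> new ArrayList<>()).add(t);
        }
        Map<String, List<Trade>> result = new LinkedHashMap<>();
        bySymbol.forEach((symbol, list) -> result.put(symbol, lastRows(list, n)));
        return result;
    }
}
```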

In addition, Oracle CQL supports common aspects of SQL that you might be familiar with, including views and joins. For example, you can write Oracle CQL code that performs a join that involves streaming event data and data in a relational database table or cache.
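Conceptually, a join between a stream and a table enriches each arriving event with matching stored data. The sketch below models the table or cache as an in-memory `Map` and performs one lookup per event with inner-join semantics. The `Purchase`, `Customer`, `EnrichedPurchase`, and `StreamTableJoin` types are hypothetical; in an application, the join would be expressed declaratively in an Oracle CQL query rather than hand-coded like this.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.Optional;

record Purchase(String customerId, double amount) {}          // streaming event
record Customer(String customerId, String region) {}          // stored row
record EnrichedPurchase(String customerId, double amount, String region) {}

class StreamTableJoin {
    // Stands in for a relational database table or cache keyed by customer ID.
    private final Map<String, Customer> customerTable;

    StreamTableJoin(Map<String, Customer> customerTable) {
        this.customerTable = customerTable;
    }

    // Inner-join semantics: an event with no matching row produces no output.
    Optional<EnrichedPurchase> onEvent(Purchase p) {
        Customer c = customerTable.get(p.customerId());
        if (c == null) {
            return Optional.empty();
        }
        return Optional.of(new EnrichedPurchase(p.customerId(), p.amount(), c.region()));
    }

    List<EnrichedPurchase> joinAll(List<Purchase> purchases) {
        List<EnrichedPurchase> out = new ArrayList<>();
        for (Purchase p : purchases) {
            onEvent(p).ifPresent(out::add);
        }
        return out;
    }
}
```

The stored side changes rarely while the streaming side changes constantly, so the per-event cost is a single keyed lookup rather than a scan of the table.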

Oracle CQL is extensible through data cartridges. The included data cartridges let queries incorporate functionality implemented in Java classes; for example, data cartridges provide support for spatial calculations and JDBC queries. The spatial data cartridge supports large numbers of moving objects, complex polygons and circles, 3D positioning, and spatial clustering.

1.5 Technologies in Oracle Stream Analytics

Oracle Stream Analytics is built on the following technologies, which provide functionality for developing application logic and for deploying and configuring applications.

- Java programming language: Much of the Oracle Stream Analytics server functionality is written in the Java programming language. Java is the language you use to write logic for event beans and Spring beans.

- Spring: Oracle Stream Analytics makes significant use of the Spring configuration model. Spring is a collection of technologies that developers use to connect and configure parts of a Java application. Oracle Stream Analytics applications also support adding logic as Spring beans, which are Java components that support Spring framework features.

  You can find out more about Spring at the project's web site: http://www.springsource.org/get-started.

- OSGi: Oracle Stream Analytics application components are assembled and deployed as OSGi bundles. You can find out more about OSGi at http://en.wikipedia.org/wiki/OSGi.

- XML: Oracle Stream Analytics application configuration files are written in XML. These files include the assembly file, which defines relationships between EPN stages and other design-time configurations, and a separate configuration file, which contains settings that can be modified after the application is deployed, including Oracle CQL queries.

- SQL: Oracle CQL extends SQL with functionality designed to address the needs of applications that use streaming data.

- Hadoop: Oracle CQL developers can access Hadoop big data sources from query code. Hadoop is an open source technology that provides access to large data sets that are distributed across clusters. One strength of the Hadoop software is that it provides access to large quantities of data not stored in a relational database.

  For more information about Hadoop, start with the Hadoop project website at http://hadoop.apache.org/.

- NoSQL: Oracle CQL developers can access NoSQL big data sources from query code. The Oracle NoSQL Database is a distributed key-value database. In Oracle NoSQL, data is stored as key-value pairs, which are written to particular storage nodes. Storage nodes are replicated to ensure high availability, rapid failover in the event of a node failure, and optimal load balancing of queries.

  For more information about Oracle NoSQL, see the Oracle Technology Network page at http://www.oracle.com/technetwork/products/nosqldb/.

- REST Inbound and Outbound Adapters: These adapters let an application consume events from HTTP POST requests and send out events that the EPN has processed.

- MBean API (JMX Technology): Enables administrative operations and dynamic changes to the EPN.

- Oracle Business Rules: Lets you define your own business logic and use it to build applications.
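As an example of the REST inbound adapter described above, a client could push an event with an ordinary HTTP POST. The following sketch uses the JDK's `java.net.http` API to build such a request. The endpoint URL and the JSON payload shape are hypothetical placeholders; the actual path and event format depend on how the adapter and its event type are configured in your application.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

class RestEventClient {
    // Hypothetical endpoint; the real path depends on the adapter configuration.
    static final String ENDPOINT = "http://localhost:9002/trades";

    // Builds a POST request carrying one event as JSON (payload shape is assumed).
    static HttpRequest buildRequest(String symbol, double price) {
        String json = String.format("{\"symbol\":\"%s\",\"price\":%s}", symbol, price);
        return HttpRequest.newBuilder(URI.create(ENDPOINT))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(json))
                .build();
    }

    public static void main(String[] args) throws Exception {
        HttpRequest request = buildRequest("ORCL", 120.5);
        // Sending requires a running server with a REST inbound adapter at ENDPOINT.
        HttpResponse<String> response = HttpClient.newHttpClient()
                .send(request, HttpResponse.BodyHandlers.ofString());
        System.out.println(response.statusCode());
    }
}
```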

1.6 Oracle Stream Analytics High-Level Use Cases

The use cases described in this section illustrate specific uses for Oracle Stream Analytics applications.

Financial: Responsive Customer Relationship

Acting on an initiative to improve relationships with customers, a retail bank plans to offer coupons tailored to each customer's purchase pattern and geography.

The bank collects automated teller machine (ATM) data, including data about the geographical region for the customer's most common ATM activity. The bank also captures credit card transaction activity. Using this data, the bank can push purchase incentives (such as coupons) to the customer in real time based on where they are and what they tend to buy.

An Oracle Stream Analytics application receives event data in the form of ATM and credit card activity. Oracle CQL queries filter incoming events for patterns that isolate the customer's geography by way of GPS coordinates and likely purchase interests nearby. This transient data is matched against the bank's stored customer profile data. If a good match is found, a purchase incentive is sent to the customer in real time, such as through their mobile device.

Telecommunications: Real-Time Billing

Due to significant growth in mobile data usage, a telecommunications company with a large mobile customer base wants to shift billing for data usage from a flat-rate system to a real-time per-use system.

The company tracks IP addresses allocated to mobile devices and correlates this with stored user account data. Additional data is collected from deep-packet inspection (DPI) devices (for finer detail about data plan usage) and IP servers, then inserted into a Hadoop-based big data warehouse.

An Oracle Stream Analytics application receives usage information as event data in real time. Through Oracle CQL queries, and by correlating transient usage data with stored customer account data, the application determines billing requirements.

Energy: Improving Efficiency Through Analysis of Big Data

A company offering data management devices and services needs to improve its data center coordination and energy management to reduce total cost of ownership. The company needs finer-grained, more detailed sensor and data center reporting.

The company receives energy usage sensor data from disparate resources. Data from each sensor must be analyzed for its local relevance, and must be aggregated with data from other sensors to identify patterns that can be used to improve efficiency.

Separate Oracle Stream Analytics applications provide a two-tiered approach.

One application, deployed in each of thousands of data centers, receives sensor data as event data. Through Oracle CQL queries against events representing the sensor data, this application analyzes local usage, filtering for fault and problem events and sending alerts when needed.

The other application, deployed in multiple central management sites, receives event data from lower-tier applications. This application aggregates and correlates data from across the system to identify consistency issues and produce data to be used in reports on patterns.