2.2 IEEE Formats

This section describes how floating-point data is stored in memory. It summarizes the precisions and ranges of the different IEEE storage formats.

2.2.1 Storage Formats

A floating-point format is a data structure specifying the fields that comprise a floating-point numeral, the layout of those fields, and their arithmetic interpretation. A floating-point storage format specifies how a floating-point format is stored in memory. The IEEE standard defines the formats, but the choice of storage formats is left to the implementers.

Assembly language software sometimes relies on using the storage formats, but higher level languages usually deal only with the linguistic notions of floating-point data types. These types have different names in different high-level languages, and correspond to the IEEE formats as shown in Table 1.

IEEE 754 specifies exactly the single and double floating-point formats, and it defines a class of extended formats for each of these two basic formats. The long double and REAL*16 types shown in Table 1 refer to one of the class of double extended formats defined by the IEEE standard.

The following sections describe in detail each of the storage formats used for the IEEE floating-point formats on SPARC and x86 platforms.

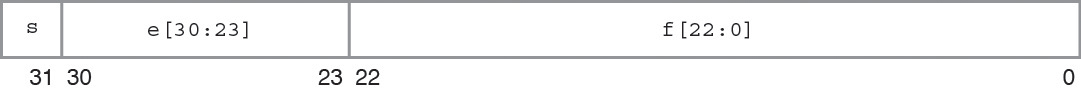

2.2.2 Single Format

The IEEE single format consists of three fields: a 23-bit fraction f; an 8-bit biased exponent e; and a 1-bit sign s. These fields are stored contiguously in one 32-bit word, as shown in the following figure. Bits 0:22 contain the 23-bit fraction, f, with bit 0 being the least significant bit of the fraction and bit 22 being the most significant; bits 23:30 contain the 8-bit biased exponent, e, with bit 23 being the least significant bit of the biased exponent and bit 30 being the most significant; and the highest-order bit 31 contains the sign bit, s.

Figure 1 Single Storage Format

Table 2 shows the correspondence between the values of the three constituent fields s, e and f, on the one hand, and the value represented by the single-format bit pattern on the other; u means that the value of the indicated field is irrelevant to the determination of the value of the particular bit patterns in single format.

Notice that when e < 255, the value assigned to the single format bit pattern is formed by inserting the binary radix point immediately to the left of the fraction's most significant bit, and inserting an implicit bit immediately to the left of the binary point, thus representing in binary positional notation a mixed number (whole number plus fraction, wherein 0 ≤ fraction < 1).

The mixed number thus formed is called the single-format significand. The implicit bit is so named because its value is not explicitly given in the single-format bit pattern, but is implied by the value of the biased exponent field.

For the single format, the difference between a normal number and a subnormal number is that the leading bit of the significand (the bit to the left of the binary point) of a normal number is 1, whereas the leading bit of the significand of a subnormal number is 0. Single-format subnormal numbers were called single-format denormalized numbers in IEEE Standard 754-1985.

The 23-bit fraction combined with the implicit leading significand bit provides 24 bits of precision in single-format normal numbers.
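
The field layout and the interpretation of the implicit bit described above can be sketched in a few lines of Python. This is an illustrative sketch only, not code from any Oracle library: the names `decode_single` and `single_bits` are hypothetical, and the value rules applied (exponent bias 127, fixed exponent of -126 when e = 0) are the standard IEEE 754 single-format rules.

```python
import struct
from fractions import Fraction


def decode_single(bits):
    """Split a 32-bit single-format pattern into s, e, f and its value.

    The value is an exact Fraction for finite patterns, or one of the
    strings "+INF", "-INF", "NaN". A Fraction cannot carry the sign of
    zero, so both +0 and -0 decode to Fraction(0); check s for the sign.
    """
    s = (bits >> 31) & 0x1       # bit 31: sign
    e = (bits >> 23) & 0xFF      # bits 23:30: biased exponent
    f = bits & 0x7FFFFF          # bits 0:22: fraction
    sign = -1 if s else 1

    if e == 255:
        value = "NaN" if f else ("-INF" if s else "+INF")
    elif e == 0:
        # Zero or subnormal: the implicit bit is 0, so the significand is 0.f
        value = sign * Fraction(f, 2**23) * Fraction(1, 2**126)
    else:
        # Normal: the implicit bit is 1, so the significand is 1.f
        value = sign * (1 + Fraction(f, 2**23)) * Fraction(2) ** (e - 127)
    return s, e, f, value


def single_bits(x):
    """Bit pattern of x after rounding it to single format."""
    return struct.unpack(">I", struct.pack(">f", x))[0]


for x in (1.0, -2.5, 0.15625):
    s, e, f, value = decode_single(single_bits(x))
    print(f"{x:>8}: s={s} e={e} f=0x{f:06x} value={value}")
```

Exact rational arithmetic is used so that the decoded value is not itself subject to floating-point rounding.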

Examples of important bit patterns in the single-storage format are shown in Table 3. The maximum positive normal number is the largest finite number representable in IEEE single format. The minimum positive subnormal number is the smallest positive number representable in IEEE single format. The minimum positive normal number is often referred to as the underflow threshold. (The decimal values for the maximum and minimum normal and subnormal numbers are approximate; they are correct to the number of figures shown.)

A NaN (Not a Number) can be represented with any of the many bit patterns that satisfy the definition of a NaN. The hex value of the NaN shown in Table 3 is just one of the many bit patterns that can be used to represent a NaN.
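
As a rough illustration, the following Python sketch interprets several single-format bit patterns of the kinds discussed above. The hex patterns are derived here from the format definition (they are not copied from Table 3), the helper name `single_from_hex` is hypothetical, and `7fc00000` and `7f800001` are just two of the many possible NaN patterns.

```python
import math
import struct


def single_from_hex(hex_pattern):
    """Interpret 8 hex digits as an IEEE single and return it as a float."""
    return struct.unpack(">f", bytes.fromhex(hex_pattern))[0]


patterns = [
    ("+0", "00000000"),
    ("-0", "80000000"),
    ("1", "3f800000"),
    ("maximum normal", "7f7fffff"),
    ("minimum positive normal", "00800000"),
    ("maximum subnormal", "007fffff"),
    ("minimum positive subnormal", "00000001"),
    ("+infinity", "7f800000"),
    ("-infinity", "ff800000"),
    ("a NaN", "7fc00000"),
    ("another NaN", "7f800001"),
]

for name, hex_pattern in patterns:
    print(f"{name:<28} {hex_pattern}  {single_from_hex(hex_pattern)!r}")
```

Python prints each value after widening it to double, so the decimal strings shown carry more digits than a single-precision print routine would produce.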

2.2.3 Double Format

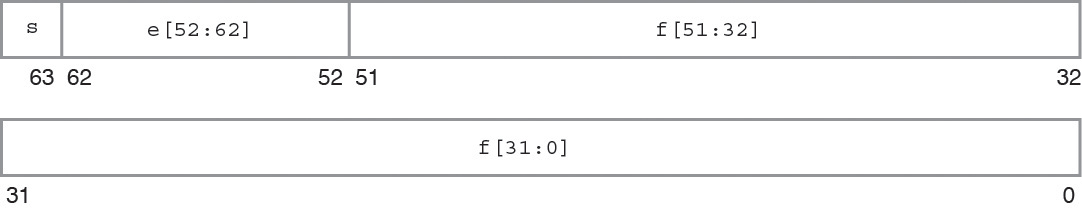

The IEEE double format consists of three fields: a 52-bit fraction, f; an 11-bit biased exponent, e; and a 1-bit sign, s. These fields are stored contiguously in two successively addressed 32-bit words, as shown in the following figure.

In the SPARC architecture, the higher address 32-bit word contains the least significant 32 bits of the fraction, while in the x86 architecture the lower address 32‐bit word contains the least significant 32 bits of the fraction.

If f[31:0] denotes the least significant 32 bits of the fraction, then bit 0 is the least significant bit of the entire fraction and bit 31 is the most significant of the 32 least significant fraction bits.

In the other 32-bit word, bits 0:19 contain the 20 most significant bits of the fraction, f[51:32], with bit 0 being the least significant of these 20 most significant fraction bits, and bit 19 being the most significant bit of the entire fraction; bits 20:30 contain the 11-bit biased exponent, e, with bit 20 being the least significant bit of the biased exponent and bit 30 being the most significant; and the highest-order bit 31 contains the sign bit, s.

The following figure numbers the bits as though the two contiguous 32-bit words were one 64‐bit word in which bits 0:51 store the 52-bit fraction, f; bits 52:62 store the 11-bit biased exponent, e; and bit 63 stores the sign bit, s.
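
The difference in word order between the two architectures can be observed with a short Python sketch. It assumes that the SPARC layout corresponds to big-endian byte order and the x86 layout to little-endian byte order; the function name `double_words` is hypothetical.

```python
import struct


def double_words(x, byte_order):
    """Return the two 32-bit words of x as (lower address, higher address).

    byte_order is "big" (assumed for SPARC) or "little" (assumed for x86).
    """
    prefix = ">" if byte_order == "big" else "<"
    raw = struct.pack(prefix + "d", x)          # 8 bytes in memory order
    return struct.unpack(prefix + "II", raw)    # words in address order


for order in ("big", "little"):
    low_addr, high_addr = double_words(0.1, order)
    print(f"{order:>6}: lower address {low_addr:08x}, "
          f"higher address {high_addr:08x}")
```

For 0.1, whose 64-bit pattern is 3fb99999 9999999a, the word holding the sign and exponent sits at the lower address in the big-endian case and at the higher address in the little-endian case.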

Figure 2 Double-Storage Format

The values of the bit patterns in these three fields determine the value represented by the overall bit pattern.

Table 4 shows the correspondence between the values of the bits in the three constituent fields, on the one hand, and the value represented by the double-format bit pattern on the other; u means the value of the indicated field is irrelevant to the determination of value for the particular bit pattern in double format.

Notice that when e < 2047, the value assigned to the double-format bit pattern is formed by inserting the binary radix point immediately to the left of the fraction's most significant bit, and inserting an implicit bit immediately to the left of the binary point. The number thus formed is called the significand. The implicit bit is so named because its value is not explicitly given in the double-format bit pattern, but is implied by the value of the biased exponent field.

For the double format, the difference between a normal number and a subnormal number is that the leading bit of the significand (the bit to the left of the binary point) of a normal number is 1, whereas the leading bit of the significand of a subnormal number is 0. Double-format subnormal numbers were called double-format denormalized numbers in IEEE Standard 754-1985.

The 52-bit fraction combined with the implicit leading significand bit provides 53 bits of precision in double-format normal numbers.
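
The following Python sketch extracts the three double-format fields from a value and then rebuilds the value from them, making the role of the implicit bit explicit. It is a minimal illustration using hypothetical helper names (`double_fields`, `rebuild`) and the standard IEEE 754 double-format parameters (bias 1023, fixed exponent of -1022 when e = 0).

```python
import math
import struct


def double_fields(x):
    """Return (s, e, f) for the double-format bit pattern of x."""
    (bits,) = struct.unpack(">Q", struct.pack(">d", x))
    s = bits >> 63                    # bit 63: sign
    e = (bits >> 52) & 0x7FF          # bits 52:62: biased exponent
    f = bits & ((1 << 52) - 1)        # bits 0:51: fraction
    return s, e, f


def rebuild(s, e, f):
    """Recompute a finite value from its fields (requires e < 2047)."""
    implicit = 0 if e == 0 else 1          # implied by the exponent field
    exponent = -1022 if e == 0 else e - 1023
    significand = (implicit << 52) | f     # 53-bit integer: implicit bit, then f
    value = math.ldexp(significand, exponent - 52)
    return -value if s else value


for x in (1.0, -0.1, 5e-324):
    s, e, f = double_fields(x)
    print(f"{x!r}: s={s} e={e} f=0x{f:013x} rebuilt={rebuild(s, e, f)!r}")
```

The 53-bit integer formed in `rebuild` is the significand scaled by 2^52, which is why the exponent passed to `math.ldexp` is reduced by 52.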

Examples of important bit patterns in the double-storage format are shown in Table 5. The bit patterns in the second column appear as two 8-digit hexadecimal numbers. For the SPARC architecture, the left one is the value of the lower addressed 32-bit word, and the right one is the value of the higher addressed 32-bit word, while for the x86 architecture, the left one is the higher addressed word, and the right one is the lower addressed word. The maximum positive normal number is the largest finite number representable in the IEEE double format. The minimum positive subnormal number is the smallest positive number representable in IEEE double format. The minimum positive normal number is often referred to as the underflow threshold. (The decimal values for the maximum and minimum normal and subnormal numbers are approximate; they are correct to the number of figures shown.)

A NaN (Not a Number) can be represented by any of the many bit patterns that satisfy the definition of NaN. The hex value of the NaN shown in Table 5 is just one of the many bit patterns that can be used to represent a NaN.

2.2.4 Quadruple Format

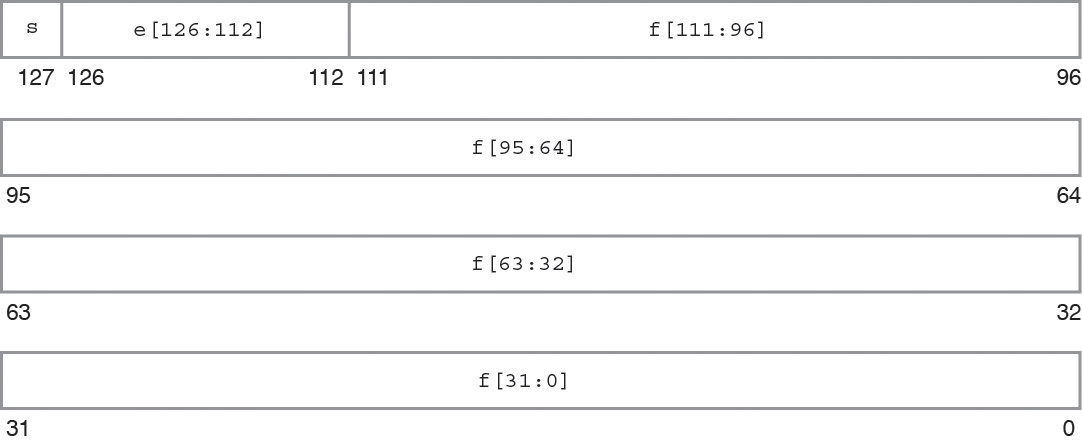

The floating-point environment's quadruple-precision format also conforms to the IEEE definition of double-extended format. This format is not available in the Oracle Developer Studio C/C++ compilers for x86. The quadruple-precision format occupies four 32-bit words and consists of three fields: a 112-bit fraction f; a 15-bit biased exponent e; and a 1-bit sign s. These are stored contiguously as shown in the following figure.

The highest addressed 32-bit word contains the least significant 32 bits of the fraction, denoted f[31:0]. The next two 32-bit words contain f[63:32] and f[95:64], respectively. Bits 0:15 of the next word contain the 16 most significant bits of the fraction, f[111:96], with bit 0 being the least significant of these 16 bits, and bit 15 being the most significant bit of the entire fraction. Bits 16:30 contain the 15-bit biased exponent, e, with bit 16 being the least significant bit of the biased exponent and bit 30 being the most significant; and bit 31 contains the sign bit, s.

The following figure numbers the bits as though the four contiguous 32-bit words were one 128-bit word in which bits 0:111 store the fraction, f; bits 112:126 store the 15-bit biased exponent, e; and bit 127 stores the sign bit, s.

Figure 3 Quadruple Format

The values of the bit patterns in the three fields f, e, and s, determine the value represented by the overall bit pattern.

Table 6 shows the correspondence between the values of the three constituent fields and the value represented by the bit pattern in quadruple-precision format. u means don't care, because the value of the indicated field is irrelevant to the determination of values for the particular bit patterns.
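
Because few languages expose a native 128-bit floating-point type, the quadruple layout is easiest to explore with integer and rational arithmetic. The Python sketch below is an illustration under stated assumptions: the four words are given lowest address first (the word with the sign and exponent comes first, as in the layout described above), the value rules are the usual ones for this format (bias 16383, fixed exponent of -16382 when e = 0), and the name `decode_quad` is hypothetical.

```python
from fractions import Fraction

BIAS = 16383  # exponent bias assumed for the 15-bit exponent field


def decode_quad(words):
    """Decode four 32-bit words, lowest addressed word first.

    Returns (s, e, f, value); value is an exact Fraction for finite
    patterns, or one of the strings "+INF", "-INF", "NaN".
    """
    bits = 0
    for word in words:
        bits = (bits << 32) | (word & 0xFFFFFFFF)
    s = bits >> 127                   # bit 127: sign
    e = (bits >> 112) & 0x7FFF        # bits 112:126: biased exponent
    f = bits & ((1 << 112) - 1)       # bits 0:111: fraction
    sign = -1 if s else 1

    if e == 0x7FFF:
        value = "NaN" if f else ("-INF" if s else "+INF")
    elif e == 0:
        # Zero or subnormal: significand is 0.f
        value = sign * Fraction(f, 2**112) * Fraction(1, 2**(BIAS - 1))
    else:
        # Normal: significand is 1.f
        value = sign * (1 + Fraction(f, 2**112)) * Fraction(2) ** (e - BIAS)
    return s, e, f, value


print(decode_quad([0x3FFF0000, 0, 0, 0])[3])   # the pattern for 1
print(decode_quad([0x3FFF8000, 0, 0, 0])[3])   # leading fraction bit set
```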

Examples of important bit patterns in the quadruple-precision double-extended storage format are shown in Table 7. The bit patterns in the second column appear as four 8-digit hexadecimal numbers. The left-most number is the value of the lowest addressed 32-bit word, and the right-most number is the value of the highest addressed 32-bit word. The maximum positive normal number is the largest finite number representable in the quadruple precision format. The minimum positive subnormal number is the smallest positive number representable in the quadruple precision format. The minimum positive normal number is often referred to as the underflow threshold. (The decimal values for the maximum and minimum normal and subnormal numbers are approximate; they are correct to the number of figures shown.)

The hex value of the NaN shown in Table 7 is just one of the many bit patterns that can be used to represent NaNs.

2.2.5 Double-Extended Format (x86)

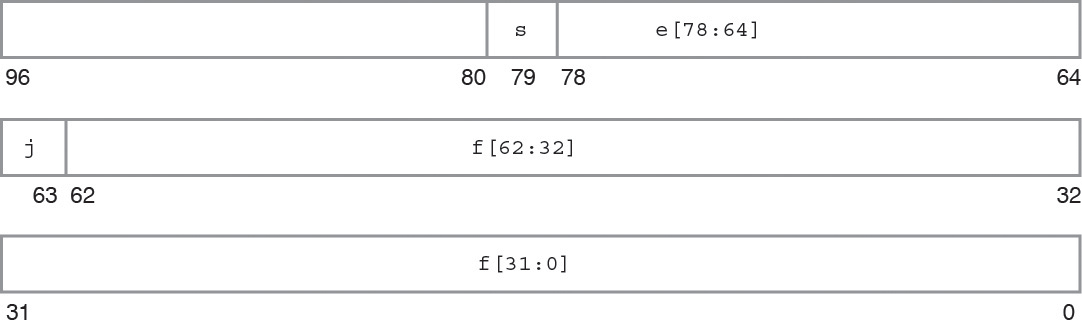

This floating-point environment's double-extended format conforms to the IEEE definition of double-extended formats. It consists of four fields: a 63-bit fraction f; a 1-bit explicit leading significand bit j; a 15-bit biased exponent e; and a 1-bit sign s. This format is not available as a language type for Oracle Developer Studio Fortran or for C/C++ for SPARC.

In the family of x86 architectures, these fields are stored contiguously in ten successively addressed 8-bit bytes. However, the UNIX System V Application Binary Interface Intel 386 Processor Supplement (Intel ABI) requires that double-extended parameters and results occupy three consecutively addressed 32-bit words in the stack, with the most significant 16 bits of the highest addressed word being unused, as shown in the following figure.

The lowest addressed 32-bit word contains the least significant 32 bits of the fraction, f[31:0], with bit 0 being the least significant bit of the entire fraction and bit 31 being the most significant of the 32 least significant fraction bits. In the middle addressed 32-bit word, bits 0:30 contain the 31 most significant bits of the fraction, f[62:32], with bit 0 being the least significant of these 31 most significant fraction bits, and bit 30 being the most significant bit of the entire fraction; bit 31 of this middle addressed 32-bit word contains the explicit leading significand bit, j.

In the highest addressed 32-bit word, bits 0:14 contain the 15-bit biased exponent, e, with bit 0 being the least significant bit of the biased exponent and bit 14 being the most significant; and bit 15 contains the sign bit, s. Although the highest order 16 bits of this highest addressed 32-bit word are unused by the family of x86 architectures, their presence is essential for conformity to the Intel ABI, as indicated above.

The following figure numbers the bits as though the three contiguous 32-bit words were one 96-bit word in which bits 0:62 store the 63-bit fraction, f; bit 63 stores the explicit leading significand bit, j; bits 64:78 store the 15-bit biased exponent, e; and bit 79 stores the sign bit, s.

Figure 4 Double-Extended Format (x86)

The values of the bit patterns in the four fields f, j, e and s, determine the value represented by the overall bit pattern.

Table 8 shows the correspondence between the hex representations of the four constituent fields and the values represented by the bit patterns. u means the value of the indicated field is irrelevant to the determination of value for the particular bit patterns.

Notice that bit patterns in double-extended format do not have an implicit leading significand bit. The leading significand bit is given explicitly as a separate field, j, in the double-extended format. However, when e ≠ 0, any bit pattern with j = 0 is unsupported in the sense that using such a bit pattern as an operand in floating-point operations provokes an invalid operation exception.

The union of the disjoint fields j and f in the double extended format is called the significand. When e < 32767 and j = 1, or when e = 0 and j = 0, the significand is formed by inserting the binary radix point between the leading significand bit, j, and the fraction's most significant bit.

In the x86 double-extended format, a bit pattern whose leading significand bit j is 0 and whose biased exponent field e is also 0 represents a subnormal number, whereas a bit pattern whose leading significand bit j is 1 and whose biased exponent field e is nonzero represents a normal number. Because the leading significand bit is represented explicitly rather than being inferred from the value of the exponent, this format also admits bit patterns whose biased exponent is 0, like the subnormal numbers, but whose leading significand bit is 1. Each such bit pattern actually represents the same value as the corresponding bit pattern whose biased exponent field is 1, i.e., a normal number, so these bit patterns are called pseudo-denormals. Subnormal numbers were called denormalized numbers in IEEE Standard 754-1985. Pseudo-denormals are merely an artifact of the x86 double-extended format's encoding; they are implicitly converted to the corresponding normal numbers when they appear as operands, and they are never generated as results.
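
The classification rules for the explicit leading bit can be summarized in a short Python sketch. It is illustrative only: the function name `classify_extended` and its two-argument interface (a 16-bit sign/exponent word and a 64-bit word holding j and f) are hypothetical, and the exponent bias of 16383 is the standard value for a 15-bit exponent field.

```python
from fractions import Fraction


def classify_extended(sign_exp, significand):
    """Classify an x86 double-extended bit pattern.

    sign_exp:    16-bit word holding s (bit 15) and e (bits 0:14)
    significand: 64-bit integer holding j (bit 63) and f (bits 0:62)
    Returns (kind, value); value is an exact Fraction for finite,
    supported patterns and None otherwise.
    """
    s = (sign_exp >> 15) & 0x1
    e = sign_exp & 0x7FFF
    j = (significand >> 63) & 0x1
    f = significand & ((1 << 63) - 1)

    if e != 0 and j == 0:
        return "unsupported", None          # j = 0 with a nonzero exponent
    if e == 0x7FFF:
        return ("NaN" if f else "infinity"), None
    if e == 0:
        kind = "pseudo-denormal" if j else ("subnormal" if f else "zero")
        exponent = -16382                   # same scale as e = 1
    else:
        kind = "normal"
        exponent = e - 16383
    # j.f: radix point between j and the fraction's most significant bit
    magnitude = Fraction(significand, 2**63) * Fraction(2) ** exponent
    return kind, (-magnitude if s else magnitude)


print(classify_extended(0x3FFF, 1 << 63))   # normal, value 1
print(classify_extended(0x0000, 1 << 63)[0])
print(classify_extended(0x3FFF, 0)[0])
```

A pseudo-denormal (e = 0, j = 1) decodes to the same value as the pattern with the same significand and e = 1, which matches the description above.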

|

Examples of important bit patterns in the double-extended storage format appear in the preceding table. The bit patterns in the second column appear as one 4-digit hexadecimal number, which is the value of the 16 least significant bits of the highest addressed 32-bit word (recall that the most significant 16 bits of this highest addressed 32-bit word are unused, so their value is not shown), followed by two 8-digit hexadecimal numbers, of which the left one is the value of the middle addressed 32-bit word, and the right one is the value of the lowest addressed 32-bit word. The maximum positive normal number is the largest finite number representable in the x86 double-extended format. The minimum positive subnormal number is the smallest positive number representable in the double-extended format. The minimum positive normal number is often referred to as the underflow threshold. The decimal values for the maximum and minimum normal and subnormal numbers are approximate; they are correct to the number of figures shown.

A NaN (Not a Number) can be represented by any of the many bit patterns that satisfy the definition of NaN. The hex values of the NaNs shown in the preceding table illustrate that the leading (most significant) bit of the fraction field determines whether a NaN is quiet (leading fraction bit = 1) or signaling (leading fraction bit = 0).
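
The quiet/signaling distinction depends on a single bit, as the following Python sketch shows. The function name `nan_kind` is hypothetical, and the two example patterns are chosen here for illustration rather than taken from the table.

```python
def nan_kind(sign_exp, significand):
    """Return "quiet", "signaling", or None for a double-extended pattern.

    sign_exp:    16-bit word holding s (bit 15) and e (bits 0:14)
    significand: 64-bit integer holding j (bit 63) and f (bits 0:62)
    """
    e = sign_exp & 0x7FFF
    j = (significand >> 63) & 0x1
    f = significand & ((1 << 63) - 1)
    if e != 0x7FFF or j != 1 or f == 0:
        return None                      # not a supported NaN pattern
    leading_fraction_bit = (f >> 62) & 0x1
    return "quiet" if leading_fraction_bit else "signaling"


print(nan_kind(0x7FFF, 0xC000000000000000))   # leading fraction bit = 1
print(nan_kind(0x7FFF, 0x8000000000000001))   # leading fraction bit = 0
```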

2.2.6 Ranges and Precisions in Decimal Representation

This section covers the notions of range and precision for a given storage format. It includes the ranges and precisions corresponding to the IEEE single, double, and quadruple formats and to the implementations of IEEE double-extended format on x86 architectures. For concreteness, the notions of range and precision are defined here with reference to the IEEE single format.

The IEEE standard specifies that 32 bits be used to represent a floating-point number in single format. Because there are only finitely many combinations of 32 zeroes and ones, only finitely many numbers can be represented by 32 bits.

It is natural to ask what are the decimal representations of the largest and smallest positive numbers that can be represented in this particular format.

Using the concept of range, the question can be rephrased: what is the range, in decimal notation, of the numbers that can be represented in the IEEE single format?

Taking into account the precise definition of IEEE single format, you can prove that the range of floating-point numbers that can be represented in IEEE single format, if restricted to positive normalized numbers, is as follows:

$1.175\ldots \times 10^{-38}$ to $3.402\ldots \times 10^{+38}$
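
These two bounds follow directly from the field widths. A minimal Python sketch, assuming the standard single-format parameters (smallest normal exponent -126, largest exponent +127, 23 fraction bits), computes them exactly:

```python
from fractions import Fraction

# Smallest positive normal number: e = 1, f = 0, so 1.0 x 2^-126
min_normal = Fraction(1, 2**126)

# Largest finite number: e = 254, f all ones, so (2 - 2^-23) x 2^127
max_normal = (2 - Fraction(1, 2**23)) * 2**127

print(f"smallest positive normal: {float(min_normal):.8e}")
print(f"largest finite:           {float(max_normal):.8e}")
```

Both bounds are exactly representable in double precision, so converting the exact fractions to Python floats for printing introduces no additional error.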

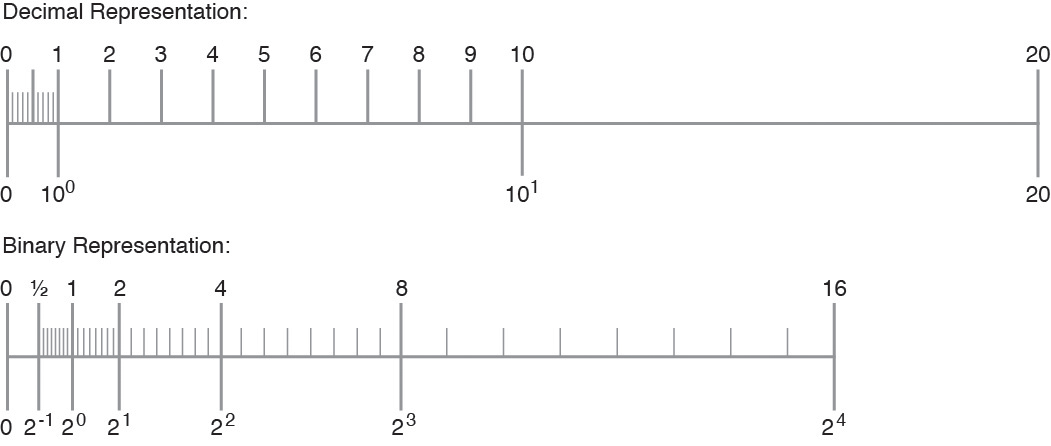

A second question refers to the precision of the numbers represented in a given format. These notions are explained by looking at some pictures and examples.

The IEEE standard for binary floating-point arithmetic specifies the set of numerical values representable in the single format. Remember that this set of numerical values is described as a set of binary floating-point numbers. The fraction of the IEEE single format has 23 bits, which together with the implicit leading bit yield 24 digits (bits) of (binary) precision.

One obtains a different set of numerical values by marking the numbers (representable by q decimal digits in the significand) on the number line:

$x = (x_1.x_2 x_3 \ldots x_q) \times 10^{n}$

The following figure exemplifies this situation:

Figure 5 Comparison of a Set of Numbers Defined by Decimal and Binary Representation

Notice that the two sets are different. Therefore, estimating the number of significant decimal digits corresponding to 24 significant binary digits requires reformulating the problem.

Reformulate the problem in terms of converting floating-point numbers between binary representations (the internal format used by the computer) and the decimal format (the format users are usually interested in). In fact, you might want to convert from decimal to binary and back to decimal, as well as convert from binary to decimal and back to binary.

It is important to notice that because the sets of numbers are different, conversions are in general inexact. If done correctly, converting a number from one set to a number in the other set results in choosing one of the two neighboring numbers from the second set (which one specifically is a question related to rounding).

Consider some examples. Suppose you are trying to represent a number with the following decimal representation in IEEE single format:

$x = x_1.x_2 x_3 \ldots \times 10^{n}$

Because there are only finitely many real numbers that can be represented exactly in IEEE single format, and not all numbers of the above form are among them, in general it will be impossible to represent such numbers exactly. For example, let

y = 838861.2, z = 1.3

and run the following Fortran program:

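```fortran
!     Store two decimal constants in single precision (REAL) and print
!     them with 12 significant digits to expose the rounding.
      REAL Y, Z
      Y = 838861.2
      Z = 1.3
      WRITE(*,40) Y
 40   FORMAT("y: ",1PE18.11)
      WRITE(*,50) Z
 50   FORMAT("z: ",1PE18.11)
      END
```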

The output from this program should be similar to the following:

```
y: 8.38861187500E+05
z: 1.29999995232E+00
```

The difference between the value $8.388612 \times 10^{5}$ assigned to y and the value printed out is 0.0125 (that is, $0.000000125 \times 10^{5}$), which is seven decimal orders of magnitude smaller than y. The accuracy of representing y in IEEE single format is therefore about 6 to 7 significant digits; in other words, y has about six significant digits when represented in IEEE single format.

Similarly, the difference between the value 1.3 assigned to z and the value printed out is 0.00000004768, which is eight decimal orders of magnitude smaller than z. The accuracy of representing z in IEEE single format is therefore about 7 to 8 significant digits; in other words, z has about seven significant digits when represented in IEEE single format.
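
The same rounding can be reproduced outside Fortran. The Python sketch below is an approximation of the experiment, not a replacement for it: the helper `to_single` is hypothetical, and each decimal literal is first converted to double and only then rounded to single, which for these two values gives the same stored single-format numbers.

```python
import struct


def to_single(x):
    """Round a Python float (IEEE double) to the nearest IEEE single."""
    return struct.unpack("f", struct.pack("f", x))[0]


for name, decimal_value in (("y", 838861.2), ("z", 1.3)):
    stored = to_single(decimal_value)
    relative_error = abs(decimal_value - stored) / decimal_value
    print(f"{name}: {stored:.11E}  relative error {relative_error:.2e}")
```

The relative error in both cases is a few parts in 10^8, which is what 24 bits of binary precision allow.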

Assume you convert a decimal floating point number a to its IEEE single format binary representation b, and then translate b back to a decimal number c; how many orders of magnitude are between a and a - c?

Rephrase the question:

What is the number of significant decimal digits of a in the IEEE single format representation, or how many decimal digits are to be trusted as accurate when one represents a in IEEE single format?

The number of significant decimal digits is always between 6 and 9, that is, at least 6 digits, but not more than 9 digits are accurate (with the exception of cases when the conversions are exact, when infinitely many digits could be accurate).

Conversely, if you convert a binary number in IEEE single format to a decimal number, and then convert it back to binary, generally, you need to use at least 9 decimal digits to ensure that after these two conversions you obtain the number you started from.
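
The need for 9 digits can be checked on a concrete value. The Python sketch below uses hypothetical helper names and emulates single format by rounding Python doubles with `struct`. It takes the single-format number immediately above 1000.0, where neighboring single-format numbers are 2^-14 apart (about 0.000061) while 8-digit decimals are 0.0001 apart.

```python
import struct


def to_single(x):
    """Round a Python float (IEEE double) to the nearest IEEE single."""
    return struct.unpack("f", struct.pack("f", x))[0]


def survives_round_trip(x, digits):
    """True if single x -> decimal string with `digits` significant
    digits -> single gives back x."""
    text = f"{x:.{digits}g}"
    return to_single(float(text)) == x


x = to_single(1000.0 + 2.0**-14)   # the single just above 1000.0
for digits in (8, 9):
    print(digits, f"{x:.{digits}g}", survives_round_trip(x, digits))
```

With 8 digits the decimal string is 1000.0001, which is closer to the next single-format number up than to x, so the round trip fails; with 9 digits the string is 1000.00006 and the original number is recovered.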

The complete picture is given in Table 10:

2.2.7 Base Conversion in the Oracle Solaris Environment

Base conversion refers to the transformation of a number represented in one base to a number represented in another base. I/O routines such as printf and scanf in C and read, write, and print in Fortran involve base conversion between numbers represented in bases 2 and 10:

- Base conversion from base 10 to base 2 occurs when reading in a number in conventional decimal notation and storing it in internal binary format.
- Base conversion from base 2 to base 10 occurs when printing an internal binary value as an ASCII string of decimal digits.

In the Oracle Solaris environment, the fundamental routines for base conversion in all languages are contained in the standard C library, libc. These routines use table-driven algorithms that yield correctly rounded conversion between any input and output formats subject to modest restrictions on the lengths of the strings of decimal digits involved. In addition to their accuracy, table-driven algorithms reduce the worst-case times for correctly rounded base conversion.

The 1985 IEEE standard requires correct rounding for typical numbers whose magnitudes range from $10^{-44}$ to $10^{+44}$ but permits slightly incorrect rounding for larger exponents. See section 5.6 of IEEE Standard 754-1985. The libc table-driven algorithms round correctly throughout the entire range of single, double, and double extended formats, as required by the revised standard, IEEE 754-2008.

In C, conversions between decimal strings and binary floating-point values are always rounded correctly in accordance with IEEE 754: the converted result is the number representable in the result's format that is nearest to the original value in the direction specified by the current rounding mode. When the rounding mode is round-to-nearest and the original value lies exactly halfway between two representable numbers in the result format, the converted result is the one whose least significant digit is even. These rules apply to conversions of constants in source code performed by the compiler as well as to conversions of data performed by the program using standard library routines.
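
The halfway rule can be illustrated with decimal strings that fall exactly between two adjacent doubles. The sketch below uses Python's own string-to-float conversion (which CPython performs with correct rounding in round-to-nearest mode on IEEE 754 platforms), not the libc routines described here, so it demonstrates the rule rather than this particular implementation. The function name `decimal_to_double_int` is hypothetical.

```python
def decimal_to_double_int(text):
    """Convert a decimal string to the nearest double, returned as an int."""
    return int(float(text))


# Between 2**53 and 2**54 adjacent doubles are 2 apart, so every odd
# integer in that interval lies exactly halfway between two doubles.
base = 2**53  # 9007199254740992

for offset in (1, 3):
    text = str(base + offset)
    print(text, "->", decimal_to_double_int(text))
```

The tie at 2^53 + 1 is resolved downward to 2^53 and the tie at 2^53 + 3 upward to 2^53 + 4, because in each case that neighbor is the one whose significand has an even least significant bit.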

In Fortran, conversions between decimal strings and binary floating-point values are rounded correctly following the same rules as C by default. For I/O conversions, the “round-ties-to-even” rule in round-to-nearest mode can be overridden, either by using the ROUNDING= specifier in the program or by compiling with the -iorounding flag. See the Oracle Developer Studio 12.6: Fortran User’s Guide and the f95(1) man page for more information.

See References for references on base conversion, particularly Coonen's thesis and Sterbenz's book.