5 Using the In-Memory Analyst

The in-memory analyst feature of Oracle Spatial and Graph supports a set of analytical functions.

This chapter provides examples using the in-memory analyst (also referred to as Property Graph In-Memory Analytics, and often abbreviated as PGX in the Javadoc, command line, path descriptions, error messages, and examples). It contains the following major topics:

5.1 Reading a Graph into Memory

This topic provides an example of reading a graph interactively into memory using the shell interface. These are the major steps:

5.1.1 Connecting to an In-Memory Analyst Server Instance

To start the in-memory analyst shell:

If the in-memory analyst software is installed correctly, you will see an engine-running log message and the in-memory analyst shell prompt (pgx>):

The variables instance, session, and analyst are ready to use.

In the preceding example in this topic, the shell started a local instance because the pgx command did not specify a remote URL.

5.1.2 Using the Shell Help

The in-memory analyst shell provides a help system, which you access using the :help command.

5.1.3 Providing Graph Metadata in a Configuration File

An example graph is included in the installation directory, under /opt/oracle/oracle-spatial-graph/property_graph/examples/pgx/graphs/. It uses a configuration file that describes how the in-memory analyst reads the graph.

pgx> cat /opt/oracle/oracle-spatial-graph/property_graph/examples/pgx/graphs/sample.adj.json

===> {

"uri": "sample.adj",

"format": "adj_list",

"node_props": [{

"name": "prop",

"type": "integer"

}],

"edge_props": [{

"name": "cost",

"type": "double"

}],

"separator": " "

}

The uri field provides the location of the graph data. This path resolves relative to the parent directory of the configuration file. When the in-memory analyst loads the graph, it searches the examples/pgx/graphs directory for a file named sample.adj.

The other fields indicate that the graph data is provided in adjacency list format, and consists of one node property of type integer and one edge property of type double.

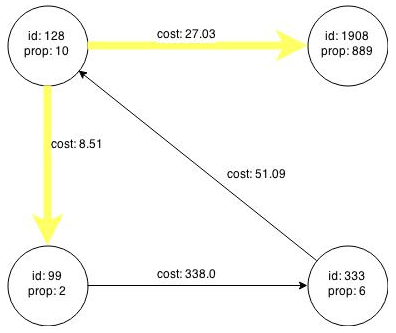

This is the graph data in adjacency list format:

pgx> cat /opt/oracle/oracle-spatial-graph/property_graph/examples/pgx/graphs/sample.adj

===> 128 10 1908 27.03 99 8.51

99 2 333 338.0

1908 889

333 6 128 51.09
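The layout of the data file can be illustrated with a small, plain-Java sketch. It is not the in-memory analyst's loader and uses no PGX classes; the class and method names (AdjListSketch, parse) are hypothetical. It assumes exactly what sample.adj.json declares: one integer vertex property after the source vertex ID, then pairs of destination vertex ID and one double edge property.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.regex.Pattern;

public class AdjListSketch {

    /** One directed edge with its single double property ("cost" in sample.adj.json). */
    public static final class Edge {
        public final int src;
        public final int dst;
        public final double cost;

        public Edge(int src, int dst, double cost) {
            this.src = src;
            this.dst = dst;
            this.cost = cost;
        }
    }

    /** Parsed result: vertex ID -> integer property ("prop"), plus the edge list. */
    public static final class Graph {
        public final Map<Integer, Integer> vertexProp = new LinkedHashMap<>();
        public final List<Edge> edges = new ArrayList<>();
    }

    /** Parses lines of the form: srcId prop (dstId cost)*. */
    public static Graph parse(List<String> lines, String separator) {
        Graph g = new Graph();
        for (String line : lines) {
            if (line.trim().isEmpty()) {
                continue; // skip blank lines
            }
            String[] t = line.trim().split(Pattern.quote(separator));
            int src = Integer.parseInt(t[0]);
            g.vertexProp.put(src, Integer.parseInt(t[1]));
            // Remaining tokens come in (destination ID, edge property) pairs.
            for (int i = 2; i + 1 < t.length; i += 2) {
                g.edges.add(new Edge(src, Integer.parseInt(t[i]), Double.parseDouble(t[i + 1])));
            }
        }
        return g;
    }

    public static void main(String[] args) {
        List<String> sample = Arrays.asList(
                "128 10 1908 27.03 99 8.51",
                "99 2 333 338.0",
                "1908 889",
                "333 6 128 51.09");
        Graph g = parse(sample, " ");
        System.out.println(g.vertexProp.size() + " vertices, " + g.edges.size() + " edges");
        // prints "4 vertices, 4 edges"
    }
}
```

Reading the file this way shows why vertex 1908 appears on a line of its own: it has a property value but no outgoing edges.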

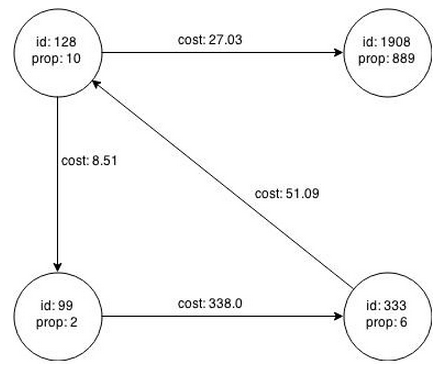

Figure 5-1 shows a property graph created from the data:

Figure 5-1 Property Graph Rendered by sample.adj Data


5.1.4 Reading Graph Data into Memory

To read a graph into memory, you must pass the following information:

- The path to the graph configuration file that specifies the graph metadata

- A unique alphanumeric name that you can use to reference the graph

An error results if you previously loaded a different graph with the same name.

Example: Using the Shell to Read a Graph

pgx> graph = session.readGraphWithProperties("/opt/oracle/oracle-spatial-graph/property_graph/examples/pgx/graphs/sample.adj.json", "sample");

==> PGX Graph named sample bound to PGX session pgxShell ...

pgx> graph.getNumVertices()

==> 4

Example: Using Java to Read a Graph

import oracle.pgx.api.*;

PgxGraph graph = session.readGraphWithProperties("/opt/oracle/oracle-spatial-graph/property_graph/examples/pgx/graphs/sample.adj.json");

The following topics contain additional examples of reading a property graph into memory:

5.1.4.1 Read a Graph Stored in Apache HBase into Memory

To read a property graph stored in Apache HBase, you can create a JSON based configuration file as follows. Note that the quorum, client port, graph name, and other information must be customized for your own setup.

% cat /tmp/my_graph_hbase.json

{

"format": "pg",

"db_engine": "hbase",

"zk_quorum": "scaj31bda07,scaj31bda08,scaj31bda09",

"zk_client_port": 2181,

"name": "connections",

"node_props": [{

"name": "country",

"type": "string"

}],

"load_edge_label": true,

"edge_props": [{

"name": "label",

"type": "string"

}, {

"name": "weight",

"type": "float"

}]

}


With the following command, the property graph connections will be read into memory:

pgx> session.readGraphWithProperties("/tmp/my_graph_hbase.json", "connections")

==> PGX Graph named connections ...

Note that when dealing with a large graph, it may become necessary to tune parameters like number of IO workers, number of workers for analysis, task timeout, and others. You can find and change those parameters in the following directory (assume the installation home is /opt/oracle/oracle-spatial-graph).

/opt/oracle/oracle-spatial-graph/property_graph/pgx/conf

5.1.4.2 Read a Graph Stored in Oracle NoSQL Database into Memory

To read a property graph stored in Oracle NoSQL Database, you can create a JSON based configuration file as follows. Note that the hosts, store name, graph name, and other information must be customized for your own setup.

% cat /tmp/my_graph_nosql.json

{

"format": "pg",

"db_engine": "nosql",

"hosts": [

"zathras01:5000"

],

"store_name": "kvstore",

"name": "connections",

"node_props": [{

"name": "country",

"type": "string"

}],

"load_edge_label": true,

"edge_props": [{

"name": "label",

"type": "string"

}, {

"name": "weight",

"type": "float"

}]

}

Then, read the graph into memory using the configuration file. The following example snippet reads the graph into memory and counts the number of triangles.

pgx> g = session.readGraphWithProperties("/tmp/my_graph_nosql.json", "connections")

pgx> analyst.countTriangles(g, false)

==> 8

5.1.4.3 Read a Graph Stored in the Local File System into Memory

The following command uses the configuration file from "Providing Graph Metadata in a Configuration File" and the name my-graph:

pgx> g = session.readGraphWithProperties("/opt/oracle/oracle-spatial-graph/property_graph/examples/pgx/graphs/sample.adj.json", "my-graph")

5.2 Reading Custom Graph Data

You can read your own custom graph data. This example creates a graph, alters it, and shows how to read it properly. This graph uses the adjacency list format, but the in-memory analyst supports several graph formats.

The main steps are:

5.2.1 Creating a Simple Graph File

This example creates a small, simple graph in adjacency list format with no vertex or edge properties. Each line contains the vertex (node) ID, followed by the vertex IDs to which its outgoing edges point:

1 2

2 3 4

3 4

4 2

In this list, a single space separates the individual tokens. The in-memory analyst supports other separators, which you can specify in the graph configuration file.

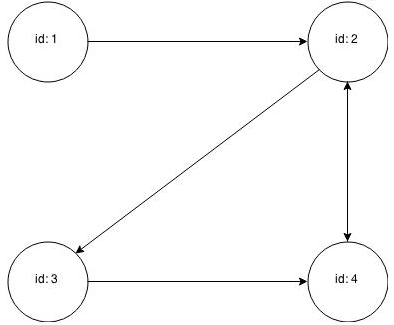

Figure 5-2 shows the data rendered as a property graph with 4 vertices and 5 edges. The edge from vertex 2 to vertex 4 points in both directions.

Reading a graph into the in-memory analyst requires a graph configuration. You can provide the graph configuration using either of these methods:

- Write the configuration settings in JSON format into a file.

- Use a Java GraphConfigBuilder object.

This example shows both methods.

JSON Configuration

{

"uri": "graph.adj",

"format":"adj_list",

"separator":" "

}

Java Configuration

import oracle.pgx.config.FileGraphConfig;

import oracle.pgx.config.Format;

import oracle.pgx.config.GraphConfigBuilder;

FileGraphConfig config = GraphConfigBuilder

.forFileFormat(Format.ADJ_LIST)

.setUri("graph.adj")

.setSeparator(" ")

.build();

5.2.2 Adding a Vertex Property

The graph in "Creating a Simple Graph File" consists of vertices and edges, without vertex or edge properties. Vertex properties are positioned directly after the source vertex ID in each line. The graph data looks like this after a double vertex (node) property is added to the graph:

1 0.1 2

2 2.0 3 4

3 0.3 4

4 4.56789 2

Note:

The in-memory analyst supports only homogeneous graphs, in which all vertices have the same number and type of properties.

For the in-memory analyst to read the modified data file, you must add a vertex (node) property in the configuration file or the builder code. The following examples provide a descriptive name for the property and set the type to double.

JSON Configuration

{

"uri": "graph.adj",

"format":"adj_list",

"separator":" ",

"node_props":[{

"name":"double-prop",

"type":"double"

}]

}

Java Configuration

import oracle.pgx.common.types.PropertyType;

import oracle.pgx.config.FileGraphConfig;

import oracle.pgx.config.Format;

import oracle.pgx.config.GraphConfigBuilder;

FileGraphConfig config = GraphConfigBuilder.forFileFormat(Format.ADJ_LIST)

.setUri("graph.adj")

.setSeparator(" ")

.addNodeProperty("double-prop", PropertyType.DOUBLE)

.build();

5.2.3 Using Strings as Vertex Identifiers

The previous examples used integer vertex (node) IDs. The in-memory analyst uses integer vertex IDs by default, but you can define a graph to use string vertex IDs instead.

This data file uses "node 1", "node 2", and so forth instead of just the digit:

"node 1" 0.1 "node 2"

"node 2" 2.0 "node 3" "node 4"

"node 3" 0.3 "node 4"

"node 4" 4.56789 "node 2"

Again, you must modify the graph configuration to match the data file:

JSON Configuration

{

"uri": "graph.adj",

"format":"adj_list",

"separator":" ",

"node_props":[{

"name":"double-prop",

"type":"double"

}],

"node_id_type":"string"

}

Java Configuration

import oracle.pgx.common.types.IdType;

import oracle.pgx.common.types.PropertyType;

import oracle.pgx.config.FileGraphConfig;

import oracle.pgx.config.Format;

import oracle.pgx.config.GraphConfigBuilder;

FileGraphConfig config = GraphConfigBuilder.forFileFormat(Format.ADJ_LIST)

.setUri("graph.adj")

.setSeparator(" ")

.addNodeProperty("double-prop", PropertyType.DOUBLE)

.setNodeIdType(IdType.STRING)

.build();

Note:

String vertex IDs consume much more memory than integer vertex IDs.

Any single or double quotes inside the string must be escaped with a backslash (\).

Newlines (\n) inside strings are not supported.
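The quoting and escaping rules in the note can be illustrated with a plain-Java tokenizer. This is only a sketch, not the in-memory analyst's parser: the class name QuotedTokenizerSketch is hypothetical, and it assumes that double quotes delimit a string token and that a backslash makes the next character literal.

```java
import java.util.ArrayList;
import java.util.List;

public class QuotedTokenizerSketch {

    /**
     * Splits one line of an adjacency list into tokens. Separators inside
     * double quotes do not split, and a backslash escapes the next character.
     */
    public static List<String> tokenize(String line, char separator) {
        List<String> tokens = new ArrayList<>();
        StringBuilder current = new StringBuilder();
        boolean inQuotes = false;
        boolean hasToken = false;
        for (int i = 0; i < line.length(); i++) {
            char c = line.charAt(i);
            if (c == '\\' && i + 1 < line.length()) {
                current.append(line.charAt(++i)); // keep the escaped character literally
                hasToken = true;
            } else if (c == '"') {
                inQuotes = !inQuotes;             // opening or closing quote, not part of the token
                hasToken = true;
            } else if (c == separator && !inQuotes) {
                if (hasToken) {
                    tokens.add(current.toString());
                    current.setLength(0);
                    hasToken = false;
                }
            } else {
                current.append(c);
                hasToken = true;
            }
        }
        if (hasToken) {
            tokens.add(current.toString());
        }
        return tokens;
    }

    public static void main(String[] args) {
        System.out.println(tokenize("\"node 2\" 2.0 \"node 3\" \"node 4\"", ' '));
        // prints "[node 2, 2.0, node 3, node 4]"
    }
}
```

Because the space inside "node 2" is protected by the quotes, the line yields four tokens: the source vertex ID, its double property, and two destination vertex IDs.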

5.2.4 Adding an Edge Property

This example adds an edge property of type string to the graph. The edge properties are positioned after the destination vertex (node) ID.

"node1" 0.1 "node2" "edge_prop_1_2"

"node2" 2.0 "node3" "edge_prop_2_3" "node4" "edge_prop_2_4"

"node3" 0.3 "node4" "edge_prop_3_4"

"node4" 4.56789 "node2" "edge_prop_4_2"

The graph configuration must match the data file:

JSON Configuration

{

"uri": "graph.adj",

"format":"adj_list",

"separator":" ",

"node_props":[{

"name":"double-prop",

"type":"double"

}],

"node_id_type":"string",

"edge_props":[{

"name":"edge-prop",

"type":"string"

}]

}

Java Configuration

import oracle.pgx.common.types.IdType;

import oracle.pgx.common.types.PropertyType;

import oracle.pgx.config.FileGraphConfig;

import oracle.pgx.config.Format;

import oracle.pgx.config.GraphConfigBuilder;

FileGraphConfig config = GraphConfigBuilder.forFileFormat(Format.ADJ_LIST)

.setUri("graph.adj")

.setSeparator(" ")

.addNodeProperty("double-prop", PropertyType.DOUBLE)

.setNodeIdType(IdType.STRING)

.addEdgeProperty("edge-prop", PropertyType.STRING)

.build();

5.3 Storing Graph Data on Disk

After reading a graph into memory using either Java or the shell, you can store it on disk in different formats. You can then use the stored graph data as input to the in-memory analyst at a later time.

Storing graphs over HTTP/REST is currently not supported.

The options include:

5.3.1 Storing the Results of Analysis in a Vertex Property

This example reads a graph into memory and analyzes it using the Pagerank algorithm. This analysis creates a new vertex property to store the PageRank values.

Using the Shell to Run PageRank

pgx> g = session.readGraphWithProperties("/opt/oracle/oracle-spatial-graph/property_graph/examples/pgx/graphs/sample.adj.json", "my-graph")

==> ...

pgx> rank = analyst.pagerank(g, 0.001, 0.85, 100)

Using Java to Run PageRank

PgxGraph g = session.readGraphWithProperties("/opt/oracle/oracle-spatial-graph/property_graph/examples/pgx/graphs/sample.adj.json", "my-graph");

VertexProperty<Integer, Double> rank = session.createAnalyst().pagerank(g, 0.001, 0.85, 100);

5.3.2 Storing a Graph in Edge-List Format on Disk

This example stores the graph, the result of the Pagerank analysis, and all original edge properties as a file in edge-list format on disk.

To store a graph, you must specify:

- The graph format

- A path where the file will be stored

- The properties to be stored. Specify VertexProperty.ALL or EdgeProperty.ALL to store all properties, or VertexProperty.NONE or EdgeProperty.NONE to store no properties. To specify individual properties, pass in the VertexProperty or EdgeProperty objects you want to store.

- A flag that indicates whether to overwrite an existing file with the same name

The following examples store the graph data in /tmp/sample_pagerank.elist, with the /tmp/sample_pagerank.elist.json configuration file. The return value is the graph configuration stored in the file. You can use it to read the graph again.

Using the Shell to Store a Graph

pgx> config = g.store(Format.EDGE_LIST, "/tmp/sample_pagerank.elist", [rank], EdgeProperty.ALL, false)

==> {"node_props":[{"name":"session-12kta9mj-vertex-prop-double-2","type":"double"}],"error_handling":{},"node_id_type":"integer","uri":"/tmp/g.edge","loading":{},"edge_props":[{"name":"cost","type":"double"}],"format":"edge_list"}

Using Java to Store a Graph

import java.util.Collections;

import oracle.pgx.api.*;

import oracle.pgx.config.*;

FileGraphConfig config = g.store(Format.EDGE_LIST, "/tmp/sample_pagerank.elist", Collections.singletonList(rank), EdgeProperty.ALL, false);

5.4 Executing Built-in Algorithms

The in-memory analyst contains a set of built-in algorithms that are available as Java APIs. This section describes the use of the in-memory analyst using Triangle Counting and Pagerank analytics as examples.

5.4.1 About the In-Memory Analyst

The in-memory analyst contains a set of built-in algorithms that are available as Java APIs. The details of the APIs are documented in the Javadoc that is included in the product documentation library. Specifically, see the BuiltinAlgorithms interface Method Summary for a list of the supported in-memory analyst methods.

For example, this is the Pagerank procedure signature:

/**

 * Classic pagerank algorithm. Time complexity: O(E * K) with E = number of edges, K is a given constant (max

 * iterations)

 *

 * @param graph

 *          graph

 * @param e

 *          maximum error for terminating the iteration

 * @param d

 *          damping factor

 * @param max

 *          maximum number of iterations

 * @return Vertex Property holding the result as a double

 */

public <ID extends Comparable<ID>> VertexProperty<ID, Double> pagerank(PgxGraph graph, double e, double d, int max);

5.4.2 Running the Triangle Counting Algorithm

For triangle counting, the sortByDegree boolean parameter of countTriangles() allows you to control whether the graph should first be sorted by degree (true) or not (false). If true, more memory will be used, but the algorithm will run faster; however, if your graph is very large, you might want to turn this optimization off to avoid running out of memory.

Using the Shell to Run Triangle Counting

pgx> analyst.countTriangles(graph, true)

==> 1

Using Java to Run Triangle Counting

import oracle.pgx.api.*;

Analyst analyst = session.createAnalyst();

long triangles = analyst.countTriangles(graph, true);

The algorithm finds one triangle in the sample graph.
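To see where that single triangle comes from, the count can be reproduced with a plain-Java sketch that does not use the PGX API. The class name TriangleCountSketch is hypothetical. The sketch assumes that edge direction is ignored when counting, and it does not model the sortByDegree optimization.

```java
import java.util.HashMap;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

public class TriangleCountSketch {

    /** Counts triangles in the undirected view of the given directed edges. */
    public static long countTriangles(int[][] edges) {
        Map<Integer, Set<Integer>> neighbors = new HashMap<>();
        for (int[] e : edges) {
            if (e[0] == e[1]) {
                continue; // a self-loop cannot be part of a triangle
            }
            neighbors.computeIfAbsent(e[0], k -> new HashSet<>()).add(e[1]);
            neighbors.computeIfAbsent(e[1], k -> new HashSet<>()).add(e[0]);
        }
        long count = 0;
        for (Map.Entry<Integer, Set<Integer>> entry : neighbors.entrySet()) {
            int u = entry.getKey();
            for (int v : entry.getValue()) {
                if (v <= u) {
                    continue; // visit each pair only as u < v
                }
                for (int w : neighbors.get(v)) {
                    // Requiring u < v < w counts every triangle exactly once.
                    if (w > v && entry.getValue().contains(w)) {
                        count++;
                    }
                }
            }
        }
        return count;
    }

    public static void main(String[] args) {
        // Edges of the sample graph (source, destination), taken from sample.adj.
        int[][] sample = { {128, 1908}, {128, 99}, {99, 333}, {333, 128} };
        System.out.println(countTriangles(sample)); // prints 1
    }
}
```

The one triangle is formed by vertices 99, 128, and 333; vertex 1908 has a single neighbor and so cannot take part in any triangle.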

Tip:

When using the in-memory analyst shell, you can increase the amount of log output during execution by changing the logging level. See information about the :loglevel command with :h :loglevel.

5.4.3 Running the Pagerank Algorithm

Pagerank computes a rank value between 0 and 1 for each vertex (node) in the graph and stores the values in a double property. The algorithm therefore creates a vertex property of type double for the output.

In the in-memory analyst, there are two types of vertex and edge properties:

- Persistent Properties: Properties that are loaded with the graph from a data source are fixed, in-memory copies of the data on disk, and are therefore persistent. Persistent properties are read-only, immutable, and shared between sessions.

- Transient Properties: Values can only be written to transient properties, which are session private. You can create transient properties by calling createVertexProperty() and createEdgeProperty() on PgxGraph objects.

This example obtains the top three vertices with the highest Pagerank values. It uses a transient vertex property of type double to hold the computed Pagerank values. The Pagerank algorithm uses a maximum error of 0.001, a damping factor of 0.85, and a maximum number of 100 iterations.

Using the Shell to Run Pagerank

pgx> rank = analyst.pagerank(graph, 0.001, 0.85, 100);

==> ...

pgx> rank.getTopKValues(3)

==> 128=0.1402019732468347

==> 333=0.12002296283541904

==> 99=0.09708583862990475

Using Java to Run Pagerank

import java.util.Map.Entry;

import oracle.pgx.api.*;

Analyst analyst = session.createAnalyst();

VertexProperty<Integer, Double> rank = analyst.pagerank(graph, 0.001, 0.85, 100);

for (Entry<Integer, Double> entry : rank.getTopKValues(3)) {

System.out.println(entry.getKey() + "=" + entry.getValue());

}
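The roles of the three numeric parameters (maximum error, damping factor, and maximum number of iterations) can be illustrated with a plain-Java sketch of classic Pagerank. This is not the in-memory analyst's implementation, and the class name PageRankSketch is hypothetical. It makes two assumptions that the source does not state: the error is measured as the summed absolute change between iterations, and the rank of vertices without outgoing edges is redistributed evenly. The absolute values it produces are therefore not expected to match the shell output shown above.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class PageRankSketch {

    /** Sample graph from sample.adj as vertex ID -> successor vertex IDs. */
    public static Map<Integer, List<Integer>> sampleGraph() {
        Map<Integer, List<Integer>> out = new LinkedHashMap<>();
        out.put(128, Arrays.asList(1908, 99));
        out.put(99, Arrays.asList(333));
        out.put(1908, new ArrayList<Integer>()); // no outgoing edges
        out.put(333, Arrays.asList(128));
        return out;
    }

    /**
     * @param out every vertex mapped to its successors (empty list if none)
     * @param e   maximum error for terminating the iteration (assumed: L1 change)
     * @param d   damping factor
     * @param max maximum number of iterations
     */
    public static Map<Integer, Double> pagerank(Map<Integer, List<Integer>> out, double e, double d, int max) {
        int n = out.size();
        Map<Integer, Double> rank = new LinkedHashMap<>();
        for (Integer v : out.keySet()) {
            rank.put(v, 1.0 / n);
        }
        for (int iter = 0; iter < max; iter++) {
            // Rank held by vertices without outgoing edges is spread evenly (assumption).
            double dangling = 0.0;
            for (Map.Entry<Integer, List<Integer>> en : out.entrySet()) {
                if (en.getValue().isEmpty()) {
                    dangling += rank.get(en.getKey());
                }
            }
            double base = (1.0 - d) / n + d * dangling / n;
            Map<Integer, Double> next = new LinkedHashMap<>();
            for (Integer v : out.keySet()) {
                next.put(v, base);
            }
            // Each vertex passes a damped, equal share of its rank to every successor.
            for (Map.Entry<Integer, List<Integer>> en : out.entrySet()) {
                List<Integer> successors = en.getValue();
                if (successors.isEmpty()) {
                    continue;
                }
                double share = d * rank.get(en.getKey()) / successors.size();
                for (Integer dst : successors) {
                    next.merge(dst, share, Double::sum);
                }
            }
            double diff = 0.0;
            for (Integer v : out.keySet()) {
                diff += Math.abs(next.get(v) - rank.get(v));
            }
            rank = next;
            if (diff < e) {
                break; // converged within the maximum error
            }
        }
        return rank;
    }

    public static void main(String[] args) {
        System.out.println(pagerank(sampleGraph(), 0.001, 0.85, 100));
    }
}
```

Under these assumptions the sketch ranks vertex 128 highest, followed by 333, which is the same ordering as the top of the shell output.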

5.5 Creating Subgraphs

You can create subgraphs based on a graph that has been loaded into memory. You can use filter expressions or create bipartite subgraphs based on a vertex (node) collection that specifies the left set of the bipartite graph.

For information about reading a graph into memory, see Reading Graph Data into Memory.

5.5.1 About Filter Expressions

Filter expressions are expressions that are evaluated for each edge. The expression can define predicates that an edge must fulfil to be contained in the result, in this case a subgraph.

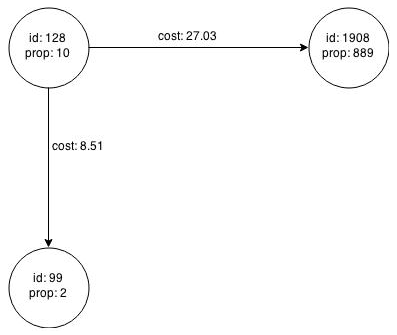

Consider the graph in Figure 5-1, which consists of four vertices (nodes) and four edges. For an edge to match the filter expression src.prop == 10, the source vertex prop property must equal 10. Two edges match that filter expression, as shown in Figure 5-3.

Figure 5-4 shows the graph that results when the filter is applied. The filter excludes the edges associated with vertex 333, and the vertex itself.

Figure 5-4 Graph Created by the Simple Filter


Using filter expressions to select a single vertex or a set of vertices is difficult. For example, selecting only the vertex with the property value 10 is impossible, because the only way to match the vertex is to match an edge where 10 is either the source or destination property value. However, when you match an edge you automatically include the source vertex, destination vertex, and the edge itself in the result.
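The edge-by-edge evaluation described in this topic can be mimicked in plain Java, without the PGX API. The sketch below is only an illustration; the class and method names (EdgeFilterSketch, filter, vertices) are hypothetical. It applies the predicate src.prop == 10 to the edges of the sample graph and then collects the endpoints of the matching edges.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;
import java.util.function.Predicate;

public class EdgeFilterSketch {

    public static final class Edge {
        public final int src;
        public final int dst;
        public final double cost;

        public Edge(int src, int dst, double cost) {
            this.src = src;
            this.dst = dst;
            this.cost = cost;
        }
    }

    /** Vertex ID -> value of the integer property "prop" in the sample graph. */
    public static Map<Integer, Integer> sampleProps() {
        Map<Integer, Integer> prop = new LinkedHashMap<>();
        prop.put(128, 10);
        prop.put(99, 2);
        prop.put(1908, 889);
        prop.put(333, 6);
        return prop;
    }

    public static List<Edge> sampleEdges() {
        List<Edge> edges = new ArrayList<>();
        edges.add(new Edge(128, 1908, 27.03));
        edges.add(new Edge(128, 99, 8.51));
        edges.add(new Edge(99, 333, 338.0));
        edges.add(new Edge(333, 128, 51.09));
        return edges;
    }

    /** Keeps the edges for which the predicate holds. */
    public static List<Edge> filter(List<Edge> edges, Predicate<Edge> predicate) {
        List<Edge> kept = new ArrayList<>();
        for (Edge e : edges) {
            if (predicate.test(e)) {
                kept.add(e);
            }
        }
        return kept;
    }

    /** A matched edge brings both of its endpoints into the subgraph. */
    public static Set<Integer> vertices(List<Edge> edges) {
        Set<Integer> ids = new TreeSet<>();
        for (Edge e : edges) {
            ids.add(e.src);
            ids.add(e.dst);
        }
        return ids;
    }

    public static void main(String[] args) {
        Map<Integer, Integer> prop = sampleProps();
        List<Edge> kept = filter(sampleEdges(), e -> prop.get(e.src) == 10);
        System.out.println(kept.size() + " edges, vertices " + vertices(kept));
        // prints "2 edges, vertices [99, 128, 1908]"
    }
}
```

The result contains vertices 99 and 1908 even though their prop values are not 10, which is why an edge predicate alone cannot select a single vertex.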

5.5.2 Using a Simple Filter to Create a Subgraph

The following examples create the subgraph described in "About Filter Expressions".

Using the Shell to Create a Subgraph

subgraph = graph.filter(new EdgeFilter("src.prop == 10"))

Using Java to Create a Subgraph

import oracle.pgx.api.*;

import oracle.pgx.api.filter.*;

PgxGraph graph = session.readGraphWithProperties(...);

PgxGraph subgraph = graph.filter(new EdgeFilter("src.prop == 10"));

5.5.3 Using a Complex Filter to Create a Subgraph

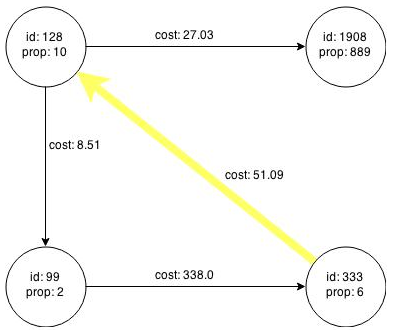

This example uses a slightly more complex filter. It uses the outDegree function, which calculates the number of outgoing edges for an identifier (source src or destination dst). The following filter expression matches all edges with a cost property value greater than 50 and a destination vertex (node) with an outDegree greater than 1.

dst.outDegree() > 1 && edge.cost > 50

One edge in the sample graph matches this filter expression, as shown in Figure 5-5.

Figure 5-5 Edges Matching the outDegree Filter


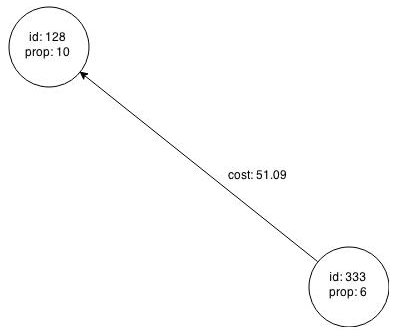

Figure 5-6 shows the graph that results when the filter is applied. The filter excludes the edges associated with vertices 99 and 1908, and so excludes those vertices also.

Figure 5-6 Graph Created by the outDegree Filter
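The effect of the filter dst.outDegree() > 1 && edge.cost > 50 on the sample graph can be checked by hand with a plain-Java sketch. It does not use the PGX filter API, and the class name OutDegreeFilterSketch is hypothetical. Each edge is stored as a row of source vertex, destination vertex, and cost.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

public class OutDegreeFilterSketch {

    /** Sample graph edges as {source, destination, cost}, taken from sample.adj. */
    public static final double[][] EDGES = {
        {128, 1908, 27.03},
        {128, 99, 8.51},
        {99, 333, 338.0},
        {333, 128, 51.09}
    };

    /** Returns the edges whose destination has out-degree > 1 and whose cost is > 50. */
    public static List<double[]> matchingEdges(double[][] edges) {
        // Count outgoing edges per vertex over the whole graph first.
        Map<Integer, Integer> outDegree = new HashMap<>();
        for (double[] e : edges) {
            outDegree.merge((int) e[0], 1, Integer::sum);
        }
        List<double[]> result = new ArrayList<>();
        for (double[] e : edges) {
            int dstOutDegree = outDegree.getOrDefault((int) e[1], 0);
            if (dstOutDegree > 1 && e[2] > 50) {
                result.add(e);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        for (double[] e : matchingEdges(EDGES)) {
            System.out.println((int) e[0] + " -> " + (int) e[1] + " cost " + e[2]);
        }
        // prints "333 -> 128 cost 51.09"
    }
}
```

The edge from 99 to 333 has a cost above 50 but is rejected, because vertex 333 has only one outgoing edge.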


5.5.4 Using a Vertex Set to Create a Bipartite Subgraph

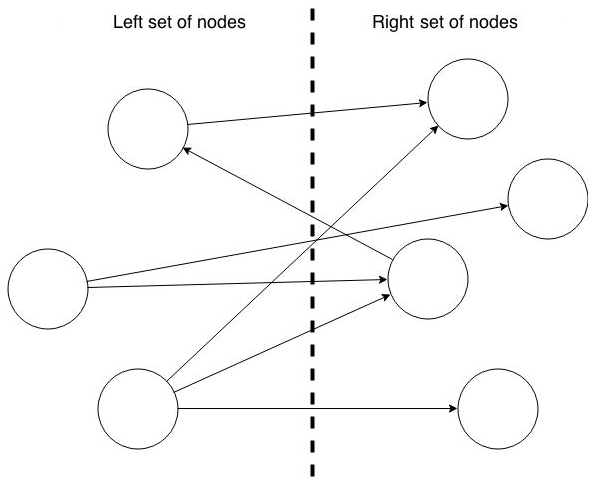

You can create a bipartite subgraph by specifying a set of vertices (nodes), which are used as the left side. A bipartite subgraph has edges only between the left set of vertices and the right set of vertices. There are no edges within those sets, such as between two nodes on the left side. In the in-memory analyst, vertices that are isolated because all incoming and outgoing edges were deleted are not part of the bipartite subgraph.

The following figure shows a bipartite subgraph. No properties are shown.

The following examples create a bipartite subgraph from the simple graph shown in Figure 5-1. They create a vertex collection and fill it with the vertices for the left side.

Using the Shell to Create a Bipartite Subgraph

pgx> s = graph.createVertexSet()

==> ...

pgx> s.addAll([graph.getVertex(333), graph.getVertex(99)])

==> ...

pgx> s.size()

==> 2

pgx> bGraph = graph.bipartiteSubGraphFromLeftSet(s)

==> PGX Bipartite Graph named sample-sub-graph-4

Using Java to Create a Bipartite Subgraph

import oracle.pgx.api.*;

VertexSet<Integer> s = graph.createVertexSet();

s.addAll(graph.getVertex(333), graph.getVertex(99));

BipartiteGraph bGraph = graph.bipartiteSubGraphFromLeftSet(s);

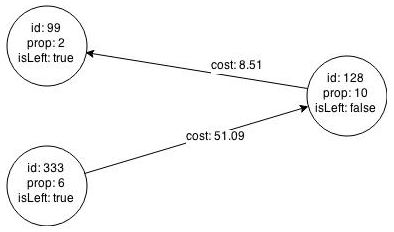

When you create a subgraph, the in-memory analyst automatically creates a Boolean vertex (node) property that indicates whether the vertex is on the left side. You can specify a unique name for the property.

The resulting bipartite subgraph looks like this:

Vertex 1908 is excluded from the bipartite subgraph. The only edge that connected that vertex extended from 128 to 1908. The edge was removed, because it violated the bipartite properties of the subgraph. Vertex 1908 had no other edges, and so was removed also.
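The rule that removes vertex 1908 can be expressed as a short plain-Java sketch, independent of the PGX API. The class name BipartiteSketch is hypothetical. It assumes the behavior described above: an edge survives only if exactly one of its endpoints is in the left set, and a vertex survives only if it still has at least one edge.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Set;
import java.util.TreeSet;

public class BipartiteSketch {

    /** Keeps the edges that connect the left set with the rest of the graph. */
    public static List<int[]> bipartiteEdges(int[][] edges, Set<Integer> left) {
        List<int[]> kept = new ArrayList<>();
        for (int[] e : edges) {
            boolean srcLeft = left.contains(e[0]);
            boolean dstLeft = left.contains(e[1]);
            if (srcLeft != dstLeft) { // exactly one endpoint is on the left side
                kept.add(e);
            }
        }
        return kept;
    }

    /** Vertices that still have at least one edge; isolated vertices are dropped. */
    public static Set<Integer> vertices(List<int[]> edges) {
        Set<Integer> ids = new TreeSet<>();
        for (int[] e : edges) {
            ids.add(e[0]);
            ids.add(e[1]);
        }
        return ids;
    }

    public static void main(String[] args) {
        int[][] sample = { {128, 1908}, {128, 99}, {99, 333}, {333, 128} };
        Set<Integer> left = new HashSet<>(Arrays.asList(333, 99));
        List<int[]> kept = bipartiteEdges(sample, left);
        System.out.println(kept.size() + " edges, vertices " + vertices(kept));
        // prints "2 edges, vertices [99, 128, 333]"
    }
}
```

The edge from 99 to 333 is dropped because both endpoints are on the left side, and the edge from 128 to 1908 is dropped because neither is.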

5.6 Deploying to Jetty

You can deploy the in-memory analyst to Eclipse Jetty, Apache Tomcat, or Oracle WebLogic. This example shows how to deploy the in-memory analyst as a web application with Eclipse Jetty.

- Copy the in-memory analyst web application archive (WAR) file into the Jetty webapps directory:

cd $PGX_HOME

cp $PGX_HOME/webapp/pgx-webapp-1.0.0-for-cdh5.2.1.war $JETTY_HOME/webapps/pgx.war

- Set up a security realm within Jetty that specifies where it can find the user names and passwords. To add the most basic security realm, which reads the credentials from a file, add this snippet to $JETTY_HOME/etc/jetty.xml:

<Call name="addBean">

<Arg>

<New class="org.eclipse.jetty.security.HashLoginService">

<Set name="name">PGX-Realm</Set>

<Set name="config">

etc/realm.properties

</Set>

<Set name="refreshInterval">0</Set>

</New>

</Arg>

</Call>

This snippet instructs Jetty to use the simplest, in-memory login service it supports, the HashLoginService. This service uses a configuration file that stores the user names, passwords, and roles.

- Add the users to $JETTY_HOME/etc/realm.properties in the following format:

username: password, role

For example, this line adds user SCOTT, with password TIGER and the USER role.

scott: tiger, USER

- Ensure that port 8080 is not already in use, and then start Jetty:

cd $JETTY_HOME

java -jar start.jar

- Verify that Jetty is working, using the appropriate credentials for your installation:

cd $PGX_HOME

./bin/pgx --base_url http://scott:tiger@localhost:8080/pgx

- (Optional) Modify the in-memory analyst configuration files.

The configuration file (pgx.conf) and the logging parameters (log4j.xml) for the in-memory analyst engine are in the WAR file under WEB-INF/classes. Restart the server to enable the changes.

See Also:

The Jetty documentation for configuration and use at

5.6.1 About the Authentication Mechanism

The in-memory analyst web deployment uses BASIC Auth by default. You should change to a more secure authentication mechanism for a production deployment.

To change the authentication mechanism, modify the security-constraint element of the web.xml deployment descriptor in the web application archive (WAR) file.

5.7 Deploying to Apache Tomcat

You can deploy the in-memory analyst to Eclipse Jetty, Apache Tomcat, or Oracle WebLogic. This example shows how to deploy the in-memory analyst as a web application with Apache Tomcat.

The in-memory analyst ships with BASIC Auth enabled, which requires a security realm. Tomcat supports many different types of realms. This example configures the simplest one, MemoryRealm. See the Tomcat Realm Configuration How-to for information about the other types.

Note:

Oracle recommends BASIC Auth only for testing. Use stronger authentication mechanisms for all other types of deployments.

5.8 Deploying to Oracle WebLogic Server

You can deploy the in-memory analyst to Eclipse Jetty, Apache Tomcat, or Oracle WebLogic Server. This example shows how to deploy the in-memory analyst as a web application with Oracle WebLogic Server.

5.8.1 Installing Oracle WebLogic Server

To download and install the latest version of Oracle WebLogic Server, see

http://www.oracle.com/technetwork/middleware/weblogic/documentation/index.html

5.8.2 Deploying the In-Memory Analyst

To deploy the in-memory analyst to Oracle WebLogic, use commands like the following. Substitute your administrative credentials and WAR file for the values shown in this example:

cd $MW_HOME/user_projects/domains/mydomain

. bin/setDomainEnv.sh

java weblogic.Deployer -adminurl http://localhost:7001 -username username -password password -deploy -upload $PGX_HOME/lib/server/pgx-webapp-0.9.0.war

If the script runs successfully, you will see a message like this one:

Target state: deploy completed on Server myserver

5.9 Connecting to the In-Memory Analyst Server

After the property graph in-memory analyst is installed in a Hadoop cluster -- or on a client system without Hadoop as a web application on Eclipse Jetty, Apache Tomcat, or Oracle WebLogic -- you can connect to the in-memory analyst server.

5.9.1 Connecting with the In-Memory Analyst Shell

The simplest way to connect to an in-memory analyst instance is to specify the base URL of the server. The following base URL can connect the SCOTT user to the local instance listening on port 8080:

http://scott:tiger@localhost:8080/pgx

To start the in-memory analyst shell with this base URL, use the --base_url command line argument:

cd $PGX_HOME

./bin/pgx --base_url http://scott:tiger@localhost:8080/pgx

You can connect to a remote instance the same way. However, the in-memory analyst currently does not provide remote support for the Control API.

5.9.1.1 About Logging HTTP Requests

The in-memory analyst shell suppresses all debugging messages by default. To see which HTTP requests are executed, set the log level for oracle.pgx to DEBUG, as shown in this example:

pgx> :loglevel oracle.pgx DEBUG

===> log level of oracle.pgx logger set to DEBUG

pgx> session.readGraphWithProperties("sample_http.adj.json", "sample")

10:24:25,056 [main] DEBUG RemoteUtils - Requesting POST http://scott:tiger@localhost:8080/pgx/core/session/session-shell-6nqg5dd/graph HTTP/1.1 with payload {"graphName":"sample","graphConfig":{"uri":"http://path.to.some.server/pgx/sample.adj","separator":" ","edge_props":[{"type":"double","name":"cost"}],"node_props":[{"type":"integer","name":"prop"}],"format":"adj_list"}}

10:24:25,088 [main] DEBUG RemoteUtils - received HTTP status 201

10:24:25,089 [main] DEBUG RemoteUtils - {"futureId":"87d54bed-bdf9-4601-98b7-ef632ce31463"}

10:24:25,091 [pool-1-thread-3] DEBUG PgxRemoteFuture$1 - Requesting GET http://scott:tiger@localhost:8080/pgx/future/session/session-shell-6nqg5dd/result/87d54bed-bdf9-4601-98b7-ef632ce31463 HTTP/1.1

10:24:25,300 [pool-1-thread-3] DEBUG RemoteUtils - received HTTP status 200

10:24:25,301 [pool-1-thread-3] DEBUG RemoteUtils - {"stats":{"loadingTimeMillis":0,"estimatedMemoryMegabytes":0,"numEdges":4,"numNodes":4},"graphName":"sample","nodeProperties":{"prop":"integer"},"edgeProperties":{"cost":"double"}}

This example requires that the graph URI points to a file that the in-memory analyst server can access using HTTP or HDFS.

5.9.2 Connecting with Java

You can specify the base URL when you initialize the in-memory analyst using Java. In the following example, a URL to an in-memory analyst server is provided to the Pgx.getInstance API call.

import oracle.pg.nosql.*;

import oracle.pgx.api.*;

import oracle.pgx.config.*;

PgNosqlGraphConfig cfg = GraphConfigBuilder.forNosql().setName("mygraph").setHosts(...).build();

OraclePropertyGraph opg = OraclePropertyGraph.getInstance(cfg);

ServerInstance remoteInstance = Pgx.getInstance("http://scott:tiger@hostname:port/pgx");

PgxSession session = remoteInstance.createSession("my-session");

PgxGraph graph = session.readGraphWithProperties(opg.getConfig());

5.9.3 Connecting with an HTTP Request

The in-memory analyst shell uses HTTP requests to communicate with the in-memory analyst server. You can use the same HTTP endpoints directly or use them to write your own client library.

This example uses HTTP to call create session:

HTTP POST 'http://scott:tiger@localhost:8080/pgx/core/session' with payload '{"source":"shell"}'

Response: {"sessionId":"session-shell-42v3b9n7"}

The call to create session returns a session identifier. Most HTTP calls return an in-memory analyst UUID, which identifies the resource that holds the result of the request. Many in-memory analyst requests take a while to complete, but you can obtain a handle to the result immediately. Using that handle, an HTTP GET call to a special endpoint provides the result of the request (or blocks, if the request is not complete).

Most interactions with the in-memory analyst with HTTP look like this example:

// any request, with some payload

HTTP POST 'http://scott:tiger@localhost:8080/pgx/core/session/session-shell-42v3b9n7/graph' with payload '{"graphName":"sample","graphConfig":{"edge_props":[{"type":"double","name":"cost"}],"format":"adj_list","separator":" ","node_props":[{"type":"integer","name":"prop"}],"uri":"http://path.to.some.server/pgx/sample.adj"}}'

Response: {"futureId":"15fc72e9-42e9-4527-9a31-bd20eb0adafb"}

// get the result using the in-memory analyst future UUID.

HTTP GET 'http://scott:tiger@localhost:8080/pgx/future/session/session-shell-42v3b9n7/result/15fc72e9-42e9-4527-9a31-bd20eb0adafb'

Response: {"stats":{"loadingTimeMillis":0,"estimatedMemoryMegabytes":0,"numNodes":4,"numEdges":4},"graphName":"sample","nodeProperties":{"prop":"integer"},"edgeProperties":{"cost":"double"}}
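If you write your own client, the two kinds of request shown above could be built with the standard java.net.http classes (Java 11 or later). The following is a minimal sketch, not an official client: the class and method names (PgxHttpSketch, createSessionRequest, futureResultRequest) are hypothetical, the endpoint paths and payload are copied from the examples above, and the Content-Type header value is an assumption. Because the deployment uses BASIC Auth, the sketch sends the credentials in an Authorization header rather than embedding them in the URL.

```java
import java.net.URI;
import java.net.http.HttpRequest;
import java.nio.charset.StandardCharsets;
import java.util.Base64;

public class PgxHttpSketch {

    /** Builds the value of an HTTP Basic Authorization header. */
    public static String basicAuth(String user, String password) {
        String raw = user + ":" + password;
        return "Basic " + Base64.getEncoder().encodeToString(raw.getBytes(StandardCharsets.UTF_8));
    }

    /** POST to <base>/core/session with the payload used in the create session example. */
    public static HttpRequest createSessionRequest(String baseUrl, String user, String password) {
        return HttpRequest.newBuilder(URI.create(baseUrl + "/core/session"))
                .header("Authorization", basicAuth(user, password))
                .header("Content-Type", "application/json") // assumption: not stated in the text
                .POST(HttpRequest.BodyPublishers.ofString("{\"source\":\"shell\"}"))
                .build();
    }

    /** GET to the future endpoint that returns the result for a given future ID. */
    public static HttpRequest futureResultRequest(String baseUrl, String sessionId, String futureId,
                                                  String user, String password) {
        URI uri = URI.create(baseUrl + "/future/session/" + sessionId + "/result/" + futureId);
        return HttpRequest.newBuilder(uri)
                .header("Authorization", basicAuth(user, password))
                .GET()
                .build();
    }

    public static void main(String[] args) {
        HttpRequest request = createSessionRequest("http://localhost:8080/pgx", "scott", "tiger");
        System.out.println(request.method() + " " + request.uri());
        // prints "POST http://localhost:8080/pgx/core/session"
    }
}
```

To execute a request against a running server, pass it to HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString()) and read the sessionId or futureId from the JSON response body.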

5.10 Using the In-Memory Analyst in Distributed Mode

The in-memory analyst can be run in the following modes:

- Shared memory mode

Multiple threads work in parallel on in-memory graph data stored in a single node (a single, shared memory space). In shared memory mode, the size of the graph is constrained by the physical memory size and by other applications running on the same node.

- Distributed mode

To overcome the limitations of shared memory mode, you can run the in-memory analyst in distributed mode, in which multiple nodes (computers) form a cluster, partition a large property graph across distributed memory, and work together to provide efficient and scalable graph analytics.

To use the in-memory analyst feature in distributed mode, the following requirements apply to each node in the cluster:

- GNU Compiler Collection (GCC) 4.8.2 or later

C++ standard libraries built upon 3.4.20 of the GNU C++ API are needed.

- Ability to open a TCP port

Distributed in-memory analyst requires a designated TCP port to be open for initial handshaking. The default port number is 7777, but you can set it using the run-time parameter pgx_side_channel_port.

- Ability to use InfiniBand or UDP on Ethernet

Data communication among nodes mainly uses InfiniBand (IB) or UDP on Ethernet. When using Ethernet, the machines in the cluster need to accept UDP packets from other computers.

- JDK7 or later

To start the in-memory analyst in distributed mode, do the following. (For this example, assume that four nodes (computers) have been allocated for this purpose, and that they have the host names hostname0, hostname1, hostname2, and hostname3.)

On each of the nodes, log in and perform the following operations (modifying the details for your actual environment):

export PGX_HOME=/opt/oracle/oracle-spatial-graph/property_graph/pgx
export LD_LIBRARY_PATH=$PGX_HOME/server/distributed/lib:$LD_LIBRARY_PATH
cd $PGX_HOME/server/distributed
./bin/node ./package/ClusterHost.js -server_config=./package/options.json -pgx_hostnames=hostname0,hostname1,hostname2,hostname3
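Because the same launcher command is entered on every node, it can help to generate it from a single host list so that the -pgx_hostnames value cannot drift between machines. The following is a minimal sketch under that assumption. The class and method names are hypothetical; only the executable, script, and flag names are taken from the command above.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical helper; it only assembles the arguments and does not start a process.
class ClusterLaunchCommand {
    /** Builds the argument list for the launcher command shown above. */
    static List<String> build(String serverConfig, List<String> hostnames) {
        if (hostnames.isEmpty()) {
            throw new IllegalArgumentException("at least one host name is required");
        }
        List<String> cmd = new ArrayList<>();
        cmd.add("./bin/node");
        cmd.add("./package/ClusterHost.js");
        cmd.add("-server_config=" + serverConfig);
        // String.join requires Java 8 or later.
        cmd.add("-pgx_hostnames=" + String.join(",", hostnames));
        return cmd;
    }

    public static void main(String[] args) {
        List<String> hosts = Arrays.asList("hostname0", "hostname1", "hostname2", "hostname3");
        // Print the command line that would be run on each node.
        System.out.println(String.join(" ", build("./package/options.json", hosts)));
    }
}
```

The returned list could be passed to java.lang.ProcessBuilder, or printed and copied into a shell session on each node.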

After the operations have successfully completed on all four nodes, you can see a log message similar to the following:

17:11:42,709 [hostname0] INFO pgx.dist.cluster_host - PGX.D Server listening on http://hostname0:8023/pgx

The distributed in-memory analyst is now up and running. It provides service through the following endpoint: http://hostname0:8023/pgx

This endpoint can be consumed in the same manner as a remotely deployed shared-memory analyst. You can use Java APIs, Groovy shells, and the PGX shell. An example of using the PGX shell is as follows:

cd $PGX_HOME
./bin/pgx --base_url=http://hostname0:8023/pgx

The following example uses the service from a Groovy shell for Oracle NoSQL Database:

opg-nosql> session=Pgx.createSession("http://hostname0:8023/pgx", "session-id-123");

opg-nosql> analyst=session.createAnalyst();

opg-nosql> pgxGraph = session.readGraphWithProperties(opg.getConfig());

The following is an example options.json file:

$ cat ./package/options.json

{

"pgx_use_infiniband": 1,

"pgx_command_queue_path": ".",

"pgx_builtins_path": "./lib",

"pgx_executable_path": "./bin/pgxd",

"java_class_path": "./jlib/*",

"pgx_httpserver_port": 8023,

"pgx_httpserver_enable_csrf_token": 1,

"pgx_httpserver_enable_ssl": 0,

"pgx_httpserver_client_auth": 1,

"pgx_httpserver_key": "<INSERT_VALUE_HERE>/server_key.pem",

"pgx_httpserver_cert": "<INSERT_VALUE_HERE>/server_cert.pem",

"pgx_httpserver_ca": "<INSERT_VALUE_HERE>/server_cert.pem",

"pgx_httpserver_auth": "<INSERT_VALUE_HERE>/server.auth.json",

"pgx_log_configure": "./package/log4j.xml",

"pgx_ranking_query_max_cache_size": 1048576,

"zookeeper_timeout": 10000,

"pgx_partitioning_strategy": "out_in",

"pgx_partitioning_ignore_ghostnodes": false,

"pgx_ghost_min_neighbors": 5000,

"pgx_ghost_max_node_counts": 40000,

"pgx_use_bulk_communication": true,

"pgx_num_worker_threads": 28

}
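Several entries in the example file still contain the <INSERT_VALUE_HERE> placeholder and must be replaced with real paths. The following is a minimal sketch of a pre-flight check that lists the keys whose string values still contain that placeholder. The class and method names are hypothetical, and the regular expression is a convenience for flat files like this one, not a general JSON parser.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper, not part of the in-memory analyst distribution.
class OptionsPlaceholderCheck {
    // Matches "key": "value" pairs whose string value still contains the placeholder.
    private static final Pattern UNFILLED =
            Pattern.compile("\"([^\"]+)\"\\s*:\\s*\"[^\"]*<INSERT_VALUE_HERE>[^\"]*\"");

    /** Returns the keys, in file order, whose values still contain the placeholder. */
    static List<String> unfilledKeys(String optionsJson) {
        List<String> keys = new ArrayList<>();
        Matcher m = UNFILLED.matcher(optionsJson);
        while (m.find()) {
            keys.add(m.group(1));
        }
        return keys;
    }

    public static void main(String[] args) {
        // A shortened copy of the example options.json content.
        String json = "{ \"pgx_httpserver_port\": 8023, "
                + "\"pgx_httpserver_key\": \"<INSERT_VALUE_HERE>/server_key.pem\", "
                + "\"pgx_log_configure\": \"./package/log4j.xml\" }";
        System.out.println("Unfilled entries: " + unfilledKeys(json));
    }
}
```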

5.11 Reading and Storing Data in HDFS

The in-memory analyst supports the Hadoop Distributed File System (HDFS). This example shows how to read and access graph data in HDFS using the in-memory analyst APIs.

Graph configuration files are parsed on the client side. The graph data and configuration files must be stored in HDFS. You must install a Hadoop client on the same computer as the in-memory analyst. See Oracle Big Data Appliance Software User's Guide.

Note:

The in-memory analyst engine runs in memory on one node of the Hadoop cluster only.

5.11.1 Loading Data from HDFS

This example copies the sample.adj graph data and its configuration file into HDFS, and then loads it into memory.

-

Copy the graph data into HDFS:

cd $PGX_HOME
hadoop fs -mkdir -p /user/pgx
hadoop fs -copyFromLocal examples/graphs/sample.adj /user/pgx

-

Edit the uri field of the graph configuration file to point to an HDFS resource:

{
  "uri": "hdfs:/user/pgx/sample.adj",
  "format": "adj_list",
  "node_props": [{
    "name": "prop",
    "type": "integer"
  }],
  "edge_props": [{
    "name": "cost",
    "type": "double"
  }],
  "separator": " "
}

-

Copy the configuration file into HDFS:

cd $PGX_HOME
hadoop fs -copyFromLocal examples/graphs/sample.adj.json /user/pgx

-

Load the sample graph from HDFS into the in-memory analyst, as shown in the following examples.

Using the Shell to Load the Graph from HDFS

g = session.readGraphWithProperties("hdfs:/user/pgx/sample.adj.json");

===> {

"graphName" : "G",

"nodeProperties" : {

"prop" : "integer"

},

"edgeProperties" : {

"cost" : "double"

},

"stats" : {

"loadingTimeMillis" : 628,

"estimatedMemoryMegabytes" : 0,

"numNodes" : 4,

"numEdges" : 4

}

}

Using Java to Load the Graph from HDFS

import oracle.pgx.api.*;

PgxGraph g = session.readGraphWithProperties("hdfs:/user/pgx/sample.adj.json");
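The step in the procedure above that edits the uri field of the configuration file is done by hand. If the same change has to be applied to several configuration files, it could be scripted. The following is a minimal sketch that replaces the value of the first "uri" field in a configuration string. The class and method names are hypothetical, and the regular expression assumes a flat configuration like sample.adj.json rather than arbitrary JSON.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper, not part of the in-memory analyst API.
class HdfsUriRewrite {
    // Captures the "uri": prefix so that only the quoted value is replaced.
    private static final Pattern URI_FIELD =
            Pattern.compile("(\"uri\"\\s*:\\s*)\"[^\"]*\"");

    /** Returns the configuration text with the first uri value replaced by newUri. */
    static String withUri(String configJson, String newUri) {
        Matcher m = URI_FIELD.matcher(configJson);
        // quoteReplacement keeps any '$' or '\' in the new value literal.
        return m.replaceFirst("$1" + Matcher.quoteReplacement("\"" + newUri + "\""));
    }

    public static void main(String[] args) {
        String local = "{ \"uri\": \"sample.adj\", \"format\": \"adj_list\" }";
        System.out.println(withUri(local, "hdfs:/user/pgx/sample.adj"));
    }
}
```

If the input contains no uri field, the text is returned unchanged, so a caller may want to compare input and output to detect that case.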

5.11.2 Storing Graph Snapshots in HDFS

The in-memory analyst binary format (.pgb) is a proprietary binary graph format for the in-memory analyst. Fundamentally, a .pgb file is a binary dump of a graph and its property data, and it is efficient for in-memory analyst operations. You can use this format to quickly serialize a graph snapshot to disk and later read it back into memory.

You should not alter an existing .pgb file.

The following examples store the sample graph, currently in memory, in PGB format in HDFS.

Using the Shell to Store a Graph in HDFS

g.store(Format.PGB, "hdfs:/user/pgx/sample.pgb", VertexProperty.ALL, EdgeProperty.ALL, true)

Using Java to Store a Graph in HDFS

import oracle.pgx.config.GraphConfig;
import oracle.pgx.api.*;

GraphConfig pgbGraphConfig = g.store(Format.PGB, "hdfs:/user/pgx/sample.pgb", VertexProperty.ALL, EdgeProperty.ALL, true);

To verify that the PGB file was created, list the files in the /user/pgx HDFS directory:

hadoop fs -ls /user/pgx

5.11.3 Compiling and Running a Java Application in Hadoop

The following is the HdfsDemo Java class for the previous examples:

import oracle.pgx.api.Pgx;

import oracle.pgx.api.PgxGraph;

import oracle.pgx.api.PgxSession;

import oracle.pgx.api.ServerInstance;

import oracle.pgx.config.Format;

import oracle.pgx.config.GraphConfig;

import oracle.pgx.config.GraphConfigFactory;

public class HdfsDemo {

public static void main(String[] mainArgs) throws Exception {

ServerInstance instance = Pgx.getInstance(Pgx.EMBEDDED_URL);

instance.startEngine();

PgxSession session = Pgx.createSession("my-session");

GraphConfig adjConfig = GraphConfigFactory.forAnyFormat().fromHdfs("/user/pgx/sample.adj.json");

PgxGraph graph1 = session.readGraphWithProperties(adjConfig);

GraphConfig pgbConfig = graph1.store(Format.PGB, "hdfs:/user/pgx/sample.pgb");

PgxGraph graph2 = session.readGraphWithProperties(pgbConfig);

System.out.println("graph1 N = " + graph1.getNumVertices() + " E = " + graph1.getNumEdges());

System.out.println("graph2 N = " + graph2.getNumVertices() + " E = " + graph2.getNumEdges());

}

}

These commands compile the HdfsDemo class:

cd $PGX_HOME
mkdir classes
javac -cp ../lib/* HdfsDemo.java -d classes

This command runs the HdfsDemo class:

java -cp ../lib/*:conf:classes:$HADOOP_CONF_DIR HdfsDemo

5.12 Running the In-Memory Analyst as a YARN Application

In this example you will learn how to start, stop and monitor in-memory analyst servers on a Hadoop cluster via Hadoop NextGen MapReduce (YARN) scheduling.

5.12.1 Starting and Stopping In-Memory Analyst Services

Before you can start the in-memory analyst as a YARN application, you must configure the in-memory analyst YARN client.

5.12.1.1 Configuring the In-Memory Analyst YARN Client

The in-memory analyst distribution contains an example YARN client configuration file in $PGX_HOME/conf/yarn.conf.

Ensure that all the required fields are set properly. The specified paths must exist in HDFS, and zookeeper_connect_string must point to a running ZooKeeper port of the CDH cluster.

5.12.1.2 Starting a New In-Memory Analyst Service

To start a new in-memory analyst service on the Hadoop cluster, use the following command:

yarn jar $PGX_HOME/yarn/pgx-yarn-1.0.0-for-cdh5.2.1.jar

To use a YARN client configuration file other than $PGX_HOME/conf/yarn.conf, provide the file path:

yarn jar $PGX_HOME/yarn/pgx-yarn-1.0.0-for-cdh5.2.1.jar /path/to/different/yarn.conf

When the service starts, the host name and port of the Hadoop node where the in-memory analyst service launched are displayed.

5.12.1.3 About Long-Running In-Memory Analyst Services

The in-memory analyst YARN applications are configured by default to time out after a specified period. If you disable the timeout by setting pgx_server_timeout_secs to 0, the in-memory analyst server keeps running until you or Hadoop explicitly stop it.

5.12.2 Connecting to In-Memory Analyst Services

You can connect to in-memory analyst services in YARN the same way you connect to any in-memory analyst server. For example, to connect the shell interface to the in-memory analyst service, use a command like this one:

$PGX_HOME/bin/pgx --base_url username:password@hostname:port

In this syntax, username and password match those specified in the YARN configuration.
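When the connection command is generated by a script, the --base_url value can be assembled from its four parts. The following is a minimal sketch of that assembly. The class and method names are hypothetical. The checks on ':' and '@' are an assumption made here because those characters would make the username:password@hostname:port syntax ambiguous; the source does not say how the shell treats them.

```java
// Hypothetical helper, not part of the in-memory analyst distribution.
class BaseUrlArgument {
    /** Formats the value as username:password@hostname:port. */
    static String of(String username, String password, String hostname, int port) {
        // Assumption: reject characters that would make the syntax ambiguous.
        if (username.contains(":") || username.contains("@") || password.contains("@")) {
            throw new IllegalArgumentException("credentials contain ':' or '@'");
        }
        if (port < 1 || port > 65535) {
            throw new IllegalArgumentException("port out of range: " + port);
        }
        return username + ":" + password + "@" + hostname + ":" + port;
    }

    public static void main(String[] args) {
        // Placeholder credentials and address; substitute the values from your YARN configuration
        // and the host name and port displayed when the service started.
        System.out.println("$PGX_HOME/bin/pgx --base_url "
                + of("scott", "tiger", "localhost", 8080));
    }
}
```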