Table of Contents

- 6.1. MySQL Cluster File Systems

- 6.2.

DUMPCommands - 6.2.1.

DUMPCodes 1 to 999 - 6.2.2.

DUMPCodes 1000 to 1999 - 6.2.3.

DUMPCodes 2000 to 2999 - 6.2.4.

DUMPCodes 3000 to 3999 - 6.2.5.

DUMPCodes 4000 to 4999 - 6.2.6.

DUMPCodes 5000 to 5999 - 6.2.7.

DUMPCodes 6000 to 6999 - 6.2.8.

DUMPCodes 7000 to 7999 - 6.2.9.

DUMPCodes 8000 to 8999 - 6.2.10.

DUMPCodes 9000 to 9999 - 6.2.11.

DUMPCodes 10000 to 10999 - 6.2.12.

DUMPCodes 11000 to 11999 - 6.2.13.

DUMPCodes 12000 to 12999

- 6.2.1.

- 6.3. The NDB Protocol

- 6.4.

NDBKernel Blocks - 6.4.1. The

BACKUPBlock - 6.4.2. The

CMVMIBlock - 6.4.3. The

DBACCBlock - 6.4.4. The

DBDICTBlock - 6.4.5. The

DBDIHBlock - 6.4.6.

DBLQHBlock - 6.4.7. The

DBTCBlock - 6.4.8. The

DBTUPBlock - 6.4.9.

DBTUXBlock - 6.4.10. The

DBUTILBlock - 6.4.11. The

LGMANBlock - 6.4.12. The

NDBCNTRBlock - 6.4.13. The

NDBFSBlock - 6.4.14. The

PGMANBlock - 6.4.15. The

QMGRBlock - 6.4.16. The

RESTOREBlock - 6.4.17. The

SUMABlock - 6.4.18. The

TSMANBlock - 6.4.19. The

TRIXBlock

- 6.4.1. The

- 6.5. MySQL Cluster Start Phases

- 6.5.1. Initialization Phase (Phase -1)

- 6.5.2. Configuration Read Phase (

STTORPhase -1) - 6.5.3.

STTORPhase 0 - 6.5.4.

STTORPhase 1 - 6.5.5.

STTORPhase 2 - 6.5.6.

NDB_STTORPhase 1 - 6.5.7.

STTORPhase 3 - 6.5.8.

NDB_STTORPhase 2 - 6.5.9.

STTORPhase 4 - 6.5.10.

NDB_STTORPhase 3 - 6.5.11.

STTORPhase 5 - 6.5.12.

NDB_STTORPhase 4 - 6.5.13.

NDB_STTORPhase 5 - 6.5.14.

NDB_STTORPhase 6 - 6.5.15.

STTORPhase 6 - 6.5.16.

STTORPhase 7 - 6.5.17.

STTORPhase 8 - 6.5.18.

NDB_STTORPhase 7 - 6.5.19.

STTORPhase 9 - 6.5.20.

STTORPhase 101 - 6.5.21. System Restart Handling in Phase 4

- 6.5.22.

START_MEREQHandling

- 6.6.

NDBInternals Glossary

Abstract

This chapter contains information about MySQL Cluster that is not strictly necessary for running the Cluster product, but can prove useful for development and debugging purposes.

This section contains information about the file systems created and used by MySQL Cluster data nodes and management nodes.

This section discusses the files and directories created by MySQL Cluster nodes, their usual locations, and their purpose.

A cluster data node's DataDir contains at a

minimum 3 files. These are named as shown here, where

node_id is the node ID:

ndb_node_id_out.logSample output:

2006-09-12 20:13:24 [ndbd] INFO -- Angel pid: 13677 ndb pid: 13678 2006-09-12 20:13:24 [ndbd] INFO -- NDB Cluster -- DB node 1 2006-09-12 20:13:24 [ndbd] INFO -- Version 5.1.12 (beta) -- 2006-09-12 20:13:24 [ndbd] INFO -- Configuration fetched at localhost port 1186 2006-09-12 20:13:24 [ndbd] INFO -- Start initiated (version 5.1.12) 2006-09-12 20:13:24 [ndbd] INFO -- Ndbd_mem_manager::init(1) min: 20Mb initial: 20Mb WOPool::init(61, 9) RWPool::init(82, 13) RWPool::init(a2, 18) RWPool::init(c2, 13) RWPool::init(122, 17) RWPool::init(142, 15) WOPool::init(41, 8) RWPool::init(e2, 12) RWPool::init(102, 55) WOPool::init(21, 8) Dbdict: name=sys/def/SYSTAB_0,id=0,obj_ptr_i=0 Dbdict: name=sys/def/NDB$EVENTS_0,id=1,obj_ptr_i=1 m_active_buckets.set(0)

ndb_node_id_signal.logThis file contains a log of all signals sent to or from the data node.

NoteThis file is created only if the

SendSignalIdparameter is enabled, which is true only for-debugbuilds.ndb_node_id.pidThis file contains the data node's process ID; it is created when the ndbd process is started.

The location of these files is determined by the value of the

DataDir configuration parameter. See

DataDir.

This directory is named

ndb_,

where nodeid_fsnodeid is the data node's

node ID. It contains the following files and directories:

Files:

data-nodeid.datundo-nodeid.dat

Directories:

LCP: In MySQL Cluster NDB 6.3.8 and later MySQL Cluster releases, this directory holds 2 subdirectories, named0and1, each of which which contain locals checkpoint data files, one per local checkpoint (see Configuring MySQL Cluster Parameters for Local Checkpoints).Prior to MySQL Cluster NDB 6.3.8, this directory contained 3 subdirectories, named

0,1, and2, due to the fact thatNDBsaved 3 local checkpoints to disk (rather than 2) in these earlier versions of MySQL Cluster.These subdirectories each contain a number of files whose names follow the pattern

T, whereNFM.DataNis a table ID and and M is a fragment number. Each data node typically has one primary fragment and one backup fragment. This means that, for a MySQL Cluster having 2 data nodes, and withNoOfReplicas = 2,Mis either 0 to 1. For a 4-node cluster withNoOfReplicas = 2,Mis either 0 or 2 on node group 1, and either 1 or 3 on node group 2.In MySQL Cluster NDB 7.0 and later, when using ndbmtd there may be more than one primary fragment per node. In this case,

Mis a number in the range of 0 to the number of LQH worker threads in the entire cluster, less 1. The number of fragments on each data node is equal to the number of LQH on that node timesNoOfReplicas.NoteIncreasing

MaxNoOfExecutionThreadsdoes not change the number of fragments used by existing tables; only newly-created tables automatically use the new fragment count. To force the new fragment count to be used by an existing table after increasingMaxNoOfExecutionThreads, you must perform anALTER TABLE ... REORGANIZE PARTITIONstatement (just as when adding new node groups in MySQL Cluster NDB 7.0 and later).Directories named

D1andD2, each of which contains 2 subdirectories:DBDICT: Contains data dictionary information. This is stored in:The file

P0.SchemaLogA set of directories

T0,T1,T2, ..., each of which contains anS0.TableListfile.

Directories named

D8,D9,D10, andD11, each of which contains a directory namedDBLQH.In each case, the

DBLQHdirectory containsNfiles namedS0.Fraglog,S1.FragLog,S2.FragLog, ...,S, whereN.FragLogNis equal to the value of theNoOfFragmentLogFilesconfiguration parameter.The

DBLQHdirectories also contain the redo log files.DBDIH: This directory contains the fileP, which records information such as the last GCI, restart status, and node group membership of each node; its structure is defined inX.sysfilestorage/ndb/src/kernel/blocks/dbdih/Sysfile.hppin the MySQL Cluster source tree. In addition, theSfiles keep records of the fragments belonging to each table.X.FragList

MySQL Cluster creates backup files in the directory specified

by the BackupDataDir configuration

parameter, as discussed in

Using The MySQL Cluster Management Client to Create a Backup,

and

Identifying

Data Nodes.

The files created when a backup is performed are listed and described in MySQL Cluster Backup Concepts.

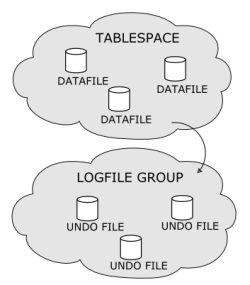

This section applies only to MySQL 5.1 and later. Previous versions of MySQL did not support Disk Data tables.

MySQL Cluster Disk Data files are created (or dropped) by the user by means of SQL statements intended specifically for this purpose. Such files include the following:

One or more undo logfiles associated with a logfile group

One or more datafiles associated with a tablespace that uses the logfile group for undo logging

Both undo logfiles and datafiles are created in the data

directory (DataDir) of each cluster data

node. The relationship of these files with their logfile group

and tablespace are shown in the following diagram:

Disk Data files and the SQL commands used to create and drop them are discussed in depth in MySQL Cluster Disk Data Tables.

The files used by a MySQL Cluster management node are discussed in ndb_mgmd.

- 6.2.1.

DUMPCodes 1 to 999 - 6.2.2.

DUMPCodes 1000 to 1999 - 6.2.3.

DUMPCodes 2000 to 2999 - 6.2.4.

DUMPCodes 3000 to 3999 - 6.2.5.

DUMPCodes 4000 to 4999 - 6.2.6.

DUMPCodes 5000 to 5999 - 6.2.7.

DUMPCodes 6000 to 6999 - 6.2.8.

DUMPCodes 7000 to 7999 - 6.2.9.

DUMPCodes 8000 to 8999 - 6.2.10.

DUMPCodes 9000 to 9999 - 6.2.11.

DUMPCodes 10000 to 10999 - 6.2.12.

DUMPCodes 11000 to 11999 - 6.2.13.

DUMPCodes 12000 to 12999

Never use these commands on a production MySQL Cluster except under the express direction of MySQL Technical Support. Oracle will not be held responsible for adverse results arising from their use under any other circumstances!

DUMP commands can be used in the Cluster

management client (ndb_mgm) to dump debugging

information to the Cluster log. They are documented here, rather

than in the MySQL Manual, for the following reasons:

They are intended only for use in troubleshooting, debugging, and similar activities by MySQL developers, QA, and support personnel.

Due to the way in which

DUMPcommands interact with memory, they can cause a running MySQL Cluster to malfunction or even to fail completely when used.The formats, arguments, and even availability of these commands are not guaranteed to be stable. All of this information is subject to change at any time without prior notice.

For the preceding reasons,

DUMPcommands are neither intended nor warranted for use in a production environment by end-users.

General syntax:

ndb_mgm> node_id DUMP code [arguments]

This causes the contents of one or more NDB

registers on the node with ID node_id

to be dumped to the Cluster log. The registers affected are

determined by the value of code. Some

(but not all) DUMP commands accept additional

arguments; these are noted and

described where applicable.

Individual DUMP commands are listed by their

code values in the sections that

follow. For convenience in locating a given

DUMP code, they are divided by thousands.

Each listing includes the following information:

The

codevalueThe relevant

NDBkernel block or blocks (see Section 6.4, “NDBKernel Blocks”, for information about these)The

DUMPcode symbol where defined; if undefined, this is indicated using a triple dash:---.Sample output; unless otherwise stated, it is assumed that each

DUMPcommand is invoked as shown here:ndb_mgm>

2 DUMPcodeGenerally, this is from the cluster log; in some cases, where the output may be generated in the node log instead, this is indicated. Where the DUMP command produces errors, the output is generally taken from the error log.

Where applicable, additional information such as possible extra

arguments, warnings, state or other values returned in theDUMPcommand's output, and so on. Otherwise its absence is indicated with “[N/A]”.

DUMP command codes are not necessarily

defined sequentially. For example, codes 2

through 12 are currently undefined, and so

are not listed. However, individual DUMP code

values are subject to change, and there is no guarantee that a

given code value will continue to be defined for the same

purpose (or defined at all, or undefined) over time.

There is also no guarantee that a given DUMP

code—even if currently undefined—will not have

serious consequences when used on a running MySQL Cluster.

For information concerning other ndb_mgm client commands, see Commands in the MySQL Cluster Management Client.

- 6.2.1.1.

DUMP 1 - 6.2.1.2.

DUMP 13 - 6.2.1.3.

DUMP 14 - 6.2.1.4.

DUMP 15 - 6.2.1.5.

DUMP 16 - 6.2.1.6.

DUMP 17 - 6.2.1.7.

DUMP 18 - 6.2.1.8.

DUMP 20 - 6.2.1.9.

DUMP 21 - 6.2.1.10.

DUMP 22 - 6.2.1.11.

DUMP 23 - 6.2.1.12.

DUMP 24 - 6.2.1.13.

DUMP 25 - 6.2.1.14.

DUMP 70 - 6.2.1.15.

DUMP 400 - 6.2.1.16.

DUMP 401 - 6.2.1.17.

DUMP 402 - 6.2.1.18.

DUMP 403 - 6.2.1.19.

DUMP 404 - 6.2.1.20.

DUMP 908

This section contains information about DUMP

codes 1 through 999, inclusive.

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 1 | --- | QMGR |

Description.

Dumps information about cluster start Phase 1 variables (see

Section 6.5.4, “STTOR Phase 1”).

Sample Output.

Node 2: creadyDistCom = 1, cpresident = 2 Node 2: cpresidentAlive = 1, cpresidentCand = 2 (gci: 157807) Node 2: ctoStatus = 0 Node 2: Node 2: ZRUNNING(3) Node 2: Node 3: ZRUNNING(3)

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 13 | --- | CMVMI, NDBCNTR |

Description. Dump signal counter.

Sample Output.

Node 2: Cntr: cstartPhase = 9, cinternalStartphase = 8, block = 0 Node 2: Cntr: cmasterNodeId = 2

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 14 | CommitAckMarkersSize | DBLQH, DBTC |

Description.

Dumps free size in commitAckMarkerPool.

Sample Output.

Node 2: TC: m_commitAckMarkerPool: 12288 free size: 12288 Node 2: LQH: m_commitAckMarkerPool: 36094 free size: 36094

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 15 | CommitAckMarkersDump | DBLQH, DBTC |

Description.

Dumps information in commitAckMarkerPool.

Sample Output.

Node 2: TC: m_commitAckMarkerPool: 12288 free size: 12288 Node 2: LQH: m_commitAckMarkerPool: 36094 free size: 36094

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 16 | DihDumpNodeRestartInfo | DBDIH |

Description. Provides node restart information.

Sample Output.

Node 2: c_nodeStartMaster.blockLcp = 0, c_nodeStartMaster.blockGcp = 0, c_nodeStartMaster.wait = 0 Node 2: cstartGcpNow = 0, cgcpStatus = 0 Node 2: cfirstVerifyQueue = -256, cverifyQueueCounter = 0 Node 2: cgcpOrderBlocked = 0, cgcpStartCounter = 5

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 17 | DihDumpNodeStatusInfo | DBDIH |

Description. Dumps node status.

Sample Output.

Node 2: Printing nodeStatus of all nodes Node 2: Node = 2 has status = 1 Node 2: Node = 3 has status = 1

Additional Information. Possible node status values are shown in the following table:

| Value | Name |

|---|---|

| 0 | NOT_IN_CLUSTER |

| 1 | ALIVE |

| 2 | STARTING |

| 3 | DIED_NOW |

| 4 | DYING |

| 5 | DEAD |

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 18 | DihPrintFragmentation | DBDIH |

Description. Prints one entry per table fragment; lists the table number, fragment number, and list of nodes handling this fragment in order of priority.

Sample Output.

Node 2: Printing fragmentation of all tables -- Node 2: Table 0 Fragment 0 - 2 3 Node 2: Table 0 Fragment 1 - 3 2 Node 2: Table 1 Fragment 0 - 2 3 Node 2: Table 1 Fragment 1 - 3 2 Node 2: Table 2 Fragment 0 - 2 3 Node 2: Table 2 Fragment 1 - 3 2 Node 2: Table 3 Fragment 0 - 2 3 Node 2: Table 3 Fragment 1 - 3 2 Node 2: Table 4 Fragment 0 - 2 3 Node 2: Table 4 Fragment 1 - 3 2 Node 2: Table 9 Fragment 0 - 2 3 Node 2: Table 9 Fragment 1 - 3 2 Node 2: Table 10 Fragment 0 - 2 3 Node 2: Table 10 Fragment 1 - 3 2 Node 2: Table 11 Fragment 0 - 2 3 Node 2: Table 11 Fragment 1 - 3 2 Node 2: Table 12 Fragment 0 - 2 3 Node 2: Table 12 Fragment 1 - 3 2

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 20 | --- | BACKUP |

Description.

Prints values of BackupDataBufferSize,

BackupLogBufferSize,

BackupWriteSize, and

BackupMaxWriteSize

Sample Output.

Node 2: Backup: data: 2097152 log: 2097152 min: 32768 max: 262144

Additional Information. This command can also be used to set these parameters, as in this example:

ndb_mgm> 2 DUMP 20 3 3 64 512

Sending dump signal with data:

0x00000014 0x00000003 0x00000003 0x00000040 0x00000200

Node 2: Backup: data: 3145728 log: 3145728 min: 65536 max: 524288

You must set each of these parameters to the same value on

all nodes; otherwise, subsequent issuing of a START

BACKUP command crashes the cluster.

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 21 | --- | BACKUP |

Description.

Sends a GSN_BACKUP_REQ signal to the

node, causing that node to initiate a backup.

Sample Output.

Node 2: Backup 1 started from node 2 Node 2: Backup 1 started from node 2 completed StartGCP: 158515 StopGCP: 158518 #Records: 2061 #LogRecords: 0 Data: 35664 bytes Log: 0 bytes

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

22 backup_id | --- | BACKUP |

Description.

Sends a GSN_FSREMOVEREQ signal to the

node. This should remove the backup having backup ID

backup_id from the backup

directory; however, it actually causes the node to

crash.

Sample Output.

Time: Friday 16 February 2007 - 10:23:00 Status: Temporary error, restart node Message: Assertion (Internal error, programming error or missing error message, please report a bug) Error: 2301 Error data: ArrayPool<T>::getPtr Error object: ../../../../../storage/ndb/src/kernel/vm/ArrayPool.hpp line: 395 (block: BACKUP) Program: ./libexec/ndbd Pid: 27357 Trace: /usr/local/mysql-5.1/cluster/ndb_2_trace.log.4 Version: Version 5.1.16 (beta)

Additional Information.

It appears that any invocation of

DUMP 22 causes the node or nodes to

crash.

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 23 | --- | BACKUP |

Description. Dumps all backup records and file entries belonging to those records.

The example shows a single record with a single file only, but there may be multiple records and multiple file lines within each record.

Sample Output.

With no backup in progress (BackupRecord

shows as 0):

Node 2: BackupRecord 0: BackupId: 5 MasterRef: f70002 ClientRef: 0 Node 2: State: 2 Node 2: file 0: type: 3 flags: H'0

While a backup is in progress (BackupRecord

is 1):

Node 2: BackupRecord 1: BackupId: 8 MasterRef: f40002 ClientRef: 80010001 Node 2: State: 1 Node 2: file 3: type: 3 flags: H'1 Node 2: file 2: type: 2 flags: H'1 Node 2: file 0: type: 1 flags: H'9 Node 2: BackupRecord 0: BackupId: 110 MasterRef: f70002 ClientRef: 0 Node 2: State: 2 Node 2: file 0: type: 3 flags: H'0

Additional Information.

Possible State values are shown in the

following table:

| Value | State | Description |

|---|---|---|

| 0 | INITIAL | |

| 1 | DEFINING | Defining backup content and parameters |

| 2 | DEFINED | DEFINE_BACKUP_CONF signal sent by slave, received on

master |

| 3 | STARTED | Creating triggers |

| 4 | SCANNING | Scanning fragments |

| 5 | STOPPING | Closing files |

| 6 | CLEANING | Freeing resources |

| 7 | ABORTING | Aborting backup |

Types are shown in the following table:

| Value | Name |

|---|---|

| 1 | CTL_FILE |

| 2 | LOG_FILE |

| 3 | DATA_FILE |

| 4 | LCP_FILE |

Flags are shown in the following table:

| Value | Name |

|---|---|

0x01 | BF_OPEN |

0x02 | BF_OPENING |

0x04 | BF_CLOSING |

0x08 | BF_FILE_THREAD |

0x10 | BF_SCAN_THREAD |

0x20 | BF_LCP_META |

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 24 | --- | BACKUP |

Description. Prints backup record pool information.

Sample Output.

Node 2: Backup - dump pool sizes Node 2: BackupPool: 2 BackupFilePool: 4 TablePool: 323 Node 2: AttrPool: 2 TriggerPool: 4 FragmentPool: 323 Node 2: PagePool: 198

Additional Information.

If 2424 is passed as an argument (for

example, 2 DUMP 24 2424), this causes an

LCP.

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 25 | NdbcntrTestStopOnError | NDBCNTR |

Description. Kills the data node or nodes.

Sample Output.

Time: Friday 16 February 2007 - 10:26:46 Status: Temporary error, restart node Message: System error, node killed during node restart by other node (Internal error, programming error or missing error message, please report a bug) Error: 2303 Error data: System error 6, this node was killed by node 2 Error object: NDBCNTR (Line: 234) 0x00000008 Program: ./libexec/ndbd Pid: 27665 Trace: /usr/local/mysql-5.1/cluster/ndb_2_trace.log.5 Version: Version 5.1.16 (beta)

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 70 | NdbcntrStopNodes | |

Description.

Sample Output.

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 400 | NdbfsDumpFileStat- | NDBFS |

Description.

Provides NDB file system statistics.

Sample Output.

Node 2: NDBFS: Files: 27 Open files: 10 Node 2: Idle files: 17 Max opened files: 12 Node 2: Max files: 40 Node 2: Requests: 256

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 401 | NdbfsDumpAllFiles | NDBFS |

Description.

Prints NDB file system file handles and

states (OPEN or

CLOSED).

Sample Output.

Node 2: NDBFS: Dump all files: 27 Node 2: 0 (0x87867f8): CLOSED Node 2: 1 (0x8787e70): CLOSED Node 2: 2 (0x8789490): CLOSED Node 2: 3 (0x878aab0): CLOSED Node 2: 4 (0x878c0d0): CLOSED Node 2: 5 (0x878d6f0): CLOSED Node 2: 6 (0x878ed10): OPEN Node 2: 7 (0x8790330): OPEN Node 2: 8 (0x8791950): OPEN Node 2: 9 (0x8792f70): OPEN Node 2: 10 (0x8794590): OPEN Node 2: 11 (0x8795da0): OPEN Node 2: 12 (0x8797358): OPEN Node 2: 13 (0x8798978): OPEN Node 2: 14 (0x8799f98): OPEN Node 2: 15 (0x879b5b8): OPEN Node 2: 16 (0x879cbd8): CLOSED Node 2: 17 (0x879e1f8): CLOSED Node 2: 18 (0x879f818): CLOSED Node 2: 19 (0x87a0e38): CLOSED Node 2: 20 (0x87a2458): CLOSED Node 2: 21 (0x87a3a78): CLOSED Node 2: 22 (0x87a5098): CLOSED Node 2: 23 (0x87a66b8): CLOSED Node 2: 24 (0x87a7cd8): CLOSED Node 2: 25 (0x87a92f8): CLOSED Node 2: 26 (0x87aa918): CLOSED

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 402 | NdbfsDumpOpenFiles | NDBFS |

Description.

Prints list of NDB file system open

files.

Sample Output.

Node 2: NDBFS: Dump open files: 10 Node 2: 0 (0x8792f70): /usr/local/mysql-5.1/cluster/ndb_2_fs/D1/DBDIH/P0.sysfile Node 2: 1 (0x8794590): /usr/local/mysql-5.1/cluster/ndb_2_fs/D2/DBDIH/P0.sysfile Node 2: 2 (0x878ed10): /usr/local/mysql-5.1/cluster/ndb_2_fs/D8/DBLQH/S0.FragLog Node 2: 3 (0x8790330): /usr/local/mysql-5.1/cluster/ndb_2_fs/D9/DBLQH/S0.FragLog Node 2: 4 (0x8791950): /usr/local/mysql-5.1/cluster/ndb_2_fs/D10/DBLQH/S0.FragLog Node 2: 5 (0x8795da0): /usr/local/mysql-5.1/cluster/ndb_2_fs/D11/DBLQH/S0.FragLog Node 2: 6 (0x8797358): /usr/local/mysql-5.1/cluster/ndb_2_fs/D8/DBLQH/S1.FragLog Node 2: 7 (0x8798978): /usr/local/mysql-5.1/cluster/ndb_2_fs/D9/DBLQH/S1.FragLog Node 2: 8 (0x8799f98): /usr/local/mysql-5.1/cluster/ndb_2_fs/D10/DBLQH/S1.FragLog Node 2: 9 (0x879b5b8): /usr/local/mysql-5.1/cluster/ndb_2_fs/D11/DBLQH/S1.FragLog

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 403 | NdbfsDumpIdleFiles | NDBFS |

Description.

Prints list of NDB file system idle file

handles.

Sample Output.

Node 2: NDBFS: Dump idle files: 17 Node 2: 0 (0x8787e70): CLOSED Node 2: 1 (0x87aa918): CLOSED Node 2: 2 (0x8789490): CLOSED Node 2: 3 (0x878d6f0): CLOSED Node 2: 4 (0x878aab0): CLOSED Node 2: 5 (0x878c0d0): CLOSED Node 2: 6 (0x879cbd8): CLOSED Node 2: 7 (0x87a0e38): CLOSED Node 2: 8 (0x87a2458): CLOSED Node 2: 9 (0x879e1f8): CLOSED Node 2: 10 (0x879f818): CLOSED Node 2: 11 (0x87a66b8): CLOSED Node 2: 12 (0x87a7cd8): CLOSED Node 2: 13 (0x87a3a78): CLOSED Node 2: 14 (0x87a5098): CLOSED Node 2: 15 (0x87a92f8): CLOSED Node 2: 16 (0x87867f8): CLOSED

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 404 | --- | NDBFS |

Description. Kills node or nodes.

Sample Output.

Time: Friday 16 February 2007 - 11:17:55 Status: Temporary error, restart node Message: Internal program error (failed ndbrequire) (Internal error, programming error or missing error message, please report a bug) Error: 2341 Error data: ndbfs/Ndbfs.cpp Error object: NDBFS (Line: 1066) 0x00000008 Program: ./libexec/ndbd Pid: 29692 Trace: /usr/local/mysql-5.1/cluster/ndb_2_trace.log.7 Version: Version 5.1.16 (beta)

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 908 | --- | DBDIH, QMGR |

Description.

Causes heartbeat transmission information.to be written to

the data node logs. Useful in conjunction with setting the

HeartbeatOrder parameter (introduced in

MySQL Cluster NDB 6.3.35, MySQL Cluster NDB 7.0.16, and

MySQL Cluster NDB 7.1.5).

Additional Information. [N/A]

This section contain information about DUMP

codes 1000 through 1999, inclusive.

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 1000 | DumpPageMemory | DBACC, DBTUP |

Description.

Prints data node mMemory usage (ACC &

TUP), as both a number of data pages, and

the percentage of DataMemory and

IndexMemory used.

Sample Output.

Node 2: Data usage is 8%(54 32K pages of total 640)

Node 2: Index usage is 1%(24 8K pages of total 1312)

Node 2: Resource 0 min: 0 max: 639 curr: 0

When invoked as ALL DUMP 1000, this

command reports memory usage for each data node separately,

in turn.

Additional Information.

Beginning with MySQL Cluster NDB 6.2.3 and MySQL Cluster NDB

6.3.0, you can use the ndb_mgm client

REPORT MEMORYUSAGE to obtain this

information (see

Commands in the MySQL Cluster Management Client).

Beginning with MySQL Cluster NDB 7.1.0, you can also query

the ndbinfo database for this information

(see The ndbinfo memoryusage Table).

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 1223 | --- | DBDICT |

Description. Kills node.

Sample Output.

Time: Friday 16 February 2007 - 11:25:17 Status: Temporary error, restart node Message: Internal program error (failed ndbrequire) (Internal error, programming error or missing error message, please report a bug) Error: 2341 Error data: dbtc/DbtcMain.cpp Error object: DBTC (Line: 464) 0x00000008 Program: ./libexec/ndbd Pid: 742 Trace: /usr/local/mysql-5.1/cluster/ndb_2_trace.log.10 Version: Version 5.1.16 (beta)

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 1224 | --- | DBDICT |

Description. Kills node.

Sample Output.

Time: Friday 16 February 2007 - 11:26:36 Status: Temporary error, restart node Message: Internal program error (failed ndbrequire) (Internal error, programming error or missing error message, please report a bug) Error: 2341 Error data: dbdih/DbdihMain.cpp Error object: DBDIH (Line: 14433) 0x00000008 Program: ./libexec/ndbd Pid: 975 Trace: /usr/local/mysql-5.1/cluster/ndb_2_trace.log.11 Version: Version 5.1.16 (beta)

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 1225 | --- | DBDICT |

Description. Kills node.

Sample Output.

Node 2: Forced node shutdown completed. Initiated by signal 6. Caused by error 2301: 'Assertion(Internal error, programming error or missing error message, please report a bug). Temporary error, restart node'. - Unknown error code: Unknown result: Unknown error code

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 1226 | --- | DBDICT |

Description. Prints pool objects.

Sample Output.

Node 2: c_obj_pool: 1332 1321 Node 2: c_opRecordPool: 256 256 Node 2: c_rope_pool: 4204 4078

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 1332 | LqhDumpAllDefinedTabs | DBACC |

Description.

Prints the states of all tables known by the local query

handler (LQH).

Sample Output.

Node 2: Table 0 Status: 0 Usage: 0 Node 2: frag: 0 distKey: 0 Node 2: frag: 1 distKey: 0 Node 2: Table 1 Status: 0 Usage: 0 Node 2: frag: 0 distKey: 0 Node 2: frag: 1 distKey: 0 Node 2: Table 2 Status: 0 Usage: 0 Node 2: frag: 0 distKey: 0 Node 2: frag: 1 distKey: 0 Node 2: Table 3 Status: 0 Usage: 0 Node 2: frag: 0 distKey: 0 Node 2: frag: 1 distKey: 0 Node 2: Table 4 Status: 0 Usage: 0 Node 2: frag: 0 distKey: 0 Node 2: frag: 1 distKey: 0 Node 2: Table 9 Status: 0 Usage: 0 Node 2: frag: 0 distKey: 0 Node 2: frag: 1 distKey: 0 Node 2: Table 10 Status: 0 Usage: 0 Node 2: frag: 0 distKey: 0 Node 2: frag: 1 distKey: 0 Node 2: Table 11 Status: 0 Usage: 0 Node 2: frag: 0 distKey: 0 Node 2: frag: 1 distKey: 0 Node 2: Table 12 Status: 0 Usage: 0 Node 2: frag: 0 distKey: 0 Node 2: frag: 1 distKey: 0

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 1333 | LqhDumpNoLogPages | DBACC |

Description. Reports redo log buffer usage.

Sample Output.

Node 2: LQH: Log pages : 256 Free: 244

Additional Information. The redo log buffer is measured in 32KB pages, so the sample output can be interpreted as follows:

Redo log buffer total. 8,338KB = ~8.2MB

Redo log buffer free. 7,808KB = ~7.6MB

Redo log buffer used. 384KB = ~0.4MB

- 6.2.3.1.

DUMP 2300 - 6.2.3.2.

DUMP 2301 - 6.2.3.3.

DUMP 2302 - 6.2.3.4.

DUMP 2303 - 6.2.3.5.

DUMP 2304 - 6.2.3.6.

DUMP 2305 - 6.2.3.7.

DUMP 2308 - 6.2.3.8.

DUMP 2315 - 6.2.3.9.

DUMP 2350 - 6.2.3.10.

DUMP 2352 - 6.2.3.11.

DUMP 2400 - 6.2.3.12.

DUMP 2401 - 6.2.3.13.

DUMP 2402 - 6.2.3.14.

DUMP 2403 - 6.2.3.15.

DUMP 2404 - 6.2.3.16.

DUMP 2405 - 6.2.3.17.

DUMP 2406 - 6.2.3.18.

DUMP 2500 - 6.2.3.19.

DUMP 2501 - 6.2.3.20.

DUMP 2502 - 6.2.3.21.

DUMP 2503 - 6.2.3.22.

DUMP 2504 - 6.2.3.23.

DUMP 2505 - 6.2.3.24.

DUMP 2506 - 6.2.3.25.

DUMP 2507 - 6.2.3.26.

DUMP 2508 - 6.2.3.27.

DUMP 2509 - 6.2.3.28.

DUMP 2510 - 6.2.3.29.

DUMP 2511 - 6.2.3.30.

DUMP 2512 - 6.2.3.31.

DUMP 2513 - 6.2.3.32.

DUMP 2514 - 6.2.3.33.

DUMP 2515 - 6.2.3.34.

DUMP 2550 - 6.2.3.35.

DUMP 2600 - 6.2.3.36.

DUMP 2601 - 6.2.3.37.

DUMP 2602 - 6.2.3.38.

DUMP 2603 - 6.2.3.39.

DUMP 2604

This section contains information about DUMP

codes 2000 through 2999, inclusive.

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 2300 | LqhDumpOneScanRec | DBACC |

Description. [Unknown]

Sample Output. [Not available]

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 2301 | LqhDumpAllScanRec | DBACC |

Description. Kills the node.

Sample Output.

Time: Friday 16 February 2007 - 12:35:36 Status: Temporary error, restart node Message: Assertion (Internal error, programming error or missing error message, please report a bug) Error: 2301 Error data: ArrayPool<T>::getPtr Error object: ../../../../../storage/ndb/src/kernel/vm/ArrayPool.hpp line: 345 (block: DBLQH) Program: ./ndbd Pid: 10463 Trace: /usr/local/mysql-5.1/cluster/ndb_2_trace.log.22 Version: Version 5.1.16 (beta)

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 2302 | LqhDumpAllActiveScanRec | DBACC |

Description. [Unknown]

Sample Output.

Time: Friday 16 February 2007 - 12:51:14 Status: Temporary error, restart node Message: Assertion (Internal error, programming error or missing error message, please report a bug) Error: 2301 Error data: ArrayPool<T>::getPtr Error object: ../../../../../storage/ndb/src/kernel/vm/ArrayPool.hpp line: 349 (block: DBLQH) Program: ./ndbd Pid: 10539 Trace: /usr/local/mysql-5.1/cluster/ndb_2_trace.log.23 Version: Version 5.1.16 (beta)

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 2303 | LqhDumpLcpState | DBACC |

Description. [Unknown]

Sample Output.

Node 2: == LQH LCP STATE == Node 2: clcpCompletedState=0, c_lcpId=3, cnoOfFragsCheckpointed=0 Node 2: lcpState=0 lastFragmentFlag=0 Node 2: currentFragment.fragPtrI=9 Node 2: currentFragment.lcpFragOrd.tableId=4 Node 2: lcpQueued=0 reportEmpty=0 Node 2: m_EMPTY_LCP_REQ=-1077761081

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 2304 | --- | DBLQH |

Description.

This command causes all fragment log files and their states

to be written to the data node's out file (in the case of

the data node having the node ID 1, this

would be ndb_1_out.log). The number of

these files is controlled by the

NoFragmentLogFiles configuration

parameter, whose default value is 16 in MySQL 5.1 and later

releases.

Sample Output.

The following is taken from

ndb_1_out.log for a cluster with 2 data

nodes:

LP 2 state: 0 WW_Gci: 1 gcprec: -256 flq: -256 currfile: 32 tailFileNo: 0 logTailMbyte: 1 file 0(32) FileChangeState: 0 logFileStatus: 20 currentMbyte: 1 currentFilepage 55 file 1(33) FileChangeState: 0 logFileStatus: 20 currentMbyte: 0 currentFilepage 0 file 2(34) FileChangeState: 0 logFileStatus: 1 currentMbyte: 0 currentFilepage 0 file 3(35) FileChangeState: 0 logFileStatus: 1 currentMbyte: 0 currentFilepage 0 file 4(36) FileChangeState: 0 logFileStatus: 1 currentMbyte: 0 currentFilepage 0 file 5(37) FileChangeState: 0 logFileStatus: 1 currentMbyte: 0 currentFilepage 0 file 6(38) FileChangeState: 0 logFileStatus: 1 currentMbyte: 0 currentFilepage 0 file 7(39) FileChangeState: 0 logFileStatus: 1 currentMbyte: 0 currentFilepage 0 file 8(40) FileChangeState: 0 logFileStatus: 1 currentMbyte: 0 currentFilepage 0 file 9(41) FileChangeState: 0 logFileStatus: 1 currentMbyte: 0 currentFilepage 0 file 10(42) FileChangeState: 0 logFileStatus: 1 currentMbyte: 0 currentFilepage 0 file 11(43) FileChangeState: 0 logFileStatus: 1 currentMbyte: 0 currentFilepage 0 file 12(44) FileChangeState: 0 logFileStatus: 1 currentMbyte: 0 currentFilepage 0 file 13(45) FileChangeState: 0 logFileStatus: 1 currentMbyte: 0 currentFilepage 0 file 14(46) FileChangeState: 0 logFileStatus: 1 currentMbyte: 0 currentFilepage 0 file 15(47) FileChangeState: 0 logFileStatus: 1 currentMbyte: 0 currentFilepage 0 LP 3 state: 0 WW_Gci: 1 gcprec: -256 flq: -256 currfile: 48 tailFileNo: 0 logTailMbyte: 1 file 0(48) FileChangeState: 0 logFileStatus: 20 currentMbyte: 1 currentFilepage 55 file 1(49) FileChangeState: 0 logFileStatus: 20 currentMbyte: 0 currentFilepage 0 file 2(50) FileChangeState: 0 logFileStatus: 1 currentMbyte: 0 currentFilepage 0 file 3(51) FileChangeState: 0 logFileStatus: 1 currentMbyte: 0 currentFilepage 0 file 4(52) FileChangeState: 0 logFileStatus: 1 currentMbyte: 0 currentFilepage 0 file 5(53) FileChangeState: 0 logFileStatus: 1 currentMbyte: 0 currentFilepage 0 file 6(54) FileChangeState: 0 logFileStatus: 1 currentMbyte: 0 currentFilepage 0 file 7(55) FileChangeState: 0 logFileStatus: 1 currentMbyte: 0 currentFilepage 0 file 8(56) FileChangeState: 0 logFileStatus: 1 currentMbyte: 0 currentFilepage 0 file 9(57) FileChangeState: 0 logFileStatus: 1 currentMbyte: 0 currentFilepage 0 file 10(58) FileChangeState: 0 logFileStatus: 1 currentMbyte: 0 currentFilepage 0 file 11(59) FileChangeState: 0 logFileStatus: 1 currentMbyte: 0 currentFilepage 0 file 12(60) FileChangeState: 0 logFileStatus: 1 currentMbyte: 0 currentFilepage 0 file 13(61) FileChangeState: 0 logFileStatus: 1 currentMbyte: 0 currentFilepage 0 file 14(62) FileChangeState: 0 logFileStatus: 1 currentMbyte: 0 currentFilepage 0 file 15(63) FileChangeState: 0 logFileStatus: 1 currentMbyte: 0 currentFilepage 0

Additional Information.

See also Section 6.2.3.6, “DUMP 2305”.

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 2305 | --- | DBLQH |

Description.

Show the states of all fragment log files (see

Section 6.2.3.5, “DUMP 2304”), then

kills the node.

Sample Output.

Time: Friday 16 February 2007 - 13:11:57 Status: Temporary error, restart node Message: System error, node killed during node restart by other node (Internal error, programming error or missing error message, please report a bug) Error: 2303 Error data: Please report this as a bug. Provide as much info as possible, expecially all the ndb_*_out.log files, Thanks. Shutting down node due to failed handling of GCP_SAVEREQ Error object: DBLQH (Line: 18619) 0x0000000a Program: ./libexec/ndbd Pid: 111 Time: Friday 16 February 2007 - 13:11:57 Status: Temporary error, restart node Message: Error OS signal received (Internal error, programming error or missing error message, please report a bug) Error: 6000 Error data: Signal 6 received; Aborted Error object: main.cpp Program: ./libexec/ndbd Pid: 11138 Trace: /usr/local/mysql-5.1/cluster/ndb_2_trace.log.2 Version: Version 5.1.16 (beta)

Additional Information. No error message is written to the cluster log when the node is killed. Node failure is made evident only by subsequent heartbeat failure messages.

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 2308 | --- | DBLQH |

Description. Kills the node.

Sample Output.

Time: Friday 16 February 2007 - 13:22:06 Status: Temporary error, restart node Message: Internal program error (failed ndbrequire) (Internal error, programming error or missing error message, please report a bug) Error: 2341 Error data: dblqh/DblqhMain.cpp Error object: DBLQH (Line: 18805) 0x0000000a Program: ./libexec/ndbd Pid: 11640 Trace: /usr/local/mysql-5.1/cluster/ndb_2_trace.log.1 Version: Version 5.1.16 (beta)

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 2315 | LqhErrorInsert5042 | DBLQH |

Description. [Unknown]

Sample Output. [N/A]

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

data_node_id 2350

operation_filter+ | --- | --- |

Description. Dumps all operations on a given data node or data nodes, according to the type and other parameters defined by the operation filter or filters specified.

Sample Output. Dump all operations on data node 2, from API node 5:

ndb_mgm> 2 DUMP 2350 1 5

2006-10-09 13:16:49 [MgmSrvr] INFO -- Node 2: Starting dump of operations

2006-10-09 13:16:49 [MgmSrvr] INFO -- Node 2: OP[470]:

Tab: 4 frag: 0 TC: 3 API: 5(0x8035)transid: 0x31c 0x3500500 op: SCAN state: InQueue

2006-10-09 13:16:49 [MgmSrvr] INFO -- Node 2: End of operation dump

Additional information. Information about operation filter and operation state values follows.

Operation filter values. The operation filter (or filters) can take on the following values:

| Value | Filter |

|---|---|

| 0 | table ID |

| 1 | API node ID |

| 2 | 2 transaction IDs, defining a range of transactions |

| 3 | transaction coordinator node ID |

In each case, the ID of the object specified follows the specifier. See the sample output for examples.

Operation states. The “normal” states that may appear in the output from this command are listed here:

Transactions:

Prepared: The transaction coordinator is idle, waiting for the API to proceedRunning: The transaction coordinator is currently preparing operationsCommitting,Prepare to commit,Commit sent: The transaction coordinator is committingCompleting: The transaction coordinator is completing the commit (after commit, some cleanup is needed)Aborting: The transaction coordinator is aborting the transactionScanning: The transaction coordinator is scanning

Scan operations:

WaitNextScan: The scan is idle, waiting for APIInQueue: The scan has not yet started, but rather is waiting in queue for other scans to complete

Primary key operations:

In lock queue: The operation is waiting on a lockRunning: The operation is being preparedPrepared: The operation is prepared, holding an appropriate lock, and waiting for commit or rollback to complete

Relation to NDB API.

It is possible to match the output of DUMP

2350 to specific threads or Ndb

objects. First suppose that you dump all operations on data

node 2 from API node 5, using table 4 only, like this:

ndb_mgm> 2 DUMP 2350 1 5 0 4

2006-10-09 13:16:49 [MgmSrvr] INFO -- Node 2: Starting dump of operations

2006-10-09 13:16:49 [MgmSrvr] INFO -- Node 2: OP[470]:

Tab: 4 frag: 0 TC: 3 API: 5(0x8035)transid: 0x31c 0x3500500 op: SCAN state: InQueue

2006-10-09 13:16:49 [MgmSrvr] INFO -- Node 2: End of operation dump

Suppose you are working with an Ndb

instance named MyNdb, to which this

operation belongs. You can see that this is the case by

calling the Ndb object's

getReference() method, like this:

printf("MyNdb.getReference(): 0x%x\n", MyNdb.getReference());

The output from the preceding line of code is:

MyNdb.getReference(): 0x80350005

The high 16 bits of the value shown corresponds to the number

in parentheses from the OP line in the

DUMP command's output (8035). For more

about this method, see Section 2.3.8.1.17, “Ndb::getReference()”.

This command was added in MySQL Cluster NDB 6.1.12 and MySQL Cluster NDB 6.2.2.

| Code | Symbol | Kernel Block(s) |

|---|---|---|

node_id 2352

operation_id | --- | --- |

Description. Gets information about an operation with a given operation ID.

Sample Output.

First, obtain a dump of operations. Here, we use

DUMP 2350 to get a dump of all operations

on data node 2 from API node 5:

ndb_mgm> 2 DUMP 2350 1 5

2006-10-11 13:31:25 [MgmSrvr] INFO -- Node 2: Starting dump of operations

2006-10-11 13:31:25 [MgmSrvr] INFO -- Node 2: OP[3]:

Tab: 3 frag: 1 TC: 2 API: 5(0x8035)transid: 0x3 0x200400 op: INSERT state: Prepared

2006-10-11 13:31:25 [MgmSrvr] INFO -- Node 2: End of operation dump

In this case, there is a single operation reported on node 2,

whose operation ID is 3. To obtain the

transaction ID and primary key, we use the node ID and

operation ID with DUMP 2352 as shown here:

ndb_mgm> 2 dump 2352 3

2006-10-11 13:31:31 [MgmSrvr] INFO -- Node 2: OP[3]: transid: 0x3 0x200400 key: 0x2

Additional Information.

Use DUMP 2350 to obtain an operation ID.

See Section 6.2.3.9, “DUMP 2350”, and

the previous example.

This command was added in MySQL Cluster NDB 6.1.12 and MySQL Cluster NDB 6.2.2.

| Code | Symbol | Kernel Block(s) |

|---|---|---|

2400 record_id | AccDumpOneScanRec | DBACC |

Description.

Dumps the scan record having record ID

record_id.

Sample Output.

For 2 DUMP 1:

Node 2: Dbacc::ScanRec[1]: state=1, transid(0x0, 0x0) Node 2: timer=0, continueBCount=0, activeLocalFrag=0, nextBucketIndex=0 Node 2: scanNextfreerec=2 firstActOp=0 firstLockedOp=0, scanLastLockedOp=0 firstQOp=0 lastQOp=0 Node 2: scanUserP=0, startNoBuck=0, minBucketIndexToRescan=0, maxBucketIndexToRescan=0 Node 2: scanBucketState=0, scanLockHeld=0, userBlockRef=0, scanMask=0 scanLockMode=0

Additional Information.

For dumping all scan records, see

Section 6.2.3.12, “DUMP 2401”.

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 2401 | AccDumpAllScanRec | DBACC |

Description. Dumps all scan records for the node specified.

Sample Output.

Node 2: ACC: Dump all ScanRec - size: 513 Node 2: Dbacc::ScanRec[1]: state=1, transid(0x0, 0x0) Node 2: timer=0, continueBCount=0, activeLocalFrag=0, nextBucketIndex=0 Node 2: scanNextfreerec=2 firstActOp=0 firstLockedOp=0, scanLastLockedOp=0 firstQOp=0 lastQOp=0 Node 2: scanUserP=0, startNoBuck=0, minBucketIndexToRescan=0, maxBucketIndexToRescan=0 Node 2: scanBucketState=0, scanLockHeld=0, userBlockRef=0, scanMask=0 scanLockMode=0 Node 2: Dbacc::ScanRec[2]: state=1, transid(0x0, 0x0) Node 2: timer=0, continueBCount=0, activeLocalFrag=0, nextBucketIndex=0 Node 2: scanNextfreerec=3 firstActOp=0 firstLockedOp=0, scanLastLockedOp=0 firstQOp=0 lastQOp=0 Node 2: scanUserP=0, startNoBuck=0, minBucketIndexToRescan=0, maxBucketIndexToRescan=0 Node 2: scanBucketState=0, scanLockHeld=0, userBlockRef=0, scanMask=0 scanLockMode=0 Node 2: Dbacc::ScanRec[3]: state=1, transid(0x0, 0x0) Node 2: timer=0, continueBCount=0, activeLocalFrag=0, nextBucketIndex=0 Node 2: scanNextfreerec=4 firstActOp=0 firstLockedOp=0, scanLastLockedOp=0 firstQOp=0 lastQOp=0 Node 2: scanUserP=0, startNoBuck=0, minBucketIndexToRescan=0, maxBucketIndexToRescan=0 Node 2: scanBucketState=0, scanLockHeld=0, userBlockRef=0, scanMask=0 scanLockMode=0 ⋮ Node 2: Dbacc::ScanRec[512]: state=1, transid(0x0, 0x0) Node 2: timer=0, continueBCount=0, activeLocalFrag=0, nextBucketIndex=0 Node 2: scanNextfreerec=-256 firstActOp=0 firstLockedOp=0, scanLastLockedOp=0 firstQOp=0 lastQOp=0 Node 2: scanUserP=0, startNoBuck=0, minBucketIndexToRescan=0, maxBucketIndexToRescan=0 Node 2: scanBucketState=0, scanLockHeld=0, userBlockRef=0, scanMask=0 scanLockMode=0

Additional Information.

If you want to dump a single scan record, given its record

ID, see Section 6.2.3.11, “DUMP 2400”;

for dumping all active scan records, see

Section 6.2.3.13, “DUMP 2402”.

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 2402 | AccDumpAllActiveScanRec | DBACC |

Description. Dumps all active scan records.

Sample Output.

Node 2: ACC: Dump active ScanRec - size: 513

Additional Information.

To dump all scan records (active or not), see

Section 6.2.3.12, “DUMP 2401”.

| Code | Symbol | Kernel Block(s) |

|---|---|---|

2403 record_id | AccDumpOneOperationRec | DBACC |

Description. [Unknown]

Sample Output.

(For 2 DUMP 1:)

Node 2: Dbacc::operationrec[1]: transid(0x0, 0x7f1) Node 2: elementIsforward=1, elementPage=0, elementPointer=724 Node 2: fid=0, fragptr=0, hashvaluePart=63926 Node 2: hashValue=-2005083304 Node 2: nextLockOwnerOp=-256, nextOp=-256, nextParallelQue=-256 Node 2: nextSerialQue=-256, prevOp=0 Node 2: prevLockOwnerOp=24, prevParallelQue=-256 Node 2: prevSerialQue=-256, scanRecPtr=-256 Node 2: m_op_bits=0xffffffff, scanBits=0

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 2404 | AccDumpNumOpRecs | DBACC |

Description. Number the number of operation records (total number, and number free).

Sample Output.

Node 2: Dbacc::OperationRecords: num=69012, free=32918

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 2405 | AccDumpFreeOpRecs | |

Description. Unknown: No output results if this command is called without additional arguments; if an extra argument is used, this command crashes the data node.

Sample Output.

(For 2 DUMP 2405 1:)

Time: Saturday 17 February 2007 - 18:33:54 Status: Temporary error, restart node Message: Job buffer congestion (Internal error, programming error or missing error message, please report a bug) Error: 2334 Error data: Job Buffer Full Error object: APZJobBuffer.C Program: ./libexec/ndbd Pid: 27670 Trace: /usr/local/mysql-5.1/cluster/ndb_2_trace.log.1 Version: Version 5.1.16 (beta)

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 2406 | AccDumpNotFreeOpRecs | DBACC |

Description. Unknown: No output results if this command is called without additional arguments; if an extra argument is used, this command crashes the data node.

Sample Output.

(For 2 DUMP 2406 1:)

Time: Saturday 17 February 2007 - 18:39:16 Status: Temporary error, restart node Message: Job buffer congestion (Internal error, programming error or missing error message, please report a bug) Error: 2334 Error data: Job Buffer Full Error object: APZJobBuffer.C Program: ./libexec/ndbd Pid: 27956 Trace: /usr/local/mysql-5.1/cluster/ndb_2_trace.log.1 Version: Version 5.1.16 (beta)

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 2500 | TcDumpAllScanFragRec | DBTC |

Description. Kills the data node.

Sample Output.

Time: Friday 16 February 2007 - 13:37:11 Status: Temporary error, restart node Message: Assertion (Internal error, programming error or missing error message, please report a bug) Error: 2301 Error data: ArrayPool<T>::getPtr Error object: ../../../../../storage/ndb/src/kernel/vm/ArrayPool.hpp line: 345 (block: CMVMI) Program: ./libexec/ndbd Pid: 13237 Trace: /usr/local/mysql-5.1/cluster/ndb_2_trace.log.1 Version: Version 5.1.16 (beta)

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 2501 | TcDumpOneScanFragRec | DBTC |

Description. No output if called without any additional arguments. With additional arguments, it kills the data node.

Sample Output.

(For 2 DUMP 2501 1:)

Time: Saturday 17 February 2007 - 18:41:41 Status: Temporary error, restart node Message: Assertion (Internal error, programming error or missing error message, please report a bug) Error: 2301 Error data: ArrayPool<T>::getPtr Error object: ../../../../../storage/ndb/src/kernel/vm/ArrayPool.hpp line: 345 (block: DBTC) Program: ./libexec/ndbd Pid: 28239 Trace: /usr/local/mysql-5.1/cluster/ndb_2_trace.log.1 Version: Version 5.1.16 (beta)

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 2502 | TcDumpAllScanRec | DBTC |

Description. Dumps all scan records.

Sample Output.

Node 2: TC: Dump all ScanRecord - size: 256 Node 2: Dbtc::ScanRecord[1]: state=0nextfrag=0, nofrag=0 Node 2: ailen=0, para=0, receivedop=0, noOprePperFrag=0 Node 2: schv=0, tab=0, sproc=0 Node 2: apiRec=-256, next=2 Node 2: Dbtc::ScanRecord[2]: state=0nextfrag=0, nofrag=0 Node 2: ailen=0, para=0, receivedop=0, noOprePperFrag=0 Node 2: schv=0, tab=0, sproc=0 Node 2: apiRec=-256, next=3 Node 2: Dbtc::ScanRecord[3]: state=0nextfrag=0, nofrag=0 Node 2: ailen=0, para=0, receivedop=0, noOprePperFrag=0 Node 2: schv=0, tab=0, sproc=0 Node 2: apiRec=-256, next=4 ⋮ Node 2: Dbtc::ScanRecord[254]: state=0nextfrag=0, nofrag=0 Node 2: ailen=0, para=0, receivedop=0, noOprePperFrag=0 Node 2: schv=0, tab=0, sproc=0 Node 2: apiRec=-256, next=255 Node 2: Dbtc::ScanRecord[255]: state=0nextfrag=0, nofrag=0 Node 2: ailen=0, para=0, receivedop=0, noOprePperFrag=0 Node 2: schv=0, tab=0, sproc=0 Node 2: apiRec=-256, next=-256 Node 2: Dbtc::ScanRecord[255]: state=0nextfrag=0, nofrag=0 Node 2: ailen=0, para=0, receivedop=0, noOprePperFrag=0 Node 2: schv=0, tab=0, sproc=0 Node 2: apiRec=-256, next=-256

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 2503 | TcDumpAllActiveScanRec | DBTC |

Description. Dumps all active scan records.

Sample Output.

Node 2: TC: Dump active ScanRecord - size: 256

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

2504 record_id | TcDumpOneScanRec | DBTC |

Description.

Dumps a single scan record having the record ID

record_id. (For dumping all scan

records, see

Section 6.2.3.20, “DUMP 2502”.)

Sample Output.

(For 2 DUMP 2504 1:)

Node 2: Dbtc::ScanRecord[1]: state=0nextfrag=0, nofrag=0 Node 2: ailen=0, para=0, receivedop=0, noOprePperFrag=0 Node 2: schv=0, tab=0, sproc=0 Node 2: apiRec=-256, next=2

Additional Information. The attributes in the output of this command are described as follows:

ScanRecord. The scan record slot number (same asrecord_id)state. One of the following values (found in asScanStateinDbtc.hpp):Value State 0 IDLE1 WAIT_SCAN_TAB_INFO2 WAIT_AI3 WAIT_FRAGMENT_COUNT4 RUNNING5 CLOSING_SCANnextfrag: ID of the next fragment to be scanned. Used by a scan fragment process when it is ready for the next fragment.nofrag: Total number of fragments in the table being scanned.ailen: Length of the expected attribute information.para: Number of scan frag processes that belonging to this scan.receivedop: Number of operations received.noOprePperFrag: Maximum number of bytes per batch.schv: Schema version used by this scan.tab: The index or table that is scanned.sproc: Index of stored procedure belonging to this scan.apiRec: Reference toApiConnectRecordnext: Index of nextScanRecordin free list

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 2505 | TcDumpOneApiConnectRec | DBTC |

Description. [Unknown]

Sample Output.

...

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 2506 | TcDumpAllApiConnectRec | DBTC |

Description. [Unknown]

Sample Output.

Node 2: TC: Dump all ApiConnectRecord - size: 12288 Node 2: Dbtc::ApiConnectRecord[1]: state=0, abortState=0, apiFailState=0 Node 2: transid(0x0, 0x0), apiBref=0x1000002, scanRec=-256 Node 2: ctcTimer=36057, apiTimer=0, counter=0, retcode=0, retsig=0 Node 2: lqhkeyconfrec=0, lqhkeyreqrec=0, tckeyrec=0 Node 2: next=-256 Node 2: Dbtc::ApiConnectRecord[2]: state=0, abortState=0, apiFailState=0 Node 2: transid(0x0, 0x0), apiBref=0x1000002, scanRec=-256 Node 2: ctcTimer=36057, apiTimer=0, counter=0, retcode=0, retsig=0 Node 2: lqhkeyconfrec=0, lqhkeyreqrec=0, tckeyrec=0 Node 2: next=-256 Node 2: Dbtc::ApiConnectRecord[3]: state=0, abortState=0, apiFailState=0 Node 2: transid(0x0, 0x0), apiBref=0x1000002, scanRec=-256 Node 2: ctcTimer=36057, apiTimer=0, counter=0, retcode=0, retsig=0 Node 2: lqhkeyconfrec=0, lqhkeyreqrec=0, tckeyrec=0 Node 2: next=-256 ⋮ Node 2: Dbtc::ApiConnectRecord[12287]: state=7, abortState=0, apiFailState=0 Node 2: transid(0x0, 0x0), apiBref=0xffffffff, scanRec=-256 Node 2: ctcTimer=36308, apiTimer=0, counter=0, retcode=0, retsig=0 Node 2: lqhkeyconfrec=0, lqhkeyreqrec=0, tckeyrec=0 Node 2: next=-256 Node 2: Dbtc::ApiConnectRecord[12287]: state=7, abortState=0, apiFailState=0 Node 2: transid(0x0, 0x0), apiBref=0xffffffff, scanRec=-256 Node 2: ctcTimer=36308, apiTimer=0, counter=0, retcode=0, retsig=0 Node 2: lqhkeyconfrec=0, lqhkeyreqrec=0, tckeyrec=0 Node 2: next=-256

Additional Information. If the default settings are used, the output from this command is likely to exceed the maximum log file size.

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 2507 | TcSetTransactionTimeout | DBTC |

Description. Apparently requires an extra argument, but is not currently known with certainty.

Sample Output.

...

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 2508 | TcSetApplTransactionTimeout | DBTC |

Description. Apparently requires an extra argument, but is not currently known with certainty.

Sample Output.

...

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 2509 | StartTcTimer | DBTC |

Description. [Unknown]

Sample Output.

...

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 2510 | StopTcTimer | DBTC |

Description. [Unknown]

Sample Output.

...

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 2511 | StartPeriodicTcTimer | DBTC |

Description. [Unknown]

Sample Output.

...

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

2512 [delay] | TcStartDumpIndexOpCount | DBTC |

Description.

Dumps the value of

MaxNoOfConcurrentOperations, and the

current resource usage, in a continuous loop. The

delay time between reports can

optionally be specified (in seconds), with the default being

1 and the maximum value being 25 (values greater than 25 are

silently coerced to 25).

Sample Output. (Single report:)

Node 2: IndexOpCount: pool: 8192 free: 8192

Additional Information.

There appears to be no way to disable the repeated checking

of MaxNoOfConcurrentOperations once

started by this command, except by restarting the data node.

It may be preferable for this reason to use DUMP

2513 instead (see

Section 6.2.3.31, “DUMP 2513”).

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 2513 | TcDumpIndexOpCount | |

Description.

Dumps the value of

MaxNoOfConcurrentOperations, and the

current resource usage.

Sample Output.

Node 2: IndexOpCount: pool: 8192 free: 8192

Additional Information.

Unlike the continuous checking done by DUMP

2512 the check is performed only once (see

Section 6.2.3.30, “DUMP 2512”).

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 2514 | --- | DBTC |

Description. [Unknown]

Sample Output.

Node 2: IndexOpCount: pool: 8192 free: 8192 - Repeated 3 times Node 2: TC: m_commitAckMarkerPool: 12288 free size: 12288 Node 2: LQH: m_commitAckMarkerPool: 36094 free size: 36094 Node 3: TC: m_commitAckMarkerPool: 12288 free size: 12288 Node 3: LQH: m_commitAckMarkerPool: 36094 free size: 36094 Node 2: IndexOpCount: pool: 8192 free: 8192

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 2515 | --- | DBTC |

Description. Appears to kill all data nodes in the cluster. Purpose unknown.

Sample Output. From the node for which the command is issued:

Time: Friday 16 February 2007 - 13:52:32 Status: Temporary error, restart node Message: Assertion (Internal error, programming error or missing error message, please report a bug) Error: 2301 Error data: Illegal signal received (GSN 395 not added) Error object: Illegal signal received (GSN 395 not added) Program: ./libexec/ndbd Pid: 14256 Trace: /usr/local/mysql-5.1/cluster/ndb_2_trace.log.1 Version: Version 5.1.16 (beta)

From the remaining data nodes:

Time: Friday 16 February 2007 - 13:52:31 Status: Temporary error, restart node Message: System error, node killed during node restart by other node (Internal error, programming error or missing error message, please report a bug) Error: 2303 Error data: System error 0, this node was killed by node 2515 Error object: NDBCNTR (Line: 234) 0x0000000a Program: ./libexec/ndbd Pid: 14261 Trace: /usr/local/mysql-5.1/cluster/ndb_3_trace.log.1 Version: Version 5.1.16 (beta)

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

data_node_id 2550

transaction_filter+ | --- | --- |

Description.

Dumps all transaction from data node

data_node_id meeting the

conditions established by the transaction filter or filters

specified.

Sample Output. Dump all transactions on node 2 which have been inactive for 30 seconds or longer:

ndb_mgm> 2 DUMP 2550 4 30

2006-10-09 13:16:49 [MgmSrvr] INFO -- Node 2: Starting dump of transactions

2006-10-09 13:16:49 [MgmSrvr] INFO -- Node 2: TRX[123]: API: 5(0x8035) transid: 0x31c 0x3500500 inactive: 42s state:

2006-10-09 13:16:49 [MgmSrvr] INFO -- Node 2: End of transaction dump

Additional Information. The following values may be used for transaction filters. The filter value must be followed by one or more node IDs or, in the case of the last entry in the table, by the time in seconds that transactions have been inactive:

| Value | Filter |

|---|---|

| 1 | API node ID |

| 2 | 2 transaction IDs, defining a range of transactions |

| 4 | time transactions inactive (seconds) |

This command was added in MySQL Cluster NDB 6.1.12 and MySQL Cluster NDB 6.2.2.

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 260 | CmvmiDumpConnections | CMVMI |

Description. Shows status of connections between all cluster nodes. When the cluster is operating normally, every connection has the same status.

Sample Output.

Node 3: Connection to 1 (MGM) is connected Node 3: Connection to 2 (MGM) is trying to connect Node 3: Connection to 3 (DB) does nothing Node 3: Connection to 4 (DB) is connected Node 3: Connection to 7 (API) is connected Node 3: Connection to 8 (API) is connected Node 3: Connection to 9 (API) is trying to connect Node 3: Connection to 10 (API) is trying to connect Node 3: Connection to 11 (API) is trying to connect Node 4: Connection to 1 (MGM) is connected Node 4: Connection to 2 (MGM) is trying to connect Node 4: Connection to 3 (DB) is connected Node 4: Connection to 4 (DB) does nothing Node 4: Connection to 7 (API) is connected Node 4: Connection to 8 (API) is connected Node 4: Connection to 9 (API) is trying to connect Node 4: Connection to 10 (API) is trying to connect Node 4: Connection to 11 (API) is trying to connect

Additional Information.

The message is trying to connect actually

means that the node in question was not started. This can

also be seen when there are unused [api]

or [mysql] sections in the

config.ini file nodes

configured—in other words when there are spare slots

for API or SQL nodes.

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 2601 | CmvmiDumpLongSignalMemory | CMVMI |

Description. [Unknown]

Sample Output.

Node 2: Cmvmi: g_sectionSegmentPool size: 4096 free: 4096

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 2602 | CmvmiSetRestartOnErrorInsert | CMVMI |

Description. [Unknown]

Sample Output.

...

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 2603 | CmvmiTestLongSigWithDelay | CMVMI |

Description. [Unknown]

Sample Output.

...

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 2604 | CmvmiDumpSubscriptions | CMVMI |

Description. Dumps current event subscriptions.

This output appears in the

ndb_

file (local to each data node) and not in the management

server (global) cluster log file.

node_id_out.log

Sample Output.

2007-04-17 17:10:54 [ndbd] INFO -- List subscriptions: 2007-04-17 17:10:54 [ndbd] INFO -- Subscription: 0, nodeId: 1, ref: 0x80000001 2007-04-17 17:10:54 [ndbd] INFO -- Category 0 Level 7 2007-04-17 17:10:54 [ndbd] INFO -- Category 1 Level 7 2007-04-17 17:10:54 [ndbd] INFO -- Category 2 Level 7 2007-04-17 17:10:54 [ndbd] INFO -- Category 3 Level 7 2007-04-17 17:10:54 [ndbd] INFO -- Category 4 Level 7 2007-04-17 17:10:54 [ndbd] INFO -- Category 5 Level 8 2007-04-17 17:10:54 [ndbd] INFO -- Category 6 Level 7 2007-04-17 17:10:54 [ndbd] INFO -- Category 7 Level 7 2007-04-17 17:10:54 [ndbd] INFO -- Category 8 Level 15 2007-04-17 17:10:54 [ndbd] INFO -- Category 9 Level 7 2007-04-17 17:10:54 [ndbd] INFO -- Category 10 Level 7 2007-04-17 17:10:54 [ndbd] INFO -- Category 11 Level 15

Additional Information. The output lists all event subscriptions; for each subscription a header line and a list of categories with their current log levels is printed. The following information is included in the output:

Subscription: The event subscription's internal IDnodeID: Node ID of the subscribing noderef: A block reference, consisting of a block ID fromstorage/ndb/include/kernel/BlockNumbers.hshifted to the left by 4 hexadecimal digits (16 bits) followed by a 4-digit hexadecimal node number. Block id0x8000appears to be a placeholder; it is defined asMIN_API_BLOCK_NO, with the node number part being 1 as expectedCategory: The cluster log category, as listed in Event Reports Generated in MySQL Cluster (see also the filestorage/ndb/include/mgmapi/mgmapi_config_parameters.h).Level: The event level setting (the range being 0 to 15).

This section contains information about DUMP

codes 5000 through 5999, inclusive.

- 6.2.8.1.

DUMP 7000 - 6.2.8.2.

DUMP 7001 - 6.2.8.3.

DUMP 7002 - 6.2.8.4.

DUMP 7003 - 6.2.8.5.

DUMP 7004 - 6.2.8.6.

DUMP 7005 - 6.2.8.7.

DUMP 7006 - 6.2.8.8.

DUMP 7007 - 6.2.8.9.

DUMP 7008 - 6.2.8.10.

DUMP 7009 - 6.2.8.11.

DUMP 7010 - 6.2.8.12.

DUMP 7011 - 6.2.8.13.

DUMP 7012 - 6.2.8.14.

DUMP 7013 - 6.2.8.15.

DUMP 7014 - 6.2.8.16.

DUMP 7015 - 6.2.8.17.

DUMP 7016 - 6.2.8.18.

DUMP 7017 - 6.2.8.19.

DUMP 7018 - 6.2.8.20.

DUMP 7020 - 6.2.8.21.

DUMP 7080 - 6.2.8.22.

DUMP 7090 - 6.2.8.23.

DUMP 7098 - 6.2.8.24.

DUMP 7099 - 6.2.8.25.

DUMP 7901

This section contains information about DUMP

codes 7000 through 7999, inclusive.

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 7000 | --- | DBDIH |

Description. Prints information on GCP state

Sample Output.

Node 2: ctimer = 299072, cgcpParticipantState = 0, cgcpStatus = 0 Node 2: coldGcpStatus = 0, coldGcpId = 436, cmasterState = 1 Node 2: cmasterTakeOverNode = 65535, ctcCounter = 299072

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 7001 | --- | DBDIH |

Description. Prints information on the current LCP state.

Sample Output.

Node 2: c_lcpState.keepGci = 1 Node 2: c_lcpState.lcpStatus = 0, clcpStopGcp = 1 Node 2: cgcpStartCounter = 7, cimmediateLcpStart = 0

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 7002 | --- | DBDIH |

Description. [Unknown]

Sample Output.

Node 2: cnoOfActiveTables = 4, cgcpDelay = 2000 Node 2: cdictblockref = 16384002, cfailurenr = 1 Node 2: con_lineNodes = 2, reference() = 16121858, creceivedfrag = 0

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 7003 | --- | DBDIH |

Description. [Unknown]

Sample Output.

Node 2: cfirstAliveNode = 2, cgckptflag = 0 Node 2: clocallqhblockref = 16187394, clocaltcblockref = 16056322, cgcpOrderBlocked = 0 Node 2: cstarttype = 0, csystemnodes = 2, currentgcp = 438

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 7004 | --- | DBDIH |

Description. [Unknown]

Sample Output.

Node 2: cmasterdihref = 16121858, cownNodeId = 2, cnewgcp = 438 Node 2: cndbStartReqBlockref = 16449538, cremainingfrags = 1268 Node 2: cntrlblockref = 16449538, cgcpSameCounter = 16, coldgcp = 437

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 7005 | --- | DBDIH |

Description. [Unknown]

Sample Output.

Node 2: crestartGci = 1

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 7006 | --- | DBDIH |

Description. [Unknown]

Sample Output.

Node 2: clcpDelay = 20, cgcpMasterTakeOverState = 0 Node 2: cmasterNodeId = 2 Node 2: cnoHotSpare = 0, c_nodeStartMaster.startNode = -256, c_nodeStartMaster.wait = 0

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 7007 | --- | DBDIH |

Description. [Unknown]

Sample Output.

Node 2: c_nodeStartMaster.failNr = 1 Node 2: c_nodeStartMaster.startInfoErrorCode = -202116109 Node 2: c_nodeStartMaster.blockLcp = 0, c_nodeStartMaster.blockGcp = 0

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 7008 | --- | DBDIH |

Description. [Unknown]

Sample Output.

Node 2: cfirstDeadNode = -256, cstartPhase = 7, cnoReplicas = 2 Node 2: cwaitLcpSr = 0

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 7009 | --- | DBDIH |

Description. [Unknown]

Sample Output.

Node 2: ccalcOldestRestorableGci = 1, cnoOfNodeGroups = 1 Node 2: cstartGcpNow = 0 Node 2: crestartGci = 1

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 7010 | --- | DBDIH |

Description. [Unknown]

Sample Output.

Node 2: cminHotSpareNodes = 0, c_lcpState.lcpStatusUpdatedPlace = 9843, cLcpStart = 1 Node 2: c_blockCommit = 0, c_blockCommitNo = 0

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 7011 | --- | DBDIH |

Description. [Unknown]

Sample Output.

Node 2: c_COPY_GCIREQ_Counter = [SignalCounter: m_count=0 0000000000000000] Node 2: c_COPY_TABREQ_Counter = [SignalCounter: m_count=0 0000000000000000] Node 2: c_CREATE_FRAGREQ_Counter = [SignalCounter: m_count=0 0000000000000000] Node 2: c_DIH_SWITCH_REPLICA_REQ_Counter = [SignalCounter: m_count=0 0000000000000000] Node 2: c_EMPTY_LCP_REQ_Counter = [SignalCounter: m_count=0 0000000000000000] Node 2: c_END_TOREQ_Counter = [SignalCounter: m_count=0 0000000000000000] Node 2: c_GCP_COMMIT_Counter = [SignalCounter: m_count=0 0000000000000000] Node 2: c_GCP_PREPARE_Counter = [SignalCounter: m_count=0 0000000000000000] Node 2: c_GCP_SAVEREQ_Counter = [SignalCounter: m_count=0 0000000000000000] Node 2: c_INCL_NODEREQ_Counter = [SignalCounter: m_count=0 0000000000000000] Node 2: c_MASTER_GCPREQ_Counter = [SignalCounter: m_count=0 0000000000000000] Node 2: c_MASTER_LCPREQ_Counter = [SignalCounter: m_count=0 0000000000000000] Node 2: c_START_INFOREQ_Counter = [SignalCounter: m_count=0 0000000000000000] Node 2: c_START_RECREQ_Counter = [SignalCounter: m_count=0 0000000000000000] Node 2: c_START_TOREQ_Counter = [SignalCounter: m_count=0 0000000000000000] Node 2: c_STOP_ME_REQ_Counter = [SignalCounter: m_count=0 0000000000000000] Node 2: c_TC_CLOPSIZEREQ_Counter = [SignalCounter: m_count=0 0000000000000000] Node 2: c_TCGETOPSIZEREQ_Counter = [SignalCounter: m_count=0 0000000000000000] Node 2: c_UPDATE_TOREQ_Counter = [SignalCounter: m_count=0 0000000000000000]

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 7012 | --- | DBDIH |

Description. [Unknown]

Sample Output.

Node 2: ParticipatingDIH = 0000000000000000 Node 2: ParticipatingLQH = 0000000000000000 Node 2: m_LCP_COMPLETE_REP_Counter_DIH = [SignalCounter: m_count=0 0000000000000000] Node 2: m_LCP_COMPLETE_REP_Counter_LQH = [SignalCounter: m_count=0 0000000000000000] Node 2: m_LAST_LCP_FRAG_ORD = [SignalCounter: m_count=0 0000000000000000] Node 2: m_LCP_COMPLETE_REP_From_Master_Received = 0

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 7013 | DihDumpLCPState | DBDIH |

Description. [Unknown]

Sample Output.

Node 2: lcpStatus = 0 (update place = 9843) Node 2: lcpStart = 1 lcpStopGcp = 1 keepGci = 1 oldestRestorable = 1 Node 2: immediateLcpStart = 0 masterLcpNodeId = 2

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 7014 | DihDumpLCPMasterTakeOver | DBDIH |

Description. [Unknown]

Sample Output.

Node 2: c_lcpMasterTakeOverState.state = 0 updatePlace = 11756 failedNodeId = -202116109 Node 2: c_lcpMasterTakeOverState.minTableId = 4092851187 minFragId = 4092851187

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 7015 | --- | DBDIH |

Description. [Unknown]

Sample Output.

Node 2: Table 1: TabCopyStatus: 0 TabUpdateStatus: 0 TabLcpStatus: 3 Node 2: Fragment 0: noLcpReplicas==0 0(on 2)=1(Idle) 1(on 3)=1(Idle) Node 2: Fragment 1: noLcpReplicas==0 0(on 3)=1(Idle) 1(on 2)=1(Idle) Node 2: Table 2: TabCopyStatus: 0 TabUpdateStatus: 0 TabLcpStatus: 3 Node 2: Fragment 0: noLcpReplicas==0 0(on 2)=0(Idle) 1(on 3)=0(Idle) Node 2: Fragment 1: noLcpReplicas==0 0(on 3)=0(Idle) 1(on 2)=0(Idle) Node 2: Table 3: TabCopyStatus: 0 TabUpdateStatus: 0 TabLcpStatus: 3 Node 2: Fragment 0: noLcpReplicas==0 0(on 2)=0(Idle) 1(on 3)=0(Idle) Node 2: Fragment 1: noLcpReplicas==0 0(on 3)=0(Idle) 1(on 2)=0(Idle) Node 2: Table 4: TabCopyStatus: 0 TabUpdateStatus: 0 TabLcpStatus: 3 Node 2: Fragment 0: noLcpReplicas==0 0(on 2)=0(Idle) 1(on 3)=0(Idle) Node 2: Fragment 1: noLcpReplicas==0 0(on 3)=0(Idle) 1(on 2)=0(Idle)

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 7016 | DihAllAllowNodeStart | DBDIH |

Description. [Unknown]

Sample Output.

...

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 7017 | DihMinTimeBetweenLCP | DBDIH |

Description. [Unknown]

Sample Output.

...

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 7018 | DihMaxTimeBetweenLCP | DBDIH |

Description. [Unknown]

Sample Output.

...

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 7020 | --- | DBDIH |

Description. This command provides general signal injection functionality. Two additional arguments are always required:

The number of the signal to be sent

The number of the block to which the signal should be sent

In addition some signals permit or require extra data to be sent.

Sample Output.

...

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 7080 | EnableUndoDelayDataWrite | DBACC, DBDIH,

DBTUP |

Description. [Unknown]

Sample Output.

...

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 7090 | DihSetTimeBetweenGcp | DBDIH |

Description. [Unknown]

Sample Output.

...

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 7098 | --- | DBDIH |

Description. [Unknown]

Sample Output.

Node 2: Invalid no of arguments to 7098 - startLcpRoundLoopLab - expected 2 (tableId, fragmentId)

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 7099 | DihStartLcpImmediately | DBDIH |

Description. Can be used to trigger an LCP manually.

Sample Output.

In this example, node 2 is the master node and controls

LCP/GCP synchronization for the cluster. Regardless of the

node_id specified, only the

master node responds:

Node 2: Local checkpoint 7 started. Keep GCI = 1003 oldest restorable GCI = 947 Node 2: Local checkpoint 7 completed

Additional Information. You may need to enable a higher logging level to have the checkpoint's completion reported, as shown here:

ndb_mgmgt; ALL CLUSTERLOG CHECKPOINT=8

This section contains information about DUMP

codes 8000 through 8999, inclusive.

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 8004 | --- | SUMA |

Description. Dumps information about subscription resources.

Sample Output.

Node 2: Suma: c_subscriberPool size: 260 free: 258 Node 2: Suma: c_tablePool size: 130 free: 128 Node 2: Suma: c_subscriptionPool size: 130 free: 128 Node 2: Suma: c_syncPool size: 2 free: 2 Node 2: Suma: c_dataBufferPool size: 1009 free: 1005 Node 2: Suma: c_metaSubscribers count: 0 Node 2: Suma: c_removeDataSubscribers count: 0

Additional Information.

When subscriberPool ... free becomes and

stays very low relative to subscriberPool ...

size, it is often a good idea to increase the

value of the

MaxNoOfTables

configuration parameter (subscriberPool =

2 * MaxNoOfTables). However, there could

also be a problem with API nodes not releasing resources

correctly when they are shut down. DUMP

8004 provides a way to monitor these values.

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 8005 | --- | SUMA |

Description. [Unknown]

Sample Output.

Node 2: Bucket 0 10-0 switch gci: 0 max_acked_gci: 2961 max_gci: 0 tail: -256 head: -256 Node 2: Bucket 1 00-0 switch gci: 0 max_acked_gci: 2961 max_gci: 0 tail: -256 head: -256

Additional Information. [N/A]

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 8010 | --- | SUMA |

Description. Writes information about all subscribers and connected nodes to the cluster log.

Sample Output. In this example, node 1 is a management node, nodes 2 and 3 are data nodes, and nodes 4 and 5 are SQL nodes (which both act as replication masters).

2010-10-15 10:08:33 [MgmtSrvr] INFO -- Node 2: c_subscriber_nodes: 0000000000000000000000000000000000000000000000000000000000000030 2010-10-15 10:08:33 [MgmtSrvr] INFO -- Node 2: c_connected_nodes: 0000000000000000000000000000000000000000000000000000000000000032 2010-10-15 10:08:33 [MgmtSrvr] INFO -- Node 3: c_subscriber_nodes: 0000000000000000000000000000000000000000000000000000000000000030 2010-10-15 10:08:33 [MgmtSrvr] INFO -- Node 3: c_connected_nodes: 0000000000000000000000000000000000000000000000000000000000000032

For each data node, this DUMP command

prints two hexadecimal numbers. These are representations of

bitfields having one bit per node ID, starting with node ID 0

for the rightmost bit (0x01).

The subscriber nodes bitmask

(c_subscriber_nodes) has the significant

hexadecimal digits 30 (decimal 48), or

binary 110000, which equates to nodes 4 and

5. The connected nodes bitmask

(c_connected_nodes) has the significant

hexadecimal digits 32 (decimal 50). The

binary representation of this number is

110010, which has 1 as

the second, fifth, and sixth digits (counting from the right),

and so works out to nodes 1, 4, and 5 as the connected nodes.

Additional Information.

This DUMP code was added in MySQL Cluster

NDB 6.2.9.

| Code | Symbol | Kernel Block(s) |

|---|---|---|

| 8011 | --- | SUMA |

Description. Writes information about all subscribers to the cluster log.

Sample Output. (From cluster log:)