Oracle Solaris Cluster System Administration Guide, Oracle Solaris Cluster 4.0



Configuring Host-Based Data Replication With StorageTek Availability Suite Software

Understanding StorageTek Availability Suite Software in a Cluster

Data Replication Methods Used by StorageTek Availability Suite Software

Replication in the Example Configuration

Guidelines for Configuring Host-Based Data Replication Between Clusters

Configuring Replication Resource Groups

Configuring Application Resource Groups

Configuring Resource Groups for a Failover Application

Configuring Resource Groups for a Scalable Application

Guidelines for Managing a Takeover

Task Map: Example of a Data Replication Configuration

Connecting and Installing the Clusters

Example of How to Configure Device Groups and Resource Groups

How to Configure a Device Group on the Primary Cluster

How to Configure a Device Group on the Secondary Cluster

How to Configure the File System on the Primary Cluster for the NFS Application

How to Configure the File System on the Secondary Cluster for the NFS Application

How to Create a Replication Resource Group on the Primary Cluster

How to Create a Replication Resource Group on the Secondary Cluster

How to Create an NFS Application Resource Group on the Primary Cluster

How to Create an NFS Application Resource Group on the Secondary Cluster

Example of How to Enable Data Replication

How to Enable Replication on the Primary Cluster

How to Enable Replication on the Secondary Cluster

Example of How to Perform Data Replication

How to Perform a Remote Mirror Replication

How to Perform a Point-in-Time Snapshot

How to Verify That Replication Is Configured Correctly

This appendix provides an alternative to host-based replication that does not use Oracle Solaris Cluster Geographic Edition. Oracle recommends that you use Oracle Solaris Cluster Geographic Edition for host-based replication, because it simplifies the configuration and operation of replication between clusters. See Understanding Data Replication.

The example in this appendix shows how to configure host-based data replication between clusters by using StorageTek Availability Suite software. The example illustrates a complete cluster configuration for an NFS application and provides detailed information about how individual tasks can be performed. Perform all tasks in the global-cluster voting node. The example does not include all of the steps that are required by other applications or other cluster configurations.

If you use role-based access control (RBAC) instead of superuser to access the cluster nodes, ensure that you can assume an RBAC role that provides authorization for all Oracle Solaris Cluster commands. This series of data replication procedures requires the following Oracle Solaris Cluster RBAC authorizations if the user is not superuser:

solaris.cluster.modify

solaris.cluster.admin

solaris.cluster.read

See Oracle Solaris Administration: Security Services for more information about using RBAC roles. See the Oracle Solaris Cluster man pages for the RBAC authorization that each Oracle Solaris Cluster subcommand requires.
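The authorization requirement above can be sketched as a small check. This is an illustration only: it assumes that the granted authorizations are available as a comma-separated string (the form in which the Oracle Solaris `auths` command is understood to print them) and that a granted entry may end in a wildcard such as `solaris.cluster.*`. The function names are hypothetical.

```python
from fnmatch import fnmatchcase

# The three authorizations that this series of procedures requires.
REQUIRED_AUTHS = (
    "solaris.cluster.modify",
    "solaris.cluster.admin",
    "solaris.cluster.read",
)


def parse_auths(text):
    """Split a comma-separated authorization list into a set of names."""
    return {item.strip() for item in text.split(",") if item.strip()}


def missing_auths(granted, required=REQUIRED_AUTHS):
    """Return the required authorizations that no granted entry covers.

    Assumption: a granted entry may be a wildcard such as 'solaris.cluster.*'.
    """
    return [
        need
        for need in required
        if not any(fnmatchcase(need, pattern) for pattern in granted)
    ]


if __name__ == "__main__":
    # Hypothetical input; in practice the string would come from the role's
    # actual authorization list.
    granted = parse_auths("solaris.cluster.read, solaris.cluster.admin")
    print(missing_auths(granted))
```

An empty result suggests that the role covers all three authorizations; any names returned still need to be granted before the procedures are attempted.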

This section introduces disaster tolerance and describes the data replication methods that StorageTek Availability Suite software uses.

Disaster tolerance is the ability to restore an application on an alternate cluster when the primary cluster fails. Disaster tolerance is based on data replication and takeover. A takeover relocates an application service to a secondary cluster by bringing online one or more resource groups and device groups.

If data is replicated synchronously between the primary and secondary cluster, no committed data is lost when the primary site fails. If data is replicated asynchronously, however, some data might not have reached the secondary cluster before the primary site failed, and that data is lost.

This section describes the remote mirror replication method and the point-in-time snapshot method used by StorageTek Availability Suite software. This software uses the sndradm and iiadm commands to replicate data. For more information, see the sndradm(1M) and iiadm(1M) man pages.

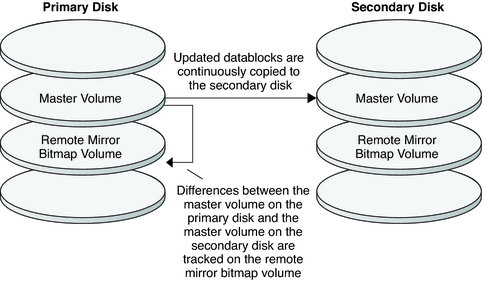

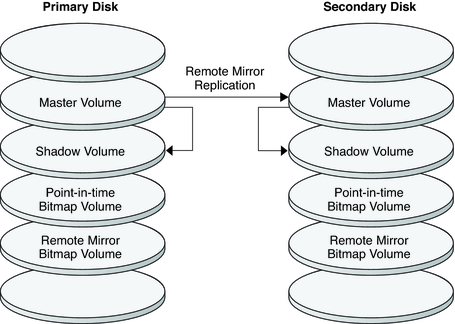

Figure A-1 shows remote mirror replication. Data from the master volume of the primary disk is replicated to the master volume of the secondary disk through a TCP/IP connection. A remote mirror bitmap tracks differences between the master volume on the primary disk and the master volume on the secondary disk.

Figure A-1 Remote Mirror Replication

Remote mirror replication can be performed synchronously, in real time, or asynchronously. Each volume set in each cluster can be configured individually for synchronous or asynchronous replication.

In synchronous data replication, a write operation is not confirmed as complete until the remote volume has been updated.

In asynchronous data replication, a write operation is confirmed as complete before the remote volume is updated. Asynchronous data replication provides greater flexibility over long distances and low bandwidth.
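The difference between the two modes can be illustrated with a toy model. The sketch below is not how StorageTek Availability Suite software is implemented; the `RemoteMirror` class and its methods are hypothetical names used only to show when a write counts as complete in each mode, and which writes would be lost if the primary site failed.

```python
from collections import deque


class RemoteMirror:
    """Toy model of one volume set replicated to a remote volume."""

    def __init__(self, synchronous):
        self.synchronous = synchronous
        self.local = {}         # block number -> data on the primary volume
        self.remote = {}        # block number -> data on the secondary volume
        self.pending = deque()  # writes confirmed locally but not yet sent

    def write(self, block, data):
        """Apply a write and return once it is confirmed as complete."""
        self.local[block] = data
        if self.synchronous:
            # Synchronous: the remote volume is updated before confirmation.
            self.remote[block] = data
        else:
            # Asynchronous: confirm first, update the remote volume later.
            self.pending.append((block, data))
        return "complete"

    def drain(self):
        """Send every queued write to the remote volume, in order."""
        while self.pending:
            block, data = self.pending.popleft()
            self.remote[block] = data

    def at_risk(self):
        """Blocks that would be lost if the primary site failed right now."""
        return sorted({block for block, _ in self.pending})


if __name__ == "__main__":
    sync_set = RemoteMirror(synchronous=True)
    async_set = RemoteMirror(synchronous=False)
    for volume_set in (sync_set, async_set):
        volume_set.write(0, b"payload")
    print(sync_set.at_risk(), async_set.at_risk())
```

In this model the asynchronous volume set reports block 0 as at risk until `drain()` runs, which mirrors the data-loss window described earlier for asynchronous replication.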

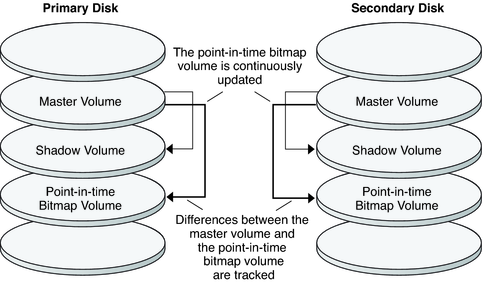

Figure A-2 shows a point-in-time snapshot. Data from the master volume of each disk is copied to the shadow volume on the same disk. The point-in-time bitmap tracks differences between the master volume and the shadow volume. When data is copied to the shadow volume, the point-in-time bitmap is reset.

Figure A-2 Point-in-Time Snapshot
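The role of the point-in-time bitmap can be sketched as follows. This is a simplified model under stated assumptions: volumes are represented as Python lists of blocks, the shadow volume is assumed to start as a full copy of the master, and a bit is set whenever a block is written. The class and method names are hypothetical and do not correspond to any `iiadm` interface.

```python
class PointInTimeVolume:
    """Toy model of a master volume, its shadow volume, and the bitmap."""

    def __init__(self, blocks):
        self.master = list(blocks)
        self.shadow = list(blocks)            # assume an initial full copy
        self.bitmap = [False] * len(blocks)   # True = master differs from shadow

    def write(self, index, data):
        """Write to the master volume and record the change in the bitmap."""
        self.master[index] = data
        self.bitmap[index] = True

    def update_shadow(self):
        """Copy only the flagged blocks to the shadow, then reset the bitmap."""
        copied = 0
        for index, dirty in enumerate(self.bitmap):
            if dirty:
                self.shadow[index] = self.master[index]
                self.bitmap[index] = False
                copied += 1
        return copied


if __name__ == "__main__":
    volume = PointInTimeVolume(["a", "b", "c", "d"])
    volume.write(1, "B")
    volume.write(3, "D")
    print(volume.update_shadow(), volume.shadow, volume.bitmap)
```

Because the bitmap records which blocks changed, the update touches only those blocks rather than the whole volume, and the bitmap is clear again once the copy finishes.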

Figure A-3 illustrates how remote mirror replication and point-in-time snapshot are used in this example configuration.

Figure A-3 Replication in the Example Configuration

This section provides guidelines for configuring data replication between clusters. This section also contains tips for configuring replication resource groups and application resource groups. Use these guidelines when you are configuring data replication for your cluster.

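This section discusses the following topics:

Configuring Replication Resource Groups

Configuring Application Resource Groups

Guidelines for Managing a Takeover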

Replication resource groups colocate the device group under StorageTek Availability Suite software control with a logical hostname resource. A logical hostname must exist on each end of the data replication stream, and must be on the same cluster node that acts as the primary I/O path to the device. A replication resource group must have the following characteristics:

Be a failover resource group

A failover resource can run on only one node at a time. When a failover occurs, failover resources take part in the failover.

Have a logical hostname resource

A logical hostname is hosted on one node of each cluster (primary and secondary) and is used to provide source and target addresses for the StorageTek Availability Suite software data replication stream.

Have an HAStoragePlus resource

The HAStoragePlus resource enforces the failover of the device group when the replication resource group is switched over or failed over. Oracle Solaris Cluster software also enforces the failover of the replication resource group when the device group is switched over. In this way, the replication resource group and the device group are always colocated, or mastered by the same node.

The following extension properties must be defined in the HAStoragePlus resource:

GlobalDevicePaths. This extension property defines the device group to which a volume belongs.

AffinityOn property = True. This extension property causes the device group to switch over or fail over when the replication resource group switches over or fails over. This feature is called an affinity switchover.

For more information about HAStoragePlus, see the SUNW.HAStoragePlus(5) man page.

Be named after the device group with which it is colocated, followed by -stor-rg

For example, devgrp-stor-rg.

Be online on both the primary cluster and the secondary cluster
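The characteristics listed above can be collected into a simple checklist. The sketch below is illustrative only: the `ReplicationResourceGroup` record and the `check_replication_rg` function are hypothetical, the properties are supplied by hand rather than read from a cluster, and comparing `GlobalDevicePaths` directly with the device group name is a simplifying assumption. The requirement to be online on both clusters is not modeled.

```python
from dataclasses import dataclass, field


@dataclass
class ReplicationResourceGroup:
    """Hand-entered description of a replication resource group."""
    name: str
    device_group: str
    failover: bool
    has_logical_hostname: bool
    # Extension properties of the HAStoragePlus resource; empty if absent.
    hastorageplus: dict = field(default_factory=dict)


def check_replication_rg(rg):
    """Return a list of guideline violations (empty if none are found)."""
    problems = []
    if not rg.failover:
        problems.append("must be a failover resource group")
    if not rg.has_logical_hostname:
        problems.append("needs a logical hostname resource")
    if not rg.hastorageplus:
        problems.append("needs an HAStoragePlus resource")
    else:
        # Simplification: treat GlobalDevicePaths as the device group name.
        if rg.hastorageplus.get("GlobalDevicePaths") != rg.device_group:
            problems.append("GlobalDevicePaths must name the device group")
        if str(rg.hastorageplus.get("AffinityOn")).lower() != "true":
            problems.append("AffinityOn must be True")
    expected = f"{rg.device_group}-stor-rg"
    if rg.name != expected:
        problems.append(f"name must be {expected}")
    return problems


if __name__ == "__main__":
    rg = ReplicationResourceGroup(
        name="devgrp-stor-rg",
        device_group="devgrp",
        failover=True,
        has_logical_hostname=True,
        hastorageplus={"GlobalDevicePaths": "devgrp", "AffinityOn": "True"},
    )
    print(check_replication_rg(rg))
```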

To be highly available, an application must be managed as a resource in an application resource group. An application resource group can be configured for a failover application or a scalable application.

The ZPoolsSearchDir extension property must be defined in the HAStoragePlus resource. This extension property is required to use the ZFS file system.

Application resources and application resource groups configured on the primary cluster must also be configured on the secondary cluster. Also, the data accessed by the application resource must be replicated to the secondary cluster.

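This section provides guidelines for configuring the following application resource groups:

Configuring Resource Groups for a Failover Application

Configuring Resource Groups for a Scalable Application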

In a failover application, an application runs on one node at a time. If that node fails, the application fails over to another node in the same cluster. A resource group for a failover application must have the following characteristics:

Have an HAStoragePlus resource to enforce the failover of the file system or zpool when the application resource group is switched over or failed over.

The device group is colocated with the replication resource group and the application resource group. Therefore, the failover of the application resource group enforces the failover of the device group and replication resource group. The application resource group, the replication resource group, and the device group are mastered by the same node.

Note, however, that a failover of the device group or the replication resource group does not cause a failover of the application resource group.

If the application data is globally mounted, the presence of an HAStoragePlus resource in the application resource group is not required but is advised.

If the application data is mounted locally, the presence of an HAStoragePlus resource in the application resource group is required.

For more information about HAStoragePlus, see the SUNW.HAStoragePlus(5) man page.

Be online on the primary cluster and offline on the secondary cluster.

The application resource group must be brought online on the secondary cluster when the secondary cluster takes over as the primary cluster.

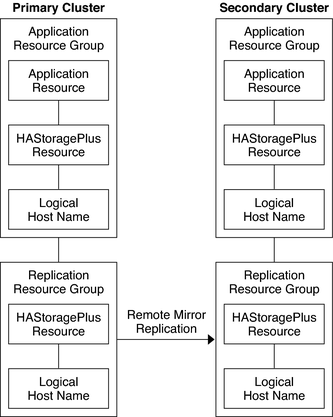

Figure A-4 illustrates the configuration of an application resource group and a replication resource group in a failover application.

Figure A-4 Configuration of Resource Groups in a Failover Application

In a scalable application, an application runs on several nodes to create a single, logical service. If a node that is running a scalable application fails, failover does not occur. The application continues to run on the other nodes.

When a scalable application is managed as a resource in an application resource group, it is not necessary to colocate the application resource group with the device group. Therefore, it is not necessary to create an HAStoragePlus resource for the application resource group.

A resource group for a scalable application must have the following characteristics:

Have a dependency on the shared address resource group

The nodes that are running the scalable application use the shared address to distribute incoming data.

Be online on the primary cluster and offline on the secondary cluster

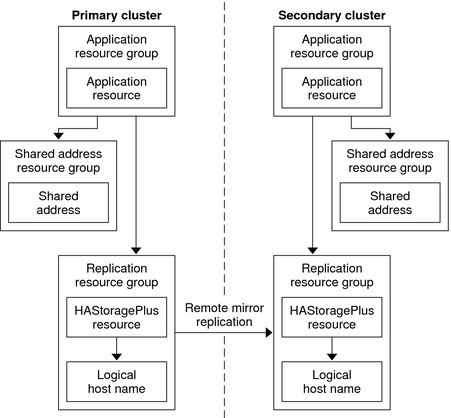

Figure A-5 illustrates the configuration of resource groups in a scalable application.

Figure A-5 Configuration of Resource Groups in a Scalable Application

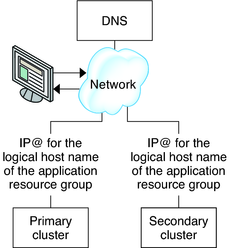

If the primary cluster fails, the application must be switched over to the secondary cluster as soon as possible. To enable the secondary cluster to take over, the DNS must be updated.

Clients use DNS to map an application's logical hostname to an IP address. After a takeover, where the application is moved to a secondary cluster, the DNS information must be updated to reflect the mapping between the application's logical hostname and the new IP address.

Figure A-6 DNS Mapping of a Client to a Cluster

To update the DNS, use the nsupdate command. For information, see the nsupdate(1M) man page. For an example of how to manage a takeover, see Example of How to Manage a Takeover.
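As a rough illustration of the DNS update, the sketch below builds the text of a batch input for `nsupdate` that replaces the address record of the application's logical hostname. It assumes a single IPv4 `A` record and a DNS server that accepts dynamic updates; the hostname, address, and TTL shown are placeholders, the function name is hypothetical, and reverse (`PTR`) records are not handled.

```python
def nsupdate_script(hostname, new_ip, ttl=3600, server=None):
    """Build nsupdate batch input that repoints hostname to new_ip.

    Assumptions: one IPv4 A record per hostname; ttl is in seconds.
    """
    lines = []
    if server:
        # Optional: send the update to a specific DNS server.
        lines.append(f"server {server}")
    # Remove the old mapping, then add the secondary cluster's address.
    lines.append(f"update delete {hostname} A")
    lines.append(f"update add {hostname} {ttl} A {new_ip}")
    lines.append("send")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Placeholder names and addresses (documentation ranges), not real hosts.
    print(nsupdate_script("nfs-lh.example.com", "192.0.2.20"), end="")
```

The generated text could be saved to a file and passed to `nsupdate` as its input. The same function, called with the original address, would cover the DNS step when switching back to the primary cluster.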

After repair, the primary cluster can be brought back online. To switch back to the original primary cluster, perform the following tasks:

Synchronize the primary cluster with the secondary cluster to ensure that the primary volume is up-to-date. You can achieve this by stopping the resource group on the secondary node, so that the replication data stream can drain.

Reverse the direction of data replication so that the original primary is now, once again, replicating data to the original secondary.

Start the resource group on the primary cluster.

Update the DNS so that clients can access the application on the primary cluster.

Table A-1 lists the tasks in this example of how data replication was configured for an NFS application by using StorageTek Availability Suite software.

Table A-1 Task Map: Example of a Data Replication Configuration


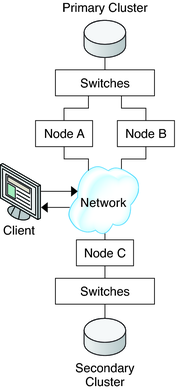

Figure A-7 illustrates the cluster configuration that the example uses. The secondary cluster in the example configuration contains one node, but other cluster configurations can be used.

Figure A-7 Example Cluster Configuration

Table A-2 summarizes the hardware and software that the example configuration requires. The Oracle Solaris OS, Oracle Solaris Cluster software, and volume manager software must be installed on the cluster nodes before StorageTek Availability Suite software and software updates are installed.

Table A-2 Required Hardware and Software


This section describes how device groups and resource groups are configured for an NFS application. For additional information, see Configuring Replication Resource Groups and Configuring Application Resource Groups.

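This section contains the following procedures:

How to Configure a Device Group on the Primary Cluster

How to Configure a Device Group on the Secondary Cluster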

How to Configure the File System on the Primary Cluster for the NFS Application

How to Configure the File System on the Secondary Cluster for the NFS Application

How to Create a Replication Resource Group on the Primary Cluster

How to Create a Replication Resource Group on the Secondary Cluster

How to Create an NFS Application Resource Group on the Primary Cluster

How to Create an NFS Application Resource Group on the Secondary Cluster

The following table lists the names of the groups and resources that are created for the example configuration.

Table A-3 Summary of the Groups and Resources in the Example Configuration


With the exception of devgrp-stor-rg, the names of the groups and resources are example names that can be changed as required. The replication resource group must have a name with the format devicegroupname-stor-rg.

For information about Solaris Volume Manager software, see Chapter 4, Configuring Solaris Volume Manager Software, in Oracle Solaris Cluster Software Installation Guide.