References

This section of the document consists of information related to intermediate

actions that needs to be performed while completing a task. The procedures

are common to all the sections and are referenced wherever required. You

can refer to the following sections based on your need.

RDBMS

RDBMS or relational

database management system stores data in the form of tables along with

the relationships of each data component. The data can be accessed or

reassembled in many different ways without having to change the table

forms.

RDBMS data

source lets you define the RDBMS engine present locally or on a remote

server using the FTP access. RDBMS can be defined to connect to any of

the RDBMS such as Oracle, Sybase, IBM DB2, MS SQL Server and any RDBMS

through native connectivity drivers or ODBC.

Passing

Runtime parameters in Data Mapping/ Data File Mapping

The following

Parameters are supported in Expressions, Joins and Filters used in the

Data Mapping definition.

$RUNID

$PHID

$EXEID

$RUNSK

$SYSDATE

$TASKID

$MISDATE

Apart from

the above $Parameters, any other parameter can be passed within Square-Brackets.

For example, [PARAM1], [PARAM2], [XYZ], [ABCD].

Additionally, L2H/H2H/T2H/H2T/F2H

mappings also support following additional default parameters. Values

for these are implicitly passed from ICC/RRF.

$MISDT_YYYY-MM-DD

- Data type is String and can be mapped to VARCHAR2. Value will be

the MISDATE in ‘yyyy-MM-dd‘ format.

$MISYEAR_YYYY

- Data type is String and can be mapped to VARCHAR2. Value will be

the year value in ‘yyyy‘ format from MISDATE.

$MISMONTH_MM

- Data type is String and can be mapped to VARCHAR2. Value will be

the month value in ‘MM‘ format from MISDATE.

$MISDAY_DD

- Data type is String and can be mapped to VARCHAR2. Value will be

the date value in ‘dd‘ format from MISDATE.

$SYSDT_YYYY-MM-DD-

Data type is String and can be mapped to VARCHAR2. Value will be the

System date in ‘yyyy-MM-dd‘ format.

$SYSHOUR_HH24

- Data type is String and can be mapped to VARCHAR2. Value will be

the hour value in ‘HH24‘ format from System date.

$MISDT_YYYYMMDD - Data type

is String and can be mapped to VARCHAR2. Value will be MISDATE in

YYYYMMDD date format.

$SYSDATE_YYYYMMDD-

Data type is String and can be mapped to VARCHAR2. Value will be system

date in YYYYMMDD date format.

Note:

The aforementioned parameters are not supported for T2T and F2T.

Two

additional parameters are also supported for L2H mappings:

[INCREMENTALLOAD] – Specify the value as TRUE/FALSE. If

set to TRUE, historically loaded data files will not be loaded

again (load history is checked against the definition name, source

name, target infodom, target table name and the file name combination).

If set to FALSE, the execution is similar to a snapshot load,

and everything from the source folder/file will be loaded irrespective

of load history.

[FOLDERNAME] – Value provided will be used to pick up the

data folder to be loaded.

For HDFS based Weblog source: Value will be suffixed to

HDFS File Path specified during the source creation.

For Local File System based Weblog source: By default the

system will look for execution date folder (MISDATE: yyyymmdd)

under STAGE/<source name>. If the user has specified the

FOLDERNAME for this source, system will ignore the MISDATE folder

and look for the directory provided as [FOLDERNAME].

Passing

values to the Runtime Parameters from the RRF module:

Values

for $Parameters are implicitly passed through RRF

Values

for dynamic parameters (given in Square Brackets) need to be passed

explicitly as: "PARAM1","param1Value", “PARAM2”,

“param2Valu

Passing values

to the Runtime Parameters from the Operations module

Value

for $MISDATE is passed implicitly from ICC

Value

for other $parameters and dynamic parameters (given in Square Brackets)

is passed as: [PARAM] = param1VALUE , $RUNSK = VALUE

Note:

If the Runtime parameter is a string or involves string comparison,

ensure that appropriate single quotes are given in the DI UI. For example,

Filter Condition can be DIM_COUNTRY.CountryName = ‘[PARAMCNTRY]’.

Back to Top

RAC

Real Application Clusters (RAC) allows multiple

computers to run RDBMS software simultaneously while accessing a single

database and providing a clustered database.

In an Oracle RAC environment, two or more

computers (each with an instance) concurrently access a single database.

This allows an application or user to connect to either of the computer

and have access to a single coordinated set of data. RAC addresses areas

such as fault tolerance, load balancing, and scalability.

Handling

Partitioned Target Tables

Data loading into a partitioned

Hive/Impala target table is supported. The partitioned columns are indicated

using a superscript P in the DI Mapping window.

You can set a static value to

a partitioned column from the AAI_FCT_PARTITION table. If it is set, you

can view it from the DI Mapping window by pointing the mouse over the

column name. You need not to map the target column to any source column.

If you map a source column to a target partitioned column which already

has a static value, the static value will get precedence.

If no static value is set to

a partitioned column, you can pass a dynamic partitioned valued. You should

map a source column to the target partitioned column. If there is no mapping

and static value is not set, the empty or blank is passed as the partition

value. Hive defaults the partition to _HIVE_DEFAULT_PARTITON_. There

is no loss of data in the non-partitioned columns.

Prescripts

Prescripts are fired on a Hive

connection, before firing a select from or insert into a hive table. While

defining Prescript, note the following:

Prescripts

should mandatorily begin with the keyword "SET".

Multiple

Prescripts should be semi-colon separated.

Prescripts

are validated for SQL Injection. The following key words are blocklisted:

"DROP","TRUNCATE","ALTER","DELETE","INSERT","UPDATE","CREATE",

"SELECT"

All validations applicable in

the UI are checked on execution also. If a prescript fails any of the

validations or if there is an error in firing the pre-script, the load

operation is exited.

Note:

For H2T, the Prescript is fired on the source.

Dynamic

Table Creation

This option allows you to create

a new table on the fly if the target Information Domain of the Data Mapping

or Data File Mapping definition is based on HDFS database. You can use

the newly created table for mapping. The newly created table will be part

of the OFSAAI data model and it is made visible and available to all other

modules.

You cannot create a table with

partition.

To dynamically create a table

From

the DI Mapping window, click  in the Target Entities pane.

The Create Table window is

displayed.

in the Target Entities pane.

The Create Table window is

displayed.

Enter

a table name and click Generate.

The new table name is displayed on the Target Entities pane.

Select

the required attributes from the Definition pane and map them to the

new Table in the Target Entities pane by clicking  button.

button.

After

defining all mappings, click Save. The table will be created in the

HDFS/ HIVE system, with the structure/data types of the mapped columns

and it will be added to the metadata repository (both database xml

and the object registration tables). The newly created table will

be available for use in other metadata like Datasets, Hierarchies,

and so on.

Flat File Types

A Flat File is a text and binary

file which contains data in a single line, i.e. one physical record per

line. For example, a list of names, addresses, and phone numbers. Flat

Files are of two types namely, Delimated File and Fixed Width File.

Delimited File refers to a Flat

File in which the data is organized in rows and columns and are separated

by delimiters (commas). Each row has a set of data, and each column

has a type of data. For example, a csv (comma separated values) file.

Fixed Width or Fixed Position File

refers to a Flat File in which the data is defined by the character

position (tab space). The data is formulated in such a way that the

data fields are of same size and the file is compact in size. For

example, the character spacing of a Birth date data column is known

and hence the extra spaces between the Birth date column and other

column can be eliminated.

Table

Classification

DQ rules can be defined on a

table is decided by a new Servlet parameter ENABLE_CLASSIFICATION, which

is present in the web.xml file.

If ENABLE_CLASSIFICATION is set

to Y, any tables with classification code 340 can be selected as base

table for DQ rule definition. This is the old behavior.

If ENABLE_CLASSIFICATION is set

to N, then irrespective of the classification any table can be selected

as base table for DQ rule definition.

Workspace Options

The workspace option consists of the various elements available in the

user interface to help you in selecting an option or to navigate to a

specific location on the page. The available workspace options are discussed

in detail.

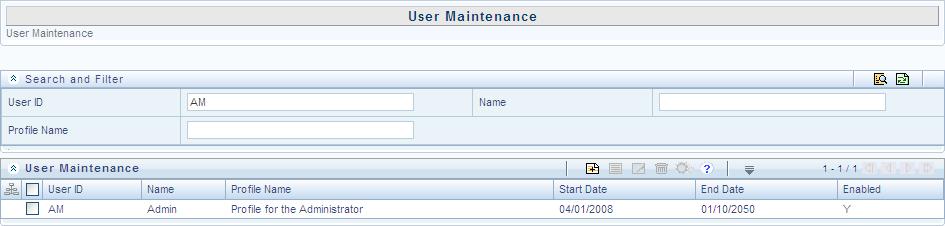

Search and Filter

The Search and Filter option in the user interface helps you to find

the required information. You can enter the nearest matching keywords

to search, and filter the results by entering information in the additional

fields.

For example, if you are in the User

Maintenance screen and need to search for administrator details,

enter the User ID and filter the

results by specifying either the Name

or Profile Description or both.

The search results are always filtered based on the additional information

you provide.

You can click  to start

a search and

to start

a search and  to reset the

search fields.

to reset the

search fields.

Back to Top

Pagination

The Pagination toolbar as indicated below is available in the user interface

screen and helps you to navigate through the display grid. The toolbar

displays the total number of available list items and the number of list

items displayed in the current view.

In the pagination toolbar, you can do the following:

Back to Top

Customize

work area

You can use the interface options to customize and auto adjusted the

work area.

To View or

Hide the left hand side (LHS) menu, click the collapsible icon.

To Expand/Collapse

a grid or section, click  or

or  icons.

icons.

Back to Top

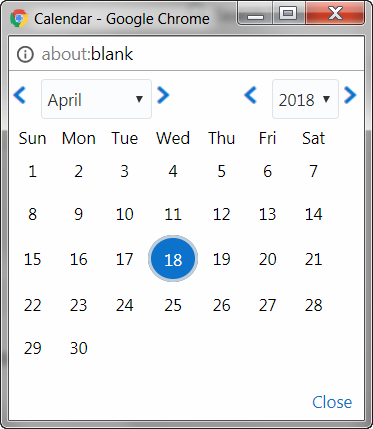

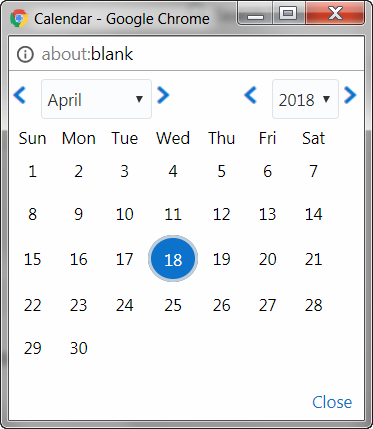

Calendar

Calendar icon in the user interface helps you to specify a date in the

DD/MM/YYYY format by selecting from the pop-up calendar. You can navigate

to the specific month or year by using the arrow buttons or select using

the drop down list. When you click on the required date the details are

auto updated in the date field.

Back to Top

Function

Mapping Codes

The following table lists the function codes with their description

to help you identify the user functions who needs to access the Infrastructure

system and map roles appropriately.

Function

Code |

Function

Name |

Function

Description |

ADAPTERS |

Run

Adapters |

The

user mapped to this function will have rights to run OFSAAI adapters |

ADDPROCESS |

Add

Process tree |

The

user mapped to this function can add the process tree |

ADDRULE |

Add

Rule |

The

user mapped to this function can add the rules |

ADDRUN |

Add

Run |

The

user mapped to this function can add the run |

ADMINSCR |

Administration

Screen |

The

user mapped to this function can access the Administration Screen |

ADVDRLTHR |

Access

to Advanced drill through |

The

User mapped to this function will have access to Advanced Drill

through |

ALDADD |

Add

Cube |

The

user mapped to this function can add cubes |

ALDATH |

Authorize

Cube |

The

user mapped to this function can authorize cubes |

ALDDEL |

Delete

Cube |

The

user mapped to this function will have rights to delete cubes |

ALDMOD |

Modify

Cube |

The

user mapped to this function can modify cubes |

ALDVIW |

View

Cube |

The user mapped to this function can

view cubes |

ALSADD |

Add

Alias |

The user mapped to this function can

add Alias |

ALSATH |

Authorize

Alias |

The user mapped to this function can

authorize Alias |

ALSDEL |

Delete

Alias |

The user mapped to this function will

have rights to delete Alias |

ALSMOD |

Modify

Alias |

The user mapped to this function can

modify Alias |

ALSVIW |

View

Alias |

The user mapped to this function can

view Alias |

APPSRVR |

Application

Server Screen |

The user mapped to this function can

access the Application Server Screen |

ATHPROCESS |

Authorize

Process Tree |

The user mapped to this function can

authorize Process Tree |

ATHRDM |

Authorize

RDM |

The user mapped to this function can

authorize RDM |

ATHRULE |

Authorize

Rule |

The user mapped to this function can

authorize the rule |

ATHRUN |

Authorize

Run |

The user mapped to this function can

authorize run |

ATTADD |

Add

Attributes |

The user mapped to this function can

add Hierarchy Attributes |

ATTATH |

Authorize

Attributes |

The user mapped to this function can

authorize Hierarchy Attributes |

ATTDEL |

Delete

Attributes |

The user mapped to this function can

delete Hierarchy Attributes |

ATTMOD |

Modify

Attributes |

The user mapped to this function can

add Hierarchy Attributes |

ATTVIW |

View

Attributes |

The user mapped to this function can

view Hierarchy Attributes |

AUD_TRL |

Audit

Trail Report Screen |

The user mapped to this function can

access the Audit Trail Report Screen |

AUTH_MAP |

Authorize

Map(s) |

The user mapped to this function can

AUTHORIZE Map definitions |

AUTH_SCR |

Metadata

Authorize Screen |

The user mapped to this function can

see Authorization Screen |

BATPRO |

Batch

Processing |

The user mapped to this function will

have rights to process batch |

BBATH |

Authorize

BBs |

The user mapped to this function can

authorize BBs |

BGCREATION |

Batch

Group Creation |

The user mapped to this function will

have rights to Creating Batch Group |

BGEXEC |

Batch

Group Execution |

The user mapped to this function will

have rights to Execute Batch Group |

BGMONITOR |

Batch

Group Monitor |

The user mapped to this function will

have rights to Monitor Batch Group Execution |

BGRESTART |

Batch

Group Restart |

The user mapped to this function will

have rights to Restart Batch Group Execution |

BPROCADD |

Add

Business Processor |

The user mapped to this function can

add business processors |

BPROCATH |

Authorize

Business Processor |

The user mapped to this function can

authorize business processors |

BPROCDEL |

Delete

Business Processor |

The user mapped to this function can

delete business processors |

BPROCMOD |

Modify

Business Processor |

The user mapped to this function can

modify business processors |

BPROCVIW |

View

Business Processor |

The user mapped to this function can

view business processors |

CFEDEF |

Cash

Flow Equation Definition |

The user mapped to this function can

view/add the

Cash Flow Equation definitions |

CFG |

Configuration |

The user mapped to this function will

have access to configuration details |

COMADD |

Add

Computed Measure |

The user mapped to this function can

add computed measures |

COMADV |

Computed

Measure Advanced |

The user mapped to this function will

have rights to the advanced options of computed measure |

COMATH |

Authorize

Computed Measure |

The user mapped to this function can

authorize computed measures |

COMDEL |

Delete

Computed Measure |

The user mapped to this function will

have rights to delete computed measures |

COMMOD |

Modify

Computed Measure |

The user mapped to this function can

modify computed measures |

COMVIW |

View

Computed Measures |

The user mapped to this function can

view computed measures |

CRTRDM |

Add

RDM |

The user mapped to this function can

Add RDM |

CRT_MAP |

Create

Map |

The user mapped to this function can

CREATE/SAVEAS Map definitions |

CWSDOCMGMT |

Document

Management Access |

The user mapped to this function can

use Document Management APIS via Callable Services Framework |

CWSEXTWSAS |

Call

Remote Web Services |

The user mapped to this function can

call web services configured in the Callable Services Framework |

CWSHIERRFR |

Refresh

Hierarchies |

The user mapped to this function can

refresh hierarchies through the Callable Services Framework |

CWSPR2ACCS |

Execute

Runs - Rules |

The user mapped to this function can

execute runs and rules through the Callable Services Framework |

CWSSMSACCS |

Remote

SMS Access |

The user mapped to this function can

access SMS APIS through the Callable

Services Framework |

CWSUMMACCS |

Remote

UMM Access |

The user mapped to this function can

access UMM APIS through the Callable

Services Framework |

CWS_STATUS |

Result

of request - Status of all |

The user mapped to this function can

access requests status through the Callable Services Framework |

CWS_TRAN |

Result

of own request only |

The user mapped to the function can

access own requests status using Callable Services Framework |

DATADD |

Add

Dataset |

The user mapped to this function can

add datasets |

DATATH |

Authorize

Dataset |

The user mapped to this function can

authorize datasets |

DATDEL |

Delete

Dataset |

The user mapped to this function will

have rights to delete datasets |

DATMOD |

Modify

Dataset |

The user mapped to this function can

modify datasets |

DATVIW |

View

Dataset |

The user mapped to this function can

view datasets |

DBATH |

Authorize

DBs |

The user mapped to this function can

authorize DBs |

DBD |

Database

Details |

The user mapped to this function will

have access to database details |

DBS |

Database

Server |

The user mapped to this function will

have access to Database Server details |

DEEADD |

Add

Derived Entities |

The user mapped to this function can

add derived entities |

DEEATH |

Authorize

Derived Entities |

The user mapped to this function can

authorize derived entities |

DEEDEL |

Delete

Derived Entities |

The user mapped to this function can

delete derived entities |

DEEMOD |

Modify

Derived Entities |

The user mapped to this function can

modify derived entities |

DEEVIW |

View

Derived Entities |

The user mapped to this function can

view derived entities |

DEFADM |

Defi

Administrator |

The user mapped to this function will

have Defi Administration rights |

DEFEXL |

DeFi

Excel |

DeFi Excel |

DEFQADM |

Defq

Administrator |

The user mapped to this function will

have Defi Administration rights |

DEFQUSR |

Defq

User |

The user mapped to this function will

have Defi user rights |

DEFUSR |

Defi

User |

The user mapped to this function will

have Defi user rights |

DELPROCESS |

Delete

Process |

The user mapped to this function can

the process |

DELRDM |

Delete

RDM |

The user mapped to this function can

delete RDM |

DELRULE |

Delete

Rule |

The user mapped to this function can

delete the rules |

DELRUN |

Delete

Run |

The user mapped to this function can

delete the run |

DEL_MAP |

Delete

Map |

The user mapped to this function can

DELETE Map definitions |

DESRDM |

Design

RDM |

The user mapped to this function can

design RDM |

DESREV |

Design

Reveleus Menu Screen |

The user mapped to this function can

access the Design Reveleus Menu Screen |

DIMADD |

Add

Dimension |

The user mapped to this function can

add dimensions |

DIMATH |

Authorize

Dimension |

The user mapped to this function can

authorize dimensions |

DIMDEL |

Delete

Dimension |

The user mapped to this function will

have rights to delete dimensions |

DIMMOD |

Modify

Dimension |

The user mapped to this function can

modify dimensions |

DIMVIW |

View

Dimension |

The user mapped to this function can

view dimensions |

DQLADD |

Data

Quality Add |

This function is for Data Quality Map

applet |

DQ_ADD |

Data

Quality Add Rule |

The user mapped to this function can

add DQ Rule |

DQ_AUTH |

Data

Quality Authorization Rule |

The user mapped to this function can

authorize DQ Rule |

DQ_CPY |

Data

Quality Copy Rule |

The user mapped to this function can

copy DQ Rule |

DQ_DEL |

Data

Quality Delete Rule |

The user mapped to this function can

delete DQ Rule |

DQ_EDT |

Data

Quality Edit Rule |

The user mapped to this function can

edit DQ Rule |

DQ_GP_ADD |

Data

Quality Add Rule Group |

The user mapped to this function can

add DQ Rule Group |

DQ_GP_CPY |

Data

Quality Copy Rule Group |

The user mapped to this function can

copy DQ Rule Group |

DQ_GP_DEL |

Data

Quality Delete Rule Group |

The user mapped to this function can

delete DQ Rule Group |

DQ_GP_EDT |

Data

Quality Edit Rule Group |

The user mapped to this function can

edit DQ Rule Group |

DQ_GP_EXEC |

Data

Quality Execute Rule Group |

The user mapped to this function can

execute DQ Rule Group |

DQ_GP_VIW |

Data

Quality View Rule Group |

The user mapped to this function can

view DQ Rule Group |

DQ_VIW |

Data

Quality View Rule |

The user mapped to this function can

view DQ Rule |

ENABLEUSR |

Enable

User Screen |

The user mapped to this function can

access the Enable User Screen |

ETLDEF |

DI

Designer |

Defining Application, Extract, Flat-File,

Mapping |

ETLDTQ |

DTDQ |

Data Quality Rules and Data Transformation |

ETLUSR |

DI

User |

The user mapped to this function will

be a Data Integrator user |

EXPMD |

Export

Metadata |

The user mapped to this function can

Export Metadata |

FIFADMIN |

Alerts

Administrator |

The user mapped to this function can

define admin mode rules |

FIFUSR |

Alerts

User |

The user mapped to this function will

be an Alerts user |

FUNCMAINT |

Function

Maintenance Screen |

The user mapped to this function can

access the Function Maintenance Screen |

FUNCROLE |

Function

Role Map Screen |

The user mapped to this function can

access the Function Role Map Screen |

FU_ATR_ADD |

Fusion

Add Attributes |

The user mapped to this function can

Create New Attributes |

FU_ATR_CPY |

Fusion

Copy Attributes |

The user mapped to this function can

Copy Attributes |

FU_ATR_DD |

Fusion

Attributes - View Dependent Data |

The user mapped to this function can

View Dependent Data for Attributes |

FU_ATR_DEL |

Fusion

Delete Attributes |

The user mapped to this function can

Delete Attributes |

FU_ATR_EDT |

Fusion

Edit Attributes |

The user mapped to this function can

Edit Attributes |

FU_ATR_HP |

Fusion

Attribute Home Page |

The user mapped to this function can

view Attribute Home Page |

FU_ATR_VIW |

Fusion

View Attributes |

The user mapped to this function can

View Attributes |

FU_EXP_ADD |

Fusion

Add Expressions |

The user mapped to this function can

Create New Expressions |

FU_EXP_CPY |

Fusion

Copy Expressions |

The user mapped to this function can

Copy Expressions |

FU_EXP_DD |

Fusion

View Dependency Expressions |

The user mapped to this function can

View Dependent Data for Expressions |

FU_EXP_DEL |

Fusion

Delete Expressions |

The user mapped to this function can

Delete Expressions |

FU_EXP_EDT |

Fusion

Edit Expressions |

The user mapped to this function can

Edit Expressions |

FU_EXP_HP |

Fusion

Expressions Home

Page |

The user mapped to this function can

view Expressions Home Page |

FU_EXP_VIW |

Fusion

View Expressions |

The user mapped to this function can

View Expressions |

FU_FIL_ADD |

Fusion

Add Filters |

The user mapped to this function can

Create New Filters |

FU_FIL_CPY |

Fusion

Copy Filters |

The user mapped to this function can

Copy Filters |

FU_FIL_DD |

Fusion

Filters - View Dependent Data |

The user mapped to this function can

View Dependent Data for Filters |

FU_FIL_DEL |

Fusion

Delete Filters |

The user mapped to this function can

Delete Filters |

FU_FIL_EDT |

Fusion

Edit Filters |

The user mapped to this function can

Edit Filters |

FU_FIL_HP |

Fusion

Filters Home Page |

The user mapped to this function can

view Filters Home Page |

FU_FIL_SQL |

Fusion

Filters - View SQL |

The user mapped to this function can

view SQL for Filters |

FU_FIL_VIW |

Fusion

View Filters |

The user mapped to this function can

View Filters |

FU_HIE_ADD |

Fusion

Add Hierarchies |

The user mapped to this function can

Create New Hierarchies |

FU_HIE_CPY |

Fusion

Copy Hierarchies |

The user mapped to this function can

Copy Hierarchies |

FU_HIE_DD |

Fusion

Hierarchies - View Dependent Data |

The user mapped to this function can

View Dependent Data for Hierarchies |

FU_HIE_DEL |

Fusion

Delete Hierarchies |

The user mapped to this function can

Delete Hierarchies |

FU_HIE_EDT |

Fusion

Edit Hierarchies |

The user mapped to this function can

Edit Hierarchies |

FU_HIE_HP |

Fusion

Hierarchy Home Page |

The user mapped to this function can

view Hierarchy Home Page |

FU_HIE_UMM |

Fusion

Hierarchies to UMM Mapping |

The user mapped to this function can

Map Fusion Hierarchies to UMM Hierarchies |

FU_HIE_VIW |

Fusion

View Hierarchies |

The user mapped to this function can

View Hierarchies |

FU_MEM_ADD |

Fusion

Add Members |

The user mapped to this function can

Create New Members |

FU_MEM_CPY |

Fusion

Copy Members |

The user mapped to this function can

Copy Members |

FU_MEM_DD |

Fusion

Members - View Dependent Data |

The user mapped to this function can

View Dependent Data for Members |

FU_MEM_DEL |

Fusion

Delete Members |

The user mapped to this function can

Delete Members |

FU_MEM_EDT |

Fusion

Edit Members |

The user mapped to this function can

Edit Members |

FU_MEM_HP |

Fusion

Member Home Page |

The user mapped to this function can

view Member Home Page |

FU_MEM_VIW |

Fusion

View Members |

The user mapped to this function can

View Members |

FU_MIG_ADD |

Object

Migration Create Migration Ruleset |

The user mapped to this function can

Create Migration Ruleset |

FU_MIG_CFG |

Object

Migration Source Configuration |

The user mapped to this function can

manipulate Source Configuration |

FU_MIG_CPY |

Object

Migration Copy Migration Ruleset |

The user mapped to this function can

Object Migration Edit Migration RulesetCopy Migration Ruleset |

FU_MIG_CRN |

Cancel

Migration Execution |

The user mapped to this function can

Cancel migration execution |

FU_MIG_DEL |

Object

Migration Delete Migration Ruleset |

The user mapped to this function can

Delete Migration Ruleset |

FU_MIG_EDT |

Object

Migration Edit Migration Ruleset |

The user mapped to this function can

Edit Migration Ruleset |

FU_MIG_HP |

Object

Migration Home Page |

The user mapped to this function can

Object Migration Link |

FU_MIG_RUN |

Execute/Run

Migration Process |

The user mapped to this function can

Run the migration process |

FU_MIG_VCF |

Object

Migration ViewSource Configuration |

The user mapped to this function can

view Source Configuration |

FU_MIG_VIW |

Object

Migration View Migration Ruleset |

The user mapped to this function can

View Migration Ruleset |

FU_SQL_ADD |

SQL

Rule Add |

This function is for SQL Rule Add |

FU_SQL_CPY |

SQL

Rule Copy |

This function is for SQL Rule Copy |

FU_SQL_DEL |

SQL

Rule Delete |

This function is for SQL Rule Delete |

FU_SQL_EDT |

SQL

Rule Edit |

This function is for SQL Rule Edit |

FU_SQL_RUN |

SQL

Rule Run |

This function is for SQL Rule Run |

FU_SQL_VIW |

SQL

Rule View |

This function is for SQL Rule View |

GMVDEF |

GMV

Definition |

The user mapped to this function can

view/add the

General Market Variable definitions |

GSTMNU |

Menu

for Guest User |

Menu for Guest User |

HCYADD |

Add

Hierarchy |

The user mapped to this function can

add hierarchies |

HCYATH |

Authorize

Hierarchy |

The user mapped to this function can

authorize hierarchies |

HCYDEL |

Delete

Hierarchy |

The user mapped to this function will

have rights to delete hierarchies |

HCYMOD |

Modify

Hierarchy |

The user mapped to this function can

modify hierarchies |

HCYVIW |

View

Hierarchy |

The user mapped to this function can

view hierarchies |

HOLMAINT |

Holiday

Maintenance Screen |

The user mapped to this function can

access the Holiday Maintenance Screen |

HSEC |

Hierarchy

Security |

The user mapped to this function will

have access to hierarchy security settings |

IBMADD |

Import

Business Model |

The user mapped to this function can

import business models |

IMPMD |

Import

Metadata |

The user mapped to this function can

Import Metadata |

IND |

Information

Domain |

The user mapped to this function will

have access to Information Domain details |

KPIATH |

Authorize

KPIs |

The user mapped to this function can

authorize KPIs |

LOCDESC |

Locale

Desc Upload

Screen |

The user mapped to this function can

access the Locale Desc Upload Screen |

MDDIFF |

Metadata

Difference Screen |

The user mapped to this function can

access the Metadata Difference Screen |

MDLAUTH |

Model

Authorize |

The user mapped to this function can

Authorize Model Maintenance |

MDLCALIB |

Model

Calibration |

The user mapped to this function can

view/add the

Model Calibration screen |

MDLCHAMP |

Model

Make Champion |

The user mapped to this function can

view the Champion Challenger screen |

MDLDEF |

Model

Definition |

The user mapped to this function can

view/add the Model definitions |

MDLDEPLOY |

Model

Deployment |

The user mapped to this function can

access the Model Deployment screen |

MDLEXEC |

Model

Execution |

The user mapped to this function can

access the Model Execution screen |

MDLOUTPUT |

Model

Outputs |

The user mapped to this function can

view the Model Outputs |

MDMP |

Metadata

Segment Map |

The user mapped to this function will

have rights to perform metadata segment mapping |

METVIW |

View

Metadata |

The user mapped to this function can

access metadata browser |

MODPROCESS |

Modify

Process Tree |

The user mapped to this function can

modify Process Tree |

MODRDM |

Modify

RDM |

The user mapped to this function can

Modify RDM |

MODRULE |

Modify

Rule |

The user mapped to this function can

modify the rules |

MODRUN |

Modify

Run |

The user mapped to this function can

modify run |

MOD_MAP |

Modify

Map |

The user mapped to this function can

SAVE Map definitions |

MSRADD |

Add

Measure |

The user mapped to this function can

add measures |

MSRATH |

Authorize

Measure |

The user mapped to this function can

authorize measures |

MSRDEL |

Delete

Measure |

The user mapped to this function will

have rights to delete measures |

MSRMOD |

Modify

Measure |

The user mapped to this function can

modify measures |

MSRVIW |

View

Measure |

The user mapped to this function can

view measures |

NVATH |

Authorize

Nested Views |

The user mapped to this function can

authorize Nested Views |

OLAPDETS |

OLAP

Details Screen |

The user mapped to this function can

access the OLAP Details Screen |

OPRADD |

Create

Batch |

The user mapped to this function will

have rights to define batches |

OPRCANCEL |

Batch

Cancellation |

The user mapped to this function can

Cancel Batch |

OPRDEL |

Delete

Batch |

The user mapped to this function will

have rights to delete batches |

OPREXEC |

Execute

Batch |

The user mapped to this function will

have rights to run, restart and rerun batches |

OPRMON |

Batch

Monitor |

The user mapped to this function will

have rights to monitor batches |

OPTDEF |

Optimizer

Add |

The user mapped to this function can

view/add the Optimizer definitions |

OPTDEL |

Optimizer

Delete |

The user mapped to this function can

delete the Optimizer definitions |

ORACBADD |

Add

Oracle Cube |

The user mapped to this function can

add Oracle cubes |

ORACBATH |

Authorize

Oracle Cube |

The user mapped to this function can

authorize Oracle cubes |

ORACBDEL |

Delete

Oracle Cube |

The user mapped to this function will

have rights to delete Oracle cubes |

ORACBMOD |

Modify

Oracle Cube |

The user mapped to this function can

modify Oracle cubes |

ORACBVIW |

View

Oracle Cube |

The user mapped to this function can

view Oracle cubes |

PGATH |

Authorize

Pages |

The user mapped to this function can

authorize Pages |

POOLDEF |

Pooling

Add |

The user mapped to this function can

view/add the Pooling definitions |

POOLDEL |

Pooling

Delete |

The user mapped to this function can

delete the Pooling definitions |

PR2SCREEN |

PR2

Screens |

The user mapped to this function can

access PR2 screens |

PROFMAINT |

Profile

Maintenance Screen |

The user mapped to this function can

access the Profile Maintenance Screen |

REPATH |

Authorize

Reports |

The user mapped to this function can

authorize Reports |

RESTPASS |

Restricted

Passwords Screen |

The user mapped to this function can

access the Restricted Passwords Screen |

RLSETCFG |

Rules

Setup Configuration Screen |

The user mapped to this function can

access the Rules Setup Configuration Screen |

ROLEMAINT |

Role

Maintenance Screen |

The user mapped to this function can

access the Role Maintenance Screen |

RULESHKDEF |

Rule

Shock Definition |

The user mapped to this function can

define the rule shocks |

SANDBXAUTH |

Sandbox

Authorize |

The user mapped to this function can

Authorize a Sandbox Maintenance |

SANDBXCR |

Sandbox

Creation |

The user mapped to this function can

view/add the

Sandbox definitions |

SANDBXMOD |

Sandbox

Maintenance |

The user mapped to this function can

view the Sandbox Maintenance |

SAVEMD |

Save

Metadata Screen |

The user mapped to this function can

access the Save Metadata Screen |

SCNDEF |

Scenario

Definition |

The user mapped to this function can

define the scenarios |

SCRBAU |

Business

Analyst User Screen |

The user mapped to this function can

access the business analyst user screen |

SCRDES |

Access

to Designer |

The User mapped to this function will

have access to Designer |

SCROPC |

Operator

Console |

The user mapped to this function will

have access to the operator console |

SCRPRT |

Portal

User |

The user mapped to this function will

be a portal user |

SCRRUN |

Access

to Runner |

The User mapped to this function will

have access to Runner |

SCRSAU |

System

Administrator Screen |

The user mapped to this function can

access system administrator screens |

SCRVIEW |

Access

to Viewer |

The User mapped to this function will

have access to Viewer |

SCR_ROR |

Access

to Operational Risk |

The user mapped to this function can

access Operational Risk |

SEGMAINT |

Segment

Maintenance Screen |

The user mapped to this function can

access the Segment Maintenance Screen |

STRESSDEF |

Stress

Definition |

The user mapped to this function can

define the stress |

SYSADM |

System

Administrator |

The user mapped to this function will

be a system administrator |

SYSATH |

System

Authorizer |

The user mapped to this function will

be a system authorizer |

TEMPATH |

Authorize

Templates |

The user mapped to this function can

authorize Templates |

TRANS_OWNR |

Access

to Transfer Ownership |

The User mapped to this function will

have access to Transfer Portal Objects |

TSK_MNU |

Access

to My Tasks |

The user mapped to this function can

access My Tasks |

UGDOMMAP |

User

Group Domain Map Screen |

The user mapped to this function can

access the User Group Domain Map Screen |

UGMAINT |

User

Group Maintenance Screen |

The user mapped to this function can

access the User Group Maintenance Screen |

UGMAP |

User

Group User Map Screen |

The user mapped to this function can

access the User Group User Map Screen |

UGROLMAP |

User

Group Role Map Screen |

The user mapped to this function can

access the User Group Role Map Screen |

USRACTREP |

User

Activity Reports Screen |

The user mapped to this function can

access the User Activity Reports Screen |

USRATH |

User

Authorization Screen |

The user mapped to this function can

access the User Authorization Screen |

USRATTUP |

User

Attribute Upload Screen |

The user mapped to this function can

access the User Attribute Upload Screen |

USRBATMAP |

User-Batch

Execution Mapping Screen |

The user mapped to this function can

access the User-Batch Execution Mapping Screen |

USRMAINT |

User

Maintenance Screen |

The user mapped to this function can

access the User Maintenance Screen |

USRPROFREP |

User

Profile Report Screen |

The user mapped to this function can

access the User Profile Report Screen |

VARDEF |

Variable

Definition |

The user mapped to this function can

view/add the Variable definitions. |

VARSHKDEF |

Variable

Shock Definition |

The user mapped to this function can

define the variable shocks |

VARTRANS |

Variable

Transformation |

The user mapped to this function can

view and add the Variable Transformation screen |

VIEWLOG |

View

log |

The user mapped to this function will

have rights to view log |

VIEWPROC |

View

Process |

The user mapped to this function can

view the process tree definitions |

VIEWRULE |

View

Rule |

The user mapped to this function can

view the rules definitions |

VIEWRUN |

View

Run |

The user mapped to this function can

view the run definitions |

VIEW_HOME |

View

OFSAAI LHS Menu |

The user mapped to this function can

view main LHS menu |

VIWATH |

Authorize

Views |

The user mapped to this function can

authorize Views |

VIWRDM |

View

RDM |

The user mapped to this function can

view RDM |

VSDEF |

VariableSet

Definition |

The user mapped to this function can

define the variablesets |

WEBSRVR |

Web

Server Screen |

The user mapped to this function can

access the Web Server Screen |

WRTPR_BAT |

Write-Protected

Batch Screen |

The user mapped to this function can

access the Write-Protected Batch Screen |

XLADMIN |

Excel

Admin |

The user mapped to this function can

define Excel Mapping |

XLUSER |

Excel

User |

The user mapped to this function can

Upload Excel Data |

Back to Top

Role Mapping

Codes

By default, the following roles are defined within the Infrastructure

application:

Role

Code |

Role

Name |

Role

Description |

CWSADMIN |

CWS

Administrator |

CWS

Administrator Role |

DEFQMAN |

DEFQ

Manager |

Data

Entry Forma and Query Manager Role |

DQADMN |

DQ

Rule Admin |

Data

Quality Rule Admin Role |

ETLADM |

ETL

Analyst |

ETL

Analyst Role |

METAAUTH |

Metadata

Authorizer |

Metadata

Authorizer Role |

ORACUB |

Oracle

Cube Administrator |

Oracle

Cube Administrator Role |

PR2ADM |

PR2

Administrator |

PR2

Administrator Role |

SYSADM |

System

Administrator |

System

Administrator Role |

SYSAMHM |

Fusion

AMHM Admin |

Fusion

Dimension Maintenance Admin Role |

SYSAMHMUMM |

Fusion

AMHM UMM Map Admin |

Fusion

UMM Maintenance Admin Role |

SYSATH |

System

Authorizer |

System

Authorizer Role |

SYSBAU |

Business

Analyst |

Business

Analyst Role |

SYSEXPN |

Fusion

Expressions Admin |

Fusion

Expressions Admin Role |

SYSFILTERS |

Fusion

Filters Admin |

Fusion

Filters Admin Role |

SYSOBJMIG |

Object

Migration Admin |

Object

Migration Maintenance Admin Role |

SYSOPC |

Data

Centre Manager |

Operator

Console Role |

SYSSQLRULE |

SQL

Rule Admin |

SQL

Rule Administrator Role |

Back to Top

Function

Role Mapping

The default roles are mapped to the following functions within the Infrastructure

application.

Roles |

Function

Mappings |

Business

Analyst |

Add Alias

Add Attributes

Add Business Processor

Add Computed Measure

Add Cube

Add Dataset

Add Derived Entities

Add Dimension

Add Hierarchy

Add Measure

Add RDM

Alias Admin

Authorize Hierarchy

Authorize Attributes

Authorize Dataset

Authorize Dimension

Authorize Measure

Business Analyst User Screen

Call Remote Web Services

Cash Flow Equation Definition

Computed Measure Advanced

Defi Administrator

Defi User

Delete Alias

Delete Attributes

Delete Business Processor

Delete Computed Measure

Delete Cube

Delete Dataset

Delete Derived Entities

Delete Dimension

Delete Hierarchy

Delete Measure

Delete RDM

Design RDM

Document management Access

Excel Admin

Excel User

Execute Runs and Rules

Export Metadata

GMV Definition

Hierarchy Attributes

Import Business Model

Import Metadata |

Model Calibration

Model Definition

Model Deployment

Model Execution

Model Make Champion

Model Outputs

Modify Alias

Modify Attributes

Modify Business Processor

Modify Computed Measure

Modify Cube

Modify Dataset

Modify Derived Entities

Modify Dimension

Modify Hierarchy

Modify Measure

Modify RDM

Optimizer Add

Optimizer Delete

Pooling Add

Pooling Delete

Refresh Hierarchies

Remote SMS Access

Result of own request only

Result of Request and Status of all

Rule Shock Definition

Sandbox Creation

Sandbox Maintenance

Scenario Definition

Stress Definition

Variable Definition

Variable Shock Definition

View Alias

View Attributes

View Business Processor

View Computed Measures

View Cube

View Dataset

View Derived Entities

View Dimension

View Hierarchy

View Measure

View Metadata

View RDM |

CWS Administrator |

Call Remote Web Services

Document Management Access

Execute Runs - Rules

Refresh Hierarchies

Remote SMS Access

Remote UMM Access

Result of own request only

Result of request - Status of all |

Data

Centre Manager |

Batch Cancellation

Batch Group Creation

Batch Group Execution

Batch Group Monitor

Batch Group Restart

Batch Monitor

Batch Processing

Create Batch

Delete Batch

Execute Batch

Operator Console

View log |

DEFQ Manager |

DeFi

Excel

Defq

User

Defq

Administrator |

DQ Rule Admin |

Data

Quality Delete Rule

Data

Quality Authorization Rule

Data

Quality Add Rule

Data

Quality Edit Rule

Data

Quality Copy Rule

Data

Quality Execute Rule Group

Data

Quality View Rule Group

Data

Quality Copy Rule Group

Data

Quality Delete Rule Group

Data

Quality Add Rule Group

Data

Quality View Rule

Data

Quality Edit Rule Group |

ETL

Analyst |

DI

Designer

DTDQ

Data

Quality Add

DI

User |

Fusion

AMHM Admin |

Fusion

Add Attributes

Fusion

Add Hierarchies

Fusion

Add Members

Fusion

Attribute Home Page

Fusion

Attributes - View Dependent Data

Fusion

Copy Attributes

Fusion

Copy Hierarchies

Fusion

Copy Members

Fusion

Delete Attributes

Fusion

Delete Hierarchies

Fusion

Delete Members

Fusion

Edit Attributes

Fusion

Edit Hierarchies

Fusion

Edit Members

Fusion

Hierarchies - View Dependent Data

Fusion

Hierarchy Home Page

Fusion

Member Home Page

Fusion

Members - View Dependent Data

Fusion

View Attributes

Fusion

View Hierarchies

Fusion

View Members |

Fusion

AMHM UMM Map Admin |

Fusion

Hierarchies to UMM Mapping |

Fusion

Expressions Admin |

Fusion

Add Expressions

Fusion

Copy Expressions

Fusion

Delete Expressions

Fusion

Edit Expressions

Fusion

Expressions Home Page

Fusion

View Dependency Expressions

Fusion

View Expressions |

Fusion

Filters Admin |

Fusion

Add Filters

Fusion

Copy Filters

Fusion

Delete Filters

Fusion

Edit Filters

Fusion

Filters - View Dependent Data

Fusion

Filters - View SQL

Fusion

Filters Home Page

Fusion

View Filters |

Infrastructure

Administrator |

Configuration

Database

Details

Database

Server

Hierarchy

Security

Information

Domain

Metadata

Segment Map

Operator

Console

Infrastructure

Administrator

Infrastructure

Administrator Screen |

Metadata

Authorizer |

Authorize

Alias

Authorize

Attributes

Authorize

BBs

Authorize

Business Processor

Authorize

Computed Measure

Authorize

Cube

Authorize

Dataset

Authorize

DBs

Authorize

Derived Entities

Authorize

Dimension

Authorize

Hierarchy

Authorize

KPIs

Authorize

Measure

Authorize

Nested Views

Authorize

Pages

Authorize

Process Tree

Authorize

RDM

Authorize

Reports

Authorize

Rule

Authorize

Run

Authorize

Templates

Authorize

Views

Metadata

Authorize Screen

Model

Authorize

Sandbox

Authorize

View

Alias

View

Attributes

View

Business Processor

View

Computed Measures

View

Cube

View

Dataset

View

Derived Entities

View

Dimension

View

Hierarchy

View

Measure

View

Process

View

RDM

View

Rule

View

Run |

Object

Migration Admin |

Cancel

Migration Execution

Execute/Run

Migration Process

Object

Migration Copy Migration Ruleset

Object

Migration Create Migration Ruleset

Object

Migration Delete Migration Ruleset

Object

Migration Edit Migration Ruleset

Object

Migration Home Page

Object

Migration Source Configuration

Object

Migration View Migration Ruleset

Object

Migration ViewSource Configuration |

Oracle

Cube Administrator |

Add

Dataset

Add

Dimension

Add

Hierarchy

Add

Measure

Add

Oracle Cube

Authorize

Oracle Cube

Business

Analyst User Screen

Delete

Oracle Cube

Modify

Dataset

Modify

Dimension

Modify

Hierarchy

Modify

Measure

Modify

Oracle Cube

View

Alias

View

Dataset

View

Dimension

View

Hierarchy

View

Measure

View

Oracle Cube |

PR2

Administrator |

Access

to Process

Access

to Rule

Access

to Run

Add

Process tree

Add

Rule

Add

Run

Delete

Process

Delete

Rule

Delete

Run

Modify

Process Tree

Modify

Rule

Modify

Run

PR2

Screens

View

Process

View

Rule

View

Run |

SQL

Rule Admin |

SQL

Rule Edit

SQL

Rule View

SQL

Rule Add

SQL

Rule Run

SQL

Rule Delete

SQL

Rule Copy |

System

Administrator |

Administration

Screen

Application

Server Screen

Audit

Trail Report Screen

Batch

Cancellation

Batch

Monitor

Configuration

Database

Details

Database

Server

Design

OFSAAI Menu Screen

Enable

User Screen

Function

Maintenance Screen

Function

Role Map Screen

Hierarchy

Security

Holiday

Maintenance Screen

Information

Domain

Locale

Desc Upload Screen

Metadata

Difference Screen

Metadata

Segment Map

OLAP

Details Screen

Operator

Console

Restricted

Passwords Screen

Role

Maintenance Screen

Rules

Setup Configuration Screen

Save

Metadata Screen

Segment

Maintenance Screen

System

Administrator

System

Administrator Screen

User

Activity Reports Screen

User

Attribute Upload Screen

User

Group Domain Map Screen

User

Group Maintenance Screen

User

Group Role Map Screen

User

Group User Map Screen

User

Maintenance Screen

User

Profile Report Screen

User-Batch

Execution Mapping Screen

View

log

Web

Server Screen

Write-Protected

Batch Screen |

System

Authorizer |

Administration

Screen

Infrastructure

Administrator Screen

Profile

Maintenance Screen

System

Administrator Screen

System

Authorizer

User

Authorization Screen

Note: To access an object, the respective

Group or Role needs to be mapped instead of functions. |

Back to Top

SMS

Auto Authorization

If auto authorization is enabled, the system authorizer needs not to

manually authorize the user- user group mapping, user group-domain mapping,

user group-role mapping and user group-role-folder mapping. The mappings

get authorized automatically.

To enable auto authorization

Execute the following query in the Configuration Schema:

UPDATE CONFIGURATION SET PARAMVALUE ='TRUE'

WHERE PARAMNAME='SMS_AUTOAUTH_REQD'

Restart the OFSAA server.

Task

Component Parameters

Components are individual functional units that are put together to

form a process. Task Component Parameters reflect the parameters that

are being applied to the selected task. Each component triggers its own

set of processes in the back-end to achieve the final output.

Click on the following components to view the tabulated view of the

parameters required for each of them:

Component:

AGGREGATE DATA

Property |

Description |

Datastore

Type |

Refers

to the type of data store such as Enterprise Data Warehouse (EDW)

which refers to the Multi-dimensional Database/Cubes. |

Datastore

Name |

Refers to the name of the Information Domain.

Click the drop down list in the Value column to select the Information

Domain.

The unique combination of the Datastore

Name and the Datastore Type determine

the physical machine on which the task will be executed. It is

assumed that the user gives the correct information else task

invocations may fail at runtime. |

IP Address |

Refers

to the IP Address of the machine on which Infrastructure Database

Components have been installed. Click the drop down list box in

the Value column to select the desired IP address. |

Cube Parameter |

Refers

to the cube identifier as defined through the Business Metadata

(Cube) menu option. Click the field in the Value column to select

the cube code. |

Operation |

Refers

to the operation to be performed. Click the drop-down list in

the Value field to select the Operation. The available options

are ALL, GENDATAFILES

and GENPRNFILES. |

Optional

parameters |

Refers

to the additional parameter that has to be processed during runtime.

You can specify the runsk value that should be processed as a

runtime parameter during execution. By default, the value is set

to "null". |

Back to Top

Component:

CREATE CUBE

Property |

Description |

Datastore

Type |

Refers

to the type of data store such as Enterprise Data Warehouse (EDW)

which refers to the Multi-dimensional Database/Cubes. |

Datastore

Name |

Refers to the name of the Information Domain.

Click the drop down list in the Value column to select the Information

Domain.

The unique combination of the Datastore

Name and the Datastore Type determine

the physical machine on which the task will be executed. It is

assumed that the user gives the correct information else task

invocations may fail at runtime. |

IP Address |

Refers

to the IP Address of the machine on which Infrastructure Database

Components have been installed. Click the drop down list box in

the Value column to select the desired IP address. |

Cube Parameter |

Refers

to the cube identifier as defined through the Business Metadata

(Cube) menu option. Click the field provided in the Value column

to select the cube code. |

Operation |

Refers

to the operation to be performed. Click the drop down list to

select the Operation.

ALL - This option will execute

BUILDDB and DLRU. BUILDDB - This option should

be used to build the outline in Essbase Cube. The outline

is built based on the parentage file(s) contents. TUNEDB - This option should

be used to analyze data and optimize cube settings. For example,

if you are trying to achieve the best block size, where 64K

bytes is the ideal size. PROCESSDB - This option

will execute BUILDDB and DLRU, and is same as All option.

Selecting this option will internally assign as ALL. DLRU - This option

should be used to Load Data in the Essbase Cube and trigger

a Rollup. ROLLUP - ROLLUP refers to

populating data in parent nodes based on calculations (E.g.

Addition). This option should be used to trigger just the

ROLLUP option where in the CALC scripts are executed. The

same is applicable for DLRU option also. VALIDATE - This option will

validate the outline. DELDB - This option will

delete the Essbase cube. OPTSTORE - This option will

create the Optimized outline for the cube. |

Back to Top

Component:

EXTRACT DATA

Property |

Description |

Datastore

Type |

Refers

to the type of data store such as Enterprise Data Warehouse (EDW)

which refers to the Multi-dimensional Database/Cubes. |

Datastore

Name |

Refers to the name of the Information Domain.

Click the drop down list in the Value column to select the Information

Domain.

The unique combination of the Datastore

Name and the Datastore Type determine

the physical machine on which the task will be executed. It is

assumed that the user gives the correct information else task

invocations may fail at runtime. |

IP Address |

Refers

to the IP Address of the machine on which Infrastructure Database

Components have been installed. Click the drop down list box in

the Value column to select the desired IP address. |

Source

Name |

Identifies

the Source from which the Extract is derived. This is defined

in the Define Source Screen of Data Integrator. Select the source

name from the drop down list. |

Extract

Name |

Identifies

the extract file definition file for the given source. This is

defined in the Define Extract screen of Data Integrator. |

Back to Top

Component:

LOAD DATA

Property |

Description |

Datastore

Type |

Refers

to the type of data store such as Enterprise Data Warehouse (EDW)

which refers to the Multi-dimensional Database/Cubes. |

Datastore

Name |

Refers to the name of the Information Domain.

Click the drop down list in the Value column to select the Information

Domain.

The unique combination of the Datastore

Name and the Datastore Type determine

the physical machine on which the task will be executed. It is

assumed that the user gives the correct information else task

invocations may fail at runtime. |

IP Address |

Refers

to the IP Address of the machine on which Infrastructure Database

Components have been installed. Click the drop down list box in

the Value column to select the desired IP address. |

Load Mode |

Refers

to the mode which user wants to work, which means the user, can

transfer data from Table to Table or File to Table. |

Source

Name |

Identifies

the Source from which the extract is derived. This is defined

in the Define Source Screen of Data Integrator. Select the source

name from drop down list. |

File Name |

Identifies

the Data File Mapping (F2T) definition name or Data Mapping (T2T)

definition name as defined for the given source. This can be different

from the data file. File Name is defined in the Data File Mapping

window (F2T) or Data Mapping (T2T) window of Data Management Tools

framework. Select the file name from the drop down list. |

Data File

Name |

The

data filename refers to the .dat file that exists in the database.

Specifying Data File Name is mandatory for F2T definition and

optional in case of T2T definition. If the file name or the .dat

file name is incorrect, the task fails during execution. |

Default

Value |

Used

to pass values to the parameters defined in Load Data Definition.

You can pass multiple runtime parameters while defining a batch

by specifying the values separated by 'comma'.

For example, $MIS_DATE=value,$RUNSKEY=value,[DLCY]=value and

so on.

Note the following:

The

parameters can either be specified with $

or within [ ]. For

example, $RUNSKEY=value or [RUNSKEY]=value. When

the definition is saved from the UI, no value is assigned

to these parameters and these are just passed for syntax correctness

only. Actual values will be passed to these parameters while

defining an ICC batch or a RUN. The

list of valid Default Parameters are:

RUNID- Data type is String and can be mapped to

VARCHAR2 PHID- Data type is String and can be mapped to VARCHAR2 EXEID- Data type is String and can be mapped to

VARCHAR2 RUNSK- Data type is Integer and can be mapped to

VARCHAR2 or INTEGER. SYSDATE- Data type is Date and can be mapped to

DATE, VARCHAR2. TASKID- Data type is String and can be mapped to

VARCHAR2 MISDATE- Data type is Date and can be mapped to

DATE, VARCHAR2.

Note:

RUNID, PHID, EXEID, RUNSK, MISDATE are implicitly passed through

RRF. Rest must be explicitly passed.

EXEC_ENV_SOURCE- This parameter is used to replace

the source of the T2T, T2H, H2T or H2H definition during

run time, provided the structure of the source in the

mapping definition is same as that of the replacing source.

Thus you can convert a T2T definition into H2T or T2H

into H2H or H2H into T2H. But the resultant definition

should not be T2T, which is not supported.

For example, [EXEC_ENV_SOURCE]=newSourceName

EXEC_ENV_TARGET- This parameter

is used to replace the target of the T2T, T2H, H2T or H2H

definition during run time, provided the structure of the

target in the mapping definition is same as that of the replacing

target. Thus you can convert a T2T definition into T2H or

H2T into H2H or H2H into H2T. But the resultant definition

should not be T2T, which is not supported.

For example, [EXEC_ENV_TARGET]=newTargetName

Note:

You can use both EXEC_ENV_SOURCE and EXEC_ENV_TARGET together

as well. Only limitation is the resultant definition should not

be T2T.

Note:

If you are converting a mapping definition to T2H using EXEC_ENV_SOURCE/EXEC_ENV_TARGET,

there is no provision in UI to specify the Split By Column/Generic

Options. In such scenarios, execution via Sqoop may fail, when

the split by column is defaulted to a string/date column.

EXECUTION_ENGINE_MODE-

This parameter is used to execute H2H on Spark. For example,

[EXECUTION_ENGINE_MODE]=SPARK CLOSE_SPARK_SESSION-

This parameter is used to close the Spark session after

executing the last H2H-Spark task in the batch.

In a batch execution, a new Spark

session is created when the first H2H-Spark task is encountered,

and the same Spark session is reused for the rest of the H2H-Spark

tasks in the same run. For the Spark session to close at the end

of the run, user needs to set the CLOSE_SPARK_SESSION to YES in

the last H2H-spark task in the batch.

For example, [CLOSE_SPARK_SESSION]=YES

Note that the

value should not contain : /*+ */. Only the content

should be given.

Note:

ALTER keyword is not supported.

Note that the value should not contain

: /*+ */. Only the content should be given.

Note:

ALTER keyword is not supported.

Apart from these, L2H/H2H/T2H/H2T/F2H data mappings also support

following additional default parameters. Values for these are

implicitly passed from ICC/RRF.

$MISDT_YYYY-MM-DD - Data type is String and can

be mapped to VARCHAR2. Value will be the MISDATE in ‘yyyy-MM-dd‘

format. $MISYEAR_YYYY - Data type is String and can be mapped

to VARCHAR2. Value will be the year value in ‘yyyy‘ format

from MISDATE. $MISMONTH_MM - Data type is String and can be mapped

to VARCHAR2. Value will be the month value in ‘MM‘ format

from MISDATE. $MISDAY_DD - Data type is String and can be mapped

to VARCHAR2. Value will be the date value in ‘dd‘ format

from MISDATE. $SYSDT_YYYY-MM-DD- Data type is String and can be

mapped to VARCHAR2. Value will be the System date in ‘yyyy-MM-dd‘

format. $SYSHOUR_HH24 - Data type is String and can be mapped

to VARCHAR2. Value will be the hour value in ‘HH24‘ format

from System date.

Note: The aforementioned parameters

are not supported for T2T and F2T.

- Only those variable which start with $ or [, will be replaced

at run time and the value of this variable will be equal to

anything starting after “=” and ending before comma “,”.

For example, if $DCCY/[DCCY] =’USD’,

$RUNSKEY=1, then the replaced value in query for $DCCY will be

‘USD’ and for $RUNSKEY will be 1.

If you are using “RUNSKEY” parameter in ICC Batch, then

ensure that you specify the value of it instead of specifying

$RUNSKEY / [RUNSKEY]. For example, FCT_STANDARD_ACCT_HEAD.N_RUN_SKEY=’$RUNSKEY’.

Since the value of RUNSKEY will not be replaced during runtime. If there are quotes specified in parameter name, then

ensure not to use quotes while defining the expression or

vice versa to avoid SQL errors. For example, if the parameter

name is $DCCY=’USD’ and the expression is defined using ‘$DCCY’

instead of $DCCY, then the final value will be ‘ ‘USD’ ’. When you execute a RUN, the run is always tagged with

a RUNSK value (a unique value for each run fired directly

from the RRF). You might have a DERIVED COLUMN in your T2T

with expression like $RUNSK. If you execute this T2T through

a RUN, a unique RUNSK value is passed implicitly to the T2T

engine, which then assigns that value wherever $RUNSK is found.

But if you try to execute the T2T through ICC, then you need

to explicitly pass a $RUNSK as a parameter so that the T2T

engine can use it.

Two additional parameters are now supported for L2H mappings:

[INCREMENTALLOAD] – Specify the value as TRUE/FALSE.

If set to TRUE, historically loaded data files will not be

loaded again (load history is checked against the definition

name, source name, target infodom, target table name and the

file name combination). If set to FALSE, the execution is

similar to a snapshot load, and everything from the source

folder/file will be loaded irrespective of load history. [FOLDERNAME] – Value provided will be used to pick up

the data folder to be loaded.

For HDFS based Weblog source: Value will be suffixed

to HDFS File Path specified during the source creation. For Local File System based Weblog source: By

default the system will look for execution date folder

(MISDATE: yyyymmdd) under STAGE/<source name>. If

the user has specified the FOLDERNAME for this source,

system will ignore the MISDATE folder and look for the

directory provided as [FOLDERNAME]. |

Back to Top

Component:

MODEL

Property |

Description |

Datastore

Type |

Refers

to the type of data store such as Enterprise Data Warehouse (EDW)

which refers to the Multi-dimensional Database/Cubes. |

Datastore

Name |

Refers to the name of the Information Domain.

Click the drop down list in the Value column to select the Information

Domain.

The unique combination of the Datastore

Name and the Datastore Type determine

the physical machine on which the task will be executed. It is

assumed that the user gives the correct information else task

invocations may fail at runtime. |

IP Address |

Refers

to the IP Address of the machine on which Infrastructure Database

Components have been installed. Click the drop down list box in

the Value column to select the desired IP address. |

Model Code |

Refers

to the model that has to be processed. This is a system generated

code that is assigned at the time of model definition. |

Operation |

The All definition for the Operation field

conveys the process of extracting the data from the flat files

and applying the run regression on the data extracted.

For Batches that are being built for the first

time the data will be extracted from the flat files and the run

regression will be applied on it. |

Optional

Parameters |

Refers to the set of parameters specific

to the model that has to be processed. This set of parameters

is automatically generated by the system at the time of definition.

You must NOT define a Model using the Define

mode under Batch Scheduling. You

must define all models using the Modeling framework menu. |

Back to Top

Component:

PROCESS_EXECUTION

This component will combine all the rules to create single or multiple

merge queries. Only rules defined on the same dataset can be merged. For

creation of queries the current order of the rules inside the process

or sub-process will be taken into consideration. Following validations

are performed to determine single or multiple DMLs for merging Rules that

is, validation on subsequent rules.

For classification-classification

or classification-computation rule combination, the target column

of the prior classification rule must not be used in any of the subsequent

rules as source hierarchies in the executable process or sub-process.

Also the same target hierarchy must not be used as a target in the

subsequent rule.

For computation-computation

rule combination, the target measures of the prior computation rule

must not be used in any of the subsequent computation rules in the

executable process or sub-process.

All the merge queries created after satisfying all the conditions will

be executed in a single transaction.

Note the following:

RRF framework

cannot validate the semantic correctness of the rules grouped for

merge. It is left to the application developer/user to make a conscious

choice.

If the merge

results in an ill-formed or runaway SQL, the framework will not be

able to detect it at design time. This is again left to application

developer/user to design the grouping that is syntactically valid.

Property |

Description |

Datastore

Type |

Refers

to the type of data store such as Enterprise Data Warehouse (EDW)

which refers to the Multi-dimensional Database/Cubes. |

Datastore

Name |

Refers to the name of the Information Domain.

Click the drop down list in the Value column to select the Information

Domain. |

IP Address |

Refers

to the IP Address of the machine on which Infrastructure Database

Components have been installed. Click the drop down list box in

the Value column to select the desired IP address. |

Process

Code |

Display

the codes of the RRF Processes defined under the selected Infodom. |

Sub Process

Code |

Display

the codes of the Sub Processes available under the selected Process. |

Build Flag |

Select