6 Virtualized Exadata Database Machine

The Exadata plug-in discovers, manages, and monitors virtualized Exadata Database Machine in conjunction with the Virtualization Infrastructure plug-in. For details about this plug-in, see the "Direct Monitoring of Xen Based Systems" chapter of the Oracle® Enterprise Manager Cloud Administration Guide:

http://docs.oracle.com/cd/E24628_01/doc.121/e28814/direct_monitor_cs.htm#EMCLO531

The following sections describe how to discover a virtualized Exadata Database Machine and other supported targets:

Once you have completed the discovery of a virtualized Exadata Database Machine, continue with the configuration steps outlined in Post-Discovery Configuration and Verification.

6.1 Integration with Virtualization Infrastructure Plug-in

The physical server (physical Oracle Server target), Dom0 (Virtual Platform target), and DomU (virtual Oracle Server target) are discovered and monitored by the Virtualization Infrastructure (VI) plug-in.

Exadata discovery works with the VI plug-in as follows: the physical server, Dom0, and DomU are discovered using the VI plug-in before Exadata Database Machine discovery. During Exadata discovery, the discovery code looks up the existing Virtual Platform target that corresponds to the Dom0 of the compute node.

The Exadata discovery flow with VI plug-in integration includes the following checks:

- Check whether the Exadata Database Machine is virtualized, based on the configuration metric of the host target of the discovery agent.

- Check whether the VI plug-in is deployed. If not, you will be prompted to deploy it as described in the "Direct Monitoring of Xen Based Systems" chapter of the Oracle® Enterprise Manager Cloud Administration Guide:

http://docs.oracle.com/cd/E24628_01/doc.121/e28814/direct_monitor_cs.htm#EMCLO531

6.2 Discovering Virtualized Exadata Database Machine

With virtualized Exadata, one Exadata Database Machine target is created for each physical Database Machine instead of one DB Machine target for each DB cluster deployed through OEDA. Compute node, Exadata Storage Server, InfiniBand switch, compute node ILOM, PDU, KVM, and Cisco switch targets are discovered by the Exadata plug-in.

With only the compute nodes virtualized, the physical servers, Dom0, and DomU are monitored by the Virtualization Infrastructure (VI) plug-in. The Exadata plug-in integrates with the VI plug-in for discovery and target management, as discussed below.

The hardware targets in virtualized Exadata are discovered in almost the same way as physical Exadata (see Discovering an Exadata Database Machine) except as noted below:

- The Dom0 of each compute node is discovered using ibnetdiscover. Compute node ILOM to compute node mapping and VM hierarchy are obtained from the VI plug-in.

- Exadata Storage Servers are discovered using ibnetdiscover instead of kfod. Therefore, there is no need to specify the Database Oracle Home during discovery.

- InfiniBand switches are discovered using ibnetdiscover.

- Compute node ILOM, PDU, and Cisco switch are discovered based on the databasemachine.xml schematic file.

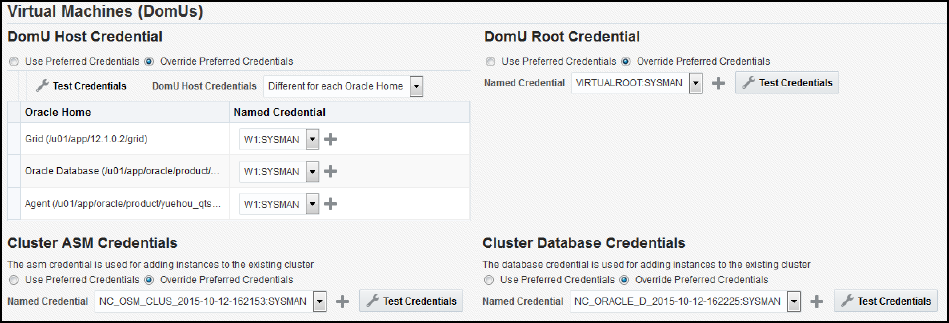

Cluster and Database Target Discovery

- Cluster and database target discovery is similar to the physical Exadata case (see Discovering Grid Infrastructure and RAC). The only difference is that the Enterprise Manager agents need to be deployed on the DomU of the Database cluster before the cluster, ASM, and database targets can be discovered using the DB plug-in.

Agent Placement

- The primary and backup Enterprise Manager agents monitoring the Exadata hardware targets should be deployed in two dedicated DomU that will not be suspended or shut down, ideally on different physical servers to ensure high availability.

- For static virtual machine configurations, the Enterprise Manager agents that are used to monitor the Database clusters can also be used to monitor the Exadata hardware.

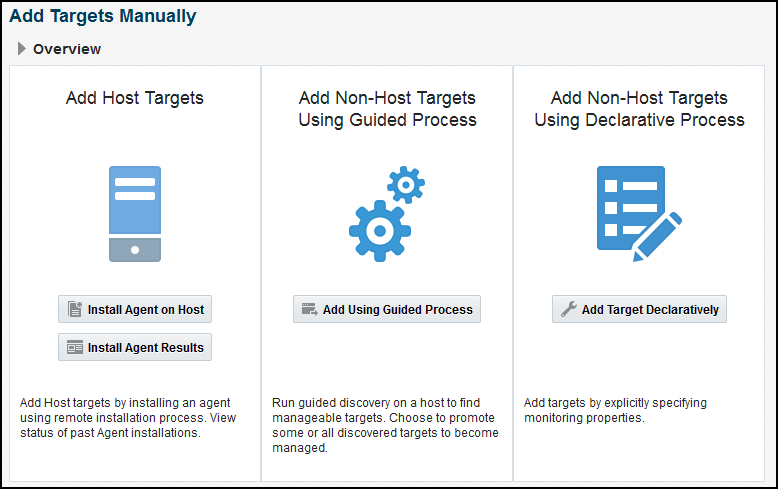

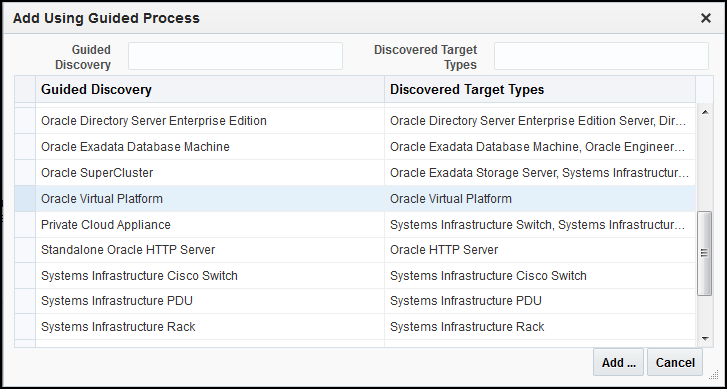

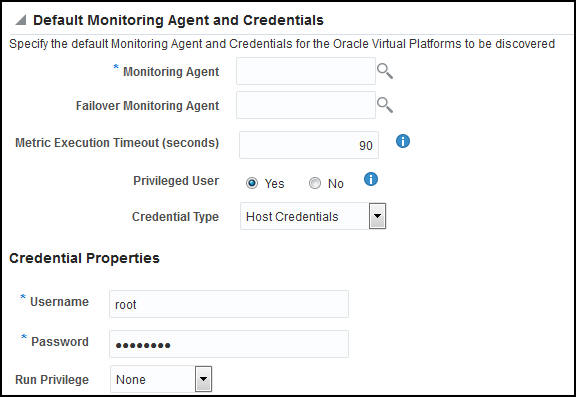

To discover a virtualized Exadata Database Machine:

Note:

The VI plug-in regularly checks whether a VM is added or deleted. The Oracle VM instance targets are auto-promoted once they are detected by the VI plug-in. If you want to monitor the OS, ASM, and DB in the VM, you need to push the Enterprise Manager agent to the VM.
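The internals of the VI plug-in's periodic check are not described here. As a rough illustration of the idea only, the following sketch compares two snapshots of VM names and reports which VMs were added or deleted between them. The `diff_vms` function and the VM names are hypothetical and are not part of any Oracle API.

```python
def diff_vms(previous, current):
    """Compare two snapshots of VM names (hypothetical data, not a VI plug-in API).

    Returns a tuple (added, removed), each a sorted list of VM names.
    """
    previous_set = set(previous)
    current_set = set(current)
    added = sorted(current_set - previous_set)    # present now, absent before
    removed = sorted(previous_set - current_set)  # present before, absent now
    return added, removed


# Hypothetical snapshots taken at two consecutive checks.
last_check = ["vm01", "vm02", "vm03"]
this_check = ["vm02", "vm03", "vm04"]

added, removed = diff_vms(last_check, this_check)
```

In this sketch, a VM that appears in `added` corresponds to a newly detected Oracle VM instance target; monitoring the OS, ASM, and DB inside it would still require pushing an Enterprise Manager agent to that VM.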

6.3 Post-Discovery Configuration

Because agents run inside DomU nodes and remotely monitor cells, ILOMs, switches, and PDUs, ensure that the primary and backup agents run in DomUs hosted on physically different servers (different Dom0s). If a Dom0 goes down, Enterprise Manager can continue to monitor these targets using the agent running on an active DomU hosted by a different Dom0 that is still running. This provides continuous monitoring even during a complete Dom0 outage.

For details on how agents monitor Exadata targets remotely, refer to Post-Discovery Configuration and Verification.
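The placement requirement above can be checked mechanically. The following is a minimal sketch, not part of the Exadata plug-in: the `domu_to_dom0` mapping, the host names, and the `agents_survive_dom0_outage` function are hypothetical and stand in for whatever inventory you keep of which DomU runs on which Dom0.

```python
def agents_survive_dom0_outage(primary_domu, backup_domu, domu_to_dom0):
    """Return True if the two agent DomUs are hosted by different Dom0s.

    domu_to_dom0 is a hypothetical inventory mapping each DomU host name
    to the Dom0 (physical server) that hosts it.
    """
    primary_dom0 = domu_to_dom0[primary_domu]
    backup_dom0 = domu_to_dom0[backup_domu]
    # A single Dom0 outage takes down both agents only if they share a Dom0.
    return primary_dom0 != backup_dom0


# Hypothetical inventory: DomU host name -> Dom0 name.
domu_to_dom0 = {
    "domu01": "dom0-a",
    "domu02": "dom0-a",
    "domu03": "dom0-b",
}

good_placement = agents_survive_dom0_outage("domu01", "domu03", domu_to_dom0)
bad_placement = agents_survive_dom0_outage("domu01", "domu02", domu_to_dom0)
```

Here `good_placement` is true because the two DomUs sit on different Dom0s, while `bad_placement` is false because both agents would be lost if `dom0-a` went down.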

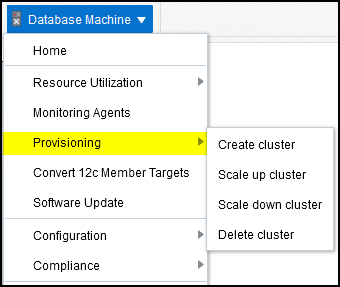

6.4 Exadata Virtualized Provisioning

Provisioning involves repeatable, reliable, automated, unattended, and scheduled mass deployment of a RAC Cluster including virtual machines (VMs), Oracle Database (DB), Grid Infrastructure, and ASM on Virtualized Exadata.

With the Exadata plug-in's virtualization provisioning functionality, you can:

6.4.3 Scaling Down a Database Cluster

To scale down a database cluster, the Virtual Machine is removed from the cluster:

6.5 Viewing Virtualized Exadata Database Machine

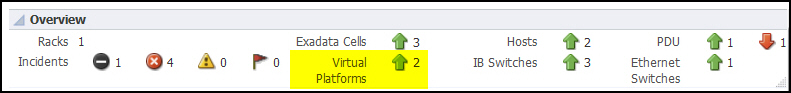

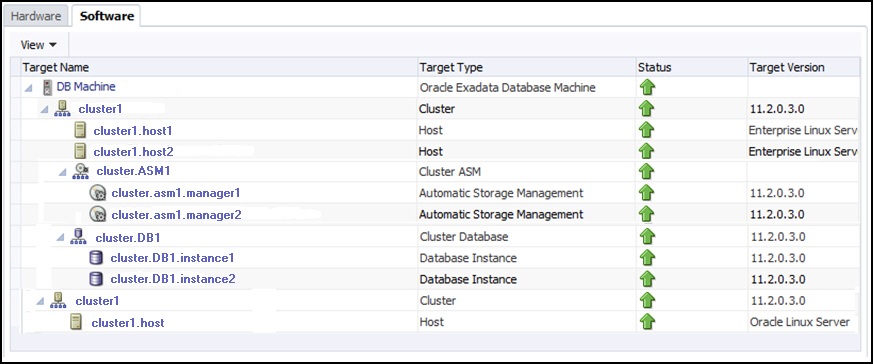

Once the virtualized Exadata Database Machine is discovered, the Exadata plug-in shows the virtual machines monitored by Enterprise Manager Cloud Control 12c, as shown in Figure 6-32:

Figure 6-32 Virtual Machines Monitored

Note:

The schematic diagram in the Database Machine home page is based on the content of the databasemachine.xml file found during discovery. The virtual platforms (Dom0) are displayed as compute nodes in the rack in the schematic diagram.

The Database Machine Software topology diagram will not display the physical Oracle Server targets, the virtual Oracle Server targets (DomU), or the Virtual Platform targets (Dom0). However, it will continue to show the host targets that are running in DomU.

The Software tab for the Exadata Database Machine target shows all cluster, ASM, and Database targets in the whole physical Database Machine, grouped by cluster, as shown in Figure 6-33:

Figure 6-33 Exadata Database Machine Software Tab

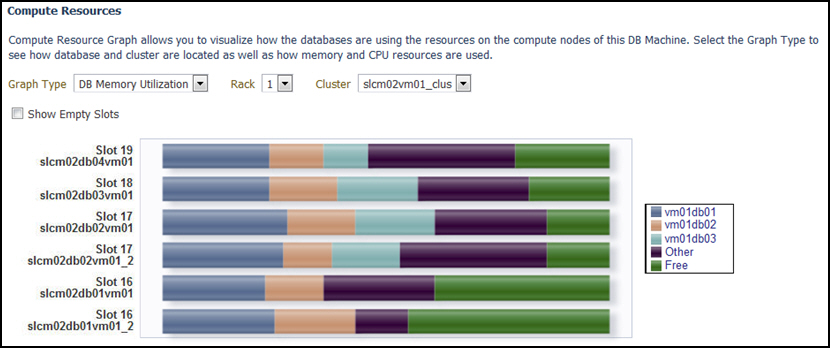

6.6 Resource Utilization Graphs

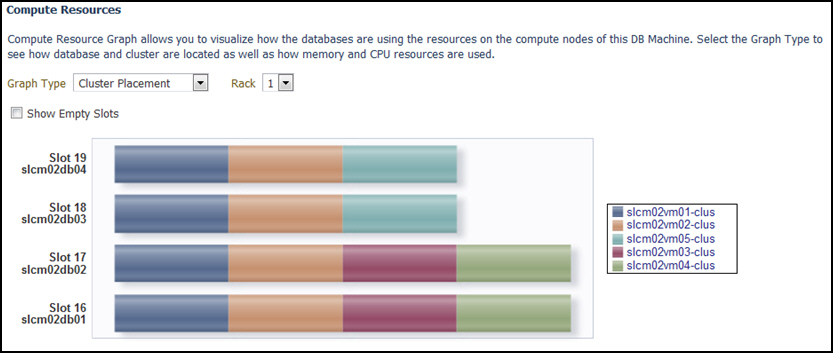

The following compute resource allocation graphs are available in virtualized Exadata. These graphs depend on the virtual machine hierarchy and metric data from the VI plug-in:

6.6.1 Cluster Placement

This graph (Figure 6-34) shows the Clusterware cluster placement on physical servers in a particular Exadata Database Machine rack. Since this is a placement graph, the widths of the data series reflect the number of clusters on the physical server that has the largest number of clusters.

Figure 6-34 Resource Utilization: Cluster Placement

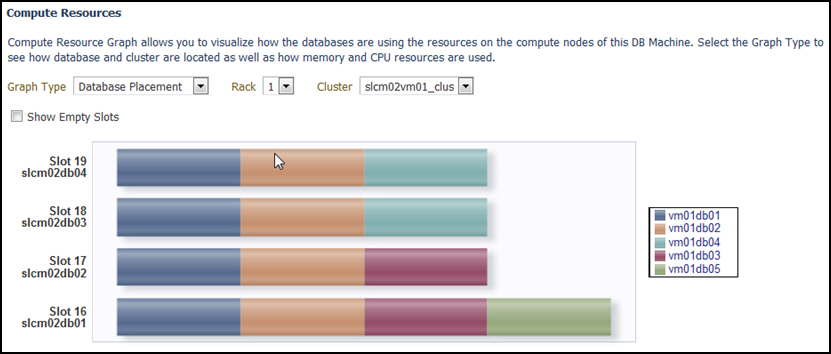
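To make the width rule for the cluster placement graph concrete, here is a minimal sketch of how the per-server counts behind such a graph could be derived from a VM hierarchy. It is an illustration only, not the plug-in's implementation: the `vm_hierarchy` tuples, the server and cluster names, and the `cluster_counts` function are all assumptions.

```python
from collections import defaultdict


def cluster_counts(vm_hierarchy):
    """Count distinct clusters placed on each physical server.

    vm_hierarchy is a hypothetical iterable of
    (physical_server, domu, cluster) tuples.
    """
    clusters_by_server = defaultdict(set)
    for server, _domu, cluster in vm_hierarchy:
        clusters_by_server[server].add(cluster)
    return {server: len(names) for server, names in clusters_by_server.items()}


# Hypothetical hierarchy: two clusters spread over two physical servers.
vm_hierarchy = [
    ("server1", "vm1", "clusterA"),
    ("server1", "vm2", "clusterB"),
    ("server2", "vm3", "clusterA"),
]

counts = cluster_counts(vm_hierarchy)
# The graph is scaled to the server that hosts the most clusters.
axis_max = max(counts.values())
```

In this example `server1` hosts two clusters and `server2` hosts one, so the scale of the graph is set by `server1`.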

6.6.2 Database Placement

This graph (Figure 6-35) shows the database placement on physical servers in a particular Exadata Database Machine rack for a particular DB cluster. Since this is a placement graph, the widths of the data series reflect the number of databases on the physical server that has the largest number of databases for that DB cluster.

Figure 6-35 Resource Utilization: Database Placement

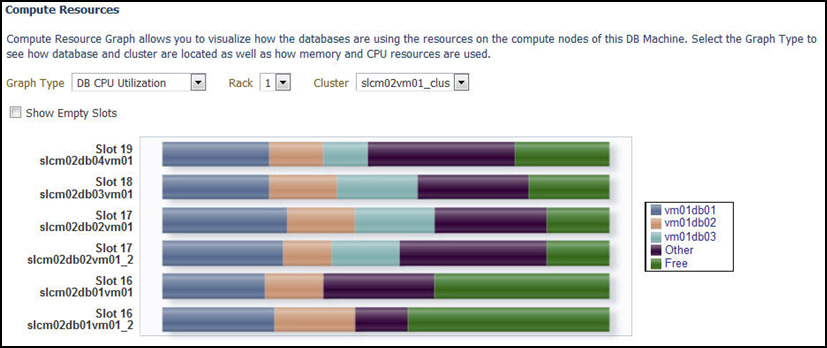

6.6.3 Database CPU Utilization

This graph (Figure 6-36) shows the database CPU utilization per database per VM host for a particular DB cluster.

Figure 6-36 Resource Utilization: Database CPU Utilization

6.6.4 Database Memory Utilization

This graph (Figure 6-37) shows the database memory utilization per database per VM host for a particular DB cluster.

Figure 6-37 Resource Utilization: Database Memory Utilization