4 Preventive Maintenance

This chapter describes preventive maintenance of the LSMS. Included are topics on backing up databases and file systems, monitoring hardware and network performance, and routine cleaning.

Introduction


Use the system monitoring features regularly, especially during times of peak load, to verify that the system has adequate resources. This practice provides insight into system resource utilization and gives early warning when system capacity limits are being approached.

The procedures in this chapter assume that you are familiar with the LSMS hardware. For more information about the hardware, refer to the Application B Card Hardware and Installation Guide.

Recommended Daily Monitoring

To maintain your LSMS properly, it is recommended that you perform the activities described in this section on a daily basis.

Continuous Monitoring Activities

Perform the following activities continually:

- Always keep at least one graphical user interface (GUI) open. Monitor the GUI especially for any red or yellow conditions, either on the NPAC and EMS status icons or in the notifications display area. For more information about the display areas of the GUI, refer to the Database Administrator's Guide. For information about notifications displayed in the notifications display area, see Automatic Monitoring of Events.

- Monitor the latest Surveillance notifications in either or both of the following ways:

  - Connect a customer-provided administration console to Serial Port 3 of each server so that Surveillance notifications can be displayed there.

  - View the Surveillance log file, /var/TKLC/lsms/logs/survlog.log. To display the latest contents of this file, log in as any user and enter the following command:

    $ tail -f /var/TKLC/lsms/logs/survlog.log

    For more information about the Surveillance feature, see “Understanding the Surveillance Feature”.

Once a Day Monitoring Activities

It is recommended that once each day you perform the following:

- Examine logs for abnormalities. For more information, see Daily Examination of Logs for Abnormalities.

- Determine the success or failure of the database and file system backups by examining the backup log (/var/TKLC/log/backup/backup.log) and the surveillance log (/var/TKLC/lsms/logs/survlog.log). For more information, see Daily Determination of Success or Failure of Backup.

Note:

Rejected logs are maintained up to 20 MB and transaction logs up to 500 MB. Old rotated logs are deleted automatically within a day, and new entries are written to the newly created files.

Daily Examination of Logs for Abnormalities

Examine the following logs for any abnormalities once a day, preferably near the end of the day. In each of these log file names, <MMDD> indicates the month and day. Each log is kept for seven days. For more information about these logs, refer to the Database Administrator's Guide. You can view the logs using the GUI or any text editor.

- Examine the following exception log files:

  - Run the chkfilter command and then examine /var/TKLC/lsms/logs/trace/LsmsSubNotFwd.log.<MMDD>. This log contains subscription versions (SVs) or number pool blocks (NPBs) that have been received from an NPAC but could not be forwarded to a network element because the LSMS has no EMS routing defined for the SVs or NPBs.

  - /var/TKLC/lsms/logs/<clli>/LsmsRejected.log.<MMDD>. This log contains transactions that the LSMS attempted to forward to a network element, but which were rejected by the network element.

- Examine the following alarm log to verify that you are aware of all alarms (these events are also reported in the GUI notifications display area):

  - /var/TKLC/lsms/logs/alarm/LsmsAlarm.log.<MMDD>. This log contains events associated with the Local Data Manager, the Local Services Manager, and regional NPAC agent processes.

- Examine the following transaction logs for any abnormalities:

  - /var/TKLC/lsms/logs/<clli>/LsmsTrans.log.<MMDD> for each network element identified by <clli>. These logs contain all transactions forwarded to EMS agents, including information associated with M-Create, M-Set, and M-Delete operations initiated from the NPAC.

- Examine the Surveillance log /var/TKLC/lsms/logs/survlog.log for any abnormalities. This log contains all surveillance notifications that have been posted.
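Because every dated log above follows the same naming pattern, assembling the day's file list can be scripted. The following Python sketch builds the paths for one day from the directories given in this section. It assumes you supply the list of network element CLLI codes yourself (the value "CLLI1" is a hypothetical placeholder), and it only constructs names; it does not check that the files exist or interpret their contents.

```python
from datetime import date
from typing import List

# Base directory of the LSMS logs named in this section.
LOG_ROOT = "/var/TKLC/lsms/logs"


def mmdd(day: date) -> str:
    """Format a date as the <MMDD> suffix used in the daily log file names."""
    return day.strftime("%m%d")


def daily_logs(day: date, cllis: List[str]) -> List[str]:
    """Return the log files to examine for one day.

    cllis: CLLI codes of your network elements (supplied by you; this sketch
    does not discover them).
    """
    suffix = mmdd(day)
    paths = [
        f"{LOG_ROOT}/trace/LsmsSubNotFwd.log.{suffix}",  # review after running chkfilter
        f"{LOG_ROOT}/alarm/LsmsAlarm.log.{suffix}",
    ]
    for clli in cllis:
        # Rejected and transaction logs are kept per network element.
        paths.append(f"{LOG_ROOT}/{clli}/LsmsRejected.log.{suffix}")
        paths.append(f"{LOG_ROOT}/{clli}/LsmsTrans.log.{suffix}")
    # The Surveillance log has no date suffix.
    paths.append(f"{LOG_ROOT}/survlog.log")
    return paths


if __name__ == "__main__":
    for path in daily_logs(date.today(), ["CLLI1"]):  # "CLLI1" is a placeholder
        print(path)
```

The list can then be opened in a text editor or paged through one file at a time; the chkfilter command still has to be run before the LsmsSubNotFwd log is reviewed.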

Daily Determination of Success or Failure of Backup

Each day, check the backup log from the previous day on each server. As the timestamps in Figure 4-1 and Figure 4-2 show, backups generally begin a few minutes before midnight. Ensure that the backup logs contain text similar to that shown in those figures. If you need help interpreting the logs, contact the Customer Care Center.

If you determine that the automatic backup(s) did not complete successfully, perform a manual backup right away.

LSMS Database Defragmentation

In releases of LSMS prior to 13.0, a database sort was sometimes required to keep the LSMS operating at maximum efficiency in terms of transactions per second (TPS). This labor-intensive manual operation could be performed only by the Technical Assistance Center (TAC). LSMS 13.0 and later releases use the E5-APP-B platform, which has solid-state drives (the old platform used disk drives) that by design do not require defragmentation. Oracle performed testing to validate that fragmentation is not an issue on the E5-APP-B platform. However, if there is any indication of a need for database sorting, contact the Customer Care Center so that your system can be fully evaluated. If a database sort is determined to be necessary, the Customer Care Center has access to MO006201, which defines this database sort procedure.

Using Backup Procedures

The most basic form of backup happens continuously and automatically, as the redundant LSMS servers contain duplicate hardware, and the standby server replicates the active server’s database.

However, because data on the active server’s database is automatically replicated to the standby server, any corruption of the active server’s database is replicated as well. You must therefore also follow more conventional backup procedures so that you can recover from a corrupted database. Saving the database to a file on the Network Attached Storage (NAS) device, or copying it from disk to tape on the NAS and storing the tape off-site, is a precaution against database corruption.

Understanding How the LSMS Backs Up File Systems and Databases

Each night at midnight, the LSMS automatically backs up the following to disk:

- Platform configuration (for each server), stored as plat.xml

- The entire LSMS database, stored as lsmsdb.xml

- The entire LSMS logs file system, stored as lsmslogs.xml

When both servers are functioning, the automatic backup function backs up the database (lsmsdb.xml) and logs (lsmslogs.xml) from the standby server, and backs up only the platform configuration (plat.xml) from the active server.

If only one server is active, the automatic backup function backs up all the files shown in the bulleted list above from the active server.
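The two rules above amount to a simple decision about where each backup file comes from. The Python sketch below encodes only those two statements; the function name is hypothetical, and the sketch does not model whether the standby server’s own platform configuration is also saved when both servers are functioning.

```python
from typing import Dict, List

PLATFORM = "plat.xml"    # platform configuration
DATABASE = "lsmsdb.xml"  # entire LSMS database
LOGS = "lsmslogs.xml"    # entire LSMS logs file system


def backup_sources(both_servers_functioning: bool) -> Dict[str, List[str]]:
    """Return, per server role, the files the automatic backup takes from it."""
    if both_servers_functioning:
        # Database and logs come from the standby server;
        # only the platform configuration comes from the active server.
        return {"active": [PLATFORM], "standby": [DATABASE, LOGS]}
    # Only one server is active: all three files come from it.
    return {"active": [PLATFORM, DATABASE, LOGS]}
```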

In addition, you can perform the same backups manually at any time (see Backing Up the LSMS Manually).

Understanding the Backup Results

The result of each backup is posted to the log file on the server on which the backup was scheduled to take place.

Backing Up the LSMS Manually

Before beginning a manual backup:

- Read Understanding How the LSMS Backs Up File Systems and Databases.

- Check the GUI notification information and surveillance logs for database errors to ensure that the LSMS is functioning correctly.

- Check whether servdi is running. If it is, wait for it to complete before starting the manual backup.

Note:

Backups can also be performed via the platcfg menu. For more information, see Using Restore Procedures.

The following procedure explains how to start a backup manually. If a backup procedure fails, contact the Customer Care Center.

Stopping an Automatic or Manual Backup

Under normal conditions, backups complete relatively quickly (in less than 45 minutes). However, if no backup has been previously performed or if the previous backup was stopped before it completed, the next backup can take up to 4 hours.

It is advisable to allow a backup to complete. However, if you accidentally start a backup or need to stop the backup process, use the following procedure. You must log in to both the active and standby servers to stop a backup.

Note that a backup cannot restart at the point where it was aborted because various lock files are created to prevent conflicting backups. To restart a manual backup, start the procedure from the beginning. See “Backing Up the LSMS Manually” if you need help.

If you need to restore data from a previously recorded backup, contact the Customer Care Center.

Checking for Running Backups

Both database backups and query server snapshots use the same file space on the LSMS. If a backup is in progress and a query server snapshot or another backup is started, the first backup process terminates prematurely, and the next backup takes significantly longer to complete. Therefore, it is very important that you perform the following procedure to check for a running backup before starting a manual backup or creating a query server snapshot.

In addition, the following tasks all use temporary file space on the LSMS. If you attempt to run these processes simultaneously, you may run out of disk space. Since backups can be run automatically, it is recommended that you perform the following procedure before attempting any of these tasks to ensure that no database backups are running:

- Starting a standby node (changing its state from UNINITIALIZED "INHIBITED" to STANDBY)

- Running the import command

- Running the lsmsdb quickaudit command

Using Restore Procedures

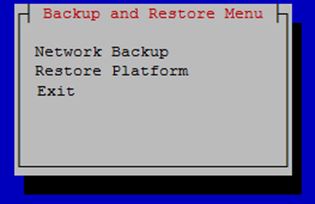

The platcfg utility provides network backup and restore operations. From the Main Menu, selecting Backup and Restore displays the Backup and Restore menu, as shown in Figure 4-18.

Figure 4-18 Backup and Restore Menu

- Network Backup works in the same way as it does for lsmsmgr. For more information, see Backing Up the LSMS Manually.

- Restore Platform enables restoration of data from NAS to LSMS.

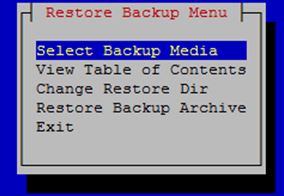

Selecting Restore Platform transfers control to the Restore Backup Menu, as shown in Figure 4-19.

Figure 4-19 Restore Backup Menu

- Select Backup Media enables selection of the backup archive to be restored from NAS to LSMS.

- View Table of Contents displays the contents of the selected backup archive. If no backup archive is selected, a message is displayed indicating that you must select the media first.

- Change Restore Dir is used to indicate the restore directory to which the archive will be restored.

- Restore Backup Archive restores the selected archive from NAS to LSMS. If no backup archive is selected, a message is displayed indicating that you must select the media first.

To restore the data from NAS when the servers are in active/standby state, follow these steps:

1. On the standby server, open the lsmsmgr menu using the following command:

   su - lsmsmgr

2. Select .

3. Repeat steps 1 and 2 on the active server.

4. Start restore from NAS on the active server from the platcfg menu ().

5. After restore, issue the following command on both the A and B servers:

   rm -rf /var/TKLC/lsms/db/auto.cnf

6. On the active server, open the lsmsmgr menu using the following command:

   su - lsmsmgr

7. Select .

8. Repeat steps 6 and 7 on the standby server.

Additional Tools for Monitoring the LSMS Hardware and the Network

LSMS provides various tools that you can use to monitor the LSMS hardware and the network. Monitoring can help you prevent and diagnose errors.


Verifying Active Server Network Interfaces and NPAC Connections

Use one or more of the following methods to verify network connectivity:

- The ifconfig command

- The traceroute utility to verify network connectivity and routing between hosts

- The LSMS graphical user interface (GUI) to determine connectivity to NPACs

Using the ifconfig Command

Use the ifconfig -a command on the target host to verify that ports are in the UP state.
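To check many interfaces at once, you can parse the ifconfig -a output rather than reading it by eye. This Python sketch is an illustration, not an LSMS tool: it assumes the standard Linux net-tools output, handles both the older layout (a separate line such as UP BROADCAST RUNNING ...) and the newer one (flags=4163<UP,...>), and treats an interface as up only when the UP flag is present. The helper names are hypothetical.

```python
import re
import subprocess
from typing import Dict, List, Optional

# Newer net-tools style: "eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500"
_FLAGS = re.compile(r"flags=\d+<([^>]*)>")


def interface_states(ifconfig_output: str) -> Dict[str, bool]:
    """Map each interface in `ifconfig -a` output to True if it has the UP flag."""
    states: Dict[str, bool] = {}
    current: Optional[str] = None
    for line in ifconfig_output.splitlines():
        if not line.strip():
            continue  # blank lines separate interface stanzas
        if not line[0].isspace():
            # A non-indented line starts a new interface stanza.
            current = line.split()[0].rstrip(":")
            states[current] = False
        if current is None:
            continue
        match = _FLAGS.search(line)
        if match:
            # Newer format: flags listed inside <...> on the header line.
            states[current] = "UP" in match.group(1).split(",")
        elif "UP" in line.split():
            # Older format: an indented line such as "UP BROADCAST RUNNING ...".
            states[current] = True
    return states


def down_interfaces() -> List[str]:
    """Run `ifconfig -a` locally and return interfaces without the UP flag."""
    result = subprocess.run(
        ["ifconfig", "-a"], capture_output=True, text=True, check=True
    )
    return [name for name, up in interface_states(result.stdout).items() if not up]
```

Calling down_interfaces() on the target host returns an empty list when every port is in the UP state; any names it returns are the ports to investigate.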

Using the traceroute Utility

The traceroute utility determines the path between the host where the utility is run and the remote host named by the utility’s input parameter. The utility also reports the latency of each hop along the route.

Note:

If the network between the hosts contains firewalls, this utility may fail unless the firewalls are properly set up. Setting up firewalls is the responsibility of the customer.

Managing Automatic File Transfers

The LSMS generates many logs, measurements, and data files on a regular basis. These files are maintained on the LSMS for seven days. Customers can use the data in these files for traffic pattern analysis, identification of various network events, and investigation of problems.

The optional Automatic File Transfer feature enables customers to set up an automatic method of transferring selected files to specified remote sites at a specified frequency. Using this feature can reduce costs and also the chance of user error that could result in missed transfers of required data.

Whenever an error occurs during an automatic file transfer, an entry is made in the file aft.log.<MMDD> in the directory /var/TKLC/lsms/logs/aft (where <MMDD> is the month and day when the error occurred).

Use the autoxfercfg command, as described in the following subsections, to set up and manage automatic file transfers. To set up an automatic transfer of files initially, perform the procedures in the following sections in the order shown:

In addition, you can use the autoxfercfg command to perform the following functions:

Displaying Remote Locations Used for Automatic File Transfers

To display all remote locations that have been previously added using this feature, perform the following procedure.

Adding a New Remote Location for Automatic File Transfers

To add a new remote location to which files are automatically transferred, perform the following procedure.

Deleting a Remote Location for Automatic File Transfers

To delete a remote location that has been previously added using this feature, perform the following procedure.

Displaying Previously Scheduled Automatic File Transfers

To display all automatic transfers that have been previously set up using this feature, perform the following procedure.

Note:

Any file transfers that have been set up to be performed one time only are not displayed.

Scheduling an Automatic File Transfer

To set up files to be transferred automatically, perform the following procedure. It is recommended that you schedule transfers according to the following guidelines:

- Choose an off-peak time, such as very early in the morning.

- Avoid planning transfers that would result in the same file being transferred more than once. For example, because LSMS application logs are maintained on the LSMS for seven days, they only need to be scheduled for a weekly transfer. If you schedule a daily transfer for logs of that type, the same file is transferred each day for seven days. For this reason, the display described in “Displaying Previously Scheduled Automatic File Transfers” shows that files whose names start with Lsms in the /var/TKLC/lsms/logs/Midwest directory are transferred only on Mondays.
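The guideline above is a matter of simple arithmetic. Assuming whole-day granularity, a file that stays on the LSMS for r consecutive days is picked up by a transfer scheduled every i days at most ceil(r / i) times, and it can be deleted before any transfer reaches it if i is greater than r. The following Python sketch (the function names are hypothetical) applies this to the seven-day retention period stated above.

```python
import math

RETENTION_DAYS = 7  # LSMS application logs are kept for seven days


def max_transfers_per_file(interval_days: int, retention_days: int = RETENTION_DAYS) -> int:
    """Upper bound on how many runs of a schedule pick up the same file.

    A file present for r consecutive days overlaps at most ceil(r / i)
    runs of a schedule that repeats every i days.
    """
    return math.ceil(retention_days / interval_days)


def can_miss_files(interval_days: int, retention_days: int = RETENTION_DAYS) -> bool:
    """True if a file can be deleted before any scheduled run picks it up."""
    return interval_days > retention_days
```

With a daily schedule, max_transfers_per_file(1) is 7, which is the repeated transfer the guideline warns about; a weekly schedule gives 1 without missing any file.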

Transferring large numbers of files does not impact the processing performance of the LSMS, but it can impact network performance, especially on networks that use the single-subnet design. (For more information about network design, refer to the LSMS Configuration Manual.) The feature is designed to cause insignificant network degradation with up to 10 configured remote locations and up to 600 transferred files.
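Before adding locations or files, you can compare a planned configuration with these design limits. The sketch below assumes that the 600-file limit applies to the total across all remote locations, which the text does not state explicitly. The plan structure (a mapping from a remote location name to the files sent there) is hypothetical and is not the autoxfercfg format.

```python
from typing import Dict, List

MAX_REMOTE_LOCATIONS = 10    # design limit stated above
MAX_TRANSFERRED_FILES = 600  # assumed here to be a total across all locations


def within_design_limits(plan: Dict[str, List[str]]) -> bool:
    """plan maps each remote location (hypothetical names) to its scheduled files."""
    total_files = sum(len(files) for files in plan.values())
    return len(plan) <= MAX_REMOTE_LOCATIONS and total_files <= MAX_TRANSFERRED_FILES
```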