Monitor Compute

This section explains the different methods and metrics you can use to monitor compute in your Oracle AI Data Platform Workbench.

View Spark UI

You can view the Spark Web UI to monitor the status and resource consumption of your all-purpose compute clusters.

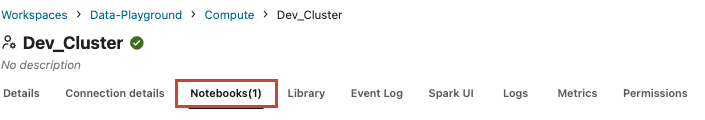

- Navigate to your workspace and click Compute.

- Click your cluster, then click the Spark UI tab.

- Optional: Click the pop-out button at the top right to view the Spark UI in a separate window.

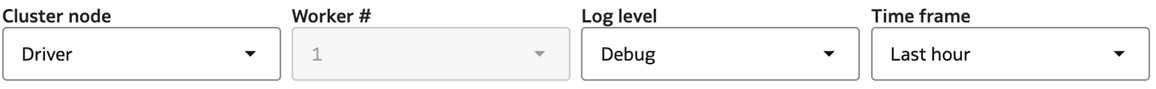

View Driver and Worker Logs

You can view the driver and worker logs of your all-purpose compute clusters for troubleshooting or debugging.
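When a log is long, a quick tally of log levels can help narrow down where to look. The following is a minimal sketch, not a feature of the Workbench: it assumes you have the log text available as lines and that each line follows the shape of Spark's default Log4j pattern (date, time, level, logger, message). The function name `summarize_log` and the sample lines are hypothetical.

```python
import re
from collections import Counter

# Assumed line shape: "<date> <time> <LEVEL> <logger>: <message>".
# Adjust the pattern if your cluster logs use a different layout.
LOG_LINE = re.compile(
    r"^\S+ \S+ (TRACE|DEBUG|INFO|WARN|ERROR|FATAL) (\S+?): (.*)$"
)


def summarize_log(lines):
    """Count lines per log level and collect ERROR/FATAL messages."""
    counts = Counter()
    problems = []
    for line in lines:
        match = LOG_LINE.match(line.rstrip("\n"))
        if match is None:
            # Stack-trace continuation or a line in another format: skip it.
            continue
        level, logger, message = match.groups()
        counts[level] += 1
        if level in ("ERROR", "FATAL"):
            problems.append((logger, message))
    return counts, problems


# Hypothetical sample lines, for illustration only.
sample = [
    "24/05/01 12:00:00 INFO SparkContext: Running Spark version 3.5.0",
    "24/05/01 12:00:05 WARN TaskSetManager: Lost task 3.0 in stage 1.0",
    "24/05/01 12:00:06 ERROR Executor: Exception in task 3.1 in stage 1.0",
    "java.lang.OutOfMemoryError: Java heap space",
]

counts, problems = summarize_log(sample)
print(dict(counts))
print(problems)
```

Lines that do not match the assumed pattern, such as stack-trace continuations, are skipped rather than counted, so the totals reflect only recognized log entries.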

View Metrics

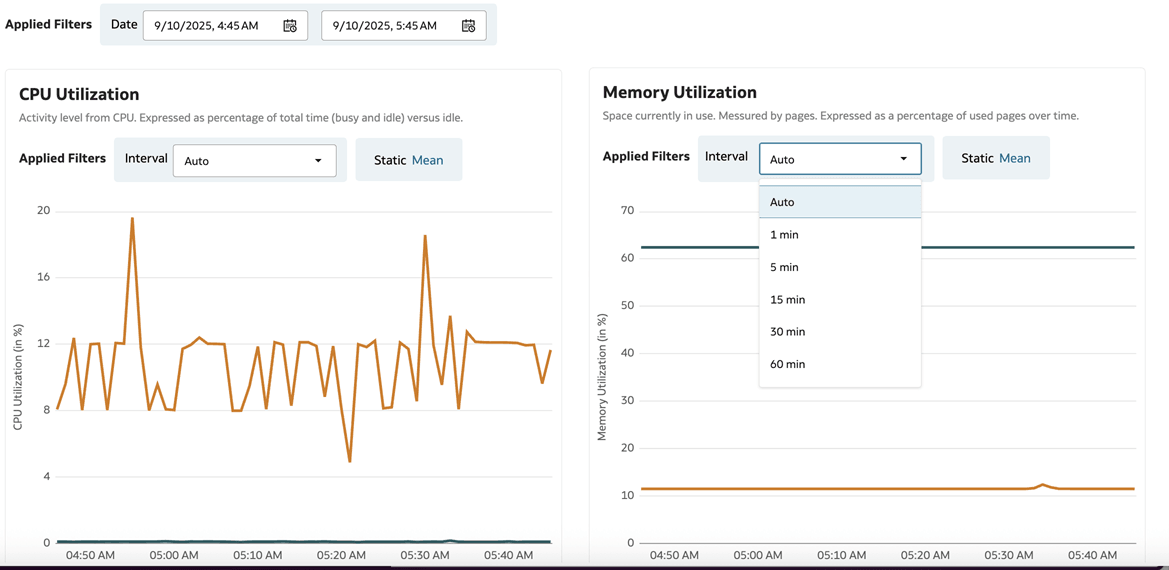

You can monitor the infrastructure metrics of your compute clusters to troubleshoot issues or to inform sizing adjustments.

You can view status and history for the following metrics:

- CPU utilization

- Memory utilization

- Disk read

- Disk write

- File system utilization

- Garbage Collector CPU utilization

- Network received

- Network transmitted

- Active tasks

- Total failed tasks

- Total task tasks

- Total completed tasks

- Total number of tasks

- Total shuffle read bytes

- Total shuffle write bytes

- Total task duration in seconds

- SQL: Peak concurrent queries

- SQL: Peak concurrent connections
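As an illustration of how these metrics might feed a sizing decision, the sketch below turns a few utilization readings and task counts into a rough hint. It is an assumption-laden example, not platform behavior: the function names `sizing_hint` and `task_failure_rate`, the 30% and 80% thresholds, and the sample values are all hypothetical, and the readings are assumed to be noted by hand from the metrics view.

```python
from statistics import mean


def sizing_hint(cpu_pct, mem_pct, low=30.0, high=80.0):
    """Return 'scale up', 'scale down', or 'ok' from utilization samples (0-100).

    The low/high thresholds are illustrative assumptions, not platform defaults.
    """
    if not cpu_pct or not mem_pct:
        raise ValueError("need at least one sample per metric")
    cpu_avg = mean(cpu_pct)
    mem_avg = mean(mem_pct)
    # Either resource running hot on average suggests the cluster is too small.
    if max(cpu_avg, mem_avg) >= high:
        return "scale up"
    # Both resources mostly idle suggests the cluster is larger than needed.
    if cpu_avg < low and mem_avg < low:
        return "scale down"
    return "ok"


def task_failure_rate(failed_tasks, total_tasks):
    """Fraction of tasks that failed; 0.0 when no tasks have run."""
    return failed_tasks / total_tasks if total_tasks else 0.0


# Hypothetical readings for CPU utilization and Memory utilization.
print(sizing_hint([85, 90, 88], [60, 62, 58]))
print(sizing_hint([10, 15, 12], [20, 25, 22]))

# Hypothetical values for Total failed tasks and Total number of tasks.
print(task_failure_rate(5, 200))
```

Averages smooth out short spikes, which suits a sizing decision. For troubleshooting a specific incident, the peak values in the metric history are usually more informative than the mean.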