Import Transactional Data Using the Bulk Data Import Operation

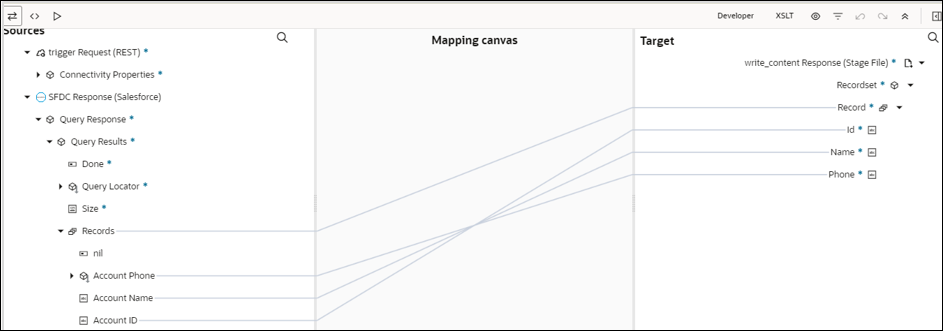

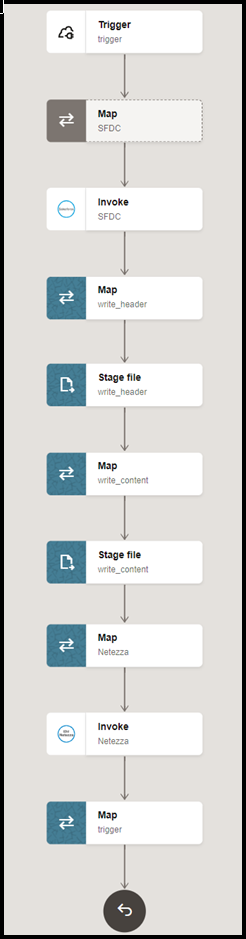

This use case describes how to import transactional records in chunks from an application into the Netezza database. The example uses Salesforce as the source application. You can use the Netezza Adapter in the same way to import data files from other applications into the Netezza database.

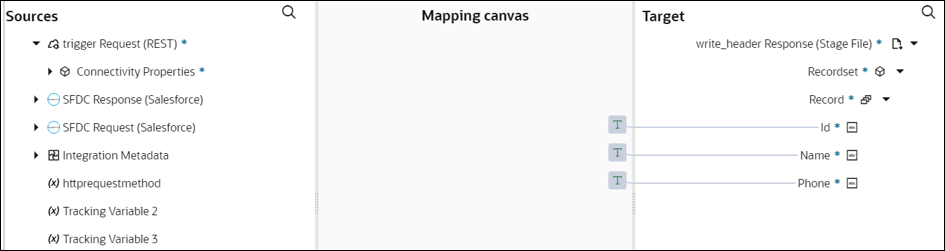

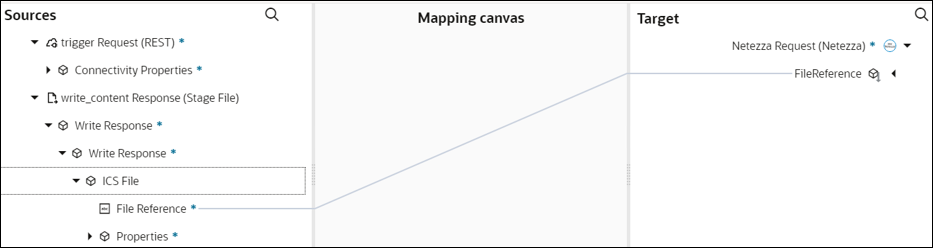

To perform this operation, create a Salesforce Adapter connection and a Netezza Adapter connection in Oracle Integration. During the import, the Netezza Adapter first validates the header of the input file against the columns of the target table. It then places the file data in the mount location (a location local to the database) and, if the data is in the expected format, inserts the data from the mount location into the target table.
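The three steps described above (validate the header, stage the file in the mount location, insert if the format is valid) can be sketched in Python. This is not the Netezza Adapter's implementation or API. It is a minimal illustration that assumes a CSV input file with a header row, uses Python's built-in `sqlite3` as a stand-in for the Netezza database, uses a local directory as a stand-in for the mount location, and introduces hypothetical helper names (`validate_header`, `load_file`).

```python
import csv
import shutil
import sqlite3
import tempfile
from pathlib import Path


def validate_header(file_path: Path, table_columns: list[str]) -> bool:
    """Hypothetical helper: True if the file's header row matches the
    target table's columns (same order, case-insensitive)."""
    with file_path.open(newline="") as f:
        header = next(csv.reader(f), [])
    return [h.strip().lower() for h in header] == [c.lower() for c in table_columns]


def load_file(conn: sqlite3.Connection, table: str, file_path: Path, mount_dir: Path) -> int:
    """Hypothetical helper: validate, stage, then insert. Returns rows inserted.
    `table` is assumed to be a trusted name (it is interpolated into SQL)."""
    # Read the target table's column names (SQLite stand-in for the Netezza catalog).
    cols = [row[1] for row in conn.execute(f"PRAGMA table_info({table})")]

    # Step 1: compare the input file header with the target table columns.
    if not validate_header(file_path, cols):
        raise ValueError(f"header of {file_path.name} does not match columns of {table}")

    # Step 2: place the file in the mount location (here, a local directory).
    staged = Path(shutil.copy(file_path, mount_dir))

    # Step 3: insert the staged data only if every row has the expected field count.
    with staged.open(newline="") as f:
        reader = csv.reader(f)
        next(reader)  # skip the header row
        rows = list(reader)
    bad_lines = [n for n, r in enumerate(rows, start=2) if len(r) != len(cols)]
    if bad_lines:
        raise ValueError(f"unexpected field count on lines {bad_lines}")

    placeholders = ", ".join("?" for _ in cols)
    conn.executemany(f"INSERT INTO {table} VALUES ({placeholders})", rows)
    conn.commit()
    return len(rows)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        tmp_path = Path(tmp)
        src = tmp_path / "accounts.csv"
        src.write_text("ID,NAME\n1,Acme\n2,Globex\n")
        mount = tmp_path / "mount"
        mount.mkdir()
        conn = sqlite3.connect(":memory:")
        conn.execute("CREATE TABLE accounts (id INTEGER, name TEXT)")
        print(load_file(conn, "accounts", src, mount))  # prints 2
```

Rejecting the whole file when the header or any row is malformed mirrors the "insert only if the data is in the expected format" behavior described above; a real integration may handle rejected records differently.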