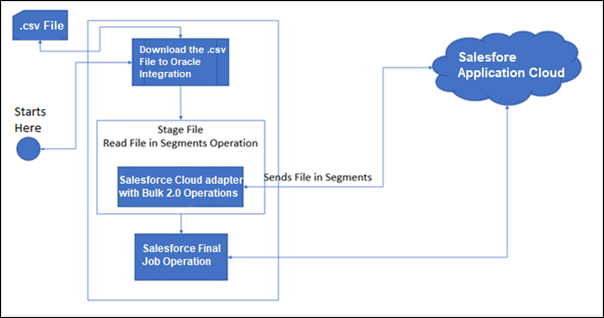

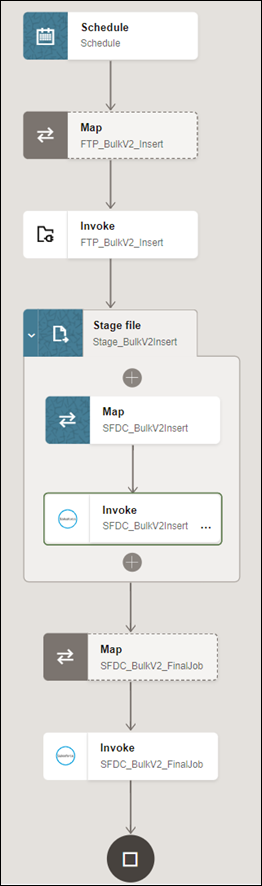

Process Large Data Sets Asynchronously with Different Bulk 2.0 Data Operations

The Salesforce Bulk API lets you process large data sets asynchronously using different bulk operations. For every bulk operation, Salesforce creates a job that is processed in batches. A job contains one or more batches, and each batch is processed independently. Each batch is a nonempty CSV file.

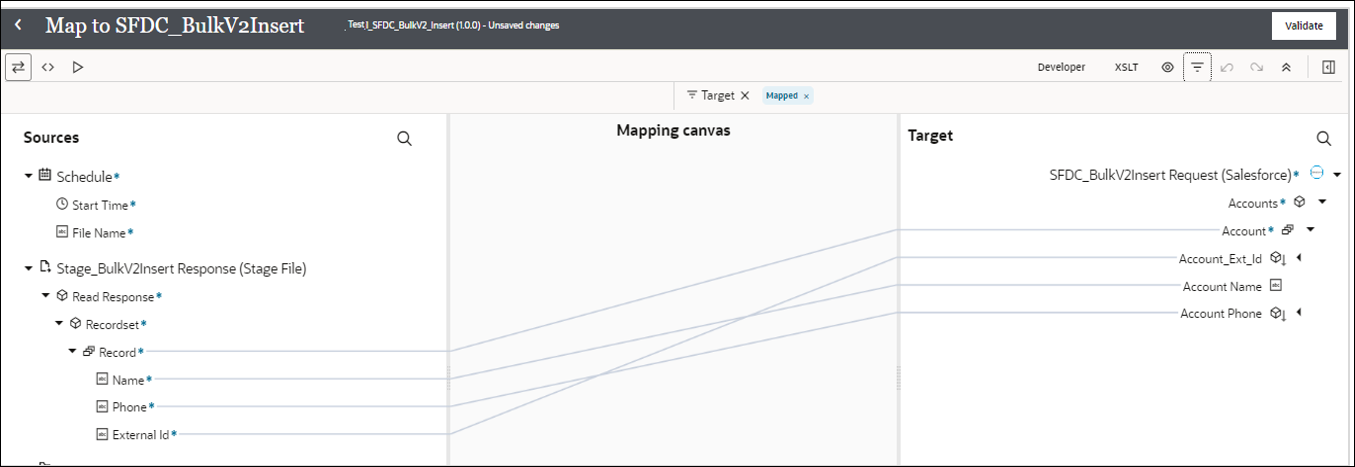
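To make the job-and-batch relationship concrete, here is a minimal Python sketch that splits one CSV payload into nonempty CSV chunks, each repeating the header row. It only illustrates the concept. The function name `split_csv_into_batches`, the `rows_per_batch` parameter, and the sample data are assumptions made for this example, and the sketch does not reflect how Salesforce sizes or creates batches internally.

```python
import csv
import io


def split_csv_into_batches(csv_text, rows_per_batch):
    """Split CSV text into nonempty CSV chunks that each repeat the header row.

    Hypothetical helper for illustration only; not a Salesforce or Oracle API.
    """
    if rows_per_batch < 1:
        raise ValueError("rows_per_batch must be at least 1")

    reader = csv.reader(io.StringIO(csv_text))
    header = next(reader, None)
    if header is None:
        return []  # no header, so there is nothing to batch

    # Skip blank lines so that no chunk contains empty records.
    rows = [row for row in reader if row]

    batches = []
    for start in range(0, len(rows), rows_per_batch):
        out = io.StringIO()
        writer = csv.writer(out, lineterminator="\n")
        writer.writerow(header)
        writer.writerows(rows[start:start + rows_per_batch])
        batches.append(out.getvalue())
    return batches


# Assumed sample data: three account rows split into chunks of two.
sample = "Name,Phone\nAcme,555-0100\nGlobex,555-0101\nInitech,555-0102\n"
batches = split_csv_into_batches(sample, rows_per_batch=2)
```

Because every chunk carries its own header, each one can be parsed independently of the others, which mirrors the independent processing of batches described above. A header-only input yields no chunks, so no empty batch is ever produced.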

This use case describes how to configure the Salesforce Adapter to create a large number of account records in Salesforce Cloud.

To perform this operation, you create FTP Adapter and Salesforce Adapter connections in Oracle Integration.

In this use case, a CSV file is used as input. However, you can also use files in other formats. The Salesforce Adapter transforms the file contents into a format that Salesforce recognizes.
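As a rough illustration of what such an input file might contain, the following Python sketch writes a few account records to CSV text. The columns `Name`, `Phone`, and `BillingCity` are standard Account fields, but choosing them here is an assumption, as are the helper name `build_account_csv` and the sample values. The columns your integration needs depend on your own mapping.

```python
import csv
import io


def build_account_csv(accounts):
    """Return CSV text with a header row followed by one row per account.

    Hypothetical helper for illustration; the column choice is an assumption.
    """
    fieldnames = ["Name", "Phone", "BillingCity"]
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=fieldnames, lineterminator="\n")
    writer.writeheader()
    writer.writerows(accounts)
    return out.getvalue()


# Assumed sample records; real data would come from your source system.
accounts = [
    {"Name": "Acme, Inc.", "Phone": "555-0100", "BillingCity": "Austin"},
    {"Name": "Globex", "Phone": "555-0101", "BillingCity": "Denver"},
]
account_csv = build_account_csv(accounts)
```

Using the `csv` module rather than joining strings by hand means that values containing commas, such as `Acme, Inc.`, are quoted correctly in the output.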