13 Implement Conversation Flows

Here are some best practices for implementing conversation flows in digital assistants.

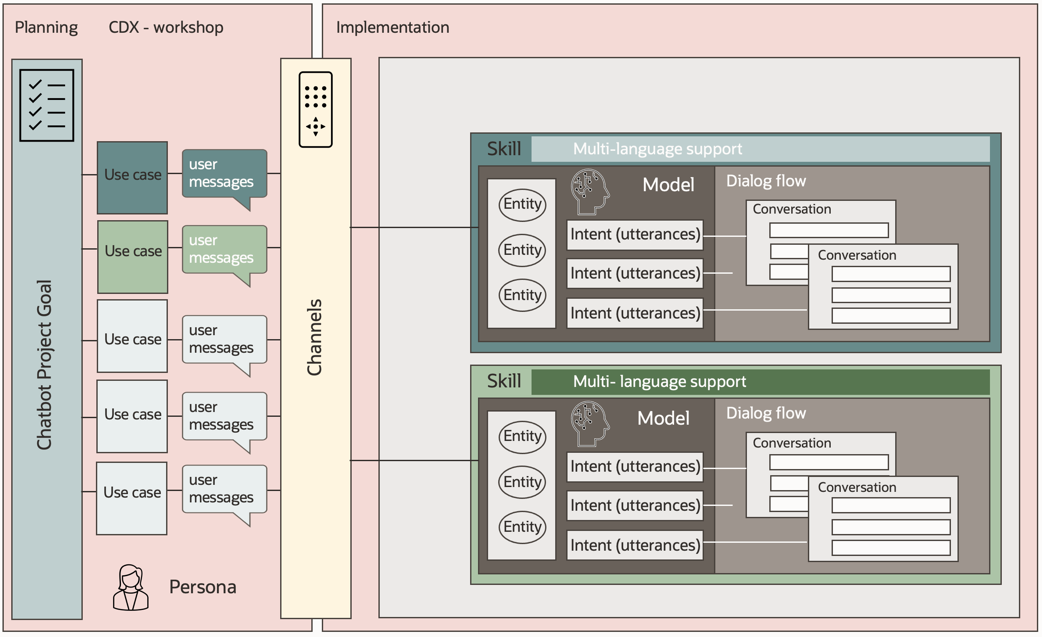

With a well-designed model, you are ready to start building the conversation flows for your regular intents. Conversations are defined by a series of dialog flow steps in Oracle Digital Assistant skills.

Use Visual Mode

When you create a skill, you can set it to use either Visual mode or the legacy YAML design mode. Always use Visual mode, which is also the default. Visual mode offers a host of advantages over the legacy YAML mode, including the following:

- A visual design experience, with flow states represented visually on a canvas, component property editors, and design-time validation.

- The ability to break the overall dialog flow into multiple flows.

- The ability to create reusable flows that other flows can call to handle functionality they have in common.

- Much easier readability and maintainability.

Dialog-Driven Conversations

Dialog-driven conversations collect the user information needed to complete a task by navigating a user through a series of dialog flow states. Each state is linked to a component to render bot responses and user prompts, or to handle conditions, logic, and other functionality.

With this approach, you develop the dialog flow like a film script that you derive from the use cases that you designed in the planning phase of your project. Because the individual steps in a conversation are controlled by the dialog flow, your dialog flow can quickly become quite large. To avoid dialog flows that are unmanageable in size, you should partition your use cases into different skills.

Use a Naming Convention for Dialog Flow State Names

Consider the names of dialog flow states as part of your documentation. If the name of the dialog flow state provides a context, code reviewers find it easier to understand what a dialog flow state does, and which conversation flow it belongs to. For example:

- promptForOrderNumber

- findOrder

- cancelOrder

Also, if your skill is in YAML mode, consider keeping related dialog flow states closely together within the BotML so it is easier to follow the course of actions in a conversation when reviewing your code.

Best Practices for Using Variables

Variables contain the information that a skill collects from a user. Whenever possible, use variables of an entity type for the information you want to collect. Do this for the following reasons:

- User input is validated, and users are re-prompted after invalid input.

- Digital assistants handle non sequitur navigation, which means that users can start a new conversation while in an existing one without you needing to code for it.

- For built-in entities wrapped in a composite bag entity and for custom entities, you can define prompts, a validation error message, and a disambiguation prompt (which the Common Response and Resolve Entities components show automatically).

- A variable of an entity type can be slotted from the initial user message if you associate the entity with the intent. For skills developed in Visual dialog mode, this slotting happens automatically with the Common Response and Resolve Entities components. For skills developed in YAML dialog mode, use the nlpResultVariable property on the input components to get this functionality.
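The following is a minimal JavaScript sketch of the slotting and re-prompting behavior described above. It assumes a hypothetical entity shape (prompts, errorMessage, and a regex-based extract function) and a hypothetical resolveEntityVariable helper. It models the behavior only and is not how Oracle Digital Assistant implements entity resolution.

```javascript
// Hypothetical entity definition: the shape is illustrative, not the ODA API.
const numberEntity = {
  prompts: ["How many items do you want?", "Please enter the number of items."],
  errorMessage: "That doesn't look like a number.",
  // Extract a whole number from free text; returns null when nothing matches.
  extract: (text) => {
    const match = /\b\d+\b/.exec(text);
    return match ? Number(match[0]) : null;
  },
};

// Resolve a variable of an entity type: try slotting from the initial message,
// then prompt and re-prompt until the input validates or maxPrompts is reached.
function resolveEntityVariable(entity, initialMessage, replies, maxPrompts) {
  const transcript = [];
  const slotted = entity.extract(initialMessage);
  if (slotted !== null) {
    return { value: slotted, transcript }; // slotted: no prompt needed
  }
  for (let attempt = 0; attempt < maxPrompts; attempt++) {
    // Use the next prompt on each attempt, repeating the last one once all are used.
    transcript.push(entity.prompts[Math.min(attempt, entity.prompts.length - 1)]);
    const reply = replies[attempt];
    const value = reply === undefined ? null : entity.extract(reply);
    if (value !== null) {
      return { value, transcript };
    }
    transcript.push(entity.errorMessage); // invalid input: show the entity's error message
  }
  // Assumption: mirrors following a "cancel" transition after too many invalid inputs.
  return { value: null, transcript, cancelled: true };
}
```

Because the slotting check runs before any prompt, associating the entity with the intent can save the user a turn.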

Using Keywords on Action Items

Common Response components and custom components allow you to define keywords for your action items. With a keyword, users can invoke an action by sending a shortcut like a number or an abbreviation. This means that they don't have to press an action item (which isn't possible on a text-only or voice channel anyway) or type the full text of the displayed action item label.

Consider the following when defining keywords using Common Response or custom components, including entity event handlers.

- Keywords don't have to match the text shown in the label.

- Keywords are case-insensitive, so you don't have to define every uppercase and lowercase variant of a keyword.

- If you plan to build bots for multiple languages, you can't define your keywords in English only. To support multilingual keywords, reference a resource bundle string from the keyword property of Common Response components (${rb('resource_key_name')}). The resource bundle string then contains a comma-separated list of keywords.

- Provide visual cues indicating that keywords can be used, such as adding an index number in front of the label.

For example, an action menu to submit or cancel an order could be defined with the labels "1. Send" and "2. Cancel", and the keywords could be defined as "1,1.,send" and "2,2.,cancel". To cancel the order, the user could then type "cancel", "2", or "2.".

Note:

In this case, "send" and "cancel" also need to be defined as keywords because the labels are "1. Send" and "2. Cancel", not just "Send" and "Cancel".

- Keywords don't work if used in a sentence. If, for example, a user writes "I'd prefer to cancel my order", "cancel" is not detected as a keyword. If you expect users to select an option conversationally rather than with keywords, consider NLU-based action menus as explained in the next section.
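The matching rules above can be illustrated with a small JavaScript sketch. The actions array and matchKeyword function are hypothetical. The sketch assumes that the whole message, trimmed, must equal a keyword or the full label, compared case-insensitively. That assumption is why a keyword inside a sentence is not detected.

```javascript
// Hypothetical action items: each keyword list is the comma-separated string you
// might store in a resource bundle entry (for example, "1,1.,send").
const actions = [
  { label: "1. Send", action: "send", keywords: "1,1.,send" },
  { label: "2. Cancel", action: "cancel", keywords: "2,2.,cancel" },
];

// Return the action whose label or keyword list equals the whole message,
// compared case-insensitively; otherwise return null, meaning the "next"
// transition would be followed. Trimming whitespace is an assumption.
function matchKeyword(message, actionItems) {
  const normalized = message.trim().toLowerCase();
  for (const item of actionItems) {
    const keywords = item.keywords.split(",").map((k) => k.trim().toLowerCase());
    if (keywords.includes(normalized) || item.label.toLowerCase() === normalized) {
      return item.action;
    }
  }
  return null;
}
```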

If you're wondering how to create index-based keywords for dynamically created action items, here are the choices:

- Enable auto-numbering in your skill through the skill's Enable Auto Numbering on Postback Actions in Task Flows configuration setting. This setting configures the components to add the index number to each action item's list of keywords and to its label.

- Use Apache FreeMarker expressions to add the index number, to reference a resource bundle string that contains the action item value in its name, or both.
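As a rough illustration of index-based keywords for dynamic action items, the hypothetical numberActionItems function below prefixes each label with its index. It builds keywords in the "1,1.,send" pattern used in the earlier example. It is a sketch of the idea under that assumed keyword pattern and does not reproduce what the auto-numbering setting generates internally.

```javascript
// Hypothetical sketch: number dynamically created action items by prefixing
// each label with its 1-based index and adding the index (with and without a
// trailing period) plus the lowercased value as keywords.
function numberActionItems(values) {
  return values.map((value, i) => {
    const index = String(i + 1);
    return {
      label: `${index}. ${value}`,
      keywords: [index, `${index}.`, value.toLowerCase()],
      payload: value, // the value passed back when the item is selected
    };
  });
}
```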

Consider NLU-Based Action Menus

Action menus usually consist of action items that a user can press to navigate to a specific conversation or to submit, confirm, or cancel an operation. When an action item is pressed, a message is sent to the skill with an optional payload for updating variables and an action string that determines the dialog flow state to transition to.

If a user types a message that matches neither the action item label nor a keyword defined for an action item, the next transition is followed. For example, imagine a pair of action items labeled "Send expense report" and "Cancel expense report". If a user types or says "Yes, please send", the next transition is triggered instead of the "Send expense report" action. This happens because an implementation that requires users to press a button or action item is not conversational.

To create robust and truly conversational action menus, you need to create them based on value-list entities, where the list values indicate the action to follow and the synonyms define possible keywords a user would use in a message to invoke an action.

To do this, first create a value-list entity and then define a variable of that entity type in the dialog flow. You can then use a Common Response or Resolve Entities component to display the list of options. The variable you create must be configured as the value of the component's variable property. This way, when the user types "Yes, please send", the matching value is stored in the variable, and the next transition navigates to a dialog flow state that checks that value.

The value stored in the variable is one of the values defined in the value-list entity. Using a Switch component, you can define the next dialog flow state the conversation continues with.

If the user enters a message that matches neither a value in the value-list entity nor one of its synonyms, the action menu is displayed again. Because you used a value-list entity, you can use the error message defined for the entity to help users understand what is expected of them. And because you can configure multiple prompts for the value-list entity, you can display alternative prompts, and even messages that gradually reveal additional information, including how to exit the action menu.
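The following JavaScript sketch models an NLU-based action menu under simplifying assumptions. The expenseAction object stands in for a value-list entity, with each value mapped to its synonyms. extractValues is a naive word-matching stand-in for real entity extraction, and nextState plays the role of the Switch component. All names, including the target dialog flow state names, are hypothetical.

```javascript
// Hypothetical value-list entity: each value is an action, and its synonyms are
// the words a user might use to invoke it. This is not the ODA entity format.
const expenseAction = {
  send: ["send", "submit", "file"],
  cancel: ["cancel", "discard", "drop"],
};

// Naive extraction: return the entity values whose synonyms appear as words in
// the message. Real NLU-based entity matching is more robust than this.
function extractValues(message, entity) {
  const words = message.toLowerCase().match(/[a-z0-9']+/g) || [];
  return Object.keys(entity).filter((value) =>
    entity[value].some((synonym) => words.includes(synonym))
  );
}

// Switch-like routing: map the resolved value to the next dialog flow state.
function nextState(message, entity) {
  const values = extractValues(message, entity);
  if (values.length === 0) return "reprompt"; // show the entity's error message and prompt again
  if (values.length > 1) return "disambiguate"; // more than one action matched
  const routes = { send: "sendExpenseReport", cancel: "cancelExpenseReport" };
  return routes[values[0]];
}
```

With this approach, "Yes, please send" resolves to the send value even though it matches no label or keyword exactly.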

If you create resource bundle strings whose names match the values in the value-list entity, you can translate the labels displayed on the action items by using one of the following expressions:

- ${rb(enumValue)}

- ${rb(enumValue.value.primaryLanguageValue)} (if the fullEntityMatches property is set to true for the Common Response component)

To set the values on an action item dynamically, Common Response components are easier to work with. If you're comfortable programming entity event handlers, you can also use the Resolve Entities component.

With NLU-based action menus, users can press an action item or type a message that doesn’t have to be the exact match of an action label or keyword.

Interrupting a Current Conversation for a New Conversation

A frequently asked question is how to configure a conversation so that users can start a new or different conversation when prompted for input. However, the answer depends more on design decisions than on technical know-how. Here are the design considerations:

- Is your skill exposed through a digital assistant? If so, the skill participates in the digital assistant's non sequitur routing, which routes messages to another skill, or to another intent in the same skill, if the user message can't be successfully validated for the current dialog flow state. To make this non sequitur navigation happen, make sure user input gets validated against an entity-based variable.

- Is your skill exposed as a stand-alone skill? If so, there is no built-in non sequitur navigation, and you need to design for it. To do this, use an entity-based variable for the dialog flow state from which you want users to be able to branch into a new conversation. Then set the maxPrompts property of the user input component to 1 and configure the cancel action transition to start a new conversation. Don't point the cancel transition directly to the intent state, because that would most likely cause an endless loop. Instead, before navigating back into the intent state, use a dialog flow state configured with the Reset Variables component to reset the nlpresult type variable and the other variables needed for the conversation.
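To show why the Reset Variables step matters, here is a toy JavaScript model of a stand-alone skill. For illustration only, it assumes that the intent state routes on a stored nlpResult value when one is still set. The routes, classify, and handleAccountTypeReply names and the state names are all hypothetical.

```javascript
// Toy model of a stand-alone skill. State names, the keyword-based classify()
// helper, and the rule that the intent state reuses a stored nlpResult are
// simplifying assumptions, not Oracle Digital Assistant behavior or APIs.
const routes = {
  CheckBalance: "promptAccountType",
  Transfer: "startTransfer",
  unresolved: "unresolvedIntent",
};

function classify(message) {
  if (/balance/i.test(message)) return "CheckBalance";
  if (/transfer/i.test(message)) return "Transfer";
  return "unresolved";
}

// Handle one reply to the account-type prompt, which has maxPrompts set to 1.
function handleAccountTypeReply(vars, message, resetBeforeIntent) {
  const answer = message.trim().toLowerCase();
  if (answer === "checking" || answer === "savings") {
    vars.accountType = answer;
    return "showBalance";
  }
  // One invalid answer fires the cancel transition.
  if (resetBeforeIntent) {
    // Reset Variables state: clear the stale NLP result and conversation variables.
    vars.nlpResult = null;
    vars.accountType = null;
  }
  // Intent state: reuse nlpResult if it is still set, otherwise classify the message.
  if (vars.nlpResult == null) {
    vars.nlpResult = classify(message);
  }
  return routes[vars.nlpResult];
}
```

With resetBeforeIntent set to false, an invalid reply such as "transfer 50 dollars" sends the user straight back to promptAccountType, which is the loop the text warns about. With the reset, the new message is classified and routed to startTransfer.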

Note:

While creating stand-alone skills that you expose directly on a channel is an option, we don't recommend it. The main reason is that you miss out on all the conversational and development benefits you get from using a digital assistant. Some of the benefits you would miss out on are:

- Non sequitur navigation, which is the ability of a digital assistant to suspend a current conversation to temporarily change the topic to another conversation.

- Modular development that allows you to partition your development effort into multiple skills, allowing incremental development and improvements to your bot.

- Automatic handling of help requests.

- Reuse of commonly needed skills like frequently asked questions, small talk, and agent integration.

Model-Driven Conversations

Model-driven conversations are an extension of dialog-driven conversations. With model-driven conversations, you reduce the amount of dialog flow code you write, while gaining mature navigation of bot-user interactions that is focused on domain objects.

The idea behind what we refer to as model-driven conversation is to handle the conversation by resolving composite bag entities using Resolve Entities or Common Response components. Composite bag entities are similar to domain objects in that they group a set of entities to form a real-world object that represents an order, booking, customer, or the like.

Each entity in the composite bag is automatically resolved by a Resolve Entities or Common Response component, which means that all bot responses and prompts are generated for you without the need to create dialog flow states for each of them. With model-driven conversations, you write less dialog flow code and get more functionality.

Recommended Approach

Best practices for creating model-driven conversations are to use composite bag entities, entity event handlers, and the Resolve Entities component.

There is nothing wrong with using the Common Response component instead of Resolve Entities, but thanks to entity event handlers, Resolve Entities is sufficient for most implementations.

- Composite bag entities are domain objects that have bag items for each piece of information to collect from a user. Each bag item defines prompts, error messages, and a disambiguation prompt that are displayed when needed. For composite bag items that are based on value-list entities, you can also display multi-select lists. A benefit of composite bag entities is their ability to collect user input for many of their bag items from a single user message. This functionality is called out-of-order extraction and is enabled by default.

- The Resolve Entities component resolves entities by displaying prompts defined in the entity, validating user input, displaying validation error messages defined in the entity, and showing a disambiguation dialog when users provide more information than expected in a message. For composite bag entities, the Resolve Entities component renders user interfaces for all bag items in the composite bag entity in the order in which they are defined.

- An entity event handler is a JavaScript component that is registered with a composite bag entity and contains functions that the Resolve Entities component calls when resolving the composite bag entity. This event-driven approach allows you to run custom code logic and even invoke remote REST services while a user is working in a composite bag entity. We will cover entity event handlers in more depth later in this guide.
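The following JavaScript sketch illustrates out-of-order extraction followed by in-order prompting. It assumes a hypothetical pastaOrder composite bag whose items use simple regular expressions as extractors. The firstGroup, extractAll, and nextPrompt functions are illustrative only. In a skill, the Resolve Entities component does this work for you.

```javascript
// Hypothetical composite bag for a pasta order. Item names, prompts, and the
// regex-based extractors are illustrative, not the ODA entity definition format.
const pastaOrder = [
  { name: "pastaType", prompt: "Which pasta would you like?", extract: (t) => firstGroup(t, /\b(penne|spaghetti|fusilli)\b/i) },
  { name: "sauce", prompt: "Which sauce?", extract: (t) => firstGroup(t, /\b(pesto|marinara|carbonara)\b/i) },
  { name: "size", prompt: "Regular or large?", extract: (t) => firstGroup(t, /\b(regular|large)\b/i) },
];

// Return the first captured group in lowercase, or null when the pattern doesn't match.
function firstGroup(text, pattern) {
  const m = pattern.exec(text);
  return m ? m[1].toLowerCase() : null;
}

// Out-of-order extraction: fill every unresolved bag item that the message mentions,
// regardless of where the item sits in the bag.
function extractAll(bag, message, values) {
  for (const item of bag) {
    if (values[item.name] == null) {
      const value = item.extract(message);
      if (value !== null) values[item.name] = value;
    }
  }
  return values;
}

// The next prompt to show: the first unresolved item, in the order the items are defined.
function nextPrompt(bag, values) {
  const pending = bag.find((item) => values[item.name] == null);
  return pending ? pending.prompt : null;
}
```

For example, "Large spaghetti please" fills pastaType and size in one turn, so the next prompt is the sauce prompt.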

How to Design Model-Driven Conversations

The best design for model-driven conversations minimizes the number of bag items required by each composite bag entity. Think of composite bag entities as individual conversational modules that you chain together into a conversation.

Check with the user after each composite bag entity you resolve to give her the opportunity to continue or discontinue the conversation.

Of course, this shouldn't be implemented with a prompt like "should I continue" followed by a pair of "yes" and "no" buttons. Let your conversation designer create a less intrusive transition that ties two conversation modules together.

Resource Bundles for Messages and Prompts

As with all messages and prompts, we strongly recommend using resource bundle strings for the prompts and messages defined in composite bag entity items. Here are some examples of resource bundle keys for composite bag entities:

- cbe.<entity_name>.<bag_item_name>.errorMessage

- cbe.<entity_name>.<bag_item_name>.disambiguationMessage

- cbe.<entity_name>.<bag_item_name>.prompt1

- cbe.<entity_name>.<bag_item_name>.prompt2
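If you generate or audit resource bundle entries with a script, a small helper that follows the cbe.<entity_name>.<bag_item_name>.<property> key pattern can help. This JavaScript sketch is hypothetical: cbeKey and itemPrompts are illustrative names, and a plain object stands in for the resource bundle.

```javascript
// Hypothetical helper: build a composite bag resource bundle key from the
// entity name, bag item name, and property (errorMessage, prompt1, and so on).
function cbeKey(entityName, itemName, property) {
  return `cbe.${entityName}.${itemName}.${property}`;
}

// Collect the prompts defined for a bag item (prompt1, prompt2, ...) in order,
// stopping at the first missing number. `bundle` is a plain key-to-string object.
function itemPrompts(bundle, entityName, itemName) {
  const prompts = [];
  for (let n = 1; bundle[cbeKey(entityName, itemName, `prompt${n}`)] !== undefined; n++) {
    prompts.push(bundle[cbeKey(entityName, itemName, `prompt${n}`)]);
  }
  return prompts;
}
```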

Apache FreeMarker Best Practices

Apache FreeMarker is a powerful expression language that you can use in your dialog flows as well as in entity and skill configurations. However, FreeMarker expressions become problematic when they get too complex: they are error-prone and, given the lack of debugging options, difficult to troubleshoot.

Our recommendation is to avoid complex multi-line Apache FreeMarker expressions and instead consider one of the following options:

- Break up complex FreeMarker expressions by saving values in variables with short names before using them in the expression.

- Use <#if …> directives to improve the readability of your FreeMarker expressions.

- Use entity event handlers with composite bag entities to deal with complex validation code or to compute values to be assigned to a variable.

- Check for null values in the variables you reference by using the ?has_content built-in. Provide a sensible default value if the expression resolves to false, for example ?has_content?then(…, <SENSIBLE_DEFAULT_VALUE>).
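FreeMarker expressions are hard to debug, so it can help to reason about the default-value pattern in ordinary code first. The following JavaScript analogue (not FreeMarker) approximates the semantics of ?has_content. Missing or null values, empty strings, and empty sequences or hashes have no content, while numbers, booleans, and dates always do. The hasContent and withDefault names are hypothetical.

```javascript
// Approximates FreeMarker's ?has_content: false for missing/null values, empty
// strings, and empty sequences or hashes; true for everything else.
function hasContent(value) {
  if (value === null || value === undefined) return false;
  if (typeof value === "string" || Array.isArray(value)) return value.length > 0;
  if (value instanceof Date) return true; // dates always have content
  if (typeof value === "object") return Object.keys(value).length > 0; // hash-like objects
  return true; // numbers, booleans, and similar scalars always have content
}

// Analogue of value?has_content?then(value, sensibleDefault).
function withDefault(value, sensibleDefault) {
  return hasContent(value) ? value : sensibleDefault;
}
```

In FreeMarker terms, withDefault(x, d) corresponds to x?has_content?then(x, d). Storing the result in a short-named variable before using it elsewhere follows the same break-it-up advice given in the list.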

Checklist for Implementing Conversations

- ☑ Choose sensible and descriptive names for your flows and flow states.

- ☑ Use entity-type variables.

- ☑ User input prompts for entity type variables should read the prompt from the entity.

- ☑ Build model-driven conversations.

- ☑ Build action menus from value-list entities.

- ☑ Avoid complex Apache FreeMarker expressions.

- ☑ Use resource bundles. No text message or prompt should be added directly to the dialog flow.

- ☑ Create reusable flows for parts of the conversation that are common to different flows.

Learn More

- Oracle Digital Assistant Design Camp video: The Art of Navigation in Oracle Digital Assistant

- Oracle TechExchange sample: Model driven conversation – Pasta ordering skill

- Oracle TechExchange sample: Model driven conversation - Expense reporting skill

- Tutorial: Developing dialog flows

- Tutorial: Building composite bag entities

- Tutorial: Optimize Insights Reports with Conversation Markers