Create a Log Source

Log sources define the location of your target's logs. A log source needs to be associated with one or more targets to start log monitoring.

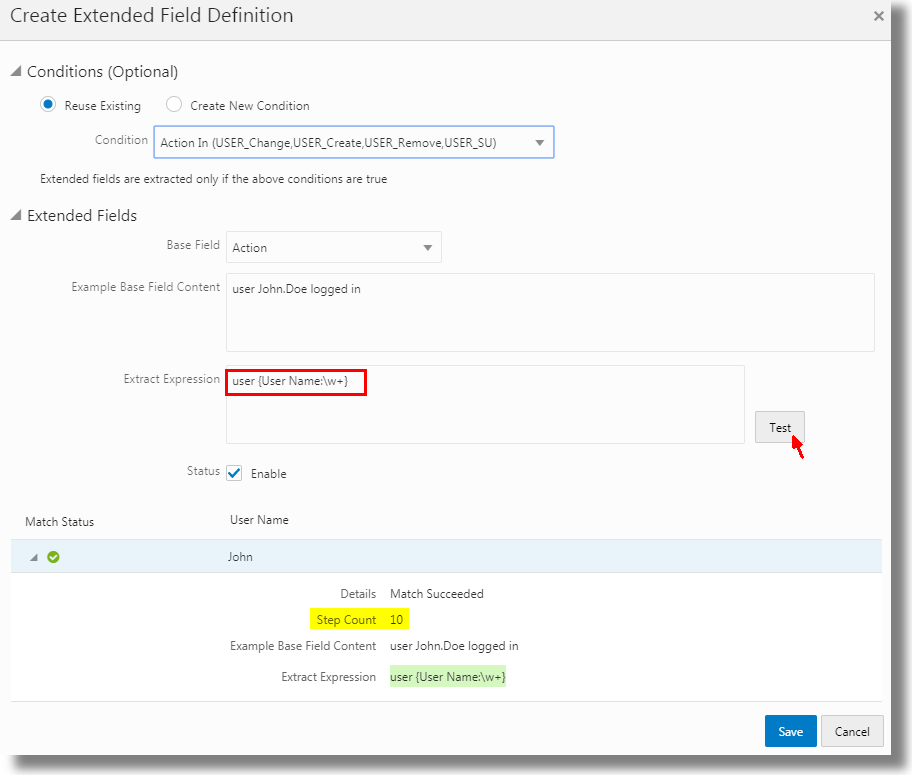

Use Extended Fields in Log Sources

The Extended Fields feature in Oracle Log Analytics lets you extract additional fields from a log entry, in addition to the fields defined by the out-of-the-box parsers.

If you use automatic parsing that only parses time, then the extended field definition is based on the Original Log Content field, because that’s the only field that will exist in the log results. See Use the Generic Parser.

When you search for logs using the updated log source, values of the extended fields are displayed along with the fields extracted by the base parser.
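Conceptually, an extended field applies an extraction expression to a base field and adds each captured value as a new field next to the base-parser fields. The following Python sketch illustrates that idea only. It assumes a syslog-style sample line, and it uses Python named groups (`(?P<name>...)`) as a stand-in for the product's own extraction-expression syntax, which it does not reproduce. The names `EXTENDED_FIELD_EXPR` and `extract_extended_fields` are hypothetical.

```python
import re

# Hypothetical base-field value standing in for the Original Log Content field.
base_field = "Feb  7 05:00:03 host1 sshd[2412]: Accepted password for user1"

# Hypothetical extended-field expression: each named group yields one extra field.
# Python's (?P<name>...) syntax is used for illustration only; it is not the
# product's extraction-expression syntax.
EXTENDED_FIELD_EXPR = re.compile(r"(?P<process>\w+)\[(?P<pid>\d+)\]:")


def extract_extended_fields(value: str) -> dict:
    """Return the extended fields found in a base-field value (empty if no match)."""
    match = EXTENDED_FIELD_EXPR.search(value)
    return match.groupdict() if match else {}


# Base-parser fields and extended fields side by side, as in search results.
result = {"Original Log Content": base_field}
result.update(extract_extended_fields(base_field))
print(result["process"], result["pid"])  # sshd 2412
```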

Oracle Log Analytics lets you search for specific extended fields. You can search by how a field was created, by the type of its base field, or by example content of the field. Enter the example content in the Search field, or click the down arrow to open the search dialog box. In the search dialog box, under Creation Type, select whether the extended fields that you're looking for are out-of-the-box or user-defined. Under Base Field, select from the available options. You can also specify the example content or the extended field extraction expression to use for the search. Click Apply Filters.

Table 6-1 Sample Example Content and Extended Field Extraction Expression

| Log Source | Parser Name | Base Field | Example Content | Extended Field Extraction Expression |
|---|---|---|---|---|
| | Linux Syslog Format | | | |
| | Yum Format | | | |
| | Database Alert Log Format (Oracle DB 11.1+) | | | |
| | WLS Server Log Format | | | |

Use Data Filters in Log Sources

Oracle Log Analytics lets you mask and hide sensitive information in your log records, and hide entire log entries, before the log data is uploaded to the cloud. Using the Data Filters tab under Log Sources in the Configuration page, you can mask IP addresses, user IDs, host names, and other sensitive information with replacement strings, drop specific keywords and values from a log entry, and hide an entire log entry.

Masking Log Data

If you want to mask information such as the user name and the host name from the log entries:

-

From Oracle Log Analytics, click the OMC Navigation icon on the top-left corner of the interface. In the OMC Navigation bar, click Administration Home.

-

In the Log Sources section, click Create source.

Alternatively, click the link that shows the number of available log sources in the Log Sources section, and then in the Log Sources page, click Create.

This displays the Create Log Source dialog box.

-

Specify the relevant values for the Source, Source Type, Entity Type, and File Parser fields.

-

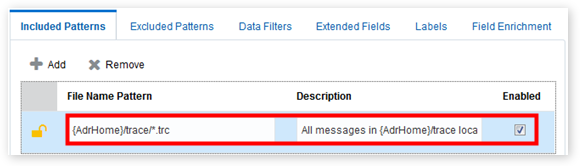

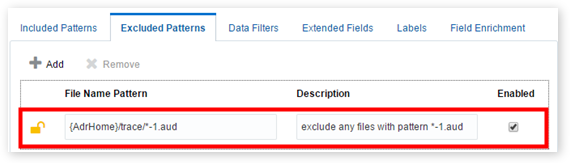

In the Included Patterns tab, click Add to specify file name patterns for this log source.

-

Click the Data Filters tab and click Add.

-

Enter the mask Name, select Mask as the Type, and enter the Find Expression value and its associated Replace Expression value.

| Name | Find Expression | Replace Expression |
|---|---|---|
| mask username | `User=(\S+)\s+` | confidential |
| mask host | `Host=(\S+)\s+` | mask_host |

Note:

The syntax of the replace string should match the syntax of the string that's being replaced. For example, a number shouldn't be replaced with a string. An IP address of the form ddd.ddd.ddd.ddd should be replaced with 000.000.000.000, not with 000.000. If the syntaxes don't match, then the parsers will break.

-

Click Save.

When you view the masked log entries for this log source, you’ll find that Oracle Log Analytics has masked the values of the fields that you’ve specified.

-

User = confidential

-

Host = mask_host
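The Mask type can be pictured as a regular-expression substitution. The following Python sketch assumes that only the text captured by the first group is replaced and the rest of the match is kept, which is consistent with the User and Host results above; the product's exact replacement rules are not described here. The sample log line and the `mask_ip` helper are hypothetical, and `mask_ip` illustrates the Note's advice to keep the ddd.ddd.ddd.ddd shape of an IP address.

```python
import re

# Hypothetical log line used only for this illustration.
line = "User=jsmith Host=db01.example.com IP=10.20.30.40 action=login"

# (name, find expression, replace expression), modeled on the mask table.
MASKS = [
    ("mask username", r"User=(\S+)\s+", "confidential"),
    ("mask host", r"Host=(\S+)\s+", "mask_host"),
]


def apply_mask(text: str, find: str, replace: str) -> str:
    """Replace only the text captured by group 1 of each match of `find`."""
    def _sub(m: re.Match) -> str:
        whole, base = m.group(0), m.start(0)
        return whole[: m.start(1) - base] + replace + whole[m.end(1) - base:]
    return re.sub(find, _sub, text)


def mask_ip(text: str) -> str:
    """Hypothetical IP mask that keeps the ddd.ddd.ddd.ddd shape."""
    return re.sub(r"\b\d{1,3}(?:\.\d{1,3}){3}\b", "000.000.000.000", text)


masked = line
for _name, find, replace in MASKS:
    masked = apply_mask(masked, find, replace)
masked = mask_ip(masked)
print(masked)  # User=confidential Host=mask_host IP=000.000.000.000 action=login
```

Replacing only the captured group keeps the `User=` and `Host=` keys, so a parser that looks for those keys still finds them after masking.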

Note:

Apart from adding data filters when creating a log source, you can also edit an existing log source to add data filters. See Manage Existing Log Sources to learn about editing existing log sources.

Note:

Data masking works on continuous log monitoring as well as on syslog listeners.

Hash Masking the Log Data

When you mask log data as described in the previous section, the masked information is replaced by the static string provided in the Replace Expression. For example, when the user name is masked with the string confidential, every user name in the log records is replaced with confidential at every occurrence. A hash mask instead replaces each found value with a hash that is unique to that value. For example, if the log records contain multiple user names, then each user name is replaced with its own hash. Because user1 is replaced with the same text hash, such as ebdkromluceaqie, at every occurrence, the hash can still be used for analytical purposes.

To apply the hash mask data filter on your log data:

-

From Oracle Log Analytics, click the OMC Navigation icon on the top-left corner of the interface. In the OMC Navigation bar, click Administration Home.

-

In the Log Sources section, click Create source. Alternatively, click the link that shows the number of available log sources in the Log Sources section, and then in the Log Sources page, click Create. This displays the Create Log Source dialog box.

You can also edit an existing log source. In the Log Sources page, click the icon next to your log source, and then click Edit. This displays the Edit Log Source dialog box.

-

Specify the relevant values for the Source, Source Type, Entity Type, and File Parser fields.

-

In the Included Patterns tab, click Add to specify file name patterns for this log source.

-

Click the Data Filters tab and click Add.

-

Enter the mask Name, select Hash Mask as the Type, and enter the Find Expression value and its associated Replace Expression value.

| Name | Find Expression | Replace Expression |
|---|---|---|
| Mask User Name | `User=(\S+)\s+` | Text Hash |
| Mask Port | `Port=(\d+)\s+` | Numeric Hash |

-

Click Save.

As a result of the example hash masking above, each user name is replaced by a unique text hash, and each port number is replaced by a unique numeric hash.

You can filter the hash-masked log data by using the hash. See Filter Logs by Hash Mask.
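A hash mask maps each distinct value to a stable hash, so equal inputs give equal outputs and occurrences can still be grouped and counted. The hash algorithm that Oracle Log Analytics uses isn't described here, so this Python sketch uses SHA-256 from `hashlib` as an assumed stand-in. The helpers `text_hash` and `numeric_hash`, their output lengths, and the sample records are all hypothetical.

```python
import hashlib
import re
import string
from typing import Callable


def _digest(value: str) -> bytes:
    # Assumption: SHA-256 stands in for the product's hash algorithm.
    return hashlib.sha256(value.encode("utf-8")).digest()


def text_hash(value: str, length: int = 15) -> str:
    """Stable lowercase-letter hash, similar in shape to 'ebdkromluceaqie'."""
    return "".join(string.ascii_lowercase[b % 26] for b in _digest(value)[:length])


def numeric_hash(value: str, digits: int = 10) -> str:
    """Stable all-digit hash, so a numeric field stays numeric."""
    n = int.from_bytes(_digest(value)[:8], "big") % (10 ** digits)
    return str(n).zfill(digits)


def hash_mask(text: str, find: str, hasher: Callable[[str], str]) -> str:
    """Replace capture group 1 of every match of `find` with hasher(group 1)."""
    def _sub(m: re.Match) -> str:
        whole, base = m.group(0), m.start(0)
        return whole[: m.start(1) - base] + hasher(m.group(1)) + whole[m.end(1) - base:]
    return re.sub(find, _sub, text)


records = ["User=user1 Port=8080 ok", "User=user2 Port=8080 ok", "User=user1 Port=443 ok"]
masked = [
    hash_mask(hash_mask(rec, r"User=(\S+)\s+", text_hash), r"Port=(\d+)\s+", numeric_hash)
    for rec in records
]
for rec in masked:
    print(rec)
```

In this sketch, user1 gets the same text hash in the first and third records, and port 8080 gets the same numeric hash in the first two. Truncated hashes can collide in principle, and shorter numeric hashes are more prone to it.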

Dropping Specific Keywords or Values from Your Log Records

Oracle Log Analytics lets you search for a specific keyword or value in log records and drop the matched keyword or value if that keyword exists in the log records.

Consider the following log record:

ns5xt_119131: NetScreen device_id=ns5xt_119131 [Root]system-notification-00257(traffic): start_time="2017-02-07 05:00:03" duration=4 policy_id=2 service=smtp proto=6 src zone=Untrust dst zone=mail_servers action=Permit sent=756 rcvd=756 src=249.17.82.75 dst=212.118.246.233 src_port=44796 dst_port=25 src-xlated ip=249.17.82.75 port=44796 dst-xlated ip=212.118.246.233 port=25 session_id=18738

If you want to hide the keyword device_id and its value from the log record:

-

Perform Step 1 through Step 5 listed in the Masking Log Data section.

-

Enter the filter Name, select Drop String as the Type, and enter a Find Expression value such as `device_id=\S*`.

-

Click Save.

When you view the log entries for this log source, you’ll find that Oracle Log Analytics has dropped the keywords or values that you’ve specified.

Note:

Ensure that your parser regular expression matches the log record pattern; otherwise, Oracle Log Analytics may not parse the records properly after dropping the keyword.
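A Drop String filter removes only the matched text and keeps the rest of the entry. The following Python sketch applies the Find Expression `device_id=\S*` to a shortened copy of the sample record, then tests two hypothetical parser expressions against the result. It shows the point of the note: a parser that requires `device_id=` stops matching once the keyword is dropped, while one that treats it as optional keeps working. Neither parser is a built-in Oracle parser.

```python
import re

# Shortened copy of the sample NetScreen record above.
record = (
    "ns5xt_119131: NetScreen device_id=ns5xt_119131 "
    "[Root]system-notification-00257(traffic): duration=4 policy_id=2 service=smtp"
)

# Drop String: remove every match of the Find Expression, keep everything else.
dropped = re.sub(r"device_id=\S*", "", record)
print(dropped)

# Hypothetical parser expressions, for illustration only.
STRICT_PARSER = re.compile(r"^(\S+): NetScreen device_id=(\S+) \[(\w+)\]")
TOLERANT_PARSER = re.compile(r"^(\S+): NetScreen\s+(?:device_id=(\S+)\s+)?\[(\w+)\]")

print(bool(STRICT_PARSER.match(record)))     # True
print(bool(STRICT_PARSER.match(dropped)))    # False: the parser expects device_id=
print(bool(TOLERANT_PARSER.match(dropped)))  # True: device_id= is optional
```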

Note:

Apart from adding data filters when creating a log source, you can also edit an existing log source to add data filters. See Manage Existing Log Sources to learn about editing existing log sources.

Dropping an Entire Line in a Log Record Based on Specific Keywords

Oracle Log Analytics lets you search for a specific keyword or value in log records and drop an entire line in a log record if that keyword exists.

Consider the following log record:

ns5xt_119131: NetScreen device_id=ns5xt_119131 [Root]system-notification-00257(traffic): start_time="2017-02-07 05:00:03" duration=4 policy_id=2 service=smtp proto=6 src zone=Untrust dst zone=mail_servers action=Permit sent=756 rcvd=756 src=249.17.82.75 dst=212.118.246.233 src_port=44796 dst_port=25 src-xlated ip=249.17.82.75 port=44796 dst-xlated ip=212.118.246.233 port=25 session_id=18738

Let’s say that you want to drop entire lines if the keyword device_id exists in them:

-

Perform Step 1 through Step 5 listed in the Masking Log Data section.

-

Enter the filter Name, select Drop Log Entry as the Type, and enter a Find Expression value such as `.*device_id=.*`.

-

Click Save.

When you view the log entries for this log source, you’ll find that Oracle Log Analytics has dropped all those lines that contain the string device_id in them.
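A Drop Log Entry filter discards the whole entry when the Find Expression matches. This Python sketch models that with a full-match test per entry. Whether the product requires the expression to match the whole entry is an assumption here, and the second sample entry is hypothetical.

```python
import re

# Drop Log Entry, assuming the expression must match the whole entry.
DROP_ENTRY = re.compile(r".*device_id=.*")

entries = [
    "ns5xt_119131: NetScreen device_id=ns5xt_119131 [Root]system-notification-00257(traffic): duration=4",
    "sshd[2412]: Accepted password for user1",  # hypothetical entry without the keyword
]

# Keep only the entries that the expression does not match.
kept = [e for e in entries if not DROP_ENTRY.fullmatch(e)]
print(kept)  # ['sshd[2412]: Accepted password for user1']
```

For a single-line entry, full-matching `.*device_id=.*` is equivalent to checking whether `device_id=` appears anywhere in it. In Python, `.` does not match a newline unless `re.DOTALL` is set, so this sketch would need that flag for multi-line entries.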

Note:

Apart from adding data filters when creating a log source, you can also edit an existing log source to add data filters. See Manage Existing Log Sources to learn about editing existing log sources.

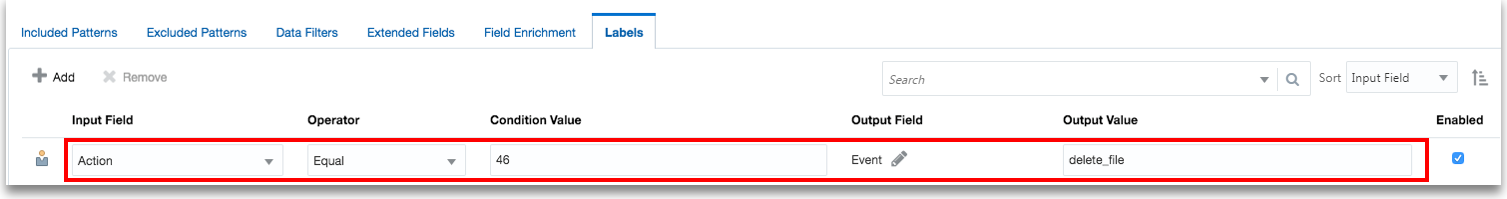

Use Labels in Log Sources

Oracle Log Analytics lets you add labels or tags to log entries, based on defined conditions.

Oracle Log Analytics lets you search for specific labels. You can search based on any of the parameters defined for the labels. Enter the search string in the Search field, or click the down arrow to open the search dialog box, where you can specify the search criteria. Under Creation Type, select whether the labels that you're looking for are out-of-the-box or user-defined. Under Input Field, Operator, and Output Field, select from the available options. You can also specify the condition value or the output value to use for the search. Click Apply Filters.

You can now search log data based on the labels that you’ve created. See Filter Logs by Labels.

You can also use labels to enrich the data set instead of creating a lookup table for a one-time operation. For example, if the input field Action has the value 46, then the output field Event is loaded with the value delete_file.
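A label rule can be thought of as a condition on an input field plus a value written to an output field. This Python sketch models the Action and Event example. The `LabelRule` class, the `equals` operator name, and the sample entries are assumptions for illustration, not the product's configuration format.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class LabelRule:
    """Hypothetical model of a label rule: condition on one field, value for another."""
    input_field: str
    operator: str          # only "equals" is modeled in this sketch
    condition_value: str
    output_field: str
    output_value: str

    def apply(self, entry: Dict[str, str]) -> Dict[str, str]:
        """Return a copy of the entry with the output field set when the condition holds."""
        if self.operator != "equals":
            raise ValueError(f"unsupported operator: {self.operator}")
        enriched = dict(entry)
        if entry.get(self.input_field) == self.condition_value:
            enriched[self.output_field] = self.output_value
        return enriched


rule = LabelRule("Action", "equals", "46", "Event", "delete_file")
entries = [{"Action": "46", "User": "user1"}, {"Action": "12", "User": "user2"}]
results = [rule.apply(e) for e in entries]
for r in results:
    print(r.get("Event"))
```

Returning a copy leaves the original entries untouched, which makes it easy to compare the data set before and after enrichment.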