11 Quality Reports

Bots that can easily distinguish between their intents will have fewer intent resolution errors and better user adoption. The quality reports can help you reach these goals.

You can use these reports while you’re building your training data and later on, after you’ve published your bot, to find out how well your intents are handling customer messages at any point in time.

How Do I Use the Data Quality Reports?

-

Utterances—Assigns quality rankings to pairs of intents as follows:

-

High—The intents are distinct.

-

Medium—The intents have similar utterances.

-

Low—The intent pairs aren’t differentiated enough.

-

-

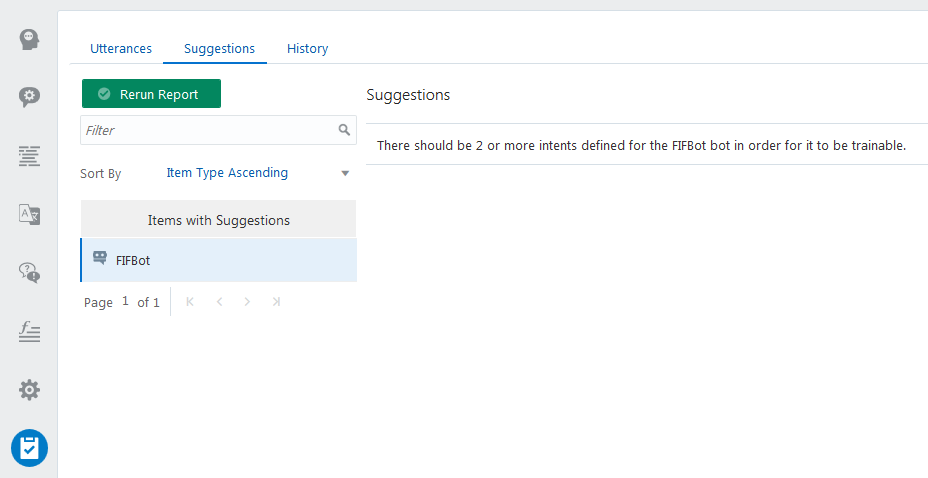

Suggestions—Tells you if your bot is viable. You can find out if you’ve added enough intents and if you defined a sufficient number of utterances for each intent.

-

History—Shows your bot’s resolution history, so that you can identify when the intents worked as expected and when they didn’t. You can use this feedback to retrain your bot.

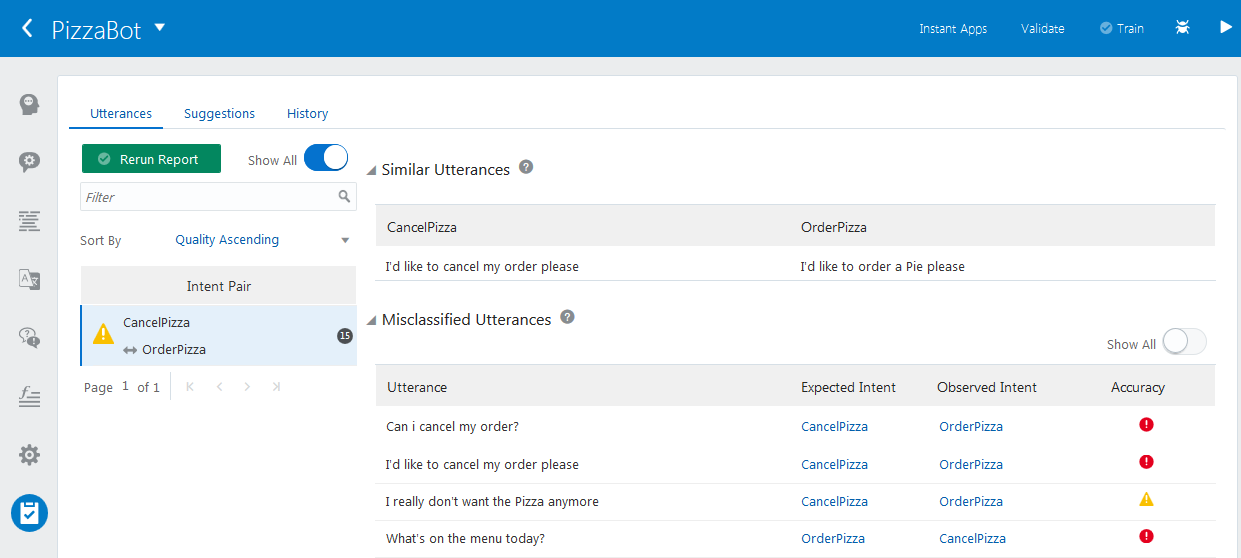

Utterances

When you’re building your training corpus, you can gauge how distinct your intents are from one another by running an utterance quality report. This report shows you different combinations of intent pairs, each rated on the similarity of their respective utterances. It generates these results by randomly splitting the utterances into two sets: training and testing. It builds and trains a model from 80% of the utterances and then uses the remaining 20% to test this model. If you don’t already have a lot of training data, you can build high-quality intents by combining this report with the utterance guidelines.
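The 80/20 split described above is a random holdout. The following Python sketch shows the idea. It is an illustration only: the `(text, intent)` tuple representation, the `split_utterances` name, and the `seed` parameter are hypothetical. The report’s actual procedure, for example whether it splits per intent, isn’t documented here.

```python
import random


def split_utterances(utterances, train_fraction=0.8, seed=None):
    """Randomly split labeled utterances into a training set and a testing set.

    `utterances` is a list of (text, intent) tuples, a hypothetical
    representation of the training corpus used only for illustration.
    """
    rng = random.Random(seed)      # seeded generator makes the split repeatable
    shuffled = list(utterances)    # copy so the caller's list isn't reordered
    rng.shuffle(shuffled)
    cut = int(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


corpus = [
    ("what's my balance", "Balances"),
    ("how much money do I have", "Balances"),
    ("show my checking balance", "Balances"),
    ("balance in savings", "Balances"),
    ("how much is in my account", "Balances"),
    ("what are your opening hours", "FAQ"),
    ("where is the nearest branch", "FAQ"),
    ("how do I reset my password", "FAQ"),
    ("do you charge fees", "FAQ"),
    ("can I open an account online", "FAQ"),
]

train, test = split_utterances(corpus, seed=42)
print(len(train), len(test))  # 8 2
```

Because the split is random, a small corpus can produce noticeably different test sets from one run to the next. This is one reason a report based on it is a guide rather than a final verdict on your intents.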

Run an Utterance Quality Report

Important:

Before you run a report, you need to train your bot with Trainer Tm.

- After the training completes, click Quality in the left navbar.

-

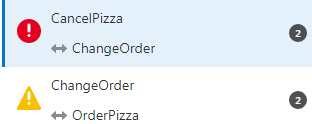

Click Run Report. The report scores the intent pairs in terms of their utterances that are too alike.

| Score | What Does This Mean? (And What Do I Do?) |
|---|---|
| High | While your bot can easily distinguish between these intents, they may still have utterances that are too alike, so you should continue to edit and add training data. |
| Medium | The utterances are so similar that they potentially blur the meanings of these intents. Because your bot may have trouble distinguishing between these intents, edit or delete these utterances. |
| Low | The utterances are too alike, so the bot can’t distinguish between the intents. To fix this, edit or delete these utterances and then retrain the bot. You can also add more utterances to your intent. |

- If needed, click Show All. By default, this switch is toggled off, so the report shows only the medium- and low-ranking intent pairs. Keep in mind that a high-quality ranking for an intent pair doesn’t mean that your corpus is complete or that it doesn’t need more utterances.
- If needed, choose a sorting option to view the intent pairs.

-

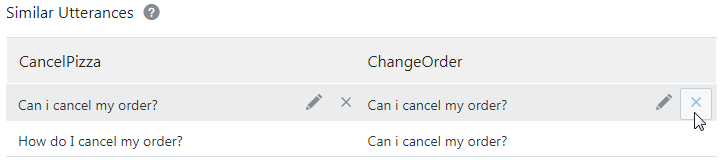

Click an intent pair to see the similar utterances. By hovering over an utterance, you an edit or delete it.

- If needed, adjust the utterances. Based on the intent pair’s score, your typical course of action is one of the following:
  - Adding new utterances.
  - Modifying the similar utterances.
  - Deleting similar utterances.
  - Leaving the utterances alone, even if they clash.
  - Collapsing the two intents into a single intent if they have too many utterances in common. This common intent uses entities (such as list value entities with synonyms) to recognize the distinctions in the user input.

Important:

Keep your sights on how modifying the data improves your bot’s coverage, not on the report results. While you can increase accuracy within the context of this report by adding similar utterances to an intent, you should instead focus on anticipating real-world user input and maintaining a diverse set of utterances for each intent. If you pad your intents to suit the report, your bot won’t perform well.

- After you’ve made your changes, retrain your bot and then click Rerun Report.
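Suppose you collapse two clashing intents into one. The following Python sketch illustrates the entity-based approach mentioned in the steps above. It assumes two hypothetical intents, CheckingBalance and SavingsBalance, merged into a single Balances intent. A hypothetical AccountType list value entity maps each canonical value to its synonyms, and a naive substring match picks the value out of the message. This is not the platform’s entity-matching algorithm. It only shows how one intent plus an entity can still tell the requests apart.

```python
# Hypothetical list value entity: each canonical value maps to its synonyms.
ACCOUNT_TYPE = {
    "checking": ["checking", "current", "everyday"],
    "savings": ["savings", "saving", "rainy day"],
}


def resolve_list_value(message, entity):
    """Return the canonical value whose synonym appears in the message, or None.

    Naive substring matching: "current" would also match "currently".
    """
    text = message.lower()
    for value, synonyms in entity.items():
        if any(synonym in text for synonym in synonyms):
            return value
    return None


# One collapsed Balances intent; the entity value tells the requests apart.
print(resolve_list_value("What's in my rainy day account?", ACCOUNT_TYPE))  # savings
print(resolve_list_value("Show my current account balance", ACCOUNT_TYPE))  # checking
```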

Troubleshooting Utterance Quality Reports

Why does the report show similar utterances for high-quality intent pairs?

The report doesn’t just compare individual utterances; it also looks at each intent as a whole. So if most of the utterances for an intent pair are distinct, a small number of similar ones won’t detract from the overall quality rating. For example, suppose you have two intents, FAQ and Balances, with 100 utterances each. The bot easily distinguishes between them, even though one or two utterances could belong to either intent.

Why doesn’t the report show similar utterances for low-quality pairs?

This can happen because, on the whole, the report can’t distinguish between the two intents even though they don’t share any utterances. Factors such as a low number of utterances or vague, general wording can cause this.

Why does the report continue to show my utterances as similar, even after I edited them?

Whenever you delete or edit an utterance, you need to retrain the bot with Trainer Tm before you run the report again.

Suggestions

When you’re starting out with your data set, check the Suggestions page to find out if your bot meets the minimum standards of having at least two intents, each of which has two or more utterances.
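The minimum standard above is simple enough to check yourself while drafting a corpus. This Python sketch assumes a hypothetical dictionary that maps each intent name to its list of utterances. The function name and the message wording are illustrative and aren’t taken from the Suggestions page.

```python
def meets_minimum_standards(intents, min_intents=2, min_utterances=2):
    """Check for at least two intents, each with two or more utterances.

    `intents` maps an intent name to its list of utterances (hypothetical structure).
    Returns (ok, problems), where problems lists human-readable reasons.
    """
    problems = []
    if len(intents) < min_intents:
        problems.append(f"only {len(intents)} intent(s); need at least {min_intents}")
    for name, utterances in intents.items():
        if len(utterances) < min_utterances:
            problems.append(
                f"{name} has {len(utterances)} utterance(s); need at least {min_utterances}"
            )
    return not problems, problems


ok, problems = meets_minimum_standards({
    "Balances": ["what's my balance", "how much do I have"],
    "FAQ": ["what are your hours"],
})
print(ok)        # False
print(problems)  # ['FAQ has 1 utterance(s); need at least 2']
```

Passing this check only means the bot is viable. It says nothing about whether the intents are distinct, which is what the utterance quality report measures.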

History

The History report is designed to help you look for:

- Complete failures (unresolved intents): When your bot can’t match the user message to any of its intents.
- Potentially misclassified user messages: When the top intent is separated from the second intent by only a narrow margin.
- Low confidence levels: When the intended intent resolves the message, but just barely, as indicated by a low confidence level.

How Do I Run a History Report?

-

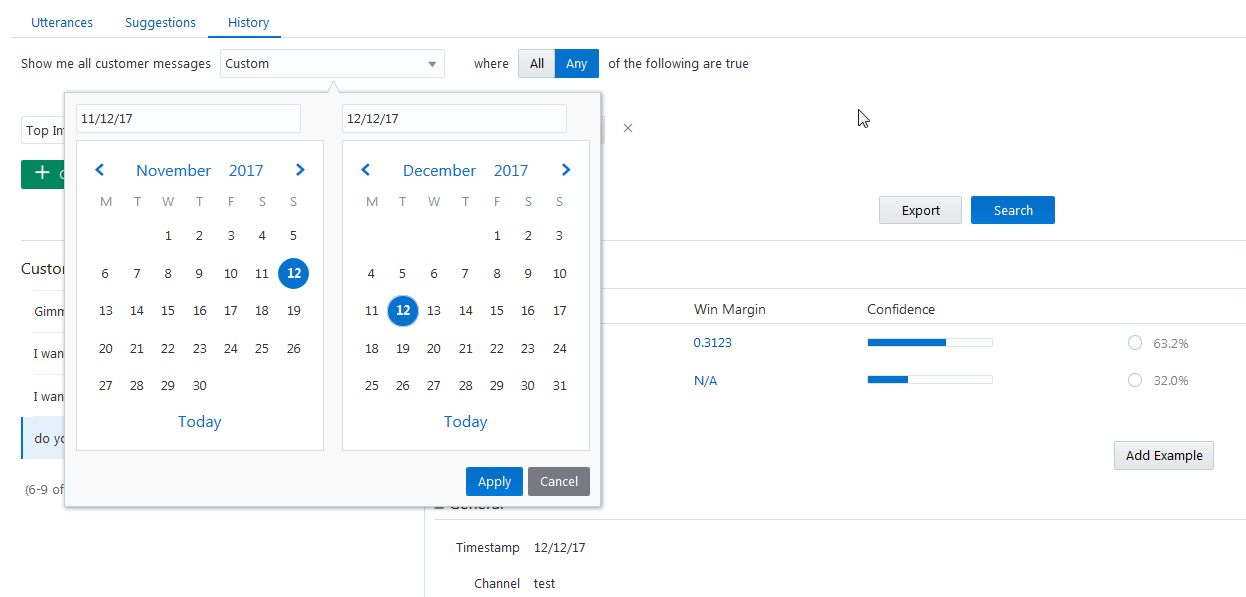

Choose the time period. You can use one of the preset periods, like Today, Yesterday, or Last 90 Days, or add your own by first choosing Custom and then by setting the collection period using the date picker.

Tip:

If you don’t want to filter this data any further, delete the filter criteria and then click Search.

-

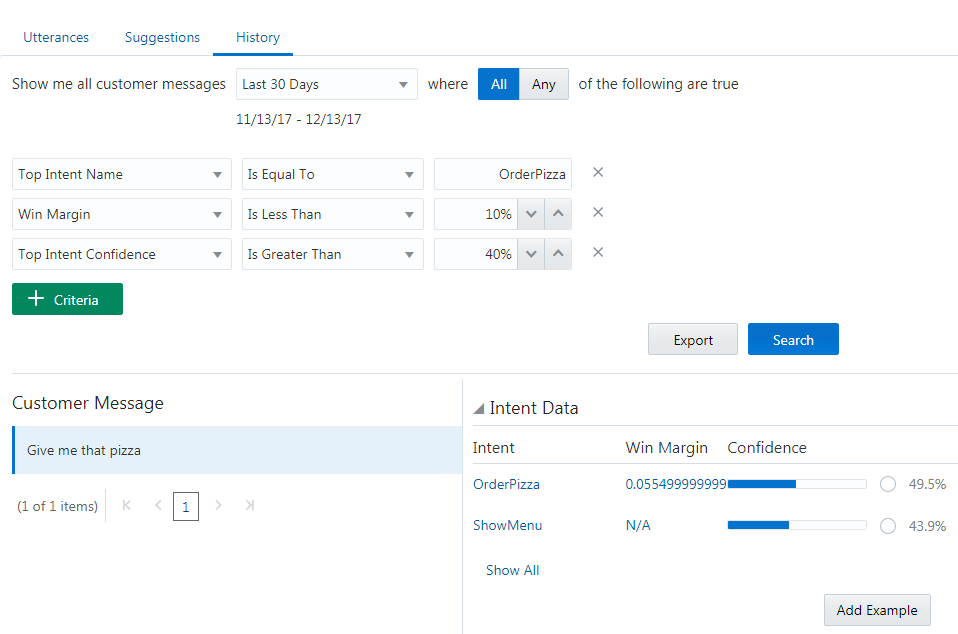

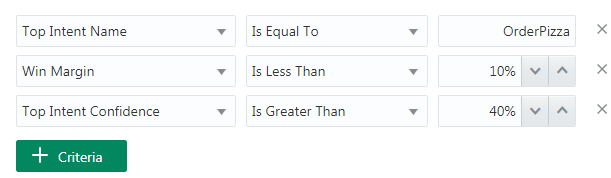

If you want to use the report to find out about intents that resolve the messages correctly but with only a low confidence level or by a thin margin, first chose one of the operators (All or Any) and then apply your search criteria.

-

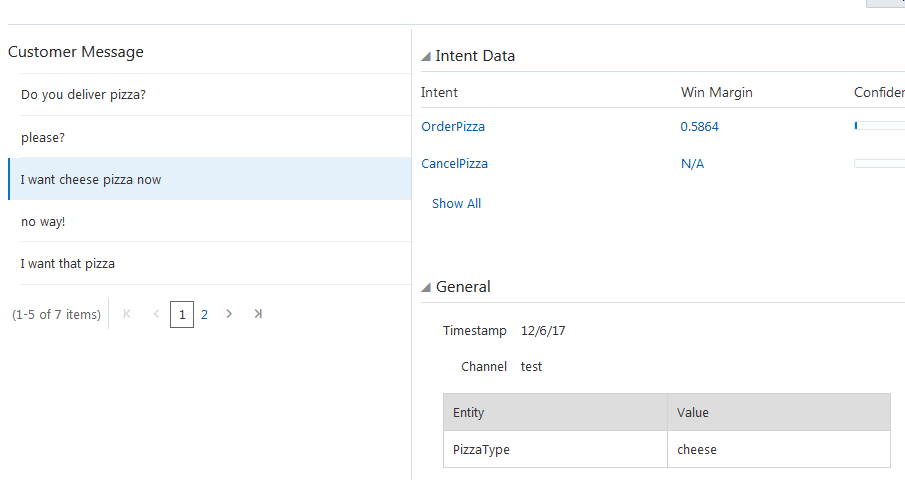

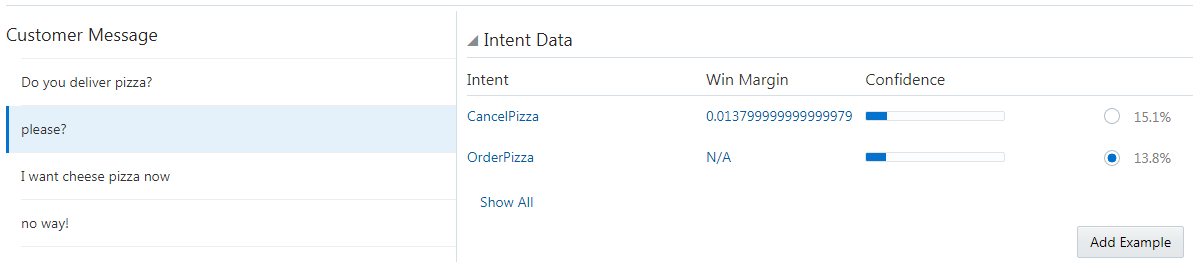

Click Search. For each message within the time frame, the report shows you which intent your bot used to resolve the message along with the second-runner up. To reflect the intent ranking, the report shows you the intents’ confidence ranking and, for the top intent, it’s win margin, the difference, in terms of confidence, between it and the second intent.

Tip:

In general, you set the win margin at around 10%. If you click Show All, you can see the lower-ranking intents (if any).

By expanding the General section of the page, you can see which entities played a role in resolving the message, as well as the channel (which you can also set as a filter).

- If you think the message improves your corpus by, say, widening the win margin between the top two intents, select the intent’s confidence level radio button and then click Add Example. Because you’ve now added a new utterance to your corpus, remember to retrain your bot.
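The win margin and the All/Any operators described in these steps can be sketched in a few lines of Python. This sketch makes several assumptions. Confidence scores are fractions between 0 and 1, so a 10% win margin is 0.10. The record layout, the 0.7 low-confidence cutoff, and the function names are all hypothetical. The code only illustrates the arithmetic and the filter logic, not how the report is implemented.

```python
def win_margin(scores):
    """Return the top confidence score minus the second-highest one.

    `scores` maps intent name -> confidence between 0 and 1 (hypothetical format).
    With fewer than two intents, missing scores count as 0 (an assumption).
    """
    ranked = sorted(scores.values(), reverse=True) + [0.0, 0.0]
    return ranked[0] - ranked[1]


def matches(record, criteria, operator="All"):
    """Apply search criteria with All (every criterion must hold) or Any (at least one)."""
    results = [criterion(record) for criterion in criteria]
    if operator == "All":
        return all(results)
    if operator == "Any":
        return any(results)
    raise ValueError(f"unknown operator: {operator}")


# Hypothetical history record: the bot chose Transfer, with Balances as runner-up.
record = {"text": "move money to savings",
          "scores": {"Transfer": 0.90, "Balances": 0.85, "FAQ": 0.10}}
print(round(win_margin(record["scores"]), 2))  # 0.05

criteria = [
    lambda r: max(r["scores"].values()) < 0.7,   # low top-intent confidence (hypothetical cutoff)
    lambda r: win_margin(r["scores"]) < 0.10,    # thin win margin, around 10%
]
print(matches(record, criteria, "All"))  # False: the top confidence is high
print(matches(record, criteria, "Any"))  # True: the margin is thin
```

The example record shows why the operator matters. Its top intent is confident, but it only narrowly beats the runner-up, so only Any returns it.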

Running Failure Reports

To identify all of the messages that your bot treated as unresolved because the resolution fell below the confidence threshold, set Top Intent Confidence to a value lower than the one set for the System.Intent’s confidenceThreshold property. You can add the messages returned by this report to an existing intent, or if they indicate that users want your bot to perform some other action entirely, you can use them to define a new intent.
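As a rough sketch of what this filter selects, the following Python function keeps the messages whose top-intent confidence is below a threshold. The record format is hypothetical. The 0.4 in the example is an arbitrary stand-in for whatever value your confidenceThreshold property holds, not a documented default.

```python
def unresolved_messages(history, confidence_threshold):
    """Return texts of messages whose top-intent confidence fell below the threshold.

    `history` is a list of dicts with "text" and "topScore" keys (hypothetical
    format); `confidence_threshold` stands in for System.Intent's
    confidenceThreshold value.
    """
    return [m["text"] for m in history if m["topScore"] < confidence_threshold]


history = [
    {"text": "what's my balance", "topScore": 0.92},
    {"text": "can I order a pizza", "topScore": 0.21},
    {"text": "send cash to mom", "topScore": 0.38},
]
print(unresolved_messages(history, confidence_threshold=0.4))
# ['can I order a pizza', 'send cash to mom']
```

In this made-up sample, "send cash to mom" looks like a candidate utterance for an existing transfer-style intent. "can I order a pizza" might point to an action that needs a new intent, or to one the bot should simply decline.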

Running Low Confidence Reports

A top intent that resolves a message, but only with low confidence, might indicate that you need to revise the utterances that belong to the intents, because messages are potentially being misclassified. To run a report of low-confidence intents, set Top Intent Confidence equal to a value that’s just above the one set for the System.Intent’s confidenceThreshold property.
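The low-confidence report looks at a narrow band just above the threshold. This hypothetical Python sketch makes two assumptions. The band width of 0.1 is arbitrary, since the text only says "just above". A message scoring exactly at the threshold is treated as resolved; how the platform treats that boundary isn’t stated here.

```python
def low_confidence_messages(history, confidence_threshold, band=0.1):
    """Return messages that resolved, but with a top score only just above the threshold.

    "Resolved" here means topScore >= confidence_threshold (an assumption);
    `band` is a hypothetical width for "just above".
    """
    upper = confidence_threshold + band
    return [m["text"] for m in history
            if confidence_threshold <= m["topScore"] < upper]


history = [
    {"text": "what's my balance", "topScore": 0.92},
    {"text": "move some money", "topScore": 0.45},
    {"text": "can I order a pizza", "topScore": 0.21},
]
print(low_confidence_messages(history, confidence_threshold=0.4))
# ['move some money']
```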

Troubleshooting Narrow Win Margins

Thin win margins might indicate where user messages fall in between your bot’s intents. Review these messages to make sure that they are getting resolved by the right intent. You can also configure the System.Intent’s confidenceWinMargin property to help your bot respond to vaguely worded or compound user messages.
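To see which intents users are falling between, you could group narrow-margin messages by their top two intents. This Python sketch assumes history records with topIntent, secondIntent, and winMargin fields. Those fields are modeled on the resolution history columns, but the in-memory format is hypothetical. Anything under 0.10 counts as narrow, following the 10% rule of thumb. Pairs that come up repeatedly are good candidates for reviewing utterances or for tuning confidenceWinMargin.

```python
from collections import Counter


def narrow_margin_pairs(history, max_margin=0.10):
    """Count narrow-margin messages per (topIntent, secondIntent) pair, most frequent first."""
    counts = Counter()
    for m in history:
        if m["winMargin"] < max_margin:
            counts[(m["topIntent"], m["secondIntent"])] += 1
    return counts.most_common()


history = [
    {"text": "move money", "topIntent": "Transfer", "secondIntent": "Balances", "winMargin": 0.04},
    {"text": "money to savings", "topIntent": "Transfer", "secondIntent": "Balances", "winMargin": 0.08},
    {"text": "branch hours", "topIntent": "FAQ", "secondIntent": "Balances", "winMargin": 0.55},
    {"text": "fees on transfers", "topIntent": "FAQ", "secondIntent": "Transfer", "winMargin": 0.06},
]
print(narrow_margin_pairs(history))
# [(('Transfer', 'Balances'), 2), (('FAQ', 'Transfer'), 1)]
```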

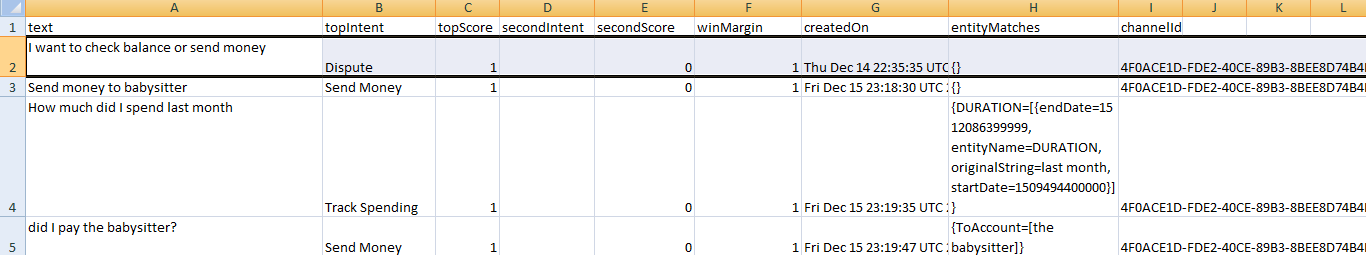

Viewing the Resolution History as a CSV File

The CSV file contains the following columns:

- text
- topIntent
- topScore
- secondIntent
- secondScore
- winMargin
- createdOn
- entityMatches
- channelId
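If you export the history, Python’s standard `csv` module is enough to load it for your own analysis. This sketch makes a few assumptions. The file has a header row that uses the column names listed above, with the top intent in a column named topIntent. The score columns are plain decimal numbers. The sample row, including its date format, is made up. For a real export, open the file with `open(path, newline="")` and pass the file object to the function.

```python
import csv
import io


def read_resolution_history(csv_file):
    """Parse an exported resolution-history CSV into a list of dicts.

    Assumes a header row with the listed column names; score columns are
    converted to floats when present (their real format may differ).
    """
    rows = []
    for row in csv.DictReader(csv_file):
        for key in ("topScore", "secondScore", "winMargin"):
            if row.get(key):          # skip missing or empty values
                row[key] = float(row[key])
        rows.append(row)
    return rows


# Made-up sample export with a single row.
sample = io.StringIO(
    "text,topIntent,topScore,secondIntent,secondScore,winMargin,createdOn,entityMatches,channelId\n"
    "move money,Transfer,0.62,Balances,0.55,0.07,2021-03-01T10:00:00Z,,web\n"
)
rows = read_resolution_history(sample)
print(rows[0]["topIntent"], rows[0]["winMargin"])  # Transfer 0.07
```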