Use Predictions to Identify Asset Risks

Predictions use historical and transactional data to predict future asset parameters, and to identify potential risks to your assets.

You can either use internal Oracle Internet of Things Intelligent Applications Cloud data or import and use external device data to help make predictions for your asset.

Note:

Before predictions can work, the data source must contain at least 72 hours of historical data. The requirement increases if you select a forecast window greater than 72 hours. For example, if you choose to forecast 7 days ahead, the system must have at least 7 days of historical data before predictive analytics can start training.

You may have to wait until the system completes the training for the predictions to start showing.
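The minimum-history rule in the note above can be sketched as a small calculation. This is only an illustration of the stated rule, assuming the requirement is the larger of 72 hours and the forecast window; the function names `required_history` and `can_start_training` are hypothetical and are not part of the product.

```python
from datetime import timedelta

# Baseline from the note above: at least 72 hours of historical data.
MIN_HISTORY = timedelta(hours=72)


def required_history(forecast_window: timedelta) -> timedelta:
    """Return the minimum history needed before training can start.

    Assumption: the requirement is the larger of the 72-hour baseline
    and the selected forecast window.
    """
    return max(MIN_HISTORY, forecast_window)


def can_start_training(available_history: timedelta,
                       forecast_window: timedelta) -> bool:
    """Check whether enough history has accumulated for training."""
    return available_history >= required_history(forecast_window)
```

For example, under these assumptions a 7-day forecast window with only 5 days of accumulated data would not yet qualify for training, while a 24-hour window with 72 hours of data would.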

Predictions help warn you of impending asset failure in advance. Preventive maintenance can help save the costs associated with asset breakdown or unavailability.

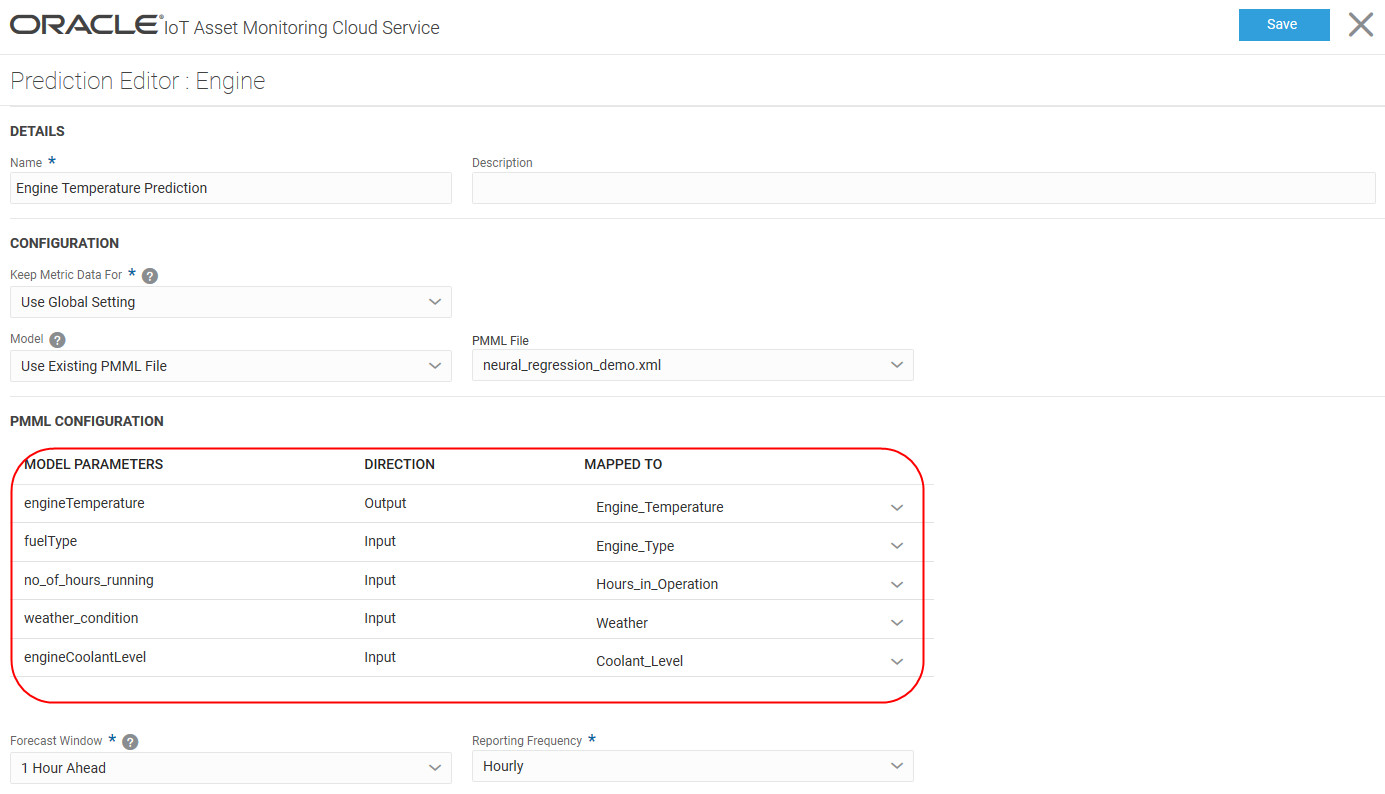

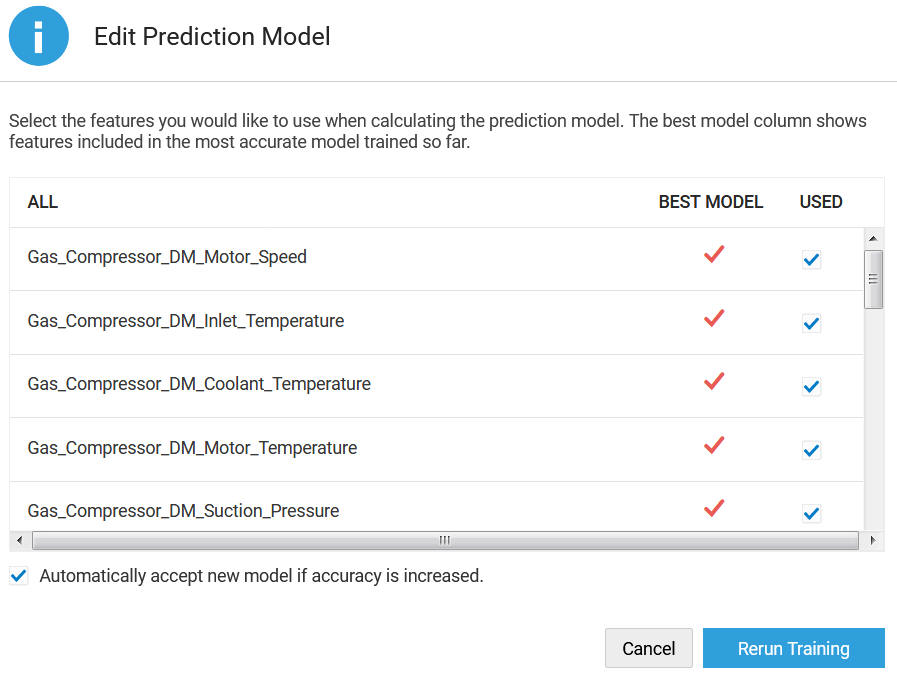

By default, Oracle IoT Asset Monitoring Cloud Service uses the most appropriate built-in training model to train the prediction. However, if your data scientists have externally trained models for your specific environment, you can use these models in place of the training in Oracle IoT Asset Monitoring Cloud Service. Oracle IoT Asset Monitoring Cloud Service then performs the prediction scoring using your pre-trained model. You can use training models supported by PMML4S (PMML Scoring Library for Scala), such as neural networks. When creating a new prediction, upload your PMML file to replace the built-in models used by Oracle IoT Asset Monitoring Cloud Service.
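Before uploading a PMML file, it can be useful to confirm locally that the file is well-formed XML and to see which model type it contains. The following is a minimal sketch using only the Python standard library. The helper `summarize_pmml` and the sample document are hypothetical: the sample is a stripped-down skeleton, not a scorable model, and the check does not tell you whether PMML4S or Oracle IoT Asset Monitoring Cloud Service will accept the file.

```python
import xml.etree.ElementTree as ET

# Top-level PMML elements that are not models themselves.
NON_MODEL_ELEMENTS = {
    "Header",
    "MiningBuildTask",
    "DataDictionary",
    "TransformationDictionary",
    "Extension",
}


def local_name(tag: str) -> str:
    """Strip the '{namespace}' prefix that ElementTree adds to tag names."""
    return tag.rsplit("}", 1)[-1]


def summarize_pmml(pmml_text: str) -> dict:
    """Return the PMML version and the model element names found in the file."""
    root = ET.fromstring(pmml_text)  # raises ParseError if not well-formed
    if local_name(root.tag) != "PMML":
        raise ValueError("root element is not <PMML>")
    models = [
        local_name(child.tag)
        for child in root
        if local_name(child.tag) not in NON_MODEL_ELEMENTS
    ]
    return {"version": root.get("version"), "models": models}


# Hypothetical skeleton for illustration only; a real model has many more elements.
SAMPLE = """<PMML version="4.4" xmlns="http://www.dmg.org/PMML-4_4">
  <Header/>
  <DataDictionary numberOfFields="0"/>
  <NeuralNetwork functionName="regression"/>
</PMML>"""

summary = summarize_pmml(SAMPLE)
```

Here `summary` reports the version declared in the file and lists `NeuralNetwork` as the only model element. An empty `models` list would suggest the file has no model to score with.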

All detected predictions appear on the Predictions page, accessible from the Operations Center or from the Asset Details page of an individual asset. The predictions displayed in the Operations Center depend on your current context (organization, group, and subgroup).

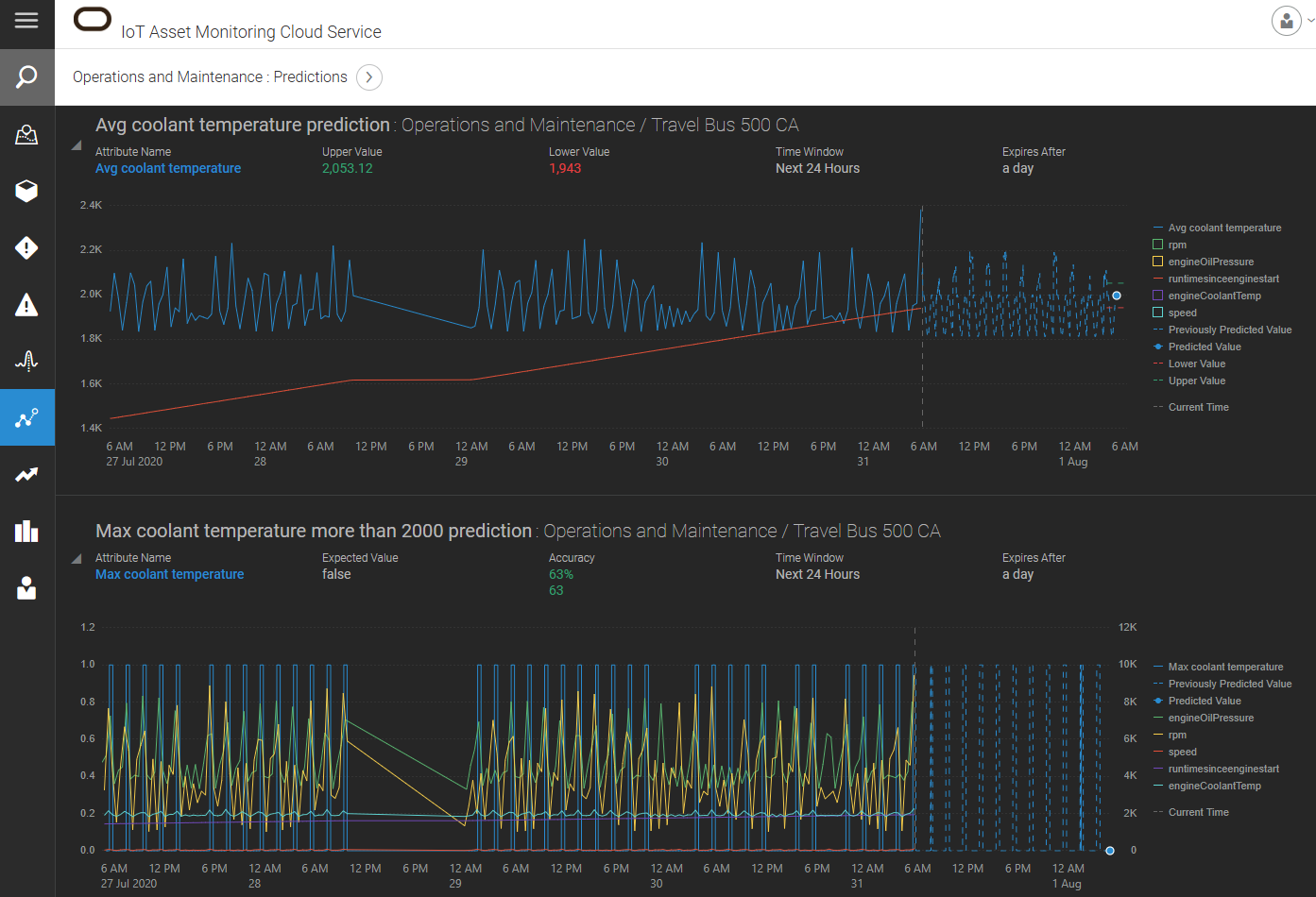

The following image shows some predictions for the organization in the Operations Center view. Predictions for different assets are shown on the same page.

Use the breadcrumbs in the Operations Center to change your context within the organization. You can filter your view to a group, subgroup, or individual asset.
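The effect of narrowing the context can be pictured as a filter over prediction records. The sketch below is purely illustrative: the `Prediction` record, its fields, and `filter_predictions` are hypothetical and do not reflect the product's data model or API.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Prediction:
    """Hypothetical prediction record tagged with its place in the hierarchy."""
    asset: str
    organization: str
    group: Optional[str]
    subgroup: Optional[str]
    message: str


def filter_predictions(predictions: List[Prediction],
                       organization: str,
                       group: Optional[str] = None,
                       subgroup: Optional[str] = None,
                       asset: Optional[str] = None) -> List[Prediction]:
    """Keep predictions that match every context level that was specified.

    Levels left as None are not filtered on, so passing only an
    organization returns predictions for all of its assets.
    """
    return [
        p for p in predictions
        if p.organization == organization
        and (group is None or p.group == group)
        and (subgroup is None or p.subgroup == subgroup)
        and (asset is None or p.asset == asset)
    ]


# Hypothetical sample data.
ALL_PREDICTIONS = [
    Prediction("Forklift-1", "Org-A", "Warehouse", "Dock-1", "Fuel level low"),
    Prediction("Forklift-2", "Org-A", "Warehouse", "Dock-2", "Temperature high"),
    Prediction("Pump-7", "Org-A", "Plant", None, "Vibration high"),
]

org_view = filter_predictions(ALL_PREDICTIONS, "Org-A")
group_view = filter_predictions(ALL_PREDICTIONS, "Org-A", group="Warehouse")
asset_view = filter_predictions(ALL_PREDICTIONS, "Org-A", asset="Pump-7")
```

With this sample data, the organization-level view contains all three predictions, the group-level view contains the two forklift predictions, and the asset-level view contains only the pump prediction.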

Note:

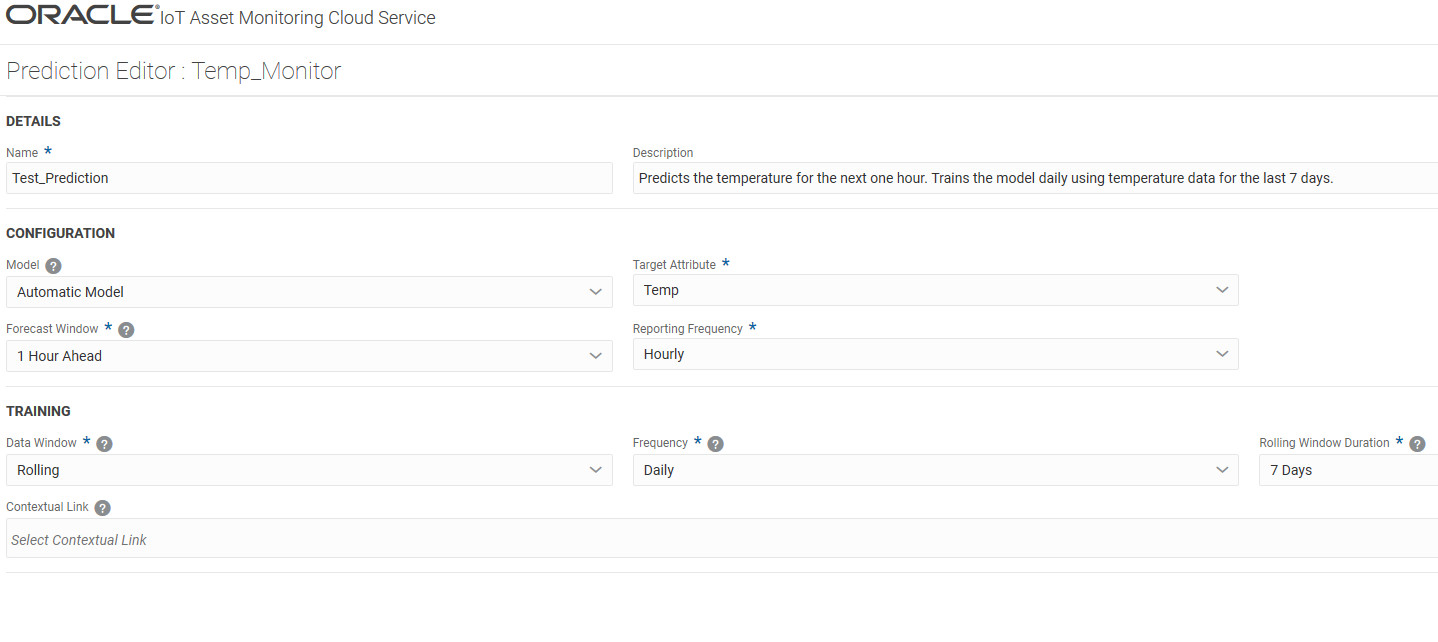

If you are using simulated sensor data to test your predictions, refer to the sections that describe the data characteristics of simulated data.

Create a Prediction

Create a prediction to identify risks to your assets.

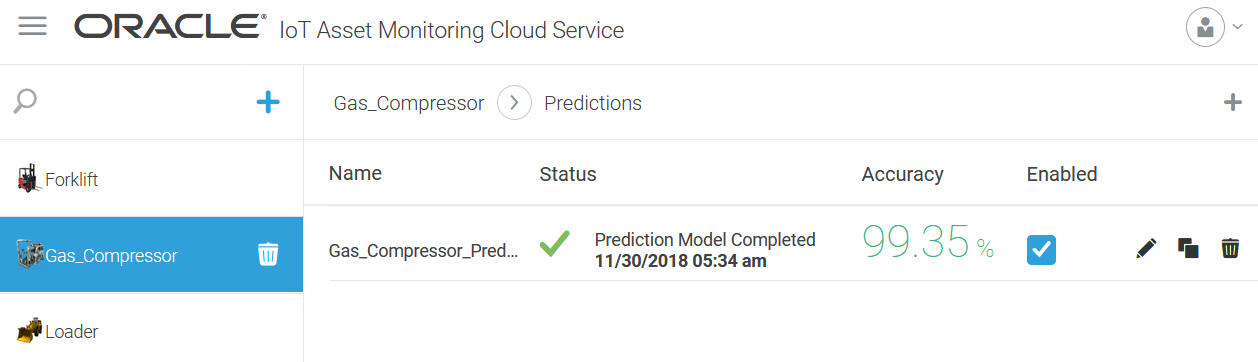

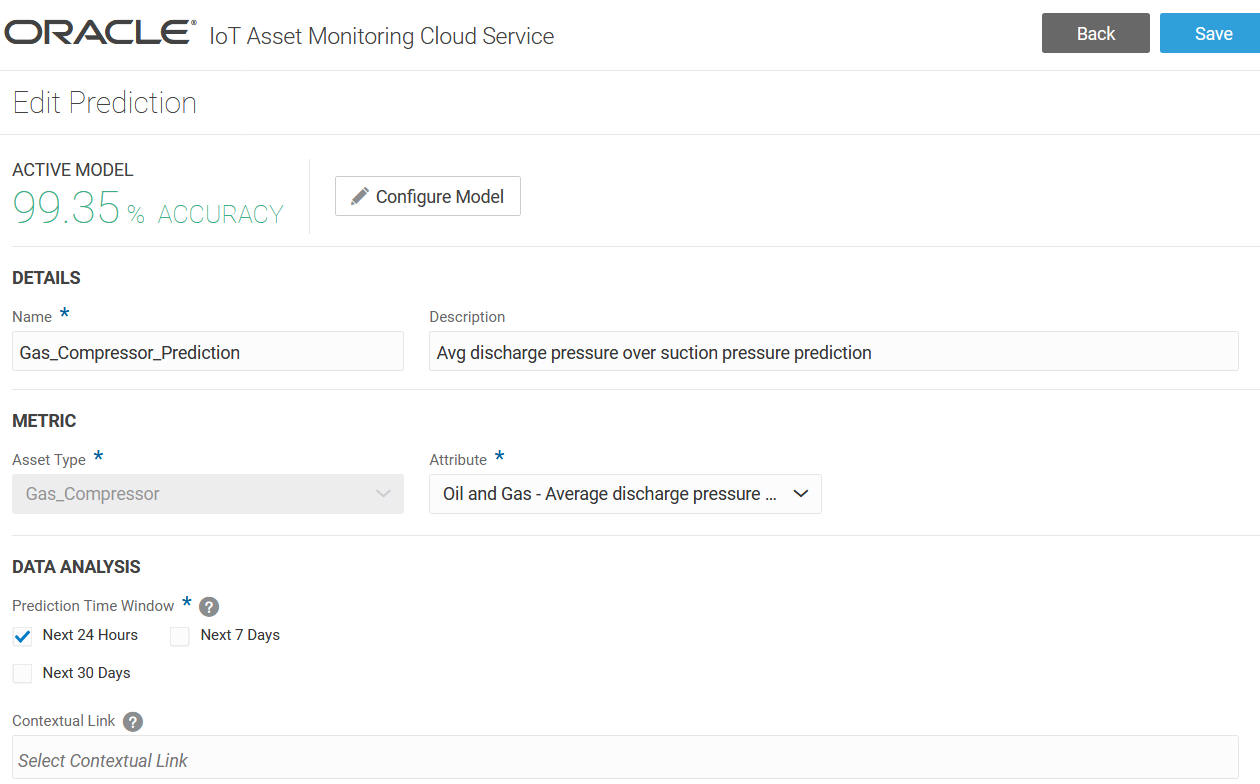

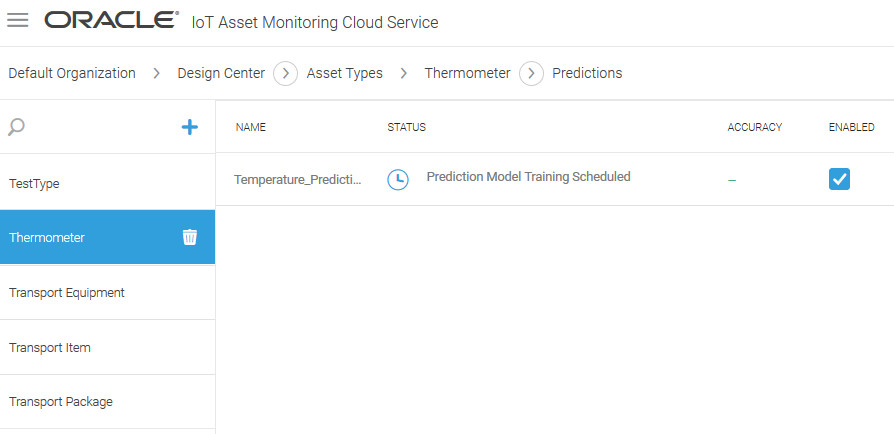

The application reports completed model trainings along with their timestamps. If training fails, the application includes pertinent information about the failure. For example, the statistical properties of the chosen training data set might not be suitable for predictions. The application also notifies the Asset Manager about failures through the Feedback Center.

The application also reports skipped trainings, along with the reason each training was skipped. For example, the system may be waiting to accumulate the minimum amount of data required for successful training.

You can enable or disable a prediction from within the Prediction Editor. If a prediction has been disabled by the system, a relevant message appears inside the Editor. The message also appears on the Predictions page in Operations Center.

Create a Prediction Using an Externally Trained Model

If you have a PMML file containing your externally trained model, you can use the PMML file to score your prediction in Oracle IoT Asset Monitoring Cloud Service.