3 Oracle Key Vault Multi-Master Cluster Concepts

A multi-master cluster is a fully connected network of Oracle Key Vault servers called nodes.

- Oracle Key Vault Multi-Master Cluster Overview

The multi-master cluster nodes provide high availability, disaster recovery, load distribution, and geographic distribution to an Oracle Key Vault environment.

- Benefits of Oracle Key Vault Multi-Master Clustering

- Multi-Master Cluster Architecture

An Oracle Key Vault node can be a read-write or a read-only node operating in different modes. Nodes can also form a subgroup.

- Building and Managing a Multi-Master Cluster

You initialize a multi-master cluster using a single Oracle Key Vault server.

- Oracle Key Vault Multi-Master Cluster Deployment Scenarios

All multi-master cluster nodes can serve endpoints actively and independently.

- Multi-Master Cluster Features

Oracle Key Vault provides features that help with inconsistency resolution and name conflict resolution in clusters, and endpoint node scan lists.


3.1 Oracle Key Vault Multi-Master Cluster Overview

The multi-master cluster nodes provide high availability, disaster recovery, load distribution, and geographic distribution to an Oracle Key Vault environment.

An Oracle Key Vault multi-master cluster provides a mechanism to create read-write pairs of Oracle Key Vault nodes for maximum availability and reliability. You can add read-only Oracle Key Vault nodes to the cluster to provide even greater availability to endpoints that need Oracle wallets, encryption keys, Java keystores, certificates, credential files, and other objects.

An Oracle Key Vault multi-master cluster is an interconnected group of Oracle Key Vault nodes. Each node in the cluster is automatically configured to connect with all the other nodes in a fully connected network. The nodes can be geographically distributed. Oracle Key Vault endpoints can interact with any node in the cluster.

This configuration replicates data to all other nodes, reducing the risk of data loss. To prevent data loss, you must configure pairs of nodes, called read-write pairs, to enable bi-directional synchronous replication. With this configuration, an update to one node is replicated to the other node and confirmed there before the update is considered successful. Critical data can only be added or updated within the read-write pairs. All added or updated data is asynchronously replicated to the rest of the cluster.
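The commit rule for read-write pairs can be sketched in Python. This is a minimal, hypothetical model for illustration only, not Oracle Key Vault code or an Oracle Key Vault API: the `Node` class, its `write_critical` and `apply` methods, the `reachable` flag, and the `outbox` list standing in for asynchronous replication are all assumptions. It shows an update counting as committed only after the read-write peer confirms it, after which the update is queued for the rest of the cluster.

```python
from dataclasses import dataclass, field
from typing import List, Optional


class ReadOnlyRestrictedError(Exception):
    """Hypothetical error: critical data cannot be written on this node."""


@dataclass(eq=False)
class Node:
    name: str
    peer: Optional["Node"] = field(default=None, repr=False)
    reachable: bool = True
    data: dict = field(default_factory=dict)
    # Updates waiting for asynchronous replication: (target node, key, value).
    outbox: list = field(default_factory=list)

    def apply(self, key, value) -> bool:
        """Apply an update replicated from the peer; return True to confirm it."""
        if not self.reachable:
            return False
        self.data[key] = value
        return True

    def write_critical(self, key, value, cluster: List["Node"]) -> str:
        # Critical data can only be written on a node that has a read-write peer.
        if self.peer is None:
            raise ReadOnlyRestrictedError(f"{self.name} has no read-write peer")
        # Synchronous step: the peer must confirm before the update is committed.
        if not self.peer.apply(key, value):
            raise ReadOnlyRestrictedError(f"{self.name} cannot reach its peer")
        self.data[key] = value
        # Asynchronous step: queue the committed update for all other nodes.
        for node in cluster:
            if node is not self and node is not self.peer:
                self.outbox.append((node.name, key, value))
        return "committed"
```

In this sketch, a write on a node without a reachable peer is rejected rather than committed locally, which mirrors the read-only restricted mode described later in this chapter.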

After you have completed the upgrade process, every node in the Oracle Key Vault cluster must be at Oracle Key Vault release 18.1 or later, and within one release update of all other nodes. Any new Oracle Key Vault server that is to join the cluster must be at the same release level as the cluster.

The clocks on all the nodes of the cluster must be synchronized. Consequently, all nodes of the cluster must have the Network Time Protocol (NTP) settings enabled.

Every node in the cluster can serve endpoints actively and independently while maintaining an identical data set through continuous replication across the cluster. The smallest possible configuration is a two-node cluster, and the largest configuration can have up to 16 nodes, with several read-write pairs spread across several data centers.


3.2 Benefits of Oracle Key Vault Multi-Master Clustering

To ensure high availability for geographically distributed endpoints, Oracle Key Vault nodes that are deployed in different data centers operate in active-active multi-master cluster configurations to create and share keys. With an active-active configuration, there are no passive machines in the cluster, which allows for better resource utilization. An added benefit of the multi-master cluster configuration is load distribution. When multiple Oracle Key Vault nodes in multi-master configuration are deployed in a data center, they can actively share the key requests of the endpoint databases in that data center.

In a typical large-scale deployment, Oracle Key Vault must serve a large number of endpoints, possibly distributed across geographically distant data centers. A primary-standby configuration has the following limitations in such a deployment:

- A primary-standby configuration only has a single primary Oracle Key Vault server that can actively serve clients.

- If the server running in the standby role is unavailable, then the server running in the primary role is in read-only mode and does not allow any write operations.

- The primary-standby mode can support either high availability in the same data center or disaster recovery across data centers.

- If the persistent master encryption key cache is not enabled, then database downtime is unavoidable during maintenance windows.

The Oracle Key Vault multi-master cluster configuration addresses these limitations. You can geographically disperse nodes to provide simultaneous high availability and disaster recovery capability.

- Data compatibility between multi-master cluster nodes similar to a primary-standby deployment

Because all the nodes have an identical data set, endpoints can retrieve information from any node. In a cluster, the unavailability of an Oracle Key Vault node does not affect the operations of an endpoint. If a given node is unavailable, then the endpoint interacts transparently with another node in the cluster.

- Fault tolerance

Successfully enrolled clients transparently update their own list of available Oracle Key Vault nodes in the cluster. This enables clients to locate available nodes at any given time without additional intervention. As a result, unexpected node failures or network disruptions do not interrupt service to endpoints, as long as at least one operational Oracle Key Vault read-write pair remains accessible to the endpoint. If all read-write pairs are unavailable to an endpoint, but a read-only restricted node is available, then the endpoint can still invoke read-only operations.

- Zero data loss

Data that has been added or updated at a read-write node is immediately replicated to its read-write peer and must be confirmed at the peer to be considered committed. It is then distributed across the cluster. Therefore, data updates are considered successful only if they are guaranteed to exist on multiple servers.

- No passive machines in the system

A primary-standby configuration requires a passive standby server. The Oracle Key Vault multi-master cluster contains only active servers. This allows for better utilization of hardware.

- Scaling up and scaling down

You can add Oracle Key Vault nodes to the cluster or remove existing nodes from the cluster without interrupting Oracle Key Vault services to clients. This means that the number of nodes in the cluster can be increased or decreased as required to meet the expected workload.

- Maintenance

Whenever hardware or software maintenance is required, Oracle Key Vault nodes can leave the cluster and return to it after maintenance. The remaining nodes continue to serve the clients. Properly planned maintenance does not cause service downtime, so service to endpoints is not interrupted.


3.3 Multi-Master Cluster Architecture

An Oracle Key Vault node can be a read-write or a read-only node operating in different modes. Nodes can also form a subgroup.

- Oracle Key Vault Cluster Nodes

An Oracle Key Vault node is an Oracle Key Vault server that operates as a member of a multi-master cluster.

- Cluster Node Limitations

Limitations to cluster nodes depend on whether the node is asynchronous or synchronous.

- Cluster Subgroup

A cluster subgroup is a group of one or more nodes of the cluster.

- Critical Data in Oracle Key Vault

Oracle Key Vault stores critical data that endpoints need in order to operate.

- Oracle Key Vault Read-Write Nodes

A read-write node is a node in which critical data can be added or updated using the Oracle Key Vault or endpoint software.

- Oracle Key Vault Read-Only Nodes

In a read-only node, users can add or update non-critical data but not add or update critical data.

- Cluster Node Mode Types

Oracle Key Vault supports two modes for cluster nodes: read-only restricted mode and read-write mode.

- Operations Permitted on Cluster Nodes in Different Modes

In an Oracle Key Vault multi-master cluster, operations are available or restricted based on the node and the operating mode of the node.


3.3.1 Oracle Key Vault Cluster Nodes

An Oracle Key Vault node is an Oracle Key Vault server that operates as a member of a multi-master cluster.

To configure an Oracle Key Vault server to operate as a member of the cluster, you must convert it to be a multi-master cluster node. The process is referred to as node induction. You initiate induction on the Cluster Management page of the Oracle Key Vault management console.

On induction, Oracle Key Vault modifies the Cluster tab to enable management, monitoring, and conflict resolution capabilities on the management console of the node. Cluster-specific features of the management console, such as cluster settings, audit replication, naming resolution, cluster alerts, and so on, are enabled as well.

The Primary-Standby Configuration page is not available on the Oracle Key Vault management console of a node. A node cannot have a passive standby server.

A node runs additional services to enable it to communicate with the other nodes of the cluster. Endpoints enrolled from a node are made aware of the cluster topology.

Each node in the cluster has a user-allocated node identifier. The node identifier must be unique in the cluster.


3.3.2 Cluster Node Limitations

Limitations to cluster nodes depend on whether the node is asynchronous or synchronous.

The nodes of an Oracle Key Vault multi-master cluster replicate data asynchronously between them. The only exception is replication to a node's read-write peer, which is synchronous. Asynchronous replication gives rise to the following limitations.

The IP addresses of a node in the cluster are static and cannot be changed after the node joins the cluster. If you want the node to have a different IP address, then delete the node from the cluster, and either add a new node with the correct IP address, or re-image the deleted node using the correct IP address before adding it back to the cluster.

You can perform one cluster change operation (such as adding, disabling, or deleting a node) at a time.

Node IDs are unique across the cluster. You must ensure that the node ID you assign during the induction process is unique.

An Oracle Key Vault cluster makes a best-effort attempt to prevent users from performing unsupported actions, such as removing a node ID that a controller node must have access to during the induction process. Similarly, a multi-master cluster prevents users from adding a second read-write peer to a node that already has one.


3.3.3 Cluster Subgroup

A cluster subgroup is a group of one or more nodes of the cluster.

A cluster can be conceptually divided into one or more cluster subgroups.

The node is assigned to a subgroup when you add the node to the multi-master cluster. The assignment cannot be changed for the life of the node. A node's cluster subgroup assignment is a property of the individual node, and members of a read-write pair may be in different cluster subgroups.

A cluster subgroup represents the notion of endpoint affinity. A node's cluster subgroup assignment is used to set the search order in the endpoint's node scan list. Nodes in the same cluster subgroup as the node where the endpoint was added are considered local to the endpoint. The nodes within an endpoint's local subgroup are scanned first, before communicating with nodes that are not in the local subgroup.
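The scan-order rule can be illustrated with a small Python sketch. The `ClusterNode` type, the `node_scan_list` and `first_available` functions, and the `is_up` callback are hypothetical names for illustration, not part of Oracle Key Vault or its endpoint software. The sketch assumes that the relative order of nodes within a subgroup is not significant and simply preserves the input order.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass(frozen=True)
class ClusterNode:
    name: str
    subgroup: str


def node_scan_list(nodes: List[ClusterNode], home: ClusterNode) -> List[ClusterNode]:
    """Order nodes so that those in the home node's subgroup are scanned first.

    `home` is the node from which the endpoint was added; nodes sharing its
    subgroup are treated as local to the endpoint.
    """
    local = [n for n in nodes if n.subgroup == home.subgroup]
    remote = [n for n in nodes if n.subgroup != home.subgroup]
    return local + remote


def first_available(scan_list: List[ClusterNode],
                    is_up: Callable[[ClusterNode], bool]) -> Optional[ClusterNode]:
    """Return the first reachable node in scan order, or None if none respond."""
    for node in scan_list:
        if is_up(node):
            return node
    return None


nodes = [ClusterNode("N1", "dc-a"), ClusterNode("N2", "dc-b"),
         ClusterNode("N3", "dc-a"), ClusterNode("N4", "dc-b")]
order = node_scan_list(nodes, home=nodes[1])  # endpoint added from N2
print([n.name for n in order])  # ['N2', 'N4', 'N1', 'N3']
```

If N2 is down, `first_available(order, is_up)` falls back to N4, the other local node, before trying nodes in the remote subgroup.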

The cluster topology can change when you add nodes to or remove nodes from the cluster. Nodes can also be added to or removed from the local cluster subgroup. Each endpoint receives updates to this information in the response message for any operation that the endpoint initiates. The endpoint's updated node scan list is also sent to the endpoint periodically, even if the cluster topology has not changed, to compensate for any lost messages.


3.3.4 Critical Data in Oracle Key Vault

Oracle Key Vault stores critical data that endpoints need in order to operate.

The loss of this information can result in the loss of data on the endpoint. Endpoint encryption keys, certificates, and similar security objects that Oracle Key Vault manages are examples of critical data in Oracle Key Vault. Critical data must be preserved in the event of an Oracle Key Vault server failure to ensure endpoint recovery and continued operations.

Oracle Key Vault data that can be re-created or discarded after an Oracle Key Vault server failure is non-critical data. Cluster configuration settings, alert settings, email settings, and key sharing between virtual wallets are examples of non-critical data.


3.3.5 Oracle Key Vault Read-Write Nodes

A read-write node is a node in which critical data can be added or updated using the Oracle Key Vault or endpoint software.

The critical data that is added or updated can be data such as keys, wallet contents, and certificates.

Oracle Key Vault read-write nodes always exist in pairs. Each node in a read-write pair can accept updates to critical and non-critical data, and these updates are immediately replicated to the other member of the pair, called its read-write peer. Each read-write peer is a member of one, and only one, read-write pair in the cluster. Replication between read-write peers is bi-directional and synchronous. Replication to all nodes other than a node's read-write peer is asynchronous.

A node can be a member of, at most, one read-write pair. A node can have only one read-write peer. A node becomes a member of a read-write pair, and therefore a read-write node, during the induction process. A read-write node reverts to being a read-only node when its read-write peer is deleted, at which time it can form a new read-write pair.

A read-write node operates in read-write mode when it can successfully replicate to its read-write peer and when both peers are active. A read-write node is temporarily placed in read-only restricted mode when it is unable to replicate to its read-write peer or when its read-write peer is disabled.

An Oracle Key Vault multi-master cluster requires at least one read-write pair to be fully operational. It can have a maximum of 8 read-write pairs.


3.3.6 Oracle Key Vault Read-Only Nodes

In a read-only node, users can add or update non-critical data but not add or update critical data.

Critical data is updated only through replication from other nodes.

A read-only node is not a member of a read-write pair and does not have an active read-write peer.

A read-only node can induct a new server into a multi-master cluster. The new node can be another read-only node. However, a read-only node becomes a read-write node if it inducts another node as its read-write peer.

The first node in the cluster is a read-only node. Read-only nodes are used to expand the cluster. A multi-master cluster does not need to have any read-only nodes. A multi-master cluster with only read-only nodes is not ideal because no useful critical data can be added to such a multi-master cluster.


3.3.7 Cluster Node Mode Types

Oracle Key Vault supports two modes for cluster nodes: read-only restricted mode and read-write mode.

- Read-only restricted mode: In this mode, only non-critical data can be updated or added to the node. Critical data can be updated or added only through replication. A node is in read-only restricted mode in two situations:

  - The node is a read-only node and does not yet have a read-write peer.

  - The node is part of a read-write pair, but it has lost communication with its read-write peer, or a node has failed. When one of the two nodes is non-operational, the remaining node is placed in read-only restricted mode. When the read-write node is again able to communicate with its read-write peer, it reverts from read-only restricted mode to read-write mode.

- Read-write mode: This mode enables both critical and non-critical data to be written to the node. A read-write node should always operate in read-write mode.
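The two modes can be summarized in a small Python sketch, assuming the conditions given in this section and in the description of read-write nodes. `Mode`, `node_mode`, and `may_write` are hypothetical names used only for illustration; Oracle Key Vault determines a node's mode internally and reports it on the Cluster tab.

```python
from enum import Enum


class Mode(Enum):
    READ_WRITE = "read-write"
    READ_ONLY_RESTRICTED = "read-only restricted"


def node_mode(has_peer: bool, peer_active: bool, can_replicate: bool) -> Mode:
    """A node is in read-write mode only if it has a read-write peer, the peer
    is active, and replication to the peer succeeds; otherwise it is in
    read-only restricted mode."""
    if has_peer and peer_active and can_replicate:
        return Mode.READ_WRITE
    return Mode.READ_ONLY_RESTRICTED


def may_write(mode: Mode, critical: bool) -> bool:
    """Non-critical data can be written in either mode; critical data only in
    read-write mode (otherwise it arrives only through replication)."""
    return mode is Mode.READ_WRITE or not critical


# A read-only node with no peer, and a paired node that lost its peer:
print(node_mode(False, False, False))  # Mode.READ_ONLY_RESTRICTED
print(node_mode(True, True, False))    # Mode.READ_ONLY_RESTRICTED
```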

You can find the mode of a cluster node on the Monitoring page of the Cluster tab in that node's management console. The Cluster tab of any node's management console displays the modes of all nodes in the cluster.


3.3.8 Operations Permitted on Cluster Nodes in Different Modes

In an Oracle Key Vault multi-master cluster, operations are available or restricted based on the node and the operating mode of the node.


3.4 Building and Managing a Multi-Master Cluster

You initialize a multi-master cluster using a single Oracle Key Vault server.

- About Building and Managing a Multi-Master Cluster

After the initial cluster is created in the Oracle Key Vault server, you can add the different types of nodes that you need for the cluster.

- Creation of the Initial Node in a Multi-Master Cluster

The initial node in a multi-master cluster must follow certain requirements before being made the initial node.

- Expansion of a Multi-Master Cluster

After you initialize the cluster, you can expand it by adding up to 15 more nodes, as either read-write pairs or read-only nodes.

- Migration to the Cluster from an Existing Deployment

You can migrate an existing Oracle Key Vault deployment to a multi-master cluster node.


3.4.1 About Building and Managing a Multi-Master Cluster

After the initial cluster is created in the Oracle Key Vault server, you can add the different types of nodes that you need for the cluster.

The Oracle Key Vault server from which you create the cluster seeds the cluster data and becomes the first cluster node, which is called the initial node. The cluster expands when you induct additional Oracle Key Vault servers and add them as read-write nodes or as simple read-only nodes.

A multi-master cluster can contain a minimum of 2 nodes and a maximum of 16 nodes.
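The documented size limits, together with the read-write pair rules from the architecture section, can be expressed as a hypothetical topology check in Python. `check_topology` and the dictionary layout (each node name mapped to its read-write peer, or `None` for a read-only node) are assumptions for illustration; Oracle Key Vault enforces its own limits during induction. Because each node can have at most one read-write peer, a 16-node maximum also caps the cluster at 8 read-write pairs.

```python
from typing import Dict, List, Optional

MAX_NODES = 16  # documented maximum cluster size


def check_topology(peers: Dict[str, Optional[str]]) -> List[str]:
    """Return a list of problems; an empty list means the topology is valid.

    `peers` maps each node name to its read-write peer name, or to None
    for a read-only node. A dict key can hold only one peer, so the
    "at most one read-write peer per node" rule is built into the layout.
    """
    problems = []
    if len(peers) > MAX_NODES:
        problems.append(f"{len(peers)} nodes exceeds the maximum of {MAX_NODES}")
    for node, peer in peers.items():
        if peer is None:
            continue  # read-only node
        if peer == node:
            problems.append(f"{node} cannot be its own read-write peer")
        elif peers.get(peer) != node:
            # Pairing must be mutual: if A's peer is B, B's peer must be A.
            problems.append(f"{node} -> {peer} is not a symmetric read-write pair")
    pairs = {frozenset((n, p)) for n, p in peers.items() if p is not None and p != n}
    if not pairs:
        problems.append("no read-write pair: critical data cannot be added")
    return problems


print(check_topology({"N1": "N2", "N2": "N1", "N3": None}))  # []
```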


3.4.2 Creation of the Initial Node in a Multi-Master Cluster

The initial node in a multi-master cluster must follow certain requirements before being made the initial node.

You create a multi-master cluster by converting a single Oracle Key Vault server to become the initial node. The Oracle Key Vault server can be a freshly installed Oracle Key Vault server, or it can already be in service with existing data. A standalone server or the primary server of a primary-standby configuration can be converted to the initial node of a cluster.

Before you use the primary server of a primary-standby configuration, you must unpair the primary-standby configuration. For a primary-standby configuration, you can use either of the following methods to migrate to a cluster:

- Method 1:

  - Back up the servers.

  - Upgrade both the primary and standby servers to release 18.1.

  - Unpair the paired primary and standby servers. (Before you unpair the servers, see Oracle Key Vault Release Notes for known issues regarding the unpair process.)

  - Convert the primary server to be the first node of the cluster.

- Method 2:

  - Back up the servers.

  - Unpair the paired primary and standby servers.

  - Upgrade the former primary server to release 18.1.

  - Convert the primary server to be the first node of the cluster.

The initial node is special in that it provides all of the data with which the cluster is initialized. This happens only once, when the cluster is created. The data provided by the initial node includes, but is not limited to, the following components:

- Certificates, keys, wallets, and other security objects

- Users and groups

- Endpoint information

- Audits

- Reports

All other nodes added after the initial node must be created from freshly installed Oracle Key Vault servers.

The cluster name is chosen when the initial node is created. Once this name is chosen, you cannot change the cluster name.

The cluster subgroup of the initial node is also configured when the initial node is created and it cannot be changed. You must configure an Oracle Key Vault server that is converted to the initial node to use a valid Network Time Protocol (NTP) setting before you begin the conversion. The initial node always starts as a read-only node in read-only restricted mode.

To create the cluster, you select the Add Node button on the Cluster tab of the Oracle Key Vault Management console.


3.4.3 Expansion of a Multi-Master Cluster

After you initialize the cluster, you can expand it by adding up to 15 more nodes, as either read-write pairs or read-only nodes.

- About the Expansion of a Multi-Master Cluster

Node induction is the process of configuring an Oracle Key Vault server to operate as a multi-master cluster node.

- Management of Cluster Reconfiguration Changes Using a Controller Node

A controller node is the node that controls or manages a cluster reconfiguration change, such as adding, enabling, disabling, or removing nodes.

- Addition of a Candidate Node to the Multi-Master Cluster

A freshly installed Oracle Key Vault server that is being added to a cluster is called a candidate node.

- Addition of More Nodes to a Multi-Master Cluster

You add nodes one at a time, first as a single read-only node, and then later as read-write paired nodes.


3.4.3.1 About the Expansion of a Multi-Master Cluster

Node induction is the process of configuring an Oracle Key Vault server to operate as a multi-master cluster node.

A controller node inducts into the cluster an Oracle Key Vault server that has been converted to a candidate node.

To expand a multi-master cluster, you use the induction process found on the Cluster tab of the Oracle Key Vault Management console. Nodes added to the Oracle Key Vault multi-master cluster are initialized with the current cluster data. You can add nodes either as read-write peers, or as read-only nodes.


3.4.3.2 Management of Cluster Reconfiguration Changes Using a Controller Node

A controller node is the node that controls or manages a cluster reconfiguration change, such as adding, enabling, disabling, or removing nodes.

A node is only a controller node during the life of the change. During induction, the controller node provides the server certificate and the data that is used to initialize the candidate node. Another node can be the controller node for a subsequent cluster change. One controller node can only control one cluster configuration change at a time. Oracle Key Vault does not permit multiple cluster operations at the same time.

Oracle recommends that you perform one cluster operation at a time. Each concurrent operation will have its own controller node.

The following table shows the roles of the controller node and the controlled node for each cluster configuration operation.

| Cluster Configuration Operation | Controller Node | Node Being Controlled |
|---|---|---|
| Induction as the first node | Any server | The controller node itself |
| Induction as a read-only node | Any node | Any server |
| Induction as a read-write node | Any node that does not have a read-write peer | Any server |
| Disable a node | Any node in the cluster | Any node in the cluster |
| Enable a node | Only the disabled node itself (a node cannot re-enable any other node) | The disabled node |
| Delete a node | Any node in the cluster | Any other node in the cluster |
| Force Delete a node | Any node in the cluster | Any other node in the cluster |
| Manage inbound replication | Any node in the cluster | The node itself |
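The table can be read as a permission check. The following Python sketch encodes each row; the operation strings, `NodeState`, and `may_control` are hypothetical and do not correspond to an Oracle Key Vault API. A server that is not yet a cluster node is represented simply by a name that is absent from the `cluster` dictionary.

```python
from dataclasses import dataclass
from typing import Dict, Optional

OPERATIONS = {"induct_first", "induct_read_only", "induct_read_write", "disable",
              "enable", "delete", "force_delete", "manage_inbound_replication"}


@dataclass
class NodeState:
    peer: Optional[str] = None  # read-write peer name, or None
    disabled: bool = False


def may_control(op: str, controller: str, target: str,
                cluster: Dict[str, NodeState]) -> bool:
    """Return True if `controller` may perform `op` on `target` (one table row each)."""
    if op not in OPERATIONS:
        raise ValueError(f"unknown operation: {op}")
    if op == "induct_first":
        # Any server may create the cluster; it controls its own induction.
        return not cluster and target == controller
    if controller not in cluster:
        return False  # every other operation is driven by an existing node
    node = cluster[controller]
    if op == "induct_read_only":
        return target not in cluster  # target is any (non-member) server
    if op == "induct_read_write":
        return target not in cluster and node.peer is None
    if op == "disable":
        return target in cluster
    if op == "enable":
        return target == controller and node.disabled  # only self re-enable
    if op in ("delete", "force_delete"):
        return target in cluster and target != controller
    return target == controller  # manage_inbound_replication
```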


3.4.3.3 Addition of a Candidate Node to the Multi-Master Cluster

A freshly installed Oracle Key Vault server that is being added to a cluster is called a candidate node.

In the process of adding a server to a cluster, Oracle Key Vault converts the server to a candidate node before it becomes a node of the cluster. To induct an Oracle Key Vault server into a cluster, you must provide necessary information, such as the controller certificate, the server IP address, and the recovery passphrase, so that the new candidate can communicate successfully and securely with the controller node.

You can convert an Oracle Key Vault server to be a candidate node by using the Cluster tab of the Oracle Key Vault Management console.


3.4.3.4 Addition of More Nodes to a Multi-Master Cluster

You add nodes one at a time, first as a single read-only node, and then later as read-write paired nodes.

When an Oracle Key Vault multi-master cluster is first created, and only contains one node, that initial node is a read-only node. After you have created the initial node, you can induct additional read-only nodes or a read-write peer. After these nodes have been added, you can further add read-only nodes or add a read-write peer.

Because the initial node is in read-only restricted mode and no critical data can be added to it, Oracle recommends that you induct a second node to form a read-write pair with the first node. You should expand the cluster to have read-write pairs so that both critical and non-critical data can be added to the read-write nodes. Read-only nodes can help with load balancing or operation continuity during maintenance operations.

The general process for adding nodes to a cluster is to add one node at a time, and then pair these so that they become read-write pairs:

- Add the initial node (for example, N1). N1 is a read-only node.

- Add the second node, N2. N2 will be in read-only restricted mode during the induction process.

If you are adding N2 as the read-write peer of N1, then both N1 and N2 will become read-write nodes when N2 is added to the cluster. Otherwise, N1 and N2 will remain read-only nodes after you add N2 to the cluster. If you want to add N2 as the read-write peer of N1, then you must set Add Candidate Node as Read-Write Peer to Yes on N1 during the induction process. If you do not want N2 to be paired with N1, then set Add Candidate Node as Read-Write Peer to No. This example assumes that N2 will be made a read-write peer of N1.

- Add a third node, N3, which must be a read-only node if N1 and N2 were made read-write peers, because the other two nodes in the cluster already are read-write peers.

In fact, you can add multiple read-only nodes to this cluster, but Oracle recommends that you not do this, because when write operations take place, the few read-write nodes in the cluster will be overloaded. For optimum performance and load balancing, you should have more read-write pairs.

- To create a second read-write pair for the cluster, when you add the next node (N4), set Add Candidate Node as Read-Write Peer to Yes to add node N4 to be paired with node N3.

Node N4 must be added from N3 to make the second read-write pair, because N3 is the only node without a read-write peer at this point. After you complete this step, the cluster has two read-write pairs: the N1-N2 pair and the N3-N4 pair.

- To create the next pairing, add the next read-only node (for example, N5), followed by node N6.

Be sure to set Add Candidate Node as Read-Write Peer to Yes when you add N6. Node N6 must be added from N5 because, at this point, N5 is the only node without a read-write peer. When you complete this step, there will be three read-write pairs in the cluster: the N1-N2 pair, the N3-N4 pair, and the N5-N6 pair.
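The induction sequence above can be replayed with a small Python model. It tracks only read-write pairing; the `induct` and `read_write_pairs` functions and the dictionary that maps each node to its read-write peer (or `None`) are hypothetical illustrations, not Oracle Key Vault interfaces. The model applies the rule that only a controller without a read-write peer can add the candidate as its peer.

```python
from typing import Dict, List, Optional, Tuple


def induct(cluster: Dict[str, Optional[str]], controller: str,
           candidate: str, as_read_write_peer: bool) -> None:
    """Add `candidate` to `cluster` from `controller`.

    Setting `as_read_write_peer` models answering Yes to
    "Add Candidate Node as Read-Write Peer".
    """
    if controller not in cluster:
        raise ValueError(f"{controller} is not a cluster node")
    if candidate in cluster:
        raise ValueError(f"{candidate} is already a cluster node")
    if as_read_write_peer:
        if cluster[controller] is not None:
            raise ValueError(f"{controller} already has a read-write peer")
        cluster[controller] = candidate
        cluster[candidate] = controller
    else:
        cluster[candidate] = None  # joins as a read-only node


def read_write_pairs(cluster: Dict[str, Optional[str]]) -> List[Tuple[str, ...]]:
    """Return each read-write pair once, in sorted order."""
    return sorted({tuple(sorted((a, b))) for a, b in cluster.items() if b})


cluster = {"N1": None}              # initial node: read-only
induct(cluster, "N1", "N2", True)   # N1 and N2 become read-write peers
induct(cluster, "N1", "N3", False)  # N1 already has a peer, so N3 is read-only
induct(cluster, "N3", "N4", True)   # N3 is the only node without a peer
induct(cluster, "N1", "N5", False)  # any node can add a read-only node
induct(cluster, "N5", "N6", True)
print(read_write_pairs(cluster))    # [('N1', 'N2'), ('N3', 'N4'), ('N5', 'N6')]
```

Trying to add a peer from a node that is already paired, for example `induct(cluster, "N2", "N7", True)`, raises `ValueError` in this model.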

A freshly installed Oracle Key Vault server at the same release as the other nodes in the cluster (release 18.1 or later) is converted to a candidate node. You should ensure that the candidate has Network Time Protocol (NTP) configured.

Any node in the cluster can be the controller node, provided that no other cluster change operations are in progress. The candidate and the controller node exchange information that enables the controller node to ascertain the viability of induction. The controller node then ships data to the candidate node, and induction replicates the cluster data set to the candidate node. After a successful induction, you can configure the node to use the cluster-wide configuration settings. A cluster data set includes, but is not limited to, the following components:

- Certificates, keys, wallets, and other security objects

- Users and user groups

- Endpoint and endpoint group information

- Audit data

- Cluster name and cluster node details

- Cluster settings

The controller node assigns the node ID and the cluster subgroup for the candidate node. You cannot change these later. If the controller node provides an existing cluster subgroup during induction, then the candidate node becomes part of that subgroup. If the controller node provides the name of a cluster subgroup that does not exist, then the cluster subgroup is created as part of the induction process and the candidate node is added to it. You can use subgroups to group endpoints by location. For example, all endpoints in data center A can be in one subgroup, and all endpoints in data center B can be in another subgroup. Endpoints that are in the same subgroup prioritize connecting to the nodes in that subgroup before connecting to nodes in other subgroups.

A controller node that is a read-write node in read-write mode or read-only restricted mode can only add read-only nodes. A controller node that is a read-only node can add one candidate node as its read-write peer to form a read-write pair. A node becomes a member of a read-write pair, and therefore a read-write node, during the induction process. A controller node that is a read-only node can add additional nodes as read-only nodes, which can subsequently be used to form a new read-write pair.

A read-write node can become a read-only node if its read-write peer is deleted. This read-only node can be used to form another read-write pair.

Note the following:

- If the controller is a member of an existing read-write pair, then the node being added is inducted as a read-only node.

- If the controller is not a member of an existing read-write pair, then the node being added can be a read-only node or it can become the read-write peer to the controller node.


3.4.4 Migration to the Cluster from an Existing Deployment

You can migrate an existing Oracle Key Vault deployment to a multi-master cluster node.

- Conversion of an Oracle Key Vault Standalone Server to a Multi-Master Cluster

You can migrate a standalone Oracle Key Vault deployment that is at an older release to a multi-master deployment.

- Conversion from a Primary-Standby Server to a Multi-Master Cluster

You can migrate an Oracle Key Vault primary-standby deployment that is at an earlier release than 18.1 to a multi-master deployment.


3.4.4.1 Conversion of an Oracle Key Vault Standalone Server to a Multi-Master Cluster

You can migrate a standalone Oracle Key Vault deployment that is at an older release to a multi-master deployment.

First, you must upgrade the server to Oracle Key Vault release 18.1. After you complete the upgrade, you can convert the server to an initial node.

If your Oracle Key Vault server deployment is already at release 18.1, then you can directly convert it to an initial node.

The initial node will retain all the data of the existing Oracle Key Vault standalone deployment. After you create the initial node of the cluster, you can add more nodes to this cluster as necessary.

3.4.4.2 Conversion from a Primary-Standby Server to a Multi-Master Cluster

You can migrate an Oracle Key Vault primary-standby deployment that is at an earlier release than 18.1 to a multi-master deployment.

First, you must unpair the primary-standby server configuration. Then you should upgrade the unpaired former primary server to an Oracle Key Vault 18.1 server. After you have upgraded the former primary server to Oracle Key Vault release 18.1, you can convert it to an initial node.

If you have Oracle Key Vault release 18.1 in a primary-standby deployment, you must also unpair it before you can move it to a multi-master cluster. Then, you can directly convert the former primary server to an initial node.

Before you perform this kind of migration, back up the servers in the primary-standby deployment.
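The migration paths in the two preceding sections reduce to a short checklist. The following Python sketch encodes them as an illustration only; `migration_steps()` and the step wording are hypothetical, and releases are represented as `(major, minor)` tuples by assumption.

```python
from typing import List, Tuple

# Release to which an older server is upgraded before conversion (from the text above).
MIN_RELEASE: Tuple[int, int] = (18, 1)


def migration_steps(deployment: str, release: Tuple[int, int]) -> List[str]:
    """Hypothetical checklist for moving an existing deployment to a multi-master cluster."""
    if deployment not in ("standalone", "primary-standby"):
        raise ValueError(f"unsupported deployment type: {deployment!r}")
    server = "the server"
    steps: List[str] = []
    if deployment == "primary-standby":
        # A primary-standby deployment is always unpaired first, at any release.
        steps.append("back up the servers in the primary-standby deployment")
        steps.append("unpair the primary-standby configuration")
        server = "the former primary server"
    if release < MIN_RELEASE:
        steps.append(f"upgrade {server} to release 18.1")
    steps.append(f"convert {server} to an initial node")
    return steps


if __name__ == "__main__":
    for step in migration_steps("primary-standby", (12, 2)):
        print("-", step)
```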

3.5 Oracle Key Vault Multi-Master Cluster Deployment Scenarios

All multi-master cluster nodes can serve endpoints actively and independently.

They can do this while striving to maintain an identical cluster data set through continuous replication across the cluster. Deployment scenarios of the multi-master cluster can range from a small two-node cluster to large 16-node deployments spanning multiple data centers.

- Cluster Size and Availability in Deployments

In general, the availability of critical data to the endpoints increases with the size of the cluster.

- Two-Node Cluster Deployment

A single read-write pair formed with two Oracle Key Vault nodes is the simplest multi-master cluster.

- Mid-Size Cluster Across Two Data Centers Deployment

A two-data center configuration provides high availability, disaster recovery, and load distribution.


3.5.1 Cluster Size and Availability in Deployments

In general, the availability of critical data to the endpoints increases with the size of the cluster.

Maintenance such as patching or upgrading usually requires removing both nodes of a read-write pair from the cluster together. Although a two-node cluster provides better utilization, its endpoints experience downtime during such maintenance.

A two-node cluster is suitable for development and test environments where endpoint downtime is non-critical. For small deployments where critical data is added or updated infrequently or can be controlled, a three-node cluster is acceptable. Large-scale deployments under heavy load should deploy at least two read-write pairs to ensure endpoint continuity.

A three-node cluster with one read-write pair and one read-only node fares better than a two-node cluster because it provides endpoint continuity so long as no critical data is added or updated.

A four-node cluster with two read-write pairs provides continuity for all endpoint operations while one of the pairs is in maintenance.
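The maintenance trade-offs above can be checked with a simplified model. This Python sketch assumes, for illustration, that exactly one read-write pair is out for maintenance, that any remaining node can serve existing objects, and that critical data can only be added or updated while an intact read-write pair remains. The function and type names are hypothetical.

```python
from typing import NamedTuple


class Continuity(NamedTuple):
    reads: bool            # some node is still available to serve existing objects
    critical_writes: bool  # an intact read-write pair can still add or update critical data


def continuity_during_maintenance(rw_pairs: int, ro_nodes: int) -> Continuity:
    """Hypothetical model: one read-write pair leaves the cluster for maintenance."""
    if rw_pairs < 1:
        raise ValueError("the model assumes at least one read-write pair")
    remaining_pairs = rw_pairs - 1
    remaining_nodes = 2 * remaining_pairs + ro_nodes
    return Continuity(reads=remaining_nodes > 0,
                      critical_writes=remaining_pairs > 0)


if __name__ == "__main__":
    for label, pairs, ro in [("two-node", 1, 0), ("three-node", 1, 1), ("four-node", 2, 0)]:
        print(label, continuity_during_maintenance(pairs, ro))
```

In this model the two-node cluster keeps neither reads nor critical writes, the three-node cluster keeps reads only, and the four-node cluster keeps both, which matches the guidance above.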

Ideally, the cluster should consist of read-write pairs. If network latency or network interruptions across data centers are of little concern, then you should deploy read-write pairs across data centers. In case of a disaster in one data center, the keys are preserved in the read-write peer in the other data center. However, if network latency or interruptions are a concern, then you should place the read-write pair in the same data center. A disaster that results in the loss of a read-write pair may result in the loss of keys if it strikes before a newly created key has been replicated to the rest of the cluster.

3.5.2 Two-Node Cluster Deployment

A single read-write pair formed with two Oracle Key Vault nodes is the simplest multi-master cluster.

A two-node multi-master cluster looks similar to a standard primary-standby environment, in that there are only two nodes. The significant difference is that unlike the primary-standby configuration where the standby is passive, both nodes are active and can respond to endpoint requests at the same time.

A cluster made up of two read-only nodes is not useful because no critical data can be added to it. Using a two-node multi-master cluster provides the following advantages over a primary-standby environment:

- Both nodes can be actively queried and updated by endpoints unlike the primary-standby configuration where only the primary server can be queried.

- A multi-master cluster can be expanded to three or more nodes without downtime.

- If the nodes are in separate data centers, then endpoints can interact with local nodes, rather than reach across the network to the primary server node.

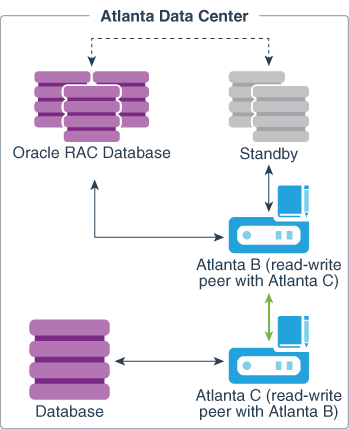

The following figure shows a two-node deployment in a single data center.

Figure 3-1 Oracle Key Vault Multi-Master Cluster Deployment in Single Data Center


In this scenario, the Atlanta Data Center hosts three databases, as follows:

- A single instance database

- A multi-instance Oracle Real Application Clusters (Oracle RAC) database

- A multi-instance standby database for the Oracle RAC database

There are two Oracle Key Vault servers, labeled Atlanta B and Atlanta C, that form a read-write pair of Oracle Key Vault nodes. These nodes are connected by a bidirectional line, indicating that they are read-write peers. Replication between read-write peers is synchronous: an update made on one peer is replicated to and verified on the other peer before the update is considered successful.

The Oracle RAC database and the associated standby database are enrolled with one of the Oracle Key Vault nodes, which would be at the head of the endpoint node scan lists for these databases. The figure does not show that each Oracle RAC instance would have a separate connection to the Oracle Key Vault server.

The single instance database is enrolled with the Atlanta C node. To illustrate this connection, the database is connected by an arrow to Oracle Key Vault Atlanta C, which would be at the head of the endpoint node scan list for this database.

If either Oracle Key Vault server is offline, for example for maintenance, all endpoints automatically connect to the other, available Oracle Key Vault server. For example, if Atlanta C is offline, its endpoints connect to Atlanta B.

3.5.3 Mid-Size Cluster Across Two Data Centers Deployment

A two-data center configuration provides high availability, disaster recovery, and load distribution.

At least two read-write pairs are required. A read-write pair is only created when you pair a new node with a read-only node. As a best practice, you can place the read-write peers in different data centers if you are concerned about disaster recovery, or in the same data center if you are concerned about network latency or network interruptions. Cluster nodes in the same data center should be part of the same cluster subgroup. You should enroll all endpoints in a data center with the nodes in that data center. This ensures that the nodes within a given data center are at the head of the endpoint node scan list for endpoints in that data center.

For a large deployment, Oracle recommends that you have a minimum of four Oracle Key Vault servers in a data center for high availability. This keeps additional servers available for key updates if one of the servers fails. When you register the database endpoints, balance these endpoints across the Oracle Key Vault servers. For example, if the data center has 1000 database endpoints to register and you have four Oracle Key Vault servers to accommodate them, then enroll 250 endpoints with each of the four servers.
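The endpoint balancing in this example is an even split. A minimal round-robin sketch in Python follows; the server names (`okv1` to `okv4`), the endpoint names, and `balance_endpoints()` are hypothetical placeholders, and actual enrollment is done through Oracle Key Vault, not through this function.

```python
from typing import Dict, List, Sequence


def balance_endpoints(endpoints: Sequence[str], servers: Sequence[str]) -> Dict[str, List[str]]:
    """Hypothetical planner: assign endpoints to servers round-robin.

    Each server receives an equal share; shares differ by at most one
    when the endpoint count does not divide evenly.
    """
    if not servers:
        raise ValueError("at least one server is required")
    assignment: Dict[str, List[str]] = {server: [] for server in servers}
    for i, endpoint in enumerate(endpoints):
        assignment[servers[i % len(servers)]].append(endpoint)
    return assignment


if __name__ == "__main__":
    endpoints = [f"db{n:04d}" for n in range(1000)]
    servers = ["okv1", "okv2", "okv3", "okv4"]
    plan = balance_endpoints(endpoints, servers)
    print({server: len(eps) for server, eps in plan.items()})
```

With 1000 endpoints and four servers, each server receives 250 endpoints.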

Each endpoint first contacts the Oracle Key Vault nodes in the local data center. If an outage causes all Oracle Key Vault nodes to be unavailable in one data center, then as long as connectivity to another data center is available, the endpoint node scan list will redirect the endpoints to available Oracle Key Vault nodes in another data center.

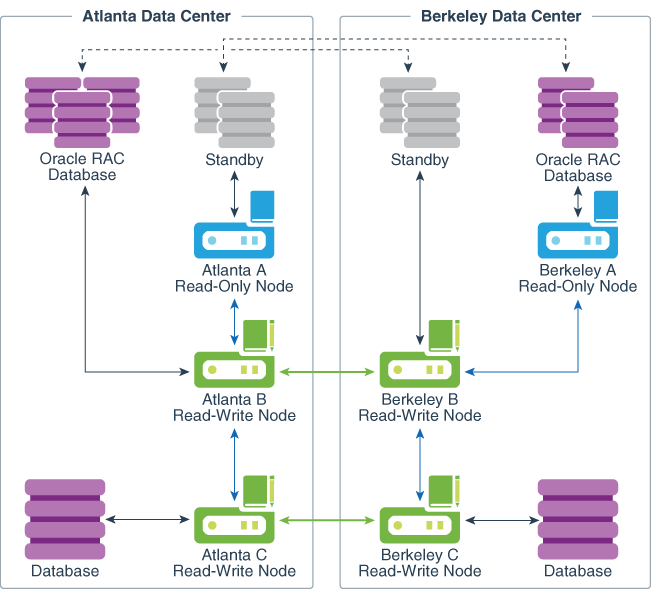

A possible deployment scenario with two data centers, each containing two read-write nodes paired with read-write nodes in the other data center, is shown in Figure 3-2. A data center can also host one or more read-only nodes as needed for load balancing, reliability, or expansion purposes. In the scenario described in the following figure, each data center hosts a single read-only node.

Figure 3-2 Oracle Key Vault Multi-Master Cluster Deployment across Two Data Centers


In this scenario, the Atlanta Data Center and the Berkeley Data Center each host three databases, as follows:

- A single instance database

- A multi-instance Oracle RAC database

- A multi-instance standby database for the Oracle RAC database

Atlanta Data Center and Berkeley Data Center each have three Oracle Key Vault nodes. These nodes are configured as follows:

- Atlanta A Read-Only Node and Berkeley A Read-Only Node are read-only restricted nodes.

- Atlanta B Read-Write Node is a read-write peer with Berkeley B Read-Write Node. These two nodes are within one cluster. Their connection is bidirectional and keeps these two nodes in sync at all times. You can extend the cluster with additional read-only nodes, up to a total of 16 nodes. All these nodes can communicate with each other.

- Atlanta C Read-Write Node is a read-write peer with Berkeley C Read-Write Node. The relationship between these two nodes operates in the same way as the relationship between the Atlanta B and Berkeley B nodes.

Every node connects to every other node. To maintain legibility, only some of these connections are shown, specifically:

- The read-write pair connection between Atlanta B Read-Write Node and Berkeley B Read-Write Node across the two data centers

- The read-write pair connection between Atlanta C Read-Write Node and Berkeley C Read-Write Node across the two data centers

- The regular connection between Atlanta B Read-Write Node and Atlanta C Read-Write Node in the Atlanta Data Center

- The regular connection between Berkeley B Read-Write Node and Berkeley C Read-Write Node in the Berkeley Data Center

- The regular connection between Atlanta A Read-Only Node and Atlanta B Read-Write Node in the Atlanta Data Center

- The regular connection between Berkeley A Read-Only Node and Berkeley B Read-Write Node in the Berkeley Data Center

The endpoint node scan list is unique to each endpoint and controls the order in which the endpoint tries to connect to Oracle Key Vault nodes. Each endpoint can connect to all Oracle Key Vault nodes. Preference is given to the nodes in the same cluster subgroup as the node where the endpoint was added, before the endpoint moves on to nodes in other cluster subgroups. Figure 3-2 shows the following connections, each of which indicates the first entry in that endpoint's node scan list:

- In Atlanta Data Center:

  - The Oracle RAC Database connects to Atlanta A Read-Only Node.

  - The standby server connects to Atlanta A Read-Only Node.

  - The database connects to Atlanta C Read-Write Node.

- In Berkeley Data Center:

  - The Oracle RAC Database connects to Berkeley A Read-Only Node.

  - The standby server connects to Berkeley B Read-Write Node.

  - The database connects to Berkeley C Read-Write Node.
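The ordering rule behind these first entries can be sketched in Python. This is an illustration under stated assumptions, not Oracle Key Vault's algorithm: the node where the endpoint was added goes first, then the other nodes in its cluster subgroup, then nodes in other subgroups; ties are broken alphabetically, which is an arbitrary choice; and node modes, which also influence the order, are not modeled. The subgroup names `atlanta` and `berkeley` and the function `build_scan_list()` are hypothetical.

```python
from typing import Dict, List

# Hypothetical layout of Figure 3-2: node name -> cluster subgroup.
NODES: Dict[str, str] = {
    "Atlanta A": "atlanta", "Atlanta B": "atlanta", "Atlanta C": "atlanta",
    "Berkeley A": "berkeley", "Berkeley B": "berkeley", "Berkeley C": "berkeley",
}


def build_scan_list(node_subgroups: Dict[str, str], enrolled_with: str) -> List[str]:
    """Hypothetical ordering: enrollment node, then its subgroup, then other subgroups."""
    home = node_subgroups[enrolled_with]
    local = sorted(n for n, sg in node_subgroups.items()
                   if sg == home and n != enrolled_with)
    remote = sorted(n for n, sg in node_subgroups.items() if sg != home)
    return [enrolled_with] + local + remote


if __name__ == "__main__":
    # The database in Atlanta Data Center connects first to Atlanta C.
    print(build_scan_list(NODES, "Atlanta C"))
```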

If Atlanta C Read-Write Node (a read-write peer with Berkeley C Read-Write Node) cannot be reached or does not have the necessary key, the database that connects to it in Atlanta Data Center connects to other Oracle Key Vault nodes to fetch the key.
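The failover behavior described above amounts to walking the endpoint node scan list until a node returns the key. The Python sketch below is hypothetical: `fetch_key()` and the `try_node` callback stand in for the endpoint's real communication with Oracle Key Vault nodes, which is not described here.

```python
from typing import Callable, Iterable, Optional, Tuple

# Hypothetical callback: returns the key, returns None if the node does not
# have it, or raises ConnectionError if the node cannot be reached.
TryNode = Callable[[str, str], Optional[bytes]]


def fetch_key(scan_list: Iterable[str], key_id: str, try_node: TryNode) -> Tuple[str, bytes]:
    """Walk the endpoint node scan list in order until some node returns the key."""
    for node in scan_list:
        try:
            key = try_node(node, key_id)
        except ConnectionError:
            continue  # node unreachable: move on to the next entry
        if key is not None:
            return node, key
        # Node reachable but without the key: also move on to the next entry.
    raise LookupError(f"no node in the scan list returned key {key_id!r}")


if __name__ == "__main__":
    def simulated_node(node: str, key_id: str) -> Optional[bytes]:
        if node == "Atlanta C":
            raise ConnectionError(node)
        return b"\x01\x02" if node == "Atlanta B" else None

    print(fetch_key(["Atlanta C", "Atlanta A", "Atlanta B"], "key-1", simulated_node))
```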

3.6 Multi-Master Cluster Features

Oracle Key Vault provides features that help with inconsistency resolution and name conflict resolution in clusters, and endpoint node scan lists.

- Cluster Inconsistency Resolution in a Multi-Master Cluster

Network outages can introduce inconsistency in data in a cluster, but when the outage is over, data is consistent again.

- Name Conflict Resolution in a Multi-Master Cluster

Naming conflicts can arise when an object has the same name as another object in a different node.

- Endpoint Node Connection Lists (Endpoint Node Scan Lists)

An endpoint node scan list is a list of nodes to which the endpoint can connect.


3.6.1 Cluster Inconsistency Resolution in a Multi-Master Cluster

Network outages can introduce inconsistency in data in a cluster, but when the outage is over, data is consistent again.

A node can be disconnected from other nodes in the cluster voluntarily or involuntarily. When a node becomes available to the cluster after being voluntarily disconnected, any data changes made in the cluster in the meantime are replicated to the node. Network disruptions, power outages, and other failures can happen at any time to any Oracle Key Vault node, causing an involuntary disconnection from the other nodes in the cluster. Such failures interrupt the data replication processes within a multi-master cluster. Temporary failures do not always introduce inconsistency to a cluster. As soon as the problem is addressed, the data replication process resumes from the point at which it was halted. This ensures that even after disconnections, the affected Oracle Key Vault nodes eventually synchronize with the other nodes in the cluster.
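Resuming replication from the point at which it halted can be pictured as a per-target position in an ordered change log. This Python sketch is a conceptual model only; the `ReplicationLink` class and its fields are hypothetical and do not describe Oracle Key Vault's internal replication mechanism.

```python
from typing import List


class ReplicationLink:
    """Hypothetical model of one-way replication of a node's change log to another node."""

    def __init__(self, source_log: List[str]) -> None:
        self.source_log = source_log   # ordered changes recorded at the source node
        self.applied = 0               # how many of those changes the target has applied
        self.target: List[str] = []    # changes as applied at the target node
        self.connected = True

    def sync(self) -> int:
        """Apply every change after the last applied position; return how many were applied."""
        if not self.connected:
            return 0  # disconnected: replication pauses, and the position is kept
        pending = self.source_log[self.applied:]
        self.target.extend(pending)
        self.applied += len(pending)
        return len(pending)


if __name__ == "__main__":
    log = ["create wallet W1"]
    link = ReplicationLink(log)
    link.sync()
    link.connected = False                            # simulated outage
    log.extend(["upload key K1", "create endpoint E1"])
    link.sync()                                       # nothing replicates during the outage
    link.connected = True
    link.sync()                                       # resumes after "create wallet W1"
    print(link.target == log)
```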

Any change made on a read-write node is guaranteed to be replicated to its read-write peer. Therefore, even if one read-write node fails, the data is still available on at least one other node in the cluster.
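The guarantee for read-write peers can be modeled as an update that succeeds only after the peer applies and acknowledges it, followed by queuing for asynchronous replication to the rest of the cluster. This Python sketch is hypothetical (`PairedNode`, `write_critical()`, and `pair()` are illustrative names), and it assumes that a node whose peer is unreachable rejects critical updates, as a stand-in for read-only restricted mode.

```python
from typing import Dict, List, Optional, Tuple


class PairedNode:
    """Hypothetical read-write node; not Oracle Key Vault's actual replication code."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.data: Dict[str, str] = {}
        self.peer: Optional["PairedNode"] = None
        self.online = True
        # Changes queued for asynchronous replication to the rest of the cluster.
        self.outbound: List[Tuple[str, str]] = []

    def apply_from_peer(self, key: str, value: str) -> bool:
        """Apply a change sent by the peer; returning True serves as the acknowledgment."""
        if not self.online:
            return False
        self.data[key] = value
        return True

    def write_critical(self, key: str, value: str) -> None:
        """Succeed only after the read-write peer has applied and acknowledged the change."""
        if self.peer is None or not self.peer.apply_from_peer(key, value):
            # Assumed behavior: without an acknowledging peer, the update is rejected.
            raise RuntimeError(f"{self.name}: read-write peer unavailable, update rejected")
        self.data[key] = value
        self.outbound.append((key, value))


def pair(a: PairedNode, b: PairedNode) -> None:
    """Hypothetical helper that makes two nodes read-write peers."""
    a.peer, b.peer = b, a


if __name__ == "__main__":
    atlanta_b, berkeley_b = PairedNode("Atlanta B"), PairedNode("Berkeley B")
    pair(atlanta_b, berkeley_b)
    atlanta_b.write_critical("wallet/W1", "v1")
    print(berkeley_b.data, atlanta_b.outbound)
```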


3.6.2 Name Conflict Resolution in a Multi-Master Cluster

Naming conflicts can arise when an object has the same name as another object in a different node.

Users must specify names when creating virtual wallets, users, user groups, endpoints, and endpoint groups. A name conflict arises when two or more users create objects of the same type with the same name on different nodes before either object has been replicated. If the object has already been replicated to the other nodes, then the system prevents the creation of objects with duplicate names. However, replication in the Oracle Key Vault cluster is not instantaneous, so during the replication window (which can be on the order of seconds) another object with the same name may be created elsewhere in the cluster. If this happens, it becomes a name conflict. Name conflicts have obvious drawbacks. For example, the system cannot distinguish between references to two objects with duplicate names. Uniqueness of names is therefore enforced to avoid inconsistencies in the cluster. Object names must be unique within their object type, such as wallets, endpoint groups, user groups, and any other object type. For example, no two wallets may have the same name within the cluster. User names and endpoint names must not conflict.

While rare, a naming conflict can still arise. When this occurs, Oracle Key Vault detects the name conflict and raises an alert. Oracle Key Vault then appends _OKVxx (where xx is a node number) to the name of the conflicting object that was created later. You can choose to accept this suggested object name or rename the object.
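The renaming rule can be sketched as follows: among objects of the same type and name, the earliest one keeps its name and each later one is given a suggested name with the `_OKV` suffix. This Python sketch is illustrative only. `CreatedObject` and `suggest_renames()` are hypothetical, creation order is assumed to be decided by a timestamp, the two-digit zero-padded node number is an assumption about the `xx` format, and the rule that user names and endpoint names must not conflict is not modeled.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Set, Tuple


@dataclass(frozen=True)
class CreatedObject:
    """Hypothetical record of an object created on a node before replication."""
    obj_type: str      # for example "wallet" or "endpoint group"
    name: str
    node_id: int       # node on which the object was created
    created_at: float  # assumed creation timestamp used to order the objects


def suggest_renames(objects: Iterable[CreatedObject]) -> Dict[Tuple[str, str, int], str]:
    """Map each later duplicate (type, name, node) to a suggested _OKVxx name."""
    seen: Set[Tuple[str, str]] = set()
    suggestions: Dict[Tuple[str, str, int], str] = {}
    for obj in sorted(objects, key=lambda o: o.created_at):
        key = (obj.obj_type, obj.name)  # names must be unique within an object type
        if key in seen:
            # Assumed format: two-digit, zero-padded node number.
            suggestions[(obj.obj_type, obj.name, obj.node_id)] = f"{obj.name}_OKV{obj.node_id:02d}"
        else:
            seen.add(key)
    return suggestions


if __name__ == "__main__":
    created = [
        CreatedObject("wallet", "HR_WALLET", node_id=1, created_at=10.0),
        CreatedObject("wallet", "HR_WALLET", node_id=3, created_at=10.5),
        CreatedObject("endpoint group", "HR_WALLET", node_id=2, created_at=11.0),
    ]
    print(suggest_renames(created))
```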

To accept or change a conflicting object name, click the Cluster tab, then Conflict Resolution from the left navigation bar to see and resolve all conflicts.


3.6.3 Endpoint Node Connection Lists (Endpoint Node Scan Lists)

An endpoint node scan list is a list of nodes to which the endpoint can connect.

An endpoint connects to an Oracle Key Vault server or node to manage or access wallets, keys, certificates, and credentials.

In a standalone deployment, the endpoint node scan list has one entry. In a primary-standby configuration, the endpoint can connect to one of two nodes.

In an Oracle Key Vault multi-master cluster, the endpoint node scan list is the list of all the nodes in the cluster. It consists of a read-only node list and a read-write node list. Node subgroup assignments and node modes influence the order of nodes in the endpoint node scan list. The list is made available to the endpoint at the time of endpoint enrollment and is maintained automatically to reflect the available nodes in the cluster. The nodes track changes to the cluster and make them available to the endpoints. The following events trigger a change to the endpoint node scan list:

- A change of cluster size, for example due to node addition or node removal

- A change to the mode of the node, for example when a node in read-only restricted mode changes to read-write mode

- An hour has passed since the last endpoint update

The endpoint receives the updated scan list along with the node's response to the endpoint's next request. After the node sends the scan list, it marks the scan list as sent to that endpoint. However, a scan list that was sent to the endpoint and marked as sent on the node might not be applied at the endpoint. For this reason, the cluster periodically resends the scan list to the endpoint even if there are no changes to the cluster nodes or to their modes.
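The update and resend behavior can be modeled as node-side bookkeeping per endpoint. In this hypothetical Python sketch, `ScanListPublisher` bumps a version whenever a node is added or removed or a node's mode changes, attaches the scan list to the next response when the version differs from what was last sent, and resends it once an hour regardless. Node names and mode strings are placeholders, and scan list ordering is not modeled.

```python
import time
from typing import Dict, Optional, Tuple

RESEND_INTERVAL_SECONDS = 3600  # "an hour has passed since the last endpoint update"


class ScanListPublisher:
    """Hypothetical node-side bookkeeping for piggybacking scan lists on responses."""

    def __init__(self, node_modes: Dict[str, str]) -> None:
        self.node_modes = dict(node_modes)              # node name -> node mode
        self.version = 1                                # bumped whenever the list changes
        self.sent: Dict[str, Tuple[int, float]] = {}    # endpoint -> (version, time sent)

    def node_added(self, name: str, mode: str) -> None:
        self.node_modes[name] = mode
        self.version += 1

    def node_removed(self, name: str) -> None:
        if self.node_modes.pop(name, None) is not None:
            self.version += 1

    def mode_changed(self, name: str, mode: str) -> None:
        if self.node_modes[name] != mode:  # KeyError for an unknown node
            self.node_modes[name] = mode
            self.version += 1

    def respond(self, endpoint: str, now: Optional[float] = None) -> Dict[str, object]:
        """Build the response to an endpoint request, attaching the scan list when due."""
        now = time.time() if now is None else now
        last = self.sent.get(endpoint)
        due = (last is None
               or last[0] != self.version
               or now - last[1] >= RESEND_INTERVAL_SECONDS)
        response: Dict[str, object] = {"status": "ok"}
        if due:
            response["scan_list"] = sorted(self.node_modes)
            # Marked as sent even though the endpoint might not apply it;
            # the hourly resend covers that case.
            self.sent[endpoint] = (self.version, now)
        return response
```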
