2.6 AutoML UI

AutoML User Interface (AutoML UI) is an Oracle Machine Learning interface that provides no-code automated machine learning modeling. When you create and run an experiment in AutoML UI, it performs automated algorithm selection, feature selection, and model tuning, which improves productivity and can increase model accuracy and performance.

The following steps comprise a machine learning modeling workflow and are automated by the AutoML user interface:

- Algorithm Selection: Ranks algorithms likely to produce a more accurate model based on the dataset and its characteristics, and some predictive features of the dataset for each algorithm.

- Adaptive Sampling: Finds an appropriate data sample. The goal of this stage is to speed up Feature Selection and Model Tuning stages without degrading the model quality.

- Feature Selection: Selects a subset of features that are most predictive of the target. The goal of this stage is to reduce the number of features used in the later pipeline stages, especially during the model tuning stage, to speed up the pipeline without degrading predictive accuracy.

- Model Tuning: Aims at increasing individual algorithm model quality based on the selected metric for each of the shortlisted algorithms.

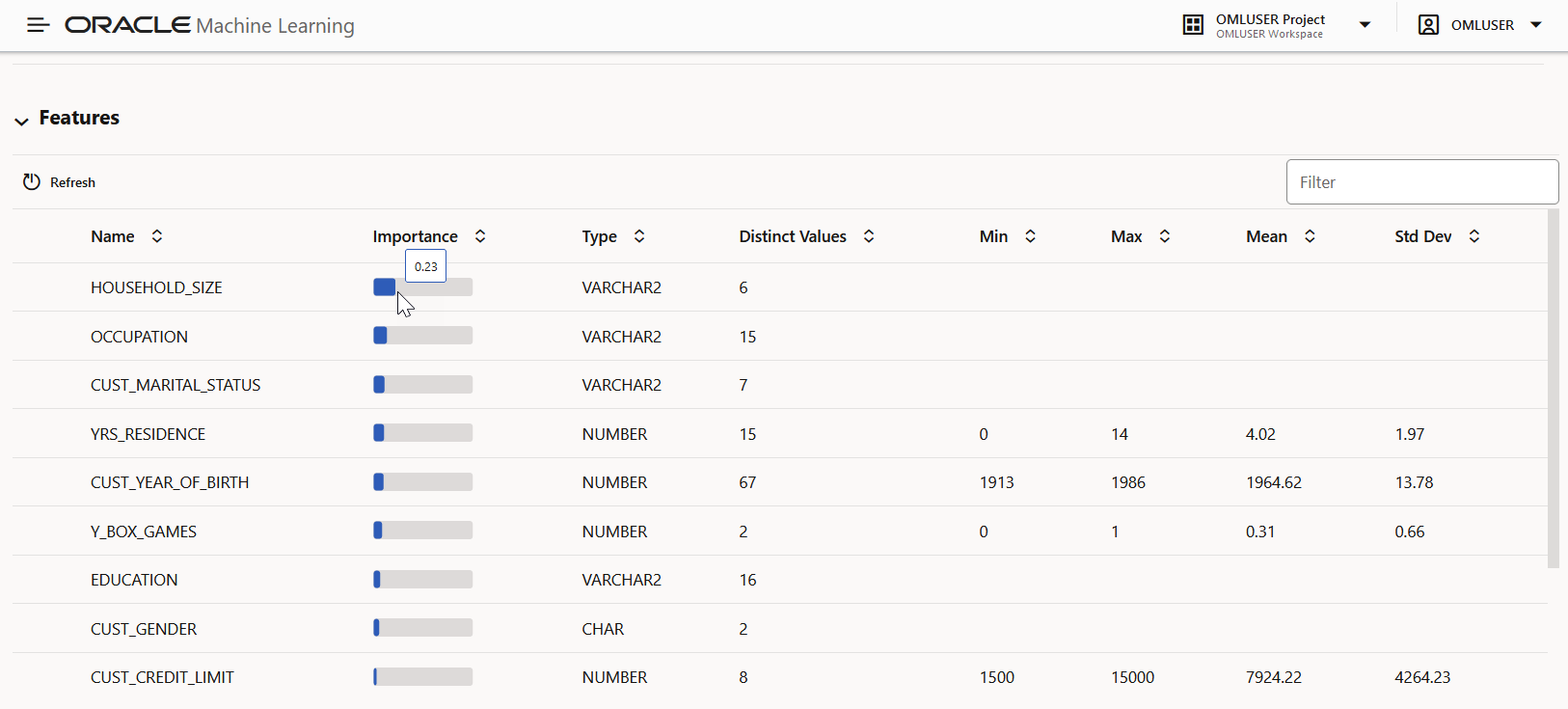

- Feature Prediction Impact: This is the final stage in the AutoML UI pipeline. Here, the impact of each input column on the predictions of the final tuned model is computed. The computed prediction impact provides insights into the behavior of the tuned AutoML model.

- Create machine learning models

- Deploy machine learning models

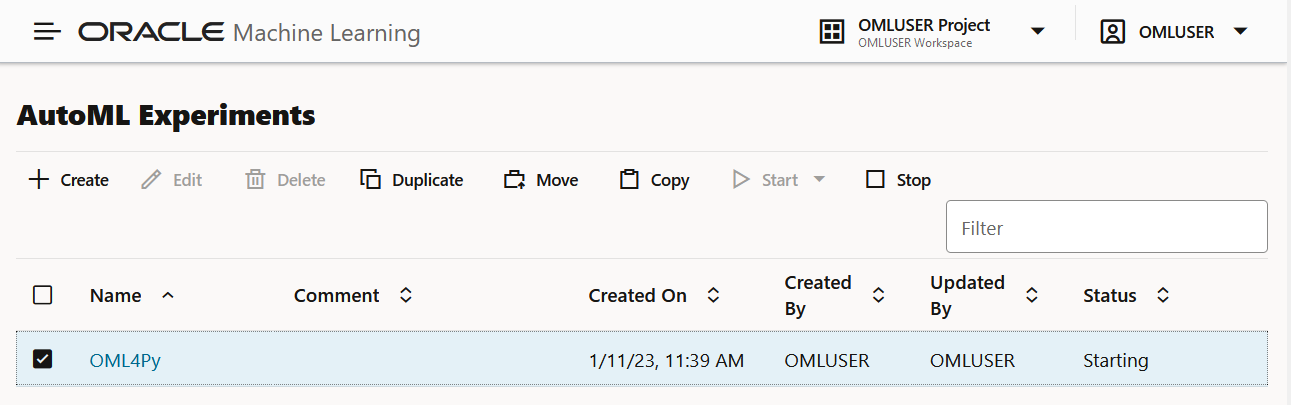

AutoML UI Experiments

When you create an experiment in AutoML UI, it automatically runs all the steps involved in the machine learning workflow. In the Experiments page, all the experiments that you have created are listed. To view any experiment details, click an experiment. Additionally, you can perform the following tasks:

- Create: Click Create to create a new AutoML UI experiment. The AutoML UI experiment that you create resides inside the project that you selected in the Project under the Workspace.

- Edit: Select any experiment that is listed here, and click Edit to edit the experiment definition.

- Delete: Select any experiment listed here, and click Delete to delete it. You cannot delete an experiment that is running. You must first stop the experiment to delete it.

- Duplicate: Select an experiment and click Duplicate to create a copy of it. The experiment is duplicated instantly and is in Ready status.

- Move: Select an experiment and click Move to move the experiment to a different project in the same or a different workspace. You must have either the Administrator or Developer privilege to move experiments across projects and workspaces.

Note: An experiment cannot be moved if it is in RUNNING, STOPPING or STARTING states, or if an experiment already exists in the target project by the same name.

- Copy: Select an experiment and click Copy to copy the experiment to another project in the same or different workspace.

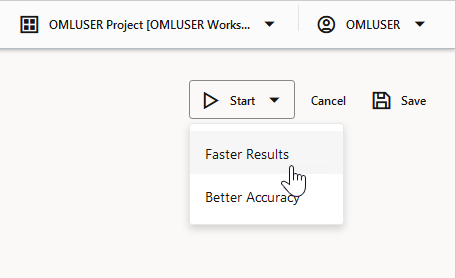

- Start: If you have created an experiment but have not run it, then click Start to run the experiment.

- Stop: Select an experiment that is running, and click Stop to stop the running of the experiment.
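The restrictions on deleting and moving experiments described in the tasks above can be summarized as simple state checks. The following is a minimal sketch only: the `Experiment` class and the `can_delete` and `can_move` helpers are hypothetical names used for illustration and are not part of any Oracle API. The state names are taken from the text.

```python
from dataclasses import dataclass

# States in which the text says an experiment cannot be moved.
BLOCKED_MOVE_STATES = {"RUNNING", "STOPPING", "STARTING"}


@dataclass
class Experiment:
    """Hypothetical stand-in for an AutoML UI experiment (not an Oracle API)."""
    name: str
    state: str  # for example "READY", "RUNNING", or "COMPLETED"


def can_delete(exp):
    # A running experiment must be stopped before it can be deleted.
    return exp.state != "RUNNING"


def can_move(exp, target_project_names):
    # A move is blocked while the experiment is starting, running, or stopping,
    # or when the target project already holds an experiment with the same name.
    if exp.state in BLOCKED_MOVE_STATES:
        return False
    return exp.name not in target_project_names


print(can_move(Experiment("churn_model", "READY"), {"sales_forecast"}))
```

Here `target_project_names` is assumed to be the set of experiment names that already exist in the destination project.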

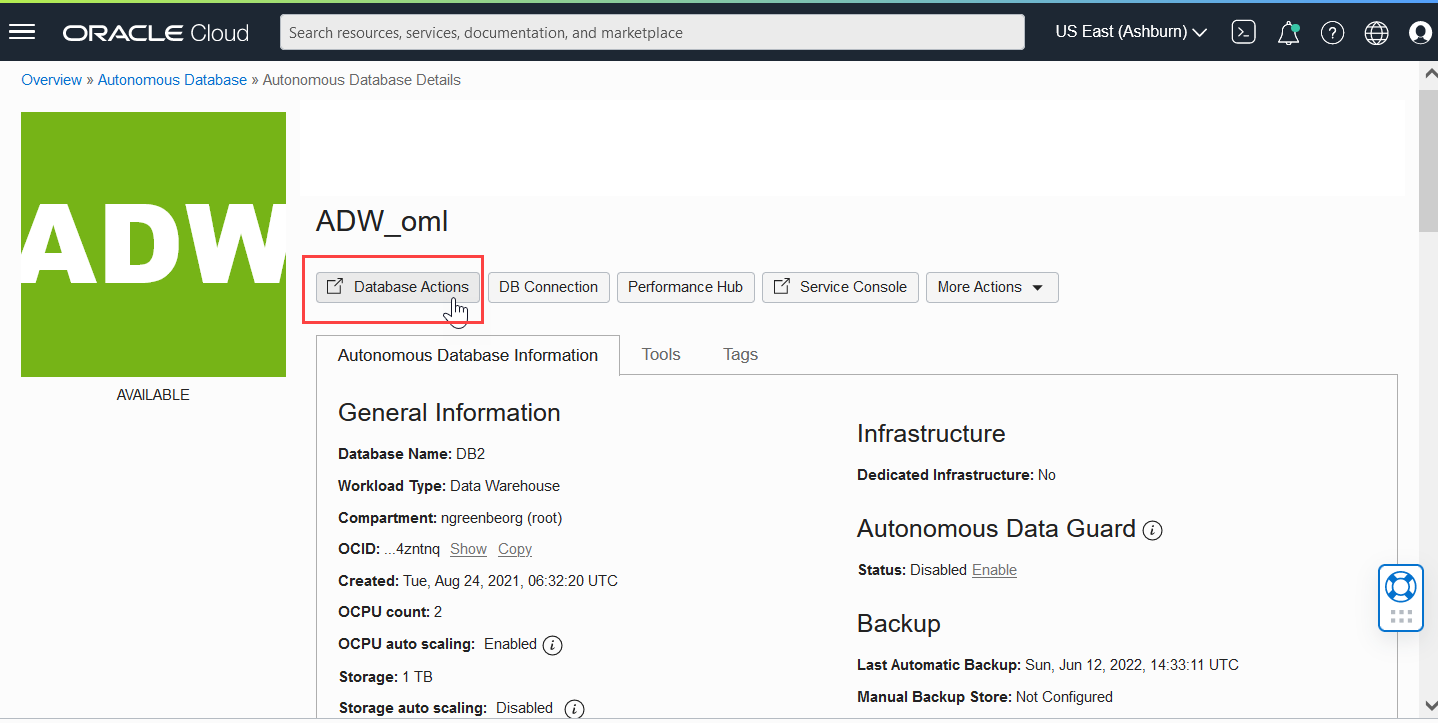

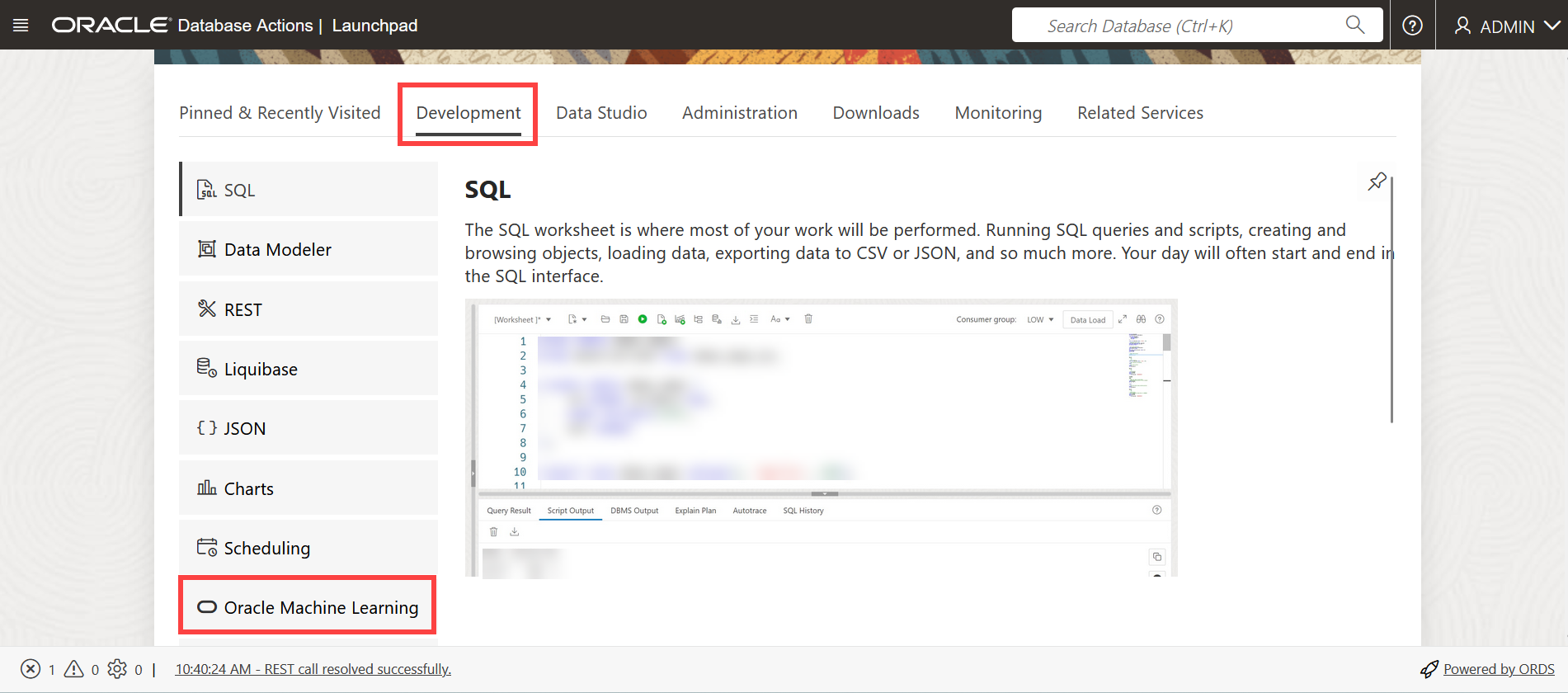

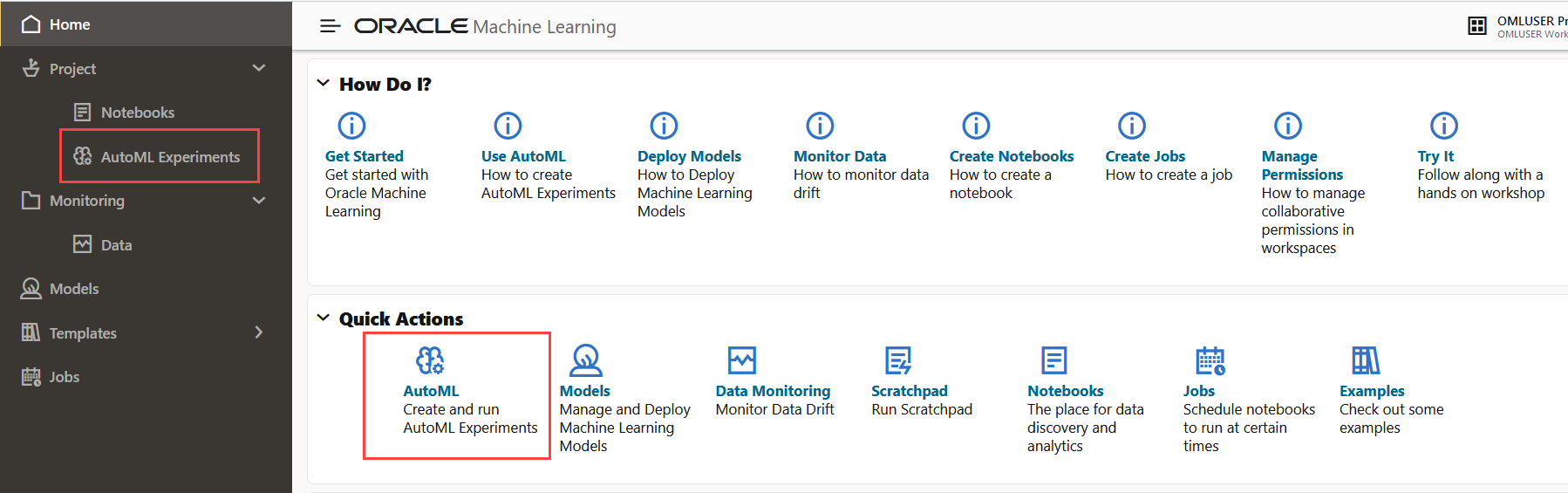

- Access AutoML UI

You can access AutoML UI from the Oracle Machine Learning UI home page.

- Create AutoML UI Experiment

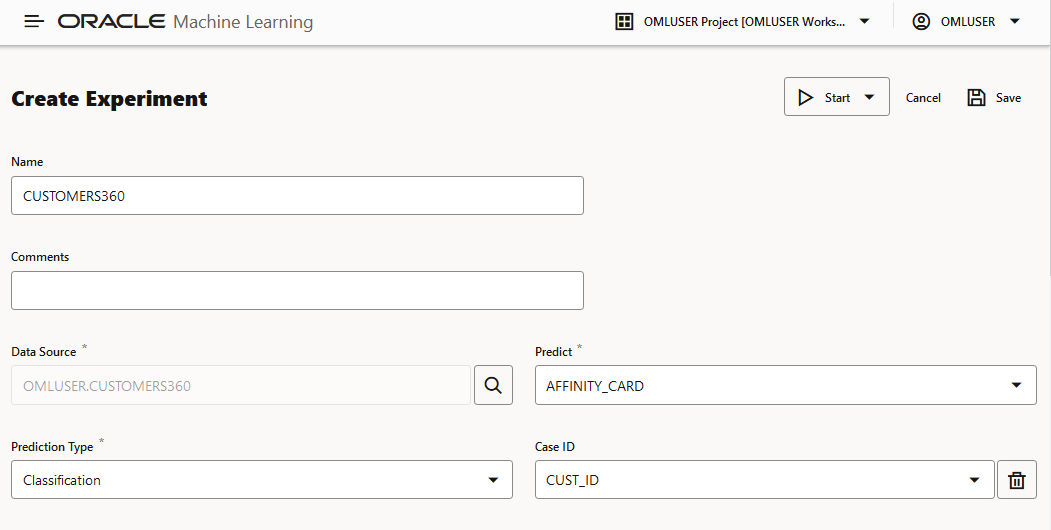

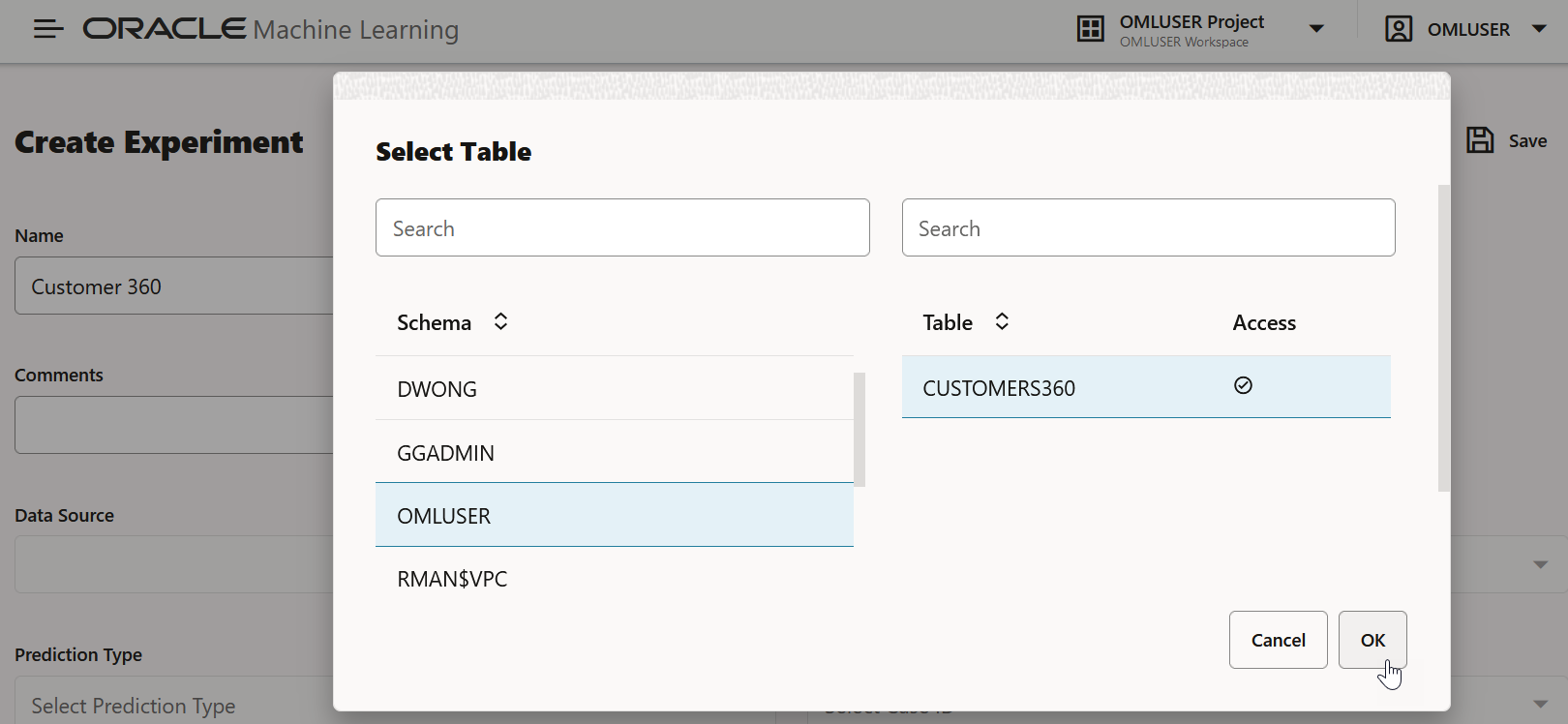

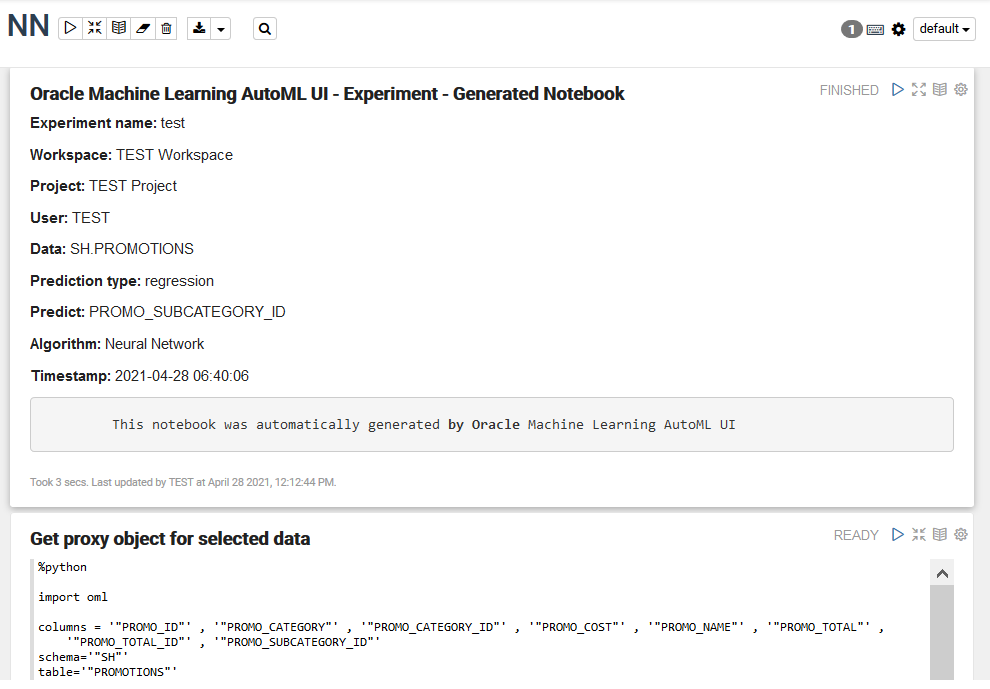

To use the Oracle Machine Learning AutoML UI, you start by creating an experiment. An experiment is a unit of work that minimally specifies the data source, prediction target, and prediction type. After an experiment runs successfully, it presents a list of machine learning models ranked by model quality according to the selected metric. You can select any of these models for deployment or to generate a notebook. The generated notebook contains Python code using OML4Py and the specific settings AutoML used to produce the model.

- View an Experiment

The AutoML UI Experiments page lists all the experiments that you have created. Each experiment is in one of the following stages: Completed, Running, or Ready.


2.6 Access AutoML UI

You can access AutoML UI from the Oracle Machine Learning UI home page.


2.6 Create AutoML UI Experiment

To use the Oracle Machine Learning AutoML UI, you start by creating an experiment. An experiment is a unit of work that minimally specifies the data source, prediction target, and prediction type. After an experiment runs successfully, it presents a list of machine learning models ranked by model quality according to the selected metric. You can select any of these models for deployment or to generate a notebook. The generated notebook contains Python code using OML4Py and the specific settings AutoML used to produce the model.

- Supported Data Types for AutoML UI Experiments

When creating an AutoML experiment, you must specify the data source and the target of the experiment. This topic lists the data types for Python and SQL that are supported by AutoML experiments.


2.6 Supported Data Types for AutoML UI Experiments

When creating an AutoML experiment, you must specify the data source and the target of the experiment. This topic lists the data types for Python and SQL that are supported by AutoML experiments.

Table 5-1 Supported Data Types by AutoML Experiments

| Data Types | SQL Data Types | Python Data Types |
|---|---|---|
| Numerical | | |
| Categorical | | |
| Unstructured Text | | |


2.6 View an Experiment

The AutoML UI Experiments page lists all the experiments that you have created. Each experiment is in one of the following stages: Completed, Running, or Ready.

To view an experiment, click the experiment name. The Experiment page displays the details of the selected experiment. It contains the following sections:

Edit Experiment

Note:

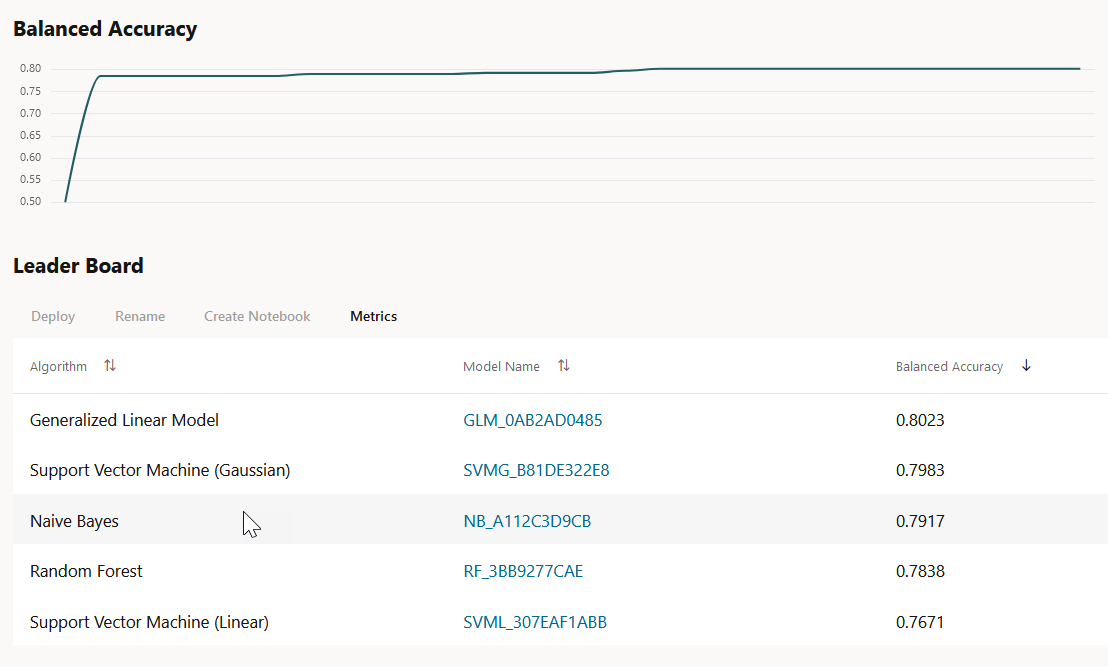

You cannot edit an experiment that is running.Metric Chart

The Model Metric Chart depicts the best metric value over time as the experiment runs. It shows improvement in accuracy as the running of the experiment progresses. The display name depends on the selected model metric when you create the experiment.
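The "best metric value over time" that the chart plots is a running maximum over the scores of the models evaluated so far. The following is a minimal sketch with made-up scores, assuming a metric where higher is better. It does not use any AutoML UI API.

```python
from itertools import accumulate

# Hypothetical metric values of successive candidate models (higher is better).
scores = [0.71, 0.69, 0.78, 0.75, 0.81]

# Running maximum: the best value seen so far after each candidate finishes.
best_so_far = list(accumulate(scores, max))

print(best_so_far)  # [0.71, 0.71, 0.78, 0.78, 0.81]
```

The resulting series never decreases, which is why the chart shows the metric either holding steady or improving as the experiment progresses.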

Leader Board

- View Model Details: Click the Model Name to view the details. The model details are displayed in the Model Details dialog box. You can click multiple models on the Leader Board and view their details simultaneously. The Model Details window depicts the following:

  - Prediction Impact: Displays the importance of the attributes in terms of the target prediction of the models.

  - Confusion Matrix: Displays the different combinations of actual and predicted values produced by the algorithm in a table. The confusion matrix serves as a performance measurement of the machine learning algorithm.

- Deploy: Select any model on the Leader Board and click Deploy to deploy the selected model. See Deploy Model.

- Rename: Click Rename to change the system-generated model name. The name must be alphanumeric (not exceeding 123 characters) and must not contain any blank spaces.

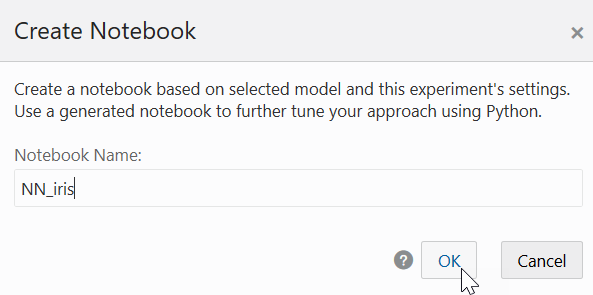

- Create Notebook: Select any model on the Leader Board and click Create Notebook to recreate the selected model from code. See Create Notebooks from AutoML UI Models.

- Metrics: Click Metrics to select additional metrics to display in the Leader Board. The additional metrics are:

  - For Classification:

    - Accuracy: Calculates the proportion of correctly classified cases, both Positive and Negative. For example, if there are a total of TP (True Positives)+TN (True Negatives) correctly classified cases out of TP+TN+FP+FN (True Positives+True Negatives+False Positives+False Negatives) cases, then the formula is:

      Accuracy = (TP+TN)/(TP+TN+FP+FN)

    - Balanced Accuracy: Evaluates how good a binary classifier is, computed as the average of the recall obtained on each class. It is especially useful when the classes are imbalanced, that is, when one of the two classes appears much more often than the other. This often happens in settings such as anomaly detection.

    - Recall: Calculates the proportion of actual Positives that are correctly classified.

    - Precision: Calculates the proportion of predicted Positives that are True Positives.

    - F1 Score: Combines precision and recall into a single number. The F1 score is the harmonic mean of precision and recall, calculated by the formula:

      F1-score = 2 × (precision × recall)/(precision + recall)

  - For Regression:

    - R2 (Default): A statistical measure that calculates how close the data are to the fitted regression line. In general, the higher the value of R-squared, the better the model fits your data. The value of R2 typically lies between 0 and 1 (it can be negative when the model fits worse than always predicting the mean), where:

      - 0 indicates that the model explains none of the variability of the response data around its mean.

      - 1 indicates that the model explains all the variability of the response data around its mean.

    - Negative Mean Squared Error: The mean of the squared differences between predicted and true targets, negated so that a higher value indicates a better model.

    - Negative Mean Absolute Error: The mean of the absolute differences between predicted and true targets, negated so that a higher value indicates a better model.

    - Negative Median Absolute Error: The median of the absolute differences between predicted and true targets, negated so that a higher value indicates a better model.
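The classification and regression metrics listed above can be reproduced in a few lines of plain Python, which may help when checking Leader Board values by hand. This is a sketch under stated assumptions, not the code that AutoML UI runs: the function names are hypothetical, the positive class is assumed to be labeled `1`, and the "Negative" regression metrics are assumed to be the negated error values so that higher is better. The sketch also assumes that no denominator is zero.

```python
from statistics import mean, median


def confusion_counts(y_true, y_pred, positive=1):
    """Count TP, TN, FP, FN for a binary problem (positive label assumed to be 1)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    return tp, tn, fp, fn


def classification_metrics(tp, tn, fp, fn):
    recall = tp / (tp + fn)        # actual Positives classified correctly
    specificity = tn / (tn + fp)   # actual Negatives classified correctly
    precision = tp / (tp + fp)     # predicted Positives that are True Positives
    return {
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "balanced_accuracy": (recall + specificity) / 2,  # mean per-class recall
        "recall": recall,
        "precision": precision,
        "f1": 2 * (precision * recall) / (precision + recall),
    }


def regression_metrics(y_true, y_pred):
    errors = [p - t for t, p in zip(y_true, y_pred)]
    y_mean = mean(y_true)
    ss_res = sum(e * e for e in errors)              # residual sum of squares
    ss_tot = sum((t - y_mean) ** 2 for t in y_true)  # total sum of squares
    return {
        "r2": 1 - ss_res / ss_tot,
        # Assumption: errors are negated so that higher values are better.
        "neg_mean_squared_error": -mean([e * e for e in errors]),
        "neg_mean_absolute_error": -mean([abs(e) for e in errors]),
        "neg_median_absolute_error": -median([abs(e) for e in errors]),
    }


# Made-up labels: 3 actual Positives and 5 actual Negatives.
counts = confusion_counts([1, 1, 1, 0, 0, 0, 0, 0], [1, 1, 0, 1, 0, 0, 0, 0])
cls = classification_metrics(*counts)
reg = regression_metrics([1.0, 2.0, 3.0, 4.0], [1.0, 2.0, 3.0, 6.0])
print(counts, cls, reg)
```

In the made-up classification data, the counts are TP=2, TN=4, FP=1, FN=1, so accuracy is 6/8 = 0.75 while balanced accuracy is lower because the smaller Positive class is predicted less reliably.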

Features

Feature importance values range from 0 to 1, with values closer to 1 being more important.

- Create Notebooks from AutoML UI Models

You can create notebooks using OML4Py code that will recreate the selected model using the same settings. It also illustrates how to score data using the model. This option is helpful if you want to use the code to re-create a similar machine learning model.


2.6 Create Notebooks from AutoML UI Models

You can create notebooks using OML4Py code that will recreate the selected model using the same settings. It also illustrates how to score data using the model. This option is helpful if you want to use the code to re-create a similar machine learning model.
