3.3 Clustering Use Case

A retail store has information about its customers' behavior and the purchases they make. Now with the available data, they would like you to analyze and identify if there are any similarities between the customers. Use Oracle Machine Learning to segment customers by finding clusters in the data set which can be then used to support targeted marketing campaigns to increase retail sales. In this use case, you will learn how to identify such segments using the k-Means algorithm.

Related Contents

Table 3-1 Related Contents

| Topic | Link |

|---|---|

| OML4Py GitHub Example | Clustering k-Means |

| About Clustering | About Clustering |

| Model Settings | About Model Settings |

| Shared Settings | Shared Settings |

| k-Means - Model Detail Views | Model Detail Views for k-Means |

Before you start your OML4Py use case journey, ensure that you have the following:

- Data Set

The data set used for this use case is from the SH schema. The SH schema can be readily accessed in Oracle Autonomous AI Database. For on-premises databases, the schema is installed during the installation or can be manually installed by downloading the scripts. See Installing the Sample Schemas.

- Database

Select or create database out of the following options:

- Get your FREE cloud account. Go to https://cloud.oracle.com/database and select Oracle AI Database Cloud Service (DBCS), or Oracle Autonomous AI Database. Create an account and create an instance. See Autonomous Database Quick Start Workshop.

- Download the latest version of Oracle AI Database (on premises).

- Machine Learning Tools

Depending on your database selection,

- Use OML Notebooks for Oracle Autonomous AI Database.

- Install and use Oracle SQL Developer connected to an on-premises database or DBCS. See Installing and Getting Started with SQL Developer.

- Other Requirements

Data Mining Privileges (this is automatically set for ADW). See System Privileges for Oracle Machine Learning for SQL.

- Load Data

Access the data set from the SH Schema and explore the data to understand the attributes. - Explore Data

Once the data is accessible, explore the data to understand and assess the quality of the data. At this stage assess the data to identify data types and noise in the data. Look for missing values and numeric outlier values. - Build Model

To evaluate a model's performance, it is common practice to split the data into training and test sets. This allows you to assess how well the model generalizes to unseen data. However, in unsupervised learning, such as clustering, there are no labels or predictors available to calculate accuracy or evaluate performance. As a result, you can use the entire dataset to build the model without the need to split it. Since there is no ground truth to compare the results against, the training-test split is neither applicable nor useful in unsupervised learning. - Evaluate

Evaluate a model by assessing its performance using various metrics and techniques to determine how effectively it generalizes to new, unseen data. This process involves comparing predictions to actual outcomes using metrics like accuracy, precision, recall, F1 score, or mean squared error, depending on the model type. The evaluation helps identify the model's strengths and weaknesses, guiding further improvement or tuning.

Related Topics

Parent topic: Use Cases

3.3.1 Load Data

Access the data set from the SH Schema and explore the data to understand the attributes.

Remember:

The data set used for this use case is from the SH schema. The SH schema can be readily accessed in Oracle Autonomous AI Database. For on-premises databases, the schema is installed during the installation or can be manually installed by downloading the scripts. See Installing the Sample Schemas.

- Access the data.

- Examine the various attributes or columns of the data set.

- Assess data quality (by exploring the data).

Access Data

You will use CUSTOMERS and

SUPPLEMENTARY_DEMOGRAPHICS table data from the SH schema.

Parent topic: Clustering Use Case

3.3.2 Explore Data

Once the data is accessible, explore the data to understand and assess the quality of the data. At this stage assess the data to identify data types and noise in the data. Look for missing values and numeric outlier values.

Assess Data Quality

To access database data from Python using OML4Py, you must first create a

oml.DataFrame proxy object in Python which represents a

database table, view, or query. Create a oml.DataFrame proxy object

for SUPPLEMENTARY_DEMOGRAPHICS and CUSTOMERS and then merge them by inner join on a

key column, e.g., CUST_ID. Assess the data to identify data types

and noise in the data. Look for missing values, outlier numeric values, or

inconsistently labeled categorical values.

The following steps help you with the exploratory analysis of the data:

- Run the following script in a

%pythoninterpreter paragraph to import theomlmodules, the Panda's module, and set the display options:import pandas as pd import matplotlib.pyplot as plt import oml pd.set_option('display.max_rows', 500) pd.set_option('display.max_columns', 500) pd.set_option('display.width', 1000) import warnings warnings.simplefilter(action='ignore', category=FutureWarning) - Use the

oml.syncfunction to create the Python object DEMOGRAPHICS as a proxy for a database table SUPPLEMENTARY_DEMOGRAPHICS. Theoml.syncfunction returns anoml.DataFrameobject. Theoml.DataFrameobject returned byoml.syncis a proxy for the database object.Note:

Only one environment for a given database schema can exist at a time. If"schema=None", then objects are created searched in the current user's schema.DEMOGRAPHICS = oml.sync(table = "SUPPLEMENTARY_DEMOGRAPHICS", schema = "SH") z.show(DEMOGRAPHICS.head())

- To determine the number of rows and columns in the

oml.DataFrameobject DEMOGRAPHICS, useDataFrame.shape.print("Shape:",DEMOGRAPHICS.shape)(4500, 14) - Use the

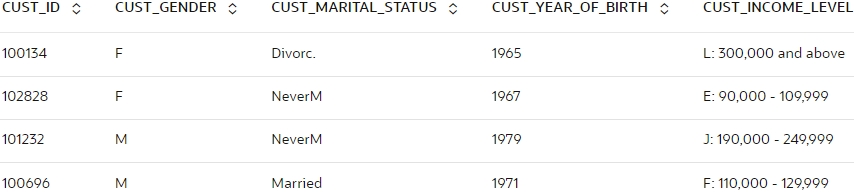

oml.syncfunction to create the Python object CUSTOMERS as a proxy for a database table SH.CUSTOMERS. The query argument uses the SQL SELECT statement for selecting columns to include for use through the proxy object.cCUSTOMERS = oml.sync(query = 'SELECT CUST_ID, CUST_GENDER, CUST_MARITAL_STATUS, CUST_YEAR_OF_BIRTH, CUST_INCOME_LEVEL, CUST_CREDIT_LIMIT FROM SH.CUSTOMERS') z.show(CUSTOMERS.head())

- To determine the number of rows and columns in the

oml.DataFrameobject CUSTOMERS, useDataFrame.shape.print("Shape:",CUSTOMERS.shape)(55500, 6) - Create a new

oml.DataFrameCUSTOMER_DATA by merging the table CUSTOMERS and DEMOGRAPHICS with an inner join on the common column CUST_ID. The merge function joins one oml.DataFrame to another oml.DataFrame. The suffixes parameter is used when the two oml.DataFrame have conflicting column names.CUSTOMER_DATA = CUSTOMERS[["CUST_ID", "CUST_GENDER", "CUST_MARITAL_STATUS", "CUST_YEAR_OF_BIRTH", "CUST_INCOME_LEVEL", "CUST_CREDIT_LIMIT"]].merge(DEMOGRAPHICS[["CUST_ID", "HOUSEHOLD_SIZE","YRS_RESIDENCE", "Y_BOX_GAMES"]], how = "inner", on = 'CUST_ID',suffixes = ["",""]) - To determine the number of rows and columns in the

oml.DataFrameobject CUSTOMER_DATA, use DataFrame.shape.print("Shape:",CUSTOMER_DATA.shape)Shape: (4500, 9) - Use the

concatfunction to concatenate the new column CUST_AGE in anoml.DataFrameobject CUSTOMER_DATA. The column CUST_AGE contains the age based on the column CUST_YEAR_OF_BIRTH where the year of birth is converted to age in the year 2005. The information in the CUST_YEAR_OF_BIRTH column has been modified and maintained in CUST AGE, so drop the CUST_YEAR_OF_BIRTH column.CUSTOMER_DATA=CUSTOMER_DATA.concat({'CUST_AGE':abs(CUSTOMER_DATA['CUST_YEAR_OF_BIRTH'] -2005)}) CUSTOMER_DATA=CUSTOMER_DATA.drop('CUST_YEAR_OF_BIRTH') CUSTOMER_DATA.head()

- Run the following script to view the data type of each

column.

print("The datatypes of the column: ","\n") print(CUSTOMER_DATA.dtypes)

- To check if there are any missing values run the following script.

The count function returns the number of elements that are not NULL for each

column and the

len()function returns the number of rows in the dataset.print("Number of missing values in each column is : \n") print(len(CUSTOMER_DATA)-CUSTOMER_DATA.count())

- Use the crosstab method to perform a cross-column analysis of an

oml.DataFrameobject in the database. The crosstab method computes a cross-tabulation of two or more columns. By default, it computes a frequency table for the columns unless a column and an aggregation function have been passed to it. In this example, the crosstab function displays the distribution of unique values of CUST_CREDIT_LIMIT along the x-axis and its occurrence frequency along the y-axis.z.show(CUSTOMER_DATA.crosstab(‘CUST_CREDIT_LIMIT’))

- Use the transparency layer method

describeto calculate descriptive statistics that summarize the central tendency, dispersion, and shape of the CUSTOMER_DATA table in each numeric column.Note:

All computations are computed in the database and only the result statistics are returned to the Python client, in this case, the notebook. Eliminating the need to move data and using the database as a high-performance compute engine greatly increases scalability.CUSTOMER_DATA.describe()

- Before building the model, it's important to ensure that the data

is clean. Data often contains outliers, which can form separate clusters that

negatively impact model quality.

The following script defines a function, IQR, to calculate the interquartile range for a dataframe. It takes two arguments: SUMMARY_DF (which contains summary statistics of the dataframe, generated using the

Run the script to calculate the interquartile range for the specified columns:describemethod) and a list of features. The IQR function uses a for loop to compute the interquartile range for each feature in the list.def IQR(SUMMARY_DF, features): result = [0]*len(features) for i, feature in enumerate(features): result[i] = abs(SUMMARY_DF[feature]['75%'] - SUMMARY_DF[feature]['25%']) return result print(IQR(CUSTOMER_DATA.describe(),['CUST_AGE', 'CUST_CREDIT_LIMIT', 'YRS_RESIDENCE', 'Y_BOX_GAMES']))[20.0, 6000.0, 2.0, 1.0]The user-defined function

The following function removes rows with outliers of a given feature based on quantiles:remove_outlieruses the interquartile range to find outliers in the data and remove them. In boxplot, outliers are points that lie outside of the upper and lower quartiles by 1.5 times the interquartile range (Q1 - 1.5 * IQR or Q3 + 1.5 * IQR). Another form of outlier treatment is clipping or capping, where more extreme values are replaced with a max or min value, e.g., the 1.5 IRQ values.def remove_outlier(DF, SUMMARY_DF, features): iqrs = IQR(SUMMARY_DF, features) for i, iqr in enumerate(iqrs): H = 1.5*iqr DF = DF[ ( DF[features[i]] > SUMMARY_DF[features[i]]['25%'] - H ) & ( DF[features[i]] < SUMMARY_DF[features[i]]['75%'] + H )] print(DF.shape) return DF CUSTOMER_DATA_CLEAN= remove_outlier(CUSTOMER_DATA, CUSTOMER_DATA.describe(), ['CUST_AGE', 'CUST_CREDIT_LIMIT', 'YRS_RESIDENCE', 'Y_BOX_GAMES']) print("Shape:",CUSTOMER_DATA_CLEAN.shape)Shape: (4233, 9)

This completes the data understanding and data preparation stage.

Parent topic: Clustering Use Case

3.3.3 Build Model

To evaluate a model's performance, it is common practice to split the data into training and test sets. This allows you to assess how well the model generalizes to unseen data. However, in unsupervised learning, such as clustering, there are no labels or predictors available to calculate accuracy or evaluate performance. As a result, you can use the entire dataset to build the model without the need to split it. Since there is no ground truth to compare the results against, the training-test split is neither applicable nor useful in unsupervised learning.

Algorithm Selection

- Expectation-Maximization (EM)

- K-Means (KM)

The Expectation-Maximization (EM) algorithm uses a probabilistic clustering based on a density estimation algorithm. The EM algorithm is used when data contains hidden components or when some data points are absent. In contrast, the k-Means (KM) algorithm is a distance-based clustering algorithm that partitions data into a specified number of clusters. Distance-based algorithms are based on the principle that nearby data points are more closely related to one another than to those that are farther away. This algorithm works iteratively to minimize the within-cluster variance in relation to the nearest cluster centroid.

The k-Means algorithm is chosed, as it is simpler than the Expectation-Maximization (EM) algorithm. Since, the optimal number of clusters is unknown, start with one cluster and gradually increase the number of clusters. Use the Elbow meathod to determine the optimal number of clusters.

To specify model settings and build a k-Means model object that will partition and segment the data, run the following script. The settings are provided as key-value pairs, or dictionary pairs, where each key represents a parameter name and its corresponding value represents the setting. Some of the specified settings include KMNS_ITERATIONS and KMNS_DISTANCE. The k-Means algorithm utilizes the number of clusters (k) along with these settings to configure the algorithm.

The following steps guide you to build your model with the selected algorithm.

-

Use the

oml.kmalgorithm to build your model and specify the model settings. Run the following script:try: oml.drop(model="CUST_CLUSTER_MODEL") except: pass setting = {'KMNS_ITERATIONS': 10, 'KMNS_DISTANCE': 'KMNS_EUCLIDEAN', 'KMNS_NUM_BINS': 10, 'KMNS_DETAILS': 'KMNS_DETAILS_ALL', 'PREP_AUTO': 'ON'} km_mod1 = oml.km(n_clusters = 1, **setting).fit(CUSTOMER_DATA_CLEAN, model_name = "CUST_CLUSTER_MODEL", case_id = 'CUST_ID')Examine the script:

KMNS_ITERATIONS: Specifies the maximum number of allowed iterations, with a default of 20.KMNS_DISTANCE: Specify the type of distance functions used, by default distance function is the Euclidean distance.KMNS_NUM_BINS: Specifies the number of bins in the attribute histogram produced by k-Means.KMNS_DETAILS: Determines the level of cluster details that are computed during the build. The valueKMNS_DETAILS_ALLindicates that the cluster hierarchy, record counts, and descriptive statistics such as variances, modes, histograms, and rules are computed.PREP_AUTO: Used for Automatic Data Preparation. By default, it is enabled as'PREP_AUTO': PREP_AUTO_ON, which requires the DBMS_DATA_MINING package. Alternatively, it can be set as'PREP_AUTO': 'ON'. This allows the compiler to validate that the PL/SQL constant name is correct.

Parent topic: Clustering Use Case

3.3.4 Evaluate

Evaluate a model by assessing its performance using various metrics and techniques to determine how effectively it generalizes to new, unseen data. This process involves comparing predictions to actual outcomes using metrics like accuracy, precision, recall, F1 score, or mean squared error, depending on the model type. The evaluation helps identify the model's strengths and weaknesses, guiding further improvement or tuning.

Information and Model Settings

Note:

To get a complete list of information on the settings available in thek-Means module,

run the below

script:help(oml.algo.km)The following steps help you to view different model detail views.

- Use

km_mod1to access the model details available through the k-Means model object, like the model settings, coefficients, fit details, and more.km_mod1

- Use

km_mod1.clustersto list the clusters information.z.show(km_mod1.clusters)

- Run the following script to display the model's centroid concerning

each column. It gives mean, variance for a numeric attribute and mode for a

categorical

attribute.

z.show(km_mod1.centroids)

- To determine the optimal value of k you will use the Elbow method.

Assuming that k lies in a given range, search for the best k by running k-Means

over each k in the given range. For each k find the within-cluster variance. As

the value of k increases within-cluster variance should decrease. This is

because more centers mean that the data points are on average at less distance

from each other for a given centroid. Imagine that each data point is a center

then within-cluster variance would be zero. Before the optimal value of k, the

decrease in within-cluster-variance will be relatively large as new clusters

will have increasingly closer data points within them. After the optimal value

of k, the decrease will be slow as the new clusters formed will be similar to

each other. The range of k should be chosen such that we can see the sharp

decrease at optimal value and the slow decrease afterward resulting in an almost

linear pattern being formed in the plot. The entire curve usually looks like an

L shape and the best K lies in the turning point or the elbow of the L shape.

incluster_sum = [] for cluster in range(1, 9): setting = {'kmns_iterations': 15, 'KMNS_RANDOM_SEED': 1} km_mod2 = oml.km(n_clusters = cluster, **setting).fit(CUSTOMER_DATA_CLEAN) incluster_sum.append(abs(km_mod2.score(CUSTOMER_DATA_CLEAN))) plt.plot(range(1, 9),incluster_sum) plt.title('The Elobw Method Graph') plt.xlabel('Number of clusters(k)') plt.ylabel('wcss_list') plt.show()

The elbow joint or the number of optimal clusters can be observed at k=3.

- Build the final model according to the optimal value of

clusters.

try: oml.drop(model="CUST_CLUSTER_MODEL") except: pass setting = {'KMNS_ITERATIONS': 20, 'KMNS_DISTANCE': 'KMNS_EUCLIDEAN', 'KMNS_NUM_BINS': 10, 'KMNS_DETAILS': 'KMNS_DETAILS_ALL', 'PREP_AUTO': 'ON'} km_mod3 = oml.km(n_clusters = 3, **setting).fit(CUSTOMER_DATA_CLEAN, model_name = "CUST_CLUSTER_MODEL", case_id = 'CUST_ID') - Run the following script to display the model's clusters and the

parent-child relationship in the cluster

hierarchy.

z.show(km_mod3.clusters)

The clusters with cluster_id 3, 4, and 5 are the leaf clusters having 1369, 1442, and 1422 rows of data points in them. The cluster with cluster_id equal to 1 is the root of the binary tree as its parent_cluster_id is equal to nan.

- Run the following script to get only the parent/child relationship.

z.show(km_mod3.taxonomy)

- To view the per cluster-attribute center (centroid) information of

leaf clusters use the model attribute centroids which displays model statistics

like mean, variance, and mode value for each cluster and

attribute.

km_mod3.centroids[km_mod3.centroids["CLUSTER_ID"]>=3]

Cluster ID 3 has users with the highest mean for Y_BOX_GAMES and CUST_INCOME_LEVEL.

- For the model km_mod3, use the model attribute cluster_hists to view

the cluster histogram details.To see the CUST_INCOME_LEVEL attribute's histogram

details for Cluster ID

5.

mod_histogram=km_mod3.cluster_hists z.show(mod_histogram[(mod_histogram['cluster.id']==3) & ((mod_histogram['variable']=='CUST_INCOME_LEVEL:A: Below 30,000') | (mod_histogram['variable']=='CUST_INCOME_LEVEL:B: 30,000 - 49,999') | (mod_histogram['variable']=='CUST_INCOME_LEVEL:C: 50,000 - 69,999') | (mod_histogram['variable']=='CUST_INCOME_LEVEL:D: 70,000 - 89,999') | (mod_histogram['variable']=='CUST_INCOME_LEVEL:E: 90,000 - 109,999') | (mod_histogram['variable']=='CUST_INCOME_LEVEL:F: 110,000 - 129,999') | (mod_histogram['variable']=='CUST_INCOME_LEVEL:G: 130,000 - 149,999') | (mod_histogram['variable']=='CUST_INCOME_LEVEL:H: 150,000 - 169,999') | (mod_histogram['variable']=='CUST_INCOME_LEVEL:I: 170,000 - 189,999') | (mod_histogram['variable']=='CUST_INCOME_LEVEL:J: 190,000 - 249,999') | (mod_histogram['variable']=='CUST_INCOME_LEVEL:K: 250,000 - 299,999') | (mod_histogram['variable']=='CUST_INCOME_LEVEL:L: 300,000 and above'))])

This histogram groups cluster-id 3 into bins based on the CUST_INCOME_LEVEL. The bin with the highest count of customers earns salaries ranging from 190,000 to 249,999 annually.

-

Check the support and confidence level of leaf clusters(3,4 and 5) using the model attribute rules which give the conditions for a case to be assigned with some probability to a cluster. Support and confidence are metrics that describe the relationships between clustering rules and cases. Support is the percentage of cases for which the rule holds. Confidence is the probability that a case described by this rule is assigned to the cluster.

km_mod3.rules

The columns headers in the above data frame specify the following:

- cluster.id: The ID of a cluster in the model rhs.support: The record count rhs.conf:

- rhs.support: The record count

- rhs.conf: The record confidence

- lhr.support: The rule support

- lhs.conf: The rule confidence

- lhs.var: The attribute predicate name

- lhs.var.support: The attribute predicate support

- lhs.var.conf: The attribute predicate confidence

- predicate: The attribute predicate

- Run the following script to get the cluster id and the total number of data

points present in each leaf node. The total number of data points at each tree

level should always be conserved. The total number of data points present in the

root node should equal the sum of all the data points present in the leaf nodes

(1369+1442+1422=4233).

z.show(km_mod3.leaf_cluster_counts)

Score

The clusters discovered by k-Means are used to score a new record by estimating the probabilities that the new record belongs to each of the k clusters. The cluster with the highest probability is assigned to the record.

-

In this step, you will make predictions on the CUSTOMER_DATA_CLEAN and add the CUST_ID as a supplemental column so that you can uniquely associate scores with the data. To do so run the below script:

pred = km_mod3.predict(CUSTOMER_DATA_CLEAN, supplemental_cols = CUSTOMER_DATA_CLEAN[["CUST_ID"]]) z.show(pred)

- To make predictions that return probability for each cluster on the

data use predict_proba

function.

pred = km_mod3.predict_proba(CUSTOMER_DATA_CLEAN, supplemental_cols = CUSTOMER_DATA_CLEAN[["CUST_ID"]]) z.show(pred)

- With Embedded Python Execution, all the above tasks can be

achieved. You can invoke user-defined Python functions in Python engines spawned

and managed by the database environment. Use the oml.do_eval function to run a

user-defined input function that builds a k-Means model, scores records, and

displays the

results.

def build_km_1(): setting = {'KMNS_ITERATIONS': 20, 'KMNS_DISTANCE': 'KMNS_EUCLIDEAN', 'KMNS_NUM_BINS': 10, 'KMNS_DETAILS': 'KMNS_DETAILS_ALL', 'PREP_AUTO': 'ON'} # Create a KM model object and fit it. try: oml.drop(model="CUST_CLUSTER_MODEL_EPE") except: pass km_mod_epe = oml.km(n_clusters = 3, **setting).fit(CUSTOMER_DATA_CLEAN, model_name = "CUST_CLUSTER_MODEL_EPE", case_id = 'CUST_ID') # Show model details. #km_mod_epe pred=(km_mod_epe.predict(CUSTOMER_DATA_CLEAN, supplemental_cols =CUSTOMER_DATA_CLEAN[:, ['CUST_ID']])) return pred z.show(oml.do_eval(func = build_km_1))

- Run the following script to display the probability score of

customer c1 with CUST_ID (102308) belonging to each

cluster.

c1=CUSTOMER_DATA_CLEAN[CUSTOMER_DATA_CLEAN['CUST_ID']==102308] km_mod3.predict_proba(c1, supplemental_cols =c1['CUST_ID'])

To sell a new gaming product, you must target customers who have already purchased Y_BOX_GAMES and have a high credit limit. You have successfully segmented the population into different clusters and the cluster with cluster-id 3 has the target population with the greatest percentage of customers who have already purchased Y_BOX_GAMES, with a mean CUST_CREDIT_LIMIT of 8322. So, you can confidently target customers in cluster-id 3 to sell a new game product.

Parent topic: Clustering Use Case