3.1 Regression Use case

The Brooklyn housing dataset contains the sale prices of homes in brooklyn borough, along with various factors that influence these prices, such as the area of the house, its location, and the type of dwelling. You are tasked with analyzing years of historical home sales data to estimate sales prices, which will help optimize real estate operations. In this case study, you will learn how to predict sales prices using the regression technique and the GLM algorithm.

Related Contents

| Topic | Link |

|---|---|

| OML4Py GitHub Example | OML4Py Regression GLM |

| About Generalized Linear Model | About Generalized Linear Model |

| About Machine Learning Classes and Algorithms | About Machine Learning Classes and Algorithms |

| Shared Settings | Shared Settings |

- Data Set

Download the data set from Brooklyn housing dataset .

- Database Select or create database out of the following

options:

- Get your FREE cloud account. Go to https://cloud.oracle.com/database and select Oracle Database Cloud Service (DBCS), or Oracle Autonomous AI Database. Create an account and create an instance. See Autonomous Database Quick Start Workshop.

- Download the latest version of OOracle AI Database (on premises).

- Machine Learning Tools

Depending on your database selection,

- Use OML Notebooks for Oracle Autonomous AI Database.

- Install and use Oracle SQL Developer connected to an on-premises database or DBCS. See Installing and Getting Started with SQL Developer.

- Other Requirements

Data Mining Privileges (this is automatically set for ADW). See System Privileges for Oracle Machine Learning for SQL.

- Load Data

Load the data in your database and examine the data set and its attributes. - Explore Data

Explore the data to understand and assess the quality of the data. At this stage assess the data to identify data types and noise in the data. Look for missing values and numeric outlier values. - Build Model

Build your model using the training data set. Use theoml.glmfunction to build your model and specify model settings. - Evaluate

Before you make predictions using your model on new data, you should first evaluate model accuracy. You can evaluate the model using different methods.

Parent topic: Use Cases

3.1.1 Load Data

Load the data in your database and examine the data set and its attributes.

You can download the dataset from Rolling sales data for the Brooklyn borough. If you are using the Oracle Autonomous AI Database, you will upload files to the Oracle Cloud Infrastructure (OCI) Object Storage, create a sample table, load data into the sample table from files on the OCI Object Storage, and explore the data.

Examine Data

There are 110 attributes in the dataset; below are descriptions of a few important ones. For a complete description of the attribute, see Rolling sales data.

| Attribute Name | Information |

|---|---|

| Borough | The borough in which the tax lot is located. |

| BoroCode | The borough in which the tax lot is located. |

| Year Built | The year construction of the building was completed. |

| Zip Code | A ZIP code that is valid for one of the addresses assigned to the tax lot. |

| Address | An address for the tax lot |

| BUILDING CLASS | A code describing the major use of structures on the tax lot. |

| HEALTH CENTER DISTRICT | The health center district in which the tax lot is located. Thirty health center districts were created by the City in 1930 to conduct neighborhood focused health interventions |

| LAND USE CATEGORY | A code for the tax lot's land use category |

| LOT AREA | Total area of the tax lot, expressed in square feet rounded to the nearest integer. |

| Building Area | The total gross area in square feet. Same as 'gross_sqft' |

| Gross Square Feet | The total area of all the floors of a building as measured from the exterior surfaces of the outside walls of the building, including the land area and space within any building or structure on the property. |

| Year Built | Year the structure on the property was built. |

| Sales Price | Price paid for the property. |

- Import Data

Import data into the Oracle AI Database by using Object Storage (for Cloud).

Related Topics

Parent topic: Regression Use case

3.1.1.1 Import Data

Import data into the Oracle AI Database by using Object Storage (for Cloud).

- from local files in your client computer, or

- from files stored in a cloud-based object store

Follow the steps to upload your data file to the Object Storage bucket.

- Login to your cloud account.

- Click the left-side hamburger menu and select Storage from the menu.

- Select Buckets from the Object Storage & Archive Storage option.

- Select the compartment in which you want to upload the data.

- Click Create Bucket.

- Enter a name for your bucket. For example, Bucket1. Leave the rest of the fields as default.

- Click Create.

- Click on the bucket that you created. Scroll down and click Upload under Objects.

- Leave the Object Name Prefix field black. Click select files to navigate to the data file that you want to upload or drag and drop the data file. In this use case, select the modified .csv file.

- Click Upload. The data file appears under Objects.

- Click the ellipses on the right side of the data file to view the menu. Click View Object Details.

- Copy the URL PATH (URI) to a text file. This URI is used in the

DBMS_CLOUD.COPY_DATAprocedure.

This procedure creates an object storage containing the data file in your cloud account.

Create Auth Token

The Auth Token is required in the DBMS_CLOUD.CREATE_CREDENTIAL

procedure. You can generate the Auth Token in your cloud account.

- Login into your ADW Cloud account.

- Hover your mouse cursor over the human figure icon at the top right of the console and click User Settings from the drop-down menu.

- Click Auth Tokens under Resources on the left of the console.

- Click Generate Token. A pop-up dialog appears.

- Enter a description (optional).

- Click Generate Token.

- Copy the generated token to a text file. The token does not appear again.

- Click Close.

Create Object Storage Credential

The object storage credential is used in the DBMS_CLOUD.COPY_DATA

procedure.

- Login to the OML Notebooks page and create a notebook. See Create a Notebook Classic

- Open the notebook that you just created.

- Enter the following query to create an object storage

credentials:

%script begin DBMS_CLOUD.create_credential ( credential_name => 'CRED', username => '<your cloud account username>', password => '<your Auth Token>' ); end; /--------------------------- PL/SQL procedure successfully completed. ---------------------------Examine the query:

credential_name: The name of the credential to be stored. Provide any name. Here, CRED is the name given.username: This is your cloud account username.password: Enter your Auth Token password that you copied after generating the Auth Token.

- Click the play icon to run the query in your notebook. Your credentials are stored in the ADW user schema.

- In another para, run the following query to check the user

credentials:

SELECT* FROM USER_CREDENTIALS;

Parent topic: Load Data

3.1.2 Explore Data

Explore the data to understand and assess the quality of the data. At this stage assess the data to identify data types and noise in the data. Look for missing values and numeric outlier values.

Data Understanding and Preparation

This stage focuses on building a clear understanding of the dataset through the following steps:

Import necessary libraries: Load essential Python libraries along with Oracle Machine Learning (OML).Load the dataset: Import the dataset for initial exploration.Create a DataFrame proxy object: Represent the table using a proxy object to simplify data manipulation.Perform initial analysis: Examine the dataset's structure, including its shape, data types, missing values, and categorical feature cardinality.

These steps provide a solid foundation for deeper data exploration and preprocessing.

For data preparation and understanding run the following steps:

-

Import necessary libraries

Run the following script in a%pythoninterpreter paragraph to import theomlmodules, the Panda's module, and set the display options:import oml import ssl import pandas as pd import numpy as np import matplotlib.pyplot as plt import warnings warnings.simplefilter(action='ignore', category=FutureWarning) -

Load the dataset

url="https://objectstorage.us-ashburn-1.oraclecloud.com/n/adwc4pm/b/OML_Data/o/brooklyn_sales.csv" ssl._create_default_https_context = ssl._create_unverified_context brooklyn_sales = pd.read_csv(url, engine='python') z.show(brooklyn_sales.head())Figure 3-1 Raw Brooklyn Data

-

Replace missing values (NaN with None)

df = brooklyn_sales.apply(lambda x: x.replace(np.nan, None) if x.dtypes == 'object' else x) -

Impute missing values based on data type

First, the code removes any columns that are entirely empty or contain only missing values. Then, it goes through each remaining column, checks the data type of the non-missing values, and fills in any missing data with the most appropriate replacement based on the column's type.# Drop columns where all values are missing brooklyn_sales1 = brooklyn_sales.dropna(axis=1, how="all") d = {} # Iterate through each column and fill missing values based on its data type for col in brooklyn_sales1: x = brooklyn_sales1[col].dropna().tolist() # Get non-null values to check the column type if len(x) > 0: # For text columns, replace missing values with an empty string if isinstance(x[0], str): y = brooklyn_sales1[col].fillna("") # For integer columns, missing values are left unchanged elif isinstance(x[0], int): y = brooklyn_sales1[col] # For other numeric columns (e.g., floats), replace missing values with 0.0 else: y = brooklyn_sales1[col].fillna(float(0)) d[col] = y # Store the modified column # Convert the dictionary back into a DataFrame brooklyn_sales2 = pd.DataFrame.from_dict(d) # Print the shape of the updated DataFrame print(brooklyn_sales2.shape)(390883, 110) -

Create dataframe proxy object

try: oml.drop(table = 'BROOKLYN') except: pass # Create a persistent table named BROOKLYN in the Oracle AI Database BROOKLYN = oml.create(brooklyn_sales2, table="BROOKLYN") -

Analyze the dataframe

Examine and interpret the shape, data types, missing values and find columns having low cardinality.- Shape of DataFrame:

BROOKLYN.shape(390883, 110) - Data Types of Columns:

BROOKLYN.dtypesID <class 'oml.core.integer.Integer'> borough <class 'oml.core.integer.Integer'> neighborhood <class 'oml.core.string.String'> building_class_category <class 'oml.core.string.String'> tax_class <class 'oml.core.string.String'> ... PFIRM15_FL <class 'oml.core.float.Float'> Version <class 'oml.core.string.String'> MAPPLUTO_F <class 'oml.core.float.Float'> SHAPE_Leng <class 'oml.core.float.Float'> SHAPE_Area <class 'oml.core.float.Float'> Length: 110, dtype: object - Identify columns with missing values (>75% ):

def percent_missing(dat): per_miss={} large_miss_columns=[] for i in dat.columns: l=len(dat) a=100-(dat[i].count()/l)*100 if a>=75: per_miss[i]=round(a) return per_miss z.show(pd.DataFrame(list(percent_missing(BROOKLYN).items()), columns=["Columns", "% Missing"]))Figure 3-2 View columns and their missing percentage.

- Identify columns with low cardinality:

def unique_values_less_10(data): cols=[] for x in data.columns: unique_values=data[x].nunique() if unique_values< 10: cols.append(x) return cols print(unique_values_less_10(BROOKLYN))['borough', 'tax_class_at_sale', 'Borough', 'SanitBoro', 'ZoneDist4', 'Overlay1', 'Overlay2', 'SPDist2', 'SPDist3', 'LtdHeight', 'SplitZone', 'Easements', 'OwnerType', 'AreaSource', 'Ext', 'ProxCode', 'IrrLotCode', 'BsmtCode', 'BoroCode', 'CondoNo', 'ZMCode', 'PLUTOMapID', 'FIRM07_FLA', 'PFIRM15_FL', 'Version', 'MAPPLUTO_F']

- Shape of DataFrame:

- Data Preparation

Data preparation is the process of cleaning (handling missing values, outliers, and inconsistencies), transforming (scaling, encoding, and creating new features) and organizing raw data to make it more compatible with machine learning algorithms.

Parent topic: Regression Use case

3.1.2.1 Data Preparation

Data preparation is the process of cleaning (handling missing values, outliers, and inconsistencies), transforming (scaling, encoding, and creating new features) and organizing raw data to make it more compatible with machine learning algorithms.

Data Preparation

This stage focuses on building a clear understanding of the dataset through the following steps:

- Redundant Columns:

- Clean the Data: Identify and remove duplicate records, redundant columns, and highly correlated columns.

- Data Subset Creation: Filter/select relevant columns.

- Visualizations: Generate visual representations to understand data patterns and relationships.

- Feature Engineering: Create new features like "Decade Built", "Sale Age", and "Street Address".

- Clean the DataFrame: Handle missing values, outliers, and inconsistencies.

- Dataframe Dimensions: Ensure correct dimensions after cleaning.

These steps provide a solid foundation for data preparation.

Redundant Columns: Cleaning Up the Data

To identify redundant columns, compare column names and descriptions for clues. Then analyze the data within the columns to see if the values are identical or highly correlated. Once you identify the redundant columns, you need to decide which one to remove. The column with more missing values, inconsistent formatting, or mostly a unique value can be removed.

Analyze Columns with Similar Naming Conventions

Analyse and compare pairs or groups of columns that appear similar due to their content or variations in naming conventions or formatting. The goal is to ensure data consistency, detect redundancy, and verify if columns represent the same information.

The following columns are compared:

- borough, Borough, and BoroCode

- YearBuilt and year_built

- ZipCode and zip_code

Similarly, this can be applied to other columns, such as building_class, BldgClass and building_class_at_sale; Address and address; etc.

-

Compare columns: borough, Borough, and BoroCode

The column names “borough,” “Borough,” and “BoroCode” are quite similar, suggesting they may contain the same information. To verify this, print five random samples from each column for comparison. Additionally, based on the column descriptions, all three columns represent the Brooklyn borough, with values such as “BK” or “3.0.” Since the data is specific to Brooklyn, these columns are redundant and can be safely removed from the dataset.

z.show(BROOKLYN[['borough','Borough', 'BoroCode']].sample(n=5))

-

Identify Matching Data Percentage

def matching_percentage(data, cols, threshold=0.9): # Filter rows where both columns have non-zero values filtered_df = data[(data[cols[0]] != 0) & (data[cols[1]] != 0)] # Calculate matching percentage total_rows = len(filtered_df) matching_rows = len(filtered_df[filtered_df[cols[0]] == filtered_df[cols[1]]]) matching_percentage = matching_rows / total_rows if total_rows else 0 # Output result if matching_percentage >= threshold: print(f"The columns have a high percentage ({matching_percentage * 100:.2f}%) of matching values, suggesting similarity.") else: print("The columns do not have a high percentage of matching values.") -

Compare columns: YearBuilt and year_built

The column names "YearBuilt" and "year_built" are quite similar, suggesting they may contain the same information. To verify this, we should print out 5 random samples from each column.

matching_percentage(BROOKLYN, ['YearBuilt', 'year_built'])The columns have a high percentage (99.25%) of matching values, suggesting similarity.The columns "YearBuilt", "year_built" contain similar information,so remove one. Remove "year_built" from the dataset.

-

Compare columns: ZipCode and zip_code

matching_percentage(BROOKLYN, ['ZipCode', 'zip_code'])The columns have a high percentage (98.72%) of matching values, suggesting similarity.The column names "ZipCode" and "zip_code" are quite similar, suggesting they may contain the same information. To verify this, we should print out 5 random samples from each column.

z.show(BROOKLYN[['ZipCode', 'zip_code']].sample(n=5))

Filter data by selecting the desired columns

To focus on more reliable data, only houses built after the 1800s are included in the dataset. This is because houses built before the 1800s frequently have a single YearBuilt value of 0, indicating potentially missing or inaccurate information and more is exaplained in the next step.

BROOKLYN2 = BROOKLYN[(BROOKLYN['YearBuilt']>= 1800)][['building_class_at_sale', 'HealthCent', 'YearBuilt', 'ResidFAR',

'sale_date', 'building_class_category', 'GarageArea', 'CD', 'YearAlter1', 'ID', 'SchoolDist', 'SanitDistr', 'PolicePrct','address',

'CT2010', 'commercial_units', 'BldgArea','NumFloors', 'sale_price','AssessTot', 'ResArea','land_sqft','LotFront',

'LotArea','AssessLand', 'SHAPE_Area','year_of_sale', 'gross_sqft','XCoord','YCoord', 'SHAPE_Leng']]

# Dataframe dimension

BROOKLYN2.shape(295356, 31)Feature Engineering and Visualization

Create new columns and modify existing features based on insights gathered from visualizations. The newly engineered features are then merged back into the dataset to enhance its quality and readiness for modeling.

-

Built periods and their counts:

-

Analyze the distribution of the periods in which the houses were built and identify the least and most common periods within our dataset. The column, YearBuilt, is first rounded to the nearest integer value. These rounded values will then be categorized into predefined intervals, or bins. Count the number of YearBuilt within each bin to determine the frequency distribution of built periods.

built_period = (BROOKLYN2['YearBuilt'] // 10) * 10 + oml.Integer(BROOKLYN2['YearBuilt'] % 10 >= 5) * 10 bins_str = built_period.cut(bins=[1700,1800,1880,1900,1920,1940,1960,1980,2000,2020,2040]) bins = sorted(bins_str.drop_duplicates().pull()) z.show(pd.DataFrame({'Built Period':bins, 'Count':[oml.Integer(bins_str == b).sum() for b in bins]}))

-

Visualisation of built periods and their counts

# Data might be incomplete when DECADE_BUILT < 1900 Nbins = 141 n, bins, patches = plt.hist(built_period.pull(), Nbins) plt.xlabel('Built_Period') plt.ylabel('number of records') plt.yscale('log') p = plt.xlim(1795, 2025)

-

-

Preview sale price and count by binning

-

Analyze the distribution of the sale price of the houses and identify the least and most common sale price within our dataset. The column, sale_price, is rounded and then are categorized into predefined intervals, or bins. Count the number of sale_price within each bin to determine the frequency distribution of sale_price.

Sale_Price=(BROOKLYN2['sale_price'].cut(bins=[-100000000,0,20000,40000,60000,80000,100000,1000000,10000000,500000000])) # bins_str = decade_built.cut(bins=[1700,1800,1880,1900,1920,1940,1960,1980,2000,2020,2040]) bins = sorted(Sale_Price.drop_duplicates().pull()) z.show(pd.DataFrame({'Sale Price':bins, 'Count':[oml.Integer(Sale_Price == b).sum() for b in bins]}))

-

Examine logarithmic sales price distribution

# Most properties have 10^5 < sale_price < 10^6.5=3.2M Nbins = 101 n, bins, patches = plt.hist((BROOKLYN2[BROOKLYN2['sale_price']>0]['sale_price']).log(10).pull(), Nbins) plt.xlabel('log10(sale_price)') plt.ylabel('number of records') p = plt.xlim(3.9, 7.1)

-

-

Preview building class category, count and count percentage

Analyze the categorical column, building_class_category, by computing a cross-tabulation. Sort this table in descending order. Finally, determine the frequency of each building class category.

build_category= BROOKLYN2.crosstab('building_class_category').sort_values('count', ascending=False) count_percentage= ((build_category['count'] / len(BROOKLYN2)) * 100).round(decimals=2) z.show(build_category.concat({'count_percentage':count_percentage}))

-

Examine logarithmic gross qft distribution

Values in the gross qft column, when log-transformed, approximate a normal distribution.

# Most properties have 10^2.9=800 < sale_price < 10^3.7=5000 Nbins = 201 n, bins, patches = plt.hist((BROOKLYN2[BROOKLYN2['gross_sqft']>0]['gross_sqft']).log(10).pull(), Nbins) plt.xlabel('log10(sale_price)') plt.ylabel('number of records') p = plt.xlim(2.5, 4.2)

Feature Engineering

Feature engineering is the process of creating new input features from existing data which explains the underlying patterns of a data. These new featues help to improce the model's predictability.

The following features have been engineered:

- Built Period: The Period in which the house was

built.

built_period=(BROOKLYN2['YearBuilt'] // 10) * 10 + oml.Integer(BROOKLYN2['YearBuilt'] % 10 >= 5) * 10 BROOKLYN2=BROOKLYN2.concat({'Built_Period':built_period}) - Age_At_Sale: Age of the house at sale is the number of years from

its construction to the sale

date.

Age_At_Sale2 = abs(BROOKLYN2['year_of_sale'] - BROOKLYN2['YearBuilt']) BROOKLYN2= BROOKLYN2.concat({'Age_At_Sale2': Age_At_Sale2}) - Quarter: Refers to the quarter in which the house was

built.

time_period = oml.Datetime.strptime(BROOKLYN2['sale_date'], format="MM/DD/YYYY") Quarter= (oml.Integer((time_period.month - 1)// 3 + 1))

Clean the Dataframe

After feature engineering, remove columns that no longer contribute to the analysis and drop any rows that have a missing value.

BROOKLYN2=BROOKLYN2.drop(['sale_date'])

BROOKLYN2=BROOKLYN2.dropna()Filter the data to include properties whose sale price, gross square footage, and the decade year of construction fall within a specific, relevant range.

BROOKLYN3 = BROOKLYN2[(BROOKLYN2['sale_price']>=1.0e5) & (BROOKLYN2['sale_price']<=5.0e6) &

(BROOKLYN2['gross_sqft']>=800) & (BROOKLYN2['gross_sqft']<=5000) &

(BROOKLYN2['Built_Period']>=1900) & (BROOKLYN2['Built_Period']<=2010) ]Apply a log transformation to normalize the column. The original, untransformed column is then removed.

BROOKLYN3 = BROOKLYN3.concat({'log_gross_sqft': BROOKLYN3['gross_sqft'].log(10)})

BROOKLYN3=BROOKLYN3.drop(['gross_sqft'])To improve the model's performance, filter the data to focus on properties with higher probabilities of belonging to specific building classes and categories.

BROOKLYN4 = BROOKLYN3[(BROOKLYN3['building_class_at_sale']=='A1') | (BROOKLYN3['building_class_at_sale']=='A2')

| (BROOKLYN3['building_class_at_sale']=='A4') | (BROOKLYN3['building_class_at_sale']=='A5')

| (BROOKLYN3['building_class_at_sale']=='B1') | (BROOKLYN3['building_class_at_sale']=='B2')

| (BROOKLYN3['building_class_at_sale']=='B3') ]

BROOKLYN5 = BROOKLYN4[((BROOKLYN4['building_class_category']=='02 TWO FAMILY HOMES') | (BROOKLYN4['building_class_category']=='01 ONE FAMILY HOMES'))]Dataframe Dimensions

Run the following script to verify the dataframe dimensions:

BROOKLYN5.shape(67083, 32)Parent topic: Explore Data

3.1.3 Build Model

Build your model using the training data set. Use the

oml.glm function to build your model and specify model

settings.

For a supervised learning, like Regression, before creating the model, split the data in to training and test data. Although you can use the entire data set to build a model, it is difficult to validate the model unless there are new data sets available. Therefore, to evaluate the model and to accurately assess the performance of the model on the same data, you generally split or separate the data into training and test data. You use the training data set to train the model and then use the test data set to test the accuracy of the model by running prediction queries. The testing data set already contains known values for the attribute that you want to predict. It is thus easy to determine whether the model's predictions are correct.

Algorithm Selection

Before you build a model, choose the suitable algorithm. You can choose one of the following algorithms to solve a regression problem:

- Extreme Gradient Boosting

- Generalized Linear Model

- Neural Network

- Support Vector Machine

When you want to understand the data set, you always start from a simple and easy baseline model. The Generalized Linear Model algorithm is the right choice because it is simple and easy to interpret since it fits a linear relationship between the feature and the target. You can get an initial understanding of a new data set from the result of the linear model.

The following steps guide you to split your data and build your model with the selected algorithm.

-

Split Data: Train/Test:

Split the data into training and test data, with a 80/20 ratio respectively. The seed parameter is used for random splitting. The split method splits the data referenced by the DataFrame proxy objectBROOKLYN5into two new DataFrame proxy objects train, and test.TRAIN, TEST = BROOKLYN5.split(ratio = (0.8,0.2), seed=15) TRAIN_X = TRAIN.drop('sale_price') TRAIN_Y = TRAIN['sale_price'] TEST_X = TEST TEST_Y = TEST['sale_price'] -

Model Building:

Specify the model settings and build a Generalized Linear Model (GLM) model object for predicting the

sale_priceattribute, run the following script. The settings are given as key-value or dictionary pairs where it refers to parameters name and value setting respectively.try: oml.drop(model = 'BROOKLYN_GLM_REGRESSION_MODEL') except: print('No such model') setting = {'PREP_AUTO':'ON', 'GLMS_ROW_DIAGNOSTICS':'GLMS_ROW_DIAG_ENABLE', 'GLMS_FTR_SELECTION':'GLMS_FTR_SELECTION_ENABLE', 'GLMS_FTR_GENERATION':'GLMS_FTR_GENERATION_ENABLE'} glm_mod = oml.glm("regression", **setting) glm_mod = glm_mod.fit(TRAIN_X,TRAIN_Y,model_name = 'BROOKLYN_GLM_REGRESSION_MODEL',case_id = 'ID')Model setting parameters:

PREP_AUTO: Used to specify fully automated or user-directed general data preparation. By default, it is enabled with a constant value as 'PREP_AUTO': PREP_AUTO_ON.GLMS_ROW_DIAGNOSTICS: Enables or disables the row diagnostics. By default, row diagnostics are disabled.GLMS_FTR_SELECTION: Enables or disables feature selection for GLM. By default, feature selection is not enabled.GLMS_FTR_GENERATION: Specifies whether or not feature generation is enabled for GLM. By default, feature generation is not enabled.Note:

Feature generation can only be enabled when feature selection is also enabled.

Parent topic: Regression Use case

3.1.4 Evaluate

Before you make predictions using your model on new data, you should first evaluate model accuracy. You can evaluate the model using different methods.

Information about Model settings

Evaluate the model by examining the various statistics generated after building the model. The statistics indicate the model's quality.

- Model details: Run the following script for model details available

through the GLM model object, like the model settings, attribute coefficients,

fit details, etc.

glm_modThey can also be displayed and viewed individually as shown below.

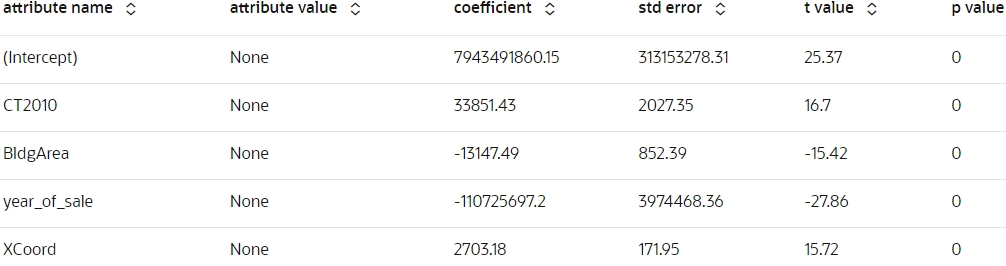

- Attribute Coefficient: Run the following script to display the

model's attribute

coefficient.

z.show(glm_mod.coef.round(2).head())

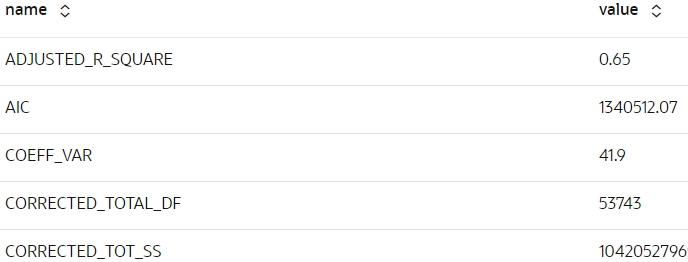

- Fit Details: Run the following script to display the fit details of

the

model.

z.show(glm_mod.fit_details.round(2).head())

Score

Scoring is the process of applying the model on the test data to access its performance.

- Predict sale price: Use the model to make predictions on test

data.

BROOKLYN_RES = glm_mod.predict(TEST.drop('sale_price'), supplemental_cols = TEST[:,['ID','sale_price']]) z.show(BROOKLYN_RES.round(2).head())

- Evaluate Model Performance: Evaluate the model's performance by

using the score

function.

model_coef = len(glm_mod.coef) no_rows_test = TEST_X.shape[0] R_squared = glm_mod.score(TEST_X, TEST_Y).round(3) print( f"RMSE : {(((BROOKLYN_RES['PREDICTION'] - BROOKLYN_RES['sale_price']) ** 2).mean() ** .5).round(2)}\n" f"MAE: {((abs(BROOKLYN_RES['PREDICTION'] - BROOKLYN_RES['sale_price'])).mean()).round(2)}\n" f"R squared: {R_squared}\n" f"Adjusted R^2: {(1 - ( 1 - R_squared)*(no_rows_test-1)/(no_rows_test - model_coef -1)).round(4)}" )The interpreation of the model's performance based on the metrics are as follows:

- RMSE (286,137.56): The model's predictions, on average, deviate by approximately 286,137.56 units from the actual values.

- MAE (170,992.05): The average absolute error in predictions is 170,992.05 units, which provides a sense of the model's accuracy without penalizing large errors.

- R² (0.581): The model explains 58.1% of the variance in the target variable, suggesting a moderate fit to the data.

- Adjusted R² (0.5604): After adjusting for the number of predictors, the model explains about 56.04% of the variance

Overall, the model demonstrates moderate predictive accuracy, with potential for further improvement.

- Residual Graph: The Residual plot is showing heteroscedastic

pattern. This indicates that the errors in the model are inconsistent. The

non-linear relationship between the fitted values (predicted values) and the

residuals, suggests that the model can be improved

further.

import matplotlib.pyplot as plt y_pred = BROOKLYN_RES['PREDICTION'].pull() residuals = (BROOKLYN_RES['sale_price'] - BROOKLYN_RES['PREDICTION']).pull() plt.scatter(y_pred, residuals) plt.xlabel('Predicted Sale Prices') plt.ylabel('Residuals') plt.title('Residual Plot', fontsize=16) plt.grid(True) plt.axhline(y=0, color='r', linestyle='--') plt.show()

SQL Interface to Score Data and Display Prediction Details

You can score data and make predictions using the SQL interface. The test data is materialized into BROOKLYN_GLM_TEST_DATA so that you can query it using SQL. The materialized method writes the contents of an Oracle Machine Learning oml.DataFrame proxy table to an Oracle AI Database table.

- Materialize BROOKLYN_GLM_TEST_DATA for use in query below:

try: oml.drop(table = 'BROOKLYN_GLM_TEST_DATA') except: pass _ = TEST.materialize(table = 'BROOKLYN_GLM_TEST_DATA') -

Display prediction with explanatory prediction details in SQL: The SQL command to score and display the prediction details. The prediction functions apply a glm regressional model named BROOKLYN_GLM_REGRESSION_MODEL to the data from the materialized table BROOKLYN_GLM_TEST_DATA. The query includes information about the predictors that have the greatest influence on the prediction.

SELECT ID, round(PREDICTION_YRS_RES,3) PRED_YRS_RES, round(PRED_LOWER_LIMIT,1) LOWER_BOUND, round(PRED_UPPER_LIMIT,1) UPPER_BOUND, RTRIM(TRIM(SUBSTR(OUTPRED."Attribute1",17,100)),'rank="1"/>') FIRST_ATTRIBUTE, RTRIM(TRIM(SUBSTR(OUTPRED."Attribute2",17,100)),'rank="2"/>') SECOND_ATTRIBUTE, RTRIM(TRIM(SUBSTR(OUTPRED."Attribute3",17,100)),'rank="3"/>') THIRD_ATTRIBUTE FROM (SELECT ID, PREDICTION(BROOKLYN_GLM_REGRESSION_MODEL USING *) PREDICTION_YRS_RES, PREDICTION_BOUNDS(BROOKLYN_GLM_REGRESSION_MODEL USING *).LOWER PRED_LOWER_LIMIT, PREDICTION_BOUNDS(BROOKLYN_GLM_REGRESSION_MODEL USING *).UPPER PRED_UPPER_LIMIT, PREDICTION_DETAILS(BROOKLYN_GLM_REGRESSION_MODEL USING *) PD FROM BROOKLYN_GLM_TEST_DATA WHERE ID < 100015 ORDER BY ID) OUT, XMLTABLE('/Details' PASSING OUT.PD COLUMNS "Attribute1" XMLType PATH 'Attribute[1]', "Attribute2" XMLType PATH 'Attribute[2]', "Attribute3" XMLType PATH 'Attribute[3]') OUTPRED

To conclude, you have successfully predicted the median house prices in Brooklyn using Generalized Linear Model algorithm.

Parent topic: Regression Use case