2 Oracle Machine Learning Basics

Understand the basic concepts of Oracle Machine Learning.

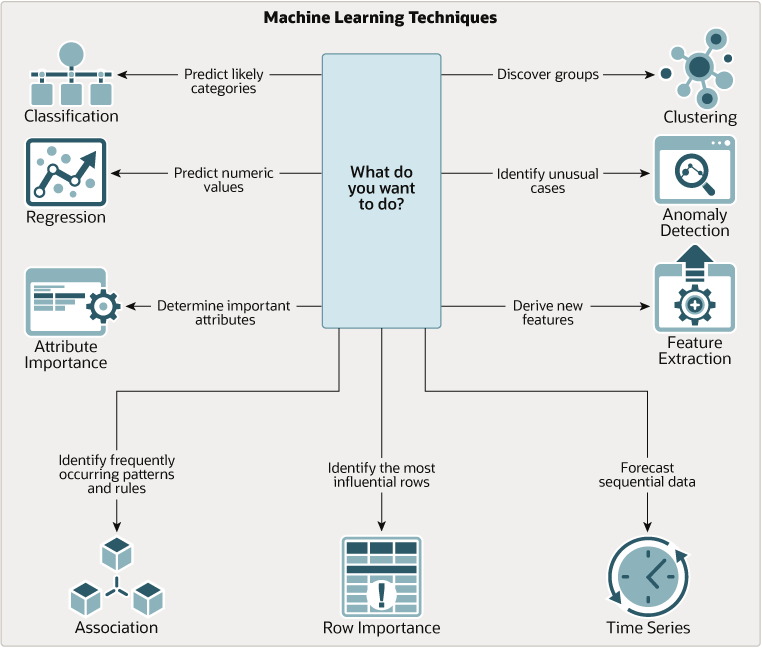

2.1 Machine Learning Techniques

Machine learning techniques define classes of problems for modeling and solving. Understand supervised and unsupervised methods in Oracle Machine Learning.

A basic understanding of machine learning techniques and algorithms is required for using Oracle Machine Learning.

Each machine learning technique specifies a class of problems that can be modeled and solved. Machine learning techniques fall generally into two categories: supervised and unsupervised. Notions of supervised and unsupervised learning are derived from the science of machine learning, which has been called a sub-area of artificial intelligence.

Artificial intelligence refers to the implementation and study of systems that exhibit autonomous intelligence or behavior of their own. Machine learning deals with techniques that enable devices to learn from their own performance and modify their own functioning.

Figure 2-1 How to Use Machine Learning techniques



2.1.1 Supervised Machine Learning

Supervised learning uses known outcomes to guide the model-building process, resulting in predictive models for classification and regression tasks.

Supervised learning is also known as directed learning. The learning process is directed by a previously known dependent attribute, or target. Directed machine learning attempts to explain the behavior of the target as a function of a set of independent attributes, or predictors.

Supervised learning generally results in predictive models. This is in contrast to unsupervised learning where the goal is pattern detection.

2.1.1.1 Supervised Learning: Testing

Testing evaluates how well a model generalizes to new data. This helps to detect overfitting and to confirm that the model predicts outcomes accurately.

Applying the model to test data helps to determine whether the model, built on one chosen sample, generalizes to other data. In other words, the model is used to score the test data. Because the test data contains known target values, the predictions can be compared with the actual outcomes. In particular, testing helps to avoid overfitting, which can occur when the logic of the model fits the build data too well and therefore has little predictive power.
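The role of test data can be illustrated outside the database with a toy example. This Python sketch is not an OML procedure. The data and both models (`fit_memorizer`, `fit_threshold`) are hypothetical. Each model is built on the same build data and then scored against held-out test data whose target values are known.

```python
from collections import Counter

# Hypothetical build and test data: (predictor x, known target y).
build = [(1, 0), (2, 0), (3, 0), (4, 1), (6, 1), (7, 1), (8, 1), (9, 0), (10, 1)]
test = [(1.5, 0), (2.5, 0), (3.5, 0), (6.5, 1), (7.5, 1), (8.5, 1)]


def accuracy(predict, rows):
    """Fraction of rows whose predicted target equals the known target."""
    return sum(predict(x) == y for x, y in rows) / len(rows)


def fit_memorizer(rows):
    """Overfits on purpose: remembers every build case, falls back to the majority class."""
    table = dict(rows)
    majority = Counter(y for _, y in rows).most_common(1)[0][0]
    return lambda x: table.get(x, majority)


def fit_threshold(rows):
    """Picks the single cut point (a midpoint between sorted x values) with the best build accuracy."""
    xs = sorted(x for x, _ in rows)
    candidates = [(a + b) / 2 for a, b in zip(xs, xs[1:])]
    best = max(candidates, key=lambda t: accuracy(lambda x: int(x > t), rows))
    return lambda x: int(x > best)


for name, fit in [("memorizer", fit_memorizer), ("threshold", fit_threshold)]:
    model = fit(build)
    print(f"{name}: build={accuracy(model, build):.2f} test={accuracy(model, test):.2f}")
# memorizer: build=1.00 test=0.50
# threshold: build=0.89 test=1.00
```

The memorizer fits the build data perfectly, including its two noisy cases, yet is right only half the time on the test data. The simpler threshold model gives up some build accuracy and generalizes better.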

2.1.1.2 Supervised Learning: Scoring

Scoring applies the model to new data to make predictions. Classification is used for discrete (categorical) targets and regression for continuous targets.

Apply data, also called scoring data, is the actual population to which a model is applied. For example, you might build a model that identifies the characteristics of customers who frequently buy a certain product. To obtain a list of customers who shop at a certain store and are likely to buy a related product, you might apply the model to the customer data for that store. In this case, the store customer data is the scoring data.

Most supervised learning can be applied to a population of interest. The principal supervised machine learning techniques, classification and regression, can both be used for scoring.

Oracle Machine Learning does not support the scoring operation for attribute importance, another supervised technique. Models of this type are built on a population of interest to obtain information about that population; they cannot be applied to separate data. An attribute importance model returns and ranks the attributes that are most important in predicting a target value.
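Ranking attributes by importance can be sketched as scoring each attribute against the target and sorting the scores. This Python sketch uses mutual information as the importance measure. That choice is an assumption for illustration and is not necessarily the measure OML uses. The customer data is hypothetical. As with an OML attribute importance model, the result describes the build population and is not applied to other data.

```python
import math
from collections import Counter

# Hypothetical customers: categorical attributes plus the known target.
customers = [
    {"education": "Masters", "marital_status": "Married", "region": "North", "affinity_card": 1},
    {"education": "Masters", "marital_status": "Married", "region": "South", "affinity_card": 1},
    {"education": "HS-grad", "marital_status": "Married", "region": "North", "affinity_card": 0},
    {"education": "HS-grad", "marital_status": "Single", "region": "South", "affinity_card": 0},
]


def entropy(values):
    """Shannon entropy, in bits, of a list of discrete values."""
    n = len(values)
    return -sum(c / n * math.log2(c / n) for c in Counter(values).values())


def mutual_information(rows, attr, target):
    """I(attr; target) = H(target) - H(target | attr)."""
    groups = {}
    for row in rows:
        groups.setdefault(row[attr], []).append(row[target])
    conditional = sum(len(ys) / len(rows) * entropy(ys) for ys in groups.values())
    return entropy([row[target] for row in rows]) - conditional


def rank_attributes(rows, target):
    """Return (attribute, score) pairs, most important first."""
    scores = {a: mutual_information(rows, a, target) for a in rows[0] if a != target}
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)


for attr, score in rank_attributes(customers, "affinity_card"):
    print(f"{attr:15} {score:.3f}")
```

Here `education` ranks first because it determines the target completely. `region` ranks last because it carries no information about the target.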

Oracle Machine Learning supports the supervised machine learning techniques described in the following table:

Table 2-1 Oracle Machine Learning Supervised Techniques

| Technique | Description | Sample Problem |
|---|---|---|
| Attribute Importance | Identifies the attributes that are most important in predicting a target attribute | Given customer response to an affinity card program, find the most significant predictors |
| Classification | Assigns items to discrete classes and predicts the class to which an item belongs | Given demographic data about a set of customers, predict customer response to an affinity card program |
| Regression | Approximates and forecasts continuous values | Given demographic and purchasing data about a set of customers, predict customers' age |
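Regression, the last technique in Table 2-1, fits a function that maps predictors to a continuous target. As a minimal sketch, the following Python code fits an ordinary least-squares line with one predictor. The data and the names `years_residence` and `age` are hypothetical. OML's regression algorithms are considerably more elaborate.

```python
def fit_least_squares(xs, ys):
    """Return (slope, intercept) that minimize the squared error of y ~ slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Hypothetical build data: years of residence (predictor) and age (continuous target).
years_residence = [1, 2, 3, 4]
age = [23, 23, 27, 27]
slope, intercept = fit_least_squares(years_residence, age)

# Scoring: apply the fitted line to a new case with 10 years of residence.
print(round(slope * 10 + intercept, 2))  # 37.0
```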

2.1.2 Unsupervised Machine Learning

Unsupervised learning identifies patterns and relationships in data without predefined outcomes, useful for clustering, feature extraction, and anomaly detection.

Unsupervised learning is non-directed. There is no distinction between dependent and independent attributes, and there is no previously known result to guide the algorithm in building the model.

Unsupervised learning can be used for descriptive purposes. It can also be used to make predictions.

2.1.2.1 Unsupervised Learning: Scoring

Scoring in unsupervised learning applies models to data for clustering and feature extraction, revealing hidden patterns and structures.

Although unsupervised machine learning does not specify a target, most unsupervised learning can be applied to a population of interest. For example, clustering models use descriptive machine learning techniques, but they can be applied to classify cases according to their cluster assignments. Anomaly Detection, although unsupervised, is typically used to predict whether a data point is typical among a set of cases.
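To illustrate how an unsupervised model can still score cases, the following Python sketch learns a profile of what is typical from a population without any target. It then flags new cases that fall far outside that profile. The rule is a simple z-score test with a hypothetical cutoff of 3 standard deviations. It is a stand-in and not one of OML's anomaly detection algorithms. The data is also hypothetical.

```python
import statistics


def fit_profile(values):
    """Learn typical behavior (mean and population standard deviation) without any target."""
    return statistics.mean(values), statistics.pstdev(values)


def is_typical(value, profile, max_z=3.0):
    """Score a new case: typical if it lies within max_z standard deviations of the mean."""
    mean, sd = profile
    return abs(value - mean) / sd <= max_z


# Hypothetical daily transaction counts for one account.
history = [10, 11, 9, 10, 12, 8, 10, 11, 9, 10]
profile = fit_profile(history)
for new_value in [11, 13, 25]:
    print(new_value, "typical" if is_typical(new_value, profile) else "anomalous")
```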

Oracle Machine Learning supports the scoring operation for Clustering and Feature Extraction, both unsupervised machine learning techniques. Oracle Machine Learning does not support the scoring operation for Association Rules, another unsupervised function. Association models are built on a population of interest to obtain information about that population; they cannot be applied to separate data. An association model returns rules that explain how items or events are associated with each other. The association rules are returned with statistics that can be used to rank them according to their probability.
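The Apriori description in Table 2-4 uses two rule statistics, support and confidence:

- Support is the fraction of transactions that contain all items in the rule.
- Confidence is the fraction of transactions containing the rule's antecedent that also contain its consequent.

The following Python sketch computes both statistics for single-item rules over hypothetical market-basket data. It keeps only the rules above the minimum thresholds and ranks them. It enumerates item pairs by brute force rather than using Apriori's level-wise candidate pruning.

```python
from itertools import combinations

# Hypothetical market-basket transactions.
baskets = [
    {"bread", "milk"},
    {"bread", "butter"},
    {"bread", "milk", "butter"},
    {"milk"},
    {"bread", "milk"},
]


def pair_rules(transactions, min_support, min_confidence):
    """Return (lhs, rhs, support, confidence) for rules lhs -> rhs, best first."""
    n = len(transactions)

    def support(items):
        return sum(items <= t for t in transactions) / n  # subset test per transaction

    rules = []
    for a, b in combinations(sorted(set().union(*transactions)), 2):
        joint = support({a, b})
        if joint < min_support:
            continue
        for lhs, rhs in ((a, b), (b, a)):
            confidence = joint / support({lhs})
            if confidence >= min_confidence:
                rules.append((lhs, rhs, joint, confidence))
    # Rank by confidence, then support, then names for a deterministic order.
    rules.sort(key=lambda r: (-r[3], -r[2], r[0], r[1]))
    return rules


for lhs, rhs, sup, conf in pair_rules(baskets, min_support=0.4, min_confidence=0.7):
    print(f"{lhs} -> {rhs}: support={sup:.2f} confidence={conf:.2f}")
```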

OML supports the unsupervised techniques described in the following table:

Table 2-2 Oracle Machine Learning Unsupervised Techniques


2.2 What is a Machine Learning Algorithm

An algorithm is a mathematical procedure for solving a specific kind of problem. For some machine learning techniques, you can choose among several algorithms.

Each algorithm produces a specific type of model, with different characteristics. Some machine learning problems can best be solved by using more than one algorithm in combination. For example, you might first use a feature extraction model to create an optimized set of predictors, then a classification model to make a prediction on the results.
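The two-stage approach can be sketched in Python:

1. The feature extraction step computes the first principal component of two correlated predictors, using power iteration on their covariance matrix.
2. The classification step assigns each case to the class whose mean value on that single feature is nearest.

Both algorithms, the helper names, and the data are assumptions chosen for illustration. They are not OML's implementations.

```python
import math


def first_component(rows, iterations=50):
    """Feature extraction: column means and the unit direction of greatest variance."""
    n, d = len(rows), len(rows[0])
    means = [sum(col) / n for col in zip(*rows)]
    centered = [[v - m for v, m in zip(row, means)] for row in rows]
    cov = [[sum(r[i] * r[j] for r in centered) / (n - 1) for j in range(d)] for i in range(d)]
    vec = [1.0] * d
    for _ in range(iterations):  # power iteration converges to the top eigenvector
        nxt = [sum(cov[i][j] * vec[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(v * v for v in nxt))
        vec = [v / norm for v in nxt]
    return means, vec


def project(row, means, vec):
    """Map a case onto the single extracted feature."""
    return sum((v - m) * w for v, m, w in zip(row, means, vec))


def fit_nearest_mean(features, labels):
    """Classification: predict the class whose mean feature value is closest."""
    class_means = {}
    for lab in set(labels):
        vals = [f for f, l in zip(features, labels) if l == lab]
        class_means[lab] = sum(vals) / len(vals)
    return lambda f: min(class_means, key=lambda lab: abs(f - class_means[lab]))


# Hypothetical build data: two correlated predictors and a binary target.
predictors = [(-2, -4.1), (-1, -1.9), (0, 0.2), (1, 2.1), (2, 3.7)]
labels = [0, 0, 0, 1, 1]

means, vec = first_component(predictors)
classify = fit_nearest_mean([project(p, means, vec) for p in predictors], labels)
print([classify(project(p, means, vec)) for p in [(1.5, 3.0), (-1.5, -3.0)]])  # [1, 0]
```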

2.2.1 Oracle Machine Learning Supervised Algorithms

Oracle Machine Learning for SQL (OML4SQL) supports the supervised machine learning algorithms described in the following table.

Table 2-3 Oracle Machine Learning Algorithms for Supervised techniques

2.2.2 Oracle Machine Learning Unsupervised Algorithms

Oracle Machine Learning for SQL (OML4SQL) supports the unsupervised machine learning algorithms described in the following table.

Table 2-4 Oracle Machine Learning Algorithms for Unsupervised Techniques

| Algorithm | Technique | Description |
|---|---|---|
| Apriori | Association Rules | Apriori performs market basket analysis by identifying co-occurring items (frequent itemsets) within a set. Apriori finds rules with support greater than a specified minimum support and confidence greater than a specified minimum confidence. |
| CUR Matrix Decomposition | Attribute Importance | CUR Matrix Decomposition is an alternative to Singular Value Decomposition (SVD) and Principal Component Analysis (PCA) and an important tool for exploratory data analysis. This algorithm performs analytical processing and singles out important columns and rows. |
| Expectation Maximization | Clustering, Anomaly Detection | Expectation Maximization (EM) is a density estimation algorithm that performs probabilistic clustering. In density estimation, the goal is to construct a density function that captures how a given population is distributed. The density estimate is based on observed data that represents a sample of the population. Oracle Machine Learning supports probabilistic clustering, data frequency estimates, and other applications of Expectation Maximization. The EM Anomaly algorithm can detect the underlying data distribution and thereby identify records that do not fit the learned data distribution well. |
| Explicit Semantic Analysis | Feature Extraction | Explicit Semantic Analysis (ESA) uses an existing knowledge base as features. An attribute vector represents each feature, or concept. ESA creates a reverse index that maps every attribute to the knowledge base concepts or the concept-attribute association vector value. |
| k-Means | Clustering | k-Means is a distance-based clustering algorithm that partitions the data into a predetermined number of clusters. Each cluster has a centroid (center of gravity). Cases (individuals within the population) that are in a cluster are close to the centroid. OML4SQL supports an enhanced version of k-Means. It goes beyond the classical implementation by defining a hierarchical parent-child relationship of clusters. |
| Multivariate State Estimation Technique - Sequential Probability Ratio Test | Anomaly Detection | The Multivariate State Estimation Technique - Sequential Probability Ratio Test (MSET-SPRT) algorithm is a nonlinear, nonparametric anomaly detection machine learning technique designed for monitoring critical processes. It detects subtle anomalies while also producing minimal false alarms. |
| Non-Negative Matrix Factorization | Feature Extraction | Non-Negative Matrix Factorization (NMF) generates new attributes using linear combinations of the original attributes. The coefficients of the linear combinations are non-negative. During model apply, an NMF model maps the original data into the new set of attributes (features) discovered by the model. |
| One-Class SVM | Anomaly Detection | One-class SVM builds a profile of one class. When the model is applied, it identifies cases that are somehow different from that profile. This allows for the detection of rare cases that are not necessarily related to each other. |
| Orthogonal Partitioning Clustering | Clustering | Orthogonal Partitioning Clustering (O-Cluster) creates a hierarchical, grid-based clustering model. The algorithm creates clusters that define dense areas in the attribute space. A sensitivity parameter defines the baseline density level. |
| Singular Value Decomposition and Principal Component Analysis | Feature Extraction | Singular Value Decomposition (SVD) and Principal Component Analysis (PCA) are orthogonal linear transformations that are optimal at capturing the underlying variance of the data. This property is extremely useful for reducing the dimensionality of high-dimensional data and for supporting meaningful data visualization. In addition to dimensionality reduction, SVD and PCA have a number of other important applications, such as data de-noising (smoothing), data compression, matrix inversion, and solving a system of linear equations. |
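The classical k-Means loop (Lloyd's algorithm) alternates between two steps. First it assigns each case to its nearest centroid. Then it moves each centroid to the mean of its assigned cases. The following Python sketch implements only that classical version, not the enhanced hierarchical k-Means that OML4SQL supports. It uses a naive initialization (the first k cases) and hypothetical data. Scoring a new case is the same nearest-centroid assignment.

```python
import math


def nearest(point, centroids):
    """Index of the centroid closest to point (Euclidean distance)."""
    return min(range(len(centroids)), key=lambda i: math.dist(point, centroids[i]))


def kmeans(points, k, max_iter=100):
    centroids = [list(p) for p in points[:k]]  # naive initialization: the first k cases
    for _ in range(max_iter):
        clusters = [[] for _ in range(k)]
        for p in points:
            clusters[nearest(p, centroids)].append(p)
        # Move each centroid to the mean of its members; keep it in place if the cluster is empty.
        updated = [
            [sum(coord) / len(members) for coord in zip(*members)] if members else centroids[i]
            for i, members in enumerate(clusters)
        ]
        if updated == centroids:  # converged: centroids no longer move
            break
        centroids = updated
    return centroids


# Hypothetical two-attribute cases forming two groups.
points = [(1, 1), (1.5, 2), (1, 1.5), (8, 8), (9, 8.5), (8.5, 9)]
centroids = kmeans(points, k=2)
print(centroids)
print(nearest((2, 2), centroids), nearest((9, 9), centroids))  # 0 1
```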


2.3 Data Preparation

Data preparation involves cleaning, transforming, and organizing data for building effective machine learning models. Quality data is essential for accurate model predictions.

The quality of a model depends to a large extent on the quality of the data used to build (train) it. Much of the time spent in any given machine learning project is devoted to data preparation. The data must be carefully inspected, cleansed, and transformed, and algorithm-appropriate data preparation methods must be applied.

The process of data preparation is further complicated by the fact that any data to which a model is applied, whether for testing or for scoring, must undergo the same transformations as the data used to train the model.
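This requirement can be illustrated with min-max normalization, used here only as a hypothetical example of a transformation. The parameters are learned once from the build data and then reused unchanged for scoring data. Refitting them on the scoring data would silently put the values on a different scale from the one the model was trained on.

```python
def fit_min_max(values):
    """Learn normalization parameters from build data only."""
    return min(values), max(values)


def apply_min_max(value, params):
    """Apply the stored build-time parameters to any case, old or new."""
    lo, hi = params
    return (value - lo) / (hi - lo)


build_income = [20_000, 50_000, 80_000, 120_000]  # hypothetical build data
params = fit_min_max(build_income)

scoring_income = [60_000, 140_000]  # hypothetical scoring data
consistent = [apply_min_max(v, params) for v in scoring_income]
refit = [apply_min_max(v, fit_min_max(scoring_income)) for v in scoring_income]
print(consistent)  # [0.4, 1.2]
print(refit)       # [0.0, 1.0]: same incomes, different scale
```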

2.3.1 Simplify Data Preparation with Oracle Machine Learning for SQL

Oracle Machine Learning for SQL (OML4SQL) provides embedded data preparation, automatic data preparation, custom data preparation through the DBMS_DATA_MINING_TRANSFORM PL/SQL package, and model details. It also employs a consistent approach across machine learning algorithms to manage missing and sparse data.

OML4SQL offers several features that significantly simplify the process of data preparation:

- Embedded data preparation: The transformations used in training the model are embedded in the model and automatically run whenever the model is applied to new data. If you specify transformations for the model, you only have to specify them once.
- Automatic Data Preparation (ADP): Oracle Machine Learning for SQL supports an automated data preparation mode. When ADP is active, Oracle Machine Learning for SQL automatically performs the data transformations required by the algorithm. The transformation instructions are embedded in the model along with any user-specified transformation instructions.
- Automatic management of missing values and sparse data: Oracle Machine Learning for SQL uses a consistent methodology across machine learning algorithms to handle sparsity and missing values.
- Transparency: Oracle Machine Learning for SQL provides model details, which are a view of the attributes that are internal to the model. This insight into the inner details of the model is possible because of reverse transformations, which map the transformed attribute values to a form that can be interpreted by a user. Where possible, attribute values are reversed to the original column values. Reverse transformations are also applied to the target of a supervised model, so the results of scoring are in the same units as the original target.
- Tools for custom data preparation: Oracle Machine Learning for SQL provides many common transformation routines in the DBMS_DATA_MINING_TRANSFORM PL/SQL package. You can use these routines, develop your own routines in SQL, or both. The SQL language is well suited for implementing transformations in the database. You can use custom transformation instructions along with ADP or instead of ADP.
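Embedded preparation and reverse transformation can be sketched with a hypothetical Python class that stores its own preparation parameters. Missing predictor values are replaced with the build-data mean. The target is standardized during training and reverse-transformed on output, so predictions come back in the original target units. The class, the mean-replacement rule, and the standardization are assumptions for illustration. They do not describe how OML4SQL implements ADP.

```python
import statistics


class PreparedModel:
    """Hypothetical one-predictor linear model that carries its own data preparation."""

    def fit(self, xs, ys):
        # Missing-value replacement learned at build time and reused at apply time.
        self.fill = statistics.mean([x for x in xs if x is not None])
        xs = [self._prepare(x) for x in xs]
        # Target transformation: standardize y before fitting.
        self.y_mean, self.y_sd = statistics.mean(ys), statistics.pstdev(ys)
        zs = [(y - self.y_mean) / self.y_sd for y in ys]
        x_mean, z_mean = statistics.mean(xs), statistics.mean(zs)
        self.slope = (sum((x - x_mean) * (z - z_mean) for x, z in zip(xs, zs))
                      / sum((x - x_mean) ** 2 for x in xs))
        self.intercept = z_mean - self.slope * x_mean
        return self

    def _prepare(self, x):
        """Apply the embedded missing-value replacement."""
        return self.fill if x is None else x

    def predict(self, x):
        z = self.slope * self._prepare(x) + self.intercept
        return z * self.y_sd + self.y_mean  # reverse transformation to original target units


model = PreparedModel().fit([1, 2, None, 3], [10, 20, 20, 30])
print(round(model.predict(4), 6), round(model.predict(None), 6))  # 40.0 20.0
```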

2.3.2 Case Data

Case data organizes information in single-record rows for each case, essential for most machine learning algorithms in Oracle Machine Learning for SQL.

Most machine learning algorithms act on single-record case data, where the information for each case is stored in a separate row. The data attributes for the cases are stored in the columns.

When the data is organized in transactions, the data for one case (one transaction) is stored in many rows. An example of transactional data is market basket data. With the single exception of Association Rules, which can operate on native transactional data, Oracle Machine Learning for SQL algorithms require single-record case organization.

2.3.2.1 Nested Data

Nested data supports attributes in nested columns, enabling effective mining of complex data structures and multiple sources.

OML4SQL supports attributes in nested columns. A transactional table can be cast as a nested column and included in a table of single-record case data. Similarly, star schemas can be cast as nested columns. With nested data transformations, Oracle Machine Learning for SQL can effectively mine data originating from multiple sources and configurations.
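In Python terms, casting a transactional table as a nested column amounts to two steps. First, group the transactional rows by case identifier. Then attach the resulting collection to the single-record row for that case. The following sketch uses hypothetical data. A list of (item, quantity) pairs stands in for the nested column, whereas the database uses SQL collection types rather than Python lists.

```python
from collections import defaultdict

# Hypothetical transactional rows: one row per (case, item) pair.
purchases = [
    (101, "bread", 2),
    (101, "milk", 1),
    (102, "butter", 1),
    (101, "butter", 1),
    (103, "milk", 3),
]
# Hypothetical single-record case data: one row per customer.
customers = {101: {"age": 35}, 102: {"age": 52}, 103: {"age": 28}}


def with_nested_items(cases, transactions):
    """Return one record per case, with that case's transactions in a nested 'items' column."""
    nested = defaultdict(list)
    for case_id, item, quantity in transactions:
        nested[case_id].append((item, quantity))
    return [
        {"case_id": cid, **attrs, "items": sorted(nested.get(cid, []))}
        for cid, attrs in sorted(cases.items())
    ]


for record in with_nested_items(customers, purchases):
    print(record)
```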

2.3.3 Text Data

Text data involves transforming unstructured text into numeric values for analysis, utilizing Oracle Text utilities and configurable transformations.

Oracle Machine Learning for SQL interprets CLOB columns and long VARCHAR2 columns automatically as unstructured text. Additionally, you can specify columns of short VARCHAR2, CHAR, BLOB, and BFILE as unstructured text. Unstructured text includes data items such as web pages, document libraries, PowerPoint presentations, product specifications, emails, comment fields in reports, and call center notes.

OML4SQL uses Oracle Text utilities and term weighting strategies to transform unstructured text for analysis. In text transformation, text terms are extracted and given numeric values in a text index. The text transformation process is configurable for the model and for individual attributes. Once transformed, the text can be mined with an OML4SQL algorithm.
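Term weighting can be illustrated with TF-IDF, one common weighting strategy. This sketch does not reproduce Oracle Text's actual weighting. Each term's weight grows with its frequency in a document and shrinks as the term appears in more documents. The whitespace tokenizer and the sample comments are hypothetical.

```python
import math
from collections import Counter


def tf_idf(docs):
    """Map each document to {term: weight}, weight = term frequency * log(N / document frequency)."""
    tokenized = [doc.lower().split() for doc in docs]  # naive whitespace tokenizer
    n = len(tokenized)
    doc_freq = Counter(term for tokens in tokenized for term in set(tokens))
    return [
        {term: count / len(tokens) * math.log(n / doc_freq[term])
         for term, count in Counter(tokens).items()}
        for tokens in tokenized
    ]


comments = ["late delivery again", "great product", "delivery was late"]
for weights in tf_idf(comments):
    print({t: round(w, 3) for t, w in weights.items()})
```

In the first comment, "again" outweighs "late" and "delivery" because those two terms also occur in another comment.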


2.4 In-Database Scoring

In-database scoring applies machine learning models to new data within the database, ensuring security, efficiency, and ease of integration with applications.

Scoring is the application of a machine learning model to new data. In traditional machine learning, models are built using specialized software on a remote system and deployed to another system for scoring. This is a cumbersome, error-prone process open to security violations and difficulties in data synchronization.

With OML4SQL, scoring is simple and secure. The scoring engine and the data both reside within the database. Scoring is an extension to the SQL language, so the results of machine learning can easily be incorporated into applications and reporting systems.

2.4.1 Parallel Execution and Ease of Administration

Parallel execution and in-database scoring provide performance advantages and simplify model deployment, ensuring efficient handling of large data sets.

In-database scoring provides performance advantages. All Oracle Machine Learning for SQL scoring routines support parallel execution, which significantly reduces the time required for executing complex queries and scoring large data sets.

In-database machine learning minimizes the IT effort needed to support OML4SQL initiatives. Using standard database techniques, models can easily be refreshed (re-created) on more recent data and redeployed. The deployment is immediate since the scoring query remains the same; only the underlying model is replaced in the database.
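Scoring is applied to each case independently, which is what makes it straightforward to parallelize. The rows can be split into partitions, scored concurrently, and the results concatenated in order. The Python sketch below shows only that partitioning idea, using a thread pool and a hypothetical rule-based `score` function. The database's own parallel execution is a separate and far more involved mechanism.

```python
from concurrent.futures import ThreadPoolExecutor


def score(row):
    """Hypothetical scoring function standing in for applying a model to one case."""
    income, age = row
    return 1 if income > 50_000 and age < 40 else 0


def score_partition(rows):
    return [score(r) for r in rows]


def parallel_score(rows, workers=4):
    size = max(1, -(-len(rows) // workers))  # ceiling division: rows per partition
    partitions = [rows[i:i + size] for i in range(0, len(rows), size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(score_partition, partitions))  # map keeps partition order
    return [s for part in results for s in part]


cases = [(60_000, 30), (40_000, 25), (70_000, 45), (80_000, 35)]  # hypothetical (income, age)
print(parallel_score(cases, workers=3))  # [1, 0, 0, 1]
```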


2.4.2 SQL Functions for Model Apply and Dynamic Scoring

In Oracle Machine Learning for SQL, scoring is performed by SQL language functions. Understand the different ways of scoring using SQL functions.

The functions perform prediction, clustering, and feature extraction. They can be used in two different ways. You can apply a machine learning model object (Example 2-1), or you can run an analytic clause that computes the machine learning analysis dynamically and applies it to the data (Example 2-2). Dynamic scoring, which eliminates the need for a model, can supplement, or even replace, the more traditional methodology described in "The Machine Learning Process".

In Example 2-1, the PREDICTION_PROBABILITY function applies the model svmc_sh_clas_sample, created in Example 1-1, to score the data in mining_data_apply_v. The query returns the ten customers in Italy who are most likely to use an affinity card.

In Example 2-2, the functions PREDICTION and PREDICTION_PROBABILITY use the analytic syntax (the OVER () clause) to dynamically score the data in mining_data_build_v. The query returns the customers who currently do not have an affinity card but are predicted to use one, together with the probability of that prediction.

Example 2-1 Applying an Oracle Machine Learning for SQL Model to Score Data

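```sql
-- Rank customers in Italy by the probability that svmc_sh_clas_sample
-- predicts target value 1 (uses an affinity card); keep the top ten.
SELECT cust_id FROM
  (SELECT cust_id,
          rank() over (order by PREDICTION_PROBABILITY(svmc_sh_clas_sample, 1
                       USING *) DESC, cust_id) rnk
     FROM mining_data_apply_v
    WHERE country_name = 'Italy')
WHERE rnk <= 10
ORDER BY rnk;
```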

The output is as follows:

```
CUST_ID
----------
101445
100179
100662
100733
100554
100081
100344
100324
100185
101345
```

Example 2-2 Executing an Analytic Function to Score Data

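```sql
-- Score dynamically (OVER ()) with affinity_card as the target, then keep
-- customers without a card whom the analysis predicts would use one.
SELECT cust_id, pred_prob FROM
  (SELECT cust_id, affinity_card,
          PREDICTION(FOR TO_CHAR(affinity_card) USING *) OVER () pred_card,
          PREDICTION_PROBABILITY(FOR TO_CHAR(affinity_card), 1 USING *) OVER () pred_prob
     FROM mining_data_build_v)
WHERE affinity_card = 0
  AND pred_card = 1
ORDER BY pred_prob DESC;
```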

The output is similar to:

```
   CUST_ID PRED_PROB
---------- ---------
    102434       .96
    102365       .96
    102330       .96
    101733       .95
    102615       .94
    102686       .94
    102749       .93
.
.
.
    101656       .51
```