1 Introduction

This chapter provides an introduction to the core concepts of the Oracle Data Masking and Subsetting Pack.

Note:

For Oracle Data Masking and Subsetting licensing information, refer to the Oracle Database Licensing Guide.

What to Know

About DMS

Oracle Data Masking and Subsetting unlocks the value of data without increasing risk and minimizes storage costs by enabling secure and cost-effective data provisioning for various scenarios, including test and development environments. It protects sensitive data by discovering and masking it, ensuring that only relevant data is shared. This approach speeds up regulatory compliance and maximizes data value by safely sharing realistic, functionally intact data with stakeholders. It also reduces costs by extracting and sharing only the necessary data, thereby lowering time, storage, and infrastructure expenses.


Major Components of Oracle Data Masking and Subsetting

Oracle Data Masking and Subsetting consists of the following major components:

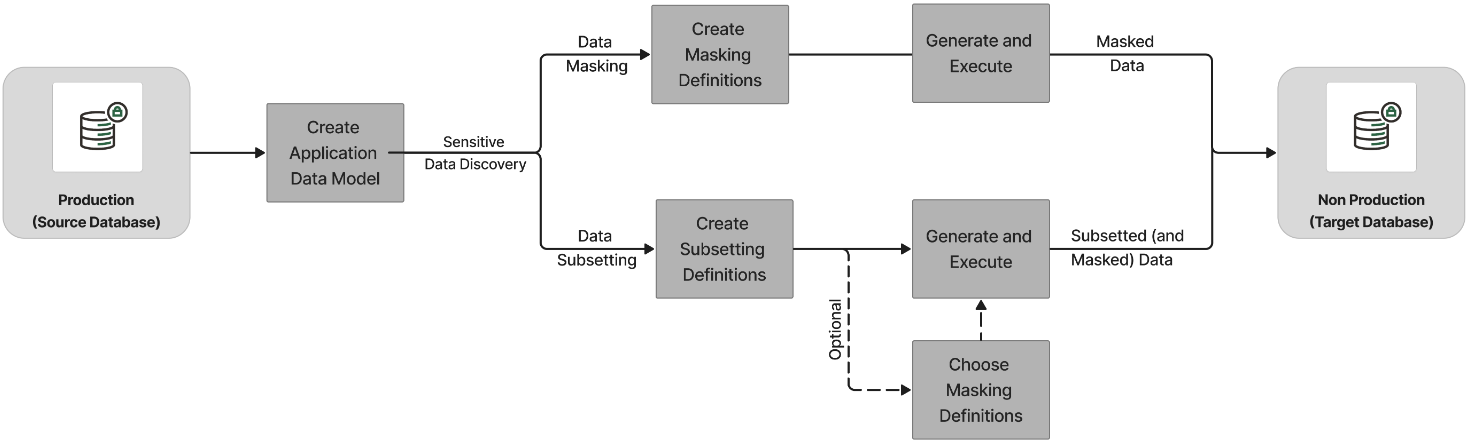

Figure 1-1 Oracle Data Masking and Subsetting Overview


Note:

It is recommended that you understand the concepts of Oracle Data Masking and Subsetting described in this chapter before implementation. If you are already familiar with these concepts or want to start masking and subsetting data, refer to the following chapters:

- Start with the Prerequisites chapter to understand the privileges, roles, and storage requirements.

- Refer to the Data Discovery chapter to discover and model sensitive columns.

- Refer to the Data Masking chapter to define and execute masking operations.

- Refer to the Data Subsetting chapter to define and execute subsetting operations.


Why DMS?


Challenges

- How to Locate Sensitive Data in the Short and Long Term? Finding sensitive data across a sprawling landscape of numerous applications, databases, and environments can be a daunting task. Organizations must implement robust discovery tools and methodologies to ensure all sensitive data is accurately identified both initially and on an ongoing basis.

- How to Accurately Protect Sensitive Data? Sensitive data comes in various shapes and forms, such as Credit Card Number, Ethnicity, Phone Number, Date of Birth, and more. Protecting such diverse data types requires sophisticated masking techniques tailored to each specific data type to ensure comprehensive data security.

- Is the Protected Data Usable? For developers, testers, applications, and more, it's crucial that protected data remains usable. Ensuring that data masking and subsetting solutions preserve the functionality and realism of the data is key to maintaining productivity and the integrity of testing and development processes.

- Will the Applications Continue to Work? Developing and maintaining data protection solutions in an ever-changing IT landscape, encompassing both on-premises and cloud environments, requires continuous adaptation. Ensuring that applications continue to work seamlessly despite these changes is vital for ongoing operations and development.

- Limit Sensitive Data Proliferation: Sensitive data often proliferates across various environments such as Test, Dev, QA, Training, Research, Cloud, and more. Implementing strict controls and monitoring to limit the spread of sensitive data is essential for reducing exposure and maintaining data security.

- Save Storage Costs: Managing data storage efficiently in non-production environments, such as test/dev and large data warehouses, is crucial for controlling costs. By extracting and sharing only relevant data, organizations can significantly reduce their storage expenses while maintaining necessary access to data.

- Share What is Necessary: When sharing data with subscribers, auditors, courts, partners, testers, developers, and more, it is essential to ensure that only necessary information is provided and sensitive information is masked. This minimizes the risk of unnecessary data exposure while complying with regulatory and operational requirements.

- Right to be Forgotten/Erasure (GDPR in Europe): Compliance with regulations such as GDPR in Europe mandates the right to be forgotten, requiring organizations to effectively erase personal data upon request. Implementing efficient data management processes to handle such requests is critical for regulatory compliance and maintaining customer trust.


Use Cases

- Data Sharing: When outsourcing or collaborating with third parties, sharing full datasets can pose a risk. The Data Masking and Subsetting solution helps by removing sensitive or non-essential data so that only relevant, non-sensitive data is shared, protecting sensitive information such as intellectual property, financial data, and personal data.

- Secure Application Testing: Developers need real data for effective testing. Data Masking and Subsetting allows them to use real-world data by obfuscating sensitive information like personal identifiers and financial details. This helps in robust testing without compromising data privacy.

- Analytics Without Compromise: By masking sensitive information, businesses can share data securely for analytics without exposing personal data. This enables effective data-driven insights while maintaining privacy and compliance.

- Regulatory Compliance: With regulations like GDPR and CCPA, organizations are required to handle sensitive data securely. Oracle’s solution helps anonymize and protect sensitive information in non-production environments, aiding compliance efforts and minimizing the risk of penalties.


Benefits

- Sensitive Data Protection: Ensure the confidentiality of sensitive information, such as Personally Identifiable Information (PII) or financial data, by masking it before it's used in non-production environments, thereby safeguarding against unauthorized access.

- Compliance Boundary Management: Ensure enterprise-wide compliance with regulations and laws for managing sensitive data by allowing access only to non-sensitive data in testing and development environments, thereby reducing the risk of compliance violations.

- Cost-Effective Test and Development Environments: Reduce storage costs in test and development environments by subsetting data. By only including relevant subsets of data necessary for testing and development purposes, storage requirements are minimized without sacrificing the quality of testing.

- Automated Sensitive Data Discovery: Automatically identify sensitive data and its relationships within databases. This feature streamlines compliance efforts by providing a clear understanding of where sensitive data resides and how it's connected within the database.

- Comprehensive Masking and Subsetting Capabilities: Access a wide range of masking formats, subsetting techniques, and application templates. This empowers users to tailor data masking and subsetting strategies to their specific requirements, ensuring comprehensive data protection and optimization.

- Flexible Deployment Options: Mask and subset data either directly in the database or from exported files. This flexibility accommodates diverse data environments and preferences, allowing users to choose the method that best suits their infrastructure and workflows.


Architecture

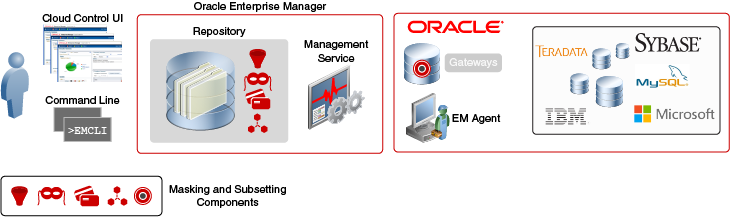

Oracle Data Masking and Subsetting is part of the Oracle Enterprise Manager infrastructure. Organizations using Oracle Enterprise Manager do not need to download and install the Oracle Data Masking and Subsetting Pack separately. Oracle Enterprise Manager provides unified browser-based user interface for administration. All Data Masking and Subsetting objects are centrally located in the Oracle Enterprise Manager repository, which facilitates centralized creation and administration of Data Discovery, Masking Definitions and Data Subsetting Definitions. In addition to its intuitive cloud control Graphical User Interface (GUI), Oracle Enterprise Manager also provides Command Line Interface (EMCLI) to automate select Data Masking and Subsetting tasks.

Figure 1-2 Oracle Data Masking and Subsetting Architecture

Description of "Figure 1-2 Oracle Data Masking and Subsetting Architecture"

For more details on Oracle Enterprise Manager Architecture, please refer to the Oracle Enterprise Manager Introduction Guide.


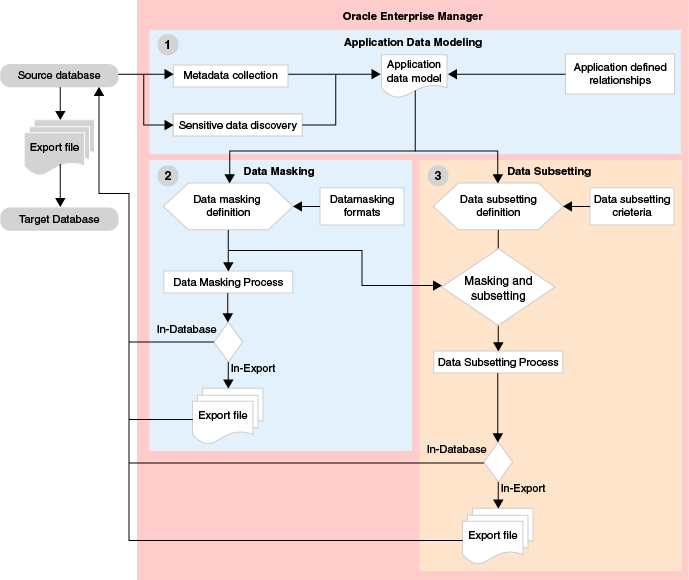

Methodology

The following diagram explains the Oracle Data Masking and Subsetting workflow.

Figure 1-3 Oracle Data Masking and Subsetting Workflow

Description of "Figure 1-3 Oracle Data Masking and Subsetting Workflow"

The following steps describe the Oracle Data Masking and Subsetting Workflow:

- Create an Application Data Model: To begin using Oracle Data Masking and Subsetting, you must create an Application Data Model (ADM). ADMs capture application metadata and referential relationships, and allow discovery of sensitive data from the source database.

- Create a Data Masking Definition: After an ADM is created, the next step is to create a data masking definition. A masking definition includes information regarding the columns to be masked and the format to use for masking each of these columns.

- Create a Data Subsetting Definition: Create a data subsetting definition to define the conditions and parameters to subset data.


Deployment Options

Oracle Data Masking and Subsetting provides the following modes for masking and subsetting data:

- In-Database mode directly masks and subsets data within a non-production database with minimal or zero impact on production environments. Because In-Database mode permanently changes the data in a database, this deployment mode is recommended for non-production environments such as staging, test, or development databases rather than production databases.

- In-Export mode masks and subsets the data in near real time while extracting the data from a database. The masked and subsetted data is written to Data Pump export files, which can then be imported into test, development, or QA databases. In general, In-Export mode is used for production databases. The In-Export method of masking and subsetting is a unique offering from Oracle that sanitizes sensitive information within the product perimeter.

- Heterogeneous mode masks and subsets data in non-Oracle databases. Target production data is first copied from the non-Oracle environment into Oracle Database using an Oracle Database Gateway, then masked and subsetted within the Oracle Database, and finally copied back to the non-Oracle environment. This approach is very similar to the steps used in various ETL (Extract, Transform, and Load) tools, except that the Oracle Database is the intermediary that transforms the data. Oracle Database Gateways enable Oracle Data Masking and Subsetting to operate on data from Oracle MySQL, Microsoft SQL Server, Sybase SQL Server, IBM DB2 (UDB, 400, z/OS), IBM Informix, and Teradata.

Note:

For information on licensing, refer to the Oracle Database Licensing Guide.


Data Discovery

The Data Discovery component of Data Masking and Subsetting in Enterprise Manager helps you scan and manage sensitive data in your target database. This component supports the creation of Application Data Models (ADMs) and Sensitive Types to facilitate data management.

A sensitive type identifies the type of sensitive data, such as a national insurance number, using a combination of data patterns in column names, column data, and column comments. Although Oracle provides predefined sensitive types to help identify sensitive data, users can also create custom sensitive types according to their requirements. Automated discovery procedures use sensitive types to pattern-match the column name, column comment, and sample data in database table columns while scanning for sensitive information.
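As a rough illustration of how a sensitive type might combine name, comment, and data patterns, the following Python sketch scans column metadata and sampled values. The `SensitiveType` class, the regular expressions, the sample columns, and the rule that all sampled values must match are assumptions made for this example. They are not part of the product, and the discovery logic in Enterprise Manager may work differently.

```python
import re
from dataclasses import dataclass


@dataclass
class SensitiveType:
    """Hypothetical stand-in for a sensitive type: three regex patterns."""
    name: str
    column_name_pattern: str
    column_comment_pattern: str
    column_data_pattern: str

    def matches(self, column_name, column_comment, sample_values):
        """Return True if the name, the comment, or every sampled value matches."""
        if re.search(self.column_name_pattern, column_name, re.IGNORECASE):
            return True
        if column_comment and re.search(
            self.column_comment_pattern, column_comment, re.IGNORECASE
        ):
            return True
        # Assumption: data counts as a match only when all sampled values fit.
        return bool(sample_values) and all(
            re.fullmatch(self.column_data_pattern, value) for value in sample_values
        )


# Illustrative patterns only; a simplified shape for a national insurance number.
NATIONAL_INSURANCE = SensitiveType(
    name="NATIONAL_INSURANCE_NUMBER",
    column_name_pattern=r"NI_?(NO|NUM)|NATIONAL_INS",
    column_comment_pattern=r"national insurance",
    column_data_pattern=r"[A-Z]{2}\d{6}[A-D]",
)

columns = [
    # (column name, column comment, sampled values)
    ("EMP_ID", "Employee identifier", ["1001", "1002"]),
    ("NI_NUMBER", None, ["AB123456C"]),
    ("TAX_REF", "UK national insurance reference", ["n/a"]),
    ("CODE", None, ["QQ123456A", "ZZ654321D"]),
]

flagged = [
    name
    for name, comment, samples in columns
    if NATIONAL_INSURANCE.matches(name, comment, samples)
]
print(flagged)  # ['NI_NUMBER', 'TAX_REF', 'CODE']
```

In this sketch, `NI_NUMBER` is flagged by its name, `TAX_REF` by its comment, and `CODE` only by its sampled data, which shows why combining the three pattern sources can catch columns that a name-only scan would miss.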

Creating an ADM is an important step in discovering and managing sensitive data. The ADM keeps a record of applications, tables, and the relationships between table columns that are declared in the data dictionary, imported from application metadata, or user-specified.

Oracle Data Masking Application Templates deliver pre-identified sensitive types, their relationships, and industry-standard best-practice masking techniques out of the box for packaged applications such as Oracle E-Business Suite and Oracle Fusion Applications. Use the Self Update feature to get the latest masking and subsetting templates available from Oracle.


Data Masking

Data Masking protects sensitive data by replacing it with realistic, yet fictitious data. This ensures that data remains usable while safeguarding sensitive information.

Masking formats are the building blocks of a data masking definition. They define how each sensitive column is masked according to different business requirements.

Oracle provides a library containing ready-to-use masking formats for masking data, or users can create custom masking formats as required. Masking Definitions associate table columns with the appropriate masking formats to be used for masking the data.
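The relationship between masking formats and a masking definition can be pictured with a small Python sketch. Everything here is hypothetical: the three format functions, the `CUSTOMERS` table, and the `mask_row` helper are invented for illustration and do not correspond to the formats in Oracle's library or to how Enterprise Manager executes a masking job.

```python
import random


# Hypothetical masking formats: each maps an original value to a fictitious one.
def random_digits(value, rng):
    """Replace every digit with a random digit, keeping length and punctuation."""
    return "".join(str(rng.randrange(10)) if ch.isdigit() else ch for ch in value)


def fixed_string(replacement):
    """Build a format that replaces any value with a constant."""
    def apply(value, rng):
        return replacement
    return apply


def random_choice(choices):
    """Build a format that substitutes a random entry from a list."""
    def apply(value, rng):
        return rng.choice(choices)
    return apply


# Hypothetical masking definition: (table, column) -> masking format.
masking_definition = {
    ("CUSTOMERS", "PHONE"): random_digits,
    ("CUSTOMERS", "LAST_NAME"): random_choice(["Smith", "Lee", "Garcia"]),
    ("CUSTOMERS", "EMAIL"): fixed_string("masked@example.com"),
}


def mask_row(table, row, definition, rng):
    """Return a copy of row with every column named in the definition masked."""
    masked = dict(row)
    for column, value in row.items():
        fmt = definition.get((table, column))
        if fmt is not None and value is not None:
            masked[column] = fmt(value, rng)
    return masked


rng = random.Random(42)  # seeded only to make the sketch repeatable
row = {"ID": 7, "LAST_NAME": "Okafor", "PHONE": "555-0142", "EMAIL": "o@corp.test"}
masked = mask_row("CUSTOMERS", row, masking_definition, rng)
print(masked)
```

Columns not named in the definition, such as `ID`, pass through unchanged, and the phone number keeps its original length and punctuation, so the masked row stays realistic enough for testing.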


Data Subsetting

Data Subsetting involves creating smaller, manageable sets of data from larger enterprise-class applications while retaining the data’s integrity and usability. Oracle Data Masking and Subsetting simplifies this process through goal-based and condition-based subsetting techniques.

Subsetting Definitions allow users to set rules for how data is extracted.

For example, goals can be defined by relative table size, such as extracting a 1% subset from a table with 1 million rows. Conditions can be defined based on specific criteria, such as time (for example, excluding user records created before a certain year) or region (for example, extracting only Asia-Pacific data for development purposes). These conditions are specified using a SQL WHERE clause.
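The two techniques can be sketched in Python as follows. This is a minimal illustration under stated assumptions: it uses an in-memory SQLite database as a stand-in rather than an Oracle Database, and the `users` table, its columns, and the helper functions `goal_row_count` and `condition_subset` are hypothetical names chosen for the example.

```python
import sqlite3

# Stand-in database for illustration only (SQLite, not Oracle).
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE users (id INTEGER PRIMARY KEY, region TEXT, created_year INTEGER)"
)
regions = ["APAC", "EMEA", "AMER"]
conn.executemany(
    "INSERT INTO users (id, region, created_year) VALUES (?, ?, ?)",
    [(i, regions[i % 3], 2015 + i % 10) for i in range(1, 1001)],
)


def goal_row_count(total_rows, percent):
    """Goal-based: translate a relative size goal into a target row count."""
    return total_rows * percent // 100


def condition_subset(conn, where_clause, params=()):
    """Condition-based: keep only rows that satisfy a SQL WHERE clause."""
    # The clause is assumed to come from a trusted definition;
    # the values themselves are bound as parameters.
    query = f"SELECT id, region, created_year FROM users WHERE {where_clause}"
    return conn.execute(query, params).fetchall()


# A 1% goal on a table with 1 million rows targets 10,000 rows.
print(goal_row_count(1_000_000, 1))  # 10000

# Keep only Asia-Pacific users created in 2020 or later.
apac_recent = condition_subset(
    conn, "region = ? AND created_year >= ?", ("APAC", 2020)
)
print(len(apac_recent))
```

The goal-based helper only computes the target size. Choosing which rows to keep while preserving referential relationships between tables is the harder part, and this sketch does not attempt it.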
