3 Using the In-Memory Graph Server (PGX)

The in-memory Graph server of Oracle Graph supports a set of analytical functions.

This chapter provides examples using the in-memory Graph Server (also referred to as Property Graph In-Memory Analytics, and often abbreviated as PGX in the Javadoc, command line, path descriptions, error messages, and examples). It contains the following major topics.

- Overview of the In-Memory Graph Server (PGX)

The In-Memory Graph Server (PGX) is an in-memory graph server for fast, parallel graph query and analytics. The server uses light-weight in-memory data structures to enable fast execution of graph algorithms.

- User Authentication and Authorization

The Oracle Graph server (PGX) uses an Oracle Database as identity manager.

- Keeping the Graph in Oracle Database Synchronized with the Graph Server

You can use the FlashbackSynchronizer API to automatically apply changes made to a graph in the database to the corresponding PgxGraph object in memory, thus keeping both synchronized.

- Configuring the In-Memory Analyst

You can configure the in-memory analyst engine and its run-time behavior by assigning a single JSON file to the in-memory analyst at startup.

- Storing a Graph Snapshot on Disk

After reading a graph into memory using either Java or the Shell, if you make some changes to the graph such as running the PageRank algorithm and storing the values as vertex properties, you can store this snapshot of the graph on disk.

- Executing Built-in Algorithms

The in-memory graph server (PGX) contains a set of built-in algorithms that are available as Java APIs.

- Using Custom PGX Graph Algorithms

A custom PGX graph algorithm allows you to write a graph algorithm in Java and have it automatically compiled to an efficient parallel implementation.

- Creating Subgraphs

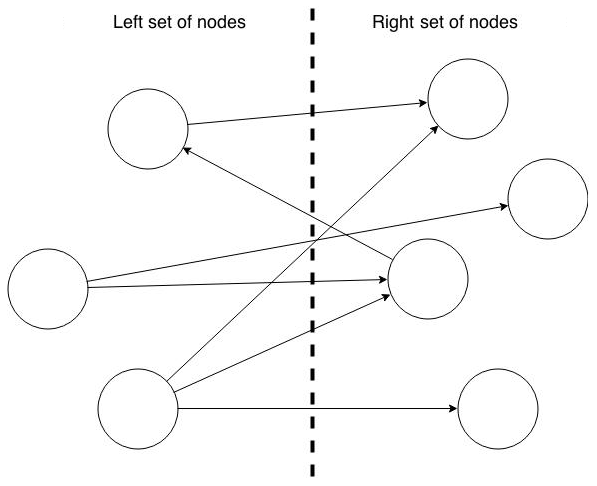

You can create subgraphs based on a graph that has been loaded into memory. You can use filter expressions or create bipartite subgraphs based on a vertex (node) collection that specifies the left set of the bipartite graph.

- Using Automatic Delta Refresh to Handle Database Changes

You can automatically refresh (auto-refresh) graphs periodically to keep the in-memory graph synchronized with changes to the property graph stored in the property graph tables in Oracle Database (VT$ and GE$ tables).

- Starting the In-Memory Analyst Server

A preconfigured version of Apache Tomcat is bundled, which allows you to start the in-memory analyst server by running a script.

- Deploying to Apache Tomcat

The example in this topic shows how to deploy the graph server as a web application with Apache Tomcat.

- Deploying to Oracle WebLogic Server

The example in this topic shows how to deploy the graph server as a web application with Oracle WebLogic Server.

- Connecting to the In-Memory Analyst Server

After the property graph in-memory analyst is installed in a computer running Oracle Database -- or on a client system without Oracle Database server software as a web application on Apache Tomcat or Oracle WebLogic Server -- you can connect to the in-memory analyst server.

- Managing Property Graph Snapshots

You can manage property graph snapshots.

- User-Defined Functions (UDFs) in PGX

User-defined functions (UDFs) allow users of PGX to add custom logic to their PGQL queries or custom graph algorithms, to complement built-in functions with custom requirements.

3.1 Overview of the In-Memory Graph Server (PGX)

The In-Memory Graph Server (PGX) is an in-memory graph server for fast, parallel graph query and analytics. The server uses light-weight in-memory data structures to enable fast execution of graph algorithms.


There are multiple options to load a graph into the graph server either from Oracle Database or from files.

The graph server can be deployed standalone (it includes an embedded Apache Tomcat instance), or deployed in Oracle WebLogic Server or Apache Tomcat.

3.1.1 Connecting to the In-Memory Graph Server (PGX)

Multiple graph clients can connect to the in-memory graph server at the same time. The graph server processes client requests asynchronously: requests are first queued and then processed when resources become available. A client can poll the server to check whether a request has finished. Internally, the server maintains its own engine (thread pools) for running parallel graph algorithms. The engine tries to process each analytics request concurrently with as many threads as possible.
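The queue-then-poll interaction described above can be illustrated with plain JDK classes. The following is only a sketch of the pattern, not the PGX client API: the class name PollingSketch, the method submitAndPoll, and the summation task are hypothetical stand-ins, and a fixed thread pool plays the role of the server-side engine.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;

/** Toy model of "queue the request, then poll for completion". Not the PGX API. */
final class PollingSketch {

    /** Submits a hypothetical analytics task and polls until it has finished. */
    static long submitAndPoll(ExecutorService engine, int n) throws Exception {
        // The request is queued; submit() returns a handle immediately.
        Future<Long> handle = engine.submit(() -> {
            long sum = 0;
            for (int i = 1; i <= n; i++) {
                sum += i; // stand-in for a real graph algorithm
            }
            return sum;
        });
        // The client polls the handle instead of blocking on the request.
        while (!handle.isDone()) {
            TimeUnit.MILLISECONDS.sleep(10);
        }
        return handle.get();
    }

    public static void main(String[] args) throws Exception {
        // A fixed pool stands in for the server's internal thread pools.
        ExecutorService engine = Executors.newFixedThreadPool(2);
        try {
            System.out.println(submitAndPoll(engine, 100));
        } finally {
            engine.shutdown();
        }
    }
}
```

Polling keeps the calling thread free to do other work between checks, which is the main reason to prefer it over a blocking call when requests may wait in a queue.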

Note:

- If multiple clients load the same graph instance, the graph server can share one graph instance between those clients under the hood.

- Each client can add additional vertex or edge properties to a loaded graph in its own session. Such properties are transient properties; they are private to each session and not visible to other sessions. If a client creates a mutated version of a graph, the graph server creates a private graph instance for that client.


3.2 User Authentication and Authorization

The Oracle Graph server (PGX) uses an Oracle Database as identity manager.

This means that you log into the graph server using existing Oracle Database credentials (user name and password), and the actions which you are allowed to do on the graph server are determined by the roles that have been granted to you in the Oracle database.

Basic Steps for Using an Oracle Database for Authentication

- Use an Oracle Database version that is supported by Oracle Graph Server and Client: version 12.2 or later, including Autonomous Database.

- Be sure that you have ADMIN access (or SYSDBA access for non-autonomous databases) to grant and revoke users access to the graph server (PGX).

- Be sure that all existing users to which you plan to grant access to the graph server have at least the CREATE SESSION privilege granted.

- Be sure that the database is accessible via JDBC from the host where the Graph Server runs.

- As ADMIN (or SYSDBA on non-autonomous databases), run the following procedure to create the roles required by the graph server:

Note:

If you install the PL/SQL packages of the Oracle Graph Server and Client distribution on the target Oracle Database, this step is not necessary. All the roles shown in the following code are created as part of the PL/SQL installation automatically. You cannot install the PL/SQL packages on Autonomous Database, so if you use the graph server with Autonomous Database, it is recommended to execute the following statements using SQL Developer Web.

DECLARE
  PRAGMA AUTONOMOUS_TRANSACTION;
  role_exists EXCEPTION;
  PRAGMA EXCEPTION_INIT(role_exists, -01921);
  TYPE graph_roles_table IS TABLE OF VARCHAR2(50);
  graph_roles graph_roles_table;
BEGIN
  graph_roles := graph_roles_table(
    'GRAPH_DEVELOPER',
    'GRAPH_ADMINISTRATOR',
    'PGX_SESSION_CREATE',
    'PGX_SERVER_GET_INFO',
    'PGX_SERVER_MANAGE',
    'PGX_SESSION_READ_MODEL',
    'PGX_SESSION_MODIFY_MODEL',
    'PGX_SESSION_NEW_GRAPH',
    'PGX_SESSION_GET_PUBLISHED_GRAPH',
    'PGX_SESSION_COMPILE_ALGORITHM',
    'PGX_SESSION_ADD_PUBLISHED_GRAPH');
  FOR elem IN 1 .. graph_roles.count LOOP
    BEGIN
      dbms_output.put_line('create_graph_roles: ' || elem || ': CREATE ROLE ' || graph_roles(elem));
      EXECUTE IMMEDIATE 'CREATE ROLE ' || graph_roles(elem);
    EXCEPTION
      WHEN role_exists THEN
        dbms_output.put_line('create_graph_roles: role already exists. continue');
      WHEN OTHERS THEN
        RAISE;
    END;
  END LOOP;
EXCEPTION
  when others then
    dbms_output.put_line('create_graph_roles: hit error ');
    raise;
END;
/

- Assign default permissions to the roles GRAPH_DEVELOPER and GRAPH_ADMINISTRATOR to group multiple permissions together.

Note:

If you install the PL/SQL packages of the Oracle Graph Server and Client distribution on the target Oracle Database, this step is not necessary. All the grants shown in the following code are executed as part of the PL/SQL installation automatically. You cannot install the PL/SQL packages on Autonomous Database, so if you use the graph server with Autonomous Database, it is recommended to execute the following statements using SQL Developer Web.

GRANT PGX_SESSION_CREATE TO GRAPH_ADMINISTRATOR;
GRANT PGX_SERVER_GET_INFO TO GRAPH_ADMINISTRATOR;
GRANT PGX_SERVER_MANAGE TO GRAPH_ADMINISTRATOR;
GRANT PGX_SESSION_CREATE TO GRAPH_DEVELOPER;
GRANT PGX_SESSION_NEW_GRAPH TO GRAPH_DEVELOPER;
GRANT PGX_SESSION_GET_PUBLISHED_GRAPH TO GRAPH_DEVELOPER;
GRANT PGX_SESSION_MODIFY_MODEL TO GRAPH_DEVELOPER;
GRANT PGX_SESSION_READ_MODEL TO GRAPH_DEVELOPER;

- Assign roles to all the database developers who should have access to the graph server (PGX). For example:

GRANT graph_developer TO <graphuser>

where <graphuser> is a user in the database. You can also assign individual permissions (roles prefixed with PGX_) to users directly.

- Assign the administrator role to users who should have administrative access. For example:

GRANT graph_administrator to <administratoruser>

where <administratoruser> is a user in the database.

- Prepare the Graph Server for Database Authentication

Locate the pgx.conf file of your installation.

- Connect to the Server from JShell with Database Authentication

You can use the JShell client to connect to the server in remote mode, using database authentication.

- Read Data from the Database

Once logged in, you can now read data from the database into the graph server without specifying any connection information in the graph configuration.

- Store the Database Password in a Keystore

- Token Expiration

By default, tokens are valid for 1 hour.

- Advanced Access Configuration

You can customize the following fields in pgx.conf realm options to customize login behavior.

- Revoking Access to the Graph Server

To revoke a user's ability to access the graph server, either drop the user from the database or revoke the corresponding roles from the user, depending on how you defined the access rules in your pgx.conf file.

- Examples of Custom Authorization Rules

You can define custom authorization rules for developers.


3.2.1 Prepare the Graph Server for Database Authentication

Locate the pgx.conf file of your installation.

If you installed the graph server via RPM, the file is located at: /etc/oracle/graph/pgx.conf

If you use the webapps package to deploy into Tomcat or WebLogic Server, the pgx.conf file is located inside the web application archive file (WAR file) at: WEB-INF/classes/pgx.conf

Tip: On Linux, you can use vim to edit the file directly inside the WAR file without unzipping it first. For example: vim pgx-webapp-21.1.0.war

Inside the pgx.conf file, locate the jdbc_url line of the realm options:

...

"pgx_realm": {

"implementation": "oracle.pg.identity.DatabaseRealm",

"options": {

"jdbc_url": "<REPLACE-WITH-DATABASE-URL-TO-USE-FOR-AUTHENTICATION>",

"token_expiration_seconds": 3600,

...

Replace the text with the JDBC URL pointing to your database that you configured in the previous step. For example:

...

"pgx_realm": {

"implementation": "oracle.pg.identity.DatabaseRealm",

"options": {

"jdbc_url": "jdbc:oracle:thin:@myhost:1521/myservice",

"token_expiration_seconds": 3600,

...

If you are using an Autonomous Database, specify the JDBC URL like this:

...

"pgx_realm": {

"implementation": "oracle.pg.identity.DatabaseRealm",

"options": {

"jdbc_url": "jdbc:oracle:thin:@my_identifier_low?TNS_ADMIN=/etc/oracle/graph/wallet",

"token_expiration_seconds": 3600,

...

where /etc/oracle/graph/wallet is an example path to the unzipped wallet file that you downloaded from your Autonomous Database service console, and my_identifier_low is one of the connect identifiers specified in /etc/oracle/graph/wallet/tnsnames.ora.
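The two URL shapes shown above, and a check that the placeholder has actually been replaced, can be sketched as follows. This is an illustrative helper only: the class JdbcUrlSketch and its methods are hypothetical names and are not part of the graph server or of the Oracle JDBC driver.

```java
/** Hypothetical helper that assembles the two jdbc_url forms shown in the text. */
final class JdbcUrlSketch {

    /** Thin-driver URL of the form host:port/service. */
    static String thinUrl(String host, int port, String service) {
        return "jdbc:oracle:thin:@" + host + ":" + port + "/" + service;
    }

    /** Autonomous Database form: connect identifier plus the unzipped wallet directory. */
    static String walletUrl(String connectIdentifier, String walletDir) {
        return "jdbc:oracle:thin:@" + connectIdentifier + "?TNS_ADMIN=" + walletDir;
    }

    /** True if the value still looks like the shipped <REPLACE-...> placeholder. */
    static boolean isPlaceholder(String jdbcUrl) {
        return jdbcUrl.startsWith("<REPLACE");
    }

    public static void main(String[] args) {
        System.out.println(thinUrl("myhost", 1521, "myservice"));
        System.out.println(walletUrl("my_identifier_low", "/etc/oracle/graph/wallet"));
    }
}
```

A check such as isPlaceholder could be run before starting the server to catch an unedited pgx.conf early.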

Now, start the graph server. If you installed via RPM, execute the following command as a root user or with sudo:

sudo systemctl start pgx

3.2.2 Connect to the Server from JShell with Database Authentication

You can use the JShell client to connect to the server in remote mode, using database authentication.

./bin/opg-jshell --base_url https://localhost:7007 --username <database_user>

You will be prompted for the database password.

import oracle.pg.rdbms.*

import oracle.pgx.api.*

...

ServerInstance instance = GraphServer.getInstance("https://localhost:7007", "<database user>", "<database password>");

PgxSession session = instance.createSession("my-session");

...

Internally, users are authenticated with the graph server using JSON Web Tokens (JWT). See Token Expiration for more details about token expiration.


3.2.3 Read Data from the Database

Once logged in, you can now read data from the database into the graph server without specifying any connection information in the graph configuration.

Your database user must exist and have read access on the graph data in the database.

For example, the following graph configuration will read a property graph named hr into memory, authenticating as <database user>/<database password> with the database:

GraphConfig config = GraphConfigBuilder.forPropertyGraphRdbms()

.setName("hr")

.addVertexProperty("FIRST_NAME", PropertyType.STRING)

.addVertexProperty("LAST_NAME", PropertyType.STRING)

.addVertexProperty("EMAIL", PropertyType.STRING)

.addVertexProperty("CITY", PropertyType.STRING)

.setPartitionWhileLoading(PartitionWhileLoading.BY_LABEL)

.setLoadVertexLabels(true)

.setLoadEdgeLabel(true)

.build();

PgxGraph hr = session.readGraphWithProperties(config);

The following example is a graph configuration in JSON format that reads from relational tables into the graph server, without any connection information being provided in the configuration file itself:

{

"name":"hr",

"vertex_id_strategy":"no_ids",

"vertex_providers":[

{

"name":"Employees",

"format":"rdbms",

"database_table_name":"EMPLOYEES",

"key_column":"EMPLOYEE_ID",

"key_type":"string",

"props":[

{

"name":"FIRST_NAME",

"type":"string"

},

{

"name":"LAST_NAME",

"type":"string"

}

]

},

{

"name":"Departments",

"format":"rdbms",

"database_table_name":"DEPARTMENTS",

"key_column":"DEPARTMENT_ID",

"key_type":"string",

"props":[

{

"name":"DEPARTMENT_NAME",

"type":"string"

}

]

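},

{

"name":"Jobs",

"format":"rdbms",

"database_table_name":"JOBS",

"key_column":"JOB_ID",

"key_type":"string",

"props":[

{

"name":"JOB_TITLE",

"type":"string"

}

]

}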

],

"edge_providers":[

{

"name":"WorksFor",

"format":"rdbms",

"database_table_name":"EMPLOYEES",

"key_column":"EMPLOYEE_ID",

"source_column":"EMPLOYEE_ID",

"destination_column":"EMPLOYEE_ID",

"source_vertex_provider":"Employees",

"destination_vertex_provider":"Employees"

},

{

"name":"WorksAs",

"format":"rdbms",

"database_table_name":"EMPLOYEES",

"key_column":"EMPLOYEE_ID",

"source_column":"EMPLOYEE_ID",

"destination_column":"JOB_ID",

"source_vertex_provider":"Employees",

"destination_vertex_provider":"Jobs"

}

]

}

For more information about how to read data from the database into the graph server, see Store the Database Password in a Keystore.


3.2.4 Store the Database Password in a Keystore

PGX requires a database account to read data from the database into memory. The account should be a low-privilege account (see Security Best Practices with Graph Data).

As described in Read Data from the Database, you can read data from the database into the graph server without specifying additional authentication as long as the token is valid for that database user. But if you want to access a graph from a different user, you can do so, as long as that user's password is stored in a Java Keystore file for protection.

You can use the keytool command that is bundled together with the JDK to generate such a keystore file on the command line. See the following script as an example:

# Add a password for the 'database1' connection

keytool -importpass -alias database1 -keystore keystore.p12

# 1. Enter the password for the keystore

# 2. Enter the password for the database

# Add another password (for the 'database2' connection)

keytool -importpass -alias database2 -keystore keystore.p12

# List what's in the keystore using the keytool

keytool -list -keystore keystore.p12

If you are using Java version 8 or lower, you should pass the additional parameter -storetype pkcs12 to the keytool commands in the preceding example.

You can store more than one password into a single keystore file. Each password can be referenced using the alias name provided.
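For readers who prefer to see the keystore mechanics in code, the following sketch stores a password under an alias in a PKCS12 keystore and reads it back, using only JDK classes. It is meant to approximate what keytool -importpass produces; how PGX itself reads the entry is not described here, so treat the equivalence as an assumption. The class and method names are hypothetical, and the sketch assumes an ASCII password.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.nio.charset.StandardCharsets;
import java.security.KeyStore;
import javax.crypto.SecretKey;
import javax.crypto.SecretKeyFactory;
import javax.crypto.spec.PBEKeySpec;

/** Sketch: one password per alias in a PKCS12 keystore, held in memory. */
final class KeystoreSketch {

    /** Creates a PKCS12 keystore containing the given secret under the alias. */
    static byte[] createWithPassword(String alias, char[] secret, char[] storePassword)
            throws Exception {
        KeyStore ks = KeyStore.getInstance("PKCS12");
        ks.load(null, storePassword); // a null stream starts an empty keystore
        // Wrap the password as a PBE secret key so it can be stored as an entry.
        SecretKey key = SecretKeyFactory.getInstance("PBE")
                .generateSecret(new PBEKeySpec(secret));
        ks.setEntry(alias, new KeyStore.SecretKeyEntry(key),
                new KeyStore.PasswordProtection(storePassword));
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        ks.store(out, storePassword);
        return out.toByteArray();
    }

    /** Reads the secret stored under the alias back out of the keystore bytes. */
    static char[] readPassword(byte[] keystoreBytes, String alias, char[] storePassword)
            throws Exception {
        KeyStore ks = KeyStore.getInstance("PKCS12");
        ks.load(new ByteArrayInputStream(keystoreBytes), storePassword);
        KeyStore.SecretKeyEntry entry = (KeyStore.SecretKeyEntry)
                ks.getEntry(alias, new KeyStore.PasswordProtection(storePassword));
        // For a PBE key, the encoded form is the (ASCII) password bytes.
        byte[] raw = entry.getSecretKey().getEncoded();
        return new String(raw, StandardCharsets.UTF_8).toCharArray();
    }
}
```

In a real application the keystore bytes would be written to a file such as keystore.p12, and the store password would be obtained interactively rather than hard-coded.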

- Either, Write the PGX graph configuration file to load from the property graph schema

- Or, Write the PGX graph configuration file to load a graph directly from relational tables

- Read the data

- Secure coding tips for graph client applications

Either, Write the PGX graph configuration file to load from the property graph schema

Next, write a PGX graph configuration file in JSON format. The file tells PGX where to load the data from, what the data looks like, and which keystore alias to use. The following example shows a graph configuration to read data stored in the Oracle property graph format.

{

"format": "pg",

"db_engine": "rdbms",

"name": "hr",

"jdbc_url": "jdbc:oracle:thin:@myhost:1521/orcl",

"username": "hr",

"keystore_alias": "database1",

"vertex_props": [{

"name": "COUNTRY_NAME",

"type": "string"

}, {

"name": "DEPARTMENT_NAME",

"type": "string"

}, {

"name": "SALARY",

"type": "double"

}],

"partition_while_loading": "by_label",

"loading": {

"load_vertex_labels": true,

"load_edge_label": true

}

}

(For the full list of available configuration fields, including their meanings and default values, see https://docs.oracle.com/cd/E56133_01/latest/reference/loader/database/pg-format.html.)

Or, Write the PGX graph configuration file to load a graph directly from relational tables

The following example loads a subset of the HR sample data from relational tables directly into PGX as a graph. The configuration file specifies a mapping from relational to graph format by using the concept of vertex and edge providers.

Note:

Specifying the vertex_providers and edge_providers properties loads the data into an optimized representation of the graph.

{

"name":"hr",

"jdbc_url":"jdbc:oracle:thin:@myhost:1521/orcl",

"username":"hr",

"keystore_alias":"database1",

"vertex_id_strategy": "no_ids",

"vertex_providers":[

{

"name":"Employees",

"format":"rdbms",

"database_table_name":"EMPLOYEES",

"key_column":"EMPLOYEE_ID",

"key_type": "string",

"props":[

{

"name":"FIRST_NAME",

"type":"string"

},

{

"name":"LAST_NAME",

"type":"string"

},

{

"name":"EMAIL",

"type":"string"

},

{

"name":"SALARY",

"type":"long"

}

]

},

{

"name":"Jobs",

"format":"rdbms",

"database_table_name":"JOBS",

"key_column":"JOB_ID",

"key_type": "string",

"props":[

{

"name":"JOB_TITLE",

"type":"string"

}

]

},

{

"name":"Departments",

"format":"rdbms",

"database_table_name":"DEPARTMENTS",

"key_column":"DEPARTMENT_ID",

"key_type": "string",

"props":[

{

"name":"DEPARTMENT_NAME",

"type":"string"

}

]

}

],

"edge_providers":[

{

"name":"WorksFor",

"format":"rdbms",

"database_table_name":"EMPLOYEES",

"key_column":"EMPLOYEE_ID",

"source_column":"EMPLOYEE_ID",

"destination_column":"EMPLOYEE_ID",

"source_vertex_provider":"Employees",

"destination_vertex_provider":"Employees"

},

{

"name":"WorksAs",

"format":"rdbms",

"database_table_name":"EMPLOYEES",

"key_column":"EMPLOYEE_ID",

"source_column":"EMPLOYEE_ID",

"destination_column":"JOB_ID",

"source_vertex_provider":"Employees",

"destination_vertex_provider":"Jobs"

},

{

"name":"WorkedAt",

"format":"rdbms",

"database_table_name":"JOB_HISTORY",

"key_column":"EMPLOYEE_ID",

"source_column":"EMPLOYEE_ID",

"destination_column":"DEPARTMENT_ID",

"source_vertex_provider":"Employees",

"destination_vertex_provider":"Departments",

"props":[

{

"name":"START_DATE",

"type":"local_date"

},

{

"name":"END_DATE",

"type":"local_date"

}

]

}

]

}

Note about vertex and edge IDs:

PGX enforces by default the existence of a unique identifier for each vertex and edge in a graph, so that they can be retrieved by using PgxGraph.getVertex(ID id) and PgxGraph.getEdge(ID id) or by PGQL queries using the built-in id() method.

The default strategy to generate the vertex IDs is to use the keys provided during loading of the graph. In that case, each vertex should have a vertex key that is unique across all the types of vertices. For edges, by default no keys are required in the edge data, and edge IDs will be automatically generated by PGX. Note that the generation of edge IDs is not guaranteed to be deterministic. If required, it is also possible to load edge keys as IDs.

However, because it may be cumbersome to define such identifiers for partitioned graphs, you can disable that requirement for the vertices, the edges, or both by setting the vertex_id_strategy and edge_id_strategy graph configuration fields to the value no_ids, as shown in the preceding example. When vertex (or edge) IDs are disabled, PGX forbids calls to APIs that use vertex (or edge) IDs, including the ones indicated previously.

If you want to call those APIs but do not have globally unique IDs in your relational tables, you can specify the unstable_generated_ids generation strategy, which generates new IDs for you. As the name suggests, there is no correlation to the original IDs in your input tables and there is no guarantee that those IDs are stable. Same as with the edge IDs, it is possible that loading the same input tables twice yields two different generated IDs for the same vertex.
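Whether the default key-based strategy is usable depends on the vertex keys being unique across all vertex types. The following sketch shows one way to check that before loading, under the assumption that the keys of each vertex provider have already been fetched into lists (for example, by querying each table). The class VertexKeyCheck and its method are hypothetical and not part of PGX.

```java
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

/** Hypothetical pre-load check for globally unique vertex keys. */
final class VertexKeyCheck {

    /** Returns every key that occurs more than once across all providers. */
    static Set<String> duplicateKeys(Map<String, List<String>> keysByProvider) {
        Set<String> seen = new HashSet<>();
        Set<String> duplicates = new TreeSet<>(); // sorted for stable reporting
        for (List<String> keys : keysByProvider.values()) {
            for (String key : keys) {
                if (!seen.add(key)) {
                    duplicates.add(key); // already seen in this or another provider
                }
            }
        }
        return duplicates;
    }

    public static void main(String[] args) {
        Map<String, List<String>> keys = Map.of(
                "Employees", List.of("100", "101"),
                "Departments", List.of("10", "100"));
        System.out.println(duplicateKeys(keys));
    }
}
```

If the returned set is not empty, the keys are not globally unique, and one of the no_ids or unstable_generated_ids strategies described above is the likely choice.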

Read the data

Now you can instruct PGX to connect to the database and read the data by passing in both the keystore and the configuration file to PGX, using one of the following approaches:

- Interactively in the graph shell

If you are using the graph shell, start it with the --secret_store option. It will prompt you for the keystore password and then attach the keystore to your current session. For example:

cd /opt/oracle/graph
./bin/opg-jshell --secret_store /etc/my-secrets/keystore.p12
enter password for keystore /etc/my-secrets/keystore.p12:

Inside the shell, you can then use normal PGX APIs to read the graph into memory by passing the JSON file you just wrote into the readGraphWithProperties API:

opg-jshell-rdbms> var graph = session.readGraphWithProperties("config.json")
graph ==> PgxGraph[name=hr,N=215,E=415,created=1576882388130]

- As a PGX preloaded graph

As a server administrator, you can instruct PGX to load graphs into memory upon server startup. To do so, modify the PGX configuration file at /etc/oracle/graph/pgx.conf and add the path to the graph configuration file to the preload_graphs section. For example:

{
  ...
  "preload_graphs": [{
    "name": "hr",
    "path": "/path/to/config.json"
  }],
  ...
}

As root user, edit the service file at /etc/systemd/system/pgx.service and change the ExecStart command to specify the location of the keystore containing the password:

ExecStart=/bin/bash start-server --secret-store /etc/keystore.p12

Note:

/etc/keystore.p12 must not be password protected for this to work. Instead, protect the file with file system permissions so that it is readable only by the oraclegraph user.

After the file is edited, reload the changes using:

sudo systemctl daemon-reload

Finally, start the server:

sudo systemctl start pgx

- In a Java application

To register a keystore in a Java application, use the registerKeystore() API on the PgxSession object. For example:

import oracle.pgx.api.*;

class Main {
  public static void main(String[] args) throws Exception {
    String baseUrl = args[0];
    String keystorePath = "/etc/my-secrets/keystore.p12";
    char[] keystorePassword = args[1].toCharArray();
    String graphConfigPath = args[2];
    ServerInstance instance = Pgx.getInstance(baseUrl);
    try (PgxSession session = instance.createSession("my-session")) {
      session.registerKeystore(keystorePath, keystorePassword);
      PgxGraph graph = session.readGraphWithProperties(graphConfigPath);
      System.out.println("N = " + graph.getNumVertices() + " E = " + graph.getNumEdges());
    }
  }
}

You can compile and run the preceding sample program using the Oracle Graph Client package. For example:

cd $GRAPH_CLIENT
# create Main.java with the above contents
javac -cp 'lib/*' Main.java
java -cp '.:conf:lib/*' Main http://myhost:7007 MyKeystorePassword path/to/config.json

Secure coding tips for graph client applications

When writing graph client applications, make sure to never store any passwords or other secrets in clear text in any files or in any of your code.

Do not accept passwords or other secrets through command line arguments either. Instead, use Console#readPassword() from the JDK.
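A minimal sketch of that advice, using only the JDK, might look as follows. The class SecretPrompt and its methods are hypothetical names; the sketch takes the Console as a parameter so that the case where no interactive console is attached (for example, when input is redirected) is handled explicitly.

```java
import java.io.Console;
import java.util.Arrays;

/** Sketch: prompt for a secret without echoing it, then wipe it after use. */
final class SecretPrompt {

    /** Reads a secret from the console; fails fast if none is available. */
    static char[] readSecret(Console console, String prompt) {
        if (console == null) {
            throw new IllegalStateException("No interactive console available");
        }
        char[] secret = console.readPassword("%s", prompt);
        if (secret == null) {
            throw new IllegalStateException("End of input while reading secret");
        }
        return secret;
    }

    /** Overwrites the secret so it does not linger in memory longer than needed. */
    static void wipe(char[] secret) {
        Arrays.fill(secret, '\0');
    }

    public static void main(String[] args) {
        char[] password = readSecret(System.console(), "Keystore password: ");
        try {
            // Pass the char[] to the API that needs it, for example a keystore call.
            System.out.println("Read " + password.length + " characters");
        } finally {
            wipe(password);
        }
    }
}
```

Keeping the secret in a char[] rather than a String allows it to be overwritten once it is no longer needed.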


3.2.5 Token Expiration

By default, tokens are valid for 1 hour.

Internally, the graph client automatically renews tokens that are due to expire in less than 30 minutes. It does so by re-authenticating your credentials with the database, and the 30-minute threshold is configurable (see Advanced Access Configuration). By default, a token can be automatically renewed up to 24 times; after that, you need to log in again.

If the maximum number of auto-renewals is reached, you can log in again without losing any of your session data by using the GraphServer#reauthenticate(instance, "<user>", "<password>") API. For example:

opg> var graph = session.readGraphWithProperties(config) // fails because token cannot be renewed anymore

opg> GraphServer.reauthenticate(instance, "<user>", "<password>") // log in again

opg> var graph = session.readGraphWithProperties(config) // works now
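The default renewal rules can be written down as a small model. This is a sketch for reasoning about the numbers only, not the graph client's implementation: the class TokenRenewalSketch is hypothetical, and the estimate method assumes that each renewal issues a fresh full-lifetime token exactly when the 30-minute threshold is reached, which the text does not state.

```java
/** Toy model of the default token renewal rules; not part of the PGX client. */
final class TokenRenewalSketch {
    static final long TOKEN_LIFETIME_SECONDS = 3600;        // default: 1 hour
    static final long REFRESH_BEFORE_EXPIRY_SECONDS = 1800; // default: 30 minutes
    static final int MAX_RENEWALS = 24;                     // default renewal limit

    /** A token is renewed once less than the threshold remains. */
    static boolean shouldRenew(long secondsUntilExpiry) {
        return secondsUntilExpiry < REFRESH_BEFORE_EXPIRY_SECONDS;
    }

    /** After the renewal limit is reached, an explicit login is needed. */
    static boolean mustLogInAgain(int renewalsSoFar) {
        return renewalsSoFar >= MAX_RENEWALS;
    }

    /**
     * Rough upper bound on time between logins.
     * ASSUMPTION: each renewal yields a full-lifetime token at the threshold.
     */
    static long estimatedSecondsBeforeRelogin() {
        long gainedPerRenewal = TOKEN_LIFETIME_SECONDS - REFRESH_BEFORE_EXPIRY_SECONDS;
        return TOKEN_LIFETIME_SECONDS + MAX_RENEWALS * gainedPerRenewal;
    }

    public static void main(String[] args) {
        System.out.println(estimatedSecondsBeforeRelogin() + " seconds");
    }
}
```

Under those assumptions the estimate is 46800 seconds (13 hours); the actual behavior depends on when the client performs each renewal.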


3.2.6 Advanced Access Configuration

You can customize the following fields in pgx.conf realm options to customize login behavior.

Table 3-1 Advanced Access Configuration Options

| Field Name | Explanation | Default |
|---|---|---|
| token_expiration_seconds | Number of seconds after which the generated bearer token expires. | 3600 (1 hour) |
| connect_timeout_milliseconds | Number of milliseconds after which a connection attempt to the specified JDBC URL times out, resulting in the login attempt being rejected. | 10000 |
| max_pool_size | Maximum number of JDBC connections allowed per user. If the number is reached, attempts to read from the database will fail for the current user. | 64 |
| max_num_users | Maximum number of active, signed-in users to allow. If this number is reached, the graph server will reject login attempts. | 512 |
| max_num_token_refresh | Maximum number of times a token can be automatically refreshed before a new login is required. | 24 |

To configure the refresh time on the client side before token expiration, use the following API to login:

int refreshTimeBeforeTokenExpiry = 900; // in seconds, default is 1800 (30 minutes)

ServerInstance instance = GraphServer.getInstance("https://localhost:7007", "<database user>", "<database password>",

refreshTimeBeforeTokenExpiry);

Note:

The preceding options work only if the realm implementation is configured to be oracle.pg.identity.DatabaseRealm.

- Customizing Roles and Permissions

You can fully customize the permissions to roles mapping by adding and removing roles and specifying permissions for a role. You can also authorize individual users instead of roles.


3.2.6.1 Customizing Roles and Permissions

You can fully customize the permissions to roles mapping by adding and removing roles and specifying permissions for a role. You can also authorize individual users instead of roles.

This topic includes examples of how to customize the permission mapping.

- Adding and Removing Roles

You can add new role permission mappings or remove existing mappings by modifying the authorization list.

- Defining Permissions for Individual Users

In addition to defining permissions for roles, you can define permissions for individual users.


3.2.6.1.1 Adding and Removing Roles

You can add new role permission mappings or remove existing mappings by modifying the authorization list.

For example:

CREATE ROLE MY_CUSTOM_ROLE1

GRANT PGX_SESSION_CREATE TO MY_CUSTOM_ROLE1

GRANT PGX_SERVER_GET_INFO TO MY_CUSTOM_ROLE1

GRANT MY_CUSTOM_ROLE1 TO SCOTT

3.2.6.1.2 Defining Permissions for Individual Users

In addition to defining permissions for roles, you can define permissions for individual users.

For example:

GRANT PGX_SESSION_CREATE TO SCOTT

GRANT PGX_SERVER_GET_INFO TO SCOTT


3.2.7 Revoking Access to the Graph Server

To revoke a user's ability to access the graph server, either drop the user from the database or revoke the corresponding roles from the user, depending on how you defined the access rules in your pgx.conf file.

For example:

REVOKE graph_developer FROM scott

Revoking Graph Permissions

If you have the MANAGE permission on a graph, you can revoke graph access from users or roles using the PgxGraph#revokePermission API. For example:

PgxGraph g = ...

g.revokePermission(new PgxRole("GRAPH_DEVELOPER")) // revokes previously granted role access

g.revokePermission(new PgxUser("SCOTT")) // revokes previously granted user access


3.2.8 Examples of Custom Authorization Rules

You can define custom authorization rules for developers.

Example 3-1 Allowing Developers to Use Custom Graph Algorithms

To allow developers to compile custom graph algorithms (see Using Custom PGX Graph Algorithms), add the following static permission to the list of permissions:

GRANT PGX_SESSION_COMPILE_ALGORITHM TO GRAPH_DEVELOPER

Example 3-2 Allowing Developers to Publish Graphs

Allowing graph server users to publish graphs that originate from Oracle Database, or to share such graphs with other users, breaks the database authorization model. If you work with graphs in the database, use GRANT statements in the database instead. See the OPG_APIS.GRANT_ACCESS API for examples of how to do this for PG graphs. When reading from relational tables, use normal GRANT READ statements on tables.

To allow developers to publish graphs, add the following static permission to the list of permissions:

GRANT PGX_SESSION_ADD_PUBLISHED_GRAPH TO GRAPH_DEVELOPER

Publishing graphs alone does not give others access to the graph. You must also specify the type of access. There are three levels of permissions for graphs:

- READ: read the graph data via the PGX API or in PGQL queries, run Analyst or custom algorithms on the graph, and create a subgraph or clone of the graph.

- EXPORT: export the graph via the PgxGraph#store() APIs. Includes the READ permission. In addition to the EXPORT permission, users also need WRITE permission on a file system in order to export the graph.

- MANAGE: publish the graph or snapshot, and grant or revoke permissions on the graph. Includes the EXPORT permission.
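The inclusion relationship between the three levels in the list above (MANAGE includes EXPORT, which includes READ) can be modeled as an ordered enum. This is an illustrative sketch only; GraphPermissionSketch is a hypothetical type and is not the PgxResourcePermission class used by the PGX API.

```java
/** Hypothetical model of the three graph permission levels and their inclusion order. */
enum GraphPermissionSketch {
    // Declared from weakest to strongest, so ordinal() reflects the hierarchy.
    READ, EXPORT, MANAGE;

    /** True if holding this permission also covers the requested one. */
    boolean includes(GraphPermissionSketch requested) {
        return this.ordinal() >= requested.ordinal();
    }

    public static void main(String[] args) {
        System.out.println(MANAGE.includes(READ));
        System.out.println(READ.includes(EXPORT));
    }
}
```

Modeling the levels as a total order works here because each level strictly contains the previous one; a set-based model would be needed if the permissions were independent.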

The creator of the graph automatically gets the MANAGE permission granted on the graph. If you have the MANAGE permission, you can grant other roles or users READ or EXPORT permission on the graph. You cannot grant MANAGE on a graph. The following example of a user named userA shows how:

import oracle.pgx.api.*

import oracle.pgx.common.auth.*

PgxSession session = GraphServer.getInstance("<base-url>", "<userA>", "<password-of-userA>").createSession("userA")

PgxGraph g = session.readGraphWithProperties("examples/sample-graph.json", "sample-graph")

g.grantPermission(new PgxRole("GRAPH_DEVELOPER"), PgxResourcePermission.READ)

g.publish()

PgxSession session = GraphServer.getInstance("<base-url>", "<userB>", "<password-of-userB>").createSession("userB")

PgxGraph g = session.getGraph("sample-graph")

g.queryPgql("select count(*) from match (v)").print().close()

Similarly, graphs can be shared with individual users instead of roles, as shown in the following example:

g.grantPermission(new PgxUser("OTHER_USER"), PgxResourcePermission.EXPORT)

where OTHER_USER is the user name of the user that will receive the EXPORT permission on graph g.
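The inclusion relationship between the three permission levels (MANAGE includes EXPORT, which includes READ) can be pictured as an ordered chain. The following Java sketch is a standalone model for illustration only: `GraphPermissionLevel` and its methods are hypothetical names and are not part of the PGX API (the API type used in the preceding examples is `PgxResourcePermission`).

```java
// Hypothetical model of the three graph permission levels; not a PGX class.
enum GraphPermissionLevel {
    READ, EXPORT, MANAGE; // declared from weakest to strongest

    // A level includes itself and every level declared before it.
    boolean includes(GraphPermissionLevel other) {
        return this.ordinal() >= other.ordinal();
    }

    // Per the text above, a user with MANAGE can grant READ or EXPORT, but not MANAGE.
    boolean isGrantableByManager() {
        return this != MANAGE;
    }
}

class GraphPermissionLevelDemo {
    public static void main(String[] args) {
        for (GraphPermissionLevel level : GraphPermissionLevel.values()) {
            System.out.println(level
                + " includes READ: " + level.includes(GraphPermissionLevel.READ)
                + ", includes EXPORT: " + level.includes(GraphPermissionLevel.EXPORT)
                + ", grantable by a manager: " + level.isGrantableByManager());
        }
    }
}
```

Under this model, a user holding EXPORT can do everything a READ holder can, which matches the description of the levels above.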

Example 3-3 Allowing Developers to Access Preloaded Graphs

To allow developers to access preloaded graphs (graphs loaded during graph server startup), grant the read permission on the preloaded graph in the pgx.conf file. For example:

"preload_graphs": [{

"path": "/data/my-graph.json",

"name": "global_graph"

}],

"authorization": [{

"pgx_role": "GRAPH_DEVELOPER",

"pgx_permissions": [{

"preloaded_graph": "global_graph",

"grant": "read"

},

...

You can grant READ, EXPORT, or MANAGE permission.

Example 3-4 Allowing Developers Access to the Hadoop Distributed Filesystem (HDFS) or the Local File System

To allow developers to read files from HDFS, you must first declare the HDFS directory and then map it to a read or write permission. For example:

CREATE OR REPLACE DIRECTORY pgx_file_location AS 'hdfs:/data/graphs'

GRANT READ ON DIRECTORY pgx_file_location TO GRAPH_DEVELOPER

Similarly, you can add another permission with GRANT WRITE to allow write access. Write access is required in order to export graphs.

Access to the local file system (where the graph server runs) can be granted the same way. The only difference is that location would be an absolute file path without the hdfs: prefix. For example:

CREATE OR REPLACE DIRECTORY pgx_file_location AS '/opt/oracle/graph/data'

Note that in addition to the preceding configuration, the operating system user that runs the graph server process must have the corresponding directory privileges to actually read or write into those directories.
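Because the operating system user that runs the graph server also needs file system privileges, it can help to verify them from a JVM started as that user. The following is a minimal sketch that uses only `java.nio.file` and applies to local directories only (not HDFS). The class name `DirectoryAccessCheck` is hypothetical, and the default path is the example directory from above, which is assumed to exist on your system.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

// Hypothetical helper; run it as the OS user that owns the graph server process.
class DirectoryAccessCheck {

    // Returns a short report of what the current OS user may do with the directory.
    static String describe(Path dir) {
        if (!Files.isDirectory(dir)) {
            return dir + ": not an existing directory";
        }
        return dir + ": readable=" + Files.isReadable(dir)
            + ", writable=" + Files.isWritable(dir);
    }

    public static void main(String[] args) {
        // Example location from the text; pass another path as the first argument.
        Path dir = Paths.get(args.length > 0 ? args[0] : "/opt/oracle/graph/data");
        System.out.println(describe(dir));
    }
}
```

A directory that is readable but not writable would be sufficient for loading graphs, but not for exporting them.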


3.3 Keeping the Graph in Oracle Database Synchronized with the Graph Server

You can use the FlashbackSynchronizer API to automatically apply changes made to a graph in the database to the corresponding PgxGraph object in memory, thus keeping both synchronized.

This API uses Oracle's Flashback Technology to fetch the changes in the database since the last fetch and then push those changes into the graph server using the ChangeSet API. After the changes are applied, the usual snapshot semantics of the graph server apply: each delta fetch application creates a new in-memory snapshot. Any queries or algorithms that are executing concurrently with snapshot creation are unaffected by the changes until the corresponding session refreshes its PgxGraph object to the latest state by calling session.setSnapshot(graph, PgxSession.LATEST_SNAPSHOT).

For detailed information about Oracle Flashback technology, see the Database Development Guide.

Prerequisites for Synchronizing

The Oracle database must have Flashback enabled and the database user that you use to perform synchronization must have:

- Read access to all tables that need to be kept synchronized.

- Permission to use flashback APIs. For example:

grant execute on dbms_flashback to <user>

The database must also be configured to retain changes for the amount of time needed by your use case.

Limitations for Synchronizing

The synchronizer API currently has the following limitations:

- Only partitioned graph configurations with all providers being database tables are supported.

- Both the vertex and edge ID strategy must be set as follows:

"vertex_id_strategy": "keys_as_ids", "edge_id_strategy": "keys_as_ids"

- Each edge/vertex provider must be configured to create a key mapping. In each provider block of the graph configuration, add the following loading section:

"loading": { "create_key_mapping": true }

This implies that vertices and edges must have globally unique ID columns.

- Each edge/vertex provider must specify the owner of the table by setting the username field. For example, if user SCOTT owns the table, then set the username accordingly in the provider block of that table:

"username": "scott"

- In the root loading block, the snapshot source must be set to change_set:

"loading": { "snapshots_source": "change_set" }

For a detailed example, including some options, see the following topic.


3.3.1 Example of Synchronizing

As an example of performing synchronization, assume you have the following Oracle Database tables, PERSONS and FRIENDSHIPS.

CREATE TABLE PERSONS (

PERSON_ID NUMBER GENERATED ALWAYS AS IDENTITY (START WITH 1 INCREMENT BY 1),

NAME VARCHAR2(200),

BIRTHDATE DATE,

HEIGHT FLOAT DEFAULT ON NULL 0,

INT_PROP INT DEFAULT ON NULL 0,

CONSTRAINT person_pk PRIMARY KEY (PERSON_ID)

);

CREATE TABLE FRIENDSHIPS (

FRIENDSHIP_ID NUMBER GENERATED ALWAYS AS IDENTITY (START WITH 1 INCREMENT BY 1),

PERSON_A NUMBER,

PERSON_B NUMBER,

MEETING_DATE DATE,

TS_PROP TIMESTAMP,

CONSTRAINT fk_PERSON_A_ID FOREIGN KEY (PERSON_A) REFERENCES persons(PERSON_ID),

CONSTRAINT fk_PERSON_B_ID FOREIGN KEY (PERSON_B) REFERENCES persons(PERSON_ID)

);

You add some sample data into these tables:

INSERT INTO PERSONS (NAME, HEIGHT, BIRTHDATE) VALUES ('John', 1.80, to_date('13/06/1963', 'DD/MM/YYYY'));

INSERT INTO PERSONS (NAME, HEIGHT, BIRTHDATE) VALUES ('Mary', 1.65, to_date('25/09/1982', 'DD/MM/YYYY'));

INSERT INTO PERSONS (NAME, HEIGHT, BIRTHDATE) VALUES ('Bob', 1.75, to_date('11/03/1966', 'DD/MM/YYYY'));

INSERT INTO PERSONS (NAME, HEIGHT, BIRTHDATE) VALUES ('Alice', 1.70, to_date('01/02/1987', 'DD/MM/YYYY'));

INSERT INTO FRIENDSHIPS (PERSON_A, PERSON_B, MEETING_DATE) VALUES (1, 3, to_date('01/09/1972', 'DD/MM/YYYY'));

INSERT INTO FRIENDSHIPS (PERSON_A, PERSON_B, MEETING_DATE) VALUES (2, 4, to_date('19/09/1992', 'DD/MM/YYYY'));

INSERT INTO FRIENDSHIPS (PERSON_A, PERSON_B, MEETING_DATE) VALUES (4, 2, to_date('19/09/1992', 'DD/MM/YYYY'));

INSERT INTO FRIENDSHIPS (PERSON_A, PERSON_B, MEETING_DATE) VALUES (3, 2, to_date('10/07/2001', 'DD/MM/YYYY'));

Synchronizing Using Connection Information in the Graph Configuration

You then want to synchronize using connection information in the graph configuration. You have the following sample graph configuration (KeystoreGraphConfigExample), which reads those tables as a graph:

{

"name": "PeopleFriendships",

"optimized_for": "updates",

"edge_id_strategy": "keys_as_ids",

"edge_id_type": "long",

"vertex_id_type": "long",

"jdbc_url": "<jdbc_url>",

"username": "<username>",

"keystore_alias": "<keystore_alias>",

"vertex_providers": [

{

"format": "rdbms",

"username": "<username>",

"key_type": "long",

"name": "person",

"database_table_name": "PERSONS",

"key_column": "PERSON_ID",

"props": [

...

],

"loading": {

"create_key_mapping": true

}

}

],

"edge_providers": [

{

"format": "rdbms",

"username": "<username>",

"name": "friendOf",

"source_vertex_provider": "person",

"destination_vertex_provider": "person",

"database_table_name": "FRIENDSHIPS",

"source_column": "PERSON_A",

"destination_column": "PERSON_B",

"key_column": "FRIENDSHIP_ID",

"key_type":"long",

"props": [

...

],

"loading": {

"create_key_mapping": true

}

}

],

"loading": {

"snapshots_source": "change_set"

}

}

(In the preceding example, replace the values <jdbc_url>, <username>, and <keystore_alias> with the values for connecting to your database.)

Open the Oracle Property Graph JShell (be sure to register the keystore containing the database password when starting it), and load the graph into memory:

var pgxGraph = session.readGraphWithProperties("persons_graph.json");

The following output line shows that the example graph has four vertices and four edges:

pgxGraph ==> PgxGraph[name=PeopleFriendships,N=4,E=4,created=1594754376861]

Now, back in the database, insert a few new rows:

INSERT INTO PERSONS (NAME, BIRTHDATE, HEIGHT) VALUES ('Mariana',to_date('21/08/1996','DD/MM/YYYY'),1.65);

INSERT INTO PERSONS (NAME, BIRTHDATE, HEIGHT) VALUES ('Francisco',to_date('13/06/1963','DD/MM/YYYY'),1.75);

INSERT INTO FRIENDSHIPS (PERSON_A, PERSON_B, MEETING_DATE) VALUES (1, 6, to_date('13/06/2013','DD/MM/YYYY'));

COMMIT;

Back in JShell, you can now use the FlashbackSynchronizer API to automatically fetch and apply those changes:

var synchronizer = new Synchronizer.Builder<FlashbackSynchronizer>().setType(FlashbackSynchronizer.class).setGraph(pgxGraph).build()

pgxGraph = synchronizer.sync()

As you can see from the output, the new vertices and edges have been applied:

pgxGraph ==> PgxGraph[name=PeopleFriendships,N=6,E=5,created=1594754376861]

Note that pgxGraph = synchronizer.sync() is equivalent to calling the following:

synchronizer.sync()

session.setSnapshot(pgxGraph, PgxSession.LATEST_SNAPSHOT)

Splitting the Fetching and Applying of Changes

The synchronizer.sync() invocation fetches the changes and applies them in one call. However, you can implement more complex update logic by splitting this process into separate fetch() and apply() invocations. For example:

synchronizer.fetch() // fetches changes from the database

if (synchronizer.getGraphDelta().getTotalNumberOfChanges() > 100) {

// only create snapshot if there have been more than 100 changes

synchronizer.apply()

}
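The decision of when to apply fetched changes can be factored into a small policy object. The following standalone Java sketch is an illustration only: `ApplyPolicy`, its constructor parameters, and the staleness rule are hypothetical and not part of the PGX API. It extends the change-count threshold shown above with a maximum waiting time, so that a small number of pending changes is not held back indefinitely.

```java
import java.time.Duration;

// Hypothetical decision helper; it does not call the PGX API itself.
class ApplyPolicy {
    private final long minChanges;
    private final Duration maxStaleness;

    ApplyPolicy(long minChanges, Duration maxStaleness) {
        this.minChanges = minChanges;
        this.maxStaleness = maxStaleness;
    }

    // Apply when more than minChanges changes are pending,
    // or when any pending changes have waited at least maxStaleness.
    boolean shouldApply(long pendingChanges, Duration sinceLastApply) {
        if (pendingChanges <= 0) {
            return false; // nothing to apply, so do not create a new snapshot
        }
        return pendingChanges > minChanges
            || sinceLastApply.compareTo(maxStaleness) >= 0;
    }

    public static void main(String[] args) {
        ApplyPolicy policy = new ApplyPolicy(100, Duration.ofMinutes(10));
        System.out.println(policy.shouldApply(5, Duration.ofMinutes(1)));   // few changes, recent apply
        System.out.println(policy.shouldApply(150, Duration.ofMinutes(1))); // many changes
        System.out.println(policy.shouldApply(5, Duration.ofMinutes(15)));  // few changes, long wait
    }
}
```

In the shell, the value passed as `pendingChanges` would come from `synchronizer.getGraphDelta().getTotalNumberOfChanges()` after a `fetch()` call, and `synchronizer.apply()` would be called when the policy returns true.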

Synchronizing Using an Explicit Oracle Connection

The synchronizer API fetches the changes on the client side. That means the client needs to connect to the database. In the preceding example, it did that by reading the connection information available in the graph configuration of the loaded PgxGraph object. However, there can be situations in which connection information cannot be obtained from the PgxGraph object, such as when:

- The associated graph configuration does not contain any database connection information, and the graph was loaded using credentials of a logged in user; or

- The associated graph configuration contains a datasource ID corresponding to a connection stored on the server side.

In these cases, you can pass an Oracle connection object when building the synchronizer; that connection is then used to fetch the changes. For example (ExampleGraphConfig.json):

import java.sql.Connection;

import java.sql.DriverManager;

String jdbcUrl = "<JDBC URL>";

String username = "<USERNAME>";

String password = "<PASSWORD>";

Connection connection = DriverManager.getConnection(jdbcUrl, username, password);

Synchronizer synchronizer = new Synchronizer.Builder<FlashbackSynchronizer>()

.setType(FlashbackSynchronizer.class)

.setGraph(pgxGraph)

.setConnection(connection)

.build();

3.4 Configuring the In-Memory Analyst

You can configure the in-memory analyst engine and its run-time behavior by assigning a single JSON file to the in-memory analyst at startup.

This file can include the parameters shown in the following table. Some examples follow the table.

To specify the configuration file, see Specifying the Configuration File to the In-Memory Analyst.

Note:

- Relative paths in parameter values are always resolved relative to the parent directory of the configuration file in which they are specified. For example, if the configuration file is /pgx/conf/pgx.conf, then the file path graph-configs/my-graph.bin.json inside that file would be resolved to /pgx/conf/graph-configs/my-graph.bin.json.

- The parameter default values are optimized to deliver the best performance across a wide set of algorithms. Depending on your workload, you may be able to improve performance further by experimenting with different strategies, sizes, and thresholds.
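The path resolution rule in the preceding note can be reproduced with the standard `java.nio.file` API. This is only a sketch of the rule as described, not the code the in-memory analyst uses; the class name `ConfigPathResolution` is hypothetical.

```java
import java.nio.file.Path;
import java.nio.file.Paths;

// Hypothetical illustration of resolving a relative path against the
// parent directory of the configuration file that contains it.
class ConfigPathResolution {

    static Path resolve(Path configFile, String valueInConfig) {
        Path parent = configFile.toAbsolutePath().getParent();
        // Path.resolve returns valueInConfig unchanged if it is already absolute.
        return parent.resolve(valueInConfig).normalize();
    }

    public static void main(String[] args) {
        Path resolved = resolve(Paths.get("/pgx/conf/pgx.conf"),
                                "graph-configs/my-graph.bin.json");
        System.out.println(resolved);
    }
}
```

On a Unix-like system, this prints the resolved path from the example in the note, /pgx/conf/graph-configs/my-graph.bin.json.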

Table 3-2 Configuration Parameters for the In-Memory Analyst

| Parameter | Type | Description | Default |
|---|---|---|---|
| admin_request_cache_timeout | integer | After how many seconds admin request results get removed from the cache. Requests which are not done or not yet consumed are excluded from this timeout. Note: This is only relevant if PGX is deployed as a webapp. | 60 |
| allow_idle_timeout_overwrite | boolean | If true, sessions can overwrite the default idle timeout. | true |
| allow_local_filesystem | boolean | Allow loading from the local file system in client/server mode. Default is false. If set to true, additionally specify the property datasource_dir_whitelist to list the directories. The server can only read from the directories that are listed there. | false |
| allow_override_scheduling_information | boolean | If true, allow all users to override scheduling information like task weight, task priority, and number of threads. | true |
| allow_task_timeout_overwrite | boolean | If true, sessions can overwrite the default task timeout. | true |
| allow_user_auto_refresh | boolean | If true, users may enable auto refresh for graphs they load. If false, only graphs mentioned in | false |
| allowed_remote_loading_locations | array of string | Allow loading of graphs into the PGX engine from remote locations (http, https, ftp, ftps, s3, hdfs). If empty, as by default, no remote location is allowed. If "*" is specified in the array, all remote locations are allowed. Only the value "*" is currently supported. Note that pre-loaded graphs are loaded from any location, regardless of the value of this setting. WARNING: This option may reduce security. Specify "*" only if you want to explicitly allow users of the PGX remote interface to access files on the local file system. | [] |
| basic_scheduler_config | object | Configuration parameters for the fork join pool backend. | null |
| bfs_iterate_que_task_size | integer | Task size for BFS iterate QUE phase. | 128 |
| bfs_threshold_parent_read_based | number | Threshold of BFS traversal level items to switch to parent-read-based visiting strategy. | 0.05 |
| bfs_threshold_read_based | integer | Threshold of BFS traversal level items to switch to read-based visiting strategy. | 1024 |
| bfs_threshold_single_threaded | integer | Number of BFS traversal level items up to which vertices are visited single-threaded. | 128 |
| character_set | string | Standard character set to use throughout PGX. UTF-8 is the default. Note: Some formats may not be compatible. | utf-8 |
| cni_diff_factor_default | integer | Default diff factor value used in the common neighbor iterator implementations. | 8 |
| cni_small_default | integer | Default value used in the common neighbor iterator implementations, to indicate below which threshold a subarray is considered small. | 128 |
| cni_stop_recursion_default | integer | Default value used in the common neighbor iterator implementations, to indicate the minimum size where the binary search approach is applied. | 96 |
| datasource_dir_whitelist | array of string | If allow_local_filesystem is set, the list of directories from which it is allowed to read files. | [] |
| dfs_threshold_large | integer | Value that determines at which number of visited vertices the DFS implementation will switch to data structures that are optimized for larger numbers of vertices. | 4096 |
| enable_csrf_token_checks | boolean | If true, the PGX webapp will verify that the Cross-Site Request Forgery (CSRF) token cookie and request parameters sent by the client exist and match. This is to prevent CSRF attacks. | true |
| enable_gm_compiler | boolean | [relevant when profiling with solaris studio] When enabled, label experiments using the 'er_label' command. | false |
| enable_shutdown_cleanup_hook | boolean | If true, PGX will add a JVM shutdown hook that will automatically shut down PGX at JVM shutdown. Notice: Having the shutdown hook deactivated and not explicitly shutting down PGX may result in pollution of your temp directory. | true |
| enterprise_scheduler_config | object | Configuration parameters for the enterprise scheduler. | null |
| enterprise_scheduler_flags | object | [relevant for enterprise_scheduler] Enterprise scheduler-specific settings. | null |
| explicit_spin_locks | boolean | true means spin explicitly in a loop until lock becomes available. false means using JDK locks which rely on the JVM to decide whether to context switch or spin. Setting this value to true usually results in better performance. | true |
| graph_algorithm_language | enum[GM_LEGACY, GM, JAVA] | Front-end compiler to use. | gm |
| graph_validation_level | enum[low, high] | Level of validation performed on newly loaded or created graphs. | low |
| ignore_incompatible_backend_operations | boolean | If true, only log when encountering incompatible operations and configuration values in RTS or FJ pool. If false, throw exceptions. | false |
| in_place_update_consistency | enum[ALLOW_INCONSISTENCIES, CANCEL_TASKS] | Consistency model used when in-place updates occur. Only relevant if in-place updates are enabled. Currently updates are only applied in place if the updates are not structural (they only modify properties). Two models are currently implemented: one only delays new tasks when an update occurs, the other also delays running tasks. | allow_inconsistencies |
| init_pgql_on_startup | boolean | If true, PGQL is directly initialized on start-up of PGX. Otherwise, it is initialized during the first use of PGQL. | true |
| interval_to_poll_max | integer | Upper bound (in ms) of the exponential backoff. Once this bound is reached, the job status polling interval stays fixed at this value. | 1000 |
| java_home_dir | string | The path to Java's home directory. If set to <system-java-home-dir>, use the java.home system property. | null |
| large_array_threshold | integer | Threshold when the size of an array is too big to use a normal Java array. This depends on the used JVM. (Defaults to Integer.MAX_VALUE - 3) | 2147483644 |
| max_active_sessions | integer | Maximum number of sessions allowed to be active at a time. | 1024 |
| max_distinct_strings_per_pool | integer | [only relevant if string_pooling_strategy is indexed] Number of distinct strings per property after which to stop pooling. If the limit is reached, an exception is thrown. | 65536 |
| max_off_heap_size | integer | Maximum amount of off-heap memory (in megabytes) that PGX is allowed to allocate before an OutOfMemoryError will be thrown. Note: this limit is not guaranteed to never be exceeded, because of rounding and synchronization trade-offs. It only serves as threshold when PGX starts to reject new memory allocation requests. | <available-physical-memory> |
| max_queue_size_per_session | integer | The maximum number of pending tasks allowed to be in the queue, per session. If a session reaches the maximum, new incoming requests of that session get rejected. A negative value means no limit. | -1 |
| max_snapshot_count | integer | Number of snapshots that may be loaded in the engine at the same time. New snapshots can be created via auto or forced update. If the number of snapshots of a graph reaches this threshold, no more auto-updates will be performed, and a forced update will result in an exception until one or more snapshots are removed from memory. A value of zero means that an unlimited number of snapshots is supported. | 0 |
| memory_allocator | enum[basic_allocator, enterprise_allocator] | The memory allocator to use. | basic_allocator |
| memory_cleanup_interval | integer | Memory cleanup interval in seconds. | 600 |
| ms_bfs_frontier_type_strategy | enum[auto_grow, short, int] | The type strategy to use for MS-BFS frontiers. | auto_grow |
| num_spin_locks | integer | Number of spin locks each generated app will create at instantiation. Trade-off: a small number implies less memory consumption; a large number implies faster execution (if algorithm uses spin locks). | 1024 |
| parallelism | integer | Number of worker threads to be used in thread pool. Note: If the caller thread is part of another thread-pool, this value is ignored and the parallelism of the parent pool is used. | <number-of-cpus> |
| pattern_matching_supernode_cache_threshold | integer | Minimum number of neighbors for a node to be considered a supernode. This is for the pattern matching engine. | 1000 |
| pooling_factor | number | [only relevant if string_pooling_strategy is on_heap] This value prevents the string pool from growing as big as the property size, which could render the pooling ineffective. | 0.25 |
| preload_graphs | array of object | List of graph configs to be registered at start-up. Each item includes the path to a graph config, the name of the graph, and whether it should be published. | [] |
| random_generator_strategy | enum[non_deterministic, deterministic] | Method of generating random numbers in PGX. | non_deterministic |
| random_seed | long | [relevant for deterministic random number generator only] Seed for the deterministic random number generator used in the in-memory analyst. | -24466691093057031 |
| release_memory_threshold | double | Threshold percentage (decimal fraction) of used memory after which the engine starts freeing unused graphs. Examples: A value of 0.0 means graphs get freed as soon as their reference count becomes zero. That is, all sessions which loaded that graph were destroyed/timed out. A value of 1.0 means graphs never get freed, and the engine will throw OutOfMemoryErrors as soon as a graph is needed which does not fit in memory anymore. A value of 0.7 means the engine keeps all graphs in memory as long as total memory consumption is below 70% of total available memory, even if there is currently no session using them. When consumption exceeds 70% and another graph needs to get loaded, unused graphs get freed until memory consumption is below 70% again. | 0.85 |
| revisit_threshold | integer | Maximum number of matched results from a node to be reached. | 4096 |
| scheduler | enum[basic_scheduler, enterprise_scheduler] | The scheduler to use. basic_scheduler uses a scheduler with basic features. enterprise_scheduler uses a scheduler with advanced enterprise features for running multiple tasks concurrently and providing better performance. | enterprise_scheduler |
| session_idle_timeout_secs | integer | Timeout of idling sessions in seconds. Zero (0) means no timeout. | 0 |
| session_task_timeout_secs | integer | Timeout in seconds to interrupt long-running tasks submitted by sessions (algorithms, I/O tasks). Zero (0) means no timeout. | 0 |
| small_task_length | integer | Task length if the total amount of work is smaller than default task length (only relevant for task-stealing strategies). | 128 |
| spark_streams_interface | string | The name of the interface to be used for Spark data communication. | null |
| strict_mode | boolean | If true, exceptions are thrown and logged with ERROR level whenever the engine encounters configuration problems, such as invalid keys, mismatches, and other potential errors. If false, the engine logs problems with ERROR/WARN level (depending on severity) and makes best guesses and uses sensible defaults instead of throwing exceptions. | true |
| string_pooling_strategy | enum[indexed, on_heap, none] | [only relevant if use_string_pool is enabled] The string pooling strategy to use. | on_heap |
| task_length | integer | Default task length (only relevant for task-stealing strategies). Should be between 100 and 10000. Trade-off: a small number implies more fine-grained tasks are generated, higher stealing throughput; a large number implies less memory consumption and GC activity. | 4096 |
| tmp_dir | string | Temporary directory to store compilation artifacts and other temporary data. If set to <system-tmp-dir>, uses the standard tmp directory of the underlying system (/tmp on Linux). | <system-tmp-dir> |
| udf_config_directory | string | Directory path containing UDF files. | null |
| use_memory_mapper_for_reading_pgb | boolean | If true, use memory mapped files for reading graphs in PGB format if possible; if false, always use a stream-based implementation. | true |
| use_memory_mapper_for_storing_pgb | boolean | If true, use memory mapped files for storing graphs in PGB format if possible; if false, always use a stream-based implementation. | true |

Enterprise Scheduler Parameters

The following parameters are relevant only if the enterprise scheduler is used. (They are ignored if the basic scheduler is used.)

- analysis_task_config: Configuration for analysis tasks. Type: object. Default: priority medium, max_threads <no-of-CPUs>, weight <no-of-CPUs>

- fast_analysis_task_config: Configuration for fast analysis tasks. Type: object. Default: priority high, max_threads <no-of-CPUs>, weight 1

- max_num_concurrent_io_tasks: Maximum number of concurrent I/O tasks. Type: integer. Default: 3

- num_io_threads_per_task: Number of I/O threads to use per task. Type: integer. Default: <no-of-cpus>

Basic Scheduler Parameters

The following parameters are relevant only if the basic scheduler is used. (They are ignored if the enterprise scheduler is used.)

- num_workers_analysis: Number of worker threads to use for analysis tasks. Type: integer. Default: <no-of-CPUs>

- num_workers_fast_track_analysis: Number of worker threads to use for fast-track analysis tasks. Type: integer. Default: 1

- num_workers_io: Number of worker threads to use for I/O tasks (load/refresh/write from/to disk). This value will not affect file-based loaders, because they are always single-threaded. Database loaders will open a new connection for each I/O worker. Default: <no-of-CPUs>

Example 3-5 Minimal In-Memory Analyst Configuration

The following example causes the in-memory analyst to initialize its analysis thread pool with 32 workers. (Default values are used for all other parameters.)

{

"enterprise_scheduler_config": {

"analysis_task_config": {

"max_threads": 32

}

}

}

Example 3-6 Two Pre-loaded Graphs

The following example sets more fields and specifies two fixed graphs for loading into memory during PGX startup.

{

"enterprise_scheduler_config": {

"analysis_task_config": {

"max_threads": 32

},

"fast_analysis_task_config": {

"max_threads": 32

}

},

"memory_cleanup_interval": 600,

"max_active_sessions": 1,

"release_memory_threshold": 0.2,

"preload_graphs": [

{

"path": "graph-configs/my-graph.bin.json",

"name": "my-graph"

},

{

"path": "graph-configs/my-other-graph.adj.json",

"name": "my-other-graph",

"publish": false

}

]

}


3.4.1 Specifying the Configuration File to the In-Memory Analyst

The in-memory analyst configuration file is parsed by the in-memory analyst at startup whenever ServerInstance#startEngine (or any of its variants) is called. You can pass the path to your configuration file to the in-memory analyst or specify the configuration programmatically. This topic identifies several ways to specify the configuration.

Programmatically

All configuration fields exist as Java enums. Example:

Map<PgxConfig.Field, Object> pgxCfg = new HashMap<>();

pgxCfg.put(PgxConfig.Field.MEMORY_CLEANUP_INTERVAL, 600);

ServerInstance instance = ...

instance.startEngine(pgxCfg);

All parameters not explicitly set will get default values.

Explicitly Using a File

Instead of a map, you can pass the path to an in-memory analyst configuration JSON file. Example:

instance.startEngine("path/to/pgx.conf"); // file on local file system

instance.startEngine("classpath:/path/to/pgx.conf"); // file on current classpath

For all other protocols, you can directly pass an input stream of a JSON file. Example:

InputStream is = ...

instance.startEngine(is);

Implicitly Using a File

If startEngine() is called without an argument, the in-memory analyst looks for a configuration file at the following places, stopping when it finds the file:

- File path found in the Java system property pgx_conf. Example: java -Dpgx_conf=conf/my.pgx.config.json ...

- A file named pgx.conf in the root directory of the current classpath

- A file named pgx.conf in the root directory relative to the current System.getProperty("user.dir") directory

Note: Providing a configuration is optional. A default value for each field will be used if the field cannot be found in the given configuration file, or if no configuration file is provided.
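The lookup order for the implicit case can be summarized in code. The following Java sketch re-creates the three documented steps using only the standard library. It is an illustration of the described order and not the in-memory analyst's actual implementation; the class name `PgxConfLookup` and the returned strings are hypothetical.

```java
import java.net.URL;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Optional;

// Hypothetical re-creation of the documented lookup order.
class PgxConfLookup {

    // Returns a description of the first location that matches, if any.
    static Optional<String> locate() {
        // 1. File path given in the Java system property pgx_conf.
        //    (This sketch does not check whether that file exists.)
        String fromProperty = System.getProperty("pgx_conf");
        if (fromProperty != null) {
            return Optional.of(fromProperty);
        }
        // 2. A file named pgx.conf in the root directory of the classpath.
        URL onClasspath = ClassLoader.getSystemResource("pgx.conf");
        if (onClasspath != null) {
            return Optional.of(onClasspath.toString());
        }
        // 3. A file named pgx.conf in the user.dir directory.
        Path inWorkingDir = Paths.get(System.getProperty("user.dir"), "pgx.conf");
        if (Files.isRegularFile(inWorkingDir)) {
            return Optional.of(inWorkingDir.toString());
        }
        // Nothing found: default values are used for all fields.
        return Optional.empty();
    }

    public static void main(String[] args) {
        System.out.println(locate().orElse("no configuration file found, using defaults"));
    }
}
```

Running this class with `java -Dpgx_conf=conf/my.pgx.config.json PgxConfLookup` reports the path from the system property, because the first step takes priority over the other two.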

Using the Local Shell

To change how the shell configures the local in-memory analyst instance, edit $PGX_HOME/conf/pgx.conf. Changes will be reflected the next time you invoke $PGX_HOME/bin/pgx.

You can also change the location of the configuration file as in the following example:

./bin/opg --pgx_conf path/to/my/other/pgx.conf

Setting System Properties

Any parameter can be set using Java system properties by passing -Dpgx.<FIELD>=<VALUE> arguments to the JVM that the in-memory analyst is running on. Note that system properties override any other configuration. The following example sets the maximum off-heap size to 256 GB, regardless of what any other configuration says:

java -Dpgx.max_off_heap_size=256000 ...

Setting Environment Variables

Any parameter can also be set using environment variables, by prefixing the parameter name with PGX_ (in upper case, as in the following example) and setting that variable in the environment of the JVM in which the in-memory analyst is executed. Note that environment variables override any other configuration, except that if a system property and an environment variable are set for the same parameter, the system property value is used. The following example sets the maximum off-heap size to 256 GB using an environment variable:

PGX_MAX_OFF_HEAP_SIZE=256000 java ...
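The precedence between the three sources (system property, then environment variable, then configuration file) can be expressed as a simple lookup chain. The following Java sketch only models the rule described above, using plain maps in place of the real sources; the class name `PgxParameterPrecedence` and its method are hypothetical and not part of the PGX API.

```java
import java.util.Locale;
import java.util.Map;

// Hypothetical illustration of the documented precedence between sources.
class PgxParameterPrecedence {

    // Resolves one parameter: system property first, then environment
    // variable, then the value from the configuration file.
    static String resolve(String field,
                          Map<String, String> systemProperties,
                          Map<String, String> environment,
                          Map<String, String> fileValues) {
        String fromProperty = systemProperties.get("pgx." + field);
        if (fromProperty != null) {
            return fromProperty;
        }
        String fromEnv = environment.get("PGX_" + field.toUpperCase(Locale.ROOT));
        if (fromEnv != null) {
            return fromEnv;
        }
        return fileValues.get(field); // null means the default value applies
    }

    public static void main(String[] args) {
        Map<String, String> props = Map.of("pgx.max_off_heap_size", "256000");
        Map<String, String> env = Map.of("PGX_MAX_OFF_HEAP_SIZE", "128000",
                                         "PGX_PARALLELISM", "8");
        Map<String, String> file = Map.of("max_off_heap_size", "64000",
                                          "parallelism", "4",
                                          "task_length", "4096");
        for (String field : new String[] {"max_off_heap_size", "parallelism", "task_length"}) {
            System.out.println(field + " = " + resolve(field, props, env, file));
        }
    }
}
```

In this example, max_off_heap_size is taken from the system property, parallelism from the environment variable, and task_length from the configuration file.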


3.5 Storing a Graph Snapshot on Disk

After reading a graph into memory using either Java or the Shell, if you make some changes to the graph such as running the PageRank algorithm and storing the values as vertex properties, you can store this snapshot of the graph on disk.

This is helpful if you want to save the state of the graph in memory, such as if you must shut down the in-memory analyst server to migrate to a newer version, or if you must shut it down for some other reason.

(Storing graphs over HTTP/REST is currently not supported.)

A snapshot of a graph can be saved as a file in a binary format (called a PGB file).

In general, we recommend that you store the graph queries and analytics APIs that had been executed, and that after the in-memory analyst has been restarted, you reload and re-execute the APIs. But if you must save the state of the graph, you can use the logic in the following example to save the graph snapshot from the shell.

In a three-tier deployment, the file is written on the server-side file system. You must also ensure that the file location to write is specified in the in-memory analyst server. (As explained in Three-Tier Deployments of Oracle Graph with Autonomous Database, in a three-tier deployment, access to the PGX server file system requires a list of allowed locations to be specified.)

opg-jshell> var graph = session.createGraphBuilder().addVertex(1).addVertex(2).addVertex(3).addEdge(1,2).addEdge(2,3).addEdge(3, 1).build()

graph ==> PgxGraph[name=anonymous_graph_1,N=3,E=3,created=1581623669674]

opg-jshell> analyst.pagerank(graph)

$3 ==> VertexProperty[name=pagerank,type=double,graph=anonymous_graph_1]

// Now save the state of this graph

opg-jshell> graph.store(Format.PGB, "/tmp/snapshot.pgb")

$4 ==> {"edge_props":[],"vertex_uris":["/tmp/snapshot.pgb"],"loading":{},"attributes":{},"edge_uris":[],"vertex_props":[{"name":"pagerank","dimension":0,"type":"double"}],"error_handling":{},"vertex_id_type":"integer","format":"pgb"}

// reload from disk

opg-jshell> var graphFromDisk = session.readGraphFile("/tmp/snapshot.pgb")

graphFromDisk ==> PgxGraph[name=snapshot,N=3,E=3,created=1581623739395]

// previously computed properties are still part of the graph and can be queried

opg-jshell> graphFromDisk.queryPgql("select x.pagerank match (x)").print().close()

The following example is essentially the same as the preceding one, but it uses partitioned graphs. Note that in the case of partitioned graphs, multiple PGB files are generated, one for each vertex/edge partition in the graph.

opg-jshell> analyst.pagerank(graph)

$3 ==> VertexProperty[name=pagerank,type=double,graph=anonymous_graph_1]

// Now save the state of this graph, including all properties, to disk

opg-jshell> var storedPgbConfig = graph.store(ProviderFormat.PGB, "/tmp/snapshot")

$4 ==> {"edge_props":[],"vertex_uris":["/tmp/snapshot.pgb"],"loading":{},"attributes":{},"edge_uris":[],"vertex_props":[{"name":"pagerank","dimension":0,"type":"double"}],"error_handling":{},"vertex_id_type":"integer","format":"pgb"}

// Reload from disk

opg-jshell> var graphFromDisk = session.readGraphWithProperties(storedPgbConfig)

graphFromDisk ==> PgxGraph[name=snapshot,N=3,E=3,created=1581623739395]

// Previously computed properties are still part of the graph and can be queried

opg-jshell> graphFromDisk.queryPgql("select x.pagerank match (x)").print().close()

3.6 Executing Built-in Algorithms

The in-memory graph server (PGX) contains a set of built-in algorithms that are available as Java APIs.

The following table provides an overview of the available algorithms, grouped by category.

Note:

These algorithms can be invoked through the Analyst interface. See the Analyst Class in Javadoc for more details.

Table 3-3 Overview of Built-In Algorithms

| Category | Algorithms |
|---|---|
| Classic graph algorithms | Prim's Algorithm |
| Community detection | Conductance Minimization (Soman and Narang Algorithm), Infomap, Label Propagation, Louvain |
| Connected components | Strongly Connected Components, Weakly Connected Components (WCC) |
| Link prediction | WTF (Whom To Follow) Algorithm |
| Matrix factorization | Matrix Factorization |
| Other | Graph Traversal Algorithms |
| Path finding | All Vertices and Edges on Filtered Path, Bellman-Ford Algorithms, Bidirectional Dijkstra Algorithms, Compute Distance Index, Compute High-Degree Vertices, Dijkstra Algorithms, Enumerate Simple Paths, Fast Path Finding, Fattest Path, Filtered Fast Path Finding, Hop Distance Algorithms |
| Ranking and walking | Closeness Centrality Algorithms, Degree Centrality Algorithms, Eigenvector Centrality, Hyperlink-Induced Topic Search (HITS), PageRank Algorithms, Random Walk with Restart, Stochastic Approach for Link-Structure Analysis (SALSA) Algorithms, Vertex Betweenness Centrality Algorithms |
| Structure evaluation | Adamic-Adar index, Bipartite Check, Conductance, Cycle Detection Algorithms, Degree Distribution Algorithms, Eccentricity Algorithms, K-Core, Local Clustering Coefficient (LCC), Modularity, Partition Conductance, Reachability Algorithms, Topological Ordering Algorithms, Triangle Counting Algorithms |

The following topics describe the use of the in-memory graph server (PGX) using Triangle Counting and PageRank analytics as examples.

3.6.1 About the In-Memory Analyst

The in-memory analyst contains a set of built-in algorithms that are available as Java APIs. The details of the APIs are documented in the Javadoc that is included in the product documentation library. Specifically, see the BuiltinAlgorithms interface Method Summary for a list of the supported in-memory analyst methods.

For example, this is the PageRank procedure signature:

/**
 * Classic pagerank algorithm. Time complexity: O(E * K) with E = number of edges, K is a given constant (max
 * iterations)
 *
 * @param graph
 *          graph
 * @param e
 *          maximum error for terminating the iteration
 * @param d
 *          damping factor
 * @param max
 *          maximum number of iterations
 * @return Vertex Property holding the result as a double
 */
public <ID extends Comparable<ID>> VertexProperty<ID, Double> pagerank(PgxGraph graph, double e, double d, int max);
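To make the roles of the e, d, and max parameters concrete, the following is a minimal plain-Java sketch of an iterative PageRank over an adjacency array. It is not the PGX implementation: the class name PageRankSketch, the int[][] graph representation, the uniform redistribution of rank from vertices without outgoing edges, and the use of the summed absolute change as the error measure are all assumptions made for illustration.

```java
import java.util.Arrays;

// Hypothetical illustration class; not part of the PGX API.
class PageRankSketch {

    /**
     * Iterative PageRank. outEdges[v] lists the destinations of v's outgoing edges.
     * e = maximum error for terminating, d = damping factor, max = maximum iterations.
     */
    static double[] pagerank(int[][] outEdges, double e, double d, int max) {
        int n = outEdges.length;
        double[] rank = new double[n];
        Arrays.fill(rank, 1.0 / n); // start from a uniform distribution

        for (int iter = 0; iter < max; iter++) {
            double[] next = new double[n];
            double dangling = 0.0; // rank held by vertices with no outgoing edges

            // Each vertex splits its current rank evenly among its out-neighbors.
            for (int v = 0; v < n; v++) {
                if (outEdges[v].length == 0) {
                    dangling += rank[v];
                } else {
                    double share = rank[v] / outEdges[v].length;
                    for (int dst : outEdges[v]) {
                        next[dst] += share;
                    }
                }
            }

            // Apply damping and measure how much the ranks changed (assumed error measure).
            double diff = 0.0;
            for (int v = 0; v < n; v++) {
                next[v] = (1.0 - d) / n + d * (next[v] + dangling / n);
                diff += Math.abs(next[v] - rank[v]);
            }
            rank = next;
            if (diff < e) {
                break; // converged before reaching the iteration limit
            }
        }
        return rank;
    }

    public static void main(String[] args) {
        int[][] cycle = { {1}, {2}, {0} }; // 0 -> 1 -> 2 -> 0
        System.out.println(Arrays.toString(pagerank(cycle, 0.001, 0.85, 100)));
    }
}
```

Each iteration touches every edge once, which is consistent with the O(E * K) bound stated in the Javadoc. On the three-vertex cycle, all vertices end up with approximately the same rank.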

3.6.2 Running the Triangle Counting Algorithm

For triangle counting, the sortByDegree boolean parameter of countTriangles() allows you to control whether the graph should first be sorted by degree (true) or not (false). If true, more memory will be used, but the algorithm will run faster; however, if your graph is very large, you might want to turn this optimization off to avoid running out of memory.
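The idea behind ordering by degree can be sketched in plain Java as follows. This is an illustration only, not the PGX implementation: the class TriangleCountSketch, the edge-array input, and the "forward neighbor" technique are assumptions. Each undirected edge is oriented from the vertex that comes first in the chosen order to the one that comes later, so high-degree vertices keep short forward lists when the order is by degree. The sketch does not model the extra memory that the paragraph above attributes to sorting the graph.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// Hypothetical illustration class; not part of the PGX API.
class TriangleCountSketch {

    /** Counts triangles in an undirected graph with vertices 0..n-1. */
    static long countTriangles(int n, int[][] edges, boolean sortByDegree) {
        int[] degree = new int[n];
        for (int[] e : edges) {
            degree[e[0]]++;
            degree[e[1]]++;
        }

        // forward.get(v) holds the neighbors of v that come after v in the chosen order.
        List<Set<Integer>> forward = new ArrayList<>();
        for (int v = 0; v < n; v++) {
            forward.add(new HashSet<>());
        }
        for (int[] e : edges) {
            int a = e[0];
            int b = e[1];
            if (a == b) {
                continue; // self-loops cannot be part of a triangle
            }
            if (precedes(a, b, degree, sortByDegree)) {
                forward.get(a).add(b);
            } else {
                forward.get(b).add(a);
            }
        }

        // Every triangle is found exactly once, starting from its first vertex in the order.
        long triangles = 0;
        for (int u = 0; u < n; u++) {
            for (int v : forward.get(u)) {
                for (int w : forward.get(v)) {
                    if (forward.get(u).contains(w)) {
                        triangles++;
                    }
                }
            }
        }
        return triangles;
    }

    // Order by (degree, id) when sortByDegree is set, otherwise by id only.
    private static boolean precedes(int a, int b, int[] degree, boolean sortByDegree) {
        if (sortByDegree && degree[a] != degree[b]) {
            return degree[a] < degree[b];
        }
        return a < b;
    }
}
```

Both settings of sortByDegree return the same count; only the amount of work per vertex differs.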

Using the Shell to Run Triangle Counting

opg> analyst.countTriangles(graph, true)

==> 1

Using Java to Run Triangle Counting

import oracle.pgx.api.*;

Analyst analyst = session.createAnalyst();

long triangles = analyst.countTriangles(graph, true);

The algorithm finds one triangle in the sample graph.

Tip:

When using the in-memory analyst shell, you can increase the amount of log output during execution by changing the logging level. See information about the :loglevel command with :h :loglevel.

3.6.3 Running the PageRank Algorithm

PageRank computes a rank value between 0 and 1 for each vertex (node) in the graph and stores the values in a double property. The algorithm therefore creates a vertex property of type double for the output.

In the in-memory analyst, there are two types of vertex and edge properties:

- Persistent Properties: Properties that are loaded with the graph from a data source are fixed, in-memory copies of the data on disk, and are therefore persistent. Persistent properties are read-only, immutable, and shared between sessions.

- Transient Properties: Values can only be written to transient properties, which are private to a session. You can create transient properties by calling createVertexProperty and createEdgeProperty on PgxGraph objects, or by copying existing properties using clone() on Property objects.

  Transient properties hold the results of computation by algorithms. For example, the PageRank algorithm computes a rank value between 0 and 1 for each vertex in the graph and stores these values in a transient property named pagerank. Transient properties are destroyed when the Analyst object is destroyed.

This example obtains the top three vertices with the highest PageRank values. It uses a transient vertex property of type double to hold the computed PageRank values. The PageRank algorithm uses the following default values for the input parameters: error tolerance = 0.001, damping factor = 0.85, and maximum number of iterations = 100.

Using the Shell to Run PageRank

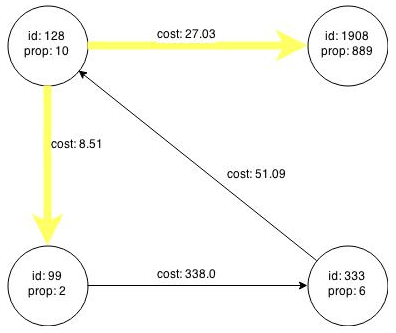

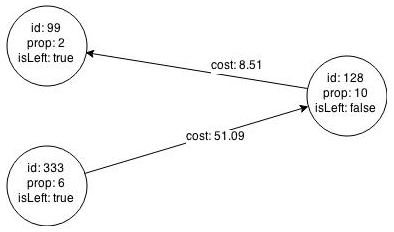

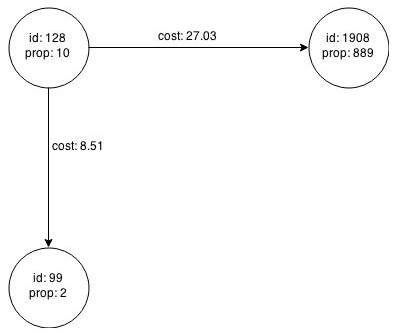

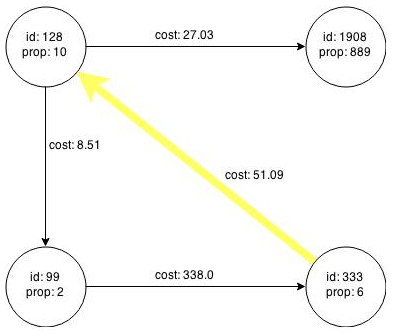

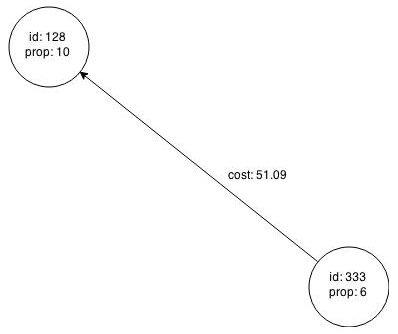

opg> rank = analyst.pagerank(graph, 0.001, 0.85, 100);

==> ...

opg> rank.getTopKValues(3)

==> 128=0.1402019732468347

==> 333=0.12002296283541904

==> 99=0.09708583862990475

Using Java to Run PageRank

import java.util.Map.Entry;

import oracle.pgx.api.*;

Analyst analyst = session.createAnalyst();

VertexProperty<Integer, Double> rank = analyst.pagerank(graph, 0.001, 0.85, 100);

for (Entry<Integer, Double> entry : rank.getTopKValues(3)) {

System.out.println(entry.getKey() + "=" + entry.getValue());

}
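Conceptually, selecting the top three values amounts to sorting the vertex-to-rank entries by value in descending order and keeping the first three. The following plain-Java sketch shows that idea on an ordinary Map; it does not use the PGX API, and the class TopKSketch, the method topK, and the sample values are hypothetical.

```java
import java.util.Comparator;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.stream.Collectors;

// Hypothetical illustration class; not part of the PGX API.
class TopKSketch {

    /** Returns the k entries with the largest values, largest first. */
    static List<Map.Entry<Integer, Double>> topK(Map<Integer, Double> values, int k) {
        return values.entrySet().stream()
                .sorted(Map.Entry.<Integer, Double>comparingByValue(Comparator.reverseOrder()))
                .limit(k)
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        // Made-up rank values keyed by vertex ID.
        Map<Integer, Double> rank = new LinkedHashMap<>();
        rank.put(1, 0.10);
        rank.put(2, 0.50);
        rank.put(3, 0.30);
        rank.put(4, 0.20);

        for (Map.Entry<Integer, Double> entry : topK(rank, 3)) {
            System.out.println(entry.getKey() + "=" + entry.getValue());
        }
        // prints:
        // 2=0.5
        // 3=0.3
        // 4=0.2
    }
}
```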

3.7 Using Custom PGX Graph Algorithms

A custom PGX graph algorithm allows you to write a graph algorithm in Java and have it automatically compiled to an efficient parallel implementation.

For more detailed information than appears in the following subtopics, see the PGX Algorithm specification at https://docs.oracle.com/cd/E56133_01/latest/PGX_Algorithm_Language_Specification.pdf.

- Writing a Custom PGX Algorithm

- Compiling and Running a PGX Algorithm

- Example Custom PGX Algorithm: PageRank

3.7.1 Writing a Custom PGX Algorithm

A PGX algorithm is a regular .java file with a single class definition that is annotated with @GraphAlgorithm. For example:

import oracle.pgx.algorithm.annotations.GraphAlgorithm;

@GraphAlgorithm

public class MyAlgorithm {

...

}

A PGX algorithm class must contain exactly one public method, which is used as the entry point. For example:

import oracle.pgx.algorithm.PgxGraph;

import oracle.pgx.algorithm.VertexProperty;

import oracle.pgx.algorithm.annotations.GraphAlgorithm;

import oracle.pgx.algorithm.annotations.Out;

@GraphAlgorithm

public class MyAlgorithm {

public int myAlgorithm(PgxGraph g, @Out VertexProperty<Integer> distance) {

System.out.println("My first PGX Algorithm program!");

return 42;

}

}

As with normal Java methods, a PGX algorithm method can return a value (an integer in this example). More interesting is the @Out annotation, which marks the vertex property distance as an output parameter. The caller passes output parameters by reference. This way, the caller has a reference to the modified property after the algorithm terminates.
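The output-parameter pattern can be illustrated in plain Java, without the PGX annotations, as a method that both returns a value and fills a container supplied by the caller. In this sketch the class OutParameterSketch, the method hopDistance, and the int[] stand-in for a vertex property are hypothetical; it shows the calling convention only, not how PGX compiles or runs algorithms.

```java
import java.util.ArrayDeque;
import java.util.Arrays;
import java.util.Deque;

// Hypothetical illustration class; not part of the PGX API.
class OutParameterSketch {

    /**
     * Breadth-first search from source. Writes hop distances into the caller-supplied
     * distance array (the output parameter; -1 means unreachable) and returns the
     * number of vertices reached.
     */
    static int hopDistance(int[][] outEdges, int source, int[] distance) {
        Arrays.fill(distance, -1);
        distance[source] = 0;

        Deque<Integer> queue = new ArrayDeque<>();
        queue.add(source);
        int reached = 1;

        while (!queue.isEmpty()) {
            int v = queue.remove();
            for (int dst : outEdges[v]) {
                if (distance[dst] == -1) { // first visit
                    distance[dst] = distance[v] + 1;
                    queue.add(dst);
                    reached++;
                }
            }
        }
        return reached;
    }

    public static void main(String[] args) {
        int[][] outEdges = { {1}, {2}, {}, {0} }; // 0 -> 1 -> 2, and 3 -> 0
        int[] distance = new int[outEdges.length]; // caller owns the output container
        int reached = hopDistance(outEdges, 0, distance);
        System.out.println(reached + " " + Arrays.toString(distance));
        // prints: 3 [0, 1, 2, -1]
    }
}
```

After the call returns, the caller still holds the distance array and can read the values that the method wrote into it, which mirrors what the @Out annotation expresses for vertex properties.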

3.7.1.1 Collections

To create a collection you call the .create() function. For example, a VertexProperty<Integer> is created as follows:

VertexProperty<Integer> distance = VertexProperty.create();

To get the value of a property at a certain vertex v:

distance.get(v);

Similarly, to set the property of a certain vertex v to a value e:

distance.set(v, e);

You can even create properties of collections:

VertexProperty<VertexSequence> path = VertexProperty.create();

However, PGX Algorithm assignments are always by value (as opposed to by reference). To make this explicit, you must call .clone() when assigning a collection:

VertexSequence sequence = path.get(v).clone();

Another consequence of values being passed by value is that you can check for equality using the == operator instead of the Java method .equals(). For example:

PgxVertex v1 = G.getRandomVertex();

PgxVertex v2 = G.getRandomVertex();

System.out.println(v1 == v2);
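For contrast, the following minimal sketch shows how ordinary Java, outside a PGX algorithm, treats collection assignment and the == operator. It uses only java.util classes; the class ValueSemanticsSketch and its method names are hypothetical and exist only to show why by-value semantics are worth making explicit.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical illustration class; plain Java, not PGX algorithm code.
class ValueSemanticsSketch {

    /** Plain assignment copies only the reference, so the alias observes later changes. */
    static boolean aliasSeesChange() {
        List<Integer> original = new ArrayList<>(List.of(1, 2, 3));
        List<Integer> alias = original;
        original.add(4);
        return alias.size() == 4;
    }

    /** An explicit copy is independent of the original, like a by-value assignment. */
    static boolean copySeesChange() {
        List<Integer> original = new ArrayList<>(List.of(1, 2, 3));
        List<Integer> copy = new ArrayList<>(original);
        original.add(4);
        return copy.size() == 4;
    }

    /** In plain Java, == compares object identity while equals() compares contents. */
    static boolean equalButNotSame() {
        List<Integer> a = new ArrayList<>(List.of(1, 2, 3));
        List<Integer> b = new ArrayList<>(List.of(1, 2, 3));
        return a.equals(b) && a != b;
    }

    public static void main(String[] args) {
        System.out.println(aliasSeesChange()); // prints: true
        System.out.println(copySeesChange());  // prints: false
        System.out.println(equalButNotSame()); // prints: true
    }
}
```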

3.7.1.2 Iteration

The most common operations in PGX algorithms are iterations (such as looping over all vertices, and looping over a vertex's neighbors) and graph traversal (such as breadth-first/depth-first). All collections expose forEach and forSequential methods, by which you can iterate over the collection in parallel and in sequence, respectively.

For example:

- To iterate over a graph's vertices in parallel: