Pre-General Availability: 2023-04-04

11 The Data Transforms Page

Data Transforms is an easy-to-use graphical user interface that Oracle Autonomous Database users can use to design graphical data transformations for data integration.

Data Transforms allows you to design data transformations in the form of data loads, data flows, and workflows, without requiring you to write any code. Data loads provide a convenient way of loading data into Autonomous Database, data flows define how the data is moved and transformed between different systems, while the workflows define the sequence in which the data flows are executed.

Note:

If you do not see the Data Transforms card then your database user is missing the required DATA_TRANSFORM_USER role.After your data flows and workflows are ready, you can execute the mappings immediately or schedule to execute them at a later time. Oracle Data Transforms run-time agent orchestrates the execution of jobs. On execution, Oracle Data Transforms generates the code for you.

- Oracle Cloud Marketplace: Create a Data Transforms instance from Oracle Cloud Marketplace. Data Transforms is available as a separate listing on Marketplace called Data Integrator: Web Edition.

- Database Actions Data Studio: Navigate to Database Actions Data Studio Page, and click Data Transforms in the Database Actions page.

If you have already registered a Data Transforms instance from OCI Marketplace with the Autonomous Database, the Data Transforms card on the Database Actions page will continue to take you to your Marketplace instance.

- APPLIES TO:

Data Transforms that is available as a separate listing on Marketplace

called Data Integrator: Web Edition.

Data Transforms that is available as a separate listing on Marketplace

called Data Integrator: Web Edition. - APPLIES TO:

Data Transforms instance that is registered with Autonomous Database.

Data Transforms instance that is registered with Autonomous Database. - APPLIES TO:

Data Transforms that is part of the suite of data tools built into Oracle Autonomous Database.

Data Transforms that is part of the suite of data tools built into Oracle Autonomous Database.

The following topics describe how to access and use the Data Transforms page.

- unresolvable-reference.html#GUID-294447AE-CD0E-4056-8212-54BACC4B688C

- unresolvable-reference.html#GUID-A7AA5C37-0A7F-41B0-8533-BE74F4E5F554

- unresolvable-reference.html#GUID-6E63B44D-4896-4789-89D3-6E1FC5E907B9

- unresolvable-reference.html#GUID-21AF7E2D-F73B-4252-94F4-36A483DBD3CC

- unresolvable-reference.html#GUID-74C9DC45-8137-49B4-A9E3-44C30A012501

- Creating Data Flows

- unresolvable-reference.html#GUID-7B13FC67-94B8-4B51-80E6-D17BAAF8B2ED

- unresolvable-reference.html#GUID-5895961A-6D91-47D3-9401-71BAC0098882

- unresolvable-reference.html#GUID-FD0F4B4E-6CFB-4742-8ED0-EEE70D074684

- unresolvable-reference.html#GUID-B54E671D-CB37-47FA-AFC2-A507A8F53094

Accessing the Data Transforms Tool

APPLIES TO: ![]() Data Transforms that is part of the suite of data tools built into Oracle Autonomous Database.

Data Transforms that is part of the suite of data tools built into Oracle Autonomous Database.

To use the Data Transforms tool you must access Database Actions as the ADMIN user or have the DATA_TRANSFORM_USER role assigned. See Manage User Profiles with Autonomous Database for information on granting roles.

To access the Data Transforms tool:

- Login as an ADMIN user or a user with the DATA_TRANSFORM_USER role assigned.

- Click Data Transforms in the Database Actions page, or click the Selector icon and select Data Transforms from the Data Studio menu in the navigation pane.

Note:

Data Transforms is also available as a separate listing on OCI Marketplace. If you have already registered a Data Transforms instance from OCI Marketplace with the Autonomous Database, the Data Transforms card on the Database Actions page will continue to take you to your Marketplace instance. If you wish to use the embedded Data Transforms, then you must unregister the Marketplace instance. See Unregistering the ODI Instance from Autonomous Database. - When you login to the Data Transforms tool for the first time, you need to provide the database user credentials to sign in.

It takes approximately 1-2 minutes for the Data Transforms service to start. After the service starts, the Data Transforms home page opens.

- From the left pane of the Home page, click the Connections tab to view the newly created Autonomous Database connection.

4. Click the Actions icon next to the connection and select Edit.

- In the Update Connection page, enter the database username and password to use the connection. Click Update to save the changes.

Data Transforms Notes

APPLIES TO: ![]() Data Transforms that is part of the suite of data tools built into Oracle Autonomous Database.

Data Transforms that is part of the suite of data tools built into Oracle Autonomous Database.

-

Regional unique constraint for database names: Oracle Databases 19c onwards you can create multiple databases with the same name in a particular region with the database names being unique only at the compartment level. However, Data Transforms does not support duplicate database names even if the databases exist in different compartments. While you may be able to launch the Data Transforms tool, login to all such instances would fail with an “invalid credentials” error.

-

Data Transforms jobs stuck in the Running status: If there are any Data Transforms jobs that are stuck in the Running status for an interminably long time, either stop the job immediately or delete the job and then rerun it. You may want to do this to avoid unwanted usage of resources for your tenancy. If the issue persists, file a service request at Oracle Cloud Support or contact your support representative.

Creating and Running a Data Load

A data load allows you to load multiple data entities from a source connection to a target connection.

Note:

Data load is not supported for Oracle Object Storage connections.Creating a Data Load

You can create a data load in either of the following ways:

Creating a Data Load from the Home Page

This section describes the generic steps to create a data load. If you plan to load and transform data using OCI GoldenGate, create the data load using the Projects page. See Creating a Data Load from the Projects Page.

To create a data load from the Home page:

- From the left pane, click the Home tab. Click Load Data.

The Create Data Load wizard appears.

- In the Name field, enter a name for the data load. The field is pre-populated with a default name. You can edit this value.

- Add a description. This is optional.

- Select a project name from the drop-down. If this your first time here, click the + icon to create a project. If you have logged in as SUPERVISOR, the default project name is

Home. For other users, the default project name is in the format<username>_Home. You can edit the default value. See unresolvable-reference.html#GUID-74C9DC45-8137-49B4-A9E3-44C30A012501 for more information about projects. - Click Next.

- To define your source connection, from the Connection drop-down, select the required connection from which you wish to add the data entities. Alternatively, click the + icon to create a new connection. See unresolvable-reference.html#GUID-6E63B44D-4896-4789-89D3-6E1FC5E907B9 for more details about connections.

- In the Schema drop-down, all schema corresponding to the selected connection are listed in two groups:

- Existing Schema (ones that you've imported into Oracle Data Transforms) and

- New Database Schema (ones that you've not yet imported).

Select the schema that you want to use from the drop down.Note:

If there is missing information such as user name or password not specified, wallet missing, and so on, the list may fail to populate with a “This connection has missing information.” error. Click the Edit icon ( ) to open the Update Connection page where you can fill in the missing details.

) to open the Update Connection page where you can fill in the missing details.

- Click Next.

- Similarly, define the target connection.

- Click Save.

The Data Load Detail page appears listing all the loaded data entities.

Creating a Data Load from the Projects Page

To create a data load from the Projects page,

- On the Home page, click Projects, and then the required project title. In the left pane, click Data Loads, and then click Create Data Load.

The Create Data Load wizard appears.

- In the Name field, enter a name for the data load.

- Add a description. This is optional.

- Select the source and target schemas.

Note:

Make sure that you have created connections before you plan to create a data load using the Projects page. See unresolvable-reference.html#GUID-6E63B44D-4896-4789-89D3-6E1FC5E907B9 for more details about connections.- To use the OCI GoldenGate Deployment Console to load data entities:

- Select the Use GoldenGate checkbox.

The Create Data Load page now shows fields that are specific to OCI GoldenGate.

- Select the GoldenGate connection from the drop-down.

- To select the source connection, select the Registered Database and the Schema.

- Similarly, define the target connection.

- Select the Use GoldenGate checkbox.

- To use all other connection types to load entities:

- To define your source connection, select the connection from which you wish to add the data entities from the Connection drop-down.

- From the Schema drop-down select the schema that you want to use. All schema corresponding to the selected connection are listed in two groups

- Existing Schema (ones that you've imported into Oracle Data Transforms) and

- New Database Schema (ones that you've not yet imported).

- To use the OCI GoldenGate Deployment Console to load data entities:

- Click Create.

The Data Load Detail page appears listing all the loaded data entities.

Running a Data Load

You can run a data load in either of the following ways:

Running a Data Load from the Data Load Detail Page

The Data Load Detail page displays the information that you need to run a data load. You can apply different actions - incremental merge, incremental append, recreate, truncate, append - on the data entities before loading it to the target schema.

Note:

APPLIES TO:If the data load is huge, you might want to increase the memory of the ODI Agent to avoid any issues. Follow the instructions in Increasing the Memory of ODI Agent before you start to run the data load.

To run a data load from the Data Load Detail Page:

- In the Data Load Detail page, select the data entities that you want to move to the target schema. To filter the list, you can do one of the following:

- Enter the name or part of the name in the Name text box. This search returns data entities that include the specified string in the name. For example, the search string AD returns results such as ADMIN, ADDRESS, BADGE, UPGRADE, WORKLOAD, and so on.

- Turn on the Use Regular Expression toggle to use pattern matching to search for particular strings of characters. For example, the search string CO.* returns results such as CONTACT, COUNT, COUNTRY and so on.

You can select multiple data entities and load them to the target schema. You can also sort the displayed list using the following options:- All - Displays all the data entities that match the search criteria.

- Selected - Displays all the rows that you selected.

- Unselected - Displays all the unselected rows.

- Invalid – Displays all the invalid rows.

Note:

These options display the list of data entities based on the search criteria. To view the list of all data entities, clear any applied filters. - Click on the required icon to choose any of the following actions:

- Incremental Merge - Updates the data in the selected column by comparing the source table with the target table based on the specified merge key. To use this option, select the column that you want to merge and then select the merge key. Click the Validate icon (

) to validate the selected values.

) to validate the selected values.

- Incremental Append - Updates data in the selected column in the target schema. To use this option, select the column that you want to update and click the Validate icon (

) to validate the selection.

) to validate the selection.

- Recreate – If the table is already present in the target schema, drops the existing table and recreates it.

Note:

This option is not available for data entities that are loaded using OCI GoldenGate. - Truncate – If the table is already present in the target schema, deletes all the data from the selected table. Nothing is dropped.

Note:

This option is not available for data entities that are loaded using OCI GoldenGate. - Append – If the table is already present in the target schema, adds rows to the table.

- Do Not Load – Skips the selected data entity from the data load job. After you click Save, these data entities are no longer available for future data load jobs.

You can select multiple data entities and apply different actions. The unsaved rows are highlighted in bold.

- Incremental Merge - Updates the data in the selected column by comparing the source table with the target table based on the specified merge key. To use this option, select the column that you want to merge and then select the merge key. Click the Validate icon (

- Click

to save the changes. A green checkmark (

to save the changes. A green checkmark ( ) in the row indicates that the changes are saved.

) in the row indicates that the changes are saved.

- To start the data load,

- Click

.

.

- For GoldenGate data loads, click

.

.

A confirmation prompt appears when the data load starts successfully.

- Click

To check the status of the data load, see the Status panel on the right below the Target Schema details. For details about the Status panel, see unresolvable-reference.html#GUID-FD0F4B4E-6CFB-4742-8ED0-EEE70D074684. This panel shows links to the jobs that execute to run this data load. Click the link to monitor the progress on the Job Details page. For more information about jobs, see unresolvable-reference.html#GUID-B54E671D-CB37-47FA-AFC2-A507A8F53094.

Running a Data Load from the Workflow Details Page

You can add multiple data loads to a workflow along with data flows or workflows and run them as separate steps. The left panel of the Workflow Details page lists the data flows, workflows, and data loads that are available for use.

- Data Loads - This folder lists all the data loads that you have created in the local Data Transforms instance. When you select a step in the workflow that is a local data load, the Properties Panel available on the right side of the design canvas displays the Type as

Data Loadand the Linked Object as Home><nameofDataLoad>. - Remote Data Loads - This folder lists all the data loads that you have created in a remote Data Transforms instance. See Creating a Data Transforms Connection for Remote Data Load. When you select a step in the workflow that is a remote data load, the Properties Panel available on the right side of the design canvas displays the Type as

Remote Data Loadand the Linked Object asName of the Data Transforms connection><nameofDataLoad>.

After you have added the data loads to the workflow, click ![]() to execute them.

to execute them.

Increasing the Memory of ODI Agent

APPLIES TO: ![]() Data Transforms that is available as a separate listing on Marketplace

called Data Integrator: Web Edition.

Data Transforms that is available as a separate listing on Marketplace

called Data Integrator: Web Edition.

If the data that you are loading from the source schema is huge, then you may want to increase the memory of the ODI Agent to avoid OutOfMemory exception errors.

To increase the memory of the ODI Agent:

- Edit the

/u01/oracle/transforms_home/common/scripts/jettyServer.shfile. - Add the java -Xms1024m -Xmx4096m parameter.

- Restart the jetty server. Log in as OPC user and execute the following commands:

ssh -i <path to id_rsa> opc@<Instance IP> sudo su systemctl stop|start jettyserver.service exit

Working with Connections

Connections help you to connect Data Transforms to various technologies reachable from your OCI network.

To create a new connection:

The newly created connections are displayed in the Connections page.

Click the Actions icon (![]() ) next to the selected connection to perform the following operations:

) next to the selected connection to perform the following operations:

- Select Edit to edit the provided connection details.

- Select Test Connection to test the created connection.

- Select Delete Schema to delete schemas.

- Select Delete Connection to delete the created connection.

You can also search for the required connection to know its details based on the following filters:

- Name of the connection.

- Technology associated with the created connection.

List of Supported Connection Types

Note:

APPLIES TO:- For the connectors that require driver installation, you need to copy the jar files to the

/u01/oracle/transforms_home/userlibsdirectory before you add the connection. - Apart from the connection types listed here you can create custom connectors, which you can use to connect Data Transforms to any JDBC supported data sources. See Creating Custom Connectors.

| Name | Type | Supported in Data Integrator: Web Edition | Supported in Data Transforms built into Autonomous Database | Notes |

|---|---|---|---|---|

| Aha! | Application | Yes | Yes | |

| Ahrefs | Application | Yes | Yes | |

| Amazon Aurora | Database | Yes | Yes | |

| Amazon EMR Hive | Database | Yes | Yes | |

| Amazon Redshift | Database | Yes | Yes | |

| Apache Hive | Database | Yes | Yes | |

| Apache Spark SQL | Database | Yes | Yes | |

| AWS S3 | Database | Yes | Yes | |

| Azure Billing | Application | Yes | Yes | |

| Azure Compute | Database | Yes | Yes | |

| Azure Data Lake Storage | Database | Yes | Yes | |

| Azure Reserved VM Instances | Database | Yes | Yes | |

| Azure Resource Health | Application | Yes | Yes | |

| Azure SQL Database | Database | Yes | Yes | |

| Azure Synapse Analytics | Database | Yes | Yes | |

| BigCommerce | Application | Yes | Yes | Requires driver installation |

| Cassandra | Database | Yes | Yes | |

| Cloudera CDH Hive | Database | Yes | Yes | |

| Cloudera Impala | Database | Yes | Yes | |

| Confluence Cloud | Database | Yes | Yes | |

| Data Transforms | Service | Yes | Yes | For instructions on connecting to an existing Data Transforms instance, see Creating a Data Transforms Connection for Remote Data Load. |

| DataStax | Application | Yes | Yes | |

| DocuSign | Database | Yes | Yes | |

| eBay | Application | Yes | Yes | Requires driver installation |

| EnterpriseDB | Database | Yes | Yes | |

| FinancialForce | Application | Yes | Yes | |

| FourSquare | Application | Yes | Yes | |

| Generic Rest | Application | Yes | Yes | For information about connecting to any REST service endpoint to create a connection, see Creating a REST Server Connection. |

| GitHub | Application | Yes | Yes | |

| Google Ads | Application | Yes | No | Requires driver installation |

| Google AdSense | Application | Yes | Yes | |

| Google Analytics | Application | Yes | Yes | |

| Google BigQuery | Database | Yes | Yes | |

| Google Calendar | Application | Yes | Yes | |

| Google Campaign Manager | Application | Yes | Yes | |

| Google Contacts | Application | Yes | Yes | |

| Google Drive | Database | Yes | Yes | |

| Google Search Ads 360 | Application | Yes | Yes | |

| Greenplum | Database | Yes | Yes | |

| Hortonworks Hive | Database | Yes | Yes | |

| HubSpot | Application | Yes | Yes | |

| Hyperion Essbase | Application | Yes | No | |

| Hypersonic SQL | Database | Yes | Yes | |

| IBM BigInsights | Database | Yes | Yes | |

| IBM DB2 Hosted | Database | Yes | Yes | |

| IBM DB2 UDB | Database | Yes | Yes | |

| IBM DB2 Warehouse | Database | Yes | Yes | |

| IBM DB2/400 | Database | Yes | Yes | |

| Informix | Database | Yes | Yes | |

| Jira | Application | Yes | Yes | |

| Klaviyo | Application | Yes | Yes | |

| Magento | Application | Yes | No | Requires driver installation |

| Mailchimp | Application | Yes | Yes | |

| MapR Hive | Database | Yes | Yes | |

| Marketo | Application | Yes | Yes | |

| Microsoft Dynamics 365 | Application | Yes | Yes | |

| Microsoft SharePoint | Application | Yes | Yes | |

| Microsoft SQL Server | Database | Yes | Yes | |

| Mongo DB | Database | Yes | Yes | |

| MySQL | Database | Yes | Yes | |

| MySQL Heatwave | Database | Yes | Yes | |

| Netezza | Database | Yes | No | Oracle Data Transforms uses the Netezza JDBC to connect to a NCR Netezza database. This driver must be installed in your Data Transforms userlibs directory. See Download the Netezza JDBC driver for more information.

|

| Oracle | Database | Yes | Yes | |

| Oracle Analytics Cloud | Application | Yes | Yes | |

| Oracle BI Cloud Connector | Application | Yes | Yes | |

| Oracle EBS | Application | Yes | Yes | |

| Oracle Financials Cloud | Application | Yes | Yes | For information about creating a connection using Oracle Financials Cloud, see Creating an Oracle Financials Cloud Connection. |

| Oracle Fusion ERP | Application | Yes | Yes | |

| Oracle Fusion Sales | Application | Yes | Yes | |

| Oracle Fusion Service | Application | Yes | Yes | |

| Oracle GoldenGate – OCI | Service | Yes | Yes | |

| Oracle Marketing Cloud | Application | Yes | Yes | |

| Oracle NetSuite | Application | Yes | Yes | |

| Oracle Object Storage | Database | Yes | Yes | For information about creating a connection using Oracle Object Storage, see Creating an Oracle Object Storage Connection. |

| Oracle People Soft | Application | Yes | Yes | |

| Oracle Sales Cloud | Application | Yes | Yes | |

| Oracle Service Cloud | Application | Yes | Yes | |

| Oracle SIEBEL | Application | Yes | Yes | |

| PayPal | Application | Yes | Yes | |

| Pivotal HD | Database | Yes | Yes | |

| Pivotal HDB | Database | Yes | Yes | |

| PostgreSQL | Database | Yes | Yes | |

| Qmetry | Application | Yes | Yes | |

| QuickBooks Online | Application | Yes | Yes | |

| QuickBooks Payments | Application | Yes | Yes | |

| Quora Ads | Application | Yes | Yes | |

| Sage | Application | Yes | Yes | |

| Salesforce Chatter | Application | Yes | Yes | |

| Salesforce.com | Application | Yes | Yes | |

| SAP BW/4HANA | Database | Yes | Yes | |

| SAP NetWeaver | Database | Yes | Yes | |

| SAP S/4HANA Cloud | Application | Yes | Yes | |

| Semrush | Application | Yes | Yes | |

| Shopify | Application | Yes | Yes | Requires driver installation |

| Snowflake | Database | Yes | Yes | |

| Square | Application | Yes | Yes | |

| Stripe | Application | Yes | Yes | |

| Sybase As Anywhere | Database | Yes | Yes | |

| Sybase as Enterprise | Database | Yes | Yes | |

| Sybase AS IQ | Database | Yes | Yes | |

| TeamCity | Application | Yes | Yes | |

| Teradata | Database | Yes | No | Data Transforms uses the Teradata JDBC Driver to connect to a Teradata Database. To use Teradata as a data source the Teradata Gateway for JDBC must be running, and this driver must be installed in your Data Transforms userlibs directory. You will find the driver here: https://downloads.teradata.com/download/connectivity/jdbc-driver.

|

| Tumblr | Application | Yes | Yes | |

| Application | Yes | Yes | ||

| Veeva CRM | Application | Yes | Yes | |

| Volusion | Application | Yes | Yes | |

| Wistia | Application | Yes | Yes | |

| WooCommerce | Application | Yes | No | Requires driver installation |

| WordPress | Application | Yes | Yes | |

| Workday | Application | Yes | No | Requires driver installation |

| Xero | Application | Yes | Yes | Requires driver installation |

| Yelp | Application | Yes | Yes | |

| Zendesk | Application | Yes | Yes | Requires driver installation |

| Zoho CRM | Application | Yes | Yes | |

| Zoom | Application | Yes | Yes |

Creating Custom Connectors

To create a new connector:

- In the left pane, click Administration.

A warning message appears.

- Click Continue.

- In the left pane, click Custom Connections.

Custom Connections screen appears.

- Click Create Connection Type.

The Create Connection Type page appears.

- From the Category drop-down select the type of connection that you wish to create whether database, application, or service.

- Enter a name for the connection.

- Enter the name of the JDBC Driver.

- Click OK.

The newly created custom connection appears in the list and are available in the Create Connection page.

Click the Actions icon (![]() ) next to the selected connection to perform the following operations:

) next to the selected connection to perform the following operations:

- Select Edit, to edit the provided connection details.

- Select Delete, to delete the created connection.

Note:

You cannot delete custom connectors that have existing connections.

Creating a Data Transforms Connection for Remote Data Load

To define a Data Transforms connection:

The newly created connections are displayed in the Connections page.

Click the Actions icon (![]() ) next to the selected connection to perform the following operations:

) next to the selected connection to perform the following operations:

- Select Edit to edit the provided connection details.

- Select Test Connection to test the created connection.

- Select Delete Schema to delete schemas.

- Select Delete Connection to delete the created connection.

You can also search for the required connection to know its details based on the following filters:

- Name of the Connection.

- Technology associated with the created Connection.

Creating an Oracle Financials Cloud Connection

To define an Oracle Financials Cloud connection,

The newly created connections are displayed in the Connections page.

Click the Actions icon (![]() ) next to the selected connection to perform the following operations:

) next to the selected connection to perform the following operations:

- Select Edit, to edit the provided connection details.

- Select Test Connection, to test the created connection.

- Select Delete Schema, to delete schemas.

- Select Delete Connection, to delete the created connection.

You can also search for the required Connection to know its details based on the following filters:

- Name of the Connection.

- Technology associated with the created Connection.

To know about supported technologies, refer to the following section:

Creating and Using an Oracle NetSuite Connection

Creating the Oracle NetSuite Connection

To define an Oracle NetSuite connection:

- From the left pane of the Home page, click the Connections tab.

Connections page appears.

- Click Create Connection.

Create Connection page slides in.

- For Select Type,

- In the Name field, enter the name of the newly created connection.

- Select Applications as the type of connection that you wish to create.

- Select Oracle NetSuite as the connection type. Click Next.

- In the JDBC URL textbox, enter the URL of the SuiteAnalytics Connect server to be used for the connection.

- In the User text box enter the user name for connecting to the data server.

- In the Password text box enter the password for connecting to the data server.

- In the Account ID textbox, enter the account ID for connecting to the data server.

- In the Role ID textbox, enter the role ID for connecting to the data server.

- If the source that you want to use for mapping is a saved search, you need to also specify the following details in Saved Search Extraction:

- Application ID: Enter the NetSuite Application ID for Data Transforms.

- Version: Enter the NetSuite version number.

- Select the checkbox in Build Data Model to install pre-built dataflows and workflows that you can run to to extract data from NetSuite and move it to your Oracle target schema using the Build Data Warehouse wizard.

- Click Test Connection, to test the established connection.

- After providing all the required connection details, click Create.

The new connection is created.

The newly created connections are displayed in the Connections page.

Click the Actions icon (![]() ) next to the selected connection to perform the following operations:

) next to the selected connection to perform the following operations:

- Select Edit, to edit the provided connection details.

- Select Test Connection, to test the created connection.

- Select Build Data Warehouse, to select the functional areas and create the NetSuite Data Warehouse in the target schema. See Using the Build Data Warehouse Wizard for more information.

- Select Delete Schema, to delete schemas.

- Select Delete Connection, to delete the created connection.

You can also search for the required Connection to know its details based on the following filters:

- Name of the Connection.

- Technology associated with the created Connection.

Using the Build Data Warehouse Wizard

Data in your NetSuite account is grouped into business or subject areas in the Analytics Warehouse. The Build Data Warehouse wizard allows you to select the areas that you want to include in the newly created Data Warehouse.

To use the Build Data Warehouse Wizard:

- On the Home page, click the Connections tab. The Connections page appears.

- Click the Actions icon (

) next to the Oracle NetSuite connection that you want to use to build the data warehouse.

) next to the Oracle NetSuite connection that you want to use to build the data warehouse.

The Build Data Warehouse wizard opens.

- From the Connection drop-down list, choose the Autonomous Database connection where your target schema resides.

- From the Staging Schema drop-down, all schema corresponding to the selected connection are listed in two groups:

- Existing Schema (ones that you've imported into Oracle Data Transforms) and

- New Database Schema (ones that you've not yet imported).

- Similarly select the Target Schema.

- Click Next.

- Select the NetSuite Business Areas that you want to use to transfer data from the NetSuite Data Warehouse to the target schema.

- Click Save.

Data Transforms starts the process to build the data warehouse. Click Jobs on the left pane of the Home page to monitor the progress of the process. When the job completes successfully, Data Transforms creates a Project folder that includes all the pre-built workflows and dataflows, which you can run to transfer data from the NetSuite connection to your target schema. See Running the Pre-Built Workflows to Load Data into the Target Schema for more information.

Running the Pre-Built Workflows to Load Data into the Target Schema

When the Build Data Warehouse wizard completes successfully, Data Transforms creates a project that includes all the pre-built data flows and workflows that you can run extract data from a Netsuite connection and load it into your target schema.

To view and run the pre-built workflows:

- Click Projects on the left pane of the Home page and select the newly created NetSuite project.

- Click Workflows in the left pane. The following pre-built workflows are listed in the Project Details page:

- Stage NetSuite Source to SDS

- Extract Transaction Primary Keys

- Load SDS to Warehouse

- Apply Deletes

- All Workflows

- Click the Actions icon (

) next to the worflow you want to run and click Start.

) next to the worflow you want to run and click Start.

Oracle recommends that you run All Workflows to execute all the pre-built workflows.

To see the status of the workflow, click Jobs from the left pane in the current project. When the job completes successfully, all the data from the NetSuite connection is loaded into the target schema.

Creating an Oracle Object Storage Connection

To create an Oracle Object Storage connection you need to have an Oracle Cloud Infrastructure username and an auth token. See Getting an Auth Token for information about how to generate the auth token. You need to specify these details when you define the connection in Oracle Data Transforms.

Note the following:

- To use an Oracle Object Storage connection to import data into Data Transforms, you must use a public IP address to access the compute node. If you want to use a private IP address to access the Object Storage service, make sure that you have access to the Internet.

- The supported file format for loading data from Oracle Object Storage to Autonomous Database and vice versa is CSV.

- The supported data types are Numeric, Double, String, and Date.

- Data load is not supported for Oracle Object Storage connections.

- You cannot use an Oracle Object Storage connection as target for mapping.

To define an Oracle Object Storage connection,

The newly created connections are displayed in the Connections page.

Click the Actions icon (![]() ) next to the selected connection to perform the following operations:

) next to the selected connection to perform the following operations:

- Select Edit, to edit the provided connection details.

- Select Test Connection, to test the created connection.

- Select Delete Schema, to delete schemas.

- Select Delete Connection, to delete the created connection.

You can also search for the required Connection to know its details based on the following filters:

- Name of the Connection.

- Technology associated with the created Connection.

To know about supported technologies, refer to the following section:

Creating a REST Server Connection

To define a REST server connection:

The newly created connections are displayed in the Connections page.

Click the Actions icon (![]() ) next to the selected connection to perform the following operations:

) next to the selected connection to perform the following operations:

- Select Edit to edit the provided connection details.

- Select Test Connection to test the created connection.

- Select Delete Schema to delete schemas.

- Select Delete Connection to delete the created connection.

You can also search for the required connection to know its details based on the following filters:

- Name of the connection.

- Technology associated with the created connection.

Working with Data Entities

A Data Entity is a tabular representation of a data structure. It includes Database Tables or Views that can be used in a mapping as a source or target. They are simply the metadata for sources and targets. They are used in creating data flows.

The easiest and most common way to create a Data Entity is by importing its structure from the connection type (for example, Oracle database). You can add Data Entities to your newly created project in one of the following two ways:

Importing Data Entities

To import existing Data Entities:

- From the left pane of the Home page, click the Data Entities tab.

Data Entities page appears.

- Click Import Data Entities, to import the existing data entities.

Import Data Entities page slides-in.

- Select the Connection followed by Schema and then finally select the Type of Objects you want to import. For Oracle Object Storage connections, the Schema drop-down lists the name of the bucket that you specified in the URL when you created the connection.

- Choose a Mask/filter if you don't want to import every object in the schema.

Depending on the Connection Type, you will be presented with further options for importing.

Note:

For Oracle Object Storage connections, this value is case-sensitive. If Batch similar files is set toTrue, all the files that match the mask and have the same structure are grouped together into a single data entity. - [For Oracle Financials Cloud connections only] From the list in the Resources section, select the items that you want to import. When the import process completes, a table is created for each selected resource.

- [For REST server connections only] In the Resources section, do the following:

- In the Resource URI field, enter the URL of the REST service you want to import resources from.

- Click the + icon.

- In the Name column enter an identifier for the resource.

- In the Operation URI column enter the URI of the resource.

- Click Test Resource to check whether the entries are valid.

- Click Start.

A Job is created and the corresponding Job ID is displayed for you to track the session. Click the Job ID to view the details of the job.

Upon successful execution of the job, all the selected Data Entities are imported. Click the Refresh icon

present at the right corner of the Data Entities page, to see the new imported Data Entities.

present at the right corner of the Data Entities page, to see the new imported Data Entities.

Creating Data Entities

Data entities should possess the corresponding objects in the source connection to be used as a source in a data flow. Usually the import process makes sure that these objects are in coordination. However in some cases you can manually create or update the Data Entities through Oracle Data Transforms interface. Whenever you are creating or updating Data Entities always make sure to check if both the definitions are in coordination with each other.

When you use a Data Entity as a target then it doesn't have to exist previously in the target connection and can be created as a part of Data Flow execution.

- From the left pane of the Home page, click the Data Entities tab.

Data Entities page appears.

- Click Create Data Entity, to create a new data entity.

Create Data Entity page appears.

- In the Name text box, enter the name of the new Data Entity that you are creating.

- From the Connection drop-down, select the required connection from which you wish to add the newly created Data Entity.

Note:

Oracle Financials Cloud connections are not listed here because you cannot manually create data entities for such connections. You can only import data entities from Oracle Financials Cloud REST endpoints using the Import Data Entities page. See Importing Data Entities. - In the Schema drop-down, all schema corresponding to the selected connection are listed in two groups.

- New Database Schema (ones that you've not imported from before) and

- Existing Database Schema (ones that you've imported from before and are potentially replacing data entities).

From the Schema drop-down, select the required schema.

Note:

For Oracle Object Storage connections, the Schema drop-down lists the name of the bucket that you specified in the URL when you created the connection. - From the Type drop-down, select the data entity type.

- Table: To define the table structure for the newly created Data Entity, click the + icon to add columns. For each column, you can specify parameters such as Name, Data Type, Length, Scale, Not Null. Double click on the cell to configure the value.

Click the 'x' icon, to delete a row. Click the Up and Down arrows to sort the table rows.

- Inline View: To create the data entity using inline code, enter the Select statement in the Query tab. For example,

SELECT * FROM CUSTOMER. Click Validate.The Columns tab displays a read-only list of the columns that the query returns. Click the Preview tab to see the column data.

- Table: To define the table structure for the newly created Data Entity, click the + icon to add columns. For each column, you can specify parameters such as Name, Data Type, Length, Scale, Not Null. Double click on the cell to configure the value.

- In the Tags text box, enter a tag of your choice. You can use tags to filter the Data Entities displayed in the Data Entity Page.

- For Oracle Object Storage connections, this page displays the following options:

- Contents – Select the CSV file that contains the data you want to import. The metadata displayed in the preview table, such as the data type and length of columns, is based on the first row of the CSV file. Make sure that the CSV file has a header line. The header should contain only alphanumeric characters and no special characters.

- Group Files – Select this check box if you want to group data from multiple CSV files into one data entity. For example, say you want to merge data from Employee_Data1.csv, Employee_Data2.csv, and Employee_Data3.csv into a single data entity.

- Resource Name – Use this option along with Group Files. Enter the value you want to use to identify the files. The resource name should be a regular expression. You can use only an asterisk (*) as a wildcard character in the resource name. For example,

Employee_Data*.csv.

- For Oracle database connections, you can mark the data entities as a feature group. Expand Advanced Options and click the Treat as Feature Group checkbox.

- Click Save.

The new Data Entity is created.

All the newly created or imported Data Entities along with their details are displayed in the Data Entities page. The details include:

- Name of the Data Entity

- Connection for which the Data Entity was created

- Schema to which the Data Entity is associated

- Click the Actions icon (

) next to the selected Data Entity to perform the following operations:

) next to the selected Data Entity to perform the following operations:

- Select Edit, to edit the existing details.

- Select Preview, to preview the selected Data Entity. If the data entity belongs to an Oracle database, you can also view statistics of the table. See Viewing Statistics of Data Entities for more details.

- Select Delete, to delete the selected Data Entity.

- To delete the Data Entities in bulk, in the Data Entities page, select the check boxes of the respective Data Entities and click Delete.

- You can also search for the required Data Entity to know its details based on the following filters:

- Name of the Data Entity

- Connection for which the Data Entity was created

- Schema to which the Data Entity is associated

- Tag that is associated with the Data Entity

Viewing Statistics of Data Entities

Note:

This feature is available for Oracle database tables only.- In the Data Entities list, click the Actions icon (

) next to the Data Entity and click Preview. Select the Statistics tab to view the statistics of the selected data entity.

) next to the Data Entity and click Preview. Select the Statistics tab to view the statistics of the selected data entity.

- On any data flow click on any source or target data entity, and expand the properties panel in the right pane. Click Preview.

The statistical data is presented as follows:

- The total number of rows and columns in the data entity is displayed at the top.

- The statistics panel displays the thumbnail graphs for each column with information about the Min, Max, Distinct, and Null values.

- Two types of thumbnail representations are displayed based on the histogram:

- A bar chart represents data for frequency and top-frequency histograms. The bar chart show the first top 10 values for the number of rows in the table.

- A table lists data for Hybrid and Height-Balanced histograms. The table displays the entire data and is scrollable. The table displays the range for the values and the percentage of rows in each range.

- You can click each thumbnail to view the statistics of the column in a new browser tab.

- The detailed view of each chart also shows the type of histogram.

Working with Projects

Note:

The Projects page includes projects created by all users not just the logged in user.You can perform the following operations of a Project folder:

- View Details

- Edit the name

- Delete

Creating a Project

To create a new project:

- Click Create Project on the Projects page.

- On the Create Project page, provide a project name.

- Click Create.

After creating the project, you're redirected to the Projects page.

Viewing Project Details

To view project details:

- On the Projects page, select a project and click View Details from the project's Actions icon (

) or select a project to open its details page.

) or select a project to open its details page.

- It displays all the resources associated to the selected project. It includes details such as Data Flow Name and Folder details for the selected project.

- Click the Actions icon (

) of the respective data flow to edit, rename, copy, change folder, start or delete the required data flow of the selected project.

) of the respective data flow to edit, rename, copy, change folder, start or delete the required data flow of the selected project.

Deleting a Project

Note:

Be sure to review all contained resources before you delete the project.To delete a project, on the Projects page, select Delete from the Actions icon (![]() ) for the project you want to delete or select a project to open its details page, and then click Delete.

) for the project you want to delete or select a project to open its details page, and then click Delete.

In the Delete Project dialog, click Delete to confirm the delete operation.

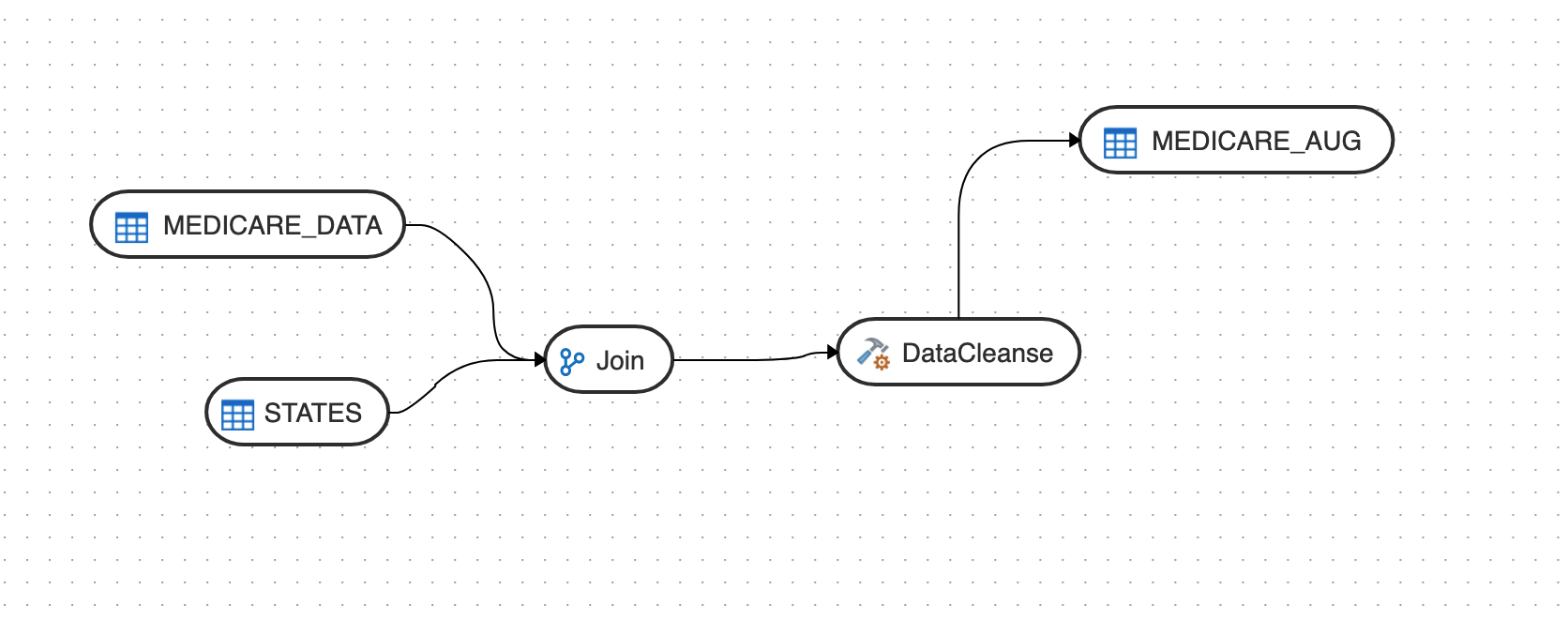

Creating Data Flows

To start creating data flows:

- On the Home page, click Transform Data.

Or

- On the Projects page, click Create Data Flow.

Or

- Select an existing project and on the Project Details page, click Create Data Flow.

Note:

When you see a blank page while clicking Create Data Flow, clear your browser cache and refresh the page to navigate to the Data Flow Editor.The Data Flow Editor appears allowing you to create a new data flow.

About Data Flow Editor

The Data flow editor is divided into five parts, the Data Entity Panel, the Transformations Toolbar, the Design Canvas, the Properties Panel, and the Status Panel.

- Data Entities Panel: The data entity panel displays the Data Entities that are available to use in your Data flows. The displayed list can be filtered using the Name and Tags fields. The panel includes options that let you add schemas, import data entities, remove any of the schemas that are associated with the data flow, and refresh data entities. See Add Components for information about how to use these options.

- Database Functions Toolbar: The Database Functions toolbar display the database functions that can be used in your data flows. Just like Data Entities, you can drag and drop the Database tools you want to use on the design canvas.

- Design Canvas: The design canvas is where you build your transformation logic. After adding the Data Entities and Database Functions to the design canvas, you can connect them in a logical order to complete your data flows.

- Properties Panel: The properties panel displays the properties of the selected object on the design canvas. The Properties Panel is grouped into four Tabs. General, Attributes, Preview Data, Column Mapping, and Options. Not all tabs are available as they vary based on the selected object. See Component Properties to know more about these options.

- Status Panel: When you run a data flow, the Status Panel shows the status of the job that is running in the background to complete the request. You can see the status of the job that is currently running or the status of the last job. For more information about the Status panel, see unresolvable-reference.html#GUID-FD0F4B4E-6CFB-4742-8ED0-EEE70D074684.

After designing the required data flow,

- Click

, to save the created/designed data flow.

, to save the created/designed data flow.

- Click

, to align the nodes of the designed data flow.

, to align the nodes of the designed data flow.

- Click

, to execute the created data flow.

, to execute the created data flow.

- Click

, to validate the created data flow.

, to validate the created data flow.

- Click

, to maximize or minimize the created data flow diagram in the design canvas.

, to maximize or minimize the created data flow diagram in the design canvas.

Oracle Database Functions

Oracle Data Transforms supports the following components to perform various database functions:

- Data Transformation

It contains the following components:

- Aggregate

- Expression

- Filter

- Join

- Distinct

- Lookup

- Set

- Sort

- Split

- Subquery Filter

- Table Function

- Data Preparation

It contains the following components:

- Data Cleanse

- Equi_Width Binning

- Quantile Binning

- Lead

- Lag

- Replace

- Machine Learning

It contains the following components:

- Prediction

- Outlier Detection

- JSON/XML/Text

It contains the following components:

- REGEXP COUNT

- REGEXP INSTR

- REGEXP SUBSTR

- REGEXP REPLACE

- Edit Distance Similarity

- Contains

- Oracle Spatial and Graph

It contains the following components:

- Buffer Dim

- Buffer Tol

- Distance Dim

- Distance Tol

- Nearest

- Simplify

- Point

- Spatial Join

- Geocode Tools:

Note:

The following Geocode Tools work only in non-Autonomous Database environment.- Geocode As Geometry

- Geocode

- Geocode Address

- Geocode All

- Geocode Address All

- Reverse Geocode

Create a New Data Flow

You can create data flows in any of the following ways:

From the Projects page

To create a data flow from the Projects page,

- On the Projects page, click Create Data Flow.

Create Data Flow page appears:

- In the Name field, enter a name for the new data flow.

- Select Create New Project, if you wish to create new project folder for the newly created data flow.

- Else, click Add to Existing Projects, if you wish to add the newly created data flow to an existing project folder.

- If you have selected Create New Project for the previous option, in the Project Name field, enter the name of the newly created project.

- Else, if you have selected Add to Existing Projects for the previous option, select the required project from the Project Name drop-down arrow.

- In the Description field, enter a description for the newly created data flow.

- Click Create.

From the Data Flows page within a project

To create a data flow from the Data Flows page within a project,

- On the Projects page click the project tile you wish to create a new data flow for. The Project Details page appears.

- In the Data Flows page, click Create Data Flow.

- Provide the Name and Description of the new data flow.

- Click Next.

- To define your source connection, from the Connection drop-down, select the required connection from which you wish to add the data entities.

- In the Schema drop-down, all schema corresponding to the selected connection are listed in two groups:

- Existing Schema (ones that you've imported into Oracle Data Transforms) and

- New Database Schema (ones that you've not yet imported).

Select the schema that you want to use from the drop down. For Oracle Object Storage connections, the Schema drop-down lists the name of the bucket that you specified in the URL when you created the connection.

- Click Save.

The Data Flow Editor appears that allows you to create a new data flow.

From the Home page

To create a data flow from the Home page,

- On the Home page, click Transform Data. The Create Data Flow page appears.

- Provide the Name and Description of the new data flow.

- Select a project name from the drop-down. Alternatively, click the + icon to create a project.

- Click Next.

- From the Connection drop-down, select the required connection from which you wish to add the data entities. Alternatively, click the + icon to create a new connection.

- In the Schema drop-down, all schema corresponding to the selected connection are listed in two groups:

- Existing Schema (ones that you've imported into Oracle Data Transforms) and

- New Database Schema (ones that you've not yet imported).

Select the schema that you want to use from the drop down.

- Click Save.

Add Components

- In the Data Entities panel, click Add a Schema to add schemas that contain the data entities that you want to use in the data flow.

- In the Add a Schema page, select the connection and schema name.

- Click Import.

- In the Import Data Entities page, select the Type of Objects you want to import. Choose a Mask/filter if you don't want to import every object in the schema and click Start.

- The Data Entities panel lists the imported data entities. The panel includes various options that let you do the following:

- Refresh Data Entities – Click the Refresh icon

to refresh the displayed list.

to refresh the displayed list.

- Name - Search for data entities by name.

- Tags - Filter the data entities by the name of the tag used.

- Import Data Entities - Right-click the schema to see this option. Use this option to import the data entities.

- Remove Schema - Right-click the data entity to see this option. Use this option to remove the schema from the list. Note that this option does not delete the schema, it only removes the association of the schema with this data flow.

- Refresh Data Entities – Click the Refresh icon

- Similarly add more schemas to the Data Flow, if required.

- Drag the required Data Entities that you want to use in the data flow and drop them on the design canvas.

- From the Transformations toolbar, drag the transformation component that you want to use in the data flow and drop them on the design canvas.

- Select an object on the design canvas, and drag the Connector icon (

) next to it to connect the components.

) next to it to connect the components.

- After saving the data flow, there may be a Transfer icon overlaid on one or more of the component connections. This indicates that ODI has detected an additional step and it is required to move the data between data servers. You can click on this Icon to view properties associated with this step.

Component Properties

The Properties Panel displays various settings for components selected in the Design Canvas. Depending on the component selected, you may see any of the following icons:

- General (

) - Displays the name of the component along with its connection and schema details. You can edit some of these properties.

) - Displays the name of the component along with its connection and schema details. You can edit some of these properties.

- Attributes (

) - Displays the details of all the attributes associated with the component.

) - Displays the details of all the attributes associated with the component.

- Column Mapping (

) - Allows you to map all the columns automatically. See Map Data Columns for more information.

) - Allows you to map all the columns automatically. See Map Data Columns for more information.

- Preview (

) - Displays a preview of the component. For Oracle tables, you can also view the statistics of the selected data entity. See Viewing Statistics of Data Entities for details about the statistical information available.

) - Displays a preview of the component. For Oracle tables, you can also view the statistics of the selected data entity. See Viewing Statistics of Data Entities for details about the statistical information available.

- Options (

) - Displays options such as

) - Displays options such as

- Truncate Table - Replaces any existing target table content with new data.

- Append - Inserts records from the flow into the target. Existing records are not updated.

- Incremental - Integrates data in the target table by comparing the records of the flow with existing records and updating the records when their associated data is not the same. Those that don't yet exist in the target are inserted.

The option includes an Auto compression feature that is set to

Trueby default. For data flow jobs that use the Incremental Update mode to load data onto a compressed Oracle target partition, the Auto compression feature recompresses the modified target partitions after the load completes successfully. For table partitions that are not originally compressed, the compression is skipped irrespective of whether Auto compression is set to true.Note:

The Auto compression option is available to the ADMIN user or to a user with the DWROLE role. For data flows that have schema users other than ADMIN you need to either assign the DWROLE to the user or disable Auto compression to avoid execution errors.

Map Data Columns

To map columns by Position or by Name:

- Select the target Data Entity.

- Click the arrow icon present on the top right corner to expand the Properties Panel. This will give you more space to work with.

- In the Properties Panel, click the Column Mapping icon (

).

).

- To map the columns by Position or by Name, from the Auto Map drop-down menu, select By Position or By Name.

To map the columns manually:

- From the Auto Map drop-down menu, select Clear to clear the existing mappings.

- Drag and drop the attributes from the tree on the left to map with the Expression column.

- To edit an expression, click the Edit icon of the respective column. The Expression Editor appears allowing you to perform the required changes (for example, you can just add an expression-"UPPER" or open the Expression Editor to edit the expression).

Note:

Use the expression editor only if you have complex expressions for a particular column. - Click OK.

Create Data Entities within the Data Flow editor

If you have already created or imported your target data entity, then you would drag the data entity onto the design canvas and complete the column mappings and options.

To create the definition of a new target table while in the Data Flow editor,

- Select the component at the end of your data flow.

- Click the Add Data Entity icon

present on the top right corner of the target component.

present on the top right corner of the target component.

- Add Data Entity page appears allowing you to configure the following details of the target component:

General tab

- In the Name text box, enter the name of the newly created Data Entity.

- The Alias text box is auto-populated with the name of the newly created Data Entity.

- From the Connection Type drop-down, select the required connection from which you wish to add the newly created Data Entity.

- It loads the server name coined at the time of connection creation. From the Server drop-down, select the required server name from which you wish to add the newly created Data Entity.

- From the Schema drop-down, select the required schema.

- Click Next.

Columns tab

It allows you to create, remove or edit the column definitions.

- Click the

Add Columns icon, to add new columns to the newly created Data Entity.

Add Columns icon, to add new columns to the newly created Data Entity.

A new column is added to the displayed table.

- The table displays the following columns:

- Name

- Data Type - Click the cell to configure the required Data Type.

- Scale

- Length

- Actions - Click the cross icon to delete the created column.

- To delete the columns in bulk, select the columns and click the

Delete icon.

Delete icon.

- To search for the required column details, in the Search text box enter the required column name and click enter. The details of the required column are displayed.

- Click Next.

Preview Data Entity tab

It displays a preview of all the created columns and their configured details. If the data entity belongs to an Oracle database, you can also view statistics of the table. See Viewing Statistics of Data Entities for more information.

- Click Save.

The new target Data Entity is created.

- Expand the Properties Panel in the right pane to view the following settings of the created components:

- General - Displays the Name of the component along with its Connection and Schema details.

- Attributes - Displays the details of all the attributes associated to the component.

- Column Mapping - Click Auto Map to map all the columns automatically.

- Preview - Click to have a preview of the component.

- Options - Change the options as appropriate.

Validate and Execute a Data Flow

- Click Save.

After saving, if data needs to be staged before transforming, Transfer button is added to one or more links. You can click these buttons to set more options, if available.

- Click the Simulate Code icon (

) if you want to check the code that will run to complete the tasks that are performed when you execute the data flow job. The source and target details are displayed in different colors for ease of reference. This is handy if you want to check if the mapping is correct before you run the job or if the job fails. Note that the code cannot be used for debugging. For detailed information about the job, see the Job Details page.

) if you want to check the code that will run to complete the tasks that are performed when you execute the data flow job. The source and target details are displayed in different colors for ease of reference. This is handy if you want to check if the mapping is correct before you run the job or if the job fails. Note that the code cannot be used for debugging. For detailed information about the job, see the Job Details page.

- Click the Validate icon (

) in the toolbar above the design canvas to validate the data flow.

) in the toolbar above the design canvas to validate the data flow.

- After a successful validation, click the Execute icon (

) next to the Validate icon to execute the data flow.

) next to the Validate icon to execute the data flow.

A message appears that displays the execution Job ID and name. To check the status of the data flow, see the Status panel on the right below the Properties Panel. For details about the Status panel, see unresolvable-reference.html#GUID-FD0F4B4E-6CFB-4742-8ED0-EEE70D074684. This panel also shows the link to the Job ID that you can click to monitor the progress on the Jobs page. For more information, see unresolvable-reference.html#GUID-B54E671D-CB37-47FA-AFC2-A507A8F53094.

For data flows created using Oracle Object Storage connections, the data from the source CSV file is loaded into the target Oracle Autonomous Database. You can also export data from an Oracle Autonomous Database table to a CSV file in Oracle Object Storage.

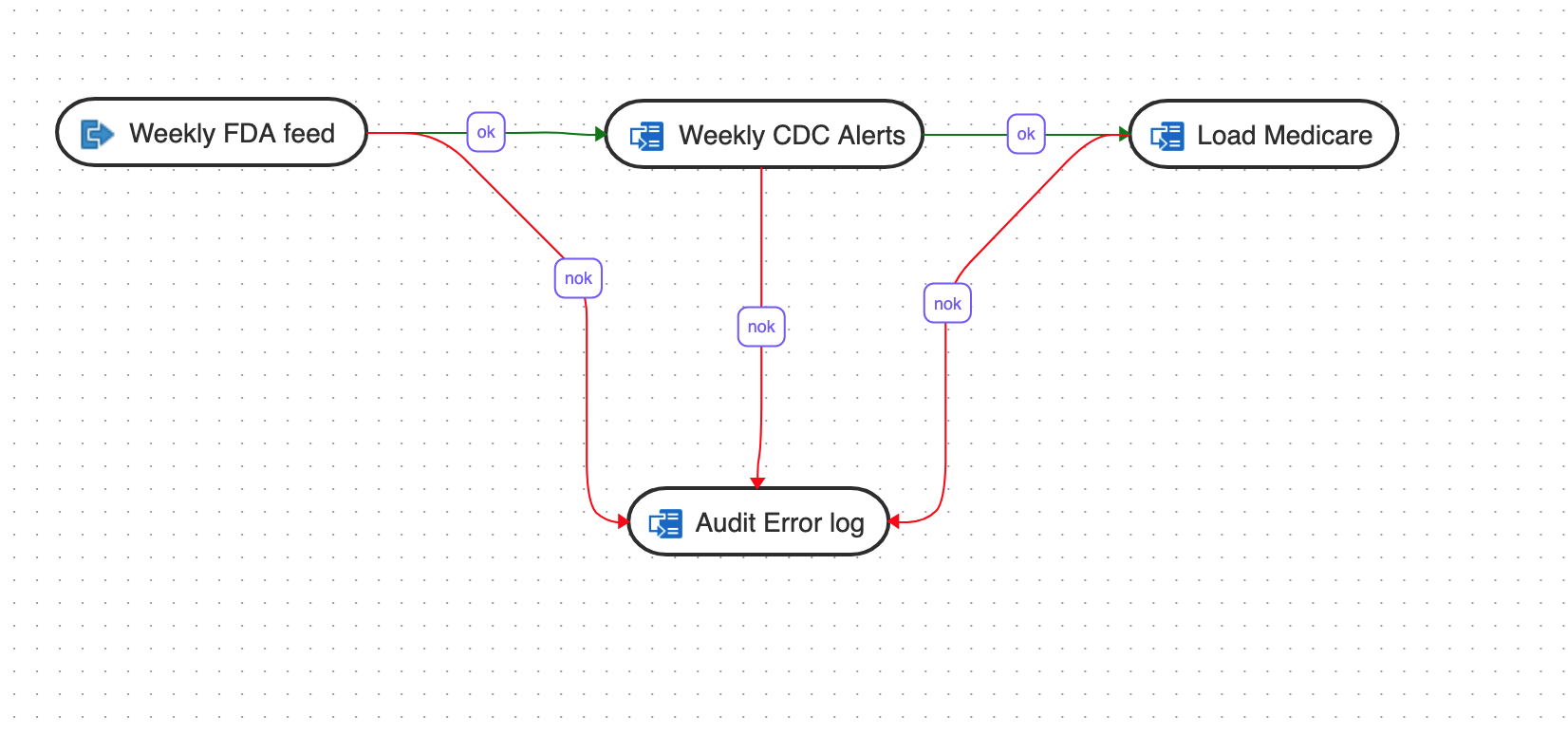

Introduction to Workflows

A workflow is made up of multiple data flows organized in a sequence in which they must be executed. Each data flow is executed as a step. You can also add workflows within a workflow.

When you execute a workflow, a data flow either succeeds or fails. Depending on whether the first data flow succeeds or fails, you can choose the next data flow that must be executed.

Here is an example of a workflow:

Description of the illustration introduction-workflows.png

In this example, the workflow performs the following actions:

- Execute the "Weekly FDA feed" data flow.

- If the "Weekly FDA feed" data flow execution is successful, execute the "Weekly CDC alerts" data flow.

- If the "Weekly CDC alerts" data flow execution is successful, execute the "Load Medicare" data flow.

- If any of the above data flow fails, then execute the "Audit_error_log" data flow.

Create a New Workflow

- On the Home page, click the required Project title.

You are navigated to the Project Details page.

- In the left pane, click Workflows.

The Workflow page appears.

- On the Workflow page, click Create Workflow.

The Create Workflow page appears.

- In the Name field provide a name for the new workflow and click Create.

The new workflow is created and listed in the Workflow page with its Name and Folder details.

- Click the Workflow to configure the Workflow Details.

- From the left panel drag the data flows, workflows, or data loads that you want to run in the workflow. If you have connected to any other Data Transforms instance, you can also add data loads that you have created in that Data Transforms instance. See Creating a Data Transforms Connection for Remote Data Load and Running a Data Load for more information.

- Select either the ok (green arrow) icon, the not ok (red arrow) or the ok/not ok (black arrow) in the toolbar.

This defines the mode that subsequent links drawn on the canvas will be set to.

- ok (green) defines the success path.

- not ok (red) defines the failure path.

- ok/not ok (black) defines a path that is followed on success or failure.

- Sleep (clock icon) defines the duration of delay between steps.

- Select the data flow and click the Connector icon (

) next to it to connect it with the next data flow.

) next to it to connect it with the next data flow.

- After defining all the required Workflow details,

- Click

, to save the created/designed workflow.

, to save the created/designed workflow.

- Click

, to align the nodes of the designed workflow.

, to align the nodes of the designed workflow.

- Click

, to execute the created workflow.

, to execute the created workflow.

- Select a single step in the canvas and click the Execute Step icon (

), to execute only the selected data flow or workflow.

), to execute only the selected data flow or workflow.

To check the status of the workflow, see the Status panel on the right below the Properties Panel. For details about the Status panel, see unresolvable-reference.html#GUID-FD0F4B4E-6CFB-4742-8ED0-EEE70D074684. This panel shows the link to the Job ID that you can click to monitor the execution status on the Jobs page. For more information about jobs see unresolvable-reference.html#GUID-B54E671D-CB37-47FA-AFC2-A507A8F53094.

- Click

, to maximize or minimize the created workflow diagram in the design canvas.

, to maximize or minimize the created workflow diagram in the design canvas.

The newly created workflows get listed in the Project Details page. The following details are displayed:

- Name of the Workflow

- Folder corresponding to the workflow - Default Folder

To view the details of a workflow, click the name of the workflow and you are navigated to the Workflow Details page.

In the left pane, you can search for the required Data Flow or Workflow using the Name filter. In the Name text box, enter the name of the required Data Flow or Workflow.

Select a step in the canvas and check the Properties Panel available at the right side of the design canvas to know the following properties of the selected step in a created Data Flow or Workflow:

- Name

- Linked Object

Note:

You cannot edit this field. - Step -

- First Step - Select the First Step check-box, to execute the selected step as the first step for execution in a Data Flow or Workflow.

Note:

You can select only a single step as a first step for execution in a Data Flow or Workflow.

- First Step - Select the First Step check-box, to execute the selected step as the first step for execution in a Data Flow or Workflow.

- Number of attempts on Failure

- Time between attempts in seconds(s)

- Log steps in journal - Select any of the following options from the drop-down box:

- Always

- Never

- Error

- Click

Scheduling Data Flows or Workflows

You can schedule Workflows and Data Flows to run at specified time interval. Each schedule allows you to specify a start date and time, and execution frequency.

To schedule a data flow or a workflow:

- In the left pane, click Schedules.

- Click Create Schedule.

- From the Resource drop-down menu, select Data Flow or Workflow as appropriate.

- From the Resource Name drop-down list, select a data flow or workflow that you want to schedule.

- From the Frequency drop-down list, select the time frame in which you wish to execute the created schedule. You can schedule it On Startup, Simple, Daily, Hourly, Weekly, Monthly or Yearly. Based on the selected frequency, Time field appears allowing you to schedule the selected Data Flow or Workflow. For detailed information refer to the below table:

Frequency Time Values On Startup NIL Simple Click Select Date Time icon next to Date and Time field, to select the required date and time in MM/DD/YYandHH:mmformat.Daily Click the clock icon next to Time field, to select the required time in HH:mmformat.Hourly Click up and down arrows next to Time field, to select the required time in Minutes:Secondsformat.Weekly - Run Every parameter appears with check-boxes for all the days of the week.

- Click the clock icon next to Time field, to select the required time in

HH:mmformat.

Monthly (day of the month) - Select the required date from the Monthly Date drop-down box.

- Click the clock icon next to Time field, to select the required time in

HH:mmformat.

Monthly (week day) - From the Monthly date drop down box select the required value.

- From the Week day drop-down box, select the required weekday in which you wish to schedule the job.

Yearly - From the Month drop down box, select the required month in which you wish to schedule the job.

- From the Monthly date drop down box, select the required date in which you wish to schedule the job.

- Click the clock icon next to Time field, to select the required time in

HH:mmformat.

- In the Number of Attempts on Failure text box, enter the required value or click the up or down arrows next to the text box, to select the required value. This value denotes the number of retry attempts that should happen after the schedule failure.

- Stop Execution After field denotes the time after which the schedule has to stop after executing it. It can be in Hours, Minutes or Seconds. Select the value and its unit.

- Select the status of the scheduled Data Flow or Workflow from the Status options. It can be Active, Inactive or Active for Period. Through Active for Period option you can configure the exact time frame of the created schedule. It's Starting and Ending Date with time, time interval in which you wish to run the schedule and exceptions, if any.

- After configuring the above details, click Save.

The created schedules get listed in the Schedules page along with the following details:

- Resource

- Status

- Frequency

- Valid or Invalid

) of the respective schedule to perform the following operations:

) of the respective schedule to perform the following operations:

- Click Edit to edit the details of the created schedule.

- Click Disable to disable the created schedule. The status of a schedule becomes inactive when you disable it and gets displayed in the Scheduled list. Select the schedule and click Enable, to enable it whenever required. You can enable it again for a specific period, through Enable for Period option.

- Click Validate to validate the created schedule.

- Click Delete to delete the created schedule. Upon confirmation the created schedule is deleted.

To schedule a data flow or a workflow specific to a project:

- Click the Project title displayed on the Projects page.

Project Details page appears.

- In the left pane, click Schedules.

Schedules page appears. It displays all the schedules pertaining to the selected project.

Note:

This page is project specific and displays only the Data Flows and Workflows pertaining to the selected project. - To create a new schedule, click Create Schedule.

- Enter all the required details and click Save.

The new schedule is created and is added to the existing list of schedules for the project.

Viewing Status of Data Loads, Data Flows, and Workflows

When you run a data load, data flow, or workflow Oracle Data Transforms runs jobs in the background to complete the request. You can view the status of the job in the panel on the bottom right of the Data Load Details, the Data Flow Editor, and the Workflow Editor page.

- A Refresh icon (

) to refresh the displayed status.

) to refresh the displayed status.

- The current status. You can see any of the following statuses:

- Not Started – If no data load, data flow, or workflow has been executed yet.

- Running – When the job starts.

- Done – When the job completes.

The status of the last job run persists in this panel till you run a new data load, data flow, or workflow.

Note:

For data loads created using OCI GoldenGate, the status panel shows the link to the GoldenGate Deployment Console and the status of the Extract and Replicat processes.

- The name of the job and a link that takes you to the Jobs Details page where you can see detailed information about the job and monitor the execution status. For more information, see unresolvable-reference.html#GUID-B54E671D-CB37-47FA-AFC2-A507A8F53094.

When you run a data load, multiple jobs run in the background to complete the request. This panel shows a link for each job that executes when running a data load.

Managing Jobs

The Jobs page by default, lists the execution job sessions that are running and completed for the present day as Date parameter is set to Today by default. You can click on any of the instance id from the list to see its details.

To create a new job,

- Click Create Job.

The Create Job page slides-in.

- From the Resource Type drop-down, select the type of resource for which you wish to create a new job. It can be - Data Flow, Workflow or Schema.

- All the resources associated with the selected Resource Type get listed in the Resource field. From the Resource drop-down, select the required resource for which you wish to create a new job.

- Click Create.

A new job is created and is added to the existing list of jobs in the Jobs page.

For each session, you can see the Job Session ID, Name, Status, Start Time and End Time for each of the jobs that are executed. Click the Actions menu (![]() ) to View Details of the jobs, Delete and Rerun the jobs, if required. If any of the steps failed, you can click on them to see the error details.

) to View Details of the jobs, Delete and Rerun the jobs, if required. If any of the steps failed, you can click on them to see the error details.

To delete a job, select the check box of the respective job session and click Delete. Upon confirmation the selected job gets deleted.

You can also search for the required job session to know its details based on filters such as:

- Name - Name of the Job

- Status - Select the required session status from the list - All, Done, Error, Queued, Running, Waiting and Warning.

- Date - Select the date in which the required job session was executed - All, Today, Yesterday, Last Week, Last Month and Custom Range, which allows you to select specific From and To dates.

To view the job sessions pertaining to a specific project:

- Click the Project tile displayed in the Projects page.

Project Details page appears.

- In the left pane, click Jobs.

Jobs page appears. It displays all the jobs and their details pertaining to the selected project.

Note:

This page is project specific and displays only the jobs pertaining to the selected project. - To create a new job, click Create Job.

- Enter all the required details and click Create.

A new job is created and is added to the existing list of jobs for the project.