Federated Partition Data Load

From an Essbase federated partition cube, you can load data to your fact table in Autonomous Data Warehouse. Before you can do so, you need to integrate Essbase with OCI object storage, and configure DBMS_CLOUD credentials using the configure-dbms-writeback script.

Integrate Essbase with OCI Object Storage

To load data from a federated partition cube to your fact table in Autonomous Data Warehouse, Essbase uses the the DBMS_CLOUD package, available with Autonomous Database.

In order to access the DBMS_CLOUD package, you must have selected to integrate Essbase with OCI Object Storage when you deployed the Oracle Essbase stack from Oracle Cloud Marketplace.

For full details, see Deploy Essbase from Marketplace for Federated Partitions.

Set Up Credentials for Data Load

Before any data load operation can be performed through Essbase to Autonomous Data Warehouse, a workflow of steps must be completed to enable cloud credentials to be usable with federated partitions.

User Types in Data Load Credentials Workflow

Users with following types of access are or may be involved in the required workflow for setting up a federated partition cube for data load. In your organization, these roles may be separate (so that the setup is a collaborative workflow), or the roles may combined (one person has all the required access).

| User Type | Role within Workflow |

|---|---|

| SSH User |

Can use the operating system command line to access, as opc user, the Essbase instance deployed on Oracle Cloud Infrastructure. (May be the same person who deployed Essbase as a stack on OCI). |

| DB User |

Knows the Autonomous Data Warehouse schema name and password -- the same schema and password that is used to create the Essbase connection to Oracle Database (a required prerequisite before creating the federated partition). |

| OCI User |

Can access the OCI Console, including the object storage bucket for the Essbase catalog. |

| DB Admin |

Knows the Oracle Database administrator schema name and password. |

| Essbase Admin |

The Essbase system administrator. Can be the initial Essbase administrator created by the identity domain administrator (who may also be the OCI User), or it can be another Essbase system administrator created after completion of Essbase deployment. |

| Essbase Application Manager |

The manager/owner of an Essbase application, created after completion of Essbase deployment. |

Data Load Credentials Workflow

The following workflow of steps must be completed per each database schema that you are using for federated partition.

-

OCI User: Follow instructions in Deploy Essbase from Marketplace for Federated Partitions to deploy Essbase to the OCI tenancy with the appropriate selections for federated partitions.

-

DB User, Essbase Admin, or Essbase Application Manager: Log in to Essbase web interface, and create a connection to Autonomous Data Warehouse, as described in Create a Connection for Federated Partitions.

-

DB User, Essbase Admin, or Essbase Application Manager: Create the federated partition, as described in Create a Federated Partition.

-

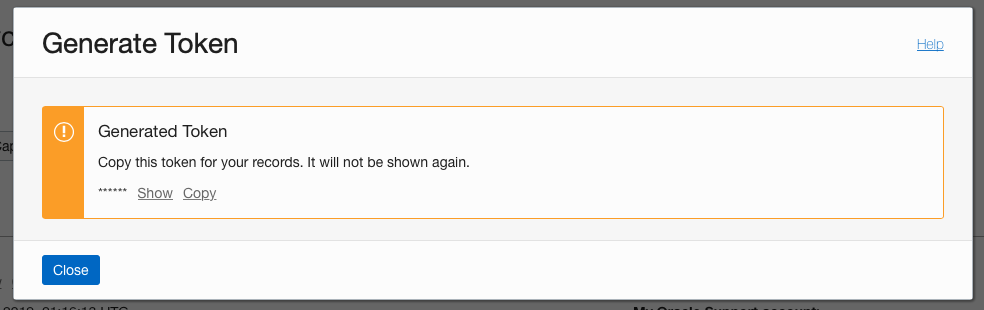

OCI User: From your user profile in OCI Console, generate and copy an authentication token. Provide this, and your user name, to the SSH User.

-

SSH User: Run the data load preparation script, available on the Essbase instance on OCI. You only need to run the script once per database schema in Autonomous Data Warehouse.

Example:

-

Switch to the oracle user.

sudo su oracle -

Navigate to the script location.

cd /u01/vmtools/config/adwwb_dbms -

Run the script.

./configure-dbms-writeback.shNote:

To see script options, run the script with the-hor--helpargument. Syntax:./configure-dbms-writeback.sh [--help | -h]Note:

Optionally run the script with the vault option. This option sets the script to take the DB admin credentials stored in the vault, accessed using the OCID, instead of prompting you for the password. Syntax:./configure-dbms-writeback.sh [--vault | -V] -

When prompted, enter the required information:

- DB Admin password, if you did not run the script with vault option. Because the password is protected information, you will not see the text as you type at the command prompt.

- DB User username and password. Because the password is protected information, you will not see the text as you type at the command prompt.

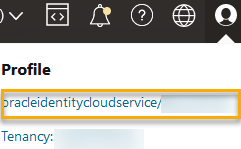

- OCI User username and auth token. Enter the full user identification string. To find this string, in the OCI Console, click the profile icon in the top-right corner to display an overview of the user profile. Copy the complete string displayed beneath Profile and above Tenancy.

The script creates the necessary cloud credential and stores it in the database schema. There is no need to rerun the script when you restart OCI, Essbase, or Essbase applications.

-

Now you can load data through Essbase to update the Autonomous Data Warehouse fact table.

Note:

To SSH User– If the OCI user's auth token used in the data load preparation script no longer has access to the object storage bucket for the Essbase catalog, you will need to find another OCI user meeting the requirements listed in User Types in Data Load Preparation Workflow, and repeat the steps in the workflow.

Other Notes on Federated Partition Data Load

Source Data File Location

Before performing a data load through Essbase to the fact table, Oracle recommends you upload the data file to the Essbase Server. Client side data load is supported, but takes longer.

Non-Essbase Data Load Tools

If you do not need to load data through Essbase to Autonomous Data Warehouse, you can use Data Tools in Autonomous Database to load data to the fact table and perform other management tasks. However, ensure that the cube outline and fact table do not get out of sync – see Metadata Precautions for Federated Partition Cubes.

DBMS Formatted Data Files

Loading Essbase-formatted data export files into federated partition cubes can be time consuming. To optimize data loads, use a DBMS formatted source file. You can make one using the DATAEXPORT calculation command with DataExportCSVFormat option. CSV formatted files can be loaded faster because they are in accordance with DBMS_CLOUD package format options for source files.

Pivot Dimension in Fact Table and Data Load Input Source

The pivot dimension used in data load input files must be the same as the pivot dimension of the fact table.

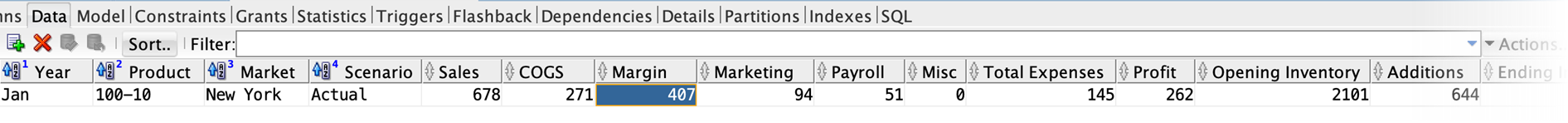

For example, in the following fact table, the pivot dimension is the Measures dimension (Sales, COGS, Margin, etc).

An acceptable data load input file for this fact table has a similar shape, because it has the same pivot dimension. Example (truncated):

"Year","Product","Market","Scenario","Sales","COGS","Margin","Marketing","Payroll","Misc","Total Expenses","Profit","Opening Inventory","Additions","Ending Inventory"

"Jan","100-10","New York","Actual",678,271,407,94,51,0,145,262,2101,644,2067

"Feb","100-10","New York","Actual",645,258,387,90,51,1,142,245,2067,619,2041

"Mar","100-10","New York","Actual",675,270,405,94,51,1,146,259,2041,742,2108If the input file pivot dimension differs from the fact table pivot dimension, an error is returned and the data load job is terminated.

Multiple Import Files in MaxL not Supported

Importing data from multiple files in parallel using a MaxL import statement with wildcard characters is not supported for federated partition cubes.