4.4 Proactively Detecting and Diagnosing Performance Issues for Oracle RAC

Oracle Cluster Health Advisor gives system and database administrators early warning of pending performance issues, along with root causes and corrective actions, for Oracle RAC databases and cluster nodes. Use Oracle Cluster Health Advisor to improve availability and performance management.

Oracle Cluster Health Advisor estimates an expected value of an observed input based on the default model, which is a trained calibrated model based on a normal operational period of the target system. Oracle Cluster Health Advisor then performs anomaly detection for each input based on the difference between observed and expected values. If sufficient inputs associated with a specific problem are abnormal, then Oracle Cluster Health Advisor raises a warning and generates an immediate targeted diagnosis and corrective action.
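The detection flow described above can be illustrated with a minimal Python sketch. It is not Oracle's implementation: the input names, the fixed per-input tolerance, and the `min_abnormal` threshold are all hypothetical, chosen only to show the idea of comparing observed values against model-expected values and raising a problem once enough related inputs are abnormal.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    name: str         # input (metric) name; hypothetical label
    observed: float   # value measured on the target
    expected: float   # value the model estimates for normal operation
    tolerance: float  # allowed absolute deviation (illustrative assumption)


def abnormal_inputs(readings):
    """Return the names of inputs whose observed value deviates from the
    expected value by more than the tolerance."""
    return [r.name for r in readings
            if abs(r.observed - r.expected) > r.tolerance]


def problem_detected(readings, min_abnormal):
    """Flag a problem when at least min_abnormal related inputs are abnormal."""
    return len(abnormal_inputs(readings)) >= min_abnormal


# Hypothetical inputs associated with one problem.
readings = [
    Reading("cpu_util_pct", observed=92.0, expected=22.0, tolerance=15.0),
    Reading("db_time_per_call_usec", observed=900.0, expected=265.0, tolerance=200.0),
    Reading("disk_read_mb_s", observed=5.1, expected=5.0, tolerance=10.0),
]

print(abnormal_inputs(readings))
print(problem_detected(readings, min_abnormal=2))
```

Requiring several abnormal inputs before raising a problem, rather than reacting to a single deviation, is one way to keep false warnings low.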

Oracle Cluster Health Advisor also sends warning messages to Enterprise Manager Cloud Control using the Oracle Clusterware event notification protocol.

The ability of Oracle Cluster Health Advisor to detect performance and availability issues on Oracle Exadata systems has been improved in this release.

With the Oracle Cluster Health Advisor support for Oracle Solaris, you can now get early detection and prevention of performance and availability issues in your Oracle RAC database deployments.

For more information about installing Grid Infrastructure Management Repository, see Oracle® Grid Infrastructure Installation and Upgrade Guide 20c for Linux.

- Oracle Cluster Health Advisor Architecture

Oracle Cluster Health Advisor runs as a highly available cluster resource, ochad, on each node in the cluster.

- Removing Grid Infrastructure Management Repository

GIMR is desupported in Oracle AI Database 26ai. If GIMR is configured in your existing Oracle Grid Infrastructure installation, then remove the GIMR.

- Monitoring the Oracle Real Application Clusters (Oracle RAC) Environment with Oracle Cluster Health Advisor

Oracle Cluster Health Advisor is automatically provisioned on each node by default when Oracle Grid Infrastructure is installed for Oracle Real Application Clusters (Oracle RAC) or Oracle RAC One Node database.

- Using Cluster Health Advisor for Health Diagnosis

Oracle Cluster Health Advisor raises and clears problems autonomously.

- Calibrating an Oracle Cluster Health Advisor Model for a Cluster Deployment

As shipped with default node and database models, Oracle Cluster Health Advisor is designed not to generate false warning notifications.

- Viewing the Details for an Oracle Cluster Health Advisor Model

Use the chactl query model command to view the model details.

- Managing the Oracle Cluster Health Advisor Repository

Oracle Cluster Health Advisor repository stores the historical records of cluster host problems, database problems, and associated metric evidence, along with models.

- Viewing the Status of Cluster Health Advisor

SRVCTL commands are the tools that offer total control on managing the life cycle of Oracle Cluster Health Advisor as a highly available service.

- Enhanced Cluster Health Advisor Support for Oracle Pluggable Databases

The Cluster Health Advisor (CHA) diagnostic capabilities have been extended to support 4K PDBs, up from 256 in Oracle AI Database 26ai.

- New Profile to Include Cluster Health Advisor (CHA) Data in Oracle Orachk and Oracle Exachk Reports

AHF 25.9 introduces a new profile, cha, which includes Cluster Health Advisor (CHA) data in both Orachk and Exachk reports.


4.4.1 Oracle Cluster Health Advisor Architecture

Oracle Cluster Health Advisor runs as a highly available cluster resource, ochad, on each node in the cluster.

Each Oracle Cluster Health Advisor daemon (ochad) monitors the operating system on the cluster node and optionally, each Oracle Real Application Clusters (Oracle RAC) database instance on the node.

The ochad daemon receives operating system metric data from the Cluster Health Monitor and gets Oracle RAC database instance metrics from a memory-mapped file. The daemon does not require a connection to each database instance. This data, along with the selected model, is used in the Health Prognostics Engine of Oracle Cluster Health Advisor for both the node and each monitored database instance in order to analyze their health multiple times a minute.

4.4.2 Removing Grid Infrastructure Management Repository

GIMR is desupported in Oracle AI Database 26ai. If GIMR is configured in your existing Oracle Grid Infrastructure installation, then remove the GIMR.


4.4.3 Monitoring the Oracle Real Application Clusters (Oracle RAC) Environment with Oracle Cluster Health Advisor

Oracle Cluster Health Advisor is automatically provisioned on each node by default when Oracle Grid Infrastructure is installed for Oracle Real Application Clusters (Oracle RAC) or Oracle RAC One Node database.

Oracle Cluster Health Advisor does not require any additional configuration.

When Oracle Cluster Health Advisor detects an Oracle Real Application Clusters (Oracle RAC) or Oracle RAC One Node database instance as running, Oracle Cluster Health Advisor autonomously starts monitoring the cluster nodes. Use CHACTL while logged in as the Grid user to turn on monitoring of the database.

To monitor the Oracle Real Application Clusters (Oracle RAC) environment:

4.4.4 Using Cluster Health Advisor for Health Diagnosis

Oracle Cluster Health Advisor raises and clears problems autonomously.

The Oracle Grid Infrastructure user can query the stored information using CHACTL.

To query the diagnostic data:

Example 4-5 Cluster Health Advisor Output Examples in Text and HTML Format

The following output is from the chactl query diagnosis command for a database named oltpacdb.

$ chactl query diagnosis -db oltpacdb -start "2016-02-01 02:52:50" -end "2016-02-01 03:19:15"

2016-02-01 01:47:10.0 Database oltpacdb DB Control File IO Performance (oltpacdb_1) [detected]

2016-02-01 01:47:10.0 Database oltpacdb DB Control File IO Performance (oltpacdb_2) [detected]

2016-02-01 02:52:15.0 Database oltpacdb DB CPU Utilization (oltpacdb_2) [detected]

2016-02-01 02:52:50.0 Database oltpacdb DB CPU Utilization (oltpacdb_1) [detected]

2016-02-01 02:59:35.0 Database oltpacdb DB Log File Switch (oltpacdb_1) [detected]

2016-02-01 02:59:45.0 Database oltpacdb DB Log File Switch (oltpacdb_2) [detected]

Problem: DB Control File IO Performance

Description: CHA has detected that reads or writes to the control files are slower than expected.

Cause: The Cluster Health Advisor (CHA) detected that reads or writes to the control files were slow

because of an increase in disk IO.

The slow control file reads and writes may have an impact on checkpoint and Log Writer (LGWR) performance.

Action: Separate the control files from other database files and move them to faster disks or Solid State Devices.

Problem: DB CPU Utilization

Description: CHA detected larger than expected CPU utilization for this database.

Cause: The Cluster Health Advisor (CHA) detected an increase in database CPU utilization

because of an increase in the database workload.

Action: Identify the CPU intensive queries by using the Automatic Diagnostic and Defect Manager (ADDM) and

follow the recommendations given there. Limit the number of CPU intensive queries or

relocate sessions to less busy machines. Add CPUs if the CPU capacity is insufficent to support

the load without a performance degradation or effects on other databases.

Problem: DB Log File Switch

Description: CHA detected that database sessions are waiting longer than expected for log switch completions.

Cause: The Cluster Health Advisor (CHA) detected high contention during log switches

because the redo log files were small and the redo logs switched frequently.

Action: Increase the size of the redo logs.

The timestamp shows the date and time when the problem was detected on a specific host or database.

Note:

The same problem can occur on different hosts and at different times, yet the diagnosis shows complete details of the problem and its potential impact. Each problem also shows targeted corrective or preventive actions.
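For scripted triage, the event lines in the text output of Example 4-5 can be parsed into records. The following Python sketch is illustrative only: the regular expression is inferred from the sample lines shown above, not from a documented output format, so treat the pattern and the field names (`kind`, `target`, `problem`, `instance`, `state`) as assumptions.

```python
import re
from datetime import datetime

# Pattern inferred from the sample event lines in Example 4-5 (an assumption;
# the real output format may vary between releases).
EVENT_RE = re.compile(
    r"^(?P<ts>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2})\.\d+\s+"
    r"(?P<kind>\w+)\s+(?P<target>\S+)\s+"
    r"(?P<problem>.+?)\s+\((?P<instance>[^)]+)\)\s+\[(?P<state>\w+)\]$"
)


def parse_events(text):
    """Return one dict per event line; lines that do not match are skipped."""
    events = []
    for line in text.splitlines():
        match = EVENT_RE.match(line.strip())
        if match:
            event = match.groupdict()
            event["ts"] = datetime.strptime(event["ts"], "%Y-%m-%d %H:%M:%S")
            events.append(event)
    return events


def problems_by_instance(events):
    """Group problem names by the instance on which they were detected."""
    grouped = {}
    for event in events:
        grouped.setdefault(event["instance"], []).append(event["problem"])
    return grouped


# Three of the event lines from Example 4-5.
SAMPLE = """\
2016-02-01 01:47:10.0 Database oltpacdb DB Control File IO Performance (oltpacdb_1) [detected]
2016-02-01 02:52:15.0 Database oltpacdb DB CPU Utilization (oltpacdb_2) [detected]
2016-02-01 02:59:35.0 Database oltpacdb DB Log File Switch (oltpacdb_1) [detected]
"""

events = parse_events(SAMPLE)
for instance, problems in problems_by_instance(events).items():
    print(instance, problems)
```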

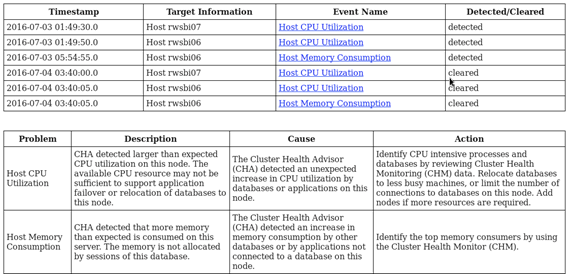

$ chactl query diagnosis -start "2016-07-03 20:50:00" -end "2016-07-04 03:50:00" -htmlfile ~/chaprob.htmlFigure 4-5 Cluster Health Advisor Diagnosis HTML Output

Description of "Figure 4-5 Cluster Health Advisor Diagnosis HTML Output"

4.4.5 Calibrating an Oracle Cluster Health Advisor Model for a Cluster Deployment

As shipped with default node and database models, Oracle Cluster Health Advisor is designed not to generate false warning notifications.

You can increase the sensitivity and accuracy of the Oracle Cluster Health Advisor models for a specific workload using the chactl calibrate command.

Oracle recommends that a minimum of 6 hours of data be available and that both the cluster and databases use the same time range for calibration.

The chactl calibrate command analyzes a user-specified time interval that includes all workload phases operating normally. This data is collected while Oracle Cluster Health Advisor is monitoring the cluster and all the databases for which you want to calibrate.
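Before calibrating, it can help to check whether the chosen time ranges add up to the recommended 6 hours. The Python sketch below is a hypothetical helper, not part of CHACTL. It sums the wall-clock length of each range, which is only a proxy for the amount of usable data because samples may be filtered out. The two ranges are the start and end times shown in Example 4-6.

```python
from datetime import datetime, timedelta

FMT = "%Y-%m-%d %H:%M:%S"
MIN_HOURS = 6  # minimum recommended in the text above


def total_hours(ranges):
    """Sum the wall-clock length, in hours, of (start, end) string pairs."""
    total = timedelta()
    for start, end in ranges:
        total += datetime.strptime(end, FMT) - datetime.strptime(start, FMT)
    return total.total_seconds() / 3600


def enough_data(ranges, min_hours=MIN_HOURS):
    """Return True when the ranges cover at least min_hours in total."""
    return total_hours(ranges) >= min_hours


# Start and end times taken from the two ranges in Example 4-6.
ranges = [
    ("2016-07-26 01:03:10", "2016-07-26 01:57:25"),
    ("2016-07-26 03:00:00", "2016-07-26 03:53:30"),
]

print(f"{total_hours(ranges):.2f} hours covered")
print("enough for calibration:", enough_data(ranges))
```

These two ranges cover well under 6 hours, which is consistent with the warning in the example output that the number of data samples may not be sufficient for calibration.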

Example 4-6 Output for the chactl query calibration command

Database name : oltpacdb

Start time : 2016-07-26 01:03:10

End time : 2016-07-26 01:57:25

Total Samples : 120

Percentage of filtered data : 8.32%

The number of data samples may not be sufficient for calibration.

1) Disk read (ASM) (Mbyte/sec)

MEAN MEDIAN STDDEV MIN MAX

4.96 0.20 8.98 0.06 25.68

<25 <50 <75 <100 >=100

97.50% 2.50% 0.00% 0.00% 0.00%

2) Disk write (ASM) (Mbyte/sec)

MEAN MEDIAN STDDEV MIN MAX

27.73 9.72 31.75 4.16 109.39

<50 <100 <150 <200 >=200

73.33% 22.50% 4.17% 0.00% 0.00%

3) Disk throughput (ASM) (IO/sec)

MEAN MEDIAN STDDEV MIN MAX

2407.50 1500.00 1978.55 700.00 7800.00

<5000 <10000 <15000 <20000 >=20000

83.33% 16.67% 0.00% 0.00% 0.00%

4) CPU utilization (total) (%)

MEAN MEDIAN STDDEV MIN MAX

21.99 21.75 1.36 20.00 26.80

<20 <40 <60 <80 >=80

0.00% 100.00% 0.00% 0.00% 0.00%

5) Database time per user call (usec/call)

MEAN MEDIAN STDDEV MIN MAX

267.39 264.87 32.05 205.80 484.57

<10000000 <20000000 <30000000 <40000000 <50000000 <60000000 <70000000 >=70000000

100.00% 0.00% 0.00% 0.00% 0.00% 0.00% 0.00% 0.00%

Database name : oltpacdb

Start time : 2016-07-26 03:00:00

End time : 2016-07-26 03:53:30

Total Samples : 342

Percentage of filtered data : 23.72%

The number of data samples may not be sufficient for calibration.

1) Disk read (ASM) (Mbyte/sec)

MEAN MEDIAN STDDEV MIN MAX

12.18 0.28 16.07 0.05 60.98

<25 <50 <75 <100 >=100

64.33% 34.50% 1.17% 0.00% 0.00%

2) Disk write (ASM) (Mbyte/sec)

MEAN MEDIAN STDDEV MIN MAX

57.57 51.14 34.12 16.10 135.29

<50 <100 <150 <200 >=200

49.12% 38.30% 12.57% 0.00% 0.00%

3) Disk throughput (ASM) (IO/sec)

MEAN MEDIAN STDDEV MIN MAX

5048.83 4300.00 1730.17 2700.00 9000.00

<5000 <10000 <15000 <20000 >=20000

63.74% 36.26% 0.00% 0.00% 0.00%

4) CPU utilization (total) (%)

MEAN MEDIAN STDDEV MIN MAX

23.10 22.80 1.88 20.00 31.40

<20 <40 <60 <80 >=80

0.00% 100.00% 0.00% 0.00% 0.00%

5) Database time per user call (usec/call)

MEAN MEDIAN STDDEV MIN MAX

744.39 256.47 2892.71 211.45 45438.35

<10000000 <20000000 <30000000 <40000000 <50000000 <60000000 <70000000 >=70000000

100.00% 0.00% 0.00% 0.00% 0.00% 0.00% 0.00% 0.00%

4.4.6 Viewing the Details for an Oracle Cluster Health Advisor Model

Use the chactl query model command to view the model details.

4.4.7 Managing the Oracle Cluster Health Advisor Repository

Oracle Cluster Health Advisor repository stores the historical records of cluster host problems, database problems, and associated metric evidence, along with models.

Note:

Applicable only if GIMR is configured. GIMR is optionally supported in Oracle Database 19c. However, it is desupported in Oracle AI Database 26ai.

The Oracle Cluster Health Advisor repository is used to diagnose and triage periodic problems. By default, the repository is sized to retain data for 16 targets (nodes and database instances) for 72 hours. If the number of targets increases, then the retention time is automatically decreased. Oracle Cluster Health Advisor generates warning messages when the retention time goes below 72 hours, and stops monitoring and generates a critical alert when the retention time goes below 24 hours.
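The relationship between target count and retention thresholds can be sketched in Python as follows. The 16-target, 72-hour, and 24-hour figures come from the text above. The inverse-proportional scaling in `estimated_retention_hours` is an assumption made for illustration; the source states only that retention decreases as targets increase, not the exact formula.

```python
DEFAULT_TARGETS = 16        # default sizing from the text above
DEFAULT_RETENTION_HRS = 72  # default retention from the text above
WARNING_BELOW_HRS = 72      # warning messages below this retention
CRITICAL_BELOW_HRS = 24     # monitoring stops below this retention


def estimated_retention_hours(targets):
    """ASSUMPTION: with a fixed repository size, retention shrinks in
    inverse proportion to the number of monitored targets."""
    if targets <= 0:
        raise ValueError("targets must be positive")
    return DEFAULT_TARGETS * DEFAULT_RETENTION_HRS / targets


def retention_state(hours):
    """Classify a retention time against the documented thresholds."""
    if hours < CRITICAL_BELOW_HRS:
        return "critical"
    if hours < WARNING_BELOW_HRS:
        return "warning"
    return "ok"


for targets in (16, 32, 64):
    hours = estimated_retention_hours(targets)
    print(targets, hours, retention_state(hours))
```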

Use CHACTL commands to manage the repository and set the maximum retention time.

- To retrieve the repository details, use the following command:

$ chactl query repository

For example, running the command shows the following output:

specified max retention time(hrs) : 72

available retention time(hrs) : 212

available number of entities : 2

allocated number of entities : 0

total repository size(gb) : 2.00

allocated repository size(gb) : 0.07

- To set the maximum retention time in hours, based on the current number of targets being monitored, use the following command:

$ chactl set maxretention -time number_of_hours

For example:

$ chactl set maxretention -time 80

max retention successfully set to 80 hours

Note:

The maxretention setting limits the oldest data retained in the repository, but is not guaranteed to be maintained if the number of monitored targets increases. In this case, if the combination of monitored targets and number of hours is not sufficient, then increase the size of the Oracle Cluster Health Advisor repository.

- To increase the size of the Oracle Cluster Health Advisor repository, use the chactl resize repository command.

For example, to resize the repository to support 32 targets using the currently set maximum retention time, use the following command:

$ chactl resize repository -entities 32

repository successfully resized for 32 targets
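To act on the repository details in a script, the output of chactl query repository can be parsed into a dictionary. This Python sketch assumes the command prints one "label : value" pair per line, as in the sample output above. The function name and the comparison at the end are illustrative and are not part of CHACTL.

```python
def parse_repository(text):
    """Parse 'label : value' lines into a dict of floats keyed by label.
    Lines without a numeric value after the last colon are skipped."""
    info = {}
    for line in text.splitlines():
        label, sep, value = line.rpartition(":")
        if not sep:
            continue
        try:
            info[label.strip()] = float(value)
        except ValueError:
            continue
    return info


# Sample values copied from the example output above.
SAMPLE = """\
specified max retention time(hrs) : 72
available retention time(hrs) : 212
available number of entities : 2
allocated number of entities : 0
total repository size(gb) : 2.00
allocated repository size(gb) : 0.07
"""

repo = parse_repository(SAMPLE)
headroom = repo["available retention time(hrs)"] - repo["specified max retention time(hrs)"]
print("retention headroom (hrs):", headroom)
```

A non-negative headroom suggests that the repository can currently hold data for at least the specified maximum retention time.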

4.4.8 Viewing the Status of Cluster Health Advisor

SRVCTL commands give you full control over the life cycle of Oracle Cluster Health Advisor as a highly available service.

Use SRVCTL commands to check the status and configuration of the Oracle Cluster Health Advisor service on any active hub or leaf node of the Oracle RAC cluster.

Note:

A target is monitored only if it is running and the Oracle Cluster Health Advisor service is also running on the host node where the target exists.

- To check the status of Oracle Cluster Health Advisor service on all nodes in the Oracle RAC cluster:

srvctl status cha [-help]

For example:

# srvctl status cha

Cluster Health Advisor is running on nodes racNode1, racNode2.

Cluster Health Advisor is not running on nodes racNode3, racNode4.

- To check if Oracle Cluster Health Advisor service is enabled or disabled on all nodes in the Oracle RAC cluster:

srvctl config cha [-help]

For example:

# srvctl config cha

Cluster Health Advisor is enabled on nodes racNode1, racNode2.

Cluster Health Advisor is not enabled on nodes racNode3, racNode4.
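Because a target is monitored only where the service is running, a script might want the list of nodes on which Oracle Cluster Health Advisor is not running. The Python sketch below parses the srvctl status cha output shown above. The message wording is taken from that example only, so the pattern is an assumption and may need adjusting for other releases.

```python
import re

# Wording taken from the example output above (an assumption about the format).
STATUS_RE = re.compile(
    r"Cluster Health Advisor is (not )?running on nodes (.+?)\.?$"
)


def parse_status(text):
    """Return node names split into 'running' and 'not_running' lists."""
    state = {"running": [], "not_running": []}
    for line in text.splitlines():
        match = STATUS_RE.search(line.strip())
        if match:
            key = "not_running" if match.group(1) else "running"
            state[key].extend(n.strip() for n in match.group(2).split(","))
    return state


SAMPLE = """\
Cluster Health Advisor is running on nodes racNode1, racNode2.
Cluster Health Advisor is not running on nodes racNode3, racNode4.
"""

status = parse_status(SAMPLE)
print("running:", status["running"])
print("not running:", status["not_running"])
```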

4.4.9 Enhanced Cluster Health Advisor Support for Oracle Pluggable Databases

The Cluster Health Advisor (CHA) diagnostic capabilities have been extended to support 4K PDBs, up from 256 in Oracle AI Database 26ai.

Going forward, this is crucial for Oracle Autonomous Database deployments. CHA's problem detection and root cause analysis will be improved by considering DB events such as reconfiguration. This improves detection, analysis, and targeted preventative actions for problems such as instance evictions.

4.4.10 New Profile to Include Cluster Health Advisor (CHA) Data in Oracle Orachk and Oracle Exachk Reports

AHF 25.9 introduces a new profile, cha, which includes Cluster Health Advisor (CHA) data in both Orachk and Exachk reports.

To collect and report CHA data, run either tool with the cha profile:

orachk -profile cha

exachk -profile cha

When run with the cha profile, CHA data is collected and only the CHA section is included in the report.

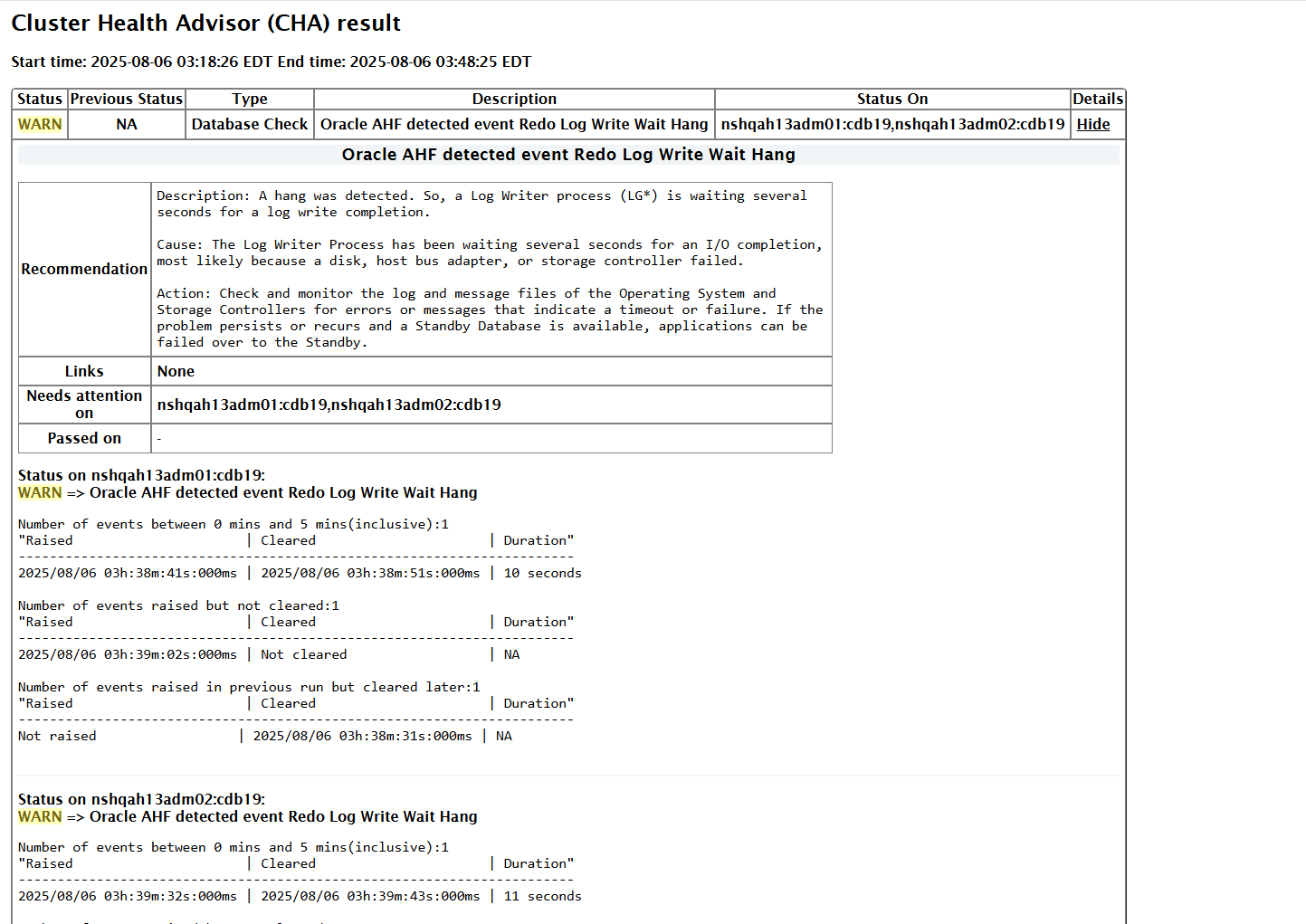

Figure 4-6 CHA result

By default, the cha profile is not included when running either Exachk or Orachk.

To collect CHA data and include the CHA section in the report along with other sections, run Exachk or Orachk with the -includeprofile cha option:

orachk -includeprofile cha

exachk -includeprofile cha
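The choice between the two options can be captured in a small wrapper. The Python sketch below only builds the argument list for the commands shown above. The function name `cha_report_command` and its `cha_only` parameter are hypothetical, and the sketch does not run either tool.

```python
def cha_report_command(tool, cha_only):
    """Build the argument list for an Orachk or Exachk run that includes
    CHA data.

    cha_only=True  -> report contains only the CHA section (-profile cha)
    cha_only=False -> CHA section is added to the other sections
                      (-includeprofile cha)
    """
    if tool not in ("orachk", "exachk"):
        raise ValueError("tool must be 'orachk' or 'exachk'")
    option = "-profile" if cha_only else "-includeprofile"
    return [tool, option, "cha"]


print(" ".join(cha_report_command("orachk", cha_only=True)))
print(" ".join(cha_report_command("exachk", cha_only=False)))
```

The returned list can be passed to `subprocess.run` on a host where the tool is installed and on the `PATH`.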