2 Monitoring and Managing Oracle Private Cloud Appliance

Monitoring and management of the Private Cloud Appliance is achieved using the Oracle Private Cloud Appliance Dashboard. This browser-based graphical user interface is also used to perform the initial configuration of the appliance beyond the instructions provided in the Quick Start poster included in the packaging of the appliance.

Attention:

Before starting the system and applying the initial configuration, read and understand the Oracle Private Cloud Appliance Release Notes. The section Known Limitations and Workarounds provides information that is critical for correctly executing the procedures in this document. Ignoring the release notes may cause you to configure the system incorrectly. Bringing the system back to normal operation may require a complete factory reset.

The Oracle Private Cloud Appliance Dashboard allows you to perform the following tasks:

-

Initial software configuration (and reconfiguration) for the appliance using the Network Environment window, as described in Network Settings.

-

Hardware provisioning monitoring and identification of each hardware component used in the appliance, accessed via the Hardware View window described in Hardware View.

-

Resetting the passwords used for different components within the appliance, via the Password Management window, as described in Authentication.

Other management tasks are handled outside the Dashboard:

-

Backup

The configuration of all components within Private Cloud Appliance is automatically backed up based on a crontab entry. This functionality is not configurable. Restoring a backup requires the intervention of an Oracle-qualified service person. For details, see Oracle Private Cloud Appliance Backup.

-

Update

The update process is controlled from the command line of the active management node, using the Oracle Private Cloud Appliance Upgrader. For details, see Oracle Private Cloud Appliance Upgrader. For step-by-step instructions, see Upgrading Oracle Private Cloud Appliance.

-

Custom Networks

In situations where the default network configuration is not sufficient, the command line interface allows you to create additional networks at the appliance level. For details and step-by-step instructions, see Network Customization.

-

Tenant Groups

The command line interface provides commands to optionally subdivide a Private Cloud Appliance environment into a number of isolated groups of compute nodes. These groups of servers are called tenant groups, which are reflected in Oracle VM as different server pools. For details and step-by-step instructions, see Tenant Groups.

Guidelines and Limitations

The Oracle Private Cloud Appliance is provided as an appliance with carefully preconfigured hardware and software stacks. Making any changes to the hardware or software of the appliance, unless explicitly told to do so by the Oracle Private Cloud Appliance product documentation or Oracle support, will result in an unsupported configuration. This section lists some of the operations that are not permitted, presented as guidelines and limitations that should be followed when working within the Oracle Private Cloud Appliance Dashboard, CLI, or Oracle VM Manager interfaces. If you have a question about changing anything not explicitly permitted or described in the documentation, contact Oracle to open a service request (SR).

The following actions must not be performed, except when Oracle gives specific instructions to do so.

Do Not:

-

attempt to discover, remove, rename or otherwise modify servers or their configuration;

-

add, remove, or update RPMs on Private Cloud Appliance server components other than those distributed with Private Cloud Appliance software except when specifically approved by Oracle;

-

attempt to modify the NTP configuration of a server;

-

attempt to add, remove, rename or otherwise modify server pools or their configuration;

-

attempt to change the configuration of server pools corresponding with tenant groups configured through the appliance controller software (except for DRS policy setting);

-

attempt to move servers out of the existing server pools, except when using the pca-admin commands for administering tenant groups;

-

attempt to add, modify, or remove server processor compatibility groups;

-

attempt to modify or remove the existing local disk repositories or the repository named Rack1-repository;

-

attempt to delete or modify any of the preconfigured default networks, or custom networks configured through the appliance controller software;

-

attempt to connect virtual machines to the appliance management network;

-

attempt to modify or delete any existing Storage elements that are already configured within Oracle VM, or use the reserved names of the default storage elements (for example, OVCA_ZFSSA_Rack1) for any other configuration;

-

attempt to configure global settings, such as YUM Update, in the Reports and Resources tab (except for tags, which are safe to edit);

-

attempt to select a non-English character set or language for the operating system, because this is not supported by Oracle VM Manager – see support document [PCA 2.3.4] OVMM upgrade fails with Invalid FQN (Doc ID 2519818.1);

-

attempt to connect any Private Cloud Appliance component, including management nodes, compute nodes, and ZFS Storage Appliances, to a customer's LDAP, Active Directory, NIS, NIS+, or other naming service for authentication;

-

attempt to add users, for example to management nodes or to WebLogic;

-

attempt to change DNS settings on compute nodes or ZFS Storage Appliances (the Oracle Private Cloud Appliance Dashboard contains the only permitted DNS settings);

-

change settings on Ethernet switches integrated into the Oracle Private Cloud Appliance or Oracle Private Cloud at Customer;

-

attempt to add a server running Oracle VM 3.4.6.x to a tenant group that already contains a compute node running Oracle VM 3.4.7;

-

migrate a virtual machine from a compute node running Oracle VM 3.4.7 to a compute node running Oracle VM 3.4.6.x.

If you ignore this advice, the Private Cloud Appliance automation, which uses specific naming conventions to label and manage assets, may fail. Out-of-band configuration changes would not be known to the orchestration software of the Private Cloud Appliance. If a conflict between the Private Cloud Appliance configuration and Oracle VM configuration occurs, it may not be possible to recover without data loss or system downtime.

Note:

An exception to these guidelines applies to the creation of a Service VM. This is a VM created specifically to perform administrative operations, for which it needs to be connected to both the public network and internal appliance networks. For detailed information and instructions, see support document How to Create Service Virtual Machines on the Private Cloud Appliance by using Internal Networks (Doc ID 2017593.1).

There is a known issue with the Oracle Private Cloud Appliance Upgrader, which stops the upgrade process if Service VMs are present. For the appropriate workaround, see support document [PCA 2.3.4] pca_upgrader Check Fails with Exception - Network configuration error: 'inet' (Doc ID 2510822.1).

Connecting and Logging in to the Oracle Private Cloud Appliance Dashboard

To open the Login page of the Oracle Private Cloud Appliance Dashboard, enter the following address in a Web browser:

https://manager-vip:7002/dashboard

Here, manager-vip refers to the shared Virtual IP address that you configured for your management nodes during installation. By using the shared Virtual IP address, you ensure that you always access the Oracle Private Cloud Appliance Dashboard on the active management node.

Figure 2-1 Dashboard Login

Note:

If you are following the installation process and this is your first time accessing the Oracle Private Cloud Appliance Dashboard, the Virtual IP address in use by the active management node is set to the factory default 192.168.4.216. This is an IP address in the internal appliance management network, which can only be reached if you use a workstation patched directly into the available Ethernet port 48 in the Cisco Nexus 9348GC-FXP Switch.

Important:

If you access the Oracle Private Cloud Appliance Dashboard through a firewalled connection, make sure that the firewall allows TCP traffic on the port on which the Dashboard listens for connections (port 7002 in the URL above).
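Before troubleshooting a login problem, it can help to confirm that the Dashboard port is reachable at all from your workstation. The following is a minimal Python sketch using only the standard library; the helper names are hypothetical and not part of the appliance software. A successful TCP connect only shows that the network path, including any firewall, lets the traffic through; it does not test TLS or your credentials.

```python
import socket

# Port taken from the Dashboard URL documented above (https://manager-vip:7002/dashboard).
DASHBOARD_PORT = 7002


def dashboard_url(manager_vip: str, port: int = DASHBOARD_PORT) -> str:
    """Build the Dashboard login URL for a management virtual IP or host name."""
    return f"https://{manager_vip}:{port}/dashboard"


def tcp_port_open(host: str, port: int = DASHBOARD_PORT, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port can be opened within `timeout` seconds.

    A successful connect only shows that TCP traffic gets through;
    it does not check TLS or log in.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # connection refused, host unreachable, or timed out
        return False
```

During initial setup, for example, `tcp_port_open("192.168.4.216")` run from a workstation patched into port 48 would be expected to return True. A False result from a workstation behind a firewall suggests that the port is being blocked.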

Enter your Oracle Private Cloud Appliance Dashboard administration user name in the User Name field. This is the administration user name you configured during installation. Enter the password for the Oracle Private Cloud Appliance Dashboard administration user name in the Password field.

Important:

The Oracle Private Cloud Appliance Dashboard makes use of cookies in order to store session data. Therefore, to successfully log in and use the Oracle Private Cloud Appliance Dashboard, your web browser must accept cookies from the Oracle Private Cloud Appliance Dashboard host.

When you have logged in to the Dashboard successfully, the home page is displayed. The central part of the page contains Quick Launch buttons that provide direct access to the key functional areas.

Figure 2-2 Dashboard Home Page

From every Dashboard window you can always go to any other window by clicking the Menu in the top-left corner and selecting a different window from the list. A button in the header area allows you to open Oracle VM Manager.

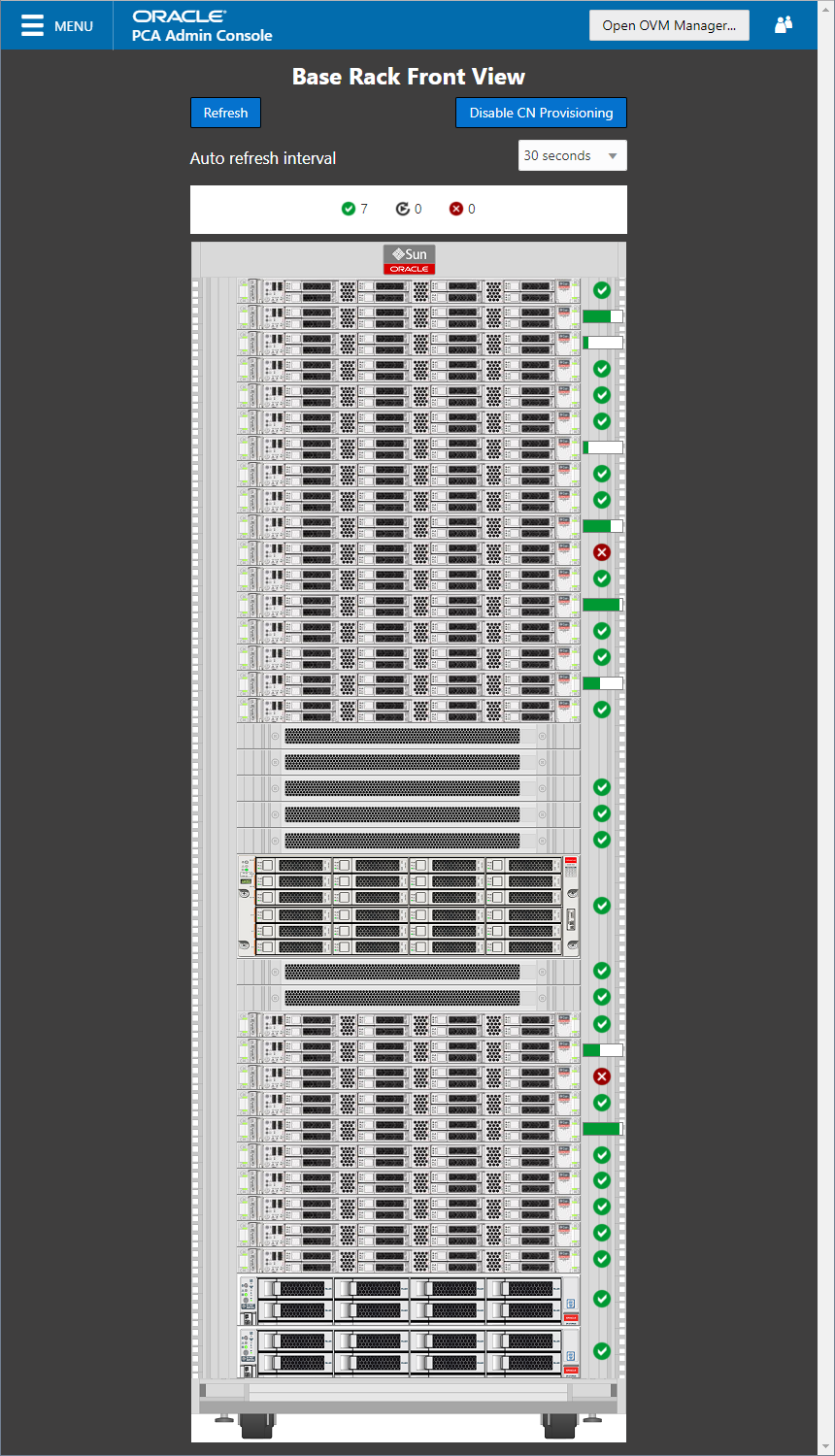

Hardware View

The Hardware View window within the Oracle Private Cloud Appliance Dashboard provides a graphical representation of the hardware components as they are installed within the rack. The view of the status of these components is automatically refreshed every 30 seconds by default. You can set the refresh interval or disable it through the Auto Refresh Interval list. Alternatively, a Refresh button at the top of the page allows you to refresh the view at any time.

During particular maintenance tasks, such as upgrading management nodes, you may need to disable compute node provisioning temporarily. The Disable CN Provisioning button at the top of the page allows you to suspend provisioning activity. When compute node provisioning is suspended, the button text changes to Enable CN Provisioning, and clicking it resumes compute node provisioning.

Hovering the mouse over each item in the graphic raises a pop-up window providing the name of the component, its type, and a summary of configuration and status information. For compute nodes, the pop-up window includes a Reprovision button, which allows you to restart the provisioning process if the node becomes stuck in an intermediate state or goes into error status before it is added to the Oracle VM server pool. Instructions to reprovision a compute node are provided in Reprovisioning a Compute Node when Provisioning Fails.

Caution:

The Reprovision button is to be used only for compute nodes that fail to complete provisioning. For compute nodes that have been provisioned properly or that host running virtual machines, the Reprovision button is unavailable. This prevents incorrect use and protects healthy compute nodes from loss of functionality, data corruption, or being locked out of the environment permanently.

Caution:

Reprovisioning restores a compute node to a clean state. If a compute node was previously added to the Oracle VM environment and has active connections to storage repositories other than those on the internal ZFS storage, the external storage connections need to be configured again after reprovisioning.

Alongside each installed component within the appliance rack, a status icon provides an indication of the provisioning status of the component. A status summary is displayed just above the rack image, indicating with icons and numbers how many nodes have been provisioned, are being provisioned, or are in error status. The Hardware View does not provide real-time health and status information about active components. Its monitoring functionality is restricted to the provisioning process. When a component has been provisioned completely and correctly, the Hardware View continues to indicate correct operation even if the component later fails or is powered off. See Table 2-1 for an overview of the different status icons and their meaning.

Table 2-1 Table of Hardware Provisioning Status Icons

| Icon | Status | Description |
|---|---|---|
|  | OK | The component is running correctly and has passed all health check operations. Provisioning is complete. |
|  | Provisioning | The component is running, and provisioning is in progress. The progress bar fills up as the component goes through the various stages of provisioning. Key stages for compute nodes include: HMP initialization actions, Oracle VM Server installation, network configuration, storage setup, and server pool membership. |
|  | Error | The component is not running and has failed health check operations. Component troubleshooting is required and the component may need to be replaced. Compute nodes also have this status when provisioning has failed. |

Note:

For real-time health and status information of your active Private Cloud Appliance hardware, after provisioning, consult the Oracle VM Manager or Oracle Enterprise Manager UI.

The Hardware View provides an accessible tool for troubleshooting hardware components within the Private Cloud Appliance and identifying where these components are actually located within the rack. Where components might need replacing, the new component must take the position of the old component within the rack to maintain configuration.

Figure 2-3 The Hardware View

Network Settings

The Network Environment window is used to configure networking and service information for the management nodes. For this purpose, you should reserve three IP addresses in the public (data center) network: one for each management node, and one to be used as virtual IP address by both management nodes. The virtual IP address provides access to the Dashboard once the software initialization is complete.

To avoid network interference and conflicts, you must ensure that the data center network does not overlap with any of the infrastructure subnets of the Oracle Private Cloud Appliance default configuration. These are the subnets and VLANs you should keep clear:

Subnets:

-

192.168.4.0/24 – internal machine administration network: connects ILOMs and physical hosts

-

192.168.32.0/21 – internal management network: traffic between management and compute nodes

-

192.168.64.0/21 – underlay network for east/west traffic within the appliance environment

-

192.168.72.0/21 – underlay network for north/south traffic, enabling external connectivity

-

192.168.40.0/21 – storage network: traffic between the servers and the ZFS Storage Appliance

Note:

Each /21 subnet comprises the IP ranges of eight /24 subnets, or 2048 IP addresses. For example, 192.168.32.0/21 corresponds with all IP addresses from 192.168.32.0 to 192.168.39.255.
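If you want to check a planned data center network against these subnets before configuring anything, Python's standard ipaddress module can do the overlap test and confirm the /21 arithmetic from the note. This is a minimal sketch. The dictionary labels are shorthand for the descriptions in the list above, and `conflicting_subnets` is a hypothetical helper, not an appliance tool.

```python
import ipaddress

# Infrastructure subnets of the default configuration, copied from the list above.
RESERVED_SUBNETS = {
    "internal machine administration": ipaddress.ip_network("192.168.4.0/24"),
    "internal management": ipaddress.ip_network("192.168.32.0/21"),
    "underlay east/west": ipaddress.ip_network("192.168.64.0/21"),
    "underlay north/south": ipaddress.ip_network("192.168.72.0/21"),
    "storage": ipaddress.ip_network("192.168.40.0/21"),
}


def conflicting_subnets(candidate: str) -> list:
    """Return the names of reserved subnets that overlap the candidate network."""
    net = ipaddress.ip_network(candidate, strict=False)
    return [name for name, reserved in RESERVED_SUBNETS.items() if net.overlaps(reserved)]


# The /21 arithmetic from the note: eight /24 subnets, 2048 addresses.
mgmt = RESERVED_SUBNETS["internal management"]
print(mgmt.num_addresses)                            # 2048
print(mgmt.network_address, mgmt.broadcast_address)  # 192.168.32.0 192.168.39.255
print(len(list(mgmt.subnets(new_prefix=24))))        # 8
print(conflicting_subnets("10.100.1.0/24"))          # []
print(conflicting_subnets("192.168.36.0/22"))        # ['internal management']
```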

VLANs:

-

1 – the Cisco default VLAN

-

3040 – the default service VLAN

-

3041-3072 – a range of 32 VLANs reserved for customer VM and host networks

-

3073-3099 – a range reserved for system-level connectivity

Note:

VLANs 3073-3088 are in use for VM access to the internal ZFS feature. VLANs 3090-3093 are already in use for tagged traffic over the /21 subnets listed above.

-

3968-4095 – a range reserved for Cisco internal device allocation
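The VLAN ranges above lend themselves to a simple lookup. For example, you could use one to check the Management Network VLAN ID you intend to enter in the Dashboard. The following Python sketch copies the ranges from this list. The function name is hypothetical, and the sketch only tells you which listed range, if any, contains an ID.

```python
from typing import Optional

# Reserved VLAN ranges from the list above as (first, last, purpose); bounds are inclusive.
RESERVED_VLANS = [
    (1, 1, "Cisco default VLAN"),
    (3040, 3040, "default service VLAN"),
    (3041, 3072, "customer VM and host networks"),
    (3073, 3099, "system-level connectivity"),
    (3968, 4095, "Cisco internal device allocation"),
]


def vlan_conflict(vlan_id: int) -> Optional[str]:
    """Return the purpose of the reserved range that contains vlan_id, or None if it is clear."""
    if not 0 <= vlan_id <= 4095:
        raise ValueError(f"{vlan_id} is outside the 12-bit VLAN ID space (0-4095)")
    for first, last, purpose in RESERVED_VLANS:
        if first <= vlan_id <= last:
            return purpose
    return None


print(vlan_conflict(200))   # None
print(vlan_conflict(3090))  # system-level connectivity
```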

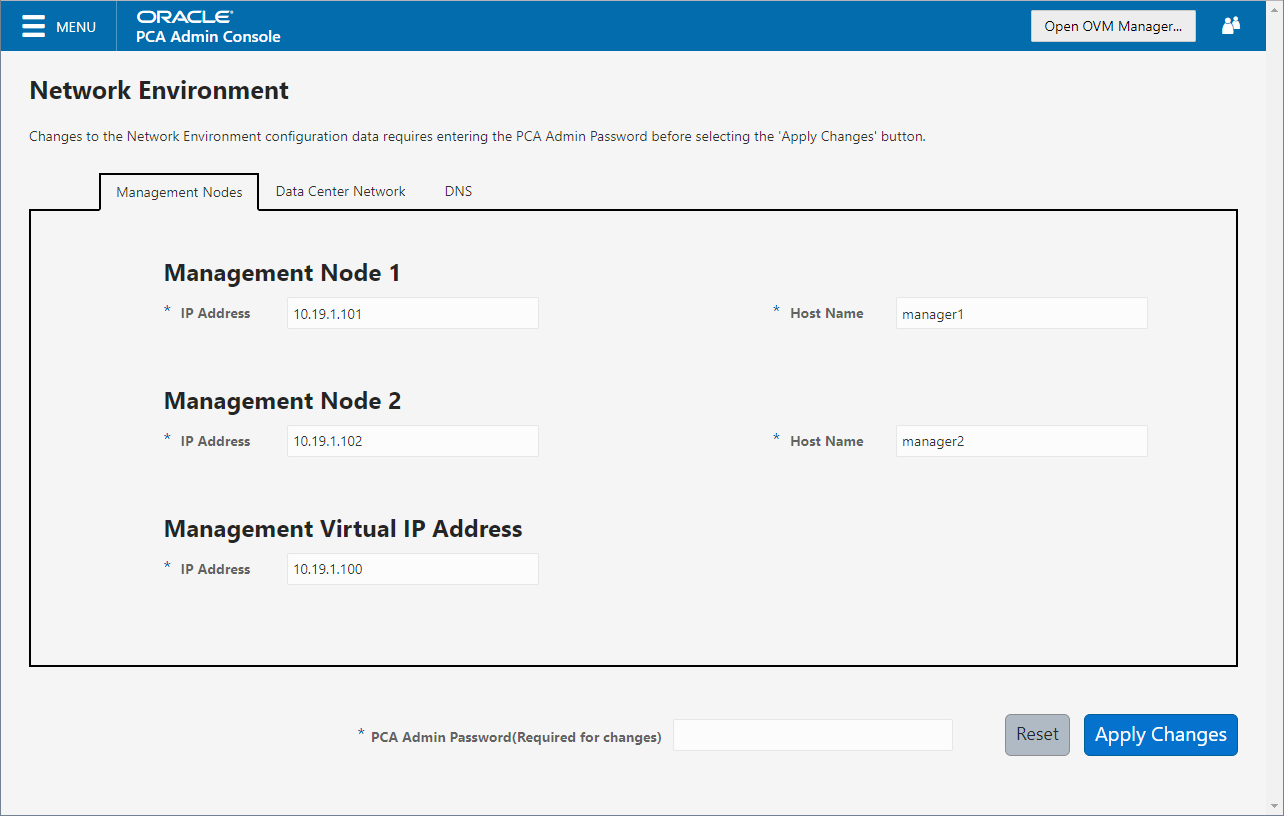

The Network Environment window is divided into three tabs: Management Nodes, Data Center Network, and DNS. Each tab is shown in this section, along with a description of the available configuration fields.

You can undo the changes you made in any of the tabs by clicking the Reset button. To confirm the configuration changes you made, enter the Dashboard Admin user password in the applicable field at the bottom of the window, and click Apply Changes.

Note:

When you click Apply Changes, the configuration settings in all three tabs are applied. Make sure that all required fields in all tabs contain valid information before you proceed.

Figure 2-4 shows the Management Nodes tab. The following fields are available for configuration:

-

Management Node 1:

-

IP Address: Specify an IP address within your data center network that can be used to directly access this management node.

-

Host Name: Specify the host name for the first management node system.

-

Management Node 2:

-

IP Address: Specify an IP address within your data center network that can be used to directly access this management node.

-

Host Name: Specify the host name for the second management node system.

-

Management Virtual IP Address: Specify the shared Virtual IP address that is used to always access the active management node. This IP address must be in the same subnet as the IP addresses that you have specified for each management node.

Figure 2-4 Management Nodes Tab

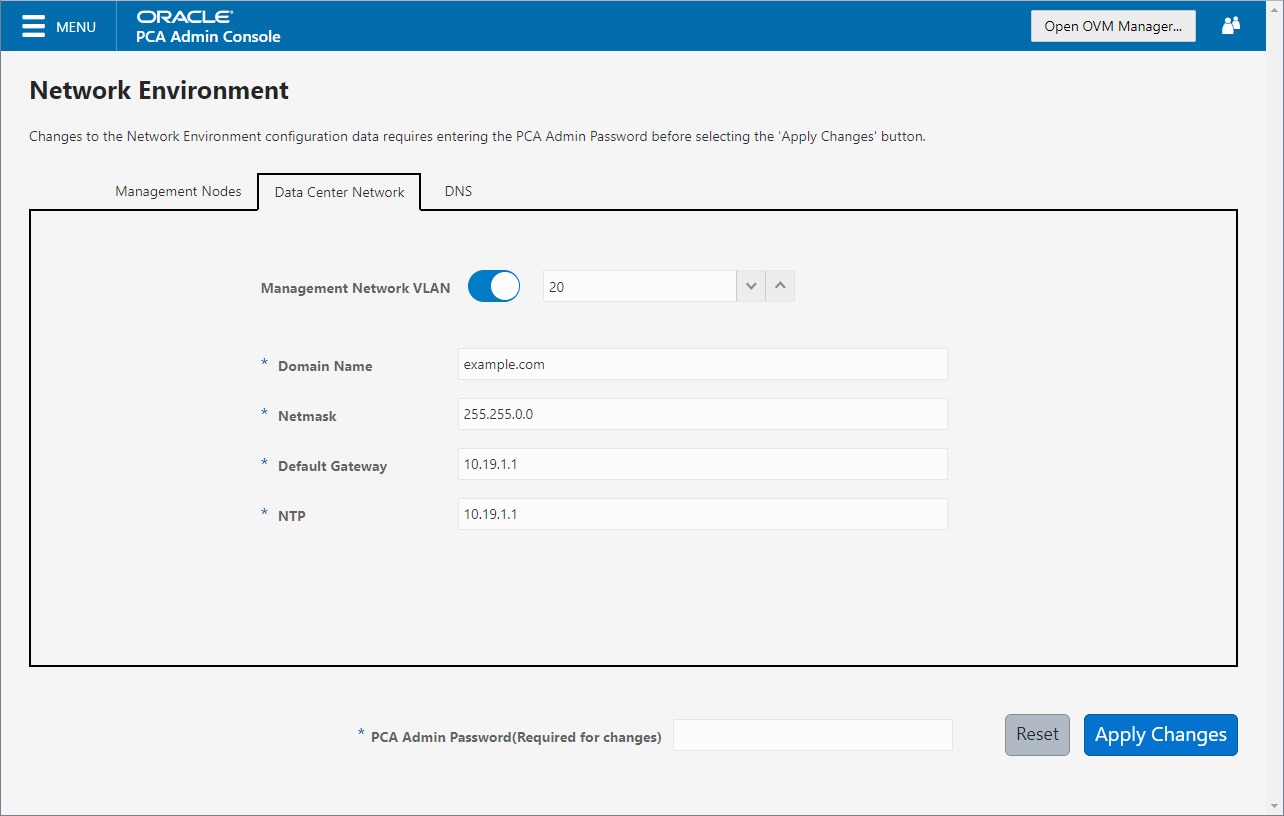

Figure 2-5 shows the Data Center Network tab. The following fields are available for configuration:

-

Management Network VLAN: The default configuration does not assume that your management network exists on a VLAN. If you have configured a VLAN on your switch for the management network, you should toggle the slider to the active setting and then specify the VLAN ID in the provided field.

Caution:

When a VLAN is used for the management network, and VM traffic must be enabled over the same network, you must manually configure a VLAN interface on the vx13040 interfaces of the necessary compute nodes to connect them to the VLAN with the ID in question. For instructions to create a VLAN interface on a compute node, see Create VLAN Interfaces in the Oracle VM Manager User's Guide.

-

Domain Name: Specify the data center domain that the management nodes belong to.

-

Netmask: Specify the netmask for the network that the Virtual IP address and management node IP addresses belong to.

-

Default Gateway: Specify the default gateway for the network that the Virtual IP address and management node IP addresses belong to.

-

NTP: Specify the NTP server that the management nodes and other appliance components must use to synchronize their clocks.
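Apply Changes applies the Management Nodes and Data Center Network tabs together. A quick offline consistency check of the addresses beforehand can therefore save a round trip. The following Python sketch is not part of the appliance software. The function name and the example addresses are hypothetical, apart from 10.100.1.101, which appears in the SSH example later in this chapter. It assumes, as is normal for IPv4, that the default gateway must lie in the same subnet as the management node addresses.

```python
import ipaddress


def check_management_network(mn1: str, mn2: str, vip: str, netmask: str, gateway: str) -> list:
    """Return a list of problems with the planned values; an empty list means none were found.

    The subnet is derived from Management Node 1's address and the netmask,
    and the other addresses are checked against it.
    """
    subnet = ipaddress.IPv4Interface(f"{mn1}/{netmask}").network
    problems = []
    others = {"Management Node 2": mn2, "Management Virtual IP": vip, "Default Gateway": gateway}
    for label, addr in others.items():
        if ipaddress.IPv4Address(addr) not in subnet:
            problems.append(f"{label} {addr} is outside {subnet}")
    node_addresses = [mn1, mn2, vip]
    if len(set(node_addresses)) != len(node_addresses):
        problems.append("the management node IPs and the virtual IP must be three distinct addresses")
    return problems


print(check_management_network("10.100.1.101", "10.100.1.102", "10.100.1.100",
                               "255.255.255.0", "10.100.1.1"))  # []
```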

Figure 2-5 Data Center Network Tab

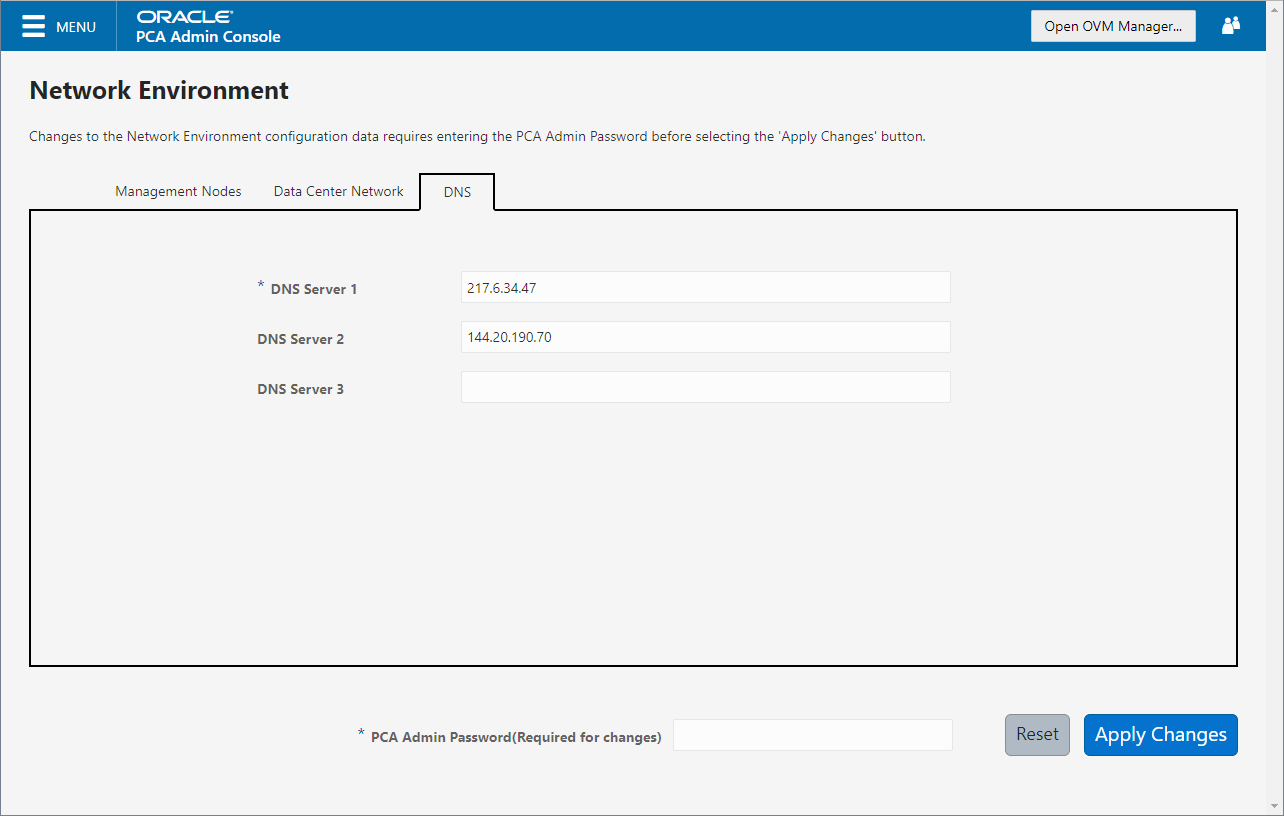

Figure 2-6 shows the DNS tab. The following fields are available for configuration:

-

DNS Server 1: Specify at least one DNS server that the management nodes can use for domain name resolution.

-

DNS Server 2: Optionally, specify a second DNS server.

-

DNS Server 3: Optionally, specify a third DNS server.

Figure 2-6 DNS Tab

You must enter the current Private Cloud Appliance Admin account password to make changes to any of these settings. Clicking the Apply Changes button at the bottom of the page saves the settings that are currently filled out in all three Network Environment tabs, and updates the configuration on each of the management nodes. The ovca services are restarted in the process, so you must log back in to the Dashboard afterward.

Functional Networking Limitations

There are different levels and areas of network configuration in an Oracle Private Cloud Appliance environment. For the correct operation of both the host infrastructure and the virtualized environment, it is critical that the administrator can make a functional distinction between the different categories of networking, and knows how and where to configure all of them. This section is intended as guidance to select the suitable interface to perform the main network administration operations.

In terms of functionality, practically all networks operate either at the appliance level or the virtualization level. Each has its own administrative interface: Oracle Private Cloud Appliance Dashboard and CLI on the one hand, and Oracle VM Manager on the other. However, the network configuration is not as clearly separated, because networking in Oracle VM depends heavily on existing configuration at the infrastructure level. For example, configuring a new public virtual machine network in Oracle VM Manager requires that the hosts or compute nodes have network ports already connected to an underlying network with a gateway to the data center network or internet.

A significant amount of configuration, networking and other, is pushed from the appliance level to Oracle VM during compute node provisioning. This implies a hierarchy: explore appliance-level configuration operations before you consider making changes in Oracle VM Manager beyond standard virtual machine management.

Network Configuration

This section describes the Oracle Private Cloud Appliance and Oracle VM network configuration.

-

Virtual Machine Network

By default, a fully provisioned Private Cloud Appliance is ready for virtual machine deployment. In Oracle VM Manager you can connect virtual machines to these networks directly:

-

default_external, created on the vx13040 VxLAN interfaces of all compute nodes during provisioning

-

default_internal, created on the vx2 VxLAN interfaces of all compute nodes during provisioning

Also, you can create additional VLAN interfaces and VLANs with the Virtual Machine role. For virtual machines requiring public connectivity, use the compute nodes' vx13040 VxLAN interfaces. For internal-only VM traffic, use the vx2 VxLAN interfaces. For details, see Configuring Network Resources for Virtual Machines.

Note:

Do not create virtual machine networks using the ethx ports. These are detected in Oracle VM Manager as physical compute node network interfaces, but they are not cabled. Also, the bondx ports and default VLAN interfaces (tun-ext, tun-int, mgmt-int and storage-int) that appear in Oracle VM Manager are part of the appliance infrastructure networking, and are not intended to be used in VM network configurations.

Virtual machine networking can be further diversified and segregated by means of custom networks, which are described below. Custom networks must be created in the Private Cloud Appliance CLI. This generates additional VxLAN interfaces equivalent to the default vx13040 and vx2. The custom networks and associated network interfaces are automatically set up in Oracle VM Manager, where you can expand the virtual machine network configuration with those newly discovered network resources.

-

Custom Network

Custom networks are infrastructure networks you create in addition to the default configuration. These are constructed in the same way as the default private and public networks, but using different compute node network interfaces and terminating on different spine switch ports. Whenever public connectivity is required, additional cabling between the spine switches and the next-level data center switches is required.

Because they are part of the infrastructure underlying Oracle VM, all custom networks must be configured through the Private Cloud Appliance CLI. The administrator chooses between three types: private, public or host network. For detailed information about the purpose and configuration of each type, see Network Customization.

If your environment has additional tenant groups, which are separate Oracle VM server pools, then a custom network can be associated with one or more tenant groups. This allows you to securely separate traffic belonging to different tenant groups and the virtual machines deployed as part of them. For details, see Tenant Groups.

Once custom networks have been fully configured through the Private Cloud Appliance CLI, the networks and associated ports automatically appear in Oracle VM Manager. There, additional VLAN interfaces can be configured on top of the new VxLAN interfaces, and then used to create more VLANs for virtual machine connectivity. The host network is a special type of custom public network, which can assume the Storage network role and can be used to connect external storage directly to compute nodes.

-

Network Properties

The network role is a property used within Oracle VM. Most of the networks you configure have the Virtual Machine role, although you could decide to use a separate network for storage connectivity or virtual machine migration. Network roles, and other properties such as name and description, which interfaces are connected, properties of the interfaces and so on, can be configured in Oracle VM Manager, as long as they do not conflict with properties defined at the appliance level.

Modifying network properties of the VM networks you configured in Oracle VM Manager involves little risk. However, you must not change the configuration (such as network roles, ports and so on) of the default networks: eth_management, mgmt_internal, storage_internal, underlay_external, underlay_internal, default_external, and default_internal. For networks connecting compute nodes, including custom networks, you must use the Private Cloud Appliance CLI. Furthermore, you cannot modify the functional properties of a custom network: you have to delete it and create a new one with the required properties.

The maximum transmission unit (MTU) of a network interface, standard port or bond, cannot be modified. It is determined by the hardware properties or the SDN configuration, which cannot be controlled from within Oracle VM Manager.

-

VLAN Management

With the exception of the underlay VLAN networks configured through SDN, and the appliance management VLAN you configure in the Data Center Network tab of the Network Environment window in the Oracle Private Cloud Appliance Dashboard, all VLAN configuration and management operations are performed in Oracle VM Manager. These VLANs are part of the VM networking.

Tip:

When a large number of VLANs are required, it is good practice not to generate them all at once, because the process is time-consuming. Instead, add (or remove) VLANs in groups of 10.
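When you plan a large VLAN rollout, splitting the ID list into groups of 10 in advance makes the tip easy to follow. The following Python sketch only produces the batches. It does not talk to Oracle VM Manager, and `vlan_batches` is a hypothetical helper. Creating each group and waiting for it to complete is still done in Oracle VM Manager.

```python
from typing import Iterable, Iterator, List


def vlan_batches(vlan_ids: Iterable[int], size: int = 10) -> Iterator[List[int]]:
    """Yield VLAN IDs in consecutive groups of `size` (10, per the tip above)."""
    if size < 1:
        raise ValueError("size must be positive")
    batch: List[int] = []
    for vlan_id in vlan_ids:
        batch.append(vlan_id)
        if len(batch) == size:
            yield batch
            batch = []
    if batch:  # the last, possibly shorter, group
        yield batch


for group in vlan_batches(range(100, 125)):
    # Create this group in Oracle VM Manager and let it finish before starting the next one.
    print(group[0], "-", group[-1], f"({len(group)} VLANs)")
```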

Network Customization

The Oracle Private Cloud Appliance controller software allows you to add custom networks at the appliance level. This means that certain hardware components require configuration changes to enable the additional connectivity. The new networks are then configured automatically in your Oracle VM environment, where they can be used for isolating and optimizing network traffic beyond the capabilities of the default network configuration. All custom networks, both internal and public, are VLAN-capable.

The virtual machines hosted on the Private Cloud Appliance have access to external compute resources and storage, through the default external facing networks, as soon as the Oracle VM Manager is accessible.

If you need additional network functionality, custom networks can be configured for virtual machines and compute nodes. For example, a custom network can provide virtual machines with additional bandwidth or additional access to external compute resources or storage. Or you can use a custom network if compute nodes need to access storage repositories and data disks contained on external storage. The sections below describe how to configure and cable your Private Cloud Appliance for these custom networks.

Attention:

Do not modify the network configuration while upgrade operations are running. No management operations are supported during upgrade, as these may lead to configuration inconsistencies and significant repair downtime.

Attention:

Custom networks must never be deleted in Oracle VM Manager. Doing so would leave the environment in an error state that is extremely difficult to repair. To avoid downtime and data loss, always perform custom network operations in the Private Cloud Appliance CLI.

Caution:

The following network limitations apply:

-

The maximum number of custom external networks is 7 per tenant group or per compute node.

-

The maximum number of custom internal networks is 3 per tenant group or per compute node.

-

The maximum number of VLANs is 256 per tenant group or per compute node.

-

Only one host network can be assigned per tenant group or per compute node.
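A planned set of custom networks for one tenant group or compute node can be checked against these limits before you run any CLI commands. The following Python sketch encodes the numbers from the list above. The type labels "external", "internal" and "host" and the function name are hypothetical. This section does not state whether a host network also counts toward the external-network limit, so the sketch counts it only against the host-network limit. Treat that as an assumption.

```python
from collections import Counter

# Per tenant group (or per compute node) limits from the list above.
LIMITS = {"external": 7, "internal": 3, "host": 1}
MAX_VLANS = 256


def check_limits(network_types: list, vlan_count: int) -> list:
    """Return the limits that a planned configuration would exceed (empty list if none).

    network_types holds one hypothetical label per planned custom network:
    'external', 'internal' or 'host'.
    """
    counts = Counter(network_types)
    unknown = set(counts) - set(LIMITS)
    if unknown:
        raise ValueError(f"unknown network type(s): {sorted(unknown)}")
    problems = []
    for kind, limit in LIMITS.items():
        if counts[kind] > limit:
            problems.append(f"{counts[kind]} {kind} networks exceed the limit of {limit}")
    if vlan_count > MAX_VLANS:
        problems.append(f"{vlan_count} VLANs exceed the limit of {MAX_VLANS}")
    return problems


print(check_limits(["internal"] * 4, 10))  # ['4 internal networks exceed the limit of 3']
```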

Caution:

When configuring custom networks, make sure that no provisioning operations or virtual machine environment modifications take place. This might lock Oracle VM resources and cause your Private Cloud Appliance CLI commands to fail.

Creating custom networks requires use of the CLI. The administrator chooses between three types: a network internal to the appliance, a network with external connectivity, or a host network. Custom networks appear automatically in Oracle VM Manager. The internal and external networks take the virtual machine network role, while a host network may have the virtual machine and storage network roles.

The host network is a particular type of external network: its configuration contains additional parameters for subnet and routing. The servers connected to it also receive an IP address in that subnet, and consequently can connect to an external network device. The host network is particularly useful for direct access to storage devices.

Configuring Custom Networks

For all networks with external connectivity, the spine Cisco Nexus 9336C-FX2 Switch ports must be specified so that these are reconfigured to route the external traffic. These ports must be cabled to create the physical uplink to the next-level switches in the data center. For detailed information, refer to "Appliance Uplink Configuration" in Network Requirements in the Oracle Private Cloud Appliance Installation Guide.

Creating a Custom Network

-

Using SSH and an account with superuser privileges, log in to the active management node.

    # ssh root@10.100.1.101
    root@10.100.1.101's password:
    [root@ovcamn05r1 ~]#

-

Launch the Private Cloud Appliance command line interface.

    # pca-admin
    Welcome to PCA! Release: 2.4.4
    PCA>

-

If your custom network requires public connectivity, you need to use one or more spine switch ports. Verify the number of ports available and carefully plan your network customizations accordingly. The following example shows how to retrieve that information from your system:

    PCA> list network-port

    Port   Switch       Type         State   Networks
    ----   ------       ----         -----   --------
    1:1    ovcasw22r1   10G          down    None
    1:2    ovcasw22r1   10G          down    None
    1:3    ovcasw22r1   10G          down    None
    1:4    ovcasw22r1   10G          down    None
    2      ovcasw22r1   40G          up      None
    3      ovcasw22r1   auto-speed   down    None
    4      ovcasw22r1   auto-speed   down    None
    5:1    ovcasw22r1   10G          up      default_external
    5:2    ovcasw22r1   10G          down    default_external
    5:3    ovcasw22r1   10G          down    None
    5:4    ovcasw22r1   10G          down    None
    1:1    ovcasw23r1   10G          down    None
    1:2    ovcasw23r1   10G          down    None
    1:3    ovcasw23r1   10G          down    None
    1:4    ovcasw23r1   10G          down    None
    2      ovcasw23r1   40G          up      None
    3      ovcasw23r1   auto-speed   down    None
    4      ovcasw23r1   auto-speed   down    None
    5:1    ovcasw23r1   10G          up      default_external
    5:2    ovcasw23r1   10G          down    default_external
    5:3    ovcasw23r1   10G          down    None
    5:4    ovcasw23r1   10G          down    None
    -----------------
    22 rows displayed

    Status: Success

-

For a custom network with external connectivity, configure an uplink port group with the uplink ports you wish to use for this traffic. Select the appropriate breakout mode.

    PCA> create uplink-port-group MyUplinkPortGroup '1:1 1:2' 10g-4x
    Status: Success

Note:

The port arguments are specified as 'x:y', where x is the switch port number and y is the number of the breakout port, in case a splitter cable is attached to the switch port. The example above shows how to retrieve that information.

You must set the breakout mode of the uplink port group. When a 4-way breakout cable is used, all four ports must be set to either 10Gbit or 25Gbit. When no breakout cable is used, the port speed for the uplink port group should be either 100Gbit or 40Gbit, depending on connectivity requirements. See create uplink-port-group for command details.

Network ports cannot be part of more than one network configuration.

-

Create a new network and select one of these types:

-

rack_internal_network: an Oracle VM virtual machine network with no access to external networking; no IP addresses are assigned to compute nodes. Use this option to allow virtual machines additional bandwidth beyond the default internal network.

-

external_network: an Oracle VM virtual machine network with access to external networking; no IP addresses are assigned to compute nodes. Use this option to allow virtual machines additional bandwidth when accessing external storage on a physical network separate from the default external facing network.

-

host_network: an Oracle VM compute node network with access to external networking; IP addresses are added to compute nodes. Use this option to allow compute nodes to access storage and compute resources external to the Private Cloud Appliance. This can also be used by virtual machines to access external compute resources just like external_network.

Use the following syntax:

-

For an internal-only network, specify a network name.

```
PCA> create network MyInternalNetwork rack_internal_network
Status: Success
```

-

For an external network, specify a network name and the spine switch port group to be configured for external traffic.

```
PCA> create network MyPublicNetwork external_network MyUplinkPortGroup
Status: Success
```

-

For a host network, specify a network name, the spine switch ports to be configured for external traffic, the subnet, and optionally the routing configuration.

```
PCA> create network MyHostNetwork host_network MyUplinkPortGroup \
10.10.10 255.255.255.0 10.1.20.0/24 10.10.10.250
Status: Success
```

Note:

In this example the additional network and routing arguments for the host network are specified as follows, separated by spaces:

-

10.10.10 = subnet prefix

-

255.255.255.0 = netmask

-

10.1.20.0/24 = route destination (as subnet or IPv4 address)

-

10.10.10.250 = route gateway

The subnet prefix and netmask are used to assign IP addresses to servers joining the network. The optional route gateway and destination parameters are used to configure a static route in the server's routing table. The route destination is a single IP address by default, so you must specify a netmask if traffic could be intended for different IP addresses in a subnet.

When you define a host network, it is possible to enter invalid or contradictory values for the Prefix, Netmask and Route_Destination parameters. For example, when you enter a prefix with "0" as the first octet, the system attempts to configure IP addresses on compute node Ethernet interfaces starting with 0. Also, when the netmask part of the route destination you enter is invalid, the network is still created, even though an exception occurs. When such a poorly configured network is in an invalid state, it cannot be reconfigured or deleted with standard commands. If an invalid network configuration is applied, use the --force option to delete the network.

Details of the create network command arguments are provided in create network in the CLI reference chapter.

Caution:

Network and routing parameters of a host network cannot be modified. To change these settings, delete the custom network and re-create it with updated settings.

-

Connect the required servers to the new custom network. You must provide the network name and the names of the servers to connect.

```
PCA> add network MyPublicNetwork ovcacn07r1
Status: Success
PCA> add network MyPublicNetwork ovcacn08r1
Status: Success
PCA> add network MyPublicNetwork ovcacn09r1
Status: Success
```

-

Verify the configuration of the new custom network.

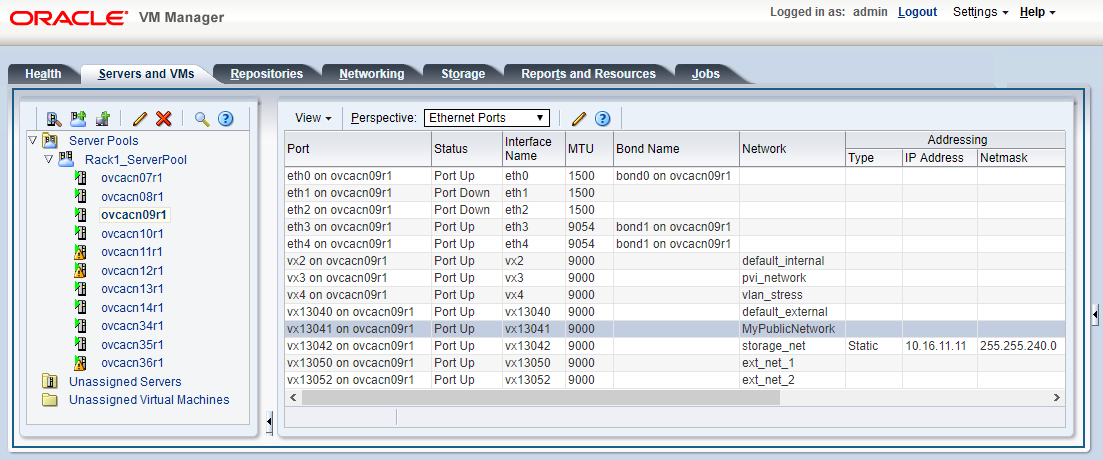

```
PCA> show network MyPublicNetwork
----------------------------------------
Network_Name         MyPublicNetwork
Trunkmode            None
Description          None
Ports                ['1:1', '1:2']
vNICs                None
Status               ready
Network_Type         external_network
Compute_Nodes        ovcacn07r1, ovcacn08r1, ovcacn09r1
Prefix               None
Netmask              None
Route_Destination    None
Route_Gateway        None
----------------------------------------

Status: Success
```

As a result of these commands, a VxLAN interface is configured on each of the servers to connect them to the new custom network. These configuration changes are reflected in the Networking tab and the Servers and VMs tab in Oracle VM Manager.

Note:

If the custom network is a host network, the server is assigned an IP address based on the prefix and netmask parameters of the network configuration, and the final octet of the server's internal management IP address.

For example, if the compute node with internal IP address 192.168.4.9 were connected to the host network used for illustration purposes in this procedure, it would receive the address 10.10.10.9 in the host network.

Figure 2-7 shows a custom network named MyPublicNetwork, which is VLAN-capable and uses the compute node's vx13041 interface.

Figure 2-7 Oracle VM Manager View of Custom Network Configuration

-

To disconnect servers from the custom network, use the remove network command.

Attention:

Before removing the network connection of a server, make sure that no virtual machines rely on this network.

When a server is no longer connected to a custom network, make sure that its port configuration is cleaned up in Oracle VM.

```
PCA> remove network MyPublicNetwork ovcacn09r1
************************************************************
 WARNING !!! THIS IS A DESTRUCTIVE OPERATION.
************************************************************
Are you sure [y/N]:y

Status: Success
```
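The host network addressing rule from the Note in this procedure fits in a few lines of code. The following Python sketch is a hypothetical pre-flight helper, not part of pca-admin. It derives a compute node's host network address from the subnet prefix and the final octet of the node's internal management IP address. It also rejects the invalid values called out earlier: a prefix starting with 0, an invalid netmask, or a malformed route destination. The function names are illustrative. The sketch assumes a three-octet prefix such as 10.10.10, as in the example.

```python
import ipaddress


def host_network_address(prefix: str, internal_ip: str) -> str:
    """Hypothetical helper: prefix '10.10.10' + internal IP '192.168.4.9' -> '10.10.10.9'."""
    octets = prefix.split(".")
    # Assumption: the prefix has exactly three decimal octets, as in the example.
    if len(octets) != 3 or not all(o.isdigit() and int(o) <= 255 for o in octets):
        raise ValueError(f"prefix must be three octets, got {prefix!r}")
    if int(octets[0]) == 0:
        # A leading 0 would produce addresses such as 0.x.y.z on compute nodes.
        raise ValueError("prefix must not start with 0")
    # The last byte of the packed IPv4 address is the final octet.
    final_octet = ipaddress.IPv4Address(internal_ip).packed[-1]
    return f"{prefix}.{final_octet}"


def netmask_prefix_length(netmask: str) -> int:
    """Return the prefix length of a dotted netmask; raises ValueError if invalid."""
    return ipaddress.IPv4Network(f"0.0.0.0/{netmask}").prefixlen


def route_destination(destination: str) -> ipaddress.IPv4Network:
    """Parse a route destination given as a subnet or a single IPv4 address.

    A bare address becomes a /32 network. Destinations with host bits set
    (for example 10.1.20.5/24) are rejected, which is a conservative choice
    of this sketch rather than documented CLI behavior."""
    return ipaddress.IPv4Network(destination)


if __name__ == "__main__":
    print(host_network_address("10.10.10", "192.168.4.9"))  # 10.10.10.9
    print(netmask_prefix_length("255.255.255.0"))           # 24
    print(route_destination("10.1.20.0/24"))                 # 10.1.20.0/24
```

All three helpers raise ValueError (or an ipaddress subclass of it) on bad input, so a wrapper script can stop before issuing create network.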

Deleting Custom Networks

This section describes how to delete custom networks.

Deleting a Custom Network

Caution:

Before deleting a custom network, make sure that all servers have been disconnected from it.

-

Using SSH and an account with superuser privileges, log into the active management node.

```
# ssh root@10.100.1.101
root@10.100.1.101's password:
[root@ovcamn05r1 ~]#
```

-

Launch the Oracle Private Cloud Appliance command line interface.

```
# pca-admin
Welcome to PCA! Release: 2.4.4
PCA>
```

-

Verify that all servers have been disconnected from the custom network. No vNICs or nodes should appear in the network configuration.

Caution:

Related configuration changes in Oracle VM must be cleaned up as well.

```
PCA> show network MyPublicNetwork
----------------------------------------
Network_Name         MyPublicNetwork
Trunkmode            None
Description          None
Ports                ['1:1', '1:2']
vNICs                None
Status               ready
Network_Type         external_network
Compute_Nodes        None
Prefix               None
Netmask              None
Route_Destination    None
Route_Gateway        None
----------------------------------------
```

-

Delete the custom network.

```
PCA> delete network MyPublicNetwork
************************************************************
 WARNING !!! THIS IS A DESTRUCTIVE OPERATION.
************************************************************
Are you sure [y/N]:y

Status: Success
```

Caution:

If a custom network is left in an invalid or error state, and the delete command fails, you may use the --force option and retry.
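The verification step in this procedure (no vNICs and no compute nodes in the show network output) can be scripted. The following Python sketch is illustrative only. It assumes the show network output has already been captured as text, with each field on its own line as a name followed by its value, as in the examples in this section. The function names are hypothetical.

```python
def parse_show_output(text: str) -> dict:
    """Turn 'Name  Value' lines from a pca-admin show command into a dict.

    Separator lines made of dashes, blank lines and the 'Status:' line are skipped."""
    fields = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or set(line) == {"-"} or line.startswith("Status:"):
            continue
        # The field name is the first token; the rest of the line is the value.
        name, _, value = line.partition(" ")
        fields[name] = value.strip()
    return fields


def ready_for_deletion(fields: dict) -> bool:
    """A custom network is ready to delete when no compute nodes or vNICs remain."""
    return fields.get("Compute_Nodes") == "None" and fields.get("vNICs") == "None"


SAMPLE = """\
----------------------------------------
Network_Name         MyPublicNetwork
Trunkmode            None
Ports                ['1:1', '1:2']
vNICs                None
Status               ready
Network_Type         external_network
Compute_Nodes        None
----------------------------------------
"""

if __name__ == "__main__":
    print(ready_for_deletion(parse_show_output(SAMPLE)))  # True
```

This check covers only what pca-admin reports. The related cleanup in Oracle VM still has to be confirmed separately.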

VM Storage Networks

Starting with Oracle Private Cloud Appliance Controller Software release 2.4.3, you can configure private storage networks that grant users access to the internal ZFS Storage Appliance from their Oracle VM environment. Private Cloud Appliance administrators with root access to the management nodes can create and manage the required networks and ZFS shares (iSCSI/NFS) using the pca-admin command line interface. To ensure you can use this functionality, upgrade the storage network as described in Upgrading the Storage Network.

Private Cloud Appliance administrators can create up to sixteen VM storage networks which can be accessed by any virtual machine in any tenant group. End users of virtual machines configure their guest operating system to access one or more of these internal storage networks through NFS or iSCSI once the Private Cloud Appliance administrator has completed the setup.

The VM storage networks are designed to isolate different business systems or groups of end users from each other. For example, the HR department can use two VM storage networks for their virtual machines, while the payroll department can have three or four VM storage networks of their own. Each VM storage network is assigned a single, private non-routed VxLAN to ensure the network is isolated from other virtual machines owned by different end users. End users cannot gain root access to manage the internal ZFS Storage Appliance through the VM storage networks.

The ability to define internal storage networks directly for VMs was introduced in Oracle Private Cloud Appliance Controller Software release 2.4.3. Refer to Oracle Support Document 2722899.1 for important details before using this feature. Should you have any questions, contact Oracle support.

Tenant Groups

A standard Oracle Private Cloud Appliance environment built on a full rack configuration contains 25 compute nodes. A tenant group is a logical subset of a single Private Cloud Appliance environment. Tenant groups provide an optional mechanism for a Private Cloud Appliance administrator to subdivide the environment in arbitrary ways for manageability and isolation. The tenant group offers a means to isolate compute, network and storage resources per end customer. It also offers isolation from cluster faults.

Design Assumptions and Restrictions

Oracle Private Cloud Appliance supports a maximum of 8 tenant groups. This number includes the default tenant group, which cannot be deleted from the environment, and must always contain at least one compute node. Therefore, a single custom tenant group can contain up to 24 compute nodes, while the default Rack1_ServerPool can contain all 25.
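These limits can be checked before any changes are made. The following Python sketch validates a proposed layout against the rules stated in this section: at most 8 tenant groups including the default one, at least one compute node in Rack1_ServerPool, and each compute node in only one tenant group. The layout is a hypothetical mapping of tenant group names to compute node names. The 25-node total reflects a full rack. It is a parameter here because the actual count depends on your configuration.

```python
MAX_TENANT_GROUPS = 8          # includes the default tenant group
DEFAULT_GROUP = "Rack1_ServerPool"


def validate_layout(layout: dict, total_nodes: int = 25) -> list:
    """Return human-readable rule violations; an empty list means the layout fits."""
    problems = []
    if len(layout) > MAX_TENANT_GROUPS:
        problems.append(f"{len(layout)} tenant groups exceed the maximum of {MAX_TENANT_GROUPS}")
    if not layout.get(DEFAULT_GROUP):
        problems.append(f"{DEFAULT_GROUP} must contain at least one compute node")
    owner = {}  # compute node -> first tenant group it was listed in
    for group, nodes in layout.items():
        for node in nodes:
            if node in owner:
                problems.append(f"{node} is in both {owner[node]} and {group}")
            else:
                owner[node] = group
    if len(owner) > total_nodes:
        problems.append(f"{len(owner)} compute nodes exceed the rack total of {total_nodes}")
    return problems
```

Because the default group must keep at least one node, a layout that passes these checks can place at most total_nodes - 1 (24 in a full rack) compute nodes in a single custom tenant group.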

Regardless of tenant group membership, all compute nodes are connected to all of the default Private Cloud Appliance networks. Custom networks can be assigned to multiple tenant groups. When a compute node joins a tenant group, it is also connected to the custom networks associated with the tenant group. When you remove a compute node from a tenant group, it is disconnected from those custom networks. A synchronization mechanism, built into the tenant group functionality, keeps compute node network connections up to date when tenant group configurations change.

When you reprovision compute nodes, they are automatically removed from their tenant groups, and treated as new servers. Consequently, when a compute node is reprovisioned, or when a new compute node is added to the environment, it is added automatically to Rack1_ServerPool. After successful provisioning, you can add the compute node to the appropriate tenant group.
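The membership and synchronization behavior described in the preceding paragraphs can be summarized as a small state model. The following Python sketch is a simplified, hypothetical model, not the appliance's synchronization service. It tracks only which networks a compute node should be connected to. The default network names come from the show tenant-group output shown later in this chapter. When a node is removed from a tenant group, the sketch only clears its group membership; which pool the node belongs to afterwards is not modeled.

```python
DEFAULT_GROUP = "Rack1_ServerPool"
DEFAULT_NETWORKS = frozenset({
    "storage_internal", "mgmt_internal", "underlay_internal",
    "underlay_external", "default_external", "default_internal",
})


class TenantGroupModel:
    """Simplified model of tenant group membership and custom network sync."""

    def __init__(self):
        self.group_networks = {DEFAULT_GROUP: set()}  # group -> custom networks
        self.node_group = {}                          # compute node -> group or None
        self.node_networks = {}                       # compute node -> directly added networks

    def create_group(self, group):
        self.group_networks.setdefault(group, set())

    def provision(self, node):
        """New and reprovisioned nodes are placed in the default group.

        Directly added networks are left untouched (an assumption of this sketch)."""
        self.node_group[node] = DEFAULT_GROUP
        self.node_networks.setdefault(node, set())

    def add_network(self, network, node):
        """Like 'add network': connect one compute node to a custom network directly."""
        self.node_networks[node].add(network)

    def add_network_to_group(self, network, group):
        self.group_networks[group].add(network)

    def add_compute_node(self, node, group):
        """Moving a node implicitly removes it from its previous tenant group."""
        self.node_group[node] = group

    def remove_compute_node(self, node):
        self.node_group[node] = None

    def networks_for(self, node):
        """Default networks are always present; group networks follow membership."""
        group = self.node_group.get(node)
        group_nets = self.group_networks.get(group, set()) if group else set()
        return set(DEFAULT_NETWORKS) | self.node_networks[node] | group_nets
```

The model mirrors one documented rule: removing a node from a tenant group drops only that group's custom networks. Networks connected directly with add network stay in place.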

When you create a new tenant group, the system does not create a storage repository for the new tenant group. An administrator must configure the necessary storage resources for virtual machines in Oracle VM Manager. See Viewing and Managing Storage Resources.

Configuring Tenant Groups

The tenant group functionality can be accessed through the Oracle Private Cloud Appliance CLI. With a specific set of commands, you manage the tenant groups, their member compute nodes, and the associated custom networks. The CLI initiates a number of Oracle VM operations to set up the server pool, and a synchronization service maintains settings across the members of the tenant group.

Attention:

Do not modify the tenant group configuration while upgrade operations are running. No management operations are supported during upgrade, as these may lead to configuration inconsistencies and significant repair downtime.

Caution:

You must not modify the server pool in Oracle VM Manager because this causes inconsistencies in the tenant group configuration and disrupts the operation of the synchronization service and the Private Cloud Appliance CLI. Only server pool policies may be edited in Oracle VM Manager.

If you inadvertently used Oracle VM Manager to modify a tenant group, see Recovering from Tenant Group Configuration Mismatches.

Note:

For detailed information about the Private Cloud Appliance CLI tenant group commands, see Oracle Private Cloud Appliance Command Line Interface (CLI).

Creating and Populating a Tenant Group

-

Using SSH and an account with superuser privileges, log into the active management node.

```
# ssh root@10.100.1.101
root@10.100.1.101's password:
[root@ovcamn05r1 ~]#
```

-

Launch the Private Cloud Appliance command line interface.

```
# pca-admin
Welcome to PCA! Release: 2.4.4
PCA>
```

-

Create the new tenant group.

```
PCA> create tenant-group myTenantGroup
Status: Success

PCA> show tenant-group myTenantGroup
----------------------------------------
Name                 myTenantGroup
Default              False
Tenant_Group_ID      0004fb00000200008154bf592c8ac33b
Servers              None
State                ready
Tenant_Group_VIP     None
Tenant_Networks      ['storage_internal', 'mgmt_internal', 'underlay_internal', 'underlay_external', 'default_external', 'default_internal']
Pool_Filesystem_ID   3600144f0d04414f400005cf529410003
----------------------------------------

Status: Success
```

The new tenant group appears in Oracle VM Manager as a new server pool. It has a 12GB server pool file system located on the internal ZFS Storage Appliance.

-

Add compute nodes to the tenant group.

If a compute node is currently part of another tenant group, it is first removed from that tenant group.

Caution:

If the compute node is hosting virtual machines, or if storage repositories are presented to the compute node or its current tenant group, removing a compute node from an existing tenant group will fail. If so, you have to migrate the virtual machines and unpresent the repositories before adding the compute node to a new tenant group.

```
PCA> add compute-node ovcacn07r1 myTenantGroup
Status: Success
PCA> add compute-node ovcacn09r1 myTenantGroup
Status: Success
```

-

Add a custom network to the tenant group.

```
PCA> add network-to-tenant-group myPublicNetwork myTenantGroup
Status: Success
```

Custom networks can be added to the tenant group as a whole. This command creates synchronization tasks to configure custom networks on each server in the tenant group.

Caution:

While synchronization tasks are running, make sure that no reboot or provisioning operations are started on any of the compute nodes involved in the configuration changes.

-

Verify the configuration of the new tenant group.

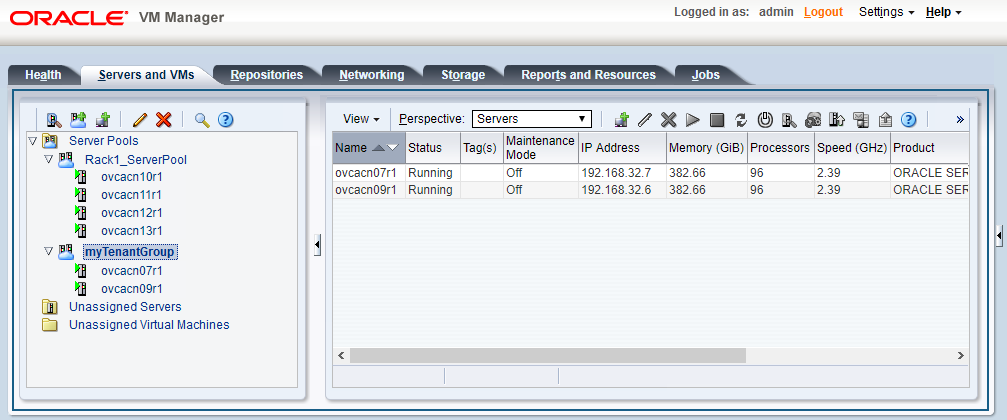

```
PCA> show tenant-group myTenantGroup
----------------------------------------
Name                 myTenantGroup
Default              False
Tenant_Group_ID      0004fb00000200008154bf592c8ac33b
Servers              ['ovcacn07r1', 'ovcacn09r1']
State                ready
Tenant_Group_VIP     None
Tenant_Networks      ['storage_internal', 'mgmt_internal', 'underlay_internal', 'underlay_external', 'default_external', 'default_internal', 'myPublicNetwork']
Pool_Filesystem_ID   3600144f0d04414f400005cf529410003
----------------------------------------

Status: Success
```

The new tenant group corresponds with an Oracle VM server pool with the same name and has a pool file system. The command output also shows that the servers and custom network were added successfully.

These configuration changes are reflected in the Servers and VMs tab in Oracle VM Manager. Figure 2-8 shows a second server pool named MyTenantGroup, which contains the two compute nodes that were added as examples in the course of this procedure.

Note:

The system does not create a storage repository for a new tenant group. An administrator must configure the necessary storage resources for virtual machines in Oracle VM Manager. See Viewing and Managing Storage Resources.

Figure 2-8 Oracle VM Manager View of New Tenant Group
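The verification step of this procedure can also be scripted. The following Python sketch parses captured show tenant-group output. In the examples above, the Servers and Tenant_Networks values are printed as Python-style lists, so the sketch converts them with ast.literal_eval. It then checks that the group is ready and lists the expected servers and networks. The function names, and the assumption that each field occupies a single line, are illustrative. Adjust the parser if your output is formatted differently.

```python
import ast


def parse_tenant_group(text: str) -> dict:
    """Parse 'Name  Value' lines of show tenant-group output into typed values."""
    fields = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or set(line) == {"-"} or line.startswith("Status:"):
            continue
        name, _, value = line.partition(" ")
        value = value.strip()
        if value.startswith("["):
            # e.g. ['ovcacn07r1', 'ovcacn09r1'] becomes a Python list
            fields[name] = ast.literal_eval(value)
        elif value == "None":
            fields[name] = None
        else:
            fields[name] = value
    return fields


def tenant_group_ok(fields: dict, servers, networks) -> bool:
    """True when the group is ready and lists every expected server and network."""
    present_servers = set(fields.get("Servers") or [])
    present_networks = set(fields.get("Tenant_Networks") or [])
    return (fields.get("State") == "ready"
            and set(servers) <= present_servers
            and set(networks) <= present_networks)
```

Calling tenant_group_ok(fields, ["ovcacn07r1", "ovcacn09r1"], ["myPublicNetwork"]) on the output above should return True once synchronization has finished.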

Reconfiguring and Deleting a Tenant Group

-

Identify the tenant group you intend to modify.

```
PCA> list tenant-group

Name               Default   State
----               -------   -----
Rack1_ServerPool   True      ready
myTenantGroup      False     ready
----------------
2 rows displayed

Status: Success

PCA> show tenant-group myTenantGroup
----------------------------------------
Name                 myTenantGroup
Default              False
Tenant_Group_ID      0004fb00000200008154bf592c8ac33b
Servers              ['ovcacn07r1', 'ovcacn09r1']
State                ready
Tenant_Group_VIP     None
Tenant_Networks      ['storage_internal', 'mgmt_internal', 'underlay_internal', 'underlay_external', 'default_external', 'default_internal', 'myPublicNetwork']
Pool_Filesystem_ID   3600144f0d04414f400005cf529410003
----------------------------------------

Status: Success
```

-

Remove a network from the tenant group.

A custom network that has been associated with a tenant group can be removed again. The command results in serial operations, not using the synchronization service, to unconfigure the custom network on each compute node in the tenant group.

```
PCA> remove network-from-tenant-group myPublicNetwork myTenantGroup
************************************************************
 WARNING !!! THIS IS A DESTRUCTIVE OPERATION.
************************************************************
Are you sure [y/N]:y

Status: Success
```

-

Remove a compute node from the tenant group.

Use Oracle VM Manager to prepare the compute node for removal from the tenant group. Make sure that virtual machines have been migrated away from the compute node, and that no storage repositories are presented.

```
PCA> remove compute-node ovcacn09r1 myTenantGroup
************************************************************
 WARNING !!! THIS IS A DESTRUCTIVE OPERATION.
************************************************************
Are you sure [y/N]:y

Status: Success
```

When you remove a compute node from a tenant group, any custom network associated with the tenant group is automatically removed from the compute node network configuration. Custom networks that are not associated with the tenant group are not removed.

-

Delete the tenant group.

Before attempting to delete a tenant group, make sure that all compute nodes have been removed.

Before removing the last remaining compute node from the tenant group, use Oracle VM Manager to unpresent any shared repository from the compute node, and then release ownership of it. For details, see support document Remove Last Compute Node from Tenant Group Fails with "There are still OCFS2 file systems" (Doc ID 2653515.1).

```
PCA> delete tenant-group myTenantGroup
************************************************************
 WARNING !!! THIS IS A DESTRUCTIVE OPERATION.
************************************************************
Are you sure [y/N]:y

Status: Success
```

When the tenant group is deleted, operations are launched to remove the server pool file system LUN from the internal ZFS storage appliance. The tenant group's associated custom networks are not destroyed.
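The order of the steps in this procedure matters: the procedure removes custom networks and compute nodes before deleting the tenant group itself. The following Python sketch only assembles the pca-admin commands from this procedure, in the order used here, for review or for a change plan. It does not run them. It also cannot perform the Oracle VM Manager preparation that must happen first: migrating virtual machines, and unpresenting and releasing repositories. The function name is hypothetical.

```python
def tenant_group_teardown(group: str, custom_networks, compute_nodes) -> list:
    """Return pca-admin commands to empty and delete a tenant group, in procedure order."""
    commands = [f"remove network-from-tenant-group {net} {group}" for net in custom_networks]
    commands += [f"remove compute-node {node} {group}" for node in compute_nodes]
    # The procedure requires all compute nodes to be removed before this last step.
    commands.append(f"delete tenant-group {group}")
    return commands


if __name__ == "__main__":
    for command in tenant_group_teardown("myTenantGroup", ["myPublicNetwork"],
                                         ["ovcacn07r1", "ovcacn09r1"]):
        print(command)
```

Each of these commands is destructive and asks for confirmation at the PCA> prompt, so the generated list is meant to be entered and confirmed one command at a time.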

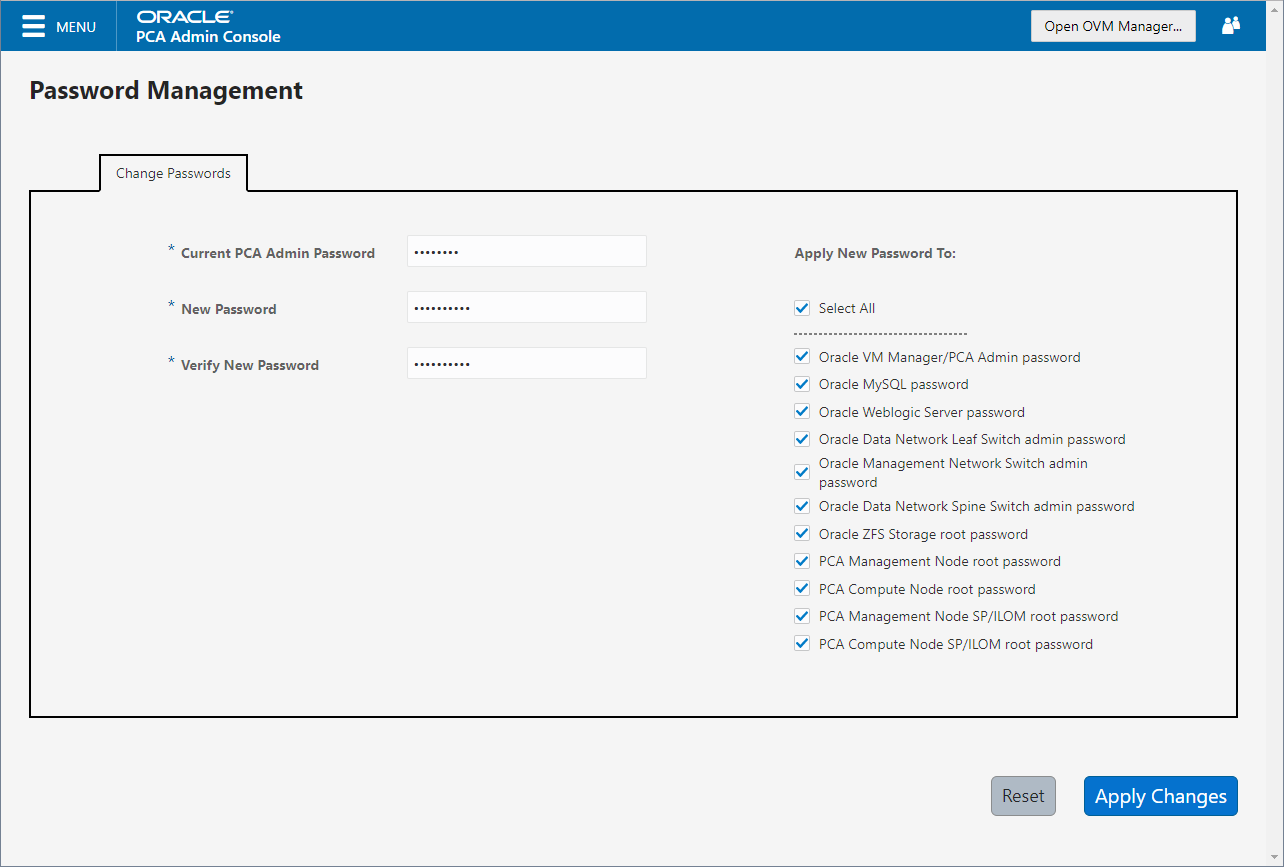

Authentication

The Password Management window is used to reset the global Oracle Private Cloud Appliance password and to set unique passwords for individual components within the appliance. All actions performed via this window require that you enter the current password for the Private Cloud Appliance admin user in the field labeled Current PCA Admin Password. Fields are available to specify the new password value and to confirm the value:

-

Current PCA Admin Password: You must provide the current password for the Private Cloud Appliance admin user before any password changes can be applied.

-

New Password: Provide the value for the new password that you are setting.

-

Verify Password: Confirm the new password and check that you have not mistyped what you intended.

The window provides a series of check boxes that make it easy to select the level of granularity that you wish to apply to a password change. At this time, do not use the Select All button to apply a global password to all components that are used in the appliance; this action resets any individual passwords that you may have set for particular components. For more information, see Changing Multiple Component Passwords Causes Authentication Failure in Oracle VM Manager. For stricter controls, you may set the password for individual components by selecting the check box associated with each component that you wish to apply a password to.

Caution:

Password changes are not instantaneous across the appliance, but are propagated through a task queue. When applying a password change, allow at least 30 minutes for the change to take effect. Do not attempt any further password changes during this delay. Verify that the password change has been applied correctly.

-

Select All: Apply the new password to all components. All components in the list are selected.

-

Oracle VM Manager/PCA admin password: Set the new password for the Oracle VM Manager and Oracle Private Cloud Appliance Dashboard admin user.

-

Oracle MySQL password: Set the new password for the ovs user in MySQL used by Oracle VM Manager.

-

Oracle WebLogic Server password: Set the new password for the weblogic user in WebLogic Server.

-

Oracle Data Network Leaf Switch admin password: Set the new password for the admin user for the leaf Cisco Nexus 9336C-FX2 Switches.

-

Oracle Management Network Switch admin password: Set the new password for the admin user for the Cisco Nexus 9348GC-FXP Switch.

-

Oracle Data Network Spine Switch admin password: Set the new password for the admin user for the spine Cisco Nexus 9336C-FX2 Switches.

-

Oracle ZFS Storage root password: Set the new password for the root user for the ZFS Storage Appliance.

-

PCA Management Node root password: Set the new password for the root user for both management nodes.

-

PCA Compute Node root password: Set the new password for the root user for all compute nodes.

-

PCA Management Node SP/ILOM root password: Set the new password for the root user for the ILOM on both management nodes.

-

PCA Compute Node SP/ILOM root password: Set the new password for the root user for the ILOM on all compute nodes.

Figure 2-9 Password Management

The functionality that is available in the Oracle Private Cloud Appliance Dashboard is equally available via the Oracle Private Cloud Appliance CLI as described in update password.

Caution:

Passwords of components must not be changed manually as this will cause mismatches with the authentication details stored in the Oracle Private Cloud Appliance Wallet.

Health Monitoring

The Oracle Private Cloud Appliance Controller Software contains a monitoring service, which is started and stopped with the ovca service on the active management node. When the system runs for the first time, it creates an inventory database and a monitor database. Once these are set up and the monitoring service is active, health information about the hardware components is updated continuously.

The inventory database is populated with information about the various components installed in the rack, including the IP addresses to be used for monitoring. With this information, the ping manager pings all known components every 3 minutes and updates the inventory database to indicate whether a component is pingable and when it was last seen online. When errors occur, they are logged in the monitor database. Error information is retrieved from the component ILOMs.
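The ping manager behavior described above amounts to a periodic loop that records, for each component, whether it is reachable and when it was last seen. The following Python sketch models that loop. The record layout and the injected ping function are assumptions for illustration. They do not reflect the actual inventory database schema or how the service sends pings.

```python
import time
from dataclasses import dataclass
from typing import Callable, Dict, Optional

PING_INTERVAL_SECONDS = 3 * 60  # components are pinged every 3 minutes


@dataclass
class InventoryRecord:
    ip: str
    pingable: bool = False
    last_seen: Optional[float] = None  # time of the last successful ping


def ping_cycle(inventory: Dict[str, InventoryRecord],
               ping: Callable[[str], bool], now: float) -> None:
    """Ping every known component once and update its record in place."""
    for record in inventory.values():
        record.pingable = ping(record.ip)
        if record.pingable:
            record.last_seen = now  # a failed ping keeps the previous last_seen


def run(inventory: Dict[str, InventoryRecord],
        ping: Callable[[str], bool], cycles: int) -> None:
    """Run a fixed number of cycles, sleeping between them (blocking)."""
    for i in range(cycles):
        ping_cycle(inventory, ping, time.time())
        if i < cycles - 1:
            time.sleep(PING_INTERVAL_SECONDS)
```

Passing the ping function in as a parameter keeps the update logic testable without network access. A real implementation would call an ICMP ping or a similar probe instead.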

For troubleshooting purposes, historic health status details can be retrieved through the CLI support mode by an authorized Oracle Field Engineer. When the CLI is used in support mode, a number of additional commands are available, two of which are used to display the contents of the health monitoring databases.

-

Use show db inventory to display component health status information from the inventory database.

-

Use show db monitor to display errors logged in the monitoring database.

The appliance administrator can retrieve current component health status information from the Oracle Linux command line on the active management node by using the Oracle Private Cloud Appliance Health Check utility. The Health Check utility is built on the framework of the Oracle Private Cloud Appliance Upgrader, and is included in the Upgrader package. It detects the appliance network architecture and runs the sets of health checks defined for the system in question.

Checking the Current Health Status of an Oracle Private Cloud Appliance Installation

-

Using SSH and an account with superuser privileges, log in to the active management node.

```
# ssh root@10.100.1.101
root@10.100.1.101's password:
[root@ovcamn05r1 ~]#
```

-

Launch the Health Check utility.

```
# pca_healthcheck
PCA Rack Type: PCA X8_BASE.
Please refer to log file
/nfs/shared_storage/pca_upgrader/log/pca_healthcheck_2019_10_04-12.09.45.log
for more details.
```

After detecting the rack type, the utility executes the applicable health checks.

```
Beginning PCA Health Checks...

Check Management Nodes Are Running                   1/24
Check Support Packages                               2/24
Check PCA DBs Exist                                  3/24
PCA Config File                                      4/24
Check Shares Mounted on Management Nodes             5/24
Check PCA Version                                    6/24
Check Installed Packages                             7/24
Check for OpenSSL CVE-2014-0160 - Security Update    8/24
Management Nodes Have IPv6 Disabled                  9/24
Check Oracle VM Manager Version                     10/24
Oracle VM Manager Default Networks                  11/24
Repositories Defined in Oracle VM Manager           12/24
PCA Services                                        13/24
Oracle VM Server Model                              14/24
Network Interfaces on Compute Nodes                 15/24
Oracle VM Manager Settings                          16/24
Check Network Leaf Switch                           17/24
Check Network Spine Switch                          18/24
All Compute Nodes Running                           19/24
Test for ovs-agent Service on Compute Nodes         20/24
Test for Shares Mounted on Compute Nodes            21/24
Check for bash ELSA-2014-1306 - Security Update     22/24
Check Compute Node's Active Network Interfaces      23/24
Checking for xen OVMSA-2014-0026 - Security Update  24/24

PCA Health Checks completed after 2 minutes
```

-

When the health checks have been completed, check the report for failures.

```
Check Management Nodes Are Running                 Passed
Check Support Packages                              Passed
Check PCA DBs Exist                                 Passed
PCA Config File                                     Passed
Check Shares Mounted on Management Nodes            Passed
Check PCA Version                                   Passed
Check Installed Packages                            Passed
Check for OpenSSL CVE-2014-0160 - Security Update   Passed
Management Nodes Have IPv6 Disabled                 Passed
Check Oracle VM Manager Version                     Passed
Oracle VM Manager Default Networks                  Passed
Repositories Defined in Oracle VM Manager           Passed
PCA Services                                        Passed
Oracle VM Server Model                              Passed
Network Interfaces on Compute Nodes                 Passed
Oracle VM Manager Settings                          Passed
Check Network Leaf Switch                           Passed
Check Network Spine Switch                          Failed
All Compute Nodes Running                           Passed
Test for ovs-agent Service on Compute Nodes         Passed
Test for Shares Mounted on Compute Nodes            Passed
Check for bash ELSA-2014-1306 - Security Update     Passed
Check Compute Node's Active Network Interfaces      Passed
Checking for xen OVMSA-2014-0026 - Security Update  Passed

---------------------------------------------------------------------------
Overall Status                                      Failed
---------------------------------------------------------------------------

Please refer to log file
/nfs/shared_storage/pca_upgrader/log/pca_healthcheck_2019_10_04-12.09.45.log
for more details.
```

-

If certain checks have resulted in failures, review the log file for additional diagnostic information. Search for text strings such as "error" and "failed".

```
# grep -inr "failed" /nfs/shared_storage/pca_upgrader/log/pca_healthcheck_2019_10_04-12.09.45.log
726:[2019-10-04 12:10:51 264234] INFO (healthcheck:254) Check Network Spine Switch Failed -
731:  Spine Switch ovcasw22r1 North-South Management Network Port-channel check  [FAILED]
733:  Spine Switch ovcasw22r1 Multicast Route Check  [FAILED]
742:  Spine Switch ovcasw23r1 North-South Management Network Port-channel check  [FAILED]
750:[2019-10-04 12:10:51 264234] ERROR (precheck:148) [Check Network Spine Switch ()] Failed
955:[2019-10-04 12:12:26 264234] INFO (precheck:116) [Check Network Spine Switch ()] Failed

# less /nfs/shared_storage/pca_upgrader/log/pca_healthcheck_2019_10_04-12.09.45.log
[...]
Spine Switch ovcasw22r1 North-South Management Network Port-channel check  [FAILED]
Spine Switch ovcasw22r1 OSPF Neighbor Check  [OK]
Spine Switch ovcasw22r1 Multicast Route Check  [FAILED]
Spine Switch ovcasw22r1 PIM RP Check  [OK]
Spine Switch ovcasw22r1 NVE Peer Check  [OK]
Spine Switch ovcasw22r1 Spine Filesystem Check  [OK]
Spine Switch ovcasw22r1 Hardware Diagnostic Check  [OK]
[...]
```

-

Investigate and fix any detected problems. Repeat the health check until the system passes all checks.
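When the health check is run routinely, the report and the log can be scanned programmatically instead of by eye. The following Python sketch makes two assumptions: the report has been captured as text, and each check occupies one line ending in Passed or Failed, as in the example above. The log scan mirrors the case-insensitive grep for "failed" shown in this procedure. The function names are hypothetical.

```python
from typing import Iterable, List, Optional, Tuple


def failed_checks(report: str) -> List[str]:
    """Names of checks whose result column reads 'Failed'."""
    failures = []
    for raw_line in report.splitlines():
        line = raw_line.strip()
        if line.startswith("Overall Status"):
            continue
        # The result is the last word; everything before it is the check name.
        name, _, result = line.rpartition(" ")
        if result == "Failed":
            failures.append(name.strip())
    return failures


def overall_status(report: str) -> Optional[str]:
    """Value of the 'Overall Status' line, or None if it is missing."""
    for line in report.splitlines():
        if line.strip().startswith("Overall Status"):
            return line.split()[-1]
    return None


def failed_log_lines(lines: Iterable[str]) -> List[Tuple[int, str]]:
    """Like 'grep -in failed': (line number, text) for each matching line."""
    return [(number, line.rstrip("\n"))
            for number, line in enumerate(lines, start=1)
            if "failed" in line.lower()]
```

To scan a log file, open it and pass the file object directly, for example failed_log_lines(open(path, encoding="utf-8", errors="replace")), preferably inside a with statement.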

Fault Monitoring

Beginning with Oracle Private Cloud Appliance Controller software release 2.4.3, the health checker is a service started by the ovca-daemon on the active management node. Checks can be run manually from the command line, or by using definitions in the scheduler. Depending on the check definition, the Private Cloud Appliance health checker, the Oracle VM health check, and the Private Cloud Appliance pre-upgrade checks can be invoked.

-

pca_healthcheck monitors the health of system hardware components. For details, see Health Monitoring.

-

ovm_monitor monitors the Oracle VM Manager objects and other environment factors.

-

pca_upgrader monitors the system during an upgrade.

Health checking can be integrated with the ZFS Phone Home service to send reports to Oracle on a weekly basis. The Phone Home function must be activated by the customer and requires that the appliance is registered with Oracle Auto Service Request (ASR). No separate installation is required: All functions come with the controller software in Oracle Private Cloud Appliance Controller software release 2.4.3 and later. For configuration information, see Phone Home Service. For more information about ASR, see Oracle Auto Service Request (ASR).

Using Fault Monitoring Checks

The appliance administrator can access current component health status information from the Oracle Linux command line on the active management node by using the Oracle Private Cloud Appliance Fault Monitoring utility. The Fault Monitoring utility is included in the ovca services. In addition, you can schedule checks to run automatically. The Fault Monitoring utility detects the appliance network architecture and runs the sets of health checks that are defined for that system.

Running Fault Monitor Tests Manually

The Fault Monitoring utility enables you to run an individual check, all the checks for a particular monitoring service, or all of the checks that are available.
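For scripted or scheduled use, these three modes map onto the options shown in the pca-faultmonitor help output in the procedure below. The following Python sketch builds the argument list for one mode at a time and runs it with subprocess. The function names are hypothetical. Allowing only one mode per call is a choice made in this sketch, not a documented constraint of the utility.

```python
import subprocess
from typing import List, Optional, Sequence

GROUP_FLAGS = {
    "all": "--run_all_monitors",
    "ovm": "--run_ovm_monitors",
    "pca_healthcheck": "--run_pca_healthcheck_monitors",
    "pca_upgrader": "--run_pca_upgrader_monitors",
}


def faultmonitor_args(group: Optional[str] = None, checks: Sequence[str] = (),
                      print_report: bool = False) -> List[str]:
    """Build a pca-faultmonitor command line for a monitor group or named checks."""
    if (group is None) == (len(checks) == 0):
        raise ValueError("specify exactly one of: a monitor group, or one or more checks")
    args = ["pca-faultmonitor"]
    if group is not None:
        args.append(GROUP_FLAGS[group])  # KeyError for unknown group names
    for check in checks:
        args += ["-m", check]  # each check needs its own -m option
    if print_report:
        args.append("--print_report")
    return args


def run_faultmonitor(args: List[str]) -> subprocess.CompletedProcess:
    """Run on the active management node; stdout and stderr are captured as text."""
    return subprocess.run(args, capture_output=True, text=True, check=False)
```

For example, run_faultmonitor(faultmonitor_args(checks=["event_monitor", "check_storage_space"])) corresponds to the -m example in the procedure.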

-

Using SSH and an account with superuser privileges, log in to the active management node.

```
# ssh root@10.100.1.101
root@10.100.1.101's password:
[root@ovcamn05r1 ~]#
```

-

List the available checks.

```
[root@ovcamn05r1 ~]# pca-faultmonitor --help
usage: pca-faultmonitor [-h] [--list_all_monitors][--list_ovm_monitors]
                        [--list_pca_healthcheck_monitors]
                        [--list_pca_upgrader_monitors]
                        [--run_all_monitors] [--run_ovm_monitors]
                        [--run_pca_healthcheck_monitors]
                        [--run_pca_upgrader_monitors][-m MONITOR_LIST]
                        [--print_report]

optional arguments:
  -h, --help            show this help message and exit
  --list_all_monitors   List all Fault Monitors(Oracle VM, pca_healthcheck and pca_upgrader)
  --list_ovm_monitors   List Oracle VM Fault Monitors
  --list_pca_healthcheck_monitors
                        List pca_healthcheck Fault Monitors
  --list_pca_upgrader_monitors
                        List pca_upgrader Fault Monitors
  --run_all_monitors    Run all Fault Monitors
  --run_ovm_monitors    Run Oracle VM Fault Monitors
  --run_pca_healthcheck_monitors
                        Run pca_healthcheck Fault Monitors
  --run_pca_upgrader_monitors
                        Run pca_upgrader Fault Monitors
  -m MONITOR_LIST       Runs a list of Fault Monitors. Each Fault Monitor must
                        be specified with -m option
  --print_report        Prints the report on console
None
PCA Rack type: hardware_orange
Please refer the log file in /var/log/ovca-faultmonitor.log
Please look at fault report in /nfs/shared_storage/faultmonitor/20200512/
Note: Reports will not be created for success status
```

List all monitors of a specified check.

```
[root@ovcamn05r1 faultmonitor]# pca-faultmonitor --list_pca_upgrader_monitors
PCA Rack type: hardware_orange
Please refer the log file in /var/log/faultmonitor/ovca-faultmonitor.log
Please look at fault report in /nfs/shared_storage/faultmonitor/20200221/
Note: Reports will not be created for success status
Listing all PCA upgrader faultmonitors

check_ib_symbol_errors
verify_inventory_cns
validate_image
check_available_space
check_max_paths_iscsi
check_serverUpdateConfiguration
check_onf_error
verify_password
check_rpm_db
verify_network_config
check_yum_proxy
check_motd
check_yum_repo
connect_mysql
check_osa_disabled
check_xsigo_configs
check_pca_services
check_mysql_desync_passwords
check_storage_space
verify_xms_cards
...
```

-

Run the desired checks.

-

Run all checks.

[root@ovcamn05r1 ~]# pca-faultmonitor --run_all_monitors

-

To run a specific check or list of checks, specify each check as the argument of a separate -m option.

[root@ovcamn05r1 ~]# pca-faultmonitor -m event_monitor -m check_storage_space

-

Run checks for a specific monitor.

[root@ovcamn05r1 ~]# pca-faultmonitor --run_pca_upgrader_monitors

[root@ovcamn05r1 faultmonitor]# pca-faultmonitor --run_ovm_monitors
PCA Rack type: hardware_orange
Please refer the log file in /var/log/faultmonitor/ovca-faultmonitor.log
Please look at fault report in /nfs/shared_storage/faultmonitor/20200220/
Note: Reports will not be created for success status
Beginning OVM Fault monitor checks ...
event_monitor                         1/13
repository_utilization_monitor        2/13
storage_utilization_monitor           3/13
db_size_monitor                       4/13
onf_monitor                           5/13
db_backup_monitor                     6/13
firewall_monitor                      7/13
server_connectivity_monitor           8/13
network_monitor                       9/13
port_flapping_monitor                 10/13
storage_path_flapping_monitor         11/13
repository_mount_monitor              12/13
server_pool_monitor                   13/13
--------------------------------------------------
Fault Monitor Report Summary
--------------------------------------------------
OVM_Event_Monitor                     Success
OVM_Repository_Utilization_Monitor    Success
OVM_Storage_Utilization_Monitor       Success
DB_Size_Monitor                       Success
ONF_Monitor                           Success
DB_Backup_Monitor                     Success
Firewall_Monitor                      Success
Server_Connectivity_Monitor           Success
Network_Monitor                       Warning
Port_Flapping_Monitor                 Success
Storage_Path_Flapping_Monitor         Success
Repository_Mount_Monitor              Warning
Server_Pool_Monitor                   Success
--------------------------------------------------
Overall                               Failure
--------------------------------------------------
PCA Rack type: hardware_orange
Please refer the log file in /var/log/faultmonitor/ovca-faultmonitor.log
Please look at fault report in /nfs/shared_storage/faultmonitor/20200220/
Note: Reports will not be created for success status
Monitor execution completed after 5 minutes


-

If a check fails, review the console output or the log file for additional diagnostic information.

-

Investigate and fix any detected problems. Repeat the check until the system passes all checks.
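If you drive the fault monitor from a script rather than interactively, the two patterns above (one -m option per check, and a summary block listing each monitor's status) can be handled as in the following Python sketch. It assumes Python 3.7 or later, that the summary is written to standard output as it appears on the console in the sample --run_ovm_monitors output, and that its layout matches that sample (a "Fault Monitor Report Summary" heading, one name/status pair per line, dashed separators, and a closing Overall line). build_command(), non_success(), and run_checks() are hypothetical helpers, not part of the appliance software; if the management node lacks a suitable Python, non_success() can still be applied to saved console output on another system.

```python
"""Sketch: run selected fault monitor checks and pick out non-Success results.

Assumptions: Python 3.7+; the summary layout is inferred from the sample
console output. All helper names are hypothetical.
"""
import re
import subprocess

SUMMARY_HEADING = "Fault Monitor Report Summary"
ENTRY = re.compile(r"(\S+)\s+(\S+)")  # e.g. 'Network_Monitor   Warning'


def build_command(checks):
    """Return an argv list with each check passed through its own -m option."""
    argv = ["pca-faultmonitor"]
    for check in checks:
        argv += ["-m", check]
    return argv


def non_success(console_text):
    """Return {monitor: status} for summary entries whose status is not Success."""
    problems = {}
    in_summary = False
    for raw in console_text.splitlines():
        line = raw.strip()
        if line == SUMMARY_HEADING:
            in_summary = True
            continue
        if not in_summary:
            continue  # progress lines such as 'event_monitor 1/13'
        match = ENTRY.fullmatch(line)
        if match is None:
            continue  # dashed separator lines
        name, status = match.groups()
        if name == "Overall":
            break  # the overall verdict closes the summary block
        if status != "Success":
            problems[name] = status
    return problems


def run_checks(checks):
    """Run the given checks (active management node only) and triage the output."""
    result = subprocess.run(build_command(checks), capture_output=True, text=True)
    return non_success(result.stdout)
```

Applied to the sample output above, non_success() would report Network_Monitor and Repository_Mount_Monitor, both with status Warning, which are the entries to investigate before repeating the checks.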

Scheduling Fault Monitor Tests

By default, the run_ovm_monitors, run_pca_healthcheck_monitors, and run_pca_upgrader_monitors checks are scheduled to run weekly. You can change the frequency of these checks or add other checks to the scheduler. You must restart the ovca service to apply any schedule changes.

-

Using SSH and an account with superuser privileges, log in to the active management node.

# ssh root@10.100.1.101
root@10.100.1.101's password:
[root@ovcamn05r1 ~]#

-

Change the schedule properties in the ovca-system.conf file. Use the following scheduling format:

* * * * *  command
- - - - -
| | | | |
| | | | ----- day of week (0-7, Sunday = 0 or 7)
| | | ------- month (1-12)
| | --------- day of month (1-31)
| ----------- hour (0-23)
------------- minute (0-59)

For example:

[root@ovcamn05r1 ~]# cat /var/lib/ovca/ovca-system.conf
[faultmonitor]
report_path: /nfs/shared_storage/faultmonitor/
report_format: json
report_dir_cleanup_days: 10
disabled_check_list: validate_image
enable_phonehome: 0
collect_report: 1

[faultmonitor_scheduler]
run_ovm_monitors: 0 2 * * *
run_pca_healthcheck_monitors: 0 1 * * *
run_pca_upgrader_monitors: 0 0 * * *
repository_utilization_monitor: 0 */2 * * *
check_ovmm_version: */30 * * * *
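To see which entries in the [faultmonitor_scheduler] section will fire at a given time, the five-field format above can be evaluated in a few lines of Python. This is a minimal sketch, assuming only the syntax that appears in the sample file ("*", plain numbers, "*/step", ranges, and comma lists). It also simplifies one cron rule: real cron treats day of month and day of week as alternatives when both are restricted, whereas this sketch requires every field to match. field_matches(), cron_matches(), and due_checks() are hypothetical helpers.

```python
"""Sketch: report which [faultmonitor_scheduler] checks are due at a given minute.

Assumptions: only '*', numbers, '*/step', 'a-b' ranges and comma lists are
supported, and all five fields must match (real cron ORs day of month and
day of week when both are restricted). Helper names are hypothetical.
"""
import configparser
from datetime import datetime

# Allowed (low, high) values: minute, hour, day of month, month, day of week.
BOUNDS = [(0, 59), (0, 23), (1, 31), (1, 12), (0, 7)]
DAY_OF_WEEK = 4  # index of the day-of-week field


def field_matches(field: str, value: int, low: int, high: int) -> bool:
    """Return True if one cron field such as '*/2', '0' or '1-5' matches value."""
    for part in field.split(","):
        step = 1
        if "/" in part:
            part, step_text = part.split("/", 1)
            step = int(step_text)
        if part == "*":
            start, end = low, high
        elif "-" in part:
            start_text, end_text = part.split("-", 1)
            start, end = int(start_text), int(end_text)
        else:
            start = end = int(part)
        if start <= value <= end and (value - start) % step == 0:
            return True
    return False


def cron_matches(expr: str, when: datetime) -> bool:
    """Return True if the five-field cron expression matches `when` (to the minute)."""
    fields = expr.split()
    if len(fields) != 5:
        raise ValueError(f"expected 5 cron fields, got {expr!r}")
    # datetime.weekday() counts Monday as 0; cron counts Sunday as 0 (or 7).
    values = [when.minute, when.hour, when.day, when.month, (when.weekday() + 1) % 7]
    for index, (field, value) in enumerate(zip(fields, values)):
        low, high = BOUNDS[index]
        if field_matches(field, value, low, high):
            continue
        if index == DAY_OF_WEEK and value == 0 and field_matches(field, 7, low, high):
            continue  # '7' is an alias for Sunday
        return False
    return True


def due_checks(conf_text: str, when: datetime) -> list:
    """List the scheduler entries in ovca-system.conf text that are due at `when`."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(conf_text)
    schedule = parser["faultmonitor_scheduler"]
    return [name for name, expr in schedule.items() if cron_matches(expr, when)]
```

For the file contents in the example above (without the cat command line), due_checks(text, datetime(2020, 2, 20, 2, 0)) returns run_ovm_monitors, repository_utilization_monitor, and check_ovmm_version, because each of those expressions matches minute 0 of hour 2.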

Changing Fault Monitoring Options

-

Using SSH and an account with superuser privileges, log in to the active management node.

# ssh root@10.100.1.101
root@10.100.1.101's password:
[root@ovcamn05r1 ~]#

-

Change the appropriate property in the ovca-system.conf file. The report_format options are json, text, or html.

[root@ovcamn05r1 ~]# cat /var/lib/ovca/ovca-system.conf
[faultmonitor]
report_path: /nfs/shared_storage/faultmonitor/
report_format: json
report_dir_cleanup_days: 10
disabled_check_list: validate_image
enable_phonehome: 1
collect_report: 1
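A typo in ovca-system.conf is easy to miss, so you may want to check the [faultmonitor] section after editing it. The following Python sketch is one way to do that. It assumes the allowed values stated or shown in this section (report_format is json, text, or html; enable_phonehome and collect_report appear as 0 or 1; report_dir_cleanup_days is a whole number). validate_faultmonitor() is a hypothetical helper, not an appliance tool, and it does not know about any other properties the service might accept.

```python
"""Sketch: sanity-check the [faultmonitor] section of ovca-system.conf.

Assumptions: allowed values are taken from this guide's examples;
validate_faultmonitor() is a hypothetical helper.
"""
import configparser

REPORT_FORMATS = {"json", "text", "html"}
FLAGS = ("enable_phonehome", "collect_report")


def validate_faultmonitor(conf_text):
    """Return a list of human-readable problems found in [faultmonitor]."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(conf_text)
    if not parser.has_section("faultmonitor"):
        return ["missing [faultmonitor] section"]
    section = parser["faultmonitor"]
    problems = []

    fmt = section.get("report_format", fallback="")
    if fmt not in REPORT_FORMATS:
        problems.append(f"report_format must be one of {sorted(REPORT_FORMATS)}, got {fmt!r}")

    for flag in FLAGS:
        value = section.get(flag)
        if value is not None and value not in ("0", "1"):
            problems.append(f"{flag} should be 0 or 1, got {value!r}")

    days = section.get("report_dir_cleanup_days")
    if days is not None and not days.isdigit():
        problems.append(f"report_dir_cleanup_days should be a whole number, got {days!r}")
    return problems
```

An empty list means none of these checks found a problem; it does not prove that the fault monitor will accept the file.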

Phone Home Service

The Fault Monitoring utility is designed so that the management nodes collect fault data reports and copy those reports to the ZFS Storage Appliance.

If you want Oracle Service to monitor these fault reports, you can configure the Phone Home service to push these reports to Oracle on a weekly basis. Oracle Private Cloud Appliance uses the ZFS Storage Appliance Phone Home service.

Activating the Phone Home Service for Oracle Private Cloud Appliance

Use the following procedure to configure your system to send fault reports to Oracle for automated service response.

-

Make sure Oracle Auto Service Request (ASR) is installed on the Private Cloud Appliance, the appliance is registered with ASR, and ASR is enabled on the appliance. See Oracle Auto Service Request (ASR).

-

Using SSH and an account with superuser privileges, log in to the active management node.

# ssh root@10.100.1.101
root@10.100.1.101's password:
[root@ovcamn05r1 ~]#

-

Enable Phone Home in the Fault Monitoring service.

By default, Phone Home is disabled in Private Cloud Appliance.

Use one of the following methods to enable the service:

-

Set the enable_phonehome property to 1 in the ovca-system.conf file on both management nodes.

[root@ovcamn05r1 ~]# edit /var/lib/ovca/ovca-system.conf
[faultmonitor]
report_path: /nfs/shared_storage/faultmonitor/
report_format: json
report_dir_cleanup_days: 10
disabled_check_list: validate_image
enable_phonehome: 1
collect_report: 1

-

Starting with release 2.4.4.1, you can also set the Phone Home property by using the Private Cloud Appliance CLI set system-property command. Execute the following command on both the active and passive management nodes:

[root@ovcamn05r1 ~]# pca-admin
Welcome to PCA! Release: 2.4.4.1
PCA> set system-property phonehome enable
Status: Success

For more information, see set system-property.


-

Enable Phone Home in the ZFS Storage Appliance browser interface.

-

Log in to the ZFS Storage Appliance browser interface.

-

Go to Configuration > Services > Phone Home.

-

Click the power icon to bring the service online.

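If you prefer to script the ovca-system.conf change on each management node instead of editing the file by hand, you can limit the edit to the single enable_phonehome line so that the rest of the file keeps its layout. Python's configparser.write() rewrites the whole file and discards comments, so the sketch below edits text lines directly. set_conf_value() is a hypothetical helper; it does not copy the file between nodes, restart services, or replace the pca-admin set system-property command available from release 2.4.4.1.

```python
"""Sketch: change one 'key: value' line inside a given [section] of a config file.

Assumption: the key already exists in the section; the rest of the text,
including comments and spacing, is left untouched. Hypothetical helper.
"""
import re


def set_conf_value(text, section, key, value):
    """Return text with `key` in `[section]` set to `value`.

    Raises KeyError if the key is not present in that section.
    """
    lines = text.splitlines(keepends=True)
    current = None
    # Keep the 'key: ' prefix and the original line ending; replace only the value.
    pattern = re.compile(rf"^(\s*{re.escape(key)}\s*[:=]\s*)(.*?)(\r?\n?)$")
    for index, line in enumerate(lines):
        header = re.match(r"^\s*\[([^\]]+)\]", line)
        if header:
            current = header.group(1).strip()
            continue
        if current == section:
            match = pattern.match(line)
            if match:
                lines[index] = f"{match.group(1)}{value}{match.group(3)}"
                return "".join(lines)
    raise KeyError(f"{key!r} not found in [{section}]")
```

Reading /var/lib/ovca/ovca-system.conf, calling set_conf_value(text, "faultmonitor", "enable_phonehome", "1"), and writing the result back would reproduce the manual edit described above; the same step is still needed on the other management node.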

Data Collection for Service and Support

If Oracle Auto Service Request (ASR) is enabled on the Private Cloud Appliance, a service request will be created and sent to Oracle Support automatically for some failures. See Oracle Auto Service Request (ASR).

If an issue is not automatically reported by ASR, open a service request at My Oracle Support to request assistance from Oracle Support. You need an Oracle Premier Support Agreement and your Oracle Customer Support Identifier (CSI). If you do not know your CSI, find the correct Service Center for your country (https://www.oracle.com/support/contact.html), then contact Oracle Services to open a non-technical service request (SR) to get your CSI.

When you open a service request, provide the following information where applicable:

-

Description of the problem, including the situation where the problem occurs, and its impact on your operation.

-

Machine type, operating system release, browser type and version, locale and product release, patches that you have applied, and other software that might be affecting the problem.

-

Details of steps that you have taken to reproduce the problem.

-

Applicable logs and other support data.

This section describes how to collect an archive file with relevant log files and other health information, and how to upload the information to Oracle Support. Oracle Support uses this information to analyze and diagnose the issues. Oracle Support might request that you collect particular data and attach it to an existing service request.

Collecting Support Data

Collecting support data files involves logging in to the command line on components in your Private Cloud Appliance and copying files to a storage location external to the appliance environment, in the data center network. This can only be achieved from a system with access to both the internal appliance management network and the data center network. You can set up a physical or virtual system with those connections, or use the active management node.

The most convenient way to collect the necessary files is to mount the target storage location on the system using NFS, and copy the files using scp with the appropriate login credentials and file path. The command syntax should be similar to the following example:

# mkdir /mnt/mynfsshare
# mount -t nfs storage-host-ip:/path-to-share /mnt/mynfsshare
# scp root@component-ip:/path-to-file /mnt/mynfsshare/pca-support-data/
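When you copy several files to the share, recording checksums makes it possible to verify the copies later. The following Python sketch assumes the share is already mounted (for example at /mnt/mynfsshare, as in the commands above) and that the files to stage are reachable from the local file system. The SHA256SUMS manifest is a convenience of this sketch, not something Oracle Support is documented to require, and stage_files() and sha256_of() are hypothetical helpers.

```python
"""Sketch: copy support files into a mounted share and write a checksum manifest.

Assumptions: the destination share is already mounted; the manifest is an
optional convenience. Helper names are hypothetical.
"""
import hashlib
import shutil
from pathlib import Path


def sha256_of(path, chunk_size=1 << 20):
    """Hash a file in chunks so large archives do not need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def stage_files(files, dest_dir):
    """Copy files into dest_dir, verify each copy, and write SHA256SUMS there."""
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    lines = []
    for src in map(Path, files):
        target = dest / src.name
        shutil.copy2(src, target)  # copy2 also preserves timestamps
        checksum = sha256_of(target)
        if checksum != sha256_of(src):
            raise OSError(f"checksum mismatch after copying {src}")
        lines.append(f"{checksum}  {src.name}\n")
    manifest = dest / "SHA256SUMS"
    manifest.write_text("".join(lines))
    return manifest
```

The manifest uses the same "checksum, two spaces, file name" layout as the sha256sum utility, so running sha256sum -c SHA256SUMS in the destination directory can confirm the files later.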

Using kdump

Oracle Support Services often require a system memory dump. For this purpose, kdump must be installed and configured on the component under investigation. By default, kdump is installed on all Private Cloud Appliance compute nodes and configured to write the system memory dump to the ZFS Storage Appliance at this location:

192.168.4.100:/export/nfs_repository1/.

For more information, see How to Configure 'kdump' for Oracle VM 3.4.x (Doc ID 2142488.1).

Using OSWatcher

Oracle Support Services often recommend that the OSWatcher tool be run for an extended period of time to diagnose issues. OSWatcher is installed by default on all Private Cloud Appliance compute nodes.

For more information, see Oracle Linux: How To Start OSWatcher Black Box (OSWBB) Every System Boot Using RPM oswbb-service (Doc ID 580513.1).

Using pca-diag

Oracle Support Services use the pca-diag tool. This tool is part of the Private Cloud Appliance controller software installed on both management nodes and on all compute nodes.

The pca-diag tool collects troubleshooting information from the Private Cloud Appliance environment. For more information, see Oracle Private Cloud Appliance Diagnostics Tool.

Oracle Support might request specific output from pca-diag to help diagnose and resolve hardware or software issues.
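pca-diag writes its results to a bzip2-compressed tar archive in /tmp, as the console output in the procedure below shows. Before you upload an archive, you may want to confirm which file is the most recent and what it contains. The following Python sketch assumes the file name pattern pcadiag_<hostname>_<ID>_<date>_<time>.tar.bz2 from that output; newest_archive() and list_members() are hypothetical helpers.

```python
"""Sketch: locate the newest pca-diag archive and list what it contains.

Assumption: archives are named pcadiag_<hostname>_<ID>_<date>_<time>.tar.bz2
and stored in /tmp, as in the sample output. Helper names are hypothetical.
"""
import tarfile
from pathlib import Path


def newest_archive(directory="/tmp"):
    """Return the most recently modified pcadiag_*.tar.bz2 file, or None."""
    candidates = list(Path(directory).glob("pcadiag_*.tar.bz2"))
    if not candidates:
        return None
    return max(candidates, key=lambda path: path.stat().st_mtime)


def list_members(archive):
    """Return the member names of a bzip2-compressed tar archive."""
    with tarfile.open(archive, mode="r:bz2") as tar:
        return tar.getnames()
```

Listing the members only reads the archive index; it does not extract anything, so it is safe to run on the management node before copying the file to the share.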

Use the following procedure to collect support data from your system with pca-diag.

-

Log in to the active management node as root.

-

Run pca-diag with the appropriate command-line arguments. For the most complete set of diagnostic data, run the command with both the ilom and vmpinfo arguments.

-

pca-diag ilom

Use the pca-diag ilom command to detect and diagnose potential component hardware and software problems.

[root@ovcamn05r1 ~]# pca-diag ilom
Oracle Private Cloud Appliance diagnostics tool
Gathering Linux information...
Gathering system messages...
Gathering PCA related files...
Gathering OS version information...
Gathering host specific information...
Gathering PCI information...
Gathering SCSI and partition data...
Gathering OS process data...
Gathering network setup information...
Gathering installed packages data...
Gathering disk information...
Gathering ILOM Service Processor data... this may take a while
Generating diagnostics tarball and removing temp directory
==============================================================================
Diagnostics completed. The collected data is available in:
/tmp/pcadiag_ovcamn05r1_<ID>_<date>_<time>.tar.bz2
==============================================================================

-

pca-diag vmpinfo

Use the pca-diag vmpinfo command to detect and diagnose potential problems in the Oracle VM environment.

Note:

To collect diagnostic information for a subset of Oracle VM servers in the environment, run the command with an additional servers parameter, as shown in the following example:

[root@ovcamn05r1 ~]# pca-diag vmpinfo servers='ovcacn07r1,ovcacn08r1'
Oracle Private Cloud Appliance diagnostics tool
Gathering Linux information...
Gathering system messages...
Gathering PCA related files...
Gathering OS version information...
Gathering host specific information...
Gathering PCI information...
Gathering SCSI and partition data...
Gathering OS process data...
Gathering network setup information...
Gathering installed packages data...
Gathering disk information...
Gathering FRU data and console history. Use ilom option for complete ILOM data.

When the vmpinfo3 script is called as a sub-process from pca-diag, the console output continues as follows:

Running vmpinfo tool...
Starting data collection
Gathering files from servers: ovcacn07r1,ovcacn08r1
This process may take some time.
Gathering OVM Model Dump files
Gathering sosreports from servers
The following server(s) will get info collected: [ovcacn07r1,ovcacn08r1]
Gathering sosreport from ovcacn07r1
Gathering sosreport from ovcacn08r1
Data collection complete
Gathering OVM Manager Logs
Clean up metrics
Copying model files
Copying DB backup log files
Invoking manager sosreport