6 Troubleshooting OCNRF

This section provides information to troubleshoot common errors that can be encountered during the installation and upgrade of Oracle Communications Network Repository Function (OCNRF).

Following are the troubleshooting procedures:

Generic Checklist

The following sections provide a generic checklist of troubleshooting tips.

Deployment related tips

- Are the OCNRF deployments, pods, and services created, running, and available?

Execute the following command:

# kubectl -n <namespace> get deployments,pods,svc

Inspect the output and check the following columns:

- AVAILABLE of the deployments

- READY, STATUS, and RESTARTS of the pods

- PORT(S) of the services

- Is the correct image used, and are the correct environment variables set in the deployment?

Execute the following command:

# kubectl -n <namespace> get deployment <deployment-name> -o yaml

Inspect the output and check the environment variables and the image.

Example:

# kubectl -n nrf-svc get deployment ocnrf-nfregistration -o yaml

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  annotations:
    deployment.kubernetes.io/revision: "1"
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"apps/v1","kind":"Deployment","metadata":{"annotations":{},"name":"ocnrf-nfregistration","namespace":"nrf-svc"},"spec":{"replicas":1,"selector":{"matchLabels":{"app":"ocnrf-nfregistration"}},"template":{"metadata":{"labels":{"app":"ocnrf-nfregistration"}},"spec":{"containers":[{"env":[{"name":"MYSQL_HOST","value":"mysql"},{"name":"MYSQL_PORT","value":"3306"},{"name":"MYSQL_DATABASE","value":"nrfdb"},{"name":"NRF_REGISTRATION_ENDPOINT","value":"ocnrf-nfregistration"},{"name":"NRF_SUBSCRIPTION_ENDPOINT","value":"ocnrf-nfsubscription"},{"name":"NF_HEARTBEAT","value":"120"},{"name":"DISC_VALIDITY_PERIOD","value":"3600"}],"image":"dsr-master0:5000/ocnrf-nfregistration:latest","imagePullPolicy":"Always","name":"ocnrf-nfregistration","ports":[{"containerPort":8080,"name":"server"}]}]}}}}
  creationTimestamp: 2018-08-27T15:45:59Z
  generation: 1
  name: ocnrf-nfregistration
  namespace: nrf-svc
  resourceVersion: "2336498"
  selfLink: /apis/extensions/v1beta1/namespaces/nrf-svc/deployments/ocnrf-nfregistration
  uid: 4b82fe89-aa10-11e8-95fd-fa163f20f9e2
spec:
  progressDeadlineSeconds: 600
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app: ocnrf-nfregistration
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
    type: RollingUpdate
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: ocnrf-nfregistration
    spec:
      containers:
      - env:
        - name: MYSQL_HOST
          value: mysql
        - name: MYSQL_PORT
          value: "3306"
        - name: MYSQL_DATABASE
          value: nrfdb
        - name: NRF_REGISTRATION_ENDPOINT
          value: ocnrf-nfregistration
        - name: NRF_SUBSCRIPTION_ENDPOINT
          value: ocnrf-nfsubscription
        - name: NF_HEARTBEAT
          value: "120"
        - name: DISC_VALIDITY_PERIOD
          value: "3600"
        image: dsr-master0:5000/ocnrf-nfregistration:latest
        imagePullPolicy: Always
        name: ocnrf-nfregistration
        ports:
        - containerPort: 8080
          name: server
          protocol: TCP
        resources: {}
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext: {}
      terminationGracePeriodSeconds: 30
status:
  availableReplicas: 1
  conditions:
  - lastTransitionTime: 2018-08-27T15:46:01Z
    lastUpdateTime: 2018-08-27T15:46:01Z
    message: Deployment has minimum availability.
    reason: MinimumReplicasAvailable
    status: "True"
    type: Available
  - lastTransitionTime: 2018-08-27T15:45:59Z
    lastUpdateTime: 2018-08-27T15:46:01Z
    message: ReplicaSet "ocnrf-nfregistration-7898d657d9" has successfully progressed.
    reason: NewReplicaSetAvailable
    status: "True"
    type: Progressing
  observedGeneration: 1
  readyReplicas: 1
  replicas: 1
  updatedReplicas: 1

- Check whether the microservices can access each other through the REST interface.

Execute the following command:

# kubectl -n <namespace> exec <pod name> -- curl <uri>

Example:

# kubectl -n nrf-svc exec $(kubectl -n nrf-svc get pods -o name|cut -d'/' -f2|grep nfs) -- curl http://ocnrf-nfregistration:8080/nnrf-nfm/v1/nf-instances

# kubectl -n nrf-svc exec $(kubectl -n nrf-svc get pods -o name|cut -d'/' -f2|grep nfr) -- curl http://ocnrf-nfsubscription:8080/nnrf-nfm/v1/nf-instances

Note:

These commands are in their simple form and work only if exactly one ocnrf-nfregistration pod and one ocnrf-nfsubscription pod are deployed.
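Two of the checks above, pod health and service-to-service REST access, can also be kept as small shell functions. The following is a minimal sketch and is not part of the OCNRF tooling. The names flag_unhealthy_pods, first_pod, and rest_status_from_pod are hypothetical. Selecting pods by an app=<deployment-name> label is an assumption based on the label that the ocnrf-nfregistration deployment carries. Unlike the grep-based examples, a label selector does not depend on substrings of pod names.

```shell
#!/usr/bin/env bash
# Sketch only. Assumption: OCNRF pods carry an "app=<deployment-name>" label,
# as the ocnrf-nfregistration deployment does; other labels are assumed to match.

# Read the default `kubectl get pods` table on stdin (NAME READY STATUS RESTARTS AGE)
# and print pods that are not fully ready, not Running, or have restarted.
flag_unhealthy_pods() {
  awk 'NR > 1 {
    split($2, r, "/")   # READY column, e.g. "1/1"
    if (r[1] != r[2] || $3 != "Running" || $4 + 0 > 0)
      printf "%s ready=%s status=%s restarts=%s\n", $1, $2, $3, $4 + 0
  }'
}

# Print the name of the first pod in namespace $1 matching label selector $2.
first_pod() {
  kubectl -n "$1" get pods -l "$2" -o jsonpath='{.items[0].metadata.name}'
}

# From inside pod $2 in namespace $1, call URI $3 and print only the HTTP status code.
rest_status_from_pod() {
  kubectl -n "$1" exec "$2" -- curl -s -o /dev/null -w '%{http_code}' "$3"
}

# Usage (requires cluster access):
#   kubectl -n nrf-svc get pods | flag_unhealthy_pods
#   pod=$(first_pod nrf-svc app=ocnrf-nfsubscription)
#   rest_status_from_pod nrf-svc "$pod" http://ocnrf-nfregistration:8080/nnrf-nfm/v1/nf-instances
```

flag_unhealthy_pods also reports pods of completed Jobs, because their STATUS is Completed rather than Running.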

Application related tips

Execute the following command to check the application logs:

# kubectl -n <namespace> logs -f <pod name>

Use '-f' to follow the logs, or pipe the output to 'grep' to search for a specific pattern.
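Pod names may not contain a unique substring, or more than one replica may be running. In either case, logs can be selected by label instead of by name. The following is a minimal sketch. It assumes that pods carry an app=<deployment-name> label, as the ocnrf-nfregistration deployment does. The names ocnrf_logs and filter_logs are hypothetical, and the default error|exception pattern is an arbitrary choice.

```shell
#!/usr/bin/env bash
# Sketch only. Assumption: pods are labelled app=<deployment-name>,
# e.g. app=ocnrf-nfregistration.

# Print all log lines from every pod in namespace $1 matching selector $2.
# With -l, kubectl shows only the last 10 lines per pod by default,
# so --tail=-1 requests all of them.
ocnrf_logs() {
  kubectl -n "$1" logs -l "$2" --tail=-1
}

# Keep only lines matching an extended regex, case-insensitively
# (default pattern: error|exception).
filter_logs() {
  grep -iE -- "${1:-error|exception}"
}

# Usage (requires cluster access):
#   ocnrf_logs nrf-svc app=ocnrf-nfregistration | filter_logs
#   ocnrf_logs nrf-svc app=ocnrf-nfsubscription | filter_logs 'mysql'
```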

Example:

# kubectl -n nrf-svc logs -f $(kubectl -n nrf-svc get pods -o name|cut -d'/' -f2|grep nfr)

# kubectl -n nrf-svc logs -f $(kubectl -n nrf-svc get pods -o name|cut -d'/' -f2|grep nfs)

Note:

These commands are in their simple form and display the logs only if exactly one ocnrf-nfregistration pod and one ocnrf-nfsubscription pod are deployed.

Helm Install Failure

helm install might fail. Following are some of the scenarios:

Incorrect image name in ocnrf-custom-values file

Problem

helm install might fail if an incorrect image name is provided in the ocnrf-custom-values file.

Error Code/Error Message

When kubectl get pods -n <ocnrf_namespace> is executed, the status of the pods might be ImagePullBackOff or ErrImagePull.

Solution

- Edit the ocnrf-custom-values file and provide the release-specific image names and tags. Refer to Customizing OCNRF for details of the OCNRF images.

- Execute the helm install command.

- Execute kubectl get pods -n <ocnrf_namespace> to verify that the status of all the pods is Running.
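To narrow down which image is wrong, the pods that fail to pull can be listed together with the image each pod requested. The following is a minimal sketch. The names image_pull_failures and pod_images are hypothetical, and the parsing assumes the default kubectl get pods columns (NAME READY STATUS RESTARTS AGE).

```shell
#!/usr/bin/env bash
# Sketch only: find pods stuck on image pulls and show which images were requested.

# Read the default `kubectl get pods` table on stdin and print
# "<pod> <status>" for pods in ImagePullBackOff or ErrImagePull.
image_pull_failures() {
  awk 'NR > 1 && ($3 == "ImagePullBackOff" || $3 == "ErrImagePull") { print $1, $3 }'
}

# Print "<pod><TAB><image(s)>" for every pod in namespace $1, so the images can be
# compared with the names and tags set in the ocnrf-custom-values file.
pod_images() {
  kubectl get pods -n "$1" \
    -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.spec.containers[*].image}{"\n"}{end}'
}

# Usage (requires cluster access):
#   kubectl get pods -n <ocnrf_namespace> | image_pull_failures
#   pod_images <ocnrf_namespace>
```

kubectl describe pod <pod name> -n <ocnrf_namespace> also lists the pull error for the failing image in its Events section.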

Docker registry is configured incorrectly

Problem

helm install might fail if the Docker registry is not configured on all primary and secondary nodes.

Error Code/Error Message

When kubectl get pods -n <ocnrf_namespace> is executed, the status of the pods might be ImagePullBackOff or ErrImagePull.

Solution

Configure the Docker registry on all primary and secondary nodes.
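On each node, you can confirm that the registry is reachable and serves the expected image tag by querying the Docker Registry HTTP API v2 endpoint /v2/<repository>/tags/list. The following is a minimal sketch. The names registry_has_tag and check_image_on_node are hypothetical. The sketch assumes that the registry (for example dsr-master0:5000, the registry in the deployment output shown earlier) is served over plain HTTP and requires no authentication. A successful query only shows that the registry is reachable from the node. Running docker pull for the same image on that node also exercises the Docker daemon's own registry configuration.

```shell
#!/usr/bin/env bash
# Sketch only. Assumptions: plain-HTTP registry, no authentication.

# Succeed if the tags/list JSON on stdin contains exactly the tag $1.
registry_has_tag() {
  sed -n 's/.*"tags": *\[\([^]]*\)].*/\1/p' | tr ',' '\n' | tr -d ' ' | grep -qxF -- "\"$1\""
}

# Query <registry>/v2/<repository>/tags/list and check for <tag>.
check_image_on_node() {
  local registry=$1 repo=$2 tag=$3
  curl -sf "http://$registry/v2/$repo/tags/list" | registry_has_tag "$tag"
}

# Usage (run on each primary and secondary node):
#   check_image_on_node dsr-master0:5000 ocnrf-nfregistration latest && echo "tag found"
#   docker pull dsr-master0:5000/ocnrf-nfregistration:latest
```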

Continuous Restart of Pods

Problem

helm install might fail if the MySQL primary and secondary hosts are not configured properly in ocnrf-custom-values.yaml.

Error Code/Error Message

When kubectl get pods -n <ocnrf_namespace> is executed, the restart count of the pods increases continuously.

Solution

The MySQL server(s) may not be configured according to the pre-installation steps. Configure them as described in Configuring MySql database and user.
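A restart loop caused by an incorrect MySQL host is likely to leave the reason in the logs of the container that crashed. The following is a minimal sketch that picks out the affected pods. It uses the hypothetical helper restarting_pods with an arbitrary default threshold of 3. After that, kubectl logs --previous prints the output of the previous container instance.

```shell
#!/usr/bin/env bash
# Sketch only: list pods whose restart count has reached a threshold.

# Read the default `kubectl get pods` table on stdin and print "<pod> <restarts>"
# for pods with RESTARTS >= threshold (default 3, an arbitrary choice).
# Newer kubectl prints e.g. "4 (2m ago)"; field 4 is still the bare count.
restarting_pods() {
  awk -v min="${1:-3}" 'NR > 1 && $4 + 0 >= min + 0 { print $1, $4 + 0 }'
}

# Usage (requires cluster access):
#   kubectl get pods -n <ocnrf_namespace> | restarting_pods 3
#   kubectl logs --previous <pod name> -n <ocnrf_namespace> | grep -i mysql
```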

Custom Value File Parse Failure

Problem

helm install is not able to parse ocnrf-custom-values-x.x.x.yaml.

Error Code/Error Message

Error: failed to parse ocnrf-custom-values-x.x.x.yaml: error converting YAML to JSON: yaml

Symptom

If the above error is received, the ocnrf-custom-values-x.x.x.yaml file was not created properly. The tree structure may not have been followed, or the file may contain tab characters.

Solution

- Download the latest NRF templates zip file from OHC. Refer to Installation Tasks for more information.

- Follow the steps mentioned in the Installation Tasks section.
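YAML does not allow tabs for indentation, so you can locate tab characters in the file before running helm install again. The following is a minimal sketch using the hypothetical helper find_yaml_tabs. It reports tab characters only and does not detect other mistakes in the tree structure.

```shell
#!/usr/bin/env bash
# Sketch only: report tab characters in a YAML values file.

# Print "<line number>:<line>" for every line of file $1 that contains a tab.
# Returns 0 if there are no tabs, 1 if tabs were found, 2 if the file can't be read.
find_yaml_tabs() {
  local tab
  tab=$(printf '\t')
  grep -n -- "$tab" "$1"
  case $? in
    0) return 1 ;;   # tabs found (and printed)
    1) return 0 ;;   # no tabs
    *) return 2 ;;   # grep error, e.g. missing file
  esac
}

# Usage:
#   find_yaml_tabs ocnrf-custom-values-x.x.x.yaml || echo "replace the tabs with spaces"
```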

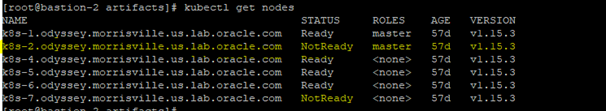

Kubernetes Node Failure

Problem

Kubernetes nodes go down.

Error Code/Error Message

The "NotReady" status is displayed for the Kubernetes node.

Symptom

Figure 6-1 Kubernetes Nodes Output

Solution

- Execute the following command to describe the node:

kubectl describe node <kubernetes_node_name>

Example:

kubectl describe node k8s-1.odyssey.morrisville.us.lab.oracle.com

- Check the node utilization by running the following command:

kubectl top nodes
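On clusters with many nodes, you can pick the NotReady nodes out of kubectl get nodes and describe them in one pass. The following is a minimal sketch with the hypothetical helpers not_ready_nodes and describe_not_ready_nodes. It assumes the default kubectl get nodes columns (NAME STATUS ROLES AGE VERSION) and treats a cordoned Ready,SchedulingDisabled node as ready.

```shell
#!/usr/bin/env bash
# Sketch only: list nodes that are not Ready and describe each of them.

# Read the default `kubectl get nodes` table on stdin and print
# "<node> <status>" for nodes whose STATUS does not start with "Ready".
not_ready_nodes() {
  awk 'NR > 1 && $2 !~ /^Ready/ { print $1, $2 }'
}

# Run `kubectl describe node` for every node reported by not_ready_nodes.
describe_not_ready_nodes() {
  kubectl get nodes | not_ready_nodes | while read -r node _; do
    kubectl describe node "$node"
  done
}

# Usage (requires cluster access):
#   describe_not_ready_nodes
```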

Tiller Pod Failure

Problem

The Tiller pod is not ready to run helm install.

Error Code/Error Message

The error message 'could not find a ready tiller pod' is received.

Symptom

When helm ls is executed, the 'could not find a ready tiller pod' message is received.

Solution

- Delete the pre-installed Tiller service and deployment:

kubectl delete svc tiller-deploy -n kube-system

kubectl delete deploy tiller-deploy -n kube-system

- Install helm and tiller using the following commands:

helm init --client-only

helm plugin install https://github.com/rimusz/helm-tiller

helm tiller install

helm tiller start kube-system
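Before you delete anything, you can check whether a ready in-cluster Tiller pod exists. The following is a minimal sketch using the hypothetical helper tiller_ready. It assumes that Tiller was deployed by helm init, whose pods are labelled app=helm,name=tiller. The check applies only to an in-cluster Tiller. With the helm-tiller plugin used in the steps above, Tiller is expected to run locally instead.

```shell
#!/usr/bin/env bash
# Sketch only. Assumption: in-cluster Tiller pods are labelled app=helm,name=tiller.

# Read a `kubectl get pods` table on stdin; succeed if any pod is Running
# with all containers ready (READY n/n, n > 0). Empty input means no Tiller pod.
tiller_ready() {
  awk 'NR > 1 { split($2, r, "/"); if ($3 == "Running" && r[1] == r[2] && r[1] > 0) found = 1 }
       END { exit !found }'
}

# Usage (requires cluster access):
#   kubectl -n kube-system get pods -l app=helm,name=tiller | tiller_ready \
#     || echo "no ready tiller pod"
```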