7 Policy Alerts

Policy Control Function Alerts

This section includes information about alerts for PCF.

Table 7-1 Common Alerts

| Alert Name | Description | Severity |
|---|---|---|
| PCF_SERVICES_DOWN | Alert if any PCF service is down for 5 minutes in the namespace given in the AlertRules file | Critical |
| IngressErrorRateAbove10PercentPerPod | Alert if the ingress error rate on a pod is above 10% | Critical |

Table 7-2 SM Service Alerts

| Alert Name | Description | Severity |
|---|---|---|
| SMTrafficRateAboveThreshold | Alert if Ingress traffic on SM service reaches 90% of max MPS in 2 minutes | Major |
| SMIngressErrorRateAbove10Percent | Alert if Ingress transaction error rate exceeds 10% of all SM transactions in the last 24 hours | Critical |
| SMEgressErrorRateAbove1Percent | Alert if Egress transaction error rate exceeds 1% of all SM transactions in the last 24 hours | Minor |

Table 7-3 Diameter Connector Alerts

| Alert Name | Description | Severity |
|---|---|---|
| DiamTrafficRateAboveThreshold | Alert if Diameter Connector traffic reaches 90% of max MPS | Major |
| DiamIngressErrorRateAbove10Percent | Alert if error rate exceeds 10% of all Diameter transactions in the last 24 hours | Critical |
| DiamEgressErrorRateAbove1Percent | Alert if Egress transaction error rate exceeds 1% of all Diameter transactions | Minor |

Table 7-4 User Service - UDR Alerts

| Alert Name | Description | Severity |
|---|---|---|
| PcfUdrIngressTrafficRateAboveThreshold | Alert if Ingress traffic from UDR reaches 90% of max MPS | Major |
| PcfUdrEgressErrorRateAbove10Percent | Alert if error rate exceeds 10% of all UDR transactions | Critical |

Table 7-5 User Service - CHF Alerts

| Alert Name | Description | Severity |
|---|---|---|
| PcfChfIngressTrafficRateAboveThreshold | Alert if Ingress traffic from CHF reaches 90% of max MPS | Major |
| PcfChfEgressErrorRateAbove10Percent | Alert if error rate exceeds 10% of all CHF transactions | Critical |

Table 7-6 PolicyDS Service Alerts

| Alert Name | Description | Severity |
|---|---|---|
| PolicyDsIngressTrafficRateAboveThreshold | Alert if Ingress traffic reaches 90% of max MPS | Major |
| PolicyDsIngressErrorRateAbove10Percent | Alert if Ingress error rate exceeds 10% of all PolicyDS transactions | Critical |
| PolicyDsEgressErrorRateAbove1Percent | Alert if Egress error rate exceeds 1% of all PolicyDS transactions | Minor |

PCF Alert Configuration

This section describes the measurement-based alert rule configuration for PCF. The Alert Manager uses the Prometheus measurement values reported by the microservices in the conditions under the alert rules to trigger alerts.
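To make the idea of a condition under an alert rule concrete, the following sketch prints one rule in the standard Prometheus alerting-rule layout. It is an illustration only: the alert name and severity come from Table 7-2 and the expression is the one quoted in this section, but the group name and the annotation text are hypothetical, and the packaged PcfAlertRules.yaml may be organized differently.

```shell
#!/usr/bin/env bash
set -eu

# Print a sample alert rule. The group name and the annotation text are
# hypothetical; the expression is the SM traffic condition with a threshold
# of 9000 (90% of an assumed Max MPS of 10000).
sample_rule() {
  cat <<'EOF'
groups:
- name: pcf-sample-alerts
  rules:
  - alert: SMTrafficRateAboveThreshold
    expr: sum(rate(ocpm_ingress_request_total{servicename_3gpp="npcf-smpolicycontrol"}[2m])) >= 9000
    labels:
      severity: major
    annotations:
      summary: Ingress traffic on SM service reached 90% of max MPS
EOF
}

sample_rule
```

The rule fires when the expression returns a result, that is, when the measured ingress rate is at or above the threshold.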


Note:

- The alertmanager and prometheus tools should run in Oracle CNE namespace, for example, occne-infra.

- Alert file is packaged with PCF Custom Templates. The PCF Templates.zip file can be downloaded from OHC. Unzip the PCF Templates.zip file to get PcfAlertRules.yaml file.

- Edit the value of the following parameters in the PcfAlertRules.yaml file before following the procedure for configuring the alerts:

  - [ 90% of Max MPS]

    For example, if the value of Max MPS is 10000, set [ 90% of Max MPS] to 9000 in the yaml file as follows:

    sum(rate(ocpm_ingress_request_total{servicename_3gpp="npcf-smpolicycontrol"}[2m])) >= 9000

  - kubernetes_namespace

    For example, if PCF is deployed at more than one site, set kubernetes_namespace in the yaml file as follows:

    expr: up{kubernetes_namespace=~"pcf|ocpcf"} == 0

    If PCF is deployed at only one site, set kubernetes_namespace in the yaml file as follows:

    expr: up{kubernetes_namespace="pcf"} == 0
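The two parameter values above can be derived mechanically. The following is a minimal sketch, assuming a POSIX-style shell with integer arithmetic; the helper names mps_threshold and namespace_matcher are hypothetical and are not part of the PCF templates.

```shell
#!/usr/bin/env bash
set -eu

# Return 90% of the given Max MPS using integer arithmetic.
mps_threshold() {
  local max_mps=$1
  echo $(( max_mps * 90 / 100 ))
}

# Build the kubernetes_namespace matcher: an exact match (=) for a single
# namespace, a regex match (=~) with alternation for several namespaces.
namespace_matcher() {
  if [ "$#" -eq 1 ]; then
    printf 'kubernetes_namespace="%s"\n' "$1"
  else
    local IFS='|'
    printf 'kubernetes_namespace=~"%s"\n' "$*"
  fi
}

# Print the expressions as they would appear in the yaml file.
echo "sum(rate(ocpm_ingress_request_total{servicename_3gpp=\"npcf-smpolicycontrol\"}[2m])) >= $(mps_threshold 10000)"
echo "expr: up{$(namespace_matcher pcf ocpcf)} == 0"
echo "expr: up{$(namespace_matcher pcf)} == 0"
```

The printed lines are meant to be compared against, or pasted into, the corresponding expressions in PcfAlertRules.yaml; the script does not modify any file.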

- Find the config map to configure alerts in

prometheus server by executing the following command:

where, <Namespace> is the prometheus server namespace used in helm install command.kubectl get configmap -n <Namespace>For Example, assuming prometheus server is under occne-infra namespace, execute the following command to find the config map:

0utput: occne-prometheus-server 4 46dkubectl get configmaps -n occne-infra | grep prometheus-server - Take Backup of current config map

of prometheus server by executing the following command:

where, <Name> is the prometheus config map name used in helm install command.kubectl get configmaps <Name> -o yaml -n <Namespace> > /tmp/t_mapConfig.yaml - Check if alertspcf is present in the t_mapConfig.yaml file by

executing the following command:

cat /tmp/t_mapConfig.yaml | grep alertspcf - If alertspcf is present, delete the

alertspcf entry from the t_mapConfig.yaml file, by

executing the following command:

sed -i '/etc\/config\/alertspcf/d' /tmp/t_mapConfig.yamlNote:

This command should be executed only once. - If alertspcf is not present, add the alertspcf entry in

the t_mapConfig.yaml file by executing the following command:

sed -i '/rule_files:/a\ \- /etc/config/alertspcf' /tmp/t_mapConfig.yamlNote:

This command should be executed only once. - Reload the config map with the

modifed file by executing the following command:

kubectl replace configmap <Name> -f /tmp/t_mapConfig.yaml - Add PcfAlertRules.yaml file

into prometheus config map by executing the following command :

where, <PATH> is the location of the PcfAlertRules.yaml file.kubectl patch configmap <Name> -n <Namespace> --type merge --patch "$(cat <PATH>/PcfAlertRules.yaml)" - Restart prometheus-server pod.

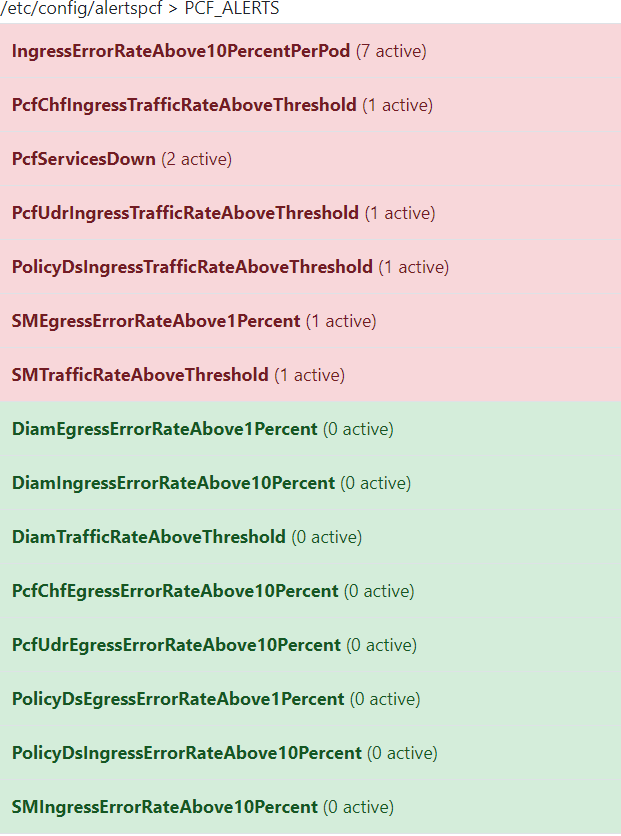

- Verify the alerts in prometheus GUI. Below screenshot displays the PCF

alerts:
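The check, delete, and add steps for the alertspcf entry can be rehearsed on a scratch file before touching the real backup. The following is a minimal sketch, assuming GNU sed (for -i and the one-line a\ form); the sample rule_files fragment and the helper name refresh_entry are made up for illustration, and the sketch follows one reading of the steps: remove any existing entry, then add it once.

```shell
#!/usr/bin/env bash
set -eu

# Scratch stand-in for /tmp/t_mapConfig.yaml; the content is a made-up fragment.
cfg=$(mktemp)
cat > "$cfg" <<'EOF'
    rule_files:
    - /etc/config/rules
    - /etc/config/alerts
EOF

# Remove any existing alertspcf entry, then add it back once,
# using the same sed commands as the procedure.
refresh_entry() {
  sed -i '/etc\/config\/alertspcf/d' "$1"
  sed -i '/rule_files:/a\ \- /etc/config/alertspcf' "$1"
}

refresh_entry "$cfg"
refresh_entry "$cfg"   # a repeated run must not leave a duplicate entry

# Count the lines that mention the entry and show the resulting file.
count=$(grep -c '/etc/config/alertspcf' "$cfg")
echo "alertspcf entries: $count"
cat "$cfg"
rm -f "$cfg"
```

Running the delete before the add is what keeps the entry from being duplicated; running the add command alone more than once would append the entry each time, which is presumably why the procedure says to execute it only once. Check the indentation of the added line in the printed file against the other rule_files entries before reloading a real config map.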

Cloud Native Policy and Charging Rule Function Alerts

This section includes information about alerts for CNPCRF.

| Alarm Name | Alarm Description | Severity | App/Metrics |
|---|---|---|---|
| PRE_UNREACHABLE | PRE is unreachable | CRITICAL | Metrics |
| PDS_DOWN | PDS is down | CRITICAL | Metrics |
| PDS_UP | PDS is up | INFO | Metrics |
| DB_UNREACHABLE | Connectivity to DB lost | CRITICAL | Metrics |
| DB_REACHABLE | Connectivity to DB available | INFO | Metrics |
| SH_UNREACHABLE | Remote Sh connection is unreachable | CRITICAL | App |
| SY_UNREACHABLE | Remote Sy connection is unreachable | CRITICAL | App |
| SOAP_CONNECTOR_DOWN | SOAP Connector is down | CRITICAL | Metrics |
| SOAP_CONNECTOR_UP | SOAP Connector is up | INFO | Metrics |
| CONFIG_SERVER_DOWN | Config server is down | CRITICAL | Metrics |
| CONFIG_SERVER_UP | Config server is up | INFO | Metrics |
| DIAM_GATEWAY_DOWN | Diameter Gateway is down | CRITICAL | Metrics |
| DIAM_GATEWAY_UP | Diameter Gateway is up | INFO | Metrics |
| LDAP_GATEWAY_DOWN | LDAP Gateway is down | CRITICAL | Metrics |
| LDAP_GATEWAY_UP | LDAP Gateway is up | INFO | Metrics |
| LDAP_DATASOURCE_UNREACHABLE | LDAP Datasource is unreachable | CRITICAL | App |
| CM_SERVICE_DOWN | CM Service is down | CRITICAL | Metrics |
| CM_SERVICE_UP | CM Service is up | INFO | Metrics |
| CCA_SEND_FAIL_COUNT_EXCEEDS_THRESHOLD | Rate of CCA Send Failure has exceeded threshold limit (1000 times) in 1 min | CRITICAL | Metrics |
| CCAI_SEND_FAIL_COUNT_EXCEEDS_THRESHOLD | Rate of CCA-I Send Failure has exceeded threshold limit (1000 times) in 1 min | CRITICAL | Metrics |
| CCAT_SEND_FAIL_COUNT_EXCEEDS_THRESHOLD | Rate of CCA-T Send Failure has exceeded threshold limit (1000 times) in 1 min | CRITICAL | Metrics |
| CCAU_SEND_FAIL_COUNT_EXCEEDS_THRESHOLD | Rate of CCA-U Send Failure has exceeded threshold limit (1000 times) in 1 min | CRITICAL | Metrics |
| ASA_SEND_FAIL_COUNT_EXCEEDS_THRESHOLD | Rate of ASA Send Failure has exceeded threshold limit (1000 times) in 1 min | CRITICAL | Metrics |
| RAA_SEND_FAIL_COUNT_EXCEEDS_THRESHOLD | Rate of RAA Send Failure has exceeded threshold limit (1000 times) in 1 min | CRITICAL | Metrics |
| STA_SEND_FAIL_COUNT_EXCEEDS_THRESHOLD | Rate of STA Send Failure has exceeded threshold limit (1000 times) in 1 min | CRITICAL | Metrics |
| CCA_RECV_FAIL_COUNT_EXCEEDS_THRESHOLD | Rate of CCA Receive Failure has exceeded threshold limit (1000 times) in 1 min | CRITICAL | Metrics |
| CCAI_RECV_FAIL_COUNT_EXCEEDS_THRESHOLD | Rate of CCA-I Receive Failure has exceeded threshold limit (1000 times) in 1 min | CRITICAL | Metrics |
| CCAT_RECV_FAIL_COUNT_EXCEEDS_THRESHOLD | Rate of CCA-T Receive Failure has exceeded threshold limit (1000 times) in 1 min | CRITICAL | Metrics |
| CCAU_RECV_FAIL_COUNT_EXCEEDS_THRESHOLD | Rate of CCA-U Receive Failure has exceeded threshold limit (1000 times) in 1 min | CRITICAL | Metrics |
| ASA_RECV_FAIL_COUNT_EXCEEDS_THRESHOLD | Rate of ASA Receive Failure has exceeded threshold limit (1000 times) in 1 min | CRITICAL | Metrics |
| RAA_RECV_FAIL_COUNT_EXCEEDS_THRESHOLD | Rate of RAA Receive Failure has exceeded threshold limit (1000 times) in 1 min | CRITICAL | Metrics |
| STA_RECV_FAIL_COUNT_EXCEEDS_THRESHOLD | Rate of STA Receive Failure has exceeded threshold limit (1000 times) in 1 min | CRITICAL | Metrics |
| CCR_TIMEOUT_COUNT_EXCEEDS_THRESHOLD | Rate of CCR Timeout count has exceeded threshold limit (1000 times) in 1 min | CRITICAL | Metrics |
| CCRI_TIMEOUT_COUNT_EXCEEDS_THRESHOLD | Rate of CCR-I Timeout count has exceeded threshold limit (1000 times) in 1 min | CRITICAL | Metrics |
| CCRT_TIMEOUT_COUNT_EXCEEDS_THRESHOLD | Rate of CCR-T Timeout count has exceeded threshold limit (1000 times) in 1 min | CRITICAL | Metrics |
| CCRU_TIMEOUT_COUNT_EXCEEDS_THRESHOLD | Rate of CCR-U Timeout count has exceeded threshold limit (1000 times) in 1 min | CRITICAL | Metrics |
| ASR_TIMEOUT_COUNT_EXCEEDS_THRESHOLD | Rate of ASR Timeout count has exceeded threshold limit (1000 times) in 1 min | CRITICAL | Metrics |
| RAR_TIMEOUT_COUNT_EXCEEDS_THRESHOLD | Rate of RAR Timeout count has exceeded threshold limit (1000 times) in 1 min | CRITICAL | Metrics |
| STR_TIMEOUT_COUNT_EXCEEDS_THRESHOLD | Rate of STR Timeout count has exceeded threshold limit (1000 times) in 1 min | CRITICAL | Metrics |

PCRF Alert Configuration

This section describes the measurement-based alert rule configuration for CNPCRF. The Alert Manager uses the Prometheus measurement values reported by the microservices in the conditions under the alert rules to trigger alerts.


Note:

- The alert manager and prometheus tools should run in the default namespace.

- The PCRF Templates.zip file can be downloaded from OHC. Unzip the package after downloading to get cnpcrfalertrule.yaml and mib files.

- Find the config map to configure alerts in the prometheus server by executing the following command:

  kubectl get configmap -n <Namespace>

  where <Namespace> is the namespace used in the helm install command.

- Take a backup of the current config map of the prometheus server by executing the following command:

  kubectl get configmaps <Name> -o yaml -n <Namespace> > /tmp/t_mapConfig.yaml

  where <Name> is the release name used in the helm install command.

- Delete the entry alertscnpcrf under rule_files, if present, in the backed-up prometheus server config map by executing the following command:

  sed -i '/etc\/config\/alertscnpcrf/d' /tmp/t_mapConfig.yaml

  Note: This command should be executed only once.

- Add the entry alertscnpcrf under rule_files in the prometheus server config map by executing the following command:

  sed -i '/rule_files:/a\ \- /etc/config/alertscnpcrf' /tmp/t_mapConfig.yaml

  Note: This command should be executed only once.

- Reload the modified config map by executing the following command:

  kubectl replace configmap <Name> -f /tmp/t_mapConfig.yaml

  Note: This step is not required for AlertRules.

- Add cnpcrfAlertrules in the config map by executing the following command:

  kubectl patch configmap <Name>-server -n <Namespace> --type merge --patch "$(cat ~/cnpcrfAlertrules.yaml)"
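Because the commands in this procedure share the same placeholders, it can help to print them with the values filled in and review them before running anything. The following is a minimal dry-run sketch; the function name pcrf_alert_commands and the example values prometheus and default are hypothetical, and the script only prints text without calling kubectl or sed.

```shell
#!/usr/bin/env bash
set -eu

# Print the PCRF alert configuration commands with the release name and
# namespace substituted. Nothing is executed; the output is for review only.
pcrf_alert_commands() {
  local name=$1 namespace=$2 backup=/tmp/t_mapConfig.yaml
  cat <<EOF
kubectl get configmaps $name -o yaml -n $namespace > $backup
sed -i '/etc\/config\/alertscnpcrf/d' $backup
sed -i '/rule_files:/a\ \- /etc/config/alertscnpcrf' $backup
kubectl replace configmap $name -f $backup
kubectl patch configmap $name-server -n $namespace --type merge --patch "\$(cat ~/cnpcrfAlertrules.yaml)"
EOF
}

# Example values only: a release called "prometheus" in the "default" namespace.
pcrf_alert_commands prometheus default
```

Printing first makes spacing mistakes easy to spot, for example a missing space before --type or a missing > before the backup file path.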