3 NEF Features

Note:

- The performance and capacity of the NEF system may vary based on the call model, feature or interface configuration, and underlying CNE and hardware environment.

- The following guidelines apply to the validation of mandatory and optional parameters in NEF, as per 3GPP TS 29.500, section 5.2.7.4:

  - Every request message is checked for the presence of all mandatory parameters. If a mandatory parameter is missing, the request is rejected.

  - NEF validates only those parameters (mandatory or optional) coming from an external entity, such as AF, that impact NEF processing or the call flow (for example, parameters that drive a decision, a database lookup, or the creation of a subscription).

  - For all remaining parameters, NEF acts as a pass-through and does not validate them. If these parameters affect processing by subsequent NFs in the call flow, NEF expects those NFs to return an error, which NEF propagates to AF.

  - NEF does not validate messages coming from trusted 5GC entities (such as internal NFs) and relies on those entities to send correct messages and parameters. If a 5GC entity sends an incorrect parameter, the resulting lookup at NEF fails (for example, because of an invalid correlation ID) and an appropriate error response is generated.
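The validation guidelines above can be illustrated with a minimal Python sketch. It is not NEF's implementation: the `validate_af_request` helper, the parameter names, and the idea of expressing processing-relevant checks as a dictionary of validator callables are assumptions made for illustration only.

```python
from typing import Any, Callable, Dict, Optional, Set

# Hypothetical validator type: returns an error string, or None if the value is acceptable.
Validator = Callable[[Any], Optional[str]]


def validate_af_request(
    body: Dict[str, Any],
    mandatory: Set[str],
    processing_validators: Dict[str, Validator],
) -> Optional[str]:
    """Return an error description, or None if the request may proceed.

    Sketch of the guidelines: reject on any missing mandatory parameter,
    validate only parameters that affect NEF processing, and pass every
    other parameter through unchecked.
    """
    missing = sorted(p for p in mandatory if p not in body)
    if missing:
        return f"missing mandatory parameter(s): {', '.join(missing)}"
    for name, check in processing_validators.items():
        if name in body:  # optional processing parameters are checked only when present
            error = check(body[name])
            if error:
                return f"invalid {name}: {error}"
    # Everything else is passed through; downstream NFs report their own errors.
    return None
```

For example, with `{"notificationDestination"}` as the mandatory set and a single check on `externalId`, an unknown vendor attribute passes through untouched, while a malformed `externalId` is rejected before any downstream call is made.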

3.1 Support for MSISDN-Less MO-SMS

The Support for MSISDN-Less-MO-SMS feature enables NEF to deliver the MSISDN-less MO-SMS notification message from Short Message Service - Service Center (SMSSC) to Application Function (AF).

With this feature, user equipment (UE) can send messages to AF without using a Mobile Station International Subscriber Directory Number (MSISDN), through the T4 interface between SMS-SC and NEF. NEF uses the Nnef_MSISDN-Less-MO-SMS API to send UE messages to AF.

This feature helps to:

- protect subscribers’ data.

- expand the capabilities of IoT devices.

- enhance the security and efficiency of messaging across various applications.

Note:

- AF addresses are pre-configured in NEF.

- The Supported-Features attribute in the N33 interface is set to 0, which implies that no features are defined towards AF.

Call Flows

This section describes the call flow of the MSISDN-Less-MO-SMS feature.

Notification Flow

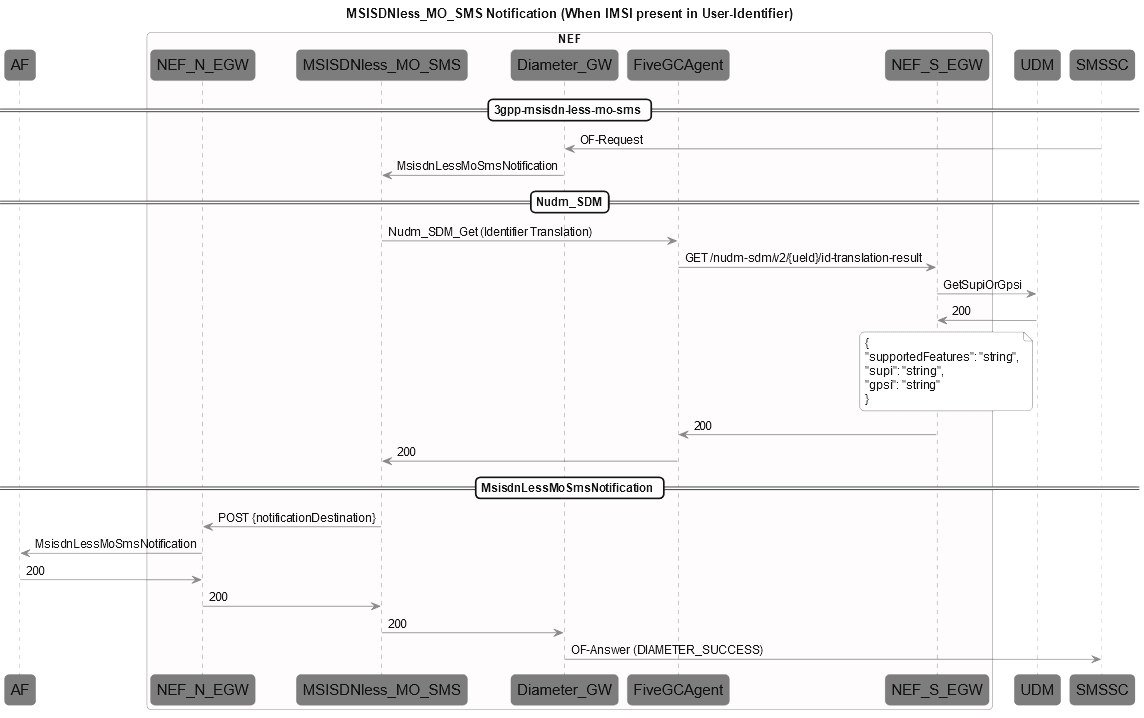

Figure 3-1 MSISDN-Less MO-SMS Notification Call Flow

- SMSSC sends the OF-Request message to NEF.

Sample Notification Message Request

SUCCESS: Diameter Message: OFR
Version: 1
Msg Length: 296
Cmd Flags: REQ,PXY
Cmd Code: 8388645
App-Id: 16777313
Hop-By-Hop-Id: 2196095456
End-To-End-Id: 1868559833
Origin-Host (264,M,l=17) = T6-client
Origin-Realm (296,M,l=22) = mme.oracle.com
Session-Id (263,M,l=17) = sesion123
User-Identifier (3102,VM,v=10415,l=40) =
  External-Identifier (3111,VM,v=10415,l=27) = 1234@oracle.com
SC-Address (3300,VM,v=10415,l=22) = 8967452301
SM-RP-UI (3301,VM,v=10415,l=82) = 710D0B911326880736F40000A91506050412121213F7FBDD454E87CDE1B0DB357EB701
Auth-Session-State (277,M,l=12) = NO_STATE_MAINTAINED (1)
Vendor-Specific-Application-Id (260,M,l=32) =
  Vendor-Id (266,M,l=12) = 10415
  Auth-Application-Id (258,M,l=12) = 16777313
Destination-Realm (283,M,l=18) = oracle.com

Table 3-1 Parameters for Request Message

| Attribute Name | Data Type | Description |
|---|---|---|
| Origin-Host | DiameterIdentity | The Origin-Host AVP identifies the endpoint that originated the Diameter message. Relay agents must not modify this AVP. The value of the Origin-Host AVP is guaranteed to be unique within a single host. |
| Origin-Realm | DiameterIdentity | This AVP contains the Realm of the originator of any Diameter message and must be present in all messages. This AVP should be placed as close to the Diameter header as possible. |
| Session-Id | UTF8String | The Session-Id AVP identifies a specific session. All messages pertaining to a specific session must include only one Session-Id AVP, and the same value must be used throughout the life of the session. Each Session-Id is eternally unique. When present, the Session-Id should appear immediately following the Diameter header. |
| User-Identifier | Grouped | The User-Identifier AVP contains the different identifiers used by the UE. |
| User-Identifier.External-Identifier | UTF8String | This information element contains the External-Identifier. |
| User-Identifier.User-Name | UTF8String | This information element contains the IMSI. |
| User-Identifier.MSISDN | OctetString | This information element contains the MSISDN. |
| SC-Address | OctetString | The SC-Address AVP contains the E.164 number of the SMS-SC, encoded as a TBCD-string. This AVP shall not include leading indicators for the nature of address and the numbering plan; it shall contain only the TBCD-encoded digits of the address. |
| SM-RP-UI | OctetString | The SM-RP-UI AVP contains a short message transfer protocol data unit and represents the user data field carried by the short message service relay sub-layer protocol. Its maximum length is 200 octets. Note: For further details on the SMS-SUBMIT message, refer to 3GPP TS 23.040. The shortcode and applicationPort are extracted from this field. Note: The destination address type 101 (alphanumeric, coded according to the 3GPP TS 23.038 [9] GSM 7-bit default alphabet) is not supported. |
| Auth-Session-State | Enumerated | The Auth-Session-State AVP specifies whether state is maintained for a particular session. The client may include this AVP in requests as a hint to the server, but the value in the server's answer message is binding. |

- NEF uses the Diameter-GW microservice to translate the message and sends it to the MSISDNless_MO_SMS microservice.

- The MSISDNless_MO_SMS microservice matches the short code in the notification message against the short codes pre-configured in the NEF microservices to obtain the AF URL. If the short code does not match, NEF returns an error response with SM-Delivery-Failure-Cause set to UNKNOWN_SERVICE_CENTRE and Experimental-Result set to SM_DELIVERY_FAILUR_CAUSE_EXPERIMENTAL_RESULT_CODE = 5555.

- If the external ID is present in the notification message, NEF sends the message to AF.

- If the external ID is absent, NEF sends the Nudm_SDM_Get (Identifier Translation) request to UDM to obtain the external ID. If UDM sends an error response, Experimental-Result is set to SM_DELIVERY_FAILUR_CAUSE_EXPERIMENTAL_RESULT_CODE = 5555 and SM-Delivery-Failure-Cause is set to USER_NOT_SC-USER. If UDM responds with a success code, NEF sends the message to AF.

- If AF responds with a success code, NEF responds with an OF-Answer message to SMSSC.
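The SM-RP-UI value in the sample request carries an SMS-SUBMIT TPDU as defined in 3GPP TS 23.040. The following Python sketch shows one way to extract a short code and an application port from it. It assumes, without confirmation from this guide, that the short code is the TP-Destination-Address and that the application port is the destination port of an application port addressing element (IEI 0x04 or 0x05) in the user data header; the helper names are hypothetical and NEF's actual extraction logic may differ. Alphanumeric destination addresses (type of number 101) are rejected, in line with the note in Table 3-1.

```python
from typing import Optional, Tuple

# Semi-octet (BCD) digit alphabet used for SMS addresses; 0xF is the filler nibble.
BCD_DIGITS = "0123456789*#abc"


def decode_semi_octets(data: bytes, num_digits: int) -> str:
    """Decode swapped-nibble address digits, dropping the 0xF filler."""
    digits = []
    for octet in data:
        for nibble in (octet & 0x0F, octet >> 4):  # the low nibble holds the first digit
            if nibble != 0x0F:
                digits.append(BCD_DIGITS[nibble])
    return "".join(digits[:num_digits])


def extract_short_code_and_port(tpdu: bytes) -> Tuple[str, Optional[int]]:
    """Return (short code, application port) from an SMS-SUBMIT TPDU."""
    first = tpdu[0]
    if (first & 0x03) != 0x01:  # TP-MTI must indicate SMS-SUBMIT
        raise ValueError("not an SMS-SUBMIT TPDU")
    vpf = (first >> 3) & 0x03  # TP-Validity-Period-Format
    udhi = bool(first & 0x40)  # TP-User-Data-Header-Indicator

    pos = 2  # skip the first octet and TP-Message-Reference
    da_digits = tpdu[pos]
    type_of_address = tpdu[pos + 1]
    if ((type_of_address >> 4) & 0x07) == 0b101:
        raise ValueError("alphanumeric destination address is not supported")
    da_octets = (da_digits + 1) // 2
    short_code = decode_semi_octets(tpdu[pos + 2 : pos + 2 + da_octets], da_digits)
    pos += 2 + da_octets

    pos += 2  # TP-Protocol-Identifier and TP-Data-Coding-Scheme
    pos += {0: 0, 1: 7, 2: 1, 3: 7}[vpf]  # TP-VP: absent, enhanced, relative, absolute
    pos += 1  # TP-User-Data-Length

    port = None
    if udhi:
        end = pos + 1 + tpdu[pos]  # the UDHL octet gives the header length
        pos += 1
        while pos < end:
            iei, iel = tpdu[pos], tpdu[pos + 1]
            value = tpdu[pos + 2 : pos + 2 + iel]
            if iei == 0x05 and iel == 4:  # 16-bit application port addressing
                port = int.from_bytes(value[:2], "big")  # destination port
            elif iei == 0x04 and iel == 2:  # 8-bit application port addressing
                port = value[0]
            pos += 2 + iel
    return short_code, port
```

Applied to the sample SM-RP-UI value, this sketch yields the short code `31628870634` and the application port `4626` (0x1212).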

Sample Notification Message Response

SUCCESS: Diameter Message: OFA
Version: 1
Msg Length: 236
Cmd Flags: PXY
Cmd Code: 8388645
App-Id: 16777313
Hop-By-Hop-Id: 2196095456
End-To-End-Id: 1868559833
Session-Id (263,M,l=17) = sesion123
Result-Code (268,M,l=12) = DIAMETER_SUCCESS (2001)
Origin-Host (264,M,l=26) = ocnef-diam-gateway
Origin-Realm (296,M,l=18) = oracle.com
Auth-Session-State (277,M,l=12) = NO_STATE_MAINTAINED (1)
SM-RP-UI (3301,VM,v=10415,l=82) = 710D0B911326880736F40000A91506050412121213F7FBDD454E87CDE1B0DB357EB701
External-Identifier (3111,VM,v=10415,l=27) = 1234@oracle.com
Auth-Session-State (277,M,l=12) = NO_STATE_MAINTAINED (1)

Table 3-2 Parameters for Response Message

| Attribute Name | Data Type | Description |
|---|---|---|
| Origin-Host | DiameterIdentity | The Origin-Host AVP identifies the endpoint that originated the Diameter message. Relay agents must not modify this AVP. The value of the Origin-Host AVP is guaranteed to be unique within a single host. |
| Origin-Realm | DiameterIdentity | This AVP contains the Realm of the originator of any Diameter message and must be present in all messages. This AVP should be placed as close to the Diameter header as possible. |
| Session-Id | UTF8String | The Session-Id AVP identifies a specific session. All messages pertaining to a specific session must include only one Session-Id AVP, and the same value must be used throughout the life of the session. Each Session-Id is eternally unique. When present, the Session-Id should appear immediately following the Diameter header. |
| Result-Code | Unsigned32 | The Result-Code AVP indicates whether a particular request was completed successfully or whether an error occurred. |
| Experimental-Result | Grouped | The Experimental-Result AVP indicates whether a particular vendor-specific request was completed successfully or whether an error occurred. Supported Experimental-Result: SM_DELIVERY_FAILUR_CAUSE_EXPERIMENTAL_RESULT_CODE = 5555 |
| SM-Delivery-Failure-Cause | Grouped | The SM-Delivery-Failure-Cause AVP contains information about the cause of the failure of an SM delivery, with optional diagnostic information. Supported SM-Delivery-Failure-Cause values: UNKNOWN_SERVICE_CENTRE when the short code does not match; USER_NOT_SC-USER when getGpsi from UDM returns an error. |
| Auth-Session-State | Enumerated | The Auth-Session-State AVP specifies whether state is maintained for a particular session. The client may include this AVP in requests as a hint to the server, but the value in the server's answer message is binding. |
| SM-RP-UI | OctetString | The SM-RP-UI AVP contains a short message transfer protocol data unit and represents the user data field carried by the short message service relay sub-layer protocol. Its maximum length is 200 octets. |
| External-Identifier | UTF8String | The External-Identifier AVP shall contain an external identifier of the UE. |
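The short-code matching and external ID resolution described in the call flow, together with the error values in Table 3-2, amount to a small decision that can be sketched in Python. This is an illustration under assumptions: the `decide` function, the in-memory short-code map, the use of the IMSI (User-Name) as the UDM lookup key, and the `udm_get_external_id` callable (standing in for the Nudm_SDM_Get identifier translation) are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional

# Values taken from Table 3-2; the constant names are illustrative.
EXPERIMENTAL_RESULT_SM_DELIVERY_FAILURE = 5555
CAUSE_UNKNOWN_SHORT_CODE = "UNKNOWN_SERVICE_CENTRE"
CAUSE_NO_EXTERNAL_ID = "USER_NOT_SC-USER"


@dataclass
class MoSmsDecision:
    af_url: Optional[str] = None               # set when the SMS is forwarded to AF
    external_id: Optional[str] = None
    experimental_result: Optional[int] = None  # set only on failure
    failure_cause: Optional[str] = None        # SM-Delivery-Failure-Cause value


def decide(
    short_code: str,
    external_id: Optional[str],
    imsi: Optional[str],
    af_by_short_code: Dict[str, str],
    udm_get_external_id: Callable[[str], Optional[str]],
) -> MoSmsDecision:
    """Decide whether to forward an MO-SMS to AF or answer SMS-SC with an error."""
    af_url = af_by_short_code.get(short_code)
    if af_url is None:
        return MoSmsDecision(experimental_result=EXPERIMENTAL_RESULT_SM_DELIVERY_FAILURE,
                             failure_cause=CAUSE_UNKNOWN_SHORT_CODE)
    if external_id is None:
        # Identifier translation through UDM; None models an error response.
        external_id = udm_get_external_id(imsi) if imsi else None
        if external_id is None:
            return MoSmsDecision(experimental_result=EXPERIMENTAL_RESULT_SM_DELIVERY_FAILURE,
                                 failure_cause=CAUSE_NO_EXTERNAL_ID)
    return MoSmsDecision(af_url=af_url, external_id=external_id)
```

A successful decision only means the message is forwarded; per the call flow, NEF answers SMS-SC with an OF-Answer carrying DIAMETER_SUCCESS (2001) after AF responds with a success code.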

Managing Support for MSISDN-Less-MO-SMS for NEF

Enable

You can enable the MSISDN-Less-MO-SMS service by setting the msisdnless_mo_sms parameter to true. For more information, see Oracle Communications Cloud Native Core, Network Exposure Function Installation, Upgrade, and Fault Recovery Guide.

Configure

You can configure this feature using Helm parameters. You can create the Model A, B, and D communication profiles using the custom-values.yaml file.

For information about configuring the msisdnlessmosms microservice parameters, see Oracle Communications Cloud Native Core, Network Exposure Function Installation, Upgrade, and Fault Recovery Guide.

For information about configuring this feature through CNC Console, see Oracle Communications Cloud Native Core, Network Exposure Function User Guide.

Observe

NEF provides metrics related to the MSISDN-Less-MO-SMS service for observing this feature. For more information about the metrics, see Diameter Gateway Metrics and MSISDNless MO SMS Metrics.

3.2 Converged Charging Support for NEF

This feature provides NEF with charging capabilities for its northbound API invocations. It allows NEF to perform converged charging by interacting with the Charging Function (CHF) through the Nchf service-based interface.

Note:

For further information, refer to 3GPP TS 32.290 and TS 32.291.

For northbound API access, NEF performs converged charging in collaboration with CHF.

Note:

- NEF uses the Nchf_ConvergedCharging API only for post event charging (PEC) scenarios.

- CHF selection in NEF can be configured to rely on NRF.

Call Flows

For API invocation, after the AS Session with Quality of Service (QoS) call flow is created, NEF interacts with CHF to create a Call Detail Record (CDR) of the API invocation. NEF processes the QoS payload to create a CDR and then passes the CDR message, with the necessary headers, to 5G Core (5GC). 5GC forwards the request to CHF, which allows NEF to create a CDR on CHF for all successful and failed call flows.

For notifications, after an AS Session with QoS notification is sent, NEF interacts with CHF to create a CDR of the API notification. NEF processes the QoS payload to create a CDR in 5GC, and the actual notification message in 5GC is passed asynchronously to CHF. 5GC creates the CDR message and forwards the request to CHF. NEF creates CDRs for all successfully processed as well as failed notifications. The apiContent parameter holds the JSON sent to AF for the notification.

Note:

- This feature can be enabled through the qualityofservice.converged.pe.charging parameter. For further information, see Oracle Communications Cloud Native Core, Network Exposure Function Installation and Upgrade Guide.

- The call flows are valid only if the feature is enabled.

- Currently, this feature is implemented only for the QoS service API (3gpp-as-session-with-qos). For the QoS service, the PRODUCER_NF is PCF for 5G and PCRF for 4G fallback. For further information on the QoS call flow, refer to the Support for AF Session with QoS section in Oracle Communications Cloud Native Core, Network Exposure Function User Guide.

API Invocation Call Flow in Converged Charging

Note:

- For an invocation, the message reaching the QoS service is the confirmation for CDR generation.

- This call flow is not applicable for GET method.

In the following scenario, once the actual request from AF is complete, NEF initiates a one-time charging request towards CHF using the Nchf_ConvergedCharging_Create API. This creates a converged charging request at CHF, which can then be used for billing and other charging-related functions.
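The one-time charging request can be pictured as a post event charging (PEC) charging data request. The Python sketch below builds such a body. The attribute names are modeled on the NEF charging information in 3GPP TS 32.291 and are assumptions to verify against the specification release in use, and `build_pec_charging_request` is a hypothetical helper. The same shape covers notifications, where the API content carries the JSON sent to AF.

```python
import json
from datetime import datetime, timezone
from typing import Any, Dict, Optional


def build_pec_charging_request(
    nf_instance_id: str,
    api_name: str,
    direction: str,
    api_result_code: int,
    sequence_number: int,
    api_content: Optional[Dict[str, Any]] = None,
    external_id: Optional[str] = None,
) -> Dict[str, Any]:
    """Build a hypothetical one-time (PEC) charging data request for one API call."""
    if direction not in ("INVOCATION", "NOTIFICATION"):
        raise ValueError("direction must be INVOCATION or NOTIFICATION")
    nef_info: Dict[str, Any] = {
        "aPIDirection": direction,
        "aPIName": api_name,
        "aPIResultCode": api_result_code,  # recorded for successful and failed calls alike
    }
    if external_id is not None:
        nef_info["externalIndividualIdentifier"] = external_id
    if api_content is not None:
        nef_info["aPIContent"] = json.dumps(api_content)  # for example, the JSON sent to AF
    return {
        "nfConsumerIdentification": {"nFName": nf_instance_id, "nodeFunctionality": "NEF"},
        "invocationTimeStamp": datetime.now(timezone.utc).isoformat(),
        "invocationSequenceNumber": sequence_number,
        "oneTimeEvent": True,       # no charging session is kept at CHF
        "oneTimeEventType": "PEC",  # post event charging
        "nEFChargingInformation": nef_info,
    }
```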

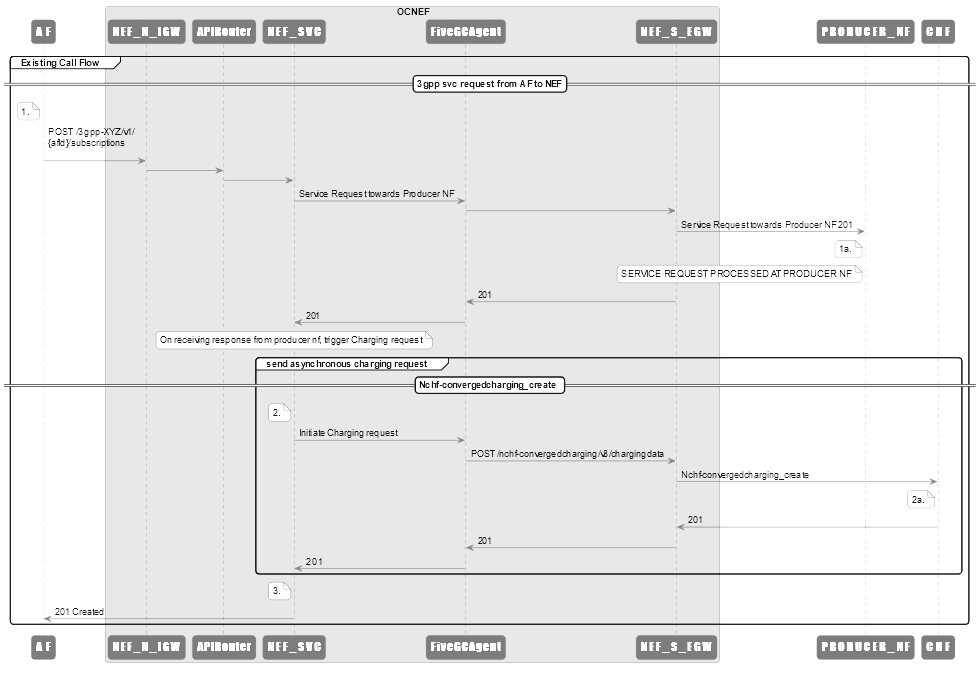

Figure 3-2 API Invocation Call Flow

- AF initiates a POST 3gpp-as-session-with-qos service request to the northbound NEF Ingress Gateway.

Note:

Currently, only the 3gpp-as-session-with-qos service API is supported.

- After completing the create request, NEF processes the payload to create a CDR (asynchronously).

- NEF sends the request to APIRouter.

- APIRouter validates the request and sends it to NEF service.

- NEF service then passes the CDR message to FiveGCAgent with the necessary headers.

Note:

NEF creates a CDR message on CHF for all successful and failed call flows.

- The request is then sent to the southbound NEF Egress Gateway.

- The message then reaches the PRODUCER_NF, where the request is processed.

- Post processing, the PRODUCER_NF returns the response to NEF service through NEF Egress Gateway and FiveGCAgent.

- Once NEF service receives the response, it initiates a charging request and an asynchronous converged post event charging request to CHF.

- The asynchronous call reaches FiveGCAgent, where it is translated to a charging request and sent to the southbound NEF Egress Gateway.

- Southbound NEF Egress Gateway sends the request to CHF.

- CHF processes the request and sends the response to NEF Service.
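A key property of this flow is that charging does not delay the response to AF and is triggered for both successful and failed producer responses. The asyncio sketch below illustrates that ordering under stated assumptions: `call_producer` and `send_charging` are hypothetical stand-ins for the FiveGCAgent paths towards the PRODUCER_NF and CHF, the event fields are illustrative, and GET requests are skipped because this flow does not apply to them.

```python
import asyncio
from typing import Any, Awaitable, Callable, Dict, List, Tuple

Response = Tuple[int, Dict[str, Any]]


async def handle_invocation(
    method: str,
    request: Dict[str, Any],
    call_producer: Callable[[Dict[str, Any]], Awaitable[Response]],
    send_charging: Callable[[Dict[str, Any]], Awaitable[None]],
    pending: List["asyncio.Task[None]"],
) -> Response:
    """Forward an AF request, then charge for it without delaying the answer."""
    status, body = await call_producer(request)
    if method != "GET":  # the invocation charging flow is not applicable to GET
        event = {"direction": "INVOCATION", "resultCode": status}
        # Fire-and-forget: keep a reference so the task is not garbage-collected.
        pending.append(asyncio.create_task(send_charging(event)))
    return status, body
```

Keeping the task references and awaiting them on shutdown is one way to avoid silently dropping charging events in such a design.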

API Notification Call Flow in Converged Charging

Any request sent from PRODUCER_NF is a notification request. This call flow scenario shows how NEF interacts with CHF to create a CDR of an API notification after the AS Session with QoS notification call flow completes.

Note:

In case of notification, the message reaching AF is the confirmation for CDR generation.

In the following scenario, once PRODUCER_NF sends a request towards NEF, NEF notifies AF of the request. After the notification request is received, NEF initiates a one-time converged charging request towards CHF using the Nchf_ConvergedCharging_Create API. This creates a converged charging request at CHF, which can then be used for billing and other charging-related functions.

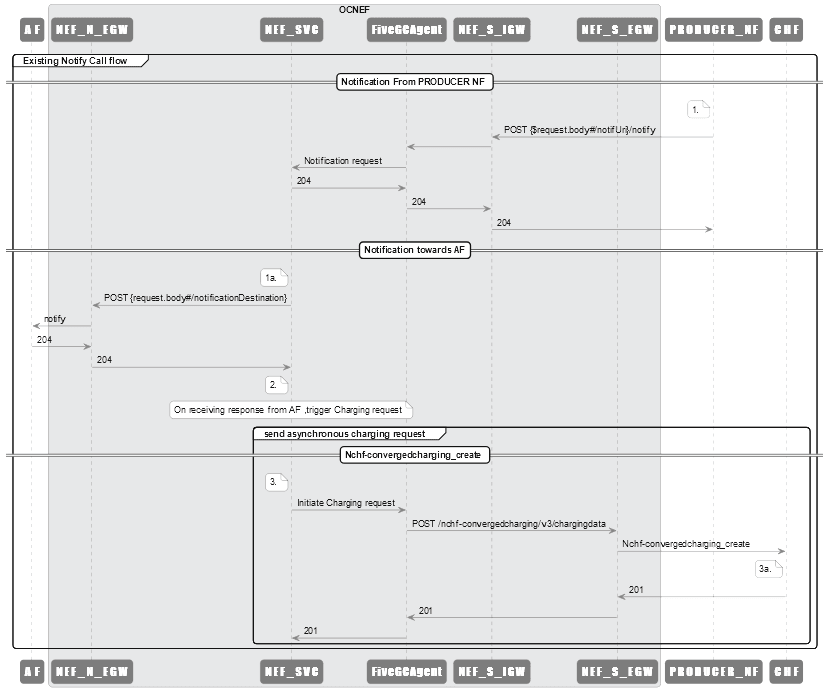

Figure 3-3 API Notification Call Flow

The call flow above shows how an API notification request is processed in the Converged Charging scenario in NEF:

- PRODUCER_NF initiates a POST notification request to southbound NEF Ingress Gateway.

- The southbound NEF Ingress Gateway sends this request to FiveGCAgent.

- FiveGCAgent sends this request to NEF Service.

- NEF Service sends this request to AF and also sends a response to PRODUCER_NF.

- After completing the notification request, NEF processes the payload to create a CDR in FiveGCAgent. The actual notification message resides with FiveGCAgent and must be passed to CHF asynchronously.

- 5GC then creates the CDR message and forwards the same request to CHF.

- NEF Service initiates an asynchronous converged post event charging request and charging request to CHF.

- The asynchronous call reaches FiveGCAgent, which is then translated to a charging request and sent to southbound NEF Egress Gateway.

- Southbound NEF Egress Gateway sends the request to CHF.

- CHF processes the request and sends the response to NEF Service.

- NEF creates CDRs for all successfully processed as well as failed notifications. The apiContent parameter holds the JSON sent to AF for the notification.

Managing Converged Charging Support for NEF

Enable

You can enable the Converged Charging service by setting the qualityofservice.converged.pe.charging parameter to true. For more information, see Oracle Communications Cloud Native Core, Network Exposure Function Installation, Upgrade, and Fault Recovery Guide.

Configure

You can configure this feature using Helm parameters. You can create the Model A, B, and D communication profiles using the custom-values.yaml file.

For information about configuring the targetNfCommunicationProfileMapping.CHF, fivegcagent.chfBaseUrl, communicationProfiles, and egress-gateway microservice parameters, see Oracle Communications Cloud Native Core, Network Exposure Function Installation, Upgrade, and Fault Recovery Guide.

Observe

NEF provides metrics for observing this feature. For more information about the metrics, see QoS Service Metrics.

3.3 Support for Device Trigger

The Device Trigger feature enables an Application Function (AF) to notify a particular User Equipment (UE) to perform application-specific tasks such as initiating communication with AFs, by sending a device trigger request through 5G core (5GC).

Device triggering is required when an AF does not have the IP address of the UE or when the UE is not reachable. Device trigger requests contain the information required for an AF to send a message to the appropriate UE and to route the message to the required application. This information is known as the trigger payload.
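As an illustration of what such a request carries, the Python sketch below assembles a device triggering request body with the fields listed in the call flow (UE identifier, application port, validity period, priority, and payload). The JSON attribute names (`externalId`, `msisdn`, `applicationPortId`, `validityPeriod`, `priority`, `triggerPayload`, `notificationDestination`) are assumed to follow the DeviceTriggering data type of 3GPP TS 29.122 and should be checked against the Network Exposure Function REST Specification Guide; the `build_device_trigger` helper itself is hypothetical.

```python
import base64
from typing import Any, Dict, Optional


def build_device_trigger(
    payload: bytes,
    application_port: int,
    validity_seconds: int,
    notification_destination: str,
    external_id: Optional[str] = None,
    msisdn: Optional[str] = None,
    high_priority: bool = False,
) -> Dict[str, Any]:
    """Assemble a hypothetical device triggering request body sent by AF."""
    # The target UE is identified by exactly one of External ID or MSISDN.
    if (external_id is None) == (msisdn is None):
        raise ValueError("provide exactly one of external_id or msisdn")
    if not 0 <= application_port <= 0xFFFF:
        raise ValueError("application port must fit in 16 bits")
    if validity_seconds <= 0:
        raise ValueError("validity period must be positive")
    body: Dict[str, Any] = {
        "applicationPortId": application_port,
        "validityPeriod": validity_seconds,  # how long the network keeps the payload
        "priority": "PRIORITY" if high_priority else "NO_PRIORITY",
        "triggerPayload": base64.b64encode(payload).decode("ascii"),  # bytes as base64
        "notificationDestination": notification_destination,  # where delivery reports go
    }
    if external_id is not None:
        body["externalId"] = external_id
    else:
        body["msisdn"] = msisdn
    return body
```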

When an AF sends the device trigger message to an application at the UE side, the PDU Session establishment request is triggered. This is done by using the payload in the message, which contains information about the application at the UE side. After receiving this request, the application on the UE side initiates the PDU Session Establishment procedure.

Note:

This feature is required when AF is unable to establish communication with the UE.

Device Trigger Call flow

The following call flow scenario represents how an AF sends a Device Trigger (DT) request to NEF to notify a particular UE.

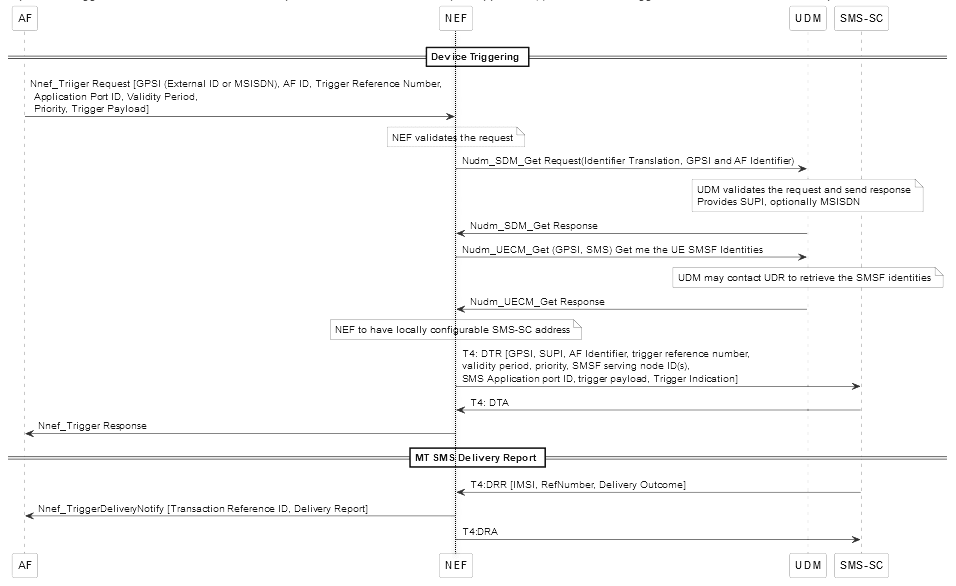

Figure 3-4 Device Trigger Call flow

- AF sends an Nnef_Trigger request to NEF. The request includes either the External ID or the MSISDN of the UE to which the message must be sent, and additional information such as the Application Port ID, the Validity Period of the payload in the network, Priority, and the message content.

- NEF validates this request by verifying if the AF is allowed to use the requested service. Then, it interacts with UDM to get the corresponding internal IDs and SMSF identities.

- After receiving the required information from UDM, NEF sends a Diameter request, which contains AF information, to SMS-SC.

- When SMS-SC acknowledges the Diameter request with a DTA response to NEF, NEF sends the response to AF and marks the transaction as complete.

Note:

If the UE is unavailable when the device trigger is delivered, SMS-SC retains the data until the UE becomes available and then sends the payload.

- After the delivery attempt, SMS-SC sends a DRR notification (indicating either success or failure of message delivery) to NEF.

- After NEF notifies AF, it sends a DRA acknowledgment to SMS-SC.

The reference number in the DRR identifies the Device Trigger request to which the delivery report belongs.
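A minimal Python sketch of that correlation follows. It assumes an in-memory map keyed by the reference number; the `TriggerTracker` class, its method names, and the SUCCESS/FAILURE result strings are hypothetical. A real deployment would persist this state so that a report arriving much later (for example, after a long validity period) can still be matched.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class PendingTrigger:
    transaction_id: str            # AF-facing device triggering transaction
    notification_destination: str  # AF callback URI for the delivery report


@dataclass
class TriggerTracker:
    """Hypothetical in-memory correlation of T4 reports to AF transactions."""

    pending: Dict[int, PendingTrigger] = field(default_factory=dict)

    def on_dta(self, reference_number: int, trigger: PendingTrigger) -> None:
        # SMS-SC accepted the trigger; the delivery report (DRR) arrives later.
        self.pending[reference_number] = trigger

    def on_drr(self, reference_number: int, delivered: bool) -> Optional[Dict[str, str]]:
        # Look up and forget the matching trigger; unknown references yield None.
        trigger = self.pending.pop(reference_number, None)
        if trigger is None:
            return None
        return {
            "destination": trigger.notification_destination,
            "transaction": trigger.transaction_id,
            "result": "SUCCESS" if delivered else "FAILURE",
        }
```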

Note:

There can be failures because the validity period has expired or the UE is unreachable.

Managing the Device Trigger Feature

Enable

You can enable the Device Trigger feature by setting the global.enableFeature.deviceTrigger parameter to true. For more information, see Oracle Communications Cloud Native Core, Network Exposure Function Installation, Upgrade, and Fault Recovery Guide.

Note:

The global.enableFeature.convergedScefNef parameter must be enabled in the Helm configuration for this feature.

Configure

You can configure this feature using Helm parameters.

For information about configuring the global.enableFeature.convergedScefNef, global.enableFeature.deviceTrigger, global.networkConfiguration.scsShortMessageEntity, and ocnef-diam-gateway.peerNodes parameters, see Oracle Communications Cloud Native Core, Network Exposure Function Installation, Upgrade, and Fault Recovery Guide.

Observe

NEF provides Device Trigger related metrics and alerts for observing this feature. For more information about the metrics, see NEF Metrics. For more information about the alerts, see Alerts.

3.4 API Invoker Onboarding and Offboarding

The API Invoker Onboarding and Offboarding feature enables Oracle Communications Cloud Native Core Network Exposure Function (NEF) to manage the exposure of the service APIs to different applications.

As per the 3GPP standard, API Invoker onboarding is the process of establishing trust between an API Invoker and an API Provider (NEF) to initiate a communication path. This process enables the API invoker application (an external third-party application or an internal AF) to onboard onto the NEF system. Once the onboarding is complete, the invoker application can discover NEF and send service requests. The NEF services are provided based on the discovery group assigned to the invoker. For information related to discovery groups, see Oracle Communications Cloud Native Core, Network Exposure Function REST Specification Guide.

- API Invoker Onboarding: A one time process facilitated by CAPIF to enroll API invoker as a recognized application. The invoker negotiates the security methods and obtains authorizations from CAPIF for accessing the NEF services based on the discovery group assigned to it. For more information about discovery group, see Oracle Communications Cloud Native Core, Network Exposure Function REST Specification Guide.

- API Invoker Offboarding: The process to remove the API invoker as a recognized application of CAPIF. The API invoker triggers the offboarding procedure.

Example

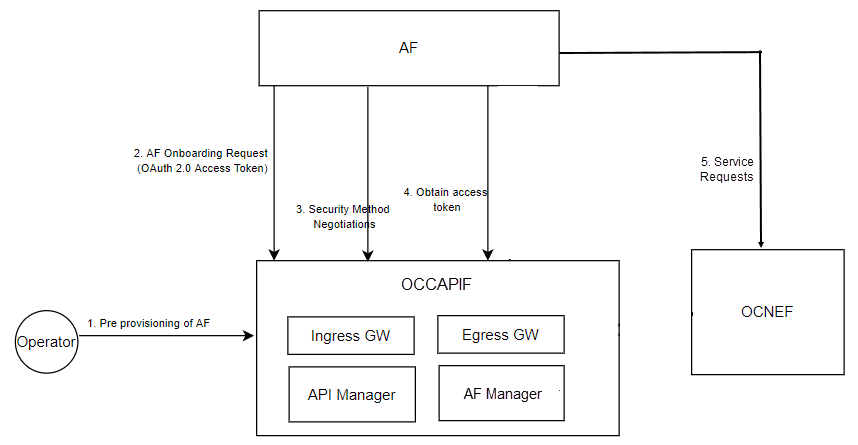

Figure 3-5 Example of Communication Establishment between AF and NEF

- Pre-provisioning of AF: The operator sends a pre-provisioning request to CAPIF. The request contains the key details of the AF along with the discovery group ID to be assigned to the API invoker. For more information about discovery groups, see Oracle Communications Cloud Native Core, Network Exposure Function REST Specification Guide.

As a response to this request, AF receives an access token to be included in the onboarding request (onboardingSecret) and the onboarding URI. For more information, see Oracle Communications Cloud Native Core, Network Exposure Function REST Specification Guide.

- Onboarding Request: AF sends the onboardedInvokers POST request to CAPIF for onboarding. For more information, see Oracle Communications Cloud Native Core, Network Exposure Function REST Specification Guide.

- Security Method Negotiation: NEF supports OAuth for service API invocation. Therefore, AF sends the capif-security PUT request to CAPIF. In response, the API returns OAuth as the security method. For more information, see Oracle Communications Cloud Native Core, Network Exposure Function REST Specification Guide.

- Obtain Authorization: The AF sends a capif-security POST request to CAPIF.

The API returns the authorization code. For more information, see Oracle Communications Cloud Native Core, Network Exposure Function REST Specification Guide.

Note:

The Authorization code must be included in each service request sent from AF to NEF through CAPIF.

For more information about the onboarding procedure, see the "Security procedures for API invoker onboarding" section in 3GPP Technical Specification 33.122, Release 16.

- After the successful onboarding, AF starts sending service requests to NEF.
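The onboarding steps above map to a short sequence of HTTP requests. The Python sketch below only builds those requests (it sends nothing) to make their order and methods explicit. The resource paths are assumed from the CAPIF APIs in 3GPP TS 29.222; sending the onboarding secret as a bearer token, using the API invoker ID as the security ID, and treating the value returned by the Obtain Authorization step as an OAuth access token are assumptions; and the helper names are hypothetical. Check the Network Exposure Function REST Specification Guide for the supported paths and payloads.

```python
from typing import Dict, NamedTuple
from urllib.parse import urlencode


class HttpRequest(NamedTuple):
    method: str
    path: str
    headers: Dict[str, str]
    body: str


def onboarding_request(onboarding_secret: str, enrolment_json: str) -> HttpRequest:
    """Onboarding Request: carries the token received during pre-provisioning."""
    return HttpRequest(
        "POST",
        "/api-invoker-management/v1/onboardedInvokers",
        {"Authorization": f"Bearer {onboarding_secret}", "Content-Type": "application/json"},
        enrolment_json,
    )


def security_negotiation_request(api_invoker_id: str, security_json: str) -> HttpRequest:
    """Security Method Negotiation: CAPIF is expected to answer with OAuth."""
    return HttpRequest(
        "PUT",
        f"/capif-security/v1/trustedInvokers/{api_invoker_id}",
        {"Content-Type": "application/json"},
        security_json,
    )


def token_request(api_invoker_id: str) -> HttpRequest:
    """Obtain Authorization: a client-credentials style form request."""
    form = urlencode({"grant_type": "client_credentials", "client_id": api_invoker_id})
    return HttpRequest(
        "POST",
        f"/capif-security/v1/securities/{api_invoker_id}/token",
        {"Content-Type": "application/x-www-form-urlencoded"},
        form,
    )


def service_request_headers(access_token: str) -> Dict[str, str]:
    """Every subsequent service request from AF carries the obtained token."""
    return {"Authorization": f"Bearer {access_token}"}
```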

Managing API Invoker Onboarding and Offboarding

API Invoker Onboarding and Offboarding is a core functionality of NEF. It remains enabled by default.

Observe

CAPIF provides the metrics for observing API Invoker Onboarding and Offboarding feature. For more information about the metrics, see CAPIF Metrics.

3.5 Support for Model D Communication

As per 3GPP TS 23.501, Network Functions (NFs) and services use different communication models to interact with each other. As a consumer NF, NEF communicates with different producer NFs, such as GMLC and UDM, to handle the service requests coming from Application Functions (AFs). NEF supports the direct communication models (Model A and Model B) and Model D of the indirect communication method.

Support for Model D

In this model, NEF interacts with producer NFs through Service Communication Proxy (SCP).

The Model D Support functionality enables NEF to configure the necessary discovery and selection parameters required to find a suitable producer NF for handling a service request through SCP. SCP uses the discovery or selection parameters available in the request message to route the request to a suitable producer instance.

Model D Headers

Table 3-3 Supported Model D Headers

| Header Name | Description |
|---|---|
| 3gpp-Sbi-Target-apiRoot | This header enables routing through SCP. It contains the apiRoot of the target URI in a request sent to SCP when communicating indirectly with the HTTP server. SCP also uses this header to indicate the apiRoot of the target URI if a new HTTP server is selected or reselected and no Location header is included in the response. Note: NEF does not include the 3gpp-Sbi-Target-apiRoot header in initial requests because it does not discover a producer NF. In subsequent requests, the header value is set to the apiRoot received earlier in the Location header of the service responses from the NF Service Producer. |
| 3gpp-Sbi-Binding | This header communicates binding information from an HTTP server for storage and subsequent use by an HTTP client. It contains a comma-delimited list of Binding Indications. The absence of the scope parameter in a Binding Indication in a service request is interpreted as "callback". |
| 3gpp-Sbi-Routing-Binding | This header is used in a service request to send the binding information (from the 3gpp-Sbi-Binding header) to direct the service request to an HTTP server that has the targeted NF service resource context. It enables alternate routing of subsequent requests at SCP. |
| 3gpp-Sbi-Producer-Id | This header is used in a service response from SCP to NEF. As a consumer NF, NEF uses this header to identify the producer NF. |
| 3gpp-Sbi-Target-Nf-Id | The identity of the target NF that is being discovered. |
| 3gpp-Sbi-Discovery-target-nf-type | The NF type of the target NF that is being discovered. |
| 3gpp-Sbi-Discovery-requester-nf-type | The NF type of the requester NF. |
| 3gpp-Sbi-Discovery-service-names | This header contains the names of the services to be discovered. For example: when the target NF type is UDM, the …; when the target NF type is GMLC, the … |
| 3gpp-Sbi-Discovery-supported-features | List of features required to be supported by the target NF. This IE may be present only if the service-names attribute is present and contains a single service name; otherwise, NRF ignores it. |
| 3gpp-Sbi-Access-Scope | This header indicates the access scope of the service request for NF service access authorization. |
| 3gpp-Sbi-Discovery-preferred-locality | This header indicates the preferred target NF location, for example, a geographic location or data center. |
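As an illustration, the Python sketch below assembles the discovery headers from Table 3-3 for a request that NEF sends towards SCP. It is a sketch under assumptions: the `discovery_headers` helper is hypothetical, multiple service names are assumed to be comma-separated, and the rule for 3gpp-Sbi-Discovery-supported-features mirrors the table (sent only with exactly one service name).

```python
from typing import Dict, List, Optional


def discovery_headers(
    target_nf_type: str,
    service_names: List[str],
    supported_features: Optional[str] = None,
    preferred_locality: Optional[str] = None,
) -> Dict[str, str]:
    """Build delegated-discovery headers for a request routed through SCP."""
    headers = {
        "3gpp-Sbi-Discovery-target-nf-type": target_nf_type,
        "3gpp-Sbi-Discovery-requester-nf-type": "NEF",
    }
    if service_names:
        headers["3gpp-Sbi-Discovery-service-names"] = ",".join(service_names)
    # supported-features is meaningful only together with a single service name.
    if supported_features and len(service_names) == 1:
        headers["3gpp-Sbi-Discovery-supported-features"] = supported_features
    if preferred_locality:
        headers["3gpp-Sbi-Discovery-preferred-locality"] = preferred_locality
    return headers
```

The 3gpp-Sbi-Target-apiRoot header is deliberately not produced here, consistent with the note that NEF omits it in initial requests.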

Example

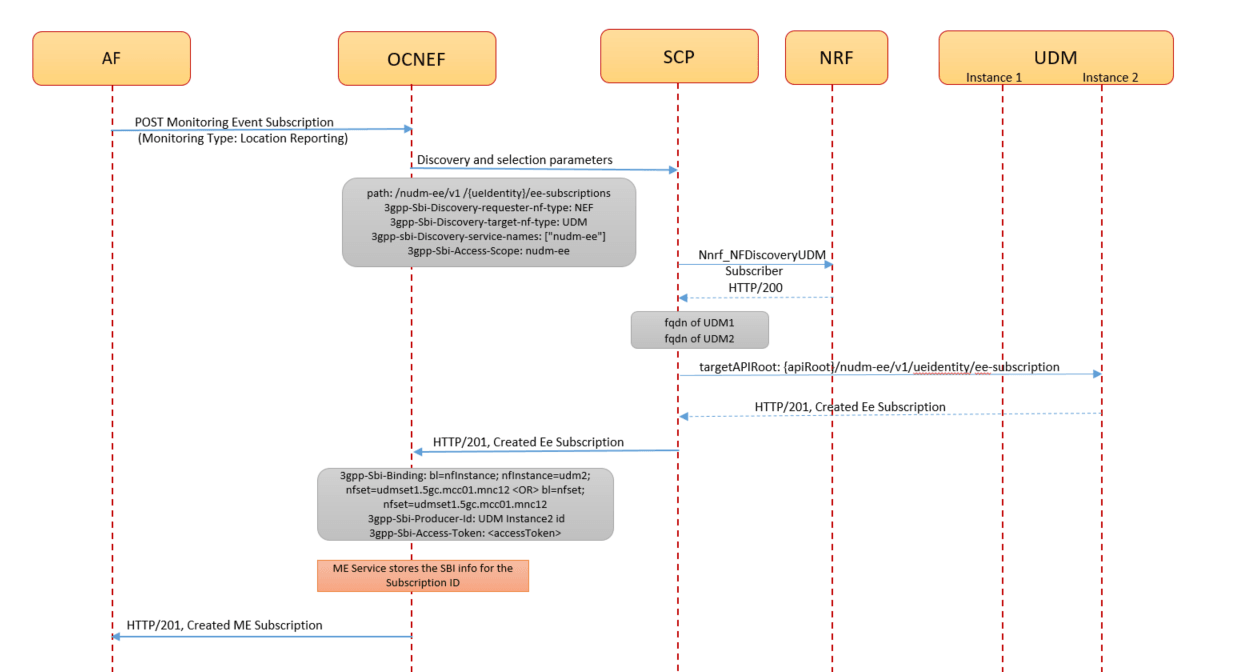

The following call flow describes an example of indirect communication between NEF and UDM using Model D functionality to cater to an ME Subscription request from AF:

Figure 3-6 Call Flow for ME subscription creation request through Model D indirect communication between NEF and UDM

- To subscribe to the location monitoring service, AF sends a 3gpp-monitoring-event POST request to NEF.

- The request routes through the external Ingress Gateway to CAPIF. After successful authorization, CAPIF sends the 3gpp-monitoring-event POST request to the ME service with the AF identity (afid).

- The ME service validates the request, creates a record in the database, generates a subscription, and sends the subscription details to the NEF 5GC Agent service. The 5GC Agent service processes and sends the following discovery and selection parameters to SCP through the 5GC Egress GW service:

  - parameters received through the NEF configuration

  - (only for subsequent messages) parameters received through the binding headers

- SCP performs the NF discovery through NRF and receives the FQDN for the available UDM instances.

- SCP sends the Nudm_EventExposure POST request to UDM. The request contains the UE identity for which location reporting is to be enabled.

- On successful processing, UDM sends the HTTP 201 <CreatedEeSubscription> response to SCP with the subscription details.

- SCP processes and sends the following information to the ME service through the 5GC Egress GW service:

  - The <CreatedEeSubscription> response

  - The binding headers containing the UDM instance details for subsequent requests

- The ME service updates the NEF database with the following records:

  - The updated subscription records

  - The SBI information corresponding to the subscription ID. This information is used while routing subsequent requests to the same UDM.

- The ME service sends the HTTP 201 <MonitoringEventSubscriptionCreated> response to AF.
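The last steps of this flow, storing the SBI information per subscription and reusing it for subsequent requests, can be sketched as follows. The `SbiBindingStore` class is hypothetical and in-memory (NEF keeps this information in its database). Deriving the apiRoot as the scheme and authority of the Location header is a simplification, because an apiRoot can also carry a deployment-specific prefix, and reusing the stored binding verbatim as 3gpp-Sbi-Routing-Binding is an assumption; 3GPP TS 29.500 defines the exact encoding.

```python
from typing import Dict, Optional
from urllib.parse import urlsplit


class SbiBindingStore:
    """Hypothetical per-subscription store of SBI routing information."""

    def __init__(self) -> None:
        self._records: Dict[str, Dict[str, str]] = {}

    def save(self, subscription_id: str, location: str, binding: Optional[str] = None) -> None:
        parts = urlsplit(location)
        # Simplification: apiRoot = scheme://authority of the Location header.
        record = {"apiRoot": f"{parts.scheme}://{parts.netloc}"}
        if binding:
            record["binding"] = binding
        self._records[subscription_id] = record

    def subsequent_headers(self, subscription_id: str) -> Dict[str, str]:
        record = self._records.get(subscription_id)
        if record is None:
            return {}  # no stored context: the request is treated as an initial one
        headers = {"3gpp-Sbi-Target-apiRoot": record["apiRoot"]}
        if "binding" in record:
            # Assumption: the stored binding indication is reused as the routing binding.
            headers["3gpp-Sbi-Routing-Binding"] = record["binding"]
        return headers
```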

Managing Support for Model D Communication

- Enable SBI routing by setting the values of all the flags in routesConfig.metadata to true and configuring the sbiRouting filterName1 under routesConfig. For more information, see SbiRouting Configuration.

- Create an NF communication profile for Model D communication. For more information, see Communication Profile Configuration.

- Assign the communication profile created for Model D communication to a target NF, such as UDM or GMLC. For more information, see Target NF Communication Profile Mapping.

-

Configure SCP

To integrate NEF with the SCP for Model D communication, perform the

sbiRouting,routesConfig,sbiRoutingErrorCriteriaSets, andsbiRoutingErrorActionSetsconfigurations for the Egress GW section of the custom values file for NEF.Table 3-4 SCP Configuration

| Parameter | Description |
|---|---|
| sbiRouting.sbiRoutingDefaultScheme | The value specified in this field is used when the 3gpp-sbi-target-apiroot header is missing. The default value is http. |
| peerConfiguration | Configurations for the list of peers. Each peer must contain the following: id, host, port, and apiPrefix. |
| peerConfiguration.peerSetConfiguration | Configurations for the list of peer sets. Each peer set must contain the following: id, httpConfiguration, httpsConfiguration, and apiPrefix. No two instances can have the same priority for a given HTTP or HTTPS configuration. In addition, more than one virtual FQDN must not be configured for a given HTTP or HTTPS configuration. |
| routesConfig.id | Specifies the ID of the route. |
| routesConfig.uri | Provide any dummy URL, or retain the existing URL value. |
| routesConfig.path | Specifies the path to be matched. |
| routesConfig.order | Specifies the order of execution of this route. |
| routesConfig.metadata.httpRuriOnly | Flag to enable the httpRuriOnly functionality. When the value is set to true, the RURI scheme is changed to http. When the value is set to false, no changes are made to the scheme. |
| routesConfig.metadata.httpsTargetOnly | Flag to enable the httpsTargetOnly functionality. When the value is set to true, SBI instances are selected only from the HTTPS list (if the 3gpp-sbi-target-apiroot header scheme is http). When the value is set to false, no changes are made to the existing scheme. |
| routesConfig.metadata.sbiRoutingEnabled | Flag to enable SBI routing for the selected route. |
| routesConfig.filterName1.name | Set the name to SBIRouting. |
| routesConfig.filterName1.args.peerSetIdentifier | Specifies the ID of the peerSetConfiguration. |
| routesConfig.filterName1.args.customPeerSelectorEnabled | |
| routesConfig.filterName1.args.errorHandling | The errorHandling section contains an array of errorCriteriaSet and actionSet mappings with priority. The errorCriteriaSet and actionSet are configured through Helm using sbiRoutingErrorCriteriaSets and sbiRoutingErrorActionSets. Note: To disable rerouting under SBIRouting, delete the errorHandling configurations under routesConfig. |
| sbiRoutingErrorCriteriaSets | Contains an array of errorCriteriaSet entries, where each errorCriteriaSet defines an ID, a set of HTTP methods, a set of HTTP response status codes, and a set of exceptions with headerMatching functionality. |
| sbiRoutingErrorActionSets | Contains an array of actionSet entries, where each actionSet defines an ID, the action to be performed (currently, only the REROUTE action is supported), and blocklist configurations. |

Note:

The Model D functionality is based on SBIRouting. To enable SBI routing, the values of all the flags in routesConfig.metadata must be set to true and the SBIRouting filter (filterName1) must be configured.
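The peer set rules in Table 3-4 (no duplicate priorities and at most one virtual FQDN per HTTP or HTTPS configuration) are easy to violate when editing the custom values file by hand. The following Python sketch checks one peer set before deployment. It assumes a hypothetical layout in which each entry of httpConfiguration or httpsConfiguration carries either a `priority` or a `virtualHost` key, and `peerIdentifier` is an illustrative name; confirm the real key names in the Installation, Upgrade, and Fault Recovery Guide before relying on it.

```python
from collections import Counter


def validate_peer_set(peer_set: dict) -> list:
    """Return the rule violations found in one peerSetConfiguration entry.

    Hypothetical layout: httpConfiguration and httpsConfiguration are lists of
    instances, each with either a 'priority' or a 'virtualHost' key.
    """
    errors = []
    for scheme_key in ("httpConfiguration", "httpsConfiguration"):
        instances = peer_set.get(scheme_key, [])

        # Rule 1: no two instances may share a priority within one configuration.
        priorities = Counter(i["priority"] for i in instances if "priority" in i)
        for priority, count in sorted(priorities.items()):
            if count > 1:
                errors.append(
                    f"{peer_set['id']}.{scheme_key}: priority {priority} used {count} times"
                )

        # Rule 2: at most one virtual FQDN within one configuration.
        virtual_fqdns = [i for i in instances if "virtualHost" in i]
        if len(virtual_fqdns) > 1:
            errors.append(
                f"{peer_set['id']}.{scheme_key}: {len(virtual_fqdns)} virtual FQDNs configured"
            )
    return errors


peer_set = {
    "id": "set0",
    "httpConfiguration": [
        {"peerIdentifier": "peer1", "priority": 1},
        {"peerIdentifier": "peer2", "priority": 1},  # duplicate priority
    ],
    "httpsConfiguration": [{"virtualHost": "scp.example.com"}],
}
print(validate_peer_set(peer_set))
```

Running such a check in a CI step before `helm upgrade` catches the conflict earlier than a failed route at runtime.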

Configure Communication Profiles

To configure the Model D communication profiles, perform the communicationProfiles configurations for the 5GCAgent section of the custom values file for NEF.

Table 3-5 Communication Profiles Configuration

| Parameter | Description |
|---|---|
| customModelD.discoveryHeaderParams.targetNfType | The target NF with which NEF has the indirect communication. This parameter is mapped to the 3gpp-Sbi-Discovery-target-nf-type discovery header. The parameter remains available only in the subsequent requests. |
| customModelD.discoveryHeaderParams.discoveryServices | The service names for the discovery NF. This parameter is mapped to the 3gpp-Sbi-Discovery-service-names header. |
| customModelD.discoveryHeaderParams.supportedFeatures | This parameter is mapped to the 3gpp-Sbi-Discovery-supported-features header. |
| customModelD.sendDiscoverHeaderInitMsg | Indicates whether the discovery headers must be sent in initial messages. |
| customModelD.sendDiscoverHeaderSubsMsg | Indicates whether the discovery headers must be sent in subsequent messages. |
| customModelD.sendRoutingBindingHeader | Indicates whether the routing binding header must be included. |
| customModelD.discoveryHeaderParams.preferredLocality | Preferred target NF location for the discovery NF. This parameter is mapped to the 3gpp-Sbi-Discovery-preferred-locality header. |

Map Communication Profiles

To enable the Model D communication with a specific NF, assign the Model D profiles created in the preceding configurations to the required NF. The following Helm parameters allow you to map the target NF type to the active profiles:

Table 3-6 Target NF Communication Profile Mapping

| Parameter | Description |
|---|---|
| targetNfCommunicationProfileMapping.UDM | The supported communication method for UDM. Possible values are: model A; model B; or the communication profile configured for Model D in the communicationProfiles configuration. For more details about configuring Model D profiles, see Configure. |
| targetNfCommunicationProfileMapping.GMLC | The supported communication method for GMLC. Possible values are: model A; model B; or the communication profile configured for Model D in the communicationProfiles configuration. For more details about configuring Model D profiles, see Configure. |
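The following Python sketch shows how the Table 3-5 profile parameters and the Table 3-6 mapping could combine when NEF sends a request to a target NF: the mapping selects Model A, Model B, or a named Model D profile, and the profile decides which 3gpp-Sbi-Discovery-* headers are added. The profile name udmModelD, the dictionary layout, the fallback to Model A for unmapped NFs, and the comma-joined encoding of multiple service names are assumptions; only the parameter and header names come from the tables. The routing binding header is not modeled because its value is not described here.

```python
from typing import Optional, Tuple


def resolve_profile(target_nf: str, mapping: dict, profiles: dict) -> Tuple[str, Optional[dict]]:
    """Return the communication model for a target NF and, for Model D, its profile."""
    choice = mapping.get(target_nf, "model A")  # assumed fallback for unmapped NFs
    if choice in ("model A", "model B"):
        return choice, None
    return "model D", profiles[choice]


def discovery_headers(profile: dict, initial_message: bool) -> dict:
    """Build the 3gpp-Sbi-Discovery-* headers for one request (sketch)."""
    flag = "sendDiscoverHeaderInitMsg" if initial_message else "sendDiscoverHeaderSubsMsg"
    if not profile.get(flag, False):
        return {}
    params = profile["discoveryHeaderParams"]
    headers = {"3gpp-Sbi-Discovery-target-nf-type": params["targetNfType"]}
    if params.get("discoveryServices"):
        headers["3gpp-Sbi-Discovery-service-names"] = ",".join(params["discoveryServices"])
    if params.get("supportedFeatures"):
        headers["3gpp-Sbi-Discovery-supported-features"] = params["supportedFeatures"]
    if params.get("preferredLocality"):
        headers["3gpp-Sbi-Discovery-preferred-locality"] = params["preferredLocality"]
    return headers


profiles = {
    "udmModelD": {
        "sendDiscoverHeaderInitMsg": True,
        "sendDiscoverHeaderSubsMsg": False,
        "discoveryHeaderParams": {
            "targetNfType": "UDM",
            "discoveryServices": ["nudm-ee"],
            "preferredLocality": "site-1",
        },
    }
}
mapping = {"UDM": "udmModelD", "GMLC": "model B"}

model, profile = resolve_profile("UDM", mapping, profiles)
print(model, discovery_headers(profile, initial_message=True))
```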

For more details related to configurations, see Oracle Communications Cloud Native Core, Network Exposure Function Installation, Upgrade, and Fault Recovery Guide.

- ocnef_5gc_agent_srv_req_total

- ocnef_5gc_agent_srv_resp_total

- ocnef_5gc_agent_srv_latency_seconds

- ocnef_translation_count

- ocnef_translation_failure_count

For more information about the metrics, see NEF Metrics.

3.6 Support for Security Token Generation

NEF supports the OAuth security method for service API invocation. This security procedure ensures that only authenticated service requests are directed to the core microservices for processing the request.

Note:

NEF supports only the OAuth security method for service API invocation. There is no direct interface between the AF and NEF; hence, all the security procedures are handled by CAPIF. The functionality returns only the OAuth security method in response to a PUT operation.

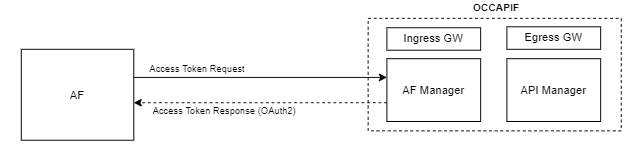

The following diagram depicts an example of OAuth token generation by AF Manager service of CAPIF:

Figure 3-7 Security Token Generation

The API Invoker or AF attaches the received OAuth token to every service request sent to NEF. The API Router service authenticates the OAuth token before NEF processes the request.

The AF obtains authorization by sending a POST request to CAPIF.
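On the AF side, attaching the token is ordinary OAuth 2.0 bearer usage (RFC 6750): the token obtained from CAPIF goes into the Authorization header of each service API request. The following Python sketch builds, but does not send, such a request with the standard library. The host name, the scsAsId value af-001, the token string, and the body are placeholders; the resource path follows the 3GPP TS 29.522 pattern for the monitoring event API.

```python
import json
import urllib.request


def build_authorized_request(api_root: str, scs_as_id: str, token: str,
                             body: dict) -> urllib.request.Request:
    """Build a 3gpp-monitoring-event POST that carries the CAPIF-issued OAuth token."""
    url = f"{api_root}/3gpp-monitoring-event/v1/{scs_as_id}/subscriptions"
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        method="POST",
        headers={
            "Content-Type": "application/json",
            # Bearer token; the API Router authenticates it before NEF processes the request.
            "Authorization": f"Bearer {token}",
        },
    )


req = build_authorized_request(
    "https://nef.example.com", "af-001", "example-token", {"monitoringType": "LOCATION_REPORTING"}
)
print(req.get_method(), req.full_url)
```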

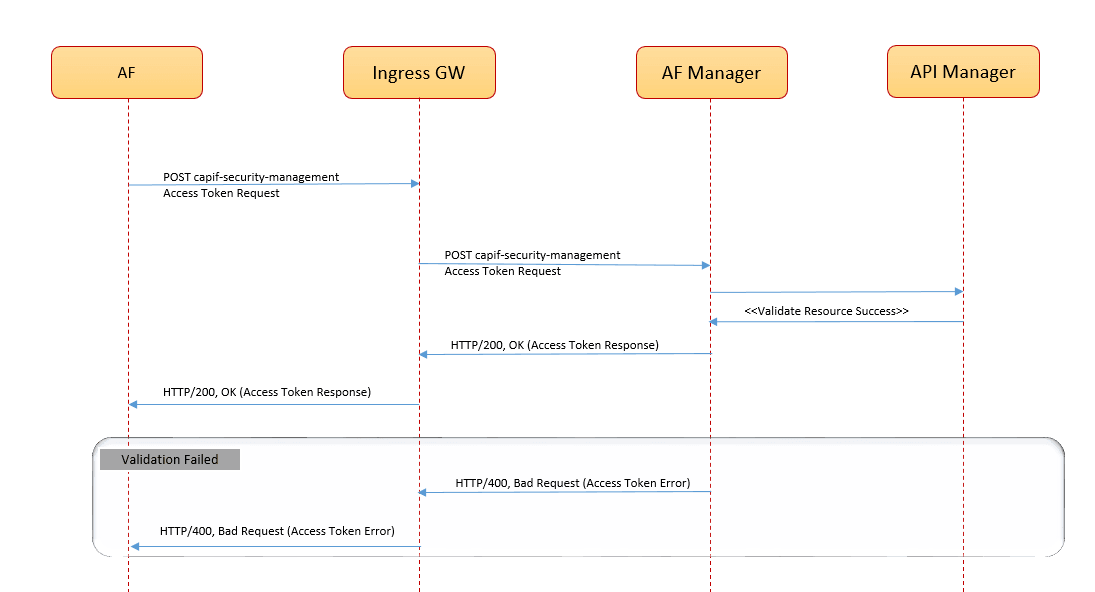

The following diagram shows a call flow where NEF receives an authentication token request from AF, and the AF Manager service generates the token to provide authorization:

Figure 3-8 Call Flow for Security Token Generation by AF Manager

Note:

The registration ID or API Exposing Function (AEF) ID changes when NEF is uninstalled. In such a scenario, you must manually delete the security context and create a new security context to generate a new OAuth token. The AEF ID for the security context can be obtained from the NEF database. For more information, see Oracle Communications Cloud Native Core, Network Exposure Function REST Specification Guide.

Managing Security Token Generation

Security Token Generation is a core functionality of NEF. It remains enabled by default.

NEF provides the ME service related metrics for observing security functionality. For more information about the metrics, see CAPIF Metrics.

3.7 Monitoring Event Service in NEF

In the network deployments, operators must track the events of User Equipment (UE) to provide customized and enhanced network services. Any change in the UE location is considered an event, and NEF enables third-party applications or internal Application Functions (AFs) to monitor and receive reports about such events.

- Unified Data Management (UDM)

- Access and Mobility Management Function (AMF)

- Session Management Function (SMF)

Depending on the specific monitoring event or information, UDM and AMF collect the information and send it through NEF.

Monitoring Event Service

- Current location of UE

- Last known location of UE with time stamp

- PDU Session status

- Loss of Connectivity Event

- UE Reachability Event

- One-time reporting

- Maximum number of reports

- Maximum duration of reporting

- Monitoring type

Note:

- NEF scope for handling externalGroupId and maximumNumberOfReports:

- If a UE is part of multiple externalGroupIds across multiple subscriptions, NEF does not aggregate the reports into one report for each UE. Instead, NEF forwards all the reports received from SMF to AF.

- NEF tracks only the number of UEs in a group and not the individual UEs. NEF accepts a cumulative total of (maximumNumberOfReports * numberOfUEs) reports, or accepts reports until the subscription expires based on the monitorExpireTime parameter, whichever occurs earlier.

- NEF does not track the number of reports at individual UE level in case of externalGroupId subscription.

- NEF scope for handling externalGroupId and groupReportGuardTime:

- For PDN_CONNECTIVITY_STATUS, NEF does not aggregate the reports based on the guard time. SMFs aggregate the reports and send them to NEF. Then, NEF forwards the same report to AF.

- For UE_REACHABILITY and LOSS_OF_CONNECTIVITY, NEF does not aggregate the reports based on the guard time. AMF sends the notification for each UE in the group, and NEF forwards it to AF. No aggregation of reports occurs at NEF.
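The report cap above is counted per subscription, not per UE. The following Python sketch models it for an externalGroupId subscription: reports are accepted until maximumNumberOfReports * numberOfUEs have been forwarded or monitorExpireTime passes, whichever comes first. The class and method names are hypothetical, and the sketch only stops accepting reports; what NEF does with the subscription once the cap is reached is not described here.

```python
from datetime import datetime, timezone


class GroupReportBudget:
    """Per-subscription report cap for an externalGroupId subscription (sketch)."""

    def __init__(self, max_reports: int, number_of_ues: int, expire_time: datetime):
        self.limit = max_reports * number_of_ues
        self.expire_time = expire_time
        self.accepted = 0  # counted for the whole group; no per-UE tracking

    def accept(self, now: datetime) -> bool:
        """Return True if a report arriving at `now` is forwarded to AF."""
        if now >= self.expire_time or self.accepted >= self.limit:
            return False
        self.accepted += 1
        return True


# Two reports per UE, three UEs in the group: at most six reports in total.
budget = GroupReportBudget(2, 3, datetime(2024, 1, 2, tzinfo=timezone.utc))
t = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
print([budget.accept(t) for _ in range(7)])
```

Because no UE identity is passed to `accept`, one chatty UE can consume the whole group budget, which matches the statement that NEF does not track reports at individual UE level.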

To provide the monitoring service to AF, NEF interacts with UDM by using the Nudm_EventExposure service. For more information about this service, see 3GPP Technical Specification, Unified Data Management Services, Release 17.

The following diagram represents a high-level call flow where NEF receives a location tracking request from AF and interacts with UDM to obtain the required information:

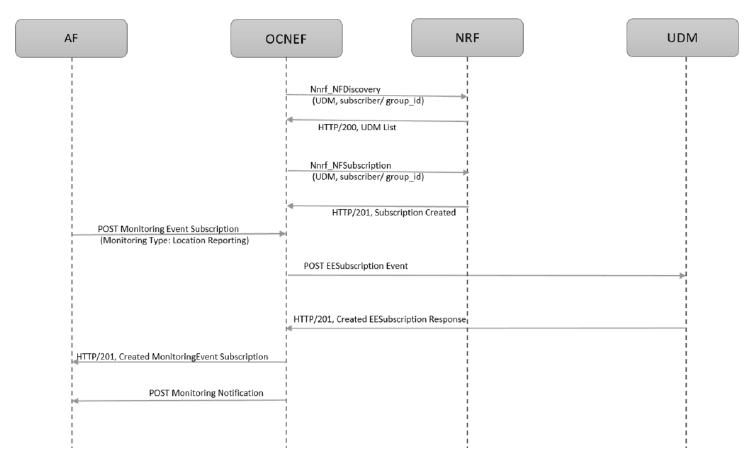

Figure 3-9 Call Flow for Monitoring Event Subscription

- The NRF Client communicates with NRF for UDM discovery (Nnrf_NFDiscovery) and receives a list of available UDMs with their Subscriber IDs or Group IDs.

Note:

The UDM discovery is performed even before NEF starts receiving any subscription requests from AF.

- AF sends a POST request (3gpp-monitoring-event) to NEF for a monitoring event subscription.

- After the request is authenticated through CAPIF, it reaches the ME service.

- The ME service processes the request and sends a POST request (Nudm_EventExposure) for event notification subscription to the UDM selected from the list obtained through UDM discovery. NEF subscribes to this event to receive the monitoring event of a UE, and the updated location of the UE when UDM becomes aware of a location change of the UE. For more information about Nudm_EventExposure, see 3GPP Technical Specification, Unified Data Management Services, Release 17.

After successful subscription with the UDM, NEF starts receiving the event reports from AMF and sends them to AF.
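For reference, the following Python sketch assembles the kind of 3gpp-monitoring-event subscription body an AF might POST for location reporting, deriving monitorExpireTime from a validity period in days. The attribute names (externalId, notificationDestination, monitoringType, maximumNumberOfReports, monitorExpireTime, locationType) are taken from the 3GPP TS 29.522 MonitoringEventSubscription data type as we understand it; the values, the helper name, and the validation rules are illustrative, so check the REST Specification Guide for the attributes NEF accepts.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def location_subscription(external_id: str, callback_uri: str, valid_days: int,
                          max_reports: int = 1,
                          location_type: str = "CURRENT_LOCATION",
                          now: Optional[datetime] = None) -> dict:
    """Build a location reporting subscription body (illustrative sketch)."""
    if valid_days <= 0:
        raise ValueError("valid_days must be positive")
    if max_reports < 1:
        raise ValueError("max_reports must be at least 1")
    now = now or datetime.now(timezone.utc)
    expire = now + timedelta(days=valid_days)
    return {
        "externalId": external_id,
        "notificationDestination": callback_uri,
        "monitoringType": "LOCATION_REPORTING",
        "maximumNumberOfReports": max_reports,
        "monitorExpireTime": expire.strftime("%Y-%m-%dT%H:%M:%SZ"),  # UTC timestamp
        "locationType": location_type,
    }


body = location_subscription(
    "ue1@example.com", "https://af.example.com/notify", valid_days=7,
    now=datetime(2024, 1, 1, tzinfo=timezone.utc),
)
print(body["monitorExpireTime"])  # 2024-01-08T00:00:00Z
```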

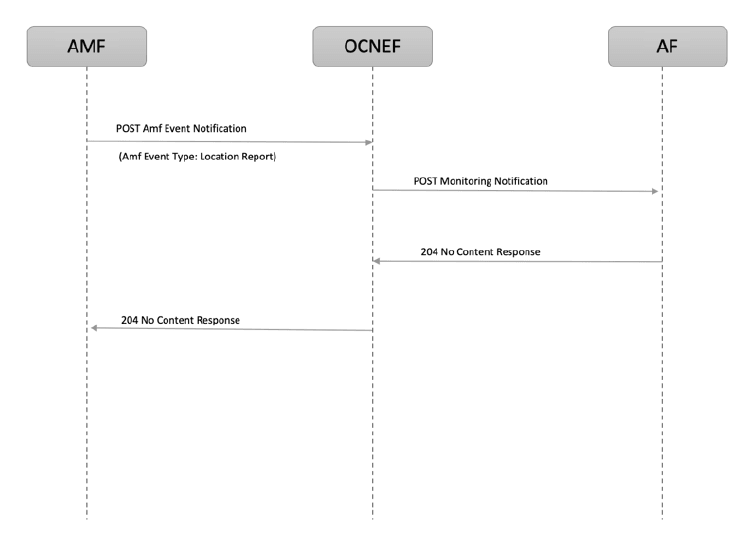

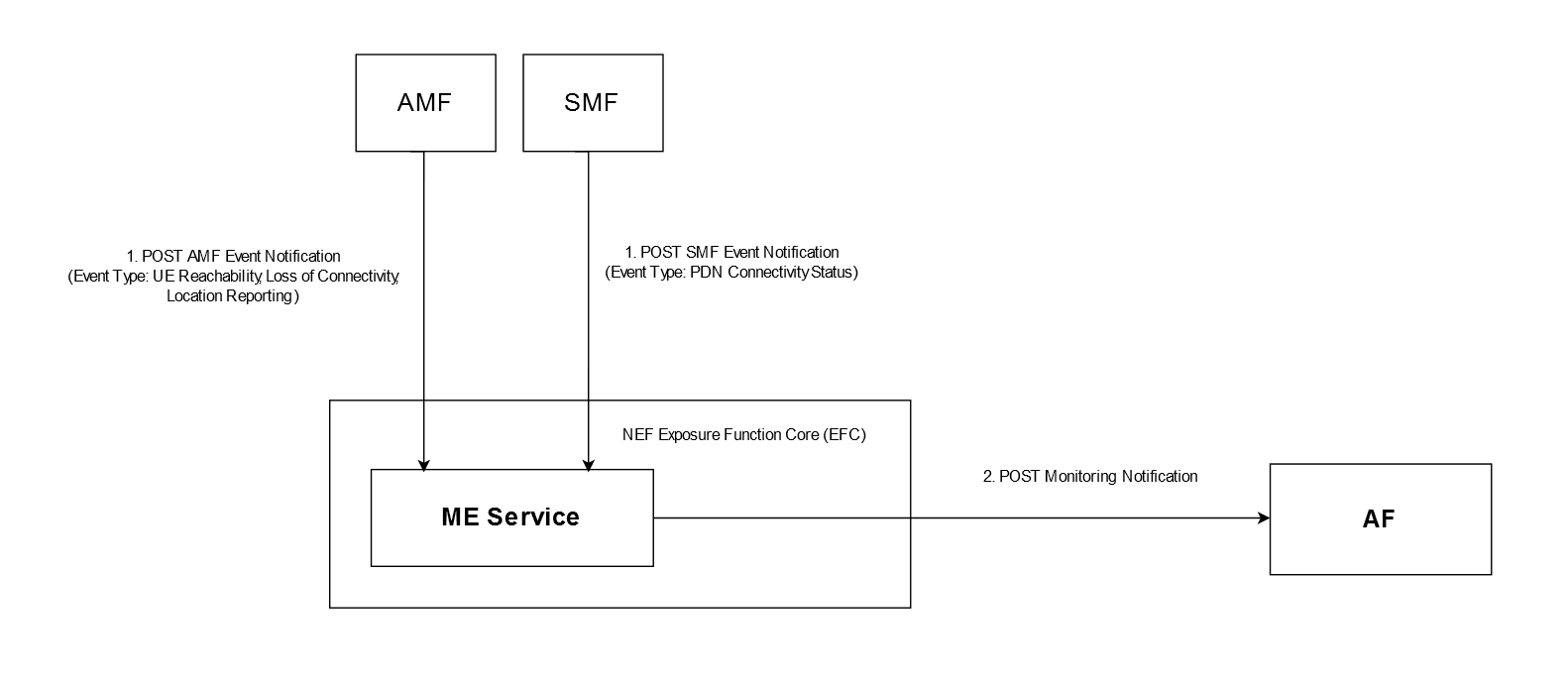

The following diagram represents a high-level call flow where NEF receives an event report from AMF and forwards it to AF:

Figure 3-10 Call Flow for Monitoring Event Reporting

- AMF sends a POST request (AMF-event-notification) for monitoring event notification to NEF.

- After processing the request, NEF sends the event report to AF.

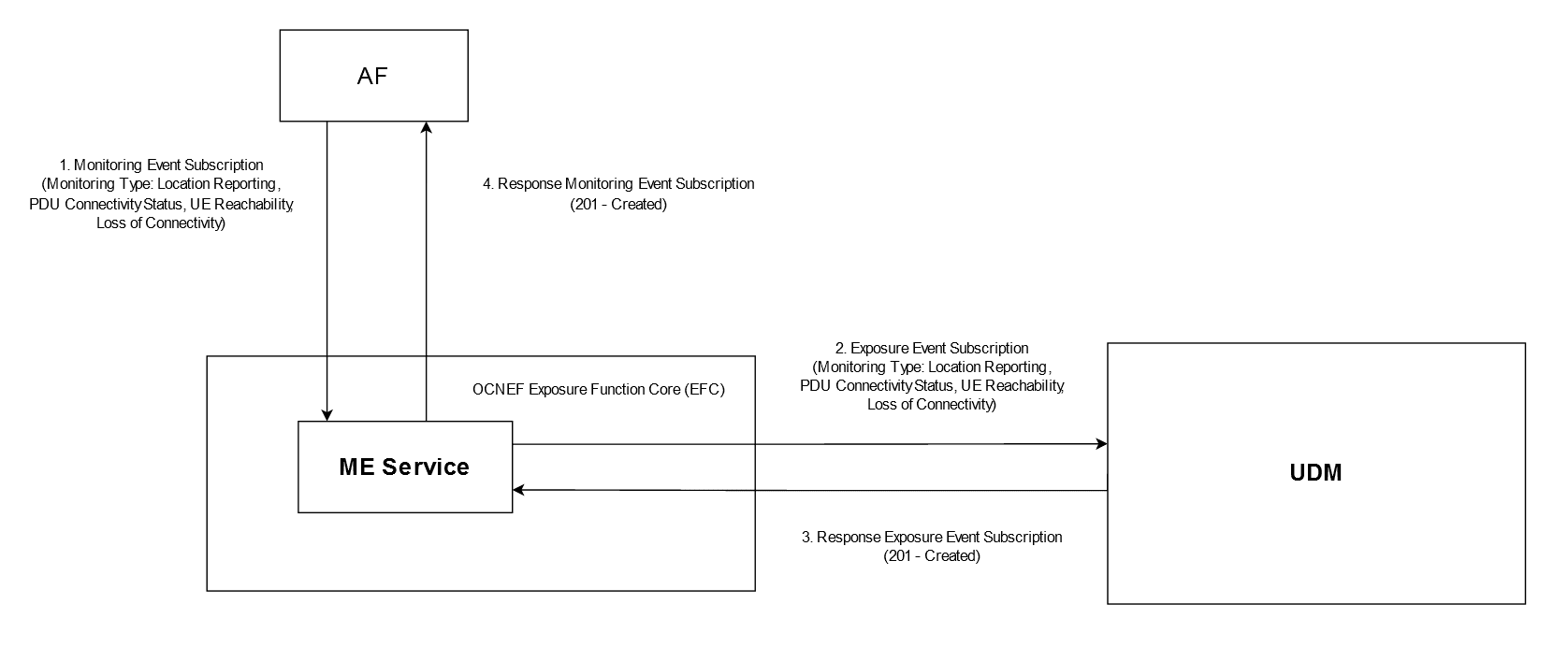

ME Subscription Creation

Figure 3-11 Example of Monitoring Event Subscription for Creation

- After successful registration and onboarding, the AF sends a POST request (3gpp-monitoring-event) to NEF to create a subscription for a supported monitoring event.

- When the request is authenticated by the NEF Exposure Gateway (EG), the ME service processes the request based on the type of the event.

For any monitoring type event, NEF sends a POST request (Nudm_EventExposure) to UDM to create a subscription. NEF subscribes to the event to receive notifications based on the monitoring type. For more details related to the Nudm_EventExposure service, see 3GPP Technical Specification, Unified Data Management Services, Release 17.

- On successful processing of the request, UDM sends an acknowledgment for subscription creation. In case of failure, UDM sends an error response.

- After receiving the successful response from UDM, the ME service sends the subscription creation acknowledgment response to the AF.

This creates the ME service subscription.

Note:

The expired ME subscriptions get deleted by the NEF Audit service.
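The expiry check that an audit pass performs can be sketched as follows. This Python example is not the NEF Audit service; it only shows the comparison of each stored monitorExpireTime against the current time, using a hypothetical record layout with subscriptionId and monitorExpireTime keys.

```python
from datetime import datetime, timezone


def parse_expire_time(value: str) -> datetime:
    """Parse a UTC timestamp; fromisoformat accepts a trailing 'Z' only from Python 3.11."""
    return datetime.fromisoformat(value.replace("Z", "+00:00"))


def expired_subscription_ids(records: list, now: datetime) -> list:
    """Return the IDs of subscriptions whose monitorExpireTime is at or before `now`."""
    return [r["subscriptionId"] for r in records
            if parse_expire_time(r["monitorExpireTime"]) <= now]


records = [
    {"subscriptionId": "sub-1", "monitorExpireTime": "2024-01-08T00:00:00Z"},
    {"subscriptionId": "sub-2", "monitorExpireTime": "2024-06-01T00:00:00Z"},
]
print(expired_subscription_ids(records, datetime(2024, 3, 1, tzinfo=timezone.utc)))  # ['sub-1']
```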

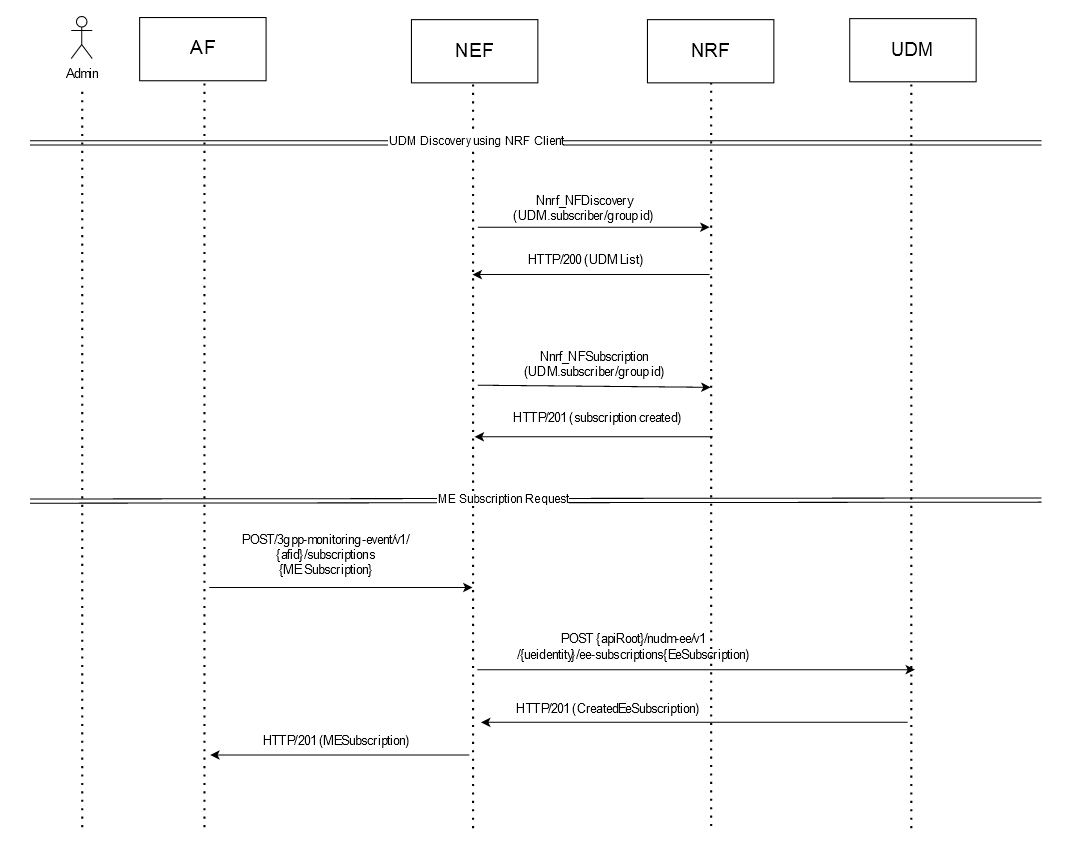

Create ME Subscription

Figure 3-12 Example of ME Subscription Call Flow

- By default, the NRF Client communicates with NRF for UDM discovery (Nnrf_NFDiscovery) and receives a list of available UDMs with their Subscriber IDs or Group IDs.

Note:

The UDM discovery is performed even before NEF starts receiving any subscription requests from AF.

- To subscribe to the ME service, AF sends a 3gpp-monitoring-event POST request to NEF.

- NEF performs the following steps:

- The request routes through the external Ingress Gateway to the NEF Exposure Gateway (EG). The API Router performs the authentication process and checks whether the AF is authorized to access the requested service. Based on the authentication result, one of the following actions is performed:

- If the authorization fails, the API Router sends the HTTP/403 <Unauthorised> response to AF and closes the request.

- If the authorization is successful, the API Router sends the HTTP/200 <Authorized> response to the API Manager.

- After successful authorization, the API Router service sends the 3gpp-monitoring-event POST request to the ME service with the AF identity (afid).

- The ME service validates the request, creates a record in the database, generates a subscription, and sends the subscription details to the 5GC Agent service.

- The 5GC Agent service processes the subscription information, interacts with the Config-Server service, and receives the list of UDMs available through NRF discovery.

- After successful UDM discovery, the 5GC Agent sends the Nudm_EventExposure POST request to UDM through the 5GC Egress Gateway. The request contains the UE identity for which location reporting can be enabled.

- After the processing is complete, UDM sends the HTTP/200 <CreatedEeSubscription> response to NEF with the subscription details.

- The 5GC Agent service in NEF routes the HTTP/200 <CreatedEeSubscription> response to the ME service. The ME service updates the NEF database with the updated subscription records and sends the HTTP/200 <MonitoringEventSubscriptionCreated> response to the AF through the external Ingress Gateway.

- This creates the monitoring event subscription in NEF for the AF to monitor the location of the specified UE.

The subscription remains valid for the number of days defined in the POST request through the monitorExpireTime parameter.

Sending Notifications to AF

Figure 3-13 Example of Sending Event Reports through ME Notification

- After successful creation of the ME subscription, the AMF or SMF sends a POST request (AMF-event-notification) to NEF for a monitoring event notification.

- After processing the request, NEF sends the event report to AF.

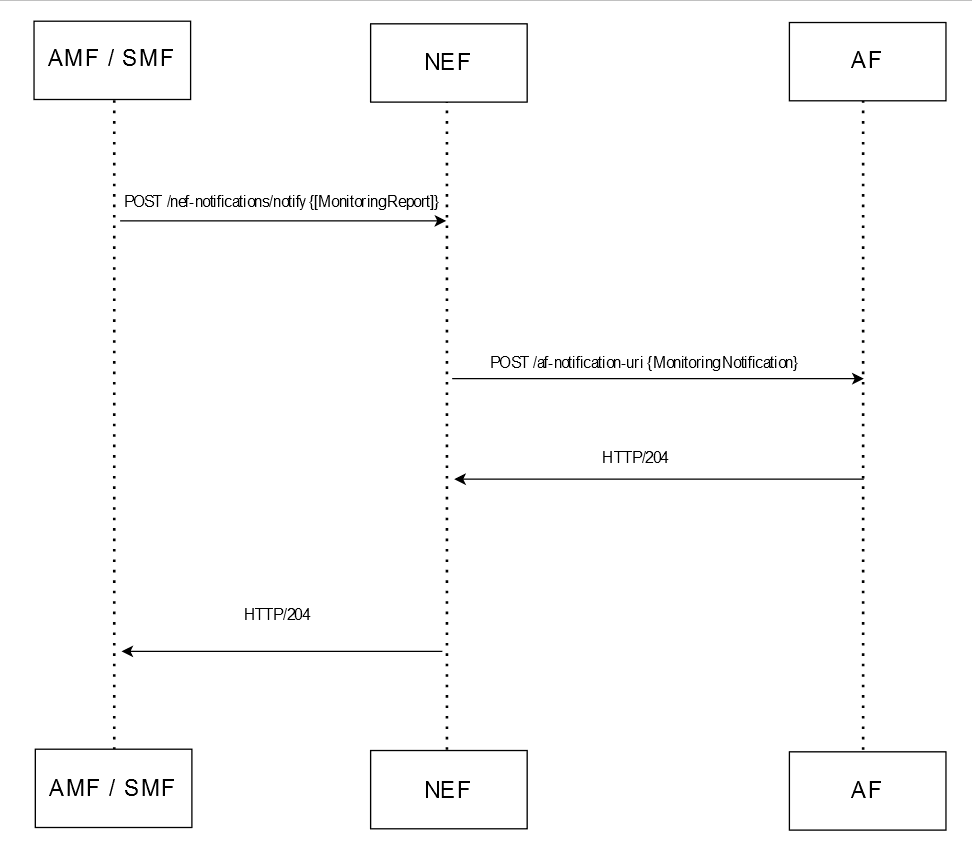

Send ME Notification

Figure 3-14 Example of ME Notification Call Flow

- AMF or SMF sends a nef-notifications/notify POST request to NEF with the subscriber details corresponding to the ME subscription created in the call flow above.

- The request routes through the 5GC Ingress Gateway and 5GC Agent services in NEF to the ME service.

- The ME service processes the request, creates a record in the database, and sends the ME Notification POST request to the corresponding AF with the subscriber location details.

- This delivers the event report for the specified UE to the AF.

For more information, see Oracle Communications Cloud Native Core, Network Exposure Function REST Specification Guide.

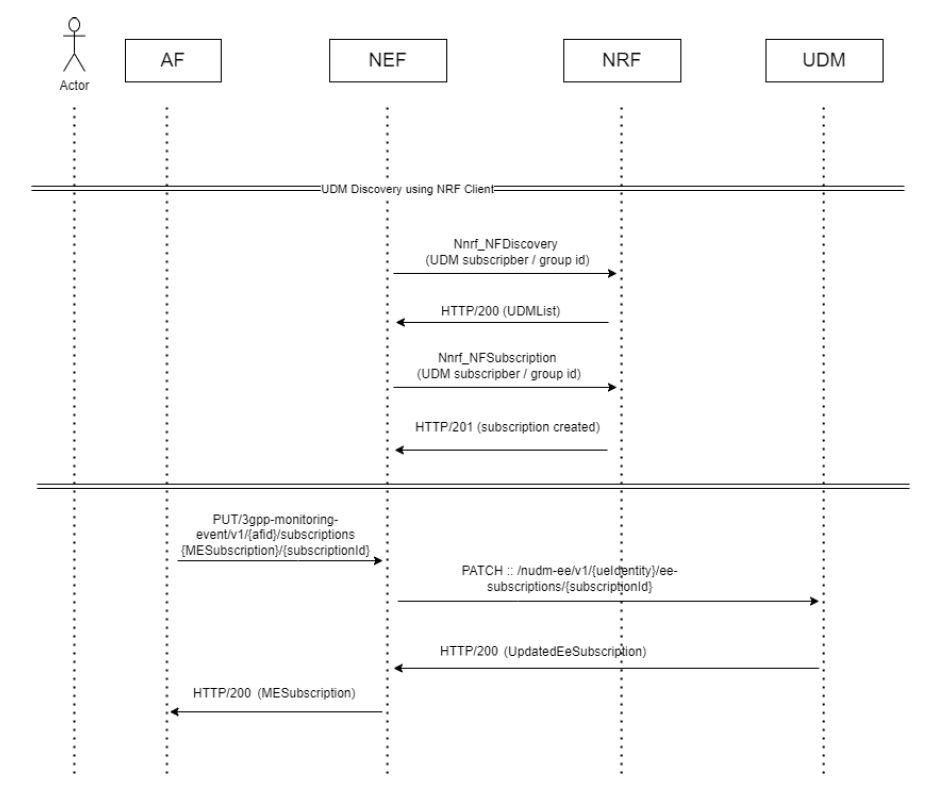

Update ME Subscription

Figure 3-15 Example of Updating a ME Subscription - Call Flow

- By default, the NRF Client communicates with NRF for UDM discovery (Nnrf_NFDiscovery) and receives a list of available UDMs with their Subscriber IDs or Group IDs.

Note:

The UDM discovery is performed even before NEF starts receiving any subscription requests from AF.

- To update an ME subscription, AF sends a 3gpp-monitoring-event PUT request to NEF.

- NEF performs the following steps:

- The request routes through the external Ingress Gateway to the NEF Exposure Gateway (EG). The API Router performs the authentication process and checks whether the AF is authorized to access the requested service. Based on the authentication result, one of the following actions is performed:

- If the authorization fails, the API Router sends the HTTP/403 <Unauthorised> response to AF and closes the request.

- If the authorization is successful, the API Router sends the HTTP/200 <Authorized> response to the API Manager.

- After successful authorization, the API Router service sends the 3gpp-monitoring-event PUT request to the ME service with the AF identity (afid).

- The ME service validates the request, updates the subscription, and sends the details to the 5GC Agent service.

- The 5GC Agent service processes the subscription information, interacts with the Config-Server service, and receives the list of UDMs available through NRF discovery.

- After successful UDM discovery, the 5GC Agent sends the Nudm_EventExposure PATCH request to UDM through the 5GC Egress Gateway. The request contains the UE identity for which location reporting can be enabled.

Note:

PUT is supported from AF to NEF, whereas PATCH is supported from NEF to UDM. Hence, NEF converts the PUT request into a PATCH request before sending it to UDM.

- After the processing is complete, UDM sends the HTTP/201 <UpdatedEeSubscription> response to NEF with the subscription details.

- The 5GC Agent service in NEF routes the HTTP/201 <UpdatedEeSubscription> response to the ME service. The ME service updates the NEF database with the updated subscription records and sends the HTTP/201 <MonitoringEventSubscriptionUpdated> response to the AF through the external Ingress Gateway.

- This updates the monitoring event subscription in NEF for the AF to monitor the location of the specified UE.

The subscription remains valid for the number of days defined in the PATCH request through the monitorExpireTime parameter. For more information about this parameter, see Oracle Communications Cloud Native Core, Network Exposure Function REST Specification Guide.

Monitoring Event Reporting

Monitoring Events Reporting is a core functionality of NEF. It remains enabled by default.

NEF provides metrics for the ME Service feature. For more information about the metrics, see NEF Metrics.

3.7.1 Converged SCEF NEF Model for Monitoring Event

- AF sends request to NEF.

- NEF creates subscription on UDM.

- UDM sends the subscription request to AMF.

- On event detection, AMF sends notification to NEF.

If the UE is latched to a 4G network, NEF cannot create a subscription or receive notifications from that network. NEF must be enabled with the Converged SCEF-NEF solution for specific services where interaction between the Service Capability Exposure Function (SCEF) and NEF is required.

When a UE is capable of mobility between the Evolved Packet System (EPS) and the 5G Core (5GC), the network is expected to associate the UE with an SCEF+NEF node for Service Capability Exposure.

Note:

- The remaining notification flow is similar to the existing flow from NEF to AF.

- The convergedScefNefEnabled parameter:

- introduces the enableFeature.convergedScefNef Diameter gateway for receiving Diameter traffic from EPC network nodes.

- enables EPC subscription parameters for subscriptions towards UDM (currently, monitoringEvents location reporting subscriptions).

- introduces the following configuration:

```yaml
ocnef-diam-gateway:
  clientPeers: |
    - name: 'mme'
      realm: 'mme.oracle.com'
      identity: 'T6-client'
```

Note:

- During upgrade, only the subscription request on the new pod creates a subscription on HSS (EPC network). This does not apply to the Gateway Mobile Location Centre (GMLC).

If GMLC returns a failure, NEF performs failover to UDM based on the switchToUdmOnFailure parameter. When converged_scef_nef is enabled, NEF then sends the parameter to UDM to create a subscription on the Evolved Packet Core (EPC). SCEF-Reference-ID is the identifier that NEF uses to map a notification from MME to the corresponding subscription.

- If subscription creation at UDM is successful but subscription creation at HSS fails, NEF considers the subscription creation to be successful. The MeEPCAddSubscriptionFailureRateCrossedThreshold alert is triggered when this failure occurs at EPC.
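The following Python sketch models two rules from the Note above: a subscription counts as created when UDM succeeds even if the HSS side fails, and a later MME notification is matched to its subscription through SCEF-Reference-ID. The class, its counters, and the failure-rate method are hypothetical; the actual threshold and expression behind the MeEPCAddSubscriptionFailureRateCrossedThreshold alert are defined in the NEF alert configuration, not here.

```python
from typing import Optional


class ConvergedSubscriptionIndex:
    """Hypothetical in-memory index for converged SCEF-NEF subscriptions."""

    def __init__(self):
        self.by_scef_reference = {}  # SCEF-Reference-ID -> subscription ID
        self.epc_attempts = 0
        self.epc_failures = 0

    def record_creation(self, subscription_id: str, scef_reference_id: int,
                        udm_ok: bool, hss_ok: bool) -> bool:
        """Return the overall result reported to AF; only the UDM result decides it."""
        if not udm_ok:
            return False
        self.epc_attempts += 1
        if hss_ok:
            self.by_scef_reference[scef_reference_id] = subscription_id
        else:
            self.epc_failures += 1  # an EPC-side failure does not fail the subscription
        return True

    def match_notification(self, scef_reference_id: int) -> Optional[str]:
        """Find the subscription for an MME notification, or None if unknown."""
        return self.by_scef_reference.get(scef_reference_id)

    def epc_failure_rate(self) -> float:
        return self.epc_failures / self.epc_attempts if self.epc_attempts else 0.0


index = ConvergedSubscriptionIndex()
print(index.record_creation("sub-1", 101, udm_ok=True, hss_ok=True))   # True
print(index.record_creation("sub-2", 102, udm_ok=True, hss_ok=False))  # True
print(index.match_notification(101), index.epc_failure_rate())         # sub-1 0.5
```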

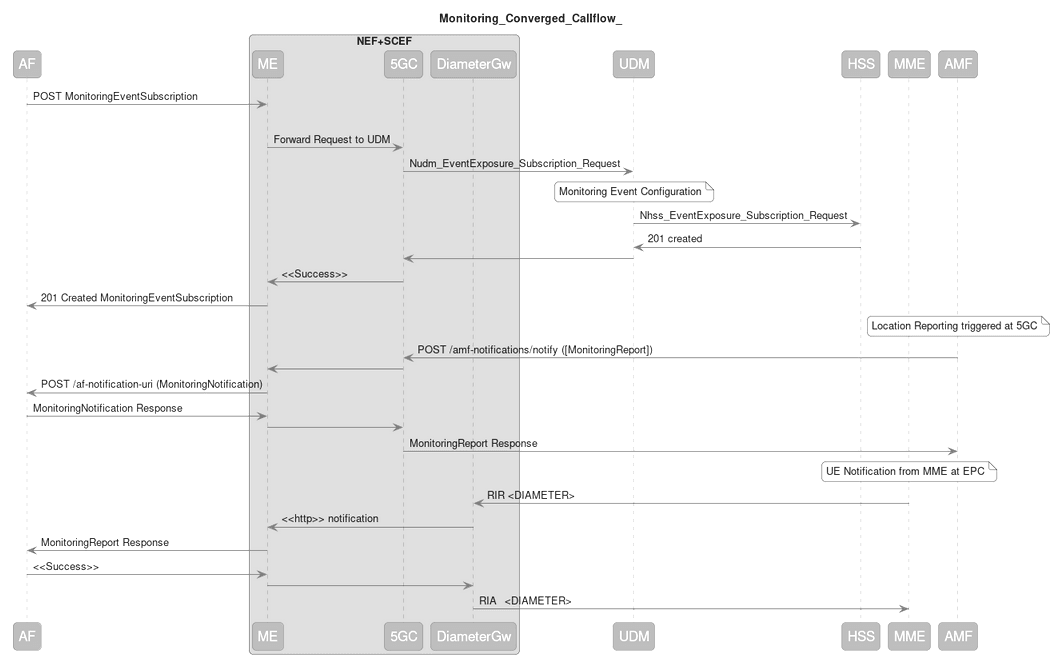

Monitoring Events Call Flow in Converged SCEF-NEF

This section provides the call flow supported for the Converged SCEF-NEF functionality with separate UDM and HSS.

- If the UE request is from a 5G network

- Application Function (AF) sends request to NEF for ME subscription.

- NEF sends EeSubscription request to UDM.

- UDM creates the subscription on AMF.

- AMF sends this event notification to NEF directly.

- If the UE request is from a 4G network

- Application Function (AF) sends request to NEF for ME subscription.

- NEF sends EeSubscription request to UDM. If the epcAppliedInd parameter is set to True, the request also applies to 4G.

- UDM creates the subscription on HSS along with AMF.

- HSS creates subscription in MME.

- MME sends the event notification to NEF as an RIR message over the T6X interface through DiameterGw.
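In the 4G branch, the visible change to the NEF request is that UDM is asked to apply the subscription to EPC as well. The following Python sketch builds a location reporting EeSubscription body and sets epcAppliedInd only when the converged SCEF-NEF feature is enabled. The attribute names follow the EeSubscription and MonitoringConfiguration types of 3GPP TS 29.503 as we understand them and should be checked against the release in use; the monitoring configuration key "1" and the helper name are hypothetical.

```python
def ee_subscription(callback_uri: str, converged_scef_nef: bool, one_time: bool) -> dict:
    """Build a Nudm_EventExposure location reporting subscription body (sketch)."""
    body = {
        "callbackReference": callback_uri,
        "monitoringConfigurations": {
            "1": {  # hypothetical reference key for this monitoring configuration
                "eventType": "LOCATION_REPORTING",
                "locationReportingConfiguration": {
                    "currentLocation": True,
                    "oneTime": one_time,
                },
            }
        },
    }
    if converged_scef_nef:
        # Ask UDM to also configure the event in EPC through HSS.
        body["epcAppliedInd"] = True
    return body


print(ee_subscription("https://nef.example.com/notify", converged_scef_nef=True, one_time=False))
```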

In the following scenario, SCEF+NEF configures events at UDM, indicating that the event may occur in 5GC as well as EPC. Based on this information, UDM configures events at 5GC and also invokes event subscription at HSS, which in turn configures events at EPC.

Figure 3-16 Monitoring Events - SCEF-NEF Call Flow

- AF sends a MonitoringEventSubscription request to ME.

- ME forwards the request to 5GC.

- 5GC sends subscription request to UDM.

- UDM creates subscription on AMF and HSS. HSS sends information to MME.

- If the event detected is at 5G network, then AMF sends this response notification to 5GC. If the event detected is at 4G network, then MME sends this response notification to NEF through DiameterGw. Diameter is translated to HTTP internally in NEF.

- NEF sends the notification to AF.

- AF sends a success response to NEF.

- NEF sends this response directly to AMF and to MME through DiameterGw.

3.8 GMLC Based Location Monitoring

In the network deployments, there are certain scenarios when operators need highly accurate location details of subscribers that contain geographic information. Such requests cannot be processed using UDM or AMF based location monitoring. To cater to these types of requests, NEF supports Gateway Mobile Location Center (GMLC) based location monitoring along with UDM and AMF based monitoring.

NEF selects the GMLC or UDM/AMF based location service as per the service requirements, such as location QoS, whether an immediate or deferred subscriber location is requested, and the availability of GMLC, UDM, or AMF.

The GMLC Based Location Monitoring feature enables NEF to monitor the current or last known location of subscribers or User Equipment (UE) based on the requests received from AFs. The feature caters to the following types of location monitoring requests:

- Immediate Requests: One time request for location monitoring of a subscriber

- Deferred Requests: Request to monitor the subscriber location for:

- a specific number of location updates

- a specific duration

To set up GMLC based location monitoring, NEF invokes the Home GMLC, which is responsible for the privacy checking of the target subscriber. The invoked GMLC further interacts with the core NFs to provide the subscriber location details.

NEF exposes the location information as and when received from GMLC to the AF. The information is exposed based on the 3GPP specifications provided for the Northbound APIs towards the AF. For more information about the specifications, see 3GPP TS 29.522 Rel. 16.9.

Immediate Requests

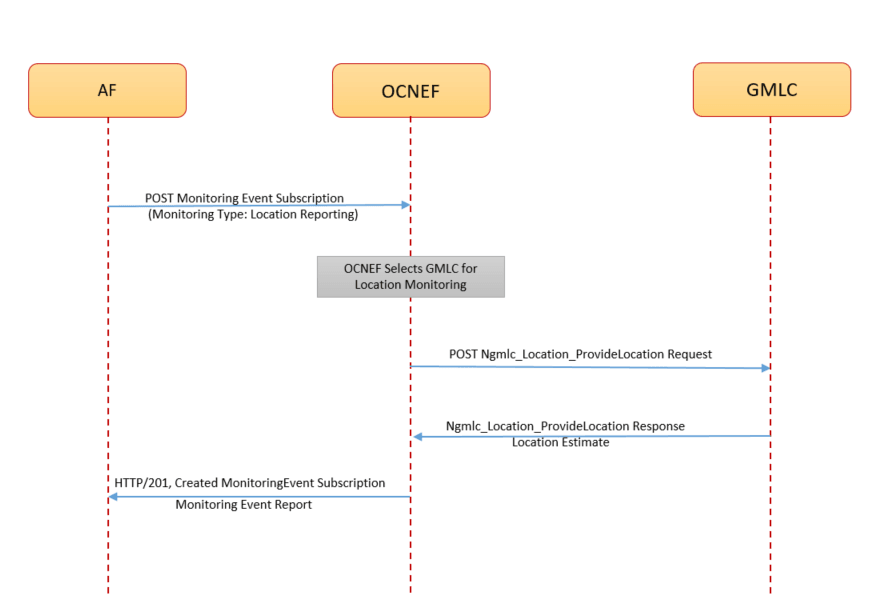

The following diagram shows a high-level call flow where NEF receives a subscription request for the immediate location of a subscriber from AF. NEF processes the request, evaluates the location service provider as GMLC, and interacts with GMLC to get the information:

Figure 3-17 Call Flow for GMLC Based Monitoring Event Subscription for Immediate Request

- AF sends a 3gpp-monitoring-event POST request to NEF to subscribe to an immediate location monitoring service.

Note:

For an immediate location monitoring request, the MaximumNumberOfReports parameter value remains 1 and ldrType must not be present. For more information about the parameters, see Oracle Communications Cloud Native Core, Network Exposure Function REST Specification Guide.

- The request routes through the external Ingress Gateway to the NEF ME service after a successful authentication process in NEF CAPIF.

- The ME service performs the following tasks:

- validates the request

- evaluates the Location Provider as GMLC

For more information about how NEF determines the location provider, see Table 3-7.

- generates a subscription

- sends the subscription details to the 5GC Agent service.

- The 5GC Agent service processes the subscription information, interacts with the Config-Server service, and performs the discovery of the GMLCs deployed within the network through NRF or SCP, based on the selected communication model.

- After successful GMLC discovery, the 5GC Agent sends the Ngmlc_Location_Provide_Location POST request to GMLC through the 5GC Egress Gateway. The request contains the UE identity for which location reporting is to be enabled.

- On successful processing, GMLC sends the HTTP/200 response to the 5GC Agent service. The response contains the subscriber location information.

- NEF translates and forwards the location information to AF without storing the data in the database.

Note:

NEF clears the database before sending the location information to the AF.

Deferred Requests

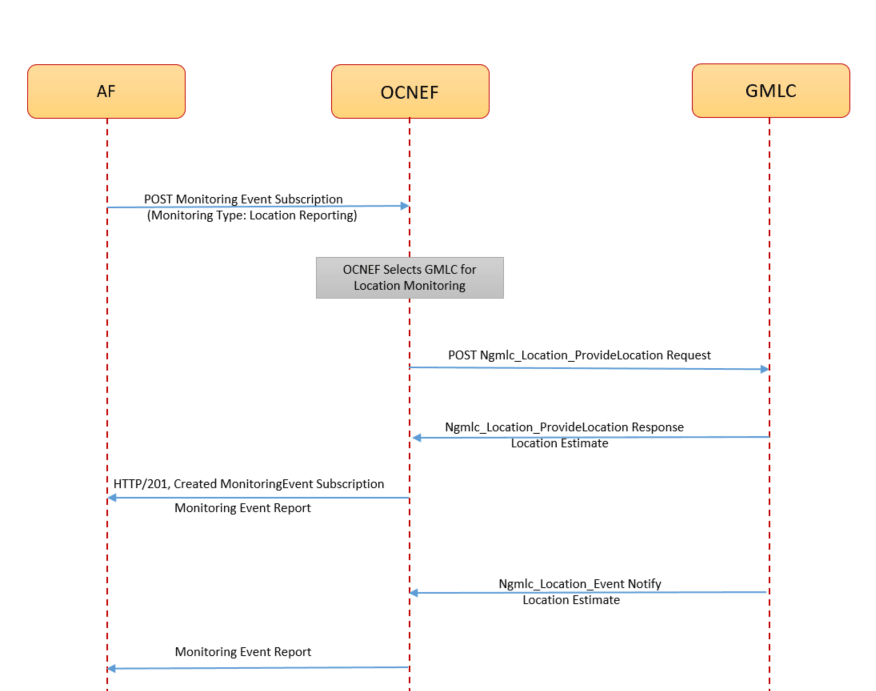

The following diagram shows a high-level call flow where NEF receives a request from AF for subscriber location information over a defined period or based on the geographic area of a subscriber. NEF processes the request, evaluates the location service provider as GMLC, and interacts with GMLC to get the information:

Figure 3-18 Call Flow for GMLC Based Monitoring Event Subscription for Deferred Request

- AF sends a 3gpp-monitoring-event POST request to NEF to subscribe to a deferred location monitoring service.

Note:

For a deferred location monitoring request, the ldrType parameter must be present. For more information about the parameters, see Oracle Communications Cloud Native Core, Network Exposure Function REST Specification Guide.

- The request routes through the external Ingress Gateway to the NEF ME service after a successful authentication process in CAPIF.

- The ME service performs the following tasks:

- validates the request

- evaluates the Location Provider as GMLC

For more information about how NEF determines the location provider, see Table 3-7.

- generates a subscription

- sends the subscription details to the 5GC Agent service.

- The 5GC Agent service processes the subscription information, interacts with the Config-Server service, and performs the discovery of the GMLCs deployed within the network through NRF or SCP, based on the selected communication model.

- After successful GMLC discovery, the 5GC Agent sends the Ngmlc_Location_Provide_Location POST request to GMLC through the 5GC Egress Gateway. The request contains the UE identity for which location reporting is to be enabled.

- On successful processing, GMLC sends the HTTP/200 response to the 5GC Agent service. The response contains the subscriber location information.

- NEF translates and forwards the location information to AF without storing the data in the database.

Note:

NEF clears the database before sending the location information to the AF.

- GMLC uses the Ngmlc_Location_EventNotify service to send further subscriber location information to NEF as per the deferred request.
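The Notes in the immediate and deferred flows determine which kind of GMLC request an AF subscription is. The following Python sketch applies them to a request body: a present ldrType means deferred; otherwise maximumNumberOfReports must be 1 for an immediate request. Treating an absent maximumNumberOfReports as 1, rejecting other combinations, and the sample ldrType value are assumptions for illustration, not documented NEF behavior.

```python
def gmlc_request_type(request: dict) -> str:
    """Classify a GMLC-bound ME subscription request as IMMEDIATE or DEFERRED."""
    if request.get("ldrType") is not None:
        return "DEFERRED"
    # Assumption: an absent maximumNumberOfReports is treated as a single report.
    if request.get("maximumNumberOfReports", 1) == 1:
        return "IMMEDIATE"
    raise ValueError("immediate requests need maximumNumberOfReports == 1 and no ldrType")


print(gmlc_request_type({"maximumNumberOfReports": 1}))                          # IMMEDIATE
print(gmlc_request_type({"ldrType": "PERIODIC", "maximumNumberOfReports": 10}))  # DEFERRED
```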

NEF selects GMLC based location monitoring as per the operator configurations and the presence of the LocQos parameter in AF location monitoring request. The following table describes the logic used by NEF to select the GMLC based monitoring over the UDM/AMF based monitoring:

Table 3-7 Evaluation of Location Provider

| Is GMLC Feature Enabled | Is LocQoS Present in the ME Subscription Request | Selected Location Monitoring Destination |
|---|---|---|
| No | Yes | UDM/AMF |
| No | No | UDM/AMF |
| Yes | Yes | GMLC |
| Yes | No | Based on the operator configuration for the destIfLocQosAbsent parameter in the custom YAML file. For more details about the configurations, see Managing GMLC Based Location Monitoring. |

On receiving the subscription request from AF, NEF verifies the configuration of the gmlcDeployed parameter in the Helm configurations. For more details about the configurations, see Managing GMLC Based Location Monitoring.

- If the value of the gmlcEnabled parameter is false, the location tracking request is sent to UDM.

- If the value of the gmlcDeployed parameter is true, NEF checks whether the subscription request contains the locQos parameter. For more information about the request parameters, see Oracle Communications Cloud Native Core, Network Exposure Function REST Specification Guide.

- If the request does not contain the locQos parameter, NEF checks the configuration of the destIfLocQosAbsent parameter in the Helm configurations:

- If the value of destIfLocQosAbsent is UDM, the subscription request is sent to UDM.

- If the value of destIfLocQosAbsent is GMLC, the subscription request is sent to GMLC.

- If the request contains the locQos parameter, NEF compares the values of the hAccuracy and vAccuracy parameters of the subscription request with the gmlchAccuracy and gmlcVaccuracy parameters configured in the Helm chart.

- If the values of the subscription request parameters are the same as or greater than the Helm configuration values, the request is forwarded to GMLC.

- If the values of the subscription request parameters are smaller than the Helm configuration values, the request is forwarded to UDM.

For more information, see Oracle Communications Cloud Native Core, Network Exposure Function REST Specification Guide.
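The selection logic in Table 3-7 and the list above can be condensed into one function. The following Python sketch is an illustration, not NEF code: "UDM" stands for UDM/AMF based monitoring, and the settings fields mirror the GMLC feature flag (gmlcEnabled), destIfLocQosAbsent, gmlchAccuracy, and gmlcVaccuracy. Two behaviors the text leaves open are assumptions: every accuracy value supplied in locQos must meet its threshold for GMLC to be chosen, and a locQos without any accuracy value falls back to UDM.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class GmlcSettings:
    enabled: bool               # GMLC feature flag (gmlcEnabled)
    dest_if_locqos_absent: str  # destIfLocQosAbsent: "UDM" or "GMLC"
    h_accuracy: float           # gmlchAccuracy
    v_accuracy: float           # gmlcVaccuracy


def select_location_provider(cfg: GmlcSettings, loc_qos: Optional[dict]) -> str:
    """Return "GMLC" or "UDM" (UDM/AMF based monitoring) for an ME subscription."""
    if not cfg.enabled:
        return "UDM"
    if loc_qos is None:
        return cfg.dest_if_locqos_absent
    checks = []
    if "hAccuracy" in loc_qos:
        checks.append(loc_qos["hAccuracy"] >= cfg.h_accuracy)
    if "vAccuracy" in loc_qos:
        checks.append(loc_qos["vAccuracy"] >= cfg.v_accuracy)
    # Assumption: all supplied values must be >= the configured thresholds.
    return "GMLC" if checks and all(checks) else "UDM"


cfg = GmlcSettings(enabled=True, dest_if_locqos_absent="GMLC", h_accuracy=50, v_accuracy=30)
print(select_location_provider(cfg, {"hAccuracy": 60, "vAccuracy": 30}))  # GMLC
print(select_location_provider(cfg, {"hAccuracy": 20}))                   # UDM
print(select_location_provider(cfg, None))                                # GMLC
```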

Managing GMLC Based Location Monitoring

The GMLC Based Location Monitoring functionality can be enabled during NEF deployment using the custom-values.yaml file.

To enable this feature, set the gmlcEnabled parameter to true under the global configurations in the custom-values.yaml file for NEF.

The gmlcEnabled parameter can have the following values:

- true: The value states that GMLC is deployed in the current deployment and GMLC based location monitoring can be considered when NEF receives a location tracking request from AF

- false: The GMLC based location monitoring is not considered when NEF receives a location tracking request from AF.

The GMLC Based Location Monitoring functionality can be configured during NEF deployment using the custom-values.yaml file. The following parameters must be updated in the custom values file for NEF:

Table 3-8 GMLC Based Location Monitoring Parameters

| Parameter | Description |
|---|---|
| monitoringevents.gmlc.destIfLocQosAbsent | Indicates the location provider when LocQos is not present in the request. For more details about the LocQos parameter, see Oracle Communications Cloud Native Core, Network Exposure Function REST Specification Guide. |
| monitoringevents.gmlc.switchToUdmOnFailure | Specifies whether the location reporting event request must be sent to UDM when NEF receives a failure from GMLC. |
| monitoringevents.gmlc.switchOnErrorCodes | Contains a list of error codes based on which NEF switches to UDM. |
| monitoringevents.gmlc.explicitCancellation | Indicates whether an explicit Cancel Location request should be sent to GMLC in the following scenarios: |
| monitoringevents.gmlc.gmlchAccuracy | Specifies the horizontal accuracy to select eLCS. |
| monitoringevents.gmlc.gmlcVaccuracy | Specifies the vertical accuracy to select eLCS. |
| fivegcagent.gmlc.baseUrl | The base URL of GMLC. |
| fivegcagent.gmlc.externalClientType | Default value sent to GMLC in the externalClientType parameter of the ProvideLocation request. |
| fivegcagent.gmlc.reportingInterval | Indicates the time interval between event reports, in seconds. |

NEF provides the metrics for observing GMLC Based Location Monitoring feature. For more information about the metrics, see NEF Metrics.

The NEF logs include the GMLC Based Location Monitoring information for the requests received or responses sent by NEF. To get this information, the log levels must be set to debug.

The log messages for the feature contain the GMLCRequestProcessing prefix.

The following table lists the logs that can be used for verifying the GMLC Based Location Monitoring functionality:

Table 3-9 Logs to Verify GMLC Based Location Monitoring Functionality

| Description | Log Message | Log Level |
|---|---|---|
| NEF identifies that the request is for GMLC | GMLCRequestProcessing: LocationProvide identified as GMLC | INFO |
| Request translated from T8 to GMLC format | GMLCRequestProcessing: Request translated to GMLC (Translated request) | INFO |
| Response received from GMLC | GMLCRequestProcessing: Response received (Response) | INFO |
| GMLC not reachable | GMLCRequestProcessing: Communication with GMLC failed, (Error details) | ERROR |
| Request to be handled by UDM | GMLCRequestProcessing: Forwarding the subscription request to UDM on GMLC failure | INFO |
| Cancel initiated by GMLC | GMLCRequestProcessing: Cancel Location from GMLC | INFO |
| Cancel Notify sent to AF | GMLCRequestProcessing: Request to cancel subscription sent to AF | INFO |
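When verifying the feature from collected logs, the GMLCRequestProcessing prefix lets you isolate the relevant lines. The following Python sketch is a hypothetical helper, not an NEF tool: it assumes the message text from Table 3-9 appears somewhere in each log line and maps matching lines back to the table's Description column. The sample log lines are invented for illustration.

```python
PREFIX = "GMLCRequestProcessing:"

# Message fragments from Table 3-9 mapped to the table's Description column.
KNOWN_MESSAGES = {
    "LocationProvide identified as GMLC": "NEF identifies that the request is for GMLC",
    "Request translated to GMLC": "Request translated from T8 to GMLC format",
    "Response received": "Response received from GMLC",
    "Communication with GMLC failed": "GMLC not reachable",
    "Forwarding the subscription request to UDM on GMLC failure": "Request to be handled by UDM",
    "Cancel Location from GMLC": "Cancel initiated by GMLC",
    "Request to cancel subscription sent to AF": "Cancel Notify sent to AF",
}


def classify(lines):
    """Yield (description, line) for each log line that matches a Table 3-9 message."""
    for line in lines:
        idx = line.find(PREFIX)
        if idx < 0:
            continue  # not a GMLC Based Location Monitoring log line
        message = line[idx + len(PREFIX):].strip()
        for fragment, description in KNOWN_MESSAGES.items():
            if message.startswith(fragment):
                yield description, line
                break


if __name__ == "__main__":
    sample = [
        "ERROR GMLCRequestProcessing: Communication with GMLC failed, connection refused",
        "INFO SomeOtherService: unrelated message",
        "INFO GMLCRequestProcessing: Cancel Location from GMLC",
    ]
    for description, line in classify(sample):
        print(f"{description}: {line}")
```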

For more information about the logs, see Oracle Communications Cloud Native Core, Network Exposure Function Troubleshooting Guide.

Enable GMLC to UDM Failover

NEF allows you to enable failover to UDM when a GMLC subscription fails for specific reasons.

- Set the value of the gmlc.switchToUdmOnFailure parameter. For more information about the configuration, see the Update GMLC Configuration section in Oracle Communications Cloud Native Core, Network Exposure Function REST Specification Guide. The applicable values are:

  - IMMEDIATE: Enables the failover for subscriptions of Immediate Location Requests.

  - DEFERRED: Enables the failover for subscriptions of Deferred Location Requests.

  - ALL: Enables the failover for subscriptions of both Immediate and Deferred Location Requests.

  - NONE: Disables the failover.

- Configure the gmlc.switchOnErrorCodes parameter to define the error codes and causes for which the failover must be performed. For more information about the configuration, see the Update GMLC Configuration section in Oracle Communications Cloud Native Core, Network Exposure Function REST Specification Guide.

- Configure the global.configurableErrorCodes parameters for the Egress Gateway with the same error code details that are provided in the gmlc.switchOnErrorCodes parameter.

The following code snippet shows an example of setting the parameters in the global configuration section to enable the failover:

```yaml
global:
  configurableErrorCodes:
    enabled: true
    errorScenarios:
      - exceptionType: "ConnectException"
        errorCode: "503"
        errorDescription: "Connection failure"
        errorCause: "Connection Refused"
        errorTitle: "ConnectException"
      - exceptionType: "UnknownHostException"
        errorCode: "503"
        errorDescription: "Connection failure"
        errorCause: "Unknown Host Exception"
        errorTitle: "UnknownHostException"
```

Note:

The values provided for the gmlc.switchOnErrorCodes parameter must also be configured in the global.configurableErrorCodes configuration of the Egress Gateway for the failover to succeed. For more information about the configurations, see Oracle Communications Cloud Native Core, Network Exposure Function Installation, Upgrade, and Fault Recovery Guide.
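The failover condition combines two settings: the request types enabled by gmlc.switchToUdmOnFailure and the error codes listed in gmlc.switchOnErrorCodes, which must also be produced by the Egress Gateway. The following Python sketch models that reasoning under stated assumptions; it is not NEF code. It treats switchOnErrorCodes as a plain list of code strings (the real parameter also carries causes, which are not modeled here), and the helper names are hypothetical.

```python
# Request types covered by each gmlc.switchToUdmOnFailure value in this section.
FAILOVER_SCOPE = {
    "IMMEDIATE": {"IMMEDIATE"},
    "DEFERRED": {"DEFERRED"},
    "ALL": {"IMMEDIATE", "DEFERRED"},
    "NONE": set(),
}


def should_fail_over(switch_to_udm: str, request_type: str, error_code: str,
                     switch_on_error_codes: list[str]) -> bool:
    """Return True if a failed GMLC subscription should be sent to UDM instead.

    request_type is "IMMEDIATE" or "DEFERRED" (the location request type).
    """
    return (request_type in FAILOVER_SCOPE[switch_to_udm]
            and error_code in switch_on_error_codes)


def missing_in_egress(switch_on_error_codes: list[str],
                      error_scenarios: list[dict]) -> list[str]:
    """Codes in switchOnErrorCodes that no Egress Gateway errorScenarios entry produces."""
    configured = {scenario["errorCode"] for scenario in error_scenarios}
    return [code for code in switch_on_error_codes if code not in configured]


if __name__ == "__main__":
    scenarios = [
        {"exceptionType": "ConnectException", "errorCode": "503"},
        {"exceptionType": "UnknownHostException", "errorCode": "503"},
    ]
    print(should_fail_over("ALL", "DEFERRED", "503", ["503"]))
    print(missing_in_egress(["503", "504"], scenarios))
```

A non-empty result from missing_in_egress corresponds to the misconfiguration described in the note: a code that NEF would fail over on, but that the Egress Gateway never reports.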

3.9 CAPIF Event Management

The CAPIF Event Management feature enables NEF to notify AFs or other services about the events occurring at CAPIF through the NEF Event Manager service. The feature:

- Allows AFs and other NEF services to subscribe to CAPIF event notifications.

- Sends notifications to the subscribers when an event occurs.

- Unsubscribes AFs or NEF services from the CAPIF event notifications.

For more information about the Event Management functionality, see "Subscription, unsubscription and notifications for the CAPIF events" in 3GPP Technical Specification 23.222, Release 16.

Supported Events

Table 3-10 Supported CAPIF Events

| Event Name | Description | Consumer | Producer |
|---|---|---|---|
| SERVICE_API_AVAILABLE | Events related to the availability of a service API after the service APIs are published. | AF | API Manager |
| SERVICE_API_UNAVAILABLE | Events related to the unavailability of a service API after the service APIs are unpublished. | AF | API Manager |
| API_INVOKER_ONBOARDED | Events related to the API invoker status when the invoker is onboarded to CAPIF. | API Exposing Function (AEF) | AF |
| API_INVOKER_OFFBOARDED | Events related to the API invoker status when the invoker is offboarded from CAPIF. | AEF | AF |
| SERVICE_API_INVOCATION_SUCCESS | Events related to the successful invocation of service APIs. | Audit Service | Security Manager |
| SERVICE_API_INVOCATION_FAILURE | Events related to the failed invocation of service APIs. | Audit Service | Security Manager |
| API_INVOKER_AUTHORIZATION_REVOKED | Events related to the revocation of the authorization of API invokers to access a service API. | Security Manager | OAM |

Note:

Currently, subscription, unsubscription, and notification are supported only for the API_INVOKER_OFFBOARDED event. For other events, only subscription and unsubscription are supported.
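Table 3-10 and the note together define which operations the Event Manager supports for each event. The following Python sketch encodes that support matrix so that a subscription request can be checked against it; the function names are hypothetical and the sketch does not represent NEF's actual validation code.

```python
# Event names from Table 3-10 (Supported CAPIF Events).
SUPPORTED_EVENTS = frozenset({
    "SERVICE_API_AVAILABLE",
    "SERVICE_API_UNAVAILABLE",
    "API_INVOKER_ONBOARDED",
    "API_INVOKER_OFFBOARDED",
    "SERVICE_API_INVOCATION_SUCCESS",
    "SERVICE_API_INVOCATION_FAILURE",
    "API_INVOKER_AUTHORIZATION_REVOKED",
})

# Per the note, notification is currently supported only for this event.
NOTIFICATION_EVENTS = frozenset({"API_INVOKER_OFFBOARDED"})


def supported_operations(event: str) -> set[str]:
    """Return the Event Manager operations supported for an event name."""
    if event not in SUPPORTED_EVENTS:
        return set()
    ops = {"subscribe", "unsubscribe"}
    if event in NOTIFICATION_EVENTS:
        ops.add("notify")
    return ops


def unsupported_events(requested: list[str]) -> list[str]:
    """Event names in a subscription request that are not listed in Table 3-10."""
    return [event for event in requested if event not in SUPPORTED_EVENTS]


if __name__ == "__main__":
    print(sorted(supported_operations("API_INVOKER_OFFBOARDED")))
    print(unsupported_events(["SERVICE_API_AVAILABLE", "UNKNOWN_EVENT"]))
```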

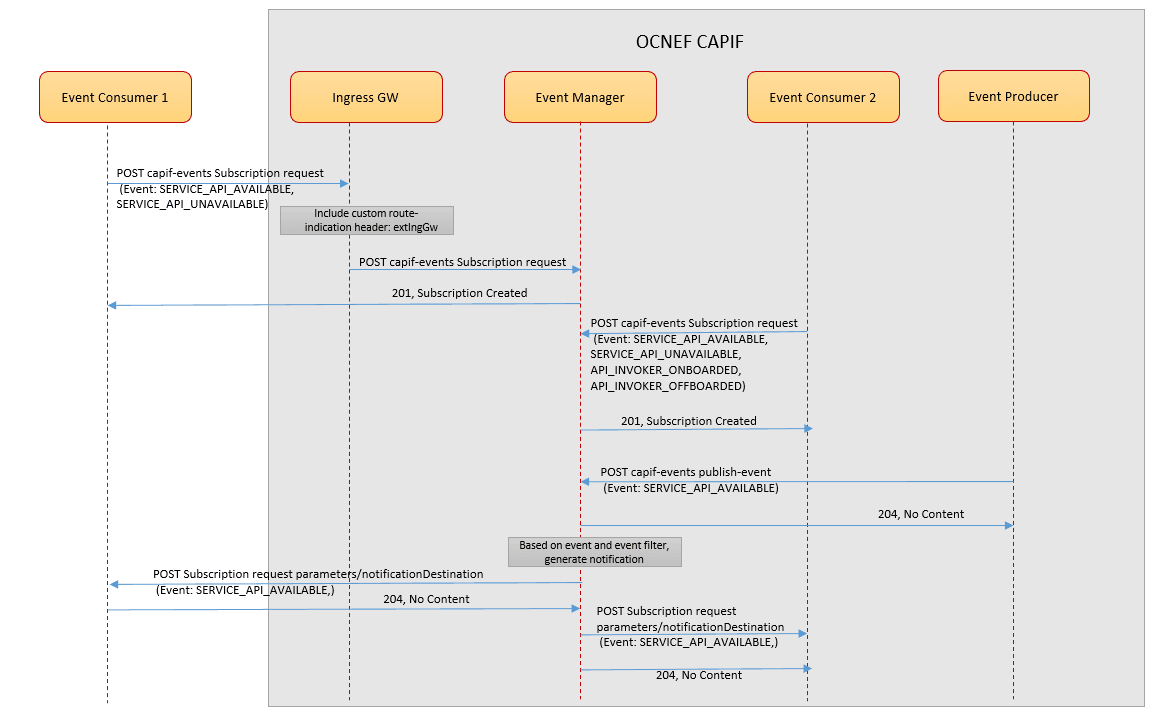

Example: Subscribing to an Event

The following diagram shows a high-level call flow where the Event Manager service manages the subscription requests coming from different event consumers and sends notifications based on the events that occur at the producer:

Figure 3-19 Call Flow for CAPIF Event Management - Subscribe

- An external event consumer (Event Consumer1) sends a POST capif-events subscription request to the Event Manager service through the external Ingress GW in the NEF deployment.

- The Event Manager service authenticates the request and creates a subscription.

- Similarly, an internal NEF service (Event Consumer2) sends a POST capif-events subscription request to the Event Manager and creates an event notification subscription.

- The Event Manager receives a POST capif-events publish request from the Event Producer.

- The Event Manager processes the request and, based on the parameter values received in the subscription requests, sends notifications for the requested event to both Event Consumer1 and Event Consumer2.
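The subscribe and publish steps above amount to a fan-out: every subscription registered for an event receives a notification when the producer publishes that event. The following in-memory Python sketch illustrates only that fan-out. It is not the Event Manager implementation: callbacks stand in for HTTP notifications to the consumers, authentication is omitted, and the subscription ID format and the event detail payload are hypothetical.

```python
import itertools
from collections import defaultdict
from typing import Callable

# A consumer's notification endpoint, modeled as a callback (event, detail).
Notify = Callable[[str, dict], None]


class EventManager:
    """In-memory model of the subscribe, publish, and notify steps."""

    def __init__(self) -> None:
        self._ids = itertools.count(1)
        # event name -> {subscription id: callback}, in subscription order
        self._subs: dict[str, dict[str, Notify]] = defaultdict(dict)

    def subscribe(self, events: list[str], callback: Notify) -> str:
        """Register one subscription for one or more events and return its ID."""
        sub_id = f"sub-{next(self._ids)}"
        for event in events:
            self._subs[event][sub_id] = callback
        return sub_id

    def publish(self, event: str, detail: dict) -> int:
        """Notify every subscription registered for event; return the count notified."""
        targets = list(self._subs.get(event, {}).values())
        for callback in targets:
            callback(event, detail)
        return len(targets)


if __name__ == "__main__":
    received = []
    manager = EventManager()
    manager.subscribe(["API_INVOKER_OFFBOARDED"], lambda e, d: received.append(("Consumer1", e)))
    manager.subscribe(["API_INVOKER_OFFBOARDED"], lambda e, d: received.append(("Consumer2", e)))
    manager.publish("API_INVOKER_OFFBOARDED", {"invoker": "placeholder-id"})
    print(received)
```

Keying subscriptions by event name keeps publish proportional to the number of interested consumers rather than to all subscriptions.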

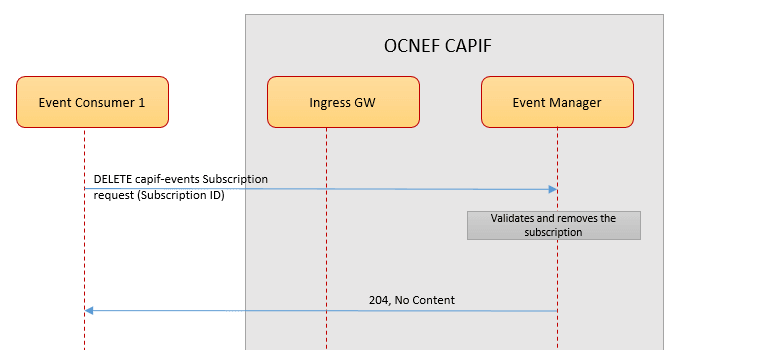

Example: Unsubscribing from an Event

The following diagram shows a high-level call flow where the Event Manager service manages the delete subscription request coming from an event consumer and deletes the subscription:

Figure 3-20 Call Flow for CAPIF Event Management - Unsubscribe

- A subscriber (Event Consumer1) sends a DELETE capif-events subscription request to the Event Manager service through the external Ingress GW in the NEF deployment.

- The Event Manager service processes the request and validates the subscriber identity.

- After successful validation, the Event Manager deletes the subscription from the NEF database.
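The key step in the unsubscribe flow is that only the subscriber that created a subscription may delete it. The following Python sketch models that ownership check with an in-memory store standing in for the NEF database. The class name is hypothetical, and the HTTP-style status codes it returns (204, 403, 404) are assumptions for illustration, not documented NEF responses.

```python
class SubscriptionStore:
    """Hypothetical stand-in for the NEF database: subscription ID -> subscriber ID."""

    def __init__(self) -> None:
        self._owners: dict[str, str] = {}

    def add(self, sub_id: str, subscriber_id: str) -> None:
        """Record which subscriber created the subscription."""
        self._owners[sub_id] = subscriber_id

    def delete(self, sub_id: str, requester_id: str) -> int:
        """Handle a DELETE request and return an assumed HTTP-style status code."""
        owner = self._owners.get(sub_id)
        if owner is None:
            return 404  # unknown subscription
        if owner != requester_id:
            return 403  # subscriber identity does not match the creator
        del self._owners[sub_id]
        return 204  # subscription deleted


if __name__ == "__main__":
    store = SubscriptionStore()
    store.add("sub-1", "consumer-1")
    print(store.delete("sub-1", "consumer-2"), store.delete("sub-1", "consumer-1"))
```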

For more information, see Oracle Communications Cloud Native Core, Network Exposure Function REST Specification Guide.

Managing CAPIF Event Management

CAPIF Event Management is a core functionality of NEF and is enabled by default.

NEF provides metrics for observing the CAPIF Event Management feature. For more information about the metrics, see "Event Manager Metrics" in CAPIF Metrics.

3.10 Support for AF Session with QoS

In network deployments, operators need to offer services of a certain quality. In some scenarios, operators need to provide different Quality of Service (QoS) levels to different types of subscribers or UEs.

NEF enables operators to manage QoS using a set of parameters related to traffic performance on the network. It also provides the capability to set up different QoS standards for different UE sessions based on the service requirements and other specifications. To provide this functionality, the NEF QoS service communicates with the Policy Control Function (PCF) to set up, modify, and revoke an AF session with the required QoS.

The AF Session with QoS feature allows an AF to request a data session for a UE with a specific QoS.

The AF sends a request to NEF to provide QoS for the AF session using a QoS reference parameter, which refers to the predefined QoS information. NEF authorizes the request and communicates with the PCF. When the PCF authorizes the service information from the AF and generates a PCC rule, it derives the QoS parameters of the PCC rule based on the service information and the indicated QoS reference parameter. The AF may change the QoS by providing a different QoS reference parameter for an ongoing session. If this happens, the PCF updates the related QoS parameter sets in the PCC rule accordingly.
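The paragraph above describes QoS selection by reference: the AF names a predefined QoS reference, the PCF derives the PCC rule's QoS parameters from it, and a new reference on an ongoing session replaces those parameters. The following Python sketch illustrates only that lookup-and-replace idea. The reference names, parameter keys, and values are hypothetical placeholders, not PCF or NEF configuration.

```python
# Hypothetical predefined QoS information keyed by QoS reference.
QOS_REFERENCES = {
    "qos-ref-gold": {"5qi": 2, "maxBitRateDl": "10 Mbps"},
    "qos-ref-silver": {"5qi": 9, "maxBitRateDl": "256 Kbps"},
}


class PccRule:
    """Minimal model of a PCC rule whose QoS parameters come from a QoS reference."""

    def __init__(self, flow: str, qos_reference: str) -> None:
        self.flow = flow
        self.qos_reference = ""
        self.qos_params: dict = {}
        self.apply_reference(qos_reference)

    def apply_reference(self, qos_reference: str) -> None:
        """Derive QoS parameters from the predefined set; reject unknown references."""
        if qos_reference not in QOS_REFERENCES:
            raise ValueError(f"unknown QoS reference: {qos_reference}")
        self.qos_reference = qos_reference
        self.qos_params = dict(QOS_REFERENCES[qos_reference])  # copy, not alias


if __name__ == "__main__":
    rule = PccRule("af-flow-1", "qos-ref-silver")
    rule.apply_reference("qos-ref-gold")  # AF changes QoS for the ongoing session
    print(rule.qos_reference, rule.qos_params)
```

Validating the reference before assigning anything means a rejected change leaves the existing QoS parameters in place.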

The AF Session with QoS Service functionality enables NEF to perform the following operations:

- Set up an AF session with the required QoS

- Get the QoS session details for an AF

- Update the QoS subscription for an AF

- Delete an AF session with QoS

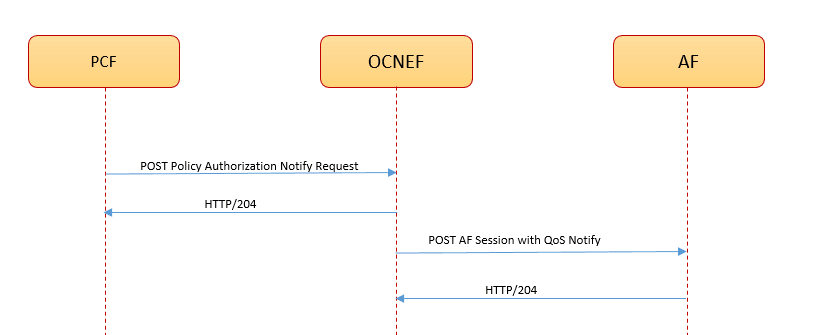

- Receive the QoS notifications, such as QoS Monitoring or Usage reports from the PCF and forward it to the subscribed AF

To set up AF sessions with QoS, NEF invokes the PCF that controls the privacy checking of the target subscriber. The invoked PCF authorizes the subscription or notification request, performs the required operation, and sends a response to NEF. NEF exposes the information to the AF as and when it is received from the PCF.

Create Subscription Call Flow

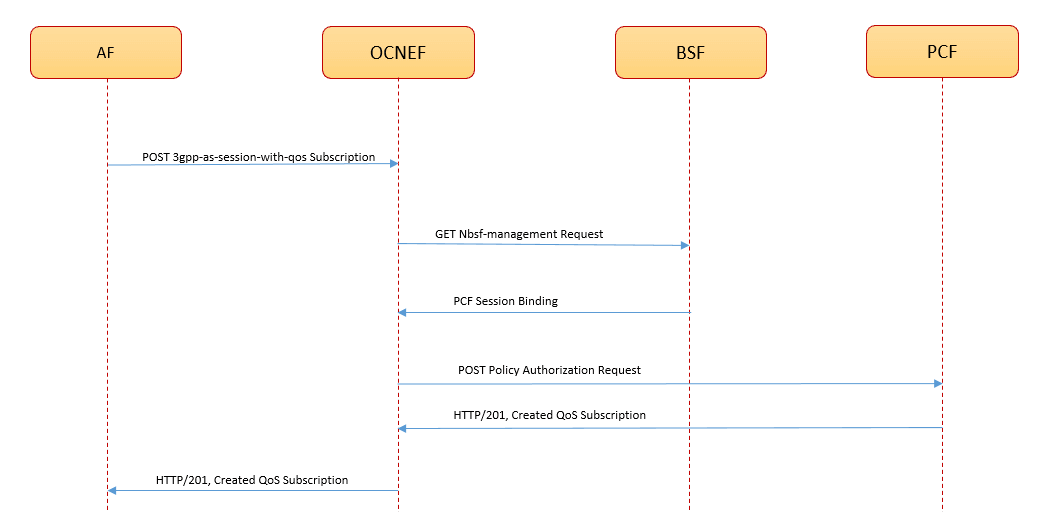

The following diagram is a high-level call flow where NEF receives a subscription request for a QoS session from an AF and interacts with a PCF to get the relevant information:

Figure 3-21 Call Flow for AF Session with QoS Subscription

- To set up a session with the required QoS, the AF sends a 3gpp-as-session-with-qos POST request to NEF.

- The request is routed through the external Ingress Gateway to the NEF QoS service after successful authentication at CAPIF.

- The QoS service performs the following tasks:

  - Retrieves the PCF session binding information for the requested UE. The QoS service sends the nbsf-management GET request to the BSF and receives the SUPI, GPSI, PCF FQDN, and binding level information in response.

  - Sends the npcf-policyauthorization POST request to the PCF to get the AF request authorized and to create policies as requested for the associated PDU session.

  Note:

  If BSF is not enabled, the QoS service sends the npcf-policyauthorization POST request to the PCF specified by the pcfBaseUrl parameter value configured in the Helm configurations. For more information about the configurations, see Managing AF Session with QoS.

- On successful processing, the PCF creates an individual session resource with the QoS monitoring subscription and sends the HTTP/201 Created response to the 5GC Agent service.

- NEF forwards the HTTP/201 Created response to the AF.
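The choice of PCF in this flow depends on whether BSF is enabled: with BSF, the PCF comes from the session binding returned by nbsf-management; without it, the configured pcfBaseUrl is used. The following Python sketch captures that selection under stated assumptions. The binding is modeled as a dictionary with a pcfFqdn key, the http:// scheme is assumed, and raising LookupError when no binding is found is an illustrative choice, since the documented flow does not describe that case.

```python
from typing import Optional


def select_pcf_url(bsf_enabled: bool, pcf_base_url: str,
                   binding: Optional[dict] = None) -> str:
    """Pick the PCF that receives the npcf-policyauthorization POST request.

    binding models the nbsf-management GET response; only the assumed
    'pcfFqdn' key is read.
    """
    if not bsf_enabled:
        return pcf_base_url  # BSF disabled: use the Helm-configured pcfBaseUrl
    if binding is None or not binding.get("pcfFqdn"):
        raise LookupError("no PCF session binding found for the UE")
    return f"http://{binding['pcfFqdn']}"  # scheme is an assumption


if __name__ == "__main__":
    print(select_pcf_url(False, "http://pcf.example.com:8080"))
    print(select_pcf_url(True, "unused", {"pcfFqdn": "pcf1.example.com"}))
```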

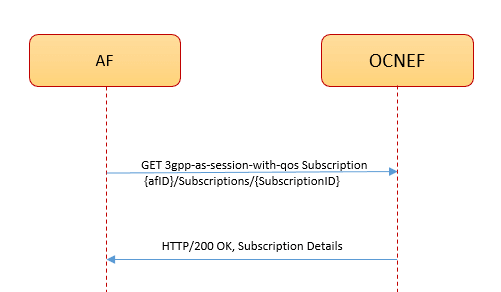

Get Subscription Call Flow

The following diagram is a high-level call flow where NEF receives a GET subscription request for a QoS session from an AF and processes the request:

Figure 3-22 Call Flow for GET AF Session with QoS Subscription

- To retrieve the details of a QoS subscription, the AF sends a 3gpp-as-session-with-qos GET request to NEF.

- The request is routed through the external Ingress Gateway to the NEF QoS service after successful authentication at CAPIF.

- The QoS service retrieves the application session resource from the NEF database and sends the HTTP/200 OK response to the AF.

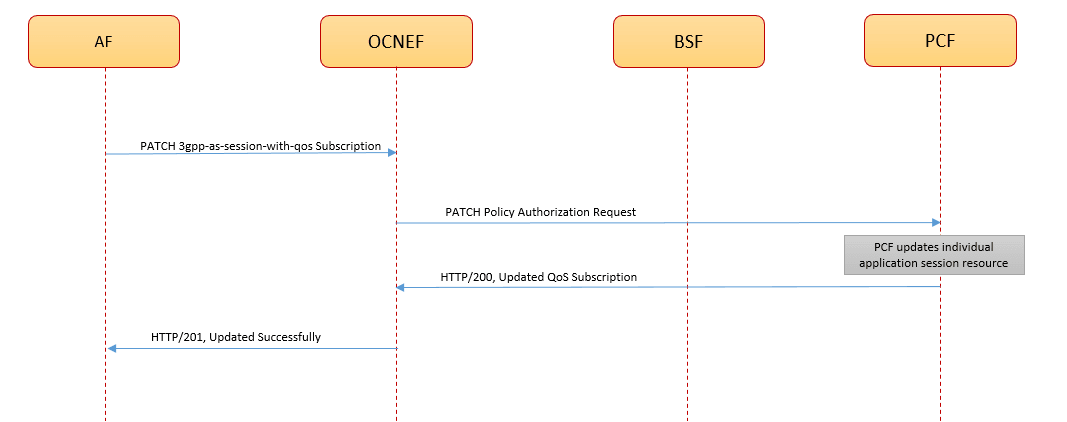

Update Subscription Call Flow

The following diagram is a high-level call flow where NEF receives a PUT or PATCH request for modifying a QoS subscription resource from AF and processes the request:

Figure 3-23 Call Flow for Update AF Session with QoS Subscription

- To modify a QoS subscription resource, AF sends a

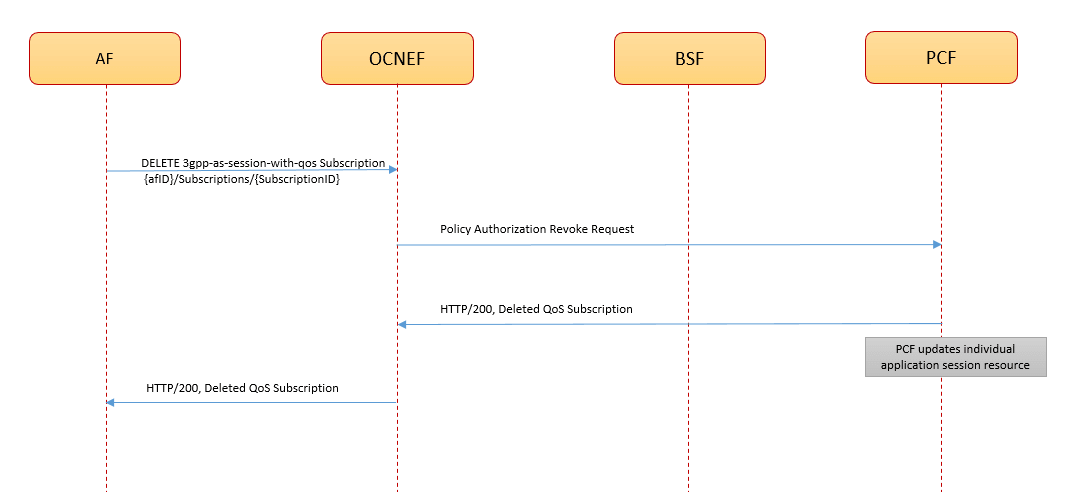

3gpp-as-session-with-qosPATCH request to NEF.Note: