2 ATS Framework Features

This chapter describes ATS Framework features.

The following table lists ATS framework features supported by different NFs:

Table 2-1 ATS Framework Features Compliance Matrix

| Features | BSF | NRF | NSSF | Policy | SCP | SEPP | UDR |

|---|---|---|---|---|---|---|---|

| ATS API | Yes | Yes | No | Yes | No | Yes | Partially compliant (when starting jobs, only the execution of all test cases is supported) |

| ATS Custom Abort | Yes | Yes | Yes | Yes | Yes | Yes | Yes |

| ATS Feature Activation and Deactivation | Yes | No | No | Yes | No | Yes | Yes |

| ATS GUI Enhancements | Yes | Yes | Yes | Yes | Yes | Yes | Yes |

| ATS Health Check | Yes | No | No | Yes | Yes | Yes | Yes |

| ATS Jenkins Job Queue | Yes | No | No | Yes | Yes | Yes | Yes |

| Application Log Collection | Yes | No | No | Yes | Yes | Yes | Yes |

| ATS Maintenance Scripts | Yes | Yes | Yes | Yes | Yes | Yes | Yes |

| ATS System Name and Version Display on Jenkins GUI | Yes | Yes | Yes | Yes | Yes | Yes | Yes |

| ATS Tagging Support | No | No | No | Yes | Yes | Yes | No |

| Custom Folder Implementation | Yes | Yes | Yes | Yes | Yes | Yes | Yes |

| Health Check | Yes | No | Yes | Yes | No | Yes | Yes |

| Individual Stage Group Selection | Yes | No | No | Yes | Yes | Yes | No |

| Lightweight Performance | No | No | No | No | No | No | Yes |

| Managing Final Summary Report, Build Color, and Application Log | Yes | Partially compliant (Application Log is not supported.) | Yes | Yes | Yes | Yes | Yes |

| Modifying Login Password | Yes | Yes | Yes | Yes | Yes | Yes | Yes |

| Multiselection Capability for Features and Scenarios | Yes | Partially compliant (Feature level selection is supported) | No | Yes | Partially compliant (Feature level selection is supported) | Partially compliant (Feature level selection is supported) | Partially compliant (Feature level selection is supported) |

| Parallel Test Execution | Partially compliant (Parallel Test Execution Framework integrated, but only supports sequential execution) | No | Partially compliant (Parallel Test Execution Framework integrated, but only supports sequential execution) | Yes | Yes | Partially compliant (Parallel Test Execution Framework integrated, but ATS test cases need to be organized to utilize the parallel execution) | Yes (UDR, SLF, and EIR) |

| Parameterization | Yes | Partially compliant (Supports only new features) | No | Yes | Yes | Yes | Yes |

| PCAP Log Collection | No | No | No | No | Yes | Yes | Yes |

| Persistent Volume | Yes | Yes | Yes | Yes | Yes | Yes | Yes |

| Single Click Job Creation | Yes | Yes | Yes | Yes | Yes | Yes | Yes |

| Support for ATS Deployment in OCI | No | No | No | No | Yes | Yes | No |

| Support for Transport Layer Security | No | No | No | No | Yes | Yes | No |

| Test Result Analyzer | Yes | Yes | Yes | Yes | Yes | Yes | Yes |

| Test Case Mapping and Count | Yes | No | No | Yes | Yes | Yes | Yes |

2.1 ATS API

The ATS API supports the following routine tasks:

- Start: To initiate one of the three test suites: Regression, New Features, or Performance.

- Monitor: To obtain the progress of a test suite's execution.

- Stop: To cancel an active test suite.

- Get Artifacts: To retrieve the JUNIT format XML test result files for a completed test suite.

For more information about configuring the tasks, see Use the RESTful Interfaces.

2.1.1 Generating an API Token for a User

An API token must be generated for a user before that user can perform routine ATS tasks using the RESTful Interfaces API. Every API call requires an API token for authentication. A generated token remains valid until it is revoked or deleted.

Perform the following procedure to generate an API token for a user:

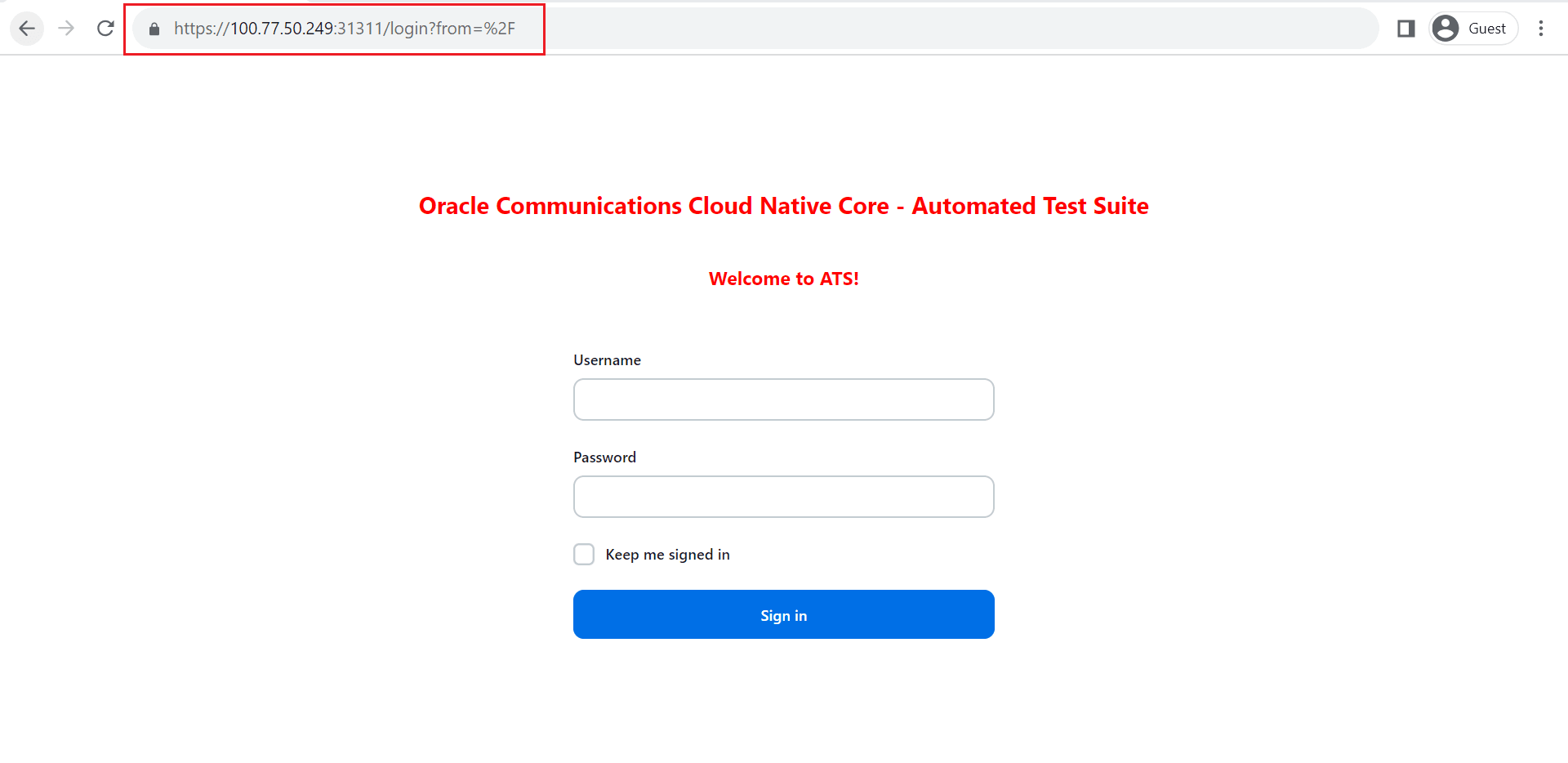

- Log in to Jenkins as an NF API user to generate an API token.

Figure 2-1 ATS Login Page

- Click the user name in the upper right corner of the GUI, and then click Security.

Figure 2-2 Add Token

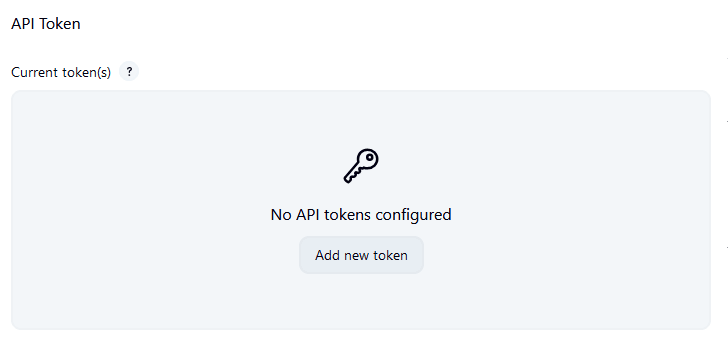

- In the API Token section, click Add new token.

Figure 2-3 Add New Token

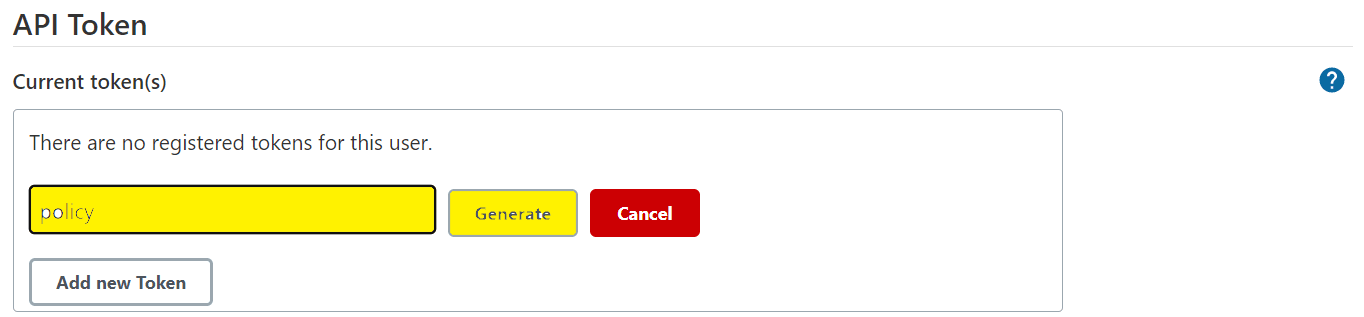

- Enter a suitable name for the token, such as policy, and then click Generate.

Figure 2-4 Generate Token

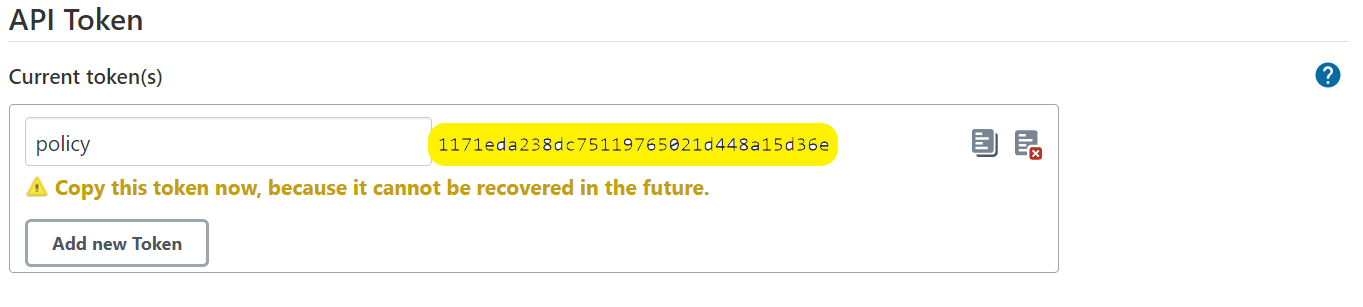

- Copy and save the generated token.

You cannot retrieve the token after closing the prompt.

Figure 2-5 Save Generated Token

- Click Save.

An API token is generated and can be used for starting, monitoring, and stopping a job using the REST API.
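The curl examples in this chapter pass the token with `--user <username>:<API_token>`, which curl sends as HTTP Basic authentication. The following minimal sketch shows the equivalent header being built in Python. The function names and the host `ats.example.com` are hypothetical and used only for illustration; the request is constructed but never sent.

```python
import base64
import urllib.request


def basic_auth_header(username: str, api_token: str) -> str:
    """Return the Basic Authorization header value for username:api_token."""
    credentials = f"{username}:{api_token}".encode("utf-8")
    return "Basic " + base64.b64encode(credentials).decode("ascii")


def build_start_request(host_port: str, pipeline: str,
                        username: str, api_token: str) -> urllib.request.Request:
    """Build (without sending) a POST to /job/<pipeline>/buildWithParameters."""
    url = f"{host_port}/job/{pipeline}/buildWithParameters"
    request = urllib.request.Request(url, data=b"", method="POST")
    request.add_header("Authorization", basic_auth_header(username, api_token))
    return request


# Hypothetical host and placeholder token, for illustration only.
request = build_start_request("http://ats.example.com:30427",
                              "Policy-NewFeatures", "policyapiuser", "<API_token>")
print(request.get_method(), request.full_url)
```

Keeping the token out of source files, for example by reading it from an environment variable, is a reasonable precaution because the token cannot be retrieved again after the prompt is closed.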

2.1.2 Use the RESTful Interfaces

This section provides an overview of each RESTful interface.

2.1.2.1 Configuring Host

In a non-OCI setup, the host used to access the ATS GUI remains the same for the ATS API.

For OCI Setup

The following two ways are supported to access the ATS API in OCI:

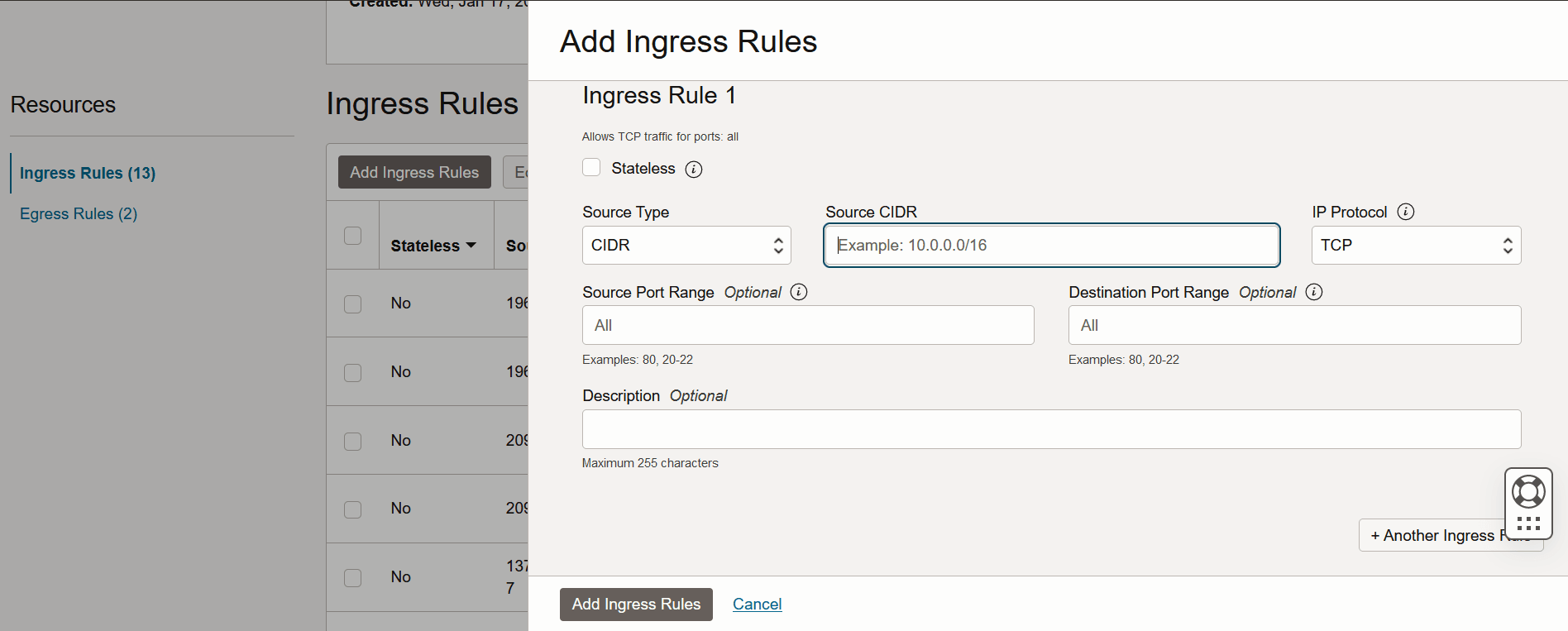

- Add proper Ingress/Egress security rules for ATS API port (5001) and ATS service nodeport corresponding to ATS API port in loadbalancer (nf_lb_subnet) and node subnet (nf_node_subnet). To add ingress and egress security rules, see the Adding Ingress and Egress Rules to Access the OCI Console section.

- If this step was not already performed to access the GUI, insert the following annotations under the Metadata section to assign an external IP (Loadbalancer IP):

oci-network-load-balancer.oraclecloud.com/security-list-management-mode: None

oci.oraclecloud.com/load-balancer-type: nlb

- Edit and save the ATS service after ATS deployment. For example,

kubectl edit svc ats-service-name -n ats-namespace

- Access the GUI using the URL:

<http/https>://<Loadbalancer IP>:5001

Note:

The assignment of Loadbalancer IP to the ATS service is subject to availability. If the Loadbalancer IP is not assigned to the ATS service even after applying the required annotations, try to debug on the OCI side.

- Add an ingress security rule for the node subnet (nf_node_subnet) to allow TCP traffic on all ports from the operator subnet. To add ingress and egress security rules, see the Adding Ingress and Egress Rules to Access the OCI Console section.

- Run the following ssh tunneling command from a bash terminal on your local PC:

ssh -f -N -i <operator instance private key> -o StrictHostKeyChecking=no -o ProxyCommand="ssh -i <bastion private key> -o StrictHostKeyChecking=no -W %h:%p <bastion username>@<bastion IP>" <operator instance username>@<operator instance IP> -L <desired system port>:<Worker Node IP>:<ATS API NodePort> -o ServerAliveInterval=60 -o ServerAliveCountMax=300

For example,

ssh -f -N -i id_rsa -o StrictHostKeyChecking=no -o ProxyCommand="ssh -i id_rsa -o StrictHostKeyChecking=no -W %h:%p opc@129.287.66.123" opc@10.1.76.7 -L 5009:10.9.60.118:32018 -o ServerAliveInterval=60 -o ServerAliveCountMax=300

Here, the ATS GUI URL is http://localhost:5009

TROUBLESHOOTING

If the ATS API returns an error stating "Network is unreachable," ensure that there is a proper ingress security rule in the loadbalancer subnet (nf_lb_subnet) allowing traffic from the system where the ATS API is being utilized.

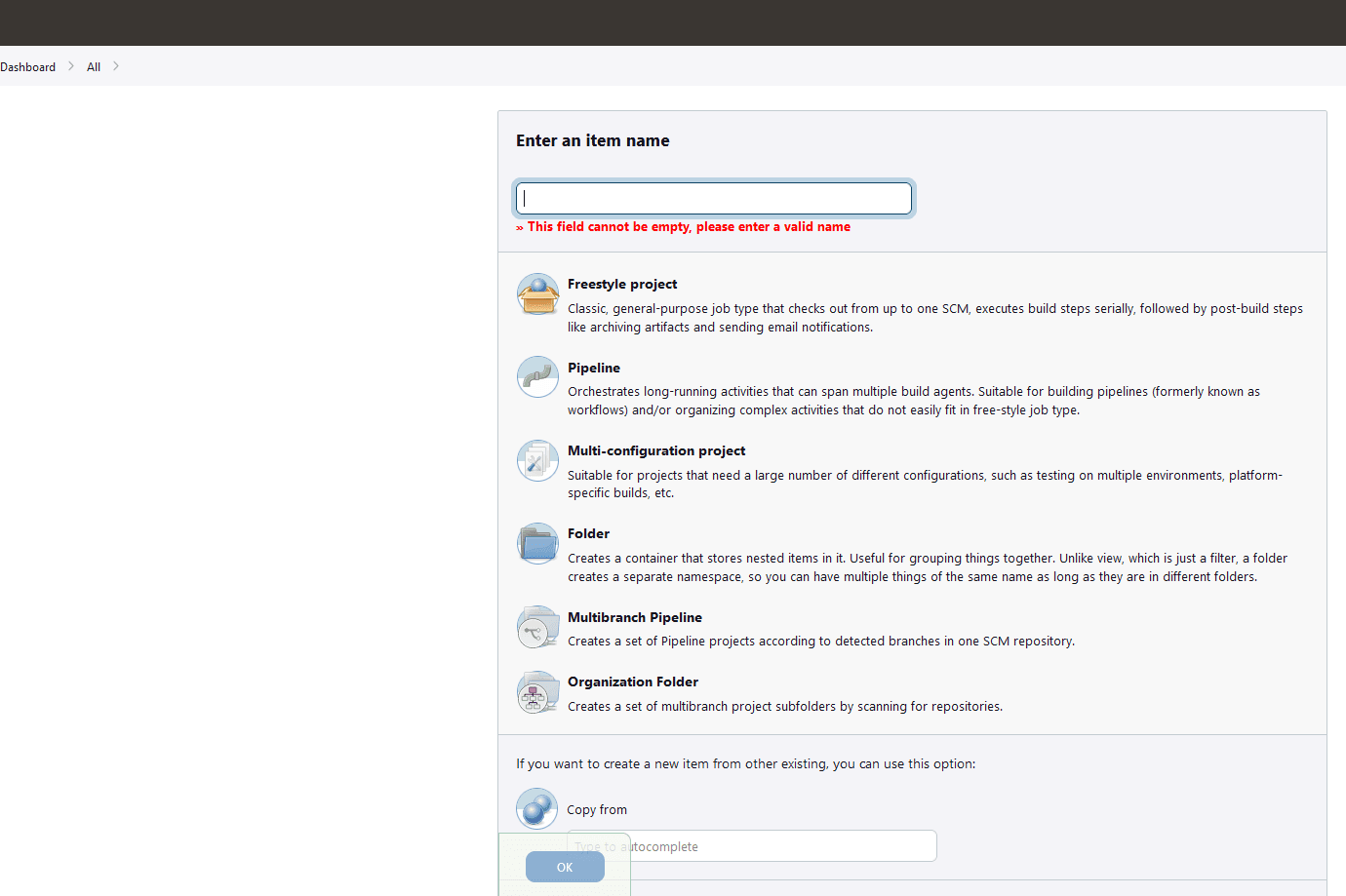

2.1.2.2 Starting Jobs

A job can be started using either of the following APIs:

- Default Jenkins API: The default Jenkins API to start a pipeline job

- Custom API: To start a job forcibly

Run the following command to start a job (default Jenkins method):

curl --request POST <Jenkins_host_port>/job/<Pipeline_name>/buildWithParameters --user <username>:<API_token> --verbose

When the atsGuiTLSEnabled parameter is set to true:

curl --request POST <Jenkins_host_port>/job/<Pipeline_name>/buildWithParameters --user <username>:<API_token> --verbose --cacert <path_to_root_certificate>

For example,

curl --request POST http://10.123.154.163:30427/job/Policy-NewFeatures/buildWithParameters --user policyapiuser:111ad02d7471cec9ca689696e9c7a55c62 --verbose

When the atsGuiTLSEnabled parameter is set to true:

curl --request POST https://10.75.217.25:30301/job/SCP-Regression/buildWithParameters --user scpapiuser:11c2fde49cea6eb8f332ad23a7877ea2de --verbose --cacert caroot.cer

Starting a Job Forcibly using Custom API

If another job is already running and has not been started by an API user, the running job is aborted, along with all other jobs in the queue that have not been started by an API user, and a new job is started.

If the running job is started by the API user, the new job does not start, and the start job request fails, returning a message in response: Build <job_id> of pipeline <pipeline_name> is already running, triggered by an API user.

The forceful API aborts builds gracefully: a running scenario completes its execution and cleanup before the corresponding build is aborted.

The forceful API returns an aborted-builds parameter in the response, which contains the job IDs of all the aborted builds. It also returns a parameter called cancelled_builds_in_queue, which contains the queue IDs of all the builds that were aborted while in the queue.

If a job ID is assigned to a build in queue, it contains a list of two values: [queueid, jobid] rather than just the queue ID.
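Because an entry in cancelled_builds_in_queue can be either a bare queue ID or a [queueid, jobid] pair, client code may want to normalize both forms. The following is a minimal sketch under stated assumptions: the sample response body is hypothetical, and only the two parameter names are taken from the description above.

```python
import json
from typing import List, Optional, Tuple


def normalize_cancelled(entries: list) -> List[Tuple[int, Optional[int]]]:
    """Turn each entry into (queue_id, job_id); job_id is None when absent."""
    normalized = []
    for entry in entries:
        if isinstance(entry, list):
            # A queued build that already had a job ID: [queueid, jobid]
            queue_id, job_id = entry
            normalized.append((queue_id, job_id))
        else:
            # A queued build identified only by its queue ID
            normalized.append((entry, None))
    return normalized


# Hypothetical response body, assumed for illustration only.
sample = json.loads(
    '{"aborted-builds": [12], "cancelled_builds_in_queue": [31, [32, 13]]}'
)
print(sample["aborted-builds"])
print(normalize_cancelled(sample["cancelled_builds_in_queue"]))
```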

Run the following command to start a job forcibly:

curl -s --request POST <Startjob_host_port>/build -H "Content-Type: application/json" -d '{"pipelineName": "<Pipeline_name>", "pageAndQuery": "<pageAndQuery>"}' --user <username>:<token> --verbose

When the atsGuiTLSEnabled parameter is set to true:

curl -s --request POST <Startjob_host_port>/build -H "Content-Type: application/json" -d '{"pipelineName": "<Pipeline_name>", "pageAndQuery": "<pageAndQuery>"}' --user <username>:<token> --verbose --cacert <path_to_root_certificate>

For example,

curl --request POST http://10.75.217.25:31170/build -H "Content-Type: application/json" -d '{"pipelineName": "Policy-Regression", "pageAndQuery": "buildWithParameters"}' --user policyapiuser:11c1a628f808972c846c510151afa13ba2 --verbose

When the atsGuiTLSEnabled parameter is set to true:

curl --request POST https://10.75.217.25:30170/build -H "Content-Type: application/json" -d '{"pipelineName": "Policy-Regression", "pageAndQuery": "buildWithParameters"}' --user policyapiuser:11c1a628f808972c846c510151afa13ba2 --verbose --cacert caroot.cer

Table 2-2 API Parameters

| Parameters | Mandatory | Default Value | Description |
|---|---|---|---|
| username | YES | NA | This parameter indicates the name of the API user. |
| token | YES | NA | This parameter indicates the API token for the API user. |
| Startjob_host_port | YES | NA | This parameter's format is <host>:<port>. |
| pipelineName | YES | NA | This parameter indicates the name of the pipeline for which the build is to be triggered. |
| pageAndQuery | YES | NA | This parameter can have two values: buildWithParameters (for parameterized pipeline jobs) or build (for non-parameterized pipeline jobs). |
| jenkins_wait_time | NO | 5 | This parameter indicates the wait time for Jenkins in seconds. |

Customizing Job Parameters

To pass a custom job parameter, such as paramx=valuex, perform the following steps:

- Append paramx=valuex to buildWithParameters?.

Example 1,

curl --request POST 10.75.217.40:31378/job/Policy-NewFeatures/buildWithParameters?paramx=valuex --user policyapiuser:110ed65222b9e63445689314998ff8c3bk --verbose

Example 2,

curl --request POST 10.75.217.4:32476/build -H "Content-Type: application/json" -d '{ "pipelineName": "Policy-NewFeatures", "pageAndQuery": "buildWithParameters?paramx=valuex" }' --user <username>:<token> --verbose

- To add more than one parameter, such as paramx=valuex and paramy=valuey, append the other parameters to the API call using &. Enclose the URL in quotes so that the shell does not interpret the & character.

Example 1,

curl --request POST "10.75.217.40:31378/job/Policy-NewFeatures/buildWithParameters?paramx=valuex&paramy=valuey" --user policyapiuser:110ed65222b9e63445689314998ff8c3bk --verbose

Example 2,

curl --request POST 10.75.217.4:32476/build -H "Content-Type: application/json" -d '{"pipelineName": "Policy-NewFeatures", "pageAndQuery": "buildWithParameters?paramx=valuex&paramy=valuey" }' --user <username>:<token> --verbose

- Replace buildWithParameters? with build for non-parametrized pipeline jobs.

- Start the pipeline by using the default Jenkins API or by changing the pageAndQuery parameter's value to build in the following way:

curl --request POST <Jenkins_host_port>/job/<Pipeline_name>/build --user <username>:<API_token> --verbose

Example 1,

curl --request POST 10.75.217.40:31378/job/Policy-NewFeatures/build --user policyapiuser:110ed65222b9e63445689314998ff8c3bk --verbose

Example 2,

curl --request POST 10.75.217.4:32476/build -H "Content-Type: application/json" -d '{ "pipelineName": "Policy-NewFeatures", "pageAndQuery": "build" }' --user <username>:<token> --verbose
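The rules for composing the pageAndQuery value can be sketched as a small helper. This is an illustrative sketch, not part of ATS: the function name `build_page_and_query` and its arguments are hypothetical, and `urllib.parse.urlencode` is used to join parameters with & as described above.

```python
from typing import Mapping, Optional
from urllib.parse import urlencode


def build_page_and_query(parameters: Optional[Mapping[str, str]] = None,
                         parameterized: bool = True) -> str:
    """Compose the pageAndQuery value for a pipeline job."""
    if not parameterized:
        if parameters:
            raise ValueError("non-parameterized jobs do not accept parameters")
        # Non-parameterized pipeline jobs use "build"
        return "build"
    if not parameters:
        return "buildWithParameters"
    # Parameters are appended after "?" and joined with "&"
    return "buildWithParameters?" + urlencode(parameters)


print(build_page_and_query({"paramx": "valuex", "paramy": "valuey"}))
print(build_page_and_query(parameterized=False))
```

Building the query string in code avoids the shell quoting problem with & altogether, because the value travels inside the JSON payload or a single URL argument.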

Run the following command to start a job with the otherBuildParameters key:

curl --request POST <IP>:<PORT>/build -H "Content-Type: application/json" -d '{"pipelineName": "<NF PIPELINE>", "pageAndQuery": "buildWithParameters", "otherBuildParameters": { }}' --user <NF>apiuser:<token> --verbose

When the atsGuiTLSEnabled parameter is set to true:

curl --request POST <IP>:<PORT>/build \
-H "Content-Type: application/json" \
-d '{
"pipelineName": "<NF PIPELINE>",
"pageAndQuery": "buildWithParameters",
"otherBuildParameters": {
}
}' \
--user <NF>apiuser:<Token> \
--verbose \
--cacert <path_to_root_certificate>

Note:

The API continues to support the same functionality as in the previous release. In addition, to provide extended support, a new key "otherBuildParameters" has been introduced. This key can be included in the JSON payload sent to the server.

Table 2-3 otherBuildParameters Details

| Parameter | Mandatory/Optional | Default Value | Description |
|---|---|---|---|
| otherBuildParameters | Optional | NA | "otherBuildParameters" is a dictionary of build parameters. You can add key-value pairs to customize the build process. For example: "otherBuildParameters" : { "Features" : "YamlSchema_Import_Export,Custom_Jsons", "Stages" : "stage2,stage3", "Groups" : { "stage1" : "group1,group4", "stage4" : "group4,group5" } }. The keys used in the examples in this section are Features, Scenarios, Featuresandscenarios, Stages, Groups, Feature_Include_Tags, Feature_Exclude_Tags, Scenario_Include_Tags, and Scenario_Exclude_Tags. |

Note:

If none of the keys in "otherBuildParameters" are included in the API request, all the test cases with the given execution options will be triggered.

Figure 2-6 Execution Option

- Example format for executing features, stages, scenarios, or groups through the API request:

curl --request POST <IP>:<PORT>/build \
-H "Content-Type: application/json" \
-d '{
"pipelineName": "<NF PIPELINE>",
"pageAndQuery": "buildWithParameters",
"otherBuildParameters": {
"Features": "<Feature List>",
"Stages": "<stages>",
"Groups": { "<stage-n>": "<group-a,group-b>", "<stage-m>": "<group-p,group-q>" },
"Scenarios": "",
"Featuresandscenarios": { "<Feature 1>": "<Scenario1>", "<Feature2>": "<Scenario1>,<Scenario2>" }
}
}' \
--user <NF>apiuser:<Token> \
--verbose

- Example format for executing test cases based on provided tags. If tags are specified, other keys such as "Features", "Scenarios", "Stages", and so on, should not be included.

curl --request POST <IP>:<PORT>/build \
-H "Content-Type: application/json" \
-d '{
"pipelineName": "<NF PIPELINE>",
"pageAndQuery": "buildWithParameters",
"otherBuildParameters": {
"Feature_Include_Tags": "<tags>",
"Feature_Exclude_Tags": "<tags>",
"Scenario_Include_Tags": "<Tags>",
"Scenario_Exclude_Tags": "<Tags>"
}
}' \
--user <NF>apiuser:<Token> \
--verbose
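The JSON body for these requests can also be generated programmatically, which avoids hand-editing nested quotes. The sketch below is illustrative only: `build_payload` is a hypothetical helper, and the client-side check that tag keys are not mixed with selection keys is an assumption that mirrors the rule stated above rather than documented server behavior.

```python
import json
from typing import Optional

# Key names taken from the example formats above.
SELECTION_KEYS = {"Features", "Scenarios", "Featuresandscenarios", "Stages", "Groups"}
TAG_KEYS = {"Feature_Include_Tags", "Feature_Exclude_Tags",
            "Scenario_Include_Tags", "Scenario_Exclude_Tags"}


def build_payload(pipeline_name: str,
                  page_and_query: str = "buildWithParameters",
                  other_build_parameters: Optional[dict] = None) -> str:
    """Return the JSON body for a POST to <IP>:<PORT>/build."""
    other = dict(other_build_parameters or {})
    # Tags should not be combined with Features, Scenarios, Stages, or Groups.
    if set(other) & TAG_KEYS and set(other) & SELECTION_KEYS:
        raise ValueError("tag keys must not be combined with selection keys")
    payload = {"pipelineName": pipeline_name, "pageAndQuery": page_and_query}
    if other:
        payload["otherBuildParameters"] = other
    return json.dumps(payload)


print(build_payload("Policy-Regression",
                    other_build_parameters={"Stages": "stage2,stage4"}))
```

The resulting string can be passed to curl with -d or sent by any HTTP client that supports Basic authentication.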

Starting a job with otherBuildParameters

Execute Features

"otherBuildParameters" : { "Features" : "<Comma separated FeatureList>" }

For example:

curl --request POST 10.75.217.25:30100/build \
-H "Content-Type: application/json" \
-d '{
"pipelineName": "Policy-Regression",
"pageAndQuery": "buildWithParameters",
"otherBuildParameters": {
"Features": "NF_Scoring,ManualGetDeleteSession_DeleteSelectivepcfBindingswithSUPI"
}
}' \
--user policyapiuser:11c1a628f808972c846c510151afa13ba2 \
--verbose

Execute Scenarios

- Using "Scenarios" key

In the API request, the user can include all parameters such as SUT, Fetch_Log_Upon_Failure, and so on, under the "pageAndQuery" key. Scenarios can be specified in the request using the "otherBuildParameters" dictionary as follows:

"otherBuildParameters" : { "Scenarios" : "<Comma separated Scenarios List>" }

For example:

curl --request POST 10.75.217.25:30100/build \
-H "Content-Type: application/json" \
-d '{
"pipelineName": "Policy-Regression",
"pageAndQuery": "buildWithParameters",
"otherBuildParameters": {
"Scenarios": "Re_Import_YamlSchema_Verify_SMPolicy,AM_Terminate_Notify_Timeout,AM_Notify_With_Header_Timeout"
}
}' \
--user policyapiuser:11c1a628f808972c846c510151afa13ba2 \
--verbose

- Using "Featuresandscenarios" key

The API request allows the user to include all parameters such as SUT, Fetch_Log_Upon_Failure, and so on, using the "pageAndQuery" key. Scenarios can be specified within the "otherBuildParameters" dictionary, as follows:

"otherBuildParameters" : { "Featuresandscenarios" : { "<Feature -1>" : "<Comma separated scenarios from Feature -1>" , "<Feature -2>" : "<Comma separated scenarios from Feature -2>" } }

For example:

curl --request POST 10.75.217.25:30100/build \
-H "Content-Type: application/json" \
-d '{
"pipelineName": "Policy-Regression",
"pageAndQuery": "buildWithParameters",
"otherBuildParameters": {
"Featuresandscenarios": { "YamlSchema_Import_Export" : "Re_Import_YamlSchema_Verify_SMPolicy" }
}
}' \
--user policyapiuser:11c1a628f808972c846c510151afa13ba2 \
--verbose

Execute Stages

"otherBuildParameters" : { "Stages" : "<Comma separated Stages list>" }

For example:

curl --request POST 10.75.217.25:30100/build \
-H "Content-Type: application/json" \
-d '{
"pipelineName": "Policy-Regression",
"pageAndQuery": "buildWithParameters?Configuration_Type=Custom_Config",
"otherBuildParameters": {
"Stages" : "stage2,stage4"
}
}' \
--user policyapiuser:11c1a628f808972c846c510151afa13ba2 \
--verbose

Execute Groups

"otherBuildParameters" : { "Groups" : { "<stage n>" : "<Comma separated Groups List from stage n>", "<stage m>" : "<Comma separated Group list from stage m>" } }

For example:

curl --request POST 10.75.217.25:30100/build \
-H "Content-Type: application/json" \
-d '{
"pipelineName": "Policy-Regression",
"pageAndQuery": "buildWithParameters",
"otherBuildParameters": {
"Groups":{"stage1":"group1" , "stage2" : "group3,group6"}
}
}' \
--user policyapiuser:11c1a628f808972c846c510151afa13ba2 \
--verbose

Execute with Tags

In the API request, the user can specify all parameters such as SUT, Fetch_Log_Upon_Failure, and so on, using the "pageAndQuery" key. When using tags, it is mandatory to include "FilterWithTags" in the "pageAndQuery". If tags are provided by the user, any other parameters such as features, scenarios, stages, or groups are not considered. Only tags are considered as input.

"otherBuildParameters": { "Feature_Include_Tags":"<tags>", "Feature_Exclude_Tags":"<tags>", "Scenario_Include_Tags":"<Tags>" , "Scenario_Exclude_Tags":"<Tags>" }

Note:

The provided tags must be separated by commas.

For example:

curl --request POST 10.75.217.25:30100/build \
-H "Content-Type: application/json" \
-d '{
"pipelineName": "Policy-Regression",
"pageAndQuery": "buildWithParameters",
"otherBuildParameters": {
"Feature_Include_Tags":"cne-common,cm-service", "Scenario_Include_Tags":"cleanup,sanity"
}
}' \
--user policyapiuser:11c1a628f808972c846c510151afa13ba2 \
--verbose

Note:

The API behaves in a manner similar to that of the UI. For instance, when running a set of scenarios, the user selects them, and only those chosen are executed upon triggering the build. If any stages, groups, or features are also selected in the features section, they are ignored. Similarly, if stages, groups, or features are included alongside scenarios in the API request, only the scenarios will be executed.

2.1.2.3 Monitoring Jobs

The default Jenkins API is used to monitor the progress of the job that was started. Monitoring uses two API calls:

- A qid is obtained from the Location header in the response for starting a job. The first API uses this qid to get queue status about the corresponding job, including its job_id.

curl --request POST <Jenkins_host_port>/queue/item/<qid>/api/json --user <username>:<API_token> --verbose

When the atsGuiTLSEnabled parameter is set to true:

curl --request POST <Jenkins_host_port>/queue/item/<qid>/api/json --user <username>:<API_token> --verbose --cacert <path_to_root_certificate>

For example,

curl --request POST http://10.123.154.163:30427/queue/item/5/api/json --user policyapiuser:111ad02d7471cec9ca689696e9c7a55c62 --verbose

When the atsGuiTLSEnabled parameter is set to true:

curl --request POST https://10.75.217.25:30301/queue/item/27/api/json --user scpapiuser:11c2fde49cea6eb8f332ad23a7877ea2de --verbose --cacert caroot.cer

- The second API uses the job_id to obtain further information about the job status.

curl --request POST <Jenkins_host_port>/job/<Pipeline_name>/<job_id>/api/json --user <username>:<API_token> --verbose

When the atsGuiTLSEnabled parameter is set to true:

curl --request POST <Jenkins_host_port>/job/<Pipeline_name>/<job_id>/api/json --user <username>:<API_token> --verbose --cacert <path_to_root_certificate>

For example,

curl --request POST http://10.123.154.163:30427/job/Policy-NewFeatures/3/api/json --user policyapiuser:111ad02d7471cec9ca689696e9c7a55c62 --verbose

When the atsGuiTLSEnabled parameter is set to true:

curl --request POST https://10.75.217.25:30301/job/SCP-Regression/2/api/json --user scpapiuser:11c2fde49cea6eb8f332ad23a7877ea2de --verbose --cacert caroot.cer

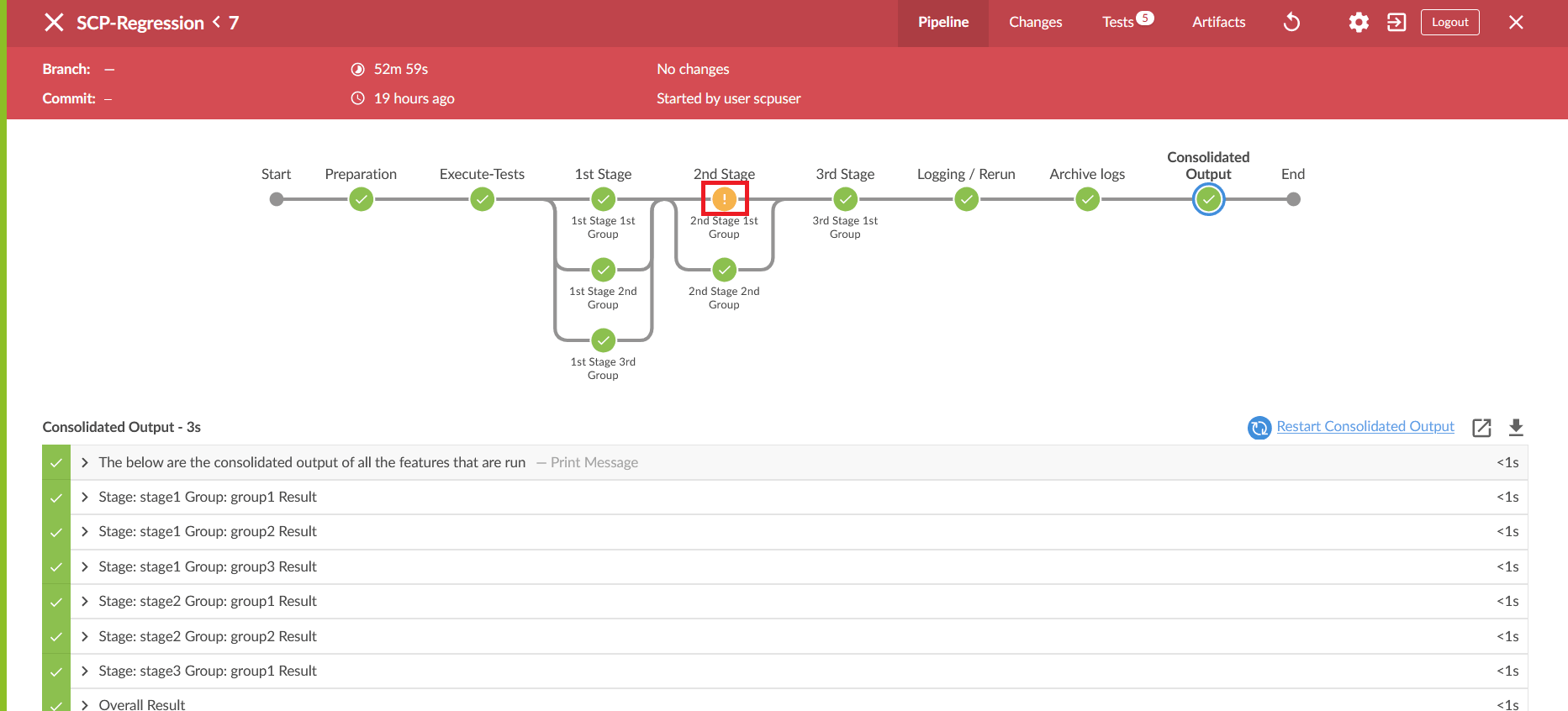

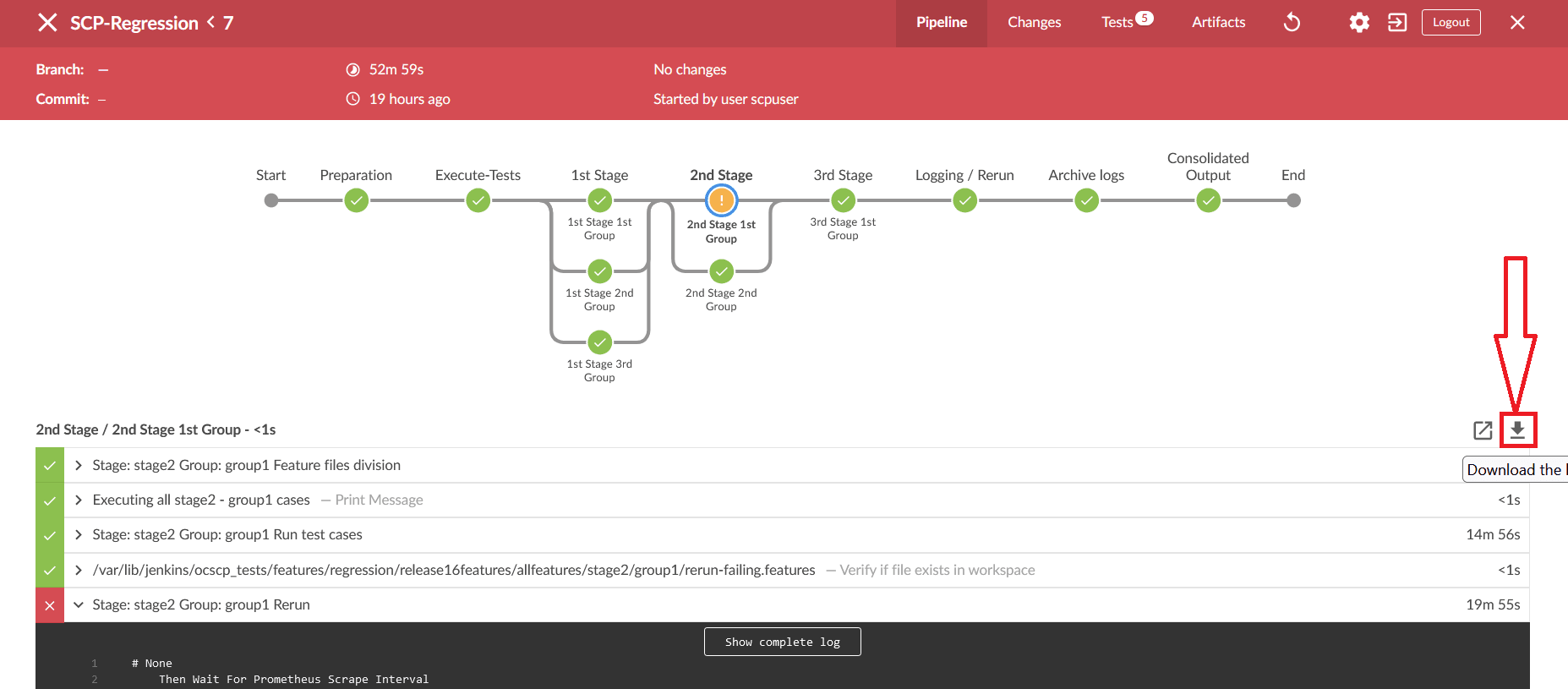

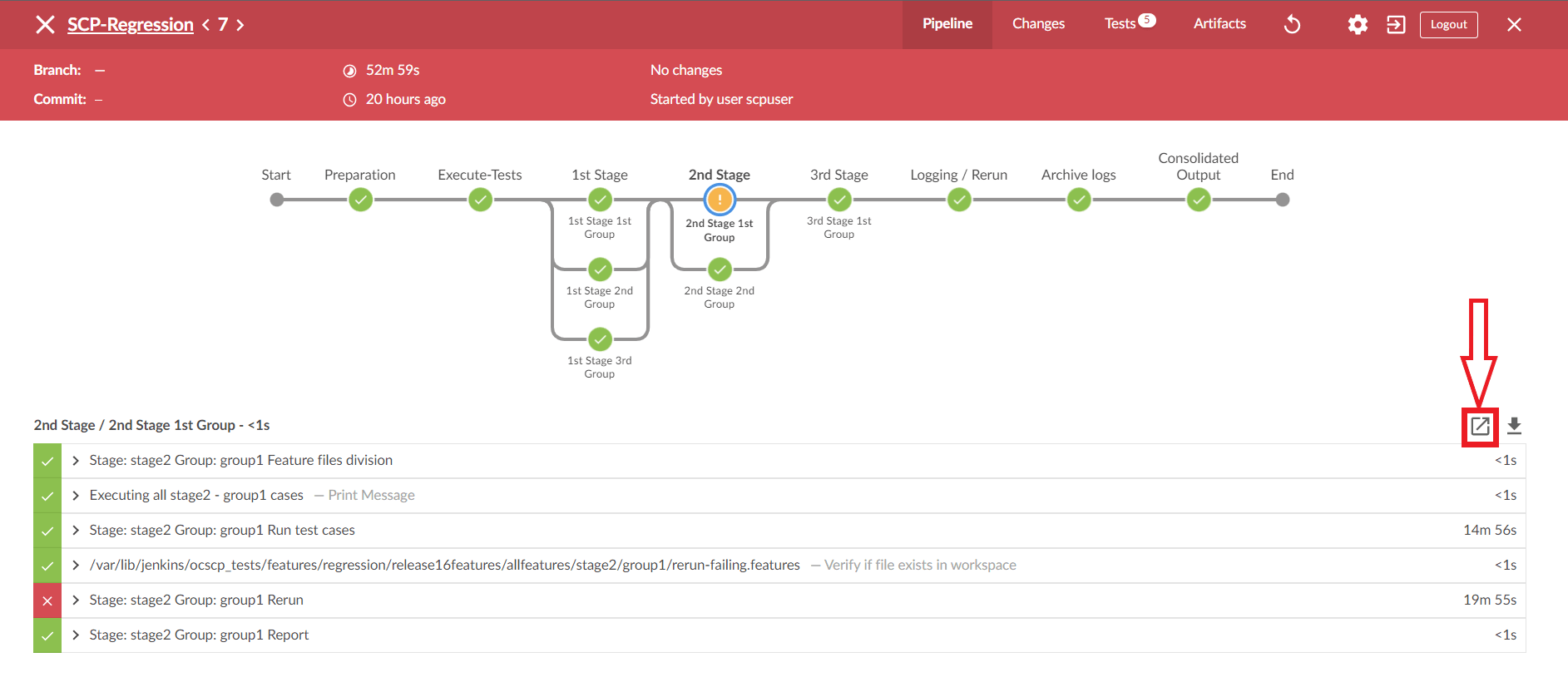

Figure 2-7 Monitoring a Job
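The two monitoring steps hinge on extracting the qid from the Location header and then reading the job_id from the queue item. The following sketch illustrates both steps on sample data. It assumes the Location header ends in /queue/item/<qid>/ and that the queue item JSON carries the build number under executable.number, which is how stock Jenkins reports it; verify both against your ATS deployment. The function names are hypothetical.

```python
import re
from typing import Optional


def queue_id_from_location(location: str) -> int:
    """Extract the qid from a Location header such as .../queue/item/5/."""
    match = re.search(r"/queue/item/(\d+)/?$", location)
    if match is None:
        raise ValueError(f"no queue id found in Location header: {location!r}")
    return int(match.group(1))


def job_id_from_queue_item(queue_item: dict) -> Optional[int]:
    """Return the job_id once the queued item has started, else None."""
    executable = queue_item.get("executable")
    if not executable:
        # The item is still waiting in the queue.
        return None
    return executable.get("number")


print(queue_id_from_location("http://10.123.154.163:30427/queue/item/5/"))
print(job_id_from_queue_item({"executable": {"number": 3}}))
```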

2.1.2.4 Stopping Jobs

The Stop API is used to stop the currently running job using its job_id. It is also a default Jenkins API.

Stopping a Job

curl --request POST <Jenkins_host_port>/job/<Pipeline_name>/<job_id>/stop --user <username>:<API_token> --verbose

When the atsGuiTLSEnabled parameter is set to true:

curl --request POST <Jenkins_host_port>/job/<Pipeline_name>/<job_id>/stop --user <username>:<API_token> --verbose --cacert <path_to_root_certificate>

For example,

curl --request POST http://10.75.217.4:31881/job/UDR-Regression/21/stop --user udrapiuser:1139a72213e0a686972cbff4a2f9333a9f --verbose

When the atsGuiTLSEnabled parameter is set to true:

curl --request POST https://10.75.217.25:30301/job/SCP-Regression/2/stop --user scpapiuser:11c2fde49cea6eb8f332ad23a7877ea2de --verbose --cacert caroot.cer

Note:

- If the rerun count is greater than zero, the job must be stopped twice.

- This Stop API call does not abort the build gracefully.

Run the following command to stop a job using the custom Stop API:

curl --request POST <Stopjob_host_port>/job/<Pipeline_name>/<job_id>/stop --user <username>:<API_token> --verbose

When the atsGuiTLSEnabled parameter is set to true:

curl --request POST <Stopjob_host_port>/job/<Pipeline_name>/<job_id>/stop --user <username>:<API_token> --verbose --cacert <path_to_root_certificate>

For example,

curl --request POST http://10.75.217.4:32476/job/UDR-Regression/21/stop --user udrapiuser:1139a72213e0a686972cbff4a2f9333a9f --verbose

When the atsGuiTLSEnabled parameter is set to true:

curl --request POST https://10.75.217.25:30170/job/SCP-Regression/2/stop --user scpapiuser:11c2fde49cea6eb8f332ad23a7877ea2de --verbose --cacert caroot.cer

Table 2-4 Stop API Details

| Parameter | Mandatory | Default Value | Description |
|---|---|---|---|
| userName | Yes | NA | Name of the API user |
| token | Yes | NA | The API token for the API user |
| Stopjob_host_port | Yes | NA | Format is <host>:<port> |
| pipelineName | Yes | NA | Name of the pipeline for which the build is to be stopped |
| immediate | No | False | To stop the build immediately, send a query parameter ("immediate=true") with the API call. |
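As an illustration of the immediate option, the following sketch builds the stop URL with and without the query parameter. It assumes that immediate=true is appended to the stop URL as an ordinary query string, which is an interpretation of the table above and not a confirmed URL format. The helper name is hypothetical.

```python
def build_stop_url(stopjob_host_port: str, pipeline_name: str,
                   job_id: int, immediate: bool = False) -> str:
    """Compose the stop URL, optionally requesting an immediate stop."""
    url = f"{stopjob_host_port}/job/{pipeline_name}/{job_id}/stop"
    if immediate:
        # Assumed placement of the query parameter described in Table 2-4.
        url += "?immediate=true"
    return url


print(build_stop_url("http://10.75.217.4:32476", "UDR-Regression", 21))
print(build_stop_url("http://10.75.217.4:32476", "UDR-Regression", 21, immediate=True))
```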

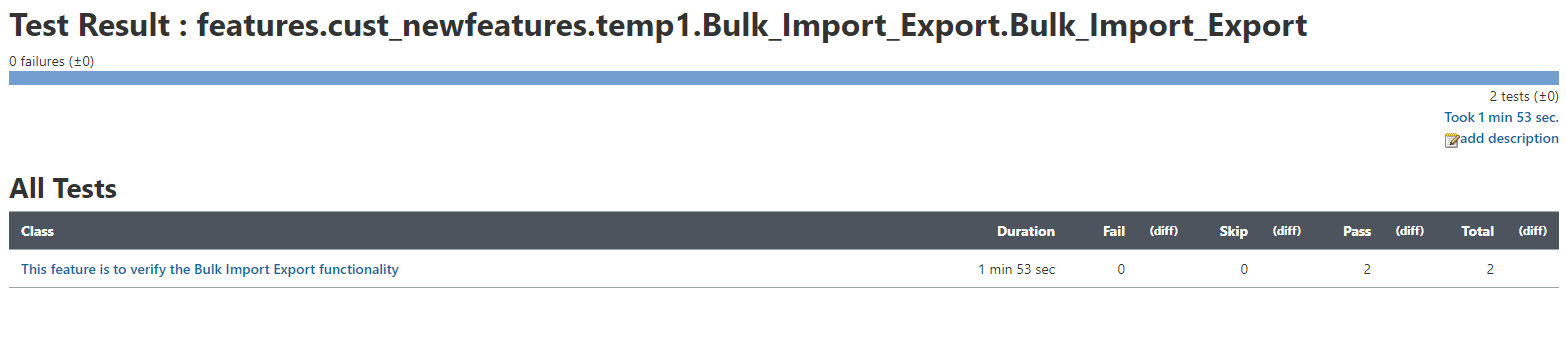

2.1.2.5 Getting Test Suite Artifacts

The default Jenkins API is used to get the JUNIT-formatted XML test result files for a completed test suite. The following API calls are available:

- For getting an overall build summary

- For getting a JUNIT XML test result file for every feature file that ran

For getting an overall build summary:

curl --request POST <Jenkins_host_port>/job/<Pipeline_name>/<job_id>/testReport/api/xml?exclude=testResult/suite --user <username>:<API_token> --verbose

When the atsGuiTLSEnabled parameter is set to true:

curl --request POST <Jenkins_host_port>/job/<Pipeline_name>/<job_id>/testReport/api/xml?exclude=testResult/suite --user <username>:<API_token> --verbose --cacert <path_to_root_certificate>

For example,

curl --request POST http://10.123.154.163:30427/job/Policy-NewFeatures/4/testReport/api/xml?exclude=testResult/suite --user policyapiuser:111ad02d7471cec9ca689696e9c7a55c62 --verbose

When the atsGuiTLSEnabled parameter is set to true:

curl --request POST https://10.75.217.25:30301/job/SCP-Regression/1/testReport/api/xml?exclude=testResult/suite --user scpapiuser:11c2fde49cea6eb8f332ad23a7877ea2de --verbose --cacert caroot.cer

For getting Feature-wise XML, Select_Option = All:

curl --request POST <Jenkins_host_port>/job/<Pipeline_name>/<job_id>/artifact/test-results/reports/*zip*/test-results.zip --user <username>:<API_token> --verbose --output test-results.zip

When the atsGuiTLSEnabled parameter is set to true:

curl --request POST <Jenkins_host_port>/job/<Pipeline_name>/<job_id>/artifact/test-results/reports/*zip*/test-results.zip --user <username>:<API_token> --verbose --output test-results.zip --cacert <path_to_root_certificate>

For example,

curl --request POST http://10.75.217.4:31881/job/Policy-NewFeatures/21/artifact/test-results/reports/*zip*/test-results.zip --user policyapiuser:11c3344996c4fda01ded2124bec4f9aa17 --verbose --output test-results.zip

When the atsGuiTLSEnabled parameter is set to true:

curl --request POST https://10.75.217.25:30301/job/SCP-Regression/1/artifact/test-results/reports/*zip*/test-results.zip --user scpapiuser:11c2fde49cea6eb8f332ad23a7877ea2de --verbose --cacert caroot.cer

For getting Feature-wise XML, Select_Option = Single/MultipleFeatures:

curl --request POST <Jenkins_host_port>/job/<Pipeline_name>/<job_id>/artifact/test-results/reports/*.<Feature1_name>.xml,*.<Feature2_name>.xml/*zip*/test-results.zip --user <username>:<API_token> --verbose --output test-results.zip

When the atsGuiTLSEnabled parameter is set to true:

curl --request POST <Jenkins_host_port>/job/<Pipeline_name>/<job_id>/artifact/test-results/reports/*.<Feature1_name>.xml,*.<Feature2_name>.xml/*zip*/test-results.zip --user <username>:<API_token> --verbose --output test-results.zip --cacert <path_to_root_certificate>

For example,

curl --request POST http://10.75.217.4:31881/job/Policy-NewFeatures/21/artifact/test-results/reports/*.goldenfeature.xml,*.AMPolicy.xml/*zip*/test-results.zip --user policyapiuser:11c3344996c4fda01ded2124bec4f9aa17 --verbose --output test-results.zip

When the atsGuiTLSEnabled parameter is set to true:

curl --request POST https://10.75.217.25:30301/job/SCP-Regression/1/artifact/test-results/reports/*.SCP_Registration_With_PLMNList.xml/*zip*/test-results.zip --user scpapiuser:11c2fde49cea6eb8f332ad23a7877ea2de --verbose --cacert caroot.cer

API calls for Select_Option = All and Select_Option = Single/MultipleFeatures return a zip file with JUNIT XMLs, one XML for each feature.

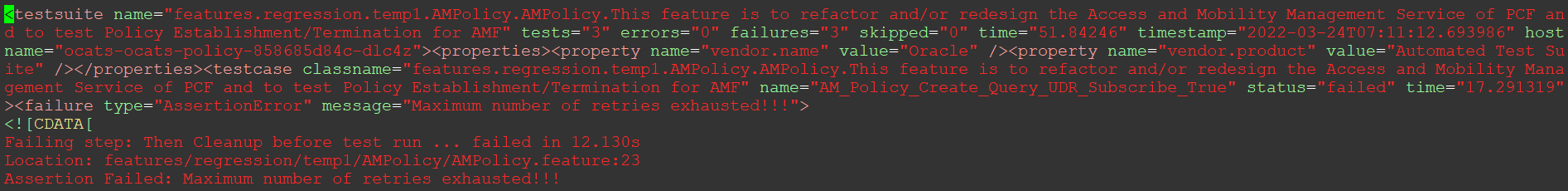

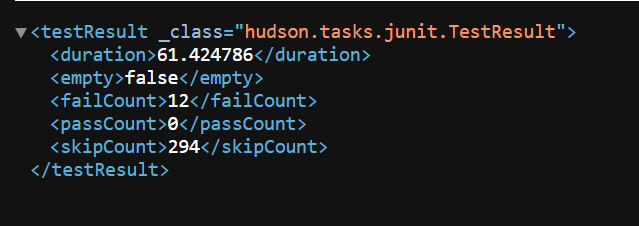

Figure 2-8 Sample XML Output for AMPolicy.feature

For Select_Option = Single/MultipleFeatures, specify the other selected features in the API call in comma-separated form as /*<Feature1_name>.xml,*<Feature2_name>.xml,*<Feature3_name>.xml,*<Feature4_name>.xml/.
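The artifact URL for any set of features follows mechanically from the feature names. The sketch below is a hypothetical helper, not part of ATS; it reproduces the `*.<Feature_name>.xml` pattern used in the curl examples of this section.

```python
from typing import Optional, Sequence


def build_artifact_url(jenkins_host_port: str, pipeline_name: str, job_id: int,
                       feature_names: Optional[Sequence[str]] = None) -> str:
    """Compose the zip download URL for all features or for selected ones."""
    base = (f"{jenkins_host_port}/job/{pipeline_name}/{job_id}"
            "/artifact/test-results/reports")
    if feature_names:
        # Select_Option = Single/MultipleFeatures: comma-separated patterns
        selector = ",".join(f"*.{name}.xml" for name in feature_names)
        return f"{base}/{selector}/*zip*/test-results.zip"
    # Select_Option = All
    return f"{base}/*zip*/test-results.zip"


print(build_artifact_url("http://10.75.217.4:31881", "Policy-NewFeatures", 21,
                         ["goldenfeature", "AMPolicy"]))
```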

The overall build summary contains the duration, failCount, passCount, and skipCount for the current build.

Figure 2-9 Sample Output

It is recommended to maintain a gap of at least a few seconds between two API calls. This gap depends on the time Jenkins takes to complete the API request.
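One way to respect this gap is to poll the job status with a fixed delay between calls. The following sketch assumes the job status JSON exposes the standard Jenkins fields building and result; the `fetch_build` callable, the 5-second default, and the polling limit are hypothetical choices for illustration. The HTTP call itself is left to the caller so the loop can be exercised without a server.

```python
import time
from typing import Callable


def wait_for_result(fetch_build: Callable[[], dict],
                    delay_seconds: float = 5.0,
                    max_polls: int = 120,
                    sleep: Callable[[float], None] = time.sleep) -> str:
    """Poll until the build finishes, pausing between consecutive API calls."""
    for _ in range(max_polls):
        build = fetch_build()
        if not build.get("building") and build.get("result"):
            return build["result"]
        # Keep a gap between two API calls, as recommended above.
        sleep(delay_seconds)
    raise TimeoutError("build did not finish within the polling budget")


# Usage with canned responses instead of a live Jenkins server.
canned = iter([{"building": True, "result": None},
               {"building": False, "result": "SUCCESS"}])
print(wait_for_result(lambda: next(canned), delay_seconds=0))
```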

2.2 ATS Custom Abort

The ATS Custom Abort feature allows you to gracefully abort an ongoing build directly from the Graphical User Interface (GUI). Builds can be aborted in the following ways:

- Using the Abort Button on the GUI: This method, supported by Jenkins, allows you to abort builds directly from the user interface.

- Using the ATS API: This is a partially manual method for aborting builds.

When the ATS API is used to abort a build, ATS will wait for any running scenarios to complete their cleanup process before finalizing the abort. This ensures that there are no issues related to cleanup when using the ATS API.

Manual Abort

By default, Jenkins provides a cancel icon for every pipeline on the dashboard whenever a job is running. However, using the manual abort or cancel icon has some limitations, especially in parallel execution scenarios.

When the manual abort or cancel icon is used, Jenkins sends a kill signal to stop all current executions, regardless of whether test cases or other operations are in progress. In cases of parallel execution with multiple stages, the manual abort or cancel icon may need to be clicked multiple times due to the presence of multiple stages. This approach can lead to pending cleanup for test cases, which may cause failures in subsequent executions.

To address the issues with manual abort, release 24.3.0 introduces an Abort_Build menu in all new features and regression pipelines, ensuring a more controlled and graceful termination of builds.

The cancel icon will not be displayed for currently running builds. However, if there are any builds in the queue, they will still have the cancel icon available for use.

Figure 2-10 Stopping Builds

Abort_Build

The Abort_Build option is available on every regression and new features pipeline. It supports the graceful termination of builds. The Abort button in the GUI triggers a stop API request to the ATS API server. This request ensures that the execution is stopped gracefully, allowing all currently running scenarios to complete.

To abort a build gracefully, perform the following steps:

- Enter the login credentials and click Sign in.

The screen displays the preconfigured pipelines for each NF.

- Click NF-NewFeatures or NF-Regression in the Name column.

The NF-NewFeatures or NF-Regression screen appears.

- Click Abort_Build in the left navigation pane.

Figure 2-11 Abort Build

You will be redirected to the Pipeline Abort-helper page.

- If no builds are currently running or in progress, the page will display the message: "No builds are running."

- If builds are running, the page will display the following:

- Running_Builds: This section lists the running pipeline names and build numbers.

Figure 2-12 Running_Builds

- Abort: Click the button to start the abort process.

Figure 2-13 Aborting Build

- Back: Click the button to return to the pipeline page or remain on the current page while the abort process completes.

- If the abort is initiated during stages such as "Preparation" or "POST," the build stops within a few minutes.

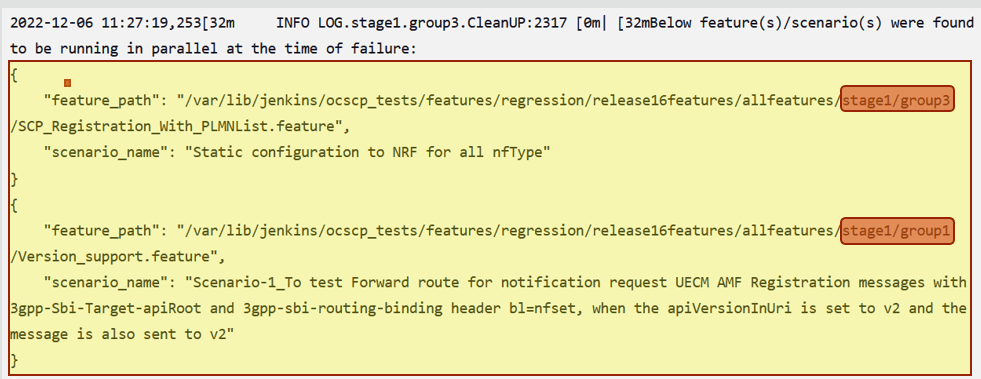

- If parallel test case execution is not enabled for NFs, and there is only one stage, such as "Execute-tests" or "stage1/group1", the time taken for a graceful abort depends on the duration of the currently running scenarios.

- If parallel test case execution is enabled for NFs, the time required for abort depends on the scenario that takes the longest time to complete among the currently running groups.

Figure 2-14 Manual Abort or Cancel icon

Note:

It is recommended to use the new Abort button instead of the manual abort or cancel icon provided by Jenkins for a more reliable abort process.

2.3 ATS Feature Activation and Deactivation

Note:

Once these features are removed, they cannot be reinstated in the deployed ATS. However, users have the option to reinstall the ATS to restore the disabled features.

Note:

These parameters can be edited in the ATS deployment file (values.yaml).

Table 2-5 Enable or Disable ATS Feature

| Features | Parameter | Description |
|---|---|---|
| Support for Test Case Mapping and Count | testCaseMapping | Set this parameter to true to activate the feature in the ATS GUI. |
| Application Log Collection and PCAP Log Collection | logging | Set this parameter to true to collect the ATS logs. If the parameter is set to false, the logs will not be collected. |
| Lightweight Performance | lightWeightPerformance | Set this parameter to true to activate the feature in the ATS GUI. If the parameter is set to false, the performance pipeline will not be accessible. |
| ATS Health Check | healthcheck | Set this parameter to true to activate the feature in the ATS GUI. If the parameter is set to false, the health check pipeline will not be accessible. |
| ATS API | atsApi | Set this parameter to true to activate the feature. If the parameter is set to false, the ATS API feature on the 5001 port will be disabled. |
| Parameterization | parameterization | Set this parameter to true to activate the feature in the ATS GUI. If the parameter is set to false, the Configuration_Type parameter on the GUI will not be available. |
| Parallel Test Execution | parallelFrameworkChangesIntegrated | Set this parameter to true if all parallel test case execution features are picked by NF and changes are made to files. Note: Do not change the values provided in the |
| Parallel Test Execution | parallelTestCaseExecution | Set this parameter to true to activate the feature in the ATS GUI. If set to false, all the features will be copied into a single stage or group, resulting in sequential execution. Note: It is not advisable to edit the default value given in the values.yaml file for this parameter. |
| Parallel Test Execution | mergedExecution | Set this parameter to true to activate the feature in the ATS GUI. If set to false, the option to include other pipelines for mergedExecution will not be available. Note: It is not advisable to edit the default value given in the values.yaml file for this parameter. |
| ATS Support to Execute Scenarios | scenarioSelection | Set this parameter to true to activate the feature in the ATS GUI. If set to false, the ability to select single or multiple scenarios will be removed. This parameter depends on the testCaseMapping parameter: if testCaseMapping is set to false, scenarioSelection is also set to false. Note: It is not advisable to edit the default value given in the values.yaml file for this parameter. |
| ATS Tagging Support | executionWithTagging | Set this parameter to true to activate the feature in the ATS GUI. If set to false, the ability to execute test cases based on tags will be removed. This parameter depends on the testCaseMapping parameter: if testCaseMapping is set to false, executionWithTagging is also set to false. |

| Stage or Group Level Execution | individualStageGroupSelection | Set this parameter to true to activate the feature

in the ATS GUI. If set to false, the ability to select all test cases from

individual stages or groups using a single checkbox will be removed.

Note: It is not advisable to edit the default value given in the values.yaml file for this parameter. |

| Support for Transport Layer Security | atsGUITLSEnabled | Set this parameter to true to activate the feature

in the ATS GUI. If set to false, ATS will work in HTTP mode.

|

Note:

- You can edit the parameters relating to the features that NF supports. Keep the default value for the remaining parameters.

- For the current release, do not modify the values of the mergedExecution, individualStageGroupSelection, and parallelTestCaseExecution parameters.
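The dependency described in Table 2-5, where scenarioSelection and executionWithTagging follow testCaseMapping, can be pictured with a small Python sketch. The function name `effective_flags` and the dictionary layout are assumptions made for illustration; this is not how ATS itself reads values.yaml.

```python
def effective_flags(values):
    """Return the feature flags after applying the documented dependency.

    `values` is a plain dict standing in for the relevant values.yaml keys
    (a hypothetical layout used only for this sketch).
    """
    flags = dict(values)
    # scenarioSelection and executionWithTagging depend on testCaseMapping:
    # when testCaseMapping is false, both are forced to false as well.
    if not flags.get("testCaseMapping", False):
        flags["scenarioSelection"] = False
        flags["executionWithTagging"] = False
    return flags


requested = {
    "testCaseMapping": False,
    "scenarioSelection": True,
    "executionWithTagging": True,
    "healthcheck": True,
}
print(effective_flags(requested))
```

In this sketch, flags that have no documented dependency, such as healthcheck, pass through unchanged.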

2.4 ATS GUI Enhancements

Figure 2-15 Layout Enhancement

Figure 2-16 Test Cases Visibility

Figure 2-17 Tooltips

Figure 2-18 Multiselect Features and Scenarios

2.5 ATS Health Check

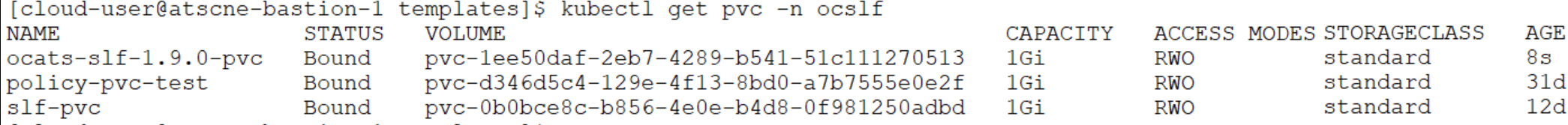

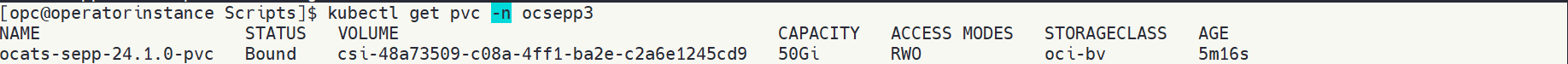

The ATS Health Check feature allows you to evaluate the health of the ATS deployment by conducting a comprehensive series of checks. ATS health checks are performed using the Health Check tool. After installation, it ensures the health of CNCATS pods, their services, and associated configurations.

Overview of Health Check Tool Functionality

- Initial Setup: After installation, the Health Check tool begins by running a series of predefined tests to establish a baseline.

- CPU and Memory Verification: The Health Check tool verifies whether the CPU and memory allocated to CNCATS pods meet the minimum requirements. It compares the current resource allocations with these thresholds, flags any shortfalls, and recommends the necessary adjustments.

- Service Verification: It checks the operational status of the CNCATS services (ATS API and ATS GUI), including verifying service endpoints, ensuring services are running, and confirming they respond as expected.

- Test Folder Validation: It inspects the test folder to ensure that all necessary test files are present and properly configured.

- Configuration Checks: The tool reviews the authentication configurations required for running the System Under Test (SUT/NF) health check, verifying that all configurations are correct to facilitate a smooth run.

- PVC Verification: It confirms whether the Persistent Volume Claim (PVC) is in a bound state and properly connected to a Persistent Volume. Any issues with PVC binding are flagged for further investigation.

By performing these checks, the tool ensures that the CNCATS pod and its associated services are functioning correctly, identifying potential issues before they affect system performance.
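As an illustration of the CPU and memory verification step, the following Python sketch compares allocated resources against minimum thresholds expressed as Kubernetes quantities. The threshold values, the function names, and the restriction to the "m", "Ki", "Mi", and "Gi" suffixes are assumptions made for this example; the actual Health Check tool may work differently.

```python
# Binary suffixes used by Kubernetes memory quantities (subset for this sketch).
MEMORY_UNITS = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30}


def cpu_cores(quantity):
    """Convert a Kubernetes CPU quantity such as '500m' or '2' to cores."""
    if quantity.endswith("m"):
        return int(quantity[:-1]) / 1000
    return float(quantity)


def memory_bytes(quantity):
    """Convert a memory quantity such as '512Mi' or '2Gi' to bytes."""
    for suffix, factor in MEMORY_UNITS.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)  # no suffix: plain byte count


def check_resources(allocated, minimum):
    """Return shortfall messages; an empty list means the check passed."""
    shortfalls = []
    if cpu_cores(allocated["cpu"]) < cpu_cores(minimum["cpu"]):
        shortfalls.append(
            f"CPU {allocated['cpu']} is below the minimum {minimum['cpu']}"
        )
    if memory_bytes(allocated["memory"]) < memory_bytes(minimum["memory"]):
        shortfalls.append(
            f"Memory {allocated['memory']} is below the minimum {minimum['memory']}"
        )
    return shortfalls


# Hypothetical minimum requirements, for illustration only.
MINIMUM = {"cpu": "2", "memory": "2Gi"}
print(check_resources({"cpu": "500m", "memory": "1Gi"}, MINIMUM))
print(check_resources({"cpu": "4", "memory": "4Gi"}, MINIMUM))
```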

Note:

- This feature is available starting from CNCATS 24.3.0 Build.

- For initial checks, view the ATS Health Check in pod logs using the command:

kubectl logs <podname> -n <namespace>

- For subsequent checks, rerun the tool through ATS bash with the command:

healthtest

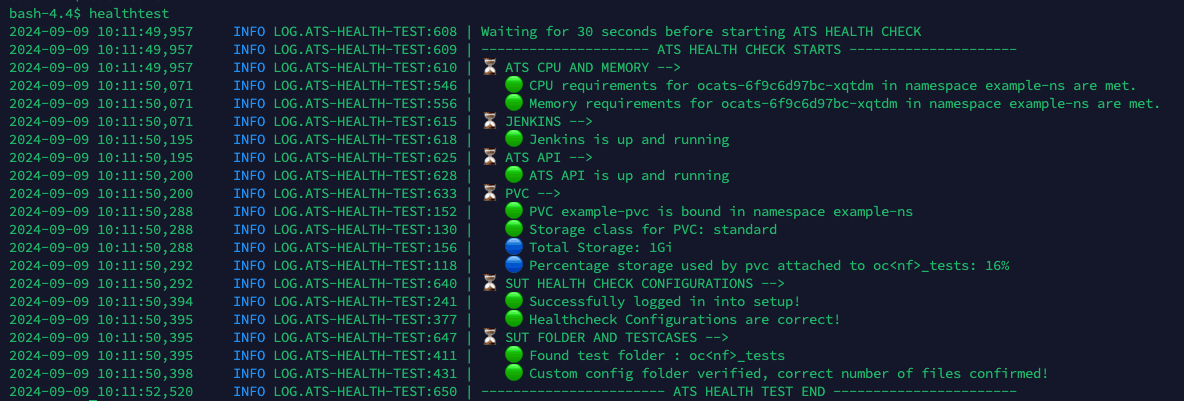

Figure 2-19 Success Health Check

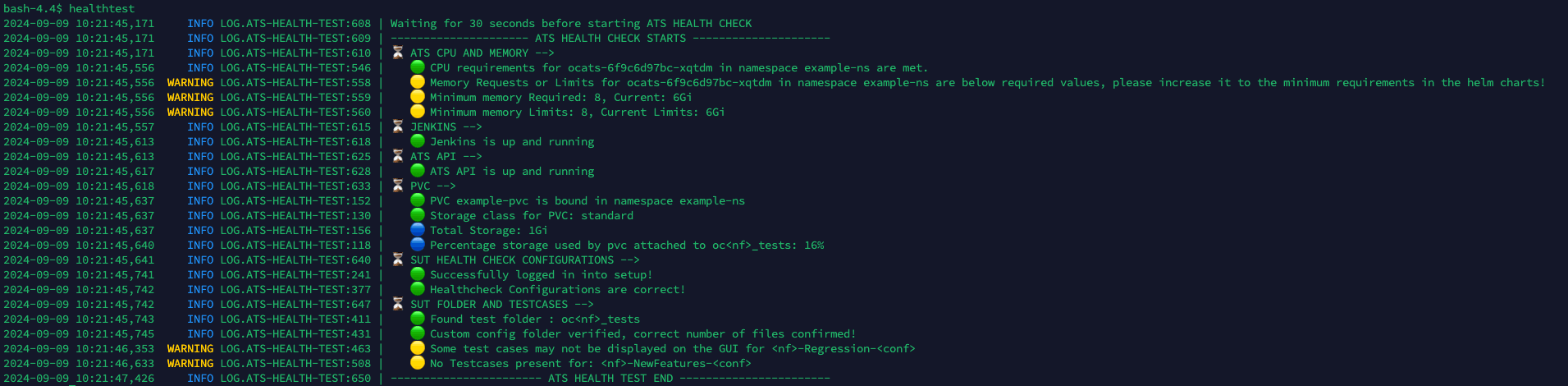

Figure 2-20 Warning and Errors

Note:

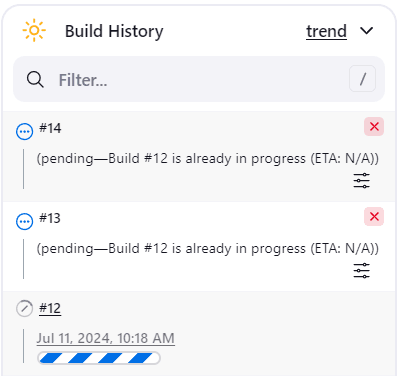

The highlighted areas illustrate a health check with warnings and errors that require further investigation or action.

2.6 ATS Jenkins Job Queue

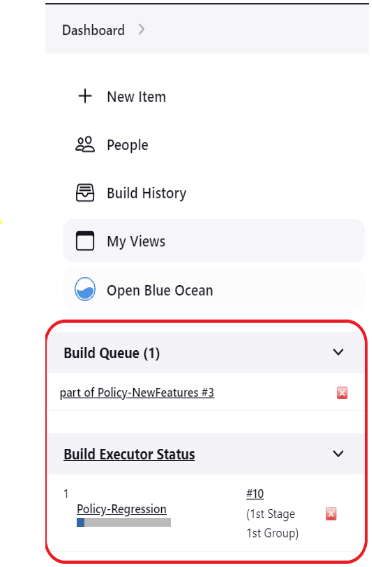

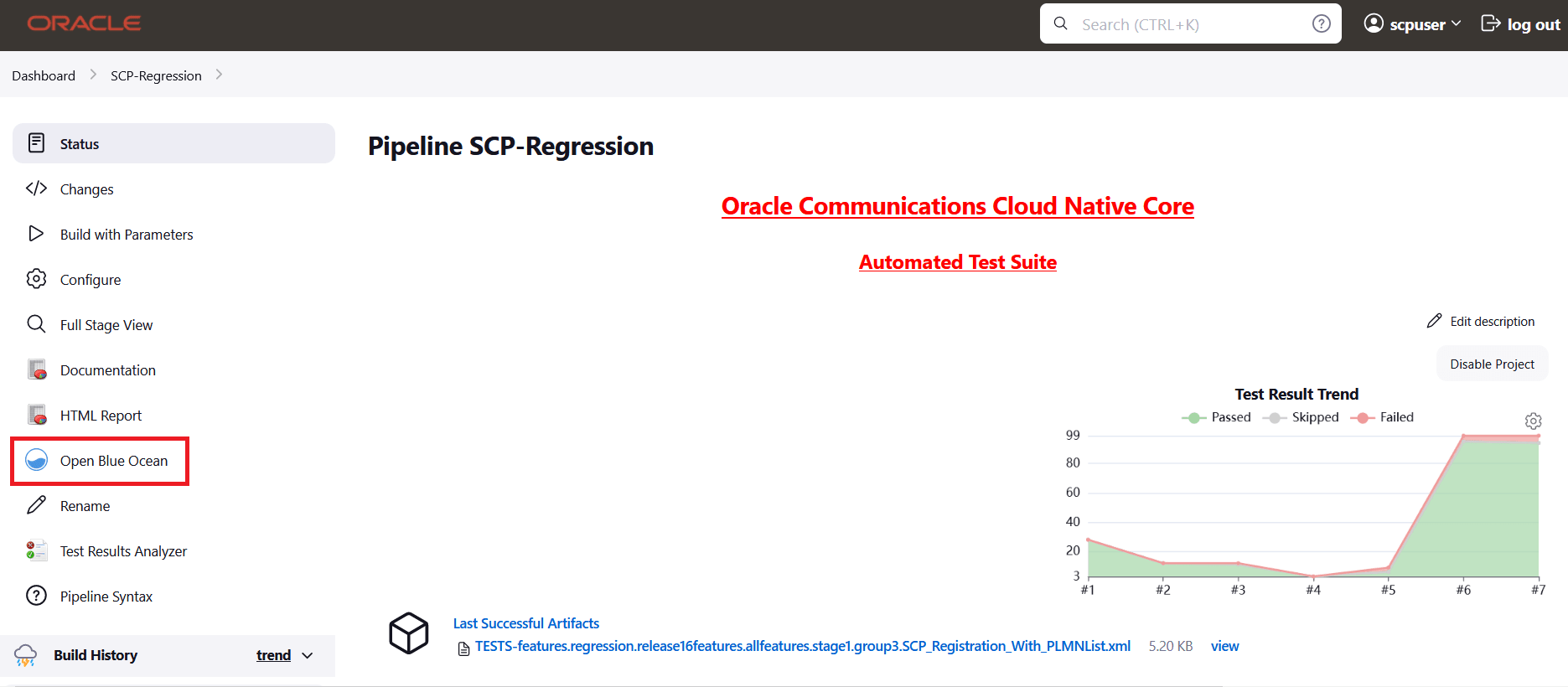

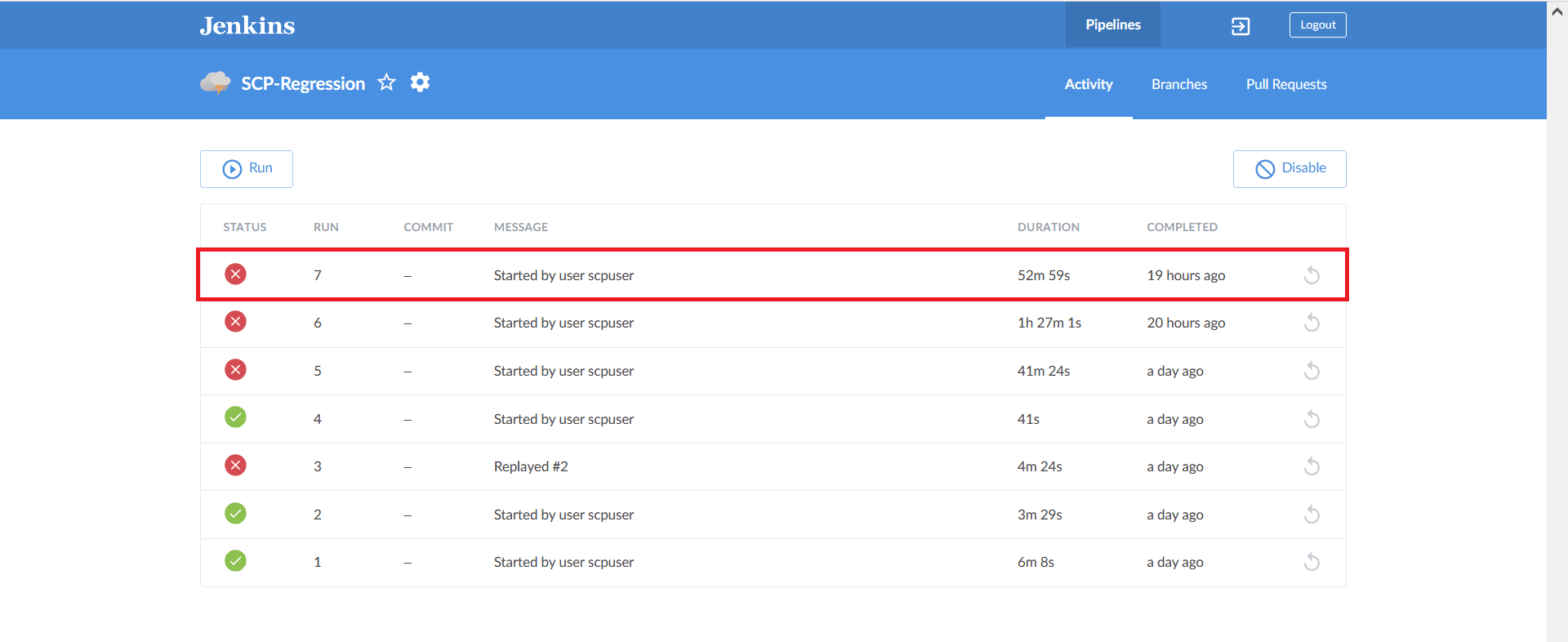

The ATS Jenkins Job Queue feature places a newly triggered job in a queue if another job is already running, whether from the same pipeline or a different one. This prevents jobs from running in parallel with one another.

Figure 2-21 Build Executor Status

2.7 Application Log Collection

Using Application Log Collection, you can debug a failed test case by collecting the application logs for NF System Under Test (SUT). Application logs are collected for the duration that the failed test case was run.

Application Log Collection can be implemented by using OpenSearch or Kubernetes Logs. In both these implementations, logs are collected per scenario for the failed scenarios.

Application Log Collection Using OpenSearch

- Log in to ATS using respective <NF> login credentials.

- On the NF home page, click any new feature or regression pipeline, from where you want to collect the logs.

- In the left navigation pane, click Build with Parameters.

- Select YES or NO from the drop-down menu of Fetch_Log_Upon_Failure to select whether the log collection is required for a particular run.

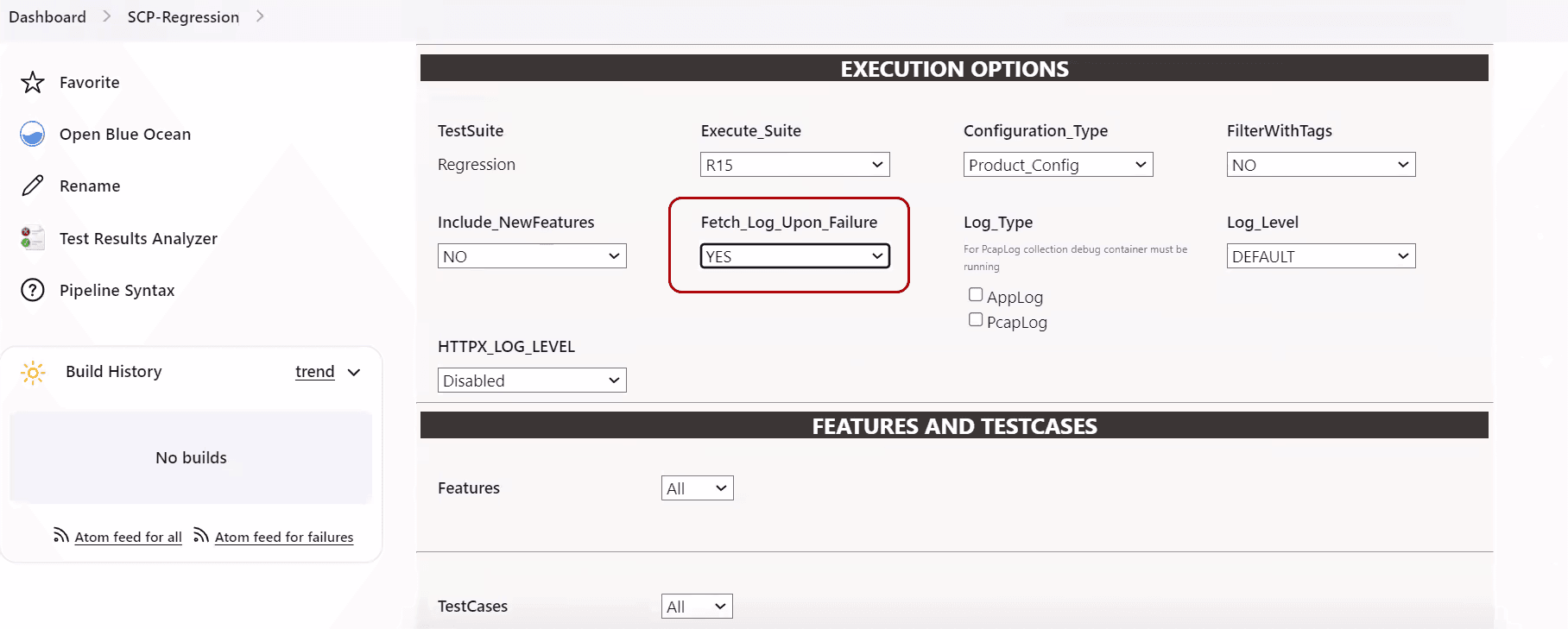

Figure 2-22 Fetch_Log_Upon_Failure

- If the Log_Type option is also available, select AppLog as its value.

- Select the Log Level from the drop-down menu of Log_Level to set the log level for all the microservices. The possible values for Log_Level are as follows:

- WARN: Designates potentially harmful situations.

- INFO: Designates informational messages that highlight the progress of the application at coarse-grained level.

- DEBUG: Designates fine-grained informational events that are most useful to debug an application.

- ERROR: Designates error events that might still allow the application to continue running.

- TRACE: Captures all the details about the behavior of the application. It is mostly diagnostic and is more granular than the DEBUG log level.

Note:

Log_Level values are NF dependent.

- After the build execution is complete, go into the ATS pod, then navigate to the following path to find the applogs:

.jenkins/jobs/<Pipeline Name>/builds/<build number>/

For example:

.jenkins/jobs/SCP-Regression/builds/5/

The applogs are present in zip form. Unzip the file to get the log files.

The following tasks are carried out in the background to collect logs:

- OpenSearch API is used to access and fetch logs.

- Logs are fetched from OpenSearch for the failed scenarios.

- Hooks (after scenario) within the cleanup file initiate an API call to OpenSearch to fetch Application logs.

- Duration of the failed scenario is calculated based on the time stamp and passed as a parameter to fetch the logs from OpenSearch.

- Filtered query is used to fetch the records based on Pod name, Service name, and timestamp (Failed Scenario Duration).

- For OpenSearch, there is no rollover or rotation of logs over time.

- The following configuration parameters are used for collecting logs using OpenSearch:

- OPENSEARCH_WAIT_TIME: Wait time to connect to OpenSearch

- OPENSEARCH_HOST: OpenSearch HostName

- OPENSEARCH_PORT: OpenSearch Port
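The filtered query mentioned above can be pictured as an OpenSearch query DSL body that combines term filters with a time range. The following Python sketch only builds such a body. The field names (`kubernetes.pod_name`, `kubernetes.container_name`, `@timestamp`) and the function name are assumptions made for illustration, because the actual index mapping used by ATS is not described here.

```python
from datetime import datetime, timezone


def build_log_query(pod_name, service_name, start, end):
    """Build a query body that filters on pod, service, and scenario duration.

    The field names are hypothetical and depend on how logs are indexed.
    """
    return {
        "query": {
            "bool": {
                "filter": [
                    {"term": {"kubernetes.pod_name": pod_name}},
                    {"term": {"kubernetes.container_name": service_name}},
                    # Restrict results to the duration of the failed scenario.
                    {
                        "range": {
                            "@timestamp": {
                                "gte": start.isoformat(),
                                "lte": end.isoformat(),
                            }
                        }
                    },
                ]
            }
        }
    }


scenario_start = datetime(2024, 1, 1, 10, 0, 0, tzinfo=timezone.utc)
scenario_end = datetime(2024, 1, 1, 10, 2, 30, tzinfo=timezone.utc)
query = build_log_query("example-pod", "example-service", scenario_start, scenario_end)
print(query)
```

A body of this shape would typically be sent to the search endpoint of the host and port given by OPENSEARCH_HOST and OPENSEARCH_PORT.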

Application Log Collection Using Kubernetes Logs

- On the NF home page, click any new feature or regression pipeline, from where you want to collect the logs.

- In the left navigation pane, click Build with Parameters.

- Select YES or NO from the drop-down menu of Fetch_Log_Upon_Failure to select whether the log collection is required for a particular run.

- Select the Log Level from the drop-down menu of Log_Level to set the log level for all the microservices. The possible values for Log_Level are as follows:

- WARN: Designates potentially harmful situations.

- INFO: Designates informational messages that highlight the progress of the application at coarse-grained level.

- DEBUG: Designates fine-grained informational events that are most useful to debug an application.

- ERROR: Designates error events that might still allow the application to continue running.

Note:

Log_Level values are NF dependent.

The following tasks are carried out in the background to collect logs:

- Kube API is used to access and fetch logs.

- For failed scenarios, logs are directly fetched from microservices.

- Hooks (after scenario) within the cleanup file initiate an API call to Kubernetes Logs to fetch Application logs.

- The duration of the failed scenario is calculated based on the time stamp and passed as a parameter to fetch the logs from microservices.

- Log rollover can occur while the logs for a failed scenario are being fetched. The maximum loss of logs is confined to a single scenario.
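To illustrate how a scenario start time can bound the logs fetched from a microservice, the following Python sketch assembles a `kubectl logs` command that uses the `--since-time` and `--timestamps` options. The pod and namespace names are placeholders, and the function is a hypothetical helper; ATS uses the Kube API in its hooks, so this is only an approximation of the idea from the command line.

```python
from datetime import datetime, timezone


def kubectl_logs_command(pod, namespace, scenario_start):
    """Return the kubectl argument list for logs since the scenario started."""
    # --since-time expects an RFC 3339 timestamp.
    since = scenario_start.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")
    return [
        "kubectl", "logs", pod,
        "-n", namespace,
        "--timestamps",
        f"--since-time={since}",
    ]


start = datetime(2024, 5, 1, 10, 30, 0, tzinfo=timezone.utc)
print(" ".join(kubectl_logs_command("example-pod", "example-namespace", start)))
```

The sketch bounds only the start of the window. Lines written after the scenario ended would have to be trimmed afterward by using the timestamps in the output.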

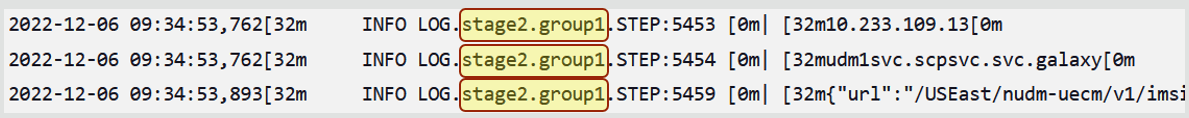

2.7.1 Application Log Collection and Parallel Test Execution Integration

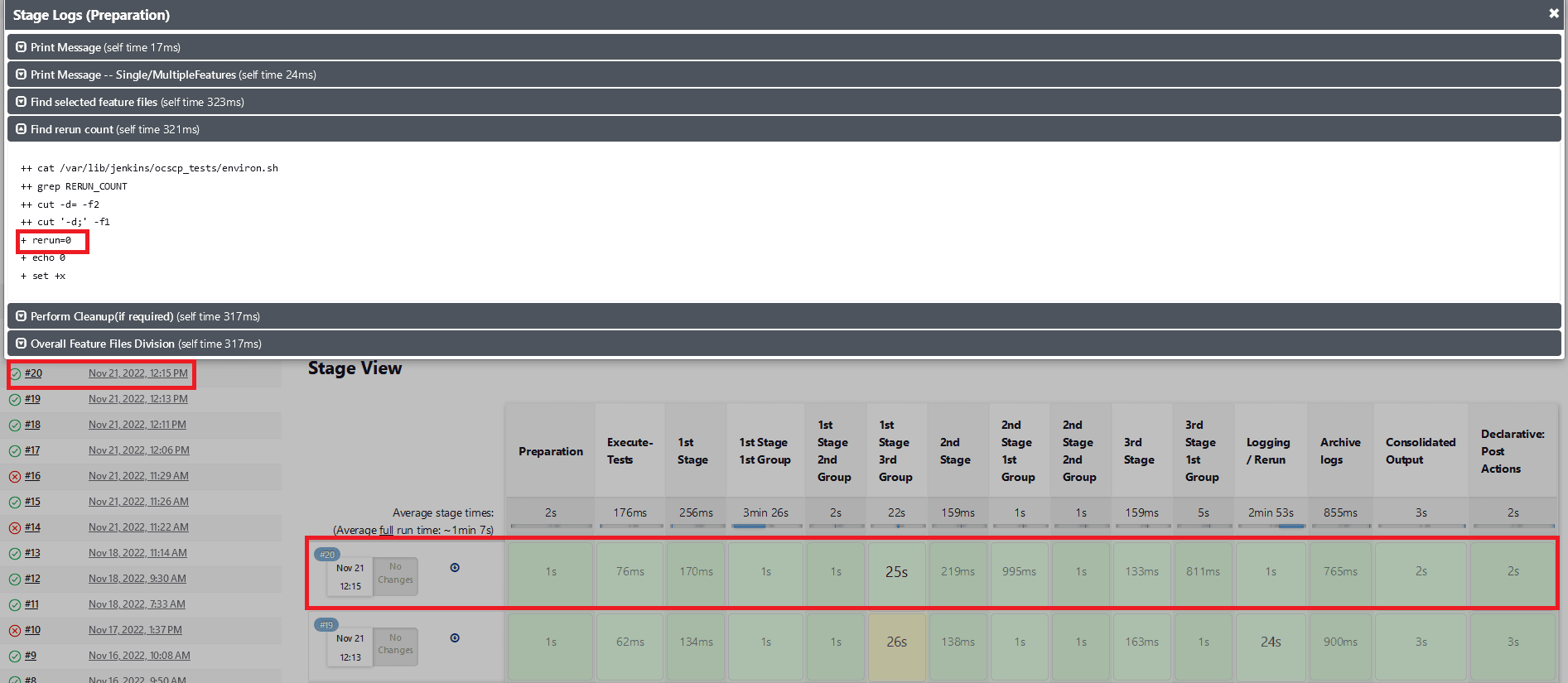

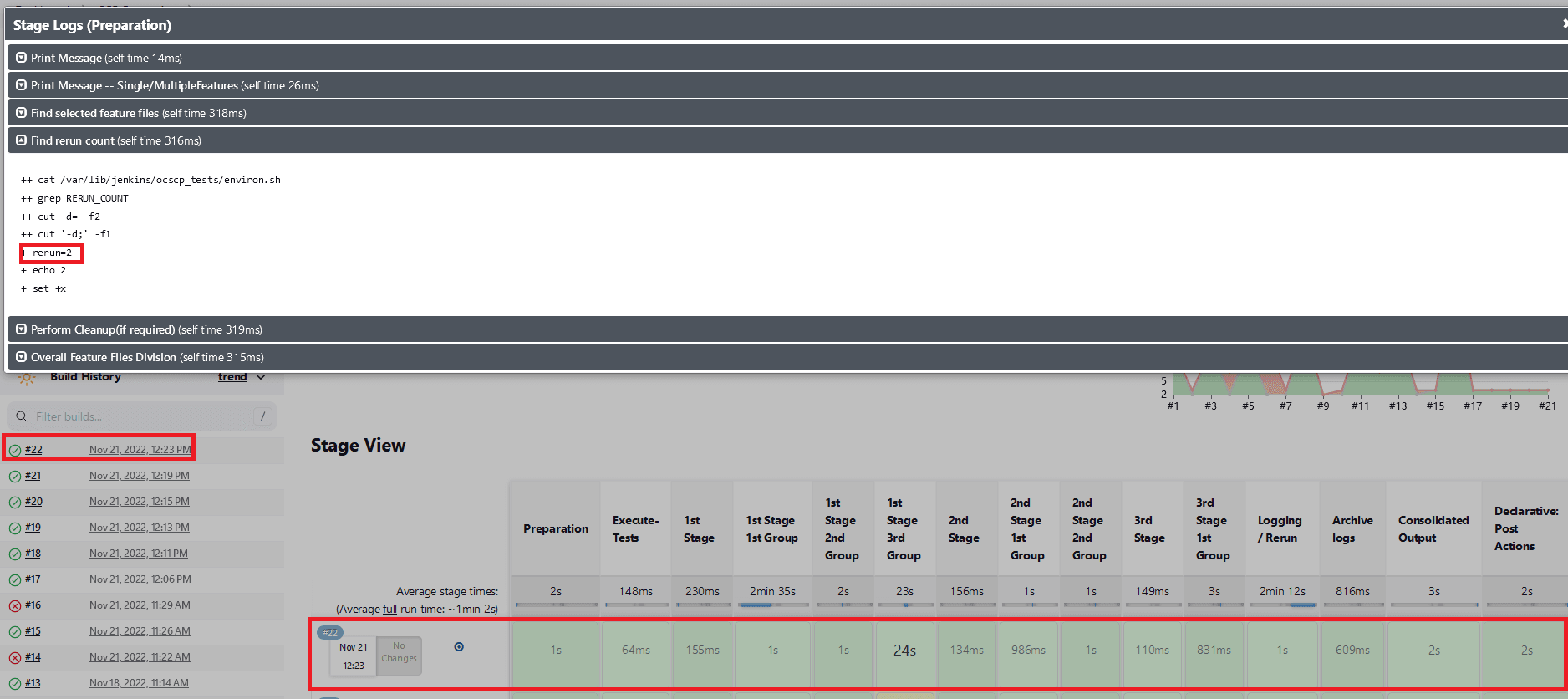

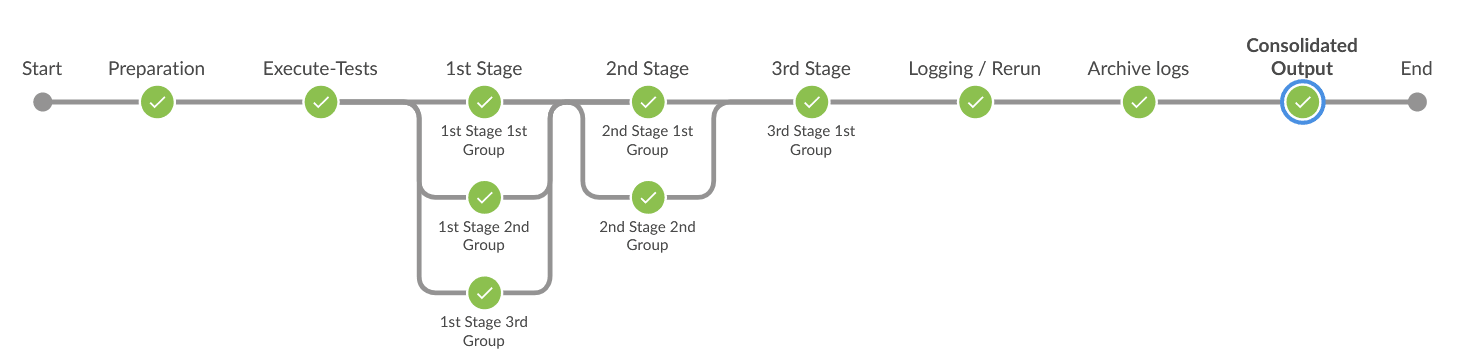

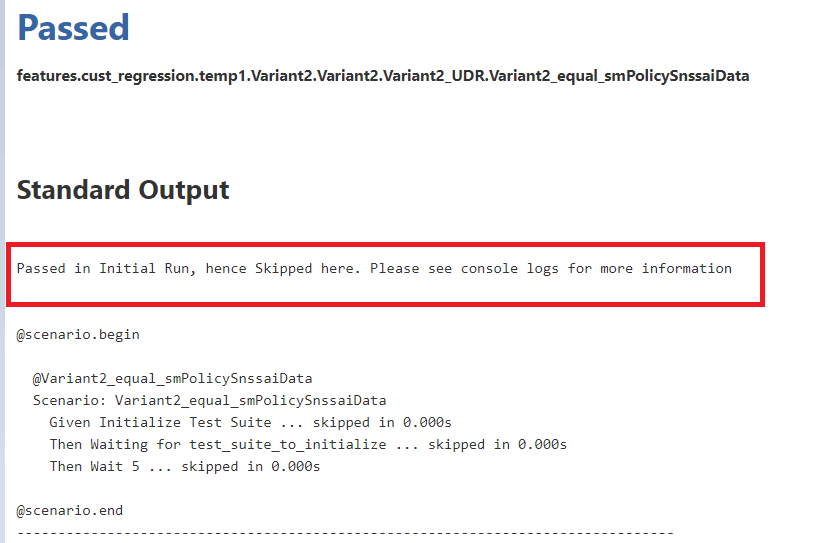

A new stage, "Logging/Rerun", has been added at the end of the Execute-Tests stage to collect rerun logs, such as applog and PCAP logs, by running the failed test cases in sequence.

Figure 2-23 Logging/Rerun new stage

If the Fetch_Log_Upon_Failure parameter is set to YES and any test case fails in the initial run, then:

- The failed test case reruns and log collection starts in the Logging/Rerun stage after the initial run is completed for all the test cases.

- The logs from the initial execution are collected, but they might be incorrect.

- Even if the rerun parameter is set to 0, the failed test case reruns in the Logging/Rerun stage and the log is collected.

Note:

Not applicable for all the NFs.

If the Fetch_Log_Upon_Failure parameter is set to NO and any test case fails in the initial run, then the failed test case rerun starts in the same stage after the initial execution is over for all the test cases in its group.

2.8 ATS Maintenance Scripts

- Taking a backup of the ATS custom folders and Jenkins pipeline.

- Viewing the configuration and restoring the Jenkins pipeline.

- Viewing the configuration and installing or uninstalling ATS and stubs.

ATS maintenance scripts are present in the ATS image at the following path: /var/lib/jenkins/ocats_maint_scripts

Run the following command to copy the scripts from the pod to the bastion:

kubectl cp <NAMESPACE>/<POD_NAME>:/var/lib/jenkins/ocats_maint_scripts <DESTINATION_PATH_ON_BASTION>

For example:

kubectl cp ocpcf/ocats-ocats-policy-694c589664-js267:/var/lib/jenkins/ocats_maint_scripts /home/meta-user/ocats_maint_scripts

2.8.1 ATS Scripts

ATS maintenance scripts are used to perform various tasks related to ATS and the Jenkins pipeline.

- ats_backup.sh: This script requires the user's input and takes a backup of the ATS custom folders, Jenkins jobs, and user's folders on the user's system. The backup can be of the Jenkins jobs and user's folder, the custom folders, or both. The custom folders include cust_regression, cust_newfeatures, cust_performance, cust_data, and custom_config. For a Jenkins job or a user's folder, the script only takes a backup of the config.xml file. The script also asks the user where to store the backup on the user's system (the default path is the location from where the script is being run), creates a backup folder there, and copies the chosen folders from the corresponding ATS into the backup folder. The backup folder name uses the following notation: ats_<version>_backup_<date>_<time>.

- ats_uninstall.sh: This script requires the user's input and uninstalls the corresponding ATS.

- ats_install.sh: This script requires the user's input and installs a new ATS. If PVEnabled is set to true, the script also reads the PVC name from values.yaml and creates values.yaml before installation. Also, if needed, the script performs the postinstallation steps, such as copying tests and Jenkins jobs' folders from the ats_data tar file to the ATS pod when PV is deployed, and then restarts the pod.

- ats_restore.sh: This script requires the user's inputs and restores the pipeline and view configuration of the new release ATS by referring to the last release ATS Jenkins jobs and the user's configuration. The user decides whether to use the backup folders from the user's system to restore the ATS configuration. If the user instructs the script to use the backup from the system, the script requires the path of the backup and uses the backup to restore. Otherwise, the script requires the last ATS Helm release name to refer to its Jenkins jobs and the user's configuration to restore.

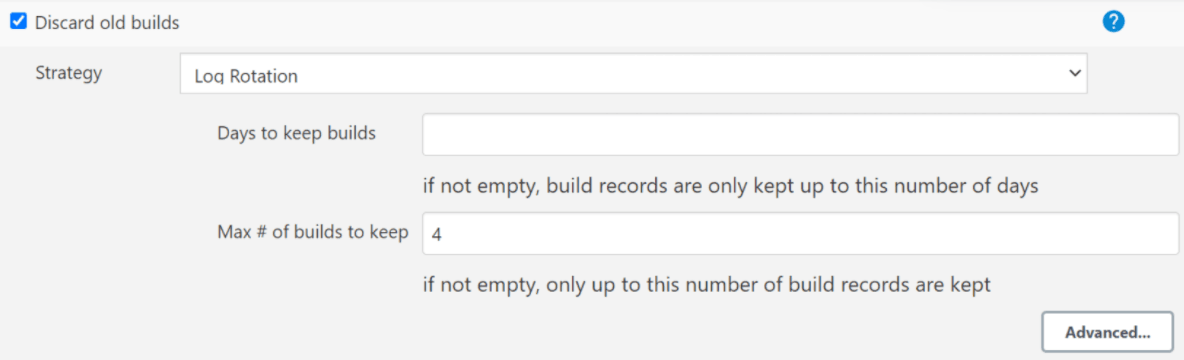

The script refers to the last release of ATS Jenkins pipelines and sets the Discard old builds property if this property is set in the last release of ATS for a pipeline but not in the current release. If this property is set in both releases, the script just updates the values according to the last release. Also, the script restores the pipeline environment variables values as per the last release of ATS. If any custom pipeline (created by the user) was present in the last release of ATS, the script restores that as well. It also restores the extra views created by NF users, for example, policy users, SCP users, and NRF users. Moreover, the script displays messages about the pending configuration that the user needs to perform manually. For example, a new pipeline or a new environment variable (for a pipeline) is introduced in the new release.

While deploying ATS without PV, Jenkins needs to be restarted for the restore process to complete. If the last release ATS contains the Configuration_Type parameter, the Configuration_Type script needs to be approved with the In Process Script Approval setting under Manage ATS in Jenkins for the restore process to complete.

2.8.2 Updating ats_install.sh

Currently, the ats_install.sh script copies the tests folder and Jenkins jobs folder into the ATS pod and then restarts the pod when deployed with PV.

How to Update ats_install.sh

Other NFs can also use the ats_install.sh scripts. However, additional post installation steps may have to be performed manually for a few NFs.

- In the ats_install.sh script, there is a post install section between ####POST_INSTALL_START#### and #### POST_INSTALL_END ####.

- Add the required post install commands.

Note:

These commands are NF-specific.

- Use the following variables in the commands:

- $namespace for the namespace value

- $pod_name for the pod name

- $ats_data_path for the ats_data folder path (it has the tests folder and the Jenkins jobs folder provided as a tar file in the ATS package)

- In the if-else block related to whether PV is enabled or not, add the following:

- Add a command specific to PVEnabled=true in the if block.

- Add a command specific to PVEnabled=false in the else block.

- Add the required post install commands.

- For additional inputs, enter the required code between #### INPUT_START #### and #### INPUT_END ####.

2.8.3 Restarting Jenkins without Restarting Pod

Perform the following procedure to restart Jenkins without restarting pods:

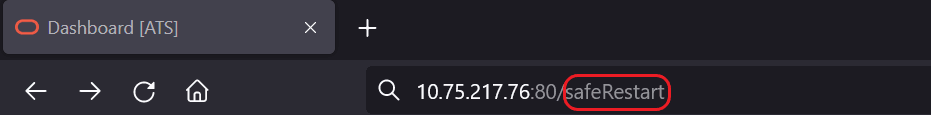

- Log in as the Jenkins admin.

- Go to <Jenkins_IP>:<port>/safeRestart, for example, 10.87.73.32:32156/safeRestart.

Figure 2-24 Safe Restart

- Click Yes.

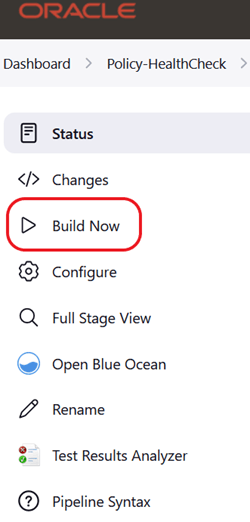

Figure 2-25 Restart Jenkins

2.8.4 Updating Stub Scripts

- stub_uninstall.sh: This script requires the user's inputs and uninstalls all the stubs.

- stub_install.sh: This script requires the user's inputs and installs all the stubs.

Note:

Currently, stub_uninstall.sh and stub_install.sh work.

- Go to the stub folder.

- From each script:

- Remove the CNC Policy-specific stubs inputs (dns, amf, and ldap), and add the input code blocks for NRF-specific stubs.

- For the stubs to uninstall, change the value of the stubUninstallList variable, and delete the variables for the CNC Policy-specific stubs below it.

Note:

stubUninstallList contains the Helm release names of the common stubs that are deployed generally.

- Declare the variables for the NRF-specific stubs below the stubUninstallList line.

- Remove the Helm uninstallation commands of the CNC Policy-specific stubs, and add the Helm uninstallation commands of the NRF-specific stubs.

- For the stubs to install, change the value of the stubInstallList variable, and delete the variables for the CNC Policy-specific stubs below it.

Note:

stubInstallList contains the Helm release names of the common stubs that are deployed generally.

- Declare the variables for the NRF-specific stubs below the stubInstallList line.

- Remove the Helm installation commands of the CNC Policy-specific stubs, and add the Helm installation commands of the NRF-specific stubs.

2.8.5 Running ATS and Stub Deployment Scripts

Perform the following procedure to run ATS and stub deployment scripts:

Note:

If you want to take a backup of the custom folders or Jenkins jobs and user's configuration or both, run the ats_backup.sh script.

- Run the ats_install.sh script to install the new release ATS (values.yaml of the ATS Helm chart must be updated before this step).

- Run the ats_restore.sh script to restore the new ATS pipeline and view configuration.

Note:

- You might need to perform the manual steps required for the restore script.

- You must copy all the necessary changes to the new release ATS from the last release ATS. To identify the changes made in the last release, refer to the custom folders in the last release ATS backup on the system. This requires that a backup was taken using ats_backup.sh before this step.

- You can remove the last release ATS pod using the ats_uninstall.sh script while continuing to retain the last release PVC. You can use the last release PVC to port backward. Delete the last release PVC when you do not require the backward porting.

- Run the stub_install.sh script to install all the new release stubs (values.yaml of the stub Helm charts must be updated before this step).

- Run the stub_uninstall.sh script to uninstall all the last release stubs.

2.9 ATS System Name and Version Display on the ATS GUI

This feature displays the ATS system name and version on the ATS GUI.

- ATS system name: Abbreviated product name followed by NF name.

- ATS Version: Release version of ATS.

2.10 ATS Tagging Support

The ATS Tagging Support feature assists in running the feature files after filtering features and scenarios based on tags. Instead of manually navigating through several feature files, the user can save time by using this feature.

- Feature_Include_Tags: The features that contain any of the tags available in the Feature_Include_Tags field are considered for tagging.

- For example, "cne-common", "config-server". All the features that have either "cne-common" or "config-server" tags are taken into consideration.

- Feature_Exclude_Tags: The features that contain none of the tags available in the Feature_Exclude_Tags field are considered for tagging.

- For example, "cne-common", "config-server". All the features that have neither "cne-common" nor "config-server" as tags are taken into consideration.

- Scenario_Include_Tags: The scenarios that contain any of the tags available in the Scenario_Include_Tags field are considered.

- For example, "sanity", "cleanup". The scenarios that have either "sanity" or "cleanup" tags are taken into consideration.

- Scenario_Exclude_Tags: The scenarios that contain none of the tags available in the Scenario_Exclude_Tags field are considered.

- For example, "sanity", "cleanup". The scenarios that have neither "sanity" nor "cleanup" as tags are taken into consideration.

Filter with Tags

- On the NF home page, click any new feature or regression pipeline, where you want to use this feature.

- In the left navigation pane, click Build with Parameters. The following image appears.

Figure 2-26 Filter with Tags

- Select Yes from the FilterWithTags drop-down menu. The result shows four input fields.

The default value of the FilterWithTags field is "No".

- The input fields serve as a search or filter, displaying all tags that match the prefix entered. You can select one or multiple tags.

Figure 2-27 Tags Matching with Entered Prefix

- Select the required tags from the different tags list and click Submit.

The specified feature-level tags are used to select the features that contain at least one of the include tags and none of the exclude tags. Either or both of the fields may be left empty. When both fields are empty, all features are taken into consideration.

The scenario-level tags are then used to select scenarios from the features selected above. Only scenarios with at least one of the include tags and none of the exclude tags are considered. Either or both of the fields may be left empty. When both fields are empty, all the scenarios from the selected feature files are considered.
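The two-level selection described above can be sketched in Python as follows. The data layout, the function names, and the rule that a feature is dropped when none of its scenarios survive are assumptions made for illustration (the last one follows the wording of the results in Table 2-6); the sketch is not the ATS implementation.

```python
def matches(tags, include, exclude):
    """True if tags hold any include tag (or include is empty) and no exclude tag."""
    tags = set(tags)
    if include and not tags & set(include):
        return False
    return not tags & set(exclude)


def filter_features(features, feature_include=(), feature_exclude=(),
                    scenario_include=(), scenario_exclude=()):
    """Return {feature name: [scenario names]} for everything that is selected."""
    selected = {}
    for name, feature in features.items():
        # Step 1: select features by their feature-level tags.
        if not matches(feature["tags"], feature_include, feature_exclude):
            continue
        # Step 2: select scenarios within the surviving features.
        scenarios = [
            scenario for scenario, tags in feature["scenarios"].items()
            if matches(tags, scenario_include, scenario_exclude)
        ]
        if scenarios:  # a feature without selected scenarios is not listed
            selected[name] = scenarios
    return selected


# Hypothetical feature files and tags, reusing the example tags from this section.
features = {
    "a.feature": {"tags": ["abc"], "scenarios": {"s1": ["sanity"], "s2": ["cleanup"]}},
    "b.feature": {"tags": ["ghi"], "scenarios": {"s3": ["sanity", "cne"]}},
    "c.feature": {"tags": ["def", "ghi"], "scenarios": {"s4": ["cne"]}},
}
print(filter_features(
    features,
    feature_include=["abc", "def"],
    feature_exclude=["ghi"],
    scenario_include=["sanity", "cne"],
))
```

With these inputs, only a.feature passes the feature-level filter, and only its scenario s1 carries one of the scenario include tags.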

Note:

- If you select the Select_Option as 'All', all the displayed features and scenarios will run.

- If you select the Select_Option as 'Single/MultipleFeatures', you can select specific features, and only those features and their respective scenarios run.

2.10.1 Combination of Tags and their Results

The combination of tags and expected results are as follows.

Table 2-6 Result of Filtered Tags

| Feature_Include | Feature_Exclude | Scenario_Include | Scenario_Exclude | Results |

|---|---|---|---|---|

| - | - | - | - | All the features and scenarios are taken into consideration. |

| "abc","def" | - | - | - | Features with either "abc" or "def" tags and all scenarios from the filtered features are taken into consideration. |

| - | "abc","def" | - | - | All the features with neither "abc" nor "def" tags and all scenarios from the filtered features are taken into consideration. |

| - | - | "sanity","cne" | - | Scenarios with either "sanity" or "cne" tags and features having these scenarios are taken into consideration. |

| - | - | - | "sanity","cne" | Scenarios with neither "sanity" nor "cne" tags and features having these filtered scenarios are taken into consideration. |

| "abc","def" | "ghi" | - | - | Features with either "abc" or "def" tags but without the "ghi" tag and all scenarios from filtered features are taken into consideration. |

| "abc","def" | - | "sanity","cne" | - | Scenarios only with either "sanity" or "cne" tags and only features that contain these scenarios and have either "abc" or "def" as feature tags are taken into consideration. |

| "abc","def" | - | - | "sanity","cne" | Scenarios with neither "sanity" nor "cne" tags and only features that contain the filtered scenarios and have either "abc" or "def" feature tags are taken into consideration. |

| - | "ghi" | "sanity","cne" | - | Features without the "ghi" tag and scenarios with either "sanity" or "cne" tags from the filtered features are taken into consideration. |

| - | "ghi" | - | "sanity","cne" | Features without the "ghi" tag and scenarios without the "sanity" and "cne" tags from filtered features are taken into consideration. |

| - | - | "sanity","cne" | "cleanup" | Scenarios with either the "sanity" or "cne" tags and without the "cleanup" tag and features with filtered scenarios are taken into consideration. |

| "abc","def" | "ghi" | "sanity","cne" | - | Scenarios with either the "sanity" or "cne" tags and features that have these scenarios and have either the "abc" or "def" tags but not the "ghi" tag are taken into consideration. |

| "abc","def" | - | "sanity","cne" | "cleanup" | Scenarios with either the "sanity" or "cne" tags and without the "cleanup" tag, and features having the filtered scenarios and having the feature tags either "abc" or "def" are taken into consideration. |

| "abc","def" | "ghi" | - | "cleanup" | Scenarios without the tag "cleanup", and features with filtered scenarios and having either "abc" or "def" as feature tags but not the "ghi" tag are taken into consideration. |

| - | "ghi" | "sanity","cne" | "cleanup" | Scenarios with either the "sanity" or "cne" tags and without the "cleanup" tag, and features with filtered scenarios and not the tag "ghi," are taken into consideration. |

| "abc","def" | "ghi" | "sanity","cne" | "cleanup" | Scenarios with either "sanity" or "cne" tags and without the "cleanup" tag, and features with filtered scenarios and feature tags either "abc" or "def" but without the tag "ghi" are taken into consideration. |

Note:

- The tags mentioned in the table are just examples; they may or may not be actually used.

- The Replay option in the Jenkins GUI is not supported for tag-related test case execution. Always trigger builds related to tagging from the Build with Parameter step, and do not replay any previous builds.

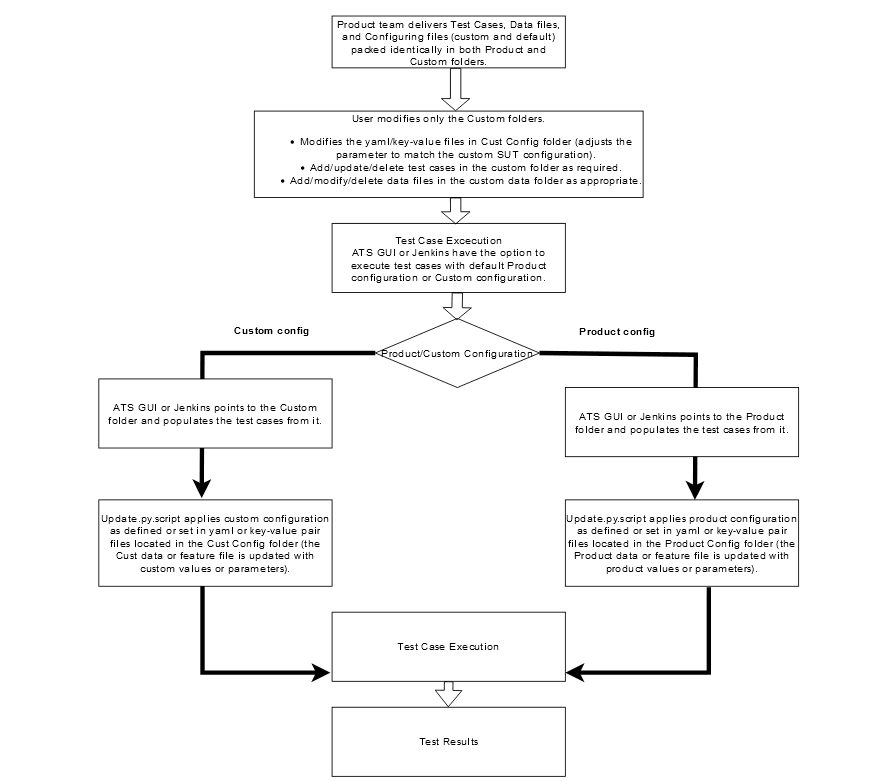

2.11 Custom Folder Implementation

The Custom Folder Implementation feature allows the user to update, add, or delete test cases without affecting the original product test cases in the new features, regression, and performance folders. The implemented custom folders are cust_newfeatures, cust_regression, and cust_performance. The custom folders contain the newly created, customised test cases.

Initially, the product test case folders and custom test case folders will have the same set of test cases. The user can perform customization in the custom test case folders, and ATS always runs the test cases from the custom test case folders. If the option "Configuration_Type" is present on the GUI, the user needs to set its value to "Custom_Config" to populate test cases from the custom test case folders.

Figure 2-28 Custom Config Folder

- Separate folders such as cust_newfeatures, cust_regression, and cust_performance are created to hold the custom cases.

- The prepackaged test cases are available in the newfeature and regression folders.

- The user copies the required test cases to the cust_newfeatures and cust_regression folders, respectively.

- Jenkins always points to the cust_newfeatures and cust_regression folders to populate the test cases in the menu.

When ATS is launched for the first time, no test cases appear in the menu if the cust folders are not populated. To avoid this, it is recommended to prepopulate both the cust and the original folders, and to ask the user to modify only the cust folders if needed.
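The prepopulation recommended above can be sketched in Python as a copy that runs only when a custom folder is missing or empty, so that existing customizations are never overwritten. The original folder names (`newfeatures`, `regression`, `performance`) and the function name are assumptions made for this example; adjust them to the folder layout of the ATS package in use.

```python
import shutil
import tempfile
from pathlib import Path

# Assumed mapping of original folders to custom folders.
FOLDER_PAIRS = {
    "newfeatures": "cust_newfeatures",
    "regression": "cust_regression",
    "performance": "cust_performance",
}


def prepopulate_custom_folders(root):
    """Copy original test folders into empty or missing custom folders."""
    root = Path(root)
    populated = []
    for original, custom in FOLDER_PAIRS.items():
        source, target = root / original, root / custom
        if not source.is_dir():
            continue  # nothing to copy from
        if target.is_dir() and any(target.iterdir()):
            continue  # never overwrite the user's customizations
        shutil.copytree(source, target, dirs_exist_ok=True)
        populated.append(custom)
    return populated


# Demonstration in a throwaway directory.
with tempfile.TemporaryDirectory() as tmp:
    regression = Path(tmp) / "regression"
    regression.mkdir()
    (regression / "sample.feature").write_text("Feature: sample\n")
    print(prepopulate_custom_folders(tmp))  # first run copies the folder
    print(prepopulate_custom_folders(tmp))  # second run leaves it alone
```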

Figure 2-29 Summary of Custom Folder Implementation

2.12 Health Check

The Health Check functionality checks the health of the System Under Test (SUT).

Earlier, ATS used Helm test functionality to check the health of the System Under Test (SUT). With the implementation of the ATS Health Check pipeline, the SUT health check process has been automated. ATS health checks can be performed on webscale and non-webscale environments.

Convert a Value in Base64

echo -n "value" | base64

For example:

echo -n "126.98.76.43" | base64

Deploying Health Check in a Webscale Environment

- Set the Webscale parameter to 'true' and set the following parameters, encoded with Base64, in the ATS values.yaml file:

- Set the following parameters to encrypted data:

webscalejumpserverip: encrypted-data

webscalejumpserverusername: encrypted-data

webscalejumpserverpassword: encrypted-data

webscaleprojectname: encrypted-data

webscalelabserverFQDN: encrypted-data

webscalelabserverport: encrypted-data

webscalelabserverusername: encrypted-data

webscalelabserverpassword: encrypted-data

Here, encrypted-data stands for the Base64-encoded value of each parameter.

For example:

webscalejumpserverip=$(echo -n '10.75.217.42' | base64), Where Webscale Jump server ip needs to be provided

webscalejumpserverusername=$(echo -n 'cloud-user' | base64), Where Webscale Jump server Username needs to be provided

webscalejumpserverpassword=$(echo -n '****' | base64), Where Webscale Jump server Password needs to be provided

webscaleprojectname=$(echo -n '****' | base64), Where Webscale Project Name needs to be provided

webscalelabserverFQDN=$(echo -n '****' | base64), Where Webscale Lab Server FQDN needs to be provided

webscalelabserverport=$(echo -n '****' | base64), Where Webscale Lab Server Port needs to be provided

webscalelabserverusername=$(echo -n '****' | base64), Where Webscale Lab Server Username needs to be provided

webscalelabserverpassword=$(echo -n '****' | base64), Where Webscale Lab Server Password needs to be provided
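The same encoding can be produced with the Python standard library, which can be convenient when several values have to be prepared at once. The following is a minimal sketch; the helper names are made up for this example, and the sample values are the ones used in the examples above.

```python
import base64


def encode_value(value):
    """Base64-encode a string, equivalent to: echo -n 'value' | base64"""
    return base64.b64encode(value.encode("utf-8")).decode("ascii")


def decode_value(encoded):
    """Decode a Base64 string back to plain text, useful for verification."""
    return base64.b64decode(encoded).decode("utf-8")


settings = {
    "webscalejumpserverip": "10.75.217.42",
    "webscalejumpserverusername": "cloud-user",
}
encoded = {key: encode_value(value) for key, value in settings.items()}
for key, value in encoded.items():
    print(f"{key}: {value}")
```

Decoding each value again with `decode_value` is a quick way to confirm that no trailing newline was encoded by mistake, which is the reason the shell examples use `echo -n`.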

Running Health Check Pipeline in a Webscale Environment

- Log in to ATS using respective <NF> login credentials.

- Click <NF>HealthCheck pipeline and then click Configure.

Note:

<NF> denotes the network function. For example, in Policy, it is called the Policy-HealthCheck pipeline.

Figure 2-30 Configure Healthcheck

- Provide parameter a with the deployed Helm release name. If there are multiple releases, use commas to separate the Helm release names.

//a = helm releases [Provide Release Name with Comma Separated if more than 1 ]

- Provide parameter c with the appropriate Helm command, such as helm, helm3, or helm2.

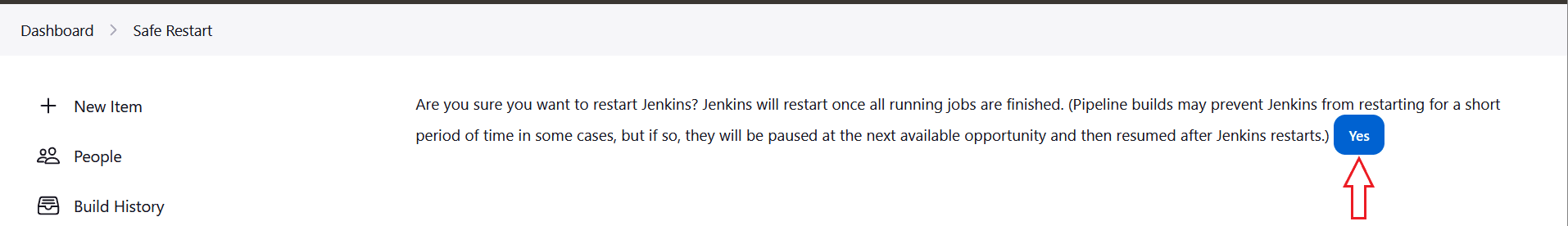

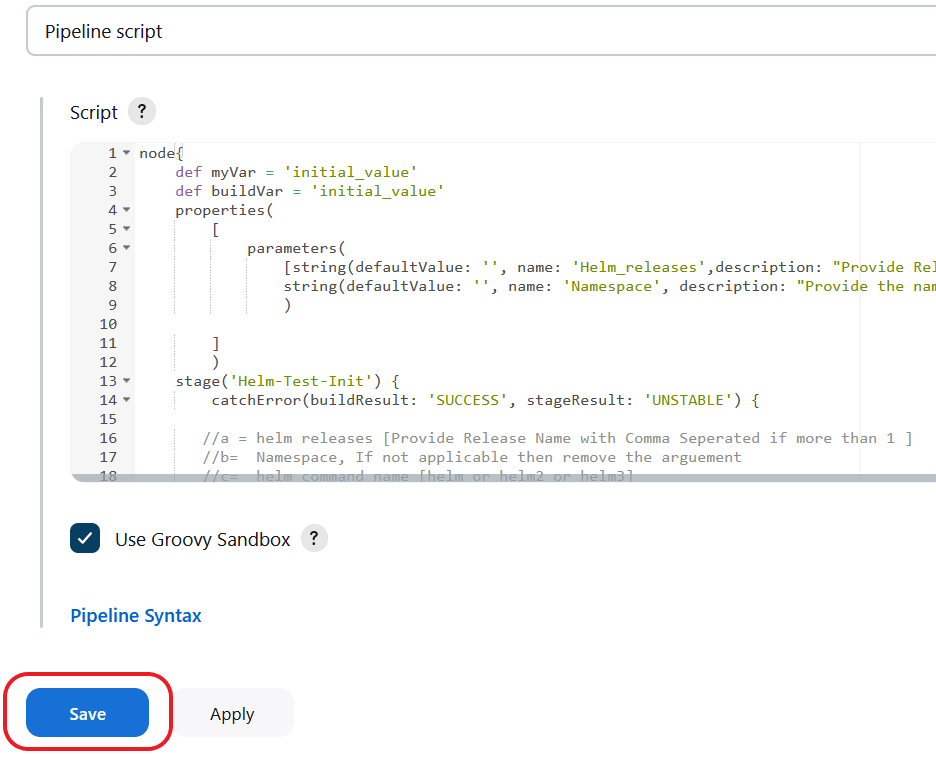

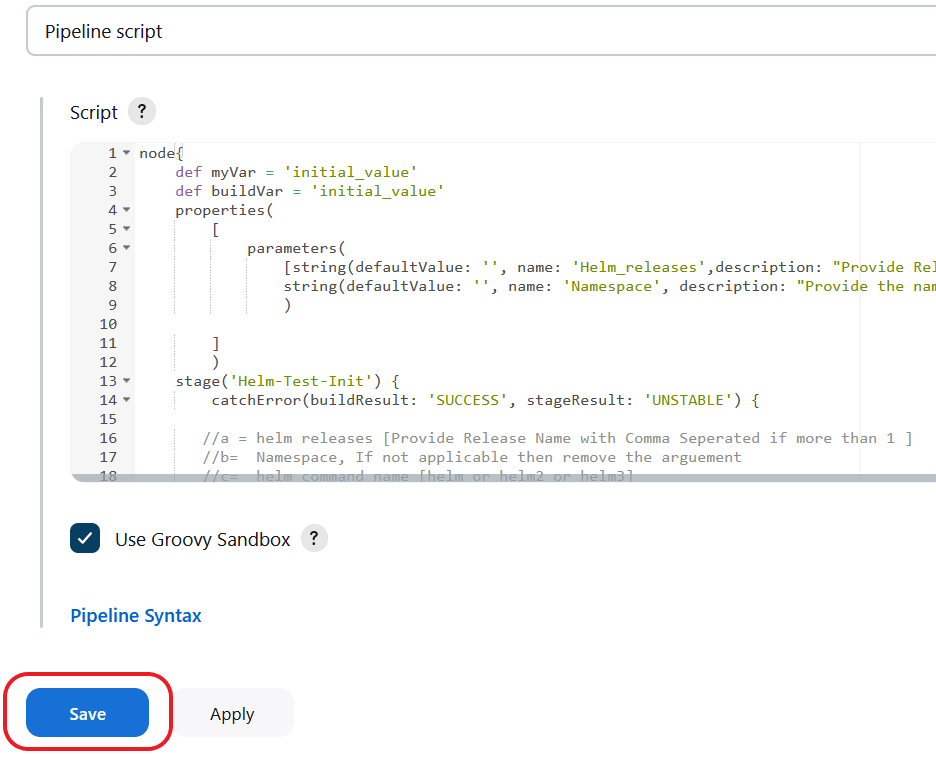

//c = helm command name [helm or helm2 or helm3]

Figure 2-31 Save the Changes

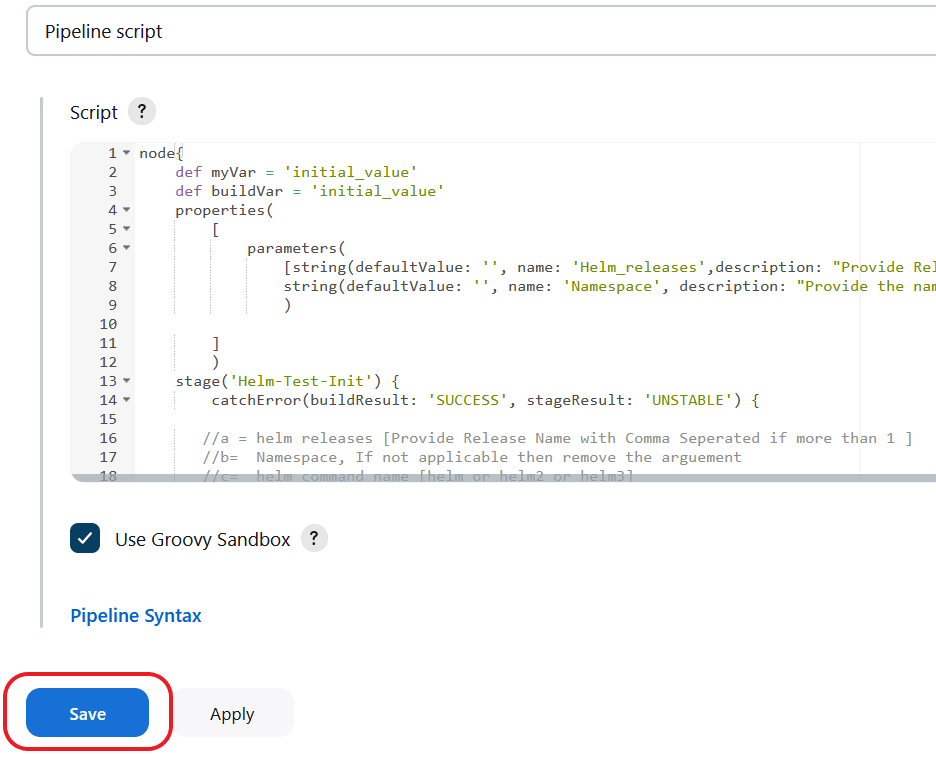

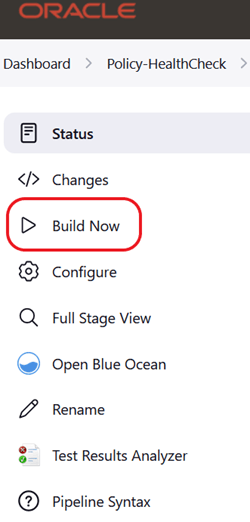

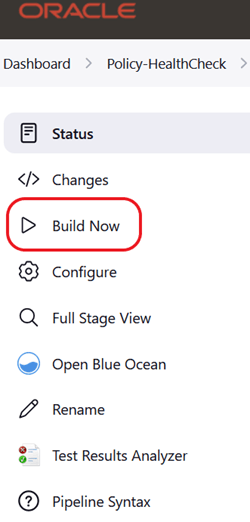

- Save the changes and click Build Now. ATS runs the health check on the respective network function.

Figure 2-32 Build Now

Deploying Health Check Pipeline in an OCI Environment

To use an SSH private key, create healthcheck-oci-secret and set the value of the key "passwordAuthenticationEnabled" to false.

Creating healthcheck-oci-secret

kubectl create secret generic healthcheck-oci-secret --from-file=bastion_key_file='<path of bastion ssh private key file>' --from-file=operator_instance_key_file='<path of operator instance ssh private key file>' -n <ATS namespace>

For example:

kubectl create secret generic healthcheck-oci-secret --from-file=bastion_key_file='/tmp/bastion_private_key' --from-file=operator_instance_key_file='/tmp/operator_instance_private_key' -n seppsvc

Note:

- Maintain the name of the secret as "healthcheck-oci-secret".

- Ensure that the '--from-file' keys retain the same names: "bastion_key_file" and "operator_instance_key_file".

- If the SSH private key is identical for both the bastion and operator instance, you can use the same path for both in the secret creation command.

Perform the following procedure to deploy ATS Health Check in an OCI environment:

- To use password, provide base64 encoded values for key "password" for both bastion and operator instances, and set the value of key passwordAuthenticationEnabled to "true".

- Set the following parameter to encrypted data:

envtype: encrypted-data
ociHealthCheck:
  passwordAuthenticationEnabled: true or false
  bastion:
    ip: encrypted-data
    username: encrypted-data
    password: encrypted-data
  operatorInstance:
    ip: encrypted-data
    username: encrypted-data
    password: encrypted-data

Note:

All fields are mandatory except the passwords. The "password" field needs to be updated only when the "passwordAuthenticationEnabled" field is set to true; otherwise, it can retain its default value.
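As an illustration of how the encoded values for the parameters above might be produced, the sketch below prints an ociHealthCheck block for SSH key authentication. It assumes that "encrypted-data" means a base64-encoded value, as in the password step above. The helper names `b64` and `render_oci_health_check`, the addresses, and the user name are all placeholders invented for this example. The password keys are left out on the assumption that they keep their default values, and envtype is omitted because its plaintext value is not given here.

```shell
# Hypothetical helper: base64-encode a value without a trailing newline.
b64() { printf '%s' "$1" | base64; }

# Hypothetical helper: print the ociHealthCheck block for SSH key authentication.
# Arguments: bastion IP, bastion user, operator instance IP, operator instance user.
render_oci_health_check() {
  cat <<EOF
ociHealthCheck:
  passwordAuthenticationEnabled: false
  bastion:
    ip: $(b64 "$1")
    username: $(b64 "$2")
  operatorInstance:
    ip: $(b64 "$3")
    username: $(b64 "$4")
EOF
}

# Placeholder addresses and user names, for illustration only.
render_oci_health_check '192.0.2.10' 'opc' '192.0.2.20' 'opc'
```

The printed block can then be compared against, or copied into, the corresponding section of the ATS values file.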

Running Health Check Pipeline in an OCI Environment

- Log in to ATS using the respective <NF> login credentials.

- Click <NF>HealthCheck pipeline and then click Configure.

Note:

<NF> denotes the network function. For example, in Policy, it is called the Policy-HealthCheck pipeline.

Figure 2-33 Configure Healthcheck

- Provide parameter a with the name of the deployed Helm release. If there are multiple releases, separate the Helm release names with commas.

//a = helm releases [Provide Release Name with Comma Separated if more than 1 ]

Provide parameter c with the appropriate Helm command, such as helm, helm3, or helm2.

//c = helm command name [helm or helm2 or helm3]

Figure 2-34 Save the Changes

- Save the changes and click Build Now. ATS runs the health check on the respective network function.

Figure 2-35 Build Now

Deploying Health Check in a Non-Webscale or Non-OCI Environment

Perform the following procedure to deploy ATS Health Check in a non-webscale or non-OCI environment such as OCCNE:

Set the Webscale parameter to 'false', and set the following parameters to base64-encoded values in the ATS values.yaml file:

occnehostip: encrypted-data

occnehostusername: encrypted-data

occnehostpassword: encrypted-data

Example:

occnehostip=$(echo -n '10.75.217.42' | base64), where the OCCNE host IP must be provided

occnehostusername=$(echo -n 'cloud-user' | base64), where the OCCNE host username must be provided

occnehostpassword=$(echo -n '****' | base64), where the host password must be provided
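The `-n` flag in the examples above matters: without it, echo appends a newline that becomes part of the encoded value. The following sketch shows the difference and decodes the result as a check. The helper names `encode_value` and `decode_value` are made up for this illustration; `printf '%s'` is used as a portable equivalent of `echo -n`.

```shell
# Hypothetical helpers for illustration.
# Encode a value without a trailing newline (equivalent to echo -n ... | base64).
encode_value() { printf '%s' "$1" | base64; }
# Decode a value to confirm what will be read back.
decode_value() { printf '%s' "$1" | base64 --decode; }

occnehostusername=$(encode_value 'cloud-user')
echo "occnehostusername: $occnehostusername"        # Y2xvdWQtdXNlcg==
echo "decoded: $(decode_value "$occnehostusername")" # cloud-user

# Pitfall: plain echo appends a newline, which changes the encoded value.
with_newline=$(echo 'cloud-user' | base64)
echo "encoded with trailing newline: $with_newline"  # Y2xvdWQtdXNlcgo=
```

Decoding each value before placing it in the values.yaml file is a quick way to catch a stray newline or a quoting mistake.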

Running Health Check Pipeline in a Non-Webscale or Non-OCI Environment

Perform the following procedure to run the ATS Health Check pipeline in a non-webscale or non-OCI environment such as OCCNE:

- Log in to ATS using the respective <NF> login credentials.

- Click <NF>HealthCheck pipeline and then click Configure.

- Provide parameter a with the name of the deployed Helm release. If there are multiple releases, separate the Helm release names with commas.

Provide parameter b with SUT deployed namespace name.

Provide parameter c with the appropriate Helm command, such as helm, helm3, or helm2.

//a = helm releases [Provide Release Name with Comma Separated if more than 1 ]

//b = Namespace, If not applicable to WEBSCALE environment then remove the argument

//c = helm command name [helm or helm2 or helm3]

Figure 2-36 Save the Changes

- Save the changes and click Build Now. ATS runs the health check on the respective network function.

Figure 2-37 Build Now

By clicking Build Now, you can run the health check on ATS and store the result in the console logs.
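The format of parameters a and c described in the steps above can be checked before they are entered in the pipeline configuration. The sketch below only illustrates that format and does not show how the ATS pipeline itself parses the values; `valid_helm_command`, `split_releases`, and the release names are hypothetical.

```shell
# Hypothetical helper: succeed only when parameter c names a supported Helm command.
valid_helm_command() {
  case "$1" in
    helm|helm2|helm3) return 0 ;;
    *) return 1 ;;
  esac
}

# Hypothetical helper: print one release name per line from the
# comma-separated value of parameter a, trimming spaces around each name.
split_releases() {
  printf '%s\n' "$1" | tr ',' '\n' | sed 's/^ *//; s/ *$//' | grep -v '^$'
}

a='release-one, release-two'   # placeholder release names
c='helm3'

if valid_helm_command "$c"; then
  split_releases "$a"
else
  echo "unsupported Helm command: $c" >&2
fi
```

With the placeholder values shown, the script prints each release name on its own line, which makes a misplaced comma or an empty entry easy to spot.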

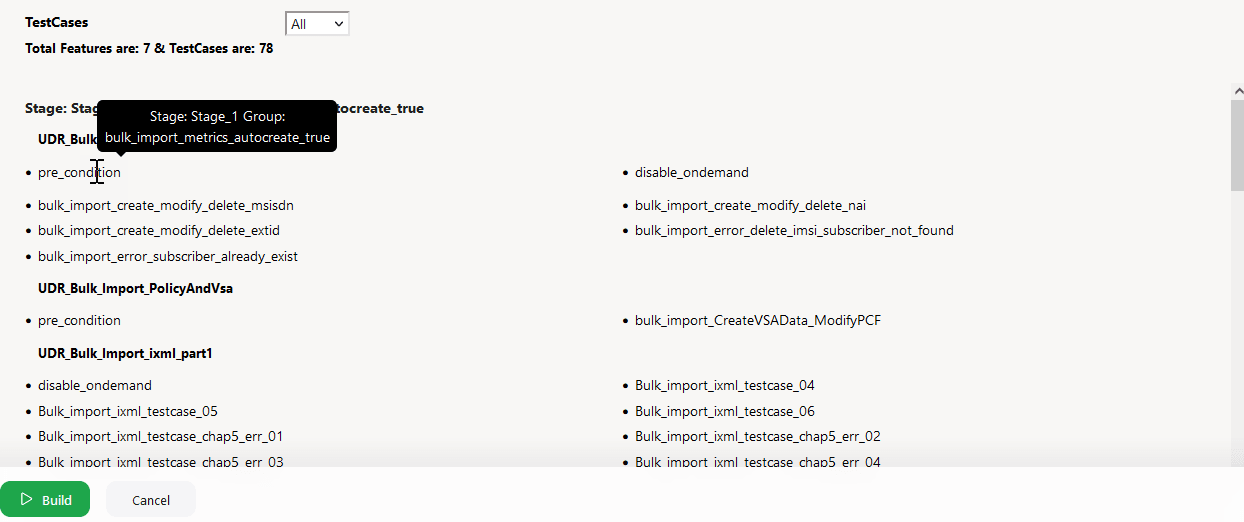

2.13 Individual Stage Group Selection

The Individual Stage Group Selection feature allows you to select and run one or more stages or groups by selecting the check box for each corresponding stage or group.

- Click NF-Regression or NF-NewFeatures, and then click Build with Parameters.

- In the FEATURE and TESTCASES section, click Select from the Features drop-down menu.

- Select the check boxes for the stages or groups you want to run from the list available for execution.

Figure 2-38 Stages or Groups Selection

- Scroll down to click Build.

2.14 Lightweight Performance

The Lightweight Performance feature allows you to run performance test cases. ATS introduces a new pipeline named "<NF>-Performance", where NF stands for Network Function, for example, Policy-Performance.

Figure 2-39 Sample Screen: Home Page

The <NF>-Performance pipeline verifies 500 to 1,000 TPS (Transactions per Second) of traffic using the http-go tool, which runs the traffic on the backend. It also helps to monitor the CPU and memory of microservices while running lightweight traffic.

The duration of the traffic run can be configured on the pipeline.

2.14.1 Configure <NF>-Performance Pipeline

- On the NF home page, click <NF>-Performance pipeline, and then click Configure.

The General tab appears. Wait for the page to load completely.

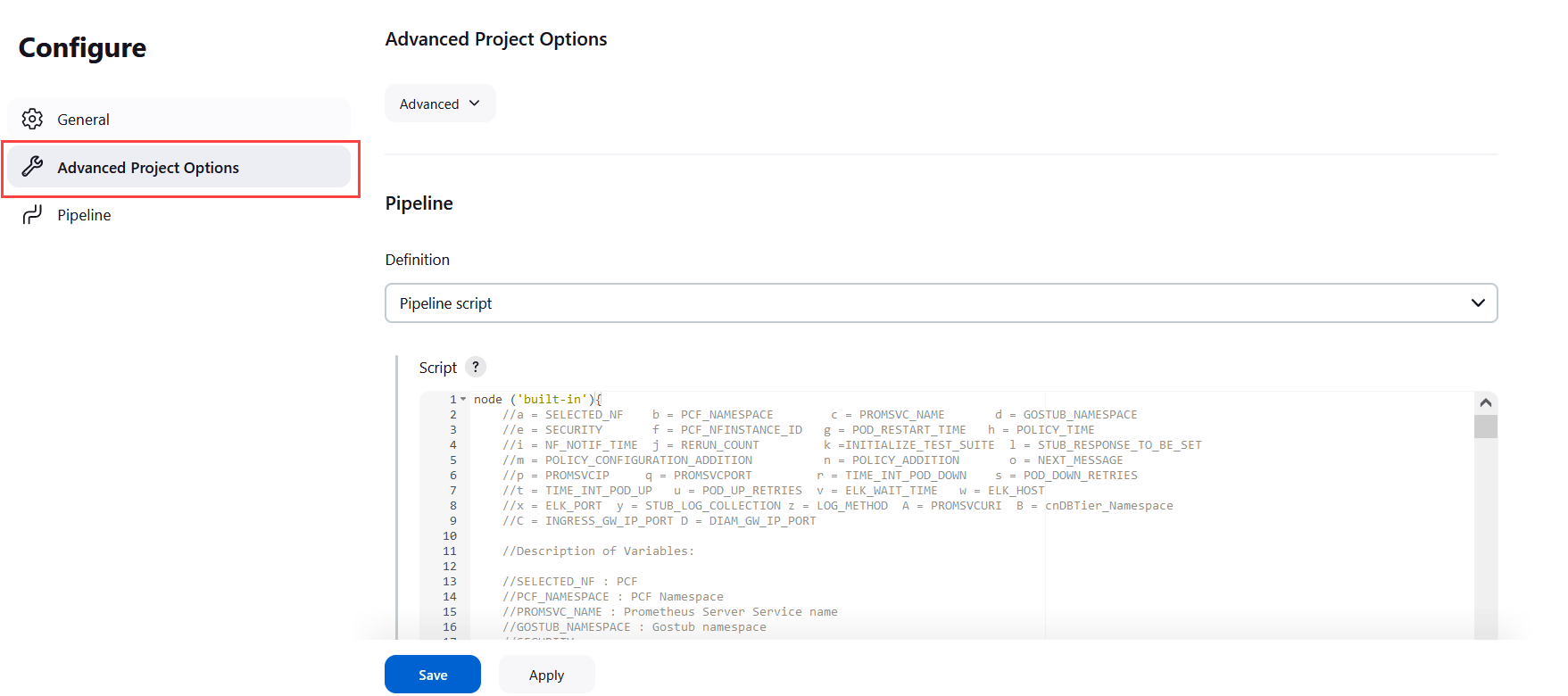

- Click the Advanced Project Options tab. Scroll down to reach the Pipeline configuration section.

Figure 2-40 Advanced Project Options

- Update the configurations as per your NF requirements and click Save. The Pipeline <NF>-Performance page appears.

- Click Build Now. This triggers lightweight traffic for the respective network function.

2.15 Managing Final Summary Report, Build Color, and Application Log

This feature displays an overall execution summary, such as the total run count, pass count, and fail count.

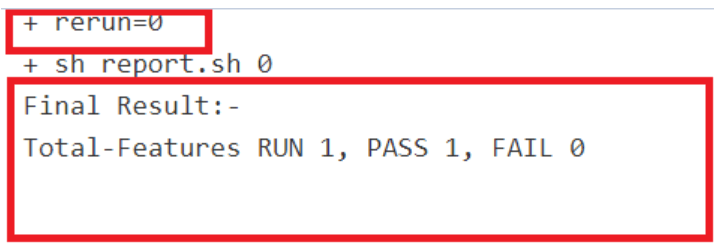

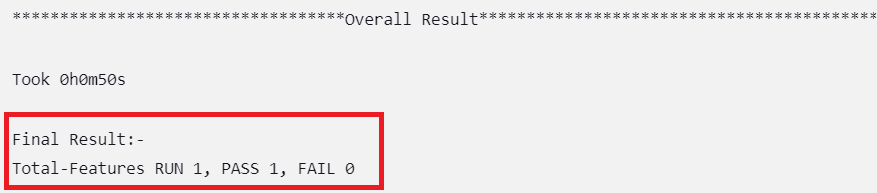

Supports Implementation of Total-Features

- If rerun is set to 0, the test result report shows the following result:

Figure 2-41 Total-Features = 1, and Rerun = 0

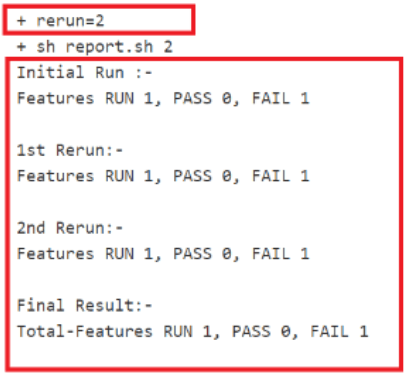

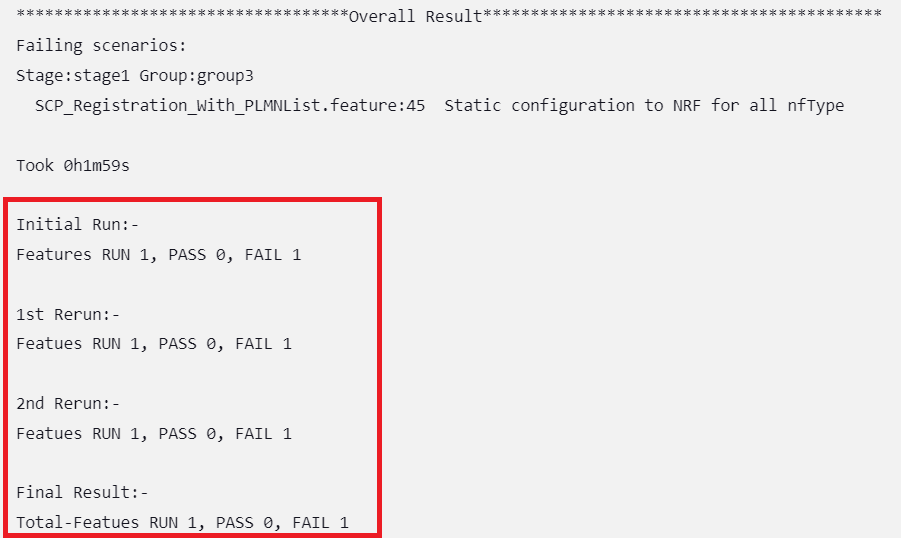

- If rerun is set to non-zero, the test result report shows the following result:

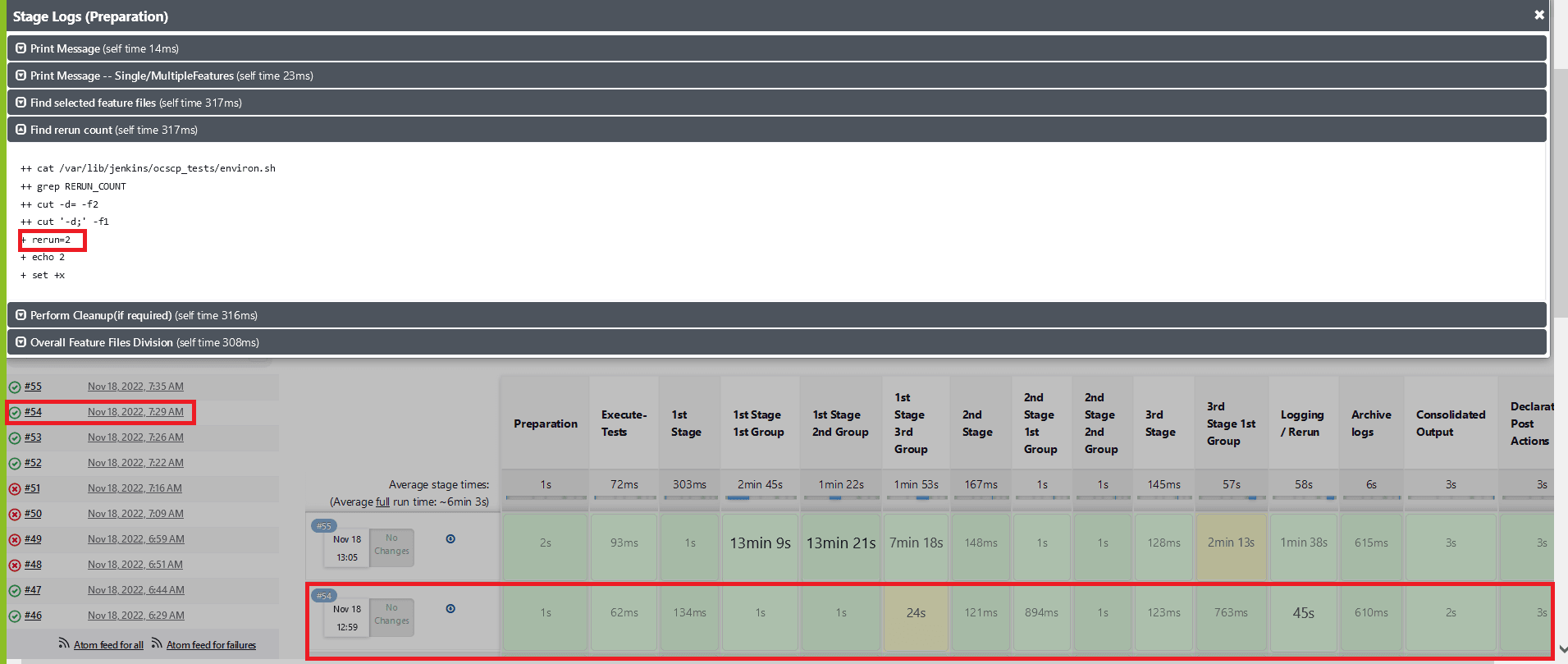

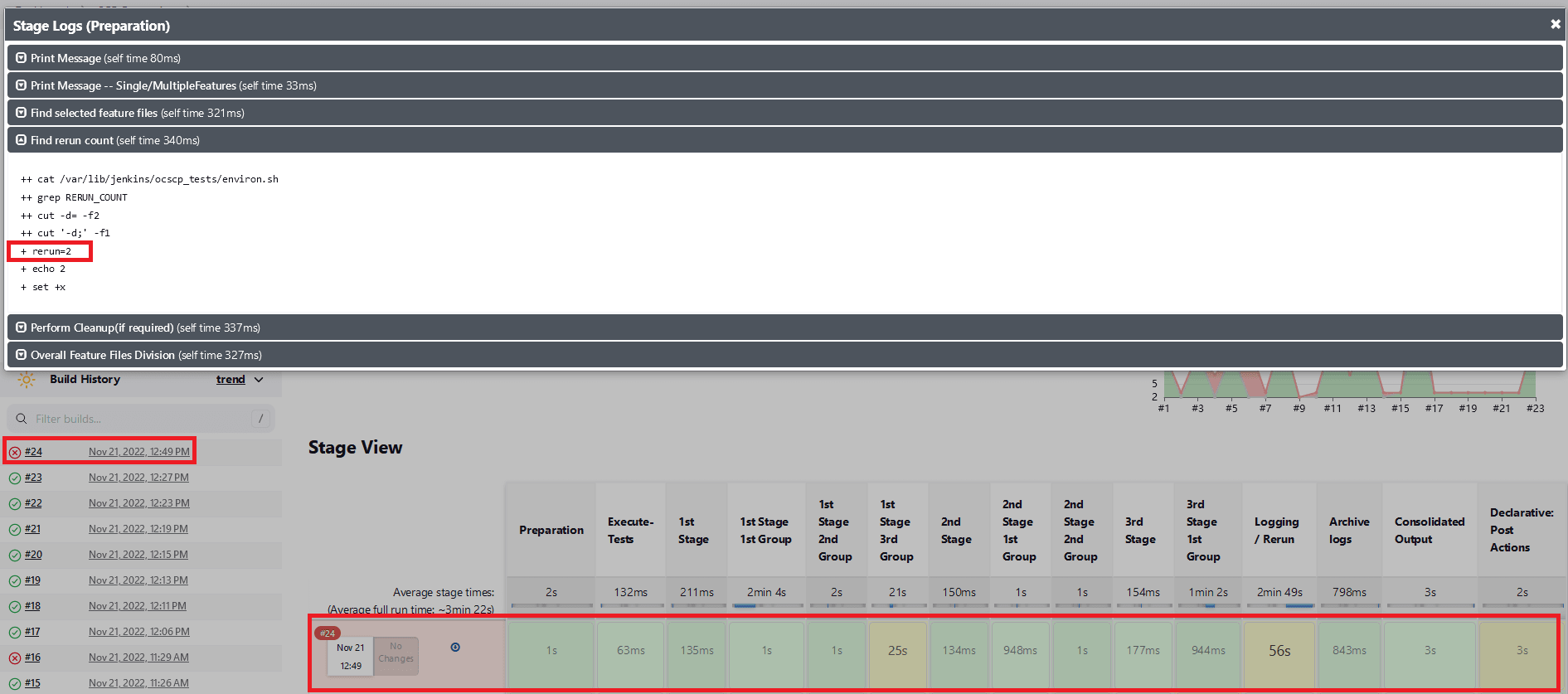

Figure 2-42 Total-Features = 1, and Rerun = 2

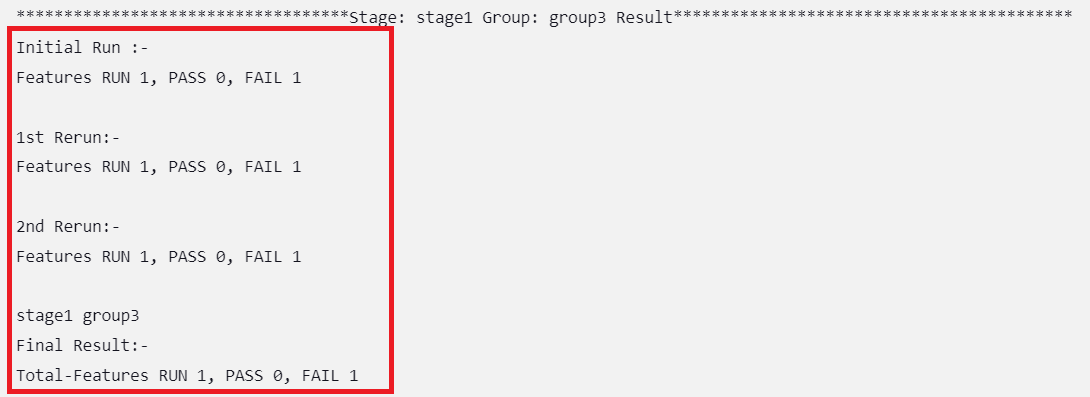

After incorporating the Parallel Test Execution feature, the following results were obtained:

Final Summary Report Implementations

Figure 2-43 Group Wise Results

Figure 2-44 Overall Result When Selected Feature Tests Pass

Figure 2-45 Overall Result When Any of the Selected Feature Tests Fail

Implementing Build Colors

Table 2-7 Build Color Details

| Rerun Values | Rerun set to zero | Rerun set to zero | Rerun set to non-zero | Rerun set to non-zero | Rerun set to non-zero |

|---|---|---|---|---|---|

| Status of Run | All Passed in Initial Run | Some Failed in Initial Run | All Passed in Initial Run | Some Passed in Initial Run, Rest Passed in Rerun | Some Passed in Initial Run, Some Failed Even After Rerun |

| Build Status | SUCCESS | FAILURE | SUCCESS | SUCCESS | FAILURE |

| Pipeline Color | GREEN | Execution Stage where test cases failed shows YELLOW color, rest of the successful stages are GREEN. | GREEN | GREEN | Execution Stage where test cases failed shows YELLOW color, rest of the successful stages are GREEN |

| Status Color | BLUE | RED | BLUE | BLUE | RED |

- the rerun count and the pass or fail status of test cases in the initial run

- the rerun count and the pass or fail status of test cases in the final run

For parallel test case execution, the pipeline status also depends on another parameter, "Fetch_Log_Upon_Failure," which is available on the Build with Parameters page. If the Fetch_Log_Upon_Failure parameter is not present, its default value is considered to be "NO".

Table 2-8 Pipeline Status When Fetch_Log_Upon_Failure = NO

| Rerun Values | Rerun set to zero | Rerun set to zero | Rerun set to non-zero | Rerun set to non-zero | Rerun set to non-zero |

|---|---|---|---|---|---|

| Passed/Failed | All Passed in Initial Run | Some Failed in Initial Run | All Passed in Initial Run | Some Passed in Initial Run, Rest Passed in Rerun | Some Passed in Initial Run, Some Failed Even After Rerun |

| Status | SUCCESS | FAILURE | SUCCESS | SUCCESS | FAILURE |

Table 2-9 Pipeline Status When Fetch_Log_Upon_Failure = YES

| Rerun Values | Rerun set to zero | Rerun set to zero | Rerun set to zero | Rerun set to non-zero | Rerun set to non-zero | Rerun set to non-zero |

|---|---|---|---|---|---|---|

| Passed/Failed | All Passed in Initial Run | Some Failed in Initial Run and Failed in Rerun | Some Failed in Initial Run and Passed in Rerun | All Passed in Initial Run | Some Passed in Initial Run, Rest Passed in Rerun | Some Passed in Initial Run, Some Failed Even After Rerun |

| Status | SUCCESS | FAILURE | SUCCESS | SUCCESS | SUCCESS | FAILURE |
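The two pipeline status tables above can be read as one decision rule. The sketch below is an interpretation of those tables, not the ATS implementation: `pipeline_status` and its arguments are hypothetical, and it assumes that when Fetch_Log_Upon_Failure is YES a rerun takes place even if the rerun count is zero, as the rerun columns of Table 2-9 suggest.

```shell
# Hypothetical decision function derived from Tables 2-8 and 2-9.
# Arguments:
#   $1 Fetch_Log_Upon_Failure (YES or NO; treated as NO when empty)
#   $2 rerun count
#   $3 number of test cases that failed in the initial run
#   $4 number of test cases still failing after the last rerun
pipeline_status() {
  fetch_log=${1:-NO}
  rerun_count=$2
  initial_failed=$3
  rerun_failed=$4

  # Everything passed in the initial run: no rerun is needed.
  if [ "$initial_failed" -eq 0 ]; then
    echo SUCCESS
    return
  fi

  # No rerun takes place when logs are not fetched and the rerun count is zero.
  if [ "$fetch_log" = "NO" ] && [ "$rerun_count" -eq 0 ]; then
    echo FAILURE
    return
  fi

  # Otherwise the outcome of the final rerun decides the status.
  if [ "$rerun_failed" -eq 0 ]; then
    echo SUCCESS
  else
    echo FAILURE
  fi
}

pipeline_status NO 0 2 0    # FAILURE: failures in the initial run, no rerun
pipeline_status YES 0 2 0   # SUCCESS: failures cleared in the rerun
pipeline_status YES 2 2 1   # FAILURE: one test case still fails after reruns
```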

The combinations of rerun_count, Fetch_Log_Upon_Failure, and the pass/fail status of test cases in the initial and final runs, along with the corresponding build colors, are as follows:

- When Fetch_Log_Upon_Failure is set to YES and rerun_count is set to 0, and the test cases pass in the initial run, the pipeline is green and its status is blue.

Figure 2-46 Fetch_Log_Upon_Failure is set to YES and rerun_count is set to 0, test cases pass

- When Fetch_Log_Upon_Failure is set to YES and rerun_count is set to 0, and test cases fail in the initial run but pass during the rerun, the initial execution stage is yellow, all subsequent successful stages are green, and the status is blue.

Figure 2-47 Test Cases Fail on the Initial Run but Pass in the Rerun

- When Fetch_Log_Upon_Failure is set to YES and rerun_count is set to 0, and test cases fail in both the initial run and the rerun, the execution stages are yellow, all other successful stages are green, and the overall pipeline status is red.

Figure 2-48 Test Cases Fail in Both the initial and the Rerun

- When Fetch_Log_Upon_Failure is set to YES and the rerun count is set to non-zero, and all test cases pass in the initial run, no rerun is initiated because the test cases have already passed. The pipeline is green, and the status is blue.

Figure 2-49 All of the Test cases Pass in the Initial Run

- When Fetch_Log_Upon_Failure is set to YES and the rerun count is set to non-zero, and some test cases fail in the initial run but pass in one of the subsequent reruns, the initial test case execution stages are yellow, the remaining stages are green, and the overall pipeline status is blue.

Figure 2-50 Test Cases Fail in the Initial Run and the Remaining Ones Pass

- When Fetch_Log_Upon_Failure is set to YES and the rerun count is set to non-zero, and some test cases fail in the initial run and continue to fail in all subsequent reruns, the test case execution stages are yellow, the remaining stages are green, and the overall pipeline status is red.

Figure 2-51 Test Cases Fail in the Initial and Remaining Reruns

- Whenever any of the multiple Behave processes running in ATS exits without completing, the stage in which the process exited and the consolidated output stage are shown as yellow, and the overall pipeline status is yellow. In the consolidated output stage, the exact run in which the Behave process exited without completing is also printed next to the respective stage result.

Figure 2-52 Stage View When Behave Process is Incomplete

Figure 2-53 Consolidated Report for a Group When a Behave Process was Incomplete

Implementing Application Log

ATS automatically fetches the SUT debug logs during the rerun cycle if it encounters any failures and saves them in the same location as the build console logs. Using timestamps, it fetches logs only for the duration of the rerun. If a microservice has no log entries in that period, no logs are captured for it. Therefore, logs are fetched only for the microservices that affect or are associated with the failed test cases.

Location of SUT Logs:

/var/lib/jenkins/.jenkins/jobs/PARTICULAR-JOB-NAME/builds/BUILD-NUMBER/date-timestamp-BUILD-N.txt

Note:

The SUT log file name includes the date, timestamp, and build number for which the logs are fetched. These logs share the same retention period as the build console logs, which is set in the ATS configuration. Set the retention period according to the available Persistent Volume Claim (PVC) storage space.
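To locate the SUT logs for a given build, the directory layout shown above can be searched directly. This is a minimal sketch under stated assumptions: `list_sut_logs` is a hypothetical helper, the `JENKINS_JOBS_DIR` variable is introduced only so the base path can be overridden, and matching on the `.txt` extension assumes that the SUT logs are the only `.txt` files in the build directory.

```shell
# Base directory from the SUT log location above; override if your layout differs.
JENKINS_JOBS_DIR="${JENKINS_JOBS_DIR:-/var/lib/jenkins/.jenkins/jobs}"

# Hypothetical helper: list the .txt files stored directly in the build
# directory of a job. Arguments: job name, build number.
list_sut_logs() {
  job_name=$1
  build_number=$2
  build_dir="$JENKINS_JOBS_DIR/$job_name/builds/$build_number"
  if [ ! -d "$build_dir" ]; then
    echo "build directory not found: $build_dir" >&2
    return 1
  fi
  find "$build_dir" -maxdepth 1 -type f -name '*.txt' | sort
}

# Example usage with a placeholder job name and build number:
# list_sut_logs Policy-Regression 12
```

If the function prints nothing for a failed build, that is consistent with the behavior described above: logs are saved only for microservices that produced entries during the rerun.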

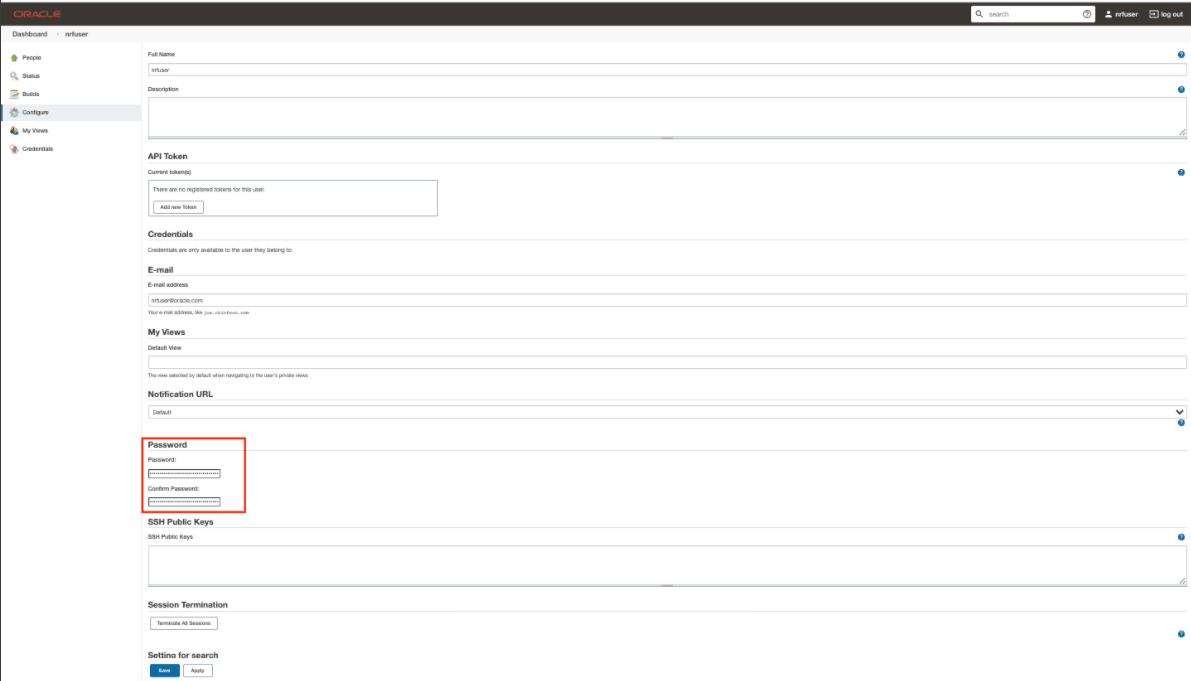

2.16 Modifying Login Password

You can log in to the ATS application using the default login credentials. The default login credentials are shared for each NF in the respective chapter of this guide.

- Log in to the ATS application using the default login credentials. The home page of the respective NF appears.

- Click the down arrow next to the user name.

- Click Configure.

- In the Password section, enter the new password in the Password and Confirm Password fields.

Figure 2-54 Logged-in User Details

- Click Save.

A new password is set for you.

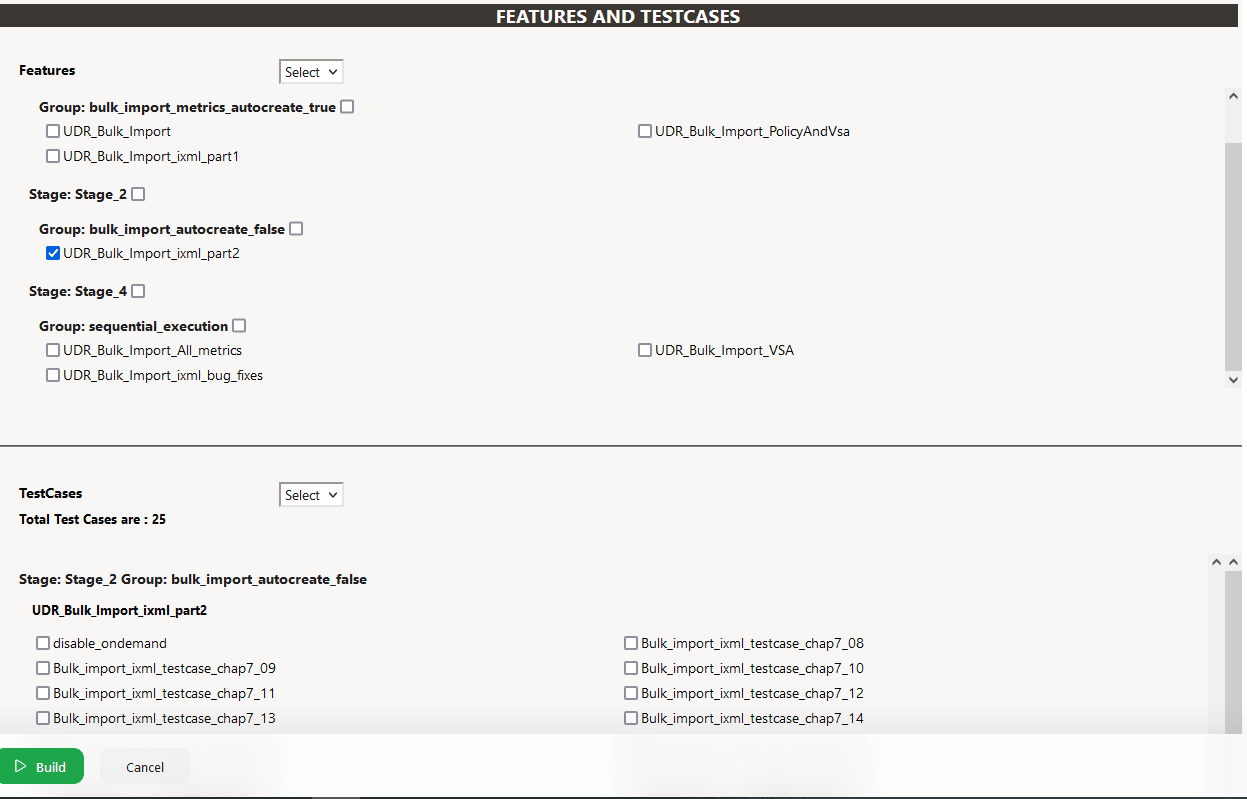

2.17 Multiselection Capability for Features and Scenarios

ATS allows you to select and run single or multiple features and scenarios by selecting a check box for the corresponding features or scenarios.

2.17.1 Feature Level Selection

- Log in to ATS using the respective <NF> login credentials.

- On the NF home page, click any new feature or regression pipeline from where you want to run the feature.

- In the left navigation pane, click Build with Parameters.

- Scroll down to the FEATURES AND TEST CASES section.

- Click Select from the Features drop-down.

Figure 2-55 Feature Selection

- Select any number of features by selecting the check box for the corresponding feature you want to run from the list available for execution.

- Click Build.

2.17.2 Scenario Selection

- Log in to ATS using the respective <NF> login credentials.