3 Installing ATS for Different Network Functions

This section describes how to install ATS for different network functions.

3.1 Installing ATS for BSF

The BSF ATS installation procedure covers two steps:

- Locating and downloading the ATS package for BSF.

- Deploying ATS and stub pods in the Kubernetes cluster.

This includes the installation of three stubs (nf1stub, nf11stub, and nf12stub), the ocdns-bind stub, and BSF ATS in the BSF namespace.

3.1.1 Resource Requirements

This section describes the ATS resource requirements for Binding Support Function.

Overview - Total Number of Resources

The following table describes the overall resource usage in terms of CPUs, memory, and storage:

Table 3-1 BSF - Total Number of Resources

| Resource Name | Non-ASM CPU | Non-ASM Memory (GB) | ASM CPU | ASM Memory (GB) |
|---|---|---|---|---|
| BSF Total | 41 | 36 | 73 | 52 |
| ATS Total | 11 | 11 | 23 | 17 |
| cnDBTier Total | 107.1 | 175.2 | 137.1 | 190.2 |
| Grand Total BSF ATS | 159.1 | 222.2 | 233.1 | 259.2 |
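As a quick arithmetic check, the Grand Total row of Table 3-1 is the column-wise sum of the three subtotal rows. The following Python sketch reproduces it. The dictionary layout is illustrative only. Decimal is used because values such as 107.1 are not exact in binary floating point.

```python
from decimal import Decimal

# Subtotals from Table 3-1:
# (non-ASM CPU, non-ASM memory GB, ASM CPU, ASM memory GB)
SUBTOTALS = {
    "BSF": ("41", "36", "73", "52"),
    "ATS": ("11", "11", "23", "17"),
    "cnDBTier": ("107.1", "175.2", "137.1", "190.2"),
}


def grand_total(subtotals):
    """Sum each column exactly, returning one Decimal per column."""
    columns = zip(*subtotals.values())
    return tuple(sum((Decimal(v) for v in col), Decimal(0)) for col in columns)


if __name__ == "__main__":
    print(grand_total(SUBTOTALS))
```

The result matches the Grand Total BSF ATS row: 159.1 CPU and 222.2 GB without ASM, and 233.1 CPU and 259.2 GB with ASM.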

BSF Pods Resource Requirements Details

This section describes the resource requirements, which are needed to deploy BSF ATS successfully.

Table 3-2 BSF Pods Resource Requirements Details

| BSF Microservices | Max CPU | Max Memory (GB) | Max Replica | Istio ASM CPU | Istio ASM Memory (GB) | Non-ASM Total CPU | Non-ASM Total Memory (GB) | ASM Total CPU | ASM Total Memory (GB) |
|---|---|---|---|---|---|---|---|---|---|
| oc-app-info | 1 | 1 | 1 | 2 | 1 | 1 | 1 | 3 | 2 |
| oc-diam-gateway | 4 | 2 | 1 | 2 | 1 | 4 | 2 | 6 | 3 |
| alternate-route | 2 | 4 | 1 | 2 | 1 | 2 | 4 | 4 | 5 |
| oc-config-server | 4 | 2 | 1 | 2 | 1 | 4 | 2 | 6 | 3 |
| ocegress_gateway | 4 | 6 | 1 | 2 | 1 | 4 | 6 | 6 | 7 |
| ocingress_gateway | 4 | 6 | 1 | 2 | 1 | 4 | 6 | 6 | 7 |
| nrf-client-mngt | 1 | 1 | 2 | 2 | 1 | 2 | 2 | 6 | 4 |
| oc-audit | 2 | 1 | 1 | 2 | 1 | 2 | 1 | 4 | 2 |
| oc-config-mgmt | 4 | 2 | 2 | 2 | 1 | 8 | 4 | 12 | 6 |
| oc-query | 2 | 1 | 2 | 2 | 1 | 4 | 2 | 8 | 4 |
| oc-perf-info | 1 | 1 | 2 | 2 | 1 | 2 | 2 | 6 | 4 |
| bsf-management-service | 4 | 4 | 1 | 2 | 1 | 4 | 4 | 6 | 5 |
| BSF Totals | | | | | | 41 | 36 | 73 | 52 |
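Every row of Table 3-2 appears to follow the same rule:

- The non-ASM totals are the per-pod values multiplied by the replica count.
- The ASM totals first add the Istio sidecar allowance (2 CPU, 1 GB) to each replica.

The following minimal Python sketch encodes that rule as read off the table. It can help when you size a setup with different replica counts. The rule is inferred from the table values and is not a documented formula.

```python
# Rows of Table 3-2: (microservice, max CPU, max memory GB, max replicas)
BSF_PODS = [
    ("oc-app-info", 1, 1, 1),
    ("oc-diam-gateway", 4, 2, 1),
    ("alternate-route", 2, 4, 1),
    ("oc-config-server", 4, 2, 1),
    ("ocegress_gateway", 4, 6, 1),
    ("ocingress_gateway", 4, 6, 1),
    ("nrf-client-mngt", 1, 1, 2),
    ("oc-audit", 2, 1, 1),
    ("oc-config-mgmt", 4, 2, 2),
    ("oc-query", 2, 1, 2),
    ("oc-perf-info", 1, 1, 2),
    ("bsf-management-service", 4, 4, 1),
]

# Per-replica Istio sidecar allowance, from the Istio ASM columns
SIDECAR_CPU = 2
SIDECAR_MEM_GB = 1


def row_totals(cpu, mem, replicas):
    """Return (non-ASM CPU, non-ASM memory, ASM CPU, ASM memory) for one microservice."""
    return (
        cpu * replicas,
        mem * replicas,
        (cpu + SIDECAR_CPU) * replicas,
        (mem + SIDECAR_MEM_GB) * replicas,
    )


def table_totals(pods):
    """Sum the per-microservice totals column by column."""
    rows = [row_totals(cpu, mem, reps) for _, cpu, mem, reps in pods]
    return tuple(sum(col) for col in zip(*rows))


if __name__ == "__main__":
    print(table_totals(BSF_PODS))  # (41, 36, 73, 52)
```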

ATS Resource Requirements details for BSF

This section describes the ATS resource requirements, which are needed to deploy BSF ATS successfully.

Table 3-3 ATS Resource Requirements Details

| ATS Microservices | Max CPU | Max Memory (GB) | Max Replica | Istio ASM CPU | Istio ASM Memory (GB) | Non-ASM Total CPU | Non-ASM Total Memory (GB) | ASM Total CPU | ASM Total Memory (GB) |
|---|---|---|---|---|---|---|---|---|---|
| ocstub1-py | 2 | 2 | 1 | 2 | 1 | 2 | 2 | 4 | 3 |
| ocstub2-py | 2 | 2 | 1 | 2 | 1 | 2 | 2 | 4 | 3 |
| ocstub3-py | 2 | 2 | 1 | 2 | 1 | 2 | 2 | 4 | 3 |
| ocats-bsf | 3 | 3 | 1 | 2 | 1 | 3 | 3 | 5 | 4 |
| ocdns-bind | 1 | 1 | 1 | 2 | 1 | 1 | 1 | 3 | 2 |
| ocdiam-sim | 1 | 1 | 1 | 2 | 1 | 1 | 1 | 3 | 2 |
| ATS Totals | | | | | | 11 | 11 | 23 | 17 |

cnDBTier Resource Requirements Details for BSF ATS

This section describes the cnDBTier resource requirements, which are needed to deploy BSF ATS successfully.

Note:

For cnDBTier pods, a minimum of 4 worker nodes is required.

Table 3-4 cnDBTier Resource Requirements Details

| cnDBTier Microservices | Min CPU | Min Memory (GB) | Min Replica | Istio ASM CPU | Istio ASM Memory (GB) | Non-ASM Total CPU | Non-ASM Total Memory (GB) | ASM Total CPU | ASM Total Memory (GB) |
|---|---|---|---|---|---|---|---|---|---|
| db_monitor_svc | 1 | 1 | 1 | 2 | 1 | 1 | 1 | 3 | 2 |
| db_replication_svc | 2 | 12 | 1 | 2 | 1 | 2 | 12 | 4 | 13 |
| db_backup_manager_svc | 0.1 | 0.2 | 1 | 2 | 1 | 0.1 | 0.2 | 2.1 | 1.2 |
| ndbappmysqld | 8 | 10 | 4 | 2 | 1 | 32 | 40 | 40 | 44 |
| ndbmgmd | 4 | 10 | 2 | 2 | 1 | 8 | 20 | 12 | 22 |
| ndbmtd | 10 | 18 | 4 | 2 | 1 | 40 | 72 | 48 | 76 |
| ndbmysqld | 8 | 10 | 2 | 2 | 1 | 16 | 20 | 20 | 22 |
| db_infra_monitor_svc | 8 | 10 | 1 | 2 | 1 | 8 | 10 | 8 | 10 |
| cnDBTier Total | | | | | | 107.1 | 175.2 | 137.1 | 190.2 |

3.1.2 Downloading the ATS Package

This section provides information on how to locate and download the BSF ATS package file from My Oracle Support (MOS).

Locating and Downloading BSF ATS Package

To locate and download the ATS package from MOS, perform the following steps:

- Log in to My Oracle Support using the appropriate credentials.

- Select the Patches and Updates tab.

- In the Patch Search window, click Product or Family (Advanced).

- Enter Oracle Communications Cloud Native Core - 5G in the Product field.

- Select Oracle Communications Cloud Native Binding Support Function <release_number> from the Release drop-down list.

- Click Search. The Patch Advanced Search Results list appears.

- Select the required patch from the search results. The Patch Details window appears.

- Click Download. The File Download window appears.

- Click the <p********_<release_number>_Tekelec>.zip file to download the BSF ATS package file.

- Untar the gzip file ocats-bsf-tools-25.1.200.0.0.tgz to access the following files:

  - ocats-bsf-pkg-25.1.200.0.0.tgz
  - ocdns-pkg-25.1.203.tgz
  - ocstub-pkg-25.1.201.tgz
  - ocdiam-sim-25.1.203.tgz

  The contents included in each of these files are as follows:

  - ocats-bsf-tools-25.1.200.0.0.tgz
    - ocats-bsf-pkg-25.1.200.tgz
      - ocats-bsf-25.1.200.tgz (Helm Charts)
      - ocats-bsf-images-25.1.200.tar (Docker Images)
      - ocats-bsf-data-25.1.200.tgz (BSF ATS and Jenkins job Data)
    - ocstub-pkg-25.1.201.0.0.tgz
      - ocstub-py-25.1.201.tgz (Helm Charts)
      - ocstub-py-image-25.1.201.tar (Docker Images)
    - ocdns-pkg-25.1.203.0.0.tgz
      - ocdns-bind-25.1.203.tgz (Helm Charts)
      - ocdns-bind-image-25.1.203.tar (Docker Images)
    - ocdiam-pkg-25.1.203.0.0.tgz
      - ocdiam-sim-25.1.203.tgz (Helm Charts)
      - ocdiam-sim-image-25.1.203.tar (Docker Images)

- Copy the tar file from the downloaded package to the CNE, OCI, or Kubernetes cluster where you want to deploy ATS.
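If you prefer to script the extraction, the following Python sketch does two things:

- It unpacks the tools archive, then unpacks each nested package archive into a folder named after it.
- It leaves the Helm chart .tgz files packed. They are untarred separately when the images are pushed.

The "-pkg-" naming rule is an assumption based on the package names listed above. Adjust it if your package differs. The function names are hypothetical.

```python
from __future__ import annotations

import tarfile
from pathlib import Path


def safe_extract(archive: Path, dest: Path) -> list[Path]:
    """Extract archive into dest and return the regular files it contained.

    Members that are links, or that would land outside dest, are rejected.
    """
    dest = dest.resolve()
    with tarfile.open(archive) as tar:  # default "r:*" mode detects gzip automatically
        members = tar.getmembers()
        for m in members:
            target = (dest / m.name).resolve()
            if m.issym() or m.islnk() or (target != dest and dest not in target.parents):
                raise ValueError(f"refusing to extract {m.name!r} from {archive}")
        if hasattr(tarfile, "data_filter"):  # Python 3.12+ and security backports
            tar.extractall(dest, filter="data")
        else:
            tar.extractall(dest)
    return [dest / m.name for m in members if m.isfile()]


def extract_nested(outer: Path, dest: Path) -> list[Path]:
    """Extract outer, then each nested *-pkg-*.tgz into a sibling folder named after it.

    Helm chart archives (no "-pkg-" in the name) are left packed.
    """
    files: list[Path] = []
    for path in safe_extract(outer, dest):
        files.append(path)
        if "-pkg-" in path.name and path.name.endswith(".tgz"):
            inner_dest = path.with_name(path.name[: -len(".tgz")])
            files.extend(extract_nested(path, inner_dest))
    return files


if __name__ == "__main__":
    for f in extract_nested(Path("ocats-bsf-tools-25.1.200.0.0.tgz"), Path("ats-extract")):
        print(f)
```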

3.1.3 Deploy ATS with TLS Enabled

Note:

- OCATS and Python stubs support both TLS 1.2 and TLS 1.3.

- The DiamSim pod does not support secure calls.

Follow the steps in this section to create a Java KeyStore (JKS) file and enable the BSF ATS GUI with HTTPS during installation.

3.1.3.1 Generate JKS File for Jenkins Server

To access the Jenkins ATS GUI through HTTPS, create a JKS file.

Perform the following steps to generate the JKS file:

Generate the Root Certificate

- If you have a Certificate Authority (CA) signed root certificate, such as caroot.cer, and its key, you can use those files.
- If the root certificate is not already available, generate a self-signed root certificate. This root certificate must be added to the truststore, such as a browser like Firefox or Chrome. Follow the browser-specific documentation to upload the root certificate. The root certificate is used to sign the application, or ATS, certificate.
- Generate a root key with the following command:

  openssl genrsa 2048 > caroot.key

  This generates a key called caroot.key.
- Generate a caroot certificate with the following command:

  openssl req -new -x509 -nodes -days 1000 -key <root_key> > <root_certificate>

  For example,

  [cloud-user@platform-bastion-1]$ openssl req -new -x509 -nodes -days 1000 -key caroot.key > caroot.cer
  You are about to be asked to enter information that will be incorporated
  into your certificate request.
  What you are about to enter is what is called a Distinguished Name or a DN.
  There are quite a few fields but you can leave some blank
  For some fields there will be a default value,
  If you enter '.', the field will be left blank.
  -----
  Country Name (2 letter code) [XX]:IN
  State or Province Name (full name) []:KA
  Locality Name (eg, city) [Default City]:BLR
  Organization Name (eg, company) [Default Company Ltd]:ORACLE
  Organizational Unit Name (eg, section) []:CGBU
  Common Name (eg, your name or your server's hostname) []:ocats
  Email Address []:
  [cloud-user@platform-bastion-1]$

Generate Application or Client Certificate

- Create an ssl.conf file.
- Edit the ssl.conf file. In the [alt_names] section, list the IPs that are used to access the ATS GUI, as shown in the following sample ssl.conf file:

  [ req ]
  default_bits = 4096
  distinguished_name = req_distinguished_name
  req_extensions = req_ext

  [ req_distinguished_name ]
  countryName = Country Name (2 letter code)
  countryName_default = IN
  stateOrProvinceName = State or Province Name (full name)
  stateOrProvinceName_default = KN
  localityName = Locality Name (eg, city)
  localityName_default = BLR
  organizationName = Organization Name (eg, company)
  organizationName_default = ORACLE
  commonName = Common Name (e.g. server FQDN or YOUR name)
  commonName_max = 64
  commonName_default = ocats.ocbsf.svc.cluster.local

  [ req_ext ]
  keyUsage = critical, digitalSignature, keyEncipherment
  extendedKeyUsage = serverAuth, clientAuth
  basicConstraints = critical, CA:FALSE
  subjectAltName = critical, @alt_names

  [alt_names]
  IP.1 = 127.0.0.1
  IP.2 = 10.75.217.5
  IP.3 = 10.75.217.76
  DNS.1 = localhost
  DNS.2 = ocats.ocbsf.svc.cluster.local

  Note:

  - To access the GUI with DNS, make sure that commonName_default is the same as the DNS name being used.
  - Ensure the DNS is in this format: <service_name>.<namespace>.svc.cluster.local. Multiple DNS entries, such as DNS.1, DNS.2, and so on, can be added.
  - To support the ATS API, add the IP 127.0.0.1 to the list of IPs.

- Create a Certificate Signing Request (CSR) with the following command:

  openssl req -config ssl.conf -newkey rsa:2048 -days 1000 -nodes -keyout rsa_private_key_pkcs1.key > ssl_rsa_certificate.csr

  Output:

  [cloud-user@platform-bastion-1 ocbsf]$ openssl req -config ssl.conf -newkey rsa:2048 -days 1000 -nodes -keyout rsa_private_key_pkcs1.key > ssl_rsa_certificate.csr
  Ignoring -days; not generating a certificate
  Generating a RSA private key
  ...+++++
  ........+++++
  writing new private key to 'rsa_private_key_pkcs1.key'
  -----
  You are about to be asked to enter information that will be incorporated
  into your certificate request.
  What you are about to enter is what is called a Distinguished Name or a DN.
  There are quite a few fields but you can leave some blank
  For some fields there will be a default value,
  If you enter '.', the field will be left blank.
  -----
  Country Name (2 letter code) [IN]:
  State or Province Name (full name) [KA]:
  Locality Name (eg, city) [BLR]:
  Organization Name (eg, company) [ORACLE]:
  Common Name (e.g. server FQDN or YOUR name) [ocbsf]:
  [cloud-user@platform-bastion-1 ocbsf]$

- To display all the components of the CSR file and verify the configuration, run the following command:

  openssl req -text -noout -verify -in ssl_rsa_certificate.csr

- Sign the CSR file with the root certificate by running the following command:

  openssl x509 -extfile ssl.conf -extensions req_ext -req -in ssl_rsa_certificate.csr -days 1000 -CA ../caroot.cer -CAkey ../caroot.key -set_serial 04 > ssl_rsa_certificate.crt

  Output:

  [cloud-user@platform-bastion-1 ocbsf]$ openssl x509 -extfile ssl.conf -extensions req_ext -req -in ssl_rsa_certificate.csr -days 1000 -CA ../caroot.cer -CAkey ../caroot.key -set_serial 04 > ssl_rsa_certificate.crt
  Signature ok
  subject=C = IN, ST = KA, L = BLR, O = ORACLE, CN = ocbsf
  Getting CA Private Key
  [cloud-user@platform-bastion-1 ocbsf]$

- Verify that the certificate is signed by the root certificate by running the following command:

  openssl verify -CAfile caroot.cer ssl_rsa_certificate.crt

  Output:

  [cloud-user@platform-bastion-1 ocbsf]$ openssl verify -CAfile caroot.cer ssl_rsa_certificate.crt
  ssl_rsa_certificate.crt: OK

- Save the generated application certificate and root certificate.

- Add caroot.cer to the browser as a trusted authority.
- The generated application or client certificates cannot be given directly to the Jenkins server. Hence, generate a .p12 keystore file for the client certificate with the following command:

  [cloud-user@platform-bastion-1 ocbsf]$ openssl pkcs12 -inkey rsa_private_key_pkcs1.key -in ssl_rsa_certificate.crt -export -out certificate.p12
  Enter Export Password:
  Verifying - Enter Export Password:

- In the prompt, create a password and save it for future use.

- Convert the .p12 keystore file into a JKS format file using the following command:

  keytool -importkeystore -srckeystore ./certificate.p12 -srcstoretype pkcs12 -destkeystore jenkinsserver.jks -deststoretype JKS

  Output:

  [cloud-user@platform-bastion-1 ocbsf]$ keytool -importkeystore -srckeystore ./certificate.p12 -srcstoretype pkcs12 -destkeystore jenkinsserver.jks -deststoretype JKS
  Importing keystore ./certificate.p12 to jenkinsserver.jks...
  Enter destination keystore password:
  Re-enter new password:
  Enter source keystore password:
  Entry for alias 1 successfully imported.
  Import command completed:  1 entries successfully imported, 0 entries failed or cancelled

- In the prompt, use the same password that was used while creating the .p12 keystore file.

  Note:

  Ensure that the .p12 keystore and JKS files have the same password.

- Add the generated JKS file, jenkinsserver.jks, to the Jenkins path, where the Jenkins server can access it.

For more details about the ATS TLS feature, refer to the Deploy ATS with TLS Enabled section.
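The [alt_names] rules in the ssl.conf notes are easy to get wrong when the file is edited by hand:

- 127.0.0.1 must always be present for the ATS API.
- DNS names must follow the <service_name>.<namespace>.svc.cluster.local format.

The following Python sketch renders that section from a list of IPs and DNS names. The function name and the DNS regular expression are illustrative assumptions. They are not part of the ATS package.

```python
import ipaddress
import re

# <service_name>.<namespace>.svc.cluster.local, each part a lowercase DNS label
GUI_DNS = re.compile(
    r"^[a-z0-9]([-a-z0-9]*[a-z0-9])?\.[a-z0-9]([-a-z0-9]*[a-z0-9])?\.svc\.cluster\.local$"
)


def render_alt_names(ips, dns_names):
    """Render the [alt_names] section of ssl.conf for the ATS GUI certificate.

    127.0.0.1 is always listed first because the ATS API needs it. DNS names
    other than localhost must follow <service_name>.<namespace>.svc.cluster.local.
    """
    all_ips = ["127.0.0.1"] + [ip for ip in ips if ip != "127.0.0.1"]
    lines = ["[alt_names]"]
    for i, ip in enumerate(all_ips, start=1):
        ipaddress.ip_address(ip)  # raises ValueError for a malformed address
        lines.append(f"IP.{i} = {ip}")
    for i, name in enumerate(dns_names, start=1):
        if name != "localhost" and not GUI_DNS.match(name):
            raise ValueError(f"expected <service_name>.<namespace>.svc.cluster.local, got {name}")
        lines.append(f"DNS.{i} = {name}")
    return "\n".join(lines)


if __name__ == "__main__":
    print(render_alt_names(
        ["10.75.217.5", "10.75.217.76"],
        ["localhost", "ocats.ocbsf.svc.cluster.local"],
    ))
```

With these inputs, the output is the same [alt_names] section as the sample ssl.conf file. A name such as ocats.svc.cluster.local, which is missing the namespace, raises a ValueError.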

3.1.3.2 Enable TLS on Python Stubs

Before you begin, ensure that the root certificate (caroot.cer file) is available.

- Create a stub_ssl.conf file.
- Edit the stub_ssl.conf file. Ensure that the DNS is in the format *.<namespace>.svc. A sample stub_ssl.conf file:

  [ req ]
  default_bits = 4096
  distinguished_name = req_distinguished_name
  req_extensions = req_ext

  [ req_distinguished_name ]
  countryName = Country Name (2 letter code)
  countryName_default = IN
  stateOrProvinceName = State or Province Name (full name)
  stateOrProvinceName_default = KN
  localityName = Locality Name (eg, city)
  localityName_default = BLR
  organizationName = Organization Name (eg, company)
  organizationName_default = ORACLE
  commonName = svc.cluster.local

  [ req_ext ]
  keyUsage = critical, digitalSignature, keyEncipherment
  extendedKeyUsage = serverAuth, clientAuth
  basicConstraints = critical, CA:FALSE
  subjectAltName = critical, @alt_names

  [alt_names]
  IP.1 = 127.0.0.1
  DNS.1 = *.ocats.svc

- Create a Certificate Signing Request (CSR) for the stubs using the following command:

  openssl req -config stub_ssl.conf -newkey rsa:2048 -days 1000 -nodes -keyout rsa_private_key_stub_pkcs2.key > stub_ssl_rsa_certificate1.csr

  Output:

  [cloud-user@platform-bastion-1 stub_certs]$ openssl req -config stub_ssl.conf -newkey rsa:2048 -days 1000 -nodes -keyout rsa_private_key_stub_pkcs2.key > stub_ssl_rsa_certificate1.csr
  Ignoring -days; not generating a certificate
  Generating a RSA private key
  ....................+++++
  ...+++++
  writing new private key to 'rsa_private_key_stub_pkcs2.key'
  -----
  You are about to be asked to enter information that will be incorporated
  into your certificate request.
  What you are about to enter is what is called a Distinguished Name or a DN.
  There are quite a few fields but you can leave some blank
  For some fields there will be a default value,
  If you enter '.', the field will be left blank.
  -----
  Country Name (2 letter code) [IN]:
  State or Province Name (full name) [KN]:
  Locality Name (eg, city) [BLR]:
  Organization Name (eg, company) [ORACLE]:
  svc.cluster.local []:*.ocbsf.svc

- Sign the certificate with the CA root using the following command:

  openssl x509 -extfile stub_ssl.conf -extensions req_ext -req -in stub_ssl_rsa_certificate1.csr -days 1000 -CA ../ocbsf-caroot.cer -CAkey ../ocbsf-caroot.key -set_serial 05 > stub_ssl_rsa_certificate1.crt

  Output:

  [cloud-user@platform-bastion-1 stub_certs]$ openssl x509 -extfile stub_ssl.conf -extensions req_ext -req -in stub_ssl_rsa_certificate1.csr -days 1000 -CA ../ocbsf-caroot.cer -CAkey ../ocbsf-caroot.key -set_serial 05 > stub_ssl_rsa_certificate1.crt
  Signature ok
  subject=C = IN, ST = KN, L = BLR, O = ORACLE, CN = *.ocbsf.svc
  Getting CA Private Key

- Create a secret for the stub and associate it with the namespace using the following command:

  kubectl create secret generic ocats-stub-secret1 --from-file=stub_ssl_rsa_certificate1.crt --from-file=rsa_private_key_stub_pkcs2.key --from-file=../ocbsf-caroot.cer -n ocbsf

  Output:

  [cloud-user@platform-bastion-1 ocbsf]$ kubectl create secret generic ocats-stub-secret1 --from-file=stub_ssl_rsa_certificate1.crt --from-file=rsa_private_key_stub_pkcs2.key --from-file=../ocbsf-caroot.cer -n ocbsf
  secret/ocats-stub-secret1 created

- Update the values.yaml file of each Python stub in the specific NF namespace with the following details:

  NF: "<NF-Name>"
  cert_secret_name: "ocats-stub-secret"
  ca_cert: "ocbsf-caroot.cer"
  client_cert: "ocbsf-stub_ssl_rsa_certificate.crt"
  private_key: "ocbsf-rsa_private_key_stub_pkcs1.key"
  expose_tls_service: true
  CLIENT_CERT_REQ: true

  Ensure that cert_secret_name matches the name of the secret created in the previous step. Also ensure that ca_cert, client_cert, and private_key match the file names stored in that secret.

  Note:

  If the cert_secret_name Helm parameter is null, the ca_cert, client_cert, and private_key values are not considered for TLS.

- Update the deployment of all the Python stubs installed on the setup.

For more details about the ATS TLS feature, refer to the Support for Transport Layer Security section.
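The stub certificate uses a wildcard DNS entry in the *.<namespace>.svc format, so the namespace in the wildcard must be the one in which the stubs run. Note that the sample stub_ssl.conf uses *.ocats.svc, while the CSR output uses *.ocbsf.svc.

Under the usual TLS hostname-matching rule (RFC 6125), the wildcard stands for exactly one left-most label. For example, *.ocbsf.svc covers nf1stub.ocbsf.svc but not nf1stub.ocbsf.svc.cluster.local.

The following Python sketch illustrates that simplified rule. The hostnames are hypothetical examples, and real TLS libraries apply further checks.

```python
def wildcard_matches(pattern: str, hostname: str) -> bool:
    """Check whether a SAN DNS entry such as '*.ocbsf.svc' covers hostname.

    Simplified RFC 6125 rule: a '*' that forms the whole left-most label
    matches exactly one non-empty label; all other labels must match exactly
    (case-insensitively).
    """
    p_labels = pattern.lower().rstrip(".").split(".")
    h_labels = hostname.lower().rstrip(".").split(".")
    if len(p_labels) != len(h_labels) or not all(h_labels):
        return False
    if p_labels[0] == "*":
        return p_labels[1:] == h_labels[1:]
    return p_labels == h_labels


if __name__ == "__main__":
    for host in ("nf1stub.ocbsf.svc", "nf1stub.ocbsf.svc.cluster.local", "nf1stub.ocats.svc"):
        print(host, wildcard_matches("*.ocbsf.svc", host))
```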

3.1.3.3 Enable ATS GUI with HTTPS

Follow the steps to secure or enable TLS on the server.

- Create a Kubernetes secret by adding the files created above:

  kubectl create secret generic ocats-tls-secret --from-file=jenkinsserver.jks --from-file=ssl_rsa_certificate.crt --from-file=rsa_private_key_pkcs1.key --from-file=caroot.cer -n ocbsf

  Where,

  - jenkinsserver.jks: This file is needed when atsGuiTLSEnabled is set to true. It is necessary to open the ATS GUI with the secured TLS protocol.
  - ssl_rsa_certificate.crt: The client application certificate.
  - rsa_private_key_pkcs1.key: The RSA private key.
  - caroot.cer: The root certificate used during creation of the JKS file. It must be passed for Jenkins/ATS API communication.

  The following is a sample of the created secret:

  [cloud-user@platform-bastion-1 ~]$ kubectl describe secret ocats-tls-secret -n ocbsf
  Name:         ocats-tls-secret
  Namespace:    ocats
  Labels:       <none>
  Annotations:  <none>

  Type:  Opaque

  Data
  ====
  caroot.cer:                 1147 bytes
  ssl_rsa_certificate.crt:    1424 bytes
  jenkinsserver.jks:          2357 bytes
  rsa_private_key_pkcs1.key:  1675 bytes

- Apply the following changes in the values.yaml file:

  certificates:
    cert_secret_name: "ocats-tls-secret"
    ca_cert: "caroot.cer"
    client_cert: "ssl_rsa_certificate.crt"
    private_key: "rsa_private_key_pkcs1.key"
    jks_file: "jenkinsserver.jks"  # This parameter is needed when atsGuiTLSEnabled is set to true. This file is necessary for the ATS GUI to be opened with the secured TLS protocol.
    jks_password: "123456"         # This is the password given to the JKS file during creation.

  Change the atsGuiTLSEnabled Helm parameter value to true for ATS to get the certificates and support HTTPS for the GUI. You can then install ATS using the helm install command.

- Upload the caroot.cer file to the browser before accessing the GUI using the https protocol. For more details about uploading the file to the browser, refer to the Adding a Certificate in Browser section in Enable ATS GUI with HTTPS.

- You can now start ATS with the HTTPS protocol. The ATS GUI link has the format https://<IP>:<port>, for example, https://10.75.217.25:30301. The lock symbol in the browser indicates that the server is secured, or TLS enabled.
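ATS can only use the file names set in values.yaml if they are keys in the secret. For example, a private_key value ending in .pem fails when the secret holds a .key file. kubectl stores each --from-file argument under its base name.

The following Python sketch compares the two. It assumes that you have captured the output of kubectl get secret ocats-tls-secret -n ocbsf -o json as a string. The helper names are hypothetical. The same check applies to the cert_secret_name, ca_cert, client_cert, and private_key values of the Python stubs.

```python
import json

# certificates.* parameters in values.yaml that name a file stored in the secret
FILE_PARAMS = ("ca_cert", "client_cert", "private_key", "jks_file")


def secret_keys(kubectl_json: str) -> set:
    """Return the data keys from `kubectl get secret <name> -n <namespace> -o json` output."""
    return set(json.loads(kubectl_json).get("data", {}))


def missing_files(certificates: dict, keys: set) -> dict:
    """Return the file parameters whose value is not a key in the secret."""
    return {
        param: certificates[param]
        for param in FILE_PARAMS
        if param in certificates and certificates[param] not in keys
    }


if __name__ == "__main__":
    # Shape of the kubectl output, trimmed; real data values are Base64 strings.
    sample = json.dumps({"kind": "Secret", "data": {
        "caroot.cer": "", "ssl_rsa_certificate.crt": "",
        "jenkinsserver.jks": "", "rsa_private_key_pkcs1.key": "",
    }})
    values = {
        "cert_secret_name": "ocats-tls-secret",
        "ca_cert": "caroot.cer",
        "client_cert": "ssl_rsa_certificate.crt",
        "private_key": "rsa_private_key_pkcs1.pem",  # wrong extension, to show a failure
        "jks_file": "jenkinsserver.jks",
    }
    print(missing_files(values, secret_keys(sample)))
```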

3.1.4 Pushing the Images to Customer Docker Registry

This section describes the pre-deployment steps for deploying ATS and stub pods.

Preparing to deploy ATS and Stub Pods in Kubernetes Cluster

To deploy ATS and Stub pods in a Kubernetes Cluster, perform the following steps:

- Run the following command to extract the tar file content:

  tar -zxvf ocats-bsf-tools-25.1.200.0.0.tgz

  The output of this command is:

  ocats-bsf-pkg-25.1.200.tgz
  ocstub-pkg-25.1.201.tgz
  ocdns-pkg-25.1.203.tgz
  ocdiam-pkg-25.1.203.0.0.tgz

- Go to the ocats-bsf-tools-25.1.200.0.0 folder and run the following command to extract the Helm charts and Docker images of ATS:

  tar -zxvf ocats-bsf-pkg-25.1.200.0.0.tgz

  The output of this command is:

  ocats-bsf-25.1.200.tgz
  ocats-bsf-images-25.1.200.tar
  ocats-bsf-data-25.1.200.tgz

- Run the following command in your cluster to load the ATS Docker image:

  docker load --input ocats-bsf-images-25.1.200.tar

- Run the following commands to tag and push the ATS images:

  docker tag ocats-bsf:25.1.200 <registry>/ocats-bsf:25.1.200
  docker push <registry>/ocats-bsf:25.1.200

  Example:

  docker tag ocats-bsf:25.1.200 localhost:5000/ocats-bsf:25.1.200
  docker push localhost:5000/ocats-bsf:25.1.200

- Run the following command to untar the Helm charts in ocats-bsf-25.1.200.tgz:

  tar -zxvf ocats-bsf-25.1.200.tgz

- Update the registry name, image name, and tag in the ocats-bsf/values.yaml file as required. To do this, update the image.repository and image.tag parameters in the ocats-bsf/values.yaml file.

- In the ocats-bsf/values.yaml file, the atsFeatures parameter is configured to control ATS feature deliveries.

  atsFeatures:  ## DO NOT UPDATE this section without My Oracle Support team's support
    testCaseMapping: true                     # To display Test cases on GUI along with Features
    logging: true                             # To enable feature to collect applogs in case of failure
    lightWeightPerformance: false             # The Feature is not implemented yet
    executionWithTagging: true                # To enable Feature/Scenario execution with Tag
    scenarioSelection: false                  # The Feature is not implemented yet
    parallelTestCaseExecution: true           # To run ATS features parallel
    parallelFrameworkChangesIntegrated: true  # To run ATS features parallel
    mergedExecution: false                    # To execute ATS Regression and NewFeatures pipelines together in merged manner
    individualStageGroupSelection: false      # The Feature is not implemented yet
    parameterization: true                    # When set to false, the Configuration_Type parameter on the GUI will not be available.
    atsApi: true                              # To trigger ATS using ATS API
    healthcheck: true                         # To enable/disable ATS Health Check.
    atsGuiTLSEnabled: false                   # To run ATS GUI in https mode.
    atsCommunicationTLSEnabled: false         # If set to true, ATS will get necessary variables to communicate with SUT, Stub or other NFs with TLS enabled. It is not required in ASM environment.

  Note:

  It is recommended not to alter the atsFeatures flags.
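When you update image.repository and image.tag, the repository is everything before the tag, including any registry host and port. For the pushed image localhost:5000/ocats-bsf:25.1.200 in the example, image.repository is localhost:5000/ocats-bsf and image.tag is 25.1.200.

The following Python sketch builds the tag and push commands and performs that split. The helpers are hypothetical, and digest references (name@sha256:...) are rejected rather than parsed.

```python
def split_image_ref(ref: str):
    """Split '<registry>/<image>:<tag>' into (image.repository, image.tag).

    The tag separator is the last ':' after the last '/', so a registry port
    such as localhost:5000 stays in the repository part.
    """
    if "@" in ref:
        raise ValueError(f"digest references are not handled: {ref}")
    slash = ref.rfind("/")
    colon = ref.rfind(":")
    if colon <= slash:  # no ':' after the final path component, so no tag
        raise ValueError(f"no tag in image reference: {ref}")
    return ref[:colon], ref[colon + 1:]


def docker_commands(local_image: str, registry: str):
    """Return the docker tag and push command lines for local_image on registry."""
    target = f"{registry.rstrip('/')}/{local_image}"
    return [f"docker tag {local_image} {target}", f"docker push {target}"]


if __name__ == "__main__":
    cmds = docker_commands("ocats-bsf:25.1.200", "localhost:5000")
    print("\n".join(cmds))
    repository, tag = split_image_ref(cmds[1].split()[-1])
    print(f"image.repository: {repository}\nimage.tag: {tag}")
```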

3.1.5 Configuring ATS

3.1.5.1 Enabling Static Port

In the ocats-bsf/values.yaml file, under the service section, set the value of the staticNodePortEnabled parameter to true and enter a valid nodePort value for the staticNodePort parameter.

service:
  customExtension:
    labels: {}
    annotations: {}
  type: LoadBalancer
  ports:
    http:
      port: "8080"
      staticNodePortEnabled: false
      staticNodePort: ""

3.1.5.2 Enable Static API Node Port

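In the ocats-bsf/values.yaml file, under the service section, set the value of the staticNodePortEnabled parameter of the api port to true and enter a valid nodePort value for the staticNodePort parameter.

service:
  customExtension:
    labels: {}
    annotations: {}
  type: LoadBalancer
  ports:
    api:
      port: "5001"
      staticNodePortEnabled: false
      staticNodePort: ""

3.1.5.3 Service Account Requirements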

The service account used by ATS requires the following rules:

rules:
- apiGroups: ["extensions"]
  resources: ["deployments", "replicasets"]
  verbs: ["watch", "get", "list", "update"]
- apiGroups: ["apps"]
  resources: ["deployments", "replicasets"]
  verbs: ["watch", "get", "list", "update"]
- apiGroups: [""]
  resources: ["pods", "services", "secrets", "configmaps"]
  verbs: ["watch", "get", "list", "delete", "update", "create"]
- apiGroups: [""]
  resources: ["pods/log"]
  verbs: ["get", "list"]

3.1.5.4 Enabling Aspen Service Mesh

This section provides information on how to enable Aspen service mesh while deploying ATS for Binding Support Function. The configurations mentioned in this section are optional and should be performed only if ASM is required.

To enable service mesh for BSF ATS, perform the following steps:

- In the service section of the values.yaml file, the serviceMeshCheck parameter is set to false by default. To enable service mesh, set the value of serviceMeshCheck to true. The following is a snippet of the service section in the YAML file:

  service:
    customExtension:
      labels: {}
      annotations: {}
    type: LoadBalancer
    ports:
      https:
        port: "8443"
        staticNodePortEnabled: false
        staticNodePort: ""
      http:
        port: "8080"
        staticNodePortEnabled: false
        staticNodePort: ""
      api:
        port: "5001"
        staticNodePortEnabled: false
        staticNodePort: ""
    serviceMeshCheck: true

- If ASM is not enabled at the global level for the namespace, run the following command to enable it before deploying ATS:

  kubectl label --overwrite namespace <namespace_name> istio-injection=enabled

  For example:

  kubectl label --overwrite namespace ocbsf istio-injection=enabled

- Uncomment and add the following annotations under the lbDeployments and nonlbDeployments sections of the global section in the values.yaml file:

  traffic.sidecar.istio.io/excludeInboundPorts: "9000"
  traffic.sidecar.istio.io/excludeOutboundPorts: "9000"

  The following is a snippet from the values.yaml file of BSF:

  /home/cloud-user/ocats-bsf/ocats-bsf-tools-25.1.200.0.0/ocats-bsf-pkg-25.1.200.0.0/ocats-bsf/ vim values.yaml

  customExtension:
    allResources:
      labels: {}
      annotations: {
        #Enable this section for service-mesh based installation
        traffic.sidecar.istio.io/excludeInboundPorts: "9000",
        traffic.sidecar.istio.io/excludeOutboundPorts: "9000"
      }
    lbDeployments:
      labels: {}
      annotations: {
        traffic.sidecar.istio.io/excludeInboundPorts: "9000",
        traffic.sidecar.istio.io/excludeOutboundPorts: "9000"}

- If service mesh is enabled, create a destination rule for fetching the metrics from Prometheus. In most deployments, Prometheus is kept outside the service mesh. You therefore need a destination rule to communicate between the TLS-enabled entity (ATS) and the non-TLS entity (Prometheus). You can create a destination rule using the following sample YAML file:

  kubectl apply -f - <<EOF
  apiVersion: networking.istio.io/v1alpha3
  kind: DestinationRule
  metadata:
    name: prometheus-dr
    namespace: ocats
  spec:
    host: oso-prometheus-server.pcf.svc.cluster.local
    trafficPolicy:
      tls:
        mode: DISABLE
  EOF

  In the destination rule:

  - name indicates the name of the destination rule.
  - namespace indicates where ATS is deployed.
  - host indicates the hostname of the Prometheus server.

- Update the ocbsf_custom_values_servicemesh_config_25.1.200.yaml file with the following additional configuration under the virtualService section for the Egress Gateway:

  virtualService:
    - name: nrfvirtual1
      host: ocbsf-ocbsf-egress-gateway
      destinationhost: ocbsf-ocbsf-egress-gateway
      port: 8000
      exportTo: |-
        [ "." ]
      attempts: "0"

  Where, the host or destination name uses the format <release_name>-<egress_svc_name>. You must update the host or destination name as per the deployment.

- For the ServerHeader and SessionRetry features, perform the following configurations under envoyFilters for nf1stub, nf11stub, and nf12stub in the ocbsf-servicemesh-config-custom-values-25.1.200.yaml file:

  Note:

  The occnp_custom_values_servicemesh_config YAML file and Helm chart version names differ based on the deployed BSF NF version. For example, "occnp_custom_values_servicemesh_config_24.3.0.yaml" or "occnp_custom_values_servicemesh_config_24.3.1.yaml".

  envoyFilters:
    - name: serverheaderfilter-nf1stub
      labelselector: "app: nf1stub-ocstub-py"
      configpatch:
        - applyTo: NETWORK_FILTER
          filtername: envoy.filters.network.http_connection_manager
          operation: MERGE
          typeconfig: type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
          configkey: server_header_transformation
          configvalue: PASS_THROUGH
    - name: serverheaderfilter-nf11stub
      labelselector: "app: nf11stub-ocstub-py"
      configpatch:
        - applyTo: NETWORK_FILTER
          filtername: envoy.filters.network.http_connection_manager
          operation: MERGE
          typeconfig: type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
          configkey: server_header_transformation
          configvalue: PASS_THROUGH
    - name: serverheaderfilter-nf12stub
      labelselector: "app: nf12stub-ocstub-py"
      configpatch:
        - applyTo: NETWORK_FILTER
          filtername: envoy.filters.network.http_connection_manager
          operation: MERGE
          typeconfig: type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
          configkey: server_header_transformation
          configvalue: PASS_THROUGH

- Perform a helm upgrade on the ocbsf-servicemesh-config release using the modified ocbsf_custom_values_servicemesh_config_25.1.200.yaml file:

  helm upgrade <helm_release_name_for_servicemesh> -n <namespace> <servicemesh_charts> -f <servicemesh-custom.yaml>

  For example,

  helm upgrade ocbsf-servicemesh-config ocbsf-servicemesh-config-25.1.200.tgz -n ocbsf -f ocbsf_custom_values_servicemesh_25.1.200.yaml

- Configure DNS for the Alternate Route service. For more information, see Post-Installation Steps.
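The three envoyFilters entries for the stubs differ only in the stub name. Generating them from one template avoids copy-paste errors when stubs are added or renamed.

The following Python sketch does this. The field names and values are copied from the envoyFilters snippet for nf1stub, nf11stub, and nf12stub, and the helper functions are hypothetical. The output is printed as JSON, which YAML parsers also accept, so you can transcribe the entries into the custom values file.

```python
import json

# Stub names used in this section; labels follow the "app: <stub>-ocstub-py" pattern
STUBS = ("nf1stub", "nf11stub", "nf12stub")

HCM = "envoy.filters.network.http_connection_manager"
HCM_TYPE = ("type.googleapis.com/envoy.extensions.filters.network."
            "http_connection_manager.v3.HttpConnectionManager")


def server_header_filter(stub: str) -> dict:
    """Build one envoyFilters entry that passes the stub's Server header through."""
    return {
        "name": f"serverheaderfilter-{stub}",
        "labelselector": f"app: {stub}-ocstub-py",
        "configpatch": [{
            "applyTo": "NETWORK_FILTER",
            "filtername": HCM,
            "operation": "MERGE",
            "typeconfig": HCM_TYPE,
            "configkey": "server_header_transformation",
            "configvalue": "PASS_THROUGH",
        }],
    }


def envoy_filters(stubs=STUBS) -> dict:
    """Return the envoyFilters block for all the given stubs."""
    return {"envoyFilters": [server_header_filter(s) for s in stubs]}


if __name__ == "__main__":
    print(json.dumps(envoy_filters(), indent=2))
```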

3.1.5.5 Enabling Health Check

This section describes how to enable Health Check for ATS.

To enable Health Check, in the ocats-bsf/values.yaml file, set the value of the healthcheck parameter to true and enter a valid value to select either the Webscale or the OCCNE environment.
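The sshDetails values in the Health Check snippets are Base64 encoded, which is what the echo -n '<value>' | base64 commands produce. Base64 is an encoding, not encryption.

The following Python sketch encodes a set of plain-text values in one step. The dictionary keys mirror the snippets in this section, and the helper names are hypothetical. For example, it encodes OCCNE as T0NDTkU= and WEBSCALE as V0VCU0NBTEU=, which are the envtype values used in the snippets.

```python
import base64


def b64(value: str) -> str:
    """Same result as: echo -n '<value>' | base64"""
    return base64.b64encode(value.encode("utf-8")).decode("ascii")


def encode_ssh_details(plain: dict) -> dict:
    """Base64-encode every sshDetails value except secretname.

    secretname is shown unencoded ("healthchecksecret") in the snippets, so it
    is passed through. Anyone who can read values.yaml can decode the rest.
    """
    return {k: (v if k == "secretname" else b64(v)) for k, v in plain.items()}


if __name__ == "__main__":
    print(encode_ssh_details({
        "secretname": "healthchecksecret",
        "envtype": "OCCNE",
        "occnehostip": "10.17.219.65",
    }))
```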

- To select the OCCNE environment, set envtype to OCCNE and update the values of the following parameters:
  - Webscale: Update the value as false.
  - envtype: T0NDTkU= (that is, envtype=$(echo -n 'OCCNE' | base64))
  - occnehostip: OCCNE host IP address
  - occnehostusername: OCCNE host username
  - occnehostpassword: OCCNE host password

  After the configurations are done, Base64-encode the parameters and provide the values as shown in the following snippet:

  atsFeatures:  ## DO NOT UPDATE this section without Engineering team's permission
    healthcheck: true  # To enable/disable ATS Health Check.

  sshDetails:
    secretname: "healthchecksecret"
    envtype: "T0NDTkU="
    occnehostip: "MTAuMTcuMjE5LjY1"
    occnehostusername: "dXNlcm5hbWU="
    occnehostpassword: "KioqKg=="

- To select the Webscale environment, set envtype to WEBSCALE and update the values of the following parameters:
  - Webscale: Update the value as true.
  - envtype: V0VCU0NBTEU= (that is, envtype=$(echo -n 'WEBSCALE' | base64))

  After the configurations are done, Base64-encode the parameters and provide the values as shown in the following snippet:

  atsFeatures:  ## DO NOT UPDATE this section without Engineering team's permission
    healthcheck: true  # To enable/disable ATS Health Check.

  sshDetails:
    secretname: "healthchecksecret"
    envtype: "V0VCU0NBTEU="
    webscalejumpip: "MTAuNzAuMTE3LjQy"
    webscalejumpusername: "dXNlcm5hbWU="
    webscalejumppassword: "KioqKg=="
    webscaleprojectname: "KioqKg=="
    webscalelabserverFQDN: "KioqKg=="
    webscalelabserverport: "KioqKg=="
    webscalelabserverusername: "KioqKg=="
    webscalelabserverpassword: "KioqKg=="

Note:

Once ATS is deployed with the Health Check feature enabled or disabled, this setting cannot be changed. To change the configuration, you must reinstall ATS.

3.1.5.6 Enabling Persistent Volume

Note:

The steps provided in this section are optional and required only if Persistent Volume needs to be enabled.

ATS supports persistent storage to retain ATS historical build execution data, test cases, and one-time environment variable configurations. With this enhancement, you can decide whether to use a persistent volume based on your resource requirements. By default, the persistent volume feature is not enabled.

To enable persistent storage, perform the following steps:

- Create a PVC using the PersistentVolumeClaim.yaml file and associate it with the ATS pod.

  Sample PersistentVolumeClaim.yaml file:

  apiVersion: v1
  kind: PersistentVolumeClaim
  metadata:
    name: <Enter the PVC Name>
    annotations:
  spec:
    storageClassName: <Provide the Storage Class Name>
    accessModes:
      - ReadWriteOnce
    resources:
      requests:
        storage: <Provide the size of the PV>

  - Set name to the PVC name.
  - Set storageClassName to the storage class name.
  - Set storage to the size of the persistent volume.

  Sample PVC configuration:

  apiVersion: v1
  kind: PersistentVolumeClaim
  metadata:
    name: bsf-pvc-25.1.200
    annotations:
  spec:
    storageClassName: standard
    accessModes:
      - ReadWriteOnce
    resources:
      requests:
        storage: 1Gi

- Run the following command to create the PVC:

kubectl apply -f <filename> -n <namespace>

For example:

kubectl apply -f PersistentVolumeClaim.yaml -n ocbsf

Output:

persistentvolumeclaim/bsf-pvc-25.1.200 created

- After the PVC is created, run the following command to verify that it is bound in the namespace and available:

kubectl get pvc -n <namespace used for pvc creation>

For example:

kubectl get pvc -n ocbsf

Sample output:

NAME               STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
bsf-pvc-25.1.200   Bound    pvc-65484045-3805-4064-9fc3-f9eeeaccc8b8   1Gi        RWO            standard       11s

Verify that STATUS is Bound and that the remaining parameters, such as NAME, CAPACITY, ACCESS MODES, and STORAGECLASS, match the values in the PersistentVolumeClaim.yaml file.

Note:

If there is an issue with the PV creation, do not proceed to the next step. Contact your administrator to get the PV created.

- Enable the PVC:

- Set the PVEnabled flag to true.

- Set PVClaimName to the PVC created in Step 1.

PVEnabled: true
PVClaimName: "bsf-pvc-25.1.200"

Note:

Make sure that ATS is deployed before proceeding to the next steps.

- Copy the <nf_main_folder> and <jenkins jobs> folders from the tar file to the ATS pod, and then restart the pod:

- Extract the tar file:

tar -xvf ocats-bsf-data-25.1.200.tgz

- Run the following commands to copy the required folders:

kubectl cp ocats-bsf-data-25.1.200/ocbsf_tests <namespace>/<pod-name>:/var/lib/jenkins/
kubectl cp ocats-bsf-data-25.1.200/jobs <namespace>/<pod-name>:/var/lib/jenkins/.jenkins/

- Restart the pod:

kubectl delete po <pod-name> -n <namespace>


- After the pod is up and running, log in to the Jenkins console and configure the Discard old Builds option to set the number of Jenkins builds to retain in the persistent volume.

Figure 3-1 Discarding Old Builds

Note:

If Discard old Builds is not configured, the persistent volume can fill up when there is a large number of builds.

For more details on Persistent Volume Storage, see Persistent Volume for 5G ATS.
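The PVC steps above can also be scripted. The following Python sketch is illustrative only and is not part of the ATS package. It builds the same PersistentVolumeClaim manifest as the sample file and checks the STATUS column of the `kubectl get pvc` output. The function names are hypothetical, and the parser assumes the default column layout shown in the sample output.

```python
import json
from typing import Any, Dict


def build_pvc_manifest(name: str, storage_class: str, size: str) -> Dict[str, Any]:
    """Return a PersistentVolumeClaim manifest mirroring the sample PersistentVolumeClaim.yaml."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "storageClassName": storage_class,
            "accessModes": ["ReadWriteOnce"],
            "resources": {"requests": {"storage": size}},
        },
    }


def pvc_is_bound(kubectl_output: str, name: str) -> bool:
    """Return True if the named PVC shows STATUS Bound in `kubectl get pvc` table output."""
    for line in kubectl_output.splitlines()[1:]:  # the first line is the column header
        columns = line.split()
        if len(columns) >= 2 and columns[0] == name:
            return columns[1] == "Bound"
    return False


if __name__ == "__main__":
    manifest = build_pvc_manifest("bsf-pvc-25.1.200", "standard", "1Gi")
    # kubectl also accepts JSON manifests, so this output can be saved and passed to `kubectl apply -f`.
    print(json.dumps(manifest, indent=2))
```

For example, save the printed manifest as bsf-pvc.json and apply it with `kubectl apply -f bsf-pvc.json -n ocbsf`. Then pass the text output of `kubectl get pvc -n ocbsf` to `pvc_is_bound` before you enable PVEnabled.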

3.1.5.7 ATS-BSF API Extended Support

The ATS application programming interface (API) feature provides APIs to perform routine ATS tasks, such as starting, monitoring, and stopping the ATS suite.

To enable this feature, set atsApi to true under atsFeatures in the values.yaml file:

atsFeatures:
  atsApi: true

For more details about the ATS API feature, see the ATS API section.

This feature has been extended to support running individual features, scenarios, stages, or groups, or running test cases based on tags, through the API. To do this, specify the features, scenarios, stages, groups, or tags in the curl request to the server.

For more details about the API interfaces, see the Use the RESTful Interfaces section.

3.1.6 Deploying ATS and Pods

3.1.6.1 Deploying ATS in Kubernetes Cluster

Important:

This procedure is for backward-porting purposes only. Do not use it as the pod deployment procedure for subsequent releases.

Prerequisite: Make sure that the old PVC, which contains the pod data of the old release, is available.

To deploy ATS, perform the following steps:

- Run the following command to deploy ATS using the updated Helm charts:

Note:

Ensure that all the components, that is, ATS, the stub pods, and CNC BSF, are deployed in the same namespace.

Using Helm:

helm install <release_name> ocats-bsf-25.1.200.tgz --namespace <namespace_name> -f <values-yaml-file>

For example:

helm install ocats ocats-bsf-25.1.200.tgz --namespace ocbsf -f ocats-bsf/values.yaml

- Run the following command to verify the ATS deployment:

helm ls -n ocbsf

If the deployment is successful, the status is DEPLOYED. Sample output:

NAME   REVISION  UPDATED                   STATUS    CHART               APP VERSION   NAMESPACE
ocats  1         Mon Nov 14 14:56:11 2020  DEPLOYED  ocats-bsf-25.1.200  25.1.200.0.0  ocbsf
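With Helm 3, `helm ls -n ocbsf -o json` prints the releases as a JSON array instead of the table shown above. The following minimal sketch checks whether a release is deployed. It assumes that JSON layout, with one object per release that has `name` and `status` fields. The function names are hypothetical.

```python
import json
from typing import Optional


def release_status(helm_ls_json: str, release: str) -> Optional[str]:
    """Return the status field of one release from `helm ls -o json` output, or None if absent."""
    for entry in json.loads(helm_ls_json):
        if entry.get("name") == release:
            return entry.get("status")
    return None


def is_deployed(helm_ls_json: str, release: str) -> bool:
    """Helm 3 reports "deployed" and the Helm 2 table shows "DEPLOYED", so compare case-insensitively."""
    status = release_status(helm_ls_json, release)
    return status is not None and status.lower() == "deployed"
```

For example, pass the standard output of `helm ls -n ocbsf -o json` and the release name ocats to `is_deployed`.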

3.1.6.2 Deploying Stub Pod in Kubernetes Cluster

- Navigate to the ocats-bsf-tools-25.1.200.0.0 folder and run the following command:

tar -zxvf ocstub-pkg-25.1.201.0.0.tgz

The output of the command shows:

ocstub-py-25.1.201.tgz
ocstub-py-image-25.1.201.tar

- Deploy the additional stubs required to validate the session retry feature. You can use nf11stub or nf12stub as an alternate FQDN for nf1stub.

- Run the following command to load the stub image:

docker load --input ocstub-py-image-25.1.201.tar

- Tag and push the image to your Docker registry using the following commands:

docker tag ocstub-py:25.1.201 localhost:5000/ocstub-py:25.1.201
docker push localhost:5000/ocstub-py:25.1.201

- Untar the Helm chart ocstub-py-25.1.201.tgz and update the registry name, image name, and tag (if required) in the ocstub-py/values.yaml file:

tar -zxvf ocstub-py-25.1.201.tgz

Note:

From 24.2.0 onwards, service port names are configurable in ocstub-py. However, as per the Istio standard, it is advisable to keep the default values as they are. Example:

names:
  http: "http"
  h2c: "http2-h2c"
  h2: "http2-h2"

- If required, change apiVersion to apps/v1 in the ocstub-py/templates/deployment.yaml file:

apiVersion: apps/v1

Note:

If support for the Predefined_priming feature is required, perform the following steps to configure Predefined_priming:

- Copy the ocstub-py/values.yaml file to a new file named pre_priming_values.yaml in the same ocstub-py folder.

- Edit the ocstub-py/pre_priming_values.yaml file.

- Set the preConfig enabled flag to true, and replace the default configuration under the predefined_prime_configuration section with the following configuration.

Predefined_priming configuration:

preConfig:
  enabled: true
predefined_prime_configuration: |+
  [
    {
      "method": "GET",
      "statuscode": "200",
      "url": "/nnrf-nfm/v1/nf-instances/fe7d992b-0541-4c7d-ab84-c6d70b1b0666",
      "data": "{\"nfInstanceId\": \"fe7d992b-0541-4c7d-ab84-c6d70b1b0666\", \"nfType\": \"BSF\", \"nfStatus\": \"REGISTERED\", \"heartBeatTimer\": 2, \"fqdn\": \"ocbsf1-2-api-gateway.bsf1-2.svc.atlantic.morrisville.us.lab.oracle.com\", \"priority\": 1, \"capacity\": 1, \"load\": 2, \"bsfInfo\": {\"ipv4AddressRanges\": [{\"start\": \"10.0.0.1\", \"end\": \"10.113.255.255\"}], \"ipv6PrefixRanges\": [{\"start\": \"2800:a00:cc03::/64\", \"end\": \"2800:a00:cc04::/64\"}]}, \"nfServices\": [{\"serviceInstanceId\": \"03063893-cf9e-4f7a-9827-111111111111\", \"serviceName\": \"nbsf-management\", \"versions\": [{\"apiVersionInUri\": \"v1\", \"apiFullVersion\": \"1.0.0\", \"expiry\": \"2019-08-03T18:66:08.871+0000\"}], \"scheme\": \"http\", \"nfServiceStatus\": \"REGISTERED\", \"fqdn\": \"ocbsf1-2-api-gateway.bsf1-2.svc.atlantic.morrisville.us.lab.oracle.com\", \"interPlmnFqdn\": null, \"ipEndPoints\": [{\"ipv4Address\": \"10.233.22.149\", \"transport\": \"TCP\", \"port\": 80}], \"apiPrefix\": null, \"allowedNfTypes\": [\"PCF\", \"AF\", \"NEF\"], \"priority\": 1, \"capacity\": 1, \"load\": 2}]}",
      "headers": "{\"Content-Type\": \"application/json\"}"
    },
    {
      "method": "PUT",
      "statuscode": "201",
      "url": "/nnrf-nfm/v1/nf-instances/fe7d992b-0541-4c7d-ab84-c6d70b1b0666",
      "data": "{\"nfInstanceId\": \"fe7d992b-0541-4c7d-ab84-c6d70b1b0666\", \"nfType\": \"BSF\", \"nfStatus\": \"REGISTERED\", \"heartBeatTimer\": 30, \"fqdn\": \"ocbsf1-2-api-gateway.bsf1-2.svc.atlantic.morrisville.us.lab.oracle.com\", \"priority\": 1, \"capacity\": 1, \"load\": 2, \"bsfInfo\": {\"ipv4AddressRanges\": [{\"start\": \"10.0.0.1\", \"end\": \"10.113.255.255\"}], \"ipv6PrefixRanges\": [{\"start\": \"2800:a00:cc03::/64\", \"end\": \"2800:a00:cc04::/64\"}]}, \"nfServices\": [{\"serviceInstanceId\": \"03063893-cf9e-4f7a-9827-111111111111\", \"serviceName\": \"nbsf-management\", \"versions\": [{\"apiVersionInUri\": \"v1\", \"apiFullVersion\": \"1.0.0\", \"expiry\": \"2019-08-03T18:66:08.871+0000\"}], \"scheme\": \"http\", \"nfServiceStatus\": \"REGISTERED\", \"fqdn\": \"ocbsf1-2-api-gateway.bsf1-2.svc.atlantic.morrisville.us.lab.oracle.com\", \"interPlmnFqdn\": null, \"ipEndPoints\": [{\"ipv4Address\": \"10.233.22.149\", \"transport\": \"TCP\", \"port\": 80}], \"apiPrefix\": null, \"allowedNfTypes\": [\"PCF\", \"AF\", \"NEF\"], \"priority\": 1, \"capacity\": 1, \"load\": 2}]}",
      "headers": "{\"Content-Type\": \"application/json\"}"
    },
    {
      "method": "PATCH",
      "statuscode": "204",
      "url": "/nnrf-nfm/v1/nf-instances/fe7d992b-0541-4c7d-ab84-c6d70b1b0666",
      "data": "{}",
      "headers": "{\"Content-Type\": \"application/json\"}"
    },
    {
      "method": "POST",
      "statuscode": "201",
      "url": "/nnrf-nfm/v1/subscriptions",
      "data": "{\"nfStatusNotificationUri\": \"http://ocbsf-ocbsf-ingress-gateway.ocpcf.svc/nnrf-client/v1/notify\", \"reqNfType\": \"BSF\", \"subscriptionId\": \"2d77e0de-15a9-11ea-8c5b-b2ca002e6839\", \"validityTime\": \"2050-12-26T09:34:30.816Z\"}",
      "headers": "{\"Content-Type\": \"application/json\"}"
    }
  ]

Note:

- The predefined_prime_configuration contains variables such as nfInstanceId, nfType, and fqdn in the content of data. Verify and update these variables based on the payload that the NRF must include in its response to a request.

- The default value of the nfInstanceId variable is fe7d992b-0541-4c7d-ab84-c6d70b1b0666.

- Deploy the stub:

helm install <release_name> ocstub-py --set env.NF=<NF> --set env.LOG_LEVEL=<DEBUG/INFO> --set service.name=<service_name> --set service.appendReleaseName=false --namespace=<namespace_name> -f <values-yaml-file>

Install nf1stub and nf11stub with the updated ocstub-py/pre_priming_values.yaml file:

helm install nf1stub ocstub-py --set env.NF=BSF --set env.LOG_LEVEL=DEBUG --set service.name=nf1stub --set service.appendReleaseName=false --namespace=ocbsf -f ocstub-py/pre_priming_values.yaml
helm install nf11stub ocstub-py --set env.NF=BSF --set env.LOG_LEVEL=DEBUG --set service.name=nf11stub --set service.appendReleaseName=false --namespace=ocbsf -f ocstub-py/pre_priming_values.yaml

Install nf12stub with the default values.yaml file:

helm install nf12stub ocstub-py --set env.NF=BSF --set env.LOG_LEVEL=DEBUG --set service.name=nf12stub --set service.appendReleaseName=false --namespace=ocbsf -f ocstub-py/values.yaml

If support for the Predefined_priming feature is not required, perform the Helm installation using the default values.yaml file:

helm install <release_name> ocstub-py --set env.NF=<NF> --set env.LOG_LEVEL=<DEBUG/INFO> --set service.name=<service_name> --set service.appendReleaseName=false --namespace=<namespace_name> -f <values-yaml-file>

For example:

helm install nf1stub ocstub-py --set env.NF=BSF --set env.LOG_LEVEL=DEBUG --set service.name=nf1stub --set service.appendReleaseName=false --namespace=ocbsf -f ocstub-py/values.yaml
helm install nf11stub ocstub-py --set env.NF=BSF --set env.LOG_LEVEL=DEBUG --set service.name=nf11stub --set service.appendReleaseName=false --namespace=ocbsf -f ocstub-py/values.yaml
helm install nf12stub ocstub-py --set env.NF=BSF --set env.LOG_LEVEL=DEBUG --set service.name=nf12stub --set service.appendReleaseName=false --namespace=ocbsf -f ocstub-py/values.yaml

- Run the following command to verify the stub deployment:

helm ls -n ocbsf

Sample output:

NAME REVISION UPDATED STATUS CHART APP VERSION NAMESPACE nf11stub 1 Thu Jul 29 05:55:48 2024 DEPLOYED ocstub-py-25.1.201 25.1.201.0.0 ocbsf nf12stub 1 Thu Jul 29 05:55:50 2024 DEPLOYED ocstub-py-25.1.201 25.1.201.0.0 ocbsf nf1stub 1 Thu Jul 29 05:55:47 2024 DEPLOYED ocstub-py-25.1.201 25.1.201.0.0 ocbsf - Run the following command to verify the ATS and Stubs

deployment

status:

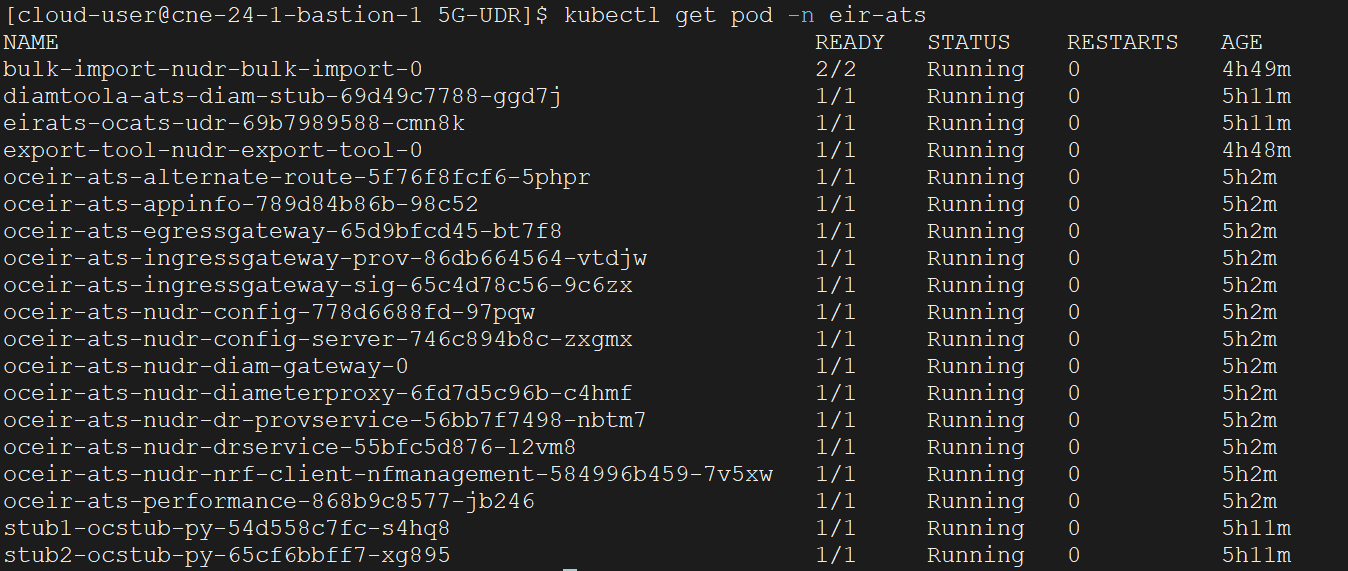

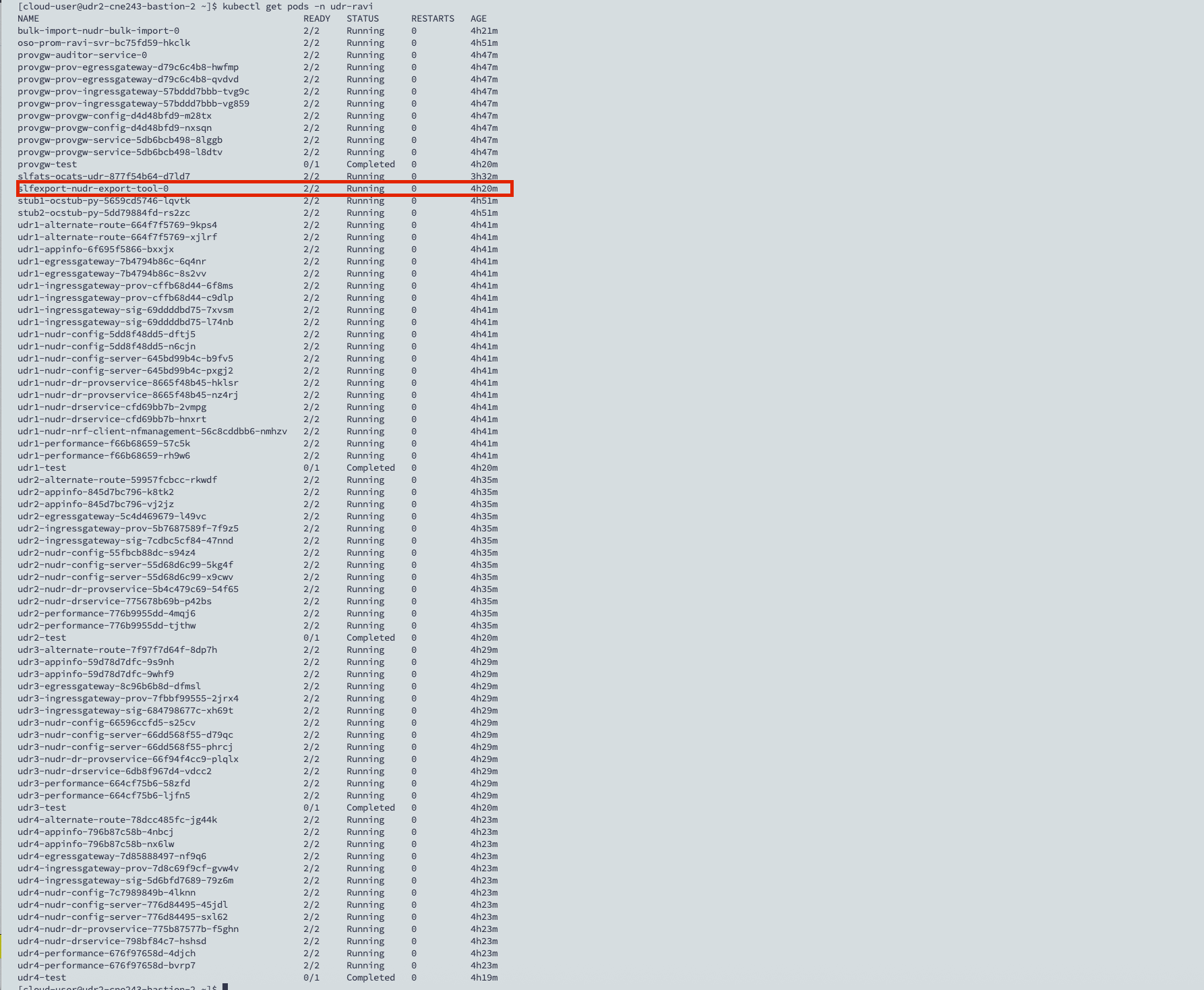

helm status -n ocbsf - Run the following command to verify if all the services are

installed.

kubectl get po -n ocbsfSample output:

NAME READY STATUS RESTARTS AGE nf11stub-ocstub-py-7bffd6dcd7-ftm5f 1/1 Running 0 3d23h nf12stub-ocstub-py-547f7cb99f-7mpll 1/1 Running 0 3d23h nf1stub-ocstub-py-bdd97cb9-xjrkx 1/1 Running 0 3d23h


- Verify the changes related to the stub predefined prime configuration:

- Run the following command to verify the status of all the config-maps:

kubectl get cm -n ocbsf

Notice the change in the number of config-maps. The output includes two extra config-maps, one for each stub deployed with the predefined prime configuration.

For example:

NAME                 DATA   AGE
cm-pystub-nf1stub    1      3h35m
cm-pystub-nf11stub   1      3h35m

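The three stub installations differ only in the release name and the values file. The following Python sketch is an illustration, not an Oracle-provided tool. It generates the Helm 3 commands for the stub plan above and checks the `kubectl get po` output for pods that are not Running or not fully ready. `STUB_PLAN`, `stub_install_args`, and `pods_ready` are hypothetical names. If the Predefined_priming feature is not required, map all three releases to ocstub-py/values.yaml instead.

```python
import shlex
from typing import List, Optional

# Release name -> values file, following the nf1stub/nf11stub/nf12stub plan above.
STUB_PLAN = {
    "nf1stub": "ocstub-py/pre_priming_values.yaml",
    "nf11stub": "ocstub-py/pre_priming_values.yaml",
    "nf12stub": "ocstub-py/values.yaml",
}


def stub_install_args(name: str, values_file: str, namespace: str = "ocbsf",
                      nf: str = "BSF", log_level: str = "DEBUG") -> List[str]:
    """Build a Helm 3 `helm install` argument list for one ocstub-py release."""
    return [
        "helm", "install", name, "ocstub-py",
        "--set", f"env.NF={nf}",
        "--set", f"env.LOG_LEVEL={log_level}",
        "--set", f"service.name={name}",
        "--set", "service.appendReleaseName=false",
        f"--namespace={namespace}",
        "-f", values_file,
    ]


def pods_ready(kubectl_get_po: str, expected_containers: Optional[int] = None) -> bool:
    """True if every pod row is Running and all of its containers are ready."""
    rows = [r for r in kubectl_get_po.strip().splitlines()[1:] if r.strip()]  # skip header
    for row in rows:
        _name, ready, status = row.split()[:3]
        ready_count, total = (int(part) for part in ready.split("/"))
        if status != "Running" or ready_count != total:
            return False
        if expected_containers is not None and total != expected_containers:
            return False
    return bool(rows)


if __name__ == "__main__":
    for release, values in STUB_PLAN.items():
        print(shlex.join(stub_install_args(release, values)))
```

Passing `expected_containers=2` can be useful when a service mesh sidecar is injected, because each pod should then report 2/2.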

Updating the Predefined_priming configurations

Note:

This procedure is applicable only when the Predefined_priming configuration is enabled.

- Run the following command to verify the status of all the config-maps:

kubectl get cm -n ocbsf

- Perform the following steps separately for the nf1stub and nf11stub pods:

- Edit the config-map of the pod.

To edit the config-map of nf1stub:

kubectl edit cm cm-pystub-nf1stub -n ocbsf

To edit the config-map of nf11stub:

kubectl edit cm cm-pystub-nf11stub -n ocbsf

- Edit the configurations as required, and then save and close the config-maps.

- Restart the nf1stub and nf11stub pods.

- Verify the logs of both pods to confirm the changes.
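The predefined_prime_configuration is a JSON array whose data and headers fields are themselves escaped JSON strings, so a small typo made while editing the config-map can be easy to miss. The following Python sketch checks a copy of the edited array before you save it. It assumes the entry format shown in the Predefined_priming configuration. The sketch is illustrative only, and `validate_prime_config` is a hypothetical helper. The nfInstanceId check follows the convention in the sample, where the ID in the URL matches the ID in the payload.

```python
import json
from typing import List

REQUIRED_KEYS = ("method", "statuscode", "url", "data", "headers")


def validate_prime_config(text: str) -> List[str]:
    """Return human-readable problems found in a predefined_prime_configuration value."""
    try:
        entries = json.loads(text)
    except json.JSONDecodeError as exc:
        return [f"configuration is not valid JSON: {exc}"]
    if not isinstance(entries, list):
        return ["configuration must be a JSON array"]

    problems: List[str] = []
    for i, entry in enumerate(entries):
        if not isinstance(entry, dict):
            problems.append(f"entry {i}: not a JSON object")
            continue
        missing = [key for key in REQUIRED_KEYS if key not in entry]
        if missing:
            problems.append(f"entry {i}: missing {', '.join(missing)}")
            continue
        # "data" and "headers" hold JSON documents encoded as escaped strings.
        try:
            payload = json.loads(entry["data"])
            json.loads(entry["headers"])
        except (TypeError, json.JSONDecodeError):
            problems.append(f"entry {i}: data or headers is not an escaped JSON string")
            continue
        # For NF instance URLs, the ID in the path should match nfInstanceId in the payload.
        url = entry["url"]
        if "/nf-instances/" in url and isinstance(payload, dict) and "nfInstanceId" in payload:
            url_id = url.rstrip("/").rsplit("/", 1)[-1]
            if payload["nfInstanceId"] != url_id:
                problems.append(f"entry {i}: nfInstanceId {payload['nfInstanceId']} does not match URL {url}")
    return problems
```

An empty list means that no problems were detected. Any other result lists the entries to fix before you restart the stub pods.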

3.1.6.3 Deploying DNS Stub in Kubernetes Cluster

Note:

Ensure that sufficient resource requests and limits are set for the DNS Stub. Set the resource request and limit values in the resources section of the values.yaml file as follows:

resources: {}
  # We usually recommend not to specify default resources and to leave this as a conscious
  # choice for the user. This also increases chances charts run on environments with little
  # resources, such as Minikube. If you do want to specify resources, uncomment the following
  # lines, adjust them as necessary, and remove the curly braces after 'resources:'.
  # limits:
  #   cpu: 1000m
  #   memory: 1024Mi
  # requests:
  #   cpu: 500m
  #   memory: 500Mi

- Go to the ocats-bsf-tools-25.1.200.0.0 folder and run the following command to extract the content of the ocdns package tar file:

tar -zxvf ocdns-pkg-25.1.203.0.0.tgz

Sample output:

[cloud-user@platform-bastion-1 ocdns-pkg-25.1.203.0.0]$ ls -ltrh
total 211M
-rw-------. 1 cloud-user cloud-user 211M Mar 14 14:49 ocdns-bind-image-25.1.203.tar
-rw-r--r--. 1 cloud-user cloud-user 2.9K Mar 14 14:49 ocdns-bind-25.1.203.tgz

- Run the following command in your cluster to load the DNS Stub image:

docker load --input ocdns-bind-image-25.1.203.tar

- Run the following commands to tag and push the DNS Stub image:

docker tag ocdns-bind:25.1.203 localhost:5000/ocdns-bind:25.1.203
docker push localhost:5000/ocdns-bind:25.1.203

- Run the following command to untar the Helm chart ocdns-bind-25.1.203.tgz:

tar -zxvf ocdns-bind-25.1.203.tgz

- Update the registry name, image name, and tag (if required) in the ocdns-bind/values.yaml file. To do this, open the values.yaml file and update the image.repository and image.tag parameters.

- Run the following command to deploy the DNS Stub.

Using Helm:

helm install ocdns ocdns-bind-25.1.203.tgz --namespace ocbsf -f ocdns-bind/values.yaml

- Capture the cluster name of the deployment, the namespace where the nf stubs are deployed, and the cluster IP of the DNS Stub.

To capture the DNS Stub cluster IP:

kubectl get svc -n ocbsf | grep dns

Sample output:

[cloud-user@platform-bastion-1 ocdns-pkg-25.1.203.0.0]$ kubectl get svc -n ocbsf | grep dns
NAME    TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)           AGE
ocdns   ClusterIP   10.233.11.45   <none>        53/UDP,6236/TCP   19h

To capture the cluster name:

kubectl -n kube-system get configmap kubeadm-config -o yaml | grep clusterName

Sample output:

clusterName: platform
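The two values captured above can also be extracted from the command output programmatically. The following Python sketch assumes the default `kubectl get svc` column order (NAME, TYPE, CLUSTER-IP, and so on) and a `clusterName:` line in the kubeadm-config ConfigMap, as in the sample outputs. The service name ocdns and the function names are assumptions for illustration.

```python
import re
from typing import Optional


def dns_stub_cluster_ip(svc_output: str, service: str = "ocdns") -> Optional[str]:
    """Return the CLUSTER-IP column for `service` in `kubectl get svc` table output."""
    for line in svc_output.splitlines():
        columns = line.split()
        # Default column order: NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
        if len(columns) >= 3 and columns[0] == service:
            return columns[2]
    return None


def cluster_name(kubeadm_config_yaml: str) -> Optional[str]:
    """Return the clusterName value from the kubeadm-config ConfigMap YAML, if present."""
    match = re.search(r"^[ \t]*clusterName:[ \t]*(\S+)", kubeadm_config_yaml, re.MULTILINE)
    return match.group(1) if match else None
```

Both helpers return None when the expected line is absent. This usually means that the DNS Stub service has a different name or that the cluster was not set up with kubeadm.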

3.1.6.4 Deploying ocdiam Simulator in Kubernetes Cluster

- Go to the ocats-bsf-tools-25.1.200.0.0 folder and run the following command to extract the content of the ocdiam package tar file:

tar -zxvf ocdiam-pkg-25.1.203.0.0.tgz

Sample output:

[cloud-user@platform-bastion-1 ocdiam-pkg-25.1.203.0.0]$ ls -ltrh
total 908M
-rw-------. 1 cloud-user cloud-user 908M Mar 14 14:49 ocdiam-sim-image-25.1.203.tar
-rw-r--r--. 1 cloud-user cloud-user 3.8K Mar 14 14:49 ocdiam-sim-25.1.203.tgz

- Run the following command in your cluster to load the Diameter Simulator image:

docker load --input ocdiam-sim-image-25.1.203.tar

- Run the following commands to tag and push the Diameter Simulator image:

docker tag ocdiam-sim:25.1.203 localhost:5000/ocdiam-sim:25.1.203
docker push localhost:5000/ocdiam-sim:25.1.203

- Run the following command to untar the Helm chart ocdiam-sim-25.1.203.tgz:

tar -zxvf ocdiam-sim-25.1.203.tgz

- Update the registry name, image name, and tag (if required) in the ocdiam-sim/values.yaml file. To do this, open the values.yaml file and update the image.repository and image.tag parameters.

- Run the following command to deploy the Diameter Simulator.

Using Helm:

helm install ocdiam-sim ocdiam-sim --namespace ocbsf -f ocdiam-sim/values.yaml

Output:

ocdiam-sim-69968444b6-fg6ks 1/1 Running 0 5h47m

Sample of BSF namespace with BSF and ATS after installation:

[cloud-user@platform-bastion-1 ocstub-pkg-25.1.201.0.0]$ kubectl get po -n ocbsf

NAME READY STATUS RESTARTS AGE

ocbsf-appinfo-6fc99ffb85-f96j2 1/1 Running 1 3d23h

ocbsf-bsf-management-service-df6b68d75-m77dv 1/1 Running 0 3d23h

ocbsf-oc-config-79b5444f49-7pwzx 1/1 Running 0 3d23h

ocbsf-oc-diam-connector-77f7b855f4-z2p88 1/1 Running 0 3d23h

ocbsf-oc-diam-gateway-0 1/1 Running 0 3d23h

ocbsf-ocats-bsf-5d8689bc77-cxdvx 1/1 Running 0 3d23h

ocbsf-ocbsf-egress-gateway-644555b965-pkxsb 1/1 Running 0 3d23h

ocbsf-ocbsf-ingress-gateway-7558b7d5d4-lfs5s 1/1 Running 4 3d23h

ocbsf-ocbsf-nrf-client-nfmanagement-d6b955b48-4pptk 1/1 Running 0 3d23h

ocbsf-ocdns-ocdns-bind-75c964648-j5fsd 1/1 Running 0 3d23h

ocbsf-ocpm-cm-service-7775c76c45-xgztj 1/1 Running 0 3d23h

ocbsf-ocpm-queryservice-646cb48c8c-d72x4 1/1 Running 0 3d23h

ocbsf-performance-69fc459ff6-frrvs 1/1 Running 4 3d23h

ocbsfnf11stub-7bffd6dcd7-ftm5f 1/1 Running 0 3d23h

ocbsfnf12stub-547f7cb99f-7mpll 1/1 Running 0 3d23h

ocbsfnf1stub-bdd97cb9-xjrkx 1/1 Running 0 3d23h

ocdiam-sim-69968444b6 1/1 Running 0 3d23h

3.1.7 Post-Installation Steps

This section describes the post-installation steps to perform after deploying ATS and the stub pods.

Alternate Route Service Configurations

To edit the Alternate Route Service deployment file (ocbcf-ocbsf-alternate-route) so that it points to the DNS Stub, perform the following steps:

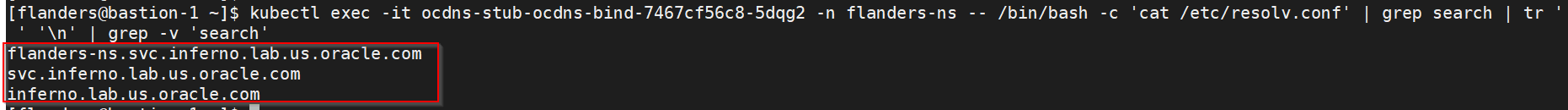

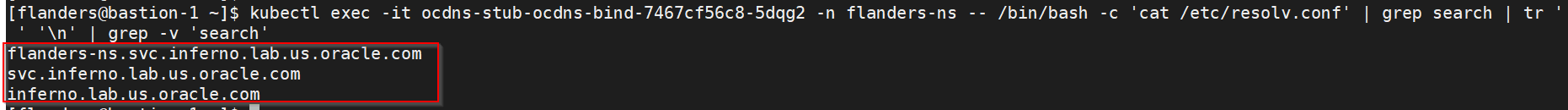

- Run the following command to get searches information from dns-bind

pod to enable communication between Alternate Route and dns-bind

service:

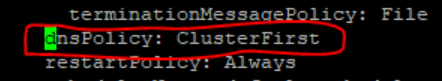

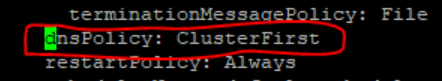

The following output is displayed after running the command:kubectl exec -it <dns-bind pod> -n <NAMESPACE> -- /bin/bash -c 'cat /etc/resolv.conf' | grep search | tr ' ' '\n' | grep -v 'search'By default alternate service will point to CoreDNS and you will see following settings in deployment file:Figure 3-2 Sample Output

Figure 3-3 Alternate Route Service Deployment File

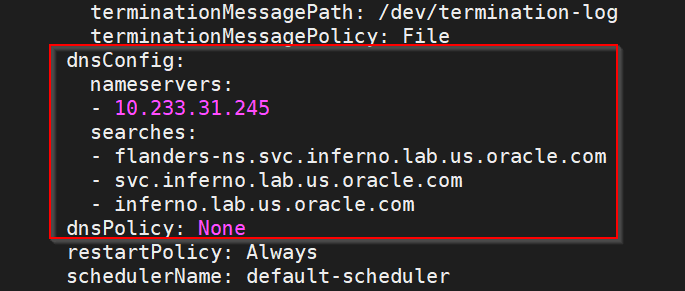

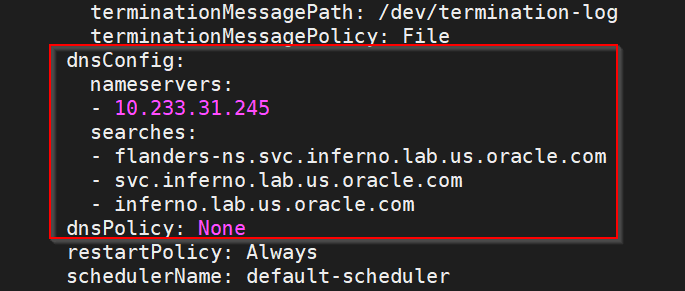

- Run the following command to edit the deployment file, and add the following content to the Alternate Route service so that it queries the DNS Stub:

kubectl edit deployment <alternate_route_deployment_name> -n <namespace>

For example:

kubectl edit deployment ocpcf-occnp-alternate-route -n ocpcf

- Add the IP address of the nameserver that you recorded after installing the DNS Stub (the cluster IP address of the DNS Stub).

- Add, one by one, the search domains that you recorded earlier.

- Set dnsPolicy to "None".

These fields belong under spec.template.spec in the deployment:

dnsConfig:
  nameservers:
    - 10.233.33.169   # cluster IP of DNS Stub
  searches:
    - ocpcf.svc.occne15-ocpcf-ats
    - svc.occne15-ocpcf-ats
    - occne15-ocpcf-ats
dnsPolicy: None

For example:

Figure 3-4 Example
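Instead of editing the deployment interactively, you can express the same dnsConfig and dnsPolicy changes as a JSON merge patch. The following Python sketch is an illustration, not part of the documented procedure. It extracts the search domains from /etc/resolv.conf text (roughly what the grep and tr pipeline above does) and builds the patch body. The function names are hypothetical.

```python
import json
from typing import List


def search_domains(resolv_conf: str) -> List[str]:
    """Collect the domains from `search` lines, roughly like the grep/tr pipeline above."""
    domains: List[str] = []
    for line in resolv_conf.splitlines():
        fields = line.split()
        if fields and fields[0] == "search":
            domains.extend(fields[1:])
    return domains


def dns_patch(nameserver_ip: str, searches: List[str]) -> str:
    """Build a JSON merge patch that sets dnsPolicy None and points pods at the DNS Stub."""
    patch = {
        "spec": {
            "template": {
                "spec": {
                    "dnsPolicy": "None",
                    "dnsConfig": {"nameservers": [nameserver_ip], "searches": searches},
                }
            }
        }
    }
    return json.dumps(patch)


if __name__ == "__main__":
    resolv = "search ocpcf.svc.occne15-ocpcf-ats svc.occne15-ocpcf-ats occne15-ocpcf-ats\n"
    print(dns_patch("10.233.33.169", search_domains(resolv)))
```

You can then apply the printed JSON with `kubectl patch deployment <alternate_route_deployment_name> -n <namespace> --type merge -p '<patch>'`. As with a manual edit, the pods are recreated with the new DNS settings.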

NRF client configmap

- In the application-config configmap, configure the following parameters with the respective values:

primaryNrfApiRoot=nf1stub.<namespace_gostubs_are_deployed_in>.svc:8080

Example: primaryNrfApiRoot=nf1stub.ocats.svc:8080

secondaryNrfApiRoot=nf11stub.<namespace_gostubs_are_deployed_in>.svc:8080

Example: secondaryNrfApiRoot=nf11stub.ocats.svc:8080

virtualNrfFqdn=nf1stub.<namespace_gostubs_are_deployed_in>.svc

Example: virtualNrfFqdn=nf1stub.ocats.svc

Note:

To get all configmaps in your namespace, run the following command:

kubectl get configmaps -n <BSF_namespace>

- (Optional) If a persistent volume is used, follow the post-installation steps provided in the Persistent Volume for 5G ATS section.
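The three NRF client values in the application-config configmap follow one pattern: the stub service name, the namespace where the stubs run, and the svc suffix, with port 8080 for the API roots. The following Python sketch renders these values for a given namespace. It is illustrative only. The helper names are hypothetical, and the port default mirrors the examples above.

```python
from typing import Dict


def nrf_client_settings(stub_namespace: str, port: int = 8080) -> Dict[str, str]:
    """Point the NRF client at nf1stub (primary) and nf11stub (secondary) in the given namespace."""
    return {
        "primaryNrfApiRoot": f"nf1stub.{stub_namespace}.svc:{port}",
        "secondaryNrfApiRoot": f"nf11stub.{stub_namespace}.svc:{port}",
        "virtualNrfFqdn": f"nf1stub.{stub_namespace}.svc",
    }


def as_properties(settings: Dict[str, str]) -> str:
    """Render the settings in the key=value form used in the configmap examples."""
    return "\n".join(f"{key}={value}" for key, value in settings.items())


if __name__ == "__main__":
    print(as_properties(nrf_client_settings("ocats")))
```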

3.2 Installing ATS for NRF

3.2.1 Resource Requirements

This section describes the ATS resource requirements for NRF.

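Overview - Total Number of Resources

The following table describes the overall resource usage in terms of CPUs, memory, and storage for: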

- NRF SUT

- cnDBTier

- ATS

Table 3-5 NRF - Total Number of Resources

| Resource Name | CPU | Memory (Gi) | Storage (Mi) |

|---|---|---|---|

| NRF SUT Totals | 61 | 69 | 0 |

| DBTier Totals | 40.5 | 50.5 | 720 |

| ATS Totals | 7 | 6 | 0 |

| Grand Total NRF ATS | 108.5 | 125.5 | 720 |

NRF Pods Resource Requirements Details

For NRF Pods resource requirements, see Oracle Communications Cloud Native Core, Network Repository Function Installation, Upgrade, and Fault Recovery Guide.

ATS Resource Requirements details for NRF

This section describes the ATS resource requirements, which are needed to deploy NRF ATS successfully.

Table 3-6 ATS Resource Requirements Details

| Microservice | CPUs Required per Pod | Memory Required per Pod (GB) | Storage PVC Required per Pod (GB) | # Replicas (regular deployment) | # Replicas (ATS deployment) | CPUs Required - Total | Memory Required - Total (GB) | Storage PVC Required - Total (GB) |

|---|---|---|---|---|---|---|---|---|

| ATS Behave | 2 | 1 | 1 | 1 | 1 | 2 | 1 | 0 |

| ATS Stub (Python) | 1 | 1 | 1 | 1 | 5 | 5 | 5 | 0 |

| ATS Totals | | | | | | 7 | 6 | 0 |
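The totals in Table 3-6 are the per-pod requirements multiplied by the ATS deployment replica count. The grand total in Table 3-5 is the sum of the component totals. The following Python sketch reproduces the CPU and memory arithmetic from these table values. The dictionaries restate the tables and are not read from any tool.

```python
from typing import Dict, Tuple

# Per-pod CPUs, per-pod memory (GB), and replicas in the ATS deployment, from Table 3-6.
ATS_PODS: Dict[str, Tuple[float, float, int]] = {
    "ATS Behave": (2, 1, 1),
    "ATS Stub (Python)": (1, 1, 5),
}

# Component totals (CPU, memory in Gi), from Table 3-5.
COMPONENT_TOTALS: Dict[str, Tuple[float, float]] = {
    "NRF SUT": (61, 69),
    "DBTier": (40.5, 50.5),
    "ATS": (7, 6),
}


def ats_totals(pods: Dict[str, Tuple[float, float, int]]) -> Tuple[float, float]:
    """Total = per-pod requirement x number of replicas, summed over microservices."""
    cpu = sum(per_cpu * replicas for per_cpu, _, replicas in pods.values())
    memory = sum(per_mem * replicas for _, per_mem, replicas in pods.values())
    return cpu, memory


def grand_total(components: Dict[str, Tuple[float, float]]) -> Tuple[float, float]:
    """Sum the CPU and memory totals of all components."""
    return (sum(c for c, _ in components.values()), sum(m for _, m in components.values()))
```

For the NRF ATS values, `ats_totals` yields 7 CPUs and 6 GB, and `grand_total` yields 108.5 CPUs and 125.5 Gi, which match the tables.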

cnDBTier Resource Requirements Details for NRF

This section describes the cnDBTier resource requirements, which are needed to deploy NRF ATS successfully.

Note:

For cnDBTier pods, a minimum of 4 worker nodes is required.

Table 3-7 cnDBTier Services Resource Requirements

| Service Name | Min Pod Replica # | Min CPU/Pod | Min Memory/Pod (in Gi) | PVC Size (in Gi) | Min Ephemeral Storage (Mi) |

|---|---|---|---|---|---|

| MGMT (ndbmgmd) | 2 | 4 | 6 | 15 | 90 |

| DB (ndbmtd) | 4 | 5 | 5 | 4 | 90 |

| SQL (ndbmysqld) | 2 | 4 | 5 | 8 | 90 |

| SQL (ndbappmysqld) | 2 | 2 | 3 | 1 | 90 |

| Monitor Service (db-monitor-svc) | 1 | 0.4 | 490 (Mi) | NA | 90 |

| Backup Manager Service (db-backup-manager-svc) | 1 | 0.1 | 130 (Mi) | NA | 90 |

| Replication Service - Leader | 1 | 2 | 2 | 2 | 90 |

| Replication Service - Other | 0 | 1 | 2 | 0 | 90 |

3.2.2 Downloading the ATS Package

Locating and Downloading ATS Images

To locate and download the ATS image from MOS:

- Log in to My Oracle Support using the appropriate credentials.

- Select the Patches & Updates tab.

- In the Patch Search window, click Product or Family (Advanced).

- Enter Oracle Communications Cloud Native Core - 5G in the Product field.

- Select Oracle Communications Cloud Native Core Network Repository Function <release_number> from the Release drop-down.

- Click Search. The Patch Advanced Search Results list appears.

- Select the required ATS patch from the list. The Patch Details window appears.

- Click Download. The File Download window appears.

- Click the <p********_<release_number>_Tekelec>.zip file to download the NRF ATS package file.

- Unzip the file to access all the ATS images. The <p********_<release_number>_Tekelec>.zip file contains the following files:

ocats_ocnrf_csar_25_1_200_0_0.zip
ocats_ocnrf_csar_25_1_200_0_0.zip.sha256
ocats_ocnrf_csar_mcafee-25.1.200.0.0.log

Note:

The ocats_ocnrf_csar_25_1_200_0_0.zip file contains all the images and custom values required for the 25.1.200 release of OCATS-NRF. It has the following files and folders:

├── Definitions
│   ├── ocats_ocnrf_ats_tests.yaml
│   └── ocats_ocnrf.yaml
├── Files
│   ├── ChangeLog.txt
│   ├── Helm
│   │   └── ocats-ocnrf-25.1.200.tgz
│   ├── Licenses
│   ├── ocats-nrf-25.1.200.tar
│   ├── Oracle.cert
│   ├── ocstub-py-25.1.202.tar
│   └── Tests
├── ocats_ocnrf.mf
├── Scripts
│   ├── ocats_ocnrf_custom_serviceaccount_25.1.200.yaml
│   ├── ocats_ocnrf_custom_values_25.1.200.yaml
│   └── ocats_ocnrf_tests_jenkinsjobs_25.1.200.tgz
└── TOSCA-Metadata
    └── TOSCA.meta

- Copy the zip file to the Kubernetes cluster where you want to deploy ATS.
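Before copying the package to the cluster, you can compare it against the accompanying .sha256 file. The following Python sketch streams the zip file through SHA-256 and compares the result with the expected digest. It assumes that the .sha256 file begins with the hex digest, optionally followed by a file name. Check the actual file content, because that format is an assumption. The script and function names are hypothetical.

```python
import hashlib
import sys
from typing import Iterable, Iterator


def file_chunks(path: str, chunk_size: int = 1 << 20) -> Iterator[bytes]:
    """Yield a file in 1 MiB chunks so large archives are not read into memory at once."""
    with open(path, "rb") as handle:
        yield from iter(lambda: handle.read(chunk_size), b"")


def sha256_of_chunks(chunks: Iterable[bytes]) -> str:
    """Return the hex SHA-256 digest of the concatenated chunks."""
    digest = hashlib.sha256()
    for chunk in chunks:
        digest.update(chunk)
    return digest.hexdigest()


def expected_digest(checksum_text: str) -> str:
    """Assumes the .sha256 file starts with the hex digest, optionally followed by a file name."""
    return checksum_text.split()[0].lower()


def verify_package(zip_path: str, checksum_path: str) -> bool:
    """Compare the zip file's SHA-256 digest with the digest in the checksum file."""
    with open(checksum_path) as handle:
        expected = expected_digest(handle.read())
    return sha256_of_chunks(file_chunks(zip_path)) == expected


if __name__ == "__main__" and len(sys.argv) == 3:
    ok = verify_package(sys.argv[1], sys.argv[2])
    print("checksum OK" if ok else "checksum MISMATCH")
    sys.exit(0 if ok else 1)
```

For example, if you save the sketch as verify_sha256.py, run `python3 verify_sha256.py ocats_ocnrf_csar_25_1_200_0_0.zip ocats_ocnrf_csar_25_1_200_0_0.zip.sha256`.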

3.2.3 Pushing the Images to Customer Docker Registry

Preparing to Deploy ATS and Stub Pod in Kubernetes Cluster

To deploy ATS and Stub Pod in Kubernetes Cluster:

- Run the following command to extract the content of the zip file:

unzip ocats_ocnrf_csar_25_1_200_0_0.zip

The following Docker image tar files are located in the Files folder:

- ocats-nrf-25.1.200.tar

- ocstub-py-25.1.202.tar

- Run the following commands in your cluster to load the ATS Docker image ocats-nrf-25.1.200.tar and the stub Docker image ocstub-py-25.1.202.tar, and push them to your registry:

$ docker load -i ocats-nrf-25.1.200.tar
$ docker load -i ocstub-py-25.1.202.tar
$ docker tag ocats/ocats-nrf:25.1.200 <local_registry>/ocats/ocats-nrf:25.1.200
$ docker tag ocats/ocstub-py:25.1.202 <local_registry>/ocats/ocstub-py:25.1.202
$ docker push <local_registry>/ocats/ocats-nrf:25.1.200
$ docker push <local_registry>/ocats/ocstub-py:25.1.202

- Create a copy of the custom values file located at Scripts/ocats_ocnrf_custom_values_25.1.200.yaml, and update the image name, tag, and other parameters as required.

3.2.4 Configuring ATS

3.2.4.1 Enabling Static Port

- To enable the static port:

Note:

ATS supports static ports. By default, this feature is disabled.

- In the ocats-ocnrf-custom-values.yaml file, under the service section, set the staticNodePortEnabled parameter to 'true' and set the staticNodePort parameter to a valid nodePort value:

service:
  customExtension:
    labels: {}
    annotations: {}
  type: LoadBalancer
  port: "8080"
  staticNodePortEnabled: true
  staticNodePort: "32385"

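A static node port must fall inside the cluster's NodePort range and must not already be used by another service. The following Python sketch checks only the range. It assumes the Kubernetes default range of 30000-32767; clusters can configure a different range with the API server's --service-node-port-range flag. The helper name is hypothetical. The input is the service section of the custom values file after it has been loaded into a dict.

```python
from typing import Any, Dict, List

# Kubernetes' default --service-node-port-range is 30000-32767; clusters may configure another range.
DEFAULT_NODE_PORT_RANGE = range(30000, 32768)


def check_static_node_port(service: Dict[str, Any],
                           port_range: range = DEFAULT_NODE_PORT_RANGE) -> List[str]:
    """Return problems with the service.staticNodePort settings (empty list if none)."""
    if not service.get("staticNodePortEnabled"):
        return []  # static port disabled: nothing to check
    raw = service.get("staticNodePort")
    try:
        port = int(raw)
    except (TypeError, ValueError):
        return [f"staticNodePort {raw!r} is not a number"]
    if port not in port_range:
        return [f"staticNodePort {port} is outside {port_range.start}-{port_range.stop - 1}"]
    return []
```

With the sample values above (staticNodePort "32385"), the check returns an empty list.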

3.2.4.2 Enabling Aspen Service Mesh

To enable service mesh for ATS:

- To enable service mesh, set serviceMeshCheck to true. The following is a snippet from the YAML file:

ocats-nrf:
  serviceMeshCheck: true

- If ASM is not enabled at the global level for the namespace, run the following command to enable it before deploying ATS:

kubectl label --overwrite namespace <namespace_name> istio-injection=enabled

For example:

kubectl label --overwrite namespace ocnrf istio-injection=enabled

- Add the following annotations under the lbDeployments and nonlbDeployments sections of the global section in the ocats-nrf-custom-values.yaml file for the ATS deployment:

traffic.sidecar.istio.io/excludeInboundPorts: "8080"
traffic.sidecar.istio.io/excludeOutboundPorts: "9090"

For example:

lbDeployments:
  labels: {}
  annotations:
    traffic.sidecar.istio.io/excludeInboundPorts: "8080"
    traffic.sidecar.istio.io/excludeOutboundPorts: "9090"

lbDeployments:
  labels: {}
  annotations:
    traffic.sidecar.istio.io/excludeInboundPorts: "8090"
    traffic.sidecar.istio.io/excludeOutboundPorts: "9090"
nonlbServices:
  labels: {}
  annotations: {}
nonlbDeployments:
  labels: {}
  annotations:
    traffic.sidecar.istio.io/excludeInboundPorts: "8090"
    traffic.sidecar.istio.io/excludeOutboundPorts: "9090"

- Add the following annotations in the NRF deployment to work with ATS in a service mesh environment:

oracle.com/cnc: "true"
traffic.sidecar.istio.io/excludeInboundPorts: "9090,8095,8096,7,53"
traffic.sidecar.istio.io/excludeOutboundPorts: "9090,8095,8096,7,53"

For example:

lbDeployments:
  labels: {}
  annotations:
    oracle.com/cnc: "true"
    traffic.sidecar.istio.io/excludeInboundPorts: "9090,8095,8096,7,53"
    traffic.sidecar.istio.io/excludeOutboundPorts: "9090,8095,8096,7,53"
nonlbServices:
  labels: {}
  annotations: {}
nonlbDeployments:
  labels: {}
  annotations:
    oracle.com/cnc: "true"
    traffic.sidecar.istio.io/excludeInboundPorts: "9090,8095,8096,7,53"
    traffic.sidecar.istio.io/excludeOutboundPorts: "9090,8095,8096,7,53"

Note:

If these annotations are not provided in the NRF deployment under lbDeployments and nonlbDeployments, all test cases related to metrics and alerts fail.
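If you maintain the NRF custom values file programmatically, you can merge the annotations instead of typing them by hand. The following Python sketch assumes that lbDeployments and nonlbDeployments sit under the global section of the values, as described for the ATS custom values file. It also assumes that the YAML has already been loaded into a dict, for example with PyYAML. The function name is hypothetical.

```python
import copy
from typing import Any, Dict, Iterable

# Annotations listed above for the NRF deployment in a service mesh environment.
NRF_SIDECAR_ANNOTATIONS = {
    "oracle.com/cnc": "true",
    "traffic.sidecar.istio.io/excludeInboundPorts": "9090,8095,8096,7,53",
    "traffic.sidecar.istio.io/excludeOutboundPorts": "9090,8095,8096,7,53",
}


def add_annotations(values: Dict[str, Any], annotations: Dict[str, str],
                    sections: Iterable[str] = ("lbDeployments", "nonlbDeployments")) -> Dict[str, Any]:
    """Return a copy of a values dict with `annotations` merged under global.<section>.annotations."""
    result = copy.deepcopy(values)  # leave the caller's dict untouched
    global_section = result.get("global") or {}
    result["global"] = global_section
    for name in sections:
        section = global_section.get(name) or {}
        global_section[name] = section
        # `annotations: {}` loads as {}, while a bare `annotations:` loads as None.
        merged = dict(section.get("annotations") or {})
        merged.update(annotations)
        section["annotations"] = merged
    return result
```

Existing annotations are kept. Keys that appear in both the values file and the list above take the values from the list.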

3.2.4.3 Enabling Persistent Volume

ATS supports Persistent storage to retain ATS historical build execution data, test cases, and one-time environment variable configurations.

To enable persistent storage:

- Create a PVC and associate it with the ATS pod.

- Set the PVEnabled flag to true.

- Set PVClaimName to the PVC that is created for ATS.

deployment:
  customExtension:
    labels: {}
    annotations: {}
  PVEnabled: true
  PVClaimName: "ocats-nrf-25.1.200-pvc"

For more details on Persistent Volume Storage, see Persistent Volume for 5G ATS.

3.2.4.4 Enabling NF FQDN Authentication

Note:

This procedure is applicable only if the NF FQDN Authentication feature is being tested. Otherwise, proceed to the "Deploying ATS and Stub in Kubernetes Cluster" section.

You must enable this feature while deploying Service Mesh. For more information on how to enable the NF FQDN Authentication feature, see Oracle Communications Cloud Native Core, Network Repository Function User Guide.

- Use the previously unzipped file ocats_ocnrf_custom_serviceaccount_25.1.200.yaml (in the Scripts folder) to create a service account. Add the following annotation to this file where the kind is ServiceAccount:

"certificate.aspenmesh.io/customFields": '{ "SAN": { "DNS": [ "<NF-FQDN>" ] } }'

Sample format:

apiVersion: v1
kind: ServiceAccount
metadata:
  name: ocats-custom-serviceaccount
  namespace: ocnrf
  annotations:
    "certificate.aspenmesh.io/customFields": '{ "SAN": { "DNS": [ "AMF.d5g.oracle.com" ] } }'

Note:

"AMF.d5g.oracle.com" is the NF FQDN that you must provide in the service account DNS field.

- Run the following command to create the service account:

kubectl apply -f ocats_ocnrf_custom_serviceaccount_25.1.200.yaml

- Update the service account name in the ocats-ocnrf-custom-values-25.1.200.yaml file as follows:

ocats-nrf:
  serviceAccountName: "ocats-custom-serviceaccount"
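The customFields annotation value is a JSON document stored as a string, so quoting mistakes are common when it is typed by hand. The following Python sketch builds the ServiceAccount manifest with the annotation encoded by `json.dumps`. The resource names mirror the sample format above. The function names are hypothetical, and the FQDN list contains whichever NF FQDN you are testing.

```python
import json
from typing import Any, Dict, Iterable

ANNOTATION_KEY = "certificate.aspenmesh.io/customFields"


def custom_fields_annotation(fqdns: Iterable[str]) -> Dict[str, str]:
    """Encode the SAN DNS list as the JSON string that the annotation expects."""
    return {ANNOTATION_KEY: json.dumps({"SAN": {"DNS": list(fqdns)}})}


def service_account_manifest(name: str, namespace: str, fqdns: Iterable[str]) -> Dict[str, Any]:
    """Return a ServiceAccount manifest carrying the customFields annotation."""
    return {
        "apiVersion": "v1",
        "kind": "ServiceAccount",
        "metadata": {
            "name": name,
            "namespace": namespace,
            "annotations": custom_fields_annotation(fqdns),
        },
    }


if __name__ == "__main__":
    manifest = service_account_manifest("ocats-custom-serviceaccount", "ocnrf", ["AMF.d5g.oracle.com"])
    print(json.dumps(manifest, indent=2))
```

Because kubectl accepts JSON manifests, you can save the printed output to a file and apply it with `kubectl apply -f`.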

3.2.5 Deploying ATS and Stub in Kubernetes Cluster

Note:

Ensure that all three components (ATS, stub, and NRF) are in the same namespace.

ATS and the stub support Helm 3 for deployment.

Update the NRF release name in the ocats-ocnrf-custom-values.yaml file under ocats-nrf.nrfReleaseName:

ocats-nrf:
  nrfReleaseName: "ocnrf"

If the namespace does not exist, run the following command to create it:

kubectl create namespace ocnrf

Using Helm for Deploying ATS:

helm install <release_name> ocats-ocnrf-25.1.200.tgz --namespace <namespace_name> -f <values-yaml-file>

For example:

helm install ocats ocats-ocnrf-25.1.200.tgz --namespace ocnrf -f ocats-ocnrf-custom-values.yaml

Note:

The preceding helm install command deploys ATS along with the stub servers required for ATS executions: 1 ATS pod and 5 stub server pods.

3.2.6 Verifying ATS Deployment

Checking Helm Release Status:

helm status <release_name> -n ocnrf

Checking Pod Deployment:

kubectl get pod -n ocnrf

Checking Service Deployment:

kubectl get service -n ocnrf

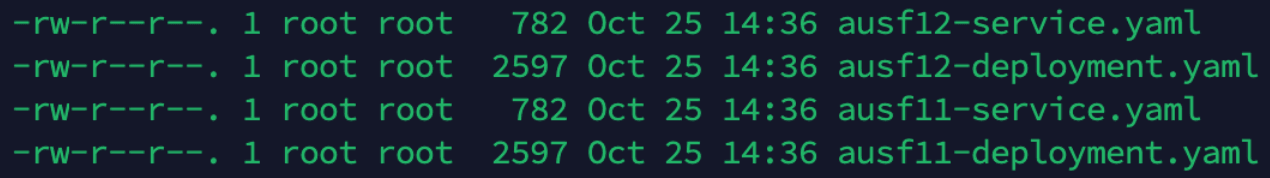

Figure 3-5 Checking Pod Deployment without Service Mesh

Figure 3-6 Checking Service Deployment without Service Mesh

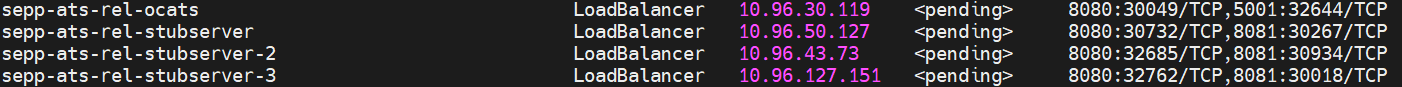

If ATS is deployed with a service mesh sidecar, ensure that both the ATS and stub pods have two containers in the ready state, shown as "2/2":

Figure 3-7 ATS and Stub Deployed with Service Mesh

Figure 3-8 ATS and Stub Deployed with Service Mesh

3.2.7 Post-Installation Steps (if Persistent Volume is Used)

If persistent volume is used, follow the post-installation steps mentioned in the Persistent Volume for 5G ATS section.

3.3 Installing ATS for NSSF

This section describes Automated Testing Suite (ATS) installation procedures for Network Slice Selection Function (NSSF) in a cloud native environment. You must perform ATS installation procedures for NSSF in the same sequence as outlined in the following sections.

3.3.1 Resource Requirements

Total Number of Resources

The total resource requirements are as follows:

Table 3-8 Total Number of Resources

| Resource | CPUs | Memory (GB) | Storage (GB) |

|---|---|---|---|

| NSSF SUT Total | 30.2 | 22 | 4 |

| cnDBTier Total | 40 | 40 | 20 |

| ATS Total | 14 | 14 | 0 |

| Grand Total NSSF ATS | 79.2 | 72 | 24 |

Resource Details

The details of resources required to install NSSF-ATS are as follows:

Table 3-9 Resource Details

| Microservice | CPUs Required per Pod | Memory Required per Pod (GB) | Storage PVC Required per Pod (GB) | Replicas (regular deployment) | Replicas (ATS deployment) | CPUs Required - Total | Memory Required - Total (GB) | Storage PVC Required - Total (GB) |
|---|---|---|---|---|---|---|---|---|
| NSSF Pods | | | | | | | | |
| ingressgateway | 4 | 4 | 0 | 2 | 1 | 4 | 4 | 0 |
| egressgateway | 4 | 4 | 0 | 2 | 1 | 4 | 4 | 0 |
| nsselection | 4 | 2 | 0 | 2 | 1 | 4 | 2 | 0 |
| nsavailability | 4 | 2 | 0 | 2 | 1 | 4 | 2 | 0 |
| nsconfig | 2 | 2 | 0 | 1 | 1 | 2 | 2 | 0 |
| nssubscription | 2 | 2 | 0 | 1 | 1 | 2 | 2 | 0 |
| nrf-client-discovery | 1 | 1 | 0 | 2 | 1 | 1 | 1 | 0 |
| nrf-client-management | 1 | 1 | 0 | 1 | 1 | 1 | 1 | 0 |
| appinfo | 0.2 | 1 | 0 | 2 | 1 | 0.2 | 1 | 0 |
| perfinfo | 0.2 | 0.5 | 0 | 1 | 1 | 0.2 | 0.5 | 0 |
| config-server | 0.2 | 0.5 | 0 | 1 | 1 | 0.2 | 0.5 | 0 |
| NSSF SUT Totals | | | | | | 22.6 | 20 | 0 |
| ATS | | | | | | | | |
| ATS Behave | 4 | 4 | 0 | 0 | 1 | 4 | 4 | 0 |
| ATS AMF Stub (Python) | 3 | 3 | 0 | 0 | 1 | 3 | 3 | 0 |
| ATS NRF Stub (Python) | 2 | 2 | 0 | 0 | 1 | 2 | 2 | 0 |
| ATS NRF Stub1 (Python) | 2 | 2 | 0 | 0 | 1 | 2 | 2 | 0 |
| ATS NRF Stub2 (Python) | 2 | 2 | 0 | 0 | 1 | 2 | 2 | 0 |
| OCDNS-BIND | 1 | 1 | 0 | 0 | 1 | 1 | 1 | 0 |
| ATS Totals | | | | | | 14 | 14 | 0 |
| cnDBTier Pods (minimum of 4 worker nodes required) | | | | | | | | |
| vrt-launcher-dt-1.cluster.local | 4 | 4 | 2 | 2 | 1 | 4 | 4 | 2 |
| vrt-launcher-dt-2.cluster.local | 4 | 4 | 2 | 2 | 1 | 4 | 4 | 2 |
| vrt-launcher-dt-3.cluster.local | 4 | 4 | 2 | 2 | 1 | 4 | 4 | 2 |
| vrt-launcher-dt-4.cluster.local | 4 | 4 | 2 | 2 | 1 | 4 | 4 | 2 |
| vrt-launcher-mt-1.cluster.local | 4 | 4 | 2 | 2 | 1 | 4 | 4 | 2 |
| vrt-launcher-mt-2.cluster.local | 4 | 4 | 2 | 2 | 1 | 4 | 4 | 2 |
| vrt-launcher-mt-3.cluster.local | 4 | 4 | 2 | 2 | 1 | 4 | 4 | 2 |
| vrt-launcher-sq-1.cluster.local | 4 | 4 | 2 | 2 | 1 | 4 | 4 | 2 |
| vrt-launcher-sq-2.cluster.local | 4 | 4 | 2 | 2 | 1 | 4 | 4 | 2 |
| vrt-launcher-db-installer.cluster.local | 4 | 4 | 2 | 2 | 1 | 4 | 4 | 2 |
| cnDBTier Totals | | | | | | 40 | 40 | 20 |

3.3.2 Locating and Downloading ATS and Simulator Images

To locate and download the ATS Image from MOS:

- Log in to My Oracle Support using the appropriate credentials.

- Select the Patches and Updates tab.

- In the Patch Search window, click Product or Family (Advanced).

- Enter Oracle Communications Cloud Native Core - 5G in the Product field.

- Select Oracle Communications Cloud Native Core Network Slice Selection Function <release_number> from the Release drop-down list.

- Click Search. The Patch Advanced Search Results list appears.

- Select the required ATS patch from the search results. The Patch Details window appears.

- Click Download. The File Download window appears.

- Click the <p********_<release_number>_Tekelec>.zip file to download the NSSF ATS package file.

- Unzip the downloaded file to get the ocats-nssf directory, which consists of all the ATS images. The ocats-nssf directory has the following files:

ocats-nssf
├── ocats-nssf-custom-configtemplates-25.1.200-README.txt - contains all the information required for the package.
├── ocats-nssf-custom-configtemplates-25.1.200.zip - contains the service account, PVC, and custom values files.
├── ocats-nssf-tools-pkg-25.1.200-README.txt - contains all the information required for the package.
└── ocats-nssf-tools-pkg-25.1.200.tgz - contains the ATS images and Helm charts packaged as tar files.

Note:

Prerequisites:

- To run OAuth test cases for NSSF, OAuth secrets must be generated. For more information, see the "Configuring Secrets to Enable Access Token" section in Oracle Communications Cloud Native Core, Network Slice Selection Function Installation, Upgrade, and Fault Recovery Guide.

- To ensure the functionality of the Virtual_Host_NRF_Resolution_By_NSSF_Using_DNSSRV ATS feature, the following configuration must be enabled as per the engineering team's guidance:

nrf-client:
  # This config map is for providing inputs to NRF-Client
  configmapApplicationConfig:
    enableVirtualNrfResolution=true
    virtualNrfFqdn=nrfstub.changeme-ocats.svc
    virtualNrfScheme=http

- The necessary changes to the NSSF custom-values.yaml file are outlined below. For instance, if NSSF ATS is deployed in the "ocnssfats" namespace, the virtualNrfFqdn configuration must be updated as follows:

nrf-client:
  # This config map is for providing inputs to NRF-Client
  configmapApplicationConfig:
    enableVirtualNrfResolution=true
    virtualNrfFqdn=nrfstub.ocnssfats.svc
    virtualNrfScheme=http

- If NSSF is installed with a Helm release name other than "ocnssf" (for example, "ocnssfats"), the following parameter must be updated accordingly. If the Helm release name is "ocnssf", no changes are required.

# Alternate Route Service Host Value
# Replace ocnssf with Release Name
alternateRouteServiceHost: ocnssf-alternate-route

- For example, if the Helm release name for NSSF is "ocnssfats", update the parameter as follows:

# Alternate Route Service Host Value
# Replace ocnssf with Release Name
alternateRouteServiceHost: ocnssfats-alternate-route

- Untar the ocats-nssf-tools-pkg-25.1.200.tgz file. The structure of the extracted directory is as follows:

ocats-nssf-tools-pkg-25.1.200
├── amfstub-25.1.200.tar - AMF stub server Docker image
├── amfstub-25.1.200.tar.sha256
├── ats_data-25.1.200.tar - ATS data; after untar, the "ocnssf_tests" folder containing the ATS feature files is created
├── ats_data-25.1.200.tar.sha256
├── ocats-nssf-25.1.200.tar - NSSF ATS Docker image
├── ocats-nssf-25.1.200.tar.sha256
├── ocats-nssf-25.1.200.tgz - ATS Helm charts; after untar, the "ocats-nssf" ATS charts folder is created
├── ocats-nssf-25.1.200.tgz.sha256
├── ocdns-bind-25.1.200.tar - NSSF DNS stub server Docker image
├── ocdns-bind-25.1.200.tar.sha256
└── README.md

- Copy the ocats-nssf-tools-pkg-25.1.200.tgz file to the CNE or Kubernetes cluster where you want to deploy ATS.

- Along with the above packages, ocats-nssf-custom-configtemplates-25.1.200.zip is available at the same location. The readme file ocats-nssf-custom-configtemplates-25.1.200-README.txt contains information about the content of this zip file. The content of ocats-nssf-custom-configtemplates-25.1.200.zip is as follows:

Archive: ocats-nssf-custom-configtemplates-25.1.200.zip
  inflating: nssf_ats_pvc_25.1.200.yaml
  inflating: ocats_nssf_custom_values_25.1.200.yaml
  inflating: ocats_ocnssf_custom_serviceaccount_25.1.200.yaml

Copy these files to the CNE or Kubernetes cluster where you want to deploy ATS.
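The two NSSF custom-values edits described in the prerequisites note (virtualNrfFqdn and alternateRouteServiceHost) can be applied with `sed`. This is a minimal sketch, assuming GNU sed (for in-place `-i` editing) and that the lines appear in the file exactly as shown in the note; the helper names are hypothetical and the file name you pass is your own NSSF custom values file.

```shell
#!/usr/bin/env bash
# Hypothetical helpers; assume GNU sed for in-place editing (-i).

# Point virtualNrfFqdn at the NRF stub in the ATS namespace,
# e.g. nrfstub.changeme-ocats.svc -> nrfstub.ocnssfats.svc
set_virtual_nrf_fqdn() {
  local file="$1" ats_ns="$2"
  sed -i "s/virtualNrfFqdn=nrfstub\.changeme-ocats\.svc/virtualNrfFqdn=nrfstub.${ats_ns}.svc/" "$file"
}

# Replace the default "ocnssf" release prefix in alternateRouteServiceHost,
# e.g. ocnssf-alternate-route -> ocnssfats-alternate-route.
# Safe to re-run: an already-updated value no longer matches the pattern.
set_alternate_route_host() {
  local file="$1" release="$2"
  sed -i "s/\(alternateRouteServiceHost:[[:space:]]*\)ocnssf-alternate-route/\1${release}-alternate-route/" "$file"
}

# Usage:
#   set_virtual_nrf_fqdn <nssf-custom-values.yaml> ocnssfats
#   set_alternate_route_host <nssf-custom-values.yaml> ocnssfats
```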

3.3.3 Deploying ATS in Kubernetes Cluster

To deploy ATS in Kubernetes Cluster:

- Verify the checksums of the tarballs listed in the Readme.txt file.

- Run the following command to extract the tar file content, Helm charts, and Docker images of ATS:

tar -xvzf ocats-nssf-tools-pkg-25.1.200.tgz

The output of this command returns the following files:

ocats-nssf-tools-pkg-25.1.200
├── amfstub-25.1.200.tar - AMF stub server Docker image
├── amfstub-25.1.200.tar.sha256
├── ats_data-25.1.200.tar - ATS data; after untar, the "ocnssf_tests" folder containing the ATS feature files is created
├── ats_data-25.1.200.tar.sha256
├── ocats-nssf-25.1.200.tar - NSSF ATS Docker image
├── ocats-nssf-25.1.200.tar.sha256
├── ocats-nssf-25.1.200.tgz - ATS Helm charts; after untar, the "ocats-nssf" ATS charts folder is created
├── ocats-nssf-25.1.200.tgz.sha256
├── ocdns-bind-25.1.200.tar - NSSF DNS stub server Docker image
├── ocdns-bind-25.1.200.tar.sha256
└── README.md

- NSSF-ATS and Stub Images Load and Push: Run the following commands in your cluster to load the ocats, amfstub, and ocdns-bind images:

Docker Commands:

docker load -i ocats-nssf-<version>.tar
docker load -i amfstub-<version>.tar
docker load -i ocdns-bind-<version>.tar

Examples:

docker load -i ocats-nssf-25.1.200.tar
docker load -i amfstub-25.1.200.tar
docker load -i ocdns-bind-25.1.200.tar

Podman Commands:

podman load -i ocats-nssf-<version>.tar
podman load -i amfstub-<version>.tar
podman load -i ocdns-bind-<version>.tar

Examples:

podman load -i ocats-nssf-25.1.200.tar
podman load -i amfstub-25.1.200.tar
podman load -i ocdns-bind-25.1.200.tar

- Run the following commands to tag and push the ATS images to your registry:

- Run the following commands to find the images:

docker images | grep ocats-nssf
docker images | grep amfstub
docker images | grep ocdns

- Copy the Image ID from the output of the grep command and retag the image (with the version number) for your registry.

Docker Commands:

docker tag <Image_ID> <your-registry-name>/ocats-nssf:<tag>
docker push <your-registry-name>/ocats-nssf:<tag>
docker tag <Image_ID> <your-registry-name>/amfstub:<tag>
docker push <your-registry-name>/amfstub:<tag>
docker tag <Image_ID> <your-registry-name>/ocdns-bind:<tag>
docker push <your-registry-name>/ocdns-bind:<tag>

Podman Commands:

podman tag <Image_ID> <your-registry-name>/ocats-nssf:<tag>
podman push <your-registry-name>/ocats-nssf:<tag>
podman tag <Image_ID> <your-registry-name>/amfstub:<tag>
podman push <your-registry-name>/amfstub:<tag>
podman tag <Image_ID> <your-registry-name>/ocdns-bind:<tag>
podman push <your-registry-name>/ocdns-bind:<tag>
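The checksum, load, tag, and push steps above can be combined into a small script. This is a minimal sketch under stated assumptions: each `<file>.sha256` file is assumed to hold the expected hex digest as its first field, `CTR` is assumed to be set to `docker` or `podman` (defaulting to `docker`), and `verify_sha256` and `push_image` are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Hypothetical helpers. Assumptions: <file>.sha256 holds the expected digest
# as its first field; CTR is "docker" or "podman" (defaults to docker).

# Succeed only if the file's SHA-256 digest matches <file>.sha256.
verify_sha256() {
  local file="$1" expected actual
  expected=$(awk '{print $1; exit}' "${file}.sha256")
  actual=$(sha256sum "$file" | awk '{print $1}')
  [ "$expected" = "$actual" ]
}

# Tag a loaded image by ID and push it to <registry>/<name>:<tag>.
push_image() {
  local image_id="$1" registry="$2" name="$3" tag="$4"
  local ref="${registry}/${name}:${tag}"
  "${CTR:-docker}" tag "$image_id" "$ref" && "${CTR:-docker}" push "$ref"
}

# Usage:
#   for f in ocats-nssf-25.1.200.tar amfstub-25.1.200.tar ocdns-bind-25.1.200.tar; do
#     verify_sha256 "$f" || { echo "checksum mismatch: $f"; exit 1; }
#     "${CTR:-docker}" load -i "$f"
#   done
#   push_image <Image_ID> <your-registry-name> ocats-nssf 25.1.200
```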


- ATS Helm Charts: Run the following command to get the ATS Helm charts:

tar -xvzf ocats-nssf-25.1.200.tgz

The above command creates the "ocats-nssf" Helm charts of ATS.

- ATS Data: Run the following command to get the ATS data, which contains the feature files and data:

tar -xvf ats_data-25.1.200.tar

The above command creates the "ocnssf_tests" folder, which contains the ATS feature files and data, and the "jobs" folder. These folders are copied into the ATS pod after the ATS installation is complete.
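Both extraction steps can be run together and checked for the folders they are expected to create. A minimal sketch, assuming it is run from the directory holding the extracted tools package; the folder names come from the steps above, while the `extract_ats_content` helper name and its version argument are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: extract the ATS Helm chart and ATS data for a release
# version, then confirm that the expected folders were created.
extract_ats_content() {
  local version="${1:-25.1.200}" d
  tar -xzf "ocats-nssf-${version}.tgz" || return 1
  tar -xf "ats_data-${version}.tar" || return 1
  for d in ocats-nssf ocnssf_tests jobs; do
    [ -d "$d" ] || { echo "expected folder not found: ${d}" >&2; return 1; }
  done
}

# Usage:
#   extract_ats_content 25.1.200
```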

- Copy the ocnssf_tests folder to the NSSF ATS pod as follows:

kubectl cp ocnssf_tests <namespace>/<nssf ats podname>:/var/lib/jenkins/

Example:

kubectl cp ocnssf_tests cicdnssf-241204053638/ocats-nssf-8566d64cfb-cgmvc:/var/lib/jenkins/

- Copy the jobs folder to the NSSF ATS pod as follows:

kubectl cp jobs <namespace>/<nssf ats podname>:/var/lib/jenkins/.jenkins/

Example:

kubectl cp jobs cicdnssf-241204053638/ocats-nssf-8566d64cfb-cgmvc:/var/lib/jenkins/.jenkins/
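The pod name lookup and both copy commands can be scripted. This is a minimal sketch, assuming the ATS pod name follows the pattern of the example pod (ocats-nssf-<hash>-<suffix>); if your release produces a different pod name, adjust the pattern. `find_ats_pod` and `copy_ats_content` are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Hypothetical helpers. Assumption: the ATS pod is named like the example
# ocats-nssf-8566d64cfb-cgmvc (prefix, then two dash-separated parts).
find_ats_pod() {
  local ns="$1"
  kubectl get pods -n "$ns" --no-headers -o custom-columns=NAME:.metadata.name \
    | grep '^ocats-nssf-[^-]*-[^-]*$' | head -n 1
}

# Copy the ocnssf_tests and jobs folders into the ATS pod.
copy_ats_content() {
  local ns="$1" pod
  pod=$(find_ats_pod "$ns")
  [ -n "$pod" ] || { echo "no ATS pod found in ${ns}" >&2; return 1; }
  kubectl cp ocnssf_tests "${ns}/${pod}:/var/lib/jenkins/" || return 1
  kubectl cp jobs "${ns}/${pod}:/var/lib/jenkins/.jenkins/"
}

# Usage:
#   copy_ats_content cicdnssf-241204053638
```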


- <Optional> Go to the ocats-nssf folder, which contains the certificates folder, and run the following command:

kubectl create secret generic ocnssf-secret --from-file=certificates/rsa_private_key_pkcs1.pem --from-file=certificates/trust.txt --from-file=certificates/key.txt --from-file=certificates/ocnssf.cer --from-file=certificates/caroot.cer -n ocnssf

- ATS Custom Values File Changes: Update the image name and tag in the ocats_nssf_custom_values_25.1.200.yaml file as required:

  - Open the ocats_nssf_custom_values_25.1.200.yaml file.

  - Update the image.repository and image.tag parameters for ocats-nssf, ocats-amf-stubserver, ocats-nrf-stubserver, ocats-nrf-stubserver1, ocats-nrf-stubserver2, and ocdns-bind.

  - Save and close the file after making the updates.
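For the optional secret step, it can help to confirm that every certificate file exists before calling `kubectl`, so a missing file is reported clearly. A minimal sketch, assuming it is run from the folder that contains the certificates directory; the file names and the secret name come from the command above, and `create_nssf_secret` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: verify the certificate files referenced by the
# optional secret command, then create the ocnssf-secret secret.
# Run from the folder that contains the certificates/ directory.
create_nssf_secret() {
  local ns="${1:-ocnssf}" f
  local -a args=()
  for f in rsa_private_key_pkcs1.pem trust.txt key.txt ocnssf.cer caroot.cer; do
    if [ ! -f "certificates/${f}" ]; then
      echo "missing certificates/${f}" >&2
      return 1
    fi
    args+=("--from-file=certificates/${f}")
  done
  kubectl create secret generic ocnssf-secret "${args[@]}" -n "$ns"
}

# Usage:
#   create_nssf_secret ocnssf
```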


- <Optional> To enable static port: ATS supports static port. By default, this feature is not available. In the ocats-nssf/values.yaml file, under the service section, set the staticNodePortEnabled parameter to 'true' and provide a valid nodePort value for staticNodePort.

- ATS Service Account Creation: In ocats-nssf-custom-serviceaccount_.yaml, change the namespace as follows, where ${namespace} is a shell variable set to your ATS namespace:

sed -i "s/changeme-ocats/${namespace}/g" ocats_ocnssf_custom_serviceaccount_.yaml

- Run the following command to apply the ocats-nssf-custom-serviceaccount_.yaml file:

kubectl apply -f <serviceaccount.yaml file> -n <namespace_name>

For example:

kubectl apply -f ocats-nssf-custom-serviceaccount_.yaml -n ocnssf

- Pointing NSSF to Stub Servers: Follow this step to point NSSF to the NRF stub server and AMF stub server in the NSSF custom values file: