4 Running NF Test Cases Using ATS

4.1 Running BSF Test Cases using ATS

This section describes how to run BSF test cases using ATS.

Note:

Restart the NRF-client pod of BSF for UDR and CHF discovery as part of each test case.

4.1.1 Prerequisites

- Deploy BSF 25.1.200 with default helm configurations using helm charts to run all test cases. The ATS version must be compatible with BSF 25.1.200.

For more information on how to install BSF, see Oracle Communications Cloud Native Core, Binding Support Function Installation, Upgrade, and Fault Recovery Guide.

- Go-STUB must be installed in the same namespace where ocbsf is installed.

- Add the following to the Kubernetes namespace to grant role access:

PolicyRule:

| Resources | Non-Resource URLs | Resource Names | Verbs |
|---|---|---|---|
| pods/log | [] | [] | [get list] |
| configmaps | [] | [] | [watch get list delete update create] |
| pods | [] | [] | [watch get list delete update create] |
| secrets | [] | [] | [watch get list delete update create] |
| services | [] | [] | [watch get list delete update create] |
| deployments.apps | [] | [] | [watch get list update] |
| replicasets.apps | [] | [] | [watch get list update] |
| deployments.extensions | [] | [] | [watch get list update] |
| replicasets.extensions | [] | [] | [watch get list update] |

- ATS Prometheus metrics validation works only when:

- the metrics suffixes are not configured

- installation has a single pod for each microservice in the BSF deployment

- You can customize test cases in the custom test case folders (cust_newfeatures, cust_regression and cust_performance). You can add new test cases, remove unwanted test cases and modify existing test cases. It does not impact the original product packaged test cases available in the newfeatures, regression and performance folders respectively. For more details about custom test case folders, see Custom Folder Implementation.

- Install Prometheus server in the cluster.

- The database cluster must be in the running state with all required tables. Verify that the database has no entries from previous runs before running test cases.

- Do not initiate a job in two different pipelines at the same time.

- To run ATS features, update the following mandatory parameters in the ocbsf_custom_values_25.1.200.yaml file only when you are not using the minimal custom values.yaml file:

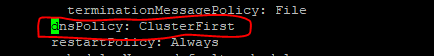

```yaml
logging:
  burst:
    rate: 500
    max: 3000
    onMismatch: DENY
  logLevel: DEBUG
```

Note:

Use the latest version of the custom values file when installing BSF for the first time.

- To use the Controlled Shutdown feature, ensure that the enableControlledShutdown parameter is enabled on BSF during installation. If this parameter is not enabled, the test case for this feature fails.

- In the application-config configmap, configure the following parameters with the respective values:
  - primaryNrfApiRoot=nf1stub.<namespace_gostubs_are_deployed_in>.svc:8080

    For example: primaryNrfApiRoot=nf1stub.ocats.svc:8080
  - secondaryNrfApiRoot=nf11stub.<namespace_gostubs_are_deployed_in>.svc:8080

    For example: secondaryNrfApiRoot=nf11stub.ocats.svc:8080
  - virtualNrfFqdn=nf1stub.<namespace_gostubs_are_deployed_in>.svc

    For example: virtualNrfFqdn=nf1stub.ocats.svc
  - retryAfterTime=PT30S

- To enable the ATS BSF GUI with the HTTPS protocol, configure the following application-config configmap parameters with the respective values:

```yaml
# Please edit the object below. Lines beginning with '#' will be ignored,
# and an empty file will abort the edit. If an error occurs while saving this file will be
# reopened with the relevant failures.
#
apiVersion: v1
data:
  profile: |-
    [appcfg]
    primaryNrfApiRoot=nf1stub.ocats.svc:8443
    secondaryNrfApiRoot=nf11stub.ocats.svc:8443
    nrfScheme=https
    virtualNrfPort=8443
    virtualNrfScheme=https
```

- Before running the ATS test suite, restart the nrf-client-nfdiscovery and nrf-client-nfmanagement pods.

- Run the following command to get all the configmaps in your namespace:

```
kubectl get configmaps -n <BSF_namespace>
```

Edit the

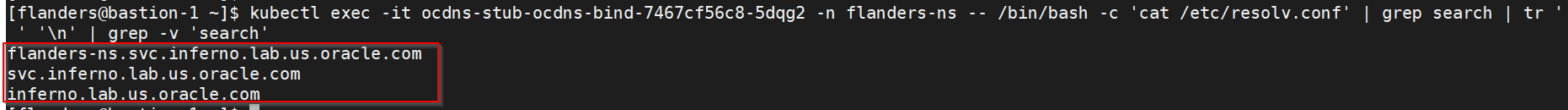

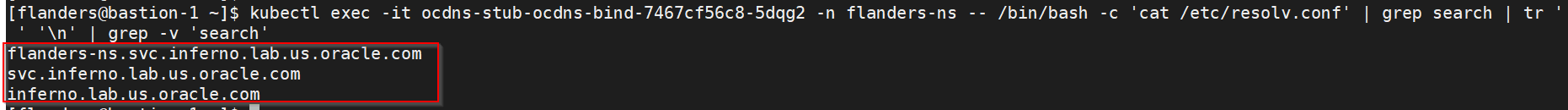

alternate route servicedeployment pointing towards DNS Stub.Run the following command to get searches information from

dns-bindpod to enable communication between Alternate Route anddns-bindservice.kubectl exec -it <dns-bind pod> -n <NAMESPACE> -- /bin/bash -c 'cat /etc/resolv.conf' | grep search | tr ' ' '\n' | grep -v 'search'Example:

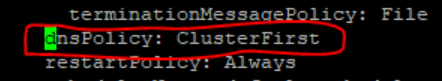

Figure 4-1 Editing Alternate Route Service deployment pointing towards DNS Stub

By default, Alternate Route Service points to CoreDNS.

Figure 4-2 Alternate Route Service settings in deployment file

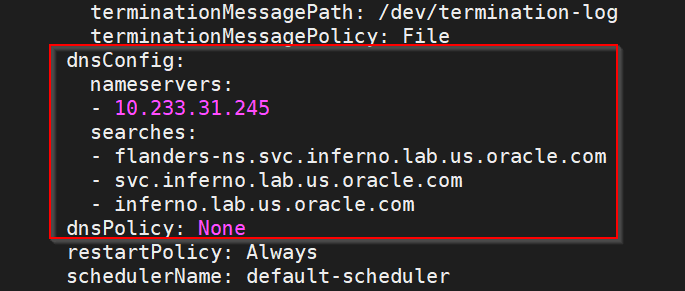

Change the deployment file to add the following content to the alternate route service so that it queries the DNS stub:

```
kubectl edit deployment ocbsf-occnp-alternate-route -n ocbsf
```

- Add the nameserver IP address.
- Add all the search information.
- Set dnsPolicy to None.

```yaml
dnsConfig:
  nameservers:
  - <dns_stub_cluster_ip_address>
  searches:
  - dns-bind search
  - dns-bind search
  - dns-bind search
dnsPolicy: None
```

Figure 4-3 dnsConfig

- If the Service Mesh check is enabled, create a destination rule to fetch the metrics from Prometheus. Prometheus is kept outside the service mesh, so the destination rule allows communication between a TLS-enabled entity (ATS) and a non-TLS entity (Prometheus). Create the rule as follows:

```
kubectl apply -f - <<EOF
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: prometheus-dr
  namespace: ocats
spec:
  host: oso-prometheus-server.ocbsf.svc.cluster.local
  trafficPolicy:
    tls:
      mode: DISABLE
EOF
```

where:
  - name indicates the name of the destination rule.
  - namespace indicates where ATS is deployed.
  - host indicates the hostname of the Prometheus server.

- To execute the Bsf_To_Nrf_Late_Arrival feature file, configure nfInstanceId in the Bsf_To_Nrf_Late_Arrival.yaml parameterization file. This nfInstanceId must be the same as the BSF nfInstanceId configured during BSF installation. The nfInstanceId must also be configured in the ATS UI under the pipeline configuration (BSF_NFINSTANCE_ID).

-

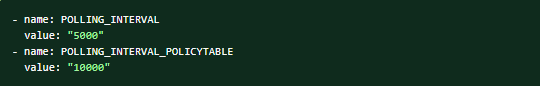

To ensure consistent functioning of ATS related to the audit service, modify the Audit deployment by reducing the value of AUDIT_NOTIFY_SCHEDULER_POLLING_INTERVAL_MILLISEC from 30000 to 1000 (changing from 30 seconds to 1 second).

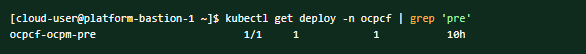

$ kubectl get deploy -n ocbsf | grep 'audit' ocbsf-ocpm-audit-service 1/1 1 1 10h$ kubectl edit deploy ocbsf-ocpm-audit-service -n ocbsfThe deployment will open. Scroll down until below fields are seen

- name: AUDIT_NOTIFY_SCHEDULER_POLLING_INTERVAL_MILLISEC value: "30000"Update the value of AUDIT_NOTIFY_SCHEDULER_POLLING_INTERVAL_MILLISEC to 1000 if it isn't already.

- For BSF registrations over TLS, make the following application-config configmap changes:
  - Change the NRF port from 8080 to 8443.
  - Set nrfScheme to https.

```yaml
apiVersion: v1
data:
  profile: |-
    [appcfg]
    primaryNrfApiRoot=nf1stub.ocats.svc:8443
    secondaryNrfApiRoot=nf11stub.ocats.svc:8443
    nrfScheme=https
    virtualNrfPort=8443
    virtualNrfScheme=https
```

Note:

In the application-config configmap, delete the lines that contain the supportedDataSetId or secondaryNrfApiRoot strings.
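The `[appcfg]` settings in the application-config prerequisites above are plain `key=value` lines, so they can be scripted. The following Python sketch uses hypothetical helper names (`appcfg_overrides`, `merge_profile`); it computes the HTTP or HTTPS values from the namespace where the stubs are deployed and applies them to existing profile text. It does not perform the deletions described in the note above.

```python
from typing import Dict


def appcfg_overrides(stub_namespace: str, tls: bool = False) -> Dict[str, str]:
    """Return the [appcfg] keys that point BSF at the ATS NRF stubs.

    Hypothetical helper: keys and values mirror the prerequisites, using
    port 8080 for plain HTTP and 8443 when TLS is used.
    """
    port = 8443 if tls else 8080
    settings = {
        "primaryNrfApiRoot": f"nf1stub.{stub_namespace}.svc:{port}",
        "secondaryNrfApiRoot": f"nf11stub.{stub_namespace}.svc:{port}",
        "virtualNrfFqdn": f"nf1stub.{stub_namespace}.svc",
        "retryAfterTime": "PT30S",
    }
    if tls:
        # HTTPS GUI / TLS registration: switch the NRF and virtual NRF schemes.
        settings.update(nrfScheme="https", virtualNrfPort="8443", virtualNrfScheme="https")
    return settings


def merge_profile(profile: str, overrides: Dict[str, str]) -> str:
    """Replace matching key=value lines in a profile and append missing keys."""
    out, seen = [], set()
    for line in profile.splitlines():
        key = line.split("=", 1)[0].strip()
        if "=" in line and key in overrides:
            out.append(f"{key}={overrides[key]}")
            seen.add(key)
        else:
            out.append(line)  # keep section headers and unrelated keys as-is
    out.extend(f"{k}={v}" for k, v in overrides.items() if k not in seen)
    return "\n".join(out)


if __name__ == "__main__":
    current = "[appcfg]\nprimaryNrfApiRoot=nf1stub.ocats.svc:8080"
    print(merge_profile(current, appcfg_overrides("ocats", tls=True)))
```

The merged text can then be pasted into the `profile` field while running `kubectl edit configmap`.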

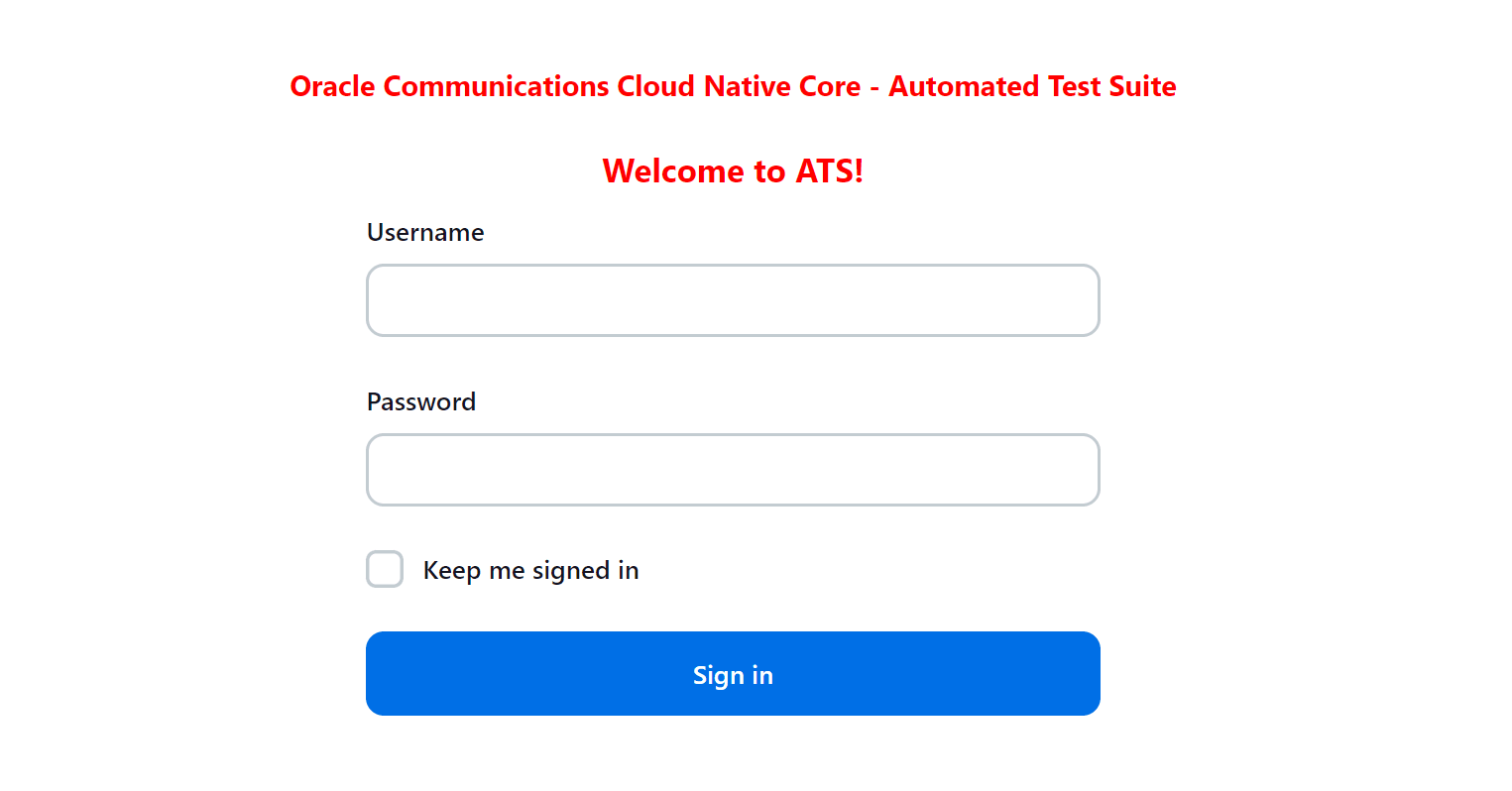
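For the alternate route DNS prerequisite above, the `dnsConfig` change can also be generated from the dns-bind pod's resolv.conf. The following Python sketch is a hypothetical helper: it parses the unfiltered output of `cat /etc/resolv.conf` (before the `grep`/`tr` filtering shown earlier) and emits a strategic-merge patch for the deployment's pod template. The nameserver value stands in for `<dns_stub_cluster_ip_address>`.

```python
import json
import sys
from typing import Dict, List


def search_domains(resolv_conf: str) -> List[str]:
    """Return every domain listed on 'search' lines of resolv.conf text."""
    domains: List[str] = []
    for line in resolv_conf.splitlines():
        fields = line.split()
        if fields and fields[0] == "search":
            domains.extend(fields[1:])
    return domains


def dns_patch(nameserver_ip: str, searches: List[str]) -> Dict:
    """Strategic-merge patch that makes the pod resolve through the DNS stub."""
    return {
        "spec": {
            "template": {
                "spec": {
                    # dnsPolicy None: Kubernetes uses only the dnsConfig below.
                    "dnsPolicy": "None",
                    "dnsConfig": {"nameservers": [nameserver_ip], "searches": searches},
                }
            }
        }
    }


if __name__ == "__main__":
    # Hypothetical usage:
    #   kubectl exec <dns-bind pod> -n <NAMESPACE> -- cat /etc/resolv.conf \
    #     | python3 dns_patch.py <dns_stub_cluster_ip_address>
    print(json.dumps(dns_patch(sys.argv[1], search_domains(sys.stdin.read()))))
```

Assuming the script is saved as `dns_patch.py`, its output could be applied with `kubectl patch deployment ocbsf-occnp-alternate-route -n ocbsf --patch "<json>"` as an alternative to `kubectl edit`. Review the resulting deployment before running tests.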

4.1.2 Logging into ATS

Before logging in to the ATS GUI, get the external IP of the worker node and the node port of the 'ocats-bsf' service.

Run the following command to get the external IP of the worker node:

```
kubectl get nodes -owide
```

Example:

```
ocbsf-k8s-node-1   Ready   <none>   111d   v1.16.7   192.168.200.26   10.75.152.111   Oracle Linux Server 7.8   4.14.35-1902.303.5.3.el7uek.x86_64   containerd://1.2.10
```

Run the following command to get the node port:

```
kubectl get svc -n <BSF_namespace>
```

Example:

```
kubectl get svc -n ocbsf
ocbsf-ocats-ocats-bsf   LoadBalancer   10.233.53.144   10.75.225.49   8080:31944/TCP   19h
```

Log in to ATS using the following URL:

http://<Worker-Node-IP>:<Node-Port-of-ATS>
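The login URL can be derived from the `PORT(S)` column in the `kubectl get svc` output above. The following Python sketch (hypothetical helper `ats_urls`) parses one row of that output, assuming the default column order NAME, TYPE, CLUSTER-IP, EXTERNAL-IP, PORT(S), AGE. It builds the node-port URL and, when an external IP is assigned, the external URL.

```python
import re
from typing import Dict


def ats_urls(svc_line: str, worker_node_ip: str) -> Dict[str, str]:
    """Build ATS login URLs from one row of `kubectl get svc` output."""
    cols = svc_line.split()
    # Assumed default columns: NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
    external_ip, ports = cols[3], cols[4]
    match = re.search(r"(\d+):(\d+)/TCP", ports)
    if match is None:
        raise ValueError(f"no node port found in {ports!r}")
    service_port, node_port = match.groups()
    urls = {"node_port": f"http://{worker_node_ip}:{node_port}"}
    if external_ip not in ("<none>", "<pending>"):
        urls["external"] = f"http://{external_ip}:{service_port}"
    return urls


if __name__ == "__main__":
    row = "ocbsf-ocats-ocats-bsf LoadBalancer 10.233.53.144 10.75.225.49 8080:31944/TCP 19h"
    print(ats_urls(row, "10.75.152.111"))
```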

If the 'ocats-bsf' service has an external IP, you can also use that IP to log in to ATS:

http://<External IP of ATS Service>:8080

For example:

http://10.75.225.49:8080

Running ATS

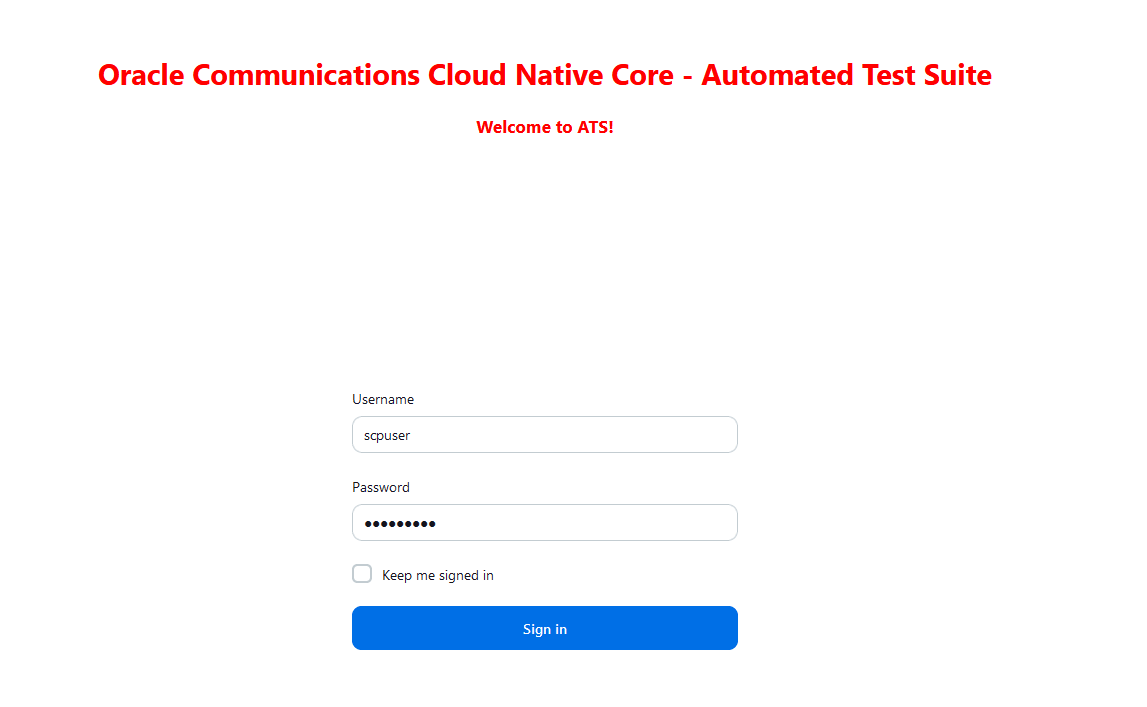

To run ATS test cases, perform the following steps:

- Enter the username as bsfuser and Password as bsfpasswd.

- Click Sign in.

Note:

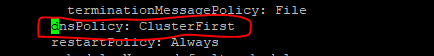

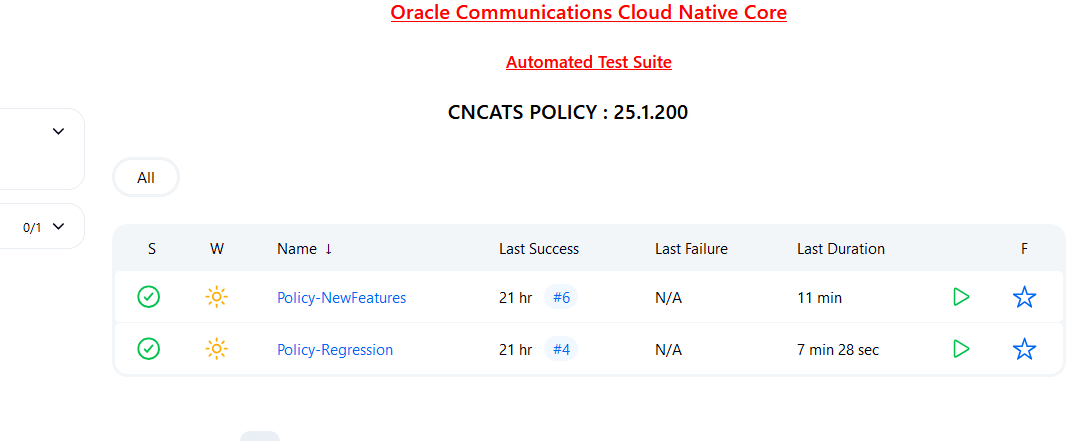

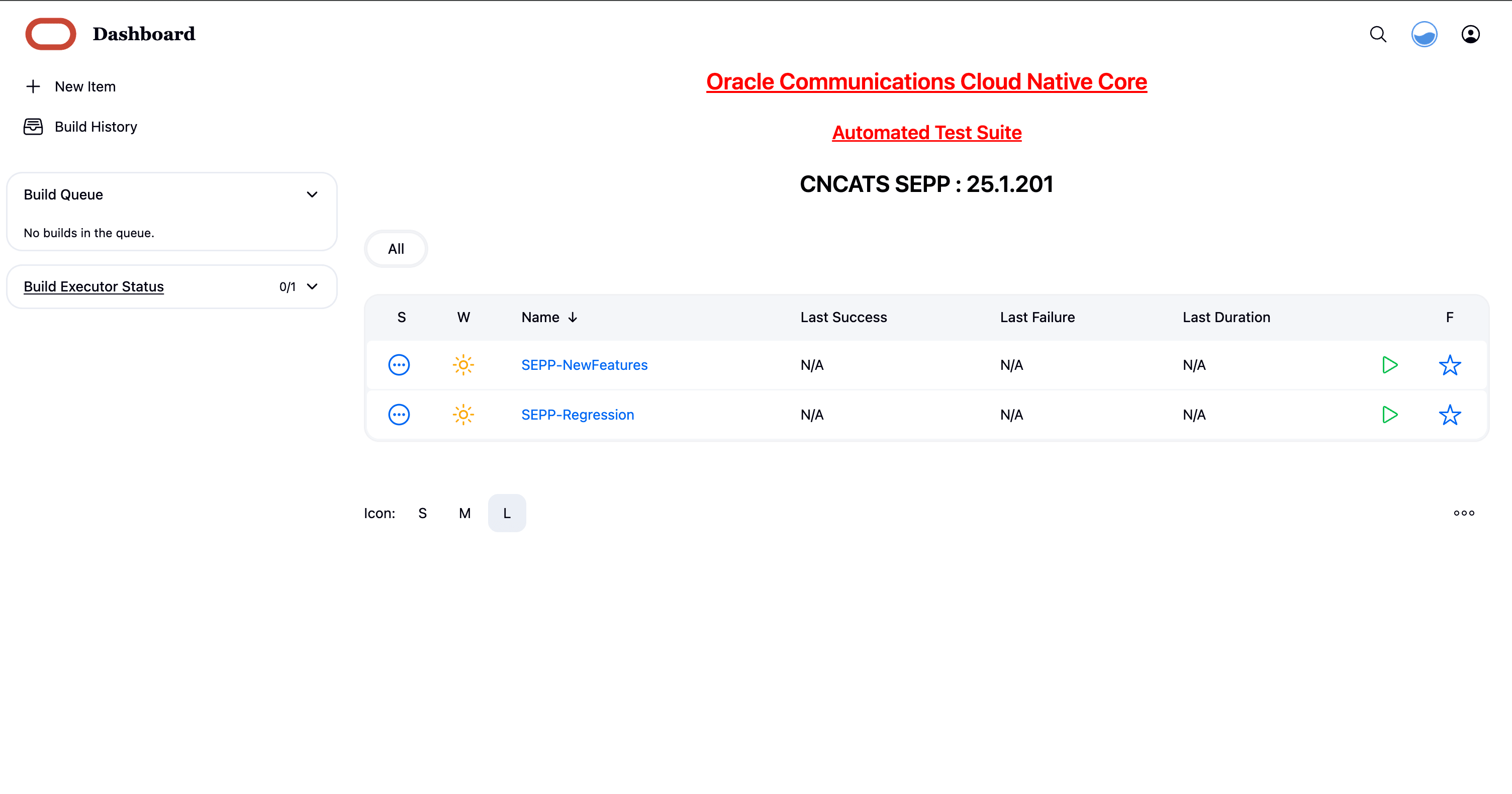

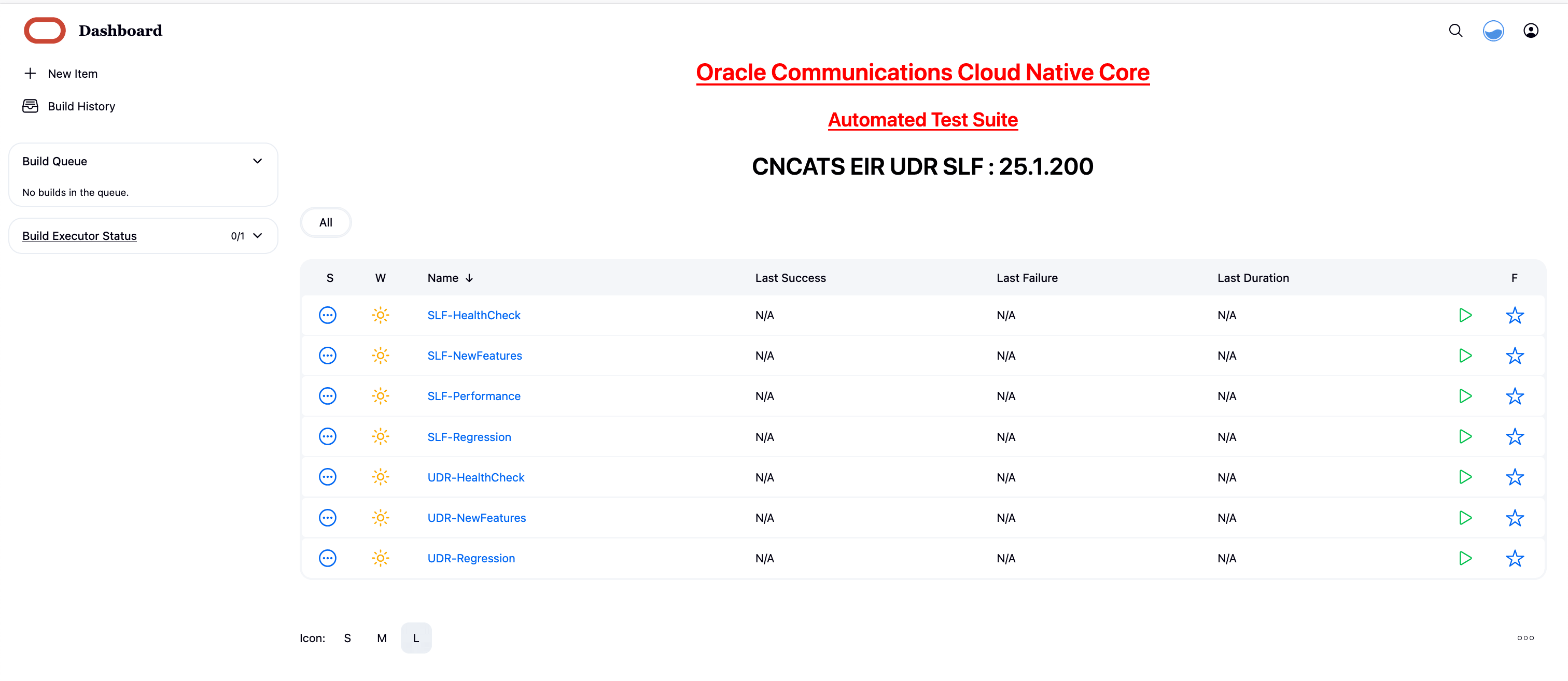

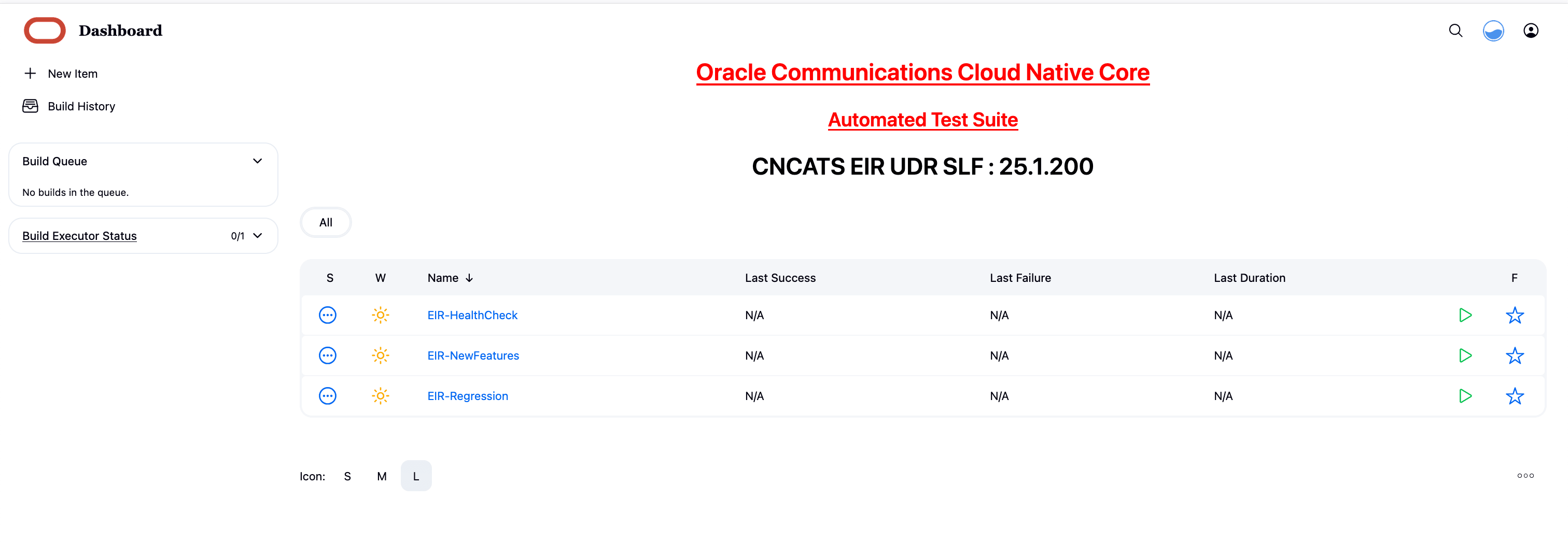

To modify the default login password, see Modifying Login Password.

On successful login, the following pipelines appear:

Figure 4-4 Pre-configured Pipelines

- BSF-NewFeatures: This pipeline has all the new test cases delivered for BSF 25.1.200.

- BSF-Performance: This pipeline is not operational as of now. It is reserved for future releases of ATS.

- BSF-HealthCheck: This pipeline checks if BSF and ATS are deployed correctly. It appears only if the user enabled this feature while installing BSF ATS.

- BSF-Regression: This pipeline has all the test cases delivered in BSF ATS - 25.1.200.

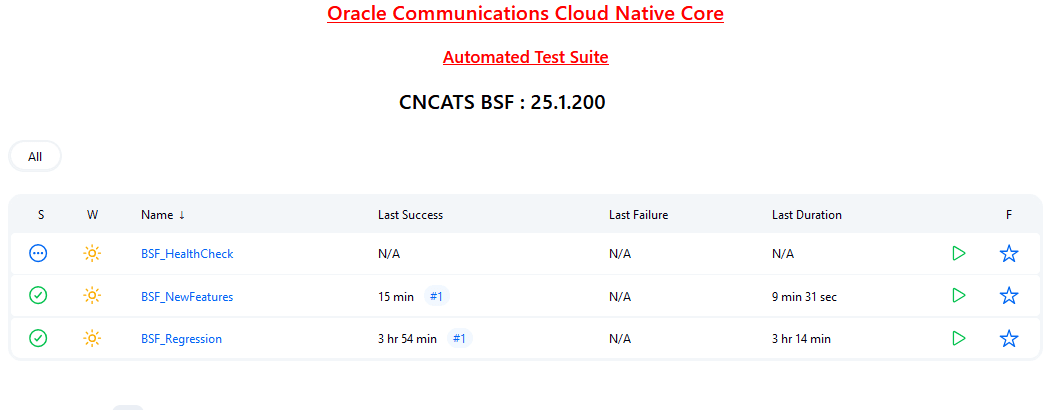

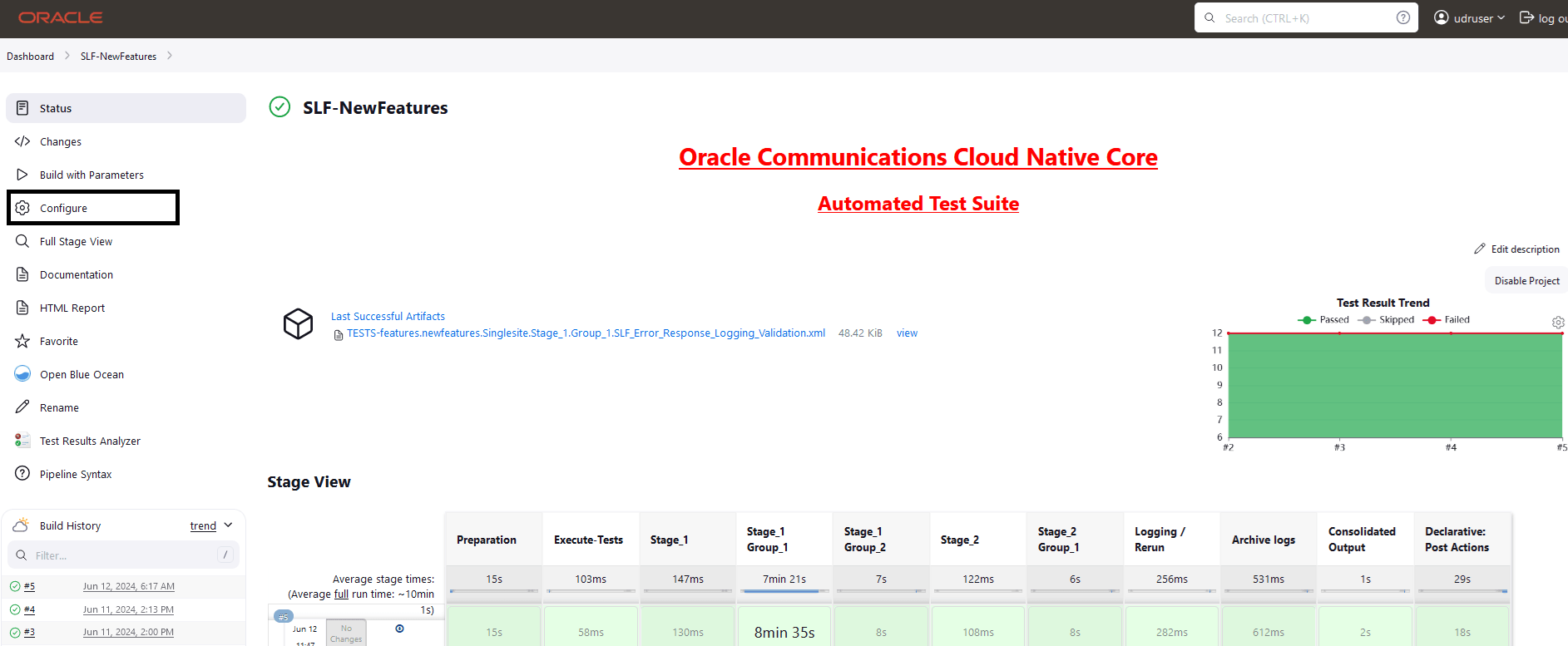

4.1.3 Running BSF_NewFeatures Pipeline

BSF_NewFeatures Pipeline

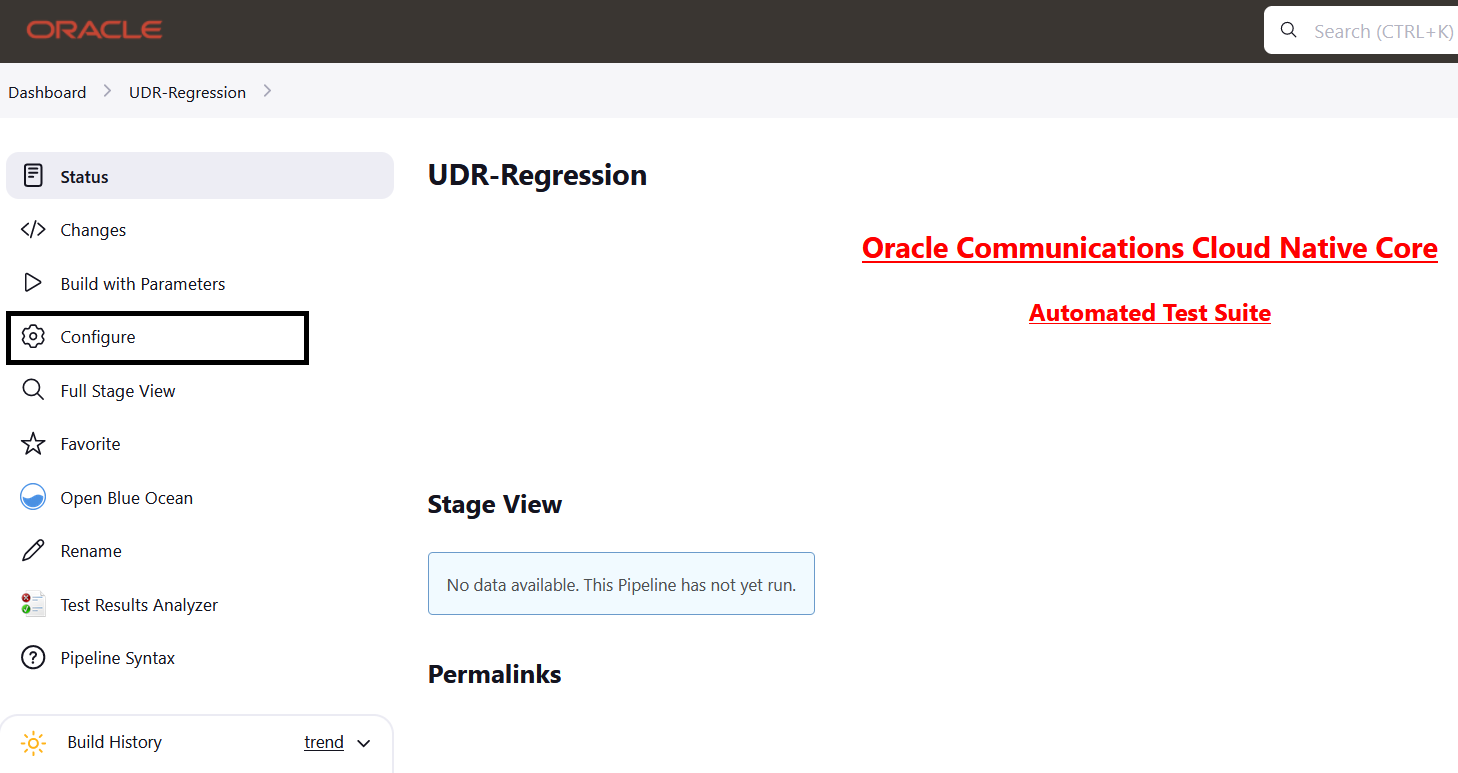

- Click BSF_NewFeatures in the Name column and then,

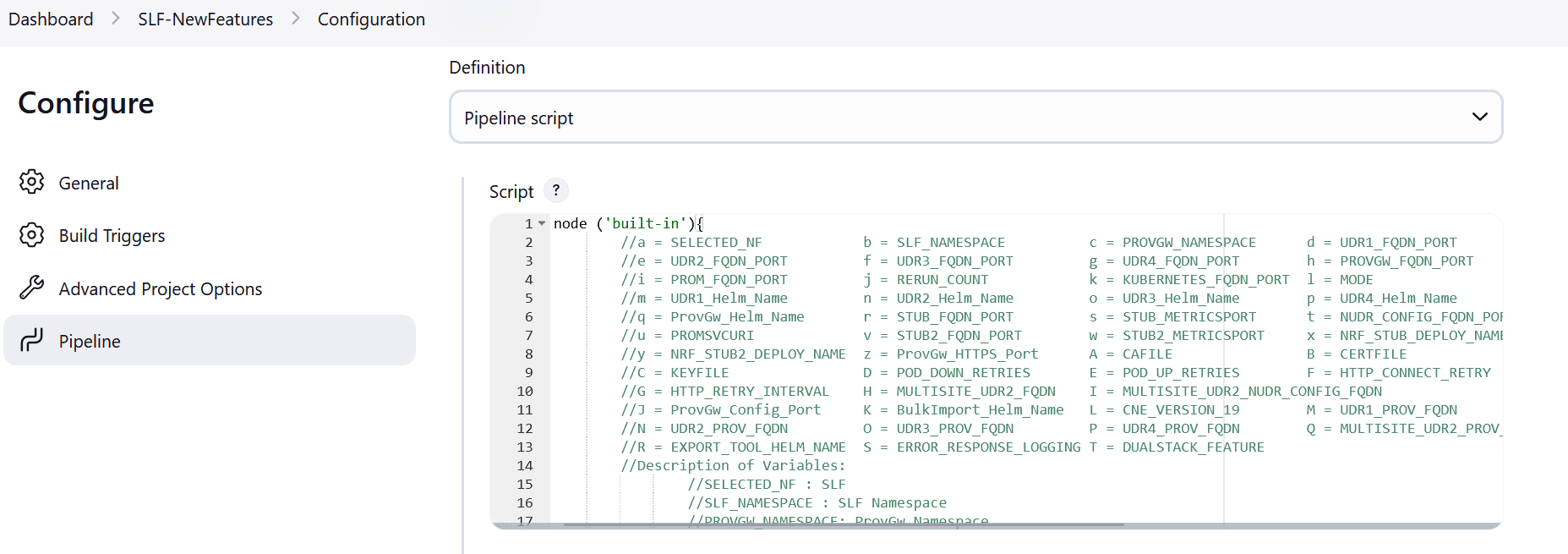

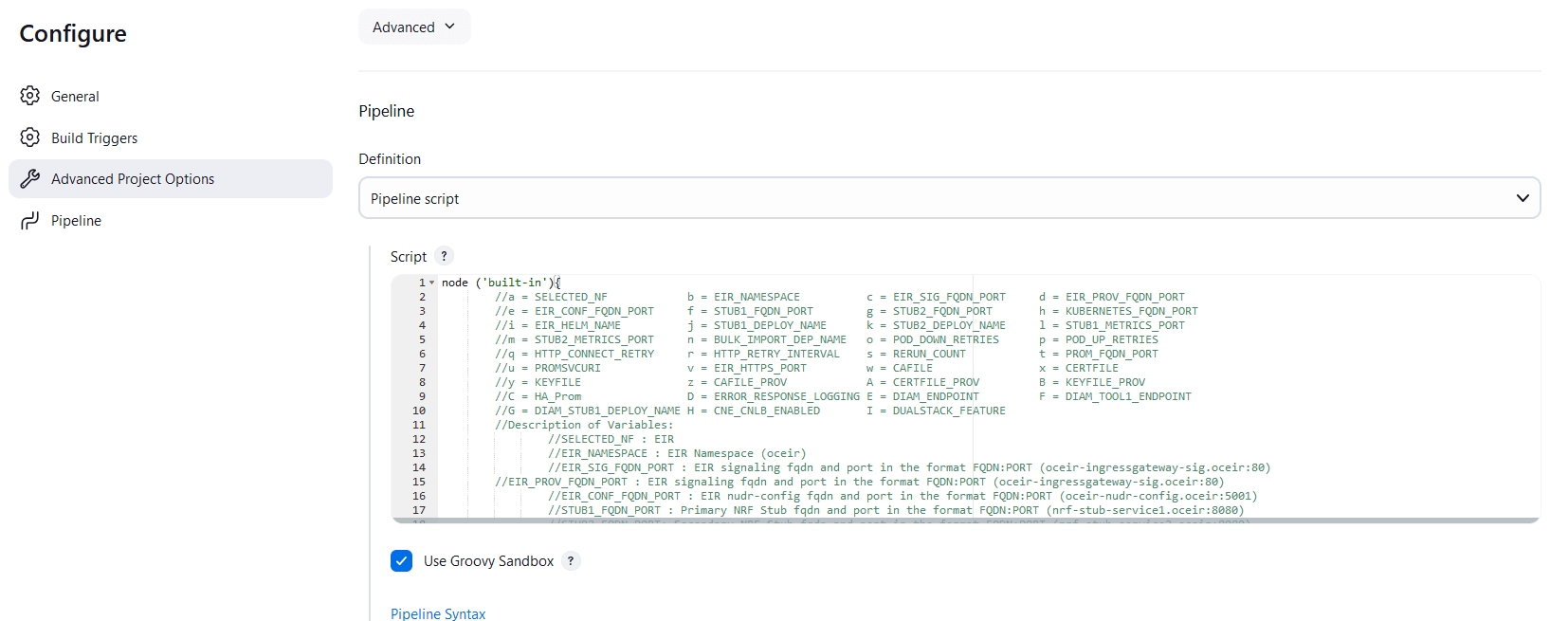

click Configure in the left navigation pane as shown below:

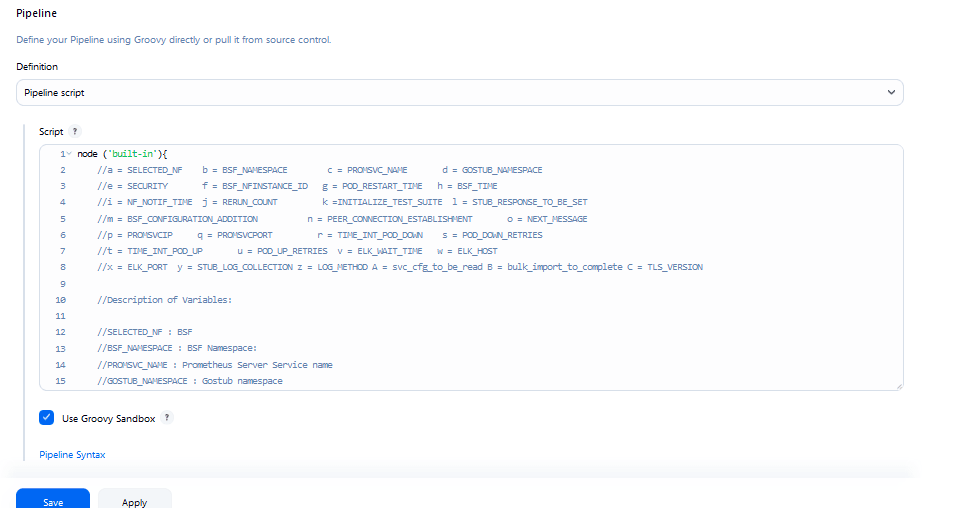

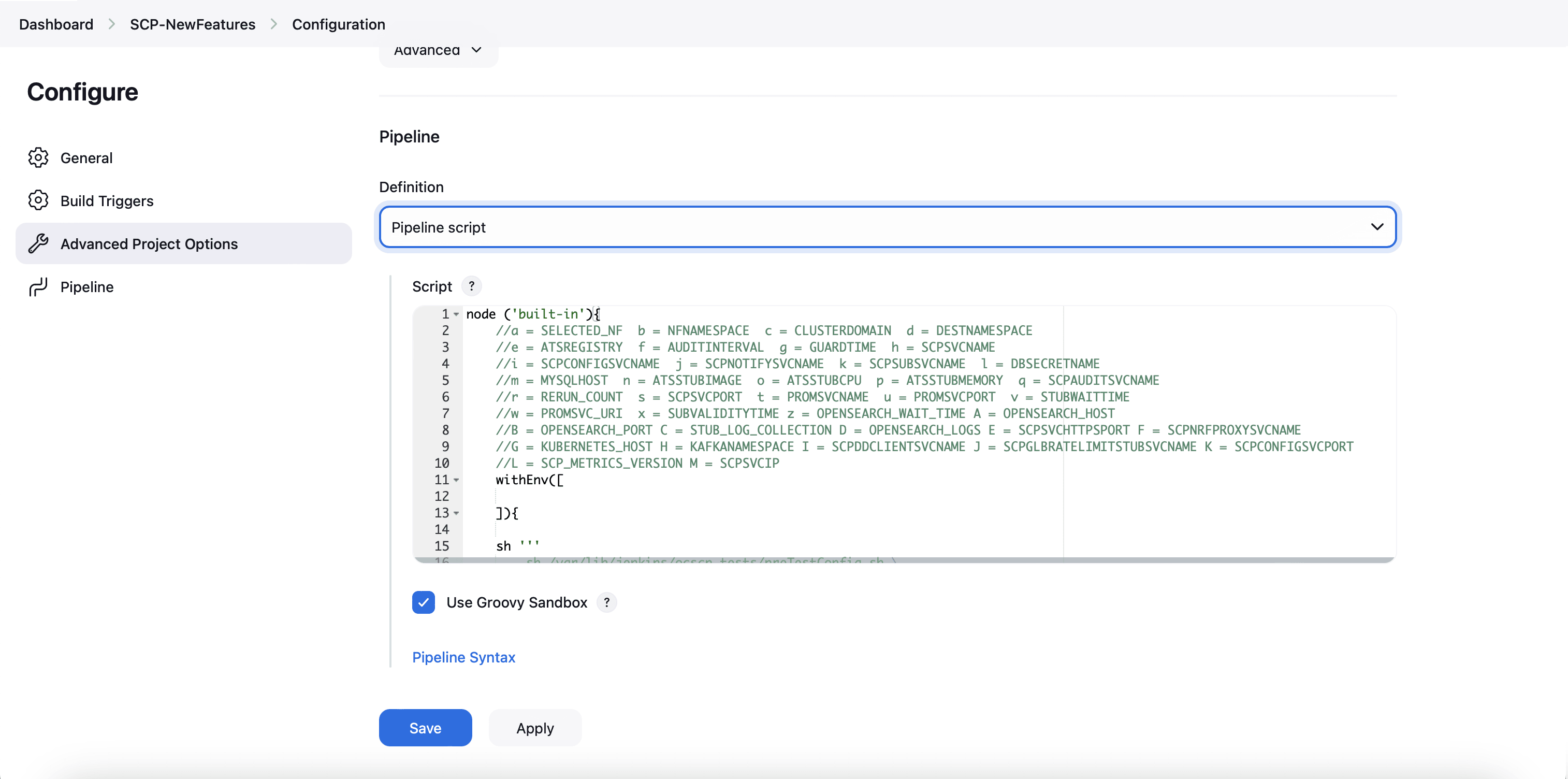

Figure 4-5 BSF New Feature Pipeline

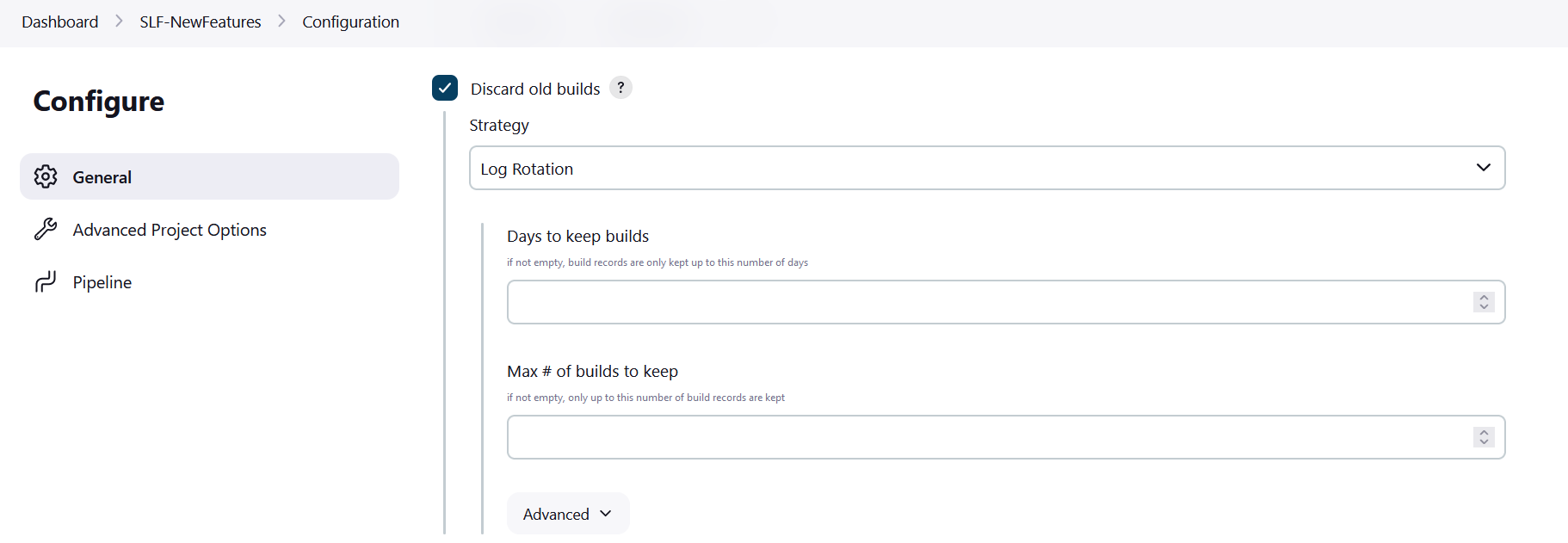

- The BSF_NewFeatures, General tab appears. Make sure that the screen loads completely.

- Scroll-down to the end. The control moves from General

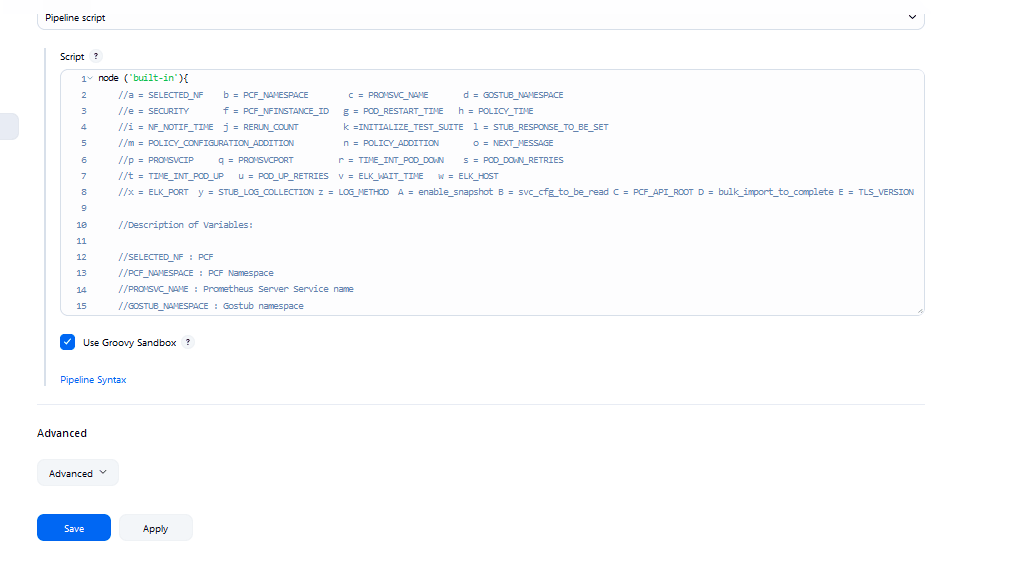

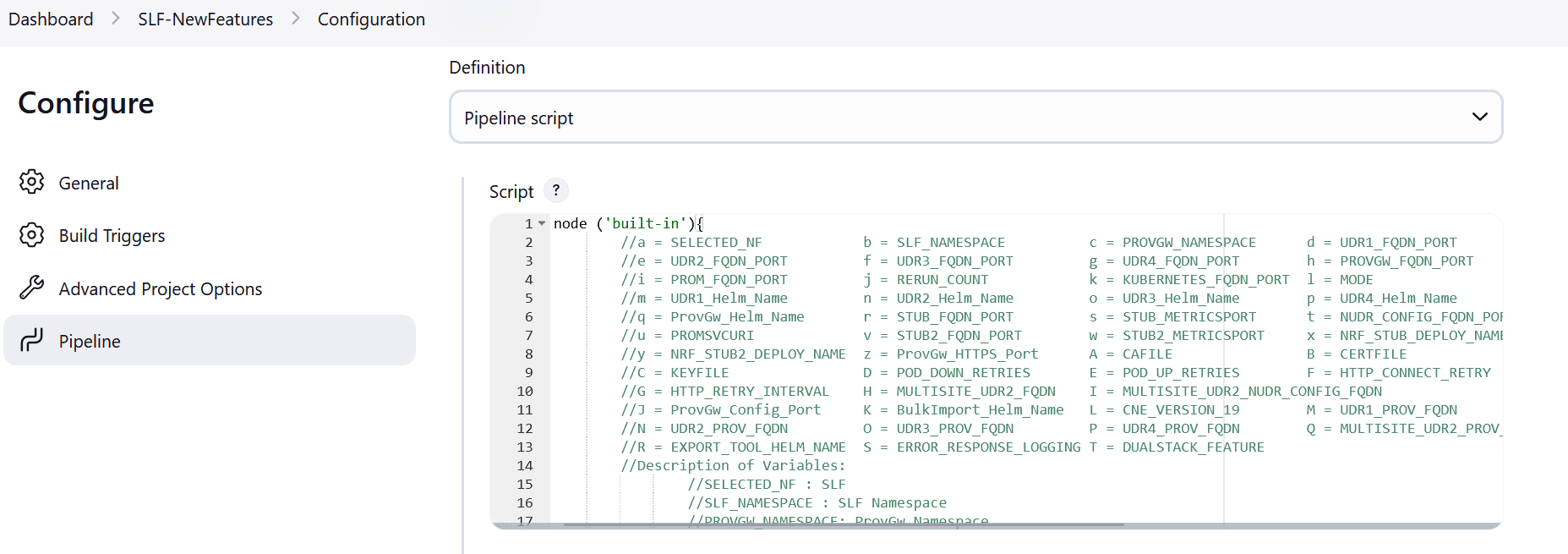

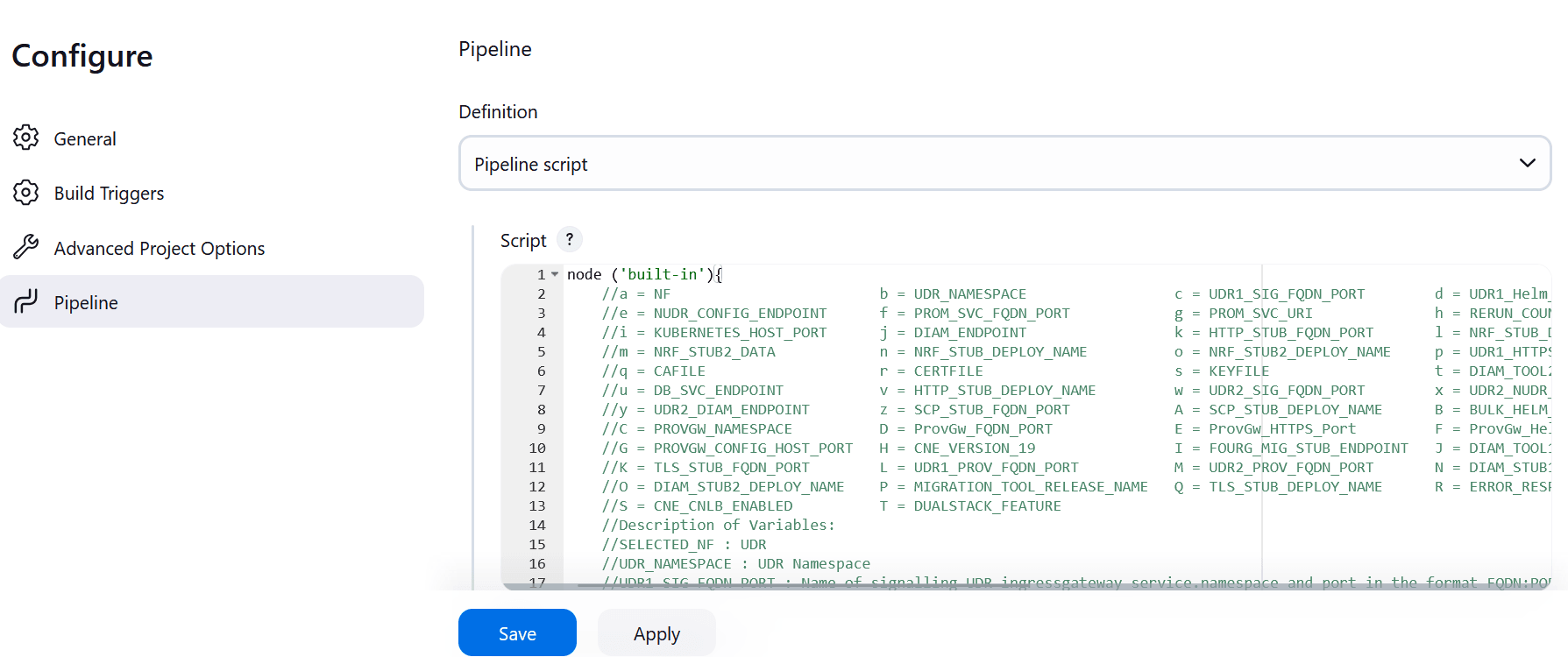

tab to the Pipeline tab as shown below:

Figure 4-6 BSF New Features Pipeline Configuration

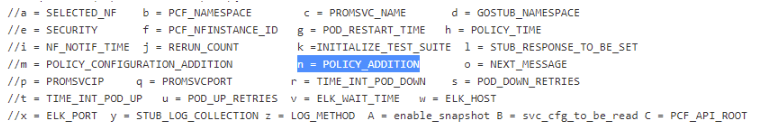


You can change the parameter values from "a" to "x" as required. The parameter details are available as comments in lines 2 to 7. In the Script area of the Pipeline section, you can change the values of the following parameters:

- a: Name of the NF to be tested, in capitals (BSF)

- b: Change this parameter to update the namespace where BSF was deployed in your bastion.

- c: Name of Prometheus service namespace (occne-prometheus-server)

- d: Change this parameter to update the namespace where your gostubs are deployed in your bastion.

- e: Set this parameter as 'unsecure', if you intend to run ATS in TLS disabled mode. Else, set this parameter as 'secure'.

- f: Configure this parameter to set BSF_NFINSTANCE_ID.

- g: Set a value more than 45 seconds for this parameter. The default wait time for the pod to come up is 45 seconds. Every test case requires a restart of the nrf-client-management pod.

- h: Set a value more than 60 seconds for this parameter. The default wait time to add a configuration to the database is 60 seconds.

- i: Set a value more than 140 seconds for this parameter. The default wait time for Nf_Notification test cases is 140 seconds.

- k: Use this parameter to set the waiting time to initialize Test Suite.

- l: Use this parameter to set the waiting time to get response from Stub.

- m: Use this parameter to set the waiting time after adding BSF Configuration.

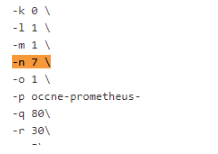

- n: Use this parameter to set the waiting time for Peer connection establishment.

- o: Use this parameter to set the waiting time before sending next message.

- p: Use this parameter to set Prometheus Server IP.

- q: Use this parameter to set Prometheus Server Port.

- r: Use this parameter to set the interval after which the POD status is checked when it is down.

- s: Use this parameter to set the number of retry attempts for checking whether the pod is down.

- t: Use this parameter to set the interval after which the pod status is checked when it is up.

- u: Use this parameter to set the number of retry attempts for checking whether the pod is up.

- v: Use this parameter to set the wait time to connect to Elasticsearch.

- w: Use this parameter to set the Elasticsearch host name.

- x: Use this parameter to set the Elasticsearch port.

- y: Use this parameter to enable or disable stub log collection.

- z: Use this parameter to set the log collection endpoint, either Elasticsearch or Kubernetes.

- A: Use this parameter to set the wait time for importing service configurations.

- B: Use bulk_import_to_complete to add a custom wait time in Jenkins after bulk imports.

- C: Use this parameter to set the TLS version (1.2 or 1.3). The default value is 1.2.
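Parameters g, h, and i each have a stated lower bound, and C accepts only 1.2 or 1.3. A pre-flight check such as the following Python sketch could catch values that are too small before a long run starts. The single-letter dictionary keys are purely illustrative; the actual variable names in the pipeline script are not reproduced in this guide.

```python
from typing import Dict, List, Union

# Lower bounds (seconds) stated for the pipeline wait-time parameters.
# The keys mirror the Script area labels and are hypothetical names,
# not the pipeline script's real variable names.
MINIMUM_SECONDS = {
    "g": 45,   # wait for the pod to come up (nrf-client-management restarts)
    "h": 60,   # wait after adding configurations to the database
    "i": 140,  # wait for Nf_Notification test cases
}
SUPPORTED_TLS = ("1.2", "1.3")


def check_pipeline_params(params: Dict[str, Union[int, str]]) -> List[str]:
    """Return one message per parameter that violates the documented limits."""
    problems = []
    for key, minimum in MINIMUM_SECONDS.items():
        value = params.get(key)
        if value is None:
            problems.append(f"{key}: not set")
        elif int(value) <= minimum:
            problems.append(f"{key}: {value}s must be more than {minimum}s")
    tls = str(params.get("C", "1.2"))  # 1.2 is the documented default
    if tls not in SUPPORTED_TLS:
        problems.append(f"C: unsupported TLS version {tls!r}")
    return problems
```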

(Optional) To collect application logs for each failed scenario, configure the values for the following parameters:

- z: If you want log collection to happen through Elasticsearch, set the value for this parameter as Elasticsearch. If not, specify the value as Kubernetes.

  If you want to collect logs through Elasticsearch, configure the values for the following parameters:
  - v: Specifies the wait time to connect to Elasticsearch (ELK_WAIT_TIME).
  - w: Specifies the host name of Elasticsearch (ELK_HOST). For example, occne-elastic-elasticsearch-master.occne-infra.
  - x: Specifies the port for Elasticsearch (ELK_PORT). For example, 9200.

- y: If you want to collect stub logs, set the value for this parameter as yes. If not, specify the value as no.

- Click Save after updating the parameter values. The BSF_NewFeatures Pipeline page appears.

Note:

Save a copy of the pipeline script on your local machine so that you can refer to it when restarting ATS pods.

Attention:

Do not modify anything other than the parameter values described in this section.

Extracting Application Logs

- Log in to the ATS pod:

```
kubectl exec -it pod/ocats-bsf-6f6dfc76b5-jbgzt -n ocbsf -- bash
```

- Go to the Jenkins build directory:

```
cd $JENKINS_HOME/jobs/$JOB_NAME/builds/$BUILD_NUMBER/
```

For example:

```
cd /var/lib/jenkins/.jenkins/jobs/BSF_Regression/builds/2
```

- Extract the applogs.zip file:

```
unzip applogs.zip
```

- After the file is unzipped, open the applog folder to view the pod logs for failed scenarios:

```
(env) [jenkins@ocats-bsf-6f6dfc76b5-jbgzt applog]$ pwd
/var/lib/jenkins/.jenkins/jobs/BSF_Regression/builds/2/applog
(env) [jenkins@ocats-bsf-6f6dfc76b5-jbgzt applog]$ ls -ltrh
total 760K
-rw-r--r--. 1 jenkins jenkins 250K Nov 19 11:08 Initial_Run-Register_BSF_With_NFSetIDList.log
-rw-r--r--. 1 jenkins jenkins 249K Nov 19 11:08 1st_Rerun-Register_BSF_With_NFSetIDList.log
-rw-r--r--. 1 jenkins jenkins 255K Nov 19 11:09 2nd_Rerun-Register_BSF_With_NFSetIDList.log
```
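When a build has many failed scenarios, grouping the extracted files by scenario makes the initial run and its reruns easier to compare. The following Python sketch assumes the `<run>-<scenario>.log` naming seen in the listing above. The helper names are hypothetical, and the layout inside applogs.zip is also an assumption, which is why only base names are used.

```python
import posixpath
import zipfile
from collections import defaultdict
from typing import Dict, Iterable, List


def build_dir(jenkins_home: str, job_name: str, build_number: int) -> str:
    """Equivalent of $JENKINS_HOME/jobs/$JOB_NAME/builds/$BUILD_NUMBER."""
    return posixpath.join(jenkins_home, "jobs", job_name, "builds", str(build_number))


def log_names_in_zip(zip_path: str) -> List[str]:
    """List log file base names in applogs.zip without extracting it."""
    with zipfile.ZipFile(zip_path) as archive:
        return [posixpath.basename(n) for n in archive.namelist() if n.endswith(".log")]


def runs_by_scenario(filenames: Iterable[str]) -> Dict[str, List[str]]:
    """Group '<run>-<scenario>.log' names by scenario, keeping input order."""
    grouped: Dict[str, List[str]] = defaultdict(list)
    for name in filenames:
        if not name.endswith(".log") or "-" not in name:
            continue  # not in the observed naming pattern
        run, scenario = name[: -len(".log")].split("-", 1)
        grouped[scenario].append(run)
    return dict(grouped)


if __name__ == "__main__":
    zip_path = posixpath.join(build_dir("/var/lib/jenkins/.jenkins", "BSF_Regression", 2), "applogs.zip")
    for scenario, runs in runs_by_scenario(log_names_in_zip(zip_path)).items():
        print(f"{scenario}: {', '.join(runs)}")
```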

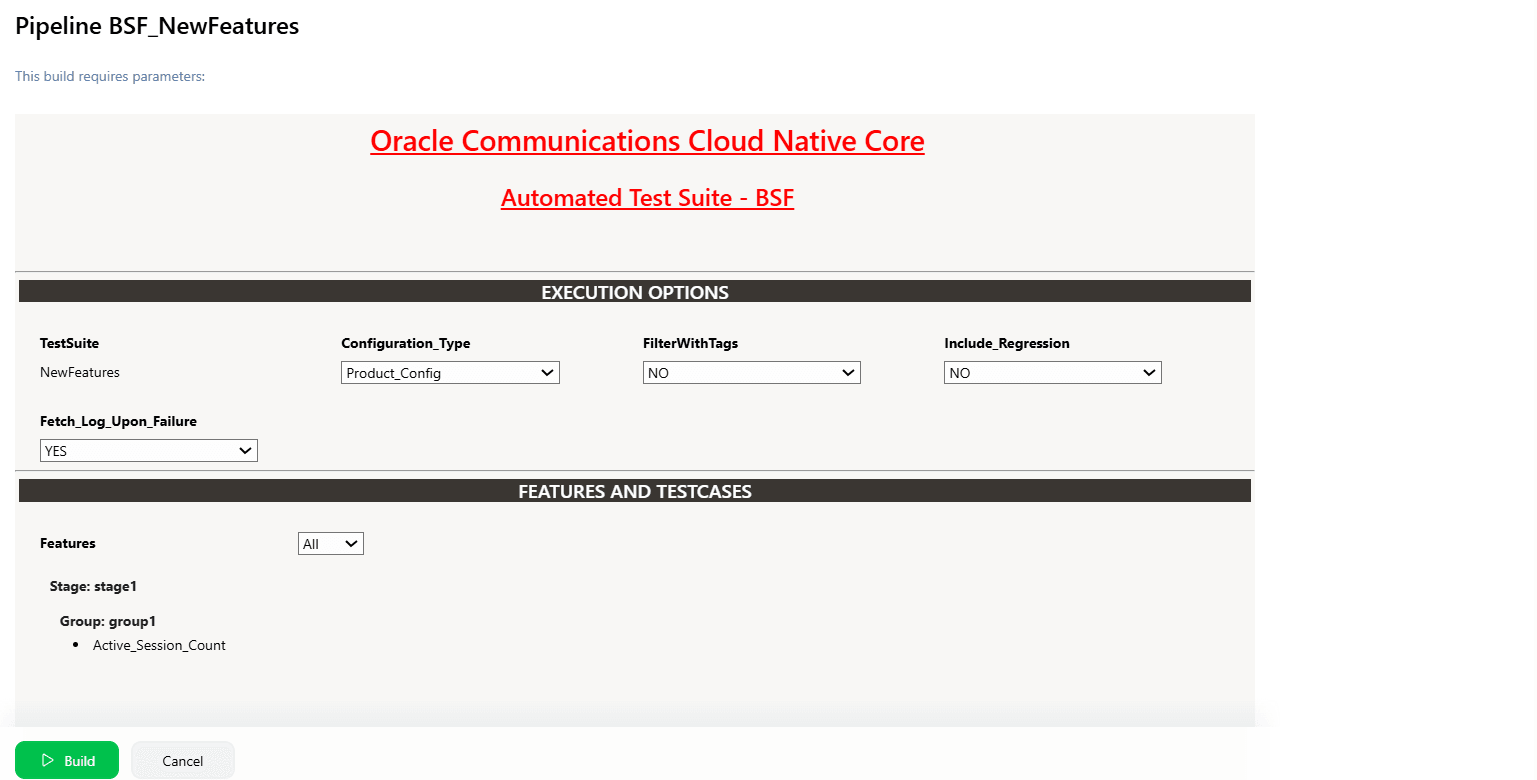

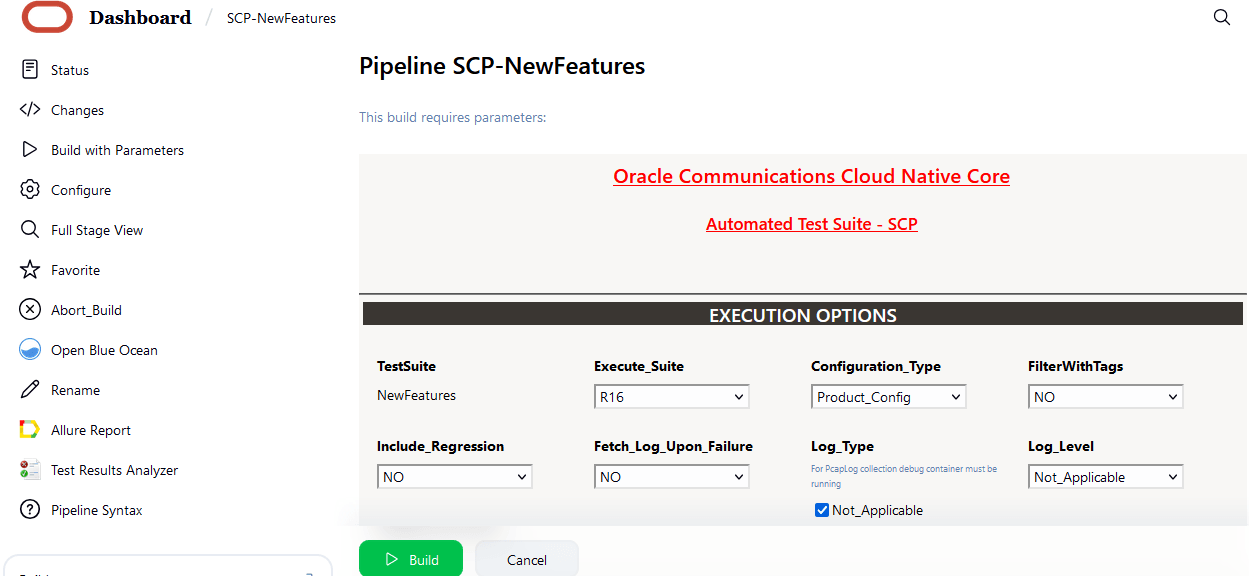

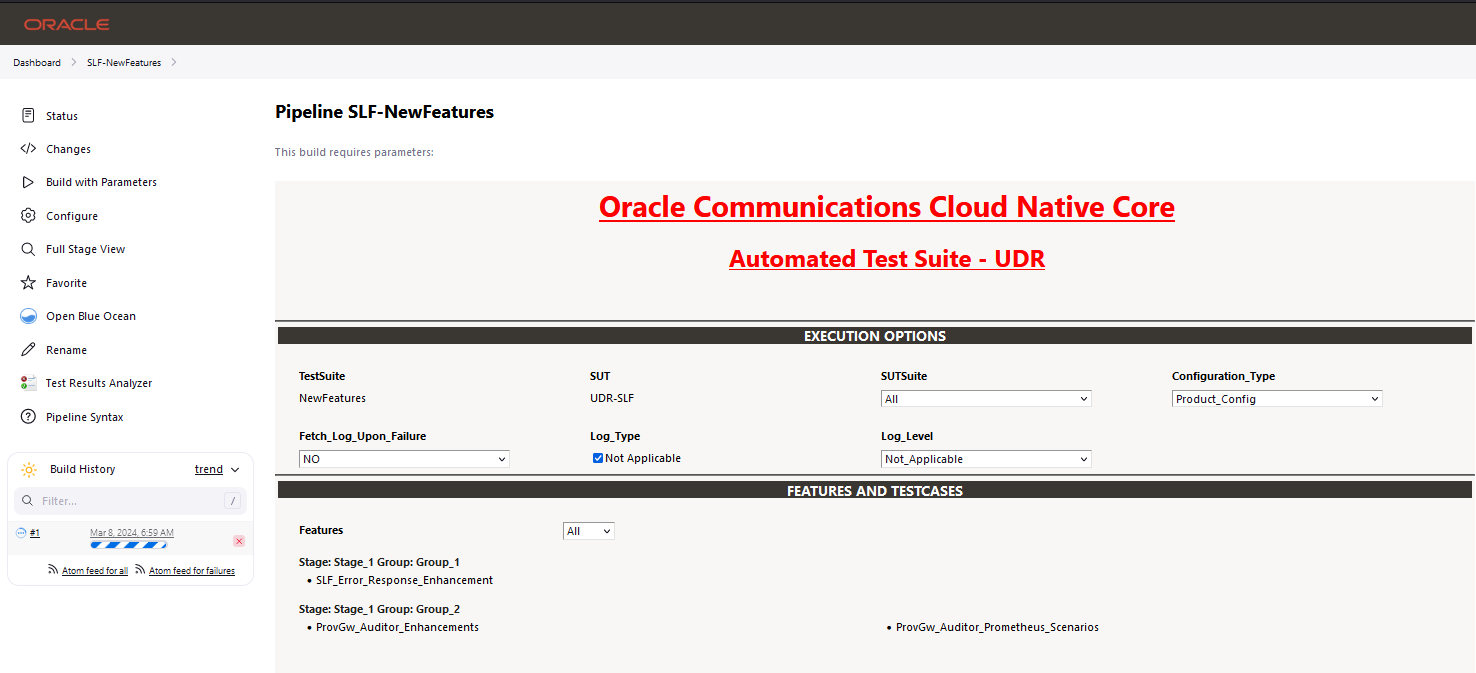

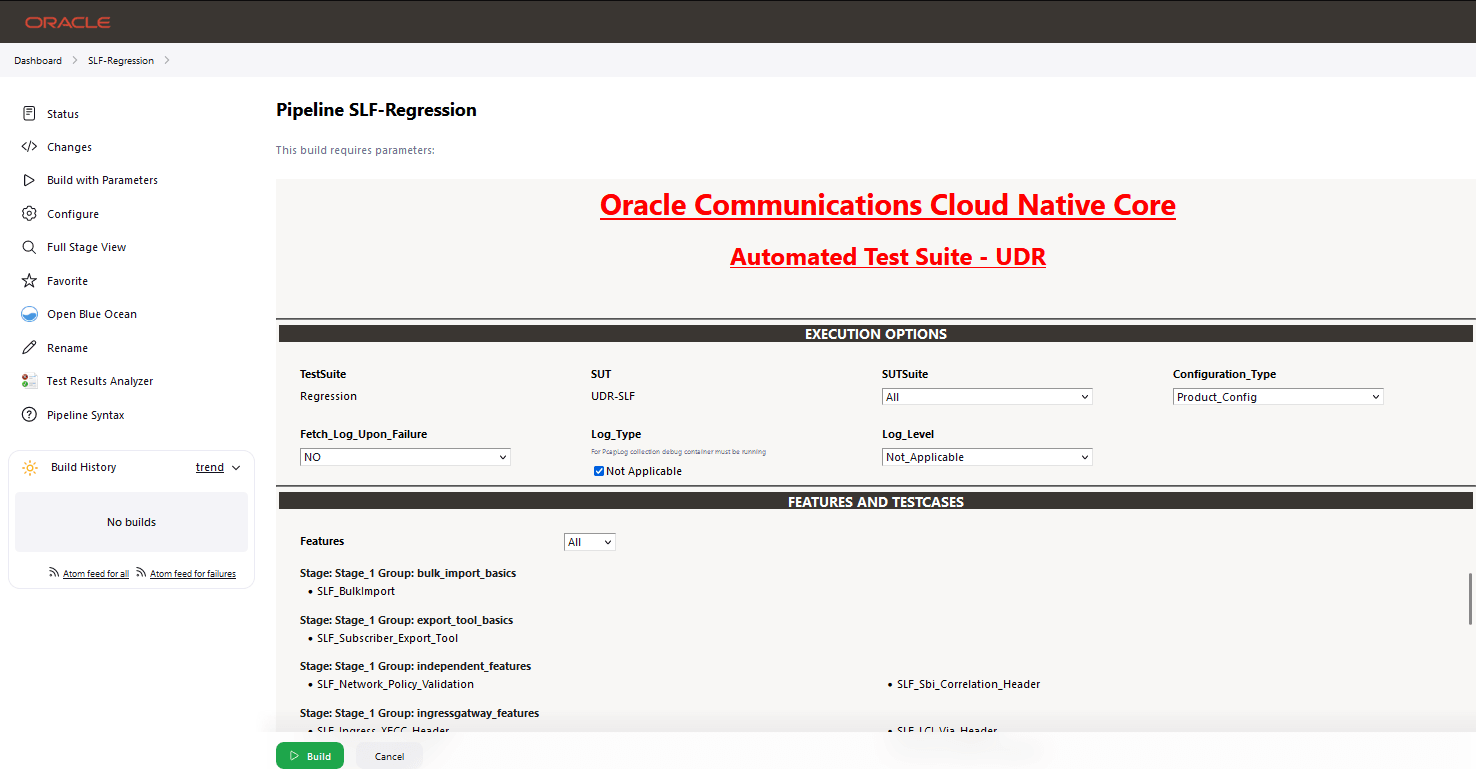

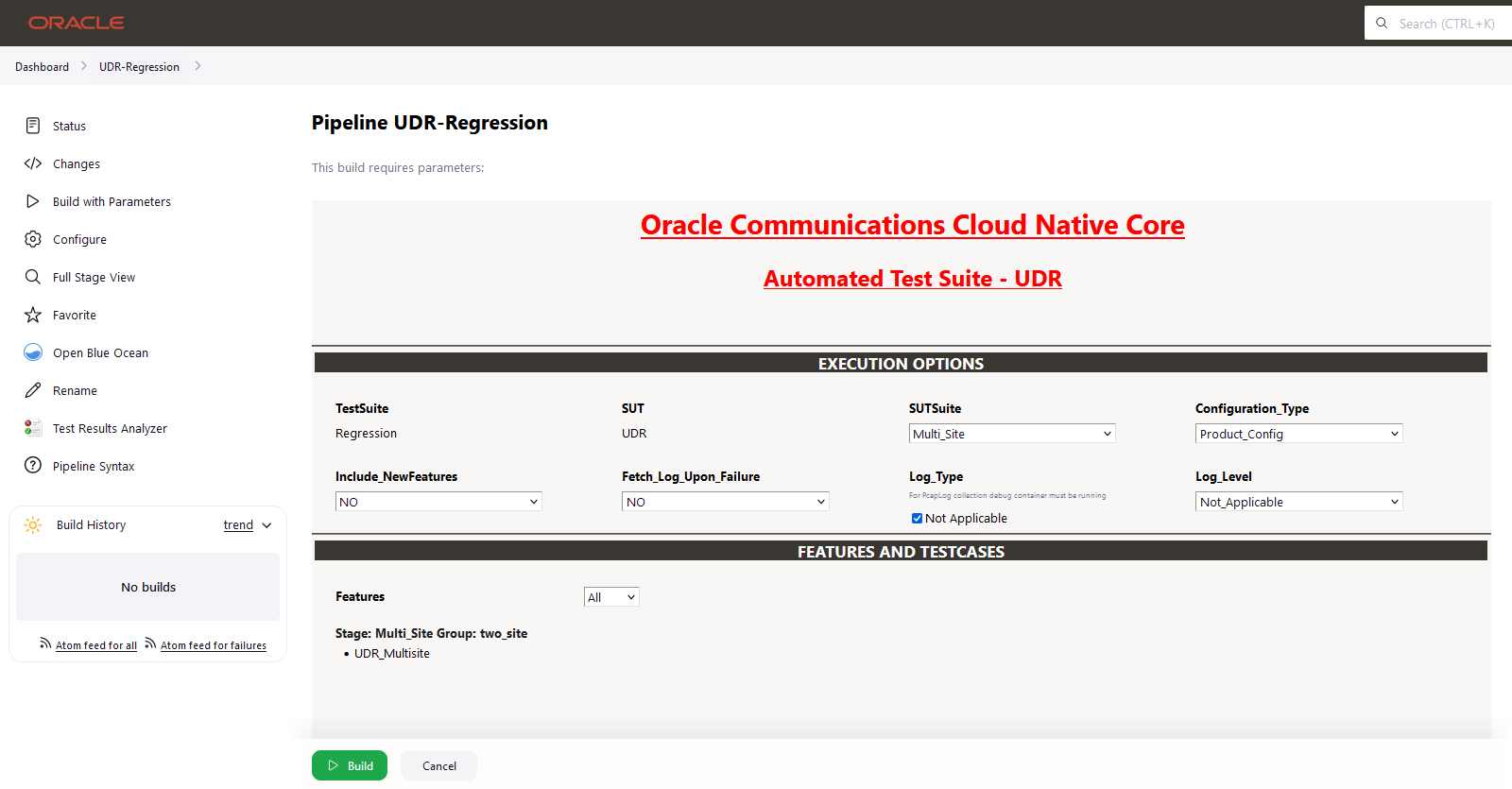

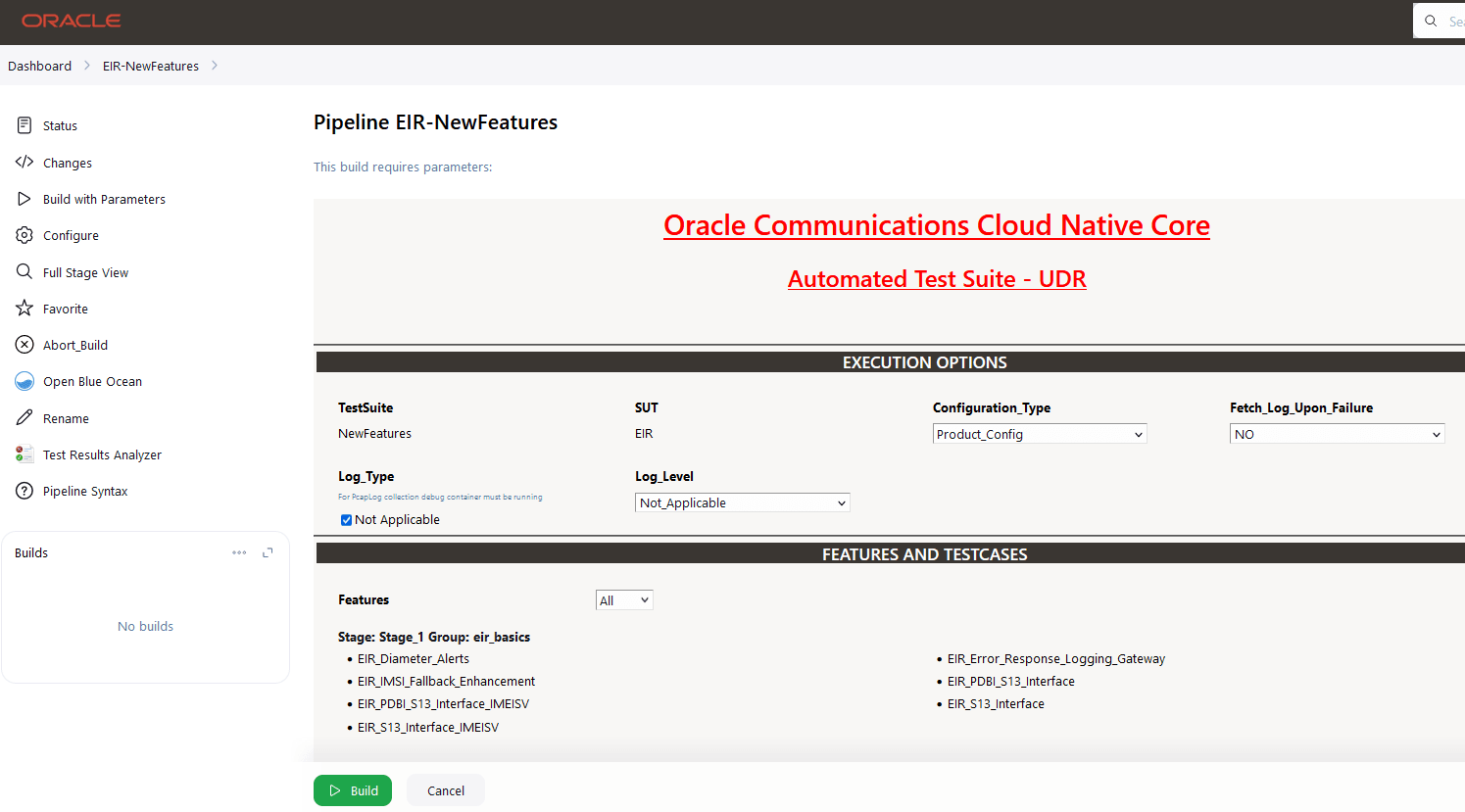

Running BSF Test Cases

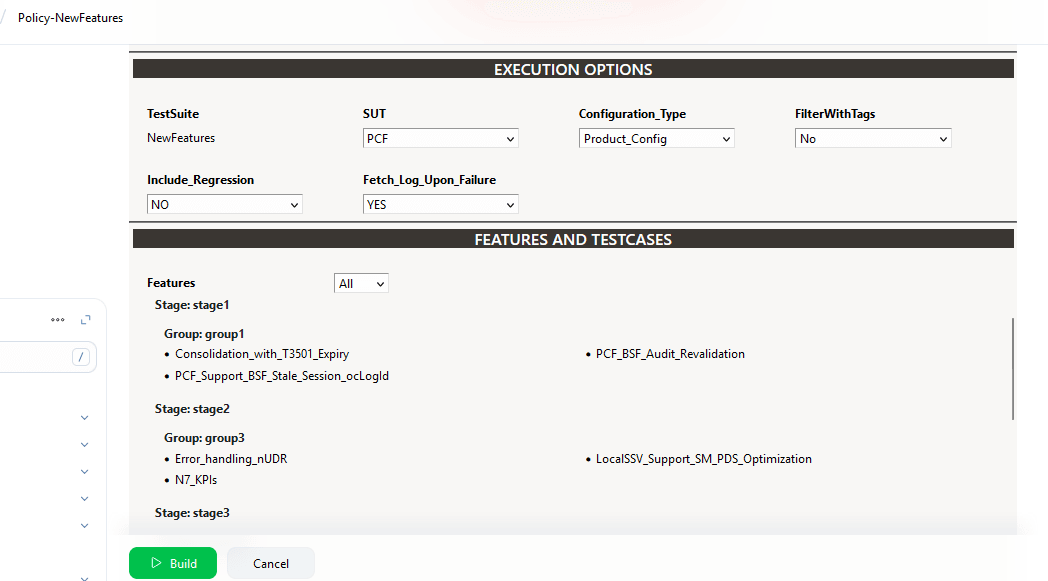

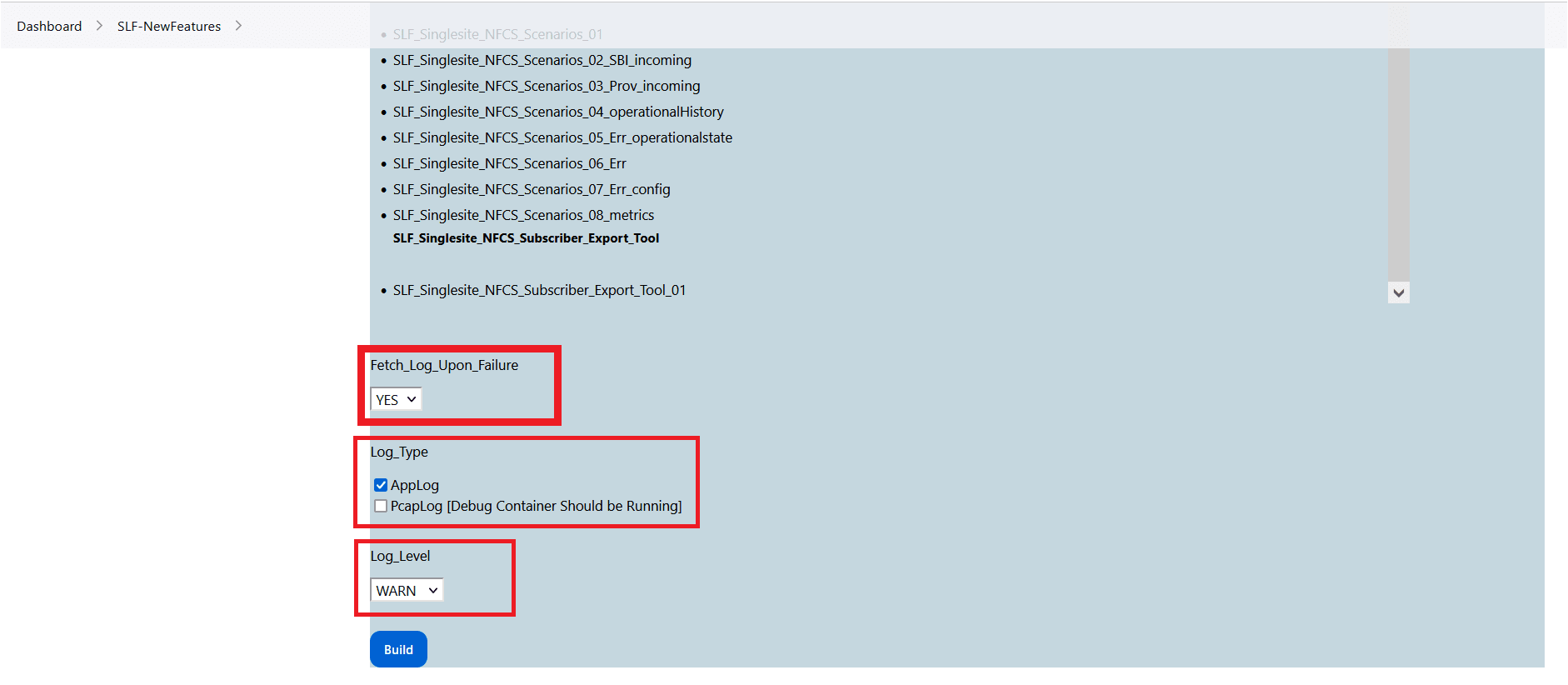

- Click the Build with Parameters link in the left navigation pane of the BSF_NewFeatures Pipeline screen. The following page appears.

Figure 4-7 BSF New Features Build with Parameters

Note:

Make sure that FilterWithTags and Include_NewFeatures are set to NO.

- If you want to collect logs for any given build, select YES from the drop-down menu of Fetch_Log_Upon_Failure.


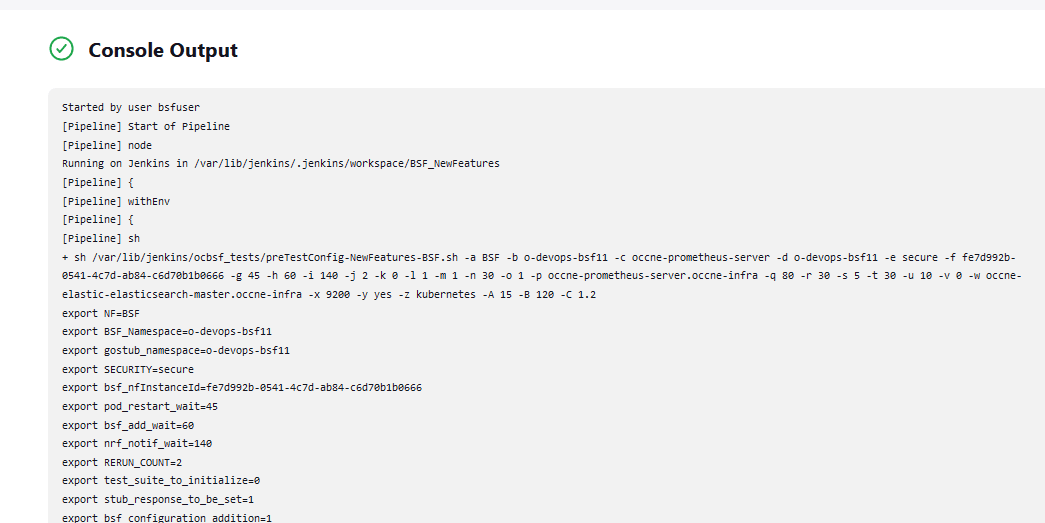

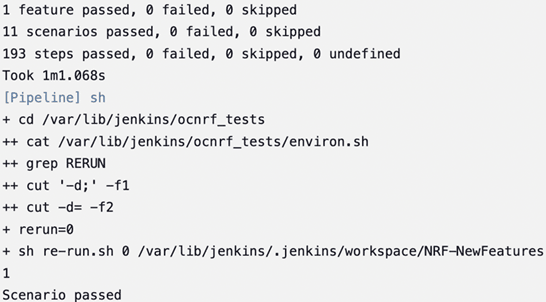

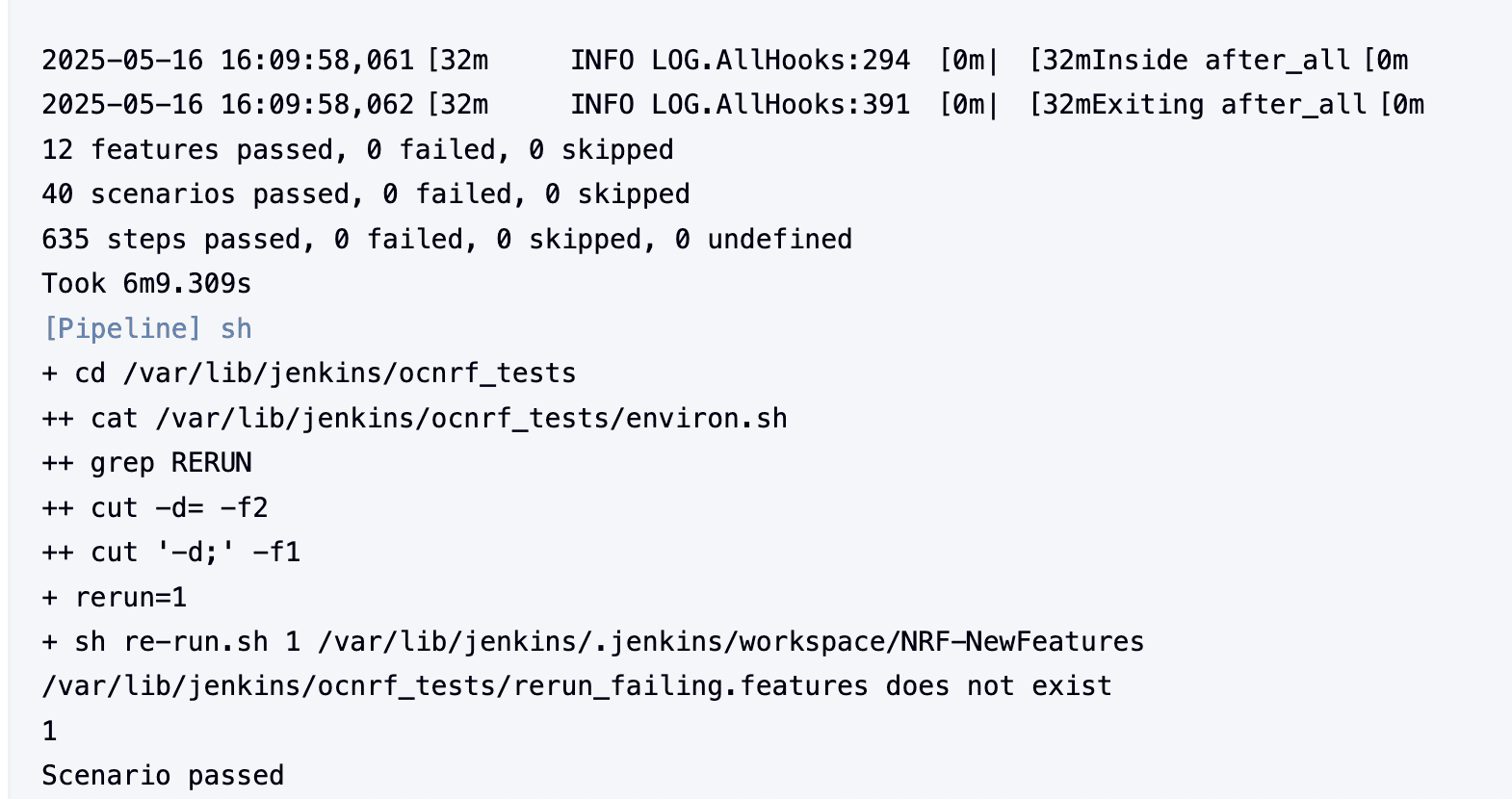

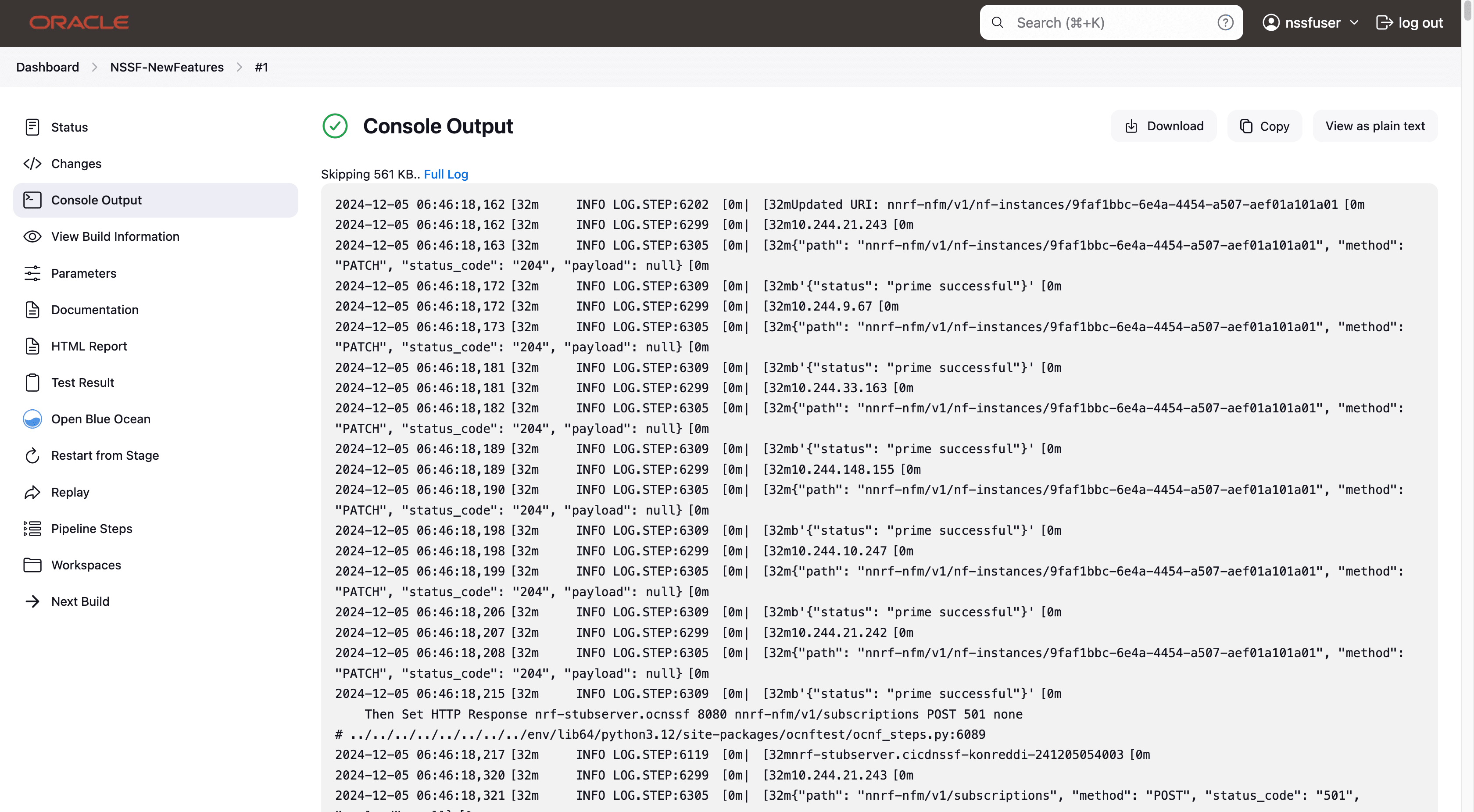

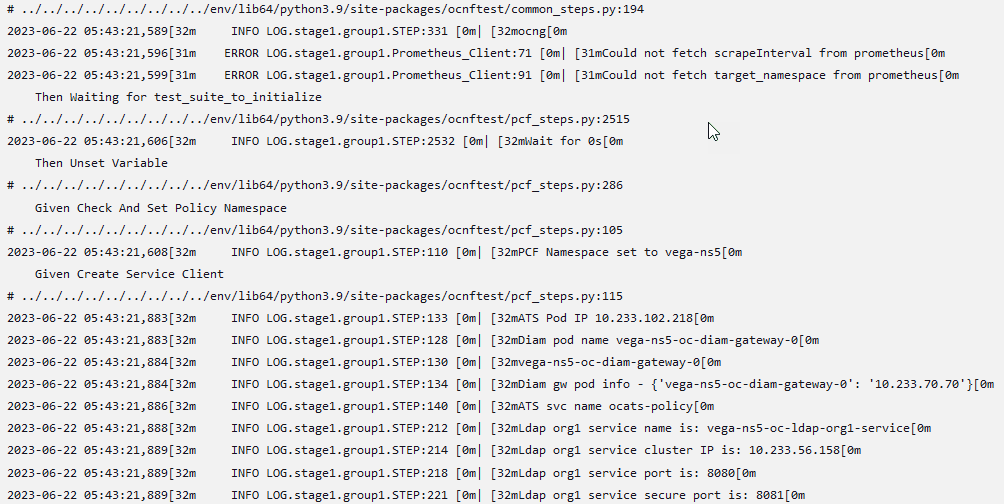

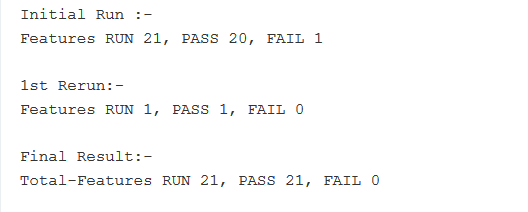

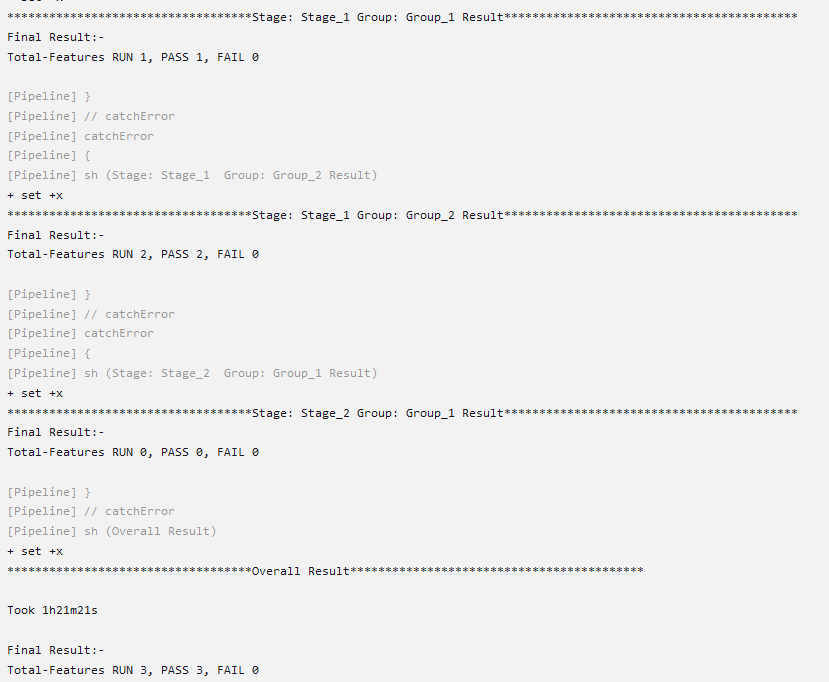

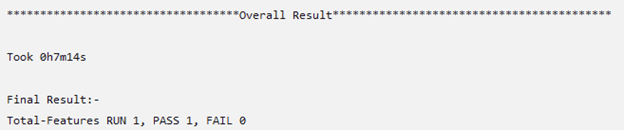

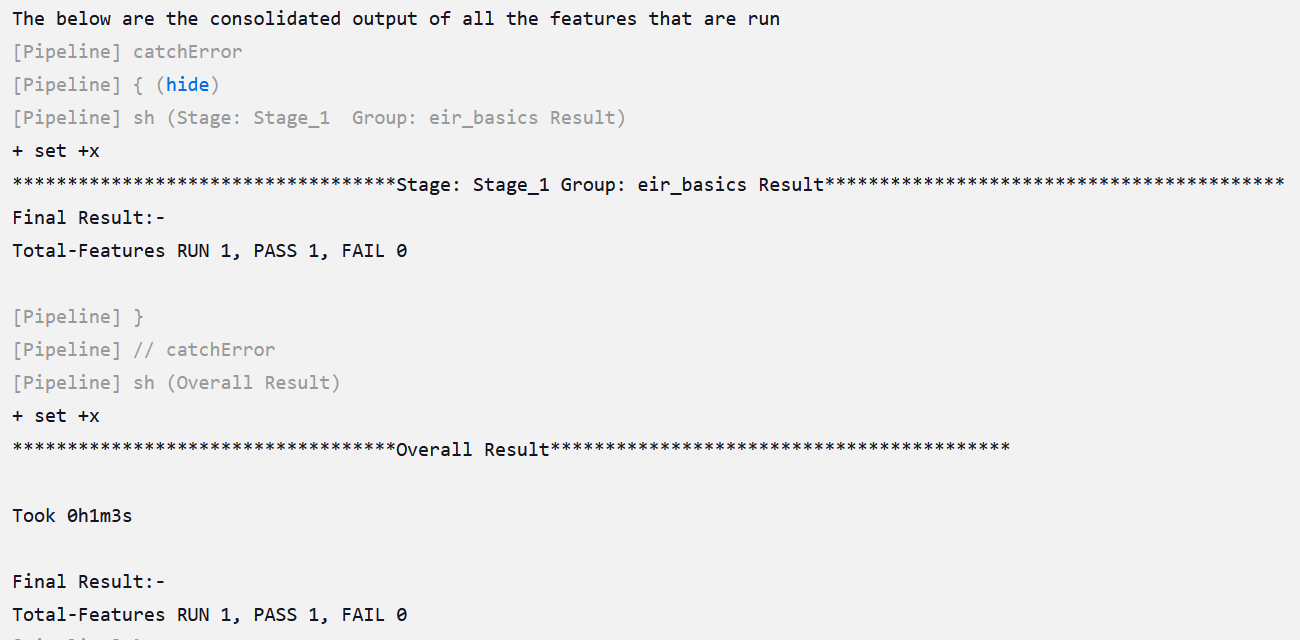

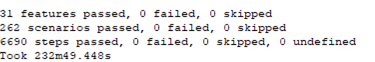

- Click Build and select Console Output to view the test results. The following is a sample test result output:

Figure 4-8 Sample: Test Result Output in Console

Note:

For more details on the consolidated test report, see Managing Final Summary Report, Build Color, and Application Log.

Queuing Jenkins Jobs

Using this feature, you can queue a second job even when the current job is still running. The second job can be triggered either from the same pipeline or from a different one.

Table 4-1 Queuing Jenkins Jobs

| Concurrent Builds | New Features: Current Build | New Features: New Build | Regression: Current Build | Regression: New Build | Result |
|---|---|---|---|---|---|
| Enabled | Running | Triggered | NA | NA | New-Build of New-Features is added to queue. |
| Enabled | Running | NA | NA | Triggered | New-Build of Regression is added to queue. |
| Disabled | NA | NA | Running | Triggered | New-Build of Regression is added to queue. |
| Disabled | NA | Triggered | Running | NA | New-Build of New-Features is added to queue. |

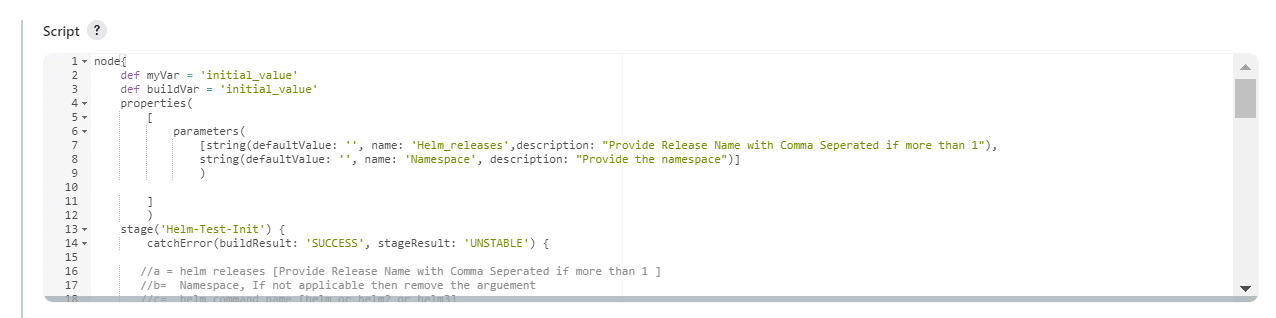

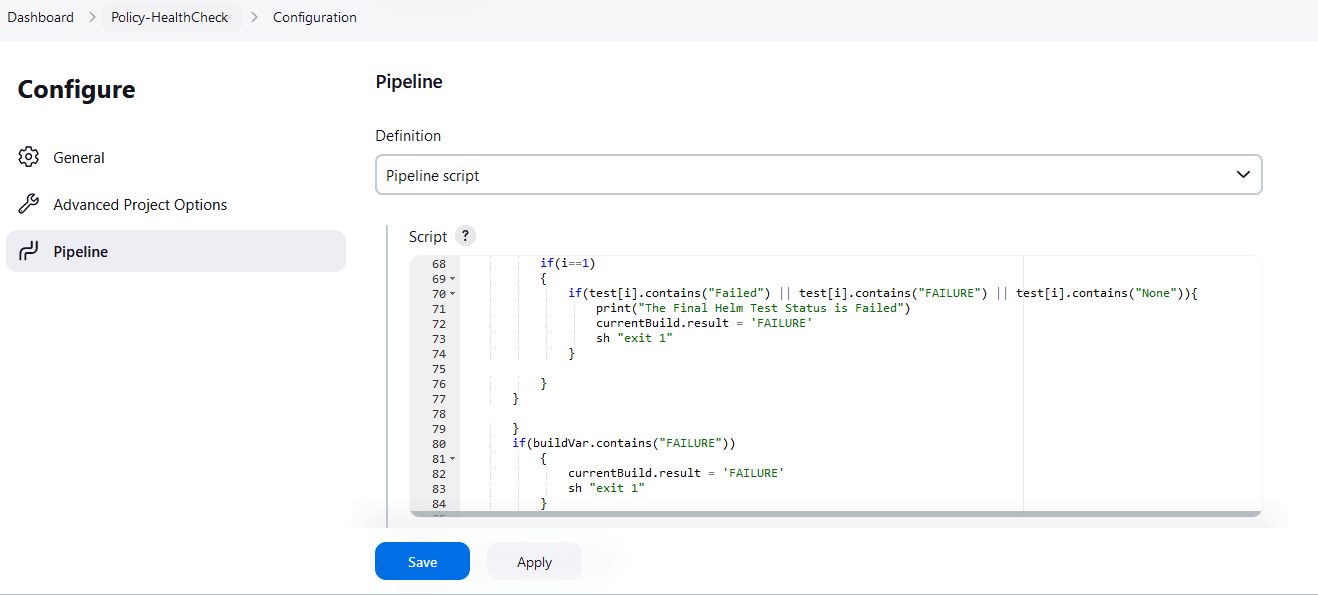

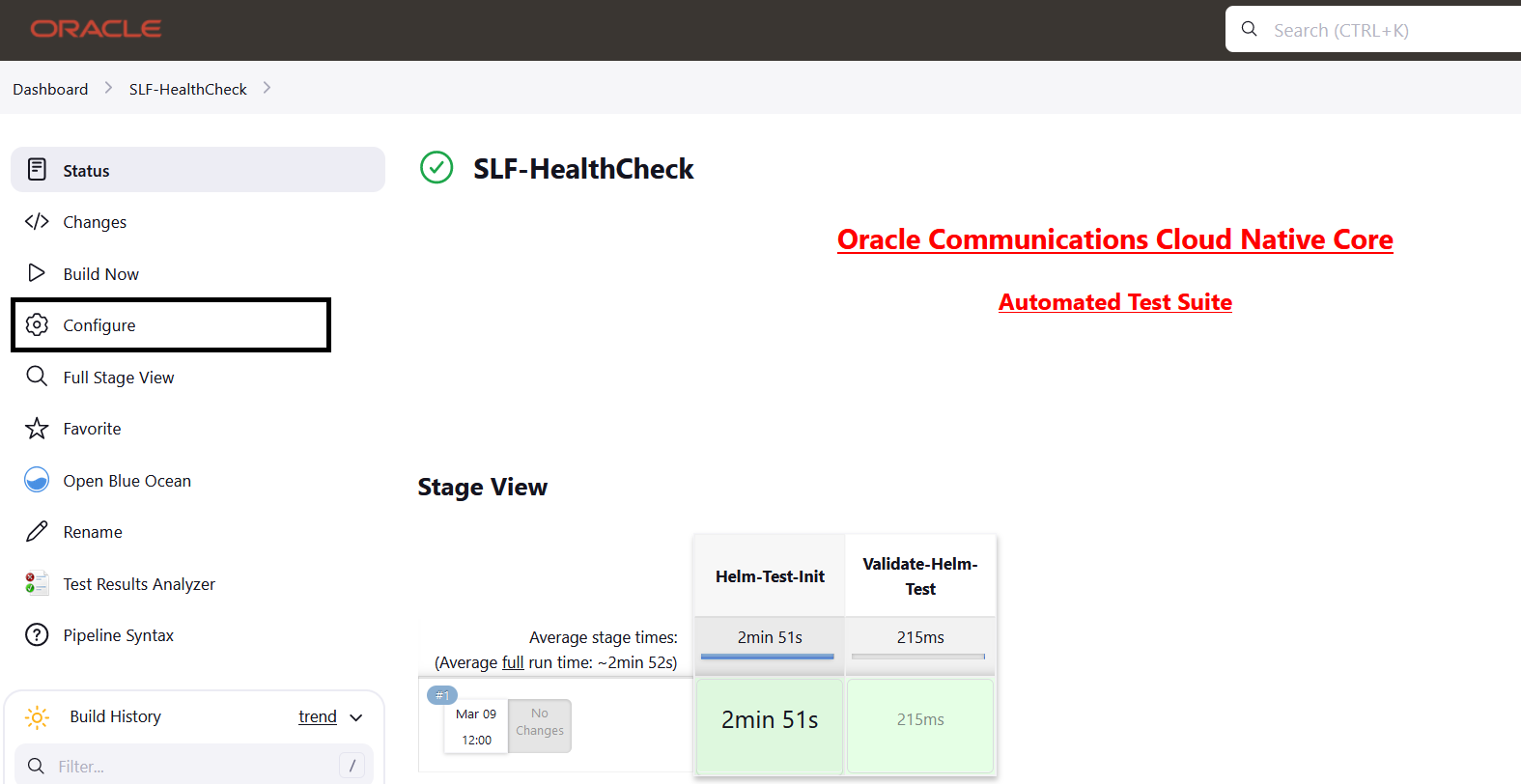

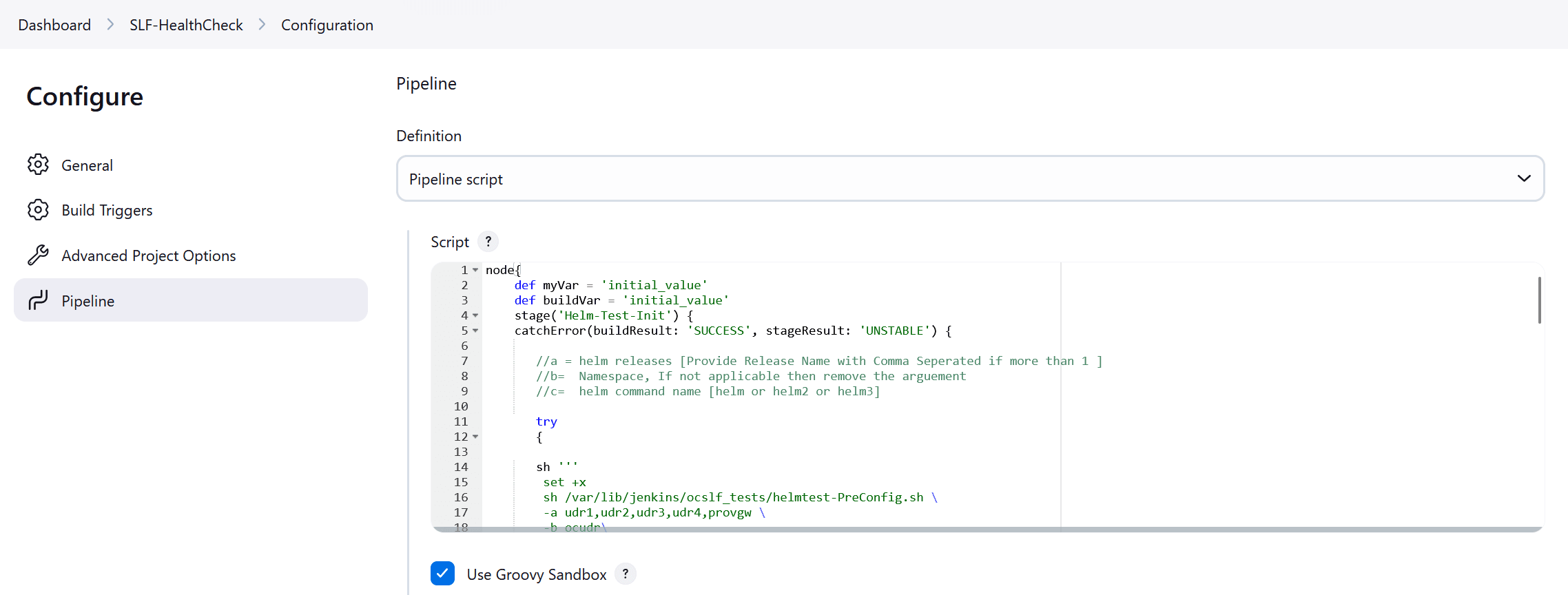

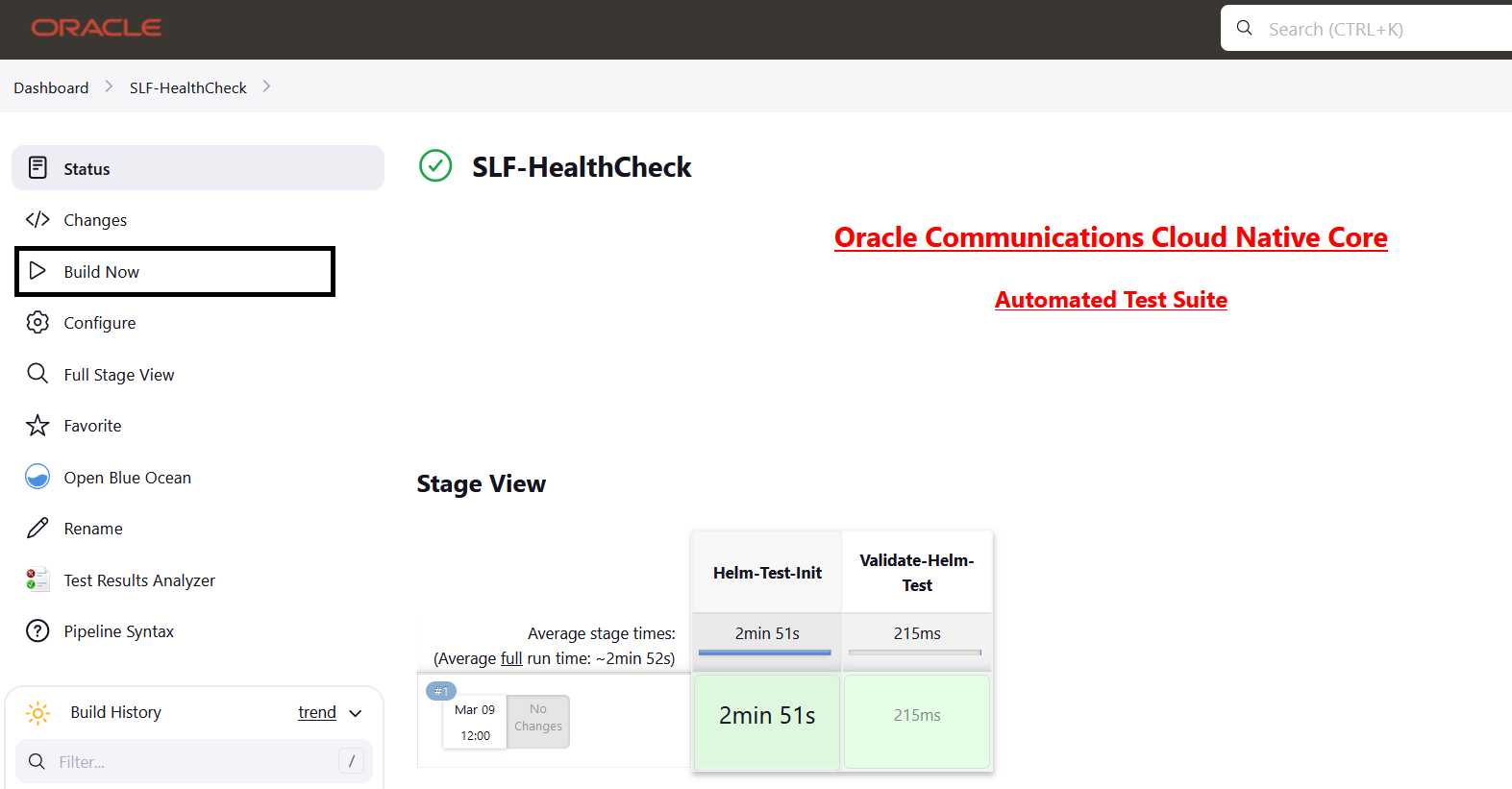

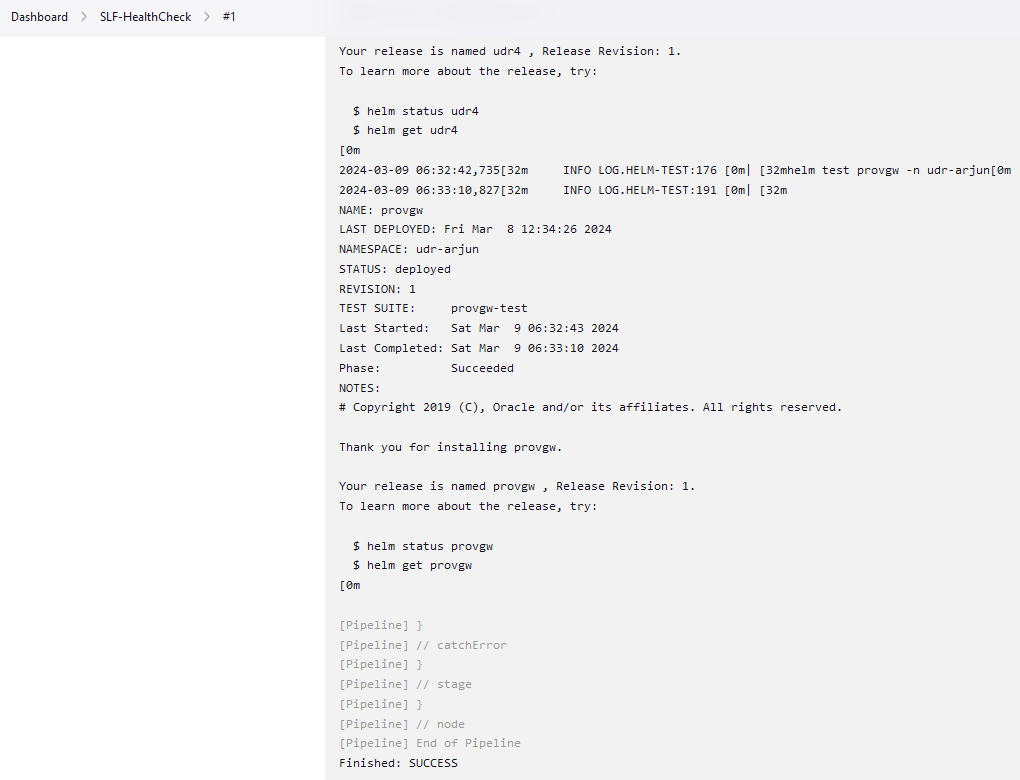

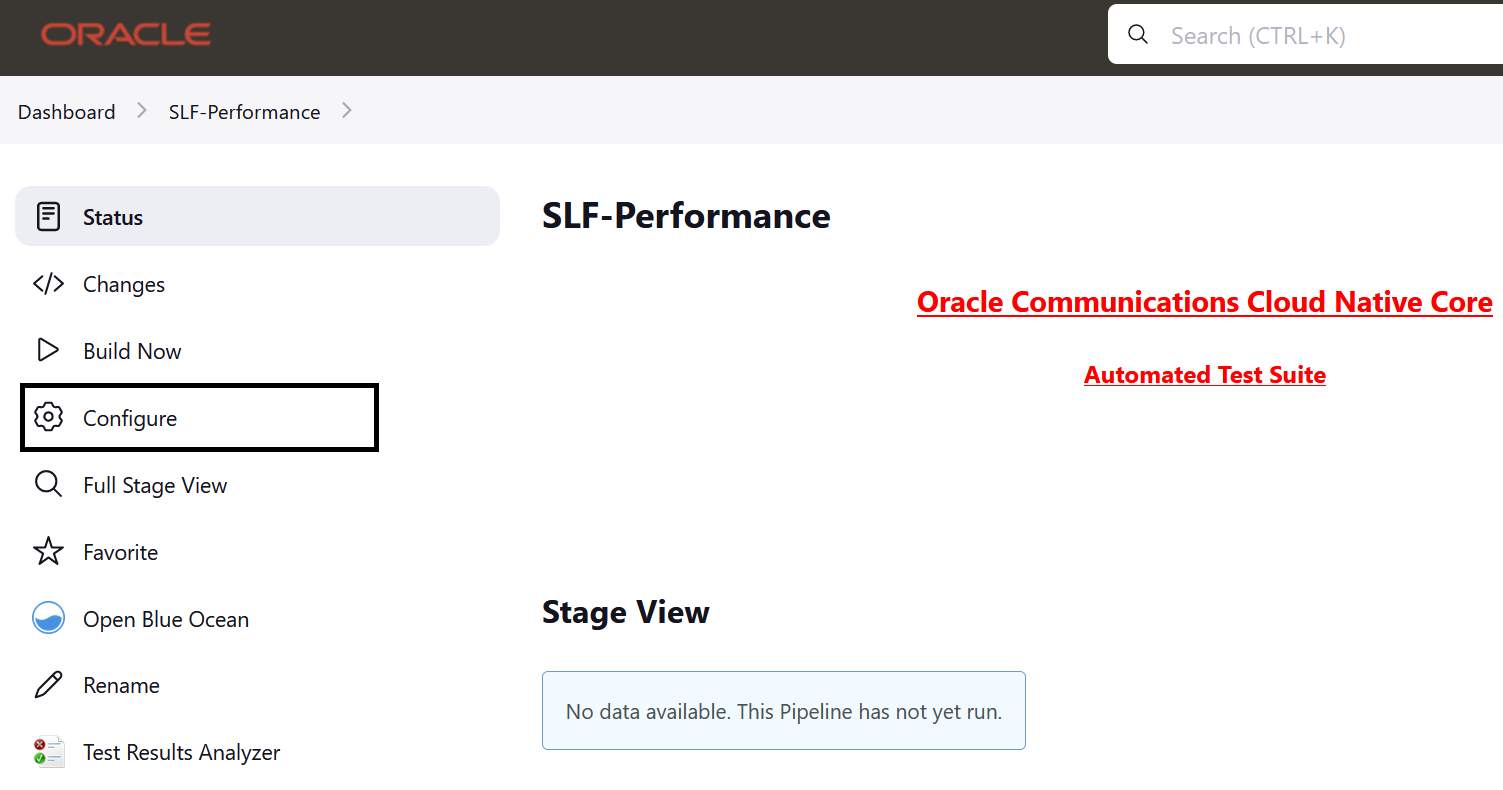

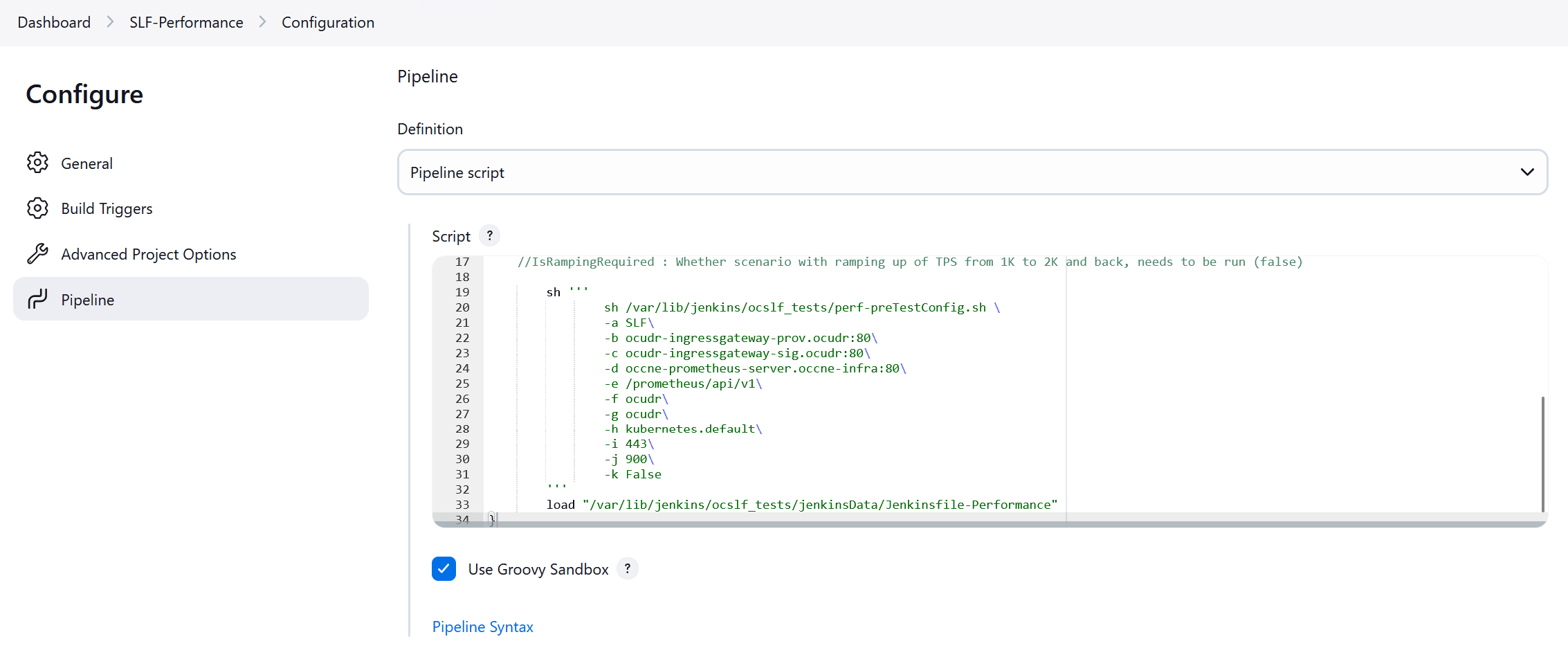

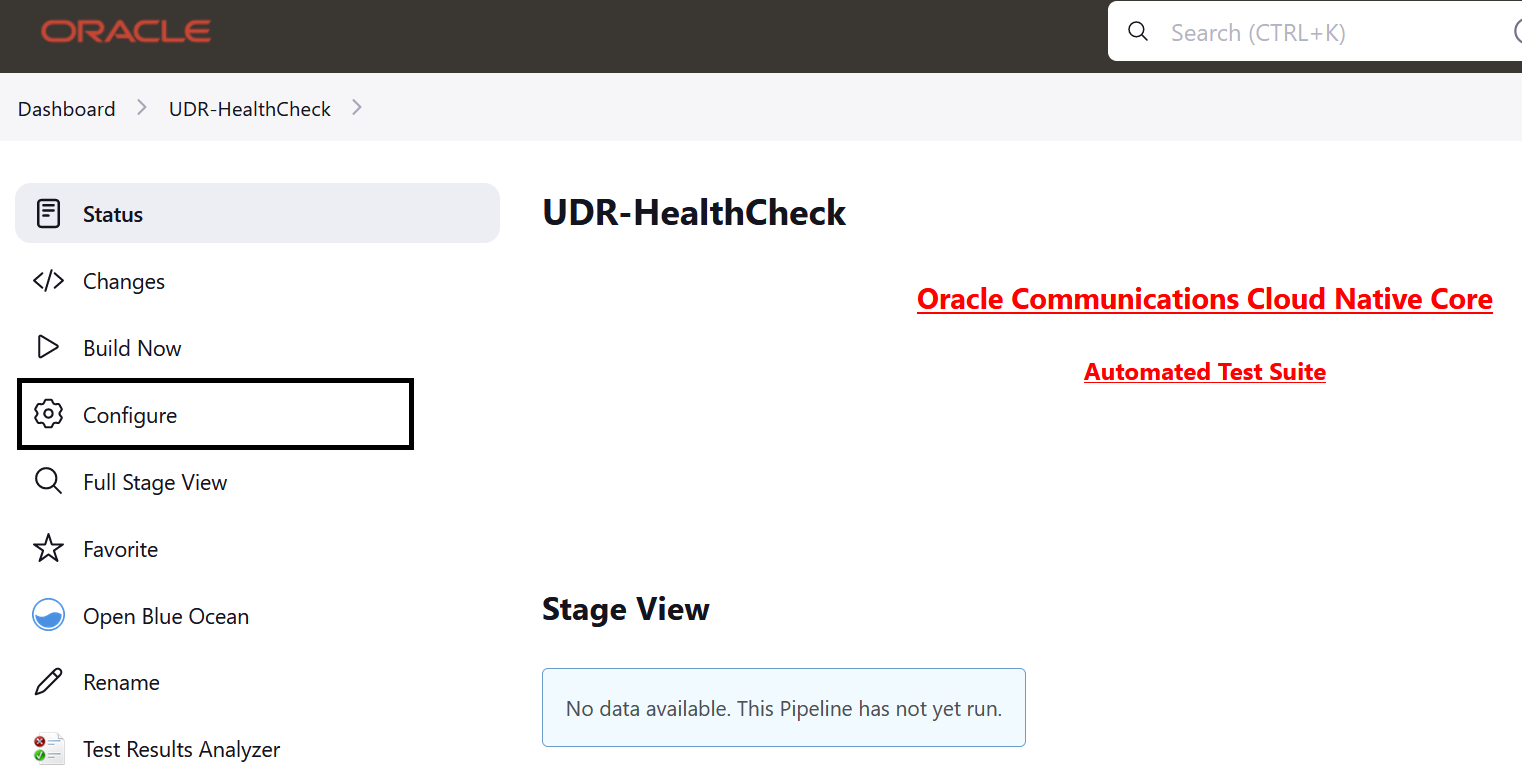

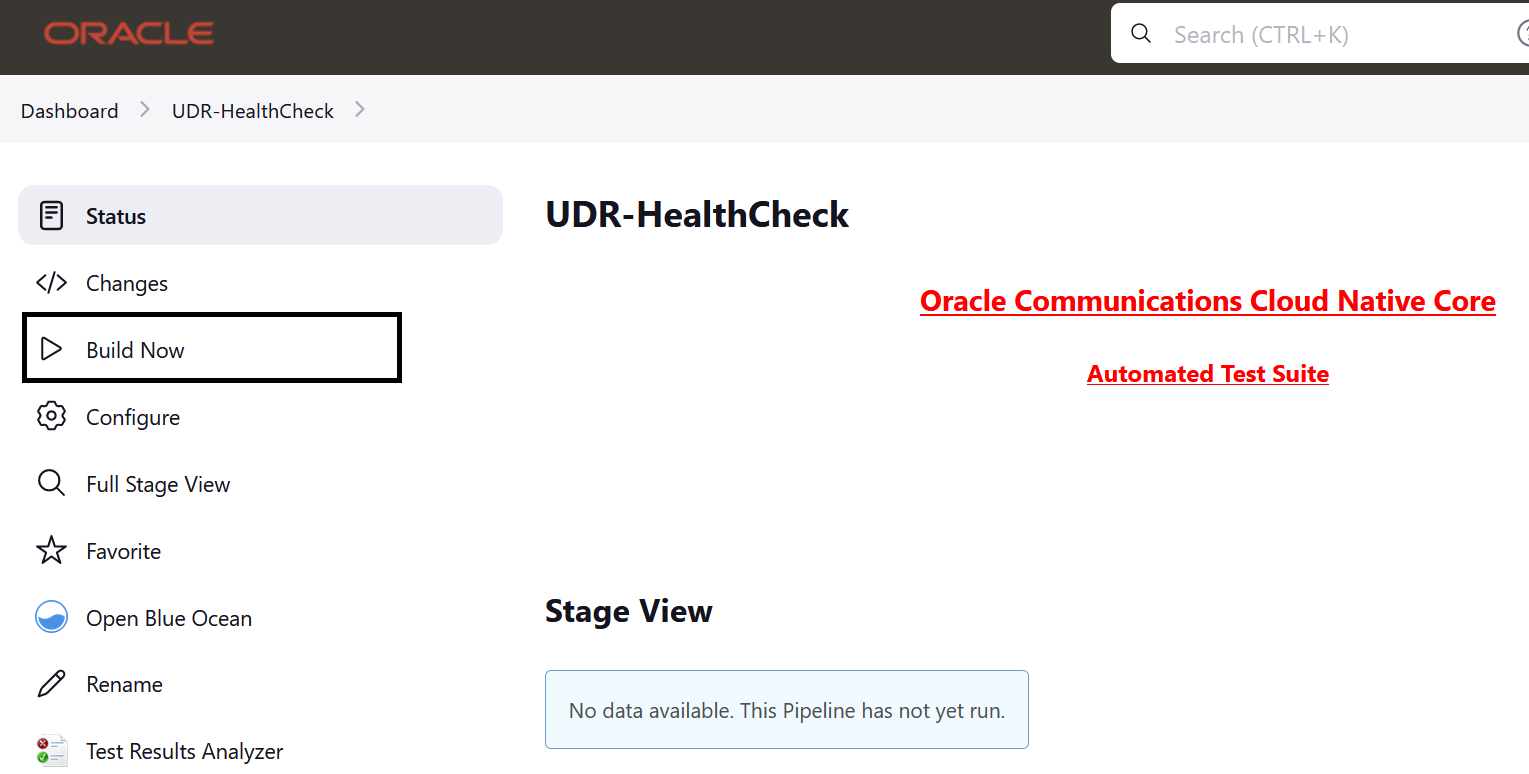

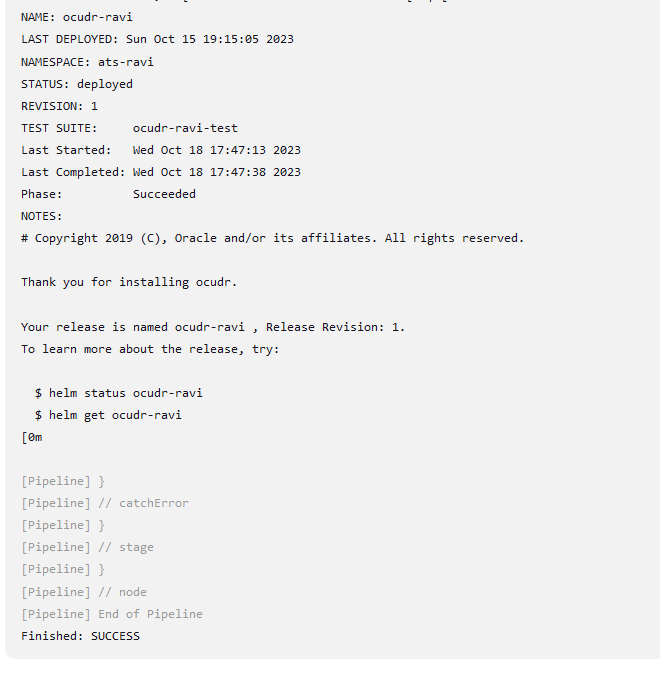

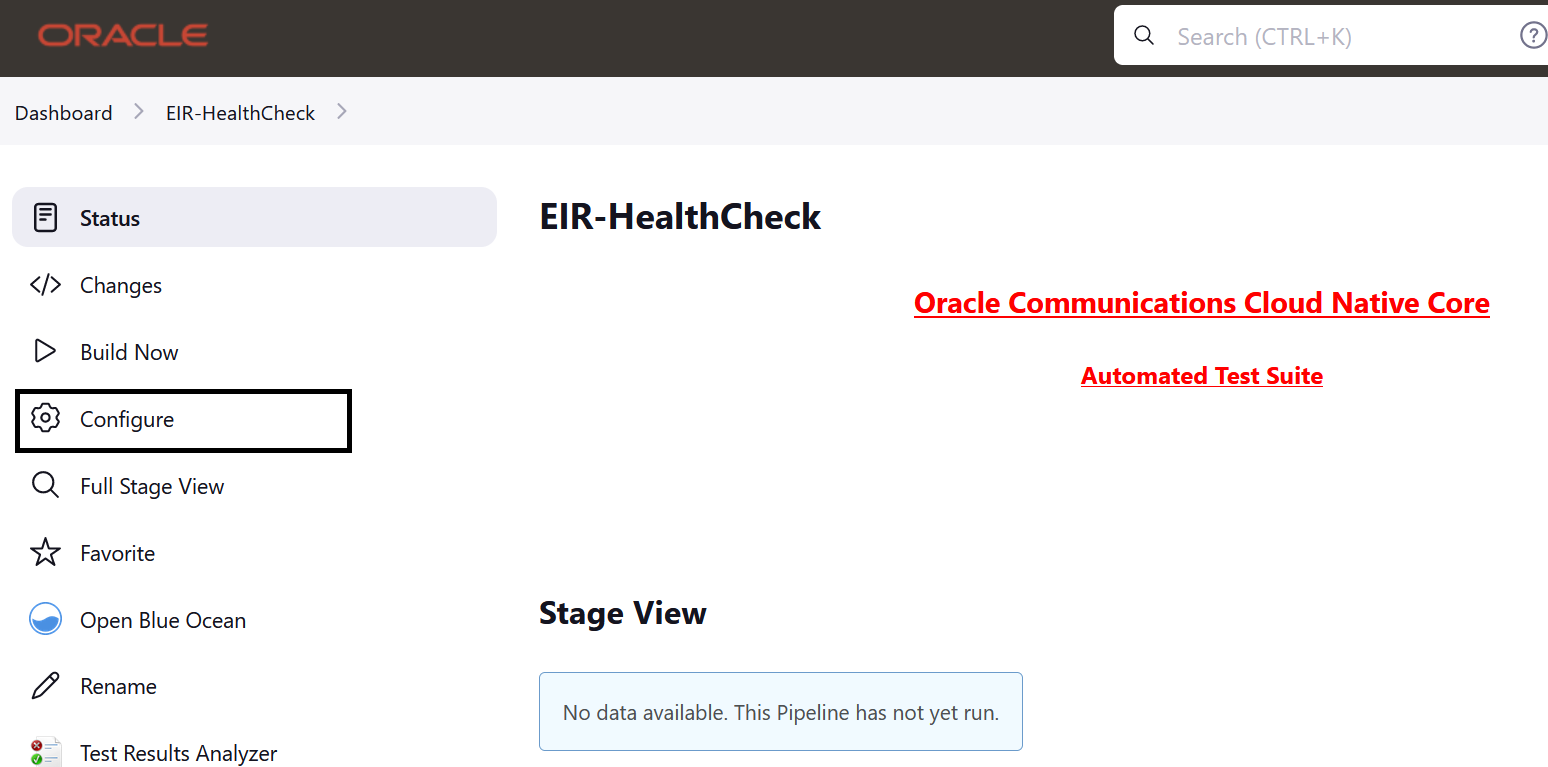

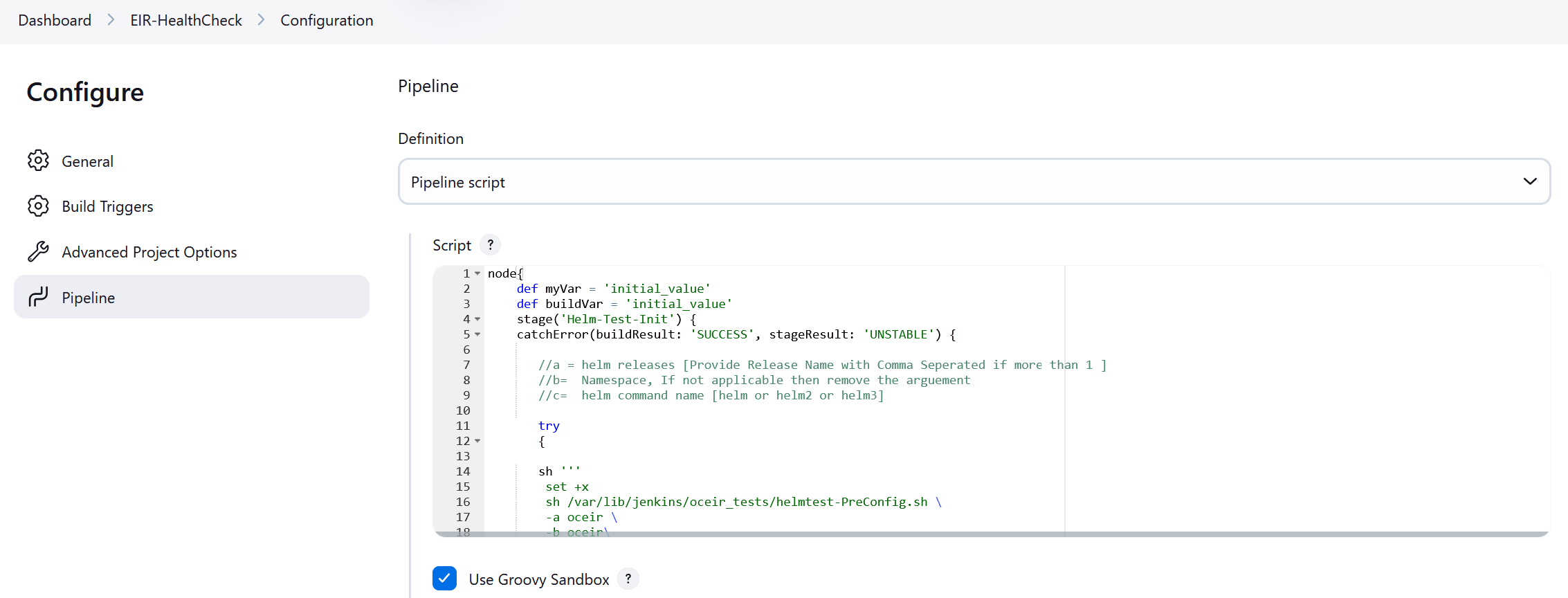

4.1.4 Running BSF_HealthCheck Pipeline

This is a pre-configured pipeline where ATS performs a test probe with the SUT (system under test). It triggers helm test and provides the results in the Jenkins console logs.

Run the BSF_HealthCheck pipeline to check whether all BSF pods are up and running. If they are, the pipeline reports the status as successful. If any pod is down for any reason, the pipeline fails.

- Click BSF_HealthCheck in the Name column.

- Click Configure in the left navigation pane.

- When you scroll down, the General tab becomes active. Make sure that the screen loads completely.

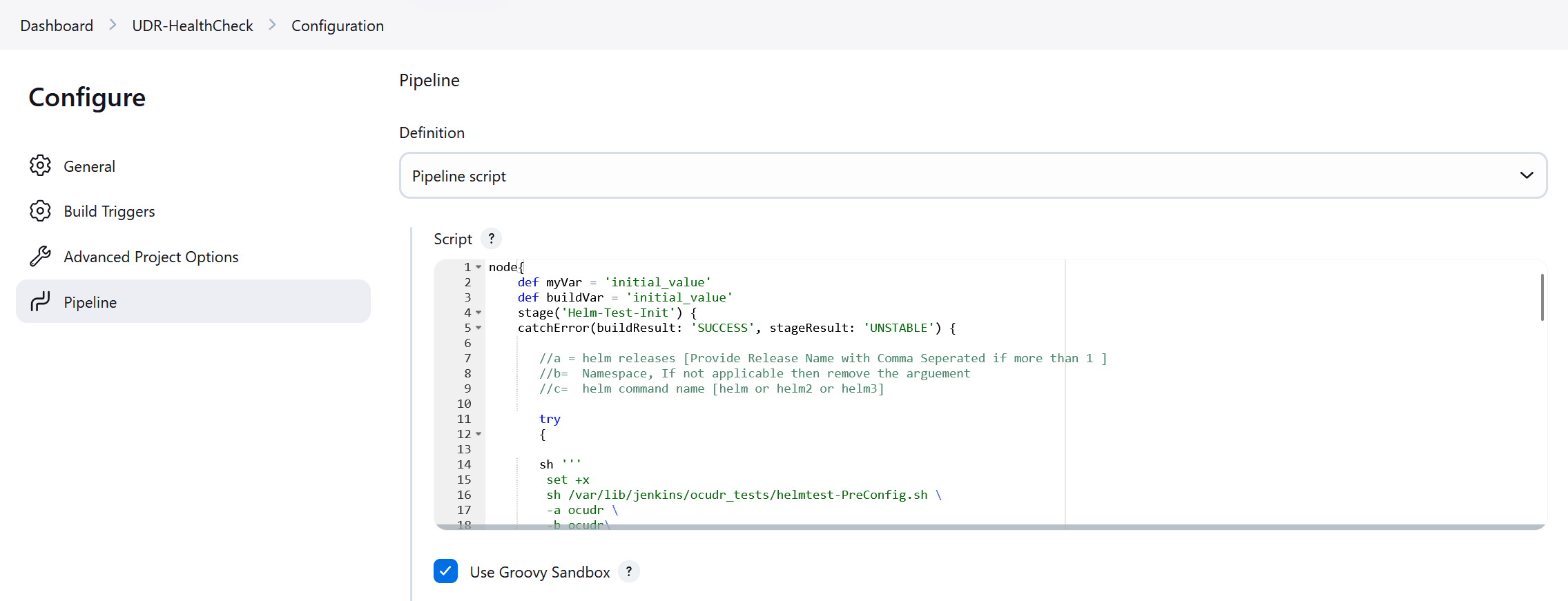

- Continue scrolling down until the Pipeline tab becomes active. The following screen capture shows the Pipeline script:

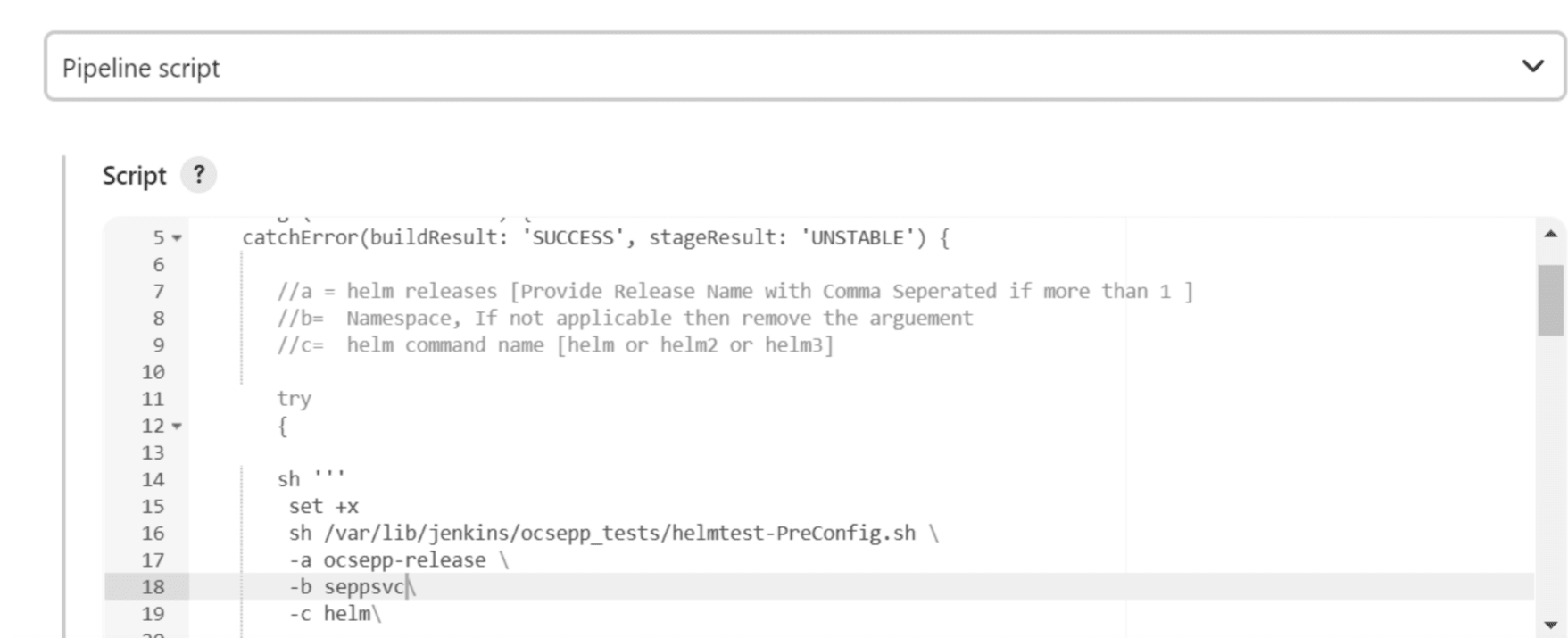

Figure 4-9 Helm Test Script

- In the Script area under the Pipeline section, you can customize the values for the following parameters:

Attention:

Do not modify values of parameters other than the ones described in this section.

- a: Change this parameter to update the helm release name where BSF is deployed in your bastion.

- b: Change this parameter to update the namespace where BSF is deployed in your bastion.

- Click Save to update the values.

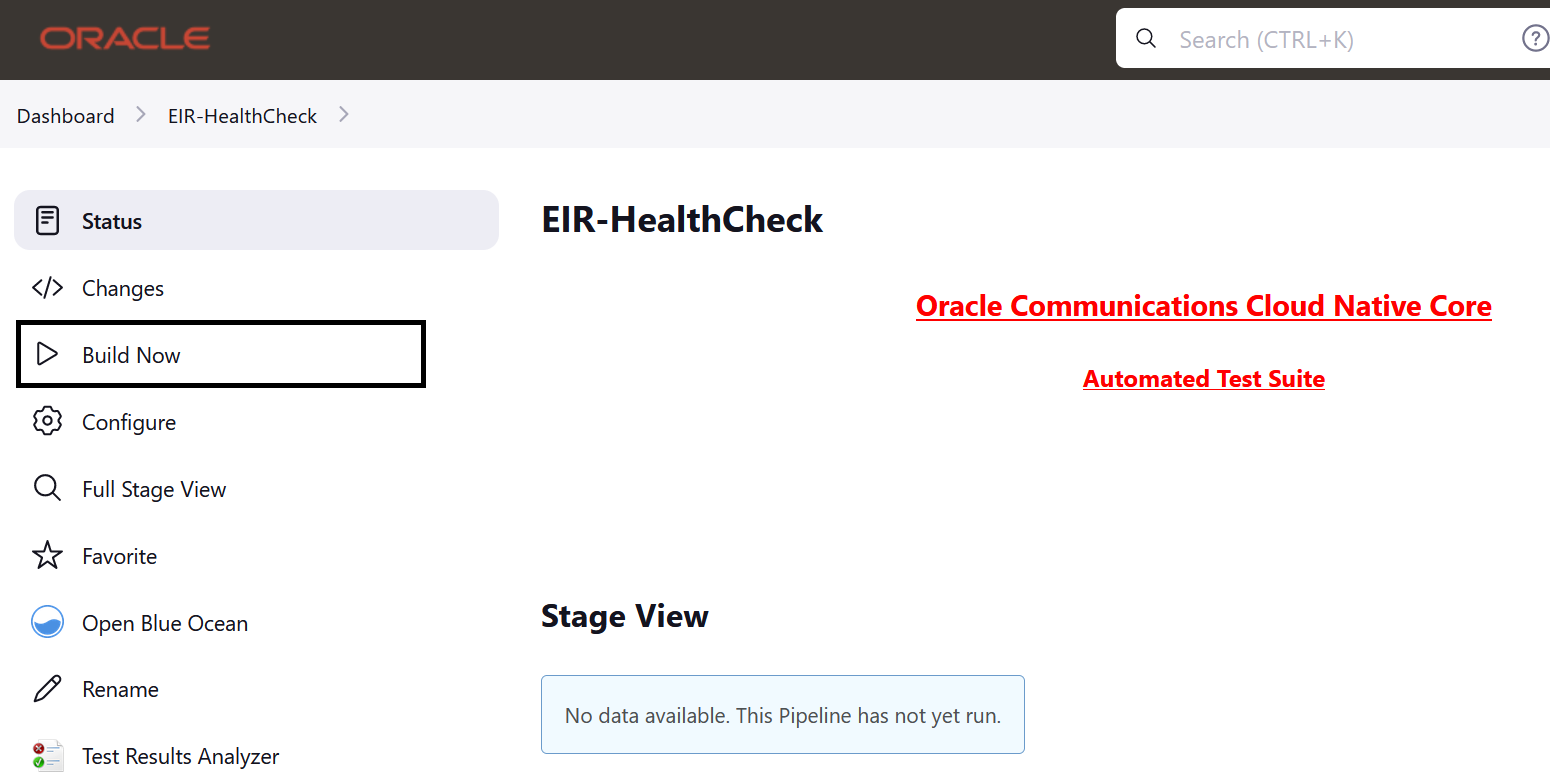

Running Helm Test

To run BSF test cases, click Build Now.

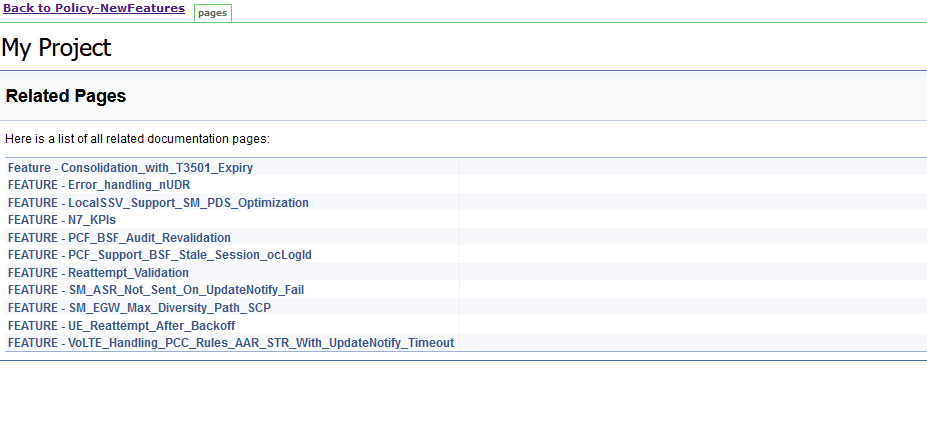

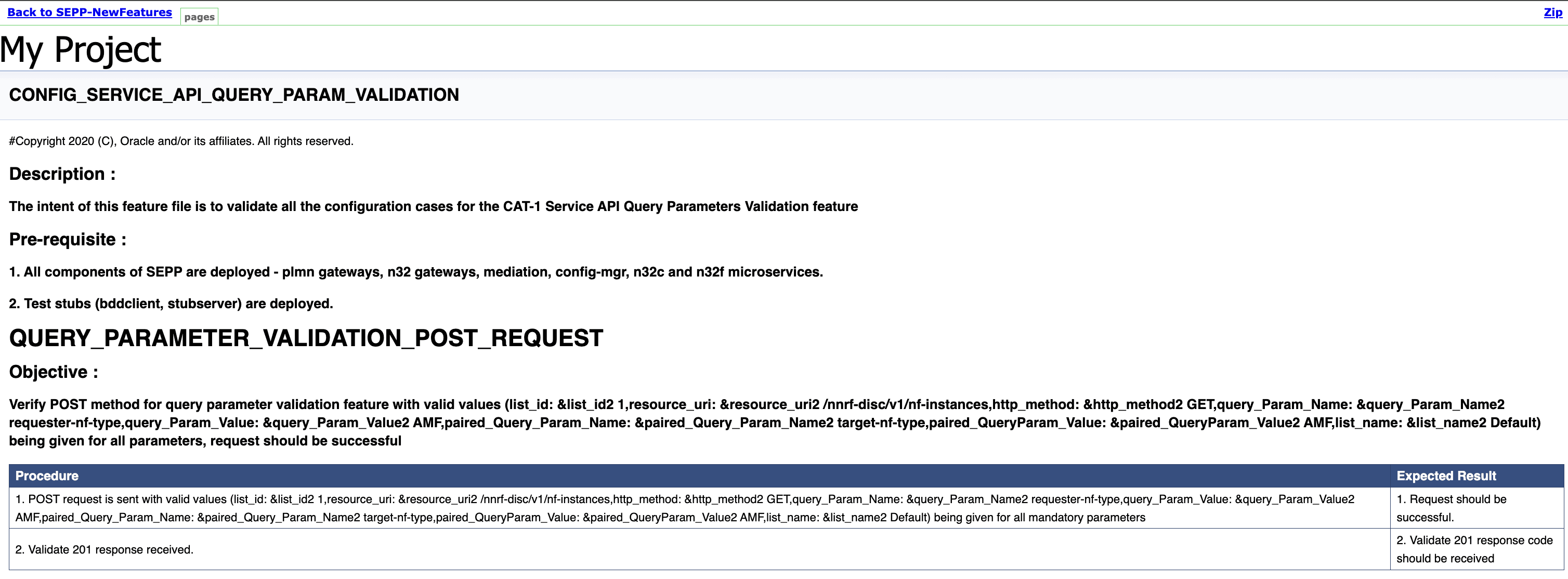

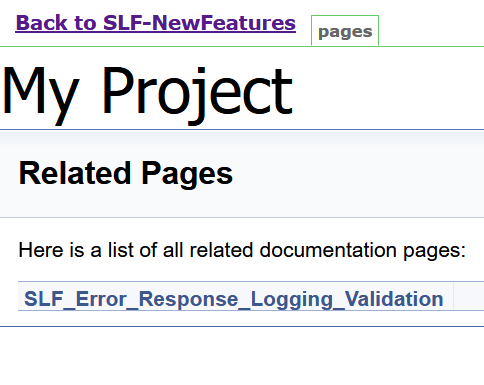

4.1.5 BSF_NewFeatures Documentation

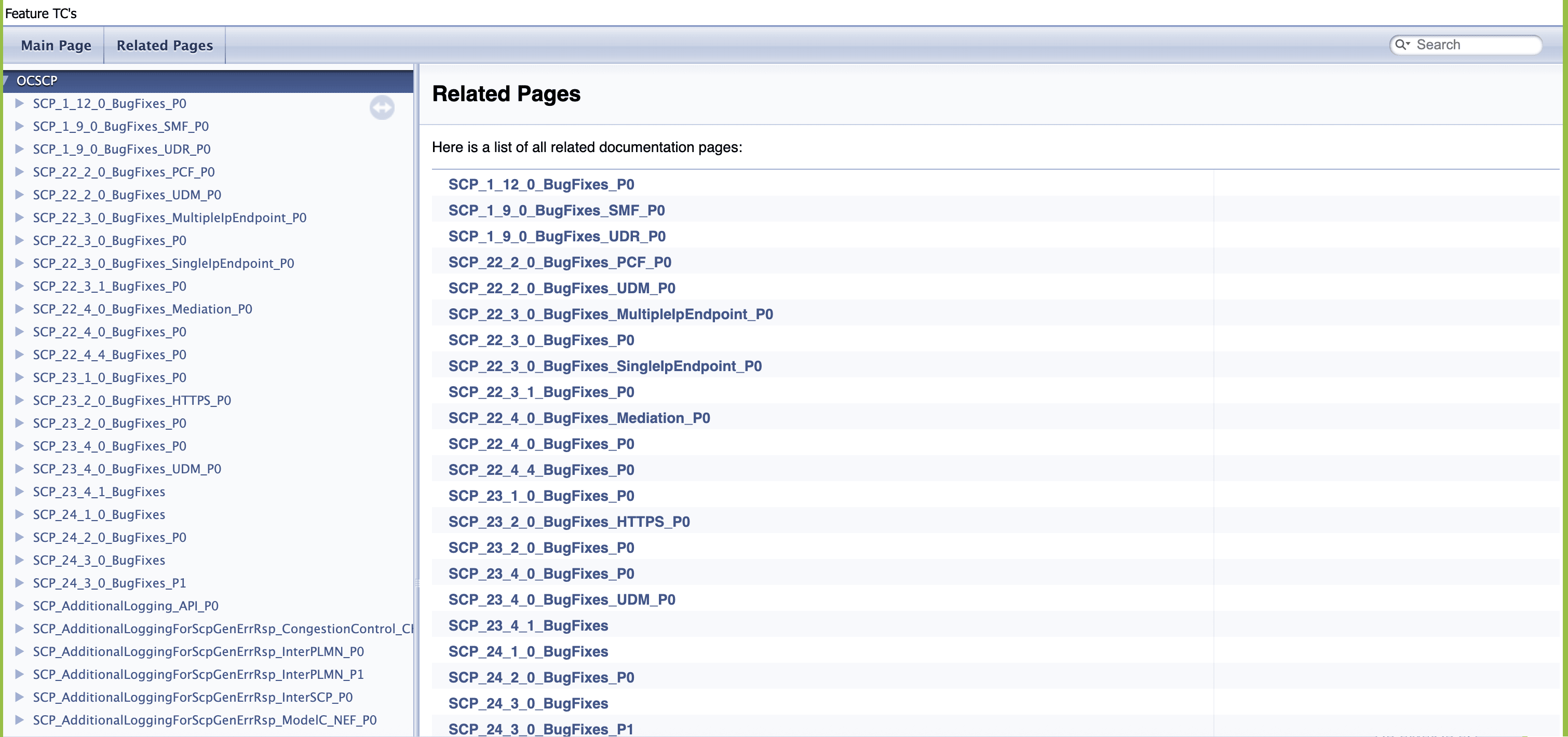

Figure 4-10 BSF_NewFeatures Feature List Documentation

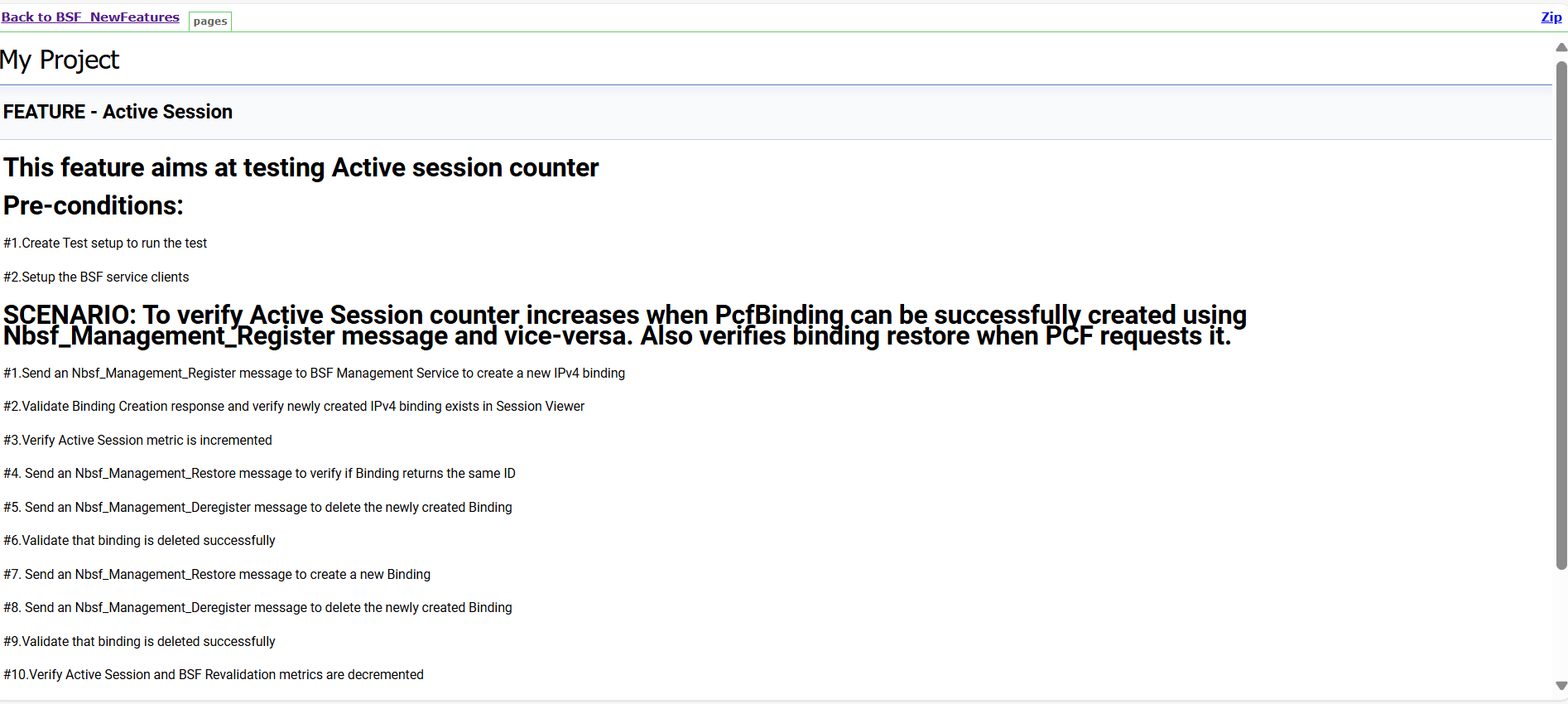

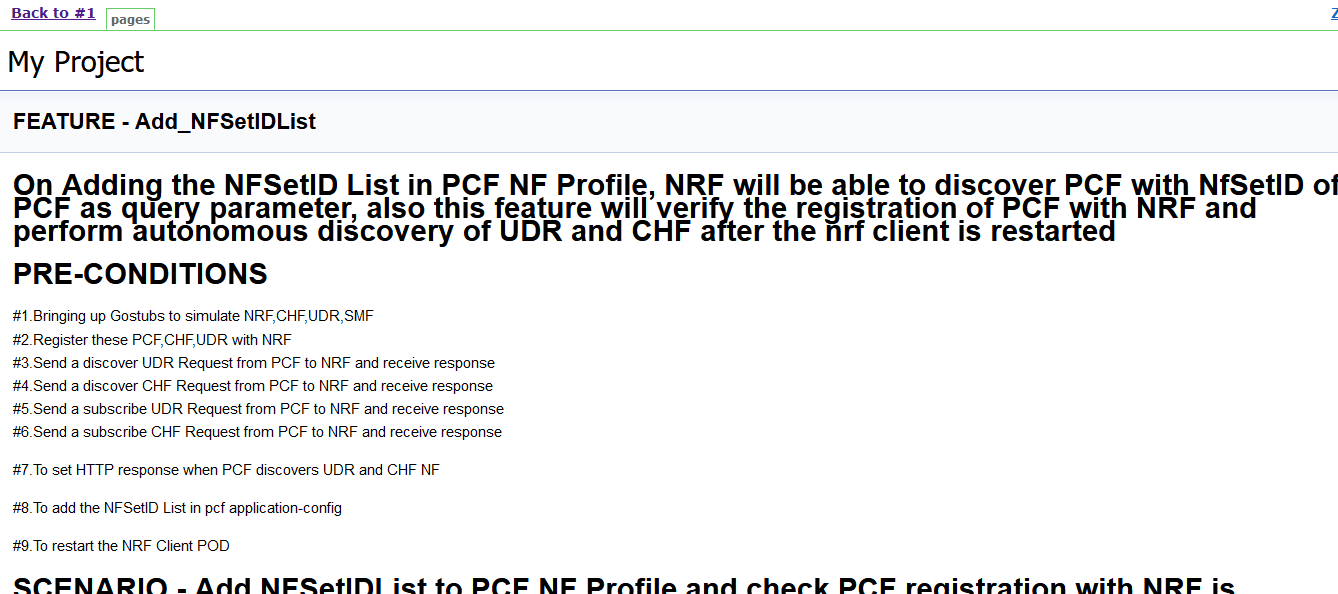

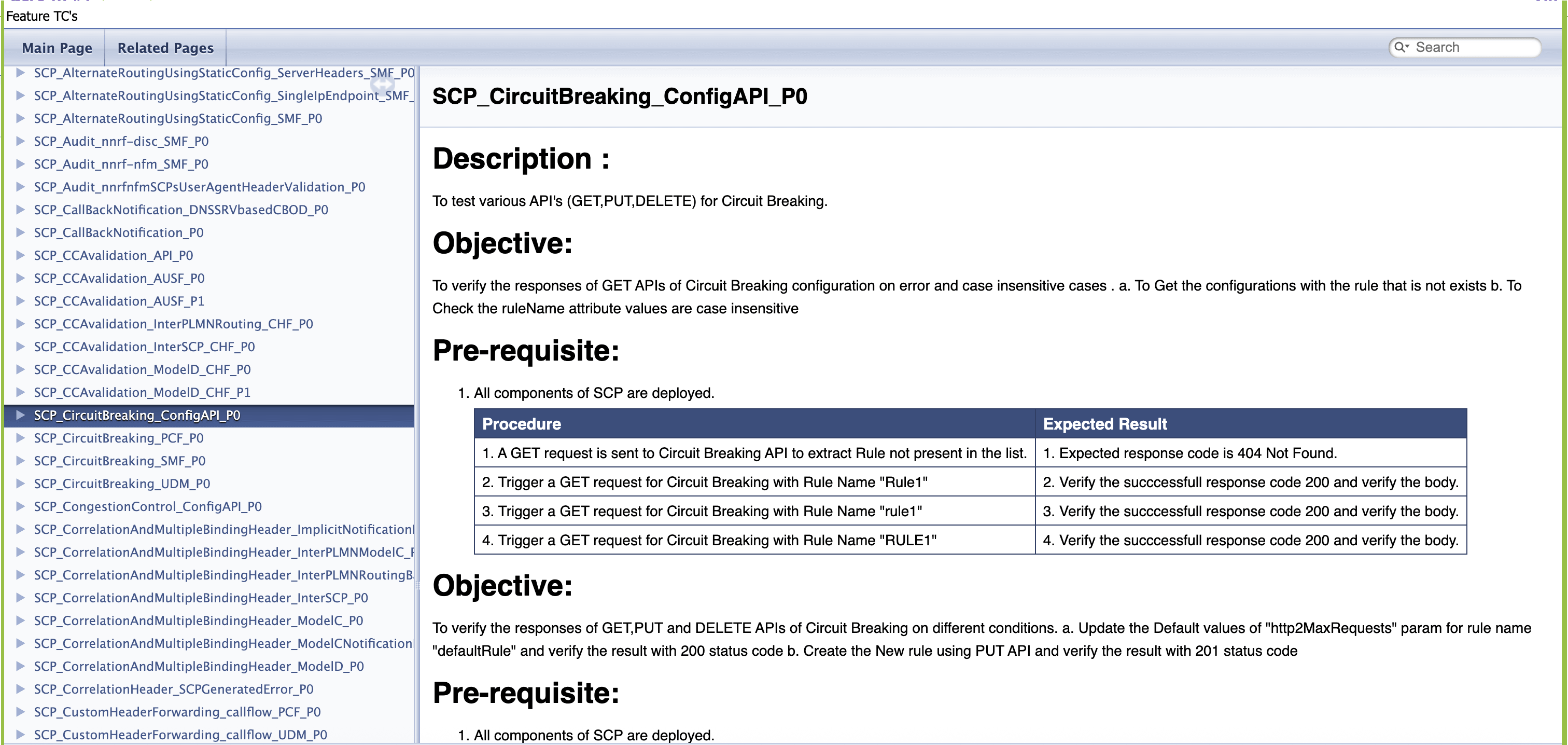

Click any functionality to view its test cases and scenarios of each test case. For example, when you click FEATURE - BSF_Error_Response_Enhancements, the following test description appears:

Figure 4-11 Test Cases and Scenarios of Feature - BSF_Error_Response_Enhancements

Based on the functionalities covered under Documentation, the Build Requires Parameters screen displays test cases. To navigate back to the BSF_NewFeatures pipeline screen, click the Back to BSF_NewFeatures link in the top-left corner of the screen.

Test Result Analyzer

Using the Test Result Analyzer plug-in available in ATS, users can view consolidated and detailed reports. For more information, see the Test Results Analyzer section.

Test Case Mapping to Features and Display Total Counts

With this feature, users can view Total Count of Features, TestCases/Scenarios and TestCase mapping to each Feature of BSF in ATS View. For more information, see Support for Test Case Mapping and Count section.

Stub Predefined_priming Support

Stub Predefined_priming configuration in ATS enables ATS to respond with the payload message instead of the default message when the prime configuration does not match the feature level priming or when the stub is not primed.

Without Predefined_priming:

- Stub checks against the feature level prime configuration.

- If a match is found in the feature level prime, the stub replies with the payload message.

- If no match is found, the stub replies with the default response:

```
stub_log: No match found in prime configuration for sending the response. Sending default - 200
ATS log: {default_response}
```

With Predefined_priming:

- Stub checks against the feature level prime configuration.

- If a match is found in feature level prime, feature level prime is used for responding to the request.

- If a match is not found in the feature level prime, but found in the pre-configured prime, pre-configured prime is used for responding to the request.

- If a match is found in both the pre-configured prime and the feature level prime, the feature level prime configuration is given priority and is used for responding to the request.

- If no match is found in either the pre-configured prime or the feature level prime, the stub sends a default response.
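The precedence rules above can be summarized as a small lookup function. This Python sketch is illustrative only. The `(method, path)` request key, the response dictionaries, and the function name are assumptions, not the stub's actual data model, and `{default_response}` is kept as a placeholder.

```python
from typing import Dict, Optional, Tuple

# Hypothetical model: a prime maps a (method, path) request key to a response.
RequestKey = Tuple[str, str]
DEFAULT_RESPONSE = {"status": 200, "body": "{default_response}"}


def resolve_response(
    request: RequestKey,
    feature_primes: Dict[RequestKey, dict],
    predefined_primes: Optional[Dict[RequestKey, dict]] = None,
) -> dict:
    """Pick a stub response using the feature > predefined > default order."""
    # 1. Feature level priming wins whenever it matches.
    if request in feature_primes:
        return feature_primes[request]
    # 2. Otherwise fall back to Predefined_priming, if it is configured.
    if predefined_primes and request in predefined_primes:
        return predefined_primes[request]
    # 3. No match anywhere: send the default 200 response.
    return DEFAULT_RESPONSE
```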

4.1.6 Running BSF Regression Pipeline

This section describes how to run test cases for Binding Support Function (BSF) Regression pipeline.

The BSF_Regression pipeline is a pre-configured pipeline where all the test cases of previous releases are available. For example, for BSF 25.1.200, this pipeline has all the test cases released till BSF 25.1.200.

- Click BSF_Regression in the Name column.

- Click Build with Parameters in the left navigation pane.

- Copy the required test cases that are available in the BSF folder and place them appropriately within the custom folder for BSF_Regression.

- Reload the page to view the test cases available in the custom Regression folder.

- Click Build.

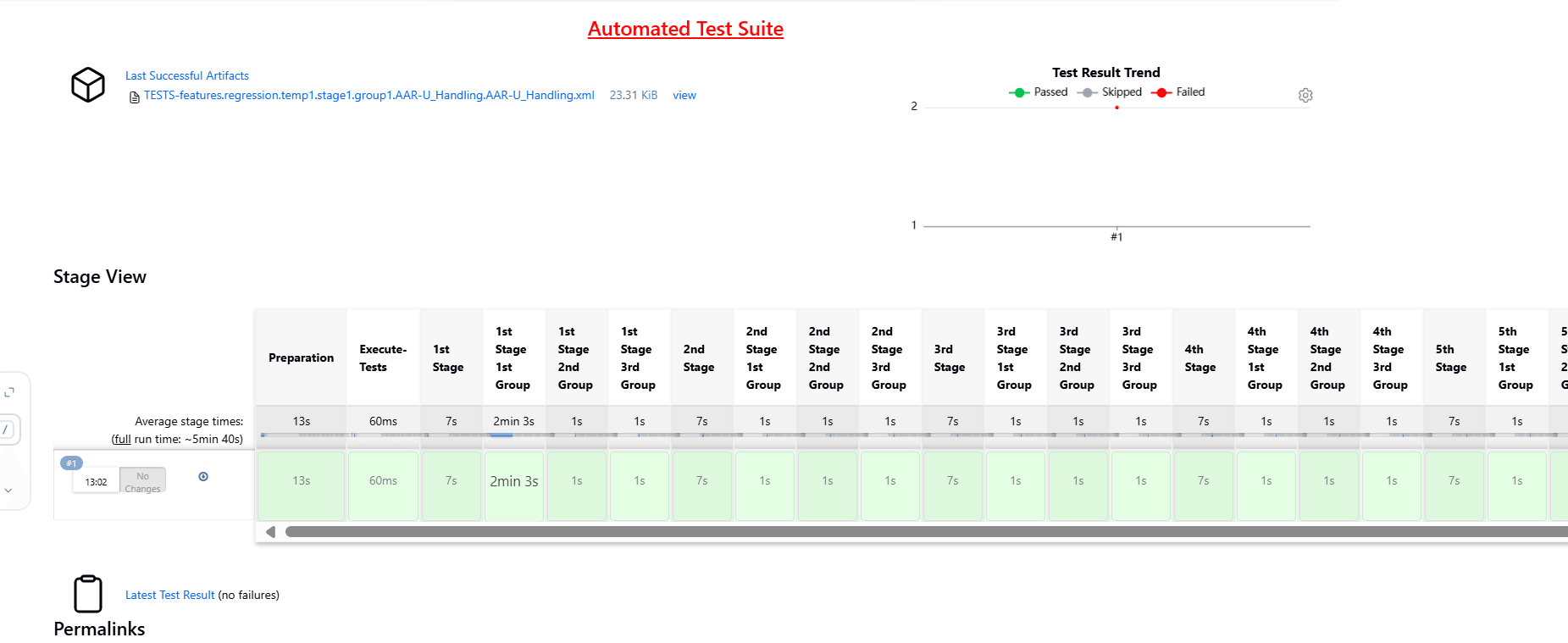

Figure 4-12 BSF_Regression Pipeline

Note:

The Regression pipeline does not have any sanity option. However, you must perform all the steps described for the BSF_NewFeatures pipeline. Ensure that the pipeline script is configured according to the environment variables.

4.1.7 BSF_Regression Documentation

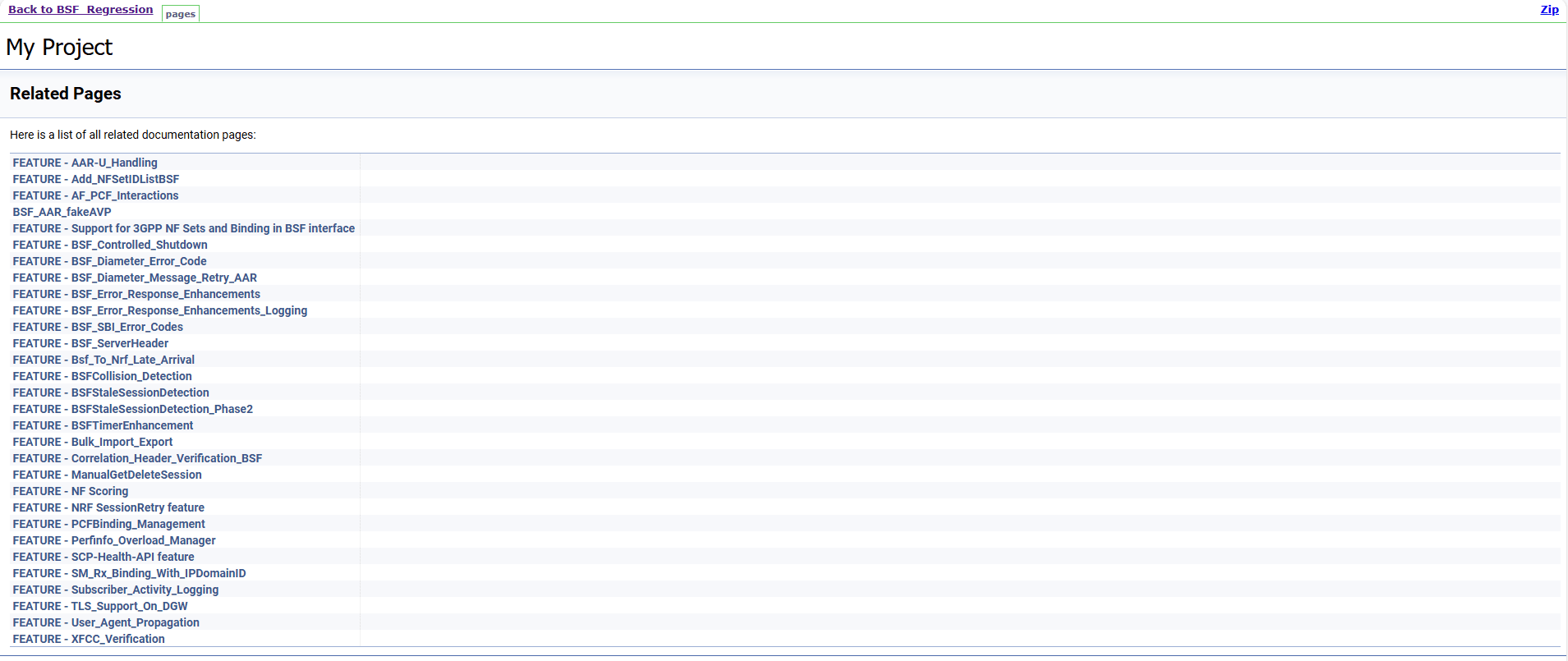

This section describes the documentation for BSF_Regression pipeline.

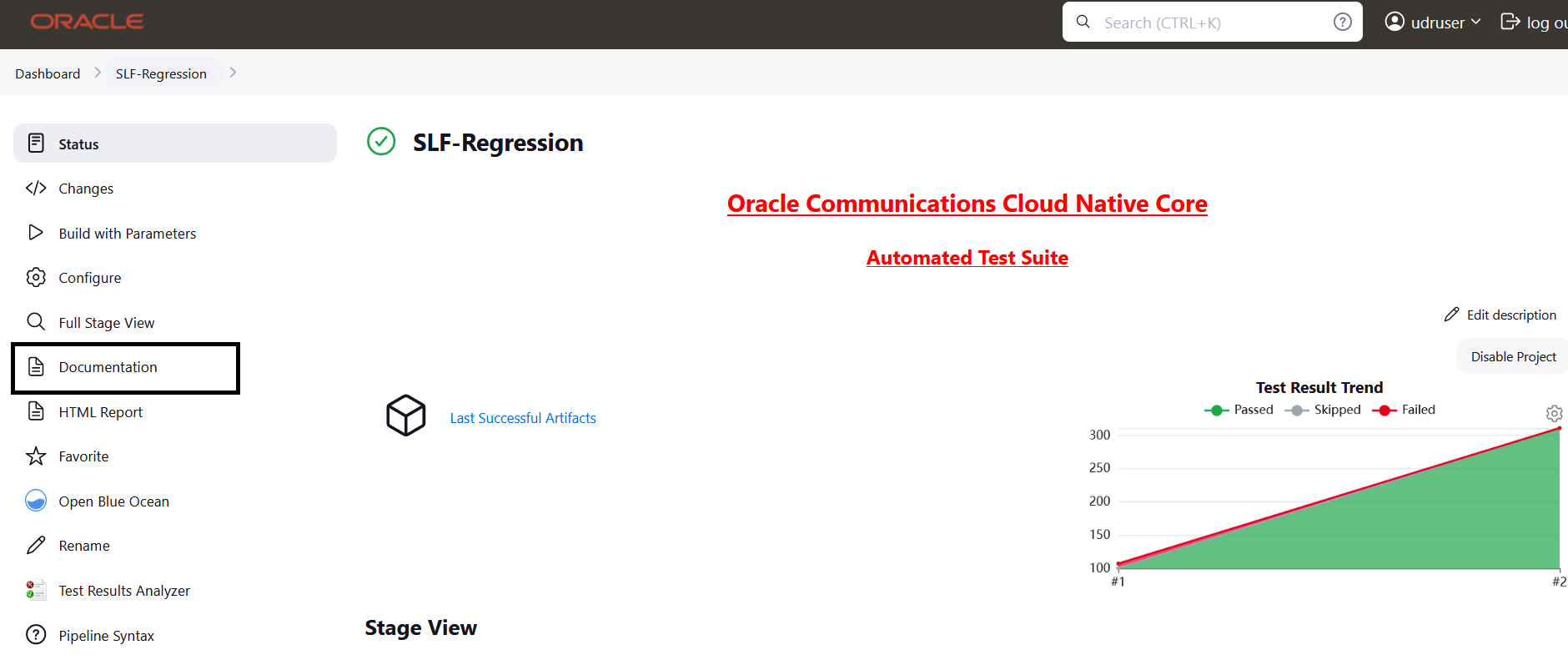

To view the documentation for any of the BSF features, on the ATS home page, click BSF_Regression. Then, click Documentation in the left navigation pane.

This page shows features of only those test cases that are released in previous releases.

Figure 4-13 BSF_Regression Features Documentation

4.2 Running NRF Test Cases using ATS

4.2.1 Prerequisites

To run NRF test cases using NRF ATS 25.1.200, ensure that the following prerequisites are fulfilled.

- For running three-site georedundancy test cases, NRF 25.1.200 should be deployed on three sites with DB replication enabled. Georedundancy feature must be enabled on all the NRF sites before running these test cases.

- For running two-site georedundancy test cases, NRF 25.1.200 should be deployed on two sites with DB replication enabled. These test cases are executed separately because they require two different NRFs. They can also be executed on a three-site georedundancy setup. Georedundancy feature must be enabled on all the NRF sites before running these test cases.

- For running NRF-Growth test cases, two standalone NRF 25.1.200 instances should be deployed. These test cases are executed separately because they require two different NRFs.

- All the ATS pipelines other than georedundancy, three-site georedundancy, and NRF Growth must be executed with only one NRF deployment (standalone NRF).

- The following NRF database tables should not have any entries:

- nrfApplicationDB.NfInstances

- nrfApplicationDB.NfStatusMonitor

- nrfApplicationDB.NfSubscriptions

- NRF instances should be deployed with the default Helm and REST configurations, other than ocnrfHost and ocnrfPort.

- To run NF-FQDN-Authentication-Feature test cases, deploy NRF and NRF ATS, both with NF Authentication feature changes.

- All microservices of NRF must be up and running including Alternate route and Artisan microservice.

- For running Alerts test cases, configure NRF Alerts on the AlertManager on the Prometheus Server. For more details on configuring alerts, see Oracle Communications Cloud Native Core, Network Repository Function Installation, Upgrade, and Fault Recovery Guide.

- Before deploying NRF, user should create RSA and ECDSA certificates and keys (public and private) for AccessToken microservice.

- Deploy NRF 25.1.200 with default helm configurations using helm charts to run all test cases except NF-FQDN-Authentication-Feature cases.

- Ensure that all NRF microservices, including the AccessToken microservice, are up and running. A key with the RSA algorithm and another with the ECDSA algorithm are mandatory.

- Copy the public keys (RSA and ECDSA) to the ATS pod at the /var/lib/jenkins/ocnrf_tests/public_keys location, with the names ec_public.pem and rs_public.pem respectively for ECDSA and RSA public keys. Create a copy of rs_public.pem and save it as new_public.pem in the same location.

- Create JSON files for key and certificate details and copy them to ATS at /var/lib/jenkins/ocnrf_tests/ location. Refer to KID feature specific Prerequisite.

- Deploy ATS using helm charts.

- For NRF 25.1.200, deploy five stub servers for running Roaming, SLF, Forwarding, and SLF via SCP functionality test cases. The service name for the stub servers must be notify-stub-service, notify-stub-service02, notify-stub-service03, amf1-cluster1-net2-amf-5gc-mnc016-mcc310-3gppnetwork-org, and slf-stub-service01.

- The slf-stub-service01 stub must be deployed with service port as 80. For more information, see Installing ATS for NRF.

- Replace the existing content with the following content in the alternate-route section of the ocnrf_custom_values.yaml file. These configuration changes are required to accommodate alternate routing test cases based on the latest configuration.

```yaml
staticVirtualFqdns:
  - name: https://abc.test.com
    alternateFqdns:
      - target: notify-stub-service03
        port: 8080
        priority: 10
        weight: 20
      - target: notify-stub-service03
        port: 8080
        priority: 20
        weight: 30
  - name: http://xyz.test.com
    alternateFqdns:
      - target: notify-stub-service03
        port: 8080
        priority: 10
        weight: 20
      - target: notify-stub-service03
        port: 8080
        priority: 20
        weight: 30
dnsSrvEnabled: false
```

- Ensure that the Prometheus service is up and running.

- Deploy ATS and the test stubs in the same namespace as NRF, because the default ATS deployment uses role binding.

- Do not initiate a job in two different pipelines at the same time.

- Do not abort a running job; this may lead to data corruption in the database.

- To get PVC support for retaining NRF ATS environment variables and pipeline console histories, follow the corresponding installation step. PVC support is disabled by default.

- If installation is done with Service Mesh, NRF must be deployed with the following annotations in the lbDeployments and nonlbDeployments sections:

```yaml
oracle.com/cnc: "true"
traffic.sidecar.istio.io/excludeInboundPorts: "9090,8095,8096,7,53"
traffic.sidecar.istio.io/excludeOutboundPorts: "9090,8095,8096,7,53"
```

where the first one is for connecting to Operations Services Overlay (OSO) for Alerts test cases, and the second one is for connecting to ATS for fetching the metrics from pods.

- If Service Mesh is enabled, create a destination rule to fetch the metrics from Prometheus. In most deployments, Prometheus is kept outside the service mesh, and a destination rule is required for communication between the TLS-enabled entity (ATS) and the non-TLS entity (Prometheus). To create the rule:

```
kubectl apply -f - <<EOF
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: prometheus-dr
  namespace: ocnrf
spec:
  host: oso-prometheus-server.ocnrf.svc.cluster.local
  trafficPolicy:
    tls:
      mode: DISABLE
EOF
```

In the above rule:
  - name indicates the name of the destination rule.
  - namespace indicates where ATS is deployed.
  - host indicates the hostname of the Prometheus server.

- If NRF is deployed with network policy enabled, create a new network policy to access the NRF-ATS Jenkins GUI before executing NRF-ATS. Following is a sample:

```
kubectl apply -f nodePort.yaml -n ocnrf
```

where nodePort.yaml contains:

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-from-node-port
spec:
  podSelector:
    matchLabels:
      app: ocats-nrf
  policyTypes:
  - Ingress
  ingress:
  - ports:
    - protocol: TCP
      port: 8080
```

- To run with CNE HA Prometheus, update the prom_type value in the product_config/global.yaml and custom_config/global.yaml files to "cne".
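To generate these manifests instead of hand-editing YAML, the following Python sketch builds the same DestinationRule and NetworkPolicy objects shown above as dictionaries and prints them as JSON, which `kubectl apply -f -` also accepts. The function names are hypothetical, and the field values are copied from the examples. The same DestinationRule helper also covers the BSF prerequisite if you pass `ocats` and the `ocbsf` Prometheus host.

```python
import json
from typing import Dict


def prometheus_destination_rule(ats_namespace: str, prometheus_host: str) -> Dict:
    """DestinationRule that disables TLS toward Prometheus outside the mesh."""
    return {
        "apiVersion": "networking.istio.io/v1alpha3",
        "kind": "DestinationRule",
        "metadata": {"name": "prometheus-dr", "namespace": ats_namespace},
        "spec": {"host": prometheus_host, "trafficPolicy": {"tls": {"mode": "DISABLE"}}},
    }


def ats_node_port_policy(app_label: str = "ocats-nrf", port: int = 8080) -> Dict:
    """NetworkPolicy that admits TCP ingress to the ATS Jenkins GUI port."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "allow-from-node-port"},
        "spec": {
            "podSelector": {"matchLabels": {"app": app_label}},
            "policyTypes": ["Ingress"],
            "ingress": [{"ports": [{"protocol": "TCP", "port": port}]}],
        },
    }


if __name__ == "__main__":
    # Pipe into: kubectl apply -f -
    print(json.dumps(prometheus_destination_rule(
        "ocnrf", "oso-prometheus-server.ocnrf.svc.cluster.local"), indent=2))
```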

Custom Folder Implementation

ATS provides custom test case folders (cust_newfeatures, cust_regression, and cust_performance) using which you can add new test cases, remove unwanted test cases, and modify existing test cases. It does not impact the original product packaged test cases available in the newfeatures, regression and performance folders. For more details, refer to Custom Folder Implementation.

Apart from cust_newfeatures and cust_regression, NRF ATS has the cust_FQDNauthentication, cust_GeoRedundancy, and cust_3SiteGeo directories, which contain test cases related to NF-FQDN authentication, georedundancy, and three-site georedundancy respectively.

Key Identifier (KID) feature specific Prerequisite

Create the following JSON files with the key and certificate details:

- set_keyDetailsList.json

- set_currenKeyId.json

- tokenSigningDetails.json

```
$ cat set_keyDetailsList.json
{
  "tokenSigningDetails": {
    "defaultK8SecretDetails": {
      "k8SecretNameSpace": "ocnrf",
      "k8SecretName": "ocnrfaccesstoken-secret"
    },
    "keyDetailsList": [
      {
        "keyID": "EcdsaKid",
        "algorithm": "ES256",
        "privateKey": {
          "k8SecretName": "ocnrfaccesstoken-secret",
          "k8SecretNameSpace": "ocnrf",
          "fileName": "ecdsa_private_key.pem"
        },
        "certificate": {
          "k8SecretName": "ocnrfaccesstoken-secret",
          "k8SecretNameSpace": "ocnrf",
          "fileName": "ecdsa_certificate.crt"
        }
      },
      {
        "keyID": "RsaKid",
        "algorithm": "RS256",
        "privateKey": {
          "k8SecretName": "ocnrfaccesstoken-secret",
          "k8SecretNameSpace": "ocnrf",
          "fileName": "rsa_private_key.pem"
        },
        "certificate": {
          "k8SecretName": "ocnrfaccesstoken-secret",
          "k8SecretNameSpace": "ocnrf",
          "fileName": "rsa_certificate.crt"
        }
      },
      {
        "keyID": "newKey",
        "algorithm": "RS256",
        "privateKey": {
          "k8SecretName": "ocnrfaccesstoken-secret",
          "k8SecretNameSpace": "ocnrf",
          "fileName": "rsa_private_key.pem"
        },
        "certificate": {
          "k8SecretName": "ocnrfaccesstoken-secret",
          "k8SecretNameSpace": "ocnrf",
          "fileName": "rsa_certificate.crt"
        }
      }
    ]
  }
}
```

Note:

Update the following parameters in the above files:

- k8SecretName

- k8SecretNameSpace

- fileName (name of the private keys and corresponding certificates)

For more information about parameter values, see Oracle Communications Cloud Native Core, Network Repository Function Installation, Upgrade, and Fault Recovery Guide.

After updating the files, copy them under the /var/lib/jenkins/ocnrf_tests/ directory.

```
$ cat set_currenKeyId.json
{
  "tokenSigningDetails": {
    "currentKeyID": "EcdsaKid"
  }
}
```

Note:

You need not update the currentKeyID name (for example, EcdsaKid) if the same name as the keyID is used while configuring the set_currenKeyId.json file.
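A quick consistency check before copying the files can prevent a failed run. `currentKeyID` should name one of the `keyID` entries, and every private key and certificate reference should have its secret name, namespace, and file name filled in. The following Python sketch (hypothetical helper `check_kid_files`) assumes the file layout shown above and checks only these structural points, not the keys themselves.

```python
import json
import sys
from pathlib import Path
from typing import List


def check_kid_files(key_details_json: str, current_key_json: str) -> List[str]:
    """Cross-check set_keyDetailsList.json against set_currenKeyId.json."""
    details = json.loads(key_details_json)["tokenSigningDetails"]
    current = json.loads(current_key_json)["tokenSigningDetails"]["currentKeyID"]
    keys = details["keyDetailsList"]
    key_ids = [k["keyID"] for k in keys]
    problems = []
    if current not in key_ids:
        problems.append(f"currentKeyID {current!r} is not in keyDetailsList {key_ids}")
    if len(set(key_ids)) != len(key_ids):
        problems.append(f"duplicate keyID values in {key_ids}")
    for key in keys:
        for part in ("privateKey", "certificate"):
            for field in ("k8SecretName", "k8SecretNameSpace", "fileName"):
                if not key.get(part, {}).get(field):
                    problems.append(f"{key['keyID']}.{part}.{field} is missing")
    return problems


if __name__ == "__main__":
    # Hypothetical usage: python3 check_kid.py set_keyDetailsList.json set_currenKeyId.json
    issues = check_kid_files(Path(sys.argv[1]).read_text(), Path(sys.argv[2]).read_text())
    print("\n".join(issues) or "OK")
    sys.exit(1 if issues else 0)
```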

```
$ cat tokenSigningDetails.json
{
  "presentCurrentKey" : {
    "algorithm" : "ES256",
    "privateKey" : {
      "k8sSecretName" : "ocnrfaccesstoken-secret",
      "fileName" : "ecdsa_private_key.pem"
    },
    "certificate" : {
      "k8sSecretName" : "ocnrfaccesstoken-secret",
      "fileName" : "ecdsa_certificate.crt"
    }
  },
  "newCurrentKey" : {
    "algorithm" : "RS256",
    "privateKey" : {
      "k8sSecretName" : "ocnrfaccesstoken-secret",
      "fileName" : "rsa_private_key.pem"
    },
    "certificate" : {
      "k8sSecretName" : "ocnrfaccesstoken-secret",
      "fileName" : "rsa_certificate.crt"
    }
  }
}
```

Note:

Update the following parameters in the above code snippet:

- k8sSecretName

- fileName (names of the private keys and corresponding certificates)

For more information about parameter values, see Oracle Communications Cloud Native Core, Network Repository Function Installation, Upgrade, and Fault Recovery Guide.

$ kubectl cp tokenSigningDetails.json ocats-ocats-nrf-58fc5dcbb9-zjsks:/var/lib/jenkins/ocnrf_tests/tokenSigningDetails.json -n ocnrf

$ kubectl cp set_keyDetailsList.json ocats-ocats-nrf-58fc5dcbb9-zjsks:/var/lib/jenkins/ocnrf_tests/set_keyDetailsList.json -n ocnrf

$ kubectl cp set_currenKeyId.json ocats-ocats-nrf-58fc5dcbb9-zjsks:/var/lib/jenkins/ocnrf_tests/set_currenKeyId.json -n ocnrf

$ kubectl cp ec_public.pem ocats-ocats-nrf-58fc5dcbb9-zjsks:/var/lib/jenkins/ocnrf_tests/public_keys/ec_public.pem -n ocnrf

$ kubectl cp rs_public.pem ocats-ocats-nrf-58fc5dcbb9-zjsks:/var/lib/jenkins/ocnrf_tests/public_keys/rs_public.pem -n ocnrf

$ kubectl cp rs_public.pem ocats-ocats-nrf-58fc5dcbb9-zjsks:/var/lib/jenkins/ocnrf_tests/public_keys/new_public.pem -n ocnrf

Prerequisites to Run Testcases for the CCA-Header Feature

To run the pipeline for the CCA-Header feature, NRF must be deployed with the following changes:

- During NRF installation, provide the namespace, the secret name, and the caroot.cer file name in the ingress-gateway section of NRF's custom values, as follows:

ingress-gateway:
  ccaHeaderValidation:
    k8SecretName: ocingress-secret
    k8NameSpace: ocnrf
    fileName: caroot.cer

- In NRF's custom values, set metadata.ccaHeaderValidation.enabled to true in the 'accesstoken_mapping' object of the ingress-gateway.routesConfig list:

ingress-gateway:
  routesConfig:
    - id: accesstoken_mapping
      uri: http://{{ template "accesstoken.service.fullname" . }}:{{ template "accesstoken.service.port" . }}
      path: /oauth2/token
      order: 4
      filters:
        controlledShutdownFilter:
          applicableShutdownStates:
            - COMPLETE_SHUTDOWN
      metadata:
        ccaHeaderValidation:
          enabled: true

Note:

If ccaHeaderValidation is enabled in NRF, run only the CCA-Header pipeline. The other pipelines (NewFeatures, Regression, 2SiteGeo, 3SiteGeo, or FQDN-Authentication) do not work with this feature enabled.

- Create a secret with the caroot.cer file provided in ocats_ocnrf_tests_jenkinsjobs_25.1.200.tgz, using the following command. The same caroot.cer file must be configured in this secret to run the CCA-Header cases.

kubectl create secret generic <secret name> --from-file=<ca root file name> -n <namespace>

For example:

kubectl create secret generic ocingress-secret --from-file=caroot.cer -n ocnrf

- Set the ccaHeaderSecretName parameter in the global.yaml file to the name of the secret created in the previous step. Use the global.yaml file in /var/lib/jenkins/ocnrf_tests/product_config or in /var/lib/jenkins/ocnrf_tests/custom_config, depending on whether the pipeline is run with the Product_Config option or the Custom_Config option.
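The last step can also be scripted inside the ATS pod. The following is a sketch only: set_cca_secret_name is a hypothetical helper that is not shipped with ATS, and it assumes that global.yaml holds the value on a single line of the form ccaHeaderSecretName: <value>. It selects product_config or custom_config under /var/lib/jenkins/ocnrf_tests according to the configuration option you plan to use. Kubernetes secret names contain only lowercase letters, digits, '-' and '.', so the name can be substituted into the sed expression directly.

```bash
#!/usr/bin/env bash
# set_cca_secret_name OPTION SECRET [BASE_DIR]
# Hypothetical helper (not part of the ATS package). OPTION is Product_Config or
# Custom_Config; BASE_DIR defaults to the ocnrf_tests directory named above.
# Assumes global.yaml holds the value on one line: "ccaHeaderSecretName: <value>".
set_cca_secret_name() {
  local option=$1 secret=$2 base=${3:-/var/lib/jenkins/ocnrf_tests} dir file
  case $option in
    Product_Config) dir=product_config ;;
    Custom_Config)  dir=custom_config ;;
    *) echo "unknown option: $option" >&2; return 1 ;;
  esac
  file="$base/$dir/global.yaml"
  [ -f "$file" ] || { echo "not found: $file" >&2; return 1; }
  # Replace the value, keeping any indentation before the key. Writing to a
  # temporary file avoids the GNU/BSD differences of "sed -i".
  sed "s/^\([[:space:]]*ccaHeaderSecretName:\).*/\1 $secret/" "$file" > "$file.tmp" \
    && mv "$file.tmp" "$file"
}
```

For example, set_cca_secret_name Custom_Config ocingress-secret updates only /var/lib/jenkins/ocnrf_tests/custom_config/global.yaml and leaves the product configuration untouched.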

Prerequisites to Run Testcases for the NRF Growth Features

To run the pipeline for the NRF Growth feature, complete the following prerequisites:

- Install two NRFs that are not georedundant with each other.

- Before running the pipeline, set the pipeline configuration as shown below. The values of options "u" to "x" must be set to 0.1.

node ('master'){
    //a = SELECTED_NF b = NF_NAMESPACE c = FT_ENDPOINT d = GATEWAY_IP e = GATEWAY_PORT
    //f = CONFIG_IP g = CONFIG_PORT h = STUB_IP i = STUB_PORT j = NFINSTANCEID
    //k = PROMETHEUS_IP l = PROMETHEUS_PORT m = RERUN_COUNT n = PROMETHEUS_URI o = HELM_RELEASE_NAME
    //p = MYSQL_HOST q = PRIVILEGED_USER_SECRET_NAME r = DISCOVERY_WAIT_TIME
    //s = REPLICATION_STATUS_URI t = CLUSTER_DOMAIN u = REGISTER_WRITE_WAIT_TIME
    //v = SUBSCRIPTION_WAIT_TIME w = ACCESSTOKEN_WAIT_TIME x = REGISTER_READ_WAIT_TIME
    sh '''
    sh /var/lib/jenkins/ocnrf_tests/preTestConfig.sh \
    -a NRF \
    -b occne-cndbtierone,occne-cndbtiertwo \
    -c ocnrf-ingressgateway.ocnrf.svc.cluster.local:80,ocnrf-1-ingressgateway.ocnrf.svc.cluster.local:80 \
    -d ocnrf-ingressgateway.occne-cndbtierone,ocnrf-1-ingressgateway.occne-cndbtiertwo \
    -e 80,80 \
    -f ocnrf-nrfconfiguration.occne-cndbtierone,ocnrf-1-nrfconfiguration.occne-cndbtiertwo \
    -g 8080,8080 \
    -h notify-stub-service.occne-cndbtierone,notify-stub-service02.occne-cndbtierone \
    -i 8080,8080 \
    -j 6faf1bbc-6e4a-4454-a507-a14ef8e1bc5c,6faf1bbc-6e4a-4454-a507-a14ef8e1bc5d \
    -k oso-prom-svr.oso \
    -l 80 \
    -m 2 \
    -n /prometheus/api/ \
    -o ocnrf,ocnrf-1 \
    -p mysql-connectivity-service.occne-cndbtierone,mysql-connectivity-service.occne-cndbtiertwo \
    -q privilegeduser-secret \
    -r 0.5 \
    -s http://ocnrf-ocnrf-app-info:5906/status/category/replicationstatus,http://ocnrf-1-ocnrf-app-info:5906/status/category/replicationstatus \
    -t svc.cluster.local \
    -u 0.1 \
    -v 0.1 \
    -w 0.1 \
    -x 0.1
    '''
    if(env.Include_NewFeatures && "${Include_NewFeatures}" == "YES"){
        sh '''sh /var/lib/jenkins/common_scripts/merge_jenkinsfile.sh'''
        load "/var/lib/jenkins/ocnrf_tests/jenkinsData/Jenkinsfile-Merged"
    }
    else{
        load "/var/lib/jenkins/ocnrf_tests/jenkinsData/Jenkinsfile-Regression"
    }
}

4.2.2 Logging into ATS

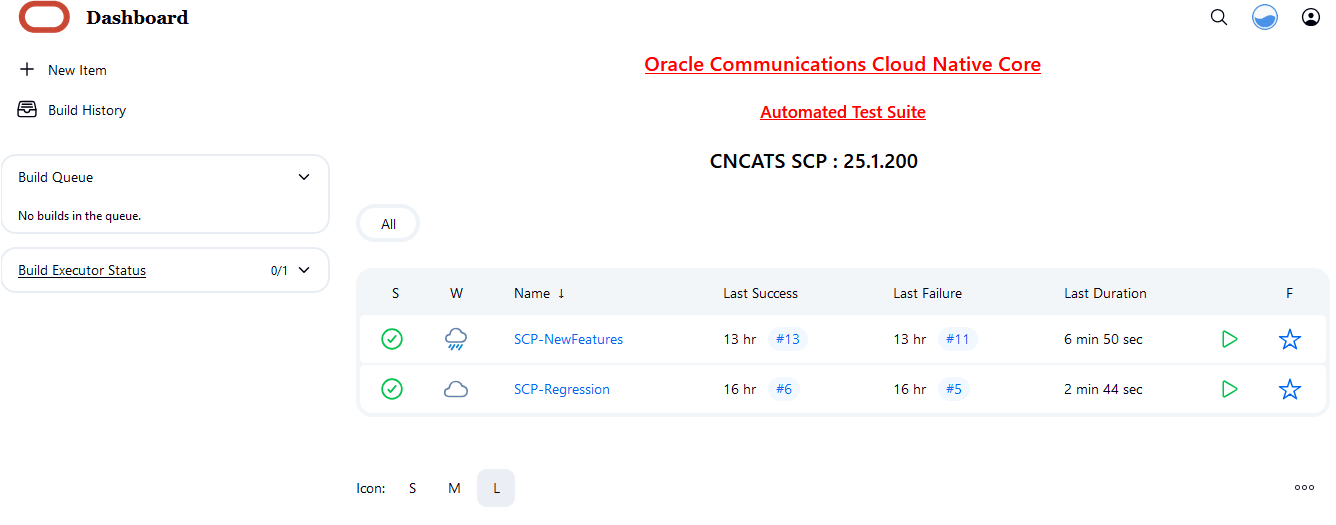

Running ATS

Note:

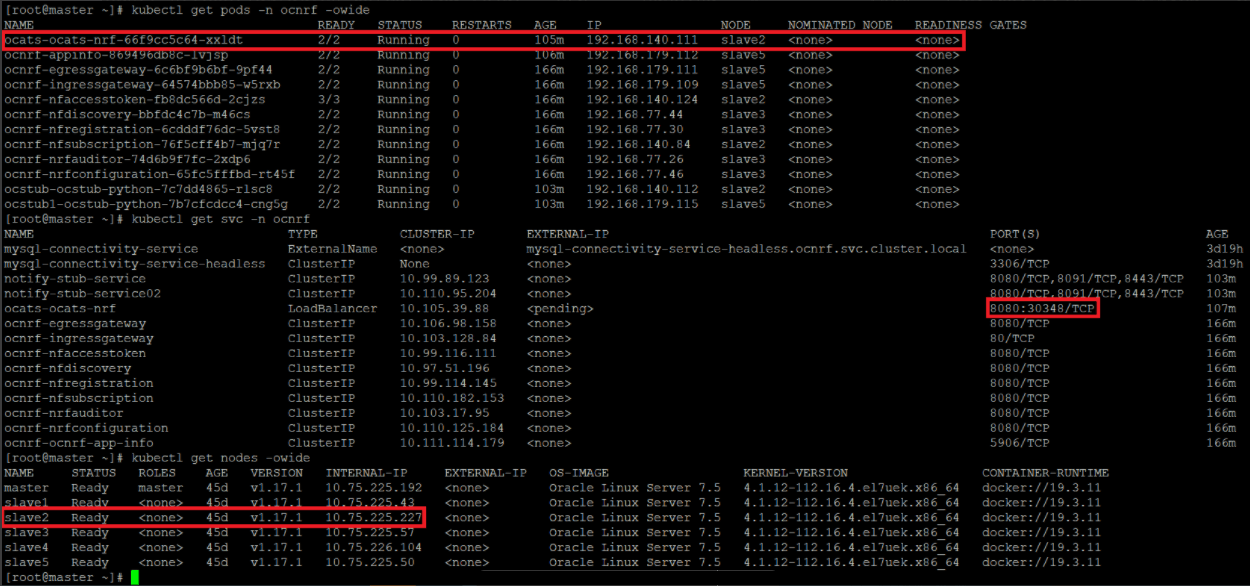

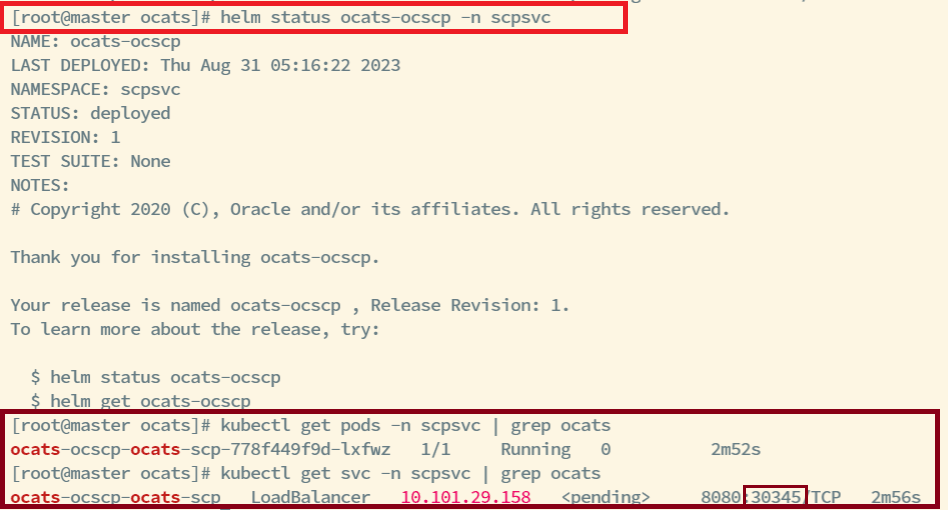

To modify the default login password, refer to Modifying Login Password.

Figure 4-14 Verifying ATS Pod

For more information on verifying ATS deployment, see Verifying ATS Deployment.

Note:

In the Verifying ATS Pod screen, slave2 is the node where ATS is deployed, 30348 is the ATS nodeport, and 10.75.225.227 is the worker node IP, all highlighted in red. For more details on ATS deployment, refer to Installing ATS for NRF.

Figure 4-15 ATS Login

- Enter the login credentials and click Sign in. The following screen appears.

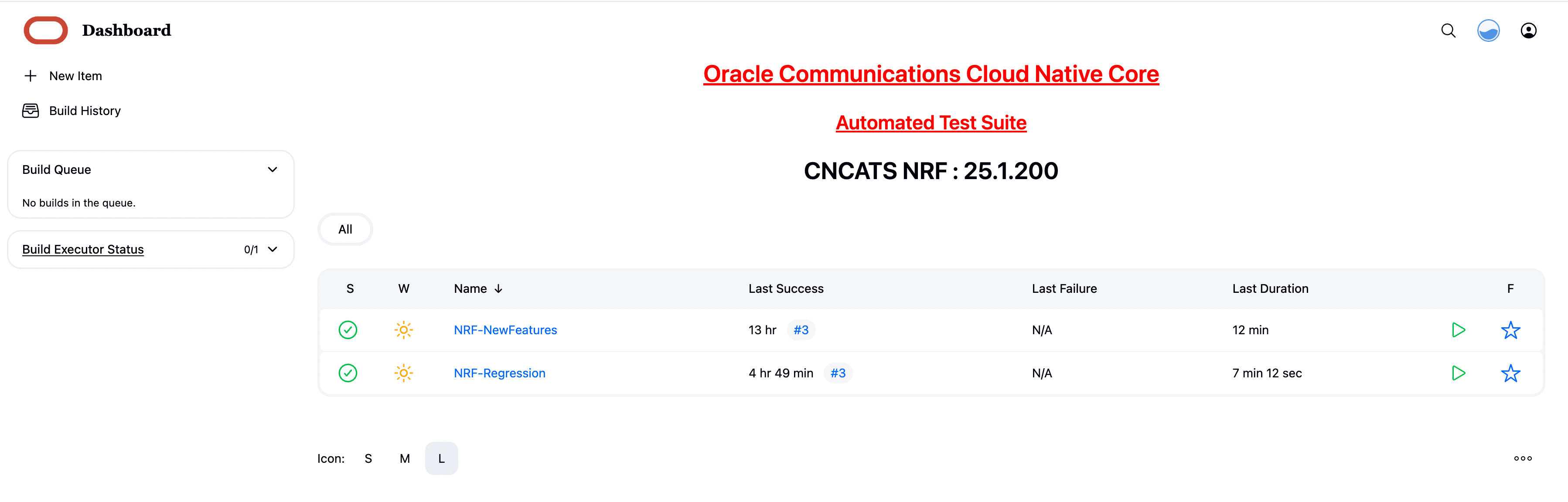

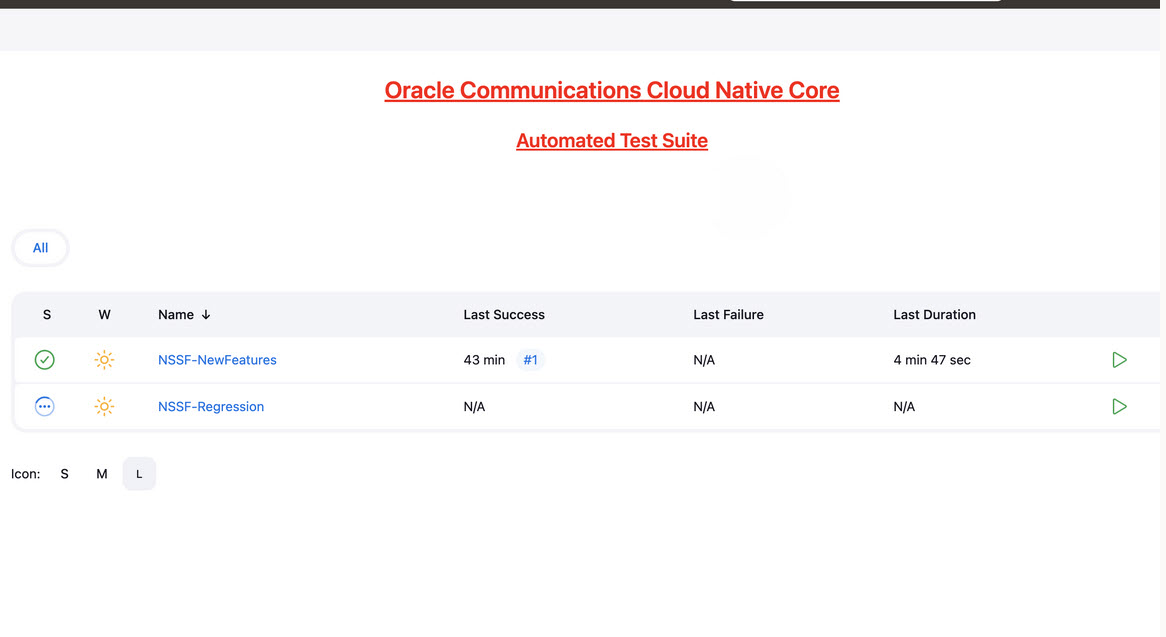

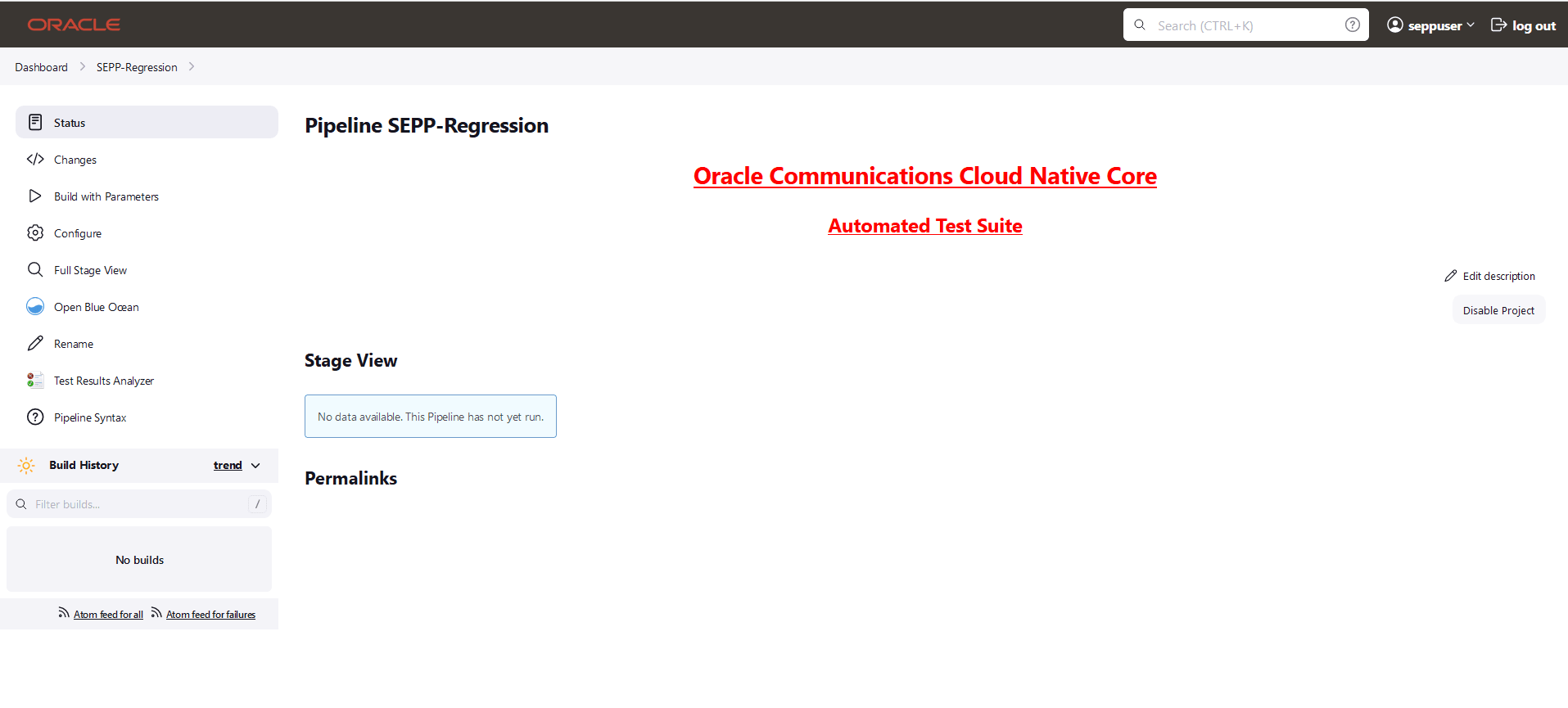

Figure 4-16 NRF Pre-Configured Pipelines

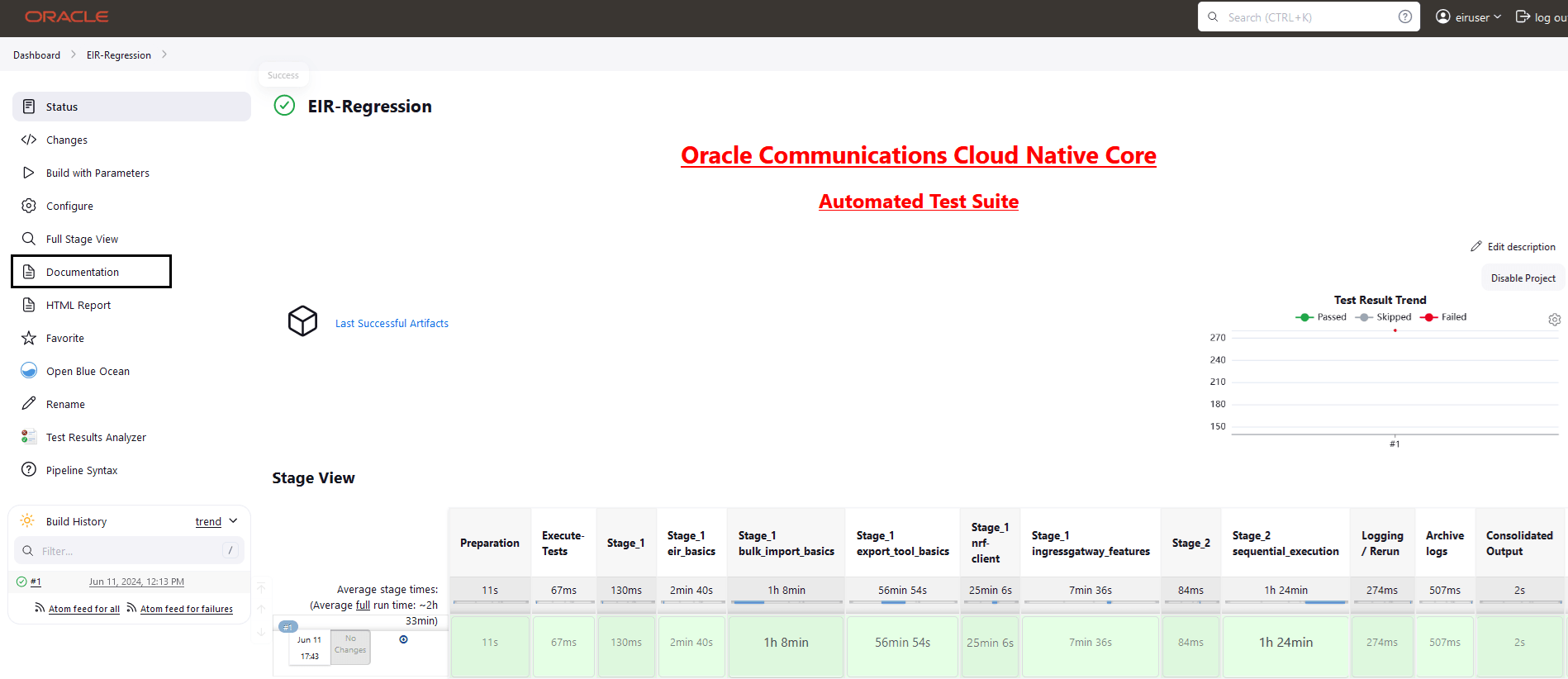

NRF ATS has two pre-configured pipelines.

- NRF-NewFeatures: This pipeline has all the test cases delivered as part of NRF ATS - 25.1.200.

- NRF-Regression: This pipeline has all the test cases delivered so far in the previous releases.

4.2.3 NRF-NewFeatures Pipeline

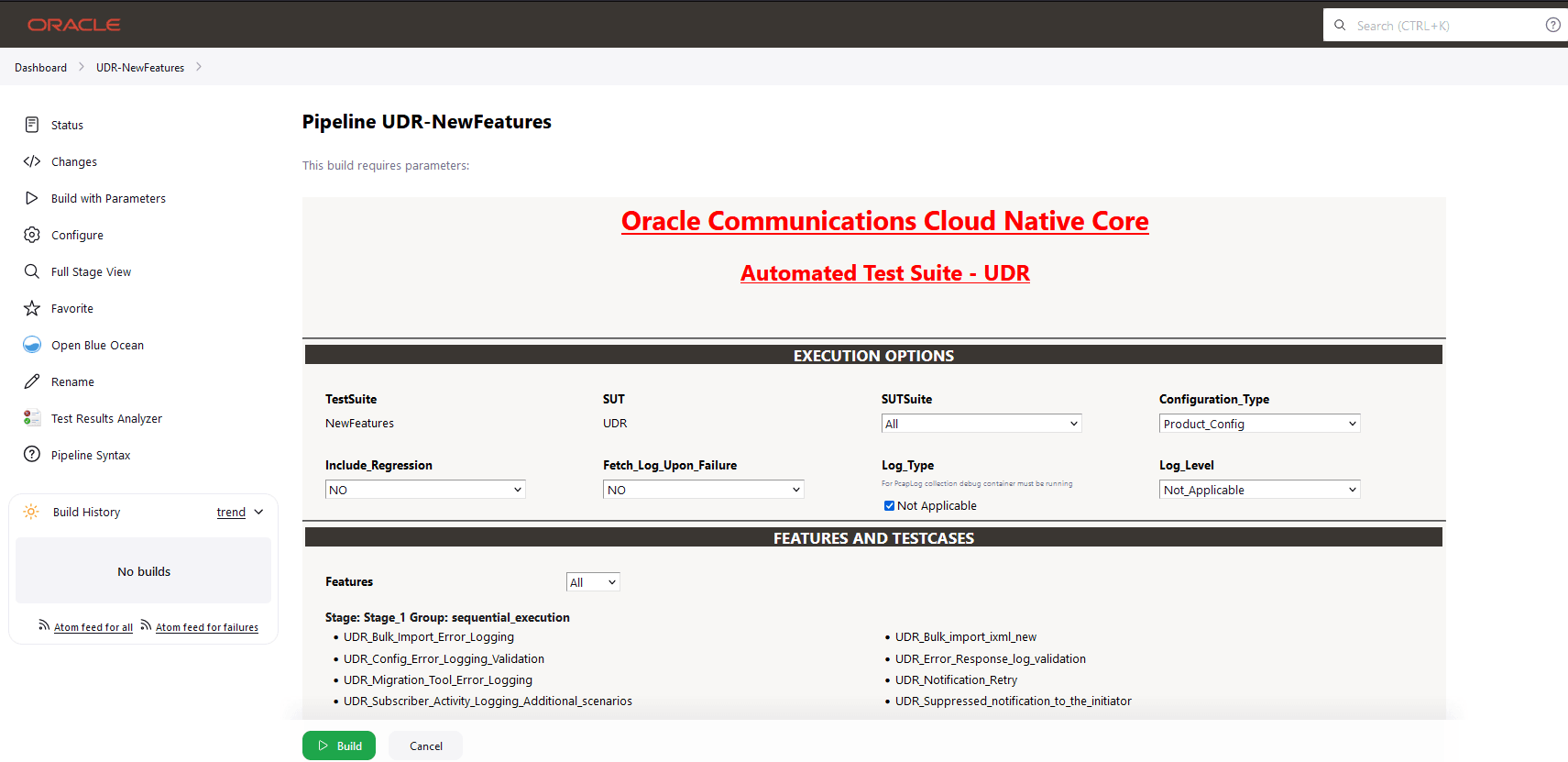

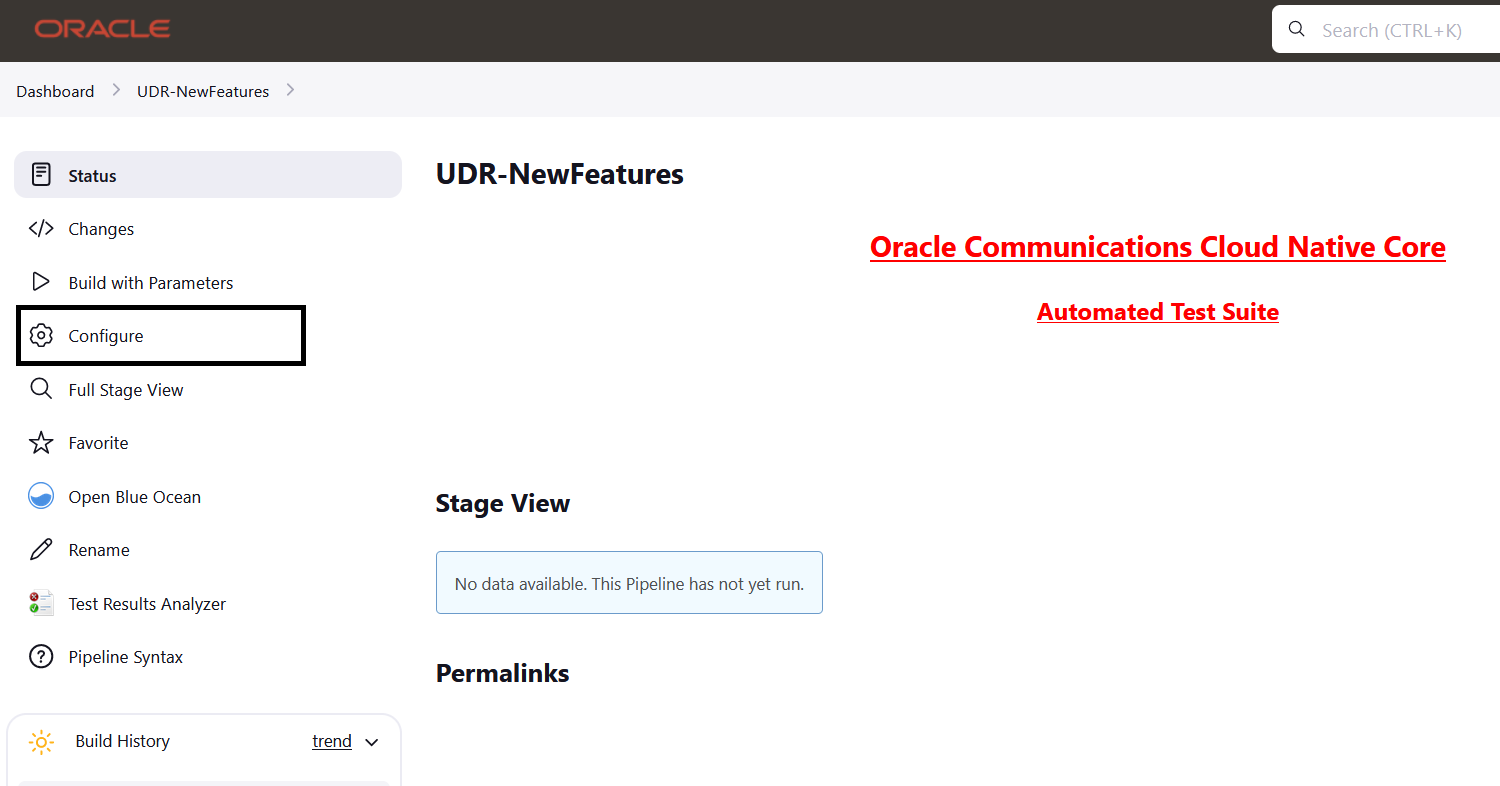

After identifying the NRF pipelines, configure ATS as a one-time activity according to the NRF deployment. This pipeline contains all the new NRF test cases. To configure its parameters:

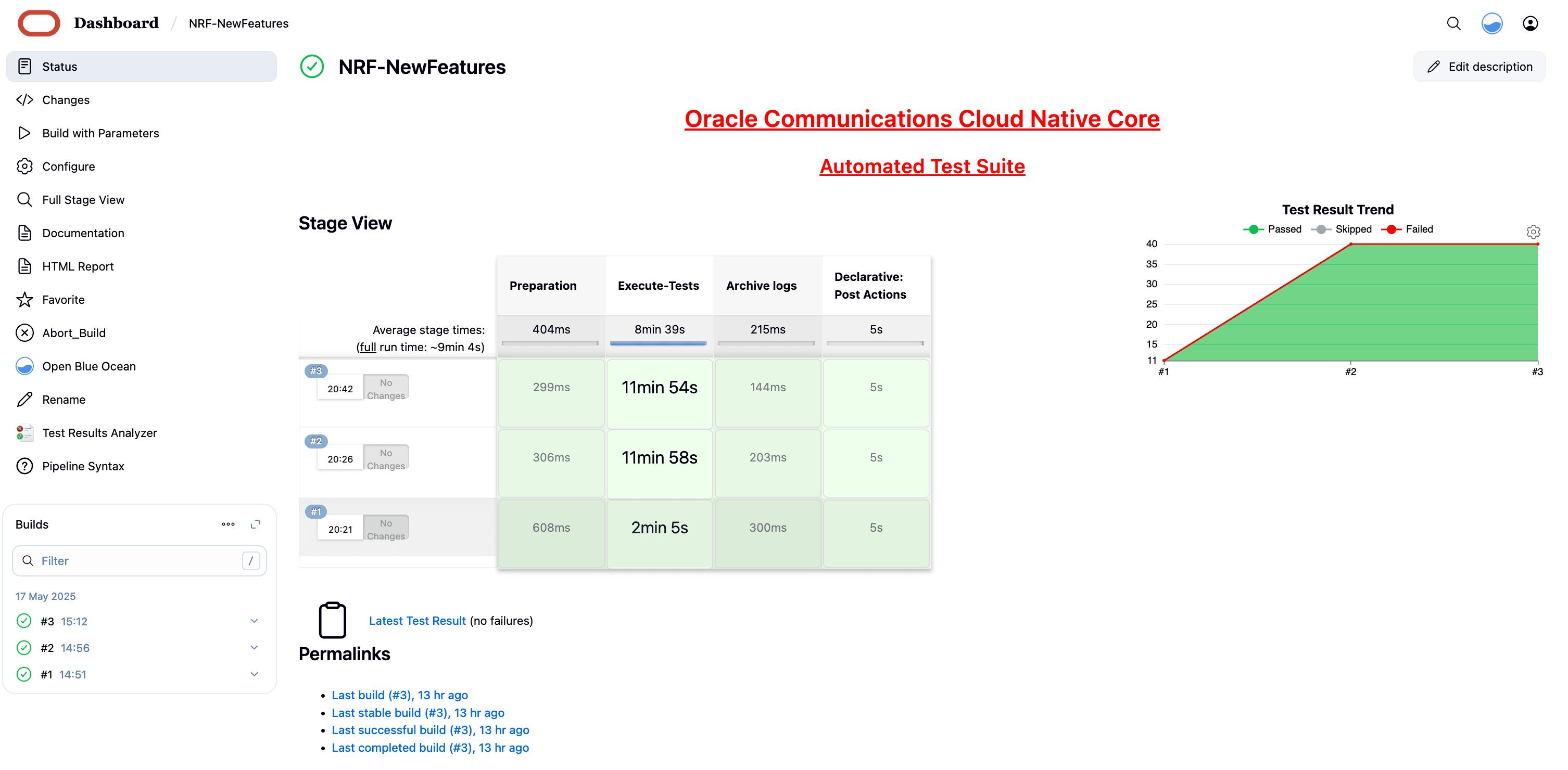

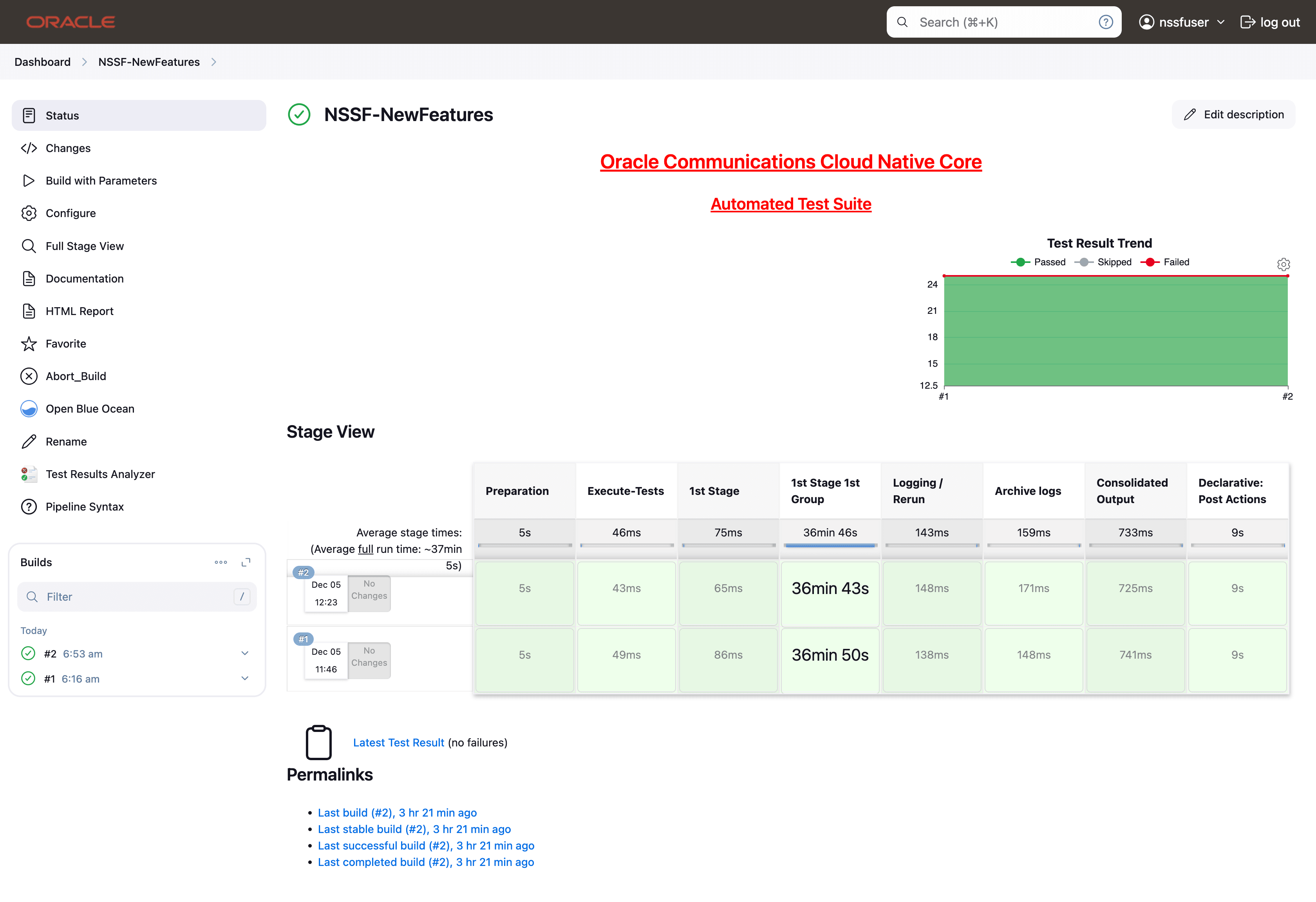

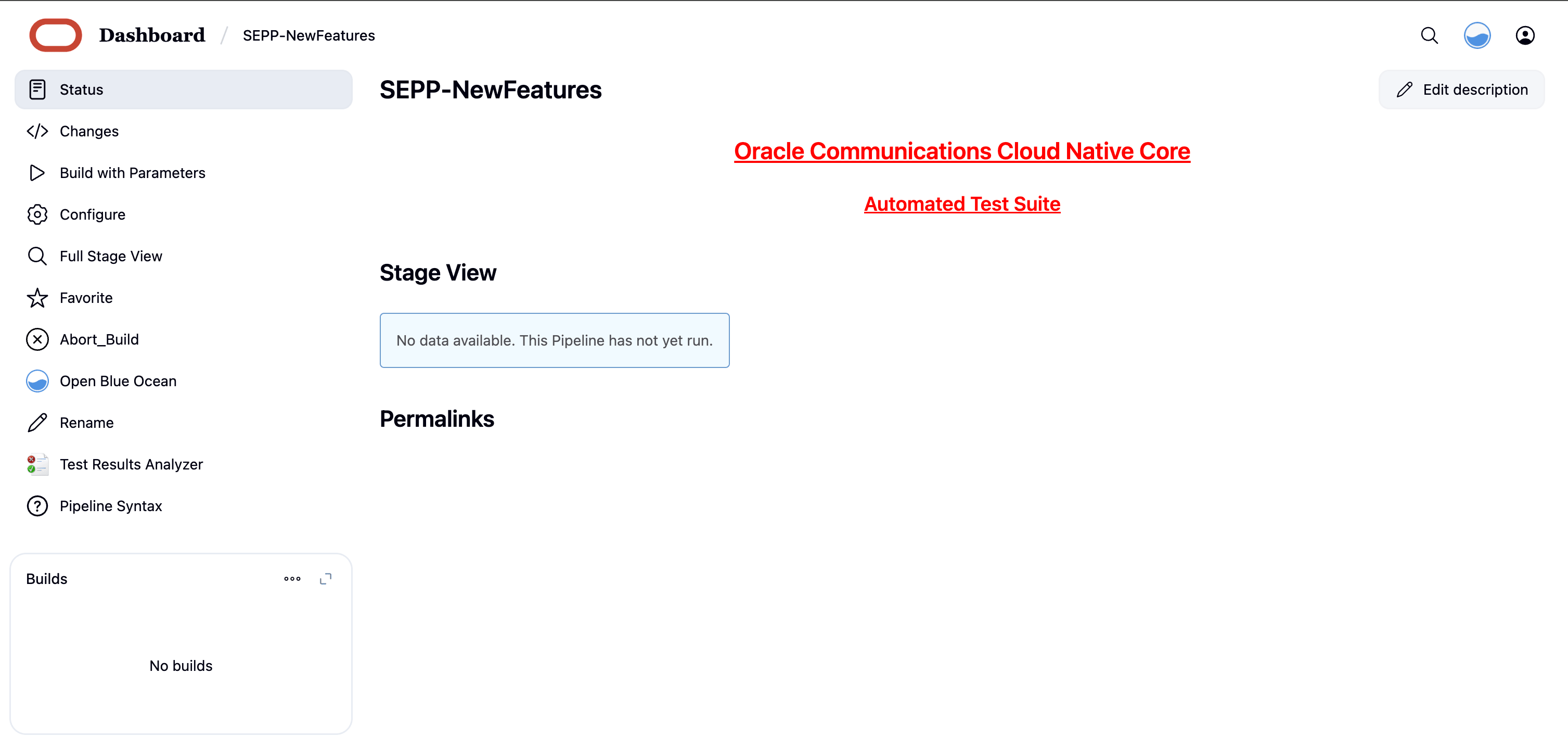

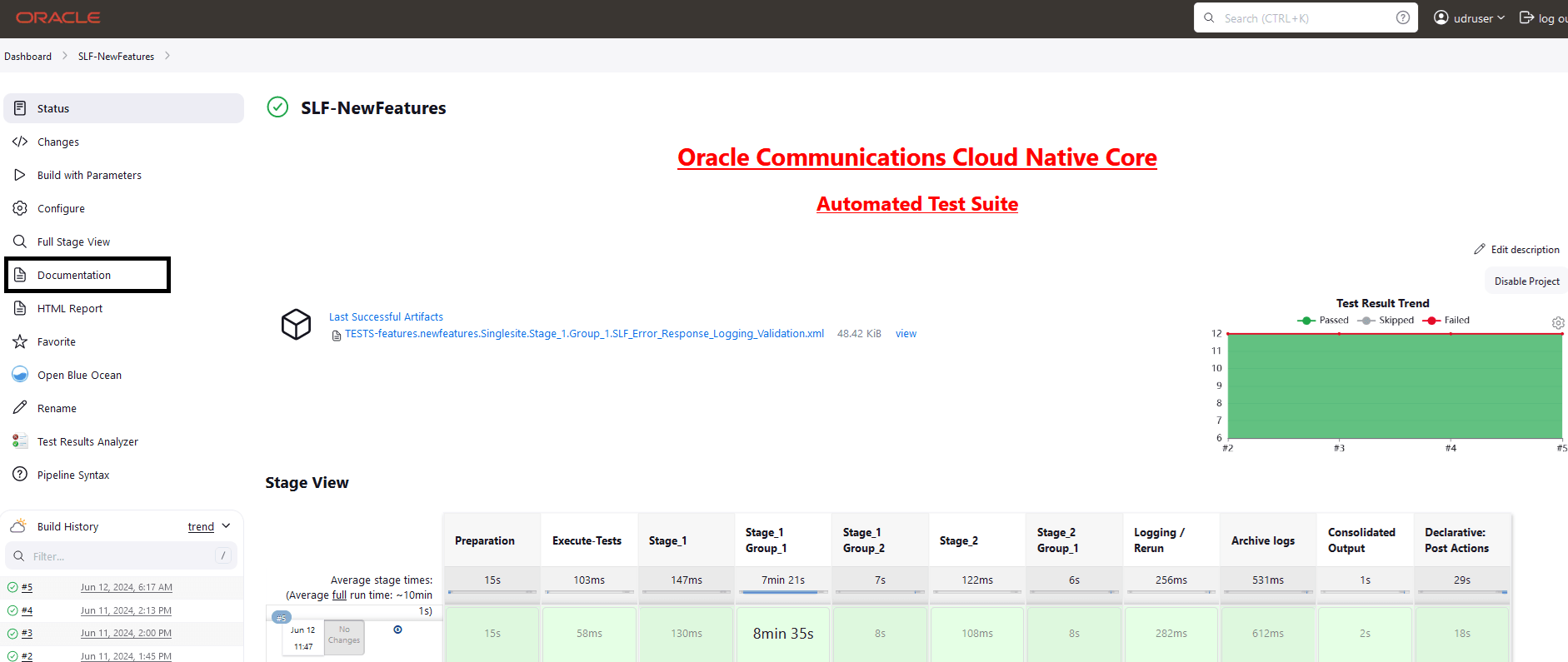

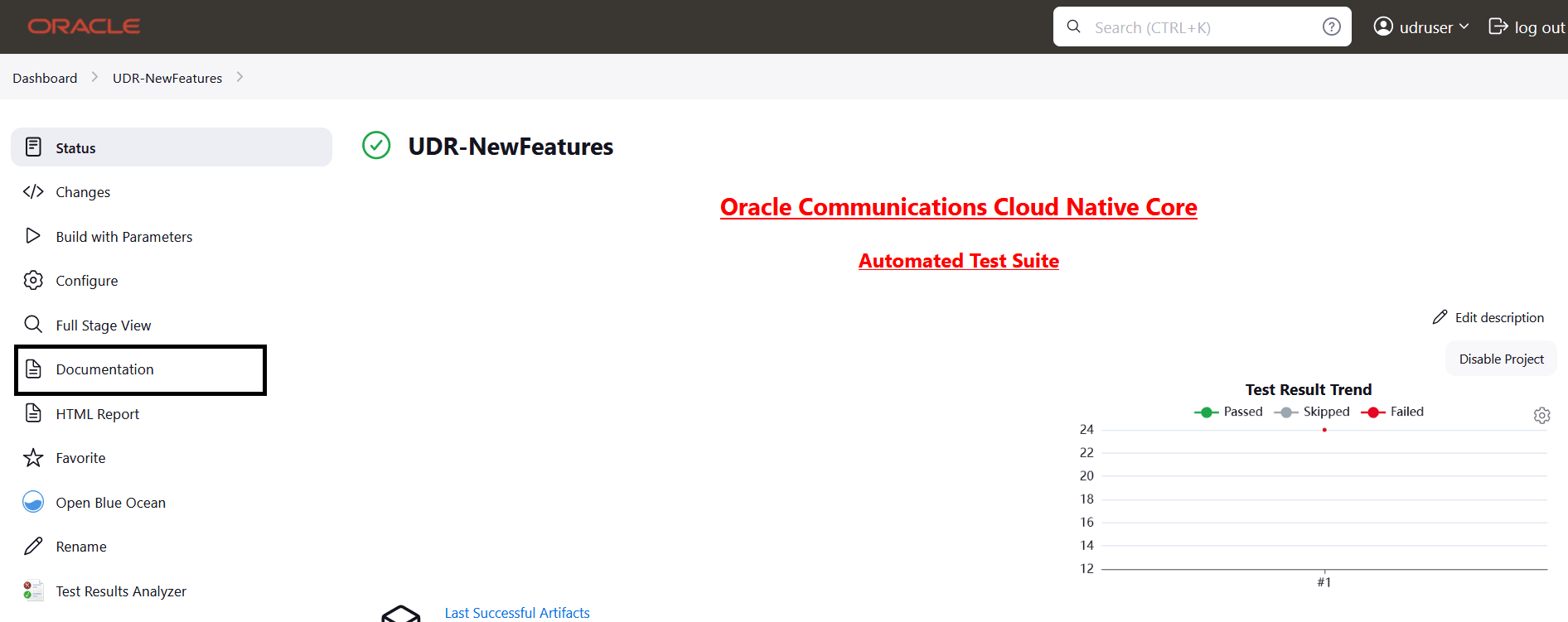

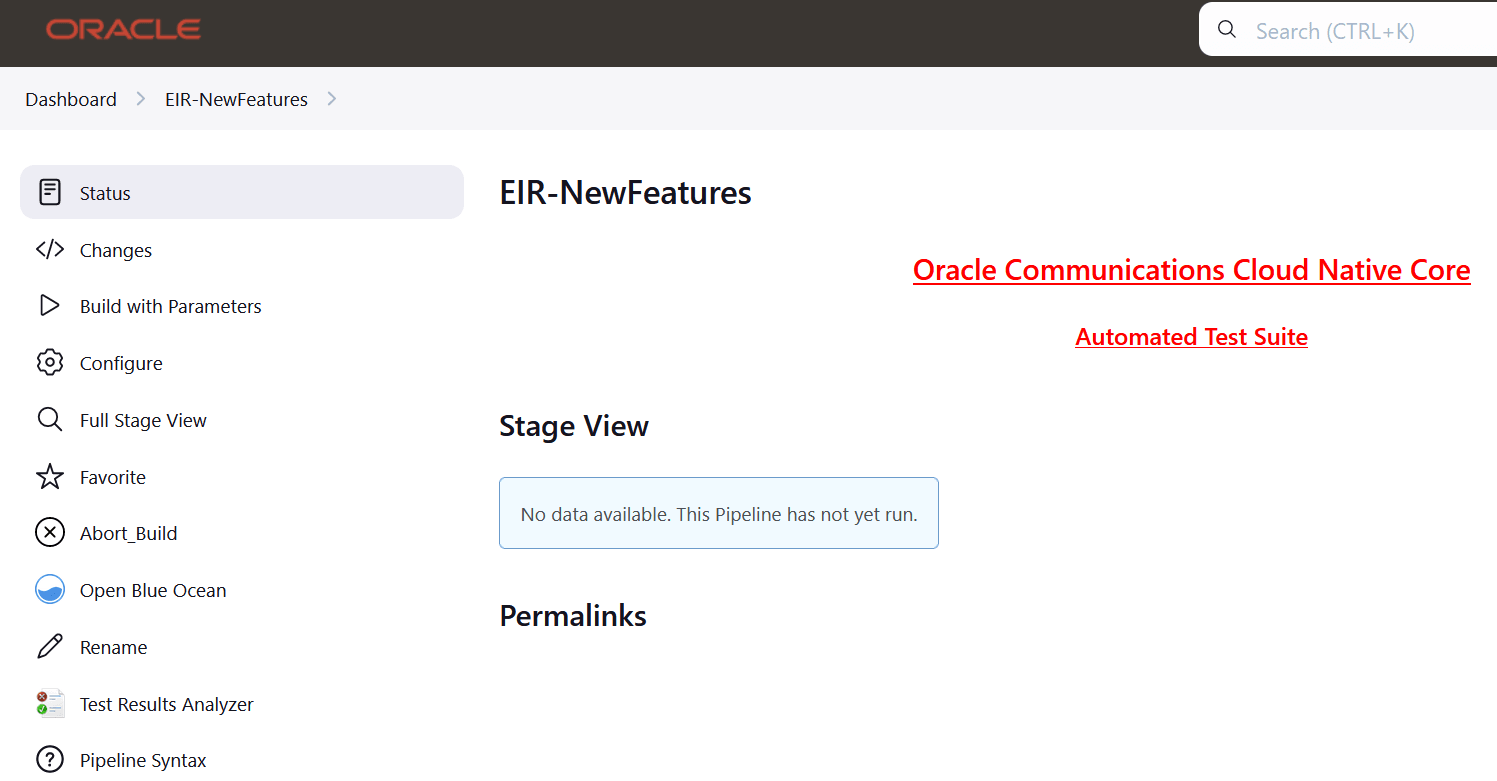

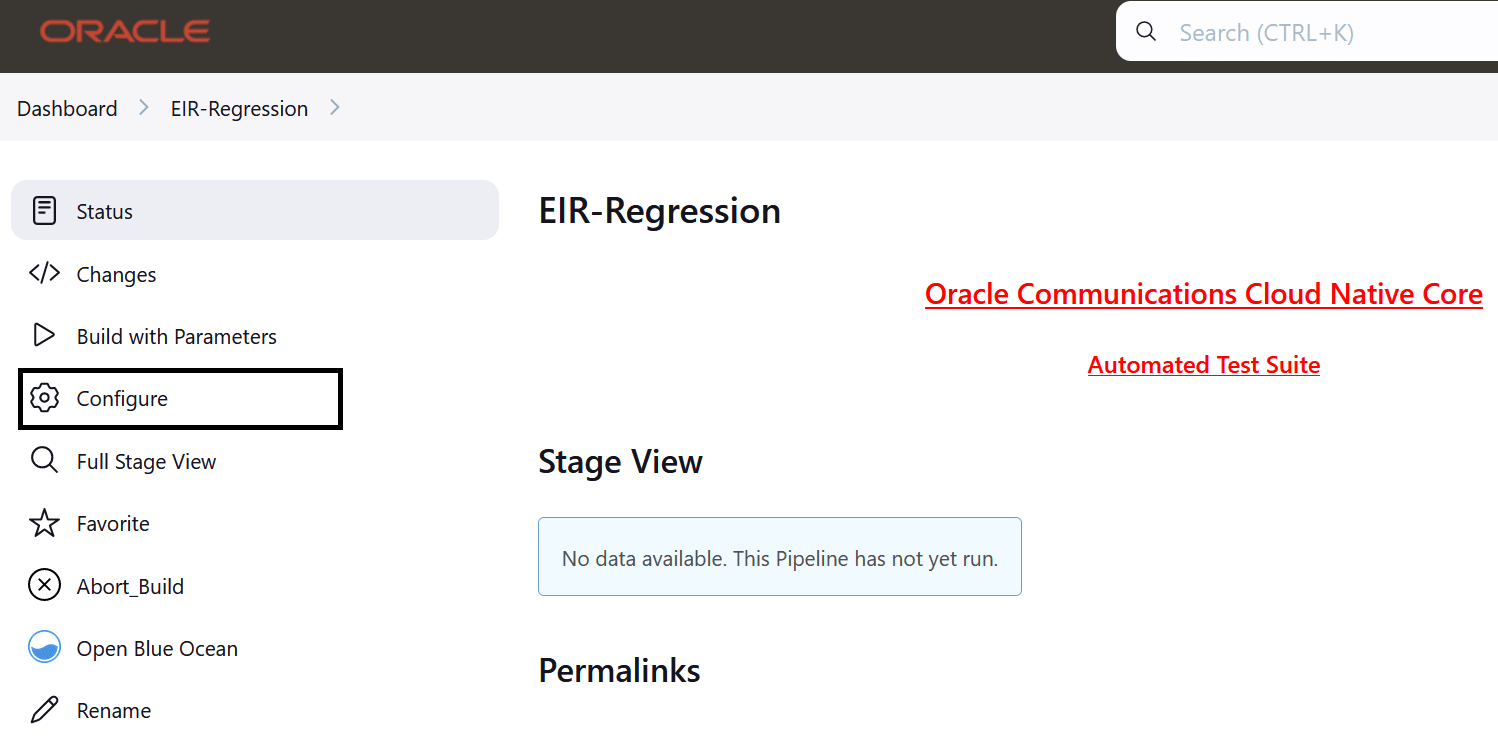

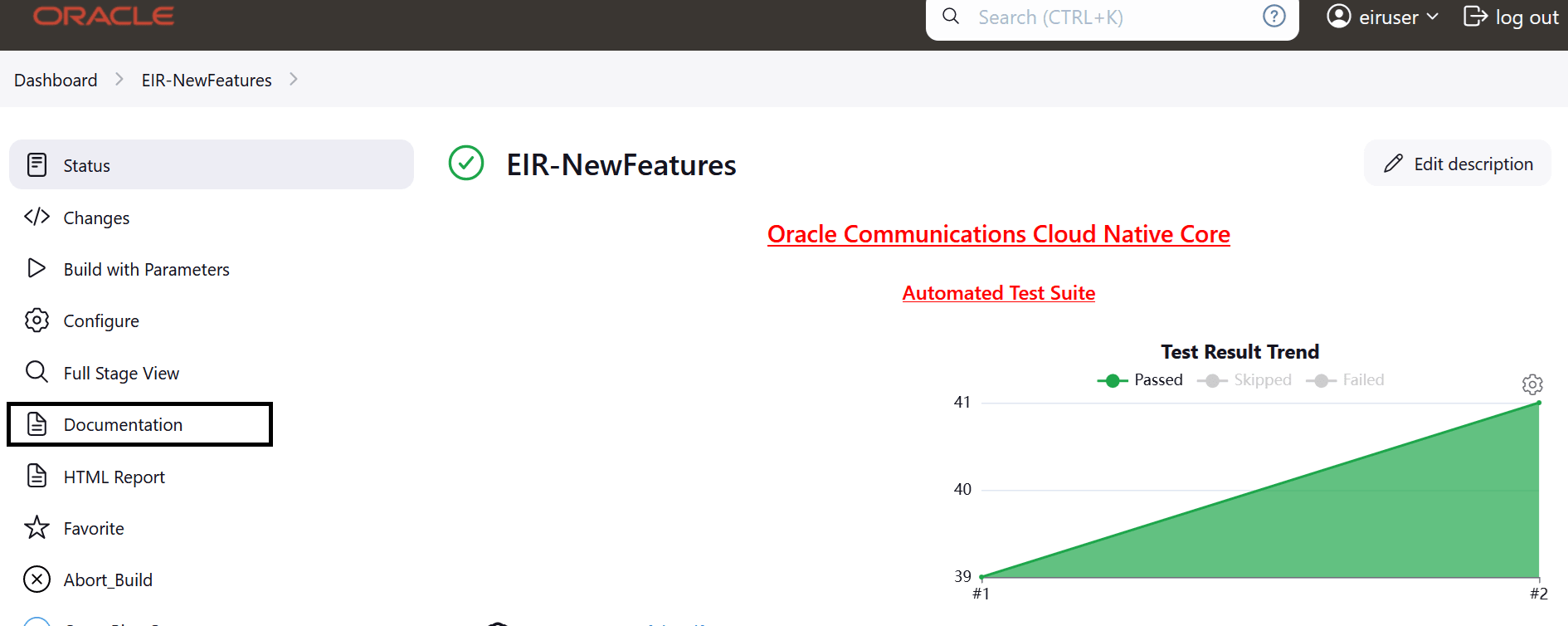

- Click NRF-NewFeatures in the Name column. The following screen appears:

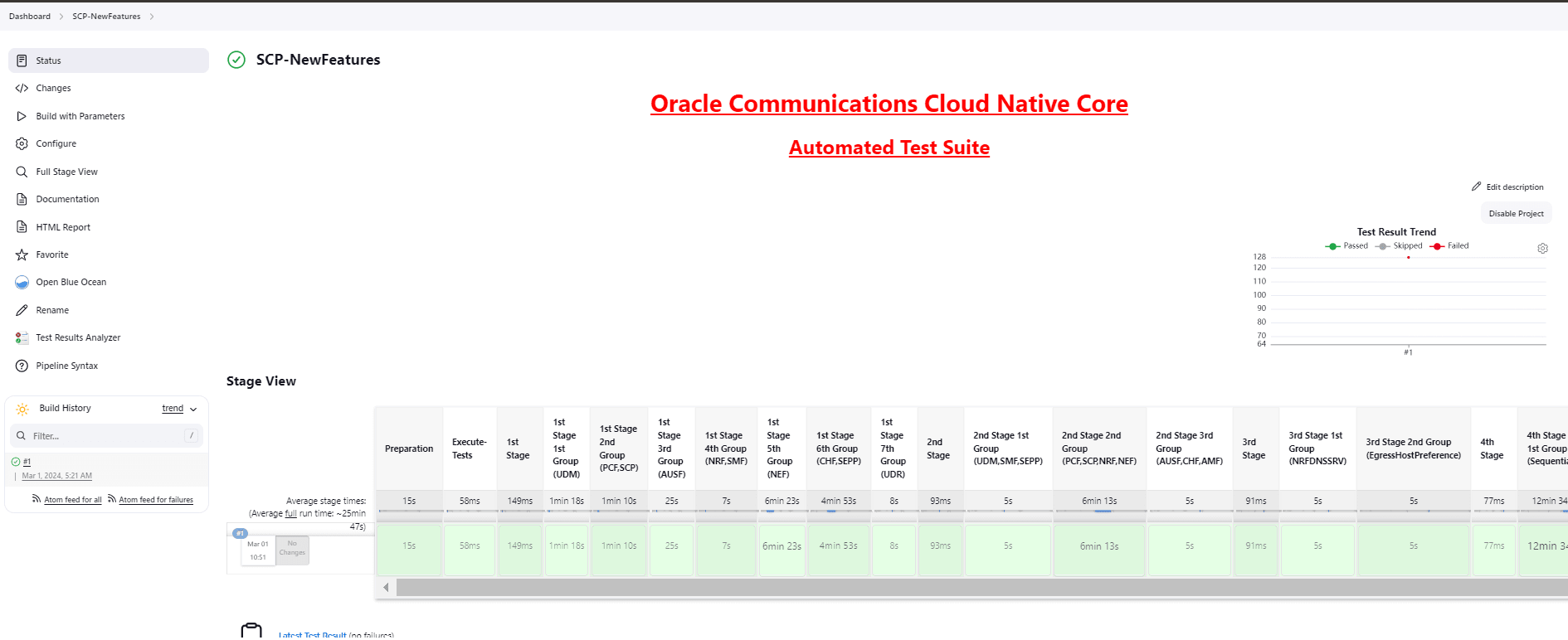

Figure 4-17 Configuring NRF-NewFeatures

In the above screen:

- Click Configure to configure NRF-NewFeatures.

- Click Documentation to view the documented test cases, which are part of this NRF release.

- Click the blue dots inside the Build History box to view the console logs of the successful "Sanity" and "All-NewFeatures" runs, respectively.

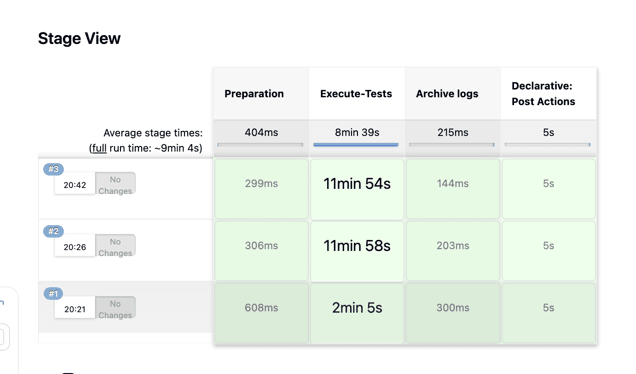

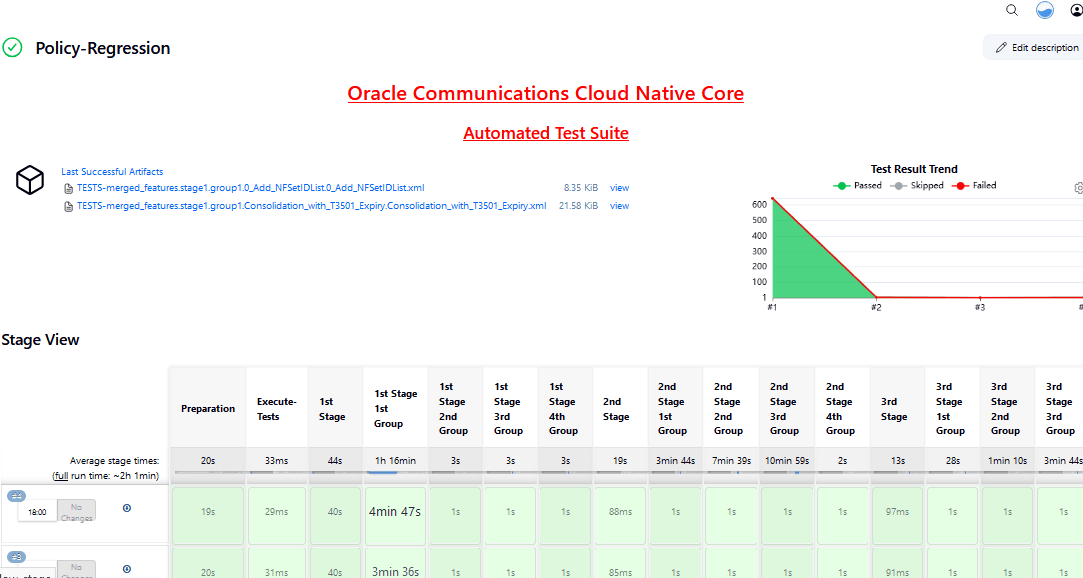

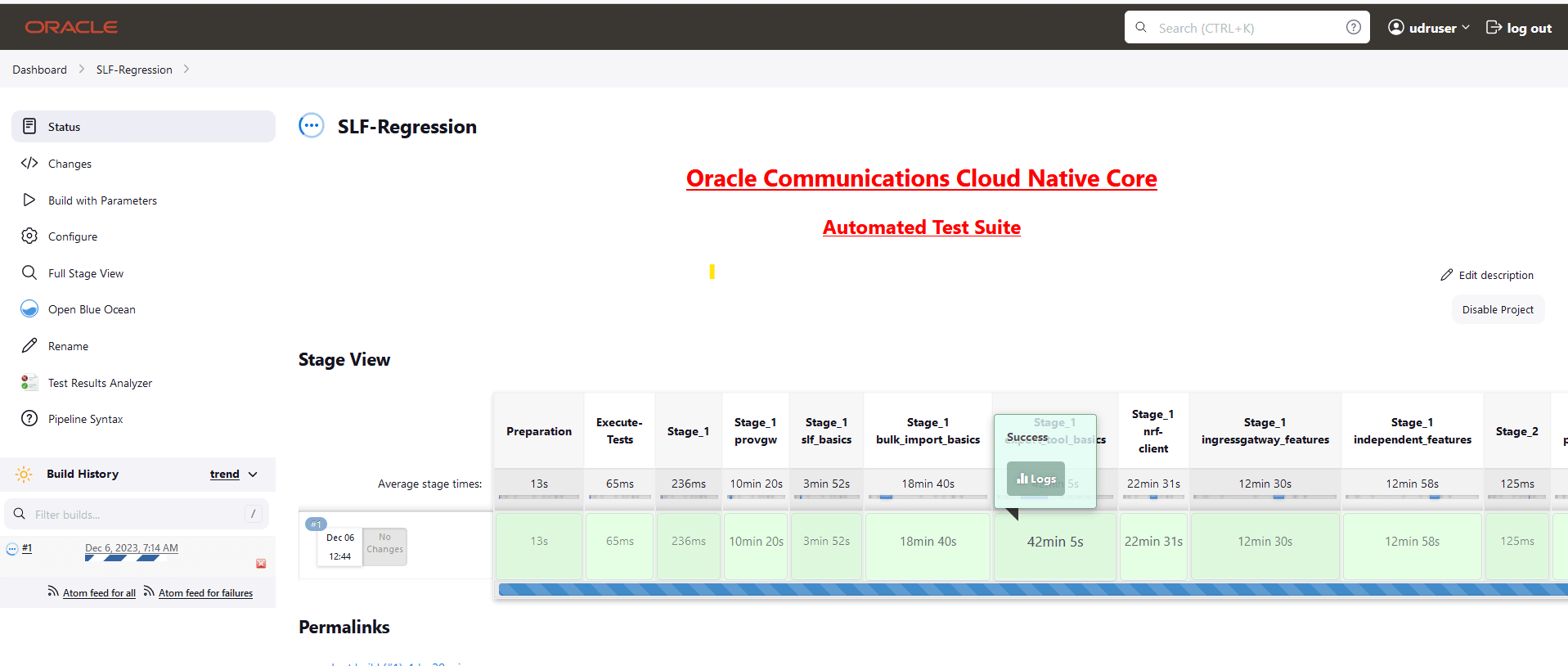

- The Stage View shows the previously run pipeline stages for customer reference.

- The Test Results Analyzer is a plugin integrated into NRF-ATS. Use it to view the execution history build by build, including a graphical representation of past executions.

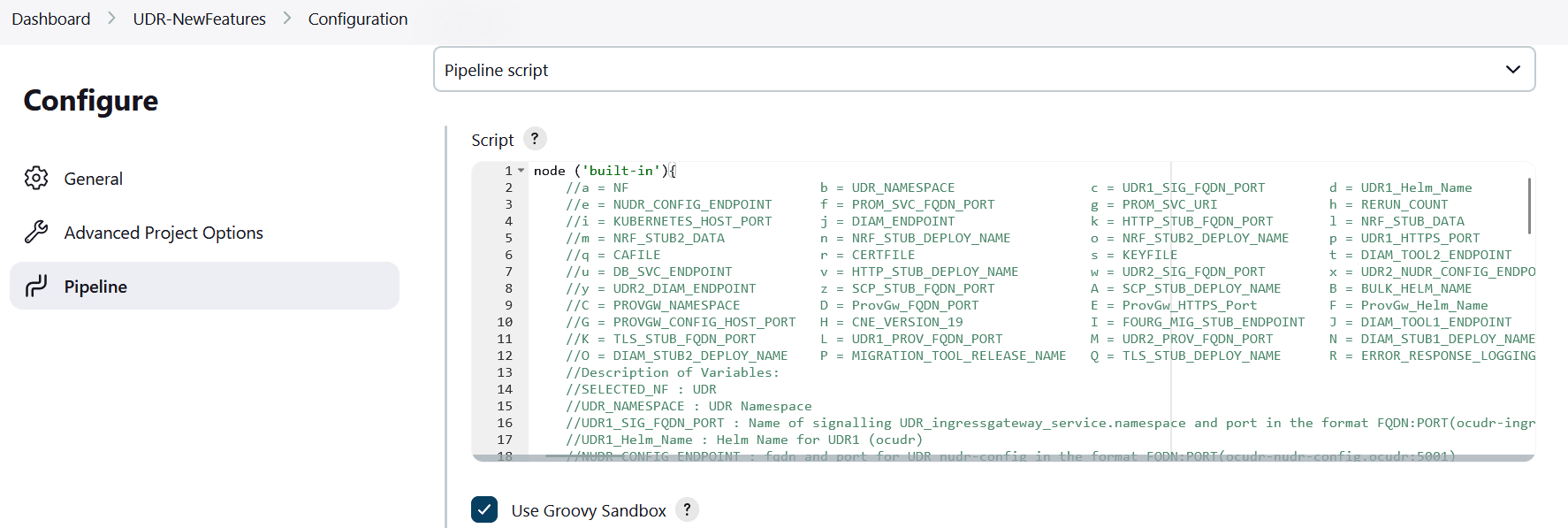

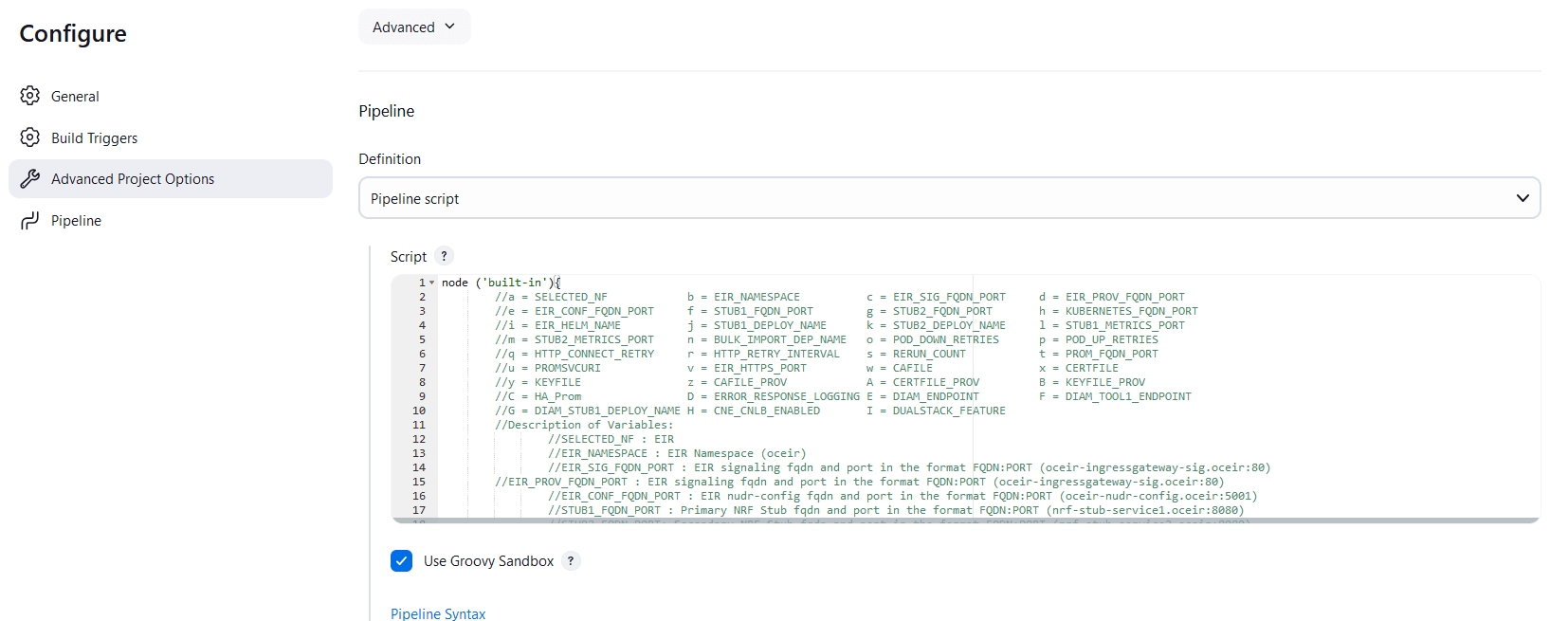

- Click Configure. Wait for the page to load completely, and then click the Pipeline tab:

Note:

Make sure that the following page loads completely before you perform any action on it. Also, do not modify any configuration other than that shown below.

Figure 4-18 Pipeline Tab

The Pipeline section of the configuration page appears as follows:

Figure 4-19 Pipeline Section

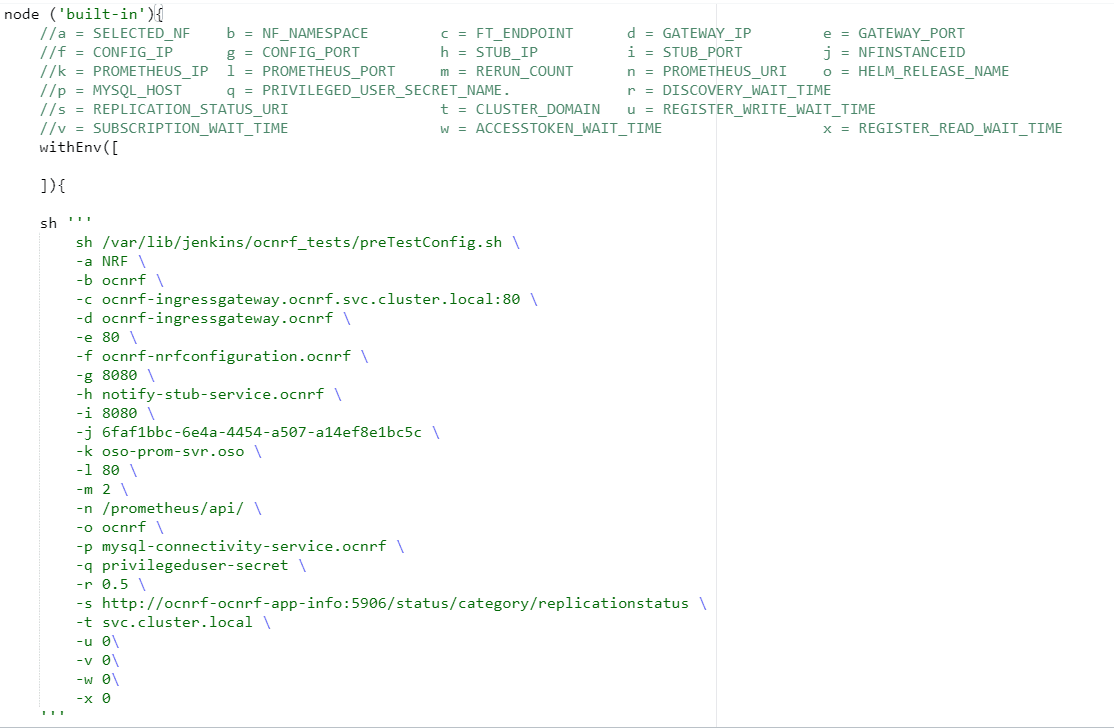

In the above screenshot, change the values in the 'Pipeline script'. The content of the pipeline script is as follows:

Figure 4-20 Pipeline Script

Note:

Do not change any value other than those on lines 15 to 37. You can change the values of parameters "a" to "x" as per your requirements. The parameter details are available as comments on lines 2 to 7.

- a: Name of the NF to be tested, in capital letters (NRF).

- b: Namespace in which the NRF is deployed.

- c: endPointIP:endPointPort value used while deploying NRF using the Helm chart.

- d: Comma separated values of NRF1 and NRF2 ingress gateway service (For example: ocnrf-ingressgateway.ocnrf,1.1.1.1). It is also known as cluster_domain.

- e: Comma separated values of NRF1 and NRF2 port of ingressgateway service (For example: 80,31000).

- f: Comma separated values of NRF1 and NRF2 configuration service (For example: ocnrf-nrfconfiguration.ocnrf,1.1.1.1). It is also known as cluster_domain.

- g: Comma separated values of NRF1 and NRF2 port of configuration service (For example: 8080, 31001).

- h: Name_of_stub_service.namespace (notify-stub-service.ocnrf).

- i: Port of stub service (8080).

- j: NRF_Instance ID (6faf1bbc-6e4a-4454-a507-a14ef8e1bc5c).

- k: Name_of_Prometheus_service.namespace (occne-prometheus-server.occne-infra).

- l: Port of Prometheus service (80).

- m: Number of times a failed case can be re-run (default is 2).

- n: URI of the Prometheus service (/api/):

  - Use "/api/" for CNE versions up to 1.6.0 and "/prometheus/api/" for CNE versions 1.7.0 and 1.8.0.

  - For CNE 1.9.x, CNE 1.10.x, and CNE 22.1.0, the cluster name is needed in the URI. The URI must be <cluster_name>/prometheus/api, for example, /bmw/prometheus/api. For more details on the Prometheus URI, see the user guide for the corresponding CNE version.

  - If OSO is used, use "/api/" for OSO 1.6.1 or earlier and "/prometheus/api/" for OSO 1.6.2 or later.

  - For OSO 1.10.0, the URI must be <cluster_name>/prometheus/api, for example, /bmw/prometheus/api. For more details on the Prometheus URI, see the user guide for the corresponding OSO version.

- o: Helm_release_name used to deploy NRF

- p: Host name of MySQL (For example: mysql-connectivity-service)

- q: Privileged User secret name (For example: privilegeduser-secret)

- r: wait time before sending discovery request (0.5)

- s: comma-separated values of replicationStatusUri(s) of the appinfo(s).

- t: the cluster domain

- u: wait time before sending registration requests for write operations

- v: wait time before sending subscription requests

- w: wait time before sending accesstoken requests

- x: wait time before sending registration requests for read operation
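The version rules for option n can be condensed into a small lookup. This is a sketch only: prometheus_uri is a hypothetical helper, it covers just the CNE and OSO versions named above, it treats patch releases of CNE 1.6, 1.7, and 1.8 like the listed releases (an assumption), and it refuses any version the rules do not name, such as OSO 1.11, so that you check the corresponding user guide instead of guessing.

```bash
# prometheus_uri PLATFORM VERSION [CLUSTER_NAME]
# Hypothetical helper: prints the value for pipeline option -n.
# PLATFORM is CNE or OSO; CLUSTER_NAME is required for the cluster-scoped form.
prometheus_uri() {
  local platform=$1 version=$2 cluster=$3 form
  case "$platform:$version" in
    CNE:1.[0-6].*)                    form=api ;;
    CNE:1.7.*|CNE:1.8.*)              form=prom ;;
    CNE:1.9.*|CNE:1.10.*|CNE:22.1.0)  form=cluster ;;
    OSO:1.10.0)                       form=cluster ;;
    OSO:1.[0-5].*|OSO:1.6.[01])       form=api ;;
    OSO:1.6.*|OSO:1.[7-9].*)          form=prom ;;
    *) echo "no rule for $platform $version; see its user guide" >&2; return 1 ;;
  esac
  case $form in
    api)  echo "/api/" ;;
    prom) echo "/prometheus/api/" ;;
    cluster)
      [ -n "$cluster" ] || { echo "cluster name required for $platform $version" >&2; return 1; }
      echo "/$cluster/prometheus/api" ;;
  esac
}
```

For example, prometheus_uri OSO 1.10.0 bmw prints /bmw/prometheus/api, and prometheus_uri OSO 1.6.2 prints /prometheus/api/.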

Note:

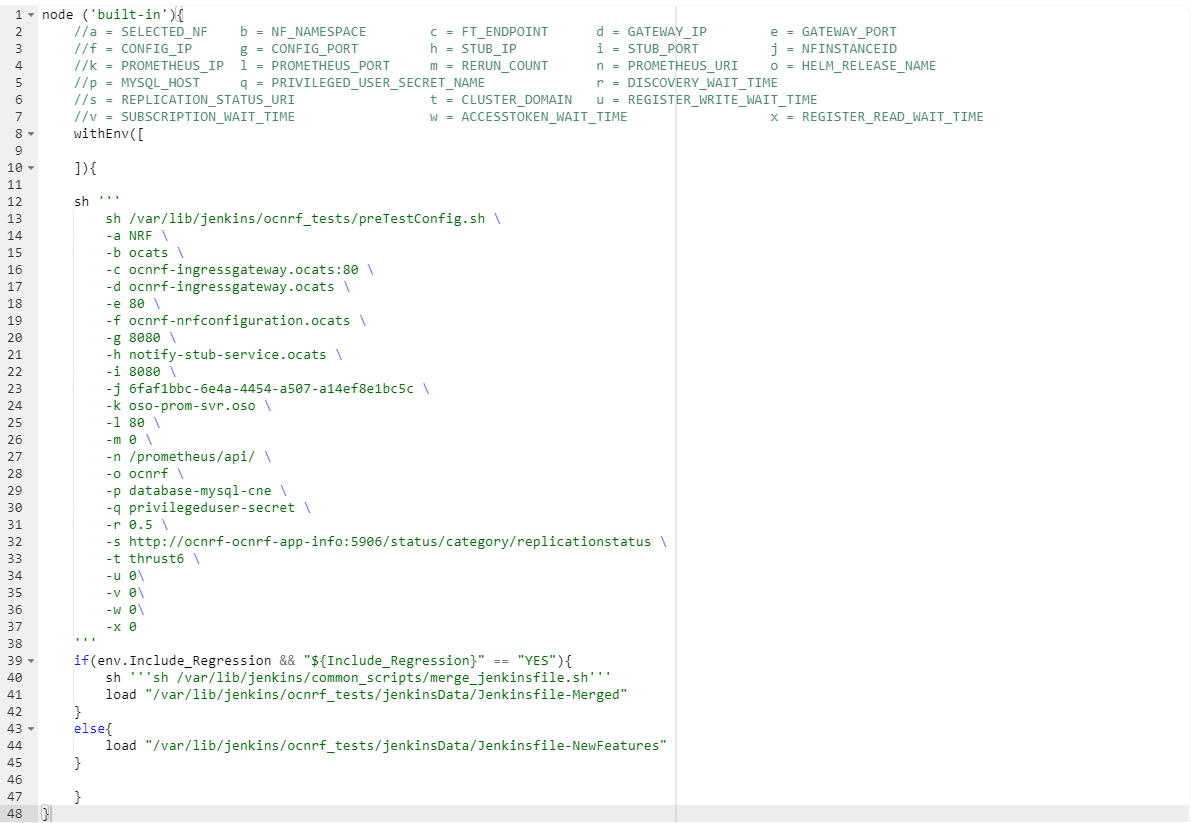

- If the 2-Site-Geo cases are run, provide values for NRF2 in the corresponding environment variables in the above script as per the deployment. A sample configuration is given below:

node ('master'){
    //a = SELECTED_NF b = NF_NAMESPACE c = FT_ENDPOINT d = GATEWAY_IP e = GATEWAY_PORT
    //f = CONFIG_IP g = CONFIG_PORT h = STUB_IP i = STUB_PORT j = NFINSTANCEID
    //k = PROMETHEUS_IP l = PROMETHEUS_PORT m = RERUN_COUNT n = PROMETHEUS_URI o = HELM_RELEASE_NAME
    //p = MYSQL_HOST q = PRIVILEGED_USER_SECRET_NAME r = DISCOVERY_WAIT_TIME
    //s = REPLICATION_STATUS_URI t = CLUSTER_DOMAIN u = REGISTER_WRITE_WAIT_TIME
    //v = SUBSCRIPTION_WAIT_TIME w = ACCESSTOKEN_WAIT_TIME x = REGISTER_READ_WAIT_TIME
    sh '''
    sh /var/lib/jenkins/ocnrf_tests/preTestConfig.sh \
    -a NRF \
    -b occne-cndbtierone,occne-cndbtiertwo \
    -c ocnrf-ingressgateway.ocnrf.svc.cluster.local:80,ocnrf-1-ingressgateway.ocnrf.svc.cluster.local:80 \
    -d ocnrf-ingressgateway.occne-cndbtierone,ocnrf-1-ingressgateway.occne-cndbtiertwo \
    -e 80,80 \
    -f ocnrf-nrfconfiguration.occne-cndbtierone,ocnrf-1-nrfconfiguration.occne-cndbtiertwo \
    -g 8080,8080 \
    -h notify-stub-service.occne-cndbtierone,notify-stub-service02.occne-cndbtierone \
    -i 8080,8080 \
    -j 6faf1bbc-6e4a-4454-a507-a14ef8e1bc5c,6faf1bbc-6e4a-4454-a507-a14ef8e1bc5d \
    -k oso-prom-svr.oso \
    -l 80 \
    -m 2 \
    -n /prometheus/api/ \
    -o ocnrf,ocnrf-1 \
    -p mysql-connectivity-service \
    -q privilegeduser-secret \
    -r 0.5 \
    -s http://ocnrf-ocnrf-app-info:5906/status/category/replicationstatus,http://ocnrf-1-ocnrf-app-info:5906/status/category/replicationstatus \
    -t svc.cluster.local \
    -u 0 \
    -v 0 \
    -w 0 \
    -x 0
    '''
    if(env.Include_NewFeatures && "${Include_NewFeatures}" == "YES"){
        sh '''sh /var/lib/jenkins/common_scripts/merge_jenkinsfile.sh'''
        load "/var/lib/jenkins/ocnrf_tests/jenkinsData/Jenkinsfile-Merged"
    }
    else{
        load "/var/lib/jenkins/ocnrf_tests/jenkinsData/Jenkinsfile-Regression"
    }
}

- If the 3SiteGeo cases and the georedundancy suite in the Regression pipeline are run, provide values for NRF2 and NRF3 in the corresponding environment variables in the above script as per the deployment. A sample configuration is given below:

node ('master'){
    //a = SELECTED_NF b = NF_NAMESPACE c = FT_ENDPOINT d = GATEWAY_IP e = GATEWAY_PORT
    //f = CONFIG_IP g = CONFIG_PORT h = STUB_IP i = STUB_PORT j = NFINSTANCEID
    //k = PROMETHEUS_IP l = PROMETHEUS_PORT m = RERUN_COUNT n = PROMETHEUS_URI o = HELM_RELEASE_NAME
    //p = MYSQL_HOST q = PRIVILEGED_USER_SECRET_NAME r = DISCOVERY_WAIT_TIME
    //s = REPLICATION_STATUS_URI t = CLUSTER_DOMAIN u = REGISTER_WRITE_WAIT_TIME
    //v = SUBSCRIPTION_WAIT_TIME w = ACCESSTOKEN_WAIT_TIME x = REGISTER_READ_WAIT_TIME
    sh '''
    sh /var/lib/jenkins/ocnrf_tests/preTestConfig.sh \
    -a NRF \
    -b occne-cndbtierone,occne-cndbtiertwo,occne-cndbtierthree \
    -c ocnrf-ingressgateway.ocnrf.svc.cluster.local:80,ocnrf-1-ingressgateway.ocnrf.svc.cluster.local:80,ocnrf-2-ingressgateway.ocnrf.svc.cluster.local:80 \
    -d ocnrf-ingressgateway.occne-cndbtierone,ocnrf-1-ingressgateway.occne-cndbtiertwo,ocnrf-2-ingressgateway.occne-cndbtierthree \
    -e 80,80,80 \
    -f ocnrf-nrfconfiguration.occne-cndbtierone,ocnrf-1-nrfconfiguration.occne-cndbtiertwo,ocnrf-2-nrfconfiguration.occne-cndbtierthree \
    -g 8080,8080,8080 \
    -h notify-stub-service.occne-cndbtierone,notify-stub-service02.occne-cndbtierone,notify-stub-service03.occne-cndbtierone \
    -i 8080,8080,8080 \
    -j 6faf1bbc-6e4a-4454-a507-a14ef8e1bc5c,6faf1bbc-6e4a-4454-a507-a14ef8e1bc5d,6faf1bbc-6e4a-4454-a507-a14ef8e1bc5e \
    -k oso-prom-svr.oso \
    -l 80 \
    -m 2 \
    -n /prometheus/api/ \
    -o ocnrf,ocnrf-1,ocnrf-2 \
    -p mysql-connectivity-service \
    -q privilegeduser-secret \
    -r 0.5 \
    -s http://ocnrf-ocnrf-app-info:5906/status/category/replicationstatus,http://ocnrf-1-ocnrf-app-info:5906/status/category/replicationstatus,http://ocnrf-2-ocnrf-app-info:5906/status/category/replicationstatus \
    -t svc.cluster.local \
    -u 0 \
    -v 0 \
    -w 0 \
    -x 0
    '''
    if(env.Include_NewFeatures && "${Include_NewFeatures}" == "YES"){
        sh '''sh /var/lib/jenkins/common_scripts/merge_jenkinsfile.sh'''
        load "/var/lib/jenkins/ocnrf_tests/jenkinsData/Jenkinsfile-Merged"
    }
    else{
        load "/var/lib/jenkins/ocnrf_tests/jenkinsData/Jenkinsfile-Regression"
    }
}

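In the 2-site and 3-site sample configurations, options b, c, d, e, f, g, h, i, j, o, and s carry one comma-separated entry per NRF site, so a mismatched count is an easy mistake when editing the script. The sketch below uses hypothetical helper names (count_csv, check_site_values) and checks only that each value has the expected number of entries before you save the pipeline; it does not validate the values themselves.

```bash
# count_csv VALUE: prints the number of comma-separated entries in VALUE.
count_csv() {
  local commas
  commas=$(printf '%s' "$1" | tr -cd ',' | wc -c)
  echo $(( commas + 1 ))
}

# check_site_values SITES VALUE...: hypothetical helper. Reports every VALUE
# whose entry count differs from SITES and returns non-zero if any differ.
check_site_values() {
  local sites=$1 value n rc=0
  shift
  for value in "$@"; do
    n=$(count_csv "$value")
    if [ "$n" -ne "$sites" ]; then
      echo "expected $sites entries, found $n: $value" >&2
      rc=1
    fi
  done
  return "$rc"
}
```

For example, for the 3-site sample: check_site_values 3 occne-cndbtierone,occne-cndbtiertwo,occne-cndbtierthree 80,80,80 ocnrf,ocnrf-1,ocnrf-2 returns success, while passing 80,80 with a site count of 3 prints a mismatch.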
Note:

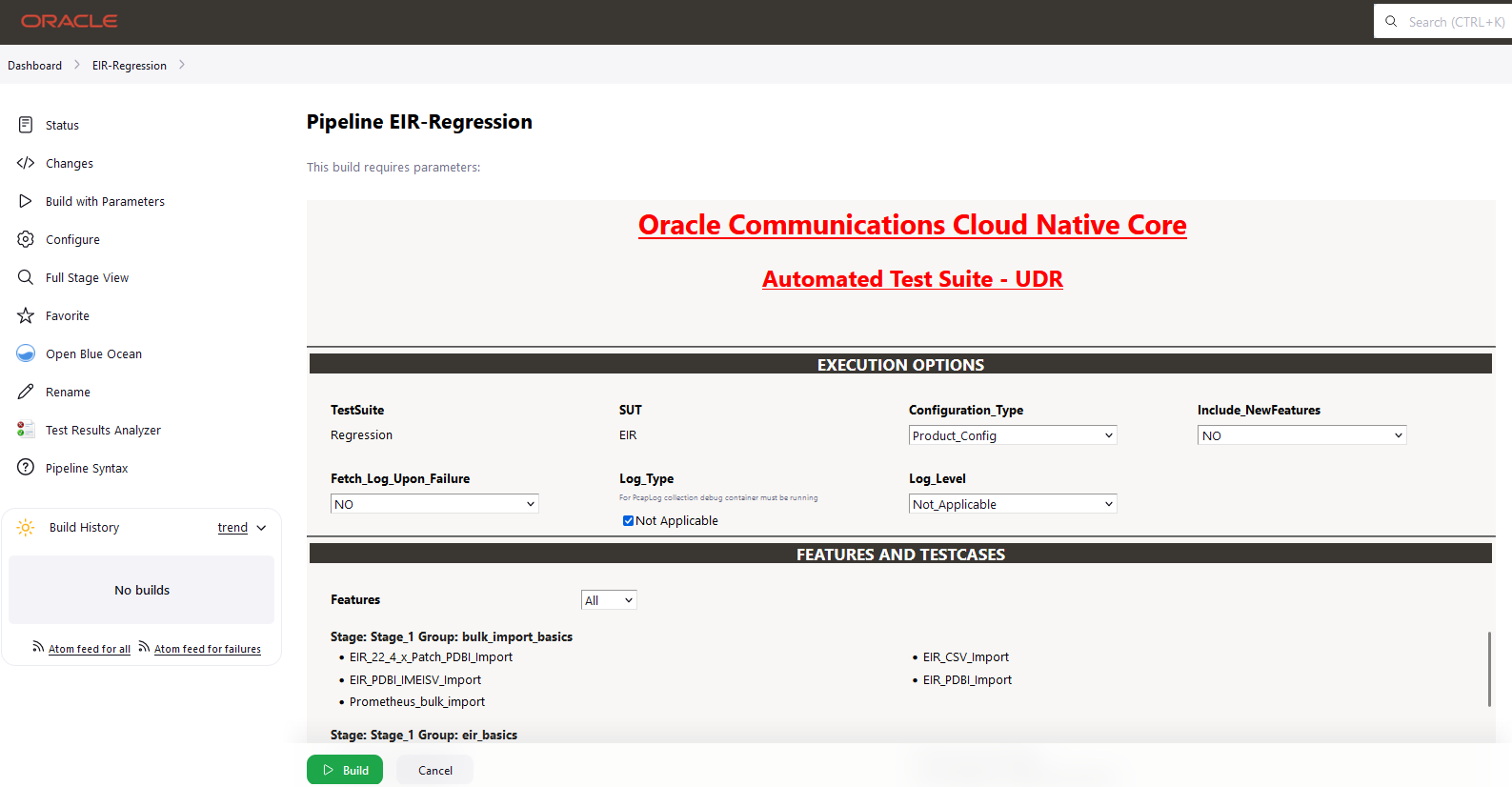

If you are using CNE 1.6, or OSO 1.6.1 or earlier, change the Prometheus service URI (-n) to "/api/" and set the Prometheus service name and port to appropriate values. For CNE 1.7.0, or OSO 1.6.2 or later, retain the default value "/prometheus/api/". If OSO 1.10.0 is used, include the cluster name in the URI (for example, /bmw/prometheus/api).

- Click Save after making the necessary changes. The NRF-NewFeatures screen appears. Click the Build with Parameters link available in the left navigation pane of the NRF-NewFeatures Pipeline screen.

In the Pipeline screen, there are three Select_Option(s), which are:

- All: This is the default option. It runs all the NRF test cases. Scroll down and click Build to execute all the test cases.

- Sanity: It is recommended to run sanity before running any test case. It ensures all the deployments are done properly.

- Single/MultipleFeatures: This option allows you to select any number of test cases to run from the list of all test cases available for execution.

After selecting the test cases, scroll down and click Build to run the selected NRF test cases.

- Select one of the following configuration types:

- Product_Config: On selecting this option, test cases from product folders are populated on ATS UI and product configuration is applied to them via the key-value pair and yaml files defined or present in the "Product Config" folder.

- Custom_Config: On selecting this option, test cases from custom folders are populated on ATS UI and custom configuration is applied to them via the key-value pair and yaml files defined or present in the "Custom Config" folder. To use the Parameterization feature, always select the Custom_Config option. You can copy, add, or delete the required test cases that are available for NRF and place them appropriately within the custom folder for NRF-NewFeatures. Reload the page to view the test cases available in the custom NewFeatures folder.

For more information, see Parameterized approach for SUT custom configuration.

- NRF Sanity - This feature file contains all the basic sanity cases that NRF ATS uses to validate whether the deployment is correct. Run these cases before starting a complete suite.

- Discovery - These feature files are listed with a prefix as "Disc". All the discovery microservice related cases will be listed once this option is selected.

- NRF Functional - These feature files are listed with a prefix as "Feat". All the functional cases will be listed once this option is selected.

- Registration - These feature files are listed with a prefix as "Upd". These are related to update operation of registered profiles. All the registration cases will be listed once this option is selected.

- Subscription - These feature files are listed with a prefix as "Subs". All the subscription microservice related cases will be listed once this option is selected.

- Roaming - These feature files are listed with a prefix as "Roaming". All the roaming cases will be listed once this option is selected.

Figure 4-21 Sample Screen: NRF-ATS Full Execution

Figure 4-22 Test Cases Result - Sanity

Note:

For more details on the consolidated test report, see Managing Final Summary Report, Build Color, and Application Log.

Figure 4-23 Test Cases Result - All-NewFeatures

Parameterized approach for SUT custom configuration

Using this approach, you can:

- Add new test cases by adding datafiles

- Remove test cases

- Modify the parameters and their values in the key-value pair or <feature>.yaml files

You can keep multiple custom configuration folders named Cust ConfigN, where N can be any number.

At the time of execution, rename the required folder to Cust Config, because Jenkins always retrieves data from this folder when you select Custom_Config.

To use Custom_Config, change the value of cust_folder from data to cust_data in the global.yaml file. In addition, you can customize the parameters and their respective values in global.yaml as per your requirements.
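The cust_folder change can be made with sed. This is a sketch under stated assumptions: use_custom_folder is a hypothetical helper, and it assumes that the key appears unquoted on its own line as cust_folder: data. Other lines are left untouched, and running the helper a second time changes nothing.

```bash
# use_custom_folder FILE
# Hypothetical helper: rewrites "cust_folder: data" to "cust_folder: cust_data"
# in FILE. Assumes the value is unquoted and alone on its line.
use_custom_folder() {
  local file=$1
  [ -f "$file" ] || { echo "not found: $file" >&2; return 1; }
  # Keep the key and any spacing (group 1); replace only the value "data".
  sed 's/^\([[:space:]]*cust_folder:[[:space:]]*\)data[[:space:]]*$/\1cust_data/' \
    "$file" > "$file.tmp" && mv "$file.tmp" "$file"
}
```

Pass the path of the global.yaml file you are editing, for example use_custom_folder ./global.yaml.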

<feature>.yaml files for a parameterized feature:

- In addition to global.yaml parameters, feature files may also contain parameters whose values you can update at the time of running pipelines.

- Changing the values of parameters tagged as "Feature Specific Value" may cause failures at the time of running pipelines.

- Parameters enclosed between the #START_GLOBAL and #END_GLOBAL tags take their values from global.yaml.

Note:

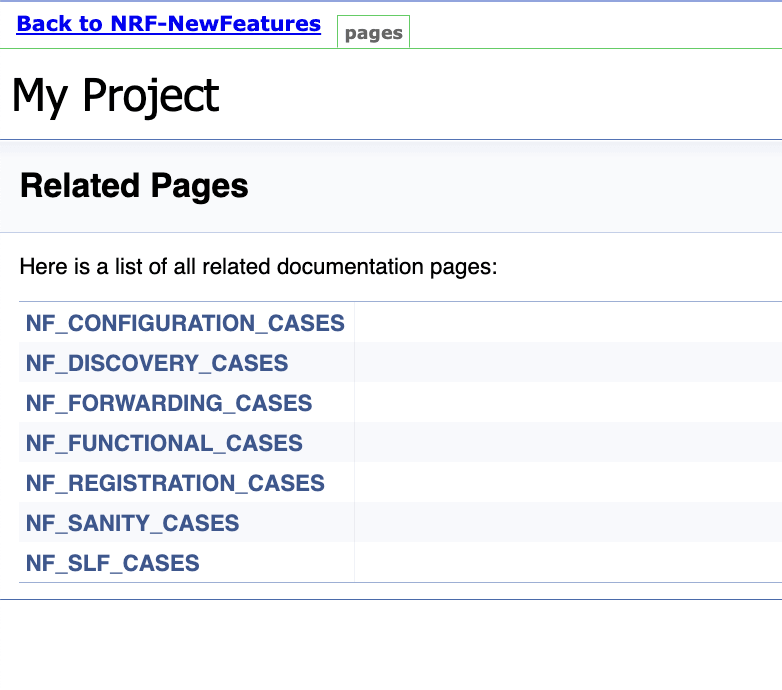

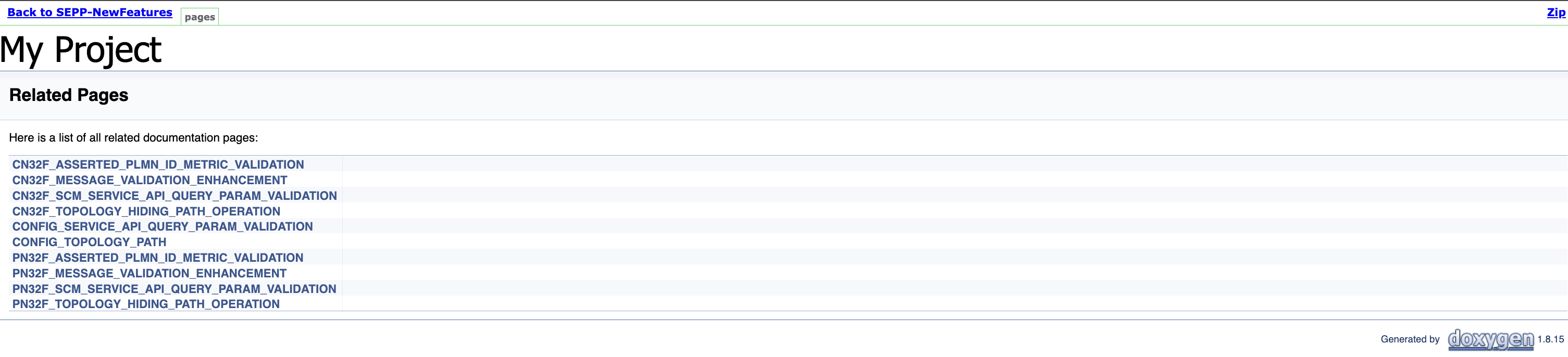

For NRF-ATS release 25.1.200, parameterization is supported only for the NRF-NewFeatures pipeline.

4.2.4 NRF-NewFeatures Documentation

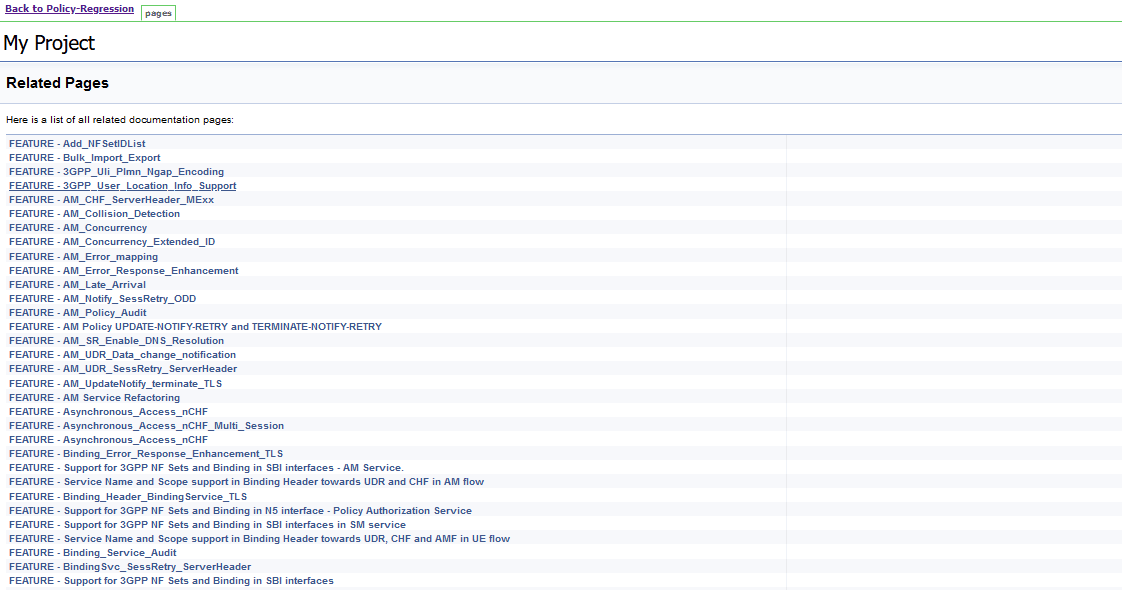

To view NRF test cases, go to the NRF-NewFeatures pipeline and click the Documentation link in the left navigation pane. It lists all the test cases provided as part of NRF ATS 25.1.200, along with the sanity cases.

The NRF test cases are divided into multiple groups based on the functionality.

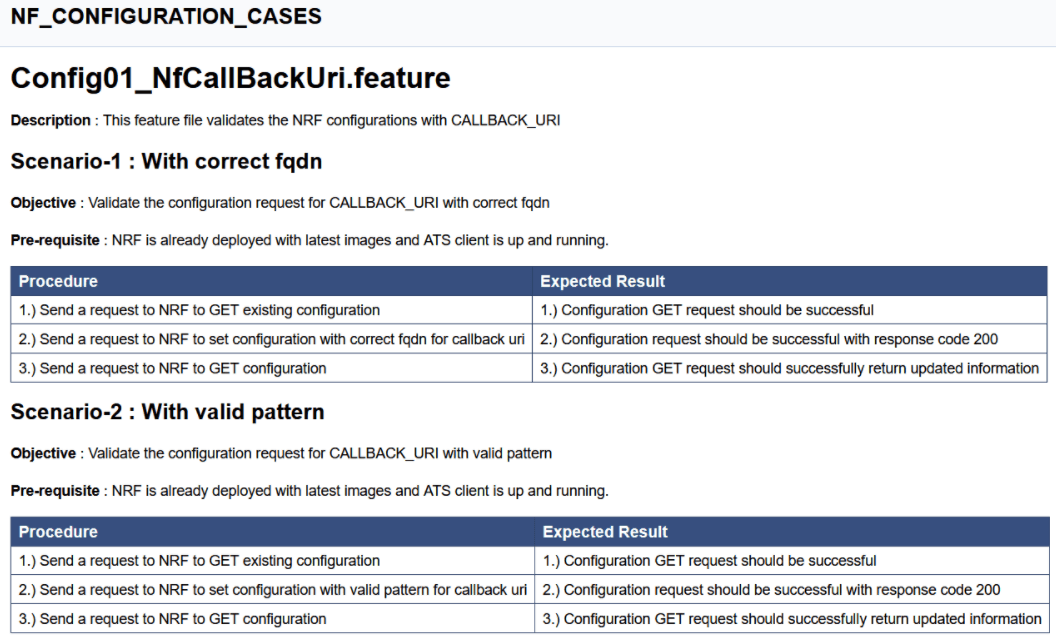

- NF_CONFIGURATION_CASES - All the cases related to NRF configuration will be listed once this option is selected.

- NF_DISCOVERY_CASES - All the discovery microservice related cases will be listed once this option is selected.

- NRF_FORWARDING_CASES - All the forwarding related cases will be listed once this option is selected.

- NF_FUNCTIONAL_CASES - All the functional cases will be listed once this option is selected.

- NF_REGISTRATION_CASES - All the registration cases will be listed once this option is selected.

- NF_SANITY_CASES - All the sanity cases will be listed once this option is selected.

- NF_SLF_CASES - All the SLF related cases will be listed once this option is selected.

Figure 4-24 NRF-NewFeatures Documentation

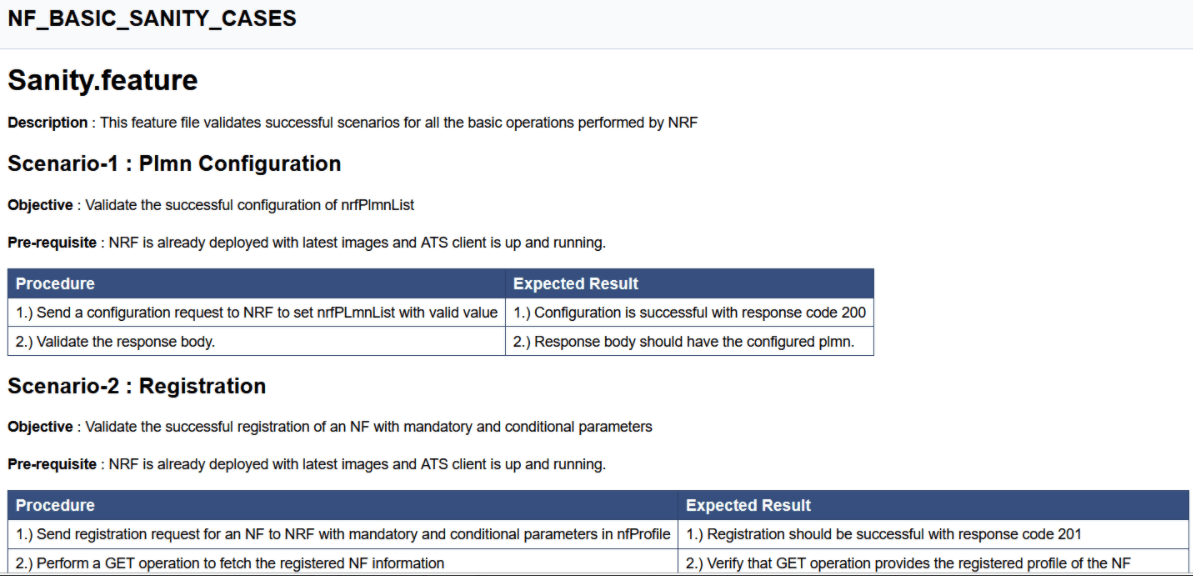

Figure 4-25 Sample Feature: NF_BASIC_SANITY_CASES

Based on the functionalities covered under Documentation, the Build Requires Parameters screen displays test cases. To navigate back to the Pipeline NRF-NewFeatures screen, click the Back to NRF-NewFeatures link in the top left corner of the screen.

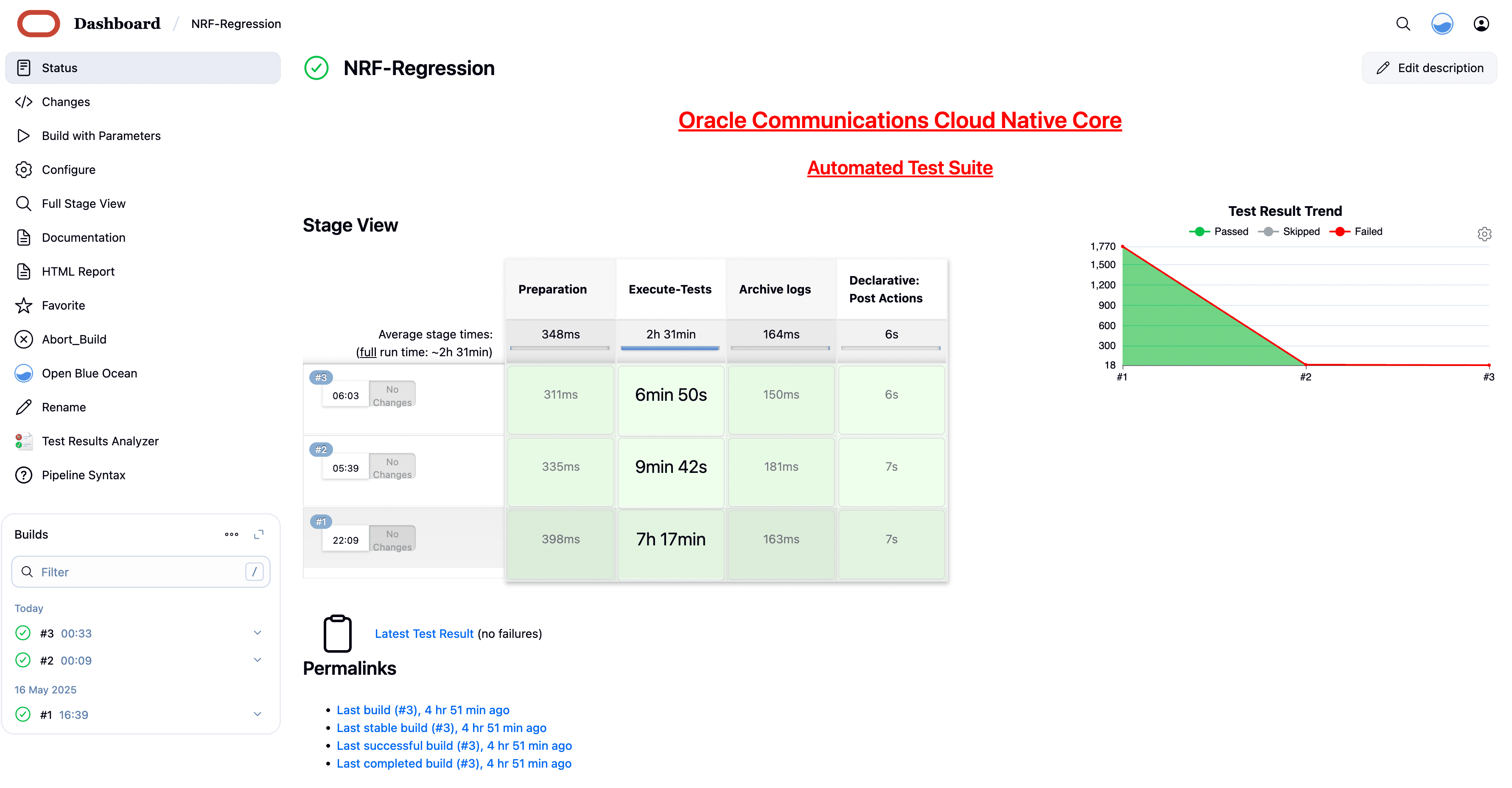

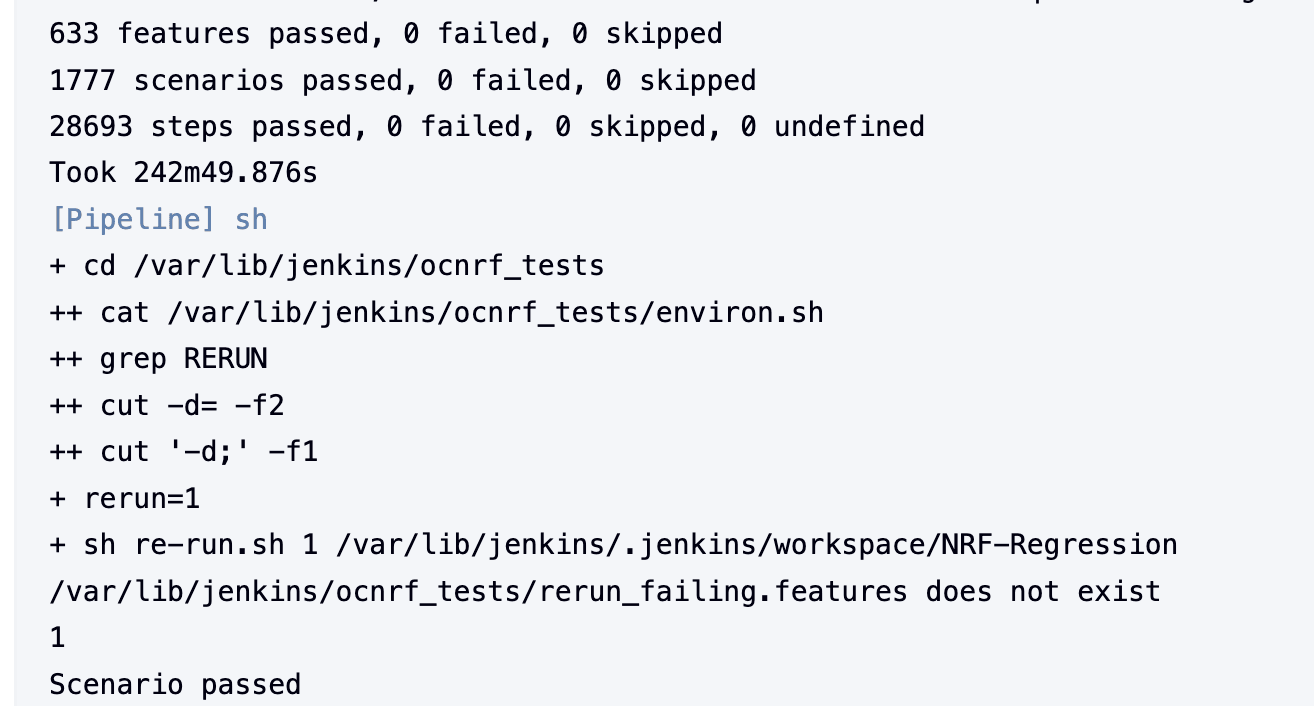

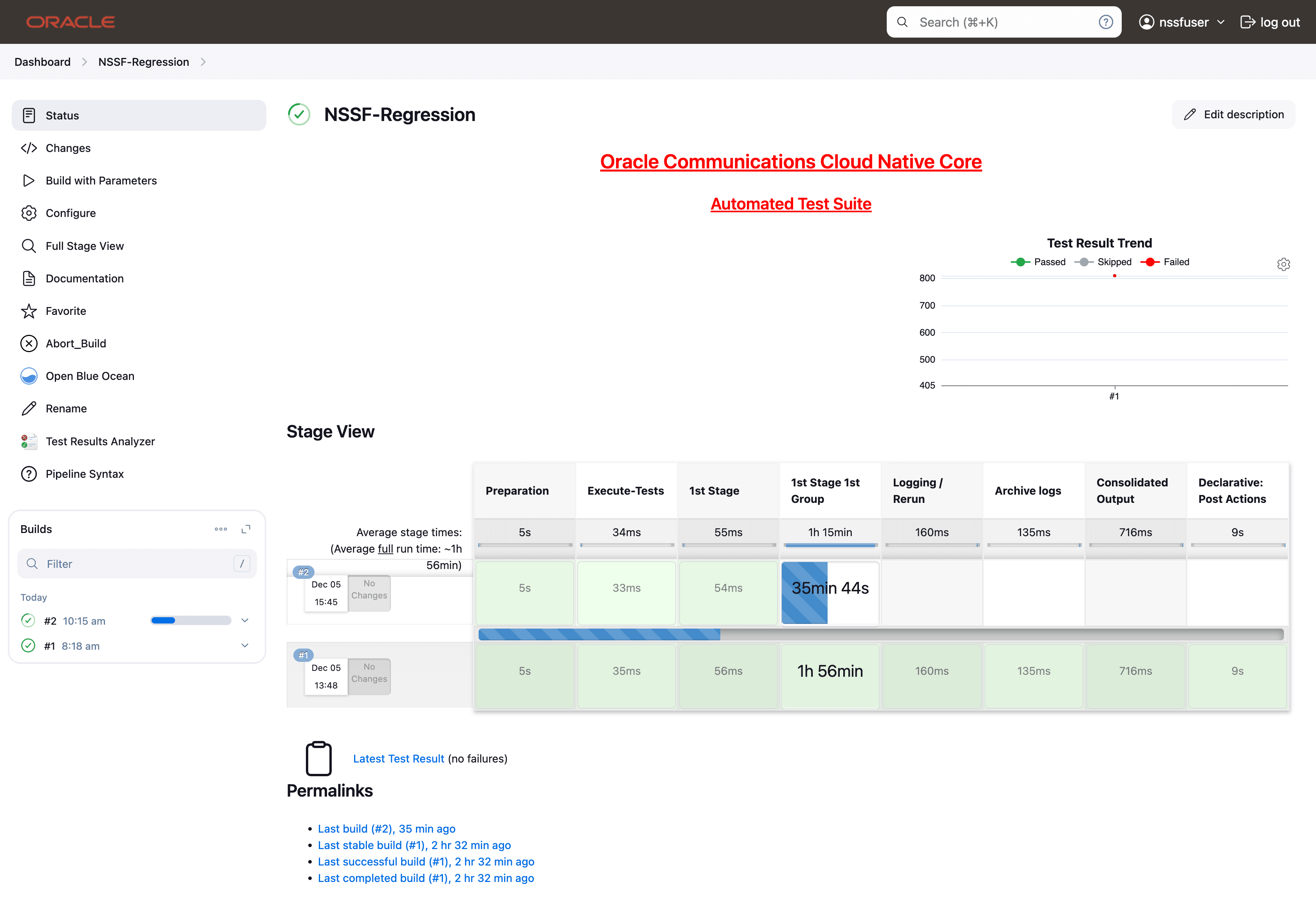

4.2.5 NRF-Regression Pipeline

This pre-configured pipeline contains all the test cases delivered up to NRF ATS 25.1.200. Some of these test cases have been updated to reflect the new NRF implementation.

The configuration method and parameters are the same as for the NewFeatures pipeline.

The only difference is that, when running 2-Site-Geo and 3-Site-Geo test cases, you must provide appropriate values for NRF2 and NRF3 in the pipeline script. This pipeline does not provide the option to run Sanity.

- 2-Site-Geo - These feature files are listed with a prefix as "Geo_Redundancy".

- 3-Site-Geo - These feature files contain all the cases which are executed on 3-Site GEO NRF.

- CCA-Header - These feature files contain all cases related to the CCA Header feature.

- NF-FQDN-Authentication - These feature files are listed with a prefix as "NfAuthentication".

- AccessToken - These feature files are listed with a prefix as "oAuth" and "AccessToken".

- Alerts - These feature files are listed with a prefix as "Alert".

- Configuration - These feature files are listed with a prefix as "Config" and "SystemOptions".

- Discovery - These feature files are listed with a prefix as "Disc".

- NRF Forwarding - These feature files are listed with a prefix as "Forwarding".

- NRF Functional - These feature files are listed with a prefix as "Feat".

- Key-ID - These feature files are listed with a prefix as "Kid".

- NRF State Data - These feature files are listed with a prefix as "State".

- Preferred Locality - These feature files are listed with a prefix as "Pref".

- Registration - These feature files are listed with a prefix as "HBTimerEnhancement", "Reg" and "Upd". These are related to update operation of registered profiles.

- NRF SLF - These feature files are listed with a prefix as "SLF".

- Subscription - These feature files are listed with a prefix as "Subs".

- NRF Growth - These feature files contain all cases related to NRF Growth feature.

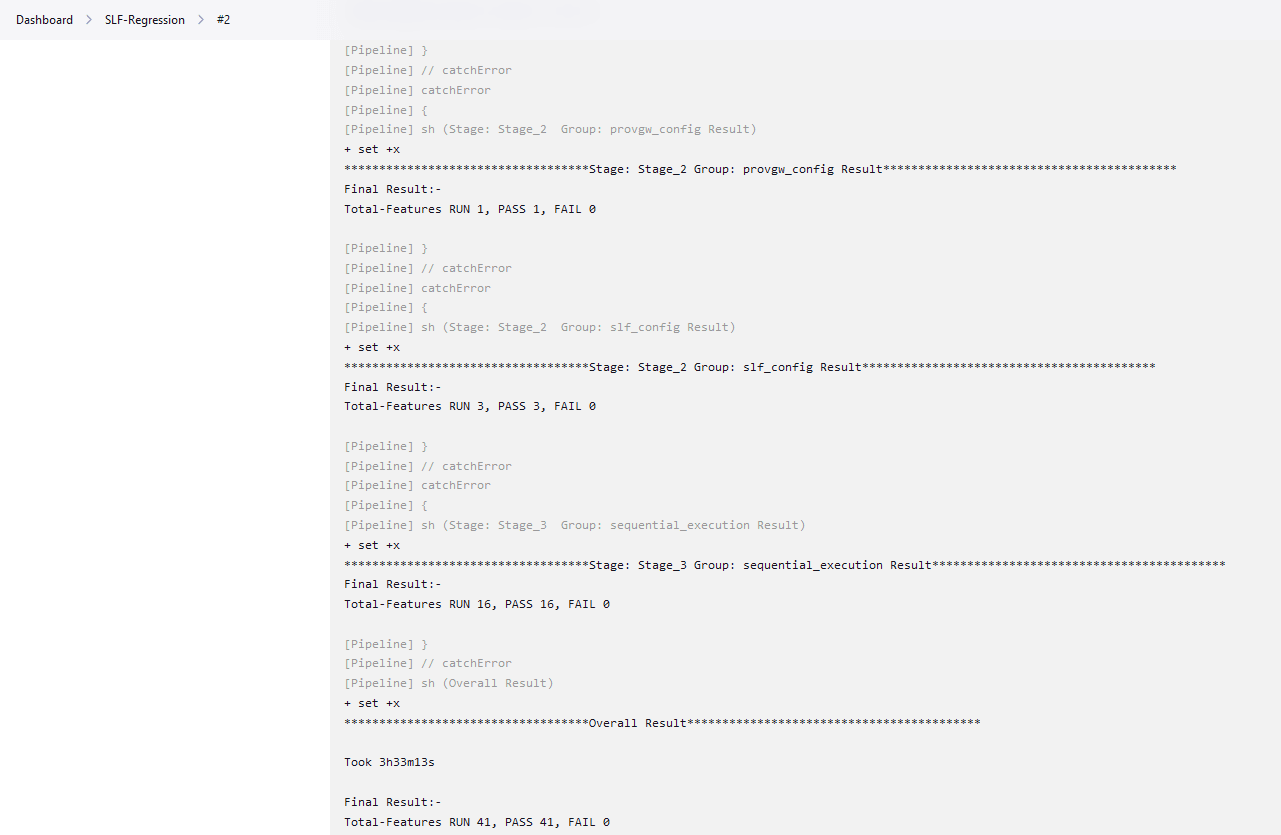

Figure 4-26 NRF-Regression

Figure 4-27 NRF-Regression - All-default-Regression

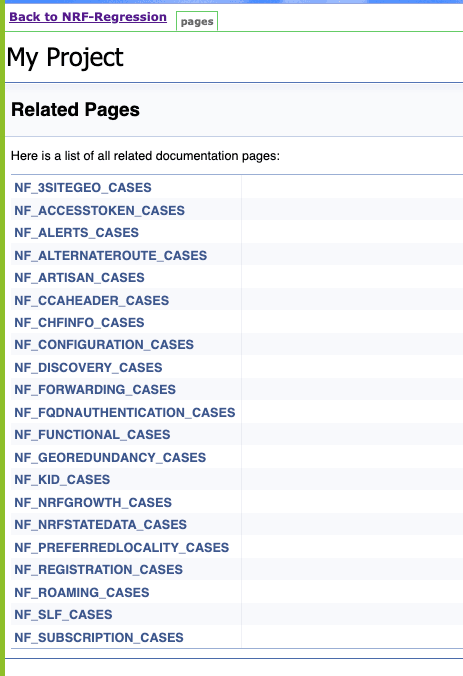

4.2.6 NRF-Regression Documentation

Click Documentation in the left navigation pane of the NRF-Regression pipeline to view all the test cases provided up to NRF ATS 25.1.200.

- NF_3SITEGEO_CASES - All the 3-Site Geo-Redundancy related cases will be listed once this option is selected.

- NF_ACCESSTOKEN_CASES - All the test cases for validating accesstoken operation will be listed once this option is selected.

- NF_ALERTS_CASES - Test case for validating alerts will be listed once this option is selected.

- NF_ALTERNATEROUTE_CASES - All the alternate route microservice related cases will be listed once this option is selected.

- NF_ARTISAN_CASES - All the NRF artisan microservice related cases will be listed once this option is selected.

- NF_CCAHEADER_CASES - Cases related to CCAHeader feature will be listed once this option is selected.

- NF_CHFINFO_CASES - All the ChfInfo enhancement cases will be listed once this option is selected.

- NF_CONFIGURATION_CASES - Cases related to NRF configuration will be listed once this option is selected.

- NF_DISCOVERY_CASES - All the discovery microservice related cases will be listed once this option is selected.

- NF_FORWARDING_CASES - All the forwarding related cases will be listed once this option is selected.

- NF_FQDNAUTHENTICATION_CASES - All the NF FQDN authentication cases will be listed once this option is selected.

- NF_FUNCTIONAL_CASES - All the functional cases will be listed once this option is selected.

- NF_GEOREDUNDANCY_CASES - All the Geo-Redundancy related cases (2-Site-GEO and 3-Site-GEO both) will be listed once this option is selected.

- NF_KID_CASES - All the K-ID feature related cases will be listed once this option is selected.

- NF_NRFGROWTH_CASES - All growth related cases will be listed once this option is selected.

- NF_NRFSTATE_DATA_CASES - All the nfStateData related cases will be listed once this option is selected.

- NF_PREFERRED_LOCALITY_CASES - All the preferred locality feature related cases will be listed once this option is selected.

- NF_REGISTRATION_CASES - All the registration related cases will be listed once this option is selected.

- NF_ROAMING_CASES - All the roaming related cases will be listed once this option is selected.

- NF_SLF_CASES - All the SLF related cases will be listed once this option is selected.

- NF_SUBSCRIPTION_CASES - All subscription related cases will be listed once this option is selected.

Figure 4-28 NRF-Regression Documentation

Figure 4-29 Sample Screen: NRF-Regression Documentation

4.3 Running NSSF Test Cases using ATS

This section describes how to run NSSF test cases using ATS.

4.3.1 Prerequisites

The prerequisites to run NSSF Test Cases using NSSF ATS 25.1.200 are:

- Deploy NSSF 25.1.200 with default helm configurations using helm charts.

- All NSSF microservices must be up and running.

- NSSF and ATS must be deployed in the same namespace.

- For NSSF ATS 25.1.200, deploy one stub server and the service name should be "amf-stubserver". It is required to run AMF-subscription Notification functionality test cases.

- For NSSF ATS 25.1.200, deploy one stub server and the service name should be "nrf-stubserver". It is required to run NRF-subscription Notification functionality test cases.

- For NSSF ATS 25.1.200, deploy one stub server and the service name should be "nrf-stubserver1". It is required to run NRF-selection based on DNS SRV.

- For NSSF ATS 25.1.200, deploy one stub server and the service name should be "nrf-stubserver2". It is required to run NRF-selection based on DNS SRV.

- For NSSF 25.1.200, deploy one DNS stub server and the service name should be "ocdns-bind". It is required to run NRF-selection based on DNS SRV.
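Before running the NSSF pipelines, you can confirm that the stub services listed above exist. The sketch below is illustrative (missing_nssf_stubs is a hypothetical name): it reads service names, either bare or in the service/<name> form printed by kubectl get svc -o name, and prints each required stub service that is missing, so empty output means all five are present.

```bash
# Stub services named in the NSSF prerequisites.
REQUIRED_NSSF_STUBS="amf-stubserver nrf-stubserver nrf-stubserver1 nrf-stubserver2 ocdns-bind"

# missing_nssf_stubs: hypothetical helper. Reads service names on stdin
# ("service/<name>" or "<name>") and prints each required stub not found.
missing_nssf_stubs() {
  local present svc
  present=$(sed 's|^service/||')
  for svc in $REQUIRED_NSSF_STUBS; do
    # -x matches whole lines, so nrf-stubserver does not match nrf-stubserver1.
    printf '%s\n' "$present" | grep -qx "$svc" || echo "$svc"
  done
}
```

For example, with NSSF deployed in the ocnssf namespace: kubectl get svc -n ocnssf -o name | missing_nssf_stubs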

4.3.2 Logging into ATS

Before logging into ATS, deploy ATS using Helm charts and verify the deployment as shown below:

Verify ATS deployment

[opc@ocnssf-oci-phx-einstein-bastion-01 ~]$ helm status ocats
NAME: ocats
LAST DEPLOYED: Thu May 30 10:21:40 2024
NAMESPACE: cicdnssf-240530102024
STATUS: deployed
REVISION: 1
TEST SUITE: None

There are two ways to log in to ATS GUI.

- When an external load balancer (MetalLB in the case of OCCNE) is available and you provide an external IP to the ATS service, you can log in to ATS GUI using <External-IP>:8080.

- When you do not provide an external IP to the ATS service, open the browser and enter the external IP of the worker node and the nodeport of the ATS service to log in to the ATS GUI:

<Worker-Node-IP>:<Node-Port-of-ATS>

Note:

In the Verifying ATS Deployment screenshot, the ATS nodeport is highlighted in red as 32013. For more details on ATS deployment, refer to NSSF ATS Installation Procedure.
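If the ATS service is of type NodePort, the nodeport can also be read from the service instead of the screenshot. This sketch assumes that the ATS GUI is exposed on the first port of the service; ats_gui_url is a hypothetical helper, and the service name must be taken from your own deployment (for example, from the output of kubectl get svc -n <namespace>).

```bash
# ats_gui_url NODE_IP SERVICE NAMESPACE
# Hypothetical helper: reads the nodePort of the ATS service with kubectl and
# prints <Worker-Node-IP>:<NodePort>. Assumes the GUI is the service's first port.
ats_gui_url() {
  local node_ip=$1 svc=$2 ns=$3 port
  port=$(kubectl get svc "$svc" -n "$ns" -o jsonpath='{.spec.ports[0].nodePort}') || return 1
  [ -n "$port" ] || { echo "no nodePort found for service $svc" >&2; return 1; }
  printf '%s:%s\n' "$node_ip" "$port"
}
```

With the example values from this section, ats_gui_url 10.98.101.177 <ATS-service-name> ocnssf would print 10.98.101.177:32013 if that service's nodeport is 32013.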

Open a browser and enter the IP Address and port details as <Worker-Node-IP>:<NodePort-of-ATS> (In the above example, the Worker-Node-IP and NodePort-of-ATS are 10.98.101.177:32013, which are shown as highlighted in the screenshot above).

The ATS login screen appears.

- Enter the username 'nssfuser' and the password 'nssfpasswd', and click Sign in. A page with preconfigured pipelines appears.

Note:

To modify the default login password, refer to Modifying Login Password.

- NSSF-NewFeatures: This pipeline has all the test cases delivered as part of NSSF ATS 25.1.200.

- NSSF-Regression: This pipeline has the test cases of all the previous releases.

4.3.3 NSSF-NewFeatures Pipeline

In this pipeline, you can configure ATS, which is a one-time activity as per System Under Test (SUT) deployment. You can also run all the new NSSF test cases using the pipeline. To configure its parameters:

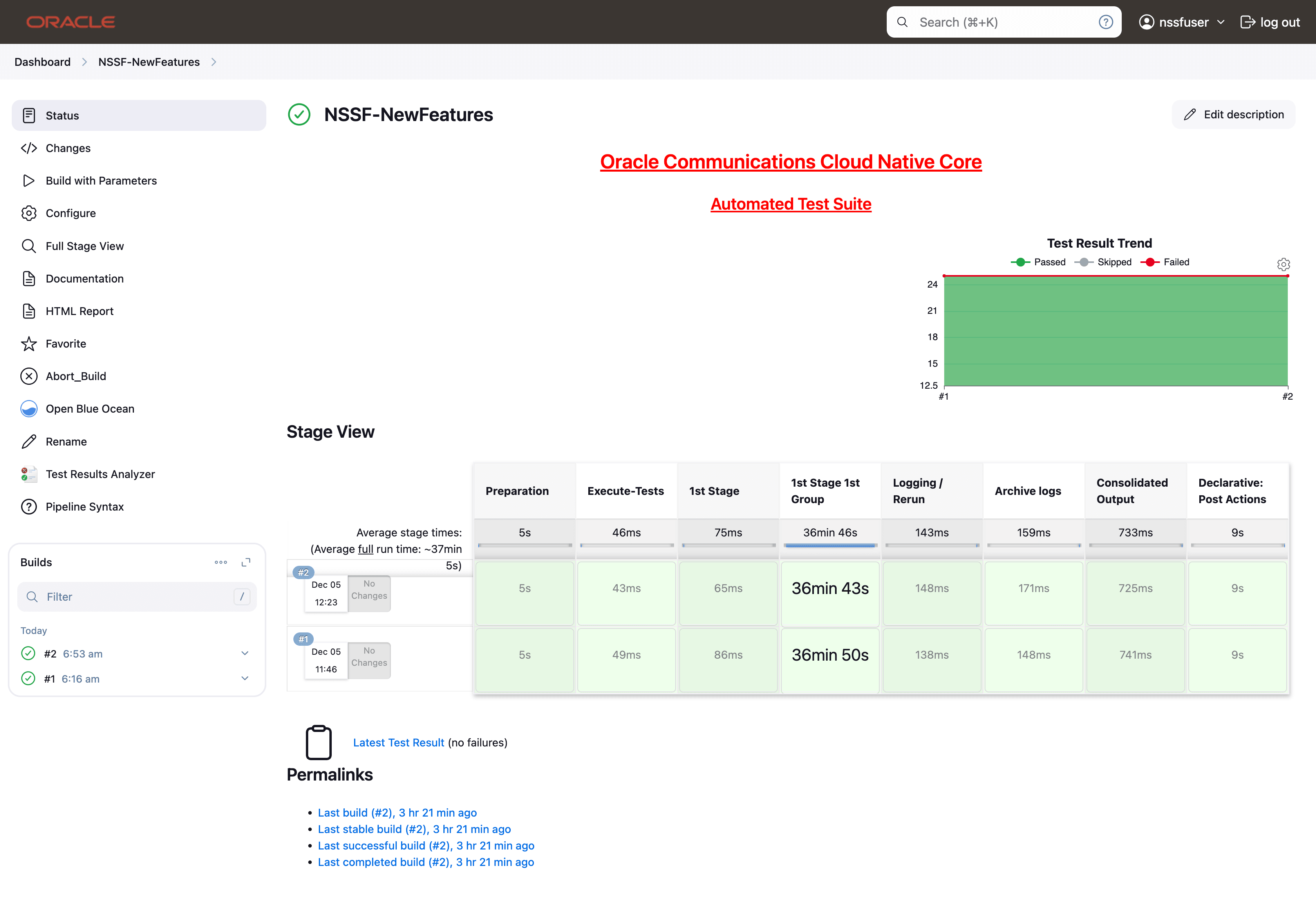

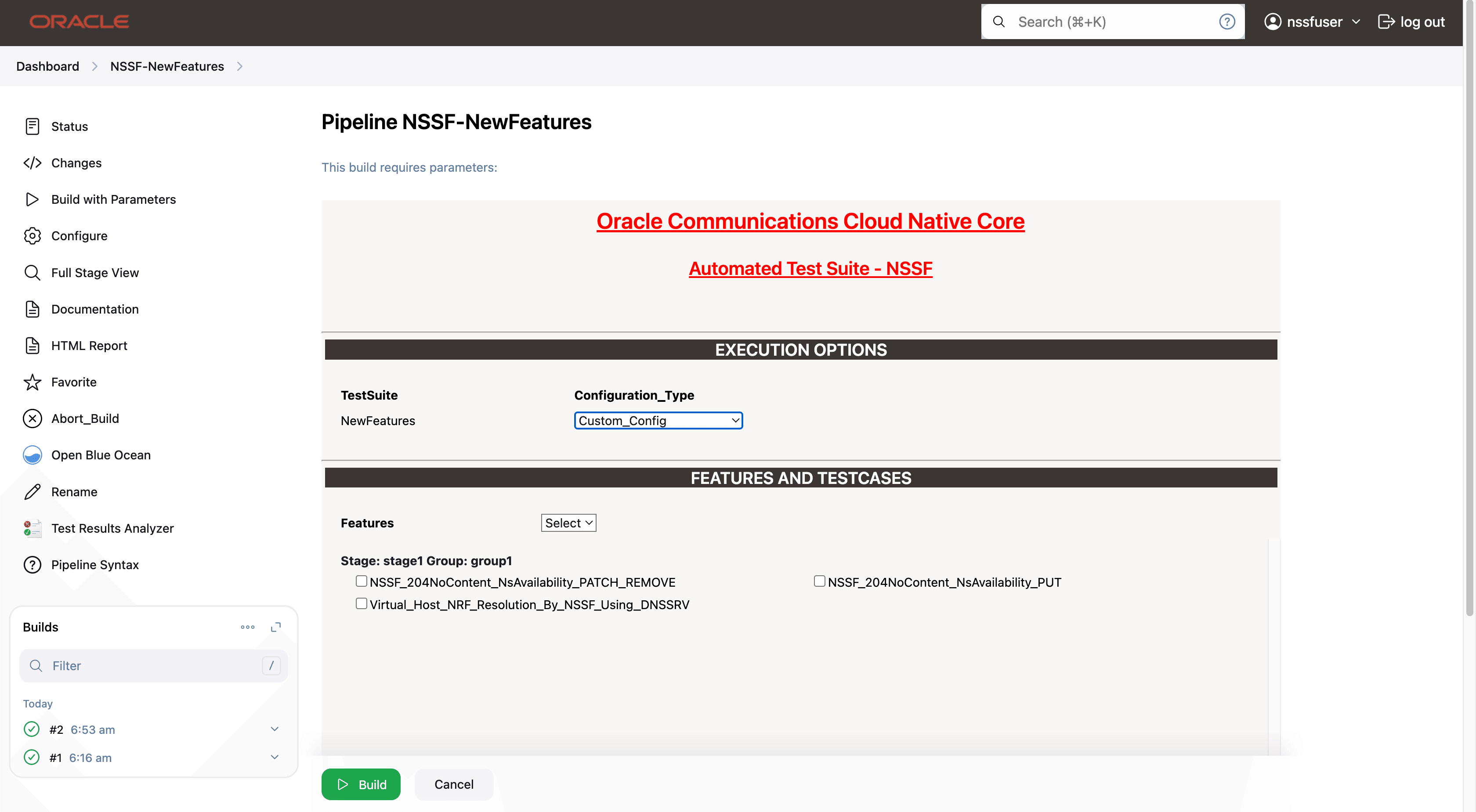

- Click NSSF-NewFeatures in the Name column. The following screen appears:

In the above screen:

- Click Configure to access the configuration screen.

- Click Documentation to view the documented test cases.

- Click the blue dots inside the Build History box to view the console logs of the successful "All" and "Sanity" runs, respectively.

- The Stage View represents the already run pipeline for customer reference.

- Click Configure. Users MUST wait for the page to load completely. Once the page loads completely, click the Pipeline tab to reach the Pipeline configuration as shown below:

WARNING:

Make sure that the screen shown above loads completely before you perform any action on it. Also, do not modify any configuration other than that discussed below.

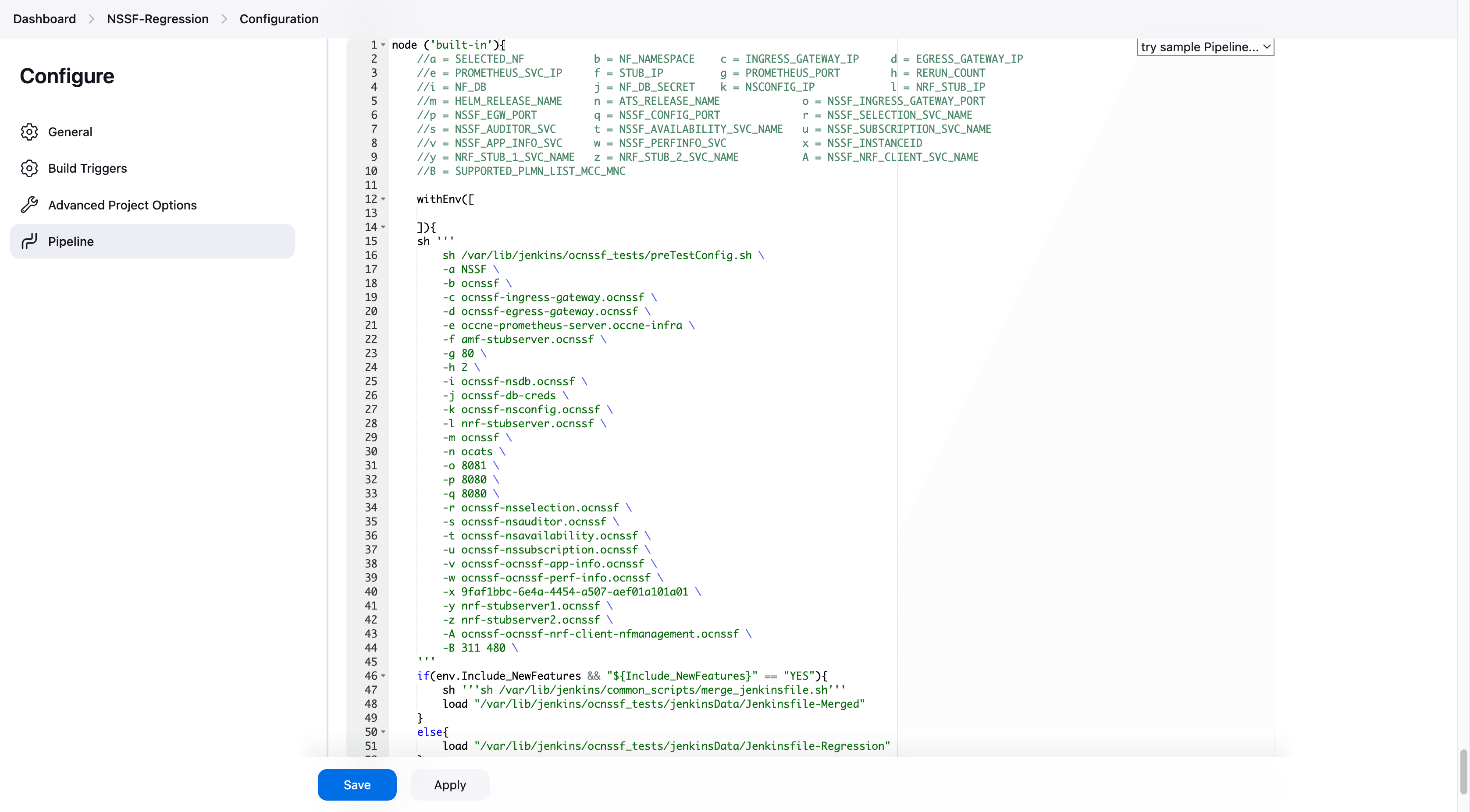

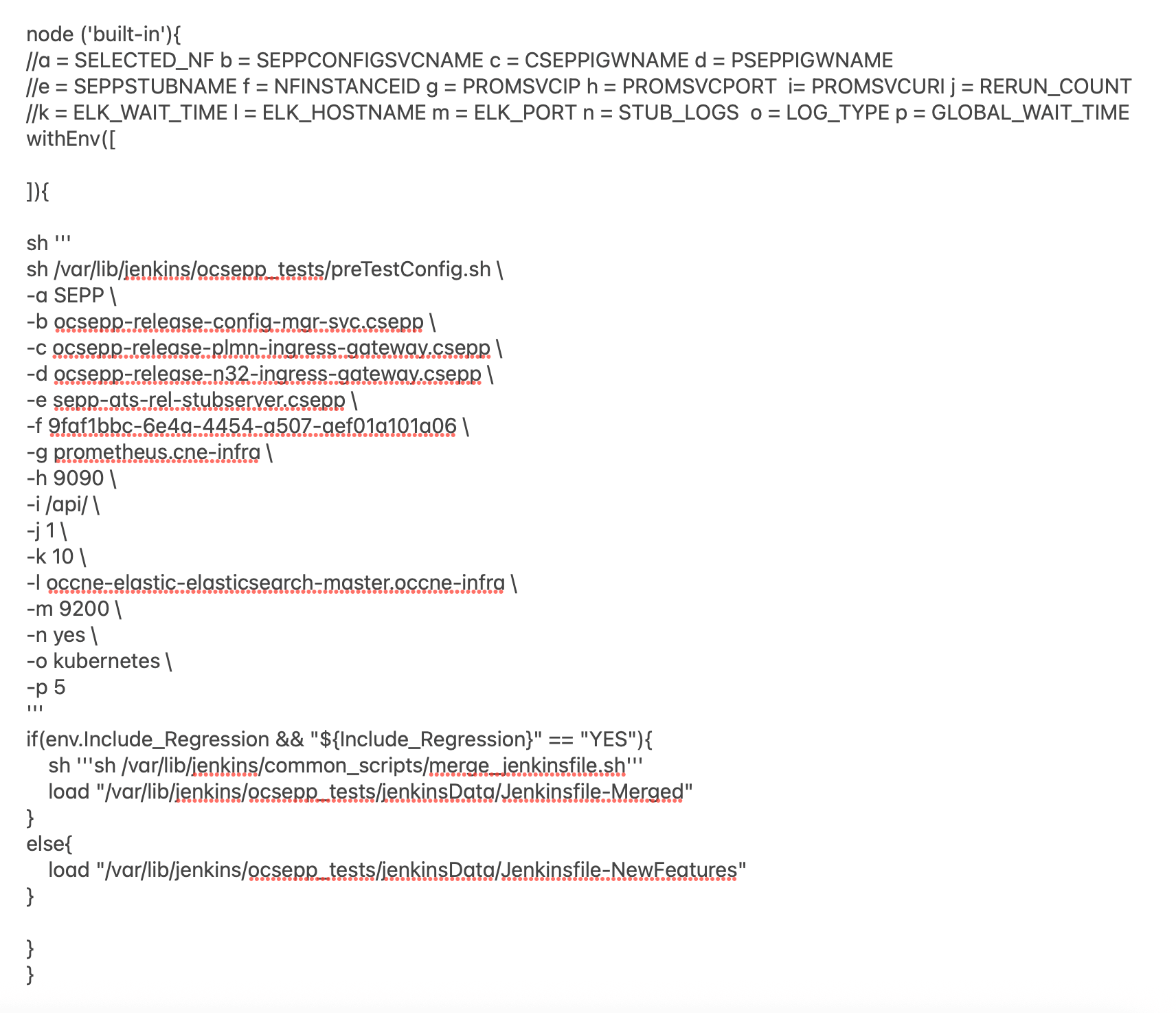

- You can modify the pipeline script parameters "a" to "B" based on your deployment environment and click Save. The content of the pipeline script is as follows:

node ('built-in'){
    //a = SELECTED_NF b = NF_NAMESPACE c = INGRESS_GATEWAY_IP d = EGRESS_GATEWAY_IP
    //e = PROMETHEUS_SVC_IP f = STUB_IP g = PROMETHEUS_PORT h = RERUN_COUNT
    //i = NF_DB j = NF_DB_SECRET k = NSCONFIG_IP l = NRF_STUB_IP
    //m = HELM_RELEASE_NAME n = ATS_RELEASE_NAME o = NSSF_INGRESS_GATEWAY_PORT
    //p = NSSF_EGW_PORT q = NSSF_CONFIG_PORT r = NSSF_SELECTION_SVC_NAME
    //s = NSSF_AUDITOR_SVC t = NSSF_AVAILABILITY_SVC_NAME u = NSSF_SUBSCRIPTION_SVC_NAME
    //v = NSSF_APP_INFO_SVC w = NSSF_PERFINFO_SVC x = NSSF_INSTANCEID
    //y = NRF_STUB_1_SVC_NAME z = NRF_STUB_2_SVC_NAME A = NSSF_NRF_CLIENT_SVC_NAME
    //B = SUPPORTED_PLMN_LIST_MCC_MNC
    withEnv([
    ]){
    sh '''
    sh /var/lib/jenkins/ocnssf_tests/preTestConfig.sh \
    -a NSSF \
    -b ocnssf \
    -c ocnssf-ingress-gateway.ocnssf \
    -d ocnssf-egress-gateway.ocnssf \
    -e occne-prometheus-server.occne-infra \
    -f amf-stubserver.ocnssf \
    -g 80 \
    -h 2 \
    -i ocnssf-nsdb.ocnssf \
    -j ocnssf-db-creds \
    -k ocnssf-nsconfig.ocnssf \
    -l nrf-stubserver.ocnssf \
    -m ocnssf \
    -n ocats \
    -o 8081 \
    -p 8080 \
    -q 8080 \
    -r ocnssf-nsselection.ocnssf \
    -s ocnssf-nsauditor.ocnssf \
    -t ocnssf-nsavailability.ocnssf \
    -u ocnssf-nssubscription.ocnssf \
    -v ocnssf-ocnssf-app-info.ocnssf \
    -w ocnssf-ocnssf-perf-info.ocnssf \
    -x 9faf1bbc-6e4a-4454-a507-aef01a101a01 \
    -y nrf-stubserver1.ocnssf \
    -z nrf-stubserver2.ocnssf \
    -A ocnssf-ocnssf-nrf-client-nfmanagement.ocnssf \
    -B 311 480 \
    '''
    if(env.Include_Regression && "${Include_Regression}" == "YES"){
        sh '''sh /var/lib/jenkins/common_scripts/merge_jenkinsfile.sh'''
        load "/var/lib/jenkins/ocnssf_tests/jenkinsData/Jenkinsfile-Merged"
    }
    else{
        load "/var/lib/jenkins/ocnssf_tests/jenkinsData/Jenkinsfile-NewFeatures"
    }
    }
}

Note:

The user MUST NOT change any value other than these parameters. The description of these parameters is as follows:

- a: Name of the NF to be tested, in capitals (NSSF).

- b: Namespace in which NSSF is deployed (default is ocnssf).

- c: Ingress Gateway IP address (default is ocnssf-ingress-gateway.ocnssf).

- d: Egress Gateway IP address (default is ocnssf-egress-gateway.ocnssf).

- e: Prometheus service IP address (default is occne-prometheus-server.occne-infra).

- f: Stub service IP address (default is amf-stubserver.ocnssf).

- g: Port of the Prometheus service (default is 80).

- h: Number of times a failed case can be re-run (default is 2).

- i: Database name (default is ocnssf-nsdb.ocnssf).

- j: Database secret (default is ocnssf-db-creds).

- k: NSSF Config IP address (default is ocnssf-nsconfig.ocnssf).

- l: NRF stub server IP address (default is nrf-stubserver.ocnssf).

- m: NSSF release name (default is ocnssf).

- n: ATS release name (default is ocats).

- o: NSSF Ingress Gateway port (default is 8081).

- p: NSSF Egress Gateway port (default is 8080).

- q: NSSF Config port (default is 8080).

- r: NSSF Selection service name (default is ocnssf-nsselection.ocnssf).

- s: NSSF Auditor service name (default is ocnssf-nsauditor.ocnssf).

- t: NSSF Availability service name (default is ocnssf-nsavailability.ocnssf).

- u: NSSF Subscription service name (default is ocnssf-nssubscription.ocnssf).

- v: App Info service name (default is ocnssf-ocnssf-app-info.ocnssf).

- w: Perf Info service name (default is ocnssf-ocnssf-perf-info.ocnssf).

- x: NSSF Instance ID (default is 9faf1bbc-6e4a-4454-a507-aef01a101a01).

- y: NRF stub 1 service name (default is nrf-stubserver1.ocnssf).

- z: NRF stub 2 service name (default is nrf-stubserver2.ocnssf).

- A: NRF Client Management service name (default is ocnssf-ocnssf-nrf-client-nfmanagement.ocnssf).

- B: NSSF supported PLMN list as MCC and MNC (default is 311 480).
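The default values above follow a visible pattern: most service parameters take the form `<NSSF Helm release name>-<service>.<namespace>`, while the stub services take the form `<stub>.<namespace>`. As a minimal sketch, assuming that pattern holds in your deployment, the hypothetical shell function `nssf_svc_flags` (not part of ATS) prints the matching `preTestConfig.sh` flags for a given release name and namespace. Parameters that do not follow the pattern (such as e, i, j, n, and x) are left out; confirm every printed value against the services that actually exist in your namespace before copying it into the pipeline.

```shell
# Hypothetical helper (not shipped with ATS). Assumes NSSF services are named
# <release>-<service>.<namespace> and stubs <stub>.<namespace>, as in the
# default pipeline values. Prints one "-<flag> <value>" line per parameter.
nssf_svc_flags() {
    rel="$1"   # NSSF Helm release name, for example ocnssf
    ns="$2"    # namespace where NSSF, ATS, and the stubs are deployed

    # Flags whose values are the namespace or the release name themselves
    printf '%s %s\n' -b "$ns"
    printf '%s %s\n' -m "$rel"

    # Flags whose values are <release>-<service>.<namespace>
    for pair in c:ingress-gateway d:egress-gateway k:nsconfig \
                r:nsselection s:nsauditor t:nsavailability u:nssubscription \
                v:ocnssf-app-info w:ocnssf-perf-info \
                A:ocnssf-nrf-client-nfmanagement; do
        flag="${pair%%:*}"   # text before the colon
        svc="${pair#*:}"     # text after the colon
        printf '%s %s\n' "-$flag" "$rel-$svc.$ns"
    done

    # Stub services carry no release-name prefix in the examples
    for pair in f:amf-stubserver l:nrf-stubserver \
                y:nrf-stubserver1 z:nrf-stubserver2; do
        flag="${pair%%:*}"
        svc="${pair#*:}"
        printf '%s %s\n' "-$flag" "$svc.$ns"
    done
}
```

For example, `nssf_svc_flags ocnssfats ocnssf2510` prints lines such as `-c ocnssfats-ingress-gateway.ocnssf2510` and `-A ocnssfats-ocnssf-nrf-client-nfmanagement.ocnssf2510`, which agree with the modified pipeline example in this section.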

Note:

- Do not change any value if the OCCNE cluster is used and NSSF, ATS, and STUB are deployed in the ocnssf namespace.

- In the above image, the NSSF Helm release name is "ocnssf", the ATS Helm release name is "ocats", and the namespace is "ocnssf". If the NSSF or ATS Helm release name, or the namespace, is different in your deployment, update the pipeline parameters accordingly.

For example, if the NSSF Helm release name is "ocnssfats", the ATS Helm release name is "ocatsnssf", and the namespace is "ocnssf2510", edit the pipeline configuration as shown below.

- If the NSSF Helm release name is anything other than "ocnssf", update it in parameters c, d, i, k, m, r, s, t, u, v, w, and A.

- The NSSF Instance ID must be updated in the ATS Jenkins pipeline parameters if it is changed in the NSSF custom values (CV) file. By default, the NSSF Instance ID is "9faf1bbc-6e4a-4454-a507-aef01a101a01", and it is defined in the NSSF custom values file as follows:

```yaml
#InstanceId of NSSF used in case of GR
nfInstanceId: &nfInstanceId "9faf1bbc-6e4a-4454-a507-aef01a101a01"
```

For example, if nfInstanceId in the NSSF custom values file is changed from "9faf1bbc-6e4a-4454-a507-aef01a101a01" to "9faf1bbc-6e4a-4454-a507-aef01a101a20", make the same change in Jenkins pipeline parameter "x". The following pipeline script shows this change together with the example release names and namespace described above:

```groovy
node ('built-in'){
    //a = SELECTED_NF b = NF_NAMESPACE c = INGRESS_GATEWAY_IP d = EGRESS_GATEWAY_IP
    //e = PROMETHEUS_SVC_IP f = STUB_IP g = PROMETHEUS_PORT h = RERUN_COUNT
    //i = NF_DB j = NF_DB_SECRET k = NSCONFIG_IP l = NRF_STUB_IP
    //m = HELM_RELEASE_NAME n = ATS_RELEASE_NAME o = NSSF_INGRESS_GATEWAY_PORT
    //p = NSSF_EGW_PORT q = NSSF_CONFIG_PORT r = NSSF_SELECTION_SVC_NAME
    //s = NSSF_AUDITOR_SVC t = NSSF_AVAILABILITY_SVC_NAME u = NSSF_SUBSCRIPTION_SVC_NAME
    //v = NSSF_APP_INFO_SVC w = NSSF_PERFINFO_SVC x = NSSF_INSTANCEID
    //y = NRF_STUB_1_SVC_NAME z = NRF_STUB_2_SVC_NAME A = NSSF_NRF_CLIENT_SVC_NAME
    //B = SUPPORTED_PLMN_LIST_MCC_MNC
    withEnv([ ]){
        sh '''
            sh /var/lib/jenkins/ocnssf_tests/preTestConfig.sh \
            -a NSSF \
            -b ocnssf2510 \
            -c ocnssfats-ingress-gateway.ocnssf2510 \
            -d ocnssfats-egress-gateway.ocnssf2510 \
            -e occne-prometheus-server.occne-infra \
            -f amf-stubserver.ocnssf2510 \
            -g 80 \
            -h 2 \
            -i ocnssf-nsdb.ocnssf \
            -j ocnssf-db-creds \
            -k ocnssfats-nsconfig.ocnssf2510 \
            -l nrf-stubserver.ocnssf2510 \
            -m ocnssfats \
            -n ocats \
            -o 8081 \
            -p 8080 \
            -q 8080 \
            -r ocnssfats-nsselection.ocnssf2510 \
            -s ocnssfats-nsauditor.ocnssf2510 \
            -t ocnssfats-nsavailability.ocnssf2510 \
            -u ocnssfats-nssubscription.ocnssf2510 \
            -v ocnssfats-ocnssf-app-info.ocnssf2510 \
            -w ocnssfats-ocnssf-perf-info.ocnssf2510 \
            -x 9faf1bbc-6e4a-4454-a507-aef01a101a20 \
            -y nrf-stubserver1.ocnssf2510 \
            -z nrf-stubserver2.ocnssf2510 \
            -A ocnssfats-ocnssf-nrf-client-nfmanagement.ocnssf2510 \
            -B 311 480 \
        '''
        if(env.Include_Regression && "${Include_Regression}" == "YES"){
            sh '''sh /var/lib/jenkins/common_scripts/merge_jenkinsfile.sh'''
            load "/var/lib/jenkins/ocnssf_tests/jenkinsData/Jenkinsfile-Merged"
        }
        else{
            load "/var/lib/jenkins/ocnssf_tests/jenkinsData/Jenkinsfile-NewFeatures"
        }
    }
}
```
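Because a mismatch between the CV file and parameter "x" is easy to miss, you may want to compare the two values before a run. The following is a minimal sketch, assuming nfInstanceId is written on a single line in the form shown above; the function names `nssf_instance_id` and `check_instance_id`, and the CV file path you pass to them, are hypothetical.

```shell
# Hypothetical helper: print the nfInstanceId value from an NSSF custom values
# file. Assumes a single line such as:
#   nfInstanceId: &nfInstanceId "9faf1bbc-6e4a-4454-a507-aef01a101a01"
nssf_instance_id() {
    # Capture the first double-quoted string after "nfInstanceId:"
    sed -n 's/^[[:space:]]*nfInstanceId:[^"]*"\([^"]*\)".*/\1/p' "$1" | head -n 1
}

# Hypothetical helper: compare the CV file value ($1 = file) with the value
# passed to -x in the Jenkins pipeline ($2). Returns non-zero on mismatch.
check_instance_id() {
    cv_id="$(nssf_instance_id "$1")"
    if [ "$cv_id" = "$2" ]; then
        echo "OK: -x matches nfInstanceId ($cv_id)"
    else
        echo "MISMATCH: CV file has '$cv_id', pipeline -x has '$2'" >&2
        return 1
    fi
}
```

For example, running `check_instance_id` against a CV file that still contains the default ID, with `9faf1bbc-6e4a-4454-a507-aef01a101a20` as the second argument, returns a non-zero status and reports the mismatch.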

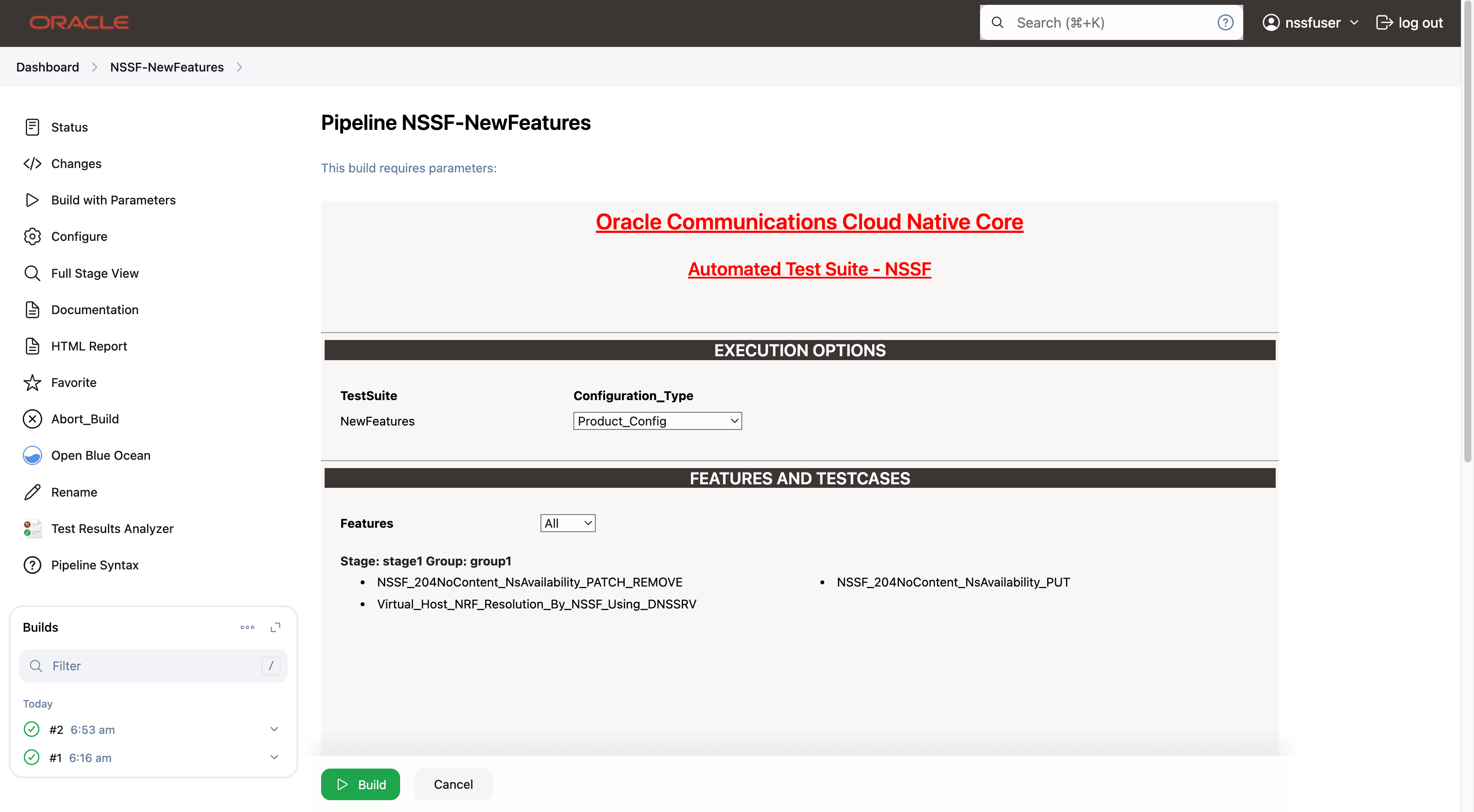

- Click Save after making necessary changes. The Pipeline NSSF-NewFeatures screen appears.

Running NSSF New Features Test Cases

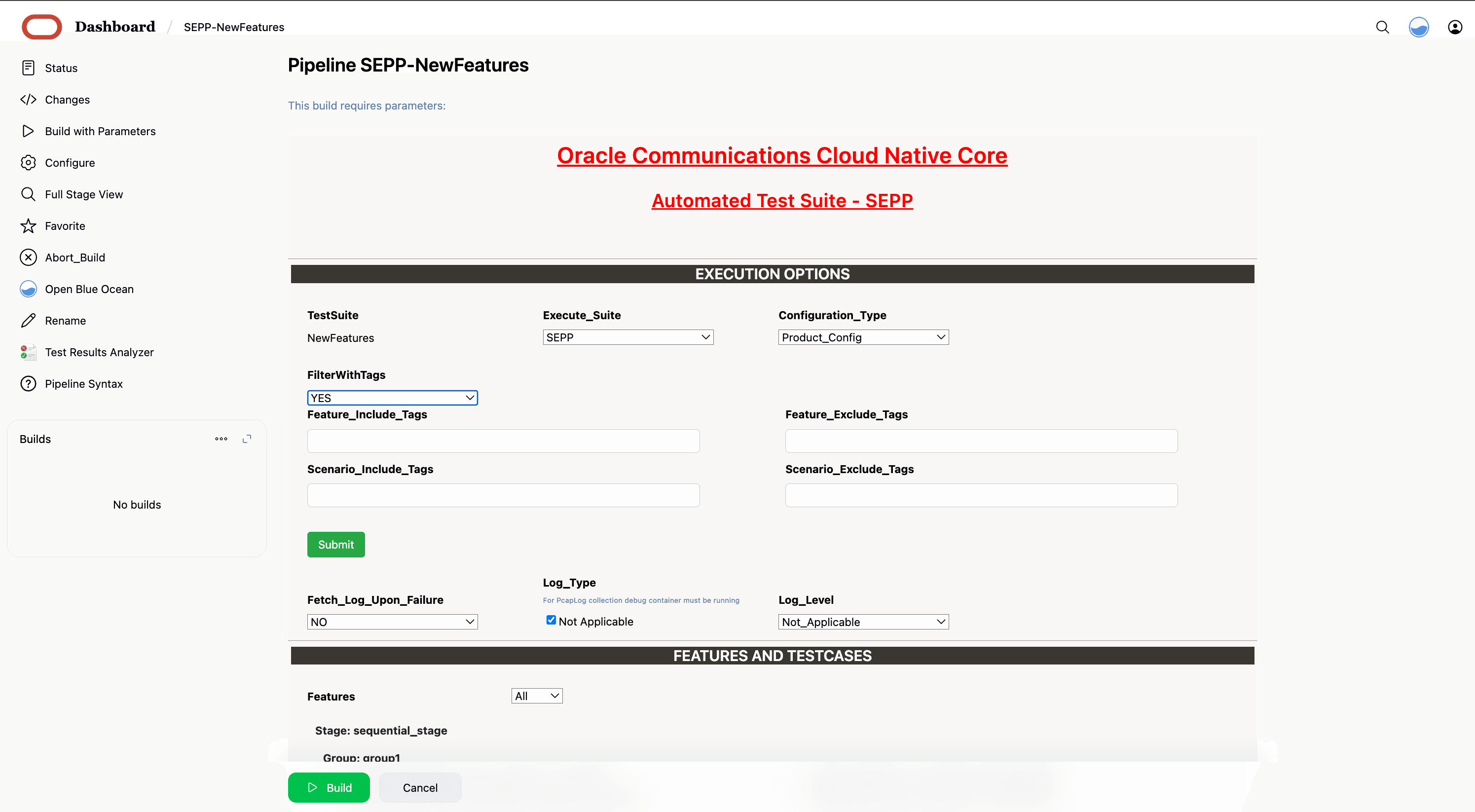

To run NSSF New Features test cases:

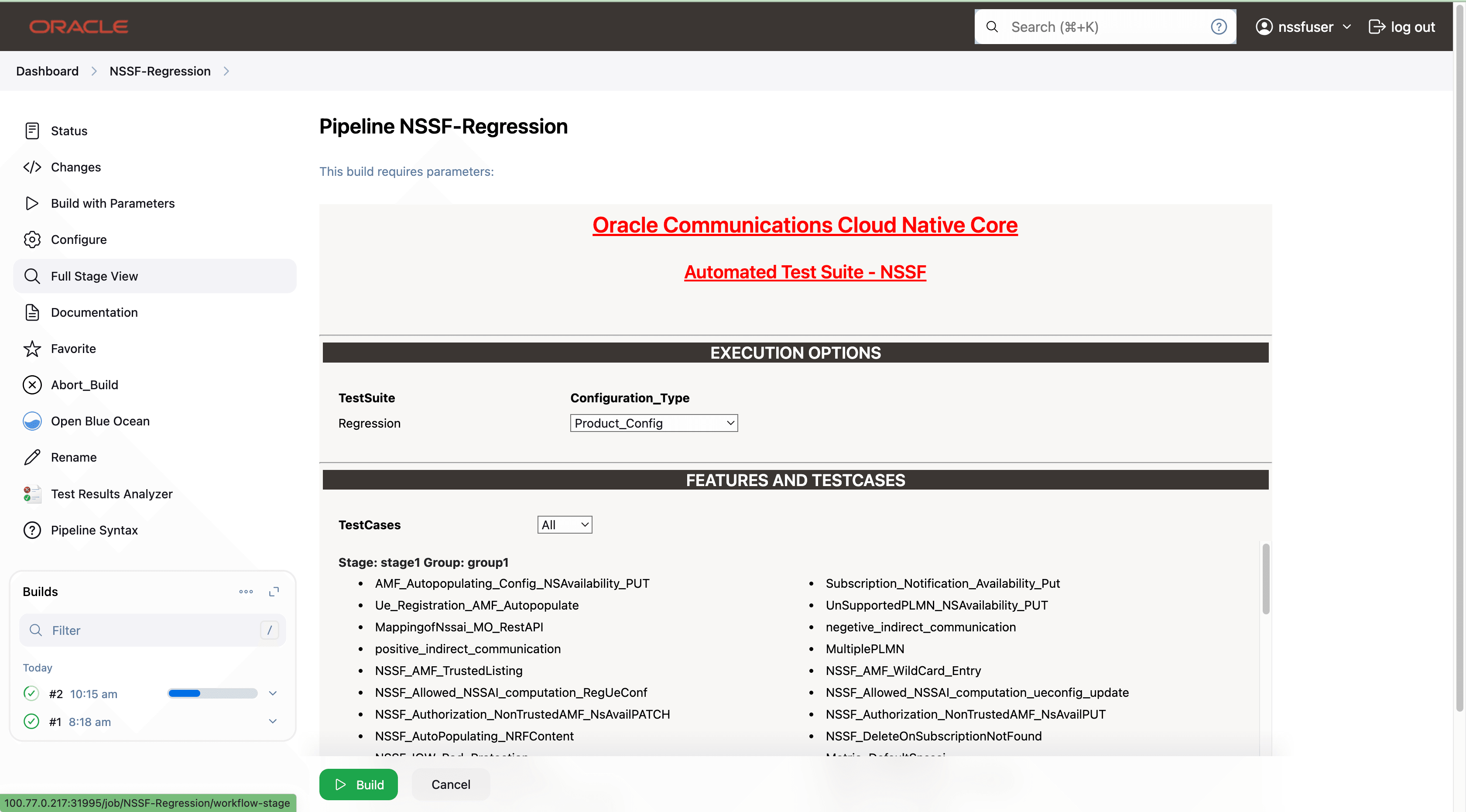

- Go back to the NSSF-NewFeatures pipeline and click Build with Parameters in the extreme left column of the NSSF-NewFeatures row, as shown below:

- The following screen appears:

In the above screen, there are three Select_Option(s): features, configuration type, and include regression.

- By default, features 'ALL', configuration type 'Product_Config', and include regression 'NO' are selected, and clicking Build runs all test cases, as shown above.

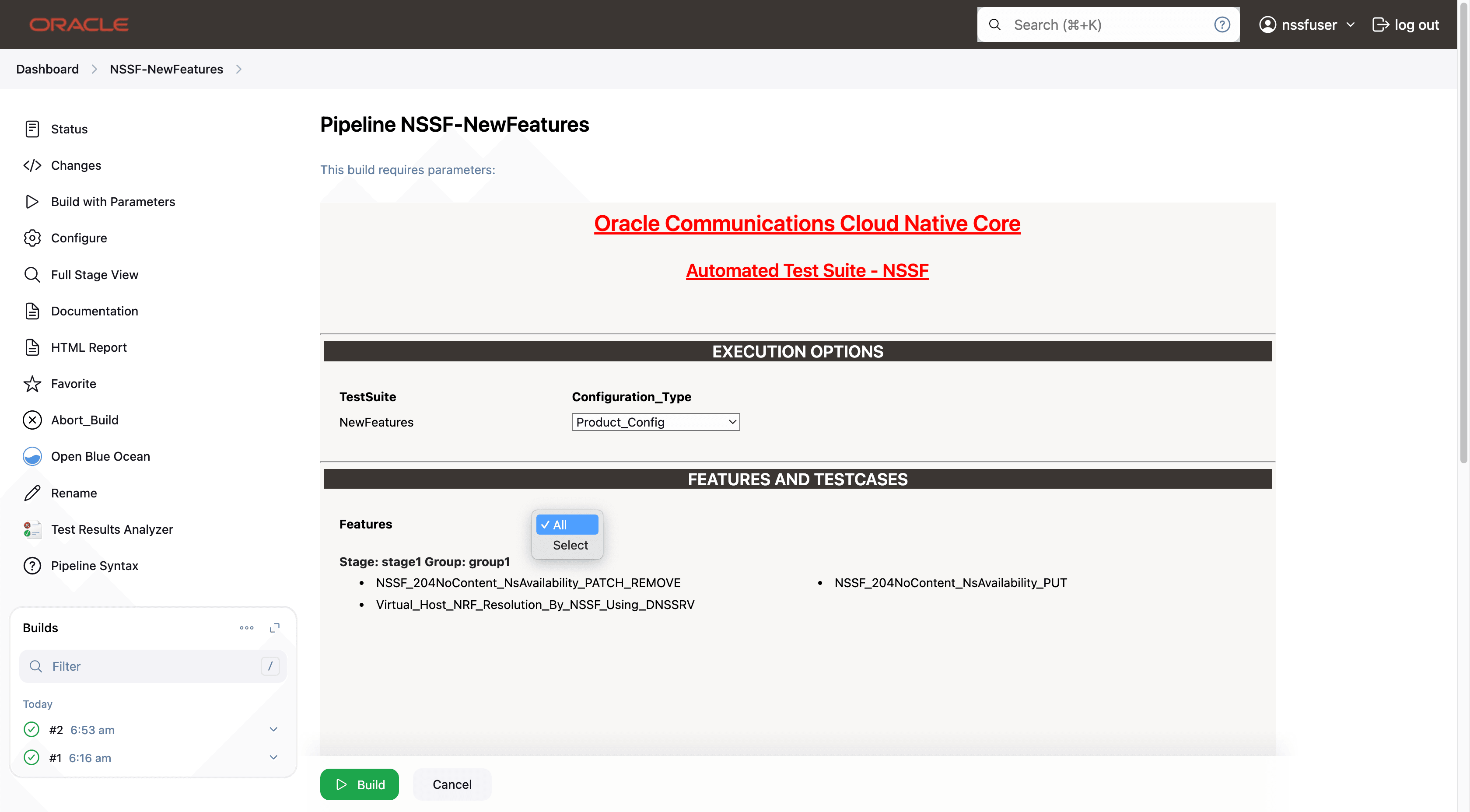

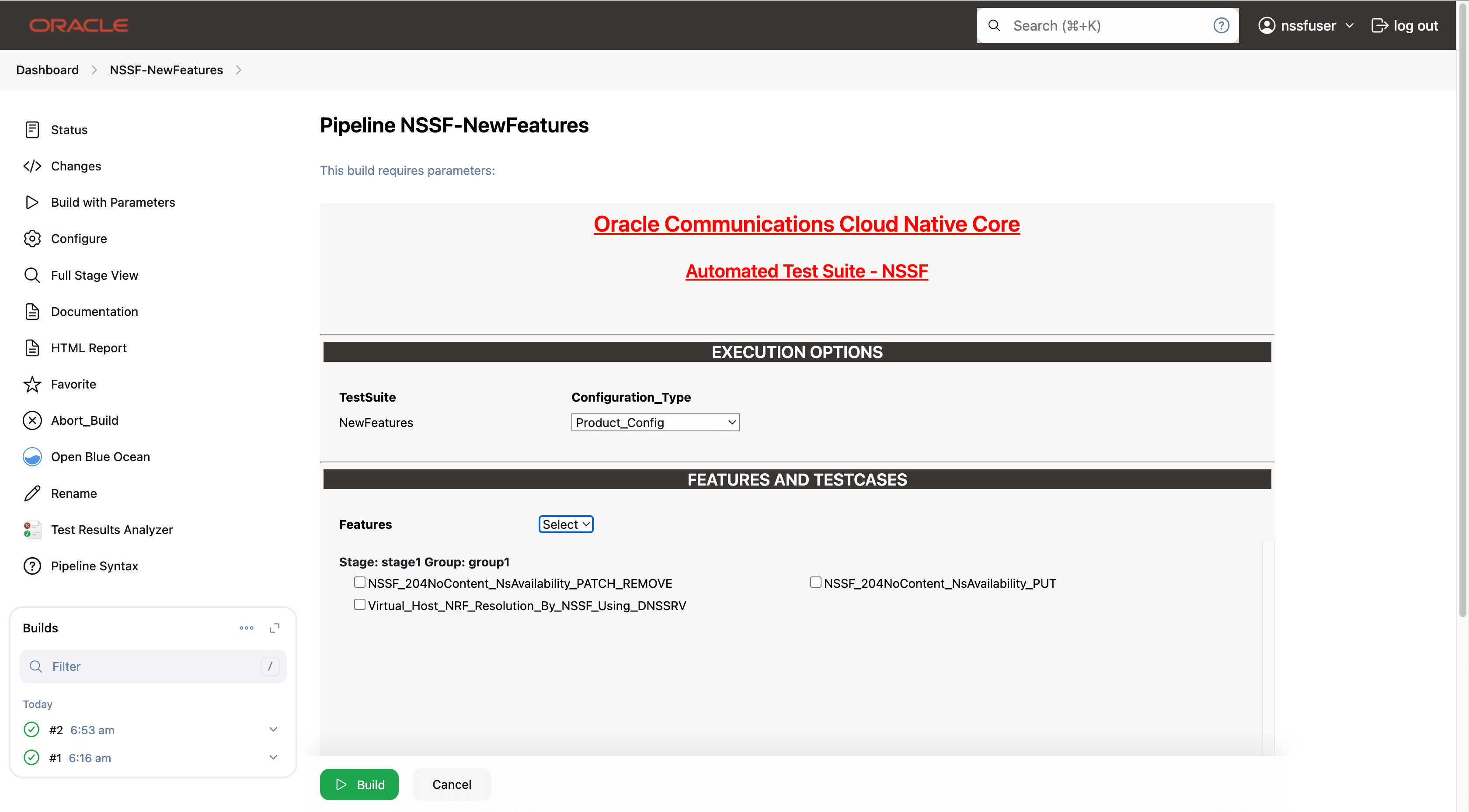

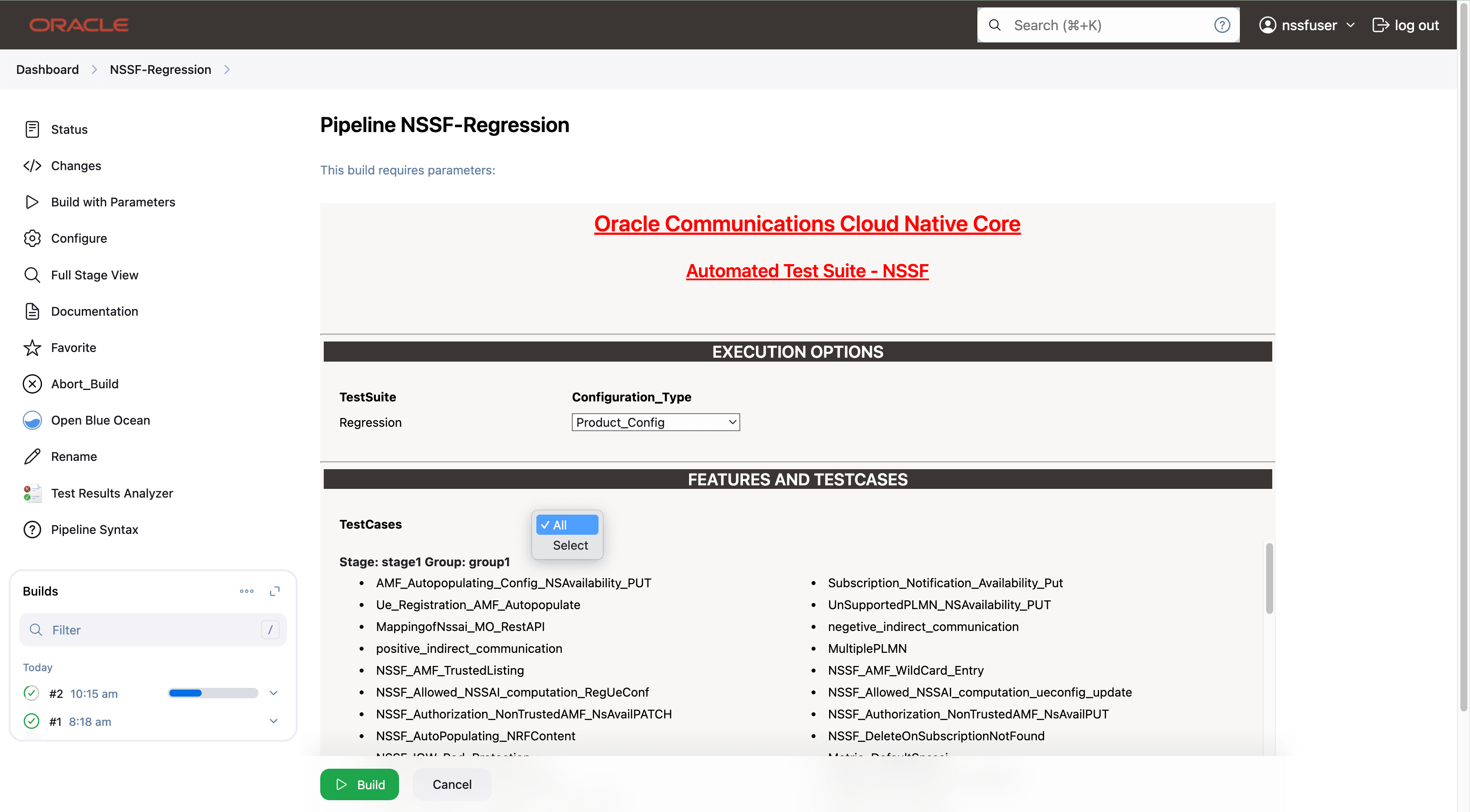

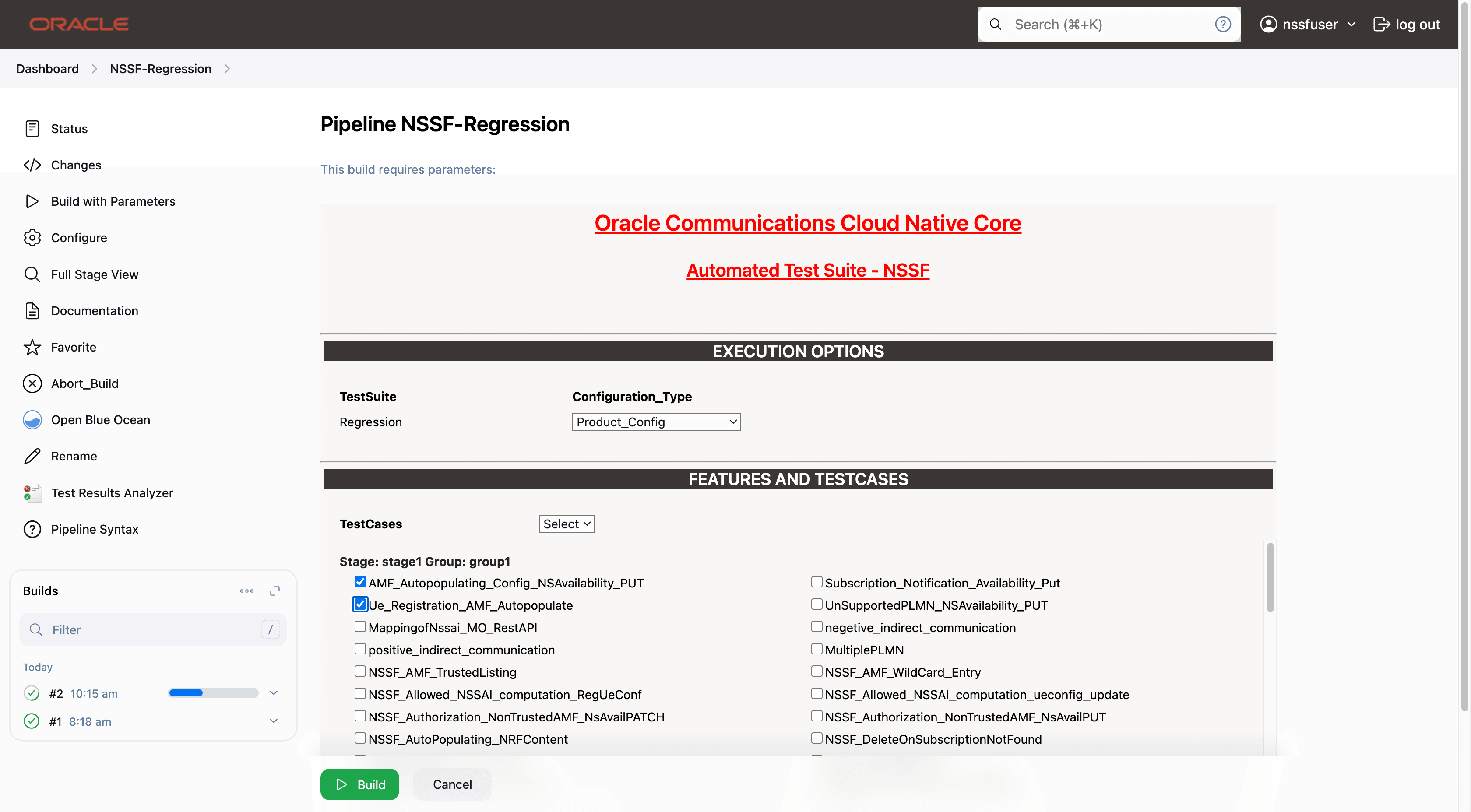

- Features: ALL is selected by default. Click ALL to open a dropdown and choose 'Select'; a list of feature files then appears. Choose the feature files to run and click 'Build', as shown below.

- Select one of the following configuration types:

- Product_Config: If you select this option, test cases from the product folders are populated on the ATS UI, and the product configuration is applied to them through the key-value pair and YAML files in the "Product Config" folder.

- Custom_Config: If you select this option, test cases from the custom folders are populated on the ATS UI, and the custom configuration is applied to them through the key-value pair and YAML files in the "Custom Config" folder. To use the Parameterization feature, always select Custom_Config. You can copy, add, or delete the NSSF test cases you need and place them in the custom folder for NSSF-NewFeatures. Reload the page to view the test cases available in the custom NewFeatures folder. For more information, see Parameterized approach for SUT custom configuration.

The NSSF test cases are divided into NSSF Service operations as follows:

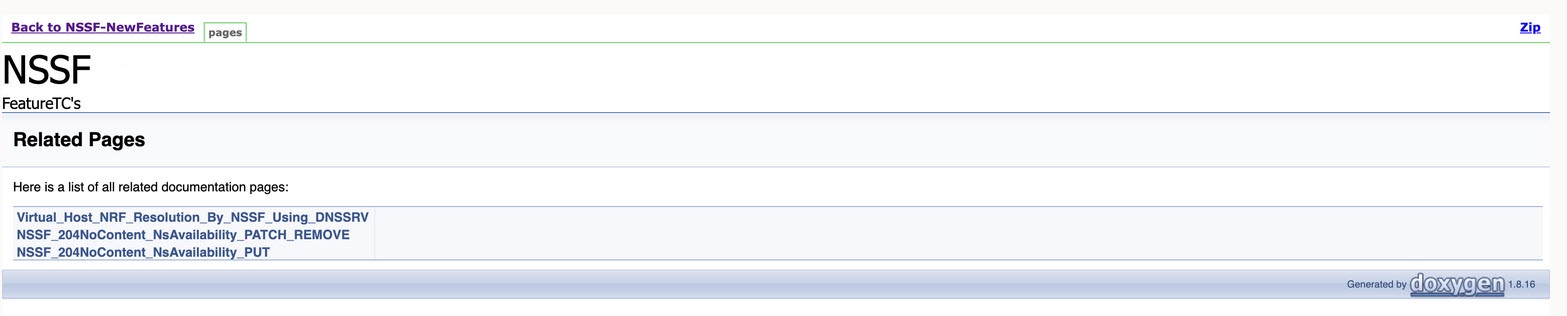

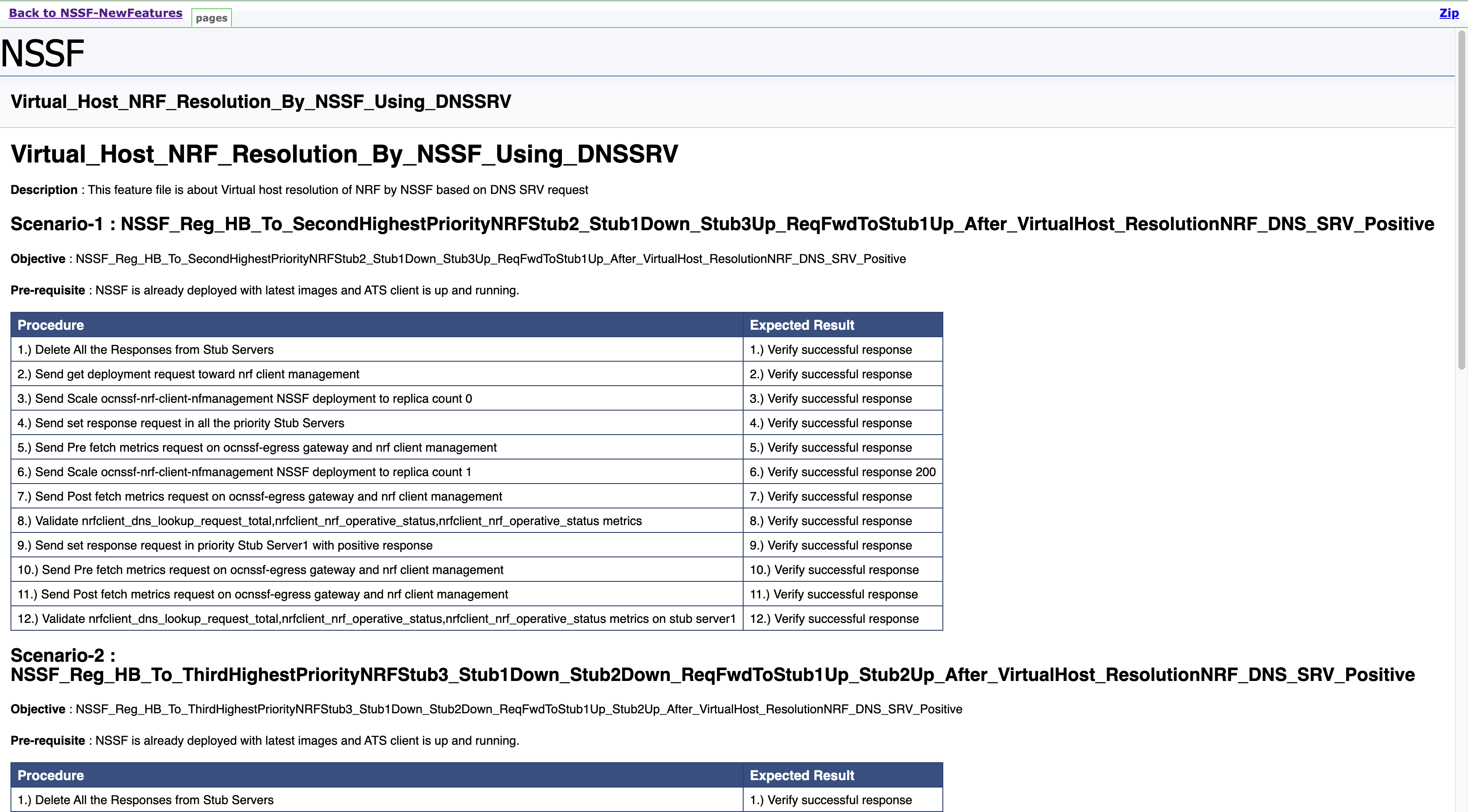

- NSSF_204NoContent_NsAvailability_PATCH_REMOVE: This feature file contains test cases related to PATCH or PUT requests for deleting all slices.

- Virtual_Host_NRF_Resolution_By_NSSF_Using_DNSSRV: This feature file contains test cases related to DNS SRV based selection of NRF in NSSF.

To run with the custom configuration, set the configuration type parameter to Custom_Config; the framework then automatically points to the custom_config and cust_data folders.

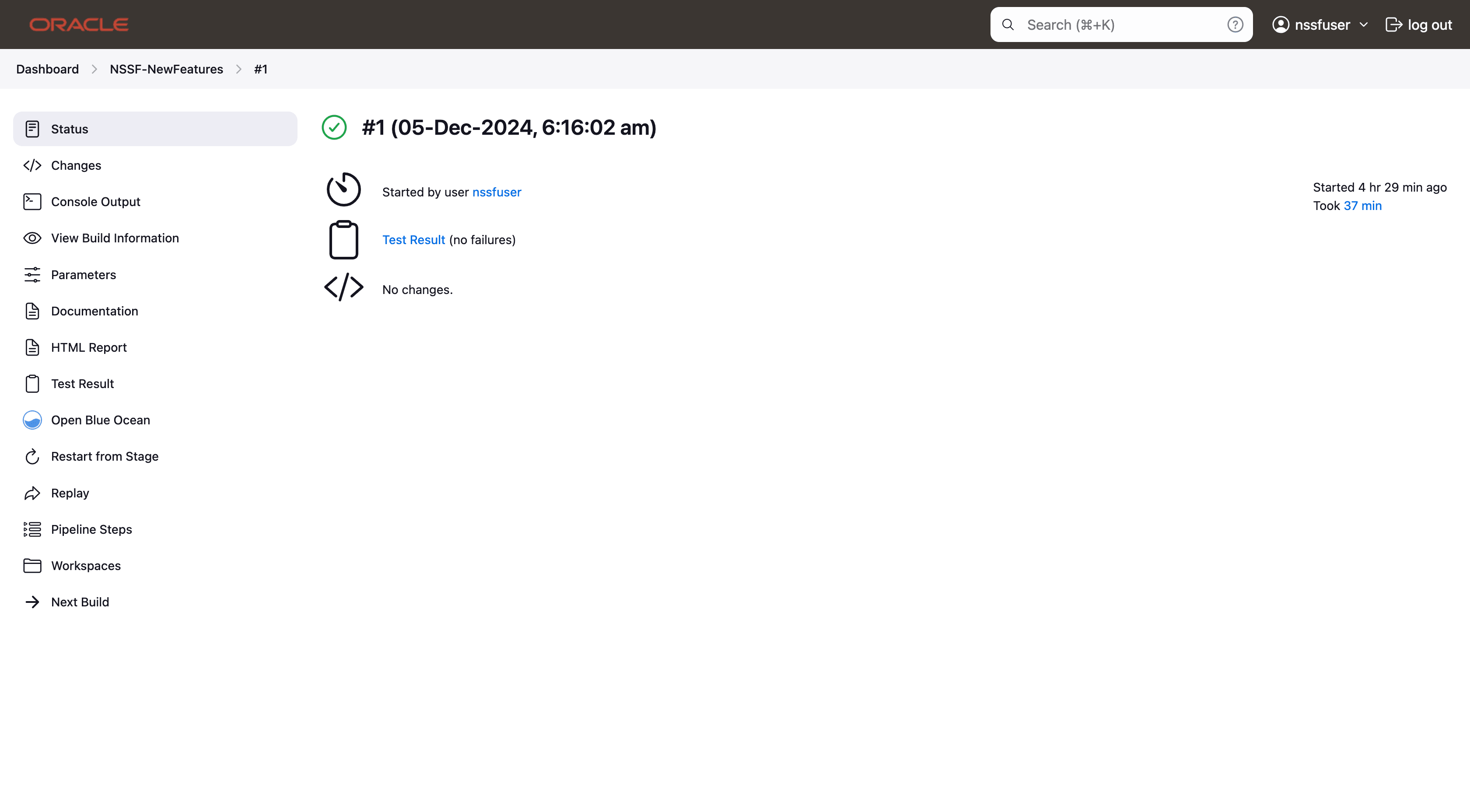

```text
3 features passed, 0 failed, 0 skipped
25 scenarios passed, 0 failed, 0 skipped
546 steps passed, 0 failed, 0 skipped, 0 undefined
Took 36m30.275s
```

Parameterized approach for SUT custom configuration

Using this feature, you can make the following customizations to custom folders:

- Add new test cases by adding data files

- Remove test cases

- Modify the parameters and their values in the key-value pair or <feature>.yaml files

To run ATS test cases, you can maintain as many versions of the Custom_Config folder as needed by using the following naming convention:

Cust ConfigN

where N can be any number.

Before execution, rename the required folder to Custom Config, because Jenkins always retrieves data from this folder when you select Custom_Config.
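The folder swap described above can be scripted. The following is a minimal sketch, assuming the Cust ConfigN folders and the Custom Config folder sit side by side in one parent directory; the directory layout, the function name `activate_cust_config`, and the backup naming are assumptions, so check the actual paths in your ATS deployment first. As a design choice, the sketch copies the versioned folder instead of renaming it, so that "Cust ConfigN" remains available for later runs, and it keeps the previously active folder under a timestamped backup name.

```shell
# Hypothetical helper: make "Cust Config<N>" the active "Custom Config" folder.
# Assumes all config folders live directly under the directory given as $1.
activate_cust_config() {
    base="$1"                    # parent directory of the config folders (assumed)
    src="$base/Cust Config$2"    # versioned folder, for example "Cust Config2"
    dst="$base/Custom Config"    # folder Jenkins reads when Custom_Config is selected

    [ -d "$src" ] || { echo "not found: $src" >&2; return 1; }

    if [ -e "$dst" ]; then
        # Keep the currently active folder under a timestamped name
        bak="$dst.bak.$(date +%Y%m%d%H%M%S)"
        [ -e "$bak" ] && { echo "backup already exists: $bak" >&2; return 1; }
        mv "$dst" "$bak" || return 1
    fi

    # Copy instead of rename so "Cust Config<N>" stays intact
    cp -R "$src" "$dst"
}
```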

Updating Global Parameters

To use Custom_Config, change the value of cust_folder from data to cust_data in the global.yaml file. You can also customize the parameters and their values in global.yaml as required.
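If you prefer to make this edit from a shell, the following is a minimal sketch, assuming global.yaml stores the key on its own line as `cust_folder: data` (optionally quoted). The function name `use_cust_data` is hypothetical, and where global.yaml lives in your ATS deployment is not covered here. The edit is written to a temporary file first, so a failed `sed` never truncates global.yaml, and the result is copied back with `cat` to keep the original file permissions.

```shell
# Hypothetical helper: switch cust_folder in global.yaml from data to cust_data.
# Assumes the key is on its own line, e.g. "cust_folder: data" or
# 'cust_folder: "data"'. All other lines are left unchanged.
use_cust_data() {
    file="$1"
    tmp="$(mktemp)" || return 1
    # Rewrite only a cust_folder line whose value is exactly data
    sed -E 's/^([[:space:]]*cust_folder:[[:space:]]*)("?)data("?)[[:space:]]*$/\1\2cust_data\3/' \
        "$file" > "$tmp" && cat "$tmp" > "$file"
    status=$?
    rm -f "$tmp"
    return "$status"
}
```

Running it a second time leaves the file unchanged, because a line that already reads `cust_folder: cust_data` does not match the pattern.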

Updating Feature Parameters

Consider the following points when customizing <feature>.yaml files for parameterized feature:

- In addition to global.yaml parameters, feature files may contain parameters whose values you can update before running the pipelines.

- Changing the values of parameters tagged as "Feature Specific Value" may cause failures when running the pipelines.

- Parameters enclosed by the #START_GLOBAL and #END_GLOBAL tags take their values from global.yaml.
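To see which parameters in a <feature>.yaml file inherit their values from global.yaml, you can print the lines between the two tags. The following is a minimal sketch, assuming each tag appears on its own line as a YAML comment; the exact tag layout in the shipped files may differ, and the function name `global_params` is hypothetical.

```shell
# Hypothetical helper: print the non-empty lines between #START_GLOBAL and
# #END_GLOBAL in a <feature>.yaml file, excluding the tag lines themselves.
# Assumes each tag is on its own line, optionally indented.
global_params() {
    awk '
        /^[ \t]*#START_GLOBAL/ { inside = 1; next }   # start of a global block
        /^[ \t]*#END_GLOBAL/   { inside = 0; next }   # end of a global block
        inside && NF > 0       { print }              # parameter lines inside it
    ' "$1"
}
```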

Note:

For NSSF-ATS release 25.1.200, parameterization is supported for the NSSF-NewFeatures pipeline only.

4.3.4 NSSF-NewFeatures Documentation

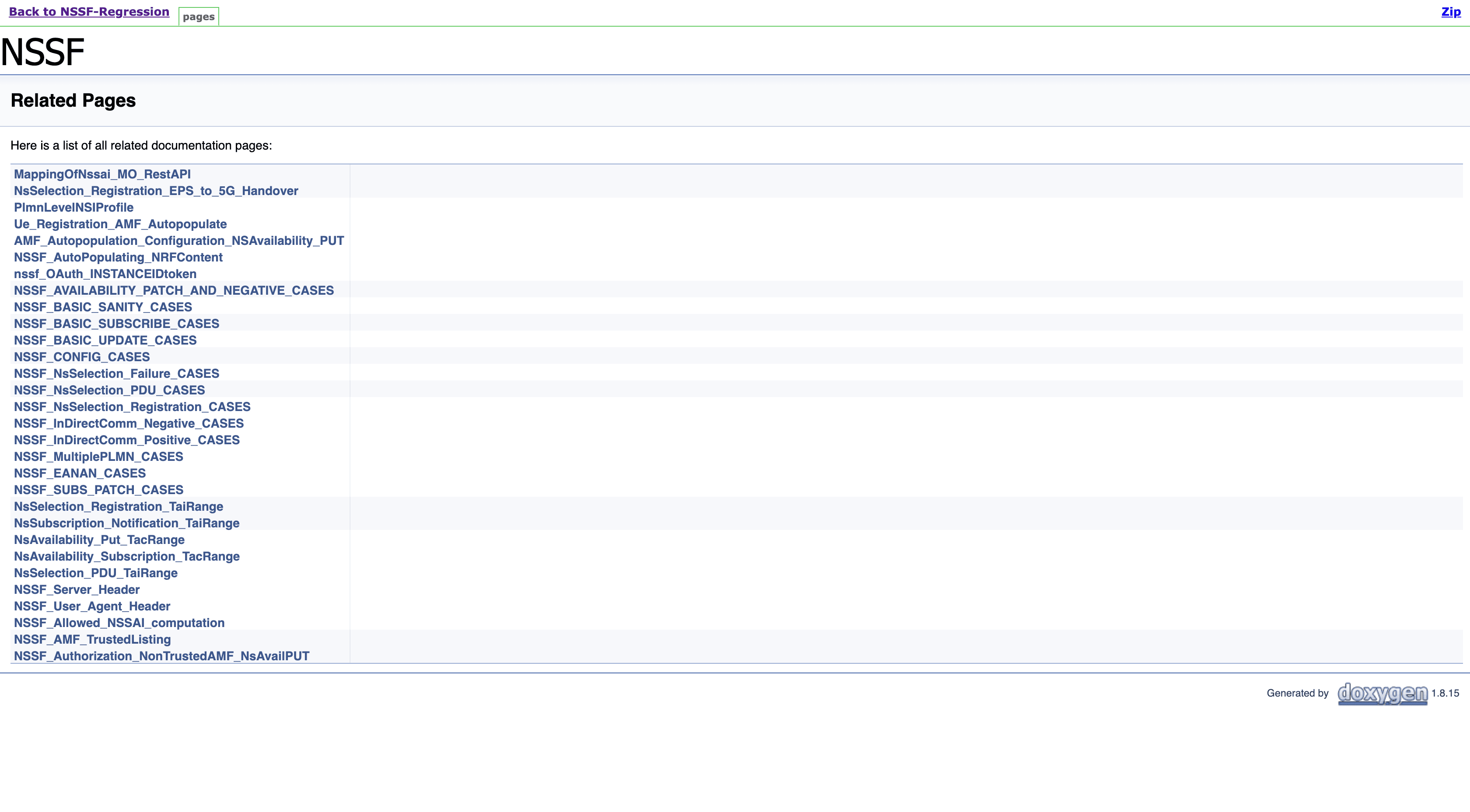

To view NSSF functionalities, open the latest build of the NSSF-NewFeatures pipeline and click the Documentation link in the left navigation pane. The following screen appears. Click any functionality to view its test cases and the scenarios of each test case, as shown in the sample screenshot below:

A few sample documentation features are shown below:

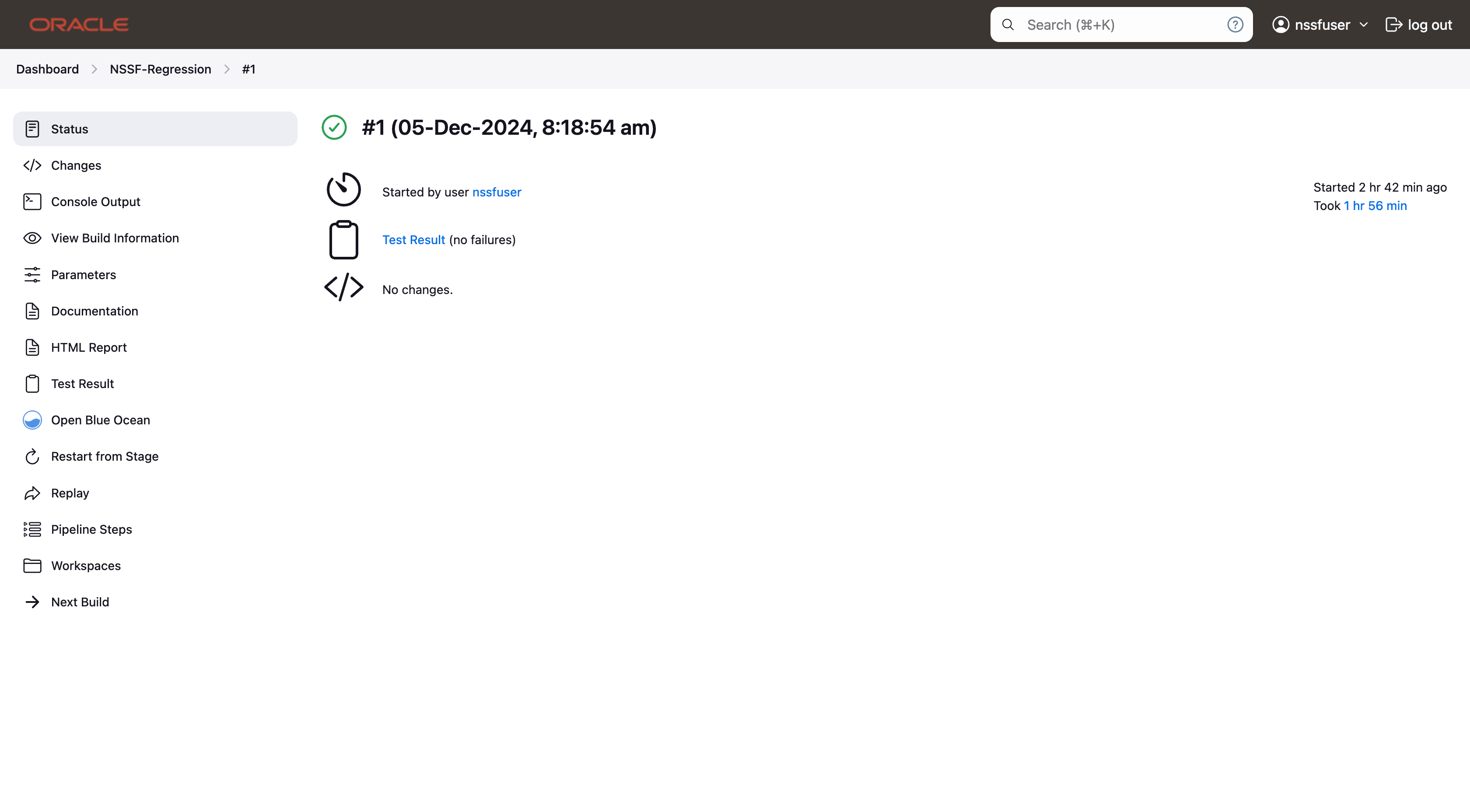

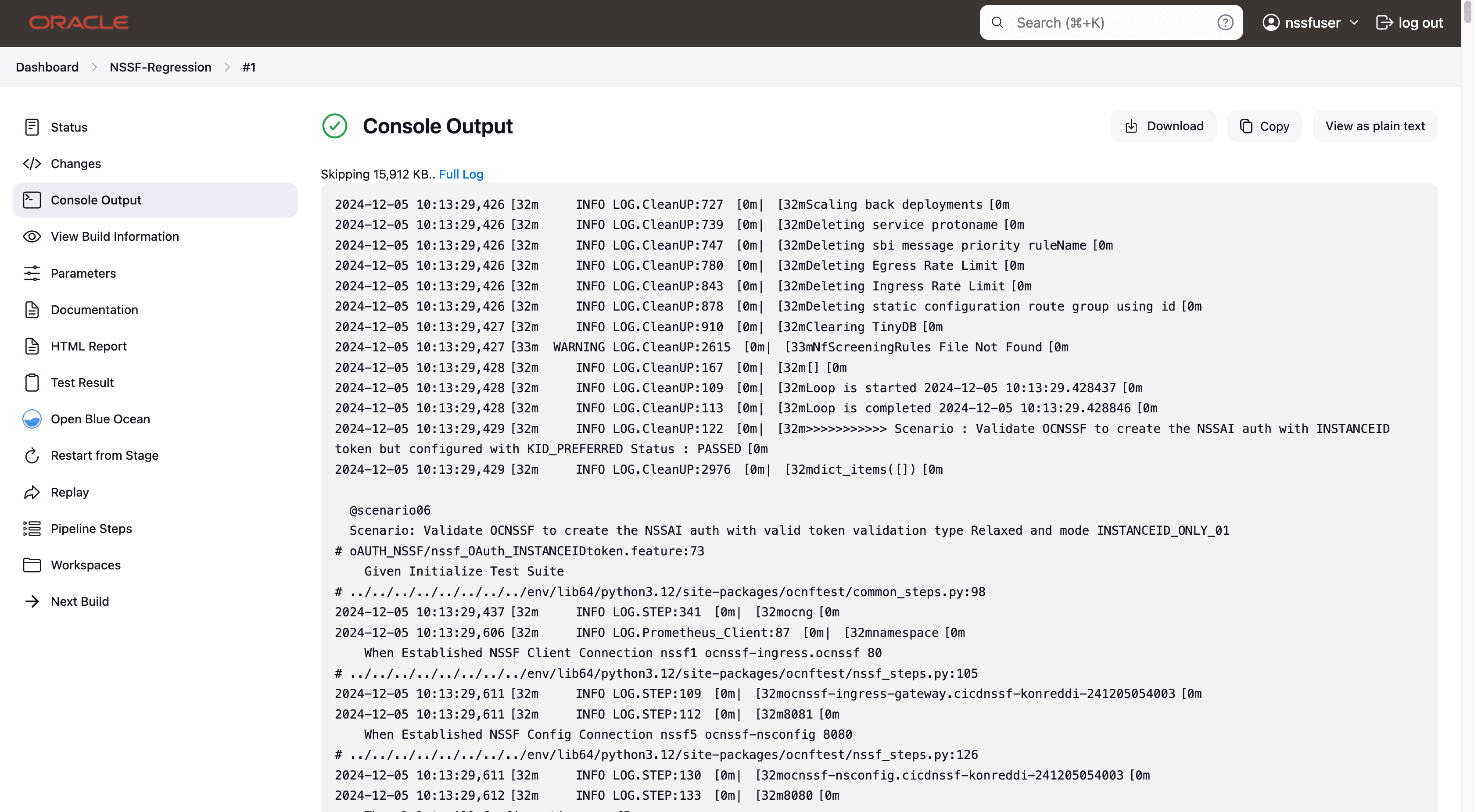

After the new features run completes successfully, click the number of the build whose logs you want to view, and then click Console Output in the left navigation pane.

Wait until the page loads completely, and then click Download to download the new features logs.

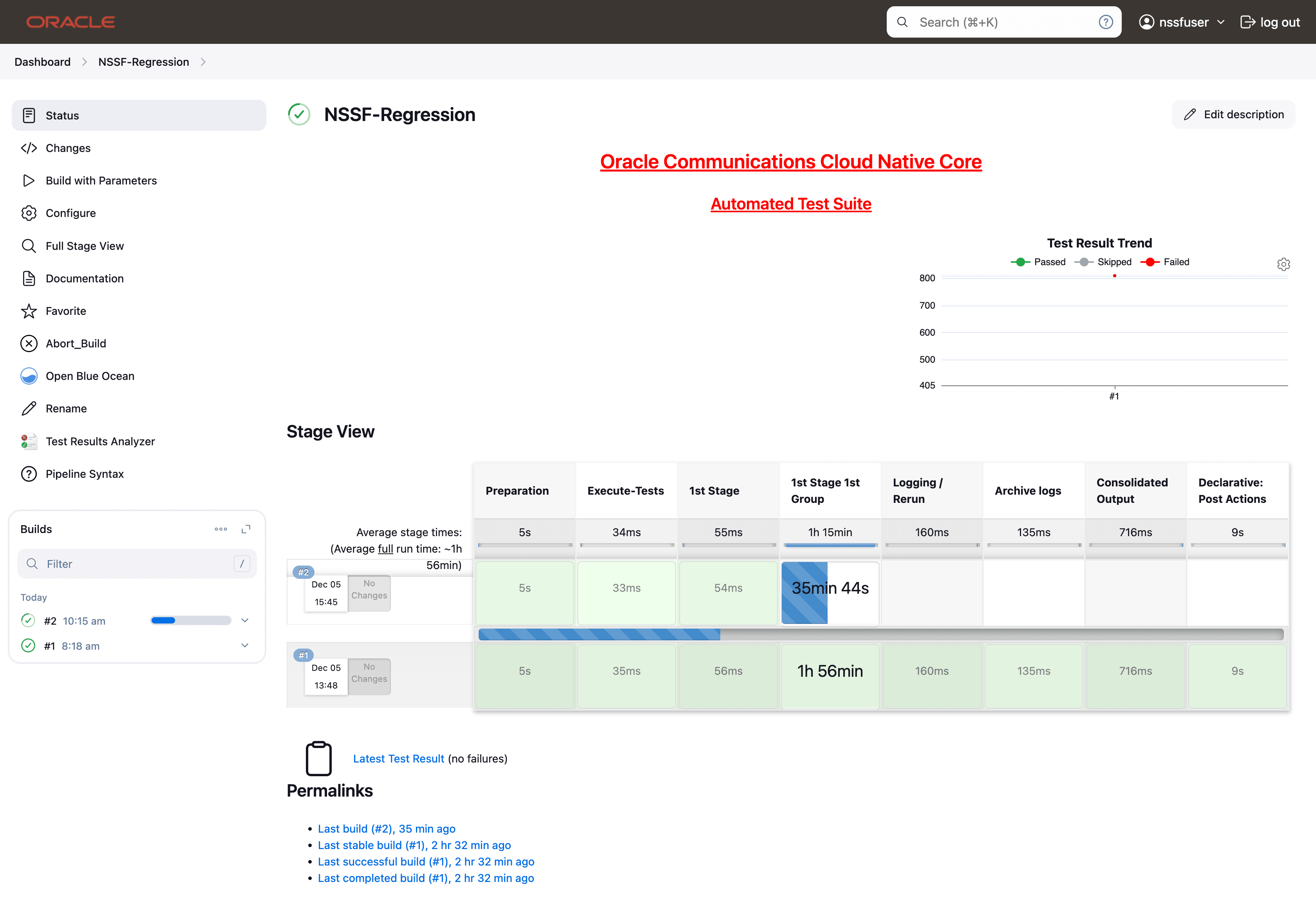
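After downloading the logs, you can scan them for the run summary lines, in the format shown in the sample output earlier in this section (for example, `25 scenarios passed, 0 failed, 0 skipped`). The following is a minimal sketch, assuming the downloaded console log is plain text containing summary lines in that format; the function names `ats_log_failures` and `ats_log_ok` are hypothetical.

```shell
# Hypothetical helper: print "<kind> <failed-count>" for each summary line
# (feature/features, scenario/scenarios, step/steps) found in a console log.
ats_log_failures() {
    grep -oE '[0-9]+ (features?|scenarios?|steps?) passed, [0-9]+ failed' "$1" |
        awk '{ print $2, $4 }'
}

# Hypothetical helper: return 0 only if summary lines exist and every
# failed count is zero; otherwise print the summary to stderr and return 1.
ats_log_ok() {
    summary="$(ats_log_failures "$1")"
    if [ -z "$summary" ]; then
        echo "no summary lines found in $1" >&2
        return 1
    fi
    if printf '%s\n' "$summary" | awk '$2 != 0 { bad = 1 } END { exit bad + 0 }'; then
        echo "no failures reported"
    else
        echo "failures reported:" >&2
        printf '%s\n' "$summary" >&2
        return 1
    fi
}
```

Because it returns a non-zero status on failure, `ats_log_ok` can be used as a gate in a follow-up script.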

4.3.5 NSSF-Regression Pipeline

This pipeline contains test cases from the previous versions.

Some of these test cases have been updated to match the new implementation of NSSF.

The configuration method and parameters are the same as the NewFeatures pipeline.

- Availability Update: These feature files are listed with the prefix "Update".

- Configuration: These feature files are listed with the prefix "failure".

- Registration: These feature files are listed with the prefix "NsSelection_Registration".

- PDU Session: These feature files are listed with the prefix "NsSelection_PDU".

- SuportedFeatures: This feature file has Feature Negotiation test cases.

- subscription_patch: This feature file has SUBMOD test cases.

- eanan: This feature file has EANAN test cases.

- TAI Range: This feature file has TAI Range supported NsAvailability and NsSelection test cases.

- NSSF_Sending_Notification_via_ARS_SCP: This feature file has test cases related to NRF discovery and subscription.

- NSSF Sanity: This feature file contains all the basic sanity cases for NSSF ATS 24.3.0.

- NSSF_Allowed_NSSAI_computation_RegUeConf: This feature file contains test cases related to NSSF Allowed SNSSAI computation based on enabling and disabling the PLMN-based System Options.

- NSSF_Allowed_NSSAI_computation_ueconfig_update: This feature file contains test cases related to NSSF Allowed SNSSAI computation based on enabling and disabling the PLMN-based System Options.

- NSSF_Authorization_NonTrustedAMF_NsAvailPATCH: This feature file contains test cases related to PATCH requests for the Update operation of NSSAI Availability for non-trusted AMFs.

- NSSF_Authorization_NonTrustedAMF_NsAvailPUT: This feature file contains test cases related to PUT requests for the Update operation of NSSAI Availability for non-trusted AMFs.

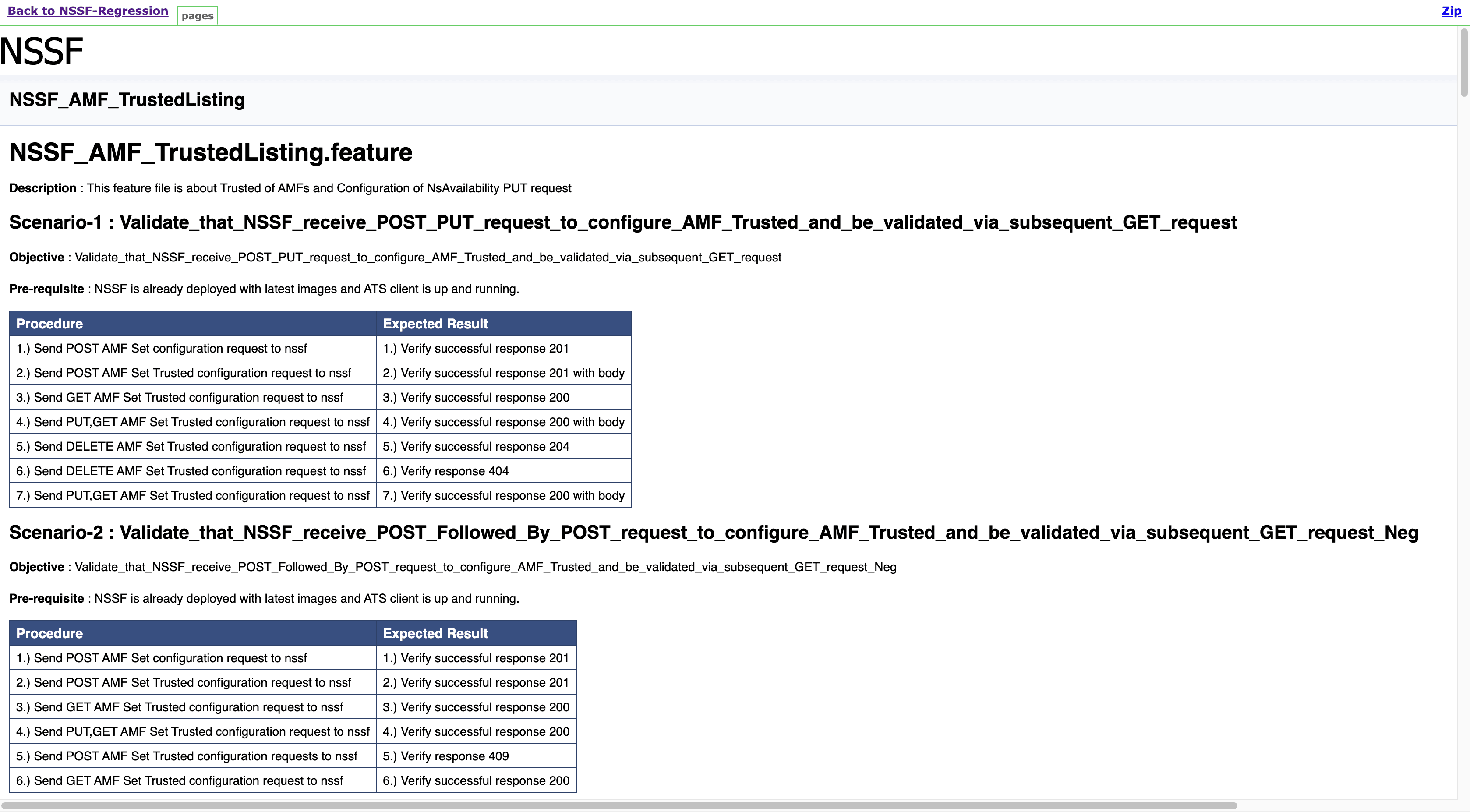

- AMF_TrustedListing: This feature file contains test cases related to the trusted listing of AMFs and the configuration of NsAvailability PUT requests.
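When you work with many regression feature files, the prefix convention listed above can be applied mechanically. The following is a minimal sketch, assuming the files use the `.feature` extension; the function name `regression_category` and the example file names are hypothetical, and files without one of the four listed prefixes (such as eanan or TAI Range) are reported as Other.

```shell
# Hypothetical helper: map an NSSF-Regression feature file name to the
# category implied by its prefix. Assumes a ".feature" file extension.
regression_category() {
    name="$(basename "$1" .feature)"   # drop any directory and the extension
    case "$name" in
        Update*)                   echo "Availability Update" ;;
        failure*)                  echo "Configuration" ;;
        NsSelection_Registration*) echo "Registration" ;;
        NsSelection_PDU*)          echo "PDU Session" ;;
        *)                         echo "Other" ;;
    esac
}
```

For example, `for f in *.feature; do printf '%s: %s\n' "$f" "$(regression_category "$f")"; done` lists each file in the current directory with its category.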

- In the above screen:

- Click Configure to access the configuration screen.

- Click Documentation to view the documented test cases.

- Click the blue dots in the Build History box to view the console logs of the successful "All" and "Sanity" runs, respectively.

- The Stage View shows a previously run pipeline for reference.

- Click Configure. Users MUST wait for the page to load completely. Once the page loads completely, click the Pipeline tab to reach the Pipeline configuration, as shown below:

WARNING:

Make sure that the screen shown above loads completely before you perform any action on it. Also, do not modify any configuration other than that discussed below.

- You can modify the script pipeline parameters "a" to "B" based on your deployment environment, and then click Save. The content of the pipeline script is as follows:

```groovy
node ('built-in'){
    //a = SELECTED_NF b = NF_NAMESPACE c = INGRESS_GATEWAY_IP d = EGRESS_GATEWAY_IP
    //e = PROMETHEUS_SVC_IP f = STUB_IP g = PROMETHEUS_PORT h = RERUN_COUNT
    //i = NF_DB j = NF_DB_SECRET k = NSCONFIG_IP l = NRF_STUB_IP
    //m = HELM_RELEASE_NAME n = ATS_RELEASE_NAME o = NSSF_INGRESS_GATEWAY_PORT
    //p = NSSF_EGW_PORT q = NSSF_CONFIG_PORT r = NSSF_SELECTION_SVC_NAME
    //s = NSSF_AUDITOR_SVC t = NSSF_AVAILABILITY_SVC_NAME u = NSSF_SUBSCRIPTION_SVC_NAME
    //v = NSSF_APP_INFO_SVC w = NSSF_PERFINFO_SVC x = NSSF_INSTANCEID
    //y = NRF_STUB_1_SVC_NAME z = NRF_STUB_2_SVC_NAME A = NSSF_NRF_CLIENT_SVC_NAME
    //B = SUPPORTED_PLMN_LIST_MCC_MNC
    withEnv([ ]){
        sh '''
            sh /var/lib/jenkins/ocnssf_tests/preTestConfig.sh \
            -a NSSF \
            -b ocnssf \
            -c ocnssf-ingress-gateway.ocnssf \
            -d ocnssf-egress-gateway.ocnssf \
            -e occne-prometheus-server.occne-infra \
            -f amf-stubserver.ocnssf \
            -g 80 \
            -h 2 \
            -i ocnssf-nsdb.ocnssf \
            -j ocnssf-db-creds \
            -k ocnssf-nsconfig.ocnssf \
            -l nrf-stubserver.ocnssf \
            -m ocnssf \
            -n ocats \
            -o 8081 \
            -p 8080 \
            -q 8080 \
            -r ocnssf-nsselection.ocnssf \
            -s ocnssf-nsauditor.ocnssf \
            -t ocnssf-nsavailability.ocnssf \
            -u ocnssf-nssubscription.ocnssf \
            -v ocnssf-ocnssf-app-info.ocnssf \
            -w ocnssf-ocnssf-perf-info.ocnssf \
            -x 9faf1bbc-6e4a-4454-a507-aef01a101a01 \
            -y nrf-stubserver1.ocnssf \
            -z nrf-stubserver2.ocnssf \
            -A ocnssf-ocnssf-nrf-client-nfmanagement.ocnssf \
            -B 311 480 \
        '''
        if(env.Include_NewFeatures && "${Include_NewFeatures}" == "YES"){
            sh '''sh /var/lib/jenkins/common_scripts/merge_jenkinsfile.sh'''
            load "/var/lib/jenkins/ocnssf_tests/jenkinsData/Jenkinsfile-Merged"
        }
        else{
            load "/var/lib/jenkins/ocnssf_tests/jenkinsData/Jenkinsfile-Regression"
        }
    }
}
```

Note:

The user MUST NOT change any value other than these parameters. The parameters are described as follows:

- a: Name of the NF to be tested, in capitals (NSSF).

- b: Namespace in which NSSF is deployed (default is ocnssf).

- c: Ingress Gateway IP address (default is ocnssf-ingress-gateway.ocnssf).

- d: Egress Gateway IP address (default is ocnssf-egress-gateway.ocnssf).

- e: Prometheus service IP address (default is occne-prometheus-server.occne-infra).

- f: Stub service IP address (default is amf-stubserver.ocnssf).

- g: Port of the Prometheus service (default is 80).

- h: Number of times a failed case can be re-run (default is 2).

- i: Database name (default is ocnssf-nsdb.ocnssf).

- j: Database secret (default is ocnssf-db-creds).

- k: NSSF Config IP address (default is ocnssf-nsconfig.ocnssf).

- l: NRF stub server IP address (default is nrf-stubserver.ocnssf).

- m: NSSF release name (default is ocnssf).

- n: ATS release name (default is ocats).

- o: NSSF Ingress Gateway port (default is 8081).

- p: NSSF Egress Gateway port (default is 8080).

- q: NSSF Config port (default is 8080).

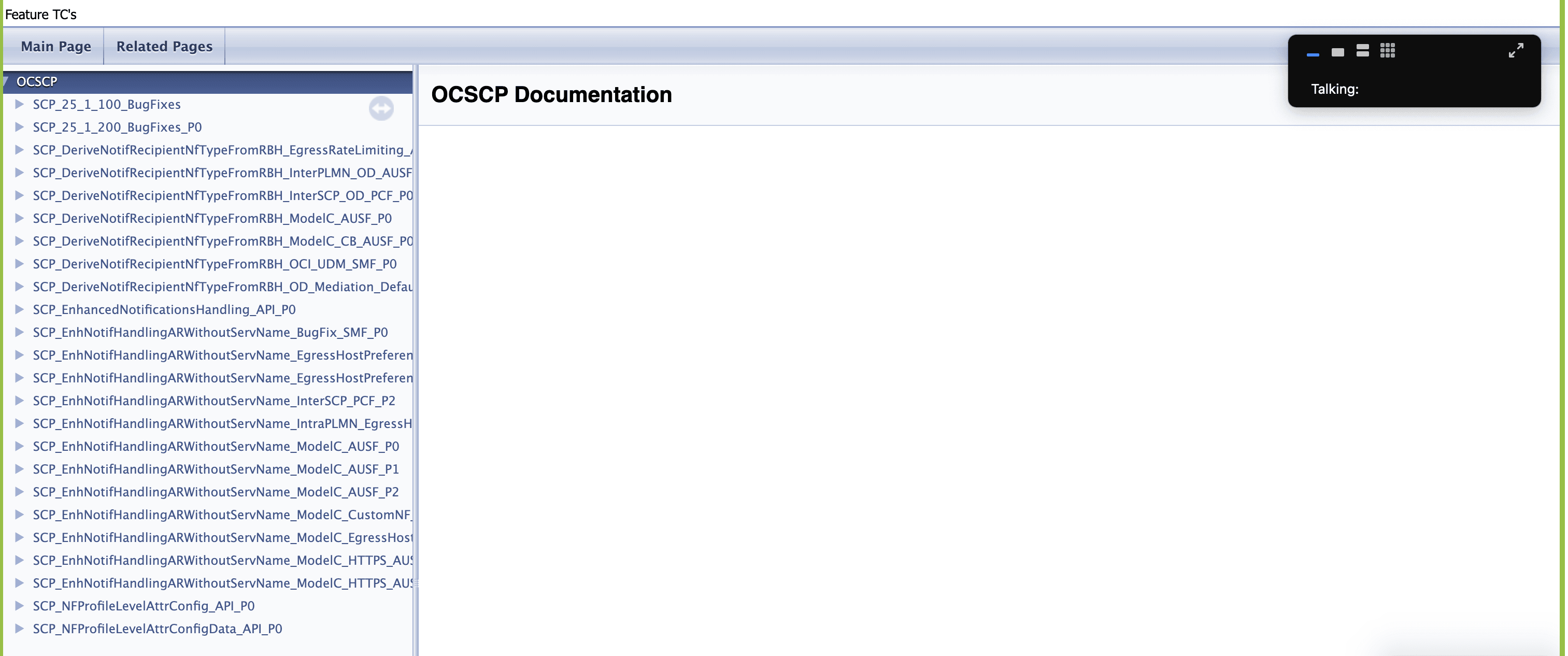

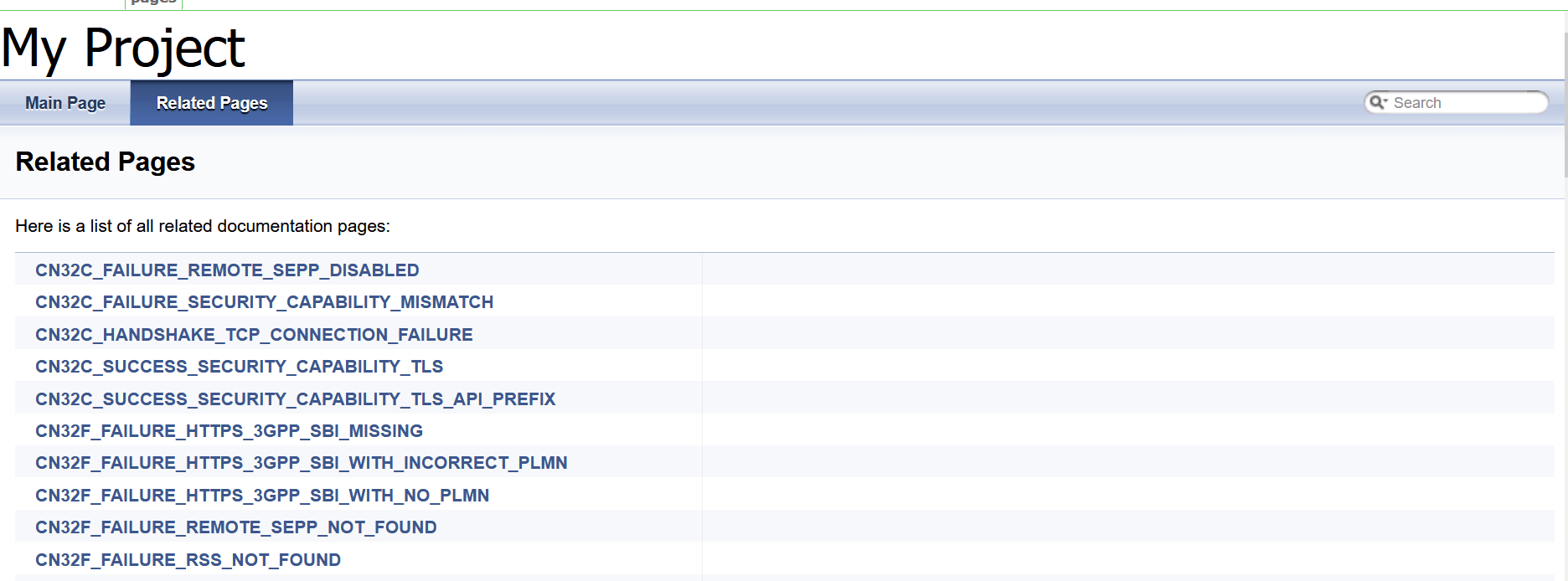

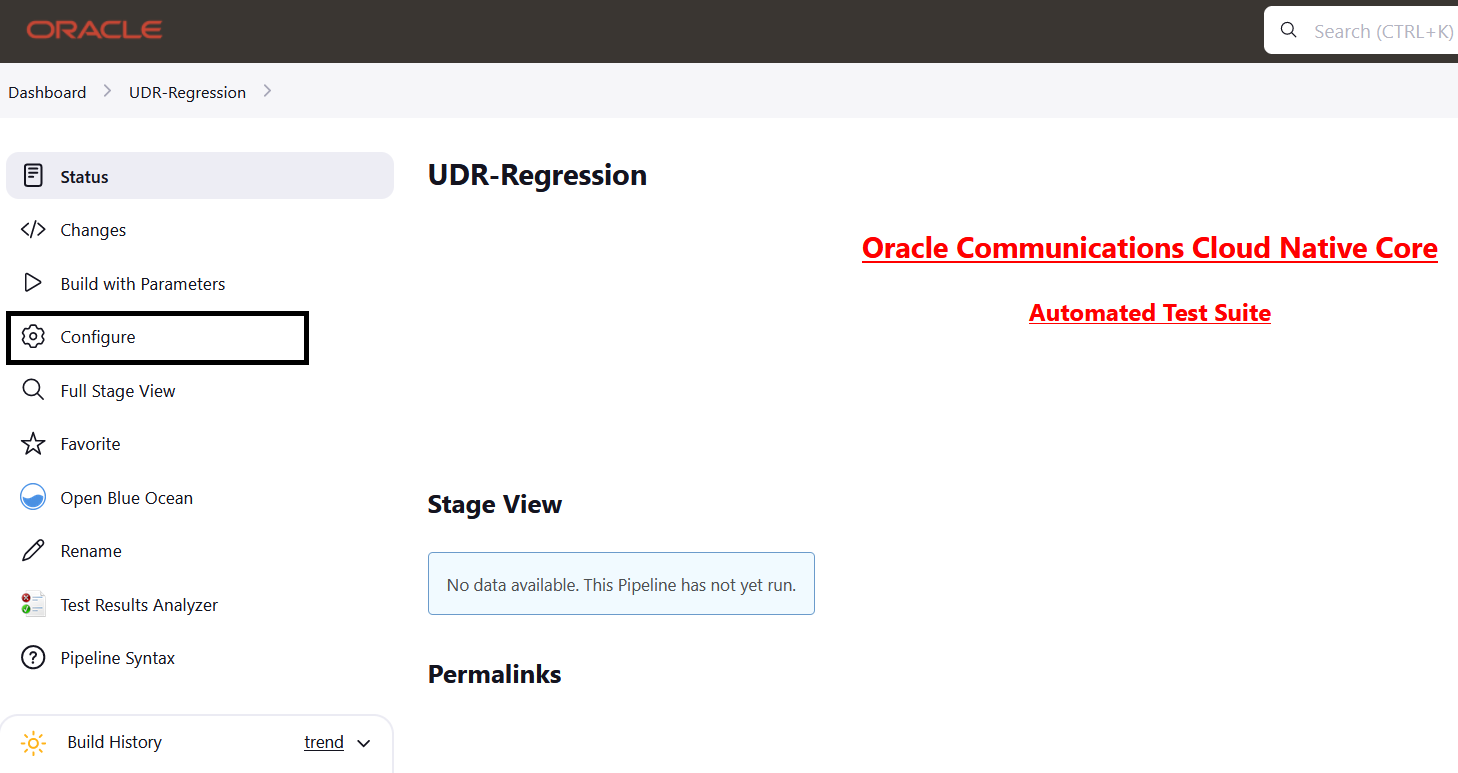

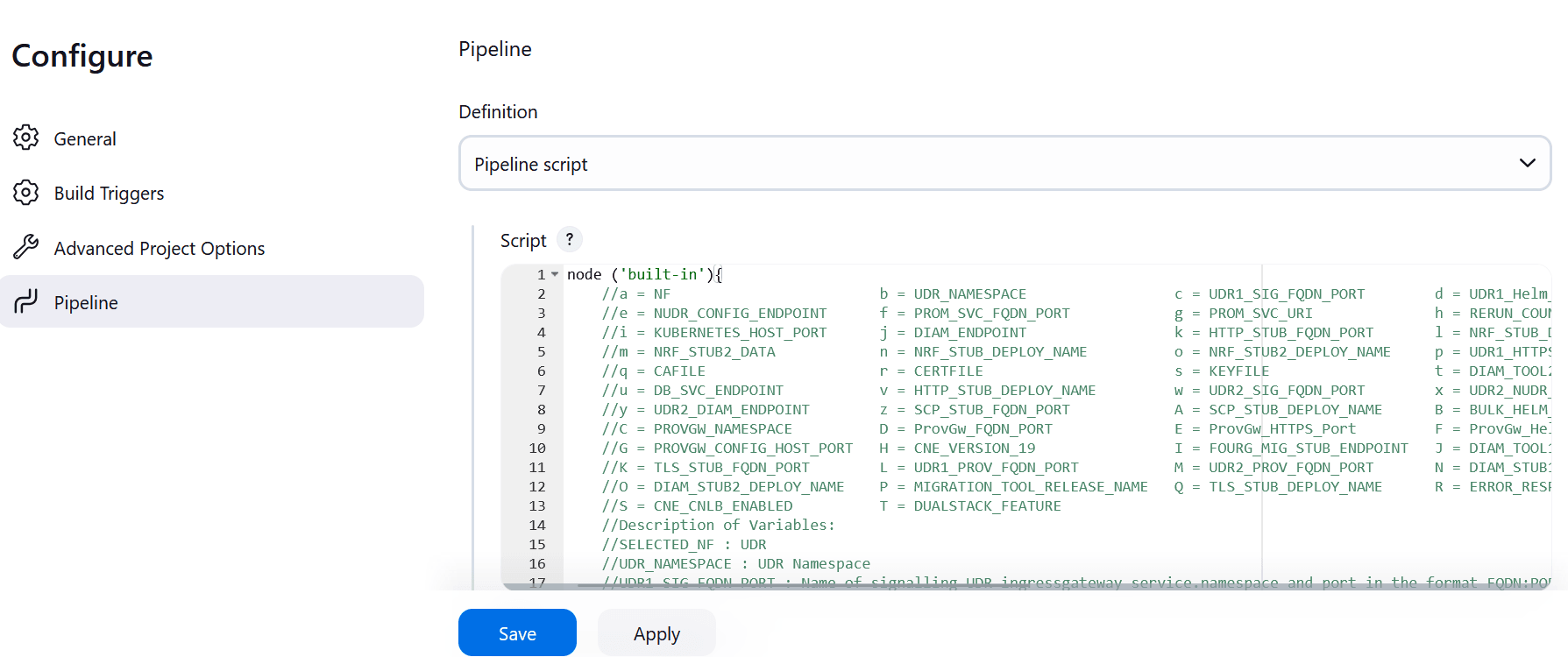

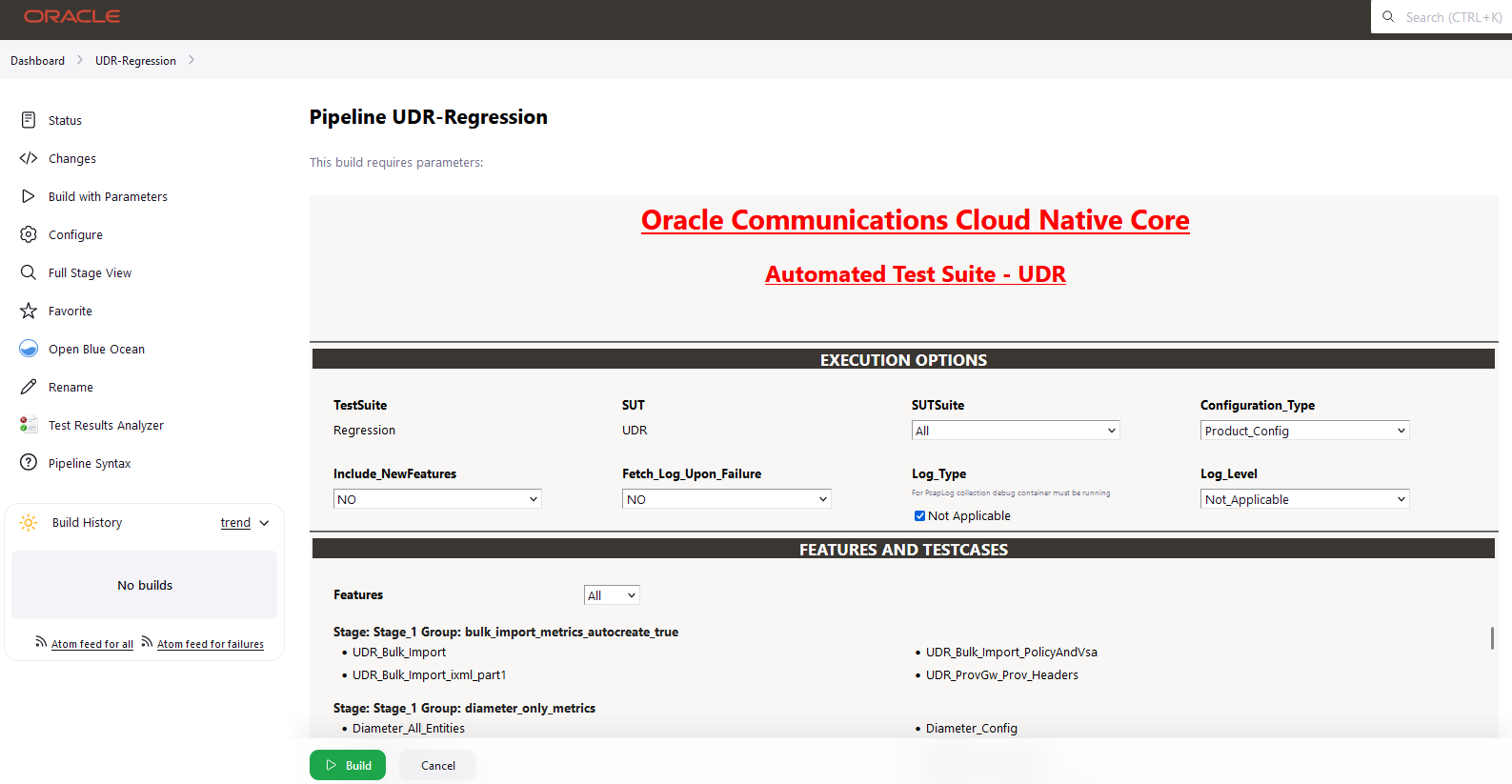

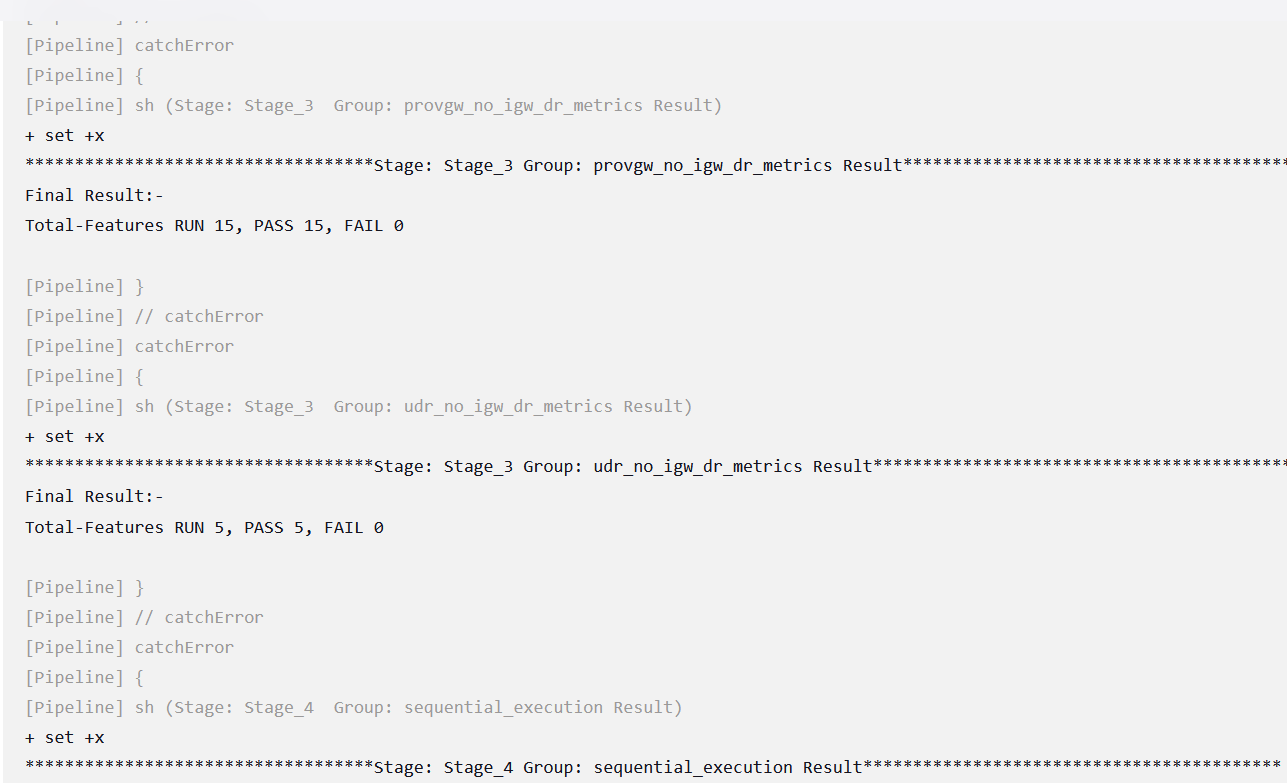

- r: NSSF Selection service name (default is ocnssf-nsselection.ocnssf).