4 NRF Features

This section explains the NRF features.

4.1 Pod Protection Using Rate Limiting

- Ingress Gateway Pod Protection Using Rate Limiting

- Egress Gateway Pod Protection Using Rate Limiting

4.1.1 Egress Gateway Pod Protection Using Rate Limiting

The Egress Gateway microservice manages all outgoing traffic from Network Functions (NFs). The Egress Gateway microservice may become vulnerable due to overload conditions during unexpected traffic spikes, uneven traffic distribution, or bursts caused by network fluctuations. To ensure system stability and prevent potential network outages, a protection mechanism for the Egress Gateway microservice is necessary.

With the implementation of this feature, a rate-limiting mechanism is applied to Egress Gateway pods. This mechanism allows you to set a maximum number of requests that a pod can process. When the request rate exceeds the threshold, the pods take action to protect themselves. They either reject additional requests with a custom error code or allow them, depending on the configuration. Each Egress Gateway pod has its own Pod Protection Policer, which is applied individually.

The Egress Gateway's configuration for this feature includes:

- Fill Rate: This sets the maximum number of requests a pod can handle in a defined time interval. It is also possible to allocate a specific percentage of the fill rate to individual path and method combinations.

- Error Code Profiles: This allows you to reject requests with a predefined error code profile.

- Congestion Configuration: This allows you to configure the congestion levels based on CPU resource utilization.

- Default Priority: This allows you to configure the priority for different routes.

- Denied Request Actions: This defines the action to be taken when the fill rate is exceeded. The action depends on the priority and the action configured in the denied request actions. The possible actions are 'CONTINUE', which allows requests to be processed, and 'REJECT', which rejects requests with a specified error code. Egress Gateway rejects all requests that exceed the fill rate unless a specific 'CONTINUE' action is defined in the denied request actions.

The fill rate represents the maximum number of requests a pod can handle when traffic is uniformly distributed within a one-second interval. In bursty traffic scenarios, where the traffic is not uniformly distributed within the one-second interval, some requests may still fail even if the average traffic received is within the fill rate.
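The effect of bursts can be illustrated with a small sketch. It assumes, purely for illustration, that the one-second budget is enforced in equal sub-second slices; the slice count and the function name are hypothetical, and the gateway's actual policer may work differently.

```python
def admitted_per_second(arrivals_per_slice, fill_rate):
    """Count admitted requests when the one-second budget is split evenly
    across the slices (an illustrative assumption, not the gateway's algorithm)."""
    slices = len(arrivals_per_slice)
    budget_per_slice = fill_rate // slices  # hypothetical per-slice budget
    return sum(min(arrivals, budget_per_slice) for arrivals in arrivals_per_slice)


fill_rate = 1000
uniform = [100] * 10       # 1000 requests spread evenly over ten 100 ms slices
bursty = [1000] + [0] * 9  # the same 1000 requests arriving in the first slice

uniform_admitted = admitted_per_second(uniform, fill_rate)
bursty_admitted = admitted_per_second(bursty, fill_rate)
print(uniform_admitted, bursty_admitted)
```

Both traffic patterns average 1000 requests per second, but under this assumed model only the uniform pattern is admitted in full.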

The congestion level is checked at a fixed interval (congestionconfig.refreshInterval), and the action is decided based on the congestion level in that interval. The CPU level might toggle between these intervals, causing the congestion level to switch frequently. To know the detected congestion level, see the oc_egressgateway_congestion_level_bucket_total metric.

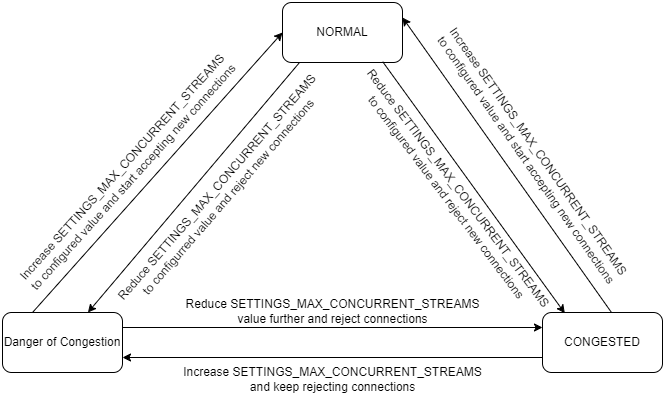

The following table describes NRF's configuration for different congestion levels for Egress Gateway pods:

Table 4-1 Congestion Levels of Pods

| Level Value | Abatement Threshold | Onset Threshold | Level Name | Description |
|---|---|---|---|---|
| 1 | 58 | 65 | Normal | The default congestion level of pod is "0". The pod moves from Normal to default when the CPU is less than the abatement of Normal (58). The pod moves from default to Normal state when the CPU exceeds onset of Normal (65). The pod moves from Danger of Congestion to Normal state, when the CPU is less than abatement of Danger of Congestion (70). |
| 2 | 70 | 75 | Danger of Congestion | The pod moves from Danger of Congestion to Normal, when the CPU is less than abatement of Danger of Congestion (70). The pod moves from Normal to Danger of Congestion state when the CPU exceeds onset of Danger of Congestion (75). The pod moves from Congested to Danger of Congestion when the CPU is less than abatement of Congested (80). |
| 3 | 80 | 85 | Congested | The pod moves from Congested to Danger of Congestion when the CPU is less than abatement of Congested (80). The pod moves from Danger of Congestion to Congested state when the CPU exceeds onset of Congested (85). |

Note:

- If the current level is at L3 (Congested with onset value of 85) and the CPU value is 66, the level would be marked as L1 (Normal). The abatement value is considered only when the switching is between the adjacent levels (L2 (Danger of Congestion) to L1 (Normal) and L3 (Congested) to L2 (Danger of Congestion)).

- When the NRF Discovery microservice receives an overload response from the Egress Gateway microservice, it does not retry or reroute the request. An error response is generated based on the specific feature configurations like NRF Forwarding, Subscriber Location Function, and Roaming.

- Egress Gateway microservice performs route validation before applying any other gateway service filters. Consequently, requests that don't match a valid route in routesConfig in REST are not subject to rate limiting. NRF always has a default route configured. Unless the routes are explicitly removed, all the Egress Gateway messages go through rate limiting. For more details on routesConfig, see Oracle Communications Cloud Native Core, Network Repository Function REST API Guide.
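The onset and abatement thresholds in Table 4-1 can be read as a small state machine. The following sketch is one possible reading, not NRF's implementation: it raises the level as soon as the CPU exceeds the onset of a higher level, and steps down one level at a time while the CPU is below the abatement of the level it is in. The function name and the step-down rule are assumptions, and other readings are possible for drops across non-adjacent levels.

```python
# Thresholds from Table 4-1: level -> (abatement, onset)
THRESHOLDS = {1: (58, 65), 2: (70, 75), 3: (80, 85)}


def next_level(current, cpu):
    """Return the congestion level after observing a CPU percentage."""
    # Move up: jump to the highest level whose onset the CPU exceeds.
    for level in sorted(THRESHOLDS, reverse=True):
        if level > current and cpu > THRESHOLDS[level][1]:
            return level
    # Move down: leave a level while the CPU is below its abatement (assumed rule).
    level = current
    while level > 0 and cpu < THRESHOLDS[level][0]:
        level -= 1
    return level


print(next_level(3, 66))  # case from the note: Congested with CPU at 66
```

With this reading, a pod at Congested with a CPU value of 66 ends at level 1 (Normal), which agrees with the note above.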

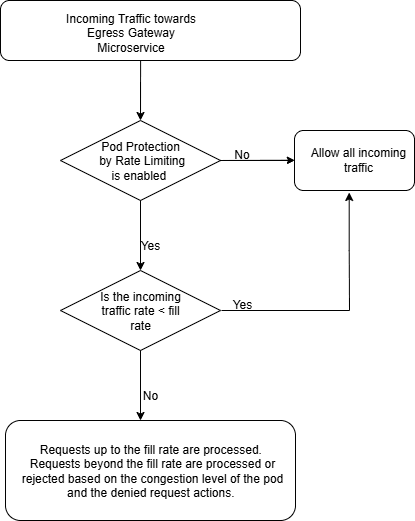

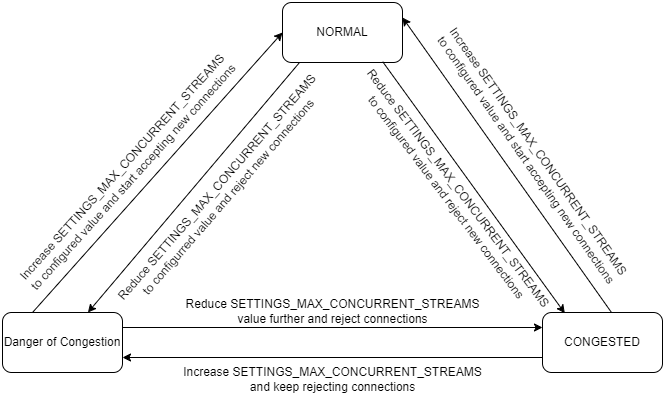

The following diagram explains the Egress Gateway Pod Protection Using Rate Limiting mechanism:

Figure 4-1 Egress Gateway Pod Protection Using Rate Limiting

- NRF sends traffic to the Egress Gateway microservice.

- Egress Gateway pod checks whether the Pod Protection Using Rate Limiting feature is enabled.

- If the feature is enabled, Egress Gateway compares the outgoing traffic rate with the fill rate that is defined for the rate limiting feature.

  - If the outgoing request rate is below the fill rate, all the outgoing traffic is processed.

  - If the outgoing request rate exceeds the fill rate, requests up to the fill rate are processed. The requests beyond the fill rate are processed or rejected based on the congestion level of the pod and the denied request actions (priority and action defined). If there is no priority defined in the denied request actions, all the requests are rejected.

    - At default or Normal congestion levels, all the requests are processed.

    - At Danger of Congestion, the requests with the defined priority (1-2) are processed.

    - At Congested level, only the highest priority traffic (priority 1) is processed; lower-priority traffic is rejected.

Examples

Consider the following configuration for the {apiRoot}/nrf/nf-common-component/v1/egw/podProtectionByRateLimiting API:

    {
      "enabled": true,
      "fillRate": 2700,
      "routes": [
        {
          "id": 1,
          "methods": ["GET"],
          "path": "/nudr-group-id-map/v1/nf-group-ids",
          "percentage": 70,
          "defaultPriority": 3
        },
        {
          "id": 2,
          "methods": ["GET"],
          "path": "/nnrf-disc/v1/nf-instances",
          "percentage": 4,
          "defaultPriority": 2
        },
        {
          "id": 3,
          "methods": ["POST"],
          "path": "/nnrf-nfm/v1/subscriptions",
          "percentage": 3,
          "defaultPriority": 2
        },
        {
          "id": 4,
          "methods": ["POST"],
          "path": "/oauth2/token",
          "percentage": 3,
          "defaultPriority": 2
        },
        {
          "id": 5,
          "methods": ["GET"],
          "path": "/nnrf-nfm/v1/nf-instances/**",
          "percentage": 10,
          "defaultPriority": 2
        },
        {
          "id": 6,
          "methods": ["POST"],
          "path": "/**",
          "percentage": 10,
          "defaultPriority": 1
        }
      ],
      "errorCodeProfile": "error429",
      "deniedRequestActions": [
        {
          "id": 1,
          "congestionLevel": 0,
          "action": "CONTINUE"
        },
        {
          "id": 2,
          "congestionLevel": 1,
          "action": "CONTINUE"
        },
        {
          "id": 3,
          "priority": "1-2",
          "congestionLevel": 2,
          "action": "CONTINUE"
        },
        {
          "id": 4,
          "priority": "1",
          "congestionLevel": 3,
          "action": "CONTINUE"
        }
      ],
      "priorityHeaderName": "3gpp-Sbi-Message-Priority",
      "defaultPriority": 24
    }

Any request that does not match the action and the priority level configured in the denied request actions is rejected.

For example, in congestion level 2, requests other than priority 1 and 2 are candidates for rejection. Similarly for congestion level 3, requests other than priority 1 are candidates for rejection. There is no need for defining rejection explicitly for these actions.

- Route Id 1: 70% allocation (1890 out of 2700), priority 3

- Route Id 2: 4% allocation (108 out of 2700), priority 2

- Route Id 3: 3% allocation (81 out of 2700), priority 2

- Route Id 4: 3% allocation (81 out of 2700), priority 2

- Route Id 5: 10% allocation (270 out of 2700), priority 2

- Route Id 6: 10% allocation (270 out of 2700), priority 1
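The per-route allocations listed above follow directly from the fill rate and the route percentages. A minimal sketch of the arithmetic, using the values from the example configuration (the variable names are illustrative):

```python
fill_rate = 2700
# Route id -> percentage, taken from the example configuration
percentages = {1: 70, 2: 4, 3: 3, 4: 3, 5: 10, 6: 10}

# Each route is allocated its percentage of the fill rate.
allocations = {route: fill_rate * pct // 100 for route, pct in percentages.items()}

for route, allocation in allocations.items():
    print(f"Route Id {route}: {allocation} out of {fill_rate}")
```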

Example 1: Handling TPS of 2835 in Danger of Congestion Mode

If the incoming Transactions Per Second (TPS) is 2835, this exceeds the configured fill rate by 135 requests. As a result, the system enters a "Danger of Congestion" state.

- Route Ids 2, 3, 4, and 6 receive incoming TPS equal to their allocated fill rates. Therefore, all requests for these routes are accepted and processed:

- Route Id 2: 108 requests (4%)

- Route Id 3: 81 requests (3%)

- Route Id 4: 81 requests (3%)

- Route Id 6: 270 requests (10%)

- Route Id 5 receives 135 requests, which is less than its allocated fill rate of 270 requests (10%). As such, all 135 requests are accepted for processing.

- Route Id 1 receives 2160 requests, exceeding its allocation of 1890 requests (70%). The system initially accepts up to the allocated 1890 requests for processing. Since Route Id 5 processed fewer requests than its allocation (135 instead of 270), the unused capacity (135 requests) is applied to Route Id 1, allowing a total of 2025 requests for processing on this route.

- The remaining 135 requests from Route Id 1 are rejected, as the configured fill rate is already met and Route Id 1 has the lowest priority (priority 3). According to the specified congestion management policy, requests with priority 3 are rejected at congestion level 2.

Example 2: Handling TPS of 2970 in Congested Mode

When the incoming Transactions Per Second (TPS) reaches 2970, it exceeds the fill rate of 2700 by 270 requests. The system enters Congested mode.

Route processing details

- Route Id 2 receives 108 requests, corresponding to the defined 4%. All 108 requests are accepted for processing.

- Route Id 3 receives 81 requests, which meets the defined 3%. All 81 requests are processed.

- Route Id 4 also receives 81 requests, equaling the defined 3%. All requests are processed.

- Route Id 5 receives 270 requests, aligning with the defined 10%. All 270 requests are allowed.

- Route Id 6 receives 540 requests, exceeding the defined 10%. However, since Route Id 6 has the highest default priority (priority 1) and the denied request action is set to CONTINUE for this priority, all 540 requests are processed.

- Route Id 1 receives 1890 requests, meeting the defined 70% allocation. Due to lower priority (priority 3) under congestion, only 1620 requests are accepted, and the remaining 270 requests are rejected.
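The outcome of both examples can be reproduced with a deliberately simplified model. It is a sketch under stated assumptions, not the gateway's algorithm: routes whose priority has a CONTINUE action at the current congestion level are processed in full, and the remaining routes share whatever is left of the fill rate. The model ignores the per-route percentages, and the function name `admit` is hypothetical.

```python
def admit(incoming, priorities, fill_rate, continue_priorities):
    """Return route id -> requests processed in one second (simplified model)."""
    processed = {}
    # Routes whose priority has a CONTINUE action are processed in full.
    for route, count in incoming.items():
        if priorities[route] in continue_priorities:
            processed[route] = count
    remaining = max(fill_rate - sum(processed.values()), 0)
    # The other routes share what is left of the fill rate, best priority first.
    for route in sorted(incoming, key=lambda r: priorities[r]):
        if route not in processed:
            processed[route] = min(incoming[route], remaining)
            remaining -= processed[route]
    return processed


priorities = {1: 3, 2: 2, 3: 2, 4: 2, 5: 2, 6: 1}

# Example 1: congestion level 2, CONTINUE for priorities 1-2
example1 = admit({1: 2160, 2: 108, 3: 81, 4: 81, 5: 135, 6: 270}, priorities, 2700, {1, 2})
# Example 2: congestion level 3, CONTINUE for priority 1 only
example2 = admit({1: 1890, 2: 108, 3: 81, 4: 81, 5: 270, 6: 540}, priorities, 2700, {1})

print(example1[1], example2[1])  # requests processed on Route Id 1
```

Under this model, Route Id 1 is left with 2025 processed requests in Example 1 and 1620 in Example 2, which matches the figures above.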

Managing the feature

Prerequisite

Note:

This feature is disabled by default. It is recommended to enable the Egress Gateway Pod Protection Using Rate Limiting feature over the Egress Gateway Pod Throttling feature. The Egress Gateway Pod Throttling feature will be deprecated in the upcoming releases. You must disable the Egress Gateway Pod Throttling feature, if enabled, by performing a Helm upgrade before proceeding to enable this feature. For more information about disabling the existing Egress Gateway Pod Throttling feature, see Egress Gateway Pod Throttling.

- global.egwGeneratedErrorCheckDuringOverLoad

- global.egwResponseCodesDuringOverLoad

- global.noRetryDuringEgwOverload

For more information about the above parameters, see the "Global Parameters" section in Oracle Communications Cloud Native Core, Network Repository Function Installation, Upgrade, and Fault Recovery Guide.

Configure

- Configure using REST API: Perform the following configurations for this feature:

  - Configure the Error code profile using the {apiRoot}/nrf/nf-common-component/v1/egw/errorcodeprofiles API.

  - Configure the congestion level of the pods based on CPU using the {apiRoot}/nrf/nf-common-component/v1/egw/congestionConfig API.

  For more information about these APIs, see Oracle Communications Cloud Native Core, Network Repository Function REST Specification Guide.

- Configure using CNC Console:

  - Configure the Error code Profiles, as described in Error Code Profile.

  - Create or update the Congestion Level Configuration, as in Congestion Level Configuration.

Enable

- Enable using REST API: Configure the attributes in the {apiRoot}/nrf/nf-common-component/v1/egw/podProtectionByRateLimiting API to enable the feature. For more information about this API, see "Pod Protection By Rate Limiting" in Oracle Communications Cloud Native Core, Network Repository Function REST Specification Guide.

- Enable using CNC Console: Configure the parameters as mentioned in Pod Protection By Rate Limiting.

Note:

Use the recommended values in the above configuration to enable this feature.

Observability

Metrics

The following metrics are added for this feature:

- oc_egressgateway_http_request_ratelimit_values_total

- oc_egressgateway_http_request_ratelimit_reject_chain_duration

- oc_egressgateway_http_request_ratelimit_reject_chain_length

- oc_egressgateway_http_request_ratelimit_denied_count_total

- oc_egressgateway_congestion_cpu_state

- oc_egressgateway_congestion_system_state

- oc_egressgateway_system_state_duration_percentage

- oc_egressgateway_congestion_level_total

- oc_egressgateway_congestion_level_bucket_total

- oc_egressgateway_congestion_cpu_percentage_bucket

For more information about the metrics, see Egress Gateway Metrics.

Alerts

The alerts added for this feature are described in the Egress Gateway Pod Protection Using Rate Limiting section.

KPIs

The following KPIs are added for this feature:

- Allowed Requests

- Reject Chain Length

- Discard Action

- Congestion Level

For more information about KPIs, see Egress Gateway Pod Protection Using Rate Limiting.

Maintain

If you encounter alerts at system or application levels, see the NRF Alerts section for resolution steps.

In case the alerts still persist, perform the following:

- Collect the logs: For more information on collecting logs, see Oracle Communications Cloud Native Core, Network Repository Function Troubleshooting Guide.

- Raise a service request: See My Oracle Support for more information on how to raise a service request.

4.1.2 Ingress Gateway Pod Protection Using Rate Limiting

The Ingress Gateway microservice manages all incoming traffic from Network Functions (NFs). The Ingress Gateway microservice may become vulnerable due to overload conditions during unexpected traffic spikes, uneven traffic distribution, or bursts caused by network fluctuations. To ensure system stability and prevent potential network outages, a protection mechanism for the Ingress Gateway microservice is necessary.

With the implementation of this feature, a rate-limiting mechanism is applied to Ingress Gateway pods. This mechanism allows you to set a maximum number of requests that a pod can process. When the request rate exceeds the threshold, the pods take action to protect themselves. They either reject additional requests with a custom error code or allow them, depending on the configuration. Each Ingress Gateway pod has its own Pod Protection Policer, which is applied individually.

The Ingress Gateway's configuration for this feature includes:

- Fill Rate: This sets the maximum number of requests a pod can handle in a defined time interval. It is also possible to allocate a specific percentage of the fill rate to individual path and method combinations.

- Error Code Profiles: This allows you to reject requests with a predefined error code profile.

- Congestion Configuration: This allows you to configure the congestion levels based on CPU resource utilization.

- Denied Request Actions: This defines the action to be taken when the fill rate is exceeded. The options are 'CONTINUE', which allows requests to be processed, and 'REJECT', which rejects requests with a specified error code. Ingress Gateway rejects all requests that exceed the fill rate unless a specific 'CONTINUE' action is defined in the denied request actions.

The fill rate represents the maximum number of requests a pod can handle when traffic is uniformly distributed within a one-second interval. In bursty traffic scenarios, where the traffic is not uniformly distributed within the one-second interval, some requests may still fail even if the average traffic received is within the fill rate.

The congestion level is checked at a fixed interval (congestionconfig.refreshInterval), and the action is decided based on the congestion level in that interval. The CPU level might toggle between these intervals, causing the congestion level to switch frequently. To know the detected congestion level, see the oc_ingressgateway_congestion_level_bucket_total metric.

The following table describes NRF's configuration for different congestion levels for Ingress Gateway pods:

Table 4-2 Congestion Levels of Pods

| Level Value | Abatement Threshold | Onset Threshold | Level Name | Description |
|---|---|---|---|---|
| 1 | 58 | 65 | Normal | The default congestion level of pod is "0". The pod moves from Normal to default when the CPU is less than the abatement of Normal (58). The pod moves from default to Normal state when the CPU exceeds onset of Normal (65). The pod moves from Danger of Congestion to Normal state, when the CPU is less than abatement of Danger of Congestion (70). |
| 2 | 70 | 75 | Danger of Congestion | The pod moves from Danger of Congestion to Normal, when the CPU is less than abatement of Danger of Congestion (70). The pod moves from Normal to Danger of Congestion state when the CPU exceeds onset of Danger of Congestion (75). The pod moves from Congested to Danger of Congestion when the CPU is less than abatement of Congested (80). |
| 3 | 80 | 85 | Congested | The pod moves from Congested to Danger of Congestion when the CPU is less than abatement of Congested (80). The pod moves from Danger of Congestion to Congested state when the CPU exceeds onset of Congested (85). |

Note:

- If the current level is at L3 (Congested with onset value of 85) and the CPU value is 66, the level would be marked as L1 (Normal). The abatement value is considered only when the switching is between the adjacent levels (L2 (Danger of Congestion) to L1 (Normal) and L3 (Congested) to L2 (Danger of Congestion)).

- When NRF rejects the requests, it is expected that the consumer NFs reroute to alternate NRFs.

- Ingress Gateway microservice rate limiting applies only to signaling messages and does not impact internal messages, such as requests from perf-info to the Ingress Gateway microservice and message copy requests.

- Ingress Gateway microservice performs route validation before applying any other gateway service filters. Consequently, requests that don't match a valid route in routesConfig in Helm are not subject to rate limiting. For more details on routesConfig, see Oracle Communications Cloud Native Core, Network Repository Function Installation, Upgrade, and Fault Recovery Guide.

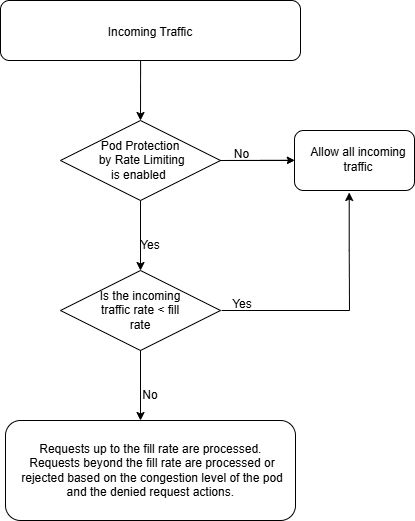

The following diagram explains the Ingress Gateway Pod Protection Using Rate Limiting mechanism:

Figure 4-2 Ingress Gateway Pod Protection Using Rate Limiting

- Consumer NFs send traffic to the Ingress Gateway microservice.

- Ingress Gateway pod checks whether the Pod Protection Using Rate Limiting feature is enabled.

- If the feature is enabled, Ingress Gateway compares the incoming traffic rate with the fill rate that is defined for the rate limiting feature.

  - If the incoming request rate is below the fill rate, all the incoming traffic is processed.

  - If the incoming request rate exceeds the fill rate, requests up to the fill rate are processed. The requests beyond the fill rate are processed or rejected based on the congestion level of the pod and the denied request actions.

Examples

- If fillRate is configured as 1000:

  - If the incoming Transactions Per Second (TPS) is 1100, congestionLevel is Normal, and action is configured as CONTINUE, all the 1100 requests are processed.

  - If the incoming TPS is 1300, congestionLevel is Danger of Congestion, and action is configured as CONTINUE, all the 1300 requests are processed.

  - If the incoming TPS is 1500, congestionLevel is Congested, and action is configured as REJECT, 1000 requests are processed successfully and the remaining 500 requests are rejected with the configured error code.

- If two routes are configured, id1 with 30 percent and id2 with 70 percent, and fillRate is configured as 1000:

  - If the incoming TPS is 1100, congestionLevel is Normal, and action is configured as CONTINUE, all the 1100 requests are processed.

  - If the incoming TPS is 1300, congestionLevel is Danger of Congestion, and action is configured as CONTINUE, all the 1300 requests are processed.

  - If the incoming TPS is 1500, congestionLevel is Congested, and action is configured as REJECT, 300 requests from route id1 and 700 requests from route id2 are processed, and the remaining 500 requests are rejected.
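The scenarios above reduce to simple arithmetic on the fill rate. The following sketch is illustrative only: the function names are hypothetical, and it models a single pod over one second with the action already selected for the current congestion level.

```python
def evaluate(tps, fill_rate, action):
    """Return (processed, rejected) for one second of traffic on one pod."""
    excess = max(tps - fill_rate, 0)
    if action == "CONTINUE":
        return tps, 0            # requests beyond the fill rate are still processed
    return tps - excess, excess  # REJECT: only requests up to the fill rate pass


def route_shares(fill_rate, percentages):
    """Split the fill rate across routes according to their percentages."""
    return {route: fill_rate * pct // 100 for route, pct in percentages.items()}


normal = evaluate(1100, 1000, "CONTINUE")
danger = evaluate(1300, 1000, "CONTINUE")
congested = evaluate(1500, 1000, "REJECT")
shares = route_shares(1000, {"id1": 30, "id2": 70})
print(normal, danger, congested, shares)
```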

Managing the feature

Prerequisites

Note:

It is recommended to enable the Ingress Gateway Pod Protection Using Rate Limiting feature over the Ingress Gateway Pod Protection feature. The Ingress Gateway Pod Protection feature will be deprecated in the upcoming releases. You must disable the Ingress Gateway Pod Protection feature before proceeding to enable this feature. For more information about disabling the existing pod protection feature, see Ingress Gateway Pod Protection.

Configure

- Configure using REST API: Perform the following configurations for this feature:

  - Configure the Error code profile using the {apiRoot}/nrf/nf-common-component/v1/igw/errorcodeprofiles API. Configure the ingressgateway.errorCodeProfiles in Helm. Perform a Helm upgrade. This ingressgateway.errorCodeProfiles value will be used when a request is rejected at Ingress Gateway.

  - Configure the congestion level of the pods based on CPU using the {apiRoot}/nrf/nf-common-component/v1/igw/congestionConfig API.

  For more information about these APIs, see Oracle Communications Cloud Native Core, Network Repository Function REST Specification Guide.

- Configure using CNC Console:

  - Configure the Error code Profiles, as described in Error Code Profile. Configure the ingressgateway.errorCodeProfiles in Helm. Perform a Helm upgrade. This ingressgateway.errorCodeProfiles value will be used when a request is rejected at Ingress Gateway.

  - Create or update the Congestion Level Configuration, as in Congestion Level Configuration.

Enable

- Enable using REST API: Configure the attributes in the {apiRoot}/nrf/nf-common-component/v1/igw/podProtectionByRateLimiting API to enable the feature. For more information about this API, see "Pod Protection By Rate Limiting" in Oracle Communications Cloud Native Core, Network Repository Function REST Specification Guide.

- Enable using CNC Console: Configure the parameters as mentioned in Pod Protection By Rate Limiting.

Note:

Use the recommended values in the above configuration to enable this feature.

Observability

Metrics

The following metrics are added for this feature:

- oc_ingressgateway_http_request_ratelimit_values_total

- oc_ingressgateway_http_request_ratelimit_reject_chain_duration

- oc_ingressgateway_http_request_ratelimit_reject_chain_length

- oc_ingressgateway_http_request_ratelimit_denied_count_total

- oc_ingressgateway_congestion_cpu_state

- oc_ingressgateway_congestion_system_state

- oc_ingressgateway_system_state_duration_percentage

- oc_ingressgateway_congestion_level_total

- oc_ingressgateway_congestion_level_bucket_total

- oc_ingressgateway_congestion_cpu_percentage

For more information about the metrics, see Ingress Gateway Metrics.

Alerts

The alerts added for this feature are described in the Ingress Gateway Pod Protection Using Rate Limiting section.

KPIs

The following KPIs are added for this feature:

- Allowed Request Rate Per Route Id

- Total Rejections Chain Length

- Discard Request Action Traffic Rate

- Pod Congestion Level

For more information about KPIs, see Ingress Gateway Pod Protection Using Rate Limiting.

Maintain

If you encounter alerts at system or application levels, see the NRF Alerts section for resolution steps.

In case the alerts still persist, perform the following:

- Collect the logs: For more information on collecting logs, see Oracle Communications Cloud Native Core, Network Repository Function Troubleshooting Guide.

- Raise a service request: See My Oracle Support for more information on how to raise a service request.

4.2 NF Profile Size Limit

The Nnrf_NFManagement service allows an NF to register, update, or deregister its profile in the NRF. The NF Profile consists of general parameters of the NF Instance and also the parameters of the different NF service instances exposed by the NF Instance. NRF uses the registered profiles for service operations like NfListRetrieval, NfProfileRetrieval, NfDiscover, and NFAccessToken. It is observed that when the size of the NF Profile registered is large, the performance of these service operations degrades due to increased processing time of larger profiles and can cause higher resource utilization and latency.

This feature allows you to specify the maximum size of the NF Profile that can be registered with NRF. The NF Profile size is evaluated during the NFRegister or NFUpdate service operation, and if the size is within the configured maximum limit, the service operation is allowed. If the profile size exceeds the configured limit, the service operation is rejected.

- NFRegister or NFUpdate (PUT):

  - NRF receives the NF Profile from the NF and evaluates the size of the NF Profile against the configured maximum allowed size.

  - The NFRegister or NFUpdate service operation succeeds if the NF Profile size is below the configured size.

  - If the size of the NF Profile has breached the configured size, the NFRegister or NFUpdate service operation fails with a configurable error response.

- NFUpdate (Partial Update):

  - NRF receives the update that the NF wishes to apply to the NF Profile registered at NRF. NRF evaluates the size of the NF Profile after applying the updates to the registered nfProfile.

  - If the size of the NF Profile is below the configured size, the NFUpdate service operation succeeds.

  - If the size of the NF Profile has breached the configured size, the NFUpdate service operation fails with a configurable error response.

- NFHeartBeat:

  - The nfProfile size is not evaluated for the NFHeartBeat service operation. The NFs can continue to send heartbeats even if their profile size has breached the threshold. This can occur only if the bigger profile was registered before enabling the feature.

The ocnrf_nfProfile_size_limit metric shows the size of the nfProfile registered at NRF. The size of the nfProfile is calculated as per the payload received while registering or updating the nfProfile.

Calculating NF Profile Size

- Save the profile payload in a text file without spaces.

- Verify the size of the nfProfile payload using Linux tools like wc.

{"nfInstanceId":"ae137ab7-740a-46ee-aa5c-951806d77b0d","nfType":"BSF","nfStatus":"REGISTERED","heartBeatTimer":30,"plmnList":[{"mcc":"310","mnc":"14"}],"sNssais":[{"sd":"4eaaab","sst":124},{"sd":"daaac8","sst":54},{"sd":"f4aaa6","sst":73}],"nsiList":["slice-1","slice-2"],"fqdn":"BSF.oracle.com","interPlmnFqdn":"BSF.oracle.com","ipv4Addresses":["192.168.2.100","192.168.3.100","192.168.2.110","192.168.3.110"],"ipv6Addresses":["2001:0db8:85a3:0000:0000:8a2e:0370:7334"],"capacity":2000,"load":0,"locality":"US East","bsfInfo":{"ipv4AddressRanges":[{"start":"192.168.0.0","end":"192.168.0.100"}],"ipv6PrefixRanges":[{"start":"2001:db8:8513::/48","end":"2001:db8:8513::/96"}],"dnnList":["abc","DnN-OrAcLe-32","ggsn-cluster-A.mnc012.mcc345.gprs","lemon"]},"nfServiceList":{"ae137ab7-740a-46ee-aa5c-951806d77b0d":{"serviceInstanceId":"ae137ab7-740a-46ee-aa5c-951806d77b0d","serviceName":"nbsf-management","versions":[{"apiVersionInUri":"v1","apiFullVersion":"1.0.0","expiry":"2018-12-03T18:55:08.871Z"}],"scheme":"http","nfServiceStatus":"REGISTERED","fqdn":"BSF.oracle.com","interPlmnFqdn":"BSF.oracle.com","apiPrefix":"","capacity":500,"load":0,"supportedFeatures":"80000000"}}}

$ cat bsf.txt |wc -c

1177

Note:

- The {apiRoot}/nrf-configuration/v1/nfManagementOptions API allows you to configure the maximum size of the profile per nfType. If not configured for a specific nfType, the evaluation is performed using "ALL_NF_TYPES". This is a mandatory configuration.

- The calculated total size of the NfProfile may vary due to updates made by the NRF. The size is determined based on the profile after the NRF has applied its modifications. For instance, the NRF may update certain attributes such as expiry, which follow a timestamp format.
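The size calculation and the per-nfType limit lookup can also be sketched in Python. This is an approximation under stated assumptions, not NRF's implementation: the `limits` mapping and both function names are hypothetical, the real nfManagementOptions schema is not shown here, and treating a size equal to the limit as allowed is an assumption. Note that `wc -c` also counts a trailing newline if the file ends with one.

```python
import json


def profile_size_bytes(profile):
    """Size in bytes of the profile serialized as compact JSON (no extra whitespace)."""
    compact = json.dumps(profile, separators=(",", ":"), ensure_ascii=False)
    return len(compact.encode("utf-8"))


def within_limit(profile, limits):
    """Check the size against a per-nfType limit, falling back to ALL_NF_TYPES."""
    # `limits` is a hypothetical mapping of nfType -> maximum size in bytes.
    limit = limits.get(profile["nfType"], limits["ALL_NF_TYPES"])
    return profile_size_bytes(profile) <= limit


limits = {"ALL_NF_TYPES": 20, "BSF": 10}  # illustrative values only
print(profile_size_bytes({"nfType": "BSF"}))
print(within_limit({"nfType": "BSF"}, limits), within_limit({"nfType": "UDM"}, limits))
```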

Managing the feature

Enable

- Enable Using REST API: Set the value of featureStatus to ENABLED in the {apiRoot}/nrf-configuration/v1/nfManagementOptions URI. For more details, see Oracle Communications Cloud Native Core, Network Repository Function REST Specification Guide.

- Enable Using CNC Console: Set the value of Feature Status to ENABLED in the NF Management Options.

Observability

Metrics

The ocnrf_nfProfile_size_limit_breached metric is added in the NRF NF Metrics section.

Alerts

There are no alerts introduced for this feature.

KPIs

The "NF Profile Size Limit Breached" KPI is added in the NRF Service KPIs section.

Maintain

If you encounter alerts at system or application levels, see the NRF Alerts section for resolution steps.

In case the alerts still persist, perform the following:

- Collect the logs: For more information on collecting logs, see Oracle Communications Cloud Native Core, Network Repository Function Troubleshooting Guide.

- Raise a service request: See My Oracle Support for more information on how to raise a service request.

4.3 Egress Gateway Pod Throttling

Note:

It is recommended to enable the Egress Gateway Pod Protection Using Rate Limiting feature over the Egress Gateway Pod Throttling feature. The Egress Gateway Pod Throttling feature will be deprecated in the upcoming releases. For more information about enabling the Egress Gateway Pod Protection Using Rate Limiting feature, see Egress Gateway Pod Protection Using Rate Limiting.

Egress Gateway microservice handles all the outgoing traffic from NRF to other NFs. In the event of unexpectedly high traffic, uneven traffic distribution, or traffic bursts due to network fluctuations, the Egress Gateway pods may get overloaded. This may further impact the system latency and performance. The Egress Gateway microservice must be protected from these conditions to prevent a high impact on the outgoing traffic.

With the implementation of this feature, each Egress Gateway pod monitors its incoming traffic and if the traffic exceeds the defined capacity, the excess traffic is not processed and gets rejected. The traffic capacity is applied at each pod and applicable to all the incoming requests irrespective of the message type.

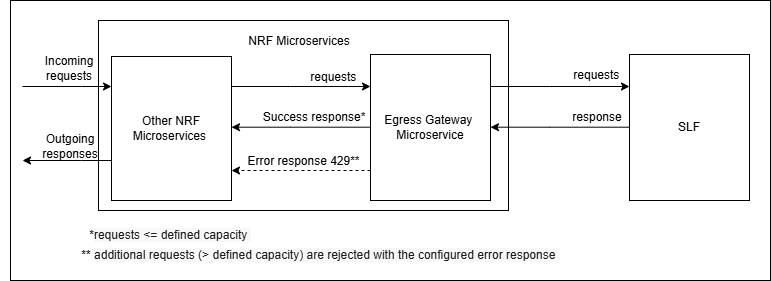


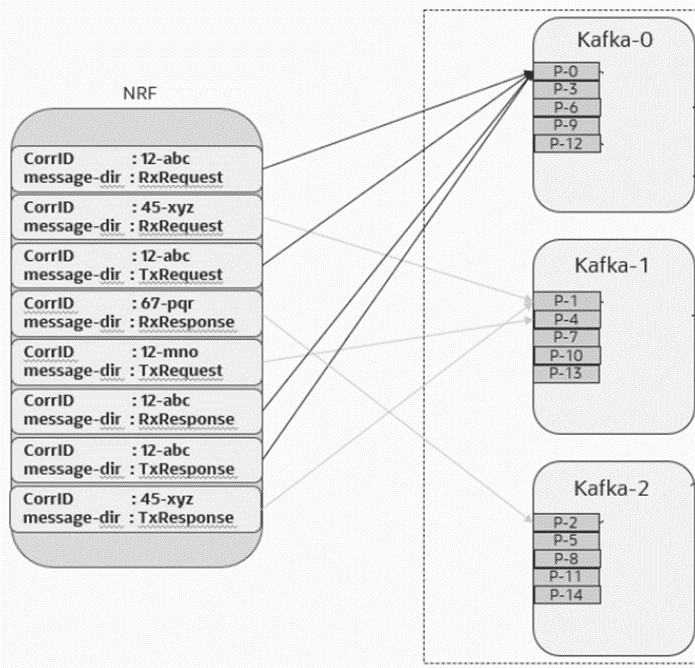

The following image describes the pod throttling mechanism when the requests received from NRF microservices like NFDiscovery, NFRegistration, NFSubscription, and NFAccessToken exceed the defined capacity in the Egress Gateway microservice.

Figure 4-3 Egress Gateway Pod Throttling Mechanism

- NRF microservice sends the requests to the Egress Gateway microservice.

- Egress Gateway microservice evaluates the number of requests received from the other NRF microservices:

  - If the number of incoming requests is 2500 and the maximum defined limit is 2700, then Egress Gateway microservice processes all the 2500 requests.

  - If the number of incoming requests is 3000 and the maximum defined limit is 2700, then Egress Gateway microservice processes only 2700 requests, and rejects the additional 300 requests with response code 429 to the NRF microservice.

Note: When the NRF Discovery microservice receives an overload response from the Egress Gateway microservice, it does not retry or reroute this request. An error response is generated based on the specific feature configurations like NRF Forwarding, Subscriber Location Function, and Roaming.
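The arithmetic in the example above can be reproduced with a small per-pod counter. The following is a minimal sketch, not the Egress Gateway implementation: it assumes a simple fixed counting window, and the names PodThrottle, handle, and simulate are hypothetical. Only the limit of 2700 and the 429 response code come from the text.

```python
from dataclasses import dataclass
from typing import Optional

REJECT_STATUS = 429  # response code returned for requests above the limit


@dataclass
class PodThrottle:
    """Illustrative per-pod counter for one counting window (assumed model)."""
    request_limit: int   # maximum requests processed per window
    allowed: int = 0     # requests processed in the current window
    discarded: int = 0   # requests rejected in the current window

    def handle(self) -> Optional[int]:
        """Return None when the request is processed, or 429 when it is rejected."""
        if self.allowed < self.request_limit:
            self.allowed += 1
            return None
        self.discarded += 1
        return REJECT_STATUS


def simulate(incoming: int, request_limit: int) -> tuple[int, int]:
    """Return (processed, rejected) for one window of incoming requests."""
    throttle = PodThrottle(request_limit)
    for _ in range(incoming):
        throttle.handle()
    return throttle.allowed, throttle.discarded


print(simulate(2500, 2700))  # (2500, 0)
print(simulate(3000, 2700))  # (2700, 300)
```

The two counters in the sketch loosely mirror what the allowed and discarded throttling metrics would count, although the mapping to the actual metrics is an assumption.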

Managing the Egress Gateway Pod Throttling

Enable

This feature can be enabled or disabled at the time of NRF deployment using the following Helm Parameters:

Perform the following configuration to enable this feature using Helm:

- Open the ocnrf_custom_values_25.1.203.yaml file.

- Set the value of egressgateway.podLevelMessageThrottling.enabled to true under the Egress Gateway Microservice parameters section.

  Note:

  - This feature is enabled by default.

  - This feature also uses the following parameters, which are read-only:

    - egressgateway.podLevelMessageThrottling.duration

    - egressgateway.podLevelMessageThrottling.requestLimit

    - global.egwGeneratedErrorCheckDuringOverLoad

    - global.egwResponseCodesDuringOverLoad

    - global.noRetryDuringEgwOverload

  For more information about the above parameters, see the "Egress Gateway Microservice" and "Global Parameters" sections in Oracle Communications Cloud Native Core, Network Repository Function Installation, Upgrade, and Fault Recovery Guide.

- Save the file.

- Install NRF. For more information about installation procedure, see Oracle Communications Cloud Native Core, Network Repository Function Installation, Upgrade, and Fault Recovery Guide.

- Run Helm upgrade, if you are enabling this feature after NRF deployment. For more information about upgrade procedure, see Oracle Communications Cloud Native Core, Network Repository Function Installation, Upgrade, and Fault Recovery Guide.

Disable

This feature can be disabled using the following Helm Parameter:

Perform the following configuration to disable this feature using Helm:

- Open the ocnrf_custom_values_25.1.203.yaml file.

- Set the value of egressgateway.podLevelMessageThrottling.enabled to false under the Egress Gateway Microservice parameters section. For more information about the parameter, see the "Egress Gateway Microservice" and "Global Parameters" sections in Oracle Communications Cloud Native Core, Network Repository Function Installation, Upgrade, and Fault Recovery Guide.

- Save the file.

- Install NRF. For more information about installation procedure, see Oracle Communications Cloud Native Core, Network Repository Function Installation, Upgrade, and Fault Recovery Guide.

- Run Helm upgrade, if you are disabling this feature after NRF deployment. For more information about upgrade procedure, see Oracle Communications Cloud Native Core, Network Repository Function Installation, Upgrade, and Fault Recovery Guide.

Observability

Metrics

The following metrics are added in the Egress Gateway Metrics section:

- oc_egressgateway_podlevel_throttling_allowed_total

- oc_egressgateway_podlevel_throttling_discarded_total

The route_id dimension is added in NRF Metrics.

Alerts

Following alerts are added in the Egress Gateway Pod Throttling section:

For more information about alerts, see NRF Alerts section.

KPIs

Added the Egress Gateway Pod Throttling KPI in the Egress Gateway Pod Throttling section.

Maintain

If you encounter alerts at system or application levels, see NRF Alerts section for resolution steps.

In case the alert persists, perform the following:

1. Collect the logs and Troubleshooting Scenarios: For more information on how to collect logs and troubleshooting information, see Oracle Communications Cloud Native Core, Network Repository Function Troubleshooting Guide.

2. Raise a service request: See My Oracle Support for more information on how to raise a service request.

4.4 Traffic Segregation

This feature provides an option for traffic segregation at NRF based on traffic types. Within a Kubernetes cluster, traffic segregation can divide applications or workloads into distinct sections such as Operations Administration and Maintenance (OAM), Service Based Interface (SBI), Kubernetes control traffic, and so on. The Multus Container Network Interface (CNI) plugin for Kubernetes enables attaching multiple network interfaces to pods to help segregate traffic to/from NRF edge microservices like Ingress and Egress Gateway microservices.

This feature addresses the challenge of logically separating IP traffic of different traffic types, which are typically handled through a single network (Kubernetes overlay). The new functionality ensures that critical networks are not cross-connected or sharing the same routes, thereby preventing network congestion.

The feature requires configuration of separate networks, Network Attachment Definitions (NADs), and the Cloud Native Load Balancer (CNLB). These configurations are crucial for facilitating Ingress/Egress gateway traffic segregation, and optimizing load distribution in NRF.

Note:

The Traffic Segregation feature is only available in NRF if OCCNE is installed with CNLB.

Cloud Native Load Balancer (CNLB)

CNE provides CNLB for managing the ingress and egress network as an alternative to the existing Load Balancing of Virtual Machine (LBVM), lb-controller, and egress-controller solutions. You can enable or disable this feature only during a fresh CNE installation. When this feature is enabled, CNE automatically uses CNLB to control ingress traffic. To manage the egress traffic, you must preconfigure the egress network details in the cnlb.ini file before installing CNE.

For more information about enabling and configuring CNLB, see Oracle Communications Cloud Native Core, Cloud Native Environment User Guide, and Oracle Communications Cloud Native Core, Cloud Native Environment Installation, Upgrade, and Fault Recovery Guide.

Network Attachment Definitions for CNLB

A Network Attachment Definition (NAD) is a resource used to set up a network attachment, in this case, a secondary network interface to a pod. NRF supports two types of CNLB NADs:

- Ingress Network Attachment Definitions

  Ingress NADs are used to handle inbound traffic only. This traffic enters the CNLB application through an external interface service IP address and is routed internally using interfaces within CNLB networks.

  Naming Convention: nf-<service_network_name>-int

- Egress Only Network Attachment Definitions

  Egress Only NADs enable outbound traffic only. An NRF Egress Gateway pod can initiate traffic and route it through a CNLB application, translating the source IP address to an external egress IP address. An egress NAD contains network information to create interfaces for NRF Egress Gateway pods and routes to external subnets.

  - Prerequisites:

    - Ingress NADs are already created for the desired internal networks.

    - Destination (egress) subnet addresses are known beforehand and defined under the cnlb.ini file's egress_dest variable to generate NADs.

    - The use of an Egress NAD on a deployment can be combined with Ingress NADs to route traffic through specific CNLB apps.

  - Naming Convention: nf-<service_network_name>-egr

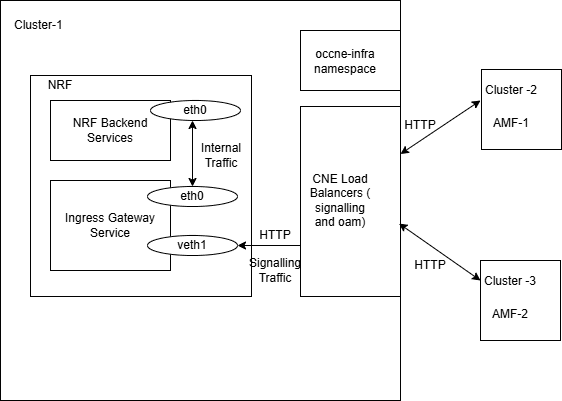

Ingress Traffic Segregation

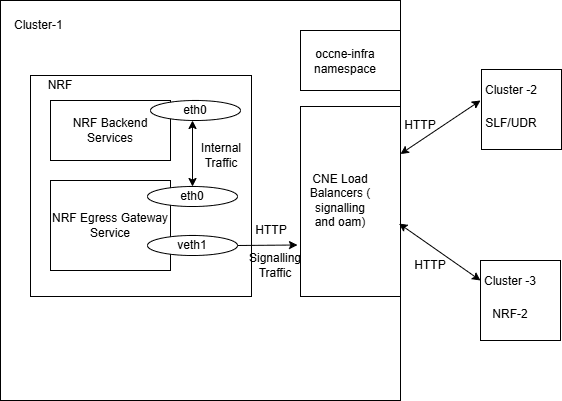

Figure 4-4 Ingress Traffic Segregation

Here AMF-1 and AMF-2 send messages to the Ingress Gateway of the NRF. Internal traffic between NRF Backend and the Ingress Gateway travels through the default interface, eth0, which is connected to the pods. The signaling traffic between AMF-1 and NRF, as well as between AMF-2 and NRF, travels through a new interface, veth1, created using the Multus plugin. The Ingress service IPs and ports will be set up in Cloud Native Load Balancers (CNLBs), which will direct traffic to the Ingress Gateway. This gateway will then handle the requests and route them to the NRF.

Egress Traffic Segregation

The following image describes the traffic segregation at Egress Gateway.

Figure 4-5 Egress Traffic Segregation

Here NRF is connected to SLF and another NRF, which are on different PLMNs and different networks. Internal traffic between NRF Backend and the NRF Egress Gateway passes through the default interface, eth0, which is connected to the pods. The signaling traffic between NRF and SLF, as well as between NRF and NRF-2, travels through a new interface, veth1, created using the Multus plugin. Egress IPs will be defined at the CNLBs, which will perform Source Network Address Translation (SNAT) on the IP addresses in requests sent to SLF and NRF-2.

Managing Traffic Segregation

Enable

This feature is disabled by default. To enable this feature, you need to configure the network attachment annotations in the ocnrf_custom_values_25.1.203.yaml file.

Configuration

For more information about Traffic Segregation configuration, see the "Configuring Traffic Segregation" section in Oracle Communications Cloud Native Core, Network Repository Function Installation, Upgrade, and Fault Recovery Guide.

Observe

There are no Metrics, KPIs, or Alerts available for this feature.

Maintain

To resolve any alerts at the system or application level, see NRF Alerts section. If the alerts persist, perform the following:

- Collect the logs: For more information on how to collect logs, see Oracle Communications Cloud Native Core, Network Repository Function Troubleshooting Guide.

- Raise a service request: See My Oracle Support for more information on how to raise a service request.

4.5 Support for Server Header

NRF handles various requests from consumer Network Functions (NFs) and other network entities over HTTP protocol. On receiving these requests, NRF validates and processes them before responding to these requests. When NRF sends an error response, it includes the source of the error to troubleshoot and take corrective measures.

This feature offers the support for Server Header in NRF responses, which contain information about the origin of an error response.

With this feature, NRF adds or passes the server header in the error responses as described in the following scenarios:

- When NRF acts as a standalone server.

- In NRF Forwarding scenarios, NRF propagates the server header received from peer NRF to the Consumer NF without any changes.

- In NRF Roaming scenarios, NRF propagates the server header received from SEPP or peer NRFs to the Consumer NF without any changes.

This enhancement improves NRF’s error-handling scenarios by determining the originator of the error response for better troubleshooting.

When the server header feature is enabled, NRF adds the server header while generating the error responses.

The server header format is as follows:

<NRF>-<NrfInstanceId>

Where,

- <NRF> is the NF type.

- <NrfInstanceId> is the unique identifier of the NRF instance.

For example: NRF-6faf1bbc-6e4a-4454-a507-a14ef8e1bc5c
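The header format can be illustrated with a short sketch that builds and parses such a value. It assumes the instance identifier is a UUID, as in the example above; the function names and the regular expression are hypothetical and are not taken from NRF.

```python
import re
import uuid

# Assumed shape: an alphabetic NF type, a hyphen, then a 36-character UUID.
SERVER_HEADER_PATTERN = re.compile(
    r"^(?P<nf_type>[A-Za-z]+)-(?P<instance_id>[0-9a-fA-F-]{36})$"
)


def build_server_header(nf_type: str, instance_id: str) -> str:
    """Build a server header value in the <NF type>-<instance ID> format."""
    uuid.UUID(instance_id)  # raises ValueError if the ID is not a valid UUID
    return f"{nf_type}-{instance_id}"


def parse_server_header(value: str) -> tuple[str, str]:
    """Split a server header value into (NF type, instance ID)."""
    match = SERVER_HEADER_PATTERN.match(value)
    if match is None:
        raise ValueError(f"unexpected server header format: {value!r}")
    return match.group("nf_type"), match.group("instance_id")


header = build_server_header("NRF", "6faf1bbc-6e4a-4454-a507-a14ef8e1bc5c")
print(header)
print(parse_server_header(header))
```

A parser of this kind is useful on the consumer side to tell which NF instance originated an error response.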

The following image illustrates the NRF behavior while processing the error responses:

Figure 4-6 Server Header Details

- Consumer or Client NF sends requests to NRF.

- NRF receives the requests from the Consumer or Client NF and validates them for further processing.

- If NRF sends an error response while processing the incoming requests, it adds the server header {NRF-<NrfInstanceId>} in the error response and sends it back to the Consumer or Client NF.

Managing Support for Server Header

Enable

- Enable using REST API:

  - Global: Set the value of the enabled attribute to true in the {apiRoot}/nrf/nf-common-component/v1/igw/serverheaderdetails configuration API. For more information about the API, see "Server Header Details" section in Oracle Communications Cloud Native Core, Network Repository Function REST Specification Guide.

  - Route level: Set the value of the enabled attribute to true in the {apiRoot}/nrf/nf-common-component/v1/igw/routesconfiguration configuration API. For more information about the API, see "Routes Configuration" section in Oracle Communications Cloud Native Core, Network Repository Function REST Specification Guide.

- Enable using CNC Console:

- Global: Switch Enabled on the Server Header Details page to enable the feature. For more information about enabling the feature using CNC Console, see Server Header Details.

- Route level: Switch Enabled on the Routes Configuration page to enable the feature. For more information about enabling the feature using CNC Console, see Routes Configuration.

For more information about server header propagation in service-mesh deployment, see Adding Filters for Server Header Propagation.

Configure

Perform the configurations in the following sequence to configure this feature using REST API or CNC Console:

- Configure {apiRoot}/nrf/nf-common-component/v1/igw/errorcodeserieslist to update the errorcodeserieslist that is used to list the configurable exception or error for an error scenario in Ingress Gateway.

- Perform the following global configuration in {apiRoot}/nrf/nf-common-component/v1/igw/serverheaderdetails API.

- (Optional) Perform the following configuration at route level based on the id attribute in {apiRoot}/nrf/nf-common-component/v1/igw/routesconfiguration API.

  Note:

  - If the feature is not configured at route level, global configuration is taken into consideration.

  - Route-level configuration will take precedence over the global configuration.
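The precedence rule in the note above can be expressed as a small lookup. This is only a sketch of the rule as stated: the function name, the route identifiers, and the dictionary layout are hypothetical and do not reflect the REST payloads.

```python
from typing import Optional


def server_header_enabled(global_enabled: bool, route_enabled: Optional[bool]) -> bool:
    """Resolve the effective setting for one route.

    route_enabled is None when nothing is configured at route level,
    in which case the global configuration applies.
    """
    if route_enabled is None:
        return global_enabled
    return route_enabled


# Hypothetical route-level settings keyed by route id.
route_settings = {"route_a": True, "route_b": False}
global_setting = False

for route_id in ("route_a", "route_b", "route_c"):
    effective = server_header_enabled(global_setting, route_settings.get(route_id))
    print(route_id, effective)
```

Here route_c has no route-level entry, so it falls back to the global value, while route_a and route_b use their own settings.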

Observability

Metrics

There are no metrics added to this feature.

Alerts

There are no alerts added or updated for this feature.

KPIs

There are no KPIs related to this feature.

Maintain

If you encounter alerts at system or application levels, see the NRF Alerts section for resolution steps.

In case the alerts still persist, perform the following:

1. Collect the logs: For more information on collecting logs, see Oracle Communications Cloud Native Core, Network Repository Function Troubleshooting Guide.

2. Raise a service request: See My Oracle Support for more information on how to raise a service request.

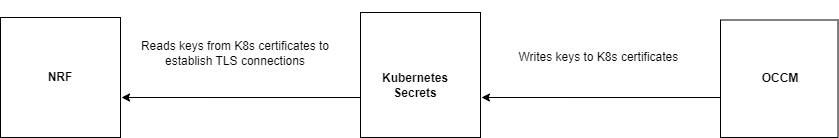

4.6 Support for TLS

- Handshake Protocol: Exchanges the security parameters of a connection. Handshake messages are supplied to the TLS record layer.

- Record Protocol: Receives the messages to be transmitted, fragments the data into multiple blocks, secures the records, and then transmits the result. Received data is delivered to higher-level peers.

From Release 24.2.0 onwards, NRF supports TLS 1.3 for all Consumer NFs, Producer NFs, Data Director, SBI Interfaces, and interfaces where TLS 1.2 was supported. TLS 1.2 will continue to be supported.

TLS Handshake

This section describes the differences between TLS 1.2 and TLS 1.3 and the advantages of TLS 1.3 over TLS 1.2 and earlier versions.

Figure 4-7 TLS Handshake

TLS 1.2

Step 1: The connection or handshake starts when the client sends a “client hello” message to the server. This message consists of cryptographic information such as supported protocols and supported cipher suites. It also contains a random value or random byte string.

Step 2: To respond to the “client hello” message, the server sends the “server hello” message. This message contains the CipherSuite that the server has selected from the options provided by the client. The server also sends its certificate along with the session ID and another random value.

Step 3: The client verifies the certificate sent by the server. When the verification is complete, it sends a byte string that is encrypted using the public key of the server certificate.

Step 4: When the server receives the secret, both the client and server generate a master key along with session keys (ephemeral keys). These session keys are used to symmetrically encrypt the data.

Step 5: The client sends an “HTTP Request” message to the server to enable the server to switch to symmetric encryption using the session keys.

Step 6: To respond to the client’s “HTTP Request” message, the server does the same and switches its security state to symmetric encryption. The server concludes the handshake by sending an HTTP response.

Step 7: The client-server handshake is completed in two round-trips.

TLS 1.3

Step 1: The connection or handshake starts when the client sends a “client hello” message to the server. The client sends the list of supported cipher suites. The client also sends its key share for that particular key agreement protocol.

Step 2: To respond to the “client hello” message, the server sends the key agreement protocol that it has chosen. The “Server Hello” message comprises the server key share, server certificate, and the “Server Finished” message.

Step 3: The client verifies the server certificate, generates keys as it has the key share of the server, and sends the “Client Finished” message along with an HTTP request.

Step 4: The server completes the handshake by sending an HTTP response.

The following digital signature algorithms are supported in TLS handshake:

Table 4-3 Digital Signature Algorithms

| Algorithm | Key Size (Bits) | Elliptic Curve (EC) |
|---|---|---|
| RS256 (RSA) | 2048 | NA |
| RS256 (RSA) | 4096 (This is the recommended value.) | NA |
| ES256 (ECDSA) | NA | SECP384r1 (This is the recommended value.) |

Comparison Between TLS 1.2 and TLS 1.3

The following table provides a comparison of TLS 1.2 and TLS 1.3:

Table 4-4 Comparison of TLS 1.2 and TLS 1.3

| Feature | TLS 1.2 | TLS 1.3 |

|---|---|---|

| TLS Handshake | | |

| Cipher Suites | | |

| Round-Trip Time (RTT) | This has a high RTT during the TLS handshake. | This has low RTT during the TLS handshake. |

| Perfect Forward Secrecy (PFS) | PFS is not mandatory. It is available only when a cipher suite with an ephemeral key exchange (DHE or ECDHE) is negotiated. | TLS 1.3 supports PFS for all connections. PFS ensures that each session key is completely independent of long-term private keys, which are keys that are used for an extended period to decrypt encrypted data. |

| Privacy | This is less secure, as the ciphers used are weak. | This is more secure, as the ciphers used are strong. |

| Performance | This has high latency and a less responsive connection. | This has low latency and a more responsive connection. |

Advantages of TLS 1.3

TLS 1.3 handshake offers the following improvements over earlier versions:

- All handshake messages after the ServerHello are encrypted.

- It improves efficiency in the handshake process by requiring fewer round trips than TLS 1.2. It also uses cryptographic algorithms that are faster.

- It provides better security than TLS 1.2 and addresses known vulnerabilities in the handshake process.

- It removes support for data compression.

When NRF acts as a client or a server, the two sides can support different TLS versions. The following table lists the TLS versions supported on the client and server sides. The last column indicates which version is used for each combination.

Table 4-5 TLS Version Used

| Client Support | Server Support | TLS Version Used |

|---|---|---|

| TLS 1.2, TLS 1.3 | TLS 1.2, TLS 1.3 | TLS 1.3 |

| TLS 1.3 | TLS 1.3 | TLS 1.3 |

| TLS 1.3 | TLS 1.2, TLS 1.3 | TLS 1.3 |

| TLS 1.2, TLS 1.3 | TLS 1.3 | TLS 1.3 |

| TLS 1.2 | TLS 1.2, TLS 1.3 | TLS 1.2 |

| TLS 1.2, TLS 1.3 | TLS 1.2 | TLS 1.2 |

| TLS 1.3 | TLS 1.2 | Sends an error message. For more information about the error message, see "Troubleshooting TLS Version Compatibilities" section in Oracle Communications Cloud Native Core, Network Repository Function Troubleshooting Guide. |

| TLS 1.2 | TLS 1.3 | Sends an error message. For more information about the error message, see "Troubleshooting TLS Version Compatibilities" section in Oracle Communications Cloud Native Core, Network Repository Function Troubleshooting Guide. |
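The outcomes in Table 4-5 are consistent with choosing the highest TLS version that both sides support and failing when there is no common version. The following sketch reproduces the table under that assumption. The function name is hypothetical, and real negotiation happens inside the TLS stack during the handshake, not in application code.

```python
from typing import Optional


def negotiate_tls_version(client: set[str], server: set[str]) -> Optional[str]:
    """Return the highest version supported by both sides, or None if none is shared."""
    common = client & server
    if not common:
        return None  # corresponds to the "Sends an error message" rows
    # Compare versions numerically, for example "1.3" -> (1, 3).
    return max(common, key=lambda v: tuple(int(part) for part in v.split(".")))


combinations = [
    ({"1.2", "1.3"}, {"1.2", "1.3"}),
    ({"1.3"}, {"1.3"}),
    ({"1.3"}, {"1.2", "1.3"}),
    ({"1.2", "1.3"}, {"1.3"}),
    ({"1.2"}, {"1.2", "1.3"}),
    ({"1.2", "1.3"}, {"1.2"}),
    ({"1.3"}, {"1.2"}),
    ({"1.2"}, {"1.3"}),
]

for client, server in combinations:
    result = negotiate_tls_version(client, server)
    print(sorted(client), sorted(server), result or "error")
```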

Note:

- If Egress Gateway is deployed with both TLS 1.2 and TLS 1.3, then Egress Gateway, as the client, sends both TLS versions in the client hello message during the handshake, and the server decides which version to use.

- If Ingress Gateway is deployed with both TLS 1.2 and TLS 1.3, then Ingress Gateway, as the server, uses the TLS version received from the client in the server hello message during the handshake.

- This feature does not work in ASM deployment.

Managing Support for TLS 1.2 and TLS 1.3

Enable

This feature can be enabled or disabled at the time of NRF deployment using the following Helm Parameters:

- enableIncomingHttps - This flag is used for enabling/disabling HTTPS/2.0 (secured TLS) in the Ingress Gateway. If the value is set to false, NRF will not accept any HTTPS/2.0 (secured) traffic. If the value is set to true, NRF will accept HTTPS/2.0 (secured) traffic.

  Note: Do not change the &enableIncomingHttpsRef reference variable.

  For more information on enabling this flag, see the "Enabling HTTPS at Ingress Gateway" section in Oracle Communications Cloud Native Core, Network Repository Function Installation, Upgrade, and Fault Recovery Guide.

- enableOutgoingHttps - This flag is used for enabling/disabling HTTPS/2.0 (secured TLS) in the Egress Gateway. If the value is set to false, NRF will not accept any HTTPS/2.0 (secured) traffic. If the value is set to true, NRF will accept HTTPS/2.0 (secured) traffic.

  For more information on enabling this flag, see the "Enabling HTTPS at Egress Gateway" section in Oracle Communications Cloud Native Core, Network Repository Function Installation, Upgrade, and Fault Recovery Guide.

Configure

You can configure this feature using Helm parameters.

The following parameters in the Ingress Gateway and Egress Gateway microservices must be customized to support TLS 1.2 or TLS 1.3.

- Generate HTTPS certificates for both the Ingress and Egress Gateways. Ensure that the certificates are correctly configured for secure communication. After generating the certificates, create a Kubernetes secret for each Gateway (Ingress and Egress). Then, configure these secrets to be used by the respective Gateways. For more information about HTTPS configuration, generating certificates, and creating secrets, see the "Configuring Secrets for Enabling HTTPS" section in the Oracle Communications Cloud Native Core, Network Repository Function Installation, Upgrade, and Fault Recovery Guide.

- Open the ocnrf_custom_values_25.1.203.yaml file.

- Configure the following parameters under Ingress Gateway and Egress Gateway parameters section.

  - Parameters required to support TLS 1.2:

    - service.ssl.tlsVersion indicates the TLS version.

    - cipherSuites indicates supported cipher suites.

    - allowedCipherSuites indicates allowed cipher suites.

    - messageCopy.security.tlsVersion indicates the TLS version for establishing communication between Kafka and NF when security is enabled.

  - Parameters required to support TLS 1.3:

    - service.ssl.tlsVersion indicates the TLS version.

    - cipherSuites indicates the supported cipher suites.

    - allowedCipherSuites indicates the allowed cipher suites.

    - messageCopy.security.tlsVersion indicates the TLS version for establishing communication between Kafka and NF when security is enabled.

    - clientDisabledExtension is used to disable the extension sent by messages originated by clients during the TLS handshake with the server.

    - serverDisabledExtension is used to disable the extension sent by messages originated by servers during the TLS handshake with the client.

    - tlsNamedGroups is used to provide a list of values sent in the supported_groups extension. These are comma-separated values.

    - clientSignatureSchemes is used to provide a list of values sent in the signature_algorithms extension.

  For more information about configuring the values of the above-mentioned parameters, see the "Ingress Gateway Microservice" and "Egress Gateway Microservice" sections in Oracle Communications Cloud Native Core, Network Repository Function Installation, Upgrade, and Fault Recovery Guide.

- Save the file.

- Install NRF. For more information about installation procedure, see Oracle Communications Cloud Native Core, Network Repository Function Installation, Upgrade, and Fault Recovery Guide.

- Run Helm upgrade, if you are enabling this feature after NRF deployment. For more information about upgrade procedure, see Oracle Communications Cloud Native Core, Network Repository Function Installation, Upgrade, and Fault Recovery Guide.

Note:

- NRF does not prioritize cipher suites based on priorities. To select a cipher based on priorities, you must write the cipher suites in decreasing order of priority.

- NRF does not prioritize supported groups based on priorities. To select a supported group based on priorities, you must write the supported group values in the decreasing order of priority.

- If you want to provide values for the signature_algorithms extension using the clientSignatureSchemes parameter, the following comma-separated values must be provided to deploy the services:

  - rsa_pkcs1_sha512

  - rsa_pkcs1_sha384

  - rsa_pkcs1_sha256

- The mandatory extensions as listed in RFC 8446 cannot be disabled using the clientDisabledExtension attribute on the client or using the serverDisabledExtension attribute on the server side. The following is the list of the extensions that cannot be disabled:

  - supported_versions

  - key_share

  - supported_groups

  - signature_algorithms

  - pre_shared_key
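Before deploying, it can help to check a planned list of disabled extensions against the extensions that cannot be disabled. The following is a hypothetical pre-deployment check, not part of NRF. The set of protected names is copied from the list above, while the function name and the sample inputs are assumptions.

```python
# Extensions that the note above says cannot be disabled.
PROTECTED_EXTENSIONS = frozenset({
    "supported_versions",
    "key_share",
    "supported_groups",
    "signature_algorithms",
    "pre_shared_key",
})


def find_protected(disabled_extensions: list[str]) -> list[str]:
    """Return the requested extensions that cannot be disabled, in input order."""
    return [
        name.strip()
        for name in disabled_extensions
        if name.strip() in PROTECTED_EXTENSIONS
    ]


# Example inputs: ec_point_formats and status_request are standard TLS
# extension names used here only for illustration.
print(find_protected(["ec_point_formats", "key_share"]))
print(find_protected(["status_request"]))
```

An empty result means none of the requested extensions is on the protected list.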

Metrics

The following metrics are added in the NRF Gateways Metrics section:

- oc_ingressgateway_incoming_tls_connections

- oc_egressgateway_outgoing_tls_connections

- security_cert_x509_expiration_seconds

For more information about metrics, see NRF Metrics section.

Alerts

Following alerts are added in the NRF Alerts section:

Note:

An alert is raised for every certificate that will expire in the above time frame. For example, NRF supports both RSA and ECDSA, so two certificates are configured. If only the RSA certificate is about to expire in 6 months, only one alert is raised. If both certificates are about to expire, two alerts are raised. The alerts can be differentiated using the "serialNumber" tag. For example, serialNumber=4661 is used for RSA and serialNumber=4662 is used for ECDSA.

Maintain

If you encounter alerts at system or application levels, see NRF Alerts section for resolution steps.

In case the alert persists, perform the following:

- Collect the logs and Troubleshooting Scenarios: For more information on how to collect logs and troubleshooting information, see Oracle Communications Cloud Native Core, Network Repository Function Troubleshooting Guide.

- Raise a service request: See My Oracle Support for more information on how to raise a service request.

4.7 Error Messages Enhancement

4.7.1 Error Response Enhancement for NRF

NRF receives the message requests from Consumer NFs and may send error responses while processing them. The error response generated by NRF included the error description in the detail attribute of the ProblemDetails. This description is used to identify the issue and troubleshoot it.

ProblemDetails before the error details enhancement is as follows:

{
  "title": "Not Found",
  "status": 404,
  "detail": "NRF Forwarding Error Occurred",
  "cause": "UNSPECIFIED_MSG_FAILURE"
}

With the enhanced error response mechanism, NRF sends additional information such as server FQDN, NF service name, vendor NF name, and application error Id in the detail attribute of the ProblemDetails. This enhancement provides more information about the error and helps to troubleshoot it.

ProblemDetails with the enhanced error response is as follows:

{
  "title": "<title of Problem Details>",
  "status": "<status code of Problem Details>",
  "detail": "<Server-FQDN>:<NF Service>:<Application specific problem detail>:<VendorNF>-<App-Error-Id>",
  "cause": "<cause value of Problem Details>"
}

For example:

{
  "title": "Not Found",
  "status": 404,
  "detail": "NRF-d5g.oracle.com: Nnrf_NFManagement: NRF Forwarding Error Occurred: ONRF-REG-NFPRFWD-E0299; 404; NRF-d5g.oracle.com: Nnrf_NFManagement: Could not find Profile records for NfInstanceId=8cfc6828-bd5d-4a3a-93d4-6bdd848d6bab: ONRF-REG-NFPR-E1004",
  "cause": "UNSPECIFIED_MSG_FAILURE"
}

The updated format for the detail attribute will be sent only for the error responses generated by NRF application. For all other scenarios (like response generated by the underlying stack) the detail attribute will not provide the information in the updated format.
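A client that consumes the enhanced detail attribute may want to split it into its parts. The following is a minimal sketch that assumes the colon-separated layout shown in the format above and that the server FQDN and NF service name contain no colons. The class and function names are hypothetical. The sketch handles one detail segment at a time, so a forwarded error whose detail chains two segments would have to be split into segments first.

```python
from dataclasses import dataclass


@dataclass
class ErrorDetail:
    server_fqdn: str
    nf_service: str
    problem: str       # application specific problem detail
    vendor_nf: str     # for example ONRF
    app_error_id: str  # everything after the vendor NF prefix


def parse_detail(segment: str) -> ErrorDetail:
    """Parse one <Server-FQDN>:<NF Service>:<problem>:<VendorNF>-<App-Error-Id> segment."""
    # The first two fields are taken from the left.
    server_fqdn, nf_service, rest = (part.strip() for part in segment.split(":", 2))
    # The error reference is taken from the right, because the problem
    # text itself may contain colons.
    problem, _, error_ref = rest.rpartition(":")
    vendor_nf, _, app_error_id = error_ref.strip().partition("-")
    return ErrorDetail(server_fqdn, nf_service, problem.strip(), vendor_nf, app_error_id)


sample = (
    "NRF-d5g.oracle.com: Nnrf_NFManagement: "
    "NRF Forwarding Error Occurred: ONRF-REG-NFPRFWD-E0299"
)
print(parse_detail(sample))
```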

Note:

All responses sent by NRF application towards the peer follow this format. All internal microservice responses and OAM responses may or may not follow this format.

The following table describes the detail attribute of the ProblemDetails parameter:

Table 4-6 Detail Attributes of the ProblemDetails

| Attribute | Description |
|---|---|
| <Server-FQDN> | Indicates the NRF FQDN. It is obtained from the nfFqdn Helm parameter. Sample Value: NRF-d5g.oracle.com |
| <NF Service> | Indicates the service name. This service name can be 3GPP defined NRF service name or an internal one. Possible Values: |
| <Application specific problem detail> | Indicates a short description of the error. Sample Value: |
| <VendorNF> | Indicates the Oracle NF Type. For NRF, this value is always ONRF. Here, O is the prefixed value. NF type is obtained from the nfType Helm parameter. |
| <App-Error-Id> | Indicates the application specific error Id. This Id is a unique error Id which includes the Microservice ID, Category, and the Error Code. The format of Where, Sample Value: |

Table 4-7 Microservice Ids List

| Microservice Ids | Microservice Name |
|---|---|
| REG | nfregistration |
| SUB | nfsubscription |
| DIS | nfdiscovery |
| ACC | nfaccesstoken |
| CDS | nrfcachedata |
| AUD | nrfauditor |
| CFG | nrfconfiguration |
| ART | nrfartisan |
| IGW | ingressgateway |
| EGW | egressgateway |
| ARS | alternate-route |

Table 4-8 List of Categories

| Category Id | Category Description |
|---|---|
| ACAUTHN | Authentication during Access Token service operation. |
| ACAUTHZ | Authorization during Access Token service operation. |
| ACCOPT | Access Token configuration. |
| ACFWD | Access Token service operation during forwarding. |
| ACROAM | Access Token service operation during roaming. |
| ACTOK | Access Token service operation. |
| AUTHOPT | Authentication options configuration. |
| CNCCDTN | CNCC Controller configuration. |
| CSHTOPT | Control shutdown options configuration. |
| DEREG | NFDeregister service operation. |
| DISAUTH | NFDiscover service operation during authorization. |
| DISC | NFDiscover service operation. |
| DISCOPT | Discovery options configuration. |
| DISCSLF | SLF for NFDiscover. |
| DISCFWD | NFDiscover service operation during forwarding. |
| DISROAM | NFDiscover service operation during roaming. |
| FULLUPD | NFUpdate with Full Replacement (PUT) service operation. |
| FWDOPT | Forwarding options configuration. |
| GENOPT | General options configuration. |
| GROPT | GeoRedundancy options configuration. |
| GTSUB | Get subscription. |
| GWTHFOR | Growth configuration for forwarding. |
| GWTHOPT | Growth options configuration. |
| HB | NFHeartbeat service operation. |
| HBAUD | Heartbeat for NRF Auditor service operation. |
| HBRMAUD | Remote heartbeat for NRF Auditor. |
| INVSROP | Unsupported HTTP method for service operation. |
| LOGOPT | Logging options configuration. |
| MGMTOPT | Management options configuration. |
| NFDTLS | Fetching NF State data. |
| NFINSTS | Get remote discovery instance and remote instance data. |
| NFLR | NFListRetrieval service operation. |
| NFPR | NFProfileRetrieval service operation. |
| NFPRAUD | NRF Auditor service operation for NF profiles. |
| NFPRFWD | NFProfileRetrieval service operation during forwarding. |
| NPTRDRG | NRF Artisan for notification event to DNS NAPTR on deleting AMF profile. |
| NPTROPT | NAPTR options configuration. |
| NPTRREC | Status API for DNS NAPTR record. |
| NPTRREG | NRF Artisan for NAPTR registration. |
| NPTRUPD | NRF Artisan for DNS NAPTR update. |
| NRFCFG | Fetching NRF Configuration. |
| NRFOPER | NRF operation state configuration. |
| PARTUPD | NFUpdate with Partial Replacement (PATCH) service operation. |
| POPROPT | Pod protection options configuration. |
| REGN | NFRegister service operation. |
| RELDVER | NRF Auditor service operation for reloading site and network version info. |
| ROAMOPT | Roaming options configuration. |
| RTNPTR | Manual cache update of DNS NAPTR records. |
| SCRNOPT | Screening options configuration. |
| SCRNRUL | Screening rules configuration. |
| SLFOPT | SLF options configuration. |
| SNOTIF | NFStatusNotify service operation. |
| SUBAUD | NRF Auditor service operation for subscription. |
| SUBDTLS | Retrieve subscription details based on query attributes for configuring the non-signaling API. |
| SUBFWD | NFStatusSubscribe service operation during forwarding. |
| SUBRAUD | NRF Auditor service operation for remote subscription. |
| SUBROAM | NFStatusSubscribe service operation during roaming. |
| SUBSCR | NFStatusSubscribe service operation. |
| UNKSROP | Unsupported media type. |
| UNSFWD | NFStatusUnsubscribe service operation during forwarding. |
| UNSROAM | NFStatusUnsubscribe service operation during roaming. |
| UNSUBSC | NFStatusUnsubscribe service operation. |
| UPDSUBS | NFStatusSubscribe update (PATCH) service operation. |
| UPSFWD | NFStatusSubscribe update (PATCH) service operation during forwarding. |
| UPSROAM | NFStatusSubscribe update (PATCH) service operation during roaming. |

The error response codes are available in the Error Response Code Details section.
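The microservice and category identifiers from Table 4-7 and Table 4-8 appear inside application error IDs such as ONRF-SUB-SUBSCR-E2003. The following is a minimal Python sketch of splitting such an ID into its parts. The layout `ONRF-<microservice Id>-<category Id>-E<number>` is an assumption inferred from the sample IDs in this guide, and the function and class names are hypothetical, not part of NRF.

```python
import re
from typing import NamedTuple

# A few entries from Table 4-7, used only to illustrate the lookup.
MICROSERVICES = {
    "REG": "nfregistration",
    "SUB": "nfsubscription",
    "DIS": "nfdiscovery",
    "ACC": "nfaccesstoken",
}


class AppErrorId(NamedTuple):
    microservice_id: str
    category_id: str
    error_number: str


# Assumed layout, inferred from sample IDs: ONRF-<microservice>-<category>-E<digits>
_PATTERN = re.compile(r"^ONRF-([A-Z]+)-([A-Z]+)-E(\d+)$")


def parse_app_error_id(value: str) -> AppErrorId:
    """Split an application error ID into microservice, category, and number."""
    match = _PATTERN.match(value)
    if match is None:
        raise ValueError(f"unrecognized application error ID: {value!r}")
    return AppErrorId(*match.groups())


parsed = parse_app_error_id("ONRF-SUB-SUBSCR-E2003")
print(parsed.microservice_id, MICROSERVICES.get(parsed.microservice_id),
      parsed.category_id, parsed.error_number)
```

Keeping the error number as a string preserves leading zeros, as in E0499.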

Managing the Error Response Enhancements for NRF

This section explains the procedure to enable and configure the feature.

Enable

This feature enhances the existing detail attribute in ProblemDetails, so there is no specific flag to enable or disable it.

Configure

You can configure the feature using Helm.

Helm

- Open the ocnrf_custom_values_25.1.203.yaml file.

- Configure the following parameters under global parameters:

  - global.nfFqdn: indicates the NRF FQDN.
  - global.nfType: indicates the NF type.
  - global.maxDetailsLength: determines the maximum length of the error string that NRF compiles in the details field.

- Save the file.

- Install NRF. For more information about installation procedure, see Oracle Communications Cloud Native Core, Network Repository Function Installation, Upgrade, and Fault Recovery Guide.

- Run Helm upgrade, if you are enabling this feature after NRF deployment. For more information about upgrade procedure, see Oracle Communications Cloud Native Core, Network Repository Function Installation, Upgrade, and Fault Recovery Guide.
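To illustrate how the three Helm parameters relate to the compiled error string, here is a minimal Python sketch. The string layout (`<nfType>-<nfFqdn>: <service>: <reason>: <error ID>`) is an assumption inferred from the errorDetails values in the sample logs of this guide. The function names and the limit of 256 are hypothetical. How NRF treats a string that exceeds the limit is not described here, so the sketch only reports whether the limit is met.

```python
def build_detail(nf_type: str, nf_fqdn: str, service: str,
                 reason: str, app_error_id: str) -> str:
    """Compose a detail string in the layout seen in the sample logs (assumed)."""
    return f"{nf_type}-{nf_fqdn}: {service}: {reason}: {app_error_id}"


def fits_limit(detail: str, max_details_length: int) -> bool:
    """Report whether the string is within the configured maximum length."""
    return len(detail) <= max_details_length


detail = build_detail(
    nf_type="NRF",                      # stands in for global.nfType
    nf_fqdn="d5g.oracle.com",           # stands in for global.nfFqdn
    service="Nnrf_NFManagement",
    reason="Subscription global limit breached",
    app_error_id="ONRF-SUB-SUBSCR-E2003",
)
print(detail)
print(fits_limit(detail, max_details_length=256))  # 256 is an illustrative value
```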

Observability

Metrics

There are no new metrics added to this feature.

Alerts

There are no alerts added or updated for this feature.

KPIs

There are no new KPIs related to this feature.

Maintain

If you encounter alerts at system or application levels, see the NRF Alerts section for resolution steps.

If the alerts persist, perform the following:

1. Collect the logs: For more information on collecting logs, see Oracle Communications Cloud Native Core, Network Repository Function Troubleshooting Guide.

2. Raise a service request: See My Oracle Support for more information on how to raise a service request.

4.7.2 Error Log Messages Enhancement

NRF uses logs to record system events along with their date and time of occurrence. Logs also provide important details about the chain of events that could have led to an error or problem. These details help identify the source of an error and troubleshoot it. NRF supports various log levels, and a microservice can be set to any of the following values:

- TRACE

- DEBUG

- INFO

- WARN

- ERROR

For more information on details of these log levels, see the “Log Levels” section in Oracle Communications Cloud Native Core, Network Repository Function Troubleshooting Guide.

With this feature, NRF adds more information to the existing “ERROR” log messages. This information helps identify the problem details, the entity that generated the error, and the subscriber information.

The Error Log Messages Enhancement feature adds the following additional attributes to the existing "ERROR" logs with appropriate values during the failure scenarios:

- errorStatus

- errorTitle

- errorDetails

- errorCause

- sender

- receiver

- subscriberId

For more information about the new attributes, see Table 4-9.

Sample enhanced log details for the NRF Subscription Microservice:

{

"instant": {

"epochSecond": 1717077558,

"nanoOfSecond": 256932930

},

"thread": "boundedElastic-8",

"level": "ERROR",

"loggerName": "com.oracle.cgbu.cne.nrf.routes.SubscriptionHandler",

"message": "Response sent: 500 Subscription global limit breached for uri : http://ocnrf-ingressgateway.nrf1-ns/nnrf-nfm/v1/subscriptions with the problem cause : INSUFFICIENT_RESOURCES and problem details : NRF-d5g.oracle.com: Nnrf_NFManagement: Subscription global limit breached: ONRF-SUB-SUBSCR-E2003",

"endOfBatch": false,

"loggerFqcn": "org.apache.logging.log4j.spi.AbstractLogger",

"threadId": 39547,

"threadPriority": 5,

"messageTimestamp": "2024-05-30T13:59:18.256+0000",

"configuredLevel": "WARN",

"subsystem": "NfSubscribe",

"processId": "1",

"nrfTxId": "nrf-tx-2103278974",

"ocLogId": "1717077558225_77_ocnrf-ingressgateway-854464d548-426bz:1717077558237_110_ocnrf-nfsubscription-766d45f5c7-t4xp8",

"xRequestId": "",

"numberOfRetriesAttempted": "",

"hostname": "ocnrf-nfsubscription-766d45f5c7-t4xp8",

"errorStatus": "500",

"errorTitle": "Subscription global limit breached",

"errorDetails": "NRF-d5g.oracle.com: Nnrf_NFManagement: Subscription global limit breached: ONRF-SUB-SUBSCR-E2003",

"errorCause": "INSUFFICIENT_RESOURCES",

"sender": "NRF-6faf1bbc-6e4a-4454-a507-a14ef8e1bc5c",

"subscriberId": "imsi-345012123123126"

}

In the above example, the following new attributes are added to the error logs:

"errorStatus": "500",

"errorTitle": "Subscription global limit breached",

"errorDetails": "NRF-d5g.oracle.com: Nnrf_NFManagement: Subscription global limit breached: ONRF-SUB-SUBSCR-E2003",

"errorCause": "INSUFFICIENT_RESOURCES",

"sender": "NRF-6faf1bbc-6e4a-4454-a507-a14ef8e1bc5c",

"subscriberId": "imsi-345012123123126"

The additionalErrorLogging feature flag is added to the logging API for the supported microservices. When the feature flag is enabled, it adds the errorStatus, errorTitle, errorDetails, errorCause, sender, and receiver attributes to the ERROR logs.
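As an illustration of how these attributes can be consumed, the following Python sketch reads one JSON log record and returns only the enhanced attributes it contains. The function name is hypothetical, and the record is a trimmed-down version of the sample log above. It assumes each log record is a single JSON object.

```python
import json

# Attributes that this section lists as additions to ERROR logs.
ENHANCED_ATTRIBUTES = (
    "errorStatus", "errorTitle", "errorDetails", "errorCause",
    "sender", "receiver", "subscriberId",
)


def enhanced_fields(log_line: str) -> dict:
    """Return the enhanced attributes present in one JSON log record."""
    record = json.loads(log_line)
    if record.get("level") != "ERROR":
        return {}  # the attributes are only added to ERROR logs
    return {name: record[name] for name in ENHANCED_ATTRIBUTES if name in record}


# Trimmed-down record based on the sample log in this section.
sample = json.dumps({
    "level": "ERROR",
    "hostname": "ocnrf-nfsubscription-766d45f5c7-t4xp8",
    "errorStatus": "500",
    "errorCause": "INSUFFICIENT_RESOURCES",
    "subscriberId": "imsi-345012123123126",
})
print(enhanced_fields(sample))
```

Attributes that are absent from a record, such as receiver in this sample, are simply omitted from the result.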

Additionally, the logSubscriberInfo flag is added to the logging API for the supported microservices. When additionalErrorLogging and logSubscriberInfo are enabled, the subscriber information is also added to the ERROR logs.

When the logSubscriberInfo flag is enabled and the subscriberId information is available, NRF adds the subscriberId value to the log messages. If logSubscriberInfo is disabled and the subscriberId information is available, NRF logs the subscriberId value as XXXX.
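The flag behavior described above can be sketched in Python as follows. This is an illustration, not NRF code: the function name is hypothetical, and the sketch assumes that nothing is logged for subscriberId when additionalErrorLogging is disabled or when no subscriber information is available.

```python
from typing import Optional

MASK = "XXXX"


def subscriber_id_for_log(subscriber_id: Optional[str],
                          additional_error_logging: bool,
                          log_subscriber_info: bool) -> Optional[str]:
    """Return the subscriberId value to write to an ERROR log, if any."""
    # Assumption: the attribute is omitted when the enhanced logging flag is off
    # or when no subscriber information is available.
    if not additional_error_logging or not subscriber_id:
        return None
    # The real value is logged only when logSubscriberInfo is enabled;
    # otherwise the value is masked.
    return subscriber_id if log_subscriber_info else MASK


print(subscriber_id_for_log("imsi-345012123123126", True, True))
print(subscriber_id_for_log("imsi-345012123123126", True, False))
```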

Note:

The subscriberId value can be any of the following:

- 3GPP-Sbi-Correlation-Info header present in the request header received at NRF.

- 3GPP-Sbi-Correlation-Info header present in the response header received from the peer in the case of NRF acting as a client.

- Either SUPI or GPSI values or both SUPI and GPSI values.

For more information about the subscriberId attribute, see Table 4-9.
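For the SUPI and GPSI case, the sample log in this section shows both values joined with a semicolon. The Python sketch below derives such a value from the query parameters of a discovery request. The function name is hypothetical, the semicolon separator and the SUPI-then-GPSI order are inferred from that sample, and the sketch does not cover the 3GPP-Sbi-Correlation-Info header cases.

```python
from typing import Optional
from urllib.parse import parse_qs, urlsplit


def subscriber_id_from_query(uri: str) -> Optional[str]:
    """Build a subscriberId-style value from supi and gpsi query parameters."""
    params = parse_qs(urlsplit(uri).query)
    # SUPI first, then GPSI, matching the order in the sample log (assumed).
    values = [params[key][0] for key in ("supi", "gpsi") if key in params]
    return ";".join(values) if values else None


uri = ("/nnrf-disc/v1/nf-instances?target-nf-type=UDR&requester-nf-type=UDM"
       "&supi=imsi-10000005&gpsi=msisdn-77195225555")
print(subscriber_id_from_query(uri))
```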

Example with both SUPI and GPSI values:

{

"instant": {

"epochSecond": 1717489820,

"nanoOfSecond": 998846286

},

"thread": "@608f310a-49",

"level": "ERROR",

"loggerName": "com.oracle.cgbu.cne.nrf.service.NFDiscoveryService",

"message": "Response sent: 503 null for uri : /nnrf-disc/v1/nf-instances?{target-nf-type=[UDR], requester-nf-type=[UDM], supi=[imsi-10000005], gpsi=[msisdn-77195225555]} with the problem cause : null and problem details : NRF-d5g.oracle.com: Nnrf_NFDiscovery: SLF Lookup Error Occurred: ONRF-DIS-DISCSLF-E0499; 503; null; 503; null",

"endOfBatch": false,

"loggerFqcn": "org.apache.logging.log4j.spi.AbstractLogger",

"threadId": 49,

"threadPriority": 5,

"messageTimestamp": "2024-06-04T08:30:20.998+0000",

"configuredLevel": "WARN",

"processId": "1",

"nrfTxId": "nrf-tx-2050587612",

"ocLogId": "1717489820750_308_ocnrf-ingressgateway-54594dfd87-9fq2k:1717489820763_73_ocnrf-nfdiscovery-744ff58c64-5cdh9",

"serviceOperation": "NFDiscover",

"xRequestId": "",

"requesterNfType": "UDM",

"targetNfType": "UDR",

"discoveryQuery": "/nnrf-disc/v1/nf-instances?{target-nf-type=[UDR], requester-nf-type=[UDM], supi=[imsi-10000005], gpsi=[msisdn-77195225555]}",

"hostname": "ocnrf-nfdiscovery-744ff58c64-5cdh9",

"subsystem": "discoveryNfInstances",

"errorStatus": "503",

"errorDetails": "NRF-d5g.oracle.com: Nnrf_NFDiscovery: SLF Lookup Error Occurred: ONRF-DIS-DISCSLF-E0499; 503; null; 503; null",

"sender": "NRF-6faf1bbc-6e4a-4454-a507-a14ef8e1bc5c",

"subscriberId": "imsi-10000005;msisdn-77195225555"

}

Note:

The log with updated attributes is printed only for the error responses generated by the NRF application. For all other scenarios (such as error responses generated by the underlying stack), the log with updated attributes is not printed.

Mapping Error Log Attributes

The following table explains the mapping of the new attributes with the existing attributes of ProblemDetails, subscriberId, sender, and receiver in the error logs.

Table 4-9 Mapping Error Log Attributes

| New Attribute in Logs | Value when NRF acts as Standalone Server (Example: NFRegister, NFUpdate, NFListRetrieval, NFProfileRetrieval service operations, and so on) | Value when NRF acts as Standalone Client (Example: NfStatusNotify service operation) | Value when NRF acts as both Server and Client (Example: NRF Forwarding, Roaming, SLF): As Server | Value when NRF acts as both Server and Client (Example: NRF Forwarding, Roaming, SLF): As Client |
|---|---|---|---|---|
| errorStatus | status sent by NRF in ProblemDetails of HTTP response | status received by NRF in ProblemDetails of HTTP response | status sent by NRF in ProblemDetails of HTTP response | status received by NRF in ProblemDetails of HTTP response |

errorTitle |

title sent by NRF in

ProblemDetails of HTTP response

|

title received by NRF in

ProblemDetails of HTTP response

|

title sent by NRF in

ProblemDetails of HTTP response