8 Fault Recovery

This chapter provides information about fault recovery for an OCNADD deployment.

8.1 Overview

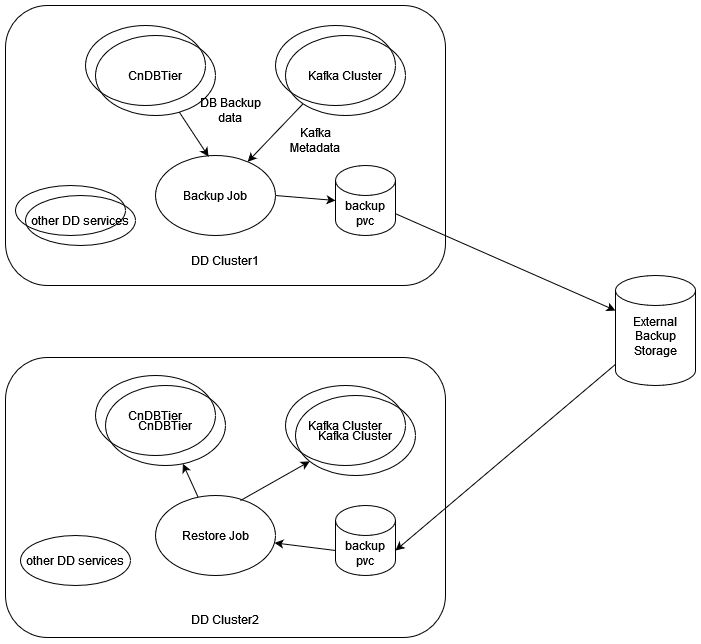

This section describes the procedures to back up and restore an Oracle Communications Network Analytics Data Director (OCNADD) deployment. These procedures are used for OCNADD fault recovery. Operators can back up only the OCNADD instance-specific databases and the required OCNADD Kafka metadata. They can restore them on the same or a different Kubernetes cluster.

The backup and restore procedures are helpful in the following scenarios:

- OCNADD fault recovery

- OCNADD cluster migration

- OCNADD setup replication from production to development or staging

- OCNADD cluster upgrade to new CNE version or K8s version

The OCNADD backup contains the following data:

- OCNADD database(s) backup

- OCNADD Kafka metadata backup including the topics and partitions information

Note:

If the deployed Helm charts and the customized ocnadd-custom-values-25.1.200.yaml file for the current deployment are stored in the customer Helm or artifact repository, a separate backup of the Helm charts and ocnadd-custom-values-25.1.200.yaml is not required.

OCNADD Database(s) Backup

- Configuration data: This data is exclusive to the given OCNADD instance. Therefore, an exclusive logical database is created and used by an OCNADD instance to store its configuration data and operator-driven configuration. Operators can configure the OCNADD instance-specific configurations using the Configuration UI service through the Cloud Native Configuration Console.

- Alarm configuration data: This data is also exclusive to the given OCNADD instance. Therefore, an exclusive logical database is created and used by an OCNADD Alarm service instance to store its alarm configuration and alarms.

- Health monitoring data: This data is also exclusive to the given OCNADD instance. Therefore, an exclusive logical database is created and used by an OCNADD Health monitoring service instance to store the health profile of various other services.

The database backup job uses the mysqldump utility.

Scheduled regular backups help in:

- Restoring the stable version of the Data Director databases

- Minimizing significant data loss due to upgrade or rollback failure

- Minimizing data loss due to system failure

- Minimizing data loss due to data corruption or deletion caused by external input

- Migrating the database information from one site to another

OCNADD Kafka Metadata Backup

The OCNADD Kafka metadata backup contains the following information:

- Created topics information

- Created partitions per topic information

8.1.1 Fault Recovery Impact Areas

The following table describes the impact of each OCNADD fault recovery scenario:

Table 8-1 OCNADD Fault Recovery Scenarios Impact Information

| Scenario | Requires Fault Recovery or Reinstallation of CNE? | Requires Fault Recovery or Reinstallation of cnDBTier? | Requires Fault Recovery or Reinstallation of Data Director? |
|---|---|---|---|
| Scenario 1: Deployment Failure. Recovering OCNADD when its deployment is corrupted. | No | No | Yes |
| Scenario 2: cnDBTier Corruption | No | Yes | No. However, the databases must be restored from backup. A Helm upgrade of the same OCNADD version is also required to update the OCNADD configuration, for example, after a change in cnDBTier service information such as cnDB endpoints or DB credentials. |
| Scenario 3: Database Corruption. Recovering from a corrupted OCNADD configuration database. | No | No | No. However, the databases must be restored from an older backup. |
| Scenario 4: Site Failure. Complete site failure due to infrastructure failure, for example, hardware, CNE, and so on. | Yes | Yes | Yes |

8.1.2 Prerequisites

Before you run any fault recovery procedure, ensure that the following prerequisites are met:

- cnDBTier must be in a healthy state and available on the new or newly installed site where the restore is performed.

- Automatic backup should be enabled for OCNADD.

- Docker images used during the last installation or upgrade must be retained in the external data storage or repository.

- The ocnadd-custom-values-25.1.200.yaml file used at the time of OCNADD deployment must be retained. If it is not retained, it must be recreated manually, which increases the overall fault recovery time.

Important:

Do not change the DB Secret or the cnDBTier MySQL FQDN, IP, or port configuration during backup and restore.

8.2 Backup and Restore Flow

Important:

- Keep the backups on external storage that can be shared between clusters, so that the backup is accessible from the other clusters in the event of a fault. The backup job should create a PV or PVC from the external storage provided for the backup.

- If external storage is not available for backups, the customer must copy the backups from the associated backup PV in the cluster to external storage. The customer manages the security of, and connectivity to, the external storage. To copy a backup from the backup PV to an external server, follow Verifying OCNADD Backup.

- The restore job should have access to the external storage, so that the backup on the external storage can be used to restore the OCNADD services. If the restore job cannot access the external storage, copy the backup from the external storage to the backup PV in the new cluster. For information on the procedure, see Verifying OCNADD Backup.

- If the Two-Site Redundancy feature is enabled, use each site's own backup to restore that site during failure recovery.

Note:

Only one of the three backup jobs (ocnaddmanualbackup, ocnaddverify, or ocnaddrestore) can run at a time. If one of these jobs is already running, delete it before spawning a new job:

kubectl delete job.batch/<ocnadd*> -n <namespace>

where, <namespace> is the namespace of the OCNADD deployment, and <ocnadd*> is the running job in the namespace (ocnaddmanualbackup, ocnaddverify, or ocnaddrestore).

Example:

kubectl delete job.batch/ocnaddverify -n ocnadd-deploy

Backup

- The OCNADD backup is managed using the backup job created at the time of installation.

The backup job runs as a cron job and takes a daily backup of the following:

- OCNADD databases for configuration, alarms, and health monitoring

- OCNADD Kafka metadata including topics and partitions, which are previously created

- The automated backup job spawns a container that takes the backup at the scheduled time. The backup container creates the backup file OCNADD_Backup_DD-MM-YYYY_hh-mm-ss.tar.bz2 and stores it in the PV mounted at /work-dir/backup.

- An on-demand backup can also be created by running the manual backup job. For more information, see Performing OCNADD Manual Backup.

- The backup can be stored on external storage.

Restore

- The OCNADD restore job must have access to the backups from the backup PV/PVC.

- The restore uses the latest backup file available in the backup storage if the BACKUP_FILE argument is not given.

- The restore job performs the restore in the following order:

- Restore the OCNADD database(s) on the cnDBTier.

- Restore the Kafka metadata.
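When BACKUP_FILE is not set, the restore job uses the latest backup. The file name embeds the date as DD-MM-YYYY, so a plain alphabetical listing such as ls does not sort backups chronologically. The following POSIX shell sketch shows one way to find the newest backup by its embedded timestamp, for example before setting BACKUP_FILE explicitly. It is an illustration only, not the selection logic used by restore.sh. The function name latest_backup is hypothetical. The default directory /work-dir/backup is the backup path mounted in the backup pods.

```shell
#!/bin/sh
# Hypothetical helper (not part of the OCNADD scripts): print the name of the
# newest OCNADD_*_DD-MM-YYYY_hh-mm-ss.tar.bz2 file in a directory, ordered by
# the timestamp embedded in the name rather than alphabetically.
latest_backup() {
  dir="${1:-/work-dir/backup}"
  for f in "$dir"/OCNADD_*.tar.bz2; do
    [ -e "$f" ] || continue   # no match: the glob is left unexpanded
    name=$(basename "$f")
    # Rewrite DD-MM-YYYY_hh-mm-ss as the sortable key YYYYMMDDhhmmss.
    key=$(printf '%s\n' "$name" | sed -n \
      's/.*_\([0-9][0-9]\)-\([0-9][0-9]\)-\([0-9][0-9][0-9][0-9]\)_\([0-9][0-9]\)-\([0-9][0-9]\)-\([0-9][0-9]\)\.tar\.bz2$/\3\2\1\4\5\6/p')
    if [ -n "$key" ]; then
      printf '%s %s\n' "$key" "$name"
    fi
  done | sort | tail -n 1 | cut -d ' ' -f 2
}

# Example:
# latest_backup /work-dir/backup
```

The pattern accepts both the OCNADD_Backup_ and OCNADD_BACKUP_ spellings that appear in this chapter. It prints nothing if no backup file is present.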

8.3 OCNADD Backup

OCNADD supports the following types of backup:

- Automated backup

- Manual backup

Automated Backup

- This is managed by the automated K8s job configured during the OCNADD installation. For more information, see the Updating the OCNADD Backup Cronjob step.

- It is a scheduled job that runs daily at the configured time, collects the OCNADD backup, and creates the backup file OCNADD_Backup_DD-MM-YYYY_hh-mm-ss.tar.bz2.
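The schedule is a standard five-field Kubernetes CronJob expression. See BACKUP_CRONEXPRESSION in Configuring Backup and Restore Parameters; the default 0 8 * * * runs once a day at 08:00. Unless a time zone is set on the CronJob, Kubernetes interprets the schedule in the time zone of the kube-controller-manager.

The sketch below changes the schedule of an existing CronJob with kubectl patch. It assumes the CronJob is named ocnaddbackup, the name listed among the related jobs in Creating OCNADD Restore Job. Confirm the actual name with kubectl get cronjobs -n <namespace> first. The helper name and the KUBECTL override are illustrative.

```shell
#!/bin/sh
# Hypothetical helper: patch the schedule of the OCNADD backup CronJob.
# Assumption: the CronJob is named "ocnaddbackup"; pass a third argument if
# "kubectl get cronjobs -n <namespace>" shows a different name.
KUBECTL="${KUBECTL:-kubectl}"

set_backup_schedule() {
  namespace="$1" schedule="$2" cronjob="${3:-ocnaddbackup}"
  # Expect five fields: minute hour day-of-month month day-of-week.
  # (This sketch does not accept macros such as @daily.)
  fields=$(printf '%s\n' "$schedule" | awk '{ print NF }')
  if [ "$fields" -ne 5 ]; then
    echo "invalid cron expression: '$schedule'" >&2
    return 1
  fi
  "$KUBECTL" patch cronjob "$cronjob" -n "$namespace" --type merge \
    -p "{\"spec\":{\"schedule\":\"$schedule\"}}"
}

# Example: run the daily backup at 02:30 instead of 08:00.
# set_backup_schedule ocnadd-deploy "30 2 * * *"
```

A patch changes only the live object, and a later Helm upgrade may reset the schedule to the configured Helm value. The Updating the OCNADD Backup Cronjob step therefore remains the authoritative way to change it.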

Manual Backup

- This is managed by an on-demand job.

- A new K8s job is created when you run the Performing OCNADD Manual Backup procedure.

- The job completes after taking the backup. Follow the Verifying OCNADD Backup procedure to verify the generated backup.

8.4 Performing OCNADD Backup Procedures

8.4.1 Performing OCNADD Manual Backup

Note:

If you have used OCCM to create certificates, use the ocnadd_manualBackup_occm.yaml file. The following is the file template:

```yaml
# ocnadd_manualBackup_occm.yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: ocnaddmanualbackup
  namespace: ocnadd-deploy
spec:
  template:
    metadata:
      name: ocnaddmanualbackup
      labels:
        role: backup
    spec:
      automountServiceAccountToken: false
      volumes:
        - name: backup-vol
          persistentVolumeClaim:
            claimName: backup-mysql-pvc
        - name: config-vol
          configMap:
            name: config-backuprestore-scripts
        - name: client-server-certificate-client
          secret:
            secretName: ocnaddbackuprestore-secret-client
        - name: client-server-certificate-server
          secret:
            secretName: ocnaddbackuprestore-secret-server
        - name: client-server-certificate-ca
          secret:
            secretName: occm-ca-secret
        - name: truststore-keystore-volume
          emptyDir: {}
      serviceAccountName: ocnadd-deploy-sa-ocnadd
      securityContext:
        runAsUser: 1000
        runAsGroup: 1000
        fsGroup: 1000
        runAsNonRoot: true
        seccompProfile:
          type: RuntimeDefault
      containers:
        - name: ocnaddmanualbackup
          image: ocdd-docker.dockerhub-phx.oci.oraclecorp.com/ocdd.repo/ocnaddbackuprestore:2.0.10
          securityContext:
            allowPrivilegeEscalation: false
            capabilities:
              drop: ["ALL"]
          volumeMounts:
            # Backup PVC: backup files are written under /work-dir/backup
            - mountPath: /work-dir
              name: backup-vol
            # Backup and restore scripts delivered through a ConfigMap
            - mountPath: /config-backuprestore-scripts
              name: config-vol
            - name: client-server-certificate-client
              mountPath: /var/securityfiles/certs
              readOnly: true
            - name: truststore-keystore-volume
              mountPath: /var/securityfiles/keystore
          env:
            - name: HOME
              value: /home/ocnadd
            - name: KS_PASS
              valueFrom:
                secretKeyRef:
                  name: occm-truststore-keystore-secret
                  key: keystorekey
            - name: TS_PASS
              valueFrom:
                secretKeyRef:
                  name: occm-truststore-keystore-secret
                  key: truststorekey
            - name: DB_USER
              valueFrom:
                secretKeyRef:
                  name: db-secret
                  key: MYSQL_USER
            - name: DB_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: db-secret
                  key: MYSQL_PASSWORD
            - name: BACKUP_DATABASES
              value: ALL
            - name: BACKUP_ARG
              value: ALL
          command:
            - /bin/sh
            - -c
            - |
              cp /config-backuprestore-scripts/*.sh /home/ocnadd
              chmod +x /home/ocnadd/*.sh
              cp /config-backuprestore-scripts/command.properties /home/ocnadd
              chmod 660 /home/ocnadd/command.properties
              # Replace the $KS_PASS and $TS_PASS placeholders with the secret values
              sed -i "s*\$KS_PASS*$KS_PASS*" /home/ocnadd/command.properties
              sed -i "s*\$TS_PASS*$TS_PASS*" /home/ocnadd/command.properties
              # -p: do not fail if the directory already exists
              mkdir -p /work-dir/backup
              echo "Executing manual backup script"
              bash /home/ocnadd/backup.sh $BACKUP_DATABASES $BACKUP_ARG
              ls -lh /work-dir/backup
      initContainers:
        - name: ocnaddinitcontainer
          image: ocdd-docker.dockerhub-phx.oci.oraclecorp.com/utils.repo/jdk21-openssl:1.1.0
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            capabilities:
              drop: ["ALL"]
          env:
            - name: SERVER_CERT_FILE
              value: /var/securityfiles/certs/server/ocnaddbackuprestore-servercert.pem
            - name: CLIENT_CERT_FILE
              value: /var/securityfiles/certs/client/ocnaddbackuprestore-clientcert.pem
            - name: SERVER_KEY_FILE
              value: /var/securityfiles/certs/server/ocnaddbackuprestore-serverprivatekey.pem
            - name: CLIENT_KEY_FILE
              value: /var/securityfiles/certs/client/ocnaddbackuprestore-clientprivatekey.pem
            - name: SERVER_KEY_STORE
              value: /var/securityfiles/keystore/serverKeyStore.p12
            - name: CLIENT_KEY_STORE
              value: /var/securityfiles/keystore/clientKeyStore.p12
            - name: TRUST_STORE
              value: /var/securityfiles/keystore/trustStore.p12
            - name: CA_CERT_FILE
              value: /var/securityfiles/certs/ca/cacert.pem
            - name: KS_PASS
              valueFrom:
                secretKeyRef:
                  name: occm-truststore-keystore-secret
                  key: keystorekey
            - name: TS_PASS
              valueFrom:
                secretKeyRef:
                  name: occm-truststore-keystore-secret
                  key: truststorekey
          # Build the client PKCS12 keystore and import the CA certificate into the truststore
          command: ['/bin/sh']
          args: ['-c', "openssl pkcs12 -export -inkey $CLIENT_KEY_FILE -in $CLIENT_CERT_FILE -out $CLIENT_KEY_STORE -password pass:$KS_PASS && keytool -importcert -file $CA_CERT_FILE -alias ocnaddcacert -keystore $TRUST_STORE -storetype PKCS12 -storepass $TS_PASS -noprompt;"]
          volumeMounts:
            - name: truststore-keystore-volume
              mountPath: /var/securityfiles/keystore
            - name: client-server-certificate-client
              mountPath: /var/securityfiles/certs/client
            - name: client-server-certificate-server
              mountPath: /var/securityfiles/certs/server
            - name: client-server-certificate-ca
              mountPath: /var/securityfiles/certs/ca
      restartPolicy: Never
```

Perform the following steps to take the manual backup:

- Go to the custom-templates folder in the extracted ocnadd-release package and update the ocnadd_manualBackup.yaml or the ocnadd_manualBackup_occm.yaml file with the following information:

  - The value of BACKUP_DATABASES can be set to ALL (that is, healthdb_schema, configuration_schema, and alarm_schema), or individual database names can be passed. By default, the value is ALL.

  - The value of BACKUP_ARG can be set to ALL, DB, or KAFKA. By default, the value is ALL.

  - Update the other values as follows:

```yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: ocnaddmanualbackup
  namespace: ocnadd-deploy   #---> update the namespace
# ...
spec:
  template:
    spec:
      serviceAccountName: ocnadd-deploy-sa-ocnadd   #---> update the service account name. Format: <serviceAccount>-sa-ocnadd
      # ...
      containers:
        - name: ocnaddmanualbackup
          image: <repo-path>/ocdd.repo/ocnaddbackuprestore:2.0.10   #---> update repository path
          # ...
          env:
            - name: BACKUP_DATABASES
              value: ALL
            - name: BACKUP_ARG
              value: ALL
      # ...
      initContainers:
        - name: ocnaddinitcontainer
          image: <repo-path>/utils.repo/jdk21-openssl:1.1.0   #---> update repository path
```

- Run the following command to create the job:

kubectl create -f ocnadd_manualBackup.yaml

Or, if OCCM is used:

kubectl create -f ocnadd_manualBackup_occm.yaml
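Editing the namespace, service account, and repository path by hand in each template is error-prone. The following sketch applies the same edits with sed and writes the result to standard output. It assumes the following:

- The template still contains the shipped default namespace: ocnadd-deploy and exactly one serviceAccountName line.
- Image references end in /ocdd.repo/ or /utils.repo/, as in the templates in this chapter.

customize_job_template is a hypothetical name, and the sketch does not change BACKUP_DATABASES or BACKUP_ARG. Review the generated file before running kubectl create.

```shell
#!/bin/sh
# Hypothetical helper: rewrite namespace, service account, and image registry
# in an OCNADD backup/verify/restore Job template and print the result.
# Values must not contain '#' (the sed delimiter) or '&'.
customize_job_template() {
  file="$1" namespace="$2" service_account="$3" repo="$4"
  sed -e "s#namespace: ocnadd-deploy#namespace: $namespace#" \
      -e "s#serviceAccountName: .*#serviceAccountName: $service_account#" \
      -e "s#image: .*/ocdd\.repo/#image: $repo/ocdd.repo/#" \
      -e "s#image: .*/utils\.repo/#image: $repo/utils.repo/#" \
      "$file"
}

# Example (registry.example.com:5000 is a placeholder registry):
# customize_job_template ocnadd_manualBackup_occm.yaml dd-mgmt-group \
#   dd-mgmt-group-sa-ocnadd registry.example.com:5000 > manualBackup.yaml
# kubectl create -f manualBackup.yaml
```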

8.4.2 Verifying OCNADD Backup

Caution:

Ensure connectivity to the external storage, either through the PV/PVC or over the network.

Note:

If you have used OCCM to create certificates, use the ocnadd_verify_backup_occm.yaml file. The following is the file template:

```yaml
# ocnadd_verify_backup_occm.yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: ocnaddverify
  namespace: ocnadd-deploy
spec:
  template:
    metadata:
      name: ocnaddverify
    spec:
      automountServiceAccountToken: false
      volumes:
        - name: backup-vol
          persistentVolumeClaim:
            claimName: backup-mysql-pvc
        - name: config-vol
          configMap:
            name: config-backuprestore-scripts
        - name: client-server-certificate-client
          secret:
            secretName: ocnaddbackuprestore-secret-client
        - name: client-server-certificate-server
          secret:
            secretName: ocnaddbackuprestore-secret-server
        - name: client-server-certificate-ca
          secret:
            secretName: occm-ca-secret
        - name: truststore-keystore-volume
          emptyDir: {}
      serviceAccountName: ocnadd-deploy-sa-ocnadd
      securityContext:
        runAsUser: 1000
        runAsGroup: 1000
        fsGroup: 1000
        runAsNonRoot: true
        seccompProfile:
          type: RuntimeDefault
      containers:
        - name: ocnaddverify
          image: ocdd-docker.dockerhub-phx.oci.oraclecorp.com/ocdd.repo/ocnaddbackuprestore:2.0.10
          securityContext:
            allowPrivilegeEscalation: false
            capabilities:
              drop: ["ALL"]
          volumeMounts:
            - mountPath: /work-dir
              name: backup-vol
            - mountPath: /config-backuprestore-scripts
              name: config-vol
            - name: client-server-certificate-client
              mountPath: /var/securityfiles/certs
              readOnly: true
            - name: truststore-keystore-volume
              mountPath: /var/securityfiles/keystore
          env:
            - name: HOME
              value: /home/ocnadd/
            - name: KS_PASS
              valueFrom:
                secretKeyRef:
                  name: occm-truststore-keystore-secret
                  key: keystorekey
            - name: TS_PASS
              valueFrom:
                secretKeyRef:
                  name: occm-truststore-keystore-secret
                  key: truststorekey
            - name: DB_USER
              valueFrom:
                secretKeyRef:
                  name: db-secret
                  key: MYSQL_USER
            - name: DB_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: db-secret
                  key: MYSQL_PASSWORD
          command:
            - /bin/sh
            - -c
            - |
              cp /config-backuprestore-scripts/*.sh /home/ocnadd/
              chmod +x /home/ocnadd/*.sh
              cp /config-backuprestore-scripts/command.properties /home/ocnadd
              chmod 660 /home/ocnadd/command.properties
              sed -i "s*\$KS_PASS*$KS_PASS*" /home/ocnadd/command.properties
              sed -i "s*\$TS_PASS*$TS_PASS*" /home/ocnadd/command.properties
              mkdir -p /work-dir/backup
              echo "Checking backup path"
              ls -lh /work-dir/backup
              # Keep the pod running so that backups can be inspected or copied
              sleep 20m
      initContainers:
        - name: ocnaddinitcontainer
          image: ocdd-docker.dockerhub-phx.oci.oraclecorp.com/utils.repo/jdk21-openssl:1.1.0
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            capabilities:
              drop: ["ALL"]
          env:
            - name: SERVER_CERT_FILE
              value: /var/securityfiles/certs/server/ocnaddbackuprestore-servercert.pem
            - name: CLIENT_CERT_FILE
              value: /var/securityfiles/certs/client/ocnaddbackuprestore-clientcert.pem
            - name: SERVER_KEY_FILE
              value: /var/securityfiles/certs/server/ocnaddbackuprestore-serverprivatekey.pem
            - name: CLIENT_KEY_FILE
              value: /var/securityfiles/certs/client/ocnaddbackuprestore-clientprivatekey.pem
            - name: SERVER_KEY_STORE
              value: /var/securityfiles/keystore/serverKeyStore.p12
            - name: CLIENT_KEY_STORE
              value: /var/securityfiles/keystore/clientKeyStore.p12
            - name: TRUST_STORE
              value: /var/securityfiles/keystore/trustStore.p12
            - name: CA_CERT_FILE
              value: /var/securityfiles/certs/ca/cacert.pem
            - name: KS_PASS
              valueFrom:
                secretKeyRef:
                  name: occm-truststore-keystore-secret
                  key: keystorekey
            - name: TS_PASS
              valueFrom:
                secretKeyRef:
                  name: occm-truststore-keystore-secret
                  key: truststorekey
          # Build the client PKCS12 keystore and import the CA certificate into the truststore
          command: ['/bin/sh']
          args: ['-c', "openssl pkcs12 -export -inkey $CLIENT_KEY_FILE -in $CLIENT_CERT_FILE -out $CLIENT_KEY_STORE -password pass:$KS_PASS && keytool -importcert -file $CA_CERT_FILE -alias ocnaddcacert -keystore $TRUST_STORE -storetype PKCS12 -storepass $TS_PASS -noprompt;"]
          volumeMounts:
            - name: truststore-keystore-volume
              mountPath: /var/securityfiles/keystore
            - name: client-server-certificate-client
              mountPath: /var/securityfiles/certs/client
            - name: client-server-certificate-server
              mountPath: /var/securityfiles/certs/server
            - name: client-server-certificate-ca
              mountPath: /var/securityfiles/certs/ca
      restartPolicy: Never
```

- Go to the custom-templates folder in the extracted ocnadd-release package and update the ocnadd_verify_backup.yaml or the ocnadd_verify_backup_occm.yaml file with the following information:

  - The sleep time is configurable; update it if required. It controls how long the ocnaddverify pod keeps running for inspection. The default value is 10m; the OCCM template above sets sleep 20m.

  - Update the other values as follows:

```yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: ocnaddverify
  namespace: ocnadd-deploy   #---> update the namespace
# ...
spec:
  template:
    spec:
      serviceAccountName: ocnadd-deploy-sa-ocnadd   #---> update the service account name. Format: <serviceAccount>-sa-ocnadd
      # ...
      containers:
        - name: ocnaddverify
          image: <repo-path>/ocdd.repo/ocnaddbackuprestore:2.0.10   #---> update repository path
      # ...
      initContainers:
        - name: ocnaddinitcontainer
          image: <repo-path>/utils.repo/jdk21-openssl:1.1.0   #---> update repository path
```

- Run the following command to create the job:

kubectl create -f ocnadd_verify_backup.yaml

Or, if OCCM is used:

kubectl create -f ocnadd_verify_backup_occm.yaml

- If the external storage is used as a PV/PVC, access the ocnaddverify-xxxx container using the following command:

kubectl exec -it <ocnaddverify-xxxx> -n <ocnadd-namespace> -- bash

- Change the directory to /work-dir/backup. In the latest backup file, OCNADD_BACKUP_DD-MM-YYYY_hh-mm-ss.tar.bz2, verify the DB backup and Kafka metadata backup files.
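Kubernetes labels the pods of a Job with job-name=<job name>, so the generated ocnaddverify-xxxx pod name does not have to be copied by hand. The sketch below finds the pod by that label and lists the contents of a backup archive inside the pod with tar -tjf, without extracting it. The function names are hypothetical. The sketch also assumes that tar with bzip2 support is available in the ocnaddbackuprestore image, which this guide does not confirm.

```shell
#!/bin/sh
KUBECTL="${KUBECTL:-kubectl}"

# Print the name of the first pod created by the ocnaddverify Job.
verify_pod() {
  "$KUBECTL" get pods -n "$1" -l job-name=ocnaddverify \
    -o jsonpath='{.items[0].metadata.name}'
}

# List the files inside a backup archive stored in /work-dir/backup of the
# verify pod (for example, the DB dump and the Kafka metadata files).
list_backup() {
  namespace="$1" file="$2"
  pod=$(verify_pod "$namespace") || return 1
  [ -n "$pod" ] || { echo "no ocnaddverify pod in $namespace" >&2; return 1; }
  "$KUBECTL" exec -n "$namespace" "$pod" -- tar -tjf "/work-dir/backup/$file"
}

# Example:
# list_backup ocnadd-deploy OCNADD_BACKUP_10-05-2023_08-00-05.tar.bz2
```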

8.4.3 Retrieving the OCNADD Backup Files

- Run the Verifying OCNADD Backup procedure to spawn the ocnaddverify-xxxx pod.

- Go to the running ocnaddverify pod to identify the desired backup file:

  - Run the following command to access the pod:

kubectl exec -it <ocnaddverify-xxxx> -n <ocnadd-namespace> -- bash

where, <ocnadd-namespace> is the namespace where the OCNADD management group services are running, and <ocnaddverify-xxxx> is the backup verification pod in the same namespace.

  - Change the directory to /work-dir/backup and identify the backup file OCNADD_BACKUP_DD-MM-YYYY_hh-mm-ss.tar.bz2.

  - Exit the ocnaddverify pod.

- Copy the backup file OCNADD_BACKUP_DD-MM-YYYY_hh-mm-ss.tar.bz2 from the pod to the local bastion server by running the following command:

kubectl cp -n <ocnadd-namespace> <ocnaddverify-xxxx>:/work-dir/backup/<OCNADD_Backup_DD-MM-YYYY_hh-mm-ss.tar.bz2> <OCNADD_Backup_DD-MM-YYYY_hh-mm-ss.tar.bz2>

where, <ocnadd-namespace> is the namespace where the OCNADD management group services are running, and <ocnaddverify-xxxx> is the backup verification pod in the same namespace.

For example:

kubectl cp -n ocnadd ocnaddverify-drwzq:/work-dir/backup/OCNADD_BACKUP_10-05-2023_08-00-05.tar.bz2 OCNADD_BACKUP_10-05-2023_08-00-05.tar.bz2
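Before relying on the local copy, it is prudent to confirm that it matches the file in the pod. The sketch below wraps the kubectl cp command above and compares SHA-256 checksums of the remote and local files. kubectl cp itself requires tar in the container. The sketch additionally assumes that sha256sum is available both in the ocnaddbackuprestore image and on the bastion server. The function name is hypothetical.

```shell
#!/bin/sh
KUBECTL="${KUBECTL:-kubectl}"

# Copy a backup from the verify pod to the current directory and compare
# SHA-256 checksums of the remote and local files.
fetch_backup() {
  namespace="$1" pod="$2" file="$3"
  "$KUBECTL" cp -n "$namespace" "$pod:/work-dir/backup/$file" "$file" || return 1
  remote_sum=$("$KUBECTL" exec -n "$namespace" "$pod" -- \
    sha256sum "/work-dir/backup/$file" | cut -d ' ' -f 1)
  local_sum=$(sha256sum "$file" | cut -d ' ' -f 1)
  if [ -z "$remote_sum" ] || [ "$remote_sum" != "$local_sum" ]; then
    echo "checksum mismatch for $file" >&2
    return 1
  fi
  echo "$file copied and verified ($local_sum)"
}

# Example:
# fetch_backup ocnadd ocnaddverify-drwzq OCNADD_BACKUP_10-05-2023_08-00-05.tar.bz2
```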

8.4.4 Copying and Restoring the OCNADD backup

- Retrieve the OCNADD backup file.

- Perform the Verifying OCNADD Backup procedure to spawn the ocnaddverify-xxxx pod.

- Copy the backup file from the local bastion server to the running ocnaddverify pod by running the following command:

kubectl cp <OCNADD_Backup_DD-MM-YYYY_hh-mm-ss.tar.bz2> <ocnaddverify-xxxx>:/work-dir/backup/<OCNADD_Backup_DD-MM-YYYY_hh-mm-ss.tar.bz2> -n <ocnadd-namespace>

For example:

kubectl cp OCNADD_BACKUP_10-05-2023_08-00-05.tar.bz2 ocnaddverify-mrdxn:/work-dir/backup/OCNADD_BACKUP_10-05-2023_08-00-05.tar.bz2 -n ocnadd

- In the ocnaddverify pod, verify that the backup has been copied to /work-dir/backup/OCNADD_BACKUP_DD-MM-YYYY_hh-mm-ss.tar.bz2.

- Restore OCNADD using the procedure in Creating OCNADD Restore Job.

- Restart the ocnaddalarm, ocnaddhealthmonitoring, and ocnaddadminsvc pods.

- Restart the ocnaddconfiguration pod after the ocnaddadminsvc service has restarted completely.
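These restarts can be scripted with kubectl rollout restart. Waiting on kubectl rollout status after each restart ensures that ocnaddconfiguration is restarted only after ocnaddadminsvc has fully rolled out.

The sketch below is based on the following assumptions:

- Each service runs as a Deployment with the same name as the service. Check kubectl get deployments -n <namespace> and adjust the names if they differ.
- The services are restarted in the order given in Creating OCNADD Restore Job.
- The 10-minute rollout timeout is an arbitrary choice.

The function names are hypothetical.

```shell
#!/bin/sh
KUBECTL="${KUBECTL:-kubectl}"

# Restart each Deployment in turn and wait until its rollout has finished.
restart_in_order() {
  namespace="$1"; shift
  for deployment in "$@"; do
    "$KUBECTL" rollout restart "deployment/$deployment" -n "$namespace" || return 1
    "$KUBECTL" rollout status "deployment/$deployment" -n "$namespace" --timeout=10m || return 1
  done
}

# Assumed Deployment names; ocnaddconfiguration goes last, after
# ocnaddadminsvc has completed its rollout.
restart_after_restore() {
  restart_in_order "$1" ocnaddhealthmonitoring ocnaddalarm ocnaddadminsvc ocnaddconfiguration
}

# Example:
# restart_after_restore ocnadd-deploy
```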

8.5 Disaster Recovery Scenarios

This section describes the disaster recovery procedures for different recovery scenarios.

8.5.1 Scenario 1: Deployment Failure

This section describes how to recover OCNADD when the OCNADD deployment is corrupted.

For more information, see Restoring OCNADD.

8.5.2 Scenario 2: cnDBTier Corruption

This section describes how to recover from cnDBTier corruption. For more information, see Oracle Communications Cloud Native Core cnDBTier Installation, Upgrade, and Fault Recovery Guide. After the cnDBTier recovery, restore the OCNADD database from the previous backup.

To restore the OCNADD database, execute the procedure Creating OCNADD Restore Job by setting BACKUP_ARG to DB.

8.5.3 Scenario 3: Database Corruption

This section describes how to recover from the corrupted OCNADD database.

Perform the following steps to recover the OCNADD configuration database (DB) from the corrupted database:

- Retain a working OCNADD backup by following the Retrieving the OCNADD Backup Files procedure.

- Drop the existing OCNADD databases by accessing the MySQL DB.

- Perform the Copying and Restoring the OCNADD backup procedure to restore the backup.
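Dropping the databases is destructive, so do it only after a working backup has been retained (the first step above). The sketch below drops the OCNADD databases with the mysql client. It assumes the following:

- The schema names listed for BACKUP_DATABASES=ALL (healthdb_schema, configuration_schema, and alarm_schema) match your deployment. Verify them with SHOW DATABASES first.
- DB_USER and DB_PASSWORD hold the credentials from db-secret.
- The host and port of the cnDBTier MySQL service are passed as arguments.

The function name is hypothetical.

```shell
#!/bin/sh
MYSQL="${MYSQL:-mysql}"

# Drop the given OCNADD databases, or the three assumed default schemas if
# none are given. Credentials come from DB_USER and DB_PASSWORD.
drop_ocnadd_databases() {
  host="$1" port="$2"
  shift 2
  if [ "$#" -eq 0 ]; then
    set -- healthdb_schema configuration_schema alarm_schema
  fi
  for db in "$@"; do
    # -p<password> exposes the password in the process list; a MySQL
    # option file (--defaults-extra-file) avoids that.
    "$MYSQL" -h "$host" -P "$port" -u "$DB_USER" -p"$DB_PASSWORD" \
      -e "DROP DATABASE IF EXISTS \`$db\`;" || return 1
  done
}

# Example:
# drop_ocnadd_databases <mysql-host> <mysql-port>
```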

8.5.4 Scenario 4: Site Failure

This section describes how to perform fault recovery after a complete OCNADD site failure.

Perform the following steps in case of a complete site failure:

- Run the Cloud Native Environment (CNE) installation procedure to install a new Kubernetes cluster. For more information, see Oracle Communications Cloud Native Environment Installation, Upgrade, and Fault Recovery Guide.

- Run the cnDBTier installation procedure. For more information, see Oracle Communications Cloud Native Core cnDBTier Installation, Upgrade, and Fault Recovery Guide.

- For cnDBTier fault recovery, take a data backup from an older site and restore it to a new site. For more information about cnDBTier backup, see "Create On-demand Database Backup" and to restore the database to a new site, see "Restore DB with Backup" in Oracle Communications Cloud Native Core cnDBTier Installation, Upgrade, and Fault Recovery Guide.

- Restore OCNADD. For more information, see Restoring OCNADD.

8.6 Restoring OCNADD

Perform this procedure to restore OCNADD when a fault event has occurred or the deployment is corrupted.

Note:

This procedure expects that the OCNADD backup file has been retained.

- Get the retained backup file OCNADD_BACKUP_DD-MM-YYYY_hh-mm-ss.tar.bz2.

- Get the Helm charts that were used in the earlier deployment.

- Run the following command to uninstall the corrupted OCNADD deployment (Management Group or any Worker Group):

helm uninstall <release_name> --namespace <namespace>

where, <release_name> is the release name of the OCNADD deployment being uninstalled, and <namespace> is the namespace of that deployment.

For example, to uninstall the Management Group:

helm uninstall ocnadd-mgmt --namespace dd-mgmt-group

- Using the Helm charts from the earlier deployment, install the Management Group or Worker Group that was corrupted and uninstalled in the previous step. For the installation procedure, see Installing OCNADD.

- To verify that the OCNADD installation is complete, see Verifying OCNADD Installation.

- Follow the Copying and Restoring the OCNADD backup procedure.
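The ocnadd-custom-values-25.1.200.yaml file used for the earlier deployment may not have been retained (see Prerequisites). In that case, the user-supplied values of the corrupted release can often still be recovered before the helm uninstall step, because Helm stores them with the release record. The following sketch saves them with helm get values. It works only while the release still exists, and the recovered file should be reviewed before it is reused for the reinstall. The function name is hypothetical.

```shell
#!/bin/sh
HELM="${HELM:-helm}"

# Save the user-supplied values of a Helm release to a file before the
# release is uninstalled. Prints the file name on success.
save_release_values() {
  release="$1" namespace="$2" out="${3:-$1-values-backup.yaml}"
  "$HELM" get values "$release" -n "$namespace" -o yaml > "$out" || return 1
  if [ ! -s "$out" ]; then
    echo "no values captured for $release" >&2
    return 1
  fi
  echo "$out"
}

# Example, before: helm uninstall ocnadd-mgmt --namespace dd-mgmt-group
# save_release_values ocnadd-mgmt dd-mgmt-group
```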

8.7 Creating OCNADD Restore Job

Note:

If you have used OCCM to create certificates, use the ocnadd_restore_occm.yaml file. The following is the file template:

```yaml
# ocnadd_restore_occm.yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: ocnaddrestore
  namespace: ocnadd-deploy
spec:
  template:
    metadata:
      name: ocnaddrestore
      labels:
        role: backup
    spec:
      automountServiceAccountToken: false
      volumes:
        - name: backup-vol
          persistentVolumeClaim:
            claimName: backup-mysql-pvc
        - name: config-vol
          configMap:
            name: config-backuprestore-scripts
        - name: client-server-certificate-client
          secret:
            secretName: ocnaddbackuprestore-secret-client
        - name: client-server-certificate-server
          secret:
            secretName: ocnaddbackuprestore-secret-server
        - name: client-server-certificate-ca
          secret:
            secretName: occm-ca-secret
        - name: truststore-keystore-volume
          emptyDir: {}
      serviceAccountName: ocnadd-deploy-sa-ocnadd
      securityContext:
        runAsUser: 1000
        runAsGroup: 1000
        fsGroup: 1000
        runAsNonRoot: true
        seccompProfile:
          type: RuntimeDefault
      containers:
        - name: ocnaddrestore
          image: ocdd-docker.dockerhub-phx.oci.oraclecorp.com/ocdd.repo/ocnaddbackuprestore:2.0.10
          securityContext:
            allowPrivilegeEscalation: false
            capabilities:
              drop: ["ALL"]
          volumeMounts:
            - mountPath: /work-dir
              name: backup-vol
            - mountPath: /config-backuprestore-scripts
              name: config-vol
            - name: client-server-certificate-client
              mountPath: /var/securityfiles/certs
              readOnly: true
            - name: truststore-keystore-volume
              mountPath: /var/securityfiles/keystore
          env:
            - name: HOME
              value: /home/ocnadd/
            - name: KS_PASS
              valueFrom:
                secretKeyRef:
                  name: occm-truststore-keystore-secret
                  key: keystorekey
            - name: TS_PASS
              valueFrom:
                secretKeyRef:
                  name: occm-truststore-keystore-secret
                  key: truststorekey
            - name: DB_USER
              valueFrom:
                secretKeyRef:
                  name: db-secret
                  key: MYSQL_USER
            - name: DB_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: db-secret
                  key: MYSQL_PASSWORD
            - name: BACKUP_ARG
              value: ALL
            - name: BACKUP_FILE
              value: ""
          command:
            - /bin/sh
            - -c
            - |
              cp /config-backuprestore-scripts/*.sh /home/ocnadd/
              chmod +x /home/ocnadd/*.sh
              cp /config-backuprestore-scripts/command.properties /home/ocnadd
              chmod 660 /home/ocnadd/command.properties
              sed -i "s*\$KS_PASS*$KS_PASS*" /home/ocnadd/command.properties
              sed -i "s*\$TS_PASS*$TS_PASS*" /home/ocnadd/command.properties
              echo "Executing restore script"
              ls -lh /work-dir/backup
              # BACKUP_FILE is left unquoted: when empty, no file argument is passed
              # and the latest backup is restored
              bash /home/ocnadd/restore.sh $BACKUP_ARG $BACKUP_FILE
              sleep 5m
      initContainers:
        - name: ocnaddinitcontainer
          image: ocdd-docker.dockerhub-phx.oci.oraclecorp.com/utils.repo/jdk21-openssl:1.1.0
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            capabilities:
              drop: ["ALL"]
          env:
            - name: SERVER_CERT_FILE
              value: /var/securityfiles/certs/server/ocnaddbackuprestore-servercert.pem
            - name: CLIENT_CERT_FILE
              value: /var/securityfiles/certs/client/ocnaddbackuprestore-clientcert.pem
            - name: SERVER_KEY_FILE
              value: /var/securityfiles/certs/server/ocnaddbackuprestore-serverprivatekey.pem
            - name: CLIENT_KEY_FILE
              value: /var/securityfiles/certs/client/ocnaddbackuprestore-clientprivatekey.pem
            - name: SERVER_KEY_STORE
              value: /var/securityfiles/keystore/serverKeyStore.p12
            - name: CLIENT_KEY_STORE
              value: /var/securityfiles/keystore/clientKeyStore.p12
            - name: TRUST_STORE
              value: /var/securityfiles/keystore/trustStore.p12
            - name: CA_CERT_FILE
              value: /var/securityfiles/certs/ca/cacert.pem
            - name: KS_PASS
              valueFrom:
                secretKeyRef:
                  name: occm-truststore-keystore-secret
                  key: keystorekey
            - name: TS_PASS
              valueFrom:
                secretKeyRef:
                  name: occm-truststore-keystore-secret
                  key: truststorekey
          # Build the client PKCS12 keystore and import the CA certificate into the truststore
          command: ['/bin/sh']
          args: ['-c', "openssl pkcs12 -export -inkey $CLIENT_KEY_FILE -in $CLIENT_CERT_FILE -out $CLIENT_KEY_STORE -password pass:$KS_PASS && keytool -importcert -file $CA_CERT_FILE -alias ocnaddcacert -keystore $TRUST_STORE -storetype PKCS12 -storepass $TS_PASS -noprompt;"]
          volumeMounts:
            - name: truststore-keystore-volume
              mountPath: /var/securityfiles/keystore
            - name: client-server-certificate-client
              mountPath: /var/securityfiles/certs/client
            - name: client-server-certificate-server
              mountPath: /var/securityfiles/certs/server
            - name: client-server-certificate-ca
              mountPath: /var/securityfiles/certs/ca
      restartPolicy: Never
```

Follow the steps below to create and run the OCNADD restore job:

- Go to the custom-templates folder inside the extracted ocnadd-release package and update the ocnadd_restore.yaml or the ocnadd_restore_occm.yaml file based on the restore requirements:

  - The value of BACKUP_ARG can be set to DB, KAFKA, or ALL. By default, the value is ALL.

  - The value of BACKUP_FILE can be set to the name of the backup to be restored. If it is not set, the latest backup is used.

  - Update the other values as follows:

```yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: ocnaddrestore
  namespace: ocnadd-deploy   #---> update the namespace
# ...
spec:
  template:
    spec:
      serviceAccountName: ocnadd-deploy-sa-ocnadd   #---> update the service account name. Format: <serviceAccount>-sa-ocnadd
      # ...
      containers:
        - name: ocnaddrestore
          image: <repo-path>/ocdd.repo/ocnaddbackuprestore:2.0.10   #---> update repository path
          # ...
          env:
            - name: BACKUP_ARG
              value: ALL
            - name: BACKUP_FILE
              value: ""   #---> backup file to restore, for example "OCNADD_Backup_DD-MM-YYYY_hh-mm-ss.tar.bz2"; if not set, the latest backup is used
      # ...
      initContainers:
        - name: ocnaddinitcontainer
          image: <repo-path>/utils.repo/jdk21-openssl:1.1.0   #---> update repository path
```

- Delete all the backup, restore, and verify jobs before creating the restore job. The related jobs are ocnaddbackup, ocnaddrestore, ocnaddverify, and ocnaddmanualbackup.

- Run the following command to create the restore job:

kubectl create -f ocnadd_restore.yaml

Or, if OCCM is used:

kubectl create -f ocnadd_restore_occm.yaml

- Wait for the restore job to complete. It usually takes 10 to 15 minutes, or longer depending on the size of the backup.

- Restart the following services in this order: ocnaddhealthmonitoring, ocnaddalarm, and ocnaddadminsvc.

- Restart ocnaddconfiguration after the previous step has been completed.

- Restart the ocnaddfilter service for all the worker groups after the restore job is completed.

- To restart the Redundancy Agent pods after the OCNADD restore, see Two-Site Redundancy Fault Recovery.
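The job-handling steps above can be combined into one small script: removing leftover jobs, creating the restore job, and waiting for it to complete. The sketch below deletes the fixed-name jobs ocnaddmanualbackup, ocnaddverify, and ocnaddrestore. Jobs spawned by the backup CronJob get generated names, so also check kubectl get jobs -n <namespace> for a running ocnaddbackup-* job.

kubectl wait returns only when the Job reports Complete, so a failed Job is noticed only when the timeout expires. The 30m timeout is an arbitrary value above the typical 10 to 15 minutes. Because the restore template ends with sleep 5m, the Job completes about five minutes after restore.sh finishes. The function name is hypothetical.

```shell
#!/bin/sh
KUBECTL="${KUBECTL:-kubectl}"

# Remove leftover jobs, create the restore job, wait for it, and show its log.
run_restore() {
  namespace="$1" manifest="$2" timeout="${3:-30m}"
  # Only one of these jobs may exist at a time.
  "$KUBECTL" delete job ocnaddmanualbackup ocnaddverify ocnaddrestore \
    -n "$namespace" --ignore-not-found || return 1
  "$KUBECTL" create -f "$manifest" || return 1
  "$KUBECTL" wait --for=condition=complete job/ocnaddrestore \
    -n "$namespace" --timeout="$timeout" || return 1
  "$KUBECTL" logs job/ocnaddrestore -n "$namespace"
}

# Example:
# run_restore ocnadd-deploy ocnadd_restore_occm.yaml
```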

Note:

If the backup is not available for the specified date, the pod goes into an error state with a message that the backup is not available for the given date: $DATE. In that case, provide the correct backup date and repeat the procedure.

8.8 Configuring Backup and Restore Parameters

To configure backup and restore parameters, configure the parameters listed in the following table:

Note:

For information about the backup and restore procedure, see the Backup and Restore Flow section.

Table 8-2 Backup and Restore Parameters

| Parameter Name | Data Type | Range | Default Value | Mandatory(M)/Optional(O)/Conditional(C) | Description |
|---|---|---|---|---|---|
| BACKUP_STORAGE | STRING | - | 20Gi | M | Persistent Volume storage size for keeping the OCNADD backups |
| MYSQLDB_NAMESPACE | STRING | - | occne-cndbtierone | M | MySQL cluster namespace |
| BACKUP_CRONEXPRESSION | STRING | - | 0 8 * * * | M | Cron expression that schedules the backup CronJob (the default runs daily at 08:00) |
| BACKUP_ARG | STRING | - | ALL | M | Scope of the backup or restore: KAFKA, DB, or ALL |
| BACKUP_FILE | STRING | - | - | O | Name of the backup to be restored; if not set, the latest backup is used |
| BACKUP_DATABASES | STRING | - | ALL | M | Databases to back up: ALL or individual database names |

8.9 Two-Site Redundancy Fault Recovery

This section describes how to perform fault recovery of the OCNADD sites with Two-Site Redundancy enabled.

Scenario 1: When DB backup is available for both sites

- Follow the generic recovery procedure based on the failure scenarios described in the section "Fault Recovery."

- Use the respective site's backup during the restore procedure.

- Once the recovery is completed, restart the Redundancy Agent pods of the Primary site and the Secondary site.

Scenario 2: When DB backup is not available on one of the mated sites

- Access any one of the pods on the working site and run the following curl command to delete the Redundancy Configuration:

kubectl exec -it -n <namespace> <pod> -- bash

curl -k --cert-type P12 --cert /var/securityfiles/keystore/serverKeyStore.p12:$OCNADD_SERVER_KS_PASSWORD --location -X DELETE 'https://ocnaddconfiguration:12590/ocnadd-configuration/v1/tsr-configure/<worker-group-name>?sync=false'

For example:

kubectl exec -it -n ocnadd-deploy ocnaddadmin-xxxxxx -- bash

curl -k --cert-type P12 --cert /var/securityfiles/keystore/serverKeyStore.p12:$OCNADD_SERVER_KS_PASSWORD --location -X DELETE 'https://ocnaddconfiguration:12590/ocnadd-configuration/v1/tsr-configure/<worker-group-name>?sync=false'

where, <worker-group-name> is the worker group namespace qualified with the cluster name, for example, ocnadd-wg1:cluster-name.

- Follow the generic recovery procedure based on the failure scenarios described in the section Disaster Recovery Scenarios.

- Once the recovery is completed, restart the Redundancy Agent pods first on the Primary site, then on the Secondary site.

- Re-create the Redundancy Configuration from the Primary UI.

Note:

If the DB was lost on the Primary site and the user wants the Secondary site configuration to be restored on the Primary site, then set the Way to Bidirectional while creating the Redundancy Configuration.
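The DELETE request in Scenario 2 can also be sent without opening an interactive shell. In the sketch below, the curl command is single-quoted, so $OCNADD_SERVER_KS_PASSWORD is expanded by the shell inside the pod, where that variable is defined, rather than on the bastion host. The URL is passed to that shell as a positional argument. The sketch assumes sh is available in the target pod (the procedure above uses bash there). The function name is hypothetical.

```shell
#!/bin/sh
KUBECTL="${KUBECTL:-kubectl}"

# Delete the Redundancy Configuration for one worker group from a pod on the
# working site. The third argument is "<worker-group-namespace>:<cluster-name>".
delete_tsr_config() {
  namespace="$1" pod="$2" worker_group="$3"
  url="https://ocnaddconfiguration:12590/ocnadd-configuration/v1/tsr-configure/${worker_group}?sync=false"
  "$KUBECTL" exec -n "$namespace" "$pod" -- sh -c \
    'curl -k --cert-type P12 --cert "/var/securityfiles/keystore/serverKeyStore.p12:$OCNADD_SERVER_KS_PASSWORD" --location -X DELETE "$1"' \
    _ "$url"
}

# Example:
# delete_tsr_config ocnadd-deploy ocnaddadmin-xxxxxx ocnadd-wg1:cluster-name
```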