3 Installing ATS for Different Network Analytics Suite Products

This section describes how to install ATS for different Network Analytics Suite products.

3.1 Installing ATS for NWDAF

This section describes the requirements and procedures for installing ATS for NWDAF.

3.1.1 Software Requirements

This section describes the software requirements to install ATS for NWDAF. Install the software versions listed in the following table:

Table 3-1 Software Requirements

| Software | Version |
|---|---|
| Kubernetes | 1.20.7, 1.21.7, 1.22.5 |
| Helm | 3.1.2, 3.5.0, 3.6.3, 3.8.0 |
| Podman | 2.2.1, 3.2.3, 3.3.1 |

Supported CNE versions are Release 1.9.x, 1.10.x, and 22.1.x.

To verify the CNE version, run the following command:

echo $OCCNE_VERSION

To verify the Helm and Kubernetes versions installed in the CNE, run the following commands:

- Verify the Kubernetes version:

  kubectl version

- Verify the Helm version:

  helm3 version
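The version checks above can also be scripted. The following POSIX shell sketch compares a version string against the supported versions copied from Table 3-1 and the CNE release list. The function and variable names are illustrative, and you supply the installed versions yourself.

```shell
# Sketch: check a version string against the supported versions in Table 3-1.
# Entries ending in ".x" (as in the CNE release list) match any patch level.

# is_supported VERSION "ENTRY ENTRY ..." -> exit status 0 if VERSION is listed
is_supported() {
  local version="${1#v}" entry   # Helm prints versions as v3.8.0; drop the "v"
  for entry in $2; do
    case $entry in
      *.x)
        # "1.9.x" accepts any version that starts with "1.9."
        case $version in
          "${entry%x}"*) return 0 ;;
        esac
        ;;
      *)
        if [ "$version" = "$entry" ]; then return 0; fi
        ;;
    esac
  done
  return 1
}

# Values copied from Table 3-1 and the supported CNE releases above.
K8S_SUPPORTED="1.20.7 1.21.7 1.22.5"
HELM_SUPPORTED="3.1.2 3.5.0 3.6.3 3.8.0"
CNE_SUPPORTED="1.9.x 1.10.x 22.1.x"

# Example (not run here):
#   is_supported "$OCCNE_VERSION" "$CNE_SUPPORTED" || echo "Unsupported CNE release"
#   is_supported "$(helm3 version --template '{{.Version}}')" "$HELM_SUPPORTED"
```

For Kubernetes, pass the server version reported by kubectl version as the first argument.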

3.1.2 Environment Setup

This section describes the steps to prepare the environment for a correct installation of ATS for NWDAF.

Network Access

The Kubernetes cluster hosts must have network access to the following:

- Local Docker image repository, where the OCATS NWDAF images are available.

  To verify that the Kubernetes cluster hosts have network access to the local Docker image repository, pull any image by its tag:

  docker pull <docker-repo>/<image-name>:<image-tag>

  Where <docker-repo> is the IP address or host name of the repository, <image-name> is the Docker image name, and <image-tag> is the tag of the image used for the NWDAF pod.

- Local Helm repository, where the OCATS NWDAF Helm charts are available.

  To verify that the Kubernetes cluster hosts have network access to the local Helm repository, run the following command:

  helm repo update
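Both connectivity checks can be wrapped in small functions so they are easy to rerun from each host. This is a sketch. The function names are illustrative. If your hosts use Podman (listed in Table 3-1), replace docker with podman, because its pull command is compatible.

```shell
# Sketch: the two connectivity checks above as reusable functions.
# check_image_repo <docker-repo> <image-name> <image-tag>
check_image_repo() {
  local ref="$1/$2:$3"
  if docker pull "$ref" >/dev/null 2>&1; then
    echo "OK: pulled $ref"
  else
    echo "FAIL: could not pull $ref" >&2
    return 1
  fi
}

# check_helm_repo: refreshes every configured Helm repository
check_helm_repo() {
  if helm repo update >/dev/null 2>&1; then
    echo "OK: helm repo update"
  else
    echo "FAIL: helm repo update" >&2
    return 1
  fi
}
```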

Client Machine Requirement

Listed below are the Client Machine requirements for a successful ATS installation for NWDAF:

- Network access to the Helm repository and Docker image repository.

- Helm repository must be configured on the client.

- Network access to the Kubernetes cluster.

- The environment settings to run the kubectl and docker commands. The environment should have privileges to create a namespace in the Kubernetes cluster.

- The Helm client must be installed. The environment should be configured so that the helm install command deploys the software in the Kubernetes cluster.

cnDBTier Requirement

NWDAF supports cnDBTier in a vCNE environment. In a containerized CNE environment, cnDBTier must be up and running. For more information, see Oracle Communications cnDBTier Installation, Upgrade, and Fault Recovery Guide.

3.1.3 Resource Requirements

This section describes the ATS resource requirements for NWDAF.

NWDAF Pods Resource Requirements Details

- NWDAF Suite

- NWDAF Notification Consumer Simulator

Table 3-2 NWDAF Pods Resource Requirements Details

| Microservice | vCPUs Required per Pod | Memory Required per Pod (GB) | Storage PVC Required per Pod (GB) | Replicas (regular deployment) | Replicas (ATS deployment) | CPUs Required - Total | Memory Required - Total (GB) | Storage PVC Required - Total (GB) |
|---|---|---|---|---|---|---|---|---|
| ocn-ats-nwdaf-service | 4 | 3 | 0 | 1 | 1 | 4 | 4 | 0 |
| ocn-ats-nwdaf-notify-service | 1 | 2 | 0 | 1 | 1 | 1 | 2 | 0 |
| ocats-nwdaf-notify-nginx | 5 | 1 | 0 | 1 | 1 | 5 | 1 | 0 |

3.1.4 Downloading the ATS Package

Locating and Downloading ATS Images

To locate and download the ATS image from MOS:

- Log in to My Oracle Support using the appropriate credentials.

- Select the Patches & Updates tab.

- In the Patch Search window, click Product or Family (Advanced).

- Enter Oracle Communications Cloud Native Core Network Data Analytics Function in the Product field.

- From the Release drop-down, select "Oracle Communications Cloud Native Core Network Data Analytics Function <release_number>", where <release_number> is the required OCNWDAF release number.

- Click Search. The Patch Advanced Search Results list appears.

- Select the required ATS patch from the list. The Patch Details window appears.

- Click Download. The File Download window appears.

- Click the <p********_<release_number>_Tekelec>.zip file to download the NWDAF ATS package file.

- Extract the release package ZIP file. The package is named nwdaf-pkg-<marketing-release-number>.tgz, for example, nwdaf-pkg-23.3.0.0.tgz.

- Untar the NWDAF package file into the target directory:

  tar -xvf nwdaf-pkg-<marketing-release-number>.tgz

  The NWDAF directory has the following package structure:

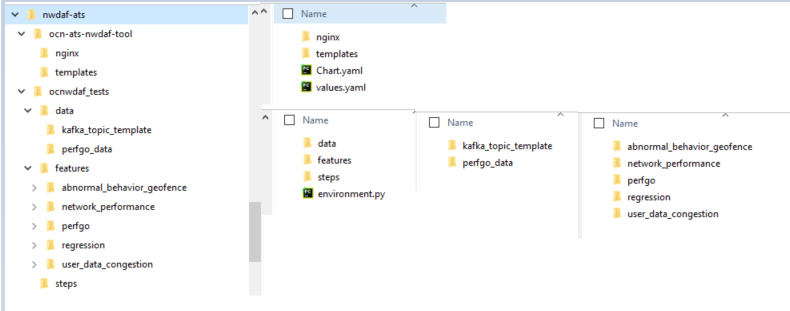

- Root
  - images
    - tar of images
    - sha256 of images
  - troubleshooting/
    - nfDataCapture.sh
  - ocn-nwdaf-helmChart/
    - helmChart
      - templates
      - charts
      - values.yaml
      - charts.yaml
    - nwdaf-pre-installer.tar.gz
    - simulator-helmChart
      - templates
      - charts
      - values.yaml
      - charts.yaml
  - nwdaf-ats/
    - ocn-ats-nwdaf-tool
      - ngnix
      - templates
    - ocnwdaf_tests
      - data
        - kafka_topic_template
        - perfgo_data
      - features
        - abnormal_behavior_geofence
        - network_performance
        - perfgo
        - regression
        - user_data_congestion
      - steps

Figure 3-1 OCNWDAF Folder Structure

3.1.5 Pushing the Images to Customer Docker Registry

Follow the procedure described below to push the NWDAF ATS docker images to the docker repository:

Prerequisites

- Oracle Linux 8 environment

- NWDAF 23.3.0.0.0 package

Note:

The NWDAF deployment package includes:

- Ready-to-use Docker images in the images tar or zip file.

- Helm charts to help orchestrate containers in Kubernetes.

The communication between NWDAF service pods is preconfigured in the Helm charts. The NWDAF ATS uses the following services:

Table 3-3 NWDAF ATS Services

| Service Name | Docker Image Name | Image Tag |
|---|---|---|
| ocats-nwdaf | ocats-nwdaf | 23.3.0.0.0 |
| ocats-nwdaf-notify | ocats-nwdaf-notify | 23.3.0.0.0 |
| ocats-nwdaf-notify-nginx | nwdaf-cap4c-nginx | 1.20 |

- Verify the checksums of the tarballs listed in the Readme.txt file.

- Run the following command to extract the contents of the tar file:

  tar -xvf nwdaf-pkg-<marketing-release-number>.tgz

  Or, if the package is a ZIP file, run the following command:

  unzip nwdaf-pkg-<marketing-release-number>.zip

  The nwdaf-pkg-<marketing-release-number> package contains the following NWDAF ATS images: ocats-nwdaf-notify, ocats-nwdaf, and nwdaf-cap4c-nginx.

- Navigate to the ./installer folder and extract the nwdaf-ats.zip file:

  unzip nwdaf-ats.zip

  The extracted folder contains ocn-ats-nwdaf-tool (with the ngnix and templates directories) and ocnwdaf_tests (with the data, features, and steps directories). The data directory holds kafka_topic_template and perfgo_data. The features directory holds abnormal_behavior_geofence, network_performance, perfgo, regression, and user_data_congestion.

- Run the following command to load each Docker image tarball into the local Docker image store:

  docker load --input <image_file_name.tar>

  For example, for an image tarball in the images directory:

  docker load --input images/<image_file_name.tar>

- Run the following commands to tag the NWDAF ATS Docker images and push them to the Docker registry:

  docker tag <image-name>:<image-tag> <docker-repo>/<image-name>:<image-tag>

  docker push <docker-repo>/<image-name>:<image-tag>

  Where <docker-repo> is the repository to which the downloaded images are pushed.

- Run the following command to verify that the images are loaded:

  docker images

- Run the following command to push the Helm charts to the Helm repository. The cm-push command is provided by the ChartMuseum helm-push plugin.

  helm cm-push --force <chart name>.tgz <helm repo>
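The tag and push steps can be looped over the three images in Table 3-3. This sketch assumes that docker load has made the images available locally under the names and tags in Table 3-3. Confirm this with docker images, because loaded images may carry a repository prefix. The function names are illustrative. Setting DRY_RUN=1 prints the commands instead of running them.

```shell
# Sketch: tag and push the NWDAF ATS images listed in Table 3-3.
# Assumes the local image names below; adjust them to the docker images output.
NWDAF_ATS_IMAGES="ocats-nwdaf:23.3.0.0.0 ocats-nwdaf-notify:23.3.0.0.0 nwdaf-cap4c-nginx:1.20"

# Run a command, or only print it when DRY_RUN=1.
maybe_run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# push_nwdaf_ats_images <docker-repo>
push_nwdaf_ats_images() {
  local repo="$1" image
  if [ -z "$repo" ]; then
    echo "usage: push_nwdaf_ats_images <docker-repo>" >&2
    return 2
  fi
  for image in $NWDAF_ATS_IMAGES; do
    maybe_run docker tag "$image" "$repo/$image" || return 1
    maybe_run docker push "$repo/$image" || return 1
  done
}

# Example (not run here): review the commands first, then run them.
#   DRY_RUN=1 push_nwdaf_ats_images <docker-repo>
#   push_nwdaf_ats_images <docker-repo>
```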

3.1.6 Configuring ATS

This section describes how to configure ATS for NWDAF.

3.1.6.1 Creating and Verifying NWDAF Console Namespaces

This section explains how to create a new namespace or verify an existing namespace in the system.

Run the following command to verify if the required namespace exists in the system:

$ kubectl get namespaces

If the namespace exists, continue with the NWDAF ATS installation. If the required namespace is not available, create a namespace using the following command:

$ kubectl create namespace <required namespace>

For example:

$ kubectl create namespace ocats-nwdaf

Naming Convention for Namespaces:

Namespace names must:

- start and end with an alphanumeric character

- contain a maximum of 63 characters

- contain only lowercase alphanumeric characters or '-'
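The following sketch applies these rules before creating the namespace. It creates the namespace only when kubectl get reports that it does not exist. Kubernetes namespace names are RFC 1123 labels, which is why only lowercase letters are accepted. The function names are illustrative.

```shell
# Sketch: validate a namespace name against the rules above.
valid_namespace_name() {
  local name="$1"
  [ "${#name}" -ge 1 ] && [ "${#name}" -le 63 ] || return 1
  case $name in
    *[!abcdefghijklmnopqrstuvwxyz0123456789-]*) return 1 ;;  # illegal character
    -*|*-) return 1 ;;                                      # must start/end alphanumeric
  esac
  return 0
}

# ensure_namespace <namespace>: create the namespace only if it is missing
ensure_namespace() {
  local ns="$1"
  if ! valid_namespace_name "$ns"; then
    echo "Invalid namespace name: $ns" >&2
    return 1
  fi
  if kubectl get namespace "$ns" >/dev/null 2>&1; then
    echo "Namespace $ns already exists"
  else
    kubectl create namespace "$ns"
  fi
}
```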

3.1.6.2 Updating values.yaml File

- In the installation package, navigate to the root/installer/nwdaf-ats/ocn-ats-nwdaf-tool directory.

- Edit the values.yaml file. For example:

  vim values.yaml

  Update the following parameters in the values.yaml file:

  - imageRegistry: Provide the registry where the images are located.
  - imageVersion: Verify that the value is 23.3.0.0.0.
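If you prefer not to edit values.yaml interactively, a sed-based sketch such as the following can set both parameters. It assumes that imageRegistry and imageVersion each appear on their own line in the form key: value, so confirm this in the shipped values.yaml. Every matching line is updated, and the original file is kept as values.yaml.bak. The function name is illustrative.

```shell
# Sketch: set "key: value" lines in a YAML file without opening an editor.
# set_yaml_scalar <file> <key> <value>
set_yaml_scalar() {
  local file="$1" key="$2" value="$3"
  if ! grep -q "^[[:space:]]*$key:" "$file"; then
    echo "$key not found in $file" >&2
    return 1
  fi
  # Keep the original indentation and key; replace everything after "key:".
  cp "$file" "$file.bak" &&
    sed "s|^\([[:space:]]*$key:\).*|\1 $value|" "$file.bak" > "$file"
}

# Example (not run here), from the ocn-ats-nwdaf-tool directory:
#   set_yaml_scalar values.yaml imageRegistry <registry>
#   set_yaml_scalar values.yaml imageVersion 23.3.0.0.0
```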

Note:

Ensure that the image registry path is correct and points to the Docker registry where the ATS Docker images are located.

3.1.6.3 Deploying NWDAF ATS in the Kubernetes Cluster

To deploy ATS, perform the following steps:

- The

values.yamlfile is located insideocn-ats-nwdaf-tooldirectory. The namespace, docker image or tag can be updated in thevalues.yamlfile. - Run the following command to deploy the NWDAF ATS and its consumers in the same namespace where NWDAF suite is installed:

helm install <installation name> <path to the chart directory> -n $K8_NAMESPACEFor example:

helm install ocnwdaf-ats ocn-ats-nwdaf-tool/Note:

Ensure NWDAF ATS is installed in the same namespace where the NWDAF suite is installed. - Perform Helm installation with proxy command, run the following command:

helm install <installation name> <path to the chart directory> -n $K8_NAMESPACE --set ocatsNwdaf.config.env.JAVA_OPTS="\\-Dhttps\\.proxyHost\\=<proxy_domain>\\ \\-Dhttps\\.proxyPort\\=<proxy_port>\\ \\-Dhttp\\.nonProxyHosts\\=localhost\\,127.0.0.1\\,\\<no_proxy_host>"For example:

helm install ocnwdaf-ats ocn-ats-nwdaf-tool/ --set ocatsNwdaf.config.env.JAVA_OPTS="\\-Dhttps\\.proxyHost\\=www-proxy.us.oracle.com\\ \\-Dhttps\\.proxyPort\\=80\\ \\-Dhttp\\.nonProxyHosts\\=localhost\\,127.0.0.1\\,\\.oracle.com\\,\\.oraclecorp\\.com"Note:

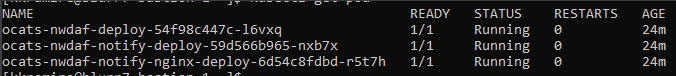

Provide the ocatsNwdaf.config.env.JAVA_OPTS field in the proxy configuration. This allows access to the internet to download the required plugins and components required for a successful ATS installation. - Run the following command to verify the ATS deployment status:

kubectl get deployments -n <namespace_name>Example:

Figure 3-2 Sample Output
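The backslash escaping in the proxy command is needed because helm install --set treats commas as separators between values. A values override file avoids that escaping. This sketch writes the same ocatsNwdaf.config.env.JAVA_OPTS key path into a file that you pass with -f. The function name and the proxy-values.yaml file name are illustrative. The no-proxy list uses the same comma-separated form as the example above.

```shell
# Sketch: write the proxy JAVA_OPTS into a Helm values override file.
# write_proxy_values <file> <proxy_domain> <proxy_port> <no_proxy_hosts>
write_proxy_values() {
  cat > "$1" <<EOF
ocatsNwdaf:
  config:
    env:
      JAVA_OPTS: "-Dhttps.proxyHost=$2 -Dhttps.proxyPort=$3 -Dhttp.nonProxyHosts=$4"
EOF
}

# Example (not run here):
#   write_proxy_values proxy-values.yaml <proxy_domain> <proxy_port> "localhost,127.0.0.1,<no_proxy_host>"
#   helm install ocnwdaf-ats ocn-ats-nwdaf-tool/ -n $K8_NAMESPACE -f proxy-values.yaml
```

Helm merges a file passed with -f over the chart's own values.yaml, so the other keys under ocatsNwdaf.config.env keep their defaults.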

3.1.6.4 Verifying ATS Deployment

Run the following command to verify ATS deployment:

helm status <release_name> -n <namespace_name>

Once ATS is deployed, run the following commands to check the pod and service deployment:

To check pod deployment, run the command:

kubectl get pod -n <namespace_name>

To check service deployment, run the command:

kubectl get service -n <namespace_name>

When the pods and services are running, run the following command to track the progress of the ATS Jenkins preconfiguration process:

kubectl exec -it <ats_pod_name> -n <namespace_name> -- tail -f /var/lib/jenkins/.jenkins/jenkins-configure.log

For example:

kubectl exec -it ocats-nwdaf-deploy-787d4f5f84-5vmv5 -- tail -f /var/lib/jenkins/.jenkins/jenkins-configure.log

When the preconfiguration process completes, the following message is displayed:

Jenkins configuration finish successfully
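Instead of watching tail -f, you can script the wait by polling the log for the completion message. This sketch assumes that grep is available inside the ATS container. The function name and the default timeout and interval are illustrative.

```shell
# Sketch: poll the Jenkins preconfiguration log in the ATS pod until the
# completion message appears or the timeout (in seconds) expires.
# wait_for_jenkins <namespace> <ats_pod_name> [timeout_s] [interval_s]
wait_for_jenkins() {
  local ns="$1" pod="$2" timeout="${3:-900}" interval="${4:-10}" waited=0
  local log=/var/lib/jenkins/.jenkins/jenkins-configure.log
  while [ "$waited" -lt "$timeout" ]; do
    if kubectl exec -n "$ns" "$pod" -- \
        grep -q "Jenkins configuration finish successfully" "$log" 2>/dev/null; then
      echo "Jenkins preconfiguration complete in $pod"
      return 0
    fi
    sleep "$interval"
    waited=$((waited + interval))
  done
  echo "Timed out after ${timeout}s waiting for $pod" >&2
  return 1
}
```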

3.2 Installing ATS for OCNADD

3.2.1 Resource Requirements

This section describes the ATS resource requirements for OCNADD.

Overview - Total Number of Resources

- OCNADD SUT

- ATS

Table 3-4 OCNADD - Total Number of Resources

| Resource Name | CPU | Memory (GB) |
|---|---|---|
| OCNADD SUT Totals | 41 | 258 |
| ATS Totals | 10 | 14 |
| Grand Total OCNADD ATS | 51 | 272 |

OCNADD Pods Resource Requirement Details

The following table lists the OCNADD pod resources required to deploy OCNADD ATS successfully.

Table 3-5 OCNADD Pods Resource Requirement Details

| OCNADD Service | CPUs Required per Pod | Memory Required per Pod (GB) | # Replicas (regular deployment) | # Replicas (ATS deployment) | CPUs Required - Total | Memory Required - Total (GB) |
|---|---|---|---|---|---|---|
| ocnaddconfiguration | 1 | 1 | 1 | 1 | 1 | 1 |
| ocnaddalarm | 1 | 1 | 1 | 1 | 1 | 1 |
| ocnaddadmin | 1 | 1 | 1 | 1 | 1 | 1 |
| ocnaddhealthmonitoring | 1 | 1 | 1 | 1 | 1 | 1 |
| ocnadduirouter | 1 | 1 | 1 | 1 | 1 | 1 |
| ocnaddscpaggregation | 2 | 2 | 1 | 1 | 2 | 2 |
| ocnaddnrfaggregation | 2 | 2 | 1 | 1 | 2 | 2 |
| ocnaddseppaggregation | 2 | 2 | 1 | 1 | 2 | 2 |
| ocnaddadapter | 3 | 4 | 8 | 1 | 3 | 4 |
| ocnaddkafka | 5 | 48 | 4 | 4 | 20 | 192 |
| zookeeper | 1 | 2 | 3 | 3 | 3 | 6 |
| ocnaddgui | 2 | 1 | 1 | 1 | 2 | 1 |
| ocnaddcache | 1 | 22 | 2 | 2 | 2 | 44 |
| OCNADD SUT Totals | | | | | 41 | 258 |

For more information about OCNADD Pods Resource Requirements, see the "Resource Requirements" section in Oracle Communications Network Analytics Data Director Installation, Upgrade, and Fault Recovery Guide.
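Each total in Table 3-5 is the per-pod value multiplied by the ATS-deployment replica count, summed over all services. For example, ocnaddkafka contributes 5 × 4 = 20 CPUs and 48 × 4 = 192 GB. The following sketch recomputes the SUT totals from the table as a worked check. It is not a sizing tool, and the function name is illustrative.

```shell
# Sketch: recompute the OCNADD SUT totals in Table 3-5.
# Columns: service, CPUs per pod, memory per pod (GB), replicas (ATS deployment).
ocnadd_totals() {
  awk '{ cpu += $2 * $4; mem += $3 * $4 } END { print cpu, mem }' <<'EOF'
ocnaddconfiguration     1  1 1
ocnaddalarm             1  1 1
ocnaddadmin             1  1 1
ocnaddhealthmonitoring  1  1 1
ocnadduirouter          1  1 1
ocnaddscpaggregation    2  2 1
ocnaddnrfaggregation    2  2 1
ocnaddseppaggregation   2  2 1
ocnaddadapter           3  4 1
ocnaddkafka             5 48 4
zookeeper               1  2 3
ocnaddgui               2  1 1
ocnaddcache             1 22 2
EOF
}

# ocnadd_totals   # prints: 41 258
```

The same calculation over Table 3-6 gives the ATS totals of 10 CPUs and 14 GB, and therefore the grand total of 51 CPUs and 272 GB.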

ATS Resource Requirement details for OCNADD

The following table lists the ATS resources required to deploy OCNADD ATS successfully.

Table 3-6 ATS Resource Requirement Details

| Microservice | CPUs Required per Pod | Memory Required per Pod (GB) | # Replicas (regular deployment) | # Replicas (ATS deployment) | CPUs Required - Total | Memory Required - Total (GB) |
|---|---|---|---|---|---|---|
| ATS Behave | 2 | 1 | 1 | 1 | 2 | 1 |
| OCNADD Producer Stub (SCP, NRF, SEPP) | 6 | 12 | 1 | 1 | 6 | 12 |
| OCNADD Consumer Stub | 2 | 1 | 1 | 1 | 2 | 1 |
| ATS Totals | | | | | 10 | 14 |

3.2.2 Downloading the ATS Package

Locating and Downloading ATS Images

To locate and download the ATS Image from MOS:

- Log in to My Oracle Support using the appropriate credentials.

- Select the Patches & Updates tab.

- In the Patch Search window, click Product or Family (Advanced).

- Enter Oracle Communications Network Analytics Data Director in the Product field.

- Select Oracle Communications Network Analytics Data Director <release_number> from the Release drop-down.

- Click Search. The Patch Advanced Search Results list appears.

- Select the required ATS patch from the list. The Patch Details window appears.

- Click Download. The File Download window appears.

- Click the <p********_<release_number>_Tekelec>.zip file to download the OCNADD ATS package file.

- Unzip the <p********_<release_number>_Tekelec>.zip file to access all the ATS images. The file contains the following:

  - ocats-ocnadd-tools-pkg-23.3.0.tgz
  - ocats-ocnadd-tools-pkg-23.3.0-README.txt
  - ocats-ocnadd-tools-pkg-23.3.0.tgz.sha256
  - ocats-ocnadd-custom-configtemplates-23.3.0.zip
  - ocats-ocnadd-custom-configtemplates-23.3.0-README.txt

  The ocats-ocnadd-tools-pkg-23.3.0-README.txt file contains all the information required for the package.

  The ocats-ocnadd-tools-pkg-23.3.0.tgz file contains the following images and charts, packaged as tar files:

  - ocats-ocnadd-pkg-23.3.0.tgz
    - ocats-ocnadd-23.3.0.tgz (Helm charts)
    - ocats-ocnadd-image-23.3.0.tar (Docker images)
    - OCATS-ocnadd-Readme.txt
    - ocats-ocnadd-23.3.0.tgz.sha256
    - ocats-ocnadd-image-23.3.0.tar.sha256
    - ocats-ocnadd-data-23.3.0.tgz (ATS test scripts and Jenkins data)
    - ocats-ocnadd-data-23.3.0.tgz.sha256
  - ocstub-ocnadd-pkg-23.3.0.tgz
    - ocstub-ocnadd-23.3.0.tgz (Helm charts)
    - ocstub-ocnadd-image-23.3.0.tar (Docker images)
    - OCSTUB-ocnadd-Readme.txt
    - ocstub-ocnadd-23.3.0.tgz.sha256
    - ocstub-ocnadd-image-23.3.0.tar.sha256

  In addition to these images and charts, the package contains the ocats-ocnadd-custom-configtemplates-23.3.0.zip file, which contains:

  - ocats-ocnadd-custom-values_23.3.0.yaml (custom values file for installation)
  - ocats_ocnadd_custom_serviceaccount_23.3.0.yaml (template to create a custom service account)

- Copy the tar file to the CNE, OCI, or Kubernetes cluster where you want to deploy ATS.
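Each tarball in the package has a matching .sha256 file. The following sketch checks one file against its checksum. It assumes that the .sha256 file begins with the hexadecimal digest, as sha256sum output does. The function name is illustrative.

```shell
# Sketch: verify a file against its companion .sha256 file.
# verify_sha256 <file> [sha256_file]   (default: <file>.sha256)
verify_sha256() {
  local file="$1" sumfile="${2:-$1.sha256}" expected actual
  # First field of the checksum file, normalized to lowercase hex
  expected=$(awk '{ print tolower($1); exit }' "$sumfile") || return 1
  actual=$(sha256sum "$file" | awk '{ print $1 }')
  if [ -n "$expected" ] && [ "$expected" = "$actual" ]; then
    echo "OK: $file"
  else
    echo "MISMATCH: $file" >&2
    return 1
  fi
}

# Example (not run here):
#   verify_sha256 ocats-ocnadd-tools-pkg-23.3.0.tgz
```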

3.2.3 Pushing the Images to Customer Docker Registry

Preparing to deploy ATS and Stub Pod in Kubernetes Cluster

To prepare the ATS and Stub pods for deployment in the Kubernetes cluster:

- Run the following command to extract the contents of the tar file:

  tar -xvf ocats-ocnadd-tools-pkg-23.3.0.tgz

  The output of this command is:

  - ocats-ocnadd-pkg-23.3.0.tgz
  - ocstub-ocnadd-pkg-23.3.0.tgz
  - ocats-ocnadd-custom-configtemplates-23.3.0.zip

- Run the following command to extract the Helm charts and Docker images of ATS:

  tar -xvf ocats-ocnadd-pkg-23.3.0.tgz

  The output of this command is:

  - ocats-ocnadd-23.3.0.tgz
  - ocats-ocnadd-23.3.0.tgz.sha256
  - ocats-ocnadd-data-23.3.0.tgz
  - ocats-ocnadd-data-23.3.0.tgz.sha256
  - ocats-ocnadd-image-23.3.0.tar
  - ocats-ocnadd-image-23.3.0.tar.sha256
  - OCATS-ocnadd-Readme.txt

  Note:

  The OCATS-ocnadd-Readme.txt file contains all the information required for the package.

- Run the following command to untar the ocstub package:

  tar -xvf ocstub-ocnadd-pkg-23.3.0.tgz

  The output of this command is:

  - ocstub-ocnadd-image-23.3.0.tar
  - ocstub-ocnadd-23.3.0.tgz.sha256
  - ocstub-ocnadd-image-23.3.0.tar.sha256
  - ocstub-ocnadd-23.3.0.tgz
  - OCSTUB-ocnadd-Readme.txt
  - OCSTUB_OCNADD_Installation_Readme.txt

- Run the following command to extract the contents of the custom configuration templates:

  unzip ocats-ocnadd-custom-configtemplates-23.3.0.zip

  The output of this command is:

  - ocats-ocnadd-custom-values_23.3.0.yaml (custom YAML file for deploying OCATS-OCNADD)
  - ocats_ocnadd_custom_serviceaccount_23.3.0.yaml (custom YAML file for creating a service account, if required)

- Run the following commands in your cluster to load the ATS Docker image, ocats-ocnadd-image-23.3.0.tar, and push it to your registry:

  $ docker load -i ocats-ocnadd-image-23.3.0.tar

  $ docker tag docker.io/ocnaddats.repo/ocats-ocnadd:23.3.0 <local_registry>/ocnaddats.repo/ocats-ocnadd:23.3.0

  $ docker push <local_registry>/ocnaddats.repo/ocats-ocnadd:23.3.0

- Run the following commands in your cluster to load the Stub Docker images, ocstub-ocnadd-image-23.3.0.tar, and push them to your registry:

  $ docker load -i ocstub-ocnadd-image-23.3.0.tar

  $ docker tag docker.io/simulator.repo/ocddconsumer:2.0.8 <local_registry>/simulator.repo/ocddconsumer:2.0.8

  $ docker tag docker.io/simulator.repo/oraclenfproducer:2.0.8 <local_registry>/simulator.repo/oraclenfproducer:2.0.8

  $ docker tag docker.io/utils.repo/jdk17-openssl:1.0.6 <local_registry>/utils.repo/jdk17-openssl:1.0.6

  $ docker push <local_registry>/simulator.repo/ocddconsumer:2.0.8

  $ docker push <local_registry>/simulator.repo/oraclenfproducer:2.0.8

  $ docker push <local_registry>/utils.repo/jdk17-openssl:1.0.6

- Update the image name and tag in the ocats-ocnadd-custom-values.yaml file and in the ocnaddsimulator/values.yaml file of the simulator Helm chart, as required:

  - In ocats-ocnadd-custom-values.yaml, update image.repository with the respective local_registry.
  - In ocnaddsimulator/values.yaml, update repo.REPO_HOST_PORT and initContainers.repo.REPO_HOST_PORT with the respective local_registry.

3.2.4 Configuring ATS

3.2.4.1 Enabling Static Port

To enable a static port:

- In the ocats-ocnadd-custom-values.yaml file, under the service section, set the staticNodePortEnabled parameter to true and the staticNodePort parameter to a valid nodePort value:

      service:
        customExtension:
          labels: {}
          annotations: {}
        type: LoadBalancer
        port: "8080"
        staticNodePortEnabled: true
        staticNodePort: "32385"
        staticLoadBalancerIPEnabled: false
        staticLoadBalancerIP: ""

  Note:

  ATS supports static ports. By default, this feature is disabled.

  Note:

  To enable staticLoadBalancerIP, set the staticLoadBalancerIPEnabled parameter to true and the staticLoadBalancerIP parameter to a valid LoadBalancer IP value. By default, staticLoadBalancerIPEnabled is set to false.

3.2.5 Deploying ATS and Stub in Kubernetes Cluster

Note:

Ensure that all three components, ATS, Stub, and OCNADD, are in the same namespace.

For the test cases to run successfully, ensure that the intraTLSEnabled parameter value in the Jenkins pipeline script is identical to the value in the OCNADD deployment. The Alert Manager does not support HTTPS connections. When intraTLSEnabled is set to true, the following Alert Manager test cases may fail:

- Verify the OCNADD_CONSUMER_ADAPTER_SVC_DOWN alert.

- Verify the OCNADD_ADMIN_SVC_DOWN alert.

- Verify the OCNADD_NRF_AGGREGATION_SVC_DOWN alert.

- Verify the OCNADD_SCP_AGGREGATION_SVC_DOWN alert.

- Verify the OCNADD_ALARM_SVC_DOWN alert.

- Verify the OCNADD_HEALTH_MONITORING_SVC_DOWN alert.

These alerts are raised when OCNADD deployments are broken.

ATS and Stub support Helm deployment.

If the namespace does not already exist, create it:

kubectl create namespace <namespace_name>

Note:

- It is recommended to use ocnadd-sim as the <release_name> when installing the stubs.

- The ATS deployment with OCNADD does not support the Persistent Volume (PV) feature. Therefore, do not change the default value of the deployment.PVEnabled parameter in ocats-ocnadd-custom-values.yaml. By default, this parameter is set to false.

Deploying ATS:

helm install <release_name> ocats-ocnadd-23.3.0.tgz --namespace <namespace_name> -f <values-yaml-file>

For example:

helm install ocats ocats-ocnadd-23.3.0.tgz --namespace ocnadd -f ocats-ocnadd-custom-values.yaml

Note:

Before you deploy the stubs, set the oraclenfproducer.PRODUCER_TYPE parameter to REACTIVE in the ocnaddsimulator/values.yaml file. The default value of this parameter is NATIVE.

Deploying the stub:

helm install <release_name> <ocstub-ocnadd-chart> --namespace <namespace_name>

Note:

For more details about installing the stub, see the OCSTUB_OCNADD_Installation_Readme.txt file.

Example:

helm install ocnadd-sim ocnaddsimulator --namespace ocnadd
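The two installs can be combined in one script. In this sketch, the stub overrides described earlier (oraclenfproducer.PRODUCER_TYPE, repo.REPO_HOST_PORT, and initContainers.repo.REPO_HOST_PORT) are passed with --set instead of being edited in ocnaddsimulator/values.yaml. This assumes those key paths match the shipped chart. The release names follow the examples above. The function names are illustrative. Setting DRY_RUN=1 prints the commands instead of running them.

```shell
# Sketch: deploy ATS and the stub into the same namespace as OCNADD.
# Run a command, or only print it when DRY_RUN=1.
maybe_run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# deploy_ocnadd_ats <namespace> <local_registry>
deploy_ocnadd_ats() {
  local ns="$1" registry="$2"
  maybe_run helm install ocats ocats-ocnadd-23.3.0.tgz --namespace "$ns" \
    -f ocats-ocnadd-custom-values.yaml || return 1
  # --set overrides the matching keys in the simulator chart's values.yaml
  maybe_run helm install ocnadd-sim ocnaddsimulator --namespace "$ns" \
    --set oraclenfproducer.PRODUCER_TYPE=REACTIVE \
    --set "repo.REPO_HOST_PORT=$registry" \
    --set "initContainers.repo.REPO_HOST_PORT=$registry"
}

# Example (not run here):
#   DRY_RUN=1 deploy_ocnadd_ats ocnadd <local_registry>
```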

3.2.6 Verifying ATS Deployment

Run the following command to verify ATS deployment:

helm status <release_name> -n <namespace>

Once ATS and Stub are deployed, run the following commands to check the pod and service deployment:

To check pod deployment:

kubectl get pod -n ocnadd

To check service deployment:

kubectl get service -n ocnadd
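To wait until everything is up instead of rerunning kubectl get pod, you can use the following sketch. It parses the default kubectl get pods --no-headers columns (NAME, READY, STATUS, and so on). A pod counts as ready when it is Completed, or when it is Running with all containers ready. The function names, timeout, and interval are illustrative. Note that an empty namespace also counts as ready.

```shell
# Sketch: list pods that are not ready, as "<name> <status>" lines.
pods_not_running() {
  kubectl get pods -n "$1" --no-headers 2>/dev/null | awk '{
    split($2, r, "/")   # READY column, for example 1/1
    if (!($3 == "Completed" || ($3 == "Running" && r[1] == r[2]))) print $1, $3
  }'
}

# wait_for_pods <namespace> [timeout_s] [interval_s]
wait_for_pods() {
  local ns="$1" timeout="${2:-600}" interval="${3:-10}" waited=0 pending=""
  while [ "$waited" -lt "$timeout" ]; do
    pending=$(pods_not_running "$ns")
    if [ -z "$pending" ]; then
      echo "All pods in $ns are running"
      return 0
    fi
    sleep "$interval"
    waited=$((waited + interval))
  done
  printf 'Pods not ready in %s:\n%s\n' "$ns" "$pending" >&2
  return 1
}
```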