6 Fault Recovery

This chapter explains how to perform fault recovery for OCNWDAF deployment.

6.1 Introduction

This section describes the procedures to perform fault recovery for an Oracle Communications Networks Data Analytics Function (OCNWDAF) deployment. OCNWDAF operators can take a database backup and restore it either on the same cluster or on a different cluster. OCNWDAF stores data in the following data stores:

- MySQL NDB Cluster

- Apache Kafka

Note:

This section describes the recovery procedures to restore OCNWDAF databases only. To restore all the databases that are part of cnDBTier, see Oracle Communications Cloud Native Core, cnDBTier Installation, Upgrade, and Fault Recovery Guide.

Note:

You must take a database backup and restore it either on the same cluster or on a different cluster. Use the OCNWDAF database when you run any command or follow any instruction in this chapter.

6.2 Fault Recovery Impact

This section provides an overview of fault recovery scenarios and the impacted areas.

The following table provides information about the areas impacted during OCNWDAF fault recovery:

Table 6-1 Fault Recovery Impacted Area

| Scenario | Requires Fault Recovery or re-install of Kafka? | Requires Fault Recovery or re-install of MySQL? | Other |
|---|---|---|---|
| Deployment Failure | No | No | Uninstall and re-install Helm. |
| cnDBTier Corruption | Yes | Yes | Restore MySQL from backup and ensure it is not re-installed. |
| When cnDBTier failed in a Single Site | No | Yes | Restore MySQL from backup and ensure it is not re-installed. |
| Configuration Database Corruption | No | Yes | Backup and restore of configuration database is required. |
| Site Failure | No | No | Uninstall and re-install Helm. |

6.3 Prerequisites

- Ensure that cnDBTier is in a healthy state and available. Run the following command to check the status of cnDBTier service:

kubectl -n <namespace> exec <management node pod> -- ndb_mgm -e show

- Enable the automatic backup on cnDBTier by scheduling regular backups. The regular backups help in fault recovery with the following tasks:

- Restore stable version of the network function databases

- Minimize significant loss of data due to upgrades or roll back failures

- Minimize loss of data due to system failure

- Minimize loss of data due to data corruption or deletion due to external input

- Migrate network function database information from one site to another

- Docker images used during the previous installation or upgrade must be retained in the external data source
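The cnDBTier health check in the first prerequisite can be partly automated. The following is a minimal sketch, not part of the product tooling: it assumes that a disconnected node appears in the `ndb_mgm -e show` output as an `id=` line containing the text "not connected", and the function name `check_ndb_nodes` is hypothetical.

```shell
#!/bin/sh
# Reads `ndb_mgm -e show` output on stdin and prints every node line that
# reports "not connected". Returns non-zero if at least one such line exists.
# Assumption: nodes in other transitional states are not detected here.
check_ndb_nodes() {
    awk '
        /^[[:space:]]*id=/ && /not connected/ { print; bad = 1 }
        END { exit bad ? 1 : 0 }
    '
}

# Usage (namespace and pod name are placeholders from this section):
#   kubectl -n <namespace> exec <management node pod> -- ndb_mgm -e show | check_ndb_nodes
```

A non-zero return status means that at least one node is reported as disconnected and the cluster should be investigated before a backup or restore is attempted.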

6.4 Fault Recovery Scenarios

This section describes the fault recovery procedures for various scenarios.

6.4.1 Deployment Failure

This scenario describes how to recover OCNWDAF when the deployment is corrupted.

To recover OCNWDAF:

- Run the following command to uninstall OCNWDAF:

helm uninstall <release_name> --namespace <namespace>

Example:

helm uninstall oc-nwdaf --namespace oc-nwdaf

For more information about uninstalling OCNWDAF, see the Uninstalling OCNWDAF chapter in Oracle Communications Networks Data Analytics Function Installation and Fault Recovery Guide.

- Install OCNWDAF as described in the Installing OCNWDAF chapter in Oracle Communications Networks Data Analytics Function Installation and Fault Recovery Guide. Use the backup of the custom values file to reinstall OCNWDAF.

Restore OCNWDAF, cnDBTier, and the OCNWDAF database (DB) as described in Restoring OCNWDAF and cnDBTier.

6.4.2 cnDBTier Corruption

This section describes how to recover the database when data replication is broken due to database corruption and cnDBTier has failed in a single site.

When the database is corrupted, the databases on all the other sites may also become corrupted through data replication. This depends on the replication status after the corruption has occurred. If data replication is broken due to the database corruption, cnDBTier fails in one or more sites, but not in all sites. If data replication is successful, the database corruption replicates to all the cnDBTier sites and cnDBTier fails in all sites.

If the corrupted database has replicated to the mated sites, see the procedure When cnDBTier failed in a Single Site.

6.4.3 When cnDBTier failed in a Single Site

This section describes how to recover the database when data replication is broken due to database corruption and cnDBTier has failed in a single site.

To recover the database:

- Uninstall the OCNWDAF Helm chart. For information about uninstalling OCNWDAF, see the section Uninstalling OCNWDAF.

- For cnDBTier fault recovery:

- Create an on-demand backup from the mated site that has healthy replication with the failed site. For more information about cnDBTier backup, see the Create On-demand Database Backup chapter in Oracle Communications Cloud Native Core, cnDBTier Installation, Upgrade, and Fault Recovery Guide.

- Use the backup data from the mated site for the restore. For more information about cnDBTier restore, see the Restore Georeplication Failure chapter in Oracle Communications Cloud Native Core, cnDBTier Installation, Upgrade, and Fault Recovery Guide.

- Install OCNWDAF Helm chart. For more information about installing OCNWDAF, see the section Installing OCNWDAF.

6.4.4 Configuration Database Corruption

This scenario describes how to recover OCNWDAF when its configuration database is corrupted.

The configuration database is stored in a site-exclusive database along with its tables. Thus, corruption of the configuration database impacts only a particular site.

- Transfer the <backup_filename>.sql.gz file to the SQL node where you want to restore it.

- Log in to the SQL node of the MySQL NDB Cluster on the new DB cluster and create a new database where the backup is to be restored. For details on creating the database and users and granting permissions, see the Configuring Database, Creating Users, and Granting Permissions section in the Preinstallation chapter of Oracle Communications Networks Data Analytics Function Installation Guide.

Note:

The name of the database created in this step must be the same as the database name used in the restore command in the following step.

- To restore the backup to the newly created database, run the following command:

gunzip < <backup_filename>.sql.gz | mysql -h127.0.0.1 -u <username> -p <backup-database-name>

Enter the password when prompted.

Example:

gunzip < OCNWDAFdbBackup.sql.gz | mysql -h127.0.0.1 -u dbuser -p OCNWDAFdb
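Before piping a dump into `mysql`, it can help to confirm that the transferred archive is intact. The following sketch is an optional pre-check and not part of the documented procedure; the function name `verify_backup` is hypothetical.

```shell
#!/bin/sh
# Returns 0 only if the file exists, is non-empty, and passes gzip's
# built-in integrity test (gzip -t decompresses without writing output).
verify_backup() {
    backup_file=$1
    [ -s "$backup_file" ] || { echo "missing or empty: $backup_file" >&2; return 1; }
    gzip -t "$backup_file" 2>/dev/null || { echo "corrupt archive: $backup_file" >&2; return 1; }
}

# Usage, with the example names from this section:
#   verify_backup OCNWDAFdbBackup.sql.gz && \
#     gunzip < OCNWDAFdbBackup.sql.gz | mysql -h127.0.0.1 -u dbuser -p OCNWDAFdb
```

The check only proves that the gzip stream is readable; it does not validate the SQL statements inside the dump.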

6.4.5 Site Failure

This section describes how to perform fault recovery when the site has a software failure.

It is assumed that you have cnDBTier and OCNWDAF installed on multiple sites with automatic data replication and backup enabled.

To recover the failed sites:

- Run the Cloud Native Environment (CNE) installation procedure to install a new cluster. For more information, see Oracle Communications Cloud Native Core, Cloud Native Environment (CNE) Installation, Upgrade, and Fault Recovery Guide.

- For cnDBTier fault recovery:

- Take an on-demand backup from the mated site that has healthy replication with the failed site or sites. For more information about on-demand backup, see the Create On-demand Database Backup chapter in Oracle Communications Cloud Native Core, cnDBTier Installation, Upgrade, and Fault Recovery Guide.

- Use the backup data from the mated site to restore the database. For more information about database restore, see the Restore Georeplication Failure chapter in Oracle Communications Cloud Native Core, cnDBTier Installation, Upgrade, and Fault Recovery Guide.

- Install OCNWDAF Helm chart. For more information about installing OCNWDAF, see the Installing OCNWDAF section.

6.4.6 Kafka Issues

Kafka-related issues and their solutions are described in the following scenarios.

Scenario 1

OCNWDAF microservices need to consume Kafka messages again from the beginning. This scenario can occur if any of the OCNWDAF microservices stopped consuming messages or failed to process them correctly. For example, when a DB downtime occurs, none of the messages consumed by the microservices are processed correctly and they must be retried.

The current Kafka configuration in OCNWDAF is based on transactions. Restart the microservice that needs to consume such messages; the messages from the last offset in Kafka are then consumed. If the message backlog is still not consumed after restarting the service, change the microservice configuration stored in Spring Cloud Config.

Solution

- Ensure that the microservice consumer configuration is auto-offset-reset: earliest. This processes the topic partition from the earliest offset (current setup value).

- Redeploy the microservice.

Scenario 2

OCNWDAF microservices must ignore all messages in Kafka. This scenario can occur when the data sources like AMF, SMF, UDM, and so on are generating wrong data and overloading OCNWDAF.

Solution 1

- Delete and recreate the topic.

OR

- Update the Kafka retention policy:

- Stop the consumer microservice.

- Run the following command to check the current retention policy:

kafka-configs.sh --zookeeper <zkhost>:2181 --describe --entity-type topics --entity-name <topic name>

- Update the retention policy in Kafka to a very low value (for example, 1 minute):

kafka-configs.sh --zookeeper <zkhost>:2181 --entity-type topics --alter --entity-name <topic name> --add-config retention.ms=60000

- Wait for the Kafka service to delete the messages.

- Set the retention policy back to its original value.

- Redeploy the microservice.
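The retention value in the command above is expressed in milliseconds, which is easy to mistype. The following sketch only prints the alter command so that it can be reviewed before it is run. The function names and the `ZK_HOST` variable are hypothetical and stand in for the `<zkhost>` placeholder.

```shell
#!/bin/sh
# Converts a number of minutes to milliseconds for retention.ms.
minutes_to_ms() {
    echo $(( $1 * 60 * 1000 ))
}

# Prints (does not run) the kafka-configs.sh alter command for a topic.
# ZK_HOST is a hypothetical variable; if unset, the <zkhost> placeholder is kept.
retention_cmd() {
    topic=$1
    minutes=$2
    echo "kafka-configs.sh --zookeeper ${ZK_HOST:-<zkhost>}:2181 --entity-type topics --alter --entity-name $topic --add-config retention.ms=$(minutes_to_ms "$minutes")"
}

# Usage:
#   ZK_HOST=zk1
#   retention_cmd <topic name> 1
```

To set the policy back afterwards, use the value reported by the describe command rather than a guessed default.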

Solution 2

To retain the wrong messages and allow the Kafka service to delete them according to the retention policy, follow this procedure:

- Change the Kafka consumer configuration to auto-offset-reset: latest. This processes the messages from the latest partition offset.

- Redeploy the microservice.

6.4.7 Microservice Deployment Issue

Follow the procedure below to resolve microservice deployment issue:

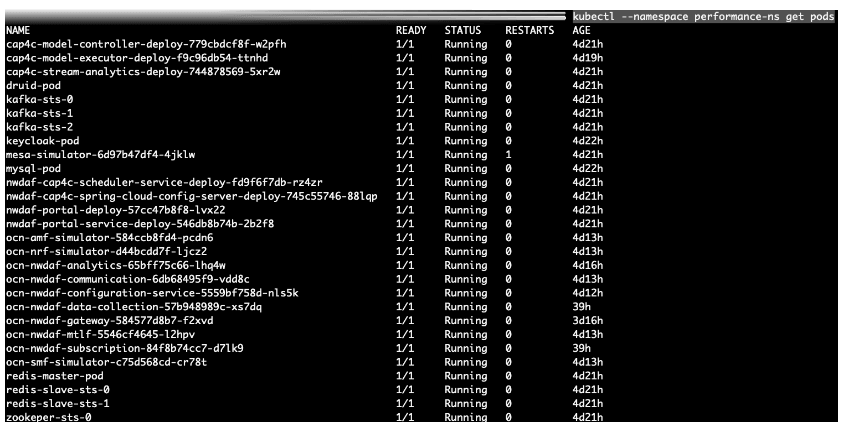

- Run the following command to obtain information on the pods deployed and their status:

kubectl --namespace [namespace name] get pods --sort-by='.status.containerStatuses[0].restartCount'Sample Output:Figure 6-1 Sample Output

- Observe the Restarts column, the ideal value is "0", indicating the pods have not re-started. If the column Status is not Running and the value of the Restarts is constantly increasing implies an underlying problem with the microservice or any dependency.

- Refer to the Oracle Communications Networks Data Analytics Function Troubleshooting Guide to resolve the issue.

- If required, re-deploy the microservice.

In a Kubernetes setup, at least one pod of each microservice must be deployed. Therefore, if the root cause of the problem is a dependency, redeploying the microservice is not required. It is sufficient to fix the dependency and wait for Kubernetes to retry the deployment.

If there are any changes in the Spring Cloud Config properties of the microservice, refresh the properties through the microservice's actuator with a POST request to http://{host}:{port}/actuator/refresh. If the issue is not resolved (not all properties support online refresh), redeploy the microservice.
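The Restarts check in the procedure above can be scripted for namespaces with many pods. The following is a minimal sketch that assumes the default `kubectl get pods` table layout (NAME, READY, STATUS, RESTARTS, AGE); the function name `flag_unhealthy_pods` is hypothetical.

```shell
#!/bin/sh
# Reads `kubectl get pods` table output on stdin and prints the name, status,
# and restart count of each pod that is not Running or has restarted.
# Assumption: columns are NAME READY STATUS RESTARTS AGE, with a header row.
flag_unhealthy_pods() {
    awk 'NR > 1 && ($3 != "Running" || $4 + 0 > 0) { print $1, $3, $4 }'
}

# Usage (placeholders as in this section):
#   kubectl --namespace [namespace name] get pods | flag_unhealthy_pods
#
# Property refresh through the actuator, as described above:
#   curl -X POST http://{host}:{port}/actuator/refresh
```

An empty result means that every listed pod is Running with a restart count of 0. Pods of finished jobs, if any, are also listed because their status is not Running.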

6.5 Backup and Restore

This section describes the backup and restore procedures.

6.5.1 OCNWDAF Database Backup and Restore

Introduction

Perform this procedure to take a backup of the OCNWDAF database (DB) and restore the database on a different cluster. This procedure is for on-demand backup and restore of the OCNWDAF DB. The commands used in these procedures are provided by the MySQL Network Database (NDB) cluster.

Prerequisite

Ensure that the MySQL NDB cluster is in a healthy state and that each of its database nodes is in the running state. Run the following command to check the status of the cnDBTier service:

kubectl -n <namespace> exec <management node pod> -- ndb_mgm -e show

Where:

<namespace> is the namespace where cnDBTier is deployed

<management node pod> is the management node pod of cnDBTier

Example:

[cloud-user@vcne2-bastion-1 ~]$ kubectl -n nwdafsvc exec ndbmgmd-0 -- ndb_mgm -e show

Connected to Management Server at: localhost:1186

Cluster Configuration

---------------------

[ndbd(NDB)] 2 node(s)

id=1 @10.233.86.202 (mysql-8.0.22 ndb-8.0.22, Nodegroup: 0, *)

id=2 @10.233.81.144 (mysql-8.0.22 ndb-8.0.22, Nodegroup: 0)

[ndb_mgmd(MGM)] 2 node(s)

id=49 @10.233.81.154 (mysql-8.0.22 ndb-8.0.22)

id=50 @10.233.86.2 (mysql-8.0.22 ndb-8.0.22)

[mysqld(API)] 2 node(s)

id=56 @10.233.81.164 (mysql-8.0.22 ndb-8.0.22)

id=57 @10.233.96.39 (mysql-8.0.22 ndb-8.0.22)

[cloud-user@vcne2-bastion-1 ~]$

OCNWDAF DB Backup

If an OCNWDAF database backup is required, do the following:

- Log in to any SQL node or API node, and then run the mysqldump command to take a dump of the database:

kubectl exec -it <sql node> -n <namespace> -- bash

mysqldump --quick -h127.0.0.1 -u <username> -p <databasename> | gzip > <backup_filename>.sql.gz

Where:

<sql node> is the SQL node of cnDBTier

<namespace> is the namespace where cnDBTier is deployed

<username> is the database username

<databasename> is the name of the database that has to be backed up

<backup_filename> is the name of the backup dump file

- Enter the database password when prompted.

Example:

kubectl exec -it ndbmysqld-0 -n nwdaf -- bash

mysqldump --quick -h127.0.0.1 -u dbuser -p nwdafdb | gzip > NWDAFdbBackup.sql.gz

Note:

Ensure that there is enough space in the directory to save the backup file.
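The free-space requirement in the note can be checked before the dump is started. The following sketch is illustrative only: the function name `has_free_space` is hypothetical, and the required size must be estimated by the operator because the size of the compressed dump is not known in advance.

```shell
#!/bin/sh
# Succeeds if the filesystem holding directory $1 has at least $2 KiB free.
# df -P prints one line per filesystem; -k reports sizes in 1024-byte blocks,
# and the fourth column is the available space.
has_free_space() {
    dir=$1
    needed_kb=$2
    avail_kb=$(df -Pk "$dir" | awk 'NR == 2 { print $4 }')
    [ "$avail_kb" -ge "$needed_kb" ]
}

# Usage: require roughly 1 GiB in the current directory before dumping.
#   has_free_space . 1048576 || echo "not enough space for the backup" >&2
```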

OCNWDAF DB Restore

If an OCNWDAF database restore is required, do the following:

- Transfer the <backup_filename>.sql.gz file to the SQL node where you want to restore it.

- Log in to the SQL node of the MySQL NDB cluster on the new DB cluster and create a new database where the backup is to be restored.

- To restore the backup to the newly created database, run the following command:

gunzip < <backup_filename>.sql.gz | mysql -h127.0.0.1 -u <username> -p <databaseName>

Example:

gunzip < NWDAFdbBackup.sql.gz | mysql -h127.0.0.1 -u dbuser -p newnwdafdb

- Enter the password when prompted.

6.5.2 Restoring OCNWDAF and cnDBTier

Perform this procedure to restore OCNWDAF, cnDBTier, and OCNWDAF database (DB).

Prerequisites:

- Take a backup of the custom_values.yaml file that was used for installing OCNWDAF.

- Take a backup of the OCNWDAF database and restore the database as described in OCNWDAF Database Backup and Restore. Perform this task once every evening.

- Run the following command to uninstall the corrupted OCNWDAF deployment:

helm uninstall <release_name> --namespace <namespace>

Where:

<release_name> is the name used to track this installation instance.

<namespace> is the namespace where OCNWDAF is deployed.

Example:

helm uninstall ocnwdaf --namespace nwdafsvc

- Install OCNWDAF using the backed up copy of the custom_values.yaml file. For information about installing OCNWDAF using Helm, see Installation Tasks.

- Install cnDBTier as described in Oracle Communications Cloud Native Core, cnDBTier Installation, Upgrade, and Fault Recovery Guide.

- Restore the OCNWDAF DB as described in OCNWDAF Database Backup and Restore.

- Install OCNWDAF using the backed up copy of the custom_values.yaml file. For information about installing OCNWDAF, see Installation Tasks.

- In the backed up copy of the custom_values.yaml file, update these values: dbHost, dbPort, dbAppUserSecretName, and dbPrivilegedUserSecretName.

    # DB Connection Service IP Or Hostname with secret name.
    database:
      dbHost: "mysql-connectivity-service.nwdafsvc"
      dbPort: "3306"
      # K8s Secret containing Database/user/password for all services of OCNWDAF interacting with DB.
      dbAppUserSecretName: "seppuser-secret"
      # K8s Secret containing Database/user/password for DB Hooks for creating tables
      dbPrivilegedUserSecretName: "nwdafprivilegeduser-secret"

- Run the following Helm upgrade command to update the secrets for the OCNWDAF control plane microservices, such as configuration, subscription, audit, and notification, so that they connect to the DB with the changed DB secret or DB FQDN, IP, or port:

helm upgrade <release name> -f <custom_values.yaml> --namespace <namespace> <helm-repo>/chart_name --version <helm_version>

Example:

helm upgrade ocnwdaf -f /home/cloud-user/1.12/values.yaml /home/cloud-user/1.11.0/ocnwdaf --namespace nwdafsvc

6.5.3 Kafka Backup and Restore

Backup Zookeeper State Data

Zookeeper stores its state data in the directory specified by the dataDir field in the ~/kafka/config/zookeeper.properties configuration file. Read the value of this field to determine the directory to back up. By default, dataDir points to the /tmp/zookeeper directory.

Note:

If dataDir points to some other directory in your installation, use that value instead of /tmp/zookeeper in the following commands.

- Display the contents of the ~/kafka/config/zookeeper.properties file to read the dataDir value.

Sample output:

    # the directory where the snapshot is stored.
    dataDir=/tmp/zookeeper
    # the port at which the clients will connect
    clientPort=2181
    # disable the per-ip limit on the number of connections since this is a non-production config
    maxClientCnxns=0

- Create a compressed archive file of the contents of the directory. Run the command:

tar -czf /home/kafka/zookeeper-backup.tar.gz /tmp/zookeeper/*

- Run the ls command to verify that the tar file is created. The output displays the compressed file zookeeper-backup.tar.gz.

- A backup of the Zookeeper state data is successfully created.
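Reading the directory from the properties file can be scripted so that the backup command always uses the configured value rather than the default. The following is a minimal sketch: the function name `read_prop` is hypothetical, and it assumes simple `key=value` lines without whitespace around the key.

```shell
#!/bin/sh
# Prints the value of key $2 from the Java-style properties file $1,
# skipping comment lines. If the key appears more than once, the last wins.
read_prop() {
    file=$1
    key=$2
    awk -F= -v k="$key" '
        /^[[:space:]]*#/ { next }
        $1 == k { val = substr($0, length($1) + 2) }
        END { if (val != "") print val }
    ' "$file"
}

# Usage with the file and archive names from this section:
#   data_dir=$(read_prop ~/kafka/config/zookeeper.properties dataDir)
#   tar -czf /home/kafka/zookeeper-backup.tar.gz "$data_dir"/*
```

The same function can be used with the log.dirs key of ~/kafka/config/server.properties, provided that log.dirs names a single directory.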

Backup Kafka Topics and Messages

Kafka stores topics and messages in the directory specified by the log.dirs field in the ~/kafka/config/server.properties configuration file. Read the value of this field to determine the directory to back up. By default, log.dirs points to the /tmp/kafka-logs directory.

Note:

If log.dirs points to some other directory in your installation, use that value instead of /tmp/kafka-logs in the following commands.

- Display the contents of the ~/kafka/config/server.properties file to read the log.dirs value.

Sample output:

    ############################# Log Basics #############################
    # A comma separated list of directories under which to store log files
    log.dirs=/tmp/kafka-logs
    # The default number of log partitions per topic. More partitions allow greater
    # parallelism for consumption, but this will also result in more files across
    # the brokers.
    num.partitions=1
    # The number of threads per data directory to be used for log recovery at startup and flushing at shutdown.
    # This value is recommended to be increased for installations with data dirs located in RAID array.
    num.recovery.threads.per.data.dir=1

- Stop the Kafka service so that the data in the log.dirs directory is in a consistent state. Run exit to return to your non-root sudo user, then run the command:

sudo systemctl stop kafka

- Once the Kafka service is stopped, log in as the kafka user. Run the command:

sudo -iu kafka

Note:

Always stop or start the Kafka and Zookeeper services as a non-root sudo user. As a security precaution, the Apache Kafka installation prerequisites disable sudo access for the kafka user, so these commands fail if they are run as the kafka user.

- Create a compressed archive file of the contents of the directory. Run the command:

tar -czf /home/kafka/kafka-backup.tar.gz /tmp/kafka-logs/*

- Run the ls command to verify that the tar file is created. The output displays the compressed file kafka-backup.tar.gz.

- Start the Kafka service. Run exit to return to your non-root sudo user, then run the command:

sudo systemctl start kafka

- Once the Kafka service is started, log in as the kafka user. Run the command:

sudo -iu kafka

- A backup of the Kafka data is successfully created.

Restore Zookeeper State Data

- To prevent the data directories from receiving invalid data during the restoration period, stop both Kafka and Zookeeper:

- Run exit to return to your non-root sudo user, then stop the Kafka service:

sudo systemctl stop kafka

- Stop the Zookeeper service:

sudo systemctl stop zookeeper

- Log in as the kafka user. Run the command:

sudo -iu kafka

- Delete the existing cluster data directory. Run the command:

rm -r /tmp/zookeeper/*

- Restore the backed up data. Run the command:

tar -C /tmp/zookeeper -xzf /home/kafka/zookeeper-backup.tar.gz --strip-components 2

Note:

The -C flag tells tar to change to the /tmp/zookeeper directory before extracting the data. The --strip-components 2 flag ensures that tar extracts the archive's contents directly into /tmp/zookeeper/ and not into another directory inside it (such as /tmp/zookeeper/tmp/zookeeper/).

- Cluster state data is successfully restored.
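The effect of stripping two path components can be tried safely in a scratch directory before touching the real data directory. The following sketch is a demonstration only; the function name and the snapshot.0 file are hypothetical, and nothing under /tmp/zookeeper is read or written.

```shell
#!/bin/sh
# Demonstrates --strip-components 2 in a scratch directory: archive members
# stored as tmp/zookeeper/<file> are extracted directly into the target.
demo_strip_components() (
    work=$(mktemp -d) || exit 1
    mkdir -p "$work/root/tmp/zookeeper" "$work/restore"
    echo "snapshot" > "$work/root/tmp/zookeeper/snapshot.0"
    # tar drops the leading "/" from absolute paths, so /tmp/zookeeper/* is
    # stored as tmp/zookeeper/*; the relative path below mimics that layout.
    tar -C "$work/root" -czf "$work/backup.tar.gz" tmp/zookeeper
    tar -C "$work/restore" -xzf "$work/backup.tar.gz" --strip-components 2
    ls "$work/restore"
    rm -rf "$work"
)

# Usage:
#   demo_strip_components
```

The listing shows the file at the top level of the restore directory, which is the layout the restore command above relies on. If the backup was taken from a dataDir at a different depth, the number of components to strip changes accordingly.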

Restore Kafka Data and Messages

- Delete the existing Kafka data directory. Run the command:

rm -r /tmp/kafka-logs/*

- After the directory is deleted, the Kafka installation is similar to a fresh installation with no topics or messages present in it. Restore the backed up data by extracting the files:

tar -C /tmp/kafka-logs -xzf /home/kafka/kafka-backup.tar.gz --strip-components 2

The -C flag tells tar to change to the /tmp/kafka-logs directory before extracting the data. The --strip-components 2 flag ensures that the archive's contents are extracted directly into /tmp/kafka-logs/ and not into another directory inside it (such as /tmp/kafka-logs/kafka-logs/).

- Run exit to return to your non-root sudo user, then start the Kafka service:

sudo systemctl start kafka

Start the Zookeeper service:

sudo systemctl start zookeeper

- Log in as the kafka user. Run the command:

sudo -iu kafka

- Kafka data is successfully restored.

Verify the Restoration

- Once Kafka is running, run the following command to read the messages:

~/kafka/bin/kafka-console-consumer.sh --bootstrap-server localhost:9092 --topic <TopicName> --from-beginning

Note:

If Kafka has not started up properly, you might encounter the following warning:

Output

[2018-09-13 15:52:45,234] WARN [Consumer clientId=consumer-1, groupId=console-consumer-87747] Connection to node -1 could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

Always wait for Kafka to start fully before performing this step.

- If you encountered the warning, retry the previous step, or run the following command (as a non-root sudo user) to restart Kafka:

sudo systemctl restart kafka

- The following message is displayed if the restoration is successful:

Output

<Topic Message>

Note:

If you do not see this message, check whether you missed any steps in the previous sections and run them.

A successful verification implies that the data has been backed up and restored correctly in a single Kafka installation.