4 Running Test Cases Using ATS

4.1 Running OCNADD Test Cases using ATS

4.1.1 Prerequisites

- The ATS version must be compatible with the OCNADD release.

- The ATS and Stub must be deployed in the namespace based on the OCNADD deployment mode, see Note.

- Enable ocnaddbsfaggregation and ocnaddpcfaggregation on OCNADD charts in order to allow BSF and PCF-related test cases to execute without any issues. Follow the steps below to enable BSF and PCF aggregation services in the OCNADD charts.

  - If OCNADD is Already Installed:

    - Modify the ocnadd-custom-values.yaml files for both the management and worker group(s). Set ocnaddbsfaggregation and ocnaddpcfaggregation to true, as shown in the example below:

          global:
            ocnaddalarm:
              enabled: true
            ocnaddconfiguration:
              enabled: true
            ocnaddhealthmonitoring:
              enabled: true
            ocnaddscpaggregation:
              enabled: true
            ocnaddnrfaggregation:
              enabled: true
            ocnaddseppaggregation:
              enabled: true
            ocnaddbsfaggregation:
              enabled: true # previously false
            ocnaddpcfaggregation:
              enabled: true # previously false
            ocnaddbackuprestore:
              enabled: true
            ocnaddkafka:
              enabled: true
            ocnaddadmin:
              enabled: true
            ocnadduirouter:
              enabled: true
            ocnaddgui:
              enabled: true
            ocnaddfilter:
              enabled: true
            ocnaddexport:
              enabled: true
            ocnaddnonracleaggregation:
              enabled: true
            ocnaddredundancyagent:
              enabled: true

    - Upgrade the Helm Charts:

      - Management Group:

            $ helm upgrade <management-group-release-name> -f <management-group-custom-values> -n <management-group-ns> <ocnadd-helm-chart-location>

      - Worker Group:

            $ helm upgrade <worker-group-release-name> -f <worker-group-custom-values> -n <worker-group-ns> <ocnadd-helm-chart-location>

      After the upgrade, the ocnaddpcfaggregation and ocnaddbsfaggregation pods should be spawned in the corresponding namespaces.

  - If OCNADD is Not Installed:

    Follow the installation steps from the Oracle Communications Network Analytics Data Director Installation, Upgrade, and Fault Recovery Guide. During installation, ensure ocnaddpcfaggregation and ocnaddbsfaggregation are enabled in the ocnadd-custom-values.yaml for both management and worker group(s), as shown above.

- For OCNADD deployments in which ACL is enabled, perform the following steps:

  Note:

  Skip these steps if ACL is not enabled in the OCNADD deployment.

  - Set the Jenkins pipeline variables INTRA_TLS_ENABLED and ACL_ENABLED to true.

  - Enable Aggregation Services: Follow the same steps mentioned earlier to enable ocnaddbsfaggregation and ocnaddpcfaggregation in the charts.

  - Update ssl.truststore.password, ssl.keystore.password, and ssl.key.password in the ocnadd_tests/data/admin.properties file inside the ATS pod as follows:

    - Access the Kafka pod from the OCNADD worker group or the default group deployment in which the SCRAM user configuration was added while enabling the ACL. Run the following command. In this example, kafka-broker-0 is the Kafka pod:

          kubectl exec -it kafka-broker-0 -n <namespace> -- bash

    - Extract the SSL parameters from the Kafka broker environment by running the following command:

          env | grep -i pass

    - Use the truststore and keystore passwords retrieved from the above command output to update the ocnadd_tests/data/admin.properties file of the ATS pod. Run the following commands:

          kubectl exec -it ocats-ocats-ocnadd-xxxx-xxxx -n <namespace> -- bash
          $ vi ocnadd_tests/data/admin.properties

      Sample output:

          security.protocol=SASL_SSL
          sasl.mechanism=PLAIN
          sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="ocnadd" password="ocnadd";
          ssl.truststore.location=/var/securityfiles/keystore/trustStore.p12
          ssl.truststore.password=<truststore pass>
          ssl.keystore.location=/var/securityfiles/keystore/keyStore.p12
          ssl.keystore.password=<keystore pass>
          ssl.key.password=<keystore pass>

  - Make sure to create the SCRAM User configuration if not created already by following the steps mentioned under 'Update SCRAM Configuration with Users' in Oracle Communications Network Analytics Data Director User Guide.
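The manual vi edit of ocnadd_tests/data/admin.properties in the ACL steps can also be scripted. The following is a minimal sketch, not part of ATS: set_property is a hypothetical helper that replaces one key in a Java-style properties file or appends it if the key is missing. It assumes awk and mktemp are available inside the ATS pod, which the source does not confirm.

```shell
#!/bin/sh
# set_property FILE KEY VALUE
# Hypothetical helper (not shipped with ATS): replaces the value of KEY in a
# Java-style properties FILE, or appends KEY=VALUE if the key is absent.
set_property() {
  file=$1
  key=$2
  value=$3
  tmp=$(mktemp) || return 1
  # The key and value are passed through the environment so that awk uses
  # them literally (no regex or escape-sequence interpretation).
  K="$key" V="$value" awk '
    BEGIN { FS = "="; found = 0 }
    $1 == ENVIRON["K"] { print ENVIRON["K"] "=" ENVIRON["V"]; found = 1; next }
    { print }
    END { if (!found) print ENVIRON["K"] "=" ENVIRON["V"] }
  ' "$file" > "$tmp" && cat "$tmp" > "$file"
  status=$?
  rm -f "$tmp"
  return "$status"
}

# Example (run inside the ATS pod; the values are placeholders taken from the
# Kafka broker environment):
#   set_property ocnadd_tests/data/admin.properties ssl.truststore.password '<truststore pass>'
#   set_property ocnadd_tests/data/admin.properties ssl.keystore.password '<keystore pass>'
#   set_property ocnadd_tests/data/admin.properties ssl.key.password '<keystore pass>'
```

Writing through a temporary file and copying the result back with cat keeps the original file's permissions and ownership, which may matter if Jenkins reads the file as a different user.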

Note:

- Clear Previous Configurations: Delete any existing DataFeeds and Filter configurations from previous executions before triggering a new Jenkins pipeline.

- Centralized Deployment Mode: If ATS and Stub are deployed in OCNADD Centralized mode, exclude the tag NotSupportedForCentralizedDD in the Jenkins UI to avoid test failures from unsupported scenarios.

  Jenkins UI:

  - Set FilterWithTags to Yes

  - Enter NotSupportedForCentralizedDD in Scenario_Exclude_Tags

  - Click Submit

- Kafka cluster with KRaft controller mode: If ATS and Stub are deployed in OCNADD Kafka cluster deployment with KRaft controller mode, exclude the tag NotSupportedForKafkaWithoutZookeeper in the Jenkins UI to avoid test failures from unsupported scenarios.
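Before triggering a pipeline, it can help to confirm the prerequisite that the BSF and PCF aggregation pods were spawned. The sketch below is an illustration only: aggregation_pods_running is a hypothetical helper that reads the output of kubectl get pods on standard input, and it assumes that pod names begin with the service name and that the STATUS column is the third column.

```shell
#!/bin/sh
# aggregation_pods_running
# Hypothetical helper: reads `kubectl get pods` output on stdin and succeeds
# only if at least one Running pod exists for each aggregation service.
# Assumption: pod names start with the service name.
aggregation_pods_running() {
  listing=$(cat)
  for svc in ocnaddbsfaggregation ocnaddpcfaggregation; do
    if ! printf '%s\n' "$listing" |
      awk -v s="$svc" 'index($1, s) == 1 && $3 == "Running" { ok = 1 } END { exit !ok }'
    then
      echo "no Running pod found for $svc" >&2
      return 1
    fi
  done
}

# Example (the namespace is a placeholder):
#   kubectl get pods -n <namespace> | aggregation_pods_running && echo "aggregation pods are up"
```

Reading from standard input keeps the check independent of how the listing is obtained, so the same function works for the management and worker group namespaces.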

4.1.2 Logging in to ATS

Running ATS

    [ocnadd@k8s-bastion ~]$ helm status ocats -n ocnadd-deploy
    NAME: ocats
    LAST DEPLOYED: Sat Nov 3 03:48:27 2022
    NAMESPACE: ocnadd-deploy
    STATUS: deployed
    REVISION: 1
    TEST SUITE: None
    NOTES:
    # Copyright 2018 (C), Oracle and/or its affiliates. All rights reserved.
    Thank you for installing ocats-ocnadd.
    Your release is named ocats , Release Revision: 1.
    To learn more about the release, try:
    $ helm status ocats
    $ helm get ocats

    [ocnadd@k8s-bastion ~]$ kubectl get pod -n ocnadd-deploy | grep ocats
    ocats-ocats-ocnadd-54ffddb548-4j8cx 1/1 Running 0 9h

    [ocnadd@k8s-bastion ~]$ kubectl get svc -n ocnadd-deploy | grep ocats
    ocats-ocats-ocnadd LoadBalancer 10.20.30.40 <pending> 8080:12345/TCP 9h

For more information on verifying ATS deployment, see Verifying ATS Deployment.

Note:

If LoadBalancer IP is provided, then give <LoadBalancer IP>:8080.

Figure 4-1 ATS Login

- Enter the login credentials. Click Sign in. The following screen appears.

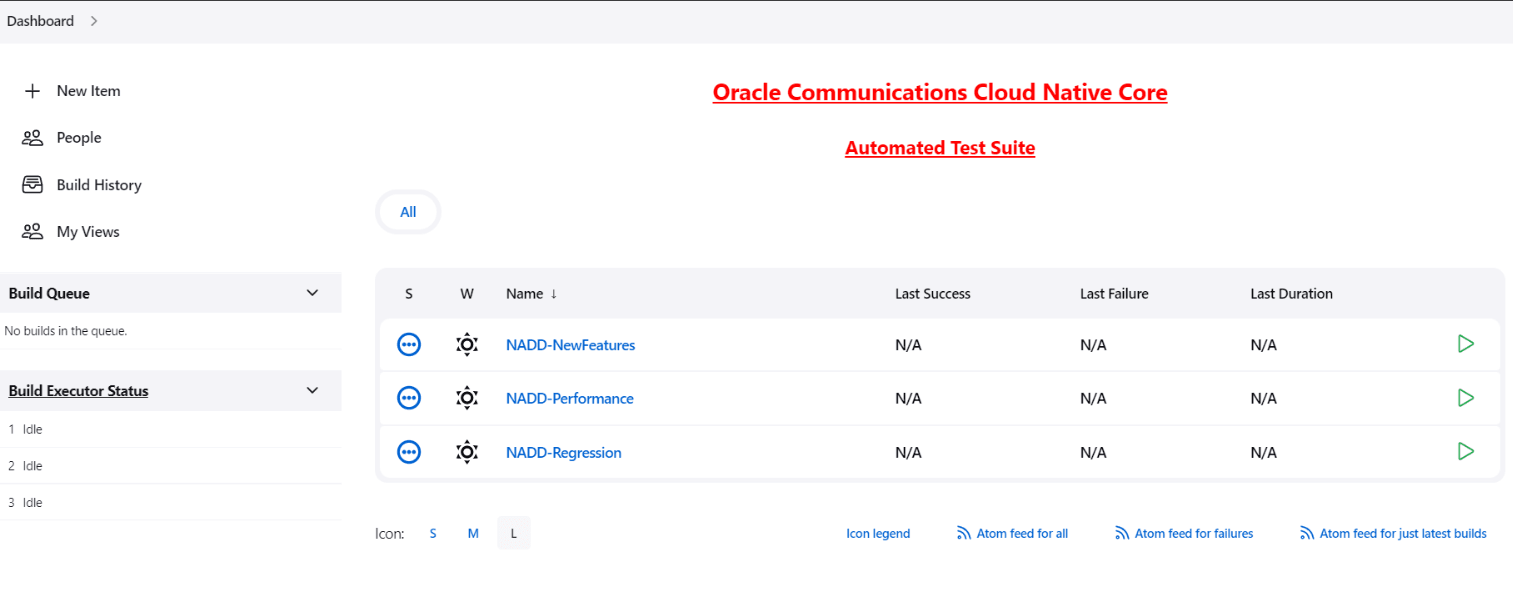

Figure 4-2 OCNADD Pre-Configured Pipelines

OCNADD ATS has three pre-configured pipelines.

- OCNADD-NewFeatures: This pipeline has all the test cases delivered as part of OCNADD ATS - 25.1.202.

- OCNADD-Performance: This pipeline is not operational as of now. It is reserved for future releases of ATS.

- OCNADD-Regression: This pipeline is not operational as of now. It is reserved for future releases of ATS.
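In the service listing shown earlier in this section, the PORT(S) value 8080:12345/TCP is standard Kubernetes notation for service port 8080 mapped to node port 12345. The sketch below extracts that node port from the listing. node_port_for is a hypothetical helper, and using the node port to reach the ATS GUI when no LoadBalancer IP is assigned is an assumption, not something this section states.

```shell
#!/bin/sh
# node_port_for [SERVICE_PORT]
# Hypothetical helper: reads `kubectl get svc` output on stdin and prints the
# node port mapped to SERVICE_PORT (8080 by default). Prints nothing if the
# service exposes no node port for that port.
node_port_for() {
  port=${1:-8080}
  # PORT(S) is the fifth column; entries look like 8080:12345/TCP and several
  # entries may be comma-separated.
  awk -v p="$port" '{
    n = split($5, entries, ",")
    for (i = 1; i <= n; i++) {
      split(entries[i], parts, "[:/]")
      if (parts[1] == p && parts[2] ~ /^[0-9]+$/) { print parts[2]; exit }
    }
  }'
}

# Example (namespace taken from the sample output above):
#   kubectl get svc -n ocnadd-deploy | grep ocats | node_port_for 8080
```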

4.1.3 OCNADD-NewFeatures Pipeline


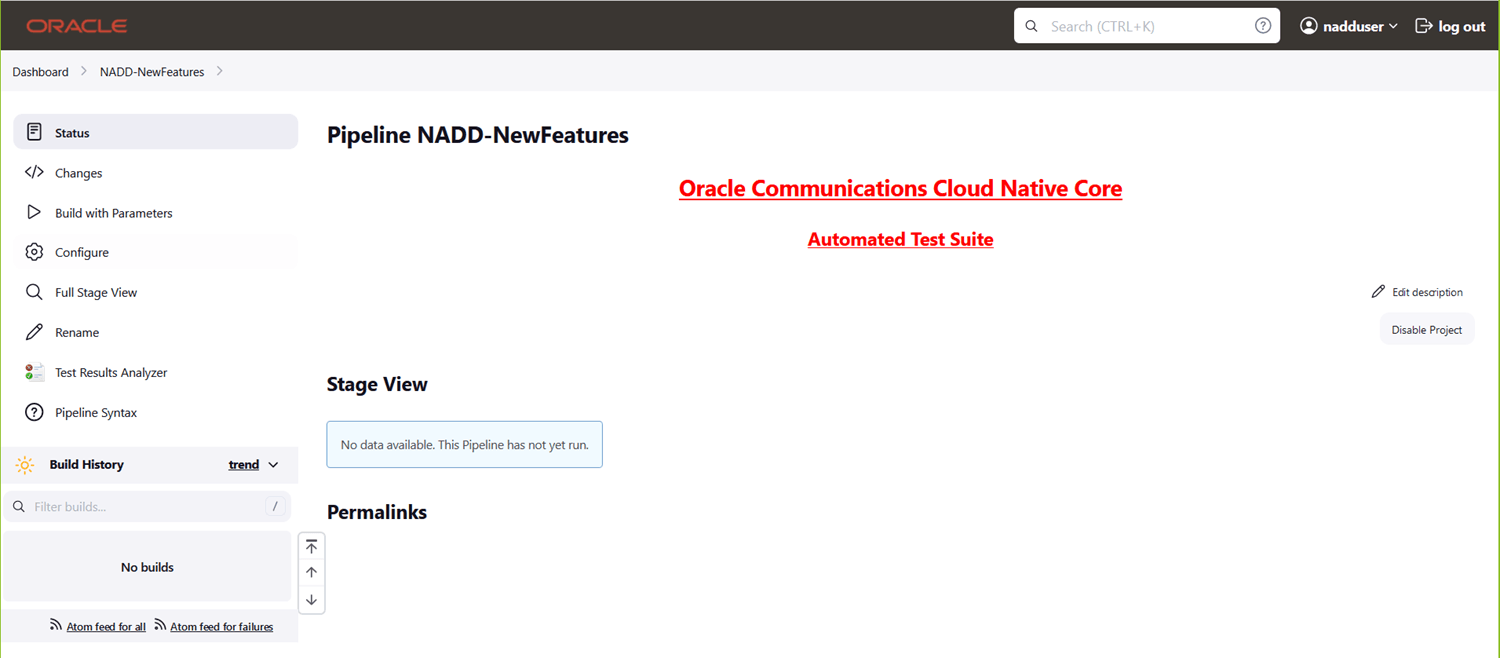

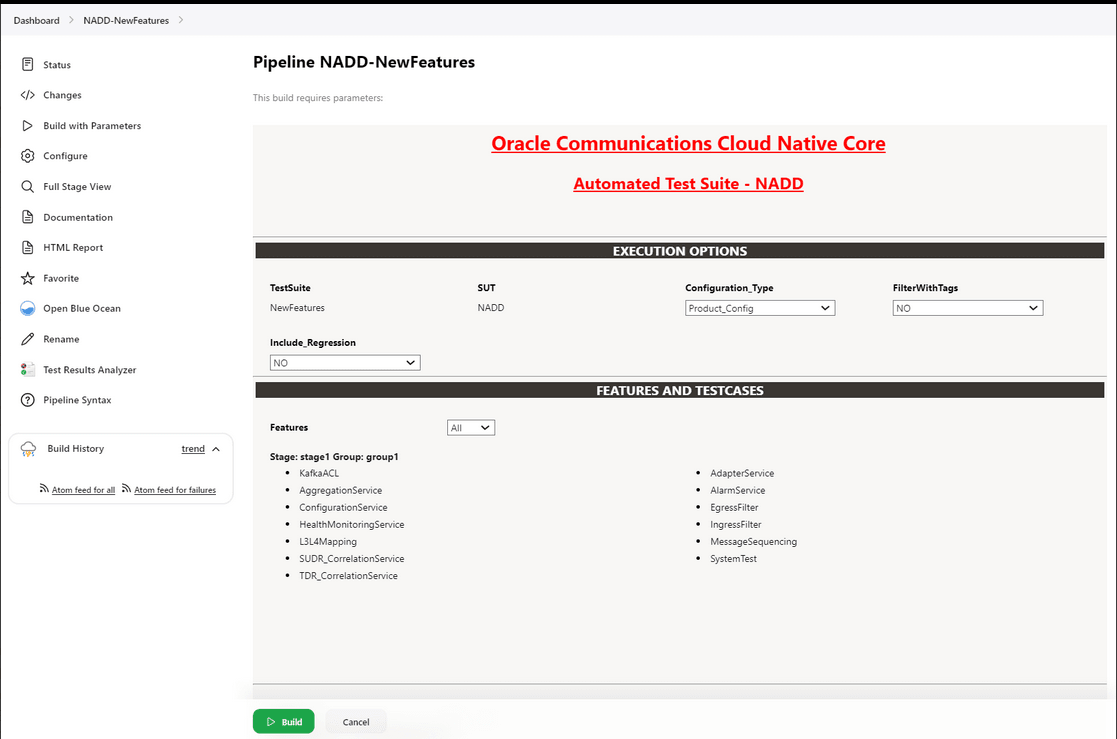

- Click OCNADD-NewFeatures in the Name column. The following screen appears:

Figure 4-3 Configuring OCNADD-New Features

In the above screen:

- Click Configure to configure OCNADD-New Features.

- Click the Build History box to view all the previous pipeline executions and the Console Output of each execution.

- The Stage View represents the previously run pipelines for reference.

- The Test Results Analyzer is a plugin integrated into OCNADD ATS. This option can be used to display the build-wise history of all the tests. It provides a consolidated graphical representation of all past tests.

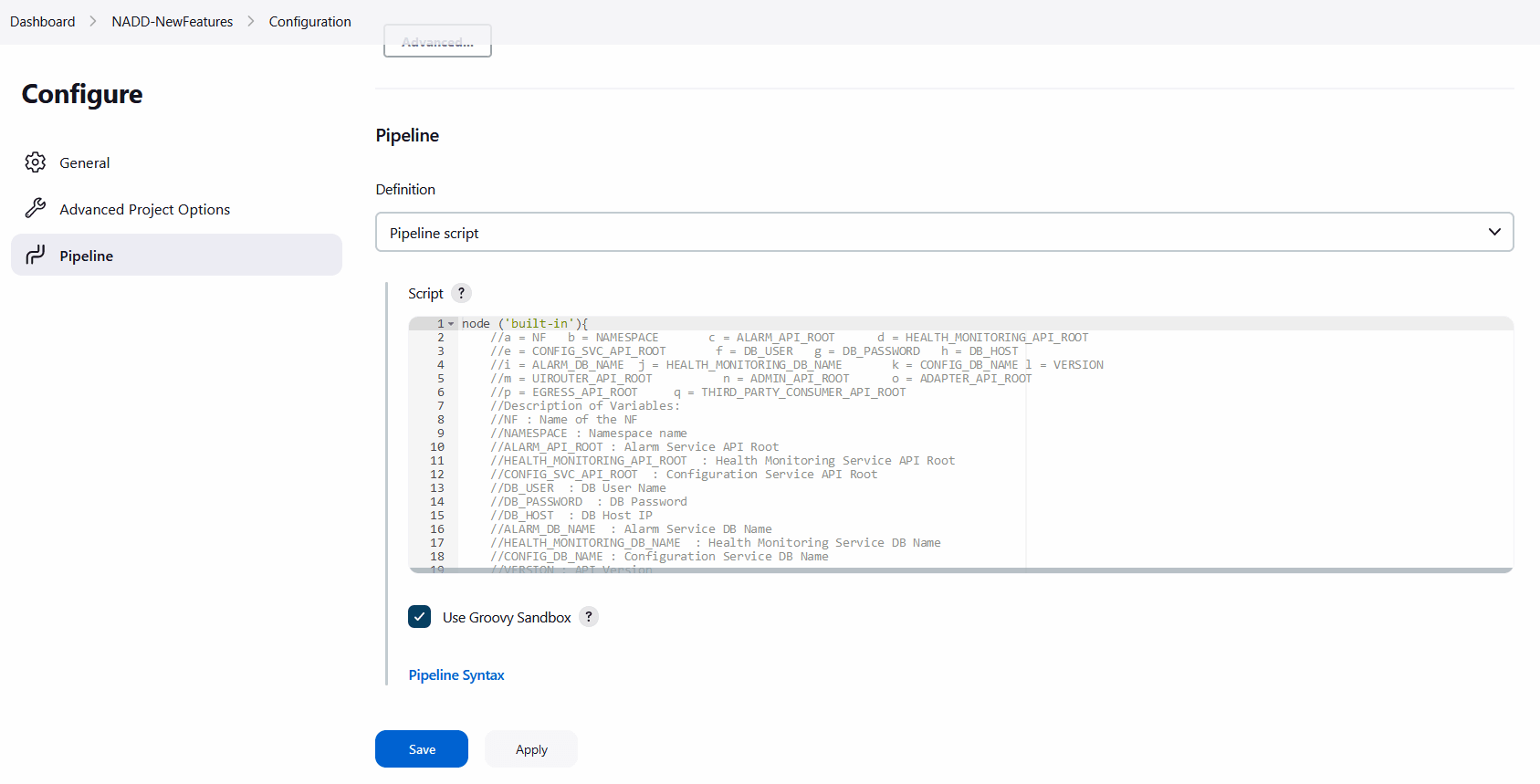

- Click Configure. Once the page loads completely, click the Pipeline tab:

Note:

Make sure that the Configure page loads completely before you perform any action on it. Also, do not modify any configuration other than shown below.

The Pipeline section of the configuration page appears as follows:

Figure 4-4 Pipeline Section

Important:

Remove the existing default content of the pipeline script and copy the following script content.

The content of the pipeline script is as follows:

    node ('built-in'){
        //a = NF    b = NAMESPACE    c = DB_HOST    d = DB_USER    e = DB_PASSWORD
        //f = ALARM_DB_NAME    g = HEALTH_MONITORING_DB_NAME    h = CONFIG_DB_NAME
        //i = ALARM_API_ROOT    j = HEALTH_MONITORING_API_ROOT    k = CONFIG_SVC_API_ROOT
        //l = UIROUTER_API_ROOT    m = ADMIN_API_ROOT    n = THIRD_PARTY_CONSUMER_API_ROOT
        //o = BACKUP_RESTORE_IMG_PATH    p = ALERT_MANAGER_URI    q = PROMETHEUS_URI    r = INTRA_TLS_ENABLED
        //s = RERUN_COUNT    t = ACL_ENABLED    u = WORKER_GROUP    v = MANAGEMENT_GROUP    w = MTLS_ENABLED    x = CERTIFICATE_FILE    y = CERTIFICATE_KEY
        //z=DOMAIN_NAME

        //Description of Variables:
        //NF : Name of the NF
        //NAMESPACE : Namespace name
        //DB_HOST : DB Host IP
        //DB_USER : DB User Name
        //DB_PASSWORD : DB Password
        //ALARM_DB_NAME : Alarm Service DB Name
        //HEALTH_MONITORING_DB_NAME : Health Monitoring Service DB Name
        //CONFIG_DB_NAME : Configuration Service DB Name
        //ALARM_API_ROOT : Alarm Service API Root
        //HEALTH_MONITORING_API_ROOT : Health Monitoring Service API Root
        //CONFIG_SVC_API_ROOT : Configuration Service API Root
        //UIROUTER_API_ROOT : UI Router API Root
        //ADMIN_API_ROOT : Admin Service API Root
        //THIRD_PARTY_CONSUMER_API_ROOT : Third Party Consumer API Root
        //BACKUP_RESTORE_IMG_PATH : Repository path for Backup restore image
        //ALERT_MANAGER_URI : Alert Manager API Root
        //PROMETHEUS_URI : Prometheus URI
        //INTRA_TLS_ENABLED : IntraTLS Value true/false
        //RERUN_COUNT : ReRun Count for Failed Tests
        //ACL_ENABLED : ACL Enabled true/false
        //WORKER_GROUP : Worker Group Namespace name
        //MANAGEMENT_GROUP : Management Group Namespace name
        //MTLS_ENABLED : mTls Enabled value
        //CERTIFICATE_FILE : OCATS certificate file name
        //CERTIFICATE_KEY : OCATS certificate key file name
        //DOMAIN_NAME : Domain Name

        sh '''
            sh /var/lib/jenkins/ocnadd_tests/preTestConfig-NewFeatures-NADD.sh \
            -a NADD \
            -b <ocnadd-namespace> \
            -c <DB_HOST> \
            -d <DB_USER> \
            -e <DB_PASSWORD> \
            -f alarm_schema \
            -g healthdb_schema \
            -h configuration_schema \
            -i ocnaddalarm.<management-group-namespace>.svc.<domainName>:9099 \
            -j ocnaddhealthmonitoring.<management-group-namespace>.svc.<domainName>:12591 \
            -k ocnaddconfiguration.<management-group-namespace>.svc.<domainName>:12590 \
            -l ocnaddbackendrouter.<management-group-namespace>.svc.<domainName>:8988 \
            -m ocnaddadminservice.<management-group-namespace>.svc.<domainName>:9181 \
            -n ocnaddthirdpartyconsumer.<worker-group-namespace>.svc.<domainName>:9094 \
            -o <repo-path>/ocdd.repo/ocnaddbackuprestore:<tag> \
            -p occne-kube-prom-stack-kube-alertmanager.occne-infra.svc.<domainName>:80/<clusterName> \
            -q occne-kube-prom-stack-kube-prometheus.occne-infra.svc.<domainName>:80/<clusterName>/prometheus/api/v1/query \
            -r true \
            -s 0 \
            -t false \
            -u <worker-group-namespace:clusterName> \
            -v <management-group-namespace> \
            -w false \
            -x <OCATS_certificate_file_name> \
            -y <OCATS_certificate_key_file_name> \
            -z <domainName>
        '''
        if(env.Include_Regression && "${Include_Regression}" == "YES"){
            sh '''sh /var/lib/jenkins/common_scripts/merge_jenkinsfile.sh'''
            load "/var/lib/jenkins/ocnadd_tests/jenkinsData/Jenkinsfile-NADD-Merged"
        }
        else{
            load "/var/lib/jenkins/ocnadd_tests/jenkinsData/Jenkinsfile-NADD-NewFeatures"
        }
    }

You can modify the pipeline script parameters from "-b" to "-q" based on your deployment environment. Click 'Save' after making the necessary changes.

The description of all the script parameters is as follows:

- a: Name of the NF to be tested in capital letters (NADD).

- b: Namespace in which the NADD is deployed (ocnadd-deploy).

- c: DB Host IP as provided during the deployment of NADD (10.XX.XX.XX).

- d: DB Username as provided during the deployment of NADD.

- e: DB Password as provided during the deployment of NADD.

- f: DB Schema Name of the ocnaddalarm microservice as provided during the deployment of NADD (alarm_schema).

- g: DB Schema Name of the ocnaddhealthmonitoring microservice as provided during the deployment of NADD (healthdb_schema).

- h: DB Schema Name of the ocnaddconfiguration microservice as provided during the deployment of NADD (configuration_schema).

- i: API root endpoint to reach the ocnaddalarm microservice of NADD. Update the Management Group and Cluster Domain Name in ocnaddalarm.<management-group-namespace>.svc.<domainName>:9099.

- j: API root endpoint to reach the ocnaddhealthmonitoring microservice of NADD. Update the Management Group and Cluster Domain Name in ocnaddhealthmonitoring.<management-group-namespace>.svc.<domainName>:12591.

- k: API root endpoint to reach the ocnaddconfiguration microservice of NADD. Update the Management Group and Cluster Domain Name in ocnaddconfiguration.<management-group-namespace>.svc.<domainName>:12590.

- l: API root endpoint to reach the ocnaddbackendrouter microservice of NADD. Update the Management Group and Cluster Domain Name in ocnaddbackendrouter.<management-group-namespace>.svc.<domainName>:8988.

- m: API root endpoint to reach the ocnaddadminservice microservice of NADD. Update the Management Group and Cluster Domain Name in ocnaddadminservice.<management-group-namespace>.svc.<domainName>:9181.

- n: API root endpoint to reach the ocnaddthirdpartyconsumer microservice of NADD. Update the Worker Group and Cluster Domain Name in ocnaddthirdpartyconsumer.<worker-group-namespace>.svc.<domainName>:9094.

- o: ocnaddbackuprestore image path.

- p: Alert Manager API root endpoint.

- q: URI for Prometheus.

- r: Set the IntraTLS value to either "true" or "false" based on the OCNADD deployment (with IntraTLS enabled or disabled).

- s: Rerun count for failed test cases. The default value is set to '2'. It can be set to '0', '1', or more, based on user requirements.

- t: Set the ACL_ENABLED value to either "true" or "false" based on the OCNADD deployment (with ACL enabled or disabled).

- u: Worker group namespace name along with the cluster name.

- v: Management group namespace name.

- w: Set the MTLS_ENABLED value to either "true" or "false" based on the OCNADD deployment.

- x: OCATS Certificate file name.

- y: OCATS Certificate key file name.

- z: Cluster Domain Name.
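Parameters i through n all follow the same pattern: service name, namespace, the literal svc, the cluster domain name, and a port. As an illustration of that pattern, the sketch below assembles such a value from its parts. api_root is a hypothetical helper, and the namespace ocnadd-mgmt and domain cluster.local are example values only; substitute the ones from your deployment.

```shell
#!/bin/sh
# api_root SERVICE NAMESPACE DOMAIN PORT
# Hypothetical helper: prints SERVICE.NAMESPACE.svc.DOMAIN:PORT, the shape
# used by the -i to -n parameters of the pipeline script.
api_root() {
  if [ "$#" -ne 4 ]; then
    echo "usage: api_root SERVICE NAMESPACE DOMAIN PORT" >&2
    return 2
  fi
  printf '%s.%s.svc.%s:%s\n' "$1" "$2" "$3" "$4"
}

# Example values (assumptions, not taken from the guide):
MGMT_NS=ocnadd-mgmt
DOMAIN=cluster.local

api_root ocnaddalarm "$MGMT_NS" "$DOMAIN" 9099
# prints: ocnaddalarm.ocnadd-mgmt.svc.cluster.local:9099
```

Building the values from two variables makes it harder to mistype the namespace or domain in one of the six endpoints.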

Note:

If ATS and Stub are deployed with OCNADD in non-centralized deployment mode, then u: <worker-group-namespace:clusterName> and v: <management-group-namespace> refer to the same namespace.

Running OCNADD Test Cases

- Click the Build with Parameters link available in the left navigation pane of the NADD-NewFeatures Pipeline screen. The following page appears:

Figure 4-5 Pipeline NADD_NewFeatures

- Select Configuration_Type as Product_Config.

- Set Include_Regression to 'NO' from the drop-down list.

- In Select_Option:

- Select All to run all the feature test cases and click the Build button to run the pipeline.

- Choose Single/MultipleFeatures to run the specific feature test cases and click the Build button to run the pipeline.

- Choose MultipleFeature/MultipleTestCases to select multiple features and multiple test cases within the selected features and click the Build button to run the pipeline.

4.1.4 OCNADD-NewFeatures Documentation

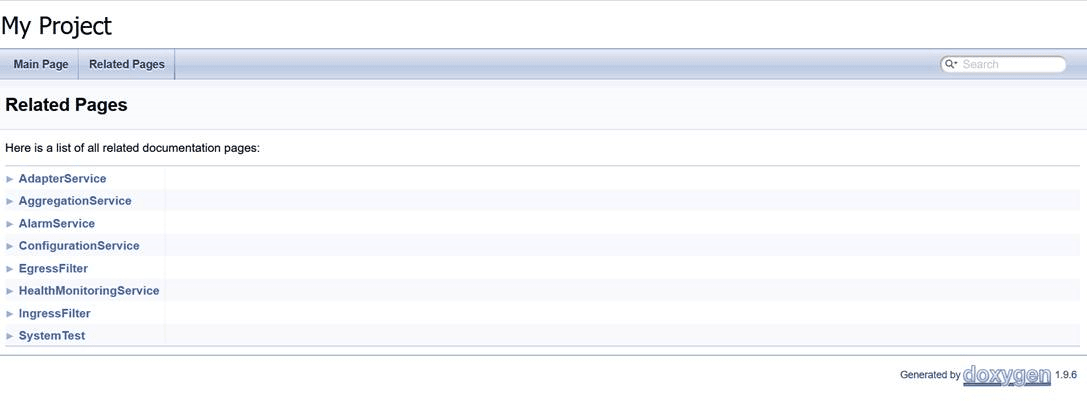

The NADD-NewFeatures pipeline has an HTML report of all the feature files that can be tested as part of the OCNADD ATS release. Follow the procedure listed below to view all the OCNADD functionalities:

- On the Pipeline NADD-NewFeatures page, click Documentation in the left navigation pane. All test cases provided as part of the OCNADD ATS release are displayed on the screen.

Note:

- The Documentation option appears on the screen only if NADD-NewFeatures pipeline test cases are executed at least once.

- Use the Firefox browser to open the Documentation. Other browsers are not supported.

Figure 4-6 Documentation

- Click any functionality to view its test cases and scenarios for each test case.

- To exit, click Back to NADD-NewFeatures on the top-left corner of the screen.

4.1.5 Troubleshooting ATS

This section provides troubleshooting procedures for some common ATS test case failures.

Offset Count Mismatch Results in Test Case Failure

Problem

Test cases fail due to an offset count mismatch. This failure occurs when third-party consumers are delayed in receiving messages.

Example: The test case failed because the offset count of the MAIN topic (186) does not match the offset count of the third-party Consumer (155).

Then Compare the offset change in MAIN topic and consumer ... failed in 0.000s

Assertion Failed: The increase in offset counts is not matching, increase in offset of MAIN : 186 , increase in offset of Consumer : 155

Captured stdout:

{'Location': 'http://ocnaddconfiguration:12590/ocnadd-configuration/configure/v3/app-oracle-cipher', 'content-length': '0'}

['OCL', '2023-08-13T19:49:49.944Z', 'INFO', '1', '---', '[', 'scheduling-1]', 'c', '.o', '.c', '.c', '.o', '.c', '.c', '.ConsumerController', ':', '|', '*TOTAL*', '|', '0', '(0', ')', '|']

['OCL', '2023-08-13T19:49:49.944Z', 'INFO', '1', '---', '[', 'scheduling-1]', 'c', '.o', '.c', '.c', '.o', '.c', '.c', '.ConsumerController', ':', '+------------------------------+-------------------------------+-------------------------------+--------------------------------------+']

['OCL', '2023-08-13T19:50:14.952Z', 'INFO', '1', '---', '[', 'scheduling-1]', 'c', '.o', '.c', '.c', '.o', '.c', '.c', '.ConsumerController', ':', '|', '*TOTAL*', '|', '155', '(0', ')', '|']

['OCL', '2023-08-13T19:50:14.952Z', 'INFO', '1', '---', '[', 'scheduling-1]', 'c', '.o', '.c', '.c', '.o', '.c', '.c', '.ConsumerController', ':', '+------------------------------+-------------------------------+-------------------------------+--------------------------------------+']

Solution

Test cases that fail due to an offset count mismatch pass when they are rerun.

Assertion Failed: Status code:404 did not match with 204

Problem

The test cases fail due to 'Assertion Failed: Status code:404 did not match with 204'. This error occurs when any previous scenario abruptly fails without executing all the steps of the scenario.

Sample Error Message

Example:

Given delete already existing data feeds ... failed in 0.346s

Assertion Failed: FAILED SUB-STEP: Given delete already existing configurations

Substep info: Assertion Failed: Status code:404 did not match with 204

Traceback (of failed substep):

File "/env/lib64/python3.9/site-packages/behave/model.py", line 1329, in run

match.run(runner.context)

File "/env/lib64/python3.9/site-packages/behave/matchers.py", line 98, in run

self.func(context, *args, **kwargs)

File "/var/lib/jenkins/cncats/ocnftest/ocnadd_steps.py", line 1906, in step_impl

assert False , 'Status code:{} did not match with 204'.format(context.response.status_code)

Solution

Test cases that fail due to previous scenario failures pass when they are rerun.

OCNADD Pods Enter an 'Image Pull' Error State

Problem

The OCNADD pods enter an 'Image Pull' error state after a few test cases are executed. This occurs when a test case appends a suffix to the image name and its execution is incomplete.

Solution

Correct the image name by editing the pod's deployment, and then rerun the test suite.
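To find which pods need their image name corrected, the pod listing can be filtered by status. The sketch below is an illustration, not an ATS tool: image_pull_failures is a hypothetical helper that reads kubectl get pods output on standard input, and it assumes the failure shows up as the standard Kubernetes statuses ErrImagePull or ImagePullBackOff in the third column.

```shell
#!/bin/sh
# image_pull_failures
# Hypothetical helper: reads `kubectl get pods` output on stdin and prints the
# names of pods whose STATUS ends in ErrImagePull or ImagePullBackOff
# (this also matches init-container variants such as Init:ImagePullBackOff).
image_pull_failures() {
  awk '$3 ~ /(ErrImagePull|ImagePullBackOff)$/ { print $1 }'
}

# Example (namespace and deployment name are placeholders):
#   kubectl get pods -n <namespace> | image_pull_failures
#   kubectl edit deployment <deployment-name> -n <namespace>
```

Each reported pod name points to the deployment whose image field should be checked for the appended suffix before the test suite is rerun.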