11 Upgrading the OSM Cloud Native Environment

This chapter describes the tasks you perform in order to apply a change or upgrade to a component in the cloud native environment.

Creating a detailed upgrade plan can be a complex process. It is useful to start by mapping your use case to an upgrade path. These upgrade paths identify a set of sequenced activities that align to a CD stage. Once you know the activity sequence, you can then look for the detailed steps involved in each to come up with the comprehensive set of steps to be performed.

Upgrade paths consist of activities that fall into the following two main categories:

- Operational Procedures

- Component Upgrade Procedures

Operational Procedures

There are many different operational procedures and all of these affect the operating state of OSM. OSM cloud native provides the mechanism to change the operational state as described in "Running Operational Procedures".

The flowcharts in this chapter use the following image to depict an operational procedure:

Description of the illustration operational-procedure.png

Component Upgrade Procedures

These are the actual steps to perform a component upgrade. A procedure can be one of the following types:

- OSM Cloud Native Procedures: OSM cloud native owns the component and therefore the upgrade procedure for that component. OSM cloud native provides the mechanism to perform the upgrade via the scripts that are bundled with the OSM cloud native toolkit.

An example of this is a change to a value in an OSM cloud native specification file (shape, project, and instance).

The flowcharts in this chapter use the following image to depict an OSM cloud native owned procedure.

Description of the illustration osm-cn-owned-procedure.png

- External Procedures: These procedures are for components that are part of the OSM cloud native operating environment, but are out of the control of OSM cloud native. OSM cloud native does not determine how to apply the upgrade, but provides recommendations on the operational state of OSM accompanying the upgrade.

An example would be updating the operating system on a worker node.

The flowcharts in this chapter use the following image to depict an external upgrade procedure.

Description of the illustration external-upgrade-procedure-image.png

- Miscellaneous upgrade procedures: There are some procedures that require special handling and are not captured in any of the upgrade paths. These are described in "Miscellaneous Upgrade Procedures".

Rolling Restart

Occasionally, you may need to restart OSM managed servers in a rolling fashion, one at a time. This does not result in downtime, only in reduced capacity for a limited period. You trigger a rolling restart by invoking the restart-instance.sh script. This script can restart the whole instance in a rolling fashion, only the admin server, or all the managed servers in a rolling fashion. Some operations may automatically trigger a rolling restart. These include online cartridge deployment and certain changes (image updates, tuning parameter changes, and so on) pushed via the upgrade-instance.sh script.
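As an illustration of the restart types described above, the following is a minimal sketch of a wrapper around restart-instance.sh. The `rolling_restart` and `run` helpers, the `DRY_RUN` switch, and the placeholder default paths are assumptions made for this example and are not part of the toolkit. Only the options documented for restart-instance.sh in this chapter are used.

```shell
#!/bin/sh
# Sketch only: placeholder defaults, adjust to your environment.
OSM_CNTK="${OSM_CNTK:-/path/to/osm-cntk}"
SPEC_PATH="${SPEC_PATH:-/path/to/specs}"
DRY_RUN="${DRY_RUN:-1}"   # 1 = print the command instead of running it

# Hypothetical helper: echo the command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then echo "+ $*"; else "$@"; fi
}

# rolling_restart PROJECT INSTANCE TYPE
# TYPE is one of the documented restart types: full, admin, ms.
rolling_restart() {
  case "$3" in
    full|admin|ms) ;;
    *) echo "unknown restart type: $3" >&2; return 1 ;;
  esac
  run "$OSM_CNTK/scripts/restart-instance.sh" -p "$1" -i "$2" -s "$SPEC_PATH" -r "$3"
}

# Restart only the managed servers, one at a time.
rolling_restart project instance ms
```

With `DRY_RUN=1` the sketch only prints the command it would run, which is a convenient way to review the invocation before applying it to a live instance.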

Identifying Your Upgrade Path

To prepare your detailed plan for an upgrade, you need to map your upgrade use case to an upgrade path. Some common use cases are detailed in the following table. If your use case is not listed, see "Upgrade Path Flow Chart", which guides you through the decision-making process to prepare a specific upgrade path.

Table 11-1 Common Upgrade Paths

| Upgrade Type | Component | Upgrade Path | Requires Changing Image? |
|---|---|---|---|
| Cartridge Management | Deploy new cartridge version | Online change, online cartridge deployment OR Offline change, offline cartridge deployment | No |
| Cartridge Management | Redeploy a cartridge against an existing cartridge version | Offline change, offline cartridge deployment | No |
| Cartridge Management | Fast undeploy cartridge version | Offline change, offline cartridge deployment OR Online change, online cartridge deployment | No |
| Cartridge Management | Purge cartridge version | Online change, external procedure, manual restart | No |
| Configuration and Tuning | OSM cluster size (scaling up or down) | Online change, application upgrade | Not applicable |
| Configuration and Tuning | Java parameters (memory, GC, and so on) | Online change, application upgrade | Not applicable |
| Configuration and Tuning | WebLogic domain configuration (WDT such as JMS Queue configuration) | Online change, application upgrade | No |
| Configuration and Tuning | OSM configuration parameters (traditionally, oms-config.xml) | Online change, application upgrade | No |
| Database Storage Management | Create partition and clone database statistics | Offline change, PDB upgrade | No |
| Database Storage Management | Online row-based order purge | Online change, external procedure | No |
| Database Storage Management | Purge partition | Online change, external procedure | No |
| Security parameters | New, renamed or deleted secrets passed to cartridges | Online change, application upgrade | No |
| Security parameters | Secrets value (for example, changing password) | Online change, external procedure, manual restart | No |
| Software Upgrade and Patching | OSM release or patch upgrade with Database change | Offline change, PDB upgrade | Yes |
| Software Upgrade and Patching | Fusion Middleware upgrade | Online change, application upgrade (some exceptions needing offline change) | Yes |
| Software Upgrade and Patching | OSM patch upgrade without Database change | Online change, application upgrade (some exceptions needing offline change) | Yes |
| Software Upgrade and Patching | Fusion Middleware overlay patches (for example, PSU or one-off patch) | Online change, application upgrade (some exceptions needing offline change) | Yes |
| Software Upgrade and Patching | Java upgrade | Online change, application upgrade | Yes |
| Software Upgrade and Patching | Linux | Online change, application upgrade | Yes |
| Software Upgrade and Patching | Custom code or third-party tool (custom image) | Online change, application upgrade (some exceptions needing offline change) | Yes |
| Software Upgrade and Patching | OSM cloud native toolkit | The release dictates the constraints. | Not applicable |
| Shared infrastructure | Operating system or hardware on worker node | Online change, external procedure | No |
| Shared infrastructure | Docker | Online change, external procedure | No |
| Shared infrastructure | WebLogic Operator minor upgrade (backward compatible) | Online change, external procedure | No |
| Shared infrastructure | WebLogic Operator major upgrade (non-backward compatible) | Online change, external procedure | No |

Once you understand the activities in your upgrade path, you can begin to map out the sequence of activities that you need to perform.
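Because Table 11-1 is a fixed lookup, it can be encoded as a small helper when you automate upgrade planning. The following is a minimal sketch covering only a subset of the rows. The function name `upgrade_path` and the short use-case keys are hypothetical names invented for this example; they are not part of the OSM cloud native toolkit.

```shell
#!/bin/sh
# Hypothetical helper: map a use-case key to its upgrade path from Table 11-1.
# The keys on the left are illustrative names, not toolkit identifiers.
upgrade_path() {
  case "$1" in
    cluster-size|java-params|wdt-config|osm-config)
      echo "Online change, application upgrade" ;;
    redeploy-cartridge)
      echo "Offline change, offline cartridge deployment" ;;
    osm-upgrade-with-db|create-partition)
      echo "Offline change, PDB upgrade" ;;
    worker-os|docker|purge-partition)
      echo "Online change, external procedure" ;;
    *)
      # Anything not listed needs the decision flow instead of the table.
      echo "no common path for '$1'; see \"Upgrade Path Flow Chart\"" >&2
      return 1 ;;
  esac
}

upgrade_path redeploy-cartridge
```

A non-zero return for unknown keys lets a calling script stop and defer to the decision flow rather than guess a path.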

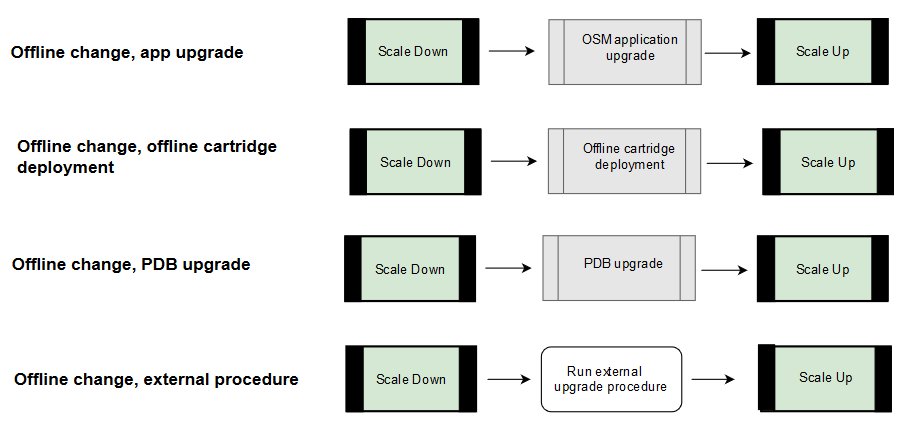

Offline Change Upgrade Paths

Offline changes are those that require OSM to be shut down before the change can be applied.

All offline upgrades must start with a Scale Down procedure and end with a Scale Up procedure. You can find the explicit steps to perform these activities in "Running Operational Procedures".

Once the cluster has been scaled down, you will need to perform either an external procedure (referencing documentation for the component) or follow an OSM cloud native owned procedure. See "OSM Cloud Native Upgrade Procedures" for details.

As an example, if your use case is to re-deploy an existing cartridge version, then the upgrade path would be "Offline change, offline cartridge deployment", the second flow in the above flow chart. The actual steps involve the following:

- Scale Down

  - Edit the instance specification file to set cluster size to 0.

  - Run upgrade-instance.sh.

- Offline cartridge deployment

  - Edit the project specification file to change the cartridge version.

  - Run manage-cartridges.sh with option sync.

- Scale Up

  - Edit the instance specification file to return cluster size to original (1-18).

  - Run upgrade-instance.sh.
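The sequence above can be sketched as a script. This is an illustration under stated assumptions, not a toolkit-provided procedure: the helper names (`run`, `set_cluster_size`, `push_changes`, `sync_cartridges`), the `DRY_RUN` switch, the placeholder paths, and the assumption that `clusterSize` appears on a single line of the instance specification are all made up for this example. The demo runs against a throwaway file rather than a real specification.

```shell
#!/bin/sh
# Sketch only: placeholder defaults, adjust to your environment.
OSM_CNTK="${OSM_CNTK:-/path/to/osm-cntk}"
SPEC_PATH="${SPEC_PATH:-/path/to/specs}"
DRY_RUN="${DRY_RUN:-1}"   # 1 = print toolkit commands instead of running them

run() {
  if [ "$DRY_RUN" = "1" ]; then echo "+ $*"; else "$@"; fi
}

# set_cluster_size FILE SIZE: rewrite the clusterSize value in place.
# Note: this edits FILE even when DRY_RUN=1.
set_cluster_size() {
  sed "s/^\([[:space:]]*clusterSize:\).*/\1 $2/" "$1" > "$1.tmp" && mv "$1.tmp" "$1"
}

# push_changes PROJECT INSTANCE: apply the edited specification.
push_changes() {
  run "$OSM_CNTK/scripts/upgrade-instance.sh" -p "$1" -i "$2" -s "$SPEC_PATH"
}

# sync_cartridges PROJECT INSTANCE: offline cartridge deployment.
sync_cartridges() {
  run "$OSM_CNTK/scripts/manage-cartridges.sh" -p "$1" -i "$2" -s "$SPEC_PATH" -c sync
}

# Demo against a throwaway stand-in for the instance specification.
spec=$(mktemp)
printf 'clusterSize: 2\n' > "$spec"

set_cluster_size "$spec" 0 && push_changes project instance   # Scale Down
# (edit the project specification here)
sync_cartridges project instance                              # Offline cartridge deployment
set_cluster_size "$spec" 2 && push_changes project instance   # Scale Up

cat "$spec"
rm -f "$spec"
```

Keeping the three phases as separate functions makes it easy to pause between them, for example to confirm that all managed server pods have terminated before synchronizing cartridges.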

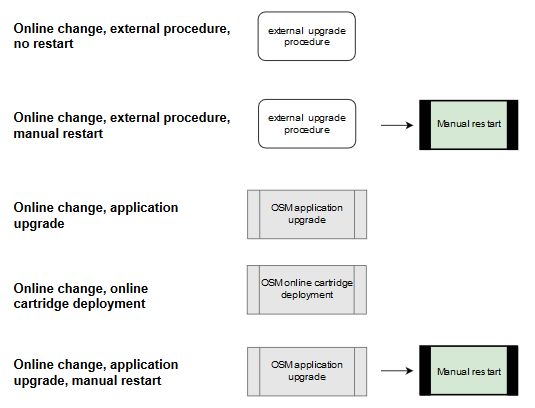

Online Change Upgrade Paths

Online changes are changes for which OSM can remain running while the component upgrade is performed. There is, therefore, no operational procedure at the start of the flow, but some paths include a rolling restart after the upgrade procedure is performed.

The component upgrade will either be an external procedure (referencing documentation for the component) or follow an OSM cloud native owned procedure described in "OSM Cloud Native Upgrade Procedures".

If explicit post-upgrade operational activities are required, you can find details in "Running Operational Procedures".

The following flowchart illustrates online change upgrade paths:

OSM Cloud Native Upgrade Procedures

The OSM cloud native owned upgrade procedures are:

- PDB upgrade

- OSM application upgrade

- Online cartridge deployment

- Offline cartridge deployment

Change or upgrade procedures that are dictated by OSM cloud native are applied using the scripts and the configuration provided in the toolkit.

PDB Upgrade Procedure

Changes impacting the PDB can be found in any of the specification files - project, instance or shape.

Examples include updating the OSM DB Installer image.

To perform a PDB upgrade procedure:

- Make the necessary modifications in your specification files.

- Invoke $OSM_CNTK/scripts/install-osmdb.sh with the command appropriate for your use case.

To see a list of options, invoke with -h.

OSM Application Upgrade

Changes impacting the OSM application can be found in any of the specification files - project, instance or shape.

Examples include changing an existing value, changing the OSM image or supplying something new such as a secret or a new WDT extension.

To perform OSM application upgrade:

- Make the necessary modifications in your specification files.

- Invoke $OSM_CNTK/scripts/upgrade-instance.sh to push out the changes you just made to the running instance. This also triggers introspection for upgrade paths where introspection is required.

- In upgrade paths where a manual restart is required, restart the instance. See "Restarting the Instance" for details.

Offline Cartridge Deployment

Offline deployment mode supports deployment of new cartridges, deployment of new versions of existing cartridges, fast undeploy and re-deployment of existing cartridge versions with changes.

Changes impacting the cartridges can be found in the project specification file.

In order to perform an offline deployment, you must not have managed servers running.

To perform an offline cartridge deployment:

- Scale down your managed server count. See "Scaling Down the Cluster" for more details.

- Deploy the cartridges:

  - Make the necessary modifications in your project specification.

  - Run the following command:

    $OSM_CNTK/scripts/manage-cartridges.sh -p project -i instance -s spec_Path -c sync

- Scale up your managed server count. See "Scaling Up the Cluster" for more details.

Online Cartridge Deployment

Online deployment mode supports deployment of new cartridges, deployment of new versions of existing cartridges and fast undeploy. It does not support re-deployment of existing cartridges.

The changes impacting the cartridges can be found in the project specification file.

In order to perform an online deployment, you must have a minimum of two managed servers running.

To perform an online cartridge deployment:

- If necessary, scale up your managed server count (2 or more). See "Scaling Up the Cluster" for more details.

- Deploy the cartridges:

  - Make the necessary modifications in your project specification.

  - Invoke the following script:

    $OSM_CNTK/scripts/manage-cartridges.sh -p project -i instance -s spec_Path -c sync -o
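Before an online deployment, it can help to confirm that at least two managed servers are actually running. The following is a minimal sketch of such a check. The helper `count_running_ms` is hypothetical, and the sketch assumes that managed server pod names end in `-ms<N>` and that you pipe in the output of `kubectl get pods --no-headers` for the namespace that hosts the instance. Sample text stands in for that output here so that the example runs without a cluster.

```shell
#!/bin/sh
# count_running_ms: read `kubectl get pods --no-headers` output on stdin and
# print the number of managed server pods whose STATUS column is Running.
# Assumption: managed server pod names end in -ms<N>.
count_running_ms() {
  awk '$1 ~ /-ms[0-9]+$/ && $3 == "Running" { n++ } END { print n + 0 }'
}

# Sample input standing in for real kubectl output (illustrative names).
sample='project-instance-admin 1/1 Running 0 2d
project-instance-ms1 1/1 Running 0 2d
project-instance-ms2 1/1 Running 0 2d'

running=$(printf '%s\n' "$sample" | count_running_ms)
if [ "$running" -ge 2 ]; then
  echo "online cartridge deployment possible ($running managed servers running)"
else
  echo "scale up first ($running managed servers running)"
fi
```

In a real environment, the sample text would be replaced by the kubectl output for your namespace, and the pod name pattern should be checked against the names your instance actually uses.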

Note:

If the changes to the cartridges in the project specification include more than one kind of update (new cartridge, new version, existing version, undeploy) and any of them is a redeploy of an existing version, then you must use offline cartridge deployment. Alternatively, if possible, break up the operational activity into two parts: first, the set of changes that qualify for online deployment, and then a second set with all the cartridge redeployment changes, to be done offline.

Upgrades to Infrastructure

From the point of view of OSM instances, upgrades to the cloud infrastructure fall into two categories: rolling upgrades and one-time upgrades.

Note:

All infrastructure upgrades must continue to meet the supported types and versions listed in the OSM documentation's certification statement.

Rolling upgrades are where, with proper high-availability planning (like anti-affinity rules), the instance as a whole remains available as parts of it undergo temporary outages. Examples of this are Kubernetes worker node OS upgrades, Kubernetes version upgrades and Docker version upgrades.

One-time upgrades affect a given instance all at once. The instance as a whole suffers either an operational outage or a control outage. Examples of this are WebLogic Operator upgrade and perhaps Ingress Controller upgrade.

Kubernetes and Docker Infrastructure Upgrades

Follow standard Kubernetes and Docker practices to upgrade these components. The impact at any point should be limited to one node - master (Kubernetes and OS) or worker (Kubernetes, OS, and Docker). If a worker node is going to be upgraded, drain and cordon the node first. This will result in all pods moving away to other worker nodes. This is assuming your cluster has the capacity for this - you may have to temporarily add a worker node or two. For OSM instances, any pods on the cordoned worker will suffer an outage until they come up on other workers. However, their messages and orders are redistributed to surviving managed server pods and processing continues at a reduced capacity until the affected pods relocate and initialize. As each worker undergoes this process in turn, pods continue to terminate and start up elsewhere, but as long as the instance has pods in both affected and unaffected nodes, it will continue to process orders.
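The cordon and drain step for a single worker node can be sketched with standard kubectl commands. This is only an illustration: the node name `worker-1`, the helper names, and the `DRY_RUN` switch are assumptions for this example, and your cluster may need additional drain options depending on the workloads on the node.

```shell
#!/bin/sh
DRY_RUN="${DRY_RUN:-1}"   # 1 = print commands instead of running them

run() {
  if [ "$DRY_RUN" = "1" ]; then echo "+ $*"; else "$@"; fi
}

# take_node_out NODE: stop new pods landing on the node, then evict its pods.
take_node_out() {
  run kubectl cordon "$1" &&
  run kubectl drain "$1" --ignore-daemonsets
}

# return_node NODE: make the node schedulable again after its upgrade.
return_node() {
  run kubectl uncordon "$1"
}

take_node_out worker-1
# ... upgrade the OS, Kubernetes, or Docker on worker-1 here ...
return_node worker-1
```

Processing one node at a time, and waiting for the evicted OSM pods to become ready elsewhere before moving to the next node, keeps the impact limited to one node as described above.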

WebLogic Operator Upgrade

To upgrade the WebLogic Operator, follow the Operator documentation. As long as the target version can co-exist in a Kubernetes cluster with the current version, a phased cutover can be performed. In this, you will perform a fresh install of the new version of the Operator into a new namespace. RBAC will be arranged here, identical to your existing Operator namespace. Once the new Operator is functioning, for each OSM cloud native project, un-register it from the old Operator and register it with the new Operator. This can be done at your convenience on a per-project basis. When all projects have been switched to the new Operator, the old Operator can be safely deleted.

export WLSKO_NS=old-namespace

$OSM_CNTK/scripts/unregister-namespace -p project -t wlsko

export WLSKO_NS=new-namespace

$OSM_CNTK/scripts/register-namespace -p project -t wlsko

All instances with the transitioned project are impacted by this operation. However, there is no order processing outage during the transition. There is a control outage, where no changes can be pushed to the instances (upgrade-instance.sh or delete-instance.sh). Also, during the control outage, the termination of a pod does not immediately trigger healing. However, once the transition of the project is complete, the new Operator will react to any changed state (whether in the cluster, like pod termination, or in pushed changes, like instance upgrades) and run the required actions.
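When several projects need to move to the new Operator, the per-project commands can be wrapped in a loop. The following is a minimal sketch that reuses the script names and options exactly as they are written in this section. The helper `switch_operator`, the `DRY_RUN` switch, the placeholder toolkit path, and the project and namespace names are assumptions for illustration only.

```shell
#!/bin/sh
# Sketch only: placeholder default, adjust to your environment.
OSM_CNTK="${OSM_CNTK:-/path/to/osm-cntk}"
DRY_RUN="${DRY_RUN:-1}"   # 1 = print commands instead of running them

run() {
  if [ "$DRY_RUN" = "1" ]; then echo "+ $*"; else "$@"; fi
}

# switch_operator PROJECT OLD_NS NEW_NS
# Un-register the project from the old Operator, then register it with the new one.
switch_operator() {
  WLSKO_NS=$2; export WLSKO_NS
  run "$OSM_CNTK/scripts/unregister-namespace" -p "$1" -t wlsko || return 1
  WLSKO_NS=$3; export WLSKO_NS
  run "$OSM_CNTK/scripts/register-namespace" -p "$1" -t wlsko
}

# Illustrative project names; switch them one at a time, at your convenience.
for project in project-a project-b; do
  switch_operator "$project" old-namespace new-namespace
done
```

Stopping on a failed un-registration avoids registering a project with the new Operator while the old one may still be managing it.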

Ingress Controller Upgrade

Follow the documentation of your chosen Ingress Controller to perform an upgrade. Depending on the Ingress Controller used and its deployment in your Kubernetes environment, the OSM instances it serves may see a wide set of impacts, ranging from no impact at all (if the Ingress Controller supports a clustered approach and can be upgraded that way) to a complete outage.

Consider Traefik, which the OSM cloud native toolkit uses as its sample Ingress Controller. An approach identical to that of the WebLogic Operator upgrade can be followed for a Traefik upgrade. The new Traefik can be installed into a new namespace, and one by one, projects can be unregistered from the old Traefik and registered with the new Traefik.

export TRAEFIK_NS=old-namespace

$OSM_CNTK/scripts/unregister-namespace -p project -t traefik

export TRAEFIK_NS=new-namespace

$OSM_CNTK/scripts/register-namespace -p project -t traefik

During this transition, there will be an outage in terms of the outside world interacting with OSM. Any data that flows through the ingress will be blocked until the new Traefik takes over. This includes GUI traffic, order injection, API queries, and SAF responses from external systems. This outage will affect all the instances in the project being transitioned.

Miscellaneous Upgrade Procedures

This section describes miscellaneous upgrade scenarios.

Network File System (NFS)

If an instance is created successfully, but a change to the NFS configuration is required, then the change cannot be made to a running OSM instance. In this case, the procedure is as follows:

- Perform a fast delete. See "Running Operational Procedures" for details.

- Update the nfs details in the instance specification.

- Start the instance.

Running Operational Procedures

This section describes the tasks you perform on the OSM server in response to a planned upgrade to the OSM cloud native environment. You must consider whether the change in the environment fundamentally affects OSM processing to the extent that OSM should not run while the upgrade is applied, or whether OSM can run during the upgrade but must be restarted afterward to properly process the change.

The operational procedures are performed using the OSM cloud native specification files and scripts.

- Triggering introspection

- Scaling down the cluster

- Scaling up the cluster

- Restarting the instance

- Fast delete

- Shutting down the cluster

- Starting up the cluster

Triggering Introspection

When any of the specification files have changed, invoke the upgrade-instance.sh script to trigger the operator's introspector to examine the change and apply it to the running instance.

Scaling Down the Cluster

The scaling down procedure described here is only in the context of the upgrade flow diagram. Hence, scaling down is down to 0 managed servers. A generalized scaling can change the cluster size down to a value between 0 and 18 (both inclusive) in any desired increment or decrement.

To scale down the cluster, edit the instance specification and change the clusterSize parameter to 0. This terminates all the managed server pods, but leaves the admin server up and running.

$OSM_CNTK/scripts/upgrade-instance.sh -p project -i instance -s $SPEC_PATH

Scaling Up the Cluster

The scaling up procedure described here is only in the context of the upgrade flow diagram. Hence, scaling up is up to the initial cluster size. A generalized scaling can change the cluster size up to a value between 0 and 18 (both inclusive) in any desired increment or decrement.

To scale up the cluster, edit the instance specification and change the value of the clusterSize parameter to its original value to return the cluster to its previous operational state.

$OSM_CNTK/scripts/upgrade-instance.sh -p project -i instance -s $SPEC_PATH

Restarting the Instance

The OSM cloud native toolkit provides a script (restart-instance.sh) that you can use to perform different flavors of restarts on a running instance of OSM cloud native.

The usage of the restart-instance.sh script is as follows:

restart-instance.sh parameters

-p projectName : mandatory

-i instanceName : mandatory

-s specPath : mandatory; locations of specification files

-m customExtPath : optional; locations of custom extension files

-r restartType : mandatory; what kind of restart is requested

# specPath and customExtPath take a colon(:) delimited list of directories

# restartType can take the following values:

* full: Restarts the whole instance (rolling restart)

* admin: Restarts the WebLogic Admin Server only

* ms: Restarts all the Managed Servers (rolling restart)

# or just -h for help

$OSM_CNTK/scripts/restart-instance.sh -p project -i instance -s $SPEC_PATH -r full

Fast Delete

When the entire domain, including the admin server, needs to be taken offline, use the full shutdown procedure and, later, the full startup procedure. This can be used to perform a "fast delete" or "dehydration" of the domain, instead of a full delete-instance operation where you may have to be concerned about the secrets and other prerequisites being deleted. To quickly restore the domain, simply perform the startup procedure.
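A fast delete and the later restore both come down to changing one parameter, serverStartPolicy, and pushing the change. The following is a minimal sketch of that toggle. The helper names, the `DRY_RUN` switch, the placeholder paths, and the assumption that `serverStartPolicy` appears on a single line of the instance specification are made up for this example. The helper accepts only the two values used in this chapter, and the demo runs against a throwaway file.

```shell
#!/bin/sh
# Sketch only: placeholder defaults, adjust to your environment.
OSM_CNTK="${OSM_CNTK:-/path/to/osm-cntk}"
SPEC_PATH="${SPEC_PATH:-/path/to/specs}"
DRY_RUN="${DRY_RUN:-1}"   # 1 = print toolkit commands instead of running them

run() {
  if [ "$DRY_RUN" = "1" ]; then echo "+ $*"; else "$@"; fi
}

# set_start_policy FILE NEVER|IF_NEEDED: rewrite serverStartPolicy in place.
# Note: this edits FILE even when DRY_RUN=1.
set_start_policy() {
  case "$2" in
    NEVER|IF_NEEDED) ;;
    *) echo "unsupported serverStartPolicy: $2" >&2; return 1 ;;
  esac
  sed "s/^\([[:space:]]*serverStartPolicy:\).*/\1 $2/" "$1" > "$1.tmp" && mv "$1.tmp" "$1"
}

# push_changes PROJECT INSTANCE: apply the edited specification.
push_changes() {
  run "$OSM_CNTK/scripts/upgrade-instance.sh" -p "$1" -i "$2" -s "$SPEC_PATH"
}

# Demo against a throwaway stand-in for the instance specification.
spec=$(mktemp)
printf 'serverStartPolicy: IF_NEEDED\n' > "$spec"

set_start_policy "$spec" NEVER && push_changes project instance       # fast delete
set_start_policy "$spec" IF_NEEDED && push_changes project instance   # restore

cat "$spec"
rm -f "$spec"
```

Because only the start policy changes, the secrets and other prerequisites of the instance stay in place between the two steps.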

Shutting Down the Cluster

To shut down the cluster, edit the instance specification and change the value of the serverStartPolicy parameter to NEVER. This terminates all the pods.

# Operational control parameters

# scope - domain or cluster

serverStartPolicy: NEVER

$OSM_CNTK/scripts/upgrade-instance.sh -p project -i instance -s $SPEC_PATH

Starting Up the Cluster

To start up the cluster, edit the instance specification and change the value of the serverStartPolicy parameter to IF_NEEDED. This starts up all the pods.

# Operational control parameters

# scope - domain or cluster

serverStartPolicy: IF_NEEDED

$OSM_CNTK/scripts/upgrade-instance.sh -p project -i instance -s $SPEC_PATH

Upgrade Path Flow Chart

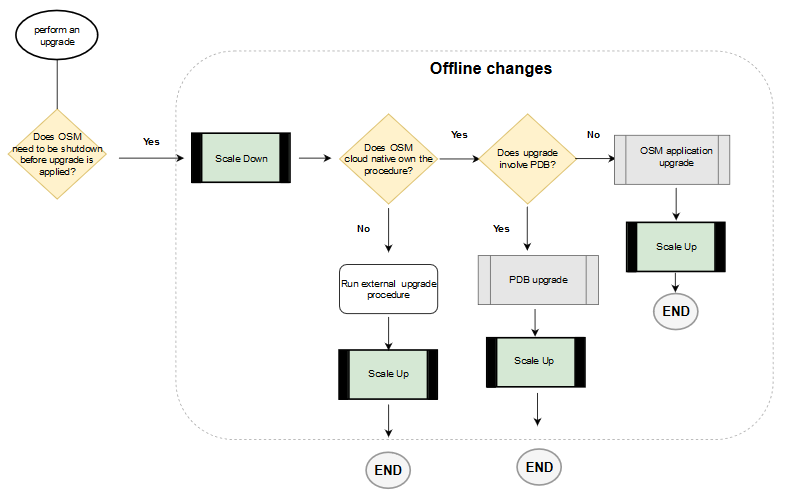

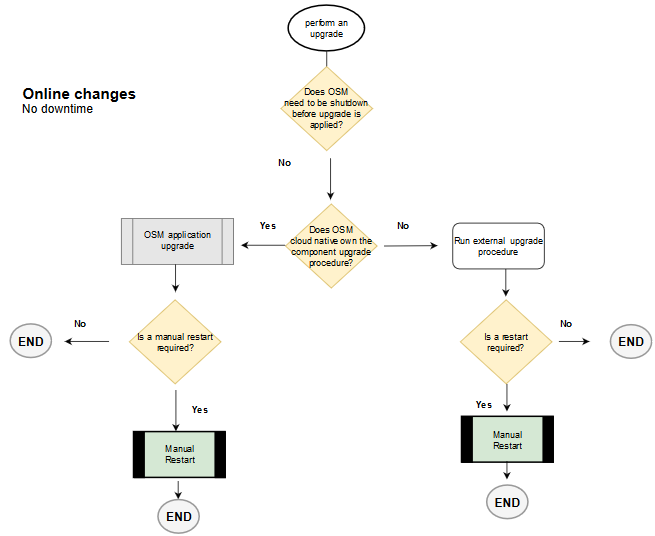

When comparing the different flows to identify common steps or divergences, it can be useful to have a combined view of the flowcharts along with the main decision points. This view is also useful when trying to automate parts of the process.

The first decision to make is whether OSM can be running when you apply the change. Typically, OSM needs to be shut down for PDB-impacting scenarios and the exceptions listed in the "Exceptions" section.

The following flowchart illustrates the flow for offline upgrades and various scenarios.

Figure 11-3 Upgrade Path Flow for Offline Changes

The following flowchart illustrates the flow for online upgrades and various scenarios.

Figure 11-4 Upgrade Path Flow for Online Changes